Abstract

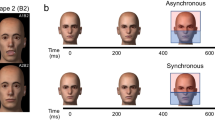

Human faces are fundamentally dynamic, but experimental investigations of face perception have traditionally relied on static images of faces. Although naturalistic videos of actors have been used with success in some contexts, much research in neuroscience and psychophysics demands carefully controlled stimuli. In this article, we describe a novel set of computer-generated, dynamic face stimuli. These grayscale faces are tightly controlled for low- and high-level visual properties. All faces are standardized in size, luminance, and location, as well as in the size of facial features. Each face begins with a neutral pose and transitions to an expression over the course of 30 frames. Altogether, 222 stimuli were created, spanning three categories of movement: (1) an affective movement (fearful face), (2) a neutral movement (close-lipped, puffed cheeks with open eyes), and (3) a biologically impossible movement (upward dislocation of eyes and mouth). To determine whether early brain responses sensitive to low-level visual features differed between the expressions, we measured the occipital P100 event-related potential, which is known to reflect differences in early stages of visual processing, and the N170, which reflects structural encoding of faces. We found no differences between the faces at the P100, indicating that the face categories were well matched on low-level image properties. This database provides researchers with a set of dynamic faces, controlled for low-level image characteristics, that is applicable to a range of research questions in social perception.

Notes

The impossible movement is not consistent with human face structure.

Appendix: Converting image sequences to movie files

Several free and commercial software packages can convert numbered image sequences into movies. We recommend VirtualDub (www.virtualDub.org), which is free and open-source. Instructions for opening an image sequence as a movie are available here:
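Separately from the VirtualDub route, readers who prefer to script the conversion could use something like the following Python sketch. It is not the procedure recommended above. It assumes that the command-line tool ffmpeg is installed and on the system path, that the frames follow a hypothetical zero-padded naming pattern such as `fear_%04d.png`, and that playback at 30 frames per second is wanted. The frame rate and all file names are assumptions made for illustration; the stimulus description specifies only the number of frames.

```python
import shutil
import subprocess
from pathlib import Path


def build_ffmpeg_command(frame_pattern, output_path, fps=30):
    """Return the ffmpeg argument list that encodes a numbered image sequence.

    frame_pattern is a printf-style pattern (hypothetical example:
    "fear_%04d.png"); fps is an assumed playback rate, not a documented one.
    """
    return [
        "ffmpeg",
        "-y",                      # overwrite the output file if it exists
        "-framerate", str(fps),    # input option: rate at which frames are read
        "-i", str(frame_pattern),  # numbered image sequence to read
        "-c:v", "libx264",         # encode the video stream as H.264
        "-pix_fmt", "yuv420p",     # pixel format that most players can decode
        str(output_path),
    ]


def convert_sequence(frame_pattern, output_path, fps=30):
    """Run ffmpeg on the sequence; raises if ffmpeg is missing or fails."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on PATH")
    subprocess.run(build_ffmpeg_command(frame_pattern, output_path, fps), check=True)
    return Path(output_path)


# Show the command that would be run for one hypothetical stimulus.
print(" ".join(build_ffmpeg_command("fear_%04d.png", "fear.mp4")))
```

Calling `convert_sequence("fear_%04d.png", "fear.mp4")` in the folder that holds the frames would then write the movie file. Building the command in a separate function makes it possible to inspect or log the exact settings before any file is encoded.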

About this article

Cite this article

Naples, A., Nguyen-Phuc, A., Coffman, M. et al. A computer-generated animated face stimulus set for psychophysiological research. Behav Res 47, 562–570 (2015). https://doi.org/10.3758/s13428-014-0491-x

DOI: https://doi.org/10.3758/s13428-014-0491-x