Abstract

Depending on the goal, one can selectively process either the metric depth or the ordinal depth information in the same scene. It is unknown whether metric and ordinal depth information are processed through shared or different underlying mechanisms. Here, we investigated the processing of metric depth and ordinal depth using visual search. Items were presented at multiple depth planes defined by binocular disparity, with one item per depth plane. In the metric-search task, participants searched for a target on a particular depth plane among one to three distractors. In the ordinal-search task, the target was specified by its depth order, indicated by a number (smaller numbers indicated nearer depth planes). Ordinal search was faster and more accurate than metric search, and the data showed a pattern of dissociation. Metric search, but not ordinal search, was slowed when the target and distractors were closer in depth, whereas ordinal search, but not metric search, was slower for middle than for edge positions. These two opposite effects suggest that metric depth and ordinal depth may be processed differently.


Introduction

During search of a scene, visual information is actively selected for further processing by bottom-up processes, usually referring to the influence of salience carried by low-level features (e.g., color, contrast, and texture), and by top-down processes, reflecting the influence of high-level internal factors such as endogenous attention, motivation, and task requirements (Awh et al., 2012; Gazzaley & Nobre, 2012; Wolfe, 2021). Top-down modulation of visual processing affects not only where or what is attended, but also how the information is processed and represented in the mind. Depending on the goal of a task, one may interpret the same information in different ways. For example, when judging an object's exact distance from the observer, it is crucial to perceive its metric depth (e.g., it is 60 cm away from the observer). However, when understanding the layout of a scene, as in figure-ground segregation, it is more important to perceive the ordinal depth, i.e., which object is nearer and which is farther (Moore et al., 2001). The present study investigated how such top-down modulation affects depth processing.

Depth information can be derived from a variety of depth cues, such as binocular disparity, relative size, occlusion, etc. (Norman & Todd, 1998). In this study, we focus on stereoscopic depth perception, which allows us to examine both metric and ordinal depths generated from binocular disparity. Past studies have shown that our ability to discriminate the metric stereoscopic depth interval is excellent (the threshold is about 10 arcsec; McKee & Taylor, 2010), and the ability to discriminate the order of two disparity-defined depth positions is even more precise (Norman & Todd, 1998).

One explanation for the better performance on ordinal depth is that encoding ordinal depth requires less precision than encoding metric depth, which makes the task easier. Obtaining metric depth requires registering the exact retinal disparities and scaling them by viewing distance, whereas depth order can be judged directly from the relative disparity between two items; discriminating metric depth would therefore demand more precise encoding of depth information and be more difficult than discriminating ordinal depth. Recent studies showed that performance in detecting changes in metric depth was poor (Qian & Zhang, 2019; Zhang et al., 2021; see also the review by Reeves & Qian, 2021); however, performance greatly improved when the change involved ordinal depth (Qian et al., 2020). Because participants in that study received no instruction to attend to changes in depth order, and the magnitude of change in the retinal images was the same with or without an ordinal depth change, they should have encoded the depth information with a fixed precision, regardless of any ordinal change that might occur at test. Therefore, the enhanced detection of ordinal depth changes suggests differences in processing between metric and ordinal depths beyond the precision required for encoding (see also Fang et al., 2022).

The same depth information in a scene can be encoded and interpreted as both metric depth and ordinal depth, which may engage different processing mechanisms. Since no previous study has examined the effects of interpreting the same depth as different types of information, it is unclear whether the processing of metric and ordinal depths would show a dissociation under the top-down modulation of task. The present study aimed to address this question. In a visual search task, participants were required to find a target presented at a particular metric depth position or at a particular depth order. The search array contained exactly the same visual information under the two task instructions. By comparing search efficiency across tasks, we could determine how top-down modulation affects the way in which depth information is processed.

To investigate this question, the factors that may affect the processing of metric and ordinal depths need to be identified. If different processing mechanisms underlie the two types of information, we should observe a pattern of dissociation: some factor(s) should affect the processing of metric depth but not the processing of ordinal depth, and vice versa. Conversely, if these factors influence the processing of both types of depth information, whether similarly or differently, this would suggest that the two types of depth involve the same or a shared processing mechanism.

For metric depth information, one such factor may be the spatial separation between depth positions: the distance between items determines how distinguishable and separable they are, and thus may affect the precision of processing metric depth. de la Rosa et al. (2008) found that when searching for a target among distractors distributed on two depth planes, search speed increased with the separation of the two planes. However, this finding cannot differentiate whether the magnitude of spatial separation affects the processing of metric depth, ordinal depth, or both. Because their task involved only two depths, participants could identify the two planes either from their exact metric positions or simply from their depth order (the nearer one and the farther one). Therefore, we examined this factor in the present study.

The factor that primarily affects ordinal depth may be related to the type of spatial relation in depth among items, since ordinal depth information defines the relation of an item's depth position to the other positions. To our knowledge, no previous study has clearly distinguished between types of spatial relation, but there are some hints. Edge locations play an important role in perceiving the form or the spatial volume of an object (Grossberg & Mingolla, 1985), and therefore may receive prioritized processing and encoding compared with less distinctive locations. For depth perception, the farthest objects may serve as an anchor that enhances the precision of perceiving intermediate depths (Sousa et al., 2011). In addition, studies of working memory suggested that edge depth locations are remembered better than middle ones (Qian & Zhang, 2019), which may be related to the enhanced allocation of attention and memory resources at the boundaries (Wang et al., 2021). Based on this evidence, we proposed that an item's spatial relation can be classified into one of two types: it is either at an edge of the stimulus sequence (nearest or farthest) or at a middle position sandwiched between the others. We examined this factor in the present study.

To summarize, the present study investigated whether the top-down modulation of task affected the processing of depth information using a visual search task. We examined whether the efficiency of searching metric/ordinal depth depends on the depth separation and spatial relation between the target and distractors. Our hypotheses were: (1) spatial separation affects search efficiency for metric depth but not ordinal depth; (2) spatial relation affects search efficiency for ordinal depth but not metric depth. This pattern of dissociation, if confirmed, can shed light on the different underlying mechanisms of processing the two types of depth, which can be evoked by top-down modulation.

Method

Participants

Sixteen participants (three males; mean age = 25.5 years) with normal or corrected-to-normal vision took part in this study. All participants passed a screening test (for details, see Qian & Zhang, 2019) to ensure that they could differentiate different stereoscopic depths tested in the experiments. We received ethical approval for this investigation from the Institutional Review Board (IRB), Department of Psychology, Sun Yat-Sen University. Written informed consent was obtained from each participant prior to all the experiments.

Stimuli

The stimuli were viewed against a uniform gray background (102 cd/m²) through a Wheatstone stereoscope on a pair of 21-in. ViewSonic monitors. The display resolution was set to 1,920 × 1,080 pixels, with a refresh rate of 60 Hz. The viewing distance was 75 cm.

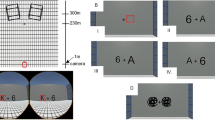

The search array was composed of a set of blue squares (0.8° × 0.8° each, 10.5 cd/m²) and a fixation cross at the center of the screen (see Fig. 1). There were two, three, or four items (set size) in the search array, one of which was the target. Each item was shown at the upper left, lower left, upper right, or lower right of the screen, 3.5° from the central fixation. All items were located at different depth planes perpendicular to the line of sight. The depth position of the target (target depth) was one of four depth planes at retinal disparities of -0.24°, -0.08°, 0.08°, and 0.24° (corresponding to -3.5, -1.2, 1.2, and 3.8 cm from the monitor screen, assuming a typical inter-pupillary distance of 6.5 cm). These disparities were selected so that the left- and right-eye images could be reliably fused and the items clearly appeared separated in depth (Rawlings & Shipley, 1969). Each depth plane served as the target depth on an equal number of trials.
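The disparity-to-depth values quoted above follow from simple viewing geometry. The Python sketch below assumes symmetric fixation on the screen plane at 75 cm, an inter-pupillary distance of 6.5 cm, and that negative (crossed) disparities place an item in front of the screen; the function name and sign convention are ours rather than the authors'.

```python
import math

def depth_from_disparity(disparity_deg, viewing_distance_cm=75.0, ipd_cm=6.5):
    """Signed depth (cm) of a point relative to the screen plane.

    Assumes the eyes converge symmetrically on the screen and that negative
    (crossed) disparity means "in front of the screen"; the result is negative
    for points nearer than the screen and positive for points behind it.
    """
    half_ipd = ipd_cm / 2.0
    # Vergence angle (radians) for a point on the screen plane.
    screen_vergence = 2.0 * math.atan(half_ipd / viewing_distance_cm)
    # Crossed (negative) disparity adds vergence, bringing the point nearer.
    point_vergence = screen_vergence - math.radians(disparity_deg)
    point_distance = half_ipd / math.tan(point_vergence / 2.0)
    return point_distance - viewing_distance_cm

for disparity in (-0.24, -0.08, 0.08, 0.24):
    print(f"{disparity:+.2f} deg -> {depth_from_disparity(disparity):+.2f} cm")
```

Rounded to one decimal place, the four target disparities give approximately -3.5, -1.2, 1.2, and 3.8 cm. The near and far offsets are not symmetric because, at a fixed viewing distance, an equal disparity step corresponds to a larger depth step behind the screen than in front of it.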

Fig. 1 Stimuli and procedure in the metric-search task and the ordinal-search task. Top: Task sequences. Bottom: Side view of the search array. The items are outlined with different line types to indicate their different depth positions; the target depth is outlined in red. No outlines were presented in the formal experiments.

We manipulated the depth separation between the target and its nearest distractor. In the small-separation condition, the nearest distractor had a relative disparity of +0.16° or -0.16° from the target; in the large-separation condition, it had a relative disparity of +0.40° or -0.40°. For set sizes 3 and 4, the remaining distractor depth(s) were randomly selected from the range -0.56° to +0.56°, with the constraints that each was separated from the target by more than the target-to-nearest-distractor separation and that any two distractors were separated by a disparity of at least 0.16°.
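The placement constraints can be expressed as a rejection sampler. The sketch below is hypothetical: the text does not say how depths were generated, so it assumes continuous uniform draws over -0.56° to +0.56°, redraws until every constraint holds, and adds an optional requirement that the target be at an edge or in the middle (our own addition, used to fill those conditions). Taken literally, these constraints leave no valid layout for a few cells (for example, an edge target at ±0.08° with large separation and set size 4), in which case the sketch raises an error; how such cells were handled is not described.

```python
import itertools
import random

SEPARATIONS = {"small": 0.16, "large": 0.40}  # deg, target to nearest distractor
OTHER_RANGE = (-0.56, 0.56)                    # deg, range for the remaining distractors
MIN_GAP = 0.16                                 # deg, minimum distractor-to-distractor gap

def sample_distractors(target, separation, set_size, relation=None,
                       rng=random, max_tries=100_000):
    """Draw distractor disparities for one trial (hypothetical helper).

    relation: None, "edge" (target nearest or farthest) or "middle".
    """
    gap = SEPARATIONS[separation]
    for _ in range(max_tries):
        nearest = target + rng.choice((-gap, gap))
        others = [rng.uniform(*OTHER_RANGE) for _ in range(set_size - 2)]
        # Remaining distractors must be farther from the target than the nearest one.
        if any(abs(d - target) <= gap for d in others):
            continue
        distractors = [nearest] + others
        # Any two distractors must differ by at least MIN_GAP.
        if any(abs(a - b) < MIN_GAP for a, b in itertools.combinations(distractors, 2)):
            continue
        is_middle = min(distractors) < target < max(distractors)
        if relation == "middle" and not is_middle:
            continue
        if relation == "edge" and is_middle:
            continue
        return distractors
    raise RuntimeError("no configuration satisfied the constraints")

print(sample_distractors(0.24, "small", 4, "middle", random.Random(1)))
```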

To examine the effect of spatial relation on visual search, we defined the edge condition as trials in which the target was at either the nearest or the farthest depth position in the search array, and the middle condition as trials in which the target lay in depth between distractors. Because a set size of 2 contains only two items, both of which are necessarily at the edges (edge condition), performance at set size 2 was not included in the main analysis.
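The edge/middle coding can be made explicit with a small helper. This is an illustrative sketch assuming that more negative disparity means nearer, so the front edge is the smallest disparity in the array; the helper names are hypothetical. With a single distractor the target is always front or rear, which is why set size 2 has no middle trials.

```python
def spatial_relation(target, distractors):
    """Return "front", "rear", or "middle" for the target's depth position (disparities in deg).

    Assumes smaller (more crossed) disparity = nearer to the observer.
    """
    if target < min(distractors):
        return "front"   # nearest item in the array
    if target > max(distractors):
        return "rear"    # farthest item in the array
    return "middle"      # sandwiched in depth between distractors

def is_edge(target, distractors):
    """Edge condition = front or rear, pooled as in the main analysis."""
    return spatial_relation(target, distractors) != "middle"

print(spatial_relation(-0.24, [-0.08, 0.24]))       # front
print(spatial_relation(0.08, [-0.24, 0.24, 0.56]))  # middle
```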

Procedure

Observers were seated in a dark room to complete the experimental tasks. They were first trained briefly (2–5 min) to become acquainted with the stimuli and the task. At the beginning of each trial, a target array indicating the target depth was presented. In the metric-search task, the target array was identical to the search array (with the same number of items), except that all squares were presented at the target depth. This ensured that participants could accurately perceive and note the target depth they needed to search for at the potential two-dimensional (2-D) planar locations, without revealing which location would contain the actual target. In the ordinal-search task, the squares in the target array were replaced with Arabic numerals representing the depth order of the target: "1" indicated the nearest depth position in the search array, "2" the second nearest, and so on. Observers could view the target array for as long as they wished and then confirmed, by pressing a key, that they had accurately perceived the target depth or depth order. A mask display was presented for 200 ms immediately after the target array disappeared. The mask display was composed of bundles of 2 × 2 blue squares (0.8° × 0.8° per square, 1.6° × 1.6° per bundle), with the center of each bundle aligned with the center of each item in the target array. The four squares in a bundle were randomly assigned the four possible target depths without replacement. Following the mask display, the search array was presented. Observers were asked to find the target as quickly as they could and to press a key upon detecting it.
To verify that participants had found the target, the search array was then replaced by a frame array with all boxes presented at 0° disparity, and observers indicated the 2-D planar location of the target by pressing the key corresponding to that location. Throughout each trial, participants were instructed to fixate a central black cross subtending 1.3° × 1.3°. A diagram of the task sequences is shown in Fig. 1.
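The numerals in the ordinal-search target array and the depths of the mask squares can be generated as below. This is a hypothetical sketch: it assumes more negative disparity is nearer, so the depth-order numeral is one plus the number of distractors nearer than the target, and it reads "without replacement" as a random permutation of the four target depths within each 2 × 2 bundle.

```python
import random

TARGET_DEPTHS = (-0.24, -0.08, 0.08, 0.24)  # deg

def ordinal_label(target, distractors):
    """Depth-order numeral for the ordinal-search target array (1 = nearest).

    Assumes more negative disparity = nearer to the observer.
    """
    return 1 + sum(d < target for d in distractors)

def mask_bundle_depths(rng=random):
    """Disparities for the four squares of one 2 x 2 mask bundle:
    the four target depths assigned without replacement (a random permutation)."""
    return rng.sample(TARGET_DEPTHS, k=len(TARGET_DEPTHS))

print(ordinal_label(0.08, [-0.24, -0.08, 0.24]))  # 3
print(mask_bundle_depths(random.Random(1)))
```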

Each observer completed a metric-search block and an ordinal-search block. Each block contained 192 trials, with 32 trials per separation per set size. For set sizes 3 and 4, the target was at an edge and at a middle position on an equal number of trials. The order of the two blocks was counterbalanced across observers, and trial order was randomized.
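The block structure can be checked with a short script. The sketch below assumes that target depth was fully crossed with the other factors within each separation × set size cell (8 trials per depth, or 4 per depth and relation for set sizes 3 and 4) and that block order simply alternated across participants; the text states only that these were balanced, so both details are assumptions.

```python
import itertools
import random

TARGET_DEPTHS = (-0.24, -0.08, 0.08, 0.24)  # deg
SEPARATIONS = ("small", "large")
SET_SIZES = (2, 3, 4)
TRIALS_PER_CELL = 32  # per separation x set size cell

def build_block(task, rng=random):
    """Return a shuffled list of trial dicts for one block (hypothetical layout)."""
    trials = []
    for separation, set_size in itertools.product(SEPARATIONS, SET_SIZES):
        # Set size 2 can only have edge targets; larger sets split edge/middle evenly.
        relations = ("edge",) if set_size == 2 else ("edge", "middle")
        reps = TRIALS_PER_CELL // (len(TARGET_DEPTHS) * len(relations))
        for depth, relation in itertools.product(TARGET_DEPTHS, relations):
            trials.extend(
                {"task": task, "separation": separation, "set_size": set_size,
                 "target_depth": depth, "relation": relation}
                for _ in range(reps)
            )
    rng.shuffle(trials)
    return trials

def block_order(participant_index):
    """Assumed counterbalancing: even-indexed participants start with metric search."""
    return ("metric", "ordinal") if participant_index % 2 == 0 else ("ordinal", "metric")

block = build_block("metric", random.Random(0))
print(len(block))  # 192
```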

Data analysis

Response time (RT) and search accuracy (percent correct) were recorded. Each participant's median RT for each condition was used in the subsequent analyses. Because set size 2 contained only edge targets (see Stimuli), its median RTs are reported but were not included in further analyses.
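Computing the per-participant condition medians is a simple grouping step. A minimal sketch using only the standard library; the trial-record field names are hypothetical, and because the text does not say whether error trials were excluded before taking medians, the sketch leaves that filtering to the caller.

```python
from collections import defaultdict
from statistics import median

def condition_medians(trials, factors=("separation", "relation", "set_size"), min_set_size=3):
    """Median RT (ms) per participant x condition cell.

    trials: iterable of dicts with 'participant', 'rt', 'set_size' and the factor keys.
    Set sizes below min_set_size are dropped, mirroring the exclusion of set size 2
    from the main ANOVAs. Filter out error trials beforehand if desired.
    """
    groups = defaultdict(list)
    for t in trials:
        if t["set_size"] < min_set_size:
            continue
        key = (t["participant"],) + tuple(t[f] for f in factors)
        groups[key].append(t["rt"])
    return {key: median(rts) for key, rts in groups.items()}
```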

For the metric-search task and the ordinal-search task, we conducted a 2 × 2 × 2 (spatial separation × spatial relation × set size) repeated-measures ANOVA on RT for set sizes 3 and 4. Greenhouse-Geisser corrections were used if the assumption of sphericity was violated. The Bayes factors (BFs) were also reported to provide additional information regarding whether evidence supported the null hypothesis (H0) when p > 0.05. The BF analyses were run using the default priors (Rouder et al., 2012) of the BayesFactor package (Morey et al., 2018) implemented in R, with the number of iterations of the Markov chain Monte Carlo (MCMC) set at 500,000 (Atkinson et al., 2022). A BF10 > 3 indicates substantial evidence for the presence of an effect (i.e., alternative hypothesis, H1), while BF10 < 1/3 indicates evidence for the absence of an effect (i.e., H0) (Jeffreys, 1961).
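Because every factor in the 2 × 2 × 2 design has only two levels, each main effect and interaction has one numerator degree of freedom, and its F statistic equals the square of a one-sample t test on per-participant contrast scores. For the same reason, sphericity cannot be violated in this ANOVA; a Greenhouse-Geisser correction can only matter for the three-level set-size factor in the supplementary analysis. The plain-Python sketch below illustrates this identity; it is not the software the authors used, and it does not compute Bayes factors, which require a dedicated package such as BayesFactor.

```python
import math
from itertools import product
from statistics import mean, stdev

FACTORS = ("separation", "relation", "set_size")  # each coded 0 or 1

def contrast_scores(cell_means, effect):
    """One contrast score per participant for an effect of the 2 x 2 x 2 design.

    cell_means: {participant: {(sep, rel, size): median_rt}} with levels coded 0/1.
    effect: indices into FACTORS, e.g. (0,) = separation main effect,
            (1, 2) = relation x set size interaction.
    """
    scores = []
    for cells in cell_means.values():
        score = 0.0
        for levels in product((0, 1), repeat=len(FACTORS)):
            # +1/-1 weight: product of the codes of the factors in this effect.
            weight = math.prod(1 if levels[i] == 0 else -1 for i in effect)
            score += weight * cells[levels]
        scores.append(score)
    return scores

def rm_anova_effect(cell_means, effect):
    """Return (F, (df1, df2)) for one effect; F is the squared one-sample t of the contrasts."""
    scores = contrast_scores(cell_means, effect)
    n = len(scores)
    # Raises ZeroDivisionError if every participant has the same contrast score.
    t = mean(scores) / (stdev(scores) / math.sqrt(n))
    return t * t, (1, n - 1)
```

With condition medians from 16 participants, `rm_anova_effect(cell_means, (0,))` would give the separation main effect on F(1, 15), and `(1, 2)` the relation × set size interaction.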

Since the boundaries comprised the nearest (front) and farthest (rear) depth positions, we also conducted a 2 (spatial separation) × 2 (edge: front vs. rear) × 3 (set size: 2 vs. 3 vs. 4) repeated-measures ANOVA for each task to test whether the two edge positions affected RT differently. The main results were consistent with those of the preceding ANOVAs, and edge position did not interact with spatial separation or set size (see the Online Supplementary Material, OSM, for detailed reports), suggesting that the front and rear positions can be treated jointly as boundaries.

Results

The average median RT was 1,424 ± 860 ms in the metric-search task and 1,104 ± 650 ms in the ordinal-search task. Mean accuracy was 0.89 ± 0.06 in the metric-search task and 0.97 ± 0.03 in the ordinal-search task. Because the ordinal-search task was both faster and more accurate, the difference between tasks cannot be attributed to a speed-accuracy trade-off.

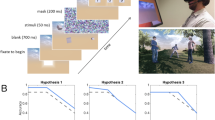

The metric-search task

The results are shown in Fig. 2. The average median RTs for set size 2 were 1,363 ± 725 ms in the small-separation condition and 1,153 ± 766 ms in the large-separation condition. The 2 × 2 × 2 repeated-measures ANOVA showed that the main effect of spatial separation (small separation: 1,629 ± 977 ms; large separation: 1,476 ± 929 ms) was significant, F(1, 15) = 7.39, p = 0.016; ηp² = 0.33; BF10 = 4.02. The main effect of set size (set size 3: 1,434 ± 846 ms; set size 4: 1,670 ± 1,048 ms) was significant, F(1, 15) = 18.79, p = 0.001; ηp² = 0.56; BF10 = 390.91. The main effect of spatial relation was not significant, F(1, 15) = 0.75, p = 0.399; ηp² = 0.05; BF10 = 0.28. None of the two-way or three-way interactions was significant, Fs < 1.68, ps > 0.215, BF10s < 0.47.
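Partial eta squared can be recovered from each F ratio and its degrees of freedom: since F = (SS_effect/df1)/(SS_error/df2), it follows that ηp² = F·df1/(F·df1 + df2). The sketch below applies this to the three main effects reported above as a consistency check, using only the published F values.

```python
def partial_eta_squared(F, df1, df2):
    """Partial eta squared from F: SS_effect / (SS_effect + SS_error) = F*df1 / (F*df1 + df2)."""
    return (F * df1) / (F * df1 + df2)

# (effect, F, df1, df2, reported partial eta squared) from the metric-search ANOVA.
reported = [
    ("spatial separation", 7.39, 1, 15, 0.33),
    ("set size", 18.79, 1, 15, 0.56),
    ("spatial relation", 0.75, 1, 15, 0.05),
]
for effect, F, df1, df2, eta in reported:
    print(f"{effect}: recomputed {partial_eta_squared(F, df1, df2):.2f}, reported {eta}")
```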

Fig. 2 Median response times (RTs) in the metric-search task as a function of set size. a RTs for the small and large spatial separation conditions (collapsed across the spatial relation conditions). b RTs for the edge and middle conditions (collapsed across the spatial separation conditions). c RTs in the small and large separation conditions when the target was at the front (edge) depth position. d RTs in the small and large separation conditions when the target was in the middle. e RTs in the small and large separation conditions when the target was at the rear (edge). Error bars represent ± 1 SEMnorm (Franz & Loftus, 2012).

The ordinal-search task

The results are shown in Fig. 3. The average median RTs for set size 2 were 1,041 ± 694 ms in the small-separation condition and 921 ± 621 ms in the large-separation condition. The 2 × 2 × 2 repeated-measures ANOVA showed that the main effect of spatial separation was not significant, F(1, 15) = 0.02, p = 0.889; ηp² = 0.001; BF10 = 0.19. The main effect of spatial relation was significant, F(1, 15) = 6.55, p = 0.022; ηp² = 0.3; BF10 = 5348.14; the average median RT was 1,072 ± 535 ms in the edge condition and 1,362 ± 934 ms in the middle condition. The main effect of set size (set size 3: 1,161 ± 670 ms; set size 4: 1,273 ± 789 ms) was significant, F(1, 15) = 6.69, p = 0.021; ηp² = 0.31; BF10 = 0.75. More importantly, the interaction between spatial relation and set size was significant, F(1, 15) = 20.24, p < 0.001; ηp² = 0.574; BF10 = 1.02. Post hoc tests showed that when the target was at an edge, the average median RT for set size 4 (1,078 ± 589 ms) did not differ significantly from that for set size 3 (1,027 ± 502 ms), F(1, 15) = 1.59, p = 0.226; ηp² = 0.1; BF10 = 0.59. When the target was in the middle, the mean RT for set size 4 (1,473 ± 997 ms) was significantly longer than that for set size 3 (1,243 ± 838 ms), F(1, 15) = 19.24, p = 0.001; ηp² = 0.56; BF10 = 40.63. None of the other two-way or three-way interactions was significant, Fs < 4.29, ps > 0.056, BF10s < 0.45.
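A conventional summary of search efficiency is the slope of RT against set size. The sketch below computes slopes from the group-average RTs reported for set sizes 3 and 4 only; with two set sizes and averaged medians, this is a rough descriptive index rather than an analysis reported in the study.

```python
def search_slope(rt_at_3, rt_at_4, n_small=3, n_large=4):
    """Search slope in ms per added item between two set sizes."""
    return (rt_at_4 - rt_at_3) / (n_large - n_small)

# Group-average RTs (ms) for set sizes 3 and 4, as reported in the Results.
reported = {
    "metric search (all targets)": (1434, 1670),
    "ordinal search, edge target": (1027, 1078),
    "ordinal search, middle target": (1243, 1473),
}
for label, (rt3, rt4) in reported.items():
    print(f"{label}: {search_slope(rt3, rt4):.0f} ms/item")
```

This yields about 236, 51, and 230 ms/item, respectively: adding a distractor slowed ordinal search mainly when the target was in the middle, in line with the spatial relation × set size interaction.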

Fig. 3 Median response times (RTs) in the ordinal-search task as a function of set size. a RTs for the small and large spatial separation conditions (collapsed across the spatial relation conditions). b RTs for the edge and middle conditions (collapsed across the spatial separation conditions). c RTs in the small and large separation conditions when the target was at the front (edge) depth position. d RTs in the small and large separation conditions when the target was in the middle. e RTs in the small and large separation conditions when the target was at the rear (edge). Error bars represent ± 1 SEMnorm (Franz & Loftus, 2012).

Discussion

In the present study, participants were instructed to intentionally process depth information as either metric or ordinal in order to perform the search task. Participants searched faster and more accurately for targets defined by depth order than for targets defined by metric depth position. Moreover, the results demonstrated a pattern of dissociation. When depth information was processed as a metric variable, search time decreased significantly with increasing spatial separation between the target and the distractor(s) (BF10 = 4.02, indicating evidence for an effect), but was not significantly affected by the type of spatial relation between the target and the distractors (BF10 = 0.28, indicating evidence for no effect). When depth information was processed as an ordinal variable, RT did not change significantly with spatial separation (BF10 = 0.19, indicating evidence for no effect), but was significantly affected by the type of spatial relation (BF10 = 5348.14, indicating strong evidence for an effect): search was much faster for the edge positions than for the middle ones.
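The verbal labels attached to the Bayes factors follow the cutoffs given in the Data analysis section (BF10 > 3 for an effect, BF10 < 1/3 for no effect; Jeffreys, 1961). A minimal helper applying those cutoffs to the values discussed here; values between the cutoffs are labeled inconclusive.

```python
def bf_evidence(bf10, cutoff=3.0):
    """Label BF10 with the study's cutoffs: > 3 favors H1, < 1/3 favors H0."""
    if bf10 > cutoff:
        return "evidence for an effect (H1)"
    if bf10 < 1.0 / cutoff:
        return "evidence for no effect (H0)"
    return "inconclusive"

for label, bf10 in [
    ("metric search, spatial separation", 4.02),
    ("metric search, spatial relation", 0.28),
    ("ordinal search, spatial separation", 0.19),
    ("ordinal search, spatial relation", 5348.14),
]:
    print(f"{label}: BF10 = {bf10} -> {bf_evidence(bf10)}")
```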

Comparing overall search times and accuracies between the two tasks, we found that searching for a target defined by depth order was faster and more accurate than searching for a target defined by metric depth, even though defining a depth order requires processing multiple positions whereas defining a metric depth does not. It has been shown that humans are more sensitive to the difference in depth between two objects than to the absolute metric depth of a single object (Norman & Todd, 1998). It is also possible that perceiving the depth order of an object does not necessarily involve processing metric depth, but instead involves rapid comparisons of relative depths. Another possibility is that perceiving depth order does involve processing metric depths first, but only at a “coarse encoding” level of processing. In other words, ordinal search can terminate at an early “coarse” level of encoding, whereas metric search requires a “fine” level of encoding, so RTs are shorter for ordinal search.

The “coarse-to-fine” theory of visual search may explain why search time for metric depth decreased with spatial separation. According to this theory, scanning starts at a global, or “coarse,” scale and then progressively focuses on a more local, or “fine,” scale (Godwin et al., 2014; Over et al., 2007). In our study, when the separation was large, participants might quickly locate the target and end the search at an early “coarse” scanning stage, yielding a shorter RT. Conversely, when the separation was small, participants might have to progress to the later “fine” scanning stage to discriminate the target from the distractor, yielding a longer RT.

However, we found no evidence that searching for a target at a particular depth order was affected by spatial separation. In this task, instead of a spatial location, participants held an abstract number (or another possible verbal representation) in their search template, which may indicate a semantic category for spatial relation in depth. Studies have shown that categorical information can direct attention rapidly (Nako et al., 2014), and that search is more efficient when the target and distractors belong to different categories, with visual similarity controlled (Smilek et al., 2006). In our study, when the target was indicated by its depth order, visual search may have been guided by attention based on categorical ordinal information. In this case, the spatial separation between the target and distractors is not essential, because the target can already be distinguished from the distractors by its different depth order.

Spatial relation showed no significant effect on searching for a target defined by its metric depth. It has been shown that the perception of spatial coordinates (metric information; e.g., one object is 20 cm away from another object) and of categorical spatial relationships (ordinal information; e.g., one object is to the left of another object) may be processed mainly in the right and left hemispheres, respectively (Van der Ham et al., 2014), suggesting that categorical and coordinate information can be considered perceptually independent (see also Lopez et al., 2020). This may explain our finding that spatial relation did not significantly affect the processing of metric depth. By contrast, spatial relation significantly affected searching for a target defined by its ordinal depth. Since ordinal depth essentially represents an item's spatial relation to other items, top-down modulation is more likely to focus on the global configuration of the search display. Hence, the edge positions, which confine the search to the space bounded by the nearest (median RT = 1,038 ± 528 ms) and farthest (median RT = 1,157 ± 587 ms; see OSM) depths, might be prioritized in processing. Previous research has shown that the boundaries or contours of planar shapes can constrain the distribution of attention (Avrahami, 1999; Hollingworth et al., 2012). Like 2-D planar boundaries, the nearest and farthest depth positions in our study may constrain the scope of attention when searching for ordinal depth, and are therefore processed with priority. Indeed, a previous study of working memory showed that performance for edge depth positions was better than for middle positions (e.g., Qian & Zhang, 2019), suggesting that boundaries play a role in visual perceptual and cognitive tasks.

An intriguing finding for ordinal depth search is that search time increased significantly with the number of distractors when the target was at a middle position (BF10 = 40.63, indicating strong evidence for an effect), whereas it appeared invariant with the number of distractors when the target was at an edge (BF10 = 0.59, indicating that the evidence supports neither H0 nor H1). The increase in RT with the number of distractors is expected, since the search display was composed of homogeneous blue squares, and either an item-by-item serial search model or a parallel search model with noise can explain this pattern of results (Narbutas et al., 2017). Interestingly, searching for the nearest or farthest item was not significantly affected by adding distractors. This may indicate that edge processing is pre-attentive and automatic. Research suggests that figure-ground segregation in a scene proceeds pre-attentively (Appelbaum & Norcia, 2009). Because the edge positions help to delimit the search space within the scene, they may receive prioritized processing and function similarly to figure-ground segregation. Therefore, searching for an edge is faster and easier, whereas searching for a middle plane is slower and more difficult.

It should be noted that the current study was conducted within peripersonal space and tested only a limited number of items in the search task. With larger set sizes and greater spatial separations, the non-significant effect of separation, or of set size for edge positions, in the ordinal-search task might become significant. Further work is required to determine whether the present findings generalize to greater viewing distances or to experimental settings with different parameters.

To summarize, we found that visual search for metric depth is affected by the spatial separation between items. Ordinal depth describes the relative position of an individual item within the structure of the whole stimulus set, and its processing is thus sensitive to the spatial relations between items. Given this pattern of dissociation, we suggest that there are at least partially separate processing mechanisms for metric and ordinal depth information.

Data availability

The data that support the findings of this study are available from the corresponding author upon request.

References

Appelbaum, L. G., & Norcia, A. M. (2009). Attentive and pre-attentive aspects of figural processing. Journal of Vision, 9(11), 18–18.

Atkinson, A. L., Oberauer, K., Allen, R. J., & Souza, A. S. (2022). Why does the probe value effect emerge in working memory? Examining the biased attentional refreshing account. Psychonomic Bulletin & Review, 29(3), 891–900.

Avrahami, J. (1999). Objects of attention, objects of perception. Perception & Psychophysics, 61(8), 1604–1612.

Awh, E., Belopolsky, A. V., & Theeuwes, J. (2012). Top-down versus bottom-up attentional control: A failed theoretical dichotomy. Trends in Cognitive Sciences, 16(8), 437–443.

de la Rosa, S., Moraglia, G., & Schneider, B. A. (2008). The magnitude of binocular disparity modulates search time for targets defined by a conjunction of depth and colour. Canadian Journal of Experimental Psychology/Revue Canadienne de Psychologie Expérimentale, 62(3), 150.

Fang, W., Wang, K., Zhang, K., & Qian, J. (2022). Spatial attention based on 2D location and relative depth order modulates visual working memory in a 3D environment. British Journal of Psychology. https://doi.org/10.1111/bjop.12599

Franz, V. H., & Loftus, G. R. (2012). Standard errors and confidence intervals in within-subjects designs: Generalizing Loftus and Masson (1994) and avoiding the biases of alternative accounts. Psychonomic Bulletin & Review, 19(3), 395–404.

Gazzaley, A., & Nobre, A. C. (2012). Top-down modulation: Bridging selective attention and working memory. Trends in Cognitive Sciences, 16(2), 129–135.

Godwin, H. J., Reichle, E. D., & Menneer, T. (2014). Coarse-to-fine eye movement behavior during visual search. Psychonomic Bulletin & Review, 21(5), 1244–1249.

Grossberg, S., & Mingolla, E. (1985). Neural dynamics of form perception: Boundary completion, illusory figures, and neon color spreading. Psychological Review, 92(2), 173.

Hollingworth, A., Maxcey-Richard, A. M., & Vecera, S. P. (2012). The spatial distribution of attention within and across objects. Journal of Experimental Psychology: Human Perception and Performance, 38(1), 135.

Jeffreys, H. (1961). Theory of probability. Oxford University Press.

Lopez, A., Caffò, A. O., Postma, A., & Bosco, A. (2020). How to separate coordinate and categorical spatial relation components in integrated spatial representations: A new methodology for analysing sketch maps. Scandinavian Journal of Psychology, 61(5), 607–615.

McKee, S. P., & Taylor, D. G. (2010). The precision of binocular and monocular depth judgments in natural settings. Journal of Vision, 10(10), 5–5.

Moore, C. M., Elsinger, C. L., & Lleras, A. (2001). Visual attention and the apprehension of spatial relations: The case of depth. Perception & Psychophysics, 63(4), 595–606.

Morey, R. D., Rouder, J. N., Jamil, T., Urbanek, S., Forner, K., & Ly, A. (2018). Package ‘BayesFactor’ (Version 0.9.12-4.2). Retrieved from https://cran.r-project.org/web/packages/BayesFactor/BayesFactor.pdf. Accessed 25 Oct 2022.

Nako, R., Wu, R., & Eimer, M. (2014). Rapid guidance of visual search by object categories. Journal of Experimental Psychology: Human Perception and Performance, 40(1), 50.

Narbutas, Y.-S., Lin, M. K., & Heinke, D. (2017). Serial versus parallel search: A model comparison approach based on reaction time distributions. Visual Cognition, 25(1–3), 306–325.

Norman, J. F., & Todd, J. T. (1998). Stereoscopic discrimination of interval and ordinal depth relations on smooth surfaces and in empty space. Perception, 27(3), 257–272.

Over, E. A. B., Hooge, I. T. C., Vlaskamp, B. N. S., & Erkelens, C. J. (2007). Coarse-to-fine eye movement strategy in visual search. Vision Research, 47(17), 2272–2280.

Qian, J., & Zhang, K. (2019). Working memory for stereoscopic depth is limited and imprecise—Evidence from a change detection task. Psychonomic Bulletin & Review, 26(5), 1657–1665.

Qian, J., Li, Z., Zhang, K., & Lei, Q. (2020). Relation matters: Relative depth order is stored in working memory for depth. Psychonomic Bulletin & Review, 27(2), 341–349.

Rawlings, S. C., & Shipley, T. (1969). Stereoscopic acuity and horizontal angular distance from fixation. Journal of the Optical Society of America, 59(8), 991–993.

Reeves, A., & Qian, J. (2021). The short-term retention of depth. Vision, 5(4), 59.

Rouder, J. N., Morey, R. D., Speckman, P. L., & Province, J. M. (2012). Default Bayes factors for ANOVA designs. Journal of Mathematical Psychology, 56(5), 356–374.

Smilek, D., Dixon, M. J., & Merikle, P. M. (2006). Revisiting the category effect: The influence of meaning and search strategy on the efficiency of visual search. Brain Research, 1080(1), 73–90.

Sousa, R., Brenner, E., & Smeets, J. B. (2011). Objects can be localized at positions that are inconsistent with the relative disparity between them. Journal of Vision, 11(2), 1–18.

Van der Ham, I. J., Postma, A., & Laeng, B. (2014). Lateralized perception: The role of attention in spatial relation processing. Neuroscience & Biobehavioral Reviews, 45, 142–148.

Wang, K., Jiang, Z., Huang, S., & Qian, J. (2021). Increasing perceptual separateness affects working memory for depth–re-allocation of attention from boundaries to the fixated center. Journal of Vision, 21(7), 8.

Wolfe, J. M. (2021). Guided Search 6.0: An updated model of visual search. Psychonomic Bulletin & Review, 28(4), 1060–1092.

Zhang, K., Gao, D., & Qian, J. (2021). Overestimation and contraction biases of depth information stored in working memory depend on spatial configuration. British Journal of Psychology, 112(1), 230–246.

Acknowledgements

This research was supported by the National Natural Science Foundation of China (32271100), the Guangdong Basic and Applied Basic Research Foundation (2021A1515010840), and the Fundamental Research Funds for the Central Universities (20wkzd12). The authors have no competing financial interests that might be perceived to influence the results and/or discussion reported in this paper.

Additional information

Open practices statement

The data and materials for all experiments are available upon request, and none of the experiments was preregistered.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

ESM 1 (PDF, 165 kB)

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Zhang, K., Qian, J. Top-down modulation on depth processing: Visual searches for metric and ordinal depth information show a pattern of dissociation. Psychon Bull Rev 30, 1380–1387 (2023). https://doi.org/10.3758/s13423-022-02232-8

DOI: https://doi.org/10.3758/s13423-022-02232-8