Abstract

Path integration is a process in which navigators estimate their position and orientation relative to a known location by using body-based internal sensory cues that arise from navigation-related bodily motion (e.g., vestibular and proprioceptive signals). Although humans are capable of navigating via path integration in small-scale space, a question has been raised as to whether path integration plays any role in human navigation in large-scale space because it is inherently prone to accumulating error. In this review, we examined whether there is evidence that path integration contributes to large-scale human navigation. It was found that navigation with path integration (e.g., walking in a large-scale environment) can enhance learning of the layout of the environment as compared with mere exposure to the environment without path integration (e.g., viewing a walk-through video while sitting), suggesting that the body-based cues are reliably processed and encoded through path integration when they are present during navigation. This facilitatory effect is clearer when proprioceptive cues are available than when the navigators receive vestibular cues only (e.g., driving or being pushed in a wheelchair). More specifically, path integration with proprioceptive cues may help build survey knowledge of the environment in which metric distance and direction between landmarks are represented. Overall, the existing data are indicative of path integration’s contributions to large-scale navigation. This suggests that instead of dismissing it as too error-prone, path integration should be characterised as a fundamental mechanism of human navigation irrespective of the scale of a space in which it is carried out.


Spatial navigation is crucial for human interaction and survival. Sensory guidance of navigation is achieved via two complementary processes: piloting and path integration (Loomis et al., 1999). In piloting, navigators determine their position by perceiving visual, auditory, and tactile landmarks in an environment. In this method, external sensing of the landmarks is necessary, but the navigators do not need to process information about their movement. In path integration (also known as dead reckoning), on the other hand, the navigators derive the velocity and acceleration of their locomotion from internal cues that are generated by the motion of the body (i.e., vestibular, proprioceptive, and efference-copy signals—collectively referred to as idiothetic signals; Mittelstaedt & Mittelstaedt, 2001),¹ and use this information to track and update their location and orientation. There is abundant literature investigating the mechanisms of path integration and how it is utilised by humans in small-scale experimental environments (e.g., Chance et al., 1998; Klatzky et al., 1998; Mittelstaedt & Mittelstaedt, 2001). However, the involvement of path integration in real-world navigational situations remains poorly understood.

A current position on this issue highlights the error in estimating self-location when human navigators use path integration by itself. Generating, sensing, and integrating body-based cues each come with their own errors, and since the computation of the self-location is continuously updated as the navigators take every step, these errors carry over and accumulate as a locomotion path progresses (Cheung et al., 2007, 2008). According to this position, the rate of error accumulation is too rapid, and therefore path integration alone should be useful only for brief periods. Indeed, when Souman et al. (2009) had blindfolded participants attempt to walk straight in a large field for 50 minutes, on average, they could not go farther than 100 m from the starting location due to random veering. They reached this asymptotic level of displacement within just a few minutes, suggesting that beyond this point they were not able to maintain any systematic estimation of their location and orientation by path integration. On the basis of findings like this, path integration is recognised as a primitive mechanism that does not contribute much to navigation in the real-world context (Dudchenko, 2010; Eichenbaum & Cohen, 2014). When piloting is simultaneously possible, the navigators might predominantly rely on external landmarks and make little use of body-based cues (Foo et al., 2005, 2007).
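The idea that uncorrected errors "carry over and accumulate" can be illustrated with a toy simulation. The sketch below is not a model taken from Cheung et al. (2007, 2008) or any other cited study; it assumes, purely for illustration, a navigator who walks straight ahead in steps of 0.7 m while every step adds independent Gaussian noise (SD 0.01 rad) to the internal heading estimate, with no external correction. The function name `rms_position_error` and all parameter values are hypothetical.

```python
import math
import random

def rms_position_error(n_steps, heading_noise_sd=0.01, step_length=0.7,
                       n_trials=1000, seed=1):
    """RMS distance between true and estimated end position after a straight walk.

    Hypothetical toy model: each step of step_length metres adds independent
    Gaussian noise (SD heading_noise_sd, radians) to the heading estimate,
    and that heading error is never corrected by external landmarks.
    """
    rng = random.Random(seed)
    total_sq = 0.0
    for _ in range(n_trials):
        heading_error = 0.0
        est_x = est_y = 0.0
        for _ in range(n_steps):
            heading_error += rng.gauss(0.0, heading_noise_sd)  # error carries over
            est_x += step_length * math.cos(heading_error)
            est_y += step_length * math.sin(heading_error)
        true_x = step_length * n_steps  # the true path runs straight along x
        total_sq += (est_x - true_x) ** 2 + est_y ** 2
    return math.sqrt(total_sq / n_trials)

errors = {n: rms_position_error(n) for n in (25, 100, 400)}
for n, err in errors.items():
    print(f"{n * 0.7:6.1f} m walked -> RMS error {err:6.2f} m")
```

Under this toy model, quadrupling the distance walked multiplies the error by roughly eight rather than four, because a heading error made early in the walk displaces every later step. How fast real human path integration error grows is an empirical question that this sketch does not settle.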

However, after controlling for or resetting the accumulated error, path integration has been shown to let blindfolded navigators walk accurately to a goal that is up to 20 m away (Andre & Rogers, 2006; Rieser et al., 1990; Thomson, 1983). Furthermore, although the error increases beyond this distance, the primary mechanisms of path integration seem to remain consistent up to 500 m, generating less accurate but still systematic estimation of direction and distance of travel (Cornell & Greidanus, 2006; Harootonian et al., 2020; Hecht et al., 2018). Thus, if it is the accumulation of error that makes navigation by path integration impractical, all it takes for navigators to utilise path integration may be to clear or reduce the error periodically (e.g., approximately every 20 m). The error is corrected when the navigators can determine their location and orientation using external landmarks (Etienne et al., 2004; Philbeck & O’Leary, 2005; Zhang & Mou, 2017; Zhao & Warren, 2015), which occurs frequently during navigation in everyday environments. For example, for the last author of this article to go from his desk to the post room, he walks 3 m to reach his office door, at which path integration error is reduced to zero; then he walks 17 m in the corridor to come to a corner, and by turning there he obtains another opportunity to reset the error; in the following segment he walks 5 m to arrive at the post room. In this manner, even though the entire trajectory may go beyond the limit of path integration, it often consists of a series of short segments, within each of which path integration may well offer a reliable navigation strategy.
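The benefit of periodic resetting can be made concrete with a small calculation. The Python sketch below assumes a hypothetical heading-drift model (each 0.7 m step adds independent Gaussian heading noise with SD 0.01 rad), uses its small-angle approximation, and ignores the turns themselves; the function names and parameter values are illustrative assumptions, not quantities reported in the cited studies.

```python
import math

def drift_rms_error(distance_m, heading_noise_sd=0.01, step_length=0.7):
    """Approximate RMS lateral error after walking distance_m with no reset.

    Hypothetical toy model: every step of step_length metres adds independent
    Gaussian noise (SD heading_noise_sd radians) to the heading estimate.
    For n steps and small angles, the lateral error has variance
    heading_noise_sd**2 * step_length**2 * n(n + 1)(2n + 1) / 6.
    """
    n = max(1, round(distance_m / step_length))
    return heading_noise_sd * step_length * math.sqrt(n * (n + 1) * (2 * n + 1) / 6)

def peak_error(segment_lengths_m, reset_at_segment_ends):
    """Largest RMS error reached along a route made of straight segments.

    If landmarks (a door, a corridor corner) reset the error at each segment
    end, only the longest single segment matters; otherwise the error keeps
    building up over the whole route.
    """
    if reset_at_segment_ends:
        return max(drift_rms_error(length) for length in segment_lengths_m)
    return drift_rms_error(sum(segment_lengths_m))

desk_to_post_room = [3, 17, 5]   # segment lengths from the desk-to-post-room example
long_route = [20] * 10           # hypothetical 200 m route split into 20 m segments
for route in (desk_to_post_room, long_route):
    print(sum(route), "m:",
          f"no reset {peak_error(route, False):.2f} m,",
          f"reset {peak_error(route, True):.2f} m")
```

The calculation is analytic rather than simulated so that the effect of resetting is easy to see: in this toy model, breaking the hypothetical 200 m route into 20 m segments keeps the worst-case error under a metre, whereas the uninterrupted route drifts by many metres.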
Indeed, in small-scale space that includes external cues, it has been shown that internal cues are not disregarded but combined, often in a nearly optimal (i.e., Bayesian) fashion, with the external cues to mediate various aspects of navigational behaviour (Chen et al., 2017; Kalia et al., 2013; McNamara & Chen, 2022; Nardini et al., 2008; Newman & McNamara, 2021; Qi et al., 2021; Sjolund et al., 2018; Tcheang et al., 2011; ter Horst et al., 2015; Zhang et al., 2020; see also Harootonian et al., 2022; Zhao & Warren, 2015, 2018).
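"Nearly optimal (i.e., Bayesian)" combination is commonly formalised in this literature as maximum-likelihood integration of independent Gaussian estimates, in which each cue is weighted by its reliability (inverse variance). The Python sketch below shows that computation for two cues; the function name and the example distances and SDs are hypothetical and are not taken from any of the cited experiments.

```python
import math

def combine_cues(estimates, sds):
    """Reliability-weighted (maximum-likelihood) combination of independent
    Gaussian estimates. Returns the combined estimate, its SD, and the weights."""
    reliabilities = [1.0 / (sd * sd) for sd in sds]   # reliability = 1 / variance
    total = sum(reliabilities)
    weights = [r / total for r in reliabilities]      # weights sum to 1
    combined = sum(w * x for w, x in zip(weights, estimates))
    combined_sd = math.sqrt(1.0 / total)
    return combined, combined_sd, weights

# Hypothetical example: a landmark-based estimate of distance to a goal
# (10 m, SD 1 m) and a body-based estimate (12 m, SD 2 m).
estimate, sd, weights = combine_cues([10.0, 12.0], [1.0, 2.0])
print(f"combined {estimate:.2f} m, SD {sd:.2f} m, weights {weights}")
```

Here the combined SD (about 0.89 m) is smaller than that of either cue alone. A reduction of this kind is one signature such studies look for: if body-based cues were simply disregarded, no reduction in variability would be expected.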

Furthermore, in the animal literature, there is recent emphasis on the role of proximal, as opposed to distal, landmarks in determining the contribution of body-based cues to driving both location-learning behaviour and spatial tuning of hippocampal place cells (Jayakumar et al., 2019; Knierim & Hamilton, 2011; Knierim & Rao, 2003; Sanchez et al., 2016). Similar findings are available from human behavioural studies as well (albeit still limited; e.g., Jabbari et al., 2021; Zhang & Mou, 2019), suggesting that path integration does interact with local external cues that identify specific locations in the immediate surroundings. Taken together, these ideas lead to the proposal that instead of dismissing path integration as too error-prone, human navigation in real-world situations may be better conceptualised as a multimodal process to which both landmarks and body-based cues contribute. From this point of view, this focussed review examines whether the role of path integration in large-scale navigation by humans is demonstrated in the current literature.

Scope of this article and related previous reviews

Chrastil and Warren (2012) provided a broad review of active and passive spatial learning, and as a part of it, they discussed the roles of body-based cues in navigation and other related processes. Compared with their work, the current review is more narrowly focussed on possible contributions of path integration to navigation in large-scale space, which is defined here as an environment that is large enough to let navigators travel farther than the presumed limit of reliable path integration in the absence of error resetting (i.e., approximately 20 m; Andre & Rogers, 2006; Rieser et al., 1990; Thomson, 1983). Such navigation is referred to as large-scale navigation in this article. To meet this definition, the environment does not need to have an expanse that accommodates a straight-line distance of 20 m. Even when the environment itself is smaller, it can still contain a path that is longer than 20 m (e.g., a maze-like environment). Typically, large-scale navigation involves going beyond locations that can be directly perceived from a single vantage point (Ittelson, 1973; Montello, 1993). This specific focus is justified because, as summarised above, this is the kind of navigation in which the utility of path integration is most debatable.

Ruddle (2013) and Waller and Hodgson (2013) also reviewed similar topics with the overarching goal of assessing whether and how body-based cues enhance spatial orientation and navigation in virtual environments. The scope of the current review is different from theirs—this review is concerned with path integration and its role in large-scale navigation in general, whereas the aims of their reviews were to contribute specifically to the understanding and development of virtual-reality technology.

Although some key studies that predate the previous reviews (Chrastil & Warren, 2012; Ruddle, 2013; Waller & Hodgson, 2013) are discussed in this article, emphasis is placed on recent data that became available after those reviews were published. In addition, other than a few references that are particularly relevant to discussions below, this review is centred on findings about human spatial navigation. Comprehensive reviews are available for research on path integration by nonhuman species (Collett & Collett, 2000; Etienne & Jeffery, 2004; Heinze et al., 2018; McNaughton et al., 1996; McNaughton et al., 2006; Moser et al., 2014).

While this review was primarily developed through the authors’ knowledge of the literature, it was complemented with a systematic search of the PubMed database (https://pubmed.ncbi.nlm.nih.gov) to ensure comprehensive coverage of previous studies. The search was run in December 2021 with the following combination of keywords: (‘path integration’ or ‘dead reckoning’ or body-based or idiothetic or proprioceptive or vestibular or proprioception) and (navigation or wayfinding) and human. This search returned 513 items, 31 of which had already been included in the initial draft of this review. The remaining 482 items were screened by their titles, abstracts, and method sections, with the goal of identifying empirical papers that described studies on large-scale navigation by humans. To be considered for this review, the studies had to include independent variables through which the effects of body-based cues on large-scale navigation could be inferred (e.g., comparing navigation performance with and without those cues). This screening resulted in excluding 460 papers; most of them were excluded because navigation was not large-scale enough or no body-based cues were involved (e.g., navigation in a virtual environment using visual cues only). The same process was repeated in August 2022 and identified two more relevant papers (out of 15 new items). Thus, a total of 24 papers were added to this review as a result of conducting the PubMed search.

The current understanding of path integration in large-scale navigation

To begin this review, we first give an outline of the current knowledge about the contribution of path integration to everyday human navigation. The goal of this section is to broadly survey the literature to set up a working hypothesis about possible roles of path integration in large-scale navigation. To this end, relevant previous studies are grouped into four types and summarised below.

Assessing spatial memory that results from navigation with or without path integration

In previous studies that aimed to evaluate human navigation in a large-scale environment, a common approach was to test spatial memory that participants formed after they navigated in the environment. In this approach, memory performance was used as a measure for assessing the degree to which a given kind of spatial information was acquired and processed in the service of navigation. When applied to research on path integration, these studies examined whether the presence of body-based cues during navigation enhanced learning of the environmental layout.

Waller et al. (2004) examined the effects of vestibular and proprioceptive cues on the acquisition of landmark locations in a university campus. Among several manipulations made in this study, critical conditions involved participants who navigated the campus either by walking in it (which allowed them to obtain vestibular, proprioceptive, and visual cues) or by watching a walk-through video of the campus while sitting still in a laboratory (which provided the visual cues only). Notably, the two groups of participants received identical visual cues because those who walked shot the video through a camera attached to their head and watched it in real time through a head-mounted display (Fig. 1). Their memory for the landmark locations was tested by having them point to the landmarks and measuring absolute angular error in pointing. The walking group performed this test significantly better than the video group, showing that the vestibular and proprioceptive cues benefited spatial learning over and above what the visual cues did. Comparable patterns were found when participants learnt the layout of a building by navigating in it on foot, being pushed in a wheelchair, or viewing a video captured by the participants who walked (Waller & Greenauer, 2007): Whereas the wheelchair and video groups (which had either visual and vestibular cues or visual cues only) did not differ from each other in a memory task in which they pointed to target locations along complex paths they experienced, the walking group (which had visual, vestibular, and proprioceptive cues) outperformed the other two groups. Together, these results indicate that body-based (particularly proprioceptive) cues were processed and encoded in mental spatial representations, which in turn suggests that path integration yielded reliable output during large-scale navigation.

Fig. 1. A participant and the experimenter in the Waller et al. (2004) study. The participant was in the condition in which he walked in the to-be-learnt environment. The participant wore a head-mounted display while walking. A camera was attached to the front of the display, and the image it captured was projected in real time to the participant’s eyes. The experimenter guided the participant while he also carried the equipment for video recording and power supply. Reprinted from “Body-Based Senses Enhance Knowledge of Directions in Large-Scale Environments,” by D. Waller et al., 2004, Psychonomic Bulletin & Review, 11(1), p. 160 (https://doi.org/10.3758/BF03206476), with permission from Springer Nature Customer Service Centre GmbH. Copyright 2004 by the Psychonomic Society, Inc.
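Absolute angular error, the pointing measure used by Waller et al. (2004), has to respect the circularity of direction: pointing at 350° when the target lies at 10° is a 20° error, not a 340° one. The Python sketch below shows one common way to compute it; the function names and the trial values are hypothetical and are not drawn from the study.

```python
def absolute_angular_error(pointed_deg, correct_deg):
    """Unsigned angular difference in degrees, in the range 0 to 180.

    The signed difference is first wrapped into [-180, 180) so that errors
    across the 0/360 boundary are measured the short way round.
    """
    signed = (pointed_deg - correct_deg + 180.0) % 360.0 - 180.0
    return abs(signed)

def mean_absolute_error(pointed, correct):
    """Mean absolute angular error over a set of pointing trials."""
    errors = [absolute_angular_error(p, c) for p, c in zip(pointed, correct)]
    return sum(errors) / len(errors)

# Hypothetical trials: pointed directions vs. true landmark directions (degrees).
print(mean_absolute_error([350, 95, 200], [10, 80, 180]))
```

Averaging the unsigned errors, rather than signed ones, keeps opposite errors on different trials from cancelling out, which is why this measure reflects overall pointing accuracy.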

More recently, Bonavita et al. (2022) took a similar approach by using two sets of spatial learning tasks, one of which involved experiencing a hospital campus via actual navigation and the other of which required watching a video that simulated driving through a city. Participants’ spatial memories for the hospital campus and the city were assessed by having them indicate the direction of travel at each intersection, recognise individual landmarks, and specify the order in which the landmarks were encountered. Additionally, the participants either drew the navigated path on a blank map of the hospital campus or placed the pictures of the landmarks on a map of the city in which the simulated driving path was already indicated. Performance in these tasks was scored so that higher scores corresponded to more accurate memories for the environments (e.g., counting the number of correctly recognised landmarks). When scores of each task were compared between the two learning conditions (actual navigation versus simulated driving), they showed statistically significant positive correlations (rs > .45, ps < .01), with the exception that the scores from the direction indication task were not related to each other (r = .02, p = .46). Bonavita et al. interpreted this pattern of correlations by postulating that the presence of body-based cues during spatial learning was important for memorising specific turn directions, but for other aspects of navigation that were captured by their tasks, the body-based cues were not crucial and the participants formed comparable representations of large-scale space with or without these cues. However, the validity of this interpretation is unclear because the learning conditions were not equated. Actual navigation was performed only on the hospital campus and simulated driving was carried out only in the city, creating multiple differences in what the participants learnt in each condition (e.g., different numbers of intersections and landmarks).
The tasks also differed in their details between the conditions. For example, while the participants were first shown the correct path in the navigation condition, they acquired it by trial and error in the simulated driving condition (i.e., they guessed the travel direction and received corrective feedback). Thus, although it is interesting that only the direction indication task did not yield a reliable correlation, the dissimilarities between the conditions and the tasks do not permit drawing a clear conclusion as to whether the body-based cues contributed to large-scale navigation in the Bonavita et al. study.

Using the same design as in the Waller et al. (2004) and Waller and Greenauer (2007) studies, Waller et al. (2003) specifically examined the effects of vestibular cues through the comparison between participants who were in the back seat of a car that drove through a city neighbourhood (which allowed them to obtain both vestibular and visual cues) and those who were seated in a room and watched a video of the neighbourhood taken from this car (which gave them the visual cues only). The two groups were matched in the visual cues they received—those in the car viewed the same video through a head-mounted display as it was recorded. All participants performed memory tests in which they pointed to landmarks in the neighbourhood, estimated straight-line distances between them, and indicated the landmark locations by placing markers on a grid sheet. On none of these measures did the car group show enhanced spatial memory relative to the video group. These results are consistent with those from Waller and Greenauer (2007) and suggest that path integration on the basis of vestibular signals alone might not always provide spatial information about the environment above and beyond what is obtainable through vision.

It should be noted, however, that there are instances in the literature that are suggestive of vestibular contributions to large-scale navigation. For example, Jabbari et al. (2021) had participants drive along predetermined paths in a virtual town with or without vestibular cues by using a driving simulator on a motion platform that was capable of delivering vestibular stimulation according to the simulated vehicle’s movement. The participants learnt the paths by following signs, and then at test, they were to reach the same destinations as in the learning phase without the signs. The rate of success in arriving at the destinations within a given time limit was significantly higher in the presence of the vestibular cues, but this pattern was observed only when the virtual town contained proximal landmarks (as opposed to distal or no landmarks). Although it is yet to be specified how the vestibular cues interacted with the proximal landmarks in this driving task, results like this demonstrate that there are situations in which the vestibular signals can exert observable effects on navigation in large-scale space.

Another notable pattern of findings in the literature is that patients with vestibular damage are impaired in navigation in large-scale space. Biju et al. (2021) had those patients and age- and gender-matched control participants walk paths approximately 30 m long on a hospital floor. The paths led them to designated destinations via circuitous routes. When the patients and controls were asked to retrace the learnt paths and also to go directly to the destinations, the patients showed less optimal performance than the controls by walking longer distances in both tasks. These results suggest that vestibular dysfunction negatively affected the patients’ navigation on the hospital floor. In interpreting them, it should be pointed out that these patients exhibited the same impairments when they performed the tasks by navigating in an equivalent virtual environment using a joystick (i.e., under the condition in which navigation did not evoke vestibular self-motion cues). Thus, it is possible that the impaired navigation shown by the patients was not due to the lack of incoming vestibular signals during locomotion, but instead it could be attributed to abnormalities in higher-order navigation-related computation that could have resulted from the prolonged absence of vestibular stimulation (Bigelow & Agrawal, 2015; Schautzer et al., 2003; Vitte et al., 1996). Indeed, reduced volume of cortical and hippocampal grey matter has been reported in vestibular patients (Brandt et al., 2005; Göttlich et al., 2016; Hüfner et al., 2009; Kamil et al., 2018; Kremmyda et al., 2016), which can cause decline in spatial memory and navigation abilities (Guderian et al., 2015; Nedelska et al., 2012).
Nevertheless, regardless of whether the patients’ navigational behaviour was accounted for by the loss of online vestibular information or abnormal higher-order functions, the fact remains that there is a direct or indirect consequence of vestibular deprivation, suggesting that a certain role is played by the vestibular system in large-scale navigation.

Taken together, it can be inferred from the above studies that path integration contributes to navigation in large-scale space, insofar as proprioceptive input is available. It is possible that vestibular cues also play a part in large-scale navigation, but at this point, their role is less clearly characterised and it may well be less salient than that of proprioceptive cues. These inferences are consistent with findings from research on path integration in small-scale space in which blindfolded navigators track their location and orientation well while walking multisegment paths (i.e., with proprioception; Klatzky et al., 1990; Loomis et al., 1993; Philbeck et al., 2001; Yamamoto et al., 2014), but their self-tracking performance is poor and can even be indicative of disorientation when following the paths while sitting in a wheelchair (i.e., with vestibular cues alone; Sholl, 1989), showing the importance of the proprioceptive cues for staying oriented and localised in the surroundings.

Looking for modality-specific effects of path integration on navigation

In the behavioural studies reviewed above, participants in varying conditions (e.g., walking, being in a car, and viewing a video) navigated an environment the same number of times, and differential memory performance that resulted was taken as evidence that different cues contributed to navigation differently. By contrast, Huffman and Ekstrom (2019) equated participants’ learning of the locations of landmarks across various cue conditions and examined brain activation patterns while the participants retrieved the landmark locations from memory. A strength of this approach is that if the patterns of activation differed between the conditions, this outcome would not be confounded by dissimilar levels of spatial learning and therefore could be unequivocally attributed to the effects of varied sensory cues.

Specifically, Huffman and Ekstrom (2019) had participants navigate in large-scale immersive virtual environments through various methods that differed in the types of spatial cues they afforded. For example, participants in the enriched group walked and turned on an omnidirectional treadmill (Fig. 2). This allowed them to receive visual, vestibular, and proprioceptive cues because unlike conventional linear treadmills on which users can walk only in a single direction (usually along their sagittal axis), omnidirectional treadmills allow for walking in any direction, enabling the users to make not only translational but also rotational body movements (Harootonian et al., 2020; Souman et al., 2011). On the other hand, participants in the impoverished group controlled movement entirely by a joystick, receiving mostly visual cues only. All groups of participants repeatedly learnt the environments until their accuracy in pointing relative directions among landmarks reached a common criterion. Subsequently, the participants performed the same pointing task one more time while neuronal activation in their brain was recorded via functional magnetic resonance imaging (fMRI). Neuroimaging data showed very similar patterns of activation irrespective of which cues were available during navigation. In addition, behavioural performance in the pointing task did not reveal any effects of the cues—pointing accuracy was statistically indistinguishable between the groups even after learning each environment just once, and there was no group difference in the number of repetitions required to reach the criterion. These results led to the conclusion that path integration, even with proprioceptive cues, did not play any unique role in this study.

Fig. 2. An example of an omnidirectional treadmill. A coloured version of the figure is available online. Adapted from “A Modality-Independent Network Underlies the Retrieval of Large-Scale Spatial Environments in the Human Brain,” by D. J. Huffman and A. D. Ekstrom, 2019, Neuron, 104(3), p. 613 (https://doi.org/10.1016/j.neuron.2019.08.012), with permission from Elsevier. Copyright 2019 by Elsevier Inc.

This conclusion is consistent with evidence from behavioural studies in which comparable performance in spatial memory retrieval was found after experiencing environmental layouts through different sensory modalities, supporting the view that spatial representations are at least functionally equivalent, or perhaps even fully amodal (i.e., independent of the encoding modality), regardless of the way they are encoded in long-term memory (Avraamides et al., 2004; Bryant, 1997; Eilan, 1993; Giudice et al., 2011; Loomis et al., 2002; Valiquette et al., 2003; Wolbers et al., 2011; Yamamoto & Shelton, 2009). It should be noted, however, that findings that are not readily compatible with this view also exist in the spatial memory literature, showing that after learning object locations to criterion, participants still exhibited dissimilar levels of performance in remembering the layouts of the objects depending on encoding modalities (Newell et al., 2005; Yamamoto & Philbeck, 2013; Yamamoto & Shelton, 2007). For example, Yamamoto and Philbeck (2013) showed that when participants learnt object locations in a room to criterion by viewing them with or without eye movements (i.e., with or without proprioceptive cues from extraocular muscles), accuracy and response latency in pointing relative directions among them still differed between the two learning conditions—the presence of the extraocular proprioceptive cues during memory encoding facilitated subsequent retrieval and mental manipulation of spatial representations. Thus, although Huffman and Ekstrom’s (2019) results put forth the view that path integration does not make distinctive contributions to the learning of large-scale environmental layout, the debate as to whether the encoding modality leaves unique traces in spatial memory has not been settled (Shelton & Yamamoto, 2009).

In addition, although Huffman and Ekstrom’s (2019) approach had the advantage discussed above, it also had a disadvantage: For learning conditions to be equated as to memory performance they afford, the study must be designed in such a way that the most cue-impoverished vision-only condition provides sufficient information for navigating and learning the environments as well as for retrieving memory for the environments later. This design is inherently conducive to finding no effects of information encoded via nonvisual modalities because this information is added to the already sufficient visual information. Indeed, it has been suggested that for the merit of multimodal spatial learning to become observable through comparison against purely visual learning, to-be-learnt environments or tasks that assess spatial knowledge of the environments must be sufficiently complex (e.g., Grant & Magee, 1998; Richardson et al., 1999; Sun et al., 2004; Yamamoto & Shelton, 2005, 2007). Thus, it is possible that the null result in the Huffman and Ekstrom (2019) study was a consequence of making the nonvisual information supplied by path integration noncritical or even irrelevant to the task.

Relating path integration ability to performance in large-scale navigation

Another approach to investigating path integration’s role in large-scale navigation is to measure abilities in path integration and navigation separately and examine the relationship between them. Two studies took this approach and yielded apparently inconsistent results: Hegarty et al. (2002) showed that better performance of path integration correlated with greater self-reported proficiency of navigation, whereas Ishikawa and Zhou (2020) claimed that improved path integration skills did not help construct more accurate spatial memories when participants navigated in a city.

Hegarty et al. (2002) measured participants’ path integration by guiding them along 60- or 180-ft (approximately 18- or 55-m) multisegment paths of various configurations without vision and then asking them to point to the origin of each path. Subsequently, the participants’ preference and experience in everyday navigation were assessed using the Santa Barbara Sense of Direction (SBSOD) scale, a self-report questionnaire that has been shown to correlate with individuals’ spatial aptitude in large-scale environments (Hegarty et al., 2006; Labate et al., 2014; Schinazi et al., 2013; Sholl et al., 2006). Results showed that absolute angular error in pointing in the path integration task significantly correlated with the self-rating of navigation ability (the smaller the error, the higher the self-evaluation; r = −.40), suggesting that heightened sensitivity to body-based self-motion cues is one contributing factor for proficient navigation in large-scale space.

Ishikawa and Zhou’s (2020) path integration task was similar to Hegarty et al.’s (2002), except that paths were shorter (approximately 3–16 m) and participants walked without guidance from the end of each path to the origin of the path. In addition, when the participants stopped at the location that they thought was the origin, they pointed in the direction of north. Some of the participants, whose sense of direction was poor as determined by the SBSOD scale (Montello & Xiao, 2011), were trained on the path integration task such that they performed it repeatedly with feedback. Subsequently, they were compared against other participants without such training on tasks in which all participants walked 450-m urban paths and learnt landmark locations along them. There were two groups of untrained participants, one in which participants’ sense of direction was as poor as that of the trained participants, and the other in which participants had a better sense of direction that was considered average, both according to their scores on the SBSOD scale. The training significantly increased accuracy of path integration within the trained group, particularly in the residual distance from each participant’s stopping point to the origin. However, it did not help achieve competent learning of the landmark locations. Specifically, the trained participants made smaller errors in judging directions between the landmarks than the untrained poor-sense-of-direction participants, but the effect size of this group difference was small, and the trained participants still performed the task poorly—that is, they were still less accurate than the untrained participants with the average sense of direction. In estimating pathway and straight-line distances between the landmarks and drawing maps of the travelled paths, the two poor-sense-of-direction groups did not differ from each other, showing no benefit of the training.
Ishikawa and Zhou interpreted these results to mean that the improved accuracy of path integration in small-scale space had limited effects on large-scale navigation performance in naturalistic settings.

The approach taken by these studies was promising, but its implementation had some issues. Hegarty et al. (2002) administered the SBSOD scale after participants performed the path integration task. Thus, it is possible that those who thought they had done well on the path integration task rated their navigation ability higher on the questionnaire, producing the observed correlation. However, given that items on the scale focus largely on episodes of large-scale navigation (e.g., ‘I very easily get lost in a new city’) and none of them explicitly asks about tracking one’s location while walking, such a carryover effect seems unlikely. A more serious issue in the Ishikawa and Zhou (2020) study is that the untrained participants were never given the path integration task. Consequently, it remains unknown how the trained group’s post-training path integration performance compared with the baseline performance of either untrained group (poor or average sense of direction). For example, it is possible that, despite the significant improvement within the trained group, training did not make the trained group’s path integration meaningfully better than that of the untrained poor-sense-of-direction group. If so, the largely similar performance of these two groups in the landmark-learning tasks would not be surprising. Until this issue is resolved, it is difficult to draw firm conclusions from these results.

Inferring the roles of body-based cues through manipulation of bodily self-consciousness

To examine the effects of path integration on navigation, a straightforward method is to manipulate sensory self-motion cues so that navigators carry out path integration to different degrees. Indeed, most of the studies reviewed in this article followed this methodology. By contrast, Moon et al. (2022) took a unique approach: they inferred the roles of body-based cues by having participants navigate a virtual environment with or without an avatar while lying in an MRI scanner (i.e., in the absence of body-based cues). The avatar was designed so that participants felt some ownership of its body through mechanisms similar to those underlying the rubber-hand and full-body illusions (Botvinick & Cohen, 1998; Ehrsson, 2007; Lenggenhager et al., 2007). Specifically, the avatar’s supine posture and hand movements were synchronous with the participants’ own supine posture and the hand movements they made to operate a joystick in the scanner, and viewing this congruence led the participants to experience illusory self-identification with the avatar (Fig. 3). This illusion helped psychologically simulate navigation with body-based cues because, under normal conditions, bodily self-consciousness is thought to arise from coherent visual, vestibular, and proprioceptive signals that are all anchored in one’s own body (Blanke et al., 2015).

Virtual navigation with or without an avatar in the Moon et al. (2022) study. While navigating in a virtual environment, participants saw an avatar that lay supine and moved its right hand (the body condition; the left panel). Both the posture and the hand movements were congruent with those of the participants who controlled their navigation by manipulating a joystick within a magnetic resonance imaging scanner. The same task was performed without the avatar too (the no-body condition; the right panel). A coloured version of the figure is available online. Adapted from “Sense of Self Impacts Spatial Navigation and Hexadirectional Coding in Human Entorhinal Cortex,” by H.-J. Moon et al., 2022, Communications Biology, 5, Article 406, p. 3 (https://doi.org/10.1038/s42003-022-03361-5). Copyright 2022 by H.-J. Moon, B. Gauthier, H.-D. Park, N. Faivre, and O. Blanke under a Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0/)

Participants in the Moon et al. (2022) study first learnt object locations by freely exploring a large circular field (110 m in diameter in the virtual space) that contained only distal landmarks apart from the target objects (Fig. 3). The targets then disappeared from the field, and the participants were asked to navigate back to the location of each target. The presence of the avatar enhanced performance in this task: compared with when they performed the same task without the avatar, participants ended up closer to the actual target location and took more efficient (i.e., shorter) paths to get there. In addition, the participants stayed farther from the border of the field while navigating with the avatar. This result suggested that the avatar shifted the participants’ perceived self-location forward, toward the avatar’s position in front of them, which in turn made them stop sooner when approaching the border; it thus provided evidence that the avatar induced the intended illusion (Dieguez & Lopez, 2017). Furthermore, the behavioural improvement in the task was associated with increased neural activity in the right retrosplenial cortex, a brain area that contributes to encoding and retrieving spatial information about large-scale environments during first-person navigation (Baumann & Mattingley, 2013; Byrne et al., 2007; Chrastil, 2018; Sherrill et al., 2013; Vann et al., 2009). Taken together, these results suggest that the simulated presence of body-based cues improved the participants’ learning of the object locations, and they show the promise of Moon et al.’s approach for neuroimaging investigations of these cues’ roles in large-scale navigation.

Interim summary

In sum, several studies have specifically investigated the contribution of path integration to navigation in large-scale space. Although they have yet to converge on a clear conclusion, the evidence they present is strong enough to support a working hypothesis that path integration does take part in large-scale navigation when proprioceptive cues are available (Waller & Greenauer, 2007; Waller et al., 2004). On the other hand, more research is needed to clarify whether vestibular cues are crucial for competent everyday navigation. While data from patients with vestibular loss point to important roles for vestibular cues (Biju et al., 2021), studies of participants with intact vestibular function often find limited benefits of vestibular cues over and above visual cues (Jabbari et al., 2021; Waller & Greenauer, 2007; Waller et al., 2003). This suggests that the vestibular system’s role may not lie primarily in supplying online spatial information during ongoing navigation.

Possible specific roles of path integration in large-scale navigation

Building on the working hypothesis formulated above, we now examine whether path integration makes any specific contributions to navigation in large-scale space. That is, if there are any unique roles that path integration plays, what can they be? Three possibilities regarding this question are considered below with the aim of generating more precise hypotheses about how path integration contributes to large-scale navigation in humans.

As shown below, the three ideas discussed in this section are interrelated. First, we consider the possibility that the chief role of path integration is to help acquire metric properties of a large-scale space. Second, we elaborate on this idea by specifying that path integration can be more important for encoding distance information than direction information during large-scale navigation. Third, we describe a claim by Wang (2016), in which she pushed the above ideas further by arguing that spatial information obtained through path integration is sufficient for constructing a detailed mental representation of an environment.

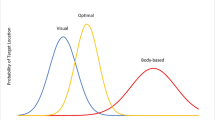

Providing metric information about an environment

To understand how path integration may uniquely contribute to human spatial navigation, it is useful to consider two forms of mental spatial representation: route and survey knowledge (Siegel & White, 1975; Fig. 4). Route knowledge is composed of information about the identities of landmarks and the specific routes between them. For example, when navigators have travelled from Landmark A to Landmark B on one occasion and from Landmark A to Landmark C on another, they can learn what the landmarks are and how A is connected to B and to C (e.g., ‘to go from King George Square Station to the Library, turn right immediately after coming out of the station and walk one block; to go from King George Square Station to the Botanic Garden, walk straight from the station exit until the street ends’). With this coarse knowledge, the navigators would have trouble going directly from Landmark B to Landmark C, because deriving this untravelled direct path requires metric information about the distances and directions of the A–B and A–C pairs, whereas route knowledge consists only of propositional and topological relationships between the experienced landmark pairs. Once navigators have acquired metric details that allow them to take novel shortcuts between places, they are said to possess survey knowledge, or a cognitive map (O’Keefe & Nadel, 1978; Tolman, 1948), of the environment. As shown below, there is evidence that path integration, particularly with proprioceptive cues, can provide the metric information required to acquire survey knowledge (Chrastil & Warren, 2012; Gallistel, 1990).
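The geometric point can be made concrete with a minimal sketch. Assuming each experienced leg (A–B and A–C) is stored as a distance and an allocentric bearing (degrees clockwise from north, an assumed convention), the untravelled B–C shortcut follows from simple vector subtraction; route knowledge, which lacks these metric values, cannot supply the inputs. The function names and the example layout are hypothetical illustrations, not a model taken from the studies cited here.

```python
import math


def to_vector(distance, bearing_deg):
    """(distance, bearing clockwise from north) -> (east, north) displacement."""
    rad = math.radians(bearing_deg)
    return distance * math.sin(rad), distance * math.cos(rad)


def to_polar(east, north):
    """(east, north) displacement -> (distance, bearing clockwise from north)."""
    return math.hypot(east, north), math.degrees(math.atan2(east, north)) % 360.0


def derive_shortcut(a_to_b, a_to_c):
    """Derive the untravelled B -> C path from two metric legs that start at A.

    Each leg is a (distance, bearing) pair in a shared allocentric frame.
    B -> C equals (A -> C) minus (A -> B).
    """
    bx, by = to_vector(*a_to_b)
    cx, cy = to_vector(*a_to_c)
    return to_polar(cx - bx, cy - by)


# Hypothetical layout: B is 300 m due east of A; C is 400 m due north of A.
distance, bearing = derive_shortcut((300.0, 90.0), (400.0, 0.0))
print(f"B -> C: {distance:.0f} m at a bearing of {bearing:.1f} deg")
# prints: B -> C: 500 m at a bearing of 323.1 deg
```

The subtraction only works because both legs carry distances and directions in a common frame; a purely topological link such as ‘turn right and walk one block’ gives it nothing to operate on.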

Schematic diagram of route and survey knowledge. A, B, and C denote landmarks or other salient locations in an environment. Route knowledge consists of specific navigational paths to be taken for travelling from one landmark to another. From this type of knowledge, navigators are not able to derive paths that they have not experienced yet (e.g., directly going from B to C). Survey knowledge represents landmark locations with metric details of inter-landmark relationships, enabling the navigators to take such novel paths. Numbers in the bottom panel are for illustration purposes only and thus approximate—they do not follow trigonometry strictly. Directions can be specified using navigator-centred (egocentric) or environment-fixed (allocentric) frames of reference

Precise definitions of survey knowledge vary in the literature in how strictly it must follow the principles of Euclidean geometry (Chrastil, 2013; Chrastil & Warren, 2014; Gallistel, 1990; Gillner & Mallot, 1998; O’Keefe & Nadel, 1978; Peer et al., 2021; Poucet, 1993; Warren, 2019; Warren et al., 2017; Weisberg & Newcombe, 2016; Widdowson & Wang, 2022; Zetzsche et al., 2009). Performance in spatial memory tasks that tap into metric properties of large-scale environments often violates Euclidean principles (e.g., McNamara & Diwadkar, 1997; Moar & Bower, 1983; Sadalla et al., 1980). This is accounted for either by permitting some biases and distortions in map-like representations (Shelton & Yamamoto, 2009) or by postulating an intermediate stage between route and survey knowledge (i.e., graph knowledge; Warren, 2019). Notably, Chrastil and Warren (2014) proposed the concept of a cognitive graph, which represents topological connections between places as a node-and-edge structure and encodes local metric information about the direction and distance of each connection as node labels and edge weights. The exact nature of the representation of metric information thus continues to be debated, but delineating the differences between these views is beyond the scope of the current review. For present purposes, it is sufficient to define survey knowledge broadly as an internal representation of space that contains some form of metric detail about an environment and thereby allows for flexible navigational behaviour such as shortcutting.
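To illustrate the cognitive-graph idea, the sketch below stores local metric information on the edges of a small place graph and estimates a shortcut by summing edge vectors along a known route. It is a simplified, hypothetical rendering: Chrastil and Warren (2014) place angles on node labels, whereas here each directed edge carries a (distance, bearing) pair for brevity, and all numbers are invented. Because nothing forces the stored values to be globally Euclidean, the B-to-A edge is deliberately remembered as longer than the A-to-B edge, so the estimated B-to-C and C-to-B shortcuts differ in length, the kind of inconsistency a strictly map-like representation would not allow.

```python
import math
from collections import deque

# Hypothetical cognitive graph: nodes are places; each directed edge stores
# local metric information as (distance in m, bearing in degrees clockwise
# from north). B->A is deliberately stored as longer than A->B, so the
# graph is not globally Euclidean.
graph = {
    "A": {"B": (300.0, 90.0), "C": (400.0, 0.0)},
    "B": {"A": (340.0, 270.0)},
    "C": {"A": (400.0, 180.0)},
}


def find_route(graph, start, goal):
    """Breadth-first search for a sequence of known edges from start to goal."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        route = queue.popleft()
        if route[-1] == goal:
            return route
        for nxt in graph[route[-1]]:
            if nxt not in visited:
                visited.add(nxt)
                queue.append(route + [nxt])
    return None


def estimate_shortcut(graph, start, goal):
    """Sum local edge vectors along a known route to estimate the direct
    displacement from start to goal, returned as (distance, bearing)."""
    route = find_route(graph, start, goal)
    if route is None:
        return None
    east = north = 0.0
    for here, there in zip(route, route[1:]):
        dist, bearing = graph[here][there]
        east += dist * math.sin(math.radians(bearing))
        north += dist * math.cos(math.radians(bearing))
    return math.hypot(east, north), math.degrees(math.atan2(east, north)) % 360.0


print(estimate_shortcut(graph, "B", "C"))  # about 525 m
print(estimate_shortcut(graph, "C", "B"))  # about 500 m
```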

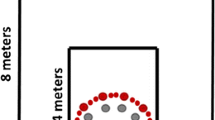

Using a maze-like immersive virtual environment on the scale of a large room (11 × 12 m), Chrastil and Warren (2013) examined whether having vestibular and proprioceptive cues while navigating the environment would help participants later take shortcuts between landmarks (Fig. 5). Participants first learnt the landmark locations by walking through the maze, by moving through it while seated in a wheelchair, or by viewing dynamic images of movement through the environment while seated in a stationary chair. All participants received similar visual cues; those in the wheelchair additionally obtained vestibular cues, and those who walked had proprioceptive cues on top of the vestibular cues. At test, the participants were brought back to a landmark and asked to go to another designated landmark by making a single turn and then walking along one straight path, while the maze and all objects in it were made invisible. Participants who had walked during the learning phase turned more accurately when taking these novel shortcuts than those who had only viewed the dynamic images. The wheelchair and viewing-only groups did not differ from each other, suggesting that proprioceptive but not vestibular cues provided metric information about the environment that helped participants estimate unexperienced directions between landmarks.

The virtual environment used by Chrastil and Warren (2013). It contained 12 landmarks (shown by red rectangles and blue circles in the left panel), eight of which were used for testing participants’ shortcutting performance. The ideal shortcutting paths are indicated by dashed lines, against which the participants’ turns and walked distances during shortcutting were evaluated. The right panel shows an example of the participants’ view of the environment during the learning phase. A coloured version of the figure is available online. Adapted from “From Cognitive Maps to Cognitive Graphs,” by E. R. Chrastil and W. H. Warren, 2014, PLOS ONE, 9(11), Article e112544, p. 3 (https://doi.org/10.1371/journal.pone.0112544). Copyright 2014 by E. R. Chrastil and W. H. Warren under a Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0/)

He et al. (2019) conducted a similar experiment by having participants navigate virtual shopping precincts (50 × 50 m) with or without body-based rotational self-motion cues. Each precinct contained nine square buildings arranged in a 3 × 3 grid. The four sides of each building were occupied by different shops, creating 36 unique shopfronts. Nine of them were designated as targets, and the participants were asked to go to the target shops by taking the shortest possible path. Half the participants viewed the virtual environments on a desktop monitor and controlled their navigation entirely via a joystick, receiving no body-based self-motion cues. The other half wore a head-mounted display to view the environments, executing translational movements with the joystick and rotational movements by physically turning their bodies. Thus, the latter group received rotational body-based cues (in addition to visual cues). A notable feature of this experiment was that most trials allowed the participants to pass through the buildings (penetrable trials; a video demonstration is provided by Qiliang He at https://osf.io/cmsug). In these trials, to achieve the shortest possible path, the participants had to move between shops in a straight line by going through the buildings. This manipulation presumably facilitated the development of survey knowledge because it encouraged the participants to focus on global distances and directions among the shops rather than on the specific routes they would have taken when moving between shops without penetrating the buildings. Participants with the body-based cues travelled shorter distances in reaching the targets than those without them, suggesting that body-based self-motion signals are beneficial for acquiring metric details of an environment.
However, there is one caveat to this interpretation: the two groups differed not only in the availability of body-based cues but also in the quality of the visual cues they were presented with. Being immersed in the virtual environments, the participants with body-based cues most likely also had richer visual cues, making it unclear whether their superior performance can be ascribed to the body-based cues themselves.

Notably, the experimental design employed by Chrastil and Warren (2013) helped separate the effects of path integration per se from those of other processes that often co-occur with it. For one thing, when navigators actively move in an environment (and can therefore perform path integration), they also make decisions about their movement (e.g., when to turn and how far to travel). Thus, cognitive decision making, not body-based sensory information, could be the primary cause of enhanced performance in navigation tasks that involve active exploration. However, the role of decision making is likely to be limited. Although half the participants in each group of the Chrastil and Warren (2013) study moved freely in the maze and made their own navigational decisions during the learning phase, their shortcutting performance did not differ from that of the other half, who passively followed experimenters to learn the environment (for similar findings, see also Wan et al., 2010). In a follow-up study, Chrastil and Warren (2015) changed the task so that participants attempted to go from one landmark to another in the maze by taking the shortest route between them, which they had not always experienced during learning (Fig. 6). As in the previous study, the participants either walked or viewed dynamic images to learn the maze (the wheelchair group was omitted), and each group was split in two according to whether participants controlled their own navigation during learning. In this case, participants who made their own navigational decisions did select ideal routes more frequently at test, but this benefit of decision making was confined to the walking group. Comparable facilitatory effects of decision making on spatial learning were reported by Guo et al. (2019), who had participants navigate immersive virtual environments with rotational body-based cues (evoked by physically turning the body).
These results, together with those of Chrastil and Warren (2013), suggest that being an active agent of navigation is advantageous to acquiring certain forms of metric environmental information, but its role in facilitating the formation of survey knowledge is not as fundamental as that of path integration.

Diagram showing the procedure of the Chrastil and Warren (2015) experiment. In this example, participants attempted to go from A to B via the shortest route in the test phase. If they had learnt the environment well during the preceding learning phase, they would find that the ideal route was blocked by a barrier (shown by the white rectangle). Upon finding this, they would take the best detour to the destination (shown by the dashed line). The eight landmarks shown by blue circles were covered by identical blocks during the test phase. The other four landmarks on the wall shown by red rectangles remained visible. A coloured version of the figure is available online. Adapted from “From Cognitive Maps to Cognitive Graphs,” by E. R. Chrastil and W. H. Warren, 2014, PLOS ONE, 9(11), Article e112544, p. 3 (https://doi.org/10.1371/journal.pone.0112544). Copyright 2014 by E. R. Chrastil and W. H. Warren under a Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0/)

For another, walking raises the level of physiological arousal, which may have general facilitatory effects on learning and memory regardless of the type of information being remembered (Salas et al., 2011). The presumed contribution of path integration to spatial learning might therefore be better explained by physiological arousal. This is a real possibility because the benefit of path integration seems to be greater when navigators walk than when they do not move their bodies themselves (e.g., when pushed in a wheelchair). This pattern has been attributed to the different body-based cues (i.e., proprioceptive versus vestibular) that navigators receive, but it is also consistent with the arousal account. To address this issue, Lhuillier et al. (2021) varied when and how much participants walked in their experiment. Participants either (a) walked on a linear treadmill both while viewing a walk-through video of a virtual city and while performing memory tests in which they retrieved the locations of landmarks in the city; (b) walked only while viewing the video; (c) walked only while doing the memory tests; or (d) stood still on the stationary treadmill throughout the experiment. Whereas all participants who walked experienced some increase in physiological arousal, only those in the first two groups obtained proprioceptive cues that corresponded to the virtual walk through the city. The participants with these proprioceptive cues indicated the landmark locations on a blank map of the city more accurately than the other groups, indicating that path integration during the learning phase, not the heightened physiological arousal that accompanied physical walking, was the main contributor to the enhanced spatial memory.
Interestingly, the groups did not differ in another test in which the participants specified which way to turn at given intersections to reach designated landmarks. Compared with the map-based test, which assessed memory for the overall layout of the landmarks, this test focussed on remembering particular navigational actions taken between two specific locations (i.e., route knowledge). These results further suggest that the role of path integration is more pronounced in the acquisition of survey knowledge than of route knowledge.

Encoding distance information

The previous section concluded that path integration may be an important source of metric information about a large-scale environment. This claim raises a further question: if path integration supplies metric information, what kind of information is it, exactly? Is it about distance, direction, or both?

In many of the studies reviewed above, when effects of body-based (in particular, proprioceptive) cues on spatial learning and navigation were found in large-scale space, they tended to be more pronounced in tasks that involved judging the directions of landmarks than in tasks that required estimating distances between them (Chrastil & Warren, 2013; Lhuillier et al., 2021; Waller & Greenauer, 2007; Waller et al., 2004). This pattern could mean that proprioceptive information feeds more into direction than into distance representations in memory. However, more accurate encoding of inter-landmark distances can also lead to better estimates of relative directions between landmarks via trigonometric computation; for example, if the three pairwise distances among three landmarks are known, the angle at each landmark follows from the law of cosines. Thus, it is possible that proprioceptive cues contribute metric distance information to survey knowledge and that the asymmetric results arose from unspecified task demands that differed between direction and distance judgements (e.g., the error in any direction judgement is bounded at ±180°, whereas the error in a distance judgement has no fixed upper bound). Indeed, there are both theoretical and empirical reasons to hypothesise that path integration with proprioceptive signals may help acquire distance information about a large-scale environment.
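Both points in this paragraph can be illustrated with a short sketch: direction errors wrap into a bounded range, distance errors do not, and the direction relationship among landmarks can be recovered from distances alone via the law of cosines. The helper names and numbers are hypothetical, chosen only for illustration.

```python
import math


def signed_angular_error(response_deg, correct_deg):
    """Signed direction error wrapped into [-180, 180): never beyond 180 deg."""
    return (response_deg - correct_deg + 180.0) % 360.0 - 180.0


def absolute_distance_error(estimate_m, correct_m):
    """Distance error has no fixed upper bound."""
    return abs(estimate_m - correct_m)


def angle_from_distances(ab, ac, bc):
    """Angle at landmark A (degrees) in triangle ABC, via the law of cosines."""
    cos_a = (ab ** 2 + ac ** 2 - bc ** 2) / (2.0 * ab * ac)
    cos_a = max(-1.0, min(1.0, cos_a))  # guard against rounding outside [-1, 1]
    return math.degrees(math.acos(cos_a))


print(signed_angular_error(350.0, 10.0))      # -20.0
print(absolute_distance_error(900.0, 300.0))  # 600.0
# Accurate distances give the correct 90 deg angle at A ...
print(f"{angle_from_distances(300.0, 400.0, 500.0):.1f}")  # 90.0
# ... while underestimating the B-C distance distorts the inferred direction.
print(f"{angle_from_distances(300.0, 400.0, 450.0):.1f}")
```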

The theoretical basis for this hypothesis concerns the possibly different contributions of vestibular and proprioceptive signals, the two major sources of self-motion cues in path integration, to encoding the rotational and translational movements of navigators. For rotational encoding, sensing head and gaze directions in the horizontal plane would be important, and the vestibular system may play a primary role here because it includes sensory apparatus specialised for transducing changes in head direction (i.e., the semicircular canals). These changes also affect gaze direction through the connection between the semicircular canals and the extraocular muscles (e.g., the vestibulo-ocular reflex; Bronstein et al., 2015). On the other hand, although some proprioceptors in the neck and extraocular muscles may be closely involved in controlling head and gaze directions (Crowell et al., 1998; Donaldson, 2000; Pettorossi & Schieppati, 2014), most proprioceptors elsewhere in the body would not directly encode these directions.Footnote 2 By contrast, for translational encoding, proprioceptive signals generated by gait-related body motion should be critical. The vestibular system has otolith organs that sense linear acceleration of the body, and there is evidence that they do participate in tracking translational movements in small-scale space (Campos et al., 2012; Israël et al., 1997). However, impairment of these vestibular signals has been shown not to prevent participants from walking straight to remembered locations without vision (Arthur et al., 2012; Glasauer et al., 1994; Péruch et al., 2005), suggesting that vestibular cues are not necessary for perceiving travelled distance when ambulatory proprioceptive cues are present.
Considering that the effects of path integration on survey knowledge acquisition seem to primarily stem from proprioceptive cues (Chrastil & Warren, 2013), these characteristics of the vestibular and proprioceptive systems make it likely that path integration in large-scale space, when it is carried out via walking that evokes proprioceptive cues, provides distance information about the environment.
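The rotational/translational decomposition can be sketched as a minimal dead-reckoning loop. This is an illustrative assumption rather than a model from the cited studies: heading is updated from a turn signal (standing in for vestibular input) and position from a travelled-distance signal (standing in for proprioceptive input), and the hypothetical `turn_gain` and `distance_gain` parameters let either signal be mis-scaled. Turns are in degrees, positive for clockwise (rightward) turns.

```python
import math


def integrate_path(segments, turn_gain=1.0, distance_gain=1.0):
    """Dead-reckon through (turn_deg, distance_m) segments.

    turn_gain scales the rotational signal (a stand-in for vestibular input);
    distance_gain scales the translational signal (a stand-in for
    proprioceptive input). Heading is in degrees clockwise from the initial
    facing direction. Returns the estimated (x, y) position relative to the
    origin and the final heading.
    """
    x = y = heading = 0.0
    for turn_deg, distance_m in segments:
        heading += turn_gain * turn_deg
        x += distance_gain * distance_m * math.sin(math.radians(heading))
        y += distance_gain * distance_m * math.cos(math.radians(heading))
    return x, y, heading % 360.0


def homing_vector(x, y, heading):
    """Distance back to the origin and the turn (positive = clockwise) to face it."""
    distance = math.hypot(x, y)
    bearing_to_origin = math.degrees(math.atan2(-x, -y))
    turn = (bearing_to_origin - heading + 180.0) % 360.0 - 180.0
    return distance, turn


# Walk 3 m forward, turn 90 deg right, walk 4 m: the origin is 5 m away.
path = [(0.0, 3.0), (90.0, 4.0)]
print(homing_vector(*integrate_path(path)))
# Under-scaled distance signal: the homing distance shrinks, the turn is unchanged.
print(homing_vector(*integrate_path(path, distance_gain=0.8)))
# Over-scaled turn signal: the homing direction itself is now wrong.
print(homing_vector(*integrate_path(path, turn_gain=1.1)))
```

In this toy, a uniform distance gain changes only the length of the homing vector, whereas a rotational gain error changes its direction; a non-uniform distance error (misjudging only one segment) would alter the direction as well. It illustrates the logic of separable signals, not how the cited studies analysed their data.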

The empirical basis for the hypothesis comes from studies that attempted to dissociate the rotational and translational components of path integration in the context of large-scale navigation. For example, Ruddle et al. (2011) had participants learn the locations of targets by searching for them in maze-like immersive virtual environments of two sizes (9.75 × 6.75 or 65 × 45 m), and subsequently asked them to estimate straight-line distances between the targets. Some participants walked, either in a real room or on an omnidirectional treadmill, to move about the virtual environments, evoking both vestibular and proprioceptive signals that conveyed information about rotational and translational self-motion. Other participants made rotational movements by physically turning in place and translational movements with a joystick, receiving body-based self-motion cues that were mostly restricted to vestibular rotational signals. Additionally, in the larger environment only, another group of participants walked on a linear treadmill to move straight ahead and used the joystick to make turns. These participants had proprioceptive cues but few vestibular cues.Footnote 3 All participants viewed the environments through a head-mounted display and received comparable visual cues. Regardless of the size of the environment and the mode of walking (i.e., walking freely or on a treadmill), the walking groups estimated the distances significantly more accurately than the turning-in-place groups. These results show that translational body-based cues, which, as summarised above, should have been largely based on proprioceptive signals, help incorporate metric distance information into survey knowledge of large-scale space.

It should be noted, however, that findings about the role of proprioceptive cues in acquiring metric distance information have not been fully consistent. The inconsistency is most evident when participants walked on treadmills instead of actually moving through space. On the one hand, Ruddle et al. (2011) found that translational body-based cues obtained through treadmill walking were useful for estimating straight-line distances between previously visited locations. Similarly, as discussed earlier, Lhuillier et al. (2021) showed that participants who walked on a treadmill during learning later placed landmarks on a blank map more accurately, demonstrating the benefit of treadmill-based proprioceptive signals for acquiring inter-landmark distance (and direction). On the other hand, Li et al. (2021) had participants navigate one floor of a virtual shopping centre (2,964 m² of navigable space; Fig. 7), viewed through a head-mounted display, either by walking on an omnidirectional treadmill or by making translational movements with a controller and rotational movements by turning their heads while otherwise stationary. The two navigation methods yielded equivalent estimates of direct and pathway distances between locations along the travelled routes. These results showed no advantage of proprioceptive cues in building survey knowledge of the shopping centre, in contrast to those of Ruddle et al. and Lhuillier et al. The findings of Li et al. may reflect their particular method: they assessed the distance estimates categorically, classifying each as correct or incorrect, rather than analysing quantitative measures of the estimates. Nevertheless, further research is needed to resolve the conflict.
In particular, it should be examined whether body-based cues that result from less naturalistic ambulatory movement on an omnidirectional treadmill could make participants rely more on other (probably visual) cues, particularly when the other cues are of high fidelity as in the Li et al. study (Chen et al., 2017; Foo et al., 2005; Nardini et al., 2008; Zhao & Warren, 2015).
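Why categorical scoring can mask group differences is easy to see with invented numbers. In the sketch below, the 20% tolerance for calling an estimate ‘correct’ is a hypothetical criterion (not necessarily the one Li et al. used), and both groups’ estimates are made up: the two groups receive the same proportion correct (0.75), yet a continuous measure, mean relative error, separates them clearly.

```python
from statistics import mean


def proportion_correct(estimates, true_values, tolerance=0.2):
    """Categorical scoring: an estimate counts as correct if it lies within
    +/- tolerance (a proportion of the true value). 0.2 is a hypothetical criterion."""
    hits = [abs(e - t) <= tolerance * t for e, t in zip(estimates, true_values)]
    return sum(hits) / len(hits)


def mean_relative_error(estimates, true_values):
    """Continuous scoring: mean absolute error as a proportion of the true value."""
    return mean(abs(e - t) / t for e, t in zip(estimates, true_values))


true_m = [40.0, 60.0, 80.0, 100.0]   # hypothetical true distances (m)
group_a = [41.0, 61.0, 79.0, 130.0]  # hypothetical estimates, group A
group_b = [47.0, 71.0, 95.0, 160.0]  # hypothetical estimates, group B

for name, est in [("A", group_a), ("B", group_b)]:
    print(name, proportion_correct(est, true_m), round(mean_relative_error(est, true_m), 3))
```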

The virtual environment used in the Li et al. (2021) study and the real environment from which the virtual environment was created. Virtual environments used in navigation studies vary in how realistically they copy real environments. Some are greatly simplified and devoid of pictorial and other details (e.g., Fig. 5), while others are high-fidelity replicas of existing or hypothetical physical environments, sometimes even including dynamic elements such as simulated crowds, as in the case of the Li et al. (2021) study. A coloured version of the figure is available online. Adapted from “The Effect of Navigation Method and Visual Display on Distance Perception in a Large-Scale Virtual Building,” by H. Li et al., 2021, Cognitive Processing, 22(2), p. 244 (https://doi.org/10.1007/s10339-020-01011-4). Copyright 2021 by H. Li, P. Mavros, J. Krukar, and C. Hölscher under a Creative Commons Attribution 4.0 International License (https://creativecommons.org/licenses/by/4.0/)

Interestingly, when studies included a condition in which participants physically walked in a real environment, these participants often learnt the environment better than those who navigated a virtual replica of it using an omnidirectional treadmill (Hejtmanek et al., 2020; Li et al., 2021). Thus, although virtual environments combined with (omnidirectional) treadmills bring many advantages to spatial navigation research (e.g., enabling the presentation of a large-scale environment in a physically limited space), what critically differs between treadmill-based navigation and unconstrained ambulation should be examined carefully (Huffman & Ekstrom, 2021; Steel et al., 2021). Clarifying this difference might help explain the seemingly incompatible outcomes of the studies discussed in the previous paragraph.

Building survey knowledge

The studies reviewed in the preceding sections showed that path integration can feed metric details of an environment into survey-level representations. Wang (2016) advanced this idea further by proposing computational mechanisms that would allow cognitive maps to be constructed solely from path integration. Specifically, she argued that humans (and some other animals) should be able to maintain multiple path integrators, each of which tracks the location of one landmark as the navigator moves about the environment. For example, when navigators travel from Landmark A to Landmark B, they establish Path Integrator A, which holds and updates the location of Landmark A. As they continue from Landmark B to Landmark C, they set up Path Integrator B independently of Path Integrator A so that they can keep track of both Landmark A and Landmark B during their subsequent travel. By repeating this process, the navigators create a collection of path integrators that record the locations of several landmarks. These path integrators enable the navigators to take a novel shortcut from Landmark C to Landmark A because Path Integrator A is still monitoring the location of Landmark A when the navigators arrive at Landmark C. The number of path integrators that can run in parallel would be limited by each navigator’s working memory capacity, but this limitation may be overcome by periodically storing the outputs of active path integrators in long-term memory and retrieving them later when needed. In fact, at least in small-scale space, it has been shown that the number of locations to be tracked while moving without vision does not always affect how well the locations are mentally updated (i.e., there is no effect of set size), suggesting that some of the updating processes are carried out using enduring representations of the locations in long-term memory (Hodgson & Waller, 2006; Lu et al., 2020; Wang et al., 2006).
Thus, it is theoretically possible that path integration is not just one of many contributors to the construction of survey knowledge; rather, survey knowledge may even develop directly out of the path integration system.
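The bookkeeping in Wang’s (2016) proposal can be made concrete with a small Python sketch. It is an illustration under simplifying assumptions of our own, not Wang’s formal model: updating is noise-free, positions are 2-D vectors in a fixed x-y frame (direction 0° = +x, counterclockwise positive), and the class and method names (`MultiPathIntegrator`, `mark_landmark`, `move`, `shortcut_to`) are hypothetical. Each integrator stores the vector from the navigator to one landmark and shifts it by the opposite of every self-motion step, so a direct path to any earlier landmark can be read off at any time.

```python
import math


class MultiPathIntegrator:
    """Hypothetical sketch of one path integrator per landmark (after Wang, 2016).

    Each integrator holds the vector from the navigator to one landmark in a
    fixed x-y frame. Noise-free updating and the fixed frame are simplifying
    assumptions; no working-memory limit is modelled.
    """

    def __init__(self) -> None:
        self.integrators: dict[str, list[float]] = {}

    def mark_landmark(self, name: str) -> None:
        # On reaching a landmark, start a new integrator for it; the landmark
        # is at zero offset from the navigator at this moment.
        self.integrators[name] = [0.0, 0.0]

    def move(self, dx: float, dy: float) -> None:
        # A self-motion step (as sensed from idiothetic cues) shifts every
        # tracked landmark by the opposite amount relative to the navigator.
        for vec in self.integrators.values():
            vec[0] -= dx
            vec[1] -= dy

    def shortcut_to(self, name: str) -> tuple[float, float]:
        """Distance and direction (degrees; 0 = +x, counterclockwise) of a direct path."""
        x, y = self.integrators[name]
        return math.hypot(x, y), math.degrees(math.atan2(y, x))


# Route A -> B -> C, then a novel shortcut from C back to A.
nav = MultiPathIntegrator()
nav.mark_landmark("A")
nav.move(10.0, 0.0)  # A -> B: 10 m along +x
nav.mark_landmark("B")
nav.move(0.0, 10.0)  # B -> C: 10 m along +y
nav.mark_landmark("C")
dist, heading = nav.shortcut_to("A")
print(f"Shortcut to A: {dist:.2f} m at {heading:.0f} deg")  # 14.14 m at -135 deg
```

In this example the travelled route A → B → C is 20 m long, yet the integrator for Landmark A yields the never-travelled 14.14-m diagonal from C. The working-memory limit and the offloading to long-term memory described above are deliberately left out of the sketch.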

Wang’s (2016) theory is unique in that it provides a mechanistic account of how the path integration system could play a fundamental role in building survey knowledge (or cognitive mapping; Downs & Stea, 1973) beyond what has been suggested in the literature (Foo et al., 2005, 2007; Ishikawa & Zhou, 2020). To our knowledge, the theory has not yet been tested directly, but some empirical data are consistent with it. For example, if navigators maintain a separate path integrator for each landmark they encounter during a trip, they should be able to go directly back to any of the landmarks from the end of the trip, not just to the trip’s starting point (returning to the starting point is called homing, which has traditionally been studied in the path integration literature, particularly in nonhuman species; Etienne & Jeffery, 2004; Heinze et al., 2018; Loomis et al., 1999). Humans are certainly capable of returning to intermediate landmarks via direct paths when they navigate by path integration (e.g., walking without vision); indeed, children as young as five years old demonstrated this capacity after learning four locations in an 8 × 8-m room (Bostelmann et al., 2020). Similarly, Wan et al. (2012) showed that adult navigators were able to move straight back to one of two landmarks they had passed while travelling multisegment paths of 15–25 m without external cues. These results suggest that, at least in small-scale space with a limited number of landmarks, it is possible to construct survey-like knowledge from path integration alone. It remains to be seen whether Wang’s theory holds when navigators travel in large-scale space and must deal with more landmarks than their working memory can hold.

Concluding remarks

This review examined whether and how path integration would contribute to human navigation in large-scale environments. Although numerous studies have been conducted to characterise basic mechanisms of human path integration under controlled conditions, research on path integration’s role in navigation that goes beyond small laboratory spaces is still new. Now that the contribution of body-based cues to small-scale navigation has been well established (Chance et al., 1998; Chen et al., 2017; Kalia et al., 2013; Klatzky et al., 1998; Nardini et al., 2008; Qi et al., 2021; Sjolund et al., 2018; Tcheang et al., 2011; Zhang et al., 2020), it is time to extend the scope of investigation to explore what effects these cues have on navigation and other related processes that take place in more expansive naturalistic settings.

Previous studies that investigated this topic suggested that body-based, in particular proprioceptive, cues can enhance spatial learning that occurs during navigation in a large-scale environment (Waller & Greenauer, 2007; Waller et al., 2004). More specifically, navigators may acquire metric details of their movement through these cues, which play a vital role in constructing survey knowledge of the environment (Chrastil & Warren, 2013, 2015; Lhuillier et al., 2021). At this stage, however, the findings from these studies are not yet conclusive. Although it is theoretically and empirically possible that path integration with proprioceptive cues primarily helps encode metric information about distance, as opposed to direction, in the environment, previous studies have not reached this level of specificity in their conclusions. Results from relevant studies have been apparently discrepant, and these discrepancies can largely be ascribed to the virtual environments and treadmills commonly used in this research (Lhuillier et al., 2021; Li et al., 2021; Ruddle et al., 2011). That is, these technologies introduced differences in the method of walking as well as in the richness of external (particularly visual) cues, which prevented the studies from converging on a specific outcome. Considering that future research will most likely employ these technologies more frequently, and that doing so is even necessary for investigating navigation in large-scale space with systematic manipulation of sensory and other cues, it is essential to clarify what peculiarities they bring to navigation as compared with natural walking in real environments (Steinicke et al., 2013). With a clearer understanding of these methodologies, the important next step is to scrutinise the conditions that determine what metric information path integration provides.

Another important issue that needs to be resolved in future research, which is also related to the above point, is that conditions of previous studies typically overlapped in terms of what cues they afforded (e.g., walking—proprioceptive, vestibular, and visual cues; being pushed in a wheelchair—vestibular and visual cues). Therefore, unless it is assumed that contributions of different kinds of cues would be linearly additive, comparison between these conditions does not necessarily allow for isolating effects that are unique to one particular cue type. For example, if a walking condition yielded better memory performance than a wheelchair condition, it could mean either that proprioception made a difference by itself or that proprioceptive and vestibular information interacted and this interaction was crucial for enhancing spatial memory. Given that different types of body-based cues are tightly coupled in the somatosensory system (Cabolis et al., 2018; Cullen, 2012; Ferrè et al., 2011; Ferrè et al., 2013), the assumption of linear additivity may be too simplistic. Thus, for now, the conclusion drawn from the studies reviewed in this article should be qualified accordingly—that is, it seems likely that path integration in the presence of proprioceptive cues does contribute to large-scale human navigation by facilitating encoding of metric environmental information, but it remains to be seen whether it is proprioception itself or the amalgamation of body-based cues including proprioception that brings this benefit to navigators.
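The inferential problem can be illustrated with invented numbers. The Python sketch below assumes, purely hypothetically, that a memory score equals a baseline plus main effects of proprioceptive and vestibular cues plus their interaction, with visual cues held constant across conditions. Two models are built so that they predict the same walking-minus-wheelchair difference, yet they disagree about a hypothetical condition with proprioceptive but no vestibular cues. None of the parameter values come from the reviewed studies, and the function and model names are illustrative only.

```python
# Hypothetical cue-combination models for a spatial-memory score.
# All numbers are invented for illustration; visual cues are assumed to be
# present in every condition and are absorbed into the baseline.

def memory_score(model: dict, proprioceptive: int, vestibular: int) -> int:
    """Baseline + main effects + proprioception-by-vestibular interaction."""
    return (model["baseline"]
            + model["proprioceptive"] * proprioceptive
            + model["vestibular"] * vestibular
            + model["interaction"] * proprioceptive * vestibular)


MODELS = {
    # Proprioception helps on its own; the effects simply add up.
    "additive": {"baseline": 50, "proprioceptive": 20, "vestibular": 5, "interaction": 0},
    # Proprioception helps only when combined with vestibular input.
    "interactive": {"baseline": 50, "proprioceptive": 0, "vestibular": 5, "interaction": 20},
}

results = {}
for name, model in MODELS.items():
    walking = memory_score(model, proprioceptive=1, vestibular=1)
    wheelchair = memory_score(model, proprioceptive=0, vestibular=1)
    proprioception_only = memory_score(model, proprioceptive=1, vestibular=0)
    results[name] = (walking - wheelchair, proprioception_only)
    print(f"{name}: walking - wheelchair = {walking - wheelchair}, "
          f"proprioception only = {proprioception_only}")
```

Both models yield the same 20-point advantage for walking over the wheelchair condition (70 vs 50 only in the proprioception-only condition), so that comparison by itself cannot tell whether proprioception helps on its own or only in combination with vestibular input.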

As shown in this review, past research in this field has generally focussed on assessing spatial memory that resulted from navigation in large-scale space. Although this is a valid and useful approach to inferring what mental operations are carried out during navigation, it inevitably leaves room for confounds with post-navigation processes that might occur in the course of retaining, consolidating, and retrieving spatial information from memory. Considering that spatial memories, particularly those of large-scale environments, are known to be susceptible to some stereotypical biases (Hirtle & Jonides, 1985; Mark, 1992; McNamara, 1986; McNamara & Diwadkar, 1997; Moar & Bower, 1983; Sadalla et al., 1980; Stevens & Coupe, 1978), it is important to devise paradigms through which the effects of body-based cues on navigation can be measured en route, as they unfold. Whether behavioural or neuroscientific, such paradigms would offer new insights into what contributions path integration makes to large-scale human navigation and whether those contributions are attributable to path integration per se. Some initial attempts in this regard have already emerged in the literature (e.g., Moon et al., 2022), and these efforts should be expanded in the future.

Notes

Another important source of input into the path integration system is optic flow, which is considered a special class of self-motion cues (referred to as allothetic cues; Loomis et al., 1999) because it is based on external visual information. However, possible contributions of optic flow to large-scale navigation are not discussed in this article in order to maintain a clear focus on the roles of idiothetic cues. A brief review of path integration by optic flow is available in Shelton and Yamamoto (2009).

Leg and body muscles make systematic movements during curvilinear walking, and some of these movements may arise through dynamic interaction with head and gaze directions (Becker et al., 2002; Chia Bejarano et al., 2017; Courtine et al., 2006; Courtine & Schieppati, 2003; Hicheur et al., 2005; Imai et al., 2001). Thus, there should be proprioceptive components in the encoding of rotational body motion. However, to our knowledge, the role of these rotational proprioceptive cues in path integration has not been well characterised.

Strictly speaking, walking on a linear treadmill might evoke some vestibular signals. For example, as walkers step in place, their head moves up and down systematically according to their gait pattern (Hirasaki et al., 1999). Thus, if this head oscillation can be encoded by the vestibular system, it can inform the walkers about their supposed speed of forward locomotion in a virtual environment (Bossard & Mestre, 2018; Tiwari et al., 2021). However, compared with rich proprioceptive cues elicited by full-body ambulatory motion, these vestibular signals presumably carry much less information about the walkers’ translational movements.

References

Andre, J., & Rogers, S. (2006). Using verbal and blind-walking distance estimates to investigate the two visual systems hypothesis. Perception & Psychophysics, 68(3), 353–361. https://doi.org/10.3758/BF03193682

Arthur, J. C., Kortte, K. B., Shelhamer, M., & Schubert, M. C. (2012). Linear path integration deficits in patients with abnormal vestibular afference. Seeing and Perceiving, 25(2), 155–178. https://doi.org/10.1163/187847612X629928

Avraamides, M. N., Loomis, J. M., Klatzky, R. L., & Golledge, R. G. (2004). Functional equivalence of spatial representations derived from vision and language. Journal of Experimental Psychology: Learning, Memory, and Cognition, 30(4), 801–814. https://doi.org/10.1037/0278-7393.30.4.801

Baumann, O., & Mattingley, J. B. (2013). Dissociable representations of environmental size and complexity in the human hippocampus. Journal of Neuroscience, 33(25), 10526–10533. https://doi.org/10.1523/JNEUROSCI.0350-13.2013

Becker, W., Nasios, G., Raab, S., & Jürgens, R. (2002). Fusion of vestibular and podokinesthetic information during self-turning towards instructed targets. Experimental Brain Research, 144(4), 458–474. https://doi.org/10.1007/s00221-002-1053-5

Bigelow, R. T., & Agrawal, Y. (2015). Vestibular involvement in cognition: Visuospatial ability, attention, executive function, and memory. Journal of Vestibular Research, 25(2), 73–89. https://doi.org/10.3233/VES-150544

Biju, K., Wei, E. X., Rebello, E., Matthews, J., He, Q., McNamara, T. P., & Agrawal, Y. (2021). Performance in real world- and virtual reality-based spatial navigation tasks in patients with vestibular dysfunction. Otology & Neurotology, 42(10), e1524–e1531. https://doi.org/10.1097/MAO.0000000000003289

Blanke, O., Slater, M., & Serino, A. (2015). Behavioral, neural, and computational principles of bodily self-consciousness. Neuron, 88(1), 145–166. https://doi.org/10.1016/j.neuron.2015.09.029

Bonavita, A., Teghil, A., Pesola, M. C., Guariglia, C., D’Antonio, F., Di Vita, A., & Boccia, M. (2022). Overcoming navigational challenges: A novel approach to the study and assessment of topographical orientation. Behavior Research Methods, 54(2), 752–762. https://doi.org/10.3758/s13428-021-01666-7

Bossard, M., & Mestre, D. R. (2018). The relative contributions of various viewpoint oscillation frequencies to the perception of distance traveled. Journal of Vision, 18(2), Article 3. https://doi.org/10.1167/18.2.3

Bostelmann, M., Lavenex, P., & Banta Lavenex, P. (2020). Children five-to-nine years old can use path integration to build a cognitive map without vision. Cognitive Psychology, 121, Article 101307. https://doi.org/10.1016/j.cogpsych.2020.101307

Botvinick, M., & Cohen, J. (1998). Rubber hands ‘feel’ touch that eyes see. Nature, 391(6669), 756. https://doi.org/10.1038/35784