Abstract

We used the visual world paradigm to examine interlingual lexical competition when Dutch–English bilinguals listened to low-constraining sentences in their nonnative (L2; Experiment 1) and native (L1; Experiment 2) languages. Additionally, we investigated the influence of the degree of cross-lingual phonological similarity. When listening in L2, participants fixated more on competitor pictures whose names in the nontarget language had onsets phonologically related to the onset of the target name (e.g., fles, “bottle”, given target flower) than on phonologically unrelated distractor pictures. When they listened in L1, this effect was also observed, but only when the onsets of the names of the target picture (in L1) and the competitor picture (in L2) were phonologically very similar. These findings provide evidence for interlingual competition during the comprehension of spoken sentences, both in L2 and in L1.


Many studies on visual word recognition by bilinguals have pointed out that a bilingual is not merely the sum of two monolinguals. Instead, learning a second language (L2) changes the cognitive system in several ways. For instance, bilinguals are not able to “turn off” the irrelevant language when reading, and so a bilingual’s native language (L1) and L2 constantly compete with each other for recognition, both when reading in L2 and when reading in L1 (e.g., Dijkstra & Van Heuven, 1998; Duyck, 2005; Duyck, Van Assche, Drieghe, & Hartsuiker, 2007; Van Assche, Duyck, Hartsuiker, & Diependaele, 2009). However, the story may be different for auditory word recognition: In contrast to written language, speech contains subsegmental and suprasegmental cues that are highly indicative of the (target) language in use. Even monolingual word recognition is highly sensitive to fine-grained phonetic details (Andruski, Blumstein, & Burton, 1994; McMurray, Tanenhaus, & Aslin, 2002; Salverda, Dahan, & McQueen, 2003), which is predicted by models of monolingual spoken word recognition (e.g., exemplar models; Goldinger, 1998). Hence, these approaches would predict that bilinguals are also able to use such fine-grained detail to constrain lexical access.

Accordingly, evidence for nontarget language activation in the auditory domain is more mixed. There is consistent evidence in favor of language nonselectivity when isolated words are listened to in L2 (e.g., Marian & Spivey, 2003; Schulpen, Dijkstra, Schriefers, & Hasper, 2003). But in isolated L1 auditory word recognition, evidence for cross-lingual lexical interactions is inconsistent, without a clear explanation. Moreover, although some data exist for L2 word recognition in meaningful sentence contexts, such data are lacking for L1, so that it also remains unclear whether top-down factors may modulate such influences. The present study addressed both issues. We tested whether lexical items from L1 and L2 compete with each other for activation when semantically coherent (but low-constraining) sentences are listened to in L2 (Experiment 1) and in L1 (Experiment 2), even though such sentences constitute strong and reliable cues for the language of words appearing in those sentences. Additionally, we investigated the role of cross-lingual phonological similarity and asked whether this might explain the divergent L1 findings reported in earlier studies on isolated word recognition.

A good method for examining lexical competitor activation in auditory word recognition is the visual world paradigm (e.g., Altmann, 2004; Tanenhaus, Spivey-Knowlton, Eberhard, & Sedivy, 1995). In this paradigm, eye movements to objects in a scene are continuously recorded, so that target word recognition may be monitored as speech unfolds over time. In a seminal study, Tanenhaus et al. investigated within-language lexical competition using this paradigm. Each critical display contained four objects, two of which had phonologically similar beginnings (e.g., candy and candle). Tanenhaus et al. found that it took longer to initiate eye movements to the correct object when an object with a phonologically similar name was presented.

Visual world studies on isolated L2 word recognition have yielded consistent cross-lingual lexical competition effects. In the studies of Marian and Spivey (2003) and Weber and Cutler (2004), bilinguals fixated more on competitor pictures whose name in the nontarget language was phonologically related to the onset of the target name (e.g., marka, “stamp,” given target marker) than on unrelated distractor pictures. Importantly, studies that embedded target words in spoken sentences found that such cross-lingual interactions are influenced by sentence context (Chambers & Cooke, 2009; FitzPatrick & Indefrey, 2010). When the semantic information in a sentence was compatible with both the target (e.g., poule) and the interlingual competitor (e.g., pool), Chambers and Cooke found that competitors were fixated more often than controls during L2 recognition (e.g., Marie va décrire la poule [Marie will describe the chicken/pool]). This was not the case when the preceding semantic information in the sentence was incompatible with the competitor meaning (e.g., Marie va nourrir la poule [Marie will feed the chicken/pool]). In an EEG study by FitzPatrick and Indefrey, sentences could be (1) congruent (e.g., “The goods from Ikea arrived in a large cardboard box”), (2) incongruent (e.g., “He unpacked the computer, but the printer is still in the towel”), (3) initially congruent within L2 (e.g., “When we moved house, I had to put all my books in a bottle”), or (4) initially overlapping with a congruent L1 translation equivalent (e.g., “My Christmas present came in a bright-orange doughnut,” which shares phonemes with the Dutch doos, “box”). The EEG results showed an N400 effect for all incongruent sentence endings; this effect was delayed for endings that were initially congruent within L2, but not for endings that initially overlapped with an L1 translation equivalent. This indicates that L1 competitors that were initially congruent with the sentence context were not activated when bilinguals listened to sentences in L2.
Hence, these studies seem to suggest that sentence context influences cross-lingual lexical interactions, even for L2 recognition, which shows consistent interference effects from L1 in isolated word recognition.

For L1 word recognition, the evidence for language nonselectivity is mixed, and the question of whether this nonselectivity can be affected by top-down factors remains unaddressed. Whereas Spivey and Marian (1999) observed lexical competition from L2 during L1 recognition, Weber and Cutler (2004) did not replicate this finding. Because the bilinguals in the former study were immersed in an L2-dominant setting, whereas those in the latter study were not, between-language lexical competition may depend on language profile factors. For L1 auditory word recognition in a meaningful sentence context, there are currently no studies at all investigating cross-lingual interactions. Table 1 summarizes the different studies on isolated L2 and L1 word recognition and those using sentence context.

These results raise the question of which factors may constrain lexical access. For L2 word recognition, the studies above suggest that sentence context may be a constraining factor, whereas for isolated L1 word recognition, cross-lingual interactions seem to require sufficient L2 proficiency or dominance. A further factor may be phonology. In a study of isolated L2 word recognition, Schulpen et al. (2003) investigated the influence of subphonemic cues on lexical access. Dutch–English bilinguals completed a cross-modal priming task with auditory primes and visual targets. Reaction times were longer for interlingual homophone pairs (e.g., /liːs/–LEASE, where /liːs/ is also the Dutch word for “groin”) than for monolingual controls (e.g., /freɪm/–FRAME), which suggests that bilinguals activated both meanings of the homophones. Crucially, the English pronunciation of the homophone led to shorter decision times on the related English target word than did the Dutch pronunciation, which indicates that cross-lingual interactions are affected by subphonemic differences between homophones. For isolated L1 word recognition in a visual world paradigm, Ju and Luce (2004) found that Spanish–English bilinguals fixated interlingual distractors (nontarget pictures whose English names were phonologically similar to the Spanish targets) more often than control distractors, but only when the Spanish target words were altered to contain English-appropriate voice onset times.

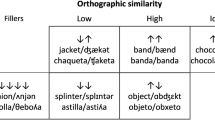

In the present study, we investigated whether a meaningful but low-constraining sentence context may restrict cross-lingual interactions in L1 auditory word recognition. We also tested whether the degree of phonological similarity between L1 and L2 phonemes influences the transfer of lexical activation across languages. In contrast with Schulpen et al. (2003) and Ju and Luce (2004), we used existing natural variation in phonological similarity between L1 and L2 representations instead of experimentally manipulating pronunciations. To confirm previous results on L2 word recognition and to extend them with evidence on the influence of subphonemic cues, we first investigated these questions when participants listened in L2. In Experiment 1, unbalanced Dutch–English bilinguals immersed in an L1-dominant environment listened to low-constraining sentences in L2. We investigated whether they fixated competitor pictures whose name in the irrelevant L1 was phonologically related to the target name (e.g., fles, “bottle”, given target flower) more than distractor pictures whose L1 name was unrelated to the L2 target name. In Experiment 2, we investigated whether between-language competition also arises when bilinguals from this population listen to L1 sentences. In both experiments, we also analyzed effects of cross-lingual phoneme similarity. Typically, high-similarity items shared a consonant cluster such as /fl/, /sl/, or /sp/, whereas low-similarity items tended to have a vowel as the second phoneme or had a consonant cluster involving /r/. Such onsets are less similar across the two languages because Dutch and English differ in vowel space and realize /r/ with different phones. Even if phonological cues are not used to strictly limit lexical activation to one lexicon, between-language competition may still be stronger when the target and the competitor are perceived as sounding more similar.

Experiment 1: L2

Method

Participants

Twenty-two Ghent University students participated. All were unbalanced Dutch–English bilinguals who had started to learn English (L2) at secondary school, around age 14. Although they used their L1 (Dutch) most of the time, they were regularly exposed to their L2 through popular media and English university textbooks.

Stimulus materials

Twenty target nouns were embedded in low-constraining L2 sentences (see Appendix 1). All sentences were semantically compatible with both the competitor and the distractors and were spoken by a native speaker of British English. The names of targets, competitors, and distractors were plausible but not predictable from the sentence context, as assessed in a sentence completion study with 20 further participants. Production probabilities for targets, competitors, and distractors were low (targets: .007; competitors: .005; distractors: .002). Each target was paired with a competitor picture whose L1 (Dutch) name had an onset that overlapped phonologically with the onset of the target name. Target names were up to three syllables long, and all target–competitor pairs shared two or three phonemes. Target and competitor names did not differ (paired-samples t tests, all ps > .23) in number of phonemes, number of syllables, word frequency, neighborhood size, or age of acquisition (see Table 2).

To calculate a phonological similarity score for each target–competitor pair, 15 further participants from the same population listened to the phonemes shared by the target and competitor names (i.e., their common onset), once as extracted from the target and once as extracted from the competitor. They then rated phonological similarity on a scale from 1 (very different) to 9 (very similar). Using a median split, target–competitor pairs were classified as either very similar (M = 7.47, SD = 0.69; e.g., flower /flaʊə/–fles /flɛs/ [bottle]) or less similar (M = 4.90, SD = 0.76; e.g., comb /kəʊm/–koffer /kɔfə/ [suitcase]).
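
The median split can be illustrated with a short sketch. It is not the authors' procedure: it assumes that each pair's score is the mean of all of its 1–9 ratings (pooling the target-extracted and competitor-extracted presentations), and the dictionary layout, the function name `classify_pairs`, and the example ratings are hypothetical. How ties at the median were handled is not reported; here a pair exactly at the median is assigned to the less similar group.

```python
from statistics import mean, median


def classify_pairs(ratings_by_pair):
    """Median-split target-competitor pairs on their mean similarity rating.

    ratings_by_pair: dict mapping a pair label to its list of 1-9 ratings
    (hypothetical format; both presentation orders pooled).
    Returns (labels, cutoff), where labels maps each pair to "high" or "low".
    Assumption: a pair whose mean equals the median is labelled "low".
    """
    pair_means = {pair: mean(r) for pair, r in ratings_by_pair.items()}
    cutoff = median(pair_means.values())
    labels = {pair: ("high" if m > cutoff else "low") for pair, m in pair_means.items()}
    return labels, cutoff


# Hypothetical ratings for four pairs (illustration only, not study data).
example = {
    "flower-fles": [8, 7, 8, 9],
    "stairs-stoel": [7, 8, 7, 7],
    "comb-koffer": [5, 4, 6, 5],
    "carrot-kerk": [4, 5, 5, 4],
}
labels, cutoff = classify_pairs(example)
print(cutoff)  # 6.125
print(labels)
```

With these made-up ratings the pair means are 8, 7.25, 5, and 4.5, so the cutoff falls between the two middle means and the first two pairs land in the "high" group.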

In addition to the target and competitor, each display (for an example, see Fig. 1) contained two phonologically unrelated distractors (e.g., dog, orange). Thirty new filler displays that were semantically and phonologically unrelated were included in the experiment. Pictures were selected from Severens, Van Lommel, Ratinckx, and Hartsuiker (2005). All pictures were black-and-white drawings, arranged in a two-by-two grid.

Apparatus

Using WaveLab software, sentences were recorded in a sound-attenuated booth with an SE Electronics USB1000A microphone at a sampling rate of 44.1 kHz (16-bit). Eye movements were recorded from the right eye with an EyeLink 1000 eye tracker (SR Research); viewing was binocular. Gaze position was sampled every millisecond. Calibration used a 9-point grid.

Procedure

Participants were instructed to listen carefully to the L2 sentences and were not required to perform any explicit task. We chose a look-and-listen paradigm because we were interested in whether between-language competition is a general feature of language–vision interactions (see Altmann, 2004), although we acknowledge that task-based experiments may sometimes have advantages (see Salverda, Brown, & Tanenhaus, 2011, for a discussion). Display presentation was synchronized with the auditory presentation of the sentences. On average, target onset occurred 1,219 ms after sentence onset. A new trial started 1,000 ms after sentence offset.

Results

Time window 0–300 ms after target onset

To test for baseline effects in this time window, we conducted an ANOVA with picture (competitor vs. distractor) and phonological overlap (high vs. low) as within-participants variables and proportion of fixations as the dependent variable. The main effect of picture was not significant, F1(1, 21) = 2.05, p = .17; F2(1, 18) = 1.51, p = .23, nor was the main effect of phonological overlap, F1(1, 21) = 1.08, p = .31; F2 < 1. The interaction between picture and phonological overlap was also not significant, F1 < 1; F2 < 1. This suggests that competitor and distractor pictures did not differ in their intrinsic capture of visual attention.
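
Because both factors have two levels, each effect in this design has one numerator degree of freedom, and its by-participants F1(1, n − 1) equals the square of a one-sample t statistic computed on per-participant contrast scores. The sketch below illustrates that identity; it is not the authors' analysis script. The cell layout (one dict per participant keyed by (picture, overlap)), the function names, and the example values are hypothetical, and p values are omitted (they could be obtained from the F(1, n − 1) distribution, for instance with `scipy.stats.f.sf`).

```python
from math import sqrt
from statistics import mean, stdev


def contrast_F(scores):
    """F(1, n - 1) for one within-participants contrast: the squared one-sample t
    of the contrast scores against zero. Needs n >= 2 and nonzero variance."""
    n = len(scores)
    t = mean(scores) / (stdev(scores) / sqrt(n))
    return t * t, (1, n - 1)


def anova_2x2_within(cells):
    """By-participants (F1) 2 x 2 repeated-measures ANOVA via contrast scores.

    cells: one dict per participant keyed by (picture, overlap), with picture in
    {"competitor", "distractor"} and overlap in {"high", "low"} (hypothetical layout).
    """
    picture = [(c["competitor", "high"] + c["competitor", "low"]) / 2
               - (c["distractor", "high"] + c["distractor", "low"]) / 2
               for c in cells]
    overlap = [(c["competitor", "high"] + c["distractor", "high"]) / 2
               - (c["competitor", "low"] + c["distractor", "low"]) / 2
               for c in cells]
    interaction = [(c["competitor", "high"] - c["distractor", "high"])
                   - (c["competitor", "low"] - c["distractor", "low"])
                   for c in cells]
    return {
        "picture": contrast_F(picture),
        "overlap": contrast_F(overlap),
        "picture x overlap": contrast_F(interaction),
    }


def participant(ch, cl, dh, dl):
    """Build one participant's cells: competitor/distractor x high/low overlap."""
    return {("competitor", "high"): ch, ("competitor", "low"): cl,
            ("distractor", "high"): dh, ("distractor", "low"): dl}


# Three hypothetical participants, fixation percentages (not study data).
data = [participant(20, 18, 16, 16),
        participant(22, 20, 17, 15),
        participant(19, 21, 15, 17)]
results = anova_2x2_within(data)
print(results)
```

The by-items F2 analysis would apply the same logic across items, with overlap as a between-items factor; that version is not shown here.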

Time window 300–800 ms after target onset (see Notes)

We carried out an ANOVA on fixations in the time window between 300 and 800 ms after target onset (for a graphical presentation of the fixations in this interval, see Fig. 2), with picture (competitor vs. distractor) and phonological overlap (high vs. low) as within-participants variables and proportion of fixations as the dependent variable. This showed a main effect of picture, F1(1, 21) = 8.01, p < .01; F2(1, 18) = 7.04, p < .05: Participants fixated significantly more on competitor pictures whose Dutch names were phonologically related to the English target names (M = 19.83%) than on unrelated distractors (M = 16.43%). The main effect of phonological overlap was not significant, F1(1, 21) = 1.56, p = .25; F2(1, 18) = 0.34, p = .57, nor was the interaction between picture and phonological overlap, F1 < 1; F2 < 1.
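
The 300–800 ms window can be made concrete with a sketch that computes, for one trial, the proportion of gaze samples falling on each picture, with times expressed relative to sentence onset and the window shifted by that trial's target onset. The data layout (a list of (time in ms, picture label) samples at 1 ms resolution, with None for samples on no picture), the labels, the function names, and the choice to keep off-picture samples in the denominator are assumptions; the text does not specify exactly how fixation proportions were computed. The two unrelated distractors are averaged, as in the figures.

```python
from collections import Counter


def window_proportions(samples, target_onset_ms, start_ms=300, end_ms=800):
    """Proportion of gaze samples on each picture in [onset + start, onset + end).

    samples: list of (time_ms, picture) pairs for one trial, time relative to
    sentence onset; picture is e.g. "target", "competitor", "distractor1",
    "distractor2", or None (hypothetical labels). Samples on no picture (None)
    count toward the denominator but are not reported.
    """
    lo, hi = target_onset_ms + start_ms, target_onset_ms + end_ms
    in_window = [pic for t, pic in samples if lo <= t < hi]
    if not in_window:
        return {}
    counts = Counter(in_window)
    return {pic: n / len(in_window) for pic, n in counts.items() if pic is not None}


def competitor_vs_distractor(props):
    """Competitor proportion and the mean of the two unrelated distractors."""
    distractor = (props.get("distractor1", 0.0) + props.get("distractor2", 0.0)) / 2
    return props.get("competitor", 0.0), distractor


def fake_gaze(t):
    """Hypothetical gaze trace for one trial (illustration only)."""
    if 1519 <= t < 1619:
        return "competitor"
    if 1619 <= t < 1719:
        return "distractor1"
    if t >= 1719:
        return "target"
    return None


# One made-up trial sampled every 1 ms; target onset 1,219 ms after sentence onset.
trial = [(t, fake_gaze(t)) for t in range(3000)]
props = window_proportions(trial, target_onset_ms=1219)
print(props)
print(competitor_vs_distractor(props))
```

In this invented trial the window spans 500 samples, of which 100 fall on the competitor, 100 on one distractor, and 300 on the target.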

Fig. 2 Fixation proportions for English targets, competitors, and averaged distractors in Experiment 1 for high (a) and low (b) phonological overlap with the Dutch competitor name

To investigate the effect of phonological similarity further, we ran three separate linear regression analyses. First, we tested whether the subjective phonological onset similarity rating of a pair predicted the lexical competition effect for that pair. This was not the case, F < 1; R² = .01; β = −.007, r = −.11 (see Fig. 3a). Second, we calculated the proportion of overlapping phonemes for each target–competitor pair (e.g., for the pair broom–broek [pants], three of the four phonemes of broom overlap with the competitor broek). Again, this variable did not predict the lexical competition effect, F(1, 19) = 2.46, p = .14; R² = .12; β = −.22, r = .24 (see Fig. 3b). Third, we calculated the duration (in milliseconds) of the onset overlap relative to the duration of the target. For example, for the pair flower–fles (bottle), the overlapping part /fl/ lasts 185 ms, whereas the whole target flower lasts 546 ms; this implies that the proportion of overlap here is 34.08%. Again, the regression analysis revealed that this measure did not predict the lexical competition effect, F < 1; R² = .0001; β = −.01, r = −.02 (see Fig. 3c). This suggests that nonnative listeners experience competition from L1 competitors when listening to low-constraining sentences in L2 (even though L1 is irrelevant), independently of the degree of phonological similarity between the target and the competitor.
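
The phoneme-overlap measure and the regressions can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: phonemes are given as simplified, hypothetical transcriptions (chosen so that broom and broek share /b r u/, matching the three-of-four count in the text), overlap is counted from word onset until the first mismatch, and each regression is an ordinary least-squares fit of the competition effect on a single similarity measure, with F(1, n − 2) = R²(n − 2)/(1 − R²). The function names and example numbers are hypothetical.

```python
from math import sqrt


def onset_overlap_proportion(target, competitor):
    """Share of the target's phonemes that match the competitor from word onset."""
    shared = 0
    for a, b in zip(target, competitor):
        if a != b:
            break
        shared += 1
    return shared / len(target)


def simple_regression(x, y):
    """Ordinary least squares y = intercept + slope * x, with r, R^2 and F(1, n - 2).

    Assumes n > 2, x not constant, and an imperfect fit (R^2 < 1).
    """
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    r = sxy / sqrt(sxx * syy)
    r2 = r * r
    return {
        "slope": slope,
        "intercept": my - slope * mx,
        "r": r,
        "R2": r2,
        "F": r2 * (n - 2) / (1 - r2),
        "df": (1, n - 2),
    }


# broom /b r u m/ vs. Dutch broek /b r u k/ (simplified transcription): 3 of 4.
print(onset_overlap_proportion(["b", "r", "u", "m"], ["b", "r", "u", "k"]))  # 0.75

# Hypothetical similarity scores (x) and competition effects in % (y), not study data.
fit = simple_regression([1, 2, 3, 4, 5], [1, 3, 2, 5, 4])
print(fit)
```

The time-based measure would follow the same pattern, dividing the duration of the shared onset by the duration of the whole target word.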

Fig. 3 Scatterplots of the lexical competition effect (in percentages) in L2 (Experiment 1) as a function of three measures of phonological similarity between items: self-ratings (a), overlap in phonemes (b), and overlap in time (c)

Experiment 2: L1

In Experiment 2, we tested for between-language competition when semantically coherent sentences were listened to in L1. We again investigated whether this effect is influenced by the degree of cross-lingual phonological similarity.

Method

Twenty-two students from the same bilingual population participated. Stimulus materials, apparatus, and procedure were identical to those of Experiment 1, except that the former competitor pictures now served as targets (and vice versa) and the sentences were translated into Dutch (see Appendix 2). This ensures comparability of the L1 and L2 experiments. Sentences were now spoken by a native Dutch speaker. On average, target onset occurred 1,388 ms after sentence onset. Targets, competitors, and distractors were again plausible but not predictable from the sentence context, as assessed in a sentence completion study with 17 further participants, who were asked to complete the 30 critical sentences with a Dutch target. Production probabilities for targets, competitors, and distractors were again low (targets: .00; competitors: .01; distractors: .00).

Results

Time window 0–300 ms after target onset

Baseline picture effects in the time window between 0 and 300 ms after target onset were assessed as in Experiment 1. The main effect of picture was not significant, F1 < 1; F2 < 1, nor was the main effect of phonological overlap, F1 < 1; F2 < 1. The interaction between picture and phonological overlap was also not significant, F1(1, 21) = 1.16, p = .29; F2 < 1.

Time window 300–800 ms after target onset

We ran an ANOVA on fixations in the time window between 300 and 800 ms after target onset (for a graphical presentation of the fixations in this interval, see Fig. 4), with picture (competitor vs. distractor) and phonological overlap (high vs. low) as within-participants variables and proportion of fixations as the dependent variable. The main effect of picture was not significant, F1 < 1; F2 < 1, nor was the main effect of phonological overlap, F1(1, 21) = 2.85, p = .11; F2(1, 18) = 2.14, p = .16. However, the interaction between picture and phonological overlap was significant, F1(1, 21) = 5.71, p < .05; F2(1, 18) = 7.47, p < .05. Simple-effects tests revealed that participants fixated more on competitors than on unrelated distractors only when the phonological overlap between the English competitor name and the Dutch target name was very high, F1(1, 21) = 6.37, p < .05; F2(1, 18) = 6.78, p < .05. When the English competitor name and the Dutch target name were phonologically less similar, fixation proportions on competitors and unrelated distractors did not differ, F1 < 1; F2 < 1.
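
The follow-up tests of the interaction compare competitors with distractors separately within each overlap level. A minimal by-participants sketch follows, assuming a hypothetical layout with one dict per participant keyed by (picture, overlap): because each simple effect has one degree of freedom, F1(1, n − 1) is the squared paired t statistic on the per-participant competitor-minus-distractor differences. The function name and example values are illustrative only; the by-items F2 tests are not shown.

```python
from math import sqrt
from statistics import mean, stdev


def simple_effects_of_picture(cells, levels=("high", "low")):
    """Competitor vs. distractor within each overlap level (by participants).

    cells: one dict per participant keyed by (picture, overlap), e.g.
    ("competitor", "high"), holding fixation proportions (hypothetical layout).
    Returns {level: (F, (1, n - 1))}, where F is the squared paired t statistic.
    """
    results = {}
    for level in levels:
        diffs = [c["competitor", level] - c["distractor", level] for c in cells]
        n = len(diffs)
        t = mean(diffs) / (stdev(diffs) / sqrt(n))
        results[level] = (t * t, (1, n - 1))
    return results


# Three hypothetical participants (fixation percentages, not study data).
data = [
    {("competitor", "high"): 20, ("distractor", "high"): 16,
     ("competitor", "low"): 18, ("distractor", "low"): 16},
    {("competitor", "high"): 22, ("distractor", "high"): 17,
     ("competitor", "low"): 20, ("distractor", "low"): 15},
    {("competitor", "high"): 19, ("distractor", "high"): 15,
     ("competitor", "low"): 21, ("distractor", "low"): 17},
]
effects = simple_effects_of_picture(data)
print(effects)
```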

Fig. 4 Fixation proportions for Dutch targets, competitors, and averaged distractors in Experiment 2 for high (a) and low (b) phonological overlap with the English competitor name

To investigate the effect of phonological similarity further, we ran the same three linear regression analyses. First, subjective phonological onset similarity ratings predicted the lexical competition effect for each pair (see Fig. 5a), F(1, 19) = 5.58, p < .05; R² = .24; β = .49, r = .49. Second, the proportion of overlapping phonemes (see Fig. 5b) also predicted the lexical competition effect, F(1, 19) = 5.16, p < .05; R² = .22; β = −.30, r = .47. Third, the duration of onset overlap (see Fig. 5c) was also reliably related to the lexical competition effect, F(1, 19) = 5.93, p < .05; R² = .25; β = −.42, r = .49. This provides evidence for language nonselectivity when sentences are listened to in L1, with the constraint that L2 representations appear to influence L1 recognition only when the phonological overlap between the relevant L1 and L2 representations is relatively large.

Fig. 5 Scatterplots of the lexical competition effect (in percentages) in L1 (Experiment 2) as a function of three measures of phonological similarity between items: self-ratings (a), overlap in phonemes (b), and overlap in time (c)

Discussion

The present study provides evidence for cross-lingual lexical interactions in bilingual auditory word recognition. When listening to sentences in L2, participants fixated more on competitor pictures whose L1 names were phonologically similar to the target name than on phonologically unrelated distractor pictures. This effect was independent of the degree of cross-lingual onset overlap. This is consistent with the results of the isolated-word studies by Marian and Spivey (2003) and Weber and Cutler (2004) but contrasts with the sentence studies by Chambers and Cooke (2009) and FitzPatrick and Indefrey (2010). Hence, a sentence context does not necessarily eliminate cross-lingual interactions, even though sentences provide a strong language cue for lexical search that is absent in isolated word recognition.

Crucially, we also showed for the first time that a sentence context does not annul cross-lingual lexical interactions when people listen in their native language. Participants fixated significantly more on pictures whose L2 name had an overlapping onset than on phonologically unrelated distractors, but only when the phonological similarity was relatively large (e.g., flower–fles [bottle]). When the onset overlap between the Dutch target and the English competitor was smaller (e.g., comb–koffer [suitcase]), competitor pictures did not interfere with L1 target recognition.

In addition to testing for cross-linguistic competition in an L1 spoken sentence context, this study may also help explain the inconsistent findings on the influence of L2 knowledge on isolated L1 word recognition. Because cross-lingual competition in L1 processing is strongly influenced by even small variations in the phonological overlap between the lexical representations of the two languages, this could explain (in addition to language profile factors) why Weber and Cutler (2004) did not find an L1 effect, whereas Spivey and Marian (1999) did. Indeed, the Weber and Cutler stimuli would correspond to the less similar stimuli in the present study, which also showed no effect. It is plausible that this sensitivity to phonological overlap arises especially in L1 processing: Overall activation is much weaker in L2 representations, so cross-lingual competition arising from them is more susceptible to variations in the degree of activation transfer.

At a theoretical level, the between-language competition effect is consistent with monolingual models of auditory word recognition such as the distributed model of speech perception (Gaskell & Marslen-Wilson, 1997), the neighborhood activation model (Luce & Pisoni, 1998), Shortlist (Norris, 1994), and exemplar models (Goldinger, 1998), if these models are extended with the assumption that L2 representations are part of the same system as, and interact with, L1 representations. Moreover, the finding that L2 knowledge competes with L1 recognition only when the phonological similarity between languages is sufficiently strong may be explained if these models assume that language-specific subphonemic cues produce higher (bottom-up) activation of lexical representations in the target language. Alternatively, this subphonemic information could operate through top-down competition if language-specific phonemes and phonetic variations activate language nodes before lexical representations are activated. The latter would be predicted by interactive activation models such as TRACE (Elman & McClelland, 1988; McClelland & Elman, 1986).

To conclude, the present study reports lexical competition effects between languages when unilingual sentences are listened to in both L2 and L1. This suggests that bilingual listeners do not use the cues inherent to the speech signal or to the language of a sentence to restrict activation to a single lexicon. The resulting cross-lingual competition effects are highly sensitive to phonological similarity, especially in L1 processing, where competing L2 activation is weak.

Notes

To increase comparability between our study and the work of Weber and Cutler (2004), we chose the time window between 300 and 800 ms after target onset to analyze the proportion of fixations, because an eye movement is estimated to be programmed about 200 ms before it is launched (e.g., Fischer, 1992; Matin, Shao, & Boff, 1993; Saslow, 1967). Consequently, 300 ms after target onset is approximately the point at which fixations driven by the first 100 ms of acoustic information from the target word can be seen.

References

Altmann, G. T. M. (2004). Language-mediated eye movements in the absence of a visual world: The ‘blank screen paradigm’. Cognition, 93, 79–87.

Andruski, J. E., Blumstein, S. E., & Burton, M. W. (1994). The effect of subphonetic differences on lexical access. Cognition, 52, 163–187.

Baayen, R. H., Piepenbrock, R., & Van Rijn, H. (1993). The CELEX lexical database [CD-ROM]. Philadelphia: Linguistic Data Consortium, University of Pennsylvania.

Chambers, C. G., & Cooke, H. (2009). Lexical competition during second-language listening: Sentence context, but not proficiency, constrains interference from the native lexicon. Journal of Experimental Psychology: Learning, Memory, and Cognition, 35, 1029–1040.

Dijkstra, T., & Van Heuven, W. J. B. (1998). The BIA model and bilingual word recognition. In J. Grainger & A. M. Jacobs (Eds.), Localist connectionist approaches to human cognition (pp. 189–225). Mahwah, NJ: Lawrence Erlbaum Associates.

Duyck, W. (2005). Translation and associative priming with cross-lingual pseudohomophones: Evidence for nonselective phonological activation in bilinguals. Journal of Experimental Psychology: Learning, Memory, and Cognition, 31, 1340–1359.

Duyck, W., Desmet, T., Verbeke, L. P. C., & Brysbaert, M. (2004). WordGen: A tool for word selection and nonword generation in Dutch, English, German, and French. Behavior Research Methods, Instruments, & Computers, 36, 488–499.

Duyck, W., Van Assche, E., Drieghe, D., & Hartsuiker, R. J. (2007). Visual word recognition by bilinguals in a sentence context: Evidence for nonselective lexical access. Journal of Experimental Psychology: Learning, Memory, and Cognition, 33, 663–679.

Elman, J. L., & McClelland, J. L. (1988). Cognitive penetration of the mechanisms of perception: Compensation for coarticulation of lexically restored phonemes. Journal of Memory and Language, 27, 143–165.

Fischer, B. (1992). Saccadic reaction time: Implications for reading, dyslexia and visual cognition. In K. Rayner (Ed.), Eye Movements and Visual Cognition: Scene Perception and Reading (pp. 31–45). New York: Springer Verlag.

FitzPatrick, I., & Indefrey, P. (2010). Lexical competition in nonnative speech comprehension. Journal of Cognitive Neuroscience, 22, 1165–1178.

Gaskell, M. G., & Marslen-Wilson, W. D. (1997). Integrating form and meaning: A distributed model of speech perception. Language and Cognitive Processes, 12, 613–656.

Ghyselinck, M., Custers, R., & Brysbaert, M. (2003). Age-of-acquisition ratings for 2332 Dutch words from 49 different semantic categories. Psychologica Belgica, 43, 181–214.

Goldinger, S. D. (1998). Echoes of echoes? An episodic theory of lexical access. Psychological Review, 105, 251–279.

Ju, M., & Luce, P. A. (2004). Falling on sensitive ears: Constraints on bilingual lexical activation. Psychological Science, 15, 314–318.

Luce, P. A., & Pisoni, D. B. (1998). Recognizing spoken words: The neighborhood activation model. Ear and Hearing, 19, 1–36.

Marian, V., & Spivey, M. (2003). Bilingual and monolingual processing of competing lexical items. Applied Psycholinguistics, 24, 173–193.

Matin, E., Shao, K., & Boff, K. (1993). Saccadic overhead: Information processing time with and without saccades. Perception & Psychophysics, 53, 372–380.

McClelland, J. L., & Elman, J. L. (1986). The TRACE model of speech perception. Cognitive Psychology, 18, 1–86.

McMurray, B., Tanenhaus, M. K., & Aslin, R. N. (2002). Gradient effects of within-category phonetic variation on lexical access. Cognition, 86, B33–B42.

Norris, D. G. (1994). Shortlist: A connectionist model of continuous speech recognition. Cognition, 52, 189–234.

Salverda, A. P., Brown, M., & Tanenhaus, M. K. (2011). A goal-based perspective on eye movements in visual world studies. Acta Psychologica, 137, 172–180.

Salverda, A. P., Dahan, D., & McQueen, J. M. (2003). The role of prosodic boundaries in the resolution of lexical embedding in speech comprehension. Cognition, 90, 51–89.

Saslow, M. (1967). Latency for saccadic eye movement. Journal of the Optical Society of America, 57, 1030–1033.

Schulpen, B., Dijkstra, T., Schriefers, H. J., & Hasper, M. (2003). Recognition of interlingual homophones in bilingual auditory word recognition. Journal of Experimental Psychology: Human Perception and Performance, 29, 1155–1178.

Severens, E., Van Lommel, S., Ratinckx, E., & Hartsuiker, R. J. (2005). Timed picture naming norms for 590 pictures in Dutch. Acta Psychologica, 119, 159–187.

Spivey, M. J., & Marian, V. (1999). Cross talk between native and second languages: Partial activation of an irrelevant lexicon. Psychological Science, 10, 281–284.

Tanenhaus, M. K., Spivey-Knowlton, M. J., Eberhard, K. M., & Sedivy, J. C. (1995). Integration of visual and linguistic information during spoken language comprehension. Science, 268, 1632–1634.

Van Assche, E., Duyck, W., Hartsuiker, R. J., & Diependaele, K. (2009). Does bilingualism change native-language reading? Cognate effects in a sentence context. Psychological Science, 20, 923–927.

Weber, A., & Cutler, A. (2004). Lexical competition in non-native spoken-word recognition. Journal of Memory and Language, 50, 1–25.

Acknowledgments

This research was made possible by the Research Foundation-Flanders (FWO), of which Evelyne Lagrou is a research fellow.


Appendices

Appendix 1

Experimental stimuli used in Experiment 1 (overlapping onsets of target–competitor pairs are underlined)

Very similar phonological overlap

| English target | Dutch competitor | Translation of competitor | Unrelated distractor | Unrelated distractor | Sentence |
|---|---|---|---|---|---|
| box | bokaal | goblet | skirt | tail | The shot of the man hit a box and missed the target. |
| curtain | kussen | pillow | pig | shirt | My friend Hannah was looking at a curtain in that neighborhood. |
| duck | duim | thumb | cloud | tape | Her little brother has drawn a duck and is now playing outside. |
| flower | fles | bottle | dog | orange | That man finally got a flower, and that’s why he is happy. |
| slide | sleutel | key | king | lobster | One day she found a slide in the garden. |
| speaker | spook | ghost | mouse | seal | I had a dream about a speaker during my sleep. |
| spoon | spiegel | mirror | floor | truck | For his design of the spoon the designer won the first prize. |
| stairs | stoel | chair | neck | cell | To have a better look at the stairs she walked to the other side. |
| stamp | steen | rock | feather | peanut | When Josh showed up with a stamp, everybody laughed. |
| strawberry | strik | bow | toe | flashlight | She discovered a strawberry when she opened the box. |

Less similar phonological overlap

| English target | Dutch competitor | Competitor translation | Unrelated distractor | Unrelated distractor | Sentence |
|---|---|---|---|---|---|
| broom | broek | pants | peach | record | During a walk in the city, he saw a broom in the store. |
| carpet | kast | cupboard | hammer | button | They almost found everything, but were still looking for a carpet in that house. |
| carrot | kerk | church | spider | dentist | The stranger could not believe his eyes when he saw a carrot in that village. |
| comb | koffer | suitcase | shoe | nail | The woman made a painting of the comb with the red paint. |
| drill | draaimolen | carousel | house | bird | Lucie took her bike and bought a drill at the market. |
| farm | fakkel | torch | basket | brush | She received a farm as a birthday present. |
| road | rolstoel | wheelchair | cheese | boy | Because I wanted to see the road I looked outside. |
| scissors | citroen | lemon | chicken | trash | The only thing she wanted were scissors to throw with. |
| tree | trommel | drum | necklace | bucket | She saw a tree at the corner of the street. |
| wallet | wasknijper | clothespin | can opener | train | He says that a wallet is the most stupid invention. |

Appendix 2

Experimental stimuli used in Experiment 2 (overlapping onsets of target–competitor pairs are underlined)

Very similar phonological overlap

| Dutch target | Target translation | English competitor | Unrelated distractor | Unrelated distractor | Sentence |
|---|---|---|---|---|---|
| bokaal | goblet | box | skirt | tail | Het schot van de man raakte een bokaal en miste het doel. |
| kussen | pillow | curtain | pig | shirt | Mijn vriendin Hannah bekeek een kussen in die buurt. |
| duim | thumb | duck | cloud | tape | Haar kleine broer maakte een tekening van een duim, en speelt nu buiten. |
| fles | bottle | flower | dog | orange | Die man kreeg eindelijk een fles, en was daarom gelukkig. |
| sleutel | key | slide | king | lobster | Op een dag vond ze een sleutel in de tuin. |
| spook | ghost | speaker | mouse | seal | Ik had een droom over een spook tijdens mijn slaap. |
| spiegel | mirror | spoon | floor | truck | Voor zijn ontwerp van een spiegel won de ontwerper de eerste prijs. |
| stoel | chair | stairs | neck | cell | Opdat ze beter kon kijken naar een stoel wandelde ze naar de andere kant. |
| steen | rock | stamp | feather | peanut | Toen Jos aankwam met een steen, lachte iedereen. |
| strik | bow | strawberry | toe | flashlight | Ze ontdekte een strik toen ze de doos opende. |

Less similar phonological overlap

| Dutch target | Target translation | English competitor | Unrelated distractor | Unrelated distractor | Sentence |
|---|---|---|---|---|---|
| broek | pants | broom | peach | record | Tijdens een wandeling in de stad zag hij een broek in de winkel. |
| kast | cupboard | carpet | hammer | button | Ze vonden bijna alles, maar waren nog op zoek naar een kast in dat huis. |
| kerk | church | carrot | spider | dentist | De vreemde kon zijn ogen niet geloven bij het zien van een kerk in dat dorp. |
| koffer | suitcase | comb | shoe | nail | De kunstenares schilderde een koffer met de rode verf. |
| draaimolen | carousel | drill | house | bird | Lucie nam haar fiets en zag een draaimolen op de markt. |
| fakkel | torch | farm | basket | brush | Ze kreeg een fakkel als geschenk voor haar verjaardag. |
| rolstoel | wheelchair | road | cheese | boy | Omdat ik wou kijken naar een rolstoel keek ik naar buiten. |
| citroen | lemon | scissors | chicken | trash | Ze wilde niets anders dan citroen om mee te gooien. |
| trommel | drum | tree | necklace | bucket | Ze zag een trommel op de hoek van de straat. |
| wasknijper | clothespin | wallet | can opener | train | Volgens hem is een wasknijper de stomste uitvinding. |

Cite this article

Lagrou, E., Hartsuiker, R.J. & Duyck, W. Interlingual lexical competition in a spoken sentence context: Evidence from the visual world paradigm. Psychon Bull Rev 20, 963–972 (2013). https://doi.org/10.3758/s13423-013-0405-4

DOI: https://doi.org/10.3758/s13423-013-0405-4