Abstract

The Effortfulness Hypothesis suggests that sensory impairment (either simulated or age-related) may decrease capacity for semantic integration in language comprehension. We directly tested this hypothesis by measuring resource allocation to different levels of processing during reading (i.e., word vs. semantic analysis). College students read three sets of passages word-by-word, one at each of three levels of dynamic visual noise. There was a reliable interaction between processing level and noise, such that visual noise increased resources allocated to word-level processing, at the cost of attention paid to semantic analysis. Recall of the most important ideas also decreased with increasing visual noise. Results suggest that sensory challenge can impair higher-level cognitive functions in learning from text, supporting the Effortfulness Hypothesis.

Introduction

Both environmental noise and age-graded sensory declines (Speranza, Daneman, & Schneider, 2000) compromise sensory encoding of the linguistic stimuli in everyday communication. According to the Effortfulness Hypothesis (Wingfield, Tun, & McCoy, 2005), sensory challenge created by a muddy signal or by aging that makes perceptual processing effortful consumes attentional resources that would otherwise be used for higher cognitive functions. The current study was designed to directly test this hypothesis with healthy college students by adding visual noise (Speranza, Daneman, & Schneider, 2000) to the text.

The Effortfulness Hypothesis

The Effortfulness Hypothesis was first proposed by Rabbitt (1968), who tested young adults with normal hearing in a digit recall task. The first list was presented in a quiet background and the second one was heard either in quiet or in a noisy background. He found that recall for the first list was impaired when followed by a list embedded in noise. He concluded that poor memory for the first list was due to the deprivation of processing resources for rehearsal, which were allocated to the challenge of decoding the second list under noisy conditions. Similarly, Wingfield et al. (2005; McCoy, Tun, Cox, Colangelo, Stewart, & Wingfield, 2005) found that when older adults with mild-to-moderate hearing loss and those with relatively better hearing were required to recall an auditorily presented three-word list, both groups performed equally well at recalling the final word, implying that the hearing-impaired elders could identify the auditory input. However, they showed poorer recall of the earlier words in the list. These counterintuitive findings (see also Heinrich, Schneider, & Craik, 2008) provided support for the Effortfulness Hypothesis that sensory challenge taxes resources for higher-order cognition (i.e., memory) and creates performance deficits that are not directly due to data-limited processing (Norman & Bobrow, 1975) of the signal itself.

The Effortfulness Hypothesis and sentence processing

There is some evidence that the Effortfulness Hypothesis may apply to language processing as well. Using a simulated visual impairment technique to test normally sighted younger adults, Dickinson and Rabbitt (1991) found that “sensory declines” not only slowed down sentence reading speed, but also reduced participants’ recall performance. They concluded that, “sensory impairment can have significant secondary effects on higher level processes, such as memory, because it demands additional information processing capacity, which becomes unavailable for inference, rehearsal and association” (p. 301). However, there was no direct measure in their study of the underlying mechanisms impacted by impaired sensory processing.

There is evidence that environmental noise impairs the ability to instantiate word meanings and integrate them in text. For example, research with ERPs has revealed that, compared to intact conditions, acoustic distortion reduced the semantic priming effect measured by N400 amplitudes (i.e., the difference between incongruent/unrelated target words and congruent/related target words) (Aydelott, Dick, & Mills, 2006). In addition, N400 latency has also been shown to be significantly delayed under conditions of auditory and visual distraction (Aydelott et al., 2006; Holcomb, 1993).

Sentence processing requires both decoding of the surface form (e.g., word-level processing) and deep-level conceptual integration that forms the basis for semantic analysis to represent the meaning of the sentence (e.g., textbase processing) (McNamara & Magliano, 2009). Attentional allocation to the surface form is obligatory and prerequisite for textbase-level analysis, while allocation of resources to textbase processing shows more variability from individual to individual and as a function of task demands and is often correlated with immediate recall performance (Haberlandt, Graesser, Schneider, & Kiely, 1986; Stine-Morrow, Miller, & Hertzog, 2006).

Current study

Evidence supporting the Effortfulness Hypothesis in sentence processing has generally relied on memory performance and has not directly measured underlying processes. In the current study, a resource allocation approach (Stine-Morrow et al., 2006; Stine-Morrow & Miller, 2009) was used for this purpose. Participants read short text passages embedded in visual noise as reading times were measured. These reading times within each noise condition were regressed onto selected text features that operationalized particular linguistic processes. In this way, reading times were decomposed into attention/time allocated to particular computations related to word- and textbase-level processing. The resulting array of regression coefficients provides indicators of the time (in ms) that is spent on specific processes, and can be interpreted as the individual reader’s allocation policy in regulating language comprehension (see Stine-Morrow et al., 2006 for a review). For example, the coefficient relating reading time to log word frequency represents the time spent on lexical access (Millis, Simon, & tenBroek, 1998), whereas the coefficient relating reading time to newly introduced concepts represents the extra time spent on immediate conceptual instantiation (Stine-Morrow, Noh, & Shake, 2010). These coefficients, especially those representing conceptual processing, are somewhat reliable across time (Stine-Morrow, Milinder, Pullara, & Herman, 2001) and across different types of text (Stine-Morrow, Miller, Gagne, & Hertzog, 2008). Coefficients representing time allocated to conceptual processing are predictive of text memory, suggesting that such attentional regulation is a critical aspect of creating a semantic representation of text to support memory (e.g., Haberlandt et al., 1986; Stine-Morrow et al., 2010).

Measuring resources allocated to various levels of sentence processing as a function of noise provides a direct test of the Effortfulness Hypothesis in reading comprehension. It was hypothesized that visual noise would increase attention required for word-level processing, drawing resources away from textbase-level processing to accommodate the demands at the word level. As a consequence, semantic organization of ideas and sentence memory were expected to be disrupted by noise (Dickinson & Rabbitt, 1991), so as to compromise recall performance.

Methods

Participants

Subjects in the study were 36 undergraduates (aged 18–24 years, M = 20.1 years), with a mean loaded working memory (WM) span task score (reading and listening; Stine & Hindman, 1994) of 5.2 (SD = 1.3). All participants had normal or corrected-to-normal vision based on a screening test using both the Snellen and the Rosenbaum vision charts.

Materials, design, and equipment

Text materials for this study were three sets (A, B, C) of 24 test sentences dealing with diverse topics in science, nature and history, which were adapted from those used by Stine-Morrow et al. (2001). These three sets of target sentences were equated in terms of length (18 words) and mean number of propositions (M A = 8.1, SD A = 1.4; M B = 8.4, SD B = 1.0; M C = 8.0, SD C = 1.4). The three sets of sentences were counterbalanced across three noise conditions, and the order of noise conditions was counterbalanced across subjects, creating nine unique stimulus sets. Each participant read all three sets of sentences, with one set of materials presented at each of three levels of visual noise. Each test sentence was followed by an unanalyzed filler sentence for obtaining an accurate estimate of conceptual wrap-up at the sentence boundary that was not affected by retrieval planning.
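One way the nine stimulus sets could arise is sketched below: three rotations of the sentence sets across noise levels, crossed with three rotations of noise order. The rotation (Latin-square) scheme and the names `rotations` and `stimulus_lists` are assumptions made for illustration; the text reports only that both factors were counterbalanced and that nine unique sets resulted.

```python
SETS = ["A", "B", "C"]            # sentence sets named in the text
NOISE = ["none", "low", "high"]   # three visual noise levels

def rotations(items):
    """Return all cyclic rotations of a list (one Latin-square row each)."""
    return [items[i:] + items[:i] for i in range(len(items))]

def stimulus_lists():
    """Cross set-to-noise assignments with noise orders (assumed scheme)."""
    lists = []
    for set_rotation in rotations(SETS):
        # Which sentence set is shown at which noise level.
        assignment = dict(zip(NOISE, set_rotation))
        for order in rotations(NOISE):
            # Sequence of (noise level, sentence set) blocks for one list.
            lists.append([(noise, assignment[noise]) for noise in order])
    return lists

all_lists = stimulus_lists()
```

Under this assumed scheme, 36 participants would divide evenly into four per list.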

Text was embedded in visual noise by using two computer displays. The displays were arranged perpendicularly with a beamsplitter (a 2” optical cube) positioned to combine the visual noise on one monitor (driven by a G3 Mac) with the text on the other (projected by an iMac). Participants viewed the displays through the beamsplitter cube in an otherwise-darkened room. The brightness of both monitors as measured through the cube was equated, with mean luminance 15.5 cd/m² for each monitor (measured using a PhotoResearch PR650 spectroradiometer). The distance from the center of the cube to each monitor was equal (43.8 cm). In this way, we ensured that the two screens smoothly bonded together as though the text and the noise were presented in a single image. The physics of the beamsplitter guaranteed that the two images were linearly combined.

Dynamic visual noise was generated using Matlab software on the G3 Mac (Apple 17” CRT Studio Display with 256 colors, OS 8.6). The noise display was corrected for intensity nonlinearity (gamma correction) using software lookup tables. The noise was a 128 × 128 rectangular array of pixels, each subtending approximately 8 arcmin of visual angle at the eye, updated at the frame rate of the monitor (75 Hz). The noise had a Gaussian amplitude distribution (truncated at ±2 standard deviations) with mean luminance at the background level of the display, 15.5 cd/m². Three noise power levels were created by varying the contrast (ΔL/L) of the noise pixels (Westheimer, 1985). Here, L is the mean luminance (15.5 cd/m²) and ΔL is the change about the mean. The mean brightness was set at half of the available range, so ΔL/L can vary from –1 (the pixel is black; ΔL = –L) to 1 (the pixel is twice L; ΔL = L). Noise pixel luminance then had a potential range of 0 to 31 cd/m², corresponding to a –1 to +1 range for contrast. Noise power was scaled in terms of the standard deviation of contrasts on this two-fold range (σ = 0.0, 0.5, 0.7) (see Note 1).
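The noise definition can be made concrete with a short sketch in Python (the study itself used Matlab). It draws one frame of contrasts from a Gaussian truncated at ±2 standard deviations and converts each contrast c to a luminance of L(1 + c). Two details are assumptions, not reported in the text: truncation is done by resampling, and contrasts are clipped to the displayable range of –1 to +1. The function names are hypothetical.

```python
import random

MEAN_LUMINANCE = 15.5  # cd/m^2, background level reported in the text
SIZE = 128             # the noise array was 128 x 128 pixels

def truncated_contrast(sigma, rng, limit_sd=2.0):
    """Draw a zero-mean Gaussian contrast within +/- limit_sd * sigma.

    Assumption: out-of-range draws are resampled (the text says only
    that the distribution was truncated).
    """
    if sigma == 0.0:
        return 0.0
    while True:
        c = rng.gauss(0.0, sigma)
        if abs(c) <= limit_sd * sigma:
            return c

def noise_frame(sigma, rng, size=SIZE):
    """Return one frame of pixel luminances, L * (1 + contrast)."""
    frame = []
    for _ in range(size):
        row = []
        for _ in range(size):
            c = truncated_contrast(sigma, rng)
            # Assumption: clip to the physically displayable contrast range.
            c = max(-1.0, min(1.0, c))
            row.append(MEAN_LUMINANCE * (1.0 + c))
        frame.append(row)
    return frame

rng = random.Random(1)
no_noise = noise_frame(0.0, rng)   # uniform field at mean luminance
low_noise = noise_frame(0.5, rng)  # luminances span at most 0 to 31 cd/m^2
```

A dynamic display would regenerate such a frame on every monitor refresh (75 Hz in the study).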

Passages were presented using the moving window paradigm programmed in SuperLab on the iMac (iMac 17” LCD monitor 1440*900 with 32-bit color, OS 10.4.10). The space for each character (Monaco 24-point nonproportional font) subtended approximately 21 × 14 arcmin at the eye.

To ensure that participants could identify isolated words in the noisy background and that any deficit in text memory was not due to perceptual or data-limited processes (Norman & Bobrow, 1975), a lexical decision task was administered. Eighteen high-frequency, 18 low-frequency, and 18 non-words controlled for length were randomly assigned to three lists, resulting in six words from each category in each list. Participants saw only one list of words at each level of noise.

Procedure

Participants were tested individually and the entire experimental session lasted about 90 min. For the reading task, each participant was instructed to read through the cube using the left eye, with the right eye covered by an eye pad. This was done so that subjects could read through the 2” cube while avoiding binocular competition. Participants kept their heads still on a chin-rest; the distance from the eye to the center of the cube was fixed at 2.5”.

First, participants completed a lexical decision task, before which they were told that some of the words would be “presented with some distractions like the static or snow on a fuzzy television picture.” Afterwards, participants read the experimental texts and were encouraged to read as naturally as possible and “to remember as much of the information from the passages as possible,” because they would be occasionally asked to recall some of these passages. A “READY?” signal was presented in the center of the screen at the beginning of each trial. The participants pressed the space bar, which removed this signal and triggered a fixation point (+) at the top left corner of the screen, indicating the spot where the first word of the passage would appear. The configuration of the entire two-sentence passage was indicated by dashes and punctuations that followed the fixation point. Successive key presses caused the text to be presented one word at a time.

There were three practice trials in each noise condition to familiarize participants with the procedure. The phrase “PLEASE RECALL NOW” appeared on the screen after a randomly selected third of the passages, signaling that the participant should recall aloud the passage they had just read. Participants’ recall protocols were recorded and later transcribed and scored, using a gist criterion for propositional recall. Two independent raters scored a subset of protocols (from three randomly chosen participants) with good reliability (r = 0.95).

Results and discussion

Lexical decision task

Accuracy and response time data from the lexical decision task were each analyzed in a noise by word frequency ANOVA. There was no effect of noise on accuracy (M none = 96.8%, se = 1.1%, M low = 95.1%, se = 1.8%, M high = 97.2%, se = 0.7%), F < 1; but accuracy was higher for high-frequency words (M H = 98.5%, se = 0.8%, M L = 94.3%, se = 1.0%), F(1, 35) = 26.50, p < 0.001. For response time (based on correct responses), the main effects of noise (M none = 893 ms, se = 41, M low = 977 ms, se = 50, M high = 1,195 ms, se = 67), F(2, 70) = 14.58, p < 0.001, and word frequency (M H = 926 ms, se = 43, M L = 1,116 ms, se = 45), F(1, 35) = 53.79, p < 0.001, were reliable; but the interaction was not, F < 1. These findings indicated that readers could accurately identify isolated words in noise, but that noise decreased lexical processing speed, an effect likely to occur at the character recognition stage of processing (Pelli, Farell, & Moore, 2003).

Reading time

Reading time per word increased as noise increased, F(2, 70) = 14.10, p < 0.001, with reading times of 561 ms (se = 25), 581 ms (se = 22), and 637 ms (se = 29) for no-, low-, and high-noise conditions, respectively.

Patterns of resource allocation

Regression analysis of reading times onto linguistic features was used to decompose the reading time into attention (time) allocated to component processes (e.g., Lorch & Myers, 1990) underlying sentence understanding. In order to estimate resources allocated to different computations needed for sentence processing, each word in the sentence was coded in terms of an array of word-level and textbase-level features. The word-level features included the number of syllables and log word frequency of each word in the sentence, in order to estimate the time allocated to orthographic decoding and to word meaning access, respectively. Conceptual integration of the textbase was operationalized as the responsiveness of reading time to two variables. First, each word was dummy-coded (0/1) for whether it was a newly introduced noun concept in the sentence. An increase in reading time as a function of this variable provides an estimate of the time allocated to immediate processing of conceptual information (35.1% of the words were coded as new concepts). Second, the cumulative conceptual load at sentence boundaries was calculated by multiplying the total number of concepts introduced in the sentence by the dummy-coded variable for the sentence-final word (1 out of 18 = 5.6% of the total words), to estimate conceptual integration at sentence wrap-up (Haberlandt et al., 1986; Stine-Morrow et al., 2010). Reading times for each participant within each visual noise condition were decomposed by regressing them onto these four features to operationalize attention allocated to various levels of sentence processing. Note that using the product term to isolate the wrap-up term produces a coefficient that represents the time per new concept allocated at that point; thus, estimates of resource allocation for immediacy in conceptual integration and wrap-up were on the same scale. This collection of variables represented essential sources of engagement for word and textbase processing; these variables were also minimally correlated to avoid multicollinearity.
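The decomposition can be illustrated with a minimal sketch: each word is coded on the four features, and one reader's word-by-word reading times in one noise condition are regressed onto them by ordinary least squares. The intercept term, the helper names (`code_sentence`, `ols`), and the synthetic coefficients are assumptions made for illustration, not values or software from the study.

```python
import random

def code_sentence(words):
    """Code one sentence; words are (syllables, log_freq, is_new_concept)."""
    n_concepts = sum(w[2] for w in words)
    rows = []
    for i, (syllables, log_freq, is_new) in enumerate(words):
        is_final = 1 if i == len(words) - 1 else 0
        # Wrap-up predictor: total new concepts, only on the final word.
        rows.append((syllables, log_freq, is_new, n_concepts * is_final))
    return rows

def ols(rows, y):
    """Least-squares coefficients [intercept, b1, ..., bk] via normal equations."""
    X = [[1.0] + list(r) for r in rows]
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(x[i] * x[j] for x in X) for j in range(k)]
         + [sum(x[i] * yi for x, yi in zip(X, y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]

# Synthetic check: 24 sentences of 18 words with invented "true" coefficients
# (intercept, syllables, log frequency, new concept, wrap-up), all in ms.
TRUE = [300.0, 30.0, -20.0, 40.0, 25.0]
rng = random.Random(0)
rows = []
for _ in range(24):
    words = [(rng.randint(1, 4), rng.uniform(0.0, 5.0), rng.randint(0, 1))
             for _ in range(18)]
    rows.extend(code_sentence(words))
reading_times = [TRUE[0] + sum(b * x for b, x in zip(TRUE[1:], r)) for r in rows]
coefs = ols(rows, reading_times)
```

Because the synthetic reading times contain no error term, `coefs` recovers the invented values; with real data each coefficient would be an estimate of time allocated per unit of that feature, controlling for the others.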

Individual parameters

The coefficients from individual regressions were trimmed such that regression coefficients more than 3 SD from the group mean within each condition were replaced with that mean (<2.1% of the data). Means and standard errors for individual parameters are presented in Table 1. Each coefficient may be interpreted as an estimate of time allocated to a particular type of computation while controlling for the impact of other demands. For example, referring to Table 1, reading time in the no-noise condition increased by 30 ms per syllable, controlling for the effects of word frequency, new concepts and sentence boundary wrap-up on reading time.
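A minimal sketch of the trimming rule follows. It assumes that the group mean and the sample standard deviation are computed from all participants in the condition, including any outlier, which the text does not specify; `trim_outliers` is a hypothetical name, and the example values are invented.

```python
from statistics import mean, stdev

def trim_outliers(coefs, n_sd=3.0):
    """Replace coefficients more than n_sd SDs from the mean with the mean."""
    m = mean(coefs)
    sd = stdev(coefs)  # sample SD; assumption, not stated in the text
    return [m if abs(c - m) > n_sd * sd else c for c in coefs]

# Invented example: 35 typical per-syllable coefficients plus one extreme value.
example = [30.0 + (i % 5) for i in range(35)] + [1000.0]
trimmed = trim_outliers(example)
```

Only the extreme value is replaced; the remaining coefficients pass through unchanged.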

Consistent with the Effortfulness Hypothesis, the effect of word frequency on resource allocation was exaggerated by noise, F(2, 70) = 3.50, p < 0.05, and the sentence wrap-up effect was reduced, F(2, 70) = 3.62, p < 0.05. With noise, more time was allocated to lexical access and less time was allocated to conceptual integration. There were also nonsignificant trends for increased allocation to orthographic decoding and decreased allocation for processing new concepts with increasing noise, F < 1 for both.

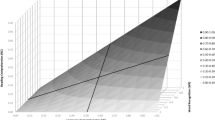

Construct-level analyses

To specifically test the key implication of the Effortfulness Hypothesis that word-level and textbase-level processing would diverge in noise, composite scores were created in order to get reliable estimates of word-level and textbase-level processing at the construct level. We obtained the index of word-level processing by averaging z-scores of syllable and reverse-coded log word frequency parameters and the index of textbase-level processing by averaging z-scores of new concept and sentence wrap-up parameters. These indices were analyzed in a 3 (noise intensity) × 2 (level of processing) repeated measures ANOVA (see Note 2), in which both noise and level of sentence processing were within-subject variables. The interaction between noise and level was reliable, F(2, 70) = 5.21, p < 0.01 (Fig. 1). Consistent with the Effortfulness Hypothesis, noise tended to increase word-level processing, F(2, 70) = 2.96, p = 0.06, and to significantly decrease textbase-level processing, F(2, 70) = 3.95, p < 0.05.
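The composite indices can be sketched as follows. The pool over which coefficients are standardized is not stated in the text, so this sketch simply z-scores whatever list it is given; the function names and the example values are hypothetical.

```python
from statistics import mean, stdev

def zscores(values):
    """Standardize a list of coefficients to mean 0 and SD 1."""
    m, sd = mean(values), stdev(values)
    return [(v - m) / sd for v in values]

def composite(*parameter_sets):
    """Average z-scores across parameters, observation by observation."""
    standardized = [zscores(p) for p in parameter_sets]
    return [mean(column) for column in zip(*standardized)]

def word_level_index(syllable_coefs, log_freq_coefs):
    # Reverse-code frequency: a more negative slope means more time spent
    # on rare words, i.e., more allocation to lexical access.
    return composite(syllable_coefs, [-b for b in log_freq_coefs])

def textbase_level_index(new_concept_coefs, wrapup_coefs):
    return composite(new_concept_coefs, wrapup_coefs)

# Invented coefficients for three observations.
word_index = word_level_index([10.0, 20.0, 30.0], [-5.0, -10.0, -15.0])
```

In this invented example the two standardized parameters agree exactly, so `word_index` is approximately [-1, 0, 1].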

Recall performance

A propositional analysis of the 24 sentences yielded 172 propositions. Visual noise did not have an effect on overall propositional recall, F1/2 < 1. To assess the effects of noise on recall in a more fine-grained way, we examined the quality of recall with a memorability analysis (Hartley, 1993; Rubin, 1985; Stine & Wingfield, 1988; see Note 3). We divided the propositions into three memorability groups based on the normative probability of recall in the no-noise condition. Recall was then analyzed in a 3 (Noise: none, low, high) × 3 (Memorability: low, medium, high; see Note 4) repeated measures ANOVA. Noise did not affect overall recall, F1/2 < 1. However, the Noise by Memorability interaction was highly reliable in both subject and item analyses, F1(4, 136) = 3.21, p < 0.05; F2(4, 338) = 4.80, p = .001. Visual noise tended to disproportionately disrupt recall of more core propositions, F1(2, 70) = 1.70, p = 0.19; F2(2, 100) = 4.52, p < 0.05, and concomitantly increased recall of less central propositions in text, F1(2, 70) = 3.99, p < 0.05; F2(2, 114) = 5.87, p < 0.01, whereas recall of moderately memorable propositions remained unaffected, F1/2 < 1 (Table 2; see Note 5).
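The memorability grouping can be sketched as below, using the cutoffs given in the notes to this article (p(recall) < .50 for low, p(recall) > .78 for high). The function names and the 0/1 recall-matrix layout are assumptions made for illustration.

```python
def normative_recall(recall_matrix):
    """Proportion of readers recalling each proposition.

    recall_matrix: one row per reader, one 0/1 entry per proposition,
    taken from the no-noise condition (layout is an assumption).
    """
    n_readers = len(recall_matrix)
    return [sum(column) / n_readers for column in zip(*recall_matrix)]

def memorability_group(p_recall, low_cut=0.50, high_cut=0.78):
    """Assign a proposition to a memorability bin by its recall probability."""
    if p_recall < low_cut:
        return "low"
    if p_recall > high_cut:
        return "high"
    return "medium"

# Invented example: four readers, three propositions.
matrix = [[1, 0, 1],
          [1, 0, 1],
          [1, 1, 0],
          [1, 0, 0]]
groups = [memorability_group(p) for p in normative_recall(matrix)]
```

Here the propositions are recalled by 100%, 25%, and 50% of readers, so `groups` is `["high", "low", "medium"]`.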

Conclusions

Faced with a degenerated linguistic signal, readers reallocated attentional resources so as to encode the surface form at the cost of resources for text-level semantic analysis. Lexical decision data showed that participants could clearly identify individual words in noise (cf. McCoy et al., 2005). The increased allocation to lexical analysis needed to overcome this difficulty (presumably an effect at a character recognition stage; Pelli et al., 2003) reduced the availability of resources for textbase processing and compromised the quality of text recall so that readers were less able to distinguish between more central ideas and details. These findings are consistent with the Effortfulness Hypothesis (Wingfield et al., 2005), highlighting the importance of sensory challenge (e.g., with aging) in learning from text.

Notes

1. This is the same basic definition of noise contrast used by Pelli et al. (2003), but since our standard deviations are defined with respect to a two-fold range of contrasts, our standard deviation values are twice the size of theirs.

2. To test whether WM might moderate the noise effect on resource allocation, we divided participants into high- and low-WM groups based on a median split, and reanalyzed the data with WM treated as a between-participant variable in a three-way ANOVA. The WM by level interaction was marginally significant, F(1, 34) = 3.65, p = 0.065: high-WM participants tended to allocate more attention to textbase processing (M word = –0.10, se = 0.13; M textbase = 0.10, se = 0.16), while low-WM participants allocated more attention to word-level processing (M word = 0.10, se = 0.13; M textbase = –0.10, se = 0.16), as also shown by Stine-Morrow et al. (2008). This interaction did not vary with noise, F < 1, however.

3. Idea units or propositions conveyed in a sentence vary in the probability of being remembered (i.e., memorability; Rubin, 1985; Stine & Wingfield, 1988). Normatively, more memorable propositions are often more thoroughly processed because of their being more central to the meaning of the sentence (Kintsch & van Dijk, 1978; Miller & Kintsch, 1980), as well as many other features that are sometimes hard to identify (Rubin, 1985). Without specifying why certain propositions are more memorable than others, memorability analysis is an empirically driven approach that simply assumes that normatively more memorable propositions were more deeply encoded.

4. We divided the propositions into three memorability groups based on the normative probability of recall for each proposition in the no-noise condition, collapsing across subjects. We based our coding of memorability on recall in the no-noise condition, because this was the control condition in which it was expected that reading would most closely resemble typical text processing. Memorability bins were approximately equal in size with the constraint that boundaries were shifted to accommodate multiple propositions with equal likelihoods of recall. Fifty-eight propositions with p(recall) < 0.50 were in the “low memorability” group; 51 propositions with p(recall) > 0.78 were in the “high memorability” group; and the remaining 63 propositions were in the “medium memorability” group.

5. We also analyzed recall in a three-way (Noise by Memorability by WM) ANOVA. Individuals with higher working memory capacity recalled more than those with lower working memory, F1(1, 34) = 4.99, p < 0.05; F2(1, 169) = 72.69, p < 0.001. None of the interactions was statistically significant.

References

Aydelott, J., Dick, F., & Mills, D. L. (2006). Effects of acoustic distortion and semantic context on event-related potentials to spoken words. Psychophysiology, 43, 454–464.

Dickinson, C. V. M., & Rabbitt, P. M. A. (1991). Simulated visual impairment: Effects on text comprehension and reading speed. Clinical Vision Sciences, 6, 301–308.

Haberlandt, K., Graesser, A. C., Schneider, N. J., & Kiely, J. (1986). Effects of task and new arguments on word reading times. Journal of Memory and Language, 25, 314–322.

Hartley, J. (1993). Aging and prose memory: Tests of the resource-deficit hypothesis. Psychology and Aging, 8, 538–551.

Heinrich, A., Schneider, B. A., & Craik, F. I. M. (2008). Investigating the influence of continuous babble on auditory short-term memory performance. The Quarterly Journal of Experimental Psychology, 61, 735–751.

Holcomb, P. J. (1993). Semantic priming and stimulus degradation: Implications for the role of the N400 in language processing. Psychophysiology, 30, 47–61.

Kintsch, W., & van Dijk, T. A. (1978). Toward a model of text comprehension and production. Psychological Review, 85, 363–394.

Lorch, R. F., & Myers, J. L. (1990). Regression analyses of repeated measures data in cognitive research. Journal of Experimental Psychology. Learning, Memory, and Cognition, 16, 149–157.

McCoy, S. L., Tun, P. A., Cox, L. C., Colangelo, M., Stewart, R. A., & Wingfield, A. (2005). Hearing loss and perceptual effort: Downstream effects on older adults’ memory for speech. The Quarterly Journal of Experimental Psychology, 58A, 22–33.

McNamara, D. S., & Magliano, J. (2009). Toward a comprehensive model of comprehension. In B. H. Ross (Ed.), Psychology of learning and motivation (Vol. 51, pp. 297–384). New York: Elsevier.

Miller, J. R., & Kintsch, W. (1980). Readability and recall of short prose passages: A theoretical analysis. Journal of Experimental Psychology: Human Learning and Memory, 6, 335–354.

Millis, K. K., Simon, S., & tenBroek, N. S. (1998). Resource allocation during the rereading of scientific texts. Memory & Cognition, 26, 232–246.

Norman, D. A., & Bobrow, D. G. (1975). On data-limited and resource-limited processes. Cognitive Psychology, 7, 44–64.

Pelli, D. G., Farell, B., & Moore, D. C. (2003). The remarkable inefficiency of word recognition. Nature, 423, 752–756.

Rabbitt, P. M. A. (1968). Channel capacity, intelligibility and immediate memory. The Quarterly Journal of Experimental Psychology, 20, 241–248.

Rubin, D. C. (1985). Memorability as a measure of processing: A unit analysis of prose and list learning. Journal of Experimental Psychology: General, 114, 213–238.

Speranza, F., Daneman, M., & Schneider, B. A. (2000). How aging affects the reading of words in noisy backgrounds. Psychology and Aging, 15, 253–258.

Stine, E. A. L., & Hindman, J. (1994). Age differences in reading time allocation for propositionally dense sentences. Aging and Cognition, 1, 2–16.

Stine, E. A. L., & Wingfield, A. (1988). Memorability functions as an indicator of qualitative age differences in text recall. Psychology and Aging, 3, 179–183.

Stine-Morrow, E. A. L., Milinder, L., Pullara, P., & Herman, B. (2001). Patterns of resource allocation are reliable among younger and older readers. Psychology and Aging, 16, 69–84.

Stine-Morrow, E. A. L., & Miller, L. M. S. (2009). Aging, self-regulation, and learning from text. In B. H. Ross (Ed.), Psychology of learning and motivation (Vol. 51, pp. 255–296). New York: Elsevier.

Stine-Morrow, E. A. L., Miller, L. M. S., Gagne, D. D., & Hertzog, C. (2008). Self-regulated reading in adulthood. Psychology and Aging, 23, 131–153.

Stine-Morrow, E. A. L., Miller, L. M. S., & Hertzog, C. (2006). Aging and self-regulated language processing. Psychological Bulletin, 132, 582–606.

Stine-Morrow, E. A. L., Noh, S. R., & Shake, M. C. (2010). Age differences in the effects of conceptual integration training on resource allocation in sentence processing. The Quarterly Journal of Experimental Psychology, 63, 1430–1455.

Westheimer, G. (1985). The oscilloscopic view: Retinal illuminance and contrasts of point and line targets. Vision Research, 25, 1097–1103.

Wingfield, A., Tun, P. A., & McCoy, S. L. (2005). Hearing loss in older adults: What it is and how it interacts with cognitive performance. Current Directions in Psychological Science, 14, 144–148.

Author Notes

This study was supported by the National Institute on Aging (Grant R01 AG13935) to whom we are most grateful. We also would like to thank Kiel Christianson, Kara Federmeier, and Matthew Shake for insightful discussions and comments on earlier drafts of this paper. Appreciation also goes to Shoshana Hindin, Micaela Chan and Minerva Dorant for assistance with testing participants and transcribing auditory files. Correspondence concerning this article should be addressed to: Xuefei Gao, Beckman Institute, University of Illinois, Urbana, IL, 61801, gao5@illinois.edu, or Elizabeth A. L. Stine-Morrow, Department of Educational Psychology, University of Illinois, 226 Education Building, 1310 South Sixth Street, Champaign, IL, 61820, eals@illinois.edu.

Gao, X., Stine-Morrow, E.A.L., Noh, S.R. et al. Visual noise disrupts conceptual integration in reading. Psychon Bull Rev 18, 83–88 (2011). https://doi.org/10.3758/s13423-010-0014-4

DOI: https://doi.org/10.3758/s13423-010-0014-4