Abstract

Previous research with the ratio-bias task found longer response latencies for conflict trials, where heuristic- and analytic-based responses are assumed to be in opposition (e.g., choosing between 1/10 and 9/100 ratios of success), than for no-conflict trials, where both processes converge on the same response (e.g., choosing between 1/10 and 11/100). This pattern is consistent with parallel dual-process models, which assume effective, rather than lax, monitoring of the output of heuristic processing. It is, however, unclear why conflict resolution sometimes fails. Ratio-biased choices may increase because of a decline in analytical reasoning (leaving heuristic-based responses unopposed) or because of a rise in heuristic processing (making it more difficult for analytic processes to override the heuristic preferences). Using the process-dissociation procedure, we found that instructions to respond logically and response speed affected analytic (controlled) processing (C) but left heuristic processing (H) unchanged, whereas the intuitive preference for large numerators (as assessed by responses to equal-ratio trials) affected H but not C. These findings pose new challenges for the debate between dual-process and single-process accounts, which we discuss.


Should two courses be judged equal, then the will cannot break the deadlock, all it can do is to suspend judgement until the circumstances change, and the right course of action is clear.

— Jean Buridan, 1340

Imagine you are given a choice between two trays containing red and white marbles. In each tray, there are always more white than red marbles. The small tray contains one red marble out of a total of 10. The large tray contains nine red marbles out of a total of 100. If the drawn marble is red, you win a prize. Although most people readily realize that the smaller tray provides a better chance of winning, they still feel an intuitive preference for the large tray (i.e., the one with more red marbles). This so-called ratio-bias effect (Kirkpatrick & Epstein, 1992; Miller, Turnbull, & McFarland, 1989) has been shown to be strong enough to lead to “irrational” choices (e.g., choosing a 9/100 over a 1/10 probability of success). From a dual-process perspective, the preference for the large tray is the result of intuitive, heuristic, or Type 1 processing, whereas the realization that this tray offers worse chances of winning is achieved via more analytic, deliberative, or Type 2 processing (Denes-Raj & Epstein, 1994; Evans & Stanovich, 2013). It is, however, less clear whether these biased choices arise from a decline in analytical reasoning (leaving intuitive preferences unopposed), from a rise in heuristic processing (making it more difficult to override the intuitive preferences), or from both.

Now, imagine that the small tray contains one red marble out of a total of 10, and the large tray contains 10 red marbles out of a total of 100. Because the two trays offer the same chance of winning (10 %), analytical processes provide no cue about how to decide between the two normatively equivalent prospects. Thus, similar to the famous thought experiment usually referred to as Buridan’s paradox, a purely rational agent facing the two equally good courses of action offered by this equal-ratio problem would be (at least momentarily) unable to decide between them. Because equal-ratio problems have no single logically correct solution, responses to them may be seen as reflecting the intuitive preference for the larger numerator.

In this paper, we use the ratio-bias task to better understand how heuristic and analytic processes interact to determine judgments and choices. We experimentally test the extent to which the biased choices in the ratio-bias task are the result of a decline in analytic reasoning or of a rise in heuristic processing.

Our goal is to contribute to a much-debated issue among dual-process theories of reasoning and judgment: namely, how heuristic and analytic processes interact to determine judgments and choices. In this respect, two different views can be distinguished. One view postulates that heuristic bias can be attributed to a failure to monitor intuition and to detect conflict (e.g., Kahneman & Frederick, 2002; Stanovich & West, 2000). The other view argues that conflict between heuristic and analytic reasoning is usually detected, but that reasoners then fail to override the intuitive appeal of the heuristics, eventually acting against their better judgment (De Neys, 2012; Denes-Raj & Epstein, 1994).

Research aimed at testing these alternative perspectives typically uses reasoning problems (e.g., ratio-bias problems, base-rate problems, belief-bias syllogisms) that present a conflict between heuristic and analytic responses, along with “no-conflict” versions of the same problems where both heuristic and analytic processes converge on the same answer (e.g., De Neys & Glumicic, 2008). The underlying idea is that, if people detect some degree of conflict between heuristics and the relevant logical norms, the two versions of the problem should be processed differently.

Several studies using different measures and different kinds of reasoning problems have shown that reasoners, even biased ones, are sensitive to conflict (i.e., process the conflict and no-conflict problems differently; for a review, see De Neys, 2012). Other studies have questioned the generalizability of this conclusion by showing that biased reasoners often fail to correctly represent the conflict posed by the premises of certain reasoning problems (Mata, Schubert, & Ferreira, 2014; see also Mata & Almeida, 2014; Mata, Ferreira, & Sherman, 2013, Study 3) and have identified boundary conditions on biased reasoners’ conflict sensitivity by showing that a sizable proportion of these reasoners failed to detect the conflict in ratio-bias problems (Mevel et al., 2015). Moreover, the detection of conflict by biased reasoners reported by De Neys and Glumicic (2008) using base-rate problems seems to occur mostly in the presence of extreme probabilities (Pennycook, Fugelsang, & Koehler, 2012).

Recently, Pennycook, Fugelsang, and Koehler (2015) proposed a three-stage model of analytic engagement, according to which reasoning problems may cue multiple competing Type 1 outputs, even if some of these initial outputs are processed more fluently than others (Thompson, 2009). If no conflict is detected (either because Type 1 processes did not produce competing outputs or because conflict detection failed), the initial (more fluently processed) Type 1 output is accepted with only cursory analytic (Type 2) processing. If a conflict is detected, Type 2 processes may be used either to justify the Type 1 output that first came to mind (rationalization) or to inhibit and override the intuitive response (decoupling). Conflict detection is indicated by longer response times for heuristic responses to conflict trials when compared to correct responses to no-conflict trials (the baseline). Decoupling is indicated by longer response times for analytic responses to conflict trials when compared to correct responses to no-conflict trials.

By allowing for the possibility of different (opposing) Type 1 outputs and by considering a less-than-perfect conflict-detection stage, this model offers a new perspective on the bottom-up factors that determine when reasoners will think analytically or rely on their intuitions, one that reconciles the failure-in-detection (Kahneman & Frederick, 2002) and the failure-in-inhibition (De Neys & Glumicic, 2008) accounts.

Notwithstanding the significant progress already made, most previous research tends to confound types of reasoning (heuristic vs. analytical) with responses to the inferential problems, disregarding that the two responses may differ in a number of ways beyond the extent to which they tap into heuristic versus analytical processes. The more general point is that no task is “process pure” (Conrey, Sherman, Gawronski, Hugenberg, & Groom, 2005; Ferreira, Garcia-Marques, Sherman, & Sherman, 2006; Jacoby, 1991). It is highly unlikely that so-called heuristic responses to an inferential task depend entirely on heuristic processes and not at all on analytical processes, or vice versa. In most, if not all, cases, responses are influenced by both intuition and deliberation (Wegner & Bargh, 1998).

The process-pure problem is not specific to the study of inferential processes; it emerges whenever processes are to be measured in terms of particular experimental tasks (Holender, 1986; Jacoby, 1991). As a consequence, selective influences of empirical variables can rarely be measured directly. It is therefore important to try to obtain uncontaminated measures of processes through procedures that do not require or assume a one-to-one relation between tasks and processes. We adopt one such solution by applying the process dissociation procedure (PD) to the ratio-bias task.

The PD was originally designed to separate automatic and conscious contributions to memory task performance (Jacoby, 1991; Jacoby, Toth, & Yonelinas, 1993). However, its logic has been applied to different experimental contexts as a general methodological tool for separating the contributions of automatic and controlled processes (see Payne & Bishara, 2009; Yonelinas & Jacoby, 2012). The procedure makes use of a facilitation paradigm, or inclusion condition, in which automatic and controlled processes act in concert, and an interference paradigm, or exclusion condition, in which the two processes act in opposition. Assuming that both processes contribute to performance and operate independently, estimates of each can be obtained by comparing performance across the two conditions.

In previous research using the PD, Ferreira et al. (2006) used the classic reasoning problems as interference paradigms (exclusion conditions), as they oppose heuristic to analytical processes, creating a conflict between them. Examples include syllogisms, where the believability of the conclusion and its logical necessity are at odds (e.g., De Neys & Franssens, 2009), and base-rate (BR) problems, where the information about the individual is inconsistent with the majority of the population (e.g., De Neys & Franssens,). Such reasoning problems can be transformed relatively easily into facilitation paradigms by aligning the heuristic response with the analytical response. In the aforementioned examples, syllogisms can be created where the believability of the conclusion corresponds to the logically valid response; in BR problems, the individual description may be consistent with the base rates. By contrasting reasoning performance in the exclusion and inclusion conditions, Ferreira et al. (2006) were able to obtain estimates of both analytic and heuristic processes and gain further insight into the nature of the interaction between them (see also Mata, Ferreira, & Reis, 2013; Mata, Fiedler, Ferreira, & Almeida, 2013). In the present research, we extend the use of the PD procedure by applying it to the ratio-bias task (Bonner & Newell, 2010) and obtain estimates of both analytic (controlled) processes (C) and heuristic processes (H).

Experiment 1

Aim and hypotheses

Experiment 1 aimed to replicate Bonner and Newell’s (2010) main results while using the PD procedure to test specific predictions about the conditions under which biased choices in the ratio-bias task result from a decline in analytic reasoning or from a rise in heuristic processing.

Adapting the PD to the ratio-bias task is quite straightforward. Conflict trials such as “1/10 vs. 9/100” correspond to the exclusion condition (i.e., interference paradigm) because analytic reasoning (i.e., controlled processing; C) leads to the large-ratio option (1/10), whereas heuristic processing (H) leads to the opposing large-numerator option (9/100). No-conflict trials (e.g., 1/10 vs. 11/100) correspond to the inclusion condition (i.e., facilitation paradigm) because in these trials the ratio with the large numerator is also the larger ratio, which may be chosen either via analytic reasoning (C) or via heuristic processing (H).

In the inclusion condition, because the processes concur, the probability of choosing the large numerator is given by: C + H (1 - C). In the exclusion condition, where the two processes work in opposition, the ratio with the large numerator (the smaller ratio in this case) will be chosen only if controlled processing fails and as a result of heuristic influences: H (1 – C). On the basis of these equations, it is possible to obtain estimates of heuristic and analytic processes: C can be estimated on the basis of the difference in performance in the inclusion and exclusion conditions (C = inclusion - exclusion); and H = exclusion/(1 – C).
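The two PD equations can be inverted directly: subtracting the exclusion equation from the inclusion equation cancels the shared H(1 – C) term and leaves C, and dividing the exclusion proportion by (1 – C) then yields H. The Python function below is a minimal sketch of that arithmetic; the function and argument names are illustrative assumptions, not part of the original materials.

```python
def pd_estimates(p_inclusion: float, p_exclusion: float) -> tuple[float, float]:
    """Recover the PD parameters (C, H) from two observed proportions.

    p_inclusion: proportion of large-numerator choices on no-conflict trials,
                 modelled as C + H * (1 - C).
    p_exclusion: proportion of large-numerator choices on conflict trials,
                 modelled as H * (1 - C).
    """
    # Inclusion minus exclusion cancels the shared H * (1 - C) term.
    c = p_inclusion - p_exclusion
    if c >= 1.0:
        # With C = 1 the exclusion equation carries no information about H.
        raise ValueError("H is undefined when C = 1")
    # Divide the exclusion proportion by the probability that control fails.
    h = p_exclusion / (1.0 - c)
    return c, h


# Example: 90 % large-numerator choices on no-conflict trials and
# 20 % on conflict trials.
c, h = pd_estimates(0.90, 0.20)
print(f"C = {c:.2f}, H = {h:.2f}")  # prints: C = 0.70, H = 0.67
```

Note that nothing in the equations forces C to be positive: a participant who chooses the large numerator more often on conflict than on no-conflict trials would receive a negative C.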

Analytical reasoning is believed to be under participants’ control, whereas heuristic processing is assumed to be more automatic. Accordingly, varying participants’ goals should affect C but leave H unchanged. We test for this possibility by manipulating participants’ goals via instructions. Participants were asked to respond to the ratio-bias task according to “what would logical people do” versus “what would most people do.” We assume that the instruction to respond like most people (when compared to an instruction to respond logically) should increase ratio-bias responses because participants are given “permission” to relax their analytic mindsets and go with their heuristic response (Epstein & Pacini, 2000−2001). Thus, these instructions are predicted to decrease analytic processing, without affecting heuristic processing.

The quantitative difference between small and large ratios in conflict trials can be thought of as a proxy for the amount of conflict. The larger this difference, the lower the number of ratio-bias responses (Bonner & Newell, 2010). It is less clear, however, to what extent heuristic and analytic processes contribute to this outcome. Here, we test the relative contribution of heuristic and analytic processes by using small (1 %–3 %), medium (4 %–6 %), and large (7 %–9 %) ratio differences, just as Bonner and Newell did. We envisage two possibilities. The expected decrease in the number of biased responses as the ratio differences increase could be due to a gradual increase in analytic processing, because it becomes easier to compute the analytical response. Alternatively, the decrease in biased responses could be due to a decrease in the intuitive appeal of the heuristic answer (i.e., a decline of heuristic processing), as the difference between the ratios’ numerators decreases. It could also be that both factors play a role.

Given that equal-ratio trials do not have a logically preferable solution, they may better reflect a preference for the heuristic response (i.e., choosing the large numerator). Thus, a greater proportion of large-numerator responses in equal-ratio trials should be associated with more ratio-bias answers in both the conflict and no-conflict trials. We predict that this increase in ratio-bias answers will stem from an increase in heuristic processing.Footnote 1

Furthermore, given the deliberative nature of analytical reasoning and the relatively automatic nature of heuristic processing, we predict that analytic processes, as measured by the controlled component (C) of the PD, should be more demanding of general cognitive resources and hence more time-consuming. In contrast, the H measure of heuristic processing is not expected to correlate with response time.

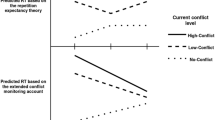

Finally, in their analysis of response times, Bonner and Newell (2010) found longer response times for conflict than for no-conflict problems for both low-bias and high-bias participants (defined by a median split on the proportion of nonoptimal responses to conflict trials). They interpreted these results as suggesting that nonoptimal responses (i.e., choosing the smaller ratio in conflict trials) are caused not by a lack of conflict detection but by a failure to resolve the conflict after it has been detected. However, failures of conflict detection and of conflict inhibition can be better evaluated by comparing, respectively, the response times of nonoptimal and of optimal responses to conflict trials with the response times of optimal responses to no-conflict trials (De Neys, 2012; Pennycook et al., 2015). We expect to replicate the main features of Bonner and Newell’s response-time analysis, but we will also analyze response time as a function of accuracy to look for the hallmarks of conflict detection and conflict inhibition in the ratio-bias task.
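The two comparisons can be sketched as simple mean-latency contrasts against the no-conflict baseline. This is a descriptive illustration only, assuming trials are stored as (trial type, optimal?, response time) tuples; the function name and data layout are hypothetical, and the inferential tests in this paper rely on ANOVAs and planned comparisons rather than on raw differences.

```python
from statistics import mean


def latency_contrasts(trials):
    """Mean RT differences (ms) relative to optimal no-conflict trials.

    trials: iterable of (trial_type, optimal, rt_ms), where trial_type is
    "conflict" or "no-conflict" and optimal is True for the higher-ratio choice.
    Raises statistics.StatisticsError if any of the three cells is empty.
    """
    trials = list(trials)  # allow generators; the data are scanned three times
    baseline = mean(rt for kind, ok, rt in trials if kind == "no-conflict" and ok)
    nonoptimal_conflict = mean(rt for kind, ok, rt in trials if kind == "conflict" and not ok)
    optimal_conflict = mean(rt for kind, ok, rt in trials if kind == "conflict" and ok)
    return {
        # Positive: slowing on biased answers, read as conflict detected
        # but the intuitive answer not inhibited.
        "detection": nonoptimal_conflict - baseline,
        # Positive: slowing on correct conflict answers, read as detection
        # followed by inhibition and override (decoupling).
        "decoupling": optimal_conflict - baseline,
    }


example = [
    ("no-conflict", True, 1500),
    ("no-conflict", True, 1560),
    ("conflict", True, 1830),
    ("conflict", False, 1500),
]
print(latency_contrasts(example))
```

In this toy example the baseline is 1,530 ms, so the detection contrast is negative and the decoupling contrast is positive.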

Method

Participants

Seventy undergraduates from Indiana University (65 % female; M age = 20.5, SD = 1.2) participated in return for course credit.

Design

The experiment was a 2 × (2 × 3) mixed design. Instructions were manipulated between subjects: What would most people do (most people condition) or what would completely logical people do (logical people condition). The two within-subjects variables were trial type (conflict vs. no-conflict) and size of the difference between ratios: 1 %–3 % (small), 4 %–6 % (medium), or 7 %–9 % (large).

Materials

The ratio pairs were based on the same percentage ranges as those used by Bonner and Newell (2010). The small tray contained 1, 2, or 3 red jelly beans out of a total of 10 jelly beans (i.e., 10 %–30 %). The large tray contained 100 jelly beans, and the proportion of red jelly beans differed from the small tray by a range of -9 % to +9 %. This resulted in 57 combinations: 27 conflict trials (e.g., 1/10 vs. 9/100), 27 no-conflict trials (e.g., 1/10 vs. 11/100), and three equal trials (e.g., 1/10 vs. 10/100). The three equal trials were duplicated to counterbalance the position of the large and small tray (e.g., 1/10 vs. 10/100 and 10/100 vs. 1/10). The resulting 60 trials were repeated in three sequential blocks for a total of 180 trials. Thus, each participant responded to 81 conflict trials, 81 no-conflict trials, and 18 equal-ratio trials.
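The trial arithmetic can be checked by enumerating the design. The sketch below assumes that a negative percentage-point difference (large tray worse than the small tray) defines a conflict trial, a positive one a no-conflict trial, and zero an equal trial, and it applies the small (1–3), medium (4–6), and large (7–9) size levels; the function names are illustrative, and tray position is not modelled.

```python
from collections import Counter


def size_level(diff: int) -> str:
    """Bin an absolute percentage-point difference (1-9) into size levels."""
    if diff <= 3:
        return "small"
    if diff <= 6:
        return "medium"
    return "large"


def build_experiment1_trials(blocks: int = 3):
    """Return (kind, size, small-tray reds /10, large-tray reds /100) tuples."""
    block = []
    for red_small in (1, 2, 3):                # 1/10, 2/10, or 3/10
        for diff in range(-9, 10):             # -9 % to +9 %
            red_large = 10 * red_small + diff  # large tray holds 100 beans
            if diff < 0:                       # larger numerator, lower ratio
                block.append(("conflict", size_level(-diff), red_small, red_large))
            elif diff > 0:                     # larger numerator, higher ratio
                block.append(("no-conflict", size_level(diff), red_small, red_large))
            else:                              # equal ratio: listed twice,
                for _ in range(2):             # one per tray position
                    block.append(("equal", None, red_small, red_large))
    return block * blocks


trials = build_experiment1_trials()
print(len(trials), Counter(kind for kind, *_ in trials))
```

Run as written, this should report 180 trials: 81 conflict, 81 no-conflict, and 18 equal, with 27 conflict trials at each size level.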

Procedure

The participants completed the experiment on a computer. They began by reading the following instructions adapted from Bonner and Newell (2010):

In this experiment you will be shown pairs of trays containing red and white jelly beans.

These pairs of trays are used in another experiment, where the participant chooses a tray, then one jelly bean is randomly selected from the chosen tray by the experimenter. If the selected jelly bean is red, then the participant wins money. If it is white, they win nothing.

The jelly beans are mixed up and flattened into one layer before the selection is made so that the red ones could end up anywhere within the tray.

The interesting thing about this experiment is that some participants show a distinct preference for one tray or the other.

The goal of the last paragraph was to reduce participants’ desire to “appear” rational by making them feel that it was acceptable to indicate that others might have an irrational tray preference (Epstein & Pacini, 2000−2001).

Participants were then told that their task was to choose the tray that most people or completely logical people select in the described jelly-bean experiment. They then completed 10 practice trials (eight no-conflict trials and two conflict trials) to become familiar with the task. After the practice trials, the 180 experimental trials were completed in randomized order within each block of 60 trials. A brief summary of the introduction was visible throughout all trials. On each trial, the question “Which tray do you think most/completely logical people choose?” appeared above the pictures of the small tray (10 jelly beans) and the large tray (100 jelly beans). The numerical ratio of red jelly beans to total jelly beans (e.g., 1/10) was always shown above each tray picture. The position (left or right) of the small and large trays was randomized on each trial. The participants indicated their tray choice using the left or right response key. No response time deadline was imposed.

Dependent measures

We analyze the proportion of nonoptimal responses to the no-conflict and conflict trials and the proportion of large-numerator responses to equal trials. We also examine the mean response times for no-conflict and conflict trials (for all trials, and for trials on which the correct response was given). Finally, we compute the C and H parameters of the PD procedure. In particular, we subtract the proportion of nonoptimal responses to conflict trials (\( p_{NO|C} \)) from the proportion of optimal responses to no-conflict trials (\( p_{O|NC} \)), such that \( C = p_{O|NC} - p_{NO|C} \). Then, H is given by \( H = p_{NO|C} / (1 - C) \).

Results

Preemptive responses (<200 ms) were excluded, which affected 0.16 % of the data. For the response-time analysis, outliers (more than 2.5 SDs from the mean, i.e., slower than 5.99 s) were also excluded, which affected 2.44 % of the data. Table 1 displays the mean proportion of nonoptimal responses and mean response time in each condition (across conflict and no-conflict trials). A 2 × (2 × 3) repeated-measures ANOVA was conducted on the proportion of nonoptimal responses, based on the 162 conflict and no-conflict trials (i.e., excluding the 18 equal trials). The variables were instructions (most people vs. logical people), direction (conflict or no-conflict), and size (small, medium, or large). A nonoptimal response was defined as choosing the tray with the smaller percentage chance of winning. There were two main effects. A main effect of instructions, F(1, 68) = 7.70, p = .007, ηp² = .10, indicated that the most-people group (M = 19.2 %, SE = 2.7 %) gave more nonoptimal responses than the logical-people group (M = 8.5 %, SE = 2.7 %). A main effect of size, F(2, 136) = 6.06, p = .002, ηp² = .09, showed that the proportion of nonoptimal responses decreased as the difference between the ratios increased (M small = 15.4 %, SE = 3.0 %; M medium = 13.6 %, SE = 2.9 %; M large = 12.5 %, SE = 2.5 %), with a significant difference between small and large ratios (Scheffé test). Furthermore, instructions interacted with direction, F(1, 68) = 6.06, p = .016, ηp² = .08. Planned comparisons showed more nonoptimal responses to conflict trials (M = 27.5 %, SE = 7.2 %) than to no-conflict trials (M = 10.9 %, SE = 6.4 %) for the most-people group, F(1, 68) = 8.67, p < .001, indicating a ratio-bias effect; but there were no differences between conflict (M = 6.9 %, SE = 7.2 %) and no-conflict trials (M = 10.0 %, SE = 6.4 %) for the logical-people group (F < 1).
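The two exclusion rules can be sketched as follows. This is an assumption-laden illustration: it computes the mean and sample SD over the pooled response times that survive the 200-ms rule and applies the 2.5-SD cutoff on both sides, whereas the text reports only the resulting upper bound (5.99 s). The function name and defaults are hypothetical.

```python
from statistics import mean, stdev


def clean_response_times(rts_ms, floor_ms=200.0, sd_cutoff=2.5):
    """Apply the preemptive-response and outlier rules to a list of RTs (ms).

    Returns (choice_rts, rt_analysis_rts): the first drops only preemptive
    responses (< floor_ms); the second also drops RTs more than sd_cutoff
    sample SDs from the mean of the remaining responses.
    """
    kept = [rt for rt in rts_ms if rt >= floor_ms]
    center, spread = mean(kept), stdev(kept)
    trimmed = [rt for rt in kept if abs(rt - center) <= sd_cutoff * spread]
    return kept, trimmed


choice_rts, rt_rts = clean_response_times([150] + [1000] * 20 + [20000])
print(len(choice_rts), len(rt_rts))  # prints: 21 20
```

Keeping the two lists separate mirrors the distinction in the text: preemptive responses are removed from every analysis, whereas the SD-based trimming applies only to the response-time analysis.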

PD analysis

Fourteen participants (five from the most-people and nine from the logical-people condition) gave zero nonoptimal responses to conflict trials. This perfect performance mathematically constrains individual estimates of H to be zero (H = 0/[1 – C] = 0). For the within-participants factor size, the conflict trials are partitioned into three levels (small, medium, and large), which aggravates the perfect-performance problem, since it arises whenever a participant makes zero errors on the conflict trials of a given level. In order to keep all participants in the analyses, we replaced zero error rates on conflict trials with the minimum proportion of errors found in the sample (.0123).Footnote 2
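The substitution can be sketched as a small wrapper around the PD equations. The default floor of .0123 is the value reported above; numerically it is close to 1/81, the proportion corresponding to a single error across the 81 conflict trials, although the text does not state that this is how it was derived. The function and argument names are hypothetical.

```python
def pd_with_error_floor(p_optimal_noconflict: float,
                        p_nonoptimal_conflict: float,
                        floor: float = 0.0123) -> tuple[float, float]:
    """PD estimates (C, H) after replacing a zero conflict-error rate.

    Without the floor, a participant with no nonoptimal conflict responses
    gets H = 0 / (1 - C) = 0 regardless of no-conflict performance.
    """
    p_exclusion = floor if p_nonoptimal_conflict == 0 else p_nonoptimal_conflict
    c = p_optimal_noconflict - p_exclusion  # C = p(O|NC) - p(NO|C)
    h = p_exclusion / (1.0 - c)             # H = p(NO|C) / (1 - C)
    return c, h


c, h = pd_with_error_floor(0.95, 0.0)
print(f"C = {c:.4f}, H = {h:.4f}")  # prints: C = 0.9377, H = 0.1974
```

Nonzero error rates pass through unchanged, so the wrapper only affects participants (or size levels) with perfect conflict-trial performance.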

We first test for learning or practice effects that might have resulted from the relatively high number of trials (180). Two separate ANOVAs were run to compare C and H as obtained for each block of trials. The C (CBlock1 = .70, SE = .04; CBlock2 = .71, SE = .04; CBlock3 = .73, SE = .04) and H (HBlock1 = .75, SE = .04; HBlock2 = .74, SE = .04; HBlock3 = .72, SE = .04) parameters did not significantly change across blocks (both Fs < 1).

Table 2 shows the effect of instructions and size on the controlled (C) and heuristic (H) parameters. Two separate ANOVAs (Instructions × Size) were computed with C and H as dependent measures. As expected, analytical processing was affected by instructions, F(1, 68) = 7.69, p = .007, ηp² = .10, and by size, F(2, 136) = 7.14, p = .001, ηp² = .10. The controlled parameter was greater for the logical-people instructions (C = .82, SE = .05) than for the most-people instructions (C = .61, SE = .05), and it increased as the difference between the ratios increased (CSmall = .69, SE = .04; CMedium = .72, SE = .04; CLarge = .74, SE = .04). In contrast, heuristic processing remained largely invariant across logical-people (H = .75, SE = .05) and most-people (H = .74, SE = .05) instructions (F < 1), and it was not significantly affected by size (HSmall = .71, SE = .05; HMedium = .77, SE = .04; HLarge = .76, SE = .04), F(2, 136) = 1.62, p = .201, ηp² = .02. Instructions and size did not interact for either C or H (both Fs < 1).

Correlations between the PD parameter estimates and response time showed, as predicted, a positive correlation between C and response time, r(98) = .67, p < .001, and no correlation between H and response time, r(98) = .001, p = .956.

Response times

Following Bonner and Newell (2010), the response-time analysis was based on a median split of the proportion of ratio-bias responses to conflict trials. A 2 × (2 × 3) repeated-measures ANOVA conducted on response time revealed two main effects and two interactions: A bias-level main effect, F(1, 68) = 6.76, p = .011, ηp² = .09, indicating that the high-bias group responded faster (M = 1,470 ms, SE = 71 ms) than the low-bias group (M = 1,724 ms, SE = 67 ms); a direction main effect, F(1, 68) = 58.10, p < .001, ηp² = .46, indicating that conflict trials (M = 1,665 ms, SE = 86 ms) took longer than no-conflict trials (M = 1,529 ms, SE = 87 ms); a Direction × Bias-level interaction, F(1, 68) = 5.96, p = .017, ηp² = .08, indicating that, although both high- and low-bias responders were faster for no-conflict than for conflict trials, this difference was more pronounced for high-bias responders; and a Direction × Size interaction, F(2, 136) = 7.45, p = .001, ηp² = .10, indicating that responses to conflict trials became slower as the ratio difference became larger. Planned comparisons showed that responses were slower for the 7 % to 9 % than for the 1 % to 3 % differences, F(1, 68) = 13.10, p < .001. No such size effect was found for no-conflict trials, F(1, 68) = 2.28, p = .136.Footnote 3

Longer response latencies for conflict trials may be the result of conflict detection (i.e., participants detect the conflict between the analytic and the intuitive answers but do not manage to inhibit the intuitive answer) or of inhibition (i.e., participants not only detect the conflict but also manage to inhibit the intuitive answer and replace it with the analytic answer). A 2 (bias level) × 3 (response level: optimal responses to conflict trials; nonoptimal responses to conflict trials; optimal responses to no-conflict trials) repeated-measures ANOVA was conducted to disentangle the two possibilities. There was a main effect of bias level such that the high-bias group responded faster (M = 1,483 ms, SE = 94 ms) than the low-bias group (M = 1,753 ms, SE = 89 ms), F(1, 45) = 4.35, p = .043, ηp² = .09; and a main effect of response level, F(2, 90) = 6.02, p = .004, ηp² = .12. Planned comparisons showed that optimal responses to conflict trials (M = 1,831 ms, SE = 70 ms) took longer than optimal responses to no-conflict trials (M = 1,529 ms, SE = 63 ms), F(1, 45) = 19.05, p < .001. There was no difference between nonoptimal responses to conflict trials (M = 1,495 ms, SE = 121 ms) and optimal responses to no-conflict trials (F < 1).Footnote 4 Bias level and response level did not interact (F < 1).

Equal-ratio trials

To test the hypothesis that preference for the large numerator in equal trials is associated with more ratio-bias answers in the remaining trials, we computed the zero-order correlations between the proportion of large-numerator responses to equal trials and the proportion of nonoptimal responses to conflict and no-conflict trials. As predicted, the preference for the large numerator in equal trials showed a positive correlation with nonoptimal responses on conflict trials, r(68) = .47, p < .001, and a negative correlation with nonoptimal responses on no-conflict trials, r(68) = -.28, p = .018. This was expected because the ratio bias (i.e., opting for the larger numerator) leads to nonoptimal responses in conflict trials (where the smaller numerator is the analytically correct choice) but it points to the optimal response for no-conflict trials (where the larger numerator is also the analytically correct response).

Furthermore, if the proportion of large-numerator answers to equal trials reflects, as assumed, a preference for the heuristic response, it should affect the H parameter scores but not the C scores (as measured by performance in conflict and no-conflict trials). Two linear regressions tested these predictions. The proportion of large-numerator choices in equal trials predicted H scores, b = .39, t(68) = 3.48, p < .001, but not C scores, b = -.18, t(68) = -1.57, p = .120.

Discussion

Instructions to respond as most other people do led to worse performance (i.e., more nonoptimal responses) compared to instructions to respond as logical people do, particularly in conflict trials. Given analytical processing’s controlled and intentional nature and heuristic processing’s more spontaneous nature, we predicted that this drop in performance via instructions that allow participants to relax their analytic mindsets would translate into less analytic processing (C parameter) without affecting heuristic processing (H parameter). The PD analysis corroborated this hypothesis. The PD controlled parameter also increased with the difference between small and large ratios, suggesting that the smaller the difference, the harder it is to overcome the heuristic response.

The response-time analysis showed slower processing for conflict trials than for no-conflict trials. Response times also slowed down (in the case of conflict trials) as the difference between the large and small ratio increased. Moreover, response times were positively correlated with analytic but not with heuristic processing. This is consistent with the idea that, even if the high-bias group was faster to respond than the low-bias group (signaling lower levels of analytic processing), whenever participants (from both groups) experienced a conflict triggered by competing heuristic and analytic responses, they inhibited and overrode the heuristic responses. Further analyses of response times as a function of accuracy showed that optimal responses to conflict trials took longer than optimal responses to no-conflict trials, confirming the successful inhibition and override of the heuristic answer regardless of bias level. However, response times for nonoptimal responses to conflict trials did not differ from response times for optimal responses to no-conflict trials. Thus, neither low- nor high-bias participants showed any sign of detecting the conflict between the analytic and the intuitive answers while failing to inhibit the intuitive answer.

Finally, the preference for the large numerator in equal trials was associated with more ratio-bias answers in the remaining trials and, more importantly, it predicted participants’ heuristic processing (H parameter) without significantly affecting analytic processing (C parameter).

Experiment 2

Aim and hypotheses

Experiment 2 aims to replicate the results of the first experiment (i.e., response times associated with C but not H; proportion of large-numerator choices in equal trials associated with H but not C) without any special instructions about what most people, or logical people, would do; instead, participants simply responded from their own perspective.

Furthermore, to make sure that the results of Experiment 1 were not due to large differences between ratios (e.g., 9 %), which might greatly facilitate analytical reasoning, this study used smaller, more subtle ratio differences.

Therefore, in Experiment 2, instructions were not manipulated: all participants were asked to respond from their own perspective rather than as others would. Moreover, only two levels of size difference (1 % and 4 %) were used. In contrast to the fast nature of heuristic reasoning, analytical reasoning is assumed to take more time to unfold. Thus, slow responders were expected to give fewer nonoptimal responses than fast responders. Importantly, this better performance should stem from an increase in analytical processing, with heuristic processing left largely unchanged. Furthermore, as in Experiment 1, a greater preference for the large numerator in equal trials was expected to be associated with more ratio-bias answers in the conflict and no-conflict trials, and this increase in ratio-bias answers was predicted to stem from an increase in heuristic processing, with analytical processing left largely unchanged.

Method

Participants

One hundred undergraduates from Indiana University (62 % female; M age = 21.1, SD = 1.0) participated in return for course credit. None of the participants in Experiment 1 took part in Experiment 2.

Design

The experiment followed a 2 × 2 within-subjects design, with direction (conflict vs. no-conflict trials) and size of the difference between ratios (1 % vs. 4 %) as factors.

Materials

The ratio pairs were developed on the basis of the percentage ranges used in Experiment 1. The small tray contained 1, 2, or 3 red jelly beans out of a total of 10 jelly beans (i.e., 10 %–30 %). The large tray had a total of 100 jelly beans, and its proportion of red jelly beans differed from that of the small tray by -4 %, -1 %, 0 %, 1 %, or 4 %. This resulted in 15 combinations: six conflict trials (e.g., 1/10 vs. 9/100), six no-conflict trials (e.g., 1/10 vs. 11/100), and three equal trials (e.g., 1/10 vs. 10/100). Each combination was repeated 17 times, yielding 255 trials altogether: 102 conflict trials (e.g., 2/10 vs. 19/100), 102 no-conflict trials (e.g., 2/10 vs. 21/100), and 51 equal trials (e.g., 2/10 vs. 20/100).
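The trial set described above can be reconstructed as a quick consistency check on the trial counts. This is a sketch, assuming the large-tray percentage differed from the small-tray percentage by 1 % or 4 % in either direction (the two size levels of the design) or not at all. The variable names are illustrative.

```python
from collections import Counter

SMALL_TRAY_REDS = (1, 2, 3)       # red jelly beans out of 10 in the small tray
DIFFERENCES = (-4, -1, 0, 1, 4)   # assumed levels: large-tray % minus small-tray %
REPETITIONS = 17


def trial_type(diff: int) -> str:
    """Classify a ratio pair by the sign of the percentage difference."""
    if diff < 0:
        return "conflict"     # large tray has more red beans but the lower ratio
    if diff > 0:
        return "no-conflict"  # large tray has more red beans and the higher ratio
    return "equal"            # same ratio; no logically better option


# (small reds, small total, large reds, large total, type); large reds = small % + diff
combinations = [
    (reds, 10, reds * 10 + diff, 100, trial_type(diff))
    for reds in SMALL_TRAY_REDS
    for diff in DIFFERENCES
]
trials = combinations * REPETITIONS

print(len(combinations), len(trials))  # 15 255
print(Counter(t[-1] for t in trials))
```

Because every combination is repeated equally often, the 2:2:1 ratio of conflict, no-conflict, and equal pairs per small tray carries over directly to the 102/102/51 split.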

Procedure

All participants received the same instructions: “Imagine that you are playing a game. . . . To win you must draw a red ball . . . from which tray do you want to draw a ball?” The rest of the procedure was the same as in Experiment 1, the only difference being that the 255 trials were divided into four blocks, between which participants completed tasks for another research project.Footnote 5

Dependent measures

Dependent measures were the same as for Experiment 1.

Results

Table 3 presents the mean proportion of nonoptimal responses and the mean response times in each condition (across conflict and no-conflict trials). Preemptive responses (<200 ms) were excluded, affecting 5.82 % of the data. For the response-time analysis, outliers (more than 2.5 SDs above the mean, i.e., slower than 5.16 s) were also excluded, affecting 1.66 % of the data.
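A minimal sketch of the two exclusion rules follows. It assumes that preemptive responses are removed first and that a single upper cutoff (mean + 2.5 SD) is then computed on the pooled remaining response times. The single 5.16-s value suggests, but does not guarantee, a sample-wide rather than per-participant cutoff. The function name and data are hypothetical.

```python
from statistics import mean, stdev

PREEMPTIVE_MS = 200  # responses faster than this are treated as preemptive
SD_CUTOFF = 2.5      # responses more than 2.5 SDs above the mean are treated as outliers


def clean_response_times(rts_ms: list[float]) -> list[float]:
    """Drop preemptive responses, then drop slow outliers relative to the remaining data."""
    valid = [rt for rt in rts_ms if rt >= PREEMPTIVE_MS]
    upper = mean(valid) + SD_CUTOFF * stdev(valid)
    return [rt for rt in valid if rt <= upper]


# Hypothetical pooled response times (ms): one preemptive response and one very slow response.
rts = [150] + [1000, 1100, 1200, 1300, 1400] * 4 + [9000]
cleaned = clean_response_times(rts)
print(len(rts), len(cleaned))
```

Note that the slow outlier itself inflates the mean and SD used to set the cutoff. With very few trials, a single extreme value can therefore survive a 2.5-SD rule.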

A median split of the mean response times, dividing participants into two groups based on response speed (fast and slow responders), was used as a proxy for deliberation: participants who take longer to respond are assumed to engage in logical reasoning more often than faster responders. A 2 (response speed) × 2 (direction) × 2 (size) ANOVA was conducted on the proportion of nonoptimal responses. The only significant effect was a main effect of response speed, F(1, 98) = 5.98, p = .016, ηp² = .12: fast responders gave more nonoptimal responses (M = .16, SE = .02) than slow responders (M = .08, SE = .02).
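The median split can be sketched as follows. The sketch assumes one mean response time per participant. As an arbitrary choice, a participant whose mean falls exactly on the median is assigned to the fast group. `median_split` is a hypothetical helper name.

```python
from statistics import median


def median_split(scores: dict[str, float]) -> dict[str, str]:
    """Label each participant 'slow' if their score is above the sample median, else 'fast'.

    Ties at the median are assigned to 'fast' (an arbitrary choice for this sketch).
    """
    cut = median(scores.values())
    return {pid: ("slow" if score > cut else "fast") for pid, score in scores.items()}


# Hypothetical mean response times (ms) for four participants.
mean_rts = {"p1": 900.0, "p2": 1500.0, "p3": 1100.0, "p4": 1300.0}
print(median_split(mean_rts))
```

The same helper applies unchanged to the bias-level split used in the response-time analyses. To use it that way, pass each participant's proportion of ratio-bias responses to conflict trials instead of their mean response time. The group labelled "slow" then corresponds to the high-bias group.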

PD analysis

Sixteen participants (five in the fast-responder group and 11 in the slow-responder group) gave no nonoptimal responses to conflict trials. To avoid removing these cases of perfect performance from the analysis, we replaced their zero error rates on conflict trials with the minimum proportion of errors found in the sample (.099).Footnote 6
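Zero error rates matter because of how H is computed. Under the standard PD equations (assumed here), H = P(nonoptimal | conflict) / (1 − C). A participant with no conflict-trial errors therefore receives H = 0. If that participant is also perfect on no-conflict trials, C = 1 and H is undefined. The sketch below shows the substitution described above, assuming it is applied per participant before C and H are computed. The helper names and data are hypothetical.

```python
def substitute_zero_errors(error_rates: dict[str, float]) -> dict[str, float]:
    """Replace zero conflict-trial error rates with the smallest nonzero rate in the sample."""
    floor = min(rate for rate in error_rates.values() if rate > 0)
    return {pid: (rate if rate > 0 else floor) for pid, rate in error_rates.items()}


def pd_estimates(p_optimal_no_conflict: float, p_nonoptimal_conflict: float) -> tuple[float, float]:
    """(C, H) under the assumed PD equations; H is undefined when C = 1."""
    c = p_optimal_no_conflict - p_nonoptimal_conflict
    return c, p_nonoptimal_conflict / (1.0 - c)


# Hypothetical sample: p1 made no errors on conflict trials.
conflict_errors = {"p1": 0.0, "p2": 0.25, "p3": 0.099}
no_conflict_optimal = {"p1": 0.98, "p2": 0.97, "p3": 0.96}

adjusted = substitute_zero_errors(conflict_errors)
for pid in adjusted:
    c, h = pd_estimates(no_conflict_optimal[pid], adjusted[pid])
    print(pid, round(c, 3), round(h, 3))
```

Without the substitution, p1 would be assigned H = 0 purely because of a floor effect. With it, p1 keeps a slightly lower C and a nonzero H.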

As in Experiment 1, we began by testing for practice effects that might have resulted from the relatively large number of trials (255). Two separate ANOVAs compared the C and H estimates obtained for each block of trials. Neither the C parameter (Block 1 = .78; Block 2 = .78; Block 3 = .79; Block 4 = .76; SE = .03 in each block) nor the H parameter (Block 1 = .51; Block 2 = .45; Block 3 = .57; Block 4 = .54; SE = .07 in each block) changed significantly across blocks (both Fs < 1).
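The block-wise check can be sketched by computing the two proportions separately within each block and applying the PD equations to each. This assumes the standard equations (no-conflict optimal = C + H(1 − C); conflict nonoptimal = H(1 − C)). It does not include the zero-error substitution described above. `pd_by_block` and the trial-record layout are hypothetical.

```python
from collections import defaultdict


def pd_by_block(trials):
    """Estimate (C, H) separately for each block.

    `trials` is an iterable of (block, trial_type, optimal) records, where trial_type is
    'conflict' or 'no-conflict' and optimal is a bool. Equal trials must be filtered out.
    """
    # counts[block][trial_type] = [number of optimal responses, number of trials]
    counts = defaultdict(lambda: {"conflict": [0, 0], "no-conflict": [0, 0]})
    for block, trial_type, optimal in trials:
        cell = counts[block][trial_type]
        cell[0] += int(optimal)
        cell[1] += 1

    estimates = {}
    for block in sorted(counts):
        nc_optimal, nc_total = counts[block]["no-conflict"]
        c_optimal, c_total = counts[block]["conflict"]
        p_optimal_nc = nc_optimal / nc_total
        p_nonoptimal_c = 1 - c_optimal / c_total
        c = p_optimal_nc - p_nonoptimal_c
        # Raises ZeroDivisionError if C = 1 within a block.
        estimates[block] = (c, p_nonoptimal_c / (1 - c))
    return estimates


# Hypothetical block: 9/10 optimal on no-conflict trials, 2/10 nonoptimal on conflict trials.
example = (
    [(1, "no-conflict", True)] * 9 + [(1, "no-conflict", False)]
    + [(1, "conflict", True)] * 8 + [(1, "conflict", False)] * 2
)
print(pd_by_block(example))
```

The per-block estimates would then enter the repeated-measures ANOVAs as one C and one H value per participant and block.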

Table 4 shows the effect of response speed on the controlled (C) and heuristic (H) parameters. Two separate Response Speed × Size ANOVAs were computed with C and H as dependent measures. As expected, analytic processing was greater for slow responders (C = .81, SE = .04) than for fast responders (C = .67, SE = .04), F(1, 98) = 4.98, p = .028, ηp² = .05. There was also a marginally significant Response Speed × Size interaction, F(1, 98) = 3.40, p = .068, ηp² = .03. Planned comparisons revealed a tendency for slow responders to show more controlled processing for the 4 % ratio difference (C = .82, SE = .05) than for the 1 % ratio difference (C = .78, SE = .05), F(1, 98) = 3.43, p = .067. No such difference was found for fast responders, F < 1.

For the H parameter there were no significant main effects and no Response Speed × Size interaction (all Fs < 1). Heuristic processing was largely invariant across fast responders (H = .70, SE = .04) and slow responders (H = .69, SE = .05) and across small (H = .71, SE = .03) and large (H = .68, SE = .03) differences between ratios.

Response times

As in Experiment 1, the response-time analysis was based on a median split of the proportion of ratio-bias responses to conflict trials. A 2 (bias level) × 2 (direction) × 2 (size) ANOVA on response times, with repeated measures on the last two factors, revealed three main effects. There was a main effect of bias level, F(1, 98) = 5.23, p = .024, ηp² = .09, indicating that the high-bias group responded faster (M = 1,211 ms, SE = 44 ms) than the low-bias group (M = 1,354 ms, SE = 44 ms). There was a main effect of direction, F(1, 98) = 40.37, p < .001, ηp² = .29, indicating that participants took longer to respond to conflict trials (M = 1,335 ms, SE = 46 ms) than to no-conflict trials (M = 1,230 ms, SE = 45 ms). Finally, there was a main effect of size, F(1, 98) = 24.08, p < .001, ηp² = .19, indicating that 4 % size-difference trials took longer to respond to (M = 1,311 ms, SE = 46 ms) than 1 % size-difference trials (M = 1,255 ms, SE = 44 ms). The latter result again suggests that larger ratio differences facilitate overcoming the heuristic response, in line with the PD analysis reported above.

To check whether the longer response latencies for conflict trials resulted from conflict detection (without inhibition of the heuristic answer) or from inhibition (i.e., detection of the conflict and replacement of the heuristic answer by the analytic answer), we further ran a 2 (bias level) × 3 (response level: optimal responses to conflict trials; nonoptimal responses to conflict trials; optimal responses to no-conflict trials) repeated-measures ANOVA. There was a main effect of response level, F(2, 164) = 7.18, p = .001, ηp² = .08. Planned comparisons showed that optimal responses to conflict trials (M = 1,357 ms, SE = 41 ms) took longer than optimal responses to no-conflict trials (M = 1,218 ms, SE = 34 ms), F(1, 82) = 35.41, p < .001. There was no difference between nonoptimal responses to conflict trials (M = 1,194 ms, SE = 60 ms) and optimal responses to no-conflict trials (F < 1). Although the high-bias group responded nominally faster (M = 1,195 ms, SE = 49 ms) than the low-bias group (M = 1,317 ms, SE = 57 ms), the main effect of bias level did not reach statistical significance, F(1, 82) = 2.68, p = .105, ηp² = .03.Footnote 7 Bias level and response level did not interact (F < 1).
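The three response levels compared above can be derived from trial-level data as in the following sketch. It assumes each trial is recorded as (trial type, whether the response was optimal, response time in ms). The function name and record layout are hypothetical. Participants who never gave a nonoptimal response to a conflict trial have no value for that level, so an analysis of this kind necessarily runs on a reduced sample.

```python
from collections import defaultdict
from statistics import mean


def mean_rt_by_response_level(trials):
    """Mean RT (ms) per response level for one participant.

    `trials` is an iterable of (trial_type, optimal, rt_ms). Only the three levels used in
    the analysis are kept; nonoptimal no-conflict responses and equal trials are ignored.
    """
    levels = defaultdict(list)
    for trial_type, optimal, rt_ms in trials:
        if trial_type == "conflict":
            key = "optimal-conflict" if optimal else "nonoptimal-conflict"
            levels[key].append(rt_ms)
        elif trial_type == "no-conflict" and optimal:
            levels["optimal-no-conflict"].append(rt_ms)
    return {level: mean(rts) for level, rts in levels.items()}


# Hypothetical trials for one participant.
example = [
    ("conflict", True, 1400), ("conflict", True, 1300), ("conflict", False, 1200),
    ("no-conflict", True, 1250), ("no-conflict", True, 1150), ("no-conflict", False, 900),
    ("equal", True, 1000),
]
print(mean_rt_by_response_level(example))
```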

Equal-ratio trials

As in Experiment 1, to test the hypothesis that a preference for the large numerator in equal trials is associated with more ratio-bias answers in the remaining trials, we computed the zero-order correlations between the proportion of large-numerator responses on equal trials and the proportion of nonoptimal responses to conflict and no-conflict trials. The preference for the large numerator in equal trials was positively correlated with nonoptimal responses on conflict trials, r(98) = .36, p < .001, and negatively, albeit nonsignificantly, correlated with nonoptimal responses on no-conflict trials, r(98) = -.16, p = .115.

Furthermore, the proportion of large-numerator answers on equal trials was predicted to affect H scores but not C scores. Two linear regressions tested these predictions. Preference for the large numerator in equal trials predicted H scores, b = .33, t(98) = 3.47, p < .001, but not C scores, b = -.004, t(98) < 1.

Discussion

The results provide supporting evidence for the main predictions of Experiment 2. Slow responders gave fewer nonoptimal responses than fast responders, and this improvement in performance was associated with greater analytical reasoning as measured by the C parameter of the PD. Fast and slow responders showed no differences in heuristic processing (H parameter). In contrast, the proportion of large-numerator answers on equal trials, which is taken as an estimate of the prevalence of heuristic processing, was found to affect the H but not the C parameter of the PD.

As in Experiment 1, response times for optimal responses to conflict trials were longer than for optimal responses to no-conflict trials, which is a sign of successful inhibition and override of the heuristic answer whenever a conflict was detected. However, response times for nonoptimal responses to conflict problems and for optimal responses to no-conflict problems did not differ, providing no evidence of conflict detection in the case of errors. Taken together, these results suggest that whenever a conflict was triggered by competing heuristic and analytic responses, participants dealt with this conflict by inhibiting and overriding the heuristic response.

General discussion

An eagerly debated issue among dual-process theories of reasoning and judgment is how heuristic and analytic processes interact to determine judgments and choices. In this paper, we aimed to contribute to this debate by revisiting a simple task that previous research has shown to be well suited for investigating the conflict between intuitive and analytical processes (Bonner & Newell, 2010; Mevel et al., 2015). Experiment 1 replicated Bonner and Newell’s essential results in terms of both nonoptimal responses and response times. Instructions to respond as logical people do, rather than as most others do, decreased the number of nonoptimal responses to conflict trials and increased response times (to both conflict and no-conflict trials). Both low- and high-bias groups took longer to respond to conflict trials than to no-conflict trials. Bonner and Newell took this pattern of results as indirect evidence that even biased reasoners are sensitive to the presence of conflict between the two types of responses.

However, longer response times for conflict trials may result either from conflict detection (i.e., participants detect the conflict between the analytic and the intuitive answers but do not manage to inhibit the intuitive answer) or from inhibition (i.e., participants detect the conflict, inhibit the intuitive answer, and replace it with the analytic answer). To distinguish between these two possibilities, we compared the response times for nonoptimal and optimal responses to conflict trials with the response times for optimal responses to no-conflict trials (e.g., De Neys, 2012; De Neys & Bonnefon, 2013; De Neys & Glumicic, 2008; Pennycook et al., 2015). In both experiments, optimal responses to conflict trials took longer than optimal responses to no-conflict trials, confirming successful inhibition and override of the heuristic answer in both high- and low-bias reasoners. However, we found no evidence of conflict detection in the case of errors, because response times for nonoptimal responses to conflict trials and for optimal responses to no-conflict trials did not differ. In light of the three-stage process model (Pennycook et al., 2015), it could be argued that, in conflict trials, the pairs of ratios that make up the ratio-bias task often cue two conflicting initial responses: a ratio-bias response (preference for the larger numerator) and a logically correct response (preference for the larger ratio), even if the former is likely to come to mind more fluently than the latter. Whenever this conflict is detected, participants engage in cognitive decoupling, inhibiting and overriding the more fluent heuristic response to give the logically correct response (Stanovich, 2009).
In contrast, ratio-bias responses seem to be given only when the conflict is not detected (alternatively, they could also come from trials in which only the heuristic response is cued, in which case there would be no conflict to detect in the first place). These results suggest that conflict detection may not be as common as previously claimed (De Neys, 2012, 2014). Pennycook et al. (2012) had already found no evidence of overall conflict detection (as indexed by response times) in responses to base-rate problems with moderate base rates. More recently, the same authors identified subgroups of participants who were highly biased precisely because they failed to detect the conflict between base rates and stereotypes (Pennycook et al., 2015). Mevel et al. (2015) also identified a subgroup of participants who systematically failed to detect the conflict in the ratio-bias task (as indexed by a confidence measure). Given these diverse results, the question now seems to be which type of failure is more common (De Neys, 2014). Pennycook et al. (2015) recently suggested that detection failures may characterize biased reasoners in more complex reasoning tasks (e.g., the Wason task), where the probability of competing intuitions is low, whereas inhibition failures are the source of the bias exhibited by most biased reasoners in less complex reasoning tasks. However, our results cast doubt on this possibility by showing virtually no conflict detection in a fairly simple reasoning task in which two conflicting responses are, in principle, more likely to occur (but see De Neys, 2014). This debate will surely continue to fuel future research.

By applying the process dissociation procedure (Ferreira et al., 2006; Jacoby, 1991), we were able to show that the improvement in performance of the logical-people group was associated with an increase in analytical processing while leaving heuristic processing largely unchanged. In other words, an equally appealing heuristic response was more often overcome and replaced by an analytical response when responders were encouraged to behave logically. This invariance of heuristic processing across instruction conditions is in agreement with the notion that heuristics are often triggered by the features of the problems and are unaffected by participants’ intentions or goals (e.g., Kahneman & Frederick, 2002; Sherman & Corty, 1984). Furthermore, analytical processing but not heuristic processing (as measured by the C and H parameters of the PD, respectively) was positively correlated with response times. Experiment 2 contrasted fast and slow responders (based on a median split of response times) and found not only that the latter performed better but also that this improvement in performance was associated with an increase in analytical reasoning. In contrast, heuristic reasoning was largely invariant across fast and slow responders. This pattern is consistent with the notion that heuristic processing is highly efficient whereas analytical processing is time-consuming (e.g., Evans, 2003). Additionally, in both experiments, larger differences between the two ratios increased the C parameter (without systematically affecting H), suggesting that the larger the difference between ratios, the easier it is to engage in analytic processing and to overcome the heuristic response.

Since equal-ratio trials have no logically better answer, a preference for the large numerator (i.e., the heuristic response) in these trials is likely to reflect heuristic processing while being unrelated to analytical processing. In agreement with this, regression analyses of the H and C parameters of the PD on the proportion of large-numerator answers to equal trials consistently found that this proportion was associated with greater heuristic processing (H) but not significantly with analytical processing (C).

In sum, concerning the initial question of whether biased choices in the ratio-bias task result from a decline in analytic reasoning or a rise in heuristic processing, variables historically associated with controlled processes, such as instructions and response speed, were shown to affect analytical processing (as estimated by the C parameter of the PD) while leaving heuristic processing largely unchanged. In contrast, the intuitive appeal of larger numerators, assessed via responders’ preference for the large numerator in equal trials (where both response options are equally valid from a logical point of view), was shown to affect heuristic (H) but not analytical processing (C). This double dissociation, together with the findings of process invariance, emerged in both experiments. These results give empirical support to a dual-process interpretation inasmuch as they suggest that heuristic-based, intuitive responses may coexist with more deliberate, analytical-based responses. However, except for instructions, the variables used to dissociate heuristic from analytical processing were not manipulated. Future research should directly manipulate the time available to respond (e.g., by including response deadlines), cognitive resources (e.g., by adding a cognitive load task), and responders’ preference levels for the large numerator in equal-ratio trials (e.g., via priming of the intuitive option).

The ratio-bias effect

Conflict trials led to more nonoptimal responses than no-conflict trials (indicating a ratio-bias effect) only in Experiment 1, under instructions to respond as most others do. When participants were asked to respond as logical people do (Experiment 1) or from their own perspective (Experiment 2), there were no differences in accuracy between conflict and no-conflict trials. These results are in line with previous research showing that the ratio-bias effect depends largely on instructions that reduce participants’ desire to “appear” rational (Epstein & Pacini, 2000–2001) and invite them to express their intuitions (Lefebvre, Vieider, & Villeval, 2011; Passerini, Macchi, & Bagassi, 2012). Mevel et al.’s (2015) study is particularly relevant here, since it used the same ratio-bias paradigm with similar instructions to respond from the participants’ own perspective. Under such conditions, they also found no differences in nonoptimal responses between no-conflict and conflict trials.

Methodological issues

Both experiments presented participants with a substantially larger number of trials (180 in Experiment 1 and 255 in Experiment 2) than previous studies with the ratio-bias task (e.g., Bonner & Newell, 2010; Mevel et al., 2015). Although necessary to obtain reliable estimates of the PD parameters, such lengthy testing might lead some processes to be automatized or to the development of specific response strategies. This could have an impact on the contribution of heuristic and analytic processing. To test for learning or practice effects, we compared estimates of C and H parameters across blocks of trials (three blocks in Experiment 1 and four blocks in Experiment 2). Both C and H were remarkably stable across blocks in both experiments, indicating that practice effects did not affect our results.

Comparing single- and dual-process accounts of the results

According to the logic underlying the process dissociation procedure, analytical and heuristic processing are always involved in determining responses to reasoning problems. In the present case, these processes act in concert in no-conflict trials and oppose each other in conflict trials. In conflict trials, but not in no-conflict trials, analytical processing has to inhibit the intuitively appealing heuristic response while computing an alternative response. This may account both for the longer response times in conflict relative to no-conflict trials (the conflict detection effect) and for the longer response times of participants who give more optimal responses to conflict trials (the conflict resolution effect). Beyond these processing differences between no-conflict and conflict trials, engaging in analytical processing is always time-consuming, even in no-conflict trials (where the optimal answer may be obtained by both heuristic and analytical processes). Thus, greater analytical processing increases response times not only for conflict but also for no-conflict trials.
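The parallel, independent architecture assumed by the PD can be illustrated with a small simulation. On every trial, analytic processing succeeds with probability C and yields the optimal answer. Only when it fails does heuristic processing choose the large-numerator tray, with probability H; otherwise the small-numerator tray is chosen. The sketch, whose names and parameter values are hypothetical, shows that the PD equations then recover the generating values. Because independence is built into the simulation, it cannot show that real data satisfy that assumption.

```python
import random


def simulate_participant(c: float, h: float, n_trials: int, seed: int = 0):
    """Simulate n_trials no-conflict and n_trials conflict trials under the assumed PD model.

    Returns (P(optimal | no-conflict), P(nonoptimal | conflict)).
    """
    rng = random.Random(seed)
    optimal_nc = nonoptimal_c = 0
    for _ in range(n_trials):
        # No-conflict trial: optimal if analytic processing succeeds or, failing that,
        # the heuristic picks the large-numerator tray (which is also the optimal one).
        if rng.random() < c or rng.random() < h:
            optimal_nc += 1
        # Conflict trial: nonoptimal only if analytic processing fails and the heuristic
        # picks the large-numerator tray (which is now the worse option).
        if rng.random() >= c and rng.random() < h:
            nonoptimal_c += 1
    return optimal_nc / n_trials, nonoptimal_c / n_trials


p_opt_nc, p_nonopt_c = simulate_participant(0.7, 0.6, 100_000, seed=1)
c_hat = p_opt_nc - p_nonopt_c
h_hat = p_nonopt_c / (1 - c_hat)
print(round(c_hat, 2), round(h_hat, 2))
```

With C = .7 and H = .6, the expected proportions are .88 optimal on no-conflict trials and .18 nonoptimal on conflict trials. The estimates recovered from the simulated proportions land close to the generating values.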

According to De Neys and Glumicic (2008), it makes little sense for the mind to always engage the two processing modes. This is because when both heuristic and analytic processing lead to the same answer (no-conflict trials, in the present case), analytic processes would be redundant. In other words, continuous parallel activation is implausible from an evolutionary perspective because continuously engaging in demanding analytic processing while the heuristic system provides an equally good but effortless alternative would imply wasting scarce cognitive resources most of the time.

Instead, those authors proposed a hybrid two-stage model, according to which shallow analytic processing is always engaged and deeper analytic processing is invoked only when a detected conflict needs to be resolved (i.e., conflict trials). Based on this model, longer response times on conflict trials are the result of the activation of this deeper analytic processing, also causing longer response times for those responders who successfully complete this conflict resolution more often.

It is, however, less clear how a hybrid two-stage model accounts for longer response times for no-conflict trials as a function of instructions to respond as logical people do vs. as most other people do (i.e., a top-down manipulation of analytic engagement). It would seem to imply that the shallow detection process is also more or less engaged depending on instructions (or other variables), which to some extent defeats the purpose of having separate processes for detecting and overcoming conflict. In contrast, given the within-subjects manipulation of conflict and no-conflict trials, participants cannot anticipate the trial type. As a result, the engagement of analytic processes triggered by instructions to respond logically is likely to occur across trials in parallel with heuristic processes, increasing response latencies for both conflict and no-conflict trials. By distinguishing between top-down and bottom-up sources of analytic engagement (instructions are a top-down source whereas conflict detection is a bottom-up source), the three-stage model (Pennycook et al., 2015) can easily accommodate the proposed account.

Unfortunately, the C parameter of the PD encompasses both the detection and the overcoming of conflict, making it impossible to test empirically whether analytical processing can be meaningfully separated into these two components. However, more complex multinomial models, such as the quad model (Conrey et al., 2005), which allow conflict detection and conflict overcoming to be estimated separately, may be used in future research to test this idea further and to disentangle these two components of analytical processing.

Single-system models, such as Hammond’s cognitive continuum theory (CCT; e.g., Hammond, 1988, 1996), can also account for at least some of the reported response-time results. As pointed out by Bonner and Newell (2010), conflict trials are inherently more analysis inducing than no-conflict trials, exactly because they offer two opposing possible answers. According to the CCT, such task features would suffice to place conflict trials more toward the analytic end of a cognitive continuum that runs from fast intuitive thinking to slower analytic thinking. This would slow processing on conflict, relative to no-conflict, trials (conflict detection) and simultaneously lead responders to more often give the optimal response to conflict trials (conflict resolution) without assuming a causal relation between time to respond and performance.

Furthermore, assuming that instructions to respond logically are also a general feature of the task, it is reasonable to conclude that responders under these instructions are placed closer to the analytic end of the continuum compared to responders instructed to behave as most others do. This would account for longer response times for no-conflict trials as a function of instructions. However, by proposing what seems to be a hydraulic relation between heuristic and analytical processing (as one moves along the intuitive-analytical continuum, more analytical thinking implies less intuitive processing and vice versa), the CCT may face greater difficulties in accounting for the process dissociations and findings of process invariance reported here. Instructions were shown to affect analytical processing in the expected direction without affecting heuristic processing. A preference for the large numerator in equal-ratio trials was found to be associated with greater heuristic processing without significantly affecting analytic processing. Yet, a relatively simple dual-process model embodied in the process dissociation procedure, which assumes that heuristic and analytical processing work in parallel and that heuristic responses are given only when analytical processing fails, is able to account for these findings.

The process dissociation procedure

It is interesting to note that the process dissociation procedure was first used in the study of implicit memory by researchers (e.g., Jacoby, 1991; Jacoby et al., 1993) who favor single-system approaches to human cognition (as opposed to dual- or multiple-systems approaches; e.g., Squire, 1987). Dissociations between implicit and explicit tests within a unitary memory are explained in terms of the interaction between the different processes involved (e.g., familiarity and recollection). Since then, the PD has been shown to be a useful procedure for disentangling controlled (analytical) from automatic (heuristic) processes across a wide range of domains (for a review, see Payne & Bishara, 2009; Yonelinas & Jacoby, 2012), but it is certainly not without shortcomings. One that might be of concern for any application of the PD is the validity of the independence assumption. Whether this assumption is met depends on the experimental paradigm used. Since the processes are not directly observable, the independence assumption is usually tested by looking for selective effects of variables conceptually identified with cognitive control or automaticity (Jacoby, 1991; Jacoby, Yonelinas, & Jennings, 1997; Payne & Bishara, 2009). As mentioned previously, in the studies reported here, two variables associated with controlled processing, instructions and response speed, were shown to affect the C parameter while leaving H invariant, whereas responders’ intuitive preference for the large numerator in equal trials affected the H parameter but left C invariant. If the independence assumption had been violated and the two processes had shown substantial covariation, the reported process dissociations would be highly unlikely and difficult to account for. However, this is not to say that heuristic and analytical processes are generally independent. Several conditions may introduce dependency between the two types of processes.
We claim only to have found experimental conditions under which the independence assumption was not seriously violated.

Also of theoretical relevance to the present discussion is the comparison of single- and dual-process models of recognition memory carried out by Ratcliff, Van Zandt, and McKoon (1995). They showed that the PD attributed simulated data to two processes even when these data were produced by a single-process model, the search of associative memory model (SAM; Gillund & Shiffrin, 1984): the PD automatic parameter captured residual strength in SAM (see Ratcliff et al.). Of course, SAM was never expected to account for all recognition memory situations (and can be shown to make incorrect predictions). The general point we would like to make is that what is learned about the cognitive processes underlying human cognition (including reasoning) is theory dependent, and the PD (like any other method) does not provide a theory-independent means of examining reasoning processes. We claim that, besides analytical reasoning, heuristic processes that are not fully open to introspection may contribute to human judgments and decisions, regardless of whether these processes are themselves encompassed by distinct systems. What appears to separate our approach from a single-system account such as the CCT is the proposal of a relatively independent relation between analytical and heuristic processing. It seems unlikely that the nature of this relation is fixed; instead, it may well vary with conditions and task features. Rather than engaging in endless debates about seemingly irreconcilable one- versus two- (or more) system approaches, researchers may find it more fruitful to explore such conditions empirically.

Notes

Equal trials were not included in the designs of this experiment and Experiment 2 because the size of the difference between ratios in these trials is by definition zero and because these trials do not have a nonoptimal response. Statistical analyses involving these trials are presented in a separate section of the Results.

We also computed the PD estimates after removing the participants with perfect performance on conflict trials (see Jacoby, Toth, & Yonelinas, 1993) and found the same pattern of results. The C (F < 1) and H, F(1, 64) = 1.25, p = .292, ηp² = .04, parameters did not change across blocks. Instructions affected the C parameter, which was higher in the logical-people condition (M = 76 %, SE = 6.7 %) than in the most-people condition (M = 55 %, SE = 6.1 %), t(53) = 2.28, p = .027, D = 0.63, without affecting the H parameter (M most people = 67 % and M logical people = 69 %), t(53) = 1.05, p = .298, D = 0.29. When size was included in two separate 2 (most vs. logical people) × 3 (small vs. medium vs. large size) ANOVAs (with C and H as dependent variables), the number of participants who had to be removed because of perfect performance increased to 40 (57 % of the sample). Instructions still affected C (M most people = 26 %, SE = 5.8 %; M logical people = 67 %, SE = 6.7 %), F(1, 24) = 21.37, p < .001, ηp² = .47, but not H (M most people = 84 %, SE = 6.5 %; M logical people = 70 %, SE = 7.5 %), F(1, 24) = 2.35, p = .139, ηp² = .09. As expected, size affected C, F(2, 48) = 8.16, p = .001, ηp² = .25 (M Small = 41 %, SE = 4.9 %; M Medium = 46 %, SE = 4.9 %; M Large = 52 %, SE = 4.4 %), but not H, F < 1 (76 % < Ms < 77 %, 5.3 % < SE < 5.7 %). Instructions and size did not interact (Fs < 1).

Repeating the current analysis using instructions (most others vs. logical people) instead of bias level (high- and low-bias groups) led to an equivalent results pattern: an instructions main effect (the most people group responded faster than the logical people group) and the same direction main effect and Instructions × Size interaction. The Instructions × Direction interaction was not statistically significant, F(1, 68) = 1.107, p = .296.

The analysis including the variable response level comprises a smaller number of participants (n = 48; 26 low-bias and 22 high-bias responders) because the remaining participants gave no incorrect responses to conflict trials. When we ran an ANOVA that also included the variable size, the reduction in the number of participants naturally increased (n = 22; 12 low-bias and 10 high-bias responders). In any case, a 2 (bias level) × 3 (size) × 3 (response level: correct responses to conflict trials; incorrect responses to conflict trials; correct responses to no-conflict trials) repeated measures ANOVA showed a main effect of response level, F(2, 40) = 14.40, p < .001, ηp² = .42. Planned comparisons revealed that correct responses to conflict trials (M = 1,986 ms, SE = 174 ms) took longer than correct responses to no-conflict trials (M = 1,415 ms, SE = 154 ms), F(1, 20) = 21.29, p < .001. There was no difference between incorrect responses to conflict trials (M = 1,340 ms, SE = 215 ms) and correct responses to no-conflict trials (F < 1). There was also a marginally significant Bias Level × Size × Response Level interaction, F(4, 80) = 2.09, p = .090. Planned comparisons showed that, for low-bias participants, incorrect responses to conflict trials were slower for the 7%–9% differences than for the 1%–3% differences, F(1, 20) = 7.35, p = .013. The remaining planned comparisons contrasting small (1%–3%) and large (7%–9%) difference trials (incorrect responses to conflict trials of high-bias participants; correct responses to conflict trials of high- and low-bias participants; and correct responses to no-conflict trials of high- and low-bias participants) showed no significant differences (all Fs < 1). Finally, although the high-bias group responded nominally faster (M = 1,494 ms, SE = 108 ms) than the low-bias group (M = 1,666 ms, SE = 97 ms), there was no main effect of bias level in this reduced sample, F(1, 20) = 1.38, p = .25, ηp² = .06.
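The shrinking sample sizes follow from the design: a participant can enter the response-level ANOVA only if every cell contains at least one response, and participants who never erred on conflict trials have an empty "incorrect conflict" cell. Crossing response level with size multiplies the cells and, by the same logic, excludes more participants. A minimal sketch of that complete-case filter, assuming a hypothetical trial format `(participant, trial_type, correct, size, rt_ms)` and hypothetical cell labels (the authors' data format is not described here):

```python
from collections import defaultdict
from itertools import product
from statistics import mean
from typing import Dict, List, Optional, Sequence, Tuple

# Hypothetical trial record: (participant, trial_type, correct, size, rt_ms)
Trial = Tuple[str, str, bool, str, float]

LEVELS = ("conflict_correct", "conflict_incorrect", "noconflict_correct")


def response_level(trial_type: str, correct: bool) -> Optional[str]:
    """Map a trial onto the three response levels analysed in the text."""
    if trial_type == "conflict":
        return "conflict_correct" if correct else "conflict_incorrect"
    if trial_type == "no-conflict" and correct:
        return "noconflict_correct"
    return None  # incorrect no-conflict responses are not part of the design


def cell_means(trials: List[Trial],
               sizes: Optional[Sequence[str]] = None) -> Dict[str, Dict[tuple, float]]:
    """Mean RT per participant and cell, keeping only participants with no empty cell.

    Cells are response levels, or response level x size when `sizes` is given.
    """
    rts: Dict[str, Dict[tuple, List[float]]] = defaultdict(lambda: defaultdict(list))
    for pid, trial_type, correct, size, rt in trials:
        level = response_level(trial_type, correct)
        if level is None:
            continue
        key = (level,) if sizes is None else (level, size)
        rts[pid][key].append(rt)

    cells = [(lv,) for lv in LEVELS] if sizes is None else list(product(LEVELS, sizes))
    complete = {}
    for pid, by_cell in rts.items():
        if all(by_cell.get(cell) for cell in cells):  # every cell needs >= 1 trial
            complete[pid] = {cell: mean(by_cell[cell]) for cell in cells}
    return complete
```

With a toy input in which one participant never errs on conflict trials, that participant is dropped from the response-level analysis; a second participant who errs only on small-difference trials survives that analysis but is dropped once size is added, mirroring the drop from n = 48 to n = 22 described above.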

These filler tasks did not influence performance, because (1) Experiment 2 successfully replicated the key results of Experiment 1 (i.e., response times correlated with C but not with H, whereas the proportion of large-numerator choices in equal trials was associated with H but not with C), and (2), more importantly, both C and H scores were remarkably stable across blocks, including the first block, where no interference could have taken place.

We also computed the PD estimates after removing the participants with perfect performance in conflict trials and found the same pattern of results. The C and H parameters did not change across blocks (both Fs < 1). Response speed affected the C parameter, which was higher for slow responders (M = 88%, SE = 4.7%) than for fast responders (M = 68%, SE = 6.1%), t(82) = 3.18, p = .002, d = 0.70, without affecting the H parameter (fast responders: M = 59%; slow responders: M = 62%), t < 1. When size was included in two separate 2 (response speed) × 2 (size) ANOVAs (with C and H as dependent variables, respectively), the proportion of participants who had to be removed because of perfect performance increased to 43% of the sample. Response speed still affected C (fast responders: M = 57%, SE = 5.6%; slow responders: M = 83%, SE = 6.5%), F(1, 55) = 8.99, p = .004, ηp² = .14, but not H (fast responders: M = 68%, SE = 3.7%; slow responders: M = 65%, SE = 4.3%), F < 1. There was no main effect of size, and size did not interact with response speed in either ANOVA (all Fs < 1.88, ps > .17).
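The d values reported in these notes are consistent with the common conversion from an independent-samples t statistic, d = 2t/√df. The article does not state how d was computed, so the sketch below is only a consistency check under that assumption; the helper name `cohens_d_from_t` is ours.

```python
import math


def cohens_d_from_t(t: float, df: int) -> float:
    """Cohen's d for two independent groups, recovered from t and its df: d = 2t / sqrt(df)."""
    return 2.0 * t / math.sqrt(df)


# C parameter: slow vs. fast responders, t(82) = 3.18.
print(round(cohens_d_from_t(3.18, 82), 2))  # 0.7
# C parameter: logical-people vs. most-people instructions, t(53) = 2.28.
print(round(cohens_d_from_t(2.28, 53), 2))  # 0.63
```

The same conversion also recovers the d = 0.29 reported for the null instructions effect on H, t(53) = 1.05.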

The analysis including the variable response level comprises a smaller number of participants (n = 84; 36 low-bias and 48 high-bias responders) because the remaining participants gave no incorrect responses to conflict trials. When we ran an ANOVA that also included the variable size, the reduction in the number of participants increased (n = 56; 19 low-bias and 37 high-bias responders). In any case, a 2 (bias level) × 2 (size) × 3 (response level: correct responses to conflict trials; incorrect responses to conflict trials; correct responses to no-conflict trials) repeated measures ANOVA showed a similar pattern of results: a main effect of response level, F(2, 108) = 5.91, p = .004, ηp² = .10. Planned comparisons revealed that correct responses to conflict trials (M = 1,378 ms, SE = 81 ms) took longer than correct responses to no-conflict trials (M = 1,230 ms, SE = 57 ms), F(1, 54) = 17.98, p < .001. There was no difference between incorrect responses to conflict trials (M = 1,194 ms, SE = 108 ms) and correct responses to no-conflict trials (F < 1). There was also a marginally significant main effect of bias level, F(1, 54) = 3.03, p = .087, ηp² = .05, with the high-bias group responding faster (M = 1,180 ms, SE = 58 ms) than the low-bias group (M = 1,355 ms, SE = 81 ms).
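Likewise, the partial eta-squared values in these notes can be recovered from each F ratio and its degrees of freedom through the standard identity ηp² = F·df_effect / (F·df_effect + df_error), which follows from F = (SS_effect/df_effect)/(SS_error/df_error). A short sketch that reproduces values reported above (the function name is ours):

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared, SS_effect / (SS_effect + SS_error), computed from an F ratio."""
    return (f * df_effect) / (f * df_effect + df_error)


print(round(partial_eta_squared(5.91, 2, 108), 2))  # response level: 0.1
print(round(partial_eta_squared(3.03, 1, 54), 2))   # bias level: 0.05
```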

References

Bonner, C., & Newell, B. R. (2010). In conflict with ourselves? An investigation of heuristic and analytic processes in decision making. Memory & Cognition, 38, 186–196.

Conrey, F. R., Sherman, J. W., Gawronski, B., Hugenberg, K., & Groom, C. (2005). Separating multiple processes in implicit social cognition: The quad-model of implicit task performance. Journal of Personality and Social Psychology, 89, 469–487.

De Neys, W. (2012). Bias and conflict: A case for logical intuitions. Perspectives on Psychological Science, 7, 28–38.

De Neys, W. (2014). Conflict detection, dual processes, and logical intuitions: Some clarifications. Thinking & Reasoning, 20, 169–187.

De Neys, W., & Bonnefon, J. F. (2013). The whys and whens of individual differences in thinking biases. Trends in Cognitive Sciences, 17, 172–178.

De Neys, W., & Franssens, S. (2009). Belief inhibition during thinking: Not always winning but at least taking part. Cognition, 113, 45–61.

De Neys, W., & Glumicic, T. (2008). Conflict monitoring in dual process theories of thinking. Cognition, 106, 1248–1299.

Denes-Raj, V., & Epstein, S. (1994). Conflict between intuitive and rational processing: When people behave against their better judgment. Journal of Personality and Social Psychology, 66, 819–829.

Epstein, S., & Pacini, R. (2000–2001). The influence of visualization on intuitive and analytical information processing. Imagination, Cognition & Personality, 20, 195–216.

Evans, J. S. B. T. (2003). In two minds: Dual-process accounts of reasoning. Trends in Cognitive Sciences, 7, 454–459.

Evans, J. S. B. T., & Stanovich, K. E. (2013). Dual-process theories of higher cognition: Advancing the debate. Perspectives on Psychological Science, 8, 223–241.

Ferreira, M. B., Garcia-Marques, L., Sherman, S. J., & Sherman, J. W. (2006). Automatic and controlled components of judgment and decision making. Journal of Personality and Social Psychology, 91, 797–813.

Gillund, G., & Shiffrin, R. M. (1984). A retrieval model for both recognition and recall. Psychological Review, 91, 1–67.

Hammond, K. R. (1988). Judgment and decision making in dynamic tasks. Information and Decision Technologies, 14, 3–14.

Hammond, K. R. (1996). Human judgment and social policy. New York: Oxford University Press.

Holender, D. (1986). Semantic activation without conscious identification in dichotic listening, parafoveal vision, and visual masking: A survey and appraisal. Behavioral and Brain Sciences, 9, 1–66.

Jacoby, L. L. (1991). A process dissociation framework: Separating automatic from intentional uses of memory. Journal of Memory and Language, 30, 513–541.

Jacoby, L. L., Toth, J. P., & Yonelinas, A. P. (1993). Separating conscious and unconscious influences of memory: Measuring recollection. Journal of Experimental Psychology: General, 122, 139–154.

Jacoby, L. L., Yonelinas, A. P., & Jennings, J. M. (1997). The relation between conscious and unconscious (automatic) influences: A declaration of independence. In J. D. Cohen & J. W. Schooler (Eds.), Scientific approaches to consciousness (pp. 13–47). Mahwah: Erlbaum.

Kahneman, D., & Frederick, S. (2002). Representativeness revisited: Attribute substitution in intuitive judgment. In T. Gilovich, D. Griffin, & D. Kahneman (Eds.), Heuristics and biases: The psychology of intuitive judgment (pp. 49–81). Cambridge: Cambridge University Press.

Kirkpatrick, L. A., & Epstein, S. (1992). Cognitive–experiential self-theory and subjective probability: Further evidence for two conceptual systems. Journal of Personality and Social Psychology, 63, 534–544.

Lefebvre, M., Vieider, F. M., & Villeval, M. C. (2011). The ratio bias phenomenon: Fact or artifact? Theory and Decision, 71, 615–641.

Mata, A., & Almeida, T. (2014). Using metacognitive cues to infer others’ thinking. Judgment and Decision Making, 9, 349–359.

Mata, A., Ferreira, M. B., & Reis, J. (2013). A process-dissociation analysis of semantic illusions. Acta Psychologica, 144, 433–443.

Mata, A., Ferreira, M. B., & Sherman, S. J. (2013). The metacognitive advantage of deliberative thinkers: A dual-process perspective on overconfidence. Journal of Personality and Social Psychology, 105, 353–373.

Mata, A., Fiedler, K., Ferreira, M. B., & Almeida, T. (2013). Reasoning about others’ reasoning. Journal of Experimental Social Psychology, 49, 486–491.

Mata, A., Schubert, A., & Ferreira, M. B. (2014). The role of language comprehension in reasoning: How “good-enough” representations induce biases. Cognition, 133, 457–463.

Mevel, K., Poirel, N., Rossi, S., Cassotti, M., Simon, G., Houdé, O., & De Neys, W. (2015). Bias detection: Response confidence evidence for conflict sensitivity in the ratio bias task. Journal of Cognitive Psychology, 27, 227–237.

Miller, D. T., Turnbull, W., & McFarland, C. (1989). When a coincidence is suspicious: The role of mental simulation. Journal of Personality and Social Psychology, 57, 581–589.

Passerini, G., Macchi, L., & Bagassi, M. (2012). A methodological approach to ratio bias. Judgment and Decision Making, 7, 602–617.

Payne, B. K., & Bishara, A. J. (2009). An integrative review of process dissociation and related models in social cognition. European Review of Social Psychology, 20, 272–314.

Pennycook, G., Fugelsang, J. A., & Koehler, D. J. (2012). Are we good at detecting conflict during reasoning? Cognition, 124, 101–106.

Pennycook, G., Fugelsang, J. A., & Koehler, D. J. (2015). What makes us think? A three-stage dual-process model of analytic engagement. Cognitive Psychology, 80, 34–72.

Ratcliff, R., Van Zandt, T., & McKoon, G. (1995). Process dissociation, single-process theories, and recognition memory. Journal of Experimental Psychology: General, 124, 352–374.

Sherman, S. J., & Corty, E. (1984). Cognitive heuristics. In R. S. Wyer & T. K. Srull (Eds.), Handbook of social cognition (Vol. 1, pp. 189–286). Mahwah: Erlbaum.

Squire, L. R. (1987). Memory and brain. New York: Oxford University Press.

Stanovich, K. E. (2009). Distinguishing the reflective, algorithmic, and autonomous minds: Is it time for a tri-process theory? In J. Evans & K. Frankish (Eds.), In two minds: Dual processes and beyond (pp. 55–88). Oxford: Oxford University Press.

Stanovich, K. E., & West, R. F. (2000). Individual differences in reasoning: Implications for the rationality debate. Behavioral and Brain Sciences, 23, 645–726.

Thompson, V. A. (2009). Dual process theories: A metacognitive perspective. In J. S. B. T. Evans & K. Frankish (Eds.), In two minds: Dual processes and beyond. Oxford: Oxford University Press.

Wegner, D. M., & Bargh, J. A. (1998). Control and automaticity in social life. In D. T. Gilbert, S. T. Fiske, & G. Lindzey (Eds.), Handbook of social psychology (4th ed., Vol. 1, pp. 446–496). New York: McGraw-Hill.

Yonelinas, A. P., & Jacoby, L. L. (2012). The process-dissociation approach two decades later: Convergence, boundary conditions, and new directions. Memory & Cognition, 40, 663–680.

Author Note

This research was partially supported by Grant PTDC/PSI-PSO/117009/2010, awarded to the first author, and by Grants UID/PSI/04810/2013 and IF/01612/2014, from the Foundation for Science and Technology of the Ministry of Science and Higher Education (Portugal); and by a Research Fellowship for Experienced Researchers awarded by the Alexander von Humboldt-Stiftung to the first author.

We thank Ben Newell for providing us with the trial combinations and the instructions used in Bonner and Newell (2010).


Cite this article

Ferreira, M.B., Mata, A., Donkin, C. et al. Analytic and heuristic processes in the detection and resolution of conflict. Mem Cogn 44, 1050–1063 (2016). https://doi.org/10.3758/s13421-016-0618-7
