Abstract

The impact of Parkinson’s disease (PD) on rule-guided behavior has received considerable attention in cognitive neuroscience. The majority of research has used PD as a model of dysfunction in frontostriatal networks, but very few attempts have been made to investigate the possibility of adapting common experimental techniques in an effort to identify the conditions that are most likely to facilitate successful performance. The present study investigated a targeted training paradigm designed to facilitate rule learning and application using rule-based categorization as a model task. Participants received targeted training in which there was no selective-attention demand (i.e., stimuli varied along a single, relevant dimension) or nontargeted training in which there was selective-attention demand (i.e., stimuli varied along a relevant dimension as well as an irrelevant dimension). Following training, all participants were tested on a rule-based task with selective-attention demand. During the test phase, PD patients who received targeted training performed similarly to control participants and outperformed patients who did not receive targeted training. As a preliminary test of the generalizability of the benefit of targeted training, a subset of the PD patients was tested on the Wisconsin card sorting task (WCST). PD patients who received targeted training outperformed PD patients who did not receive targeted training on several WCST performance measures. These data further characterize the contribution of frontostriatal circuitry to rule-guided behavior. Importantly, these data also suggest that the impairment of PD patients on selective-attention-demanding tasks of rule-guided behavior is not inevitable, and they highlight the potential benefit of targeted training.


Introduction

The contribution of frontostriatal circuitry to rule-guided behavior has been an area of intense research in recent years. Neuropsychological work has focused largely on patients with Parkinson’s disease (PD), a neurodegenerative disease affecting several neurotransmitter systems that are critical for normal frontostriatal function (Braak et al., 2003). Although much has been learned about frontostriatal contributions to rule-guided behavior from the study of individuals with PD, very few attempts have been made to investigate the possibility of adapting common experimental techniques in an effort to identify the conditions that are most likely to facilitate successful performance. Moreover, with few exceptions, previous attempts to improve the ability of PD patients to cope with the cognitive symptoms of the disease have used fairly coarse training protocols, making it difficult to determine which aspects of training were critical for improvement and what cognitive processes were affected (Hindle, Petrelli, Clare, & Kalbe, 2013). The goals of the present work are to further characterize the PD impairment in a task of rule-guided behavior and to conduct an initial investigation of the impact of targeted training on tasks of rule-guided behavior.

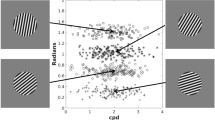

Rule-guided behavior is a very broad construct and, not surprisingly, has been studied using a variety of techniques (e.g., Bunge & Wallis, 2007). The approach taken here is to operationalize rule-guided behavior in the context of rule-based categorization. Rule-based categorization tasks are often designed such that optimal performance can be obtained if participants learn to attend to the relevant stimulus dimensions, ignore the irrelevant stimulus dimensions (if necessary), and learn the placement of decision criteria on the relevant dimensions (Ashby & Ell, 2001). An example of a category structure for a unidimensional, rule-based task is plotted in Fig. 1. Each of the points in Fig. 1 represents a sine-wave grating of a particular spatial frequency and orientation from one of two contrasting categories. To learn this category structure, participants would need to learn to attend selectively to spatial frequency and learn the placement of the criterion on spatial frequency (i.e., decisional selective attention; Ashby & Townsend, 1986; Maddox, 1992; Maddox, Ashby, & Waldron, 2002). Thus, in the present work, rule-guided behavior refers to the learning and application of a decision criterion on a perceptual dimension (i.e., spatial frequency in Fig. 1) that can be used to support classification. Furthermore, selective-attention demand refers to the requirement to ignore variability along an irrelevant stimulus dimension (i.e., orientation in Fig. 1).

Fig. 1. An example of a unidimensional, rule-based category structure. Each point in the graph represents a sine-wave grating of a particular spatial frequency (bar width) and orientation (bar angle). “+” symbols represent category A stimuli, and “o” symbols represent category B stimuli. The vertical line is the optimal decision criterion. The decision rule could be described as “Respond A if the bars are thick; otherwise, respond B.” The insets are example stimuli.

PD patients have demonstrated a remarkably consistent impairment in unidimensional tasks (Ashby, Noble, Filoteo, Waldron, & Ell, 2003; Ell, Weinstein, & Ivry, 2010; Filoteo, Maddox, Ing, & Song, 2007; Filoteo, Maddox, Ing, Zizak, & Song, 2005; Price, Filoteo, & Maddox, 2009). Interestingly, analyses of individual differences in decision strategy revealed that this impairment is characterized not by a total failure of selective attention (i.e., an inability to distinguish between relevant and irrelevant information) but, rather, by instability in the representation of the decision rule (Ell et al., 2010; Filoteo et al., 2007). For example, Ell et al. (2010) found that estimates of internal variability in the representation of the decision rule were larger for PD patients than for matched controls in a unidimensional task similar to the one described in Fig. 1. The effect appears to be somewhat specific to selective-attention demand: there have been reports that this impairment may be absent (Filoteo et al., 2007), or unrelated to decision rule variability (Ell et al., 2010), in rule-based tasks without selective-attention demand.

Targeted training of rule-guided behavior

Although limited, previous work gives some reason for optimism about using behavioral training paradigms to improve cognition in PD patients (see Hindle et al., 2013, for a review). The vast majority of these paradigms, however, have used nontargeted approaches that make it unclear which cognitive processes should be the focus of future interventions. For instance, Sinforiani, Banchieri, Zucchella, Pacchetti, and Sandrini (2004) trained a group of PD patients using a set of computerized tasks designed to provide training in a variety of cognitive domains (e.g., attention, reasoning, memory). Relative to pretraining baseline, PD patients demonstrated improvement in reasoning and verbal fluency, but there was no benefit for working memory (e.g., digit span) or executive functioning (e.g., WCST, Stroop). Sammer, Reuter, Hullmann, Kaps, and Vaitl (2006) took a slightly more focused approach by training a group of PD patients in a collection of working memory and executive function tasks. Relative to pretraining baseline levels, PD patients demonstrated some limited improvement in executive functioning. There was no improvement, however, relative to a group of PD patients who did not receive cognitive training (i.e., who received physical and occupational therapy). Although these studies suggest that it may be possible to improve some aspects of cognition via nontargeted training, they do not permit a detailed characterization of the affected cognitive processes or the critical components of the training paradigm.

More recent work by Disbrow and colleagues (Disbrow et al., 2012) suggests that targeted training of a specific cognitive process (i.e., sequence generation) can benefit more general aspects of cognition (i.e., executive function) that depend on the trained process. The goal of the present study is to take a related approach—more specifically, to investigate the impact of targeted versus nontargeted training on a subsequent unidimensional, rule-based categorization task. An additional goal is to present a preliminary investigation of generalization to another task of rule-guided behavior—that is, the WCST (Grant & Berg, 1948; Heaton, Chelune, Talley, Kay, & Curtiss, 1993). This study is an important step in comparing the efficacy of targeted versus nontargeted training and has the potential to inform the design of future interventions for improving cognition in individuals with PD.

The extant data suggest that pretraining PD patients on the decision rule with reduced selective-attention demand may be one way in which to target training. Indeed, the PD patient impairment in the ability to maintain a stable representation of the decision rule has been observed only in the presence of selective-attention demand (Ell et al., 2010; Filoteo et al., 2007). Moreover, several studies have found that PD patients perform normally in rule-based tasks when selective-attention demand is reduced (Filoteo et al., 2007; Maddox, Filoteo, Delis, & Salmon, 1996).

Would PD patients who receive targeted training of the decision rule have any advantage over PD patients who receive nontargeted training on a subsequent unidimensional, rule-based task? Although this hypothesis has not been directly tested, several studies suggest that providing strategic information can mitigate the negative impact of selective-attention demand. First, informing patients of the optimal rule appears to reduce the magnitude of the PD impairment in rule-based tasks (Maddox et al., 1996), relative to studies in which participants have no a priori knowledge of the optimal rule (Filoteo et al., 2007). Second, PD patients perform normally when they have a priori knowledge of the target in a selective-attention-demanding visual search task (Horowitz, Choi, Horvitz, Côté, & Mangels, 2006). Thus, it seems plausible that PD patients would be able to learn the optimal rule with reduced selective-attention demand and apply this rule during a subsequent test phase in which selective-attention demand is increased.

To test this hypothesis, patients and controls will participate in one of three training conditions (Fig. 2). In the control (CON) condition, participants will be trained on a prototypical unidimensional task (i.e., both spatial frequency and orientation will vary during training). In the relevant-dimension variation (RDV) condition, the stimuli will vary only along the relevant dimension, thereby providing an opportunity for the patients to learn the optimal decision rule without selective-attention demand (i.e., orientation will be constant during training). The CON and RDV categories differ only in the variance along the irrelevant dimension. To control for the possibility that simply reducing variance improves PD patient performance, we will also include an irrelevant-dimension variation (IDV) condition in which variance along the irrelevant dimension is the same as in the CON condition, but the stimuli will be binary-valued along the relevant dimension. Following training, all participants will complete a test phase using the CON categories. Thus, for participants in the CON condition, the categories will not change. For participants in the RDV condition, selective-attention demand will be increased. For participants in the IDV condition, variance will increase along the relevant dimension. The category structure will vary across conditions during the training phase, but the category structure will be identical for all participants during the test phase.

Fig. 2. The category structures for the three training conditions investigated in the present experiment. CON, control; RDV, relevant dimension variation; IDV, irrelevant dimension variation. “+” symbols represent category A stimuli, and “o” symbols represent category B stimuli. The vertical line is the optimal decision rule and is identical across the three conditions. Participants were trained on one of these three category structures and then immediately tested on the CON category structure.

In the nontargeted training conditions (i.e., the CON and IDV conditions), patients will be trained in the presence of selective-attention demand. Therefore, PD patients are expected to be impaired during the training and test phases. In contrast, in the targeted training condition (i.e., the RDV condition), participants will receive training in the absence of selective-attention demand. On the basis of previous work, PD patients are expected to learn the decision rule in the absence of selective-attention demand and perform similarly to control participants during training. As participants transition to the test phase, selective-attention demand will be increased. If increasing selective-attention demand disrupts the application of a learned decision rule, PD patients would be impaired, relative to controls, during the test phase. If, instead, the negative impact of selective-attention demand on unidimensional tasks is restricted to learning of the decision rule, PD patients would be expected to continue to perform similarly to control participants during the test phase. In addition, if targeted training is successful, PD patients receiving targeted training would be expected to perform better than PD patients receiving nontargeted training. This approach has the advantage of enabling the characterization of specific aspects of cognitive dysfunction in PD patients (e.g., the use of a suboptimal decision rule vs. the failure to maintain a stable representation of a decision rule; Ell et al., 2010).

Will any benefit of targeted training generalize to other tasks of rule-guided behavior? As a preliminary investigation of this question, a subset of the PD patients completed the WCST immediately following the test phase. The WCST is ideal because successful performance on it depends upon rule-guided behavior in the presence of selective-attention demand and because it has been extensively used as a neuropsychological instrument to assess cognition in PD. PD patients receiving targeted training are expected to perform better than PD patients receiving nontargeted training on the WCST.

Method

Participants

The study procedures were approved by the University of Maine Institutional Review Board and are consistent with the Helsinki Declaration. Thirty-eight patients (15 female) with idiopathic PD were recruited for participation. The patients were recruited by referrals from neurologists or through Parkinson’s support groups throughout Maine. Patients were screened for a history of neurological dysfunction unrelated to PD, dementia (scores < 25 on the Mini Mental State Exam; Folstein, Folstein, & McHugh, 1975), and symptoms of depression (scores > 20 on the Beck Depression Inventory; Beck, Steer, & Brown, 1996), resulting in the exclusion of 2 patients on the basis of high depression scores.

Disease stage based on Hoehn and Yahr (1967) ratings indicated that the patients were in the mild-to-moderate stages of the disease, with 32 of the 36 patients at stage 1 or 2 (on the 5-point scale). Disease severity was evaluated with the motor subscale of the Unified Parkinson’s Disease Rating Scale (UPDRS; Fahn, Elton, & Members of the UPDRS Development Committee, 1987). See Table 1 for patient demographics and assessments of disease stage and severity.

Given that most PD patients take some form of dopaminergic medication and that PD impairment on the task shown in Fig. 1 has been found to be insensitive to withdrawal of dopaminergic medication (Ell et al., 2010), the PD patients were tested while on their normal medication regimen. At the time of the experiment, 35 of the 36 patients were taking daily doses of L-dopa medications. Seventeen of the patients were also taking a mixed D2/D3 receptor agonist. Several of the PD patients were taking additional medications: MAO-B inhibitors (n = 9), COMT inhibitors (n = 6), antidepressants (n = 11), and anticholinergics (n = 5). One PD patient was not taking any medication. The time since the last dose of dopaminergic medications prior to testing is given in Table 1.

A control group (n = 35, 26 female) was recruited from the communities surrounding the University of Maine. As with the patients, controls were screened for a history of neurological dysfunction, dementia, and symptoms of depression, resulting in the exclusion of one control participant on the basis of a high depression score. None of the controls reported a history of neurological disorders. Six controls were being medicated for symptoms of depression at the time of testing. See Table 1 for control demographics.

Neuropsychological assessment

A battery of neuropsychological tests was used to assess different aspects of cognitive function in both patients and controls. The National Adult Reading Test (NART; Nelson, 1982) was used to provide an estimate of premorbid verbal intelligence. In rule-based tasks, learning is argued to be highly dependent upon working memory and executive function (Ashby & Maddox, 2005). Thus, neuropsychological tests were included to assess these processes. The digit span subtest (backward) of the Wechsler Adult Intelligence Scale–Third Edition (Wechsler, 1997a) and the spatial span subtest (backward) of the Wechsler Memory Scale–Third Edition (Wechsler, 1997b) provided an index of working memory.

Executive functions were evaluated with the Color–Word Interference (CWI) subtest from the Delis–Kaplan Executive Function System (DKEFS; Delis, Kaplan, & Kramer, 2001). The CWI comprises four subtests. The first two were baseline measures of the time to name a list of colors and the time to read a list of color words. The third was a modified version of the traditional Stroop task (Stroop, 1935), designed to assess the role of response conflict and inhibitory processes when naming the ink color of dissonant color words (e.g., the word “green” in red ink). The fourth subtest incorporated a task-switching component in which participants were asked to alternate (irregularly) between naming the ink color and reading the word. The third (i.e., inhibition) and fourth (i.e., switching + inhibition) subtests were used as indices of executive functioning. Inhibition scores were computed by subtracting the average time to complete the two baseline subtests from the time to complete the inhibition subtest. Switching scores were computed by subtracting the time to complete the inhibition subtest from the time to complete the switching + inhibition subtest. For both measures, higher numbers indicated a greater cost or reduced executive functioning.
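The two CWI difference scores can be written as a small sketch. It assumes that the inhibition score is the inhibition-subtest time minus the mean of the color-naming and word-reading times, and that the switching score is the switching + inhibition time minus the inhibition time; the function name `cwi_scores` and the example completion times are hypothetical, not D-KEFS norms or data from this study.

```python
from statistics import mean

def cwi_scores(color_naming_s, word_reading_s, inhibition_s, switching_s):
    """Return (inhibition_cost, switching_cost) in seconds.

    Higher values indicate a greater cost, i.e., reduced executive functioning.
    """
    baseline = mean([color_naming_s, word_reading_s])  # average of the two baseline subtests
    inhibition_cost = inhibition_s - baseline
    switching_cost = switching_s - inhibition_s        # cost beyond inhibition alone
    return inhibition_cost, switching_cost

# Hypothetical completion times (seconds) for one participant
inhibition, switching = cwi_scores(30.0, 22.0, 55.0, 63.0)
print(inhibition, switching)  # 29.0 8.0
```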

Experimental tasks

The patients (CON, n = 13; RDV, n = 11; IDV, n = 12) and controls (CON, n = 10; RDV, n = 11; IDV, n = 13) were randomly assigned to complete one of the three categorization training conditions. The stimuli were sine-wave gratings that varied across trials in spatial frequency and orientation (counterclockwise from horizontal). Eighty stimuli were used in each condition, with 40 assigned to each of the two response categories. To create these category structures, a variation of the randomization technique introduced by Ashby and Gott (1988) was used. Each category was defined as a bivariate normal distribution with a mean and a variance on each dimension and by a covariance between dimensions (see Table 2 for the category parameters and Fig. 2 for the category structures). The category means for the IDV condition were selected to equate the observed minimum intercategory distance across conditions, as distance to the category boundary is a critical determinant of performance (Ashby & Maddox, 1994; Ell & Ashby, 2006, 2012).
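A minimal sketch of drawing one stimulus set per condition from bivariate normal category distributions follows. The parameter values in `CONDITIONS` are hypothetical placeholders (the actual values are in Table 2), the helper names are illustrative, and plain random sampling is used; the specific variation of the Ashby and Gott (1988) randomization technique is not reproduced. The sketch mirrors only the design logic: RDV removes variance on the irrelevant coordinate x2, and IDV keeps the CON variance on x2 but makes each category single-valued on the relevant coordinate x1.

```python
import math
import random

# HYPOTHETICAL parameters, not Table 2: for each condition and category,
# ((mean_x1, mean_x2), (sd_x1, sd_x2)). x1 is the relevant (frequency)
# coordinate and x2 the irrelevant (orientation) coordinate.
CONDITIONS = {
    "CON": {"A": ((30, 125), (6, 40)), "B": ((70, 125), (6, 40))},
    "RDV": {"A": ((30, 125), (6, 0)), "B": ((70, 125), (6, 0))},    # x2 constant
    "IDV": {"A": ((40, 125), (0, 40)), "B": ((60, 125), (0, 40))},  # binary-valued x1
}

def sample_category(n, mean, sd, rho, rng):
    """Draw n pseudorandom samples (x1, x2) from a bivariate normal distribution."""
    (mu1, mu2), (s1, s2) = mean, sd
    samples = []
    for _ in range(n):
        z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        x1 = mu1 + s1 * z1
        # Mixing z1 into x2 gives the two dimensions correlation rho
        x2 = mu2 + s2 * (rho * z1 + math.sqrt(1.0 - rho ** 2) * z2)
        samples.append((x1, x2))
    return samples

def make_stimulus_set(condition, n_per_category=40, rho=0.0, seed=0):
    """Return 2 * n_per_category labeled samples (x1, x2, category)."""
    rng = random.Random(seed)
    stimuli = []
    for label, (mean, sd) in CONDITIONS[condition].items():
        samples = sample_category(n_per_category, mean, sd, rho, rng)
        stimuli += [(x1, x2, label) for x1, x2 in samples]
    return stimuli

rdv_stimuli = make_stimulus_set("RDV", seed=3)  # 80 stimuli, orientation fixed
```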

To generate the stimuli, 40 pseudorandom samples $(x_1, x_2)$ were drawn from each category distribution. Each random sample $(x_1, x_2)$ was converted to a stimulus by deriving the frequency, $f = 0.25 + x_1/50$ cycles/degree of visual angle, and the orientation, $o = x_2 \pi / 500$ radians. The scaling factors were chosen from previous work (e.g., Ell, Ing, & Maddox, 2009) in an attempt to equate the salience of frequency and orientation. Each stimulus was presented on a gray background and subtended a visual angle of 4.35° at a viewing distance of approximately 51 cm. The stimuli were generated and presented using the Psychophysics Toolbox extensions (Brainard, 1997; Pelli, 1997) for MATLAB. The stimuli were displayed on either a 20-in. LCD with a 1,600 × 1,200 resolution in a dimly lit room or on a 17-in. laptop LCD with a 1,680 × 1,050 resolution when testing was conducted in the participants' home. In the latter case, the stimuli were scaled to equate the visual angle.
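The mapping from sample space to grating parameters, and the physical stimulus size implied by the reported visual angle, can be checked with a short sketch. The pixel conversion is an assumption: it treats the 4.35° as the full stimulus width, assumes square pixels, and takes the nominal screen diagonal as the viewable diagonal, so it is not necessarily how the stimuli were scaled in the study. All function names are hypothetical.

```python
import math

def to_grating(x1, x2):
    """Convert a sample (x1, x2) to (frequency in cycles/deg, orientation in radians)."""
    frequency = 0.25 + x1 / 50
    orientation = x2 * math.pi / 500
    return frequency, orientation

def size_cm(visual_angle_deg, distance_cm):
    """Physical extent that subtends visual_angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(visual_angle_deg) / 2)

def pixels_per_cm(diagonal_in, width_px, height_px):
    """ASSUMES square pixels and a viewable diagonal equal to the nominal size."""
    return math.hypot(width_px, height_px) / (diagonal_in * 2.54)

extent = size_cm(4.35, 51)                          # about 3.87 cm at 51 cm
desktop_px = extent * pixels_per_cm(20, 1600, 1200)
laptop_px = extent * pixels_per_cm(17, 1680, 1050)
print(round(desktop_px), round(laptop_px))          # 153 178
```

Under these assumptions the same visual angle needs more pixels on the laptop because its pixels are physically smaller.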

On each trial, a single stimulus was presented, and the participant was instructed to make a category assignment by pressing one of two response keys (labeled “A” or “B”) with either the left or the right index finger. A standard keyboard was used to collect responses. The keyboard characters “d” and “k” were assigned to categories A and B, respectively (the assignment of category labels to response keys was counterbalanced across participants). We did not expect performance to vary between the two hands, given that the response requirements were minimal (e.g., speed was not emphasized) and that none of the patients had overt difficulty producing the finger movements. Participants were instructed that their goal was to learn the categories by trial and error. Participants were informed that there were two equally likely categories and that the best possible accuracy was 100% (i.e., optimal accuracy). The instructions emphasized accuracy, and there was no response time limit. After each response, feedback was provided. When the response was correct, the word “CORRECT” appeared in green and was accompanied by a 1-s, 500-Hz tone; when incorrect, the word “WRONG” appeared in red and was accompanied by a 1-s, 200-Hz tone. The screen was then blanked for 500 ms prior to the appearance of the next stimulus. In addition to trial-by-trial feedback, summary feedback was given at the end of each 80-trial block, indicating overall accuracy for that block. The presentation order of the 80 stimuli was randomized within each block, separately for each participant.

Each participant was trained for three blocks of 80 trials on the CON, RDV, or IDV category structures (Fig. 2). At the completion of training, participants were informed that they would now complete three more blocks designed to test the knowledge they gained during training. Feedback was omitted during the first test block in an effort to determine the impact of training on the test categories while minimizing new learning. Participants were informed that trial-by-trial feedback would be omitted during the first test block and that trial-by-trial feedback would be reinstated during the final two test blocks.
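The block and trial structure described above can be sketched as follows. The functions `session_schedule` and `run_block` and the `respond`/`learn` callbacks are hypothetical stand-ins for a participant or a simulated learner; display, tones, and timing are omitted. The sketch returns block accuracy for every block because it is not stated whether summary feedback was shown after the no-feedback test block.

```python
import random

def session_schedule(n_train=3, n_test=3):
    """List of (phase, block, trial_feedback_on) for each 80-trial block."""
    blocks = [("train", b, True) for b in range(1, n_train + 1)]
    # Trial-by-trial feedback is withheld only in the first test block
    blocks += [("test", b, b > 1) for b in range(1, n_test + 1)]
    return blocks

def run_block(stimuli, respond, learn, feedback_on, rng):
    """Present each (stimulus, category) pair once in a fresh random order.

    respond(stimulus) returns "A" or "B"; learn(stimulus, category) is called
    only when trial-by-trial feedback is on. Returns the block accuracy.
    """
    order = list(stimuli)
    rng.shuffle(order)  # order randomized within each block
    n_correct = 0
    for stimulus, category in order:
        n_correct += respond(stimulus) == category
        if feedback_on:
            learn(stimulus, category)
    return n_correct / len(order)

# Hypothetical usage: a simulated responder applying a fixed rule on x1
rng = random.Random(0)
stimuli = [((x, 125), "A" if x < 50 else "B") for x in range(10, 90)]
rule = lambda s: "A" if s[0] < 50 else "B"
accuracies = [run_block(stimuli, rule, lambda s, c: None, fb, rng)
              for _, _, fb in session_schedule()]
```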

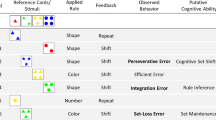

In order to investigate whether the benefits of targeted training would generalize to another task of rule-guided behavior, a subset (n = 15) of the patients completed the WCST immediately after the categorization task. It was not possible to test all PD patients on the WCST, because the WCST was added to the protocol midway through data collection. In the WCST, the participant attempted to learn the correct rule for matching multidimensional card stimuli (varying across trials in color, form, and number) to one of four key cards by trial and error. On each trial, the participant indicated which of the four key cards was the correct match for the current stimulus and immediately received feedback indicating whether their response was correct or incorrect. Unbeknownst to the participant, once the rule was learned (i.e., after achieving 10 consecutive correct trials), the rule was changed. The WCST was selected because successful performance depends upon rule-guided behavior in the presence of selective-attention demand and because the WCST has been extensively used as a neuropsychological instrument to assess cognition in PD patients (e.g., Price et al., 2009). Five common performance measures from the WCST were computed: the number of trials to learn the first rule, the number of total errors (on 128 trials), the number of rules learned (on 128 trials), the number of sets (i.e., five consecutive correct responses), and the number of set-loss errors (i.e., the number of errors following the acquisition of a set).
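A sketch of how the five WCST measures might be computed from a trial-by-trial record of correct and incorrect responses follows. The scoring conventions are assumptions drawn from the definitions above, not the standardized procedure of Heaton et al. (1993): the rule changes after 10 consecutive correct responses, a set is counted when a run of correct responses reaches five, and a set-loss error is an error made after such a run but before the rule is completed. `score_wcst` is a hypothetical name, and a participant who never learns the first rule receives `None` rather than any particular penalty value.

```python
def score_wcst(correct, max_trials=128, criterion=10, set_length=5):
    """Score a WCST run from a list of booleans (True = correct response)."""
    streak = 0
    scores = {"trials_to_first_rule": None, "total_errors": 0,
              "rules_learned": 0, "sets": 0, "set_loss_errors": 0}
    for trial, ok in enumerate(correct[:max_trials], start=1):
        if ok:
            streak += 1
            if streak == set_length:
                scores["sets"] += 1
            if streak == criterion:
                scores["rules_learned"] += 1
                if scores["trials_to_first_rule"] is None:
                    scores["trials_to_first_rule"] = trial
                streak = 0  # the rule changes, so the run starts over
        else:
            scores["total_errors"] += 1
            if streak >= set_length:  # error after a set was acquired
                scores["set_loss_errors"] += 1
            streak = 0
    return scores

# Hypothetical record: first rule learned, a set lost, then another error
record = [True] * 16 + [False] + [True] * 3 + [False]
print(score_wcst(record))
```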

During the experimental session, participants also completed neuropsychological testing either directly before or after the experimental tasks. Due to time constraints, not all participants completed all neuropsychological assessments; as a result, the degrees of freedom vary by neuropsychological assessment in the analysis of the Table 1 data. Each session lasted approximately 2.5 h, including neuropsychological testing and multiple breaks.

Results

Preliminary analyses

Before testing the primary hypotheses, it was necessary to determine whether there were any baseline differences in demographic, neuropsychological, or neuropathological variables. Gender (see the Method section) was not equally represented across the patient and control groups, χ²(75) = 10.14, p < .05. The relevant statistics for all other baseline comparisons are reported in Table 1. With the exception of the number of depressive symptoms, PD patients and controls did not differ on any of the baseline measures. For the PD-patient-specific variables, disease duration was the only variable that differed significantly across conditions, with patients in the IDV condition having a longer disease duration than those in the CON condition (p = .02). Baseline differences on these three variables (gender, depressive symptoms, and disease duration), however, were not consistent with the pattern of condition and group differences in accuracy during the critical test phase (see subsequent analyses and the General Discussion section).

Categorization accuracy

Participants were randomly assigned to be trained on one of the Fig. 2 category structures. Following training, all participants were tested on the CON category structure (top panel of Fig. 2). It was predicted that during the test phase, PD patients who received targeted training in the absence of selective-attention demand (RDV condition) would not be impaired, whereas PD patients who received nontargeted training (CON and IDV conditions) would be impaired (relative to neurologically healthy control participants). Because the primary predictions focused on within-condition comparisons between PD patients and control participants during the test phase, we opted to use the focused, planned comparisons approach recommended by Rosenthal and colleagues (Rosenthal, Rosnow, & Rubin, 2000) to analyze the accuracy data. More specifically, we conducted participant group (control vs. PD patient) × test block mixed ANOVAs separately for each training condition (CON, RDV, IDV). For consistency, the same analysis strategy was used for the training data.
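The per-condition group × block mixed ANOVA can be sketched in NumPy as below. This is a textbook split-plot decomposition, not the software used in the study. It reports uncorrected degrees of freedom (the fractional df reported in this section presumably reflect a sphericity correction that the sketch does not apply). With unequal group sizes, it uses weighted marginal means, so values may differ slightly from packages that use Type III sums of squares. `mixed_anova` and its data layout are hypothetical.

```python
import numpy as np

def mixed_anova(groups):
    """groups: dict of name -> (subjects x blocks) array. Returns F, df, and partial eta^2."""
    data = [np.asarray(g, dtype=float) for g in groups.values()]
    k = data[0].shape[1]                       # number of blocks (within-subject levels)
    n = [d.shape[0] for d in data]             # subjects per group
    n_total, n_groups = int(sum(n)), len(data)
    all_y = np.vstack(data)
    grand = all_y.mean()

    ss_total = ((all_y - grand) ** 2).sum()
    ss_subjects = k * ((all_y.mean(axis=1) - grand) ** 2).sum()
    ss_group = k * sum(nj * (d.mean() - grand) ** 2 for nj, d in zip(n, data))
    ss_err_between = ss_subjects - ss_group    # subjects within groups

    ss_block = n_total * ((all_y.mean(axis=0) - grand) ** 2).sum()
    ss_cells = sum(nj * ((d.mean(axis=0) - grand) ** 2).sum() for nj, d in zip(n, data))
    ss_inter = ss_cells - ss_group - ss_block
    ss_err_within = ss_total - ss_subjects - ss_block - ss_inter

    df_group, df_err_b = n_groups - 1, n_total - n_groups
    df_block = k - 1
    df_inter, df_err_w = df_group * df_block, df_err_b * df_block

    def effect(ss, df, ss_err, df_err):
        return {"F": (ss / df) / (ss_err / df_err), "df": (df, df_err),
                "eta_p2": ss / (ss + ss_err)}

    return {"group": effect(ss_group, df_group, ss_err_between, df_err_b),
            "block": effect(ss_block, df_block, ss_err_within, df_err_w),
            "group x block": effect(ss_inter, df_inter, ss_err_within, df_err_w)}

# Hypothetical accuracy data: 2 subjects per group, 2 blocks
result = mixed_anova({"control": [[1, 2], [3, 5]], "PD": [[2, 5], [4, 8]]})
```

Calling it once per training condition, with one accuracy matrix per participant group, mirrors the planned-comparisons strategy described above.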

Training phase

Inspection of training accuracy (Fig. 3) suggests that PD patients were less accurate than controls only in the CON condition (see Footnote 1). In the CON condition, the main effects of group and block were significant [group, F(1, 21) = 8.34, p < .05, ηp² = .28; block, F(1.58, 33.18) = 9.24, p < .05, ηp² = .31; group × block, F(1.58, 33.18) = 0.43, p = .61, ηp² = .02] (see Footnote 2). In the RDV condition, only the main effect of block was significant [group, F(1, 20) = 0.67, p = .42, ηp² = .03; block, F(1.60, 31.92) = 6.30, p < .05, ηp² = .24; group × block, F(1.60, 31.92) = 0.12, p = .84, ηp² = .006]. The same pattern was observed in the IDV condition [group, F(1, 22) = 0.30, p = .59, ηp² = .01; block, F(1.66, 36.59) = 6.32, p < .05, ηp² = .22; group × block, F(1.66, 36.59) = 0.76, p = .45, ηp² = .03]. Relative to control participants, PD patients were impaired in the CON condition, but their performance was spared in the IDV condition, suggesting that the mere presence of selective-attention demand is insufficient to impair categorization accuracy. As expected, PD patients performed similarly to controls in the absence of selective-attention demand (RDV condition).
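Partial eta squared can be recovered from each reported F ratio and its degrees of freedom, since ηp² = F·df_effect / (F·df_effect + df_error). This also holds for sphericity-corrected df, because the correction scales both df by the same factor. The snippet below, with a hypothetical function name, reproduces two of the training-phase values reported for the CON condition.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)

# Training phase, CON condition (values reported above)
print(round(partial_eta_squared(8.34, 1, 21), 2))        # group: 0.28
print(round(partial_eta_squared(9.24, 1.58, 33.18), 2))  # block: 0.31
```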

Test phase

Inspection of test accuracy (Fig. 3) suggests that the PD patients who received nontargeted training (CON, IDV) were impaired, whereas the PD patients who received targeted training (RDV) were not. In the CON condition, only the main effect of group was significant [group, F(1, 21) = 6.01, p < .05, ηp² = .22; block, F(2, 42) = 1.09, p = .35, ηp² = .05; group × block, F(2, 42) = 0.30, p = .75, ηp² = .01]. In the RDV condition, none of the effects were significant [group, F(1, 20) = 1.57, p = .22, ηp² = .07; block, F(1.38, 27.41) = 1.82, p = .19, ηp² = .08; group × block, F(1.38, 27.41) = 2.28, p = .14, ηp² = .10]. Contrary to the initial inspection of the Fig. 3 data, the main effect of group was not significant in the IDV condition [group, F(1, 22) = 2.91, p = .10, ηp² = .12; block, F(2, 44) = 3.07, p = .06, ηp² = .12; group × block, F(2, 44) = 1.65, p = .20, ηp² = .07]. This may be a consequence of the similar performance of patients and controls during the first test block (in which corrective feedback was omitted), relative to the subsequent test blocks (in which feedback was reinstated). Consistent with this argument, a 2 group × 2 block ANOVA on the final two test blocks suggested that there may have been a more subtle PD impairment in the IDV condition [main effect of group, F(1, 22) = 4.40, p < .05, ηp² = .17]. In sum, consistent with predictions, PD patients who received targeted training in the RDV condition were not impaired at test, whereas PD patients in the CON condition were impaired at test. The data from the IDV condition were less clear, but the results suggest that the PD patients may have been impaired in this condition as well.

The above analyses suggest that PD patients who received targeted training performed as well as matched controls. Another prediction was that PD patients who received targeted training would perform better than patients in the nontargeted conditions (i.e., CON and IDV). This a priori prediction was tested by comparing test phase accuracy (i.e., average accuracy during the test blocks when corrective feedback was provided) for patients in the CON and IDV conditions with test phase accuracy for patients in the RDV condition. Consistent with this prediction, PD patients in the RDV condition outperformed PD patients in the CON condition, t(15.35) = 2.16, p < .05, d = 1.1, and PD patients in the IDV condition, t(12.97) = 2.57, p < .05, d = 1.43 (see Footnote 3). In sum, PD patients who received targeted training were as accurate as control participants and more accurate than PD patients who received nontargeted training.
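The fractional degrees of freedom in these comparisons (e.g., t(15.35)) are consistent with Welch's unequal-variance t test. A minimal sketch of that test and of one common form of Cohen's d (based on the pooled standard deviation) follows. Which variant of d was used is not stated here, so `cohens_d` is an assumption, and both function names are hypothetical.

```python
import math
from statistics import mean, variance

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va, vb = variance(a) / len(a), variance(b) / len(b)  # squared standard errors
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

def cohens_d(a, b):
    """Cohen's d using the pooled standard deviation."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / math.sqrt(pooled_var)

# Hypothetical accuracy scores for two small groups
t, df = welch_t([1, 2, 3, 4], [2, 4, 6, 8])
```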

Categorization decision strategy

The analysis of categorization accuracy during the test phase suggests that the PD patients may have benefited from targeted training. To further explore these data, we used model-based analyses to evaluate variability in the decision strategies used to perform the categorization task. For example, patients may have differed across conditions in their ability to attend selectively to spatial frequency (e.g., resulting in a decision rule that was sensitive to both dimensions) or in their ability to consistently apply a decision rule. Alternatively, some patients may have demonstrated a more general inability to perform the categorization task. The following analyses represent a quantitative approach to evaluating these hypotheses.

Three different types of models were evaluated, each based on a different assumption concerning the participant's strategy. The rule-based models assume that the participant attends selectively to one dimension (unidimensional classifiers; e.g., if the bars are thin, respond B; otherwise, respond A). There were two versions of the unidimensional classifier, one assuming that participants used the optimal decision rule in Fig. 2 (optimal classifier, OC) and one assuming that participants used a unidimensional rule with a suboptimal intercept on the spatial frequency dimension (unidimensional classifier, UC). Information-integration (II) models (linear classifier and minimum-distance classifiers) assume that the participant combines the stimulus information from both dimensions prior to making a categorization decision. Finally, random-responder (RR) models assume that the participant simply guesses or frequently switches among a number of different strategies. Each of these models was fit separately to the data from every block for all participants using a standard maximum likelihood procedure for parameter estimation (Ashby, 1992b; Wickens, 1982) and the Bayes information criterion for goodness of fit (Schwarz, 1978). See the Appendix for a description of the models and fitting procedures.
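A simplified sketch of the model comparison for a single block follows. It assumes Gaussian criterial noise on the spatial-frequency coordinate x1 and that “A” responses correspond to low x1 (thick bars). Only the OC, UC, and RR models are included (the II models and the orientation classifier are omitted). The maximum likelihood estimates come from a coarse grid search rather than a numerical optimizer, and all names are hypothetical; the Appendix describes the models actually fit. BIC is computed as r ln N − 2 ln L, where r is the number of free parameters and N is the number of trials, and the model with the lowest BIC is the best fit.

```python
import math

def norm_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def _log_p(p_a, response):
    p_a = min(max(p_a, 1e-10), 1.0 - 1e-10)  # guard against log(0)
    return math.log(p_a if response == "A" else 1.0 - p_a)

def rule_log_lik(x1s, responses, criterion, noise):
    """Respond "A" when x1 + e < criterion, with e ~ N(0, noise**2)."""
    return sum(_log_p(norm_cdf((criterion - x) / noise), r)
               for x, r in zip(x1s, responses))

def bic(log_lik, n_params, n_trials):
    return n_params * math.log(n_trials) - 2.0 * log_lik

def fit_block(x1s, responses, optimal_criterion, criteria, noises):
    """BIC for each model (lower is better); ML estimates via grid search."""
    n = len(responses)
    # OC: criterion fixed at the optimal value, noise free (1 parameter)
    oc = max(rule_log_lik(x1s, responses, optimal_criterion, s) for s in noises)
    # UC: criterion and noise both free (2 parameters)
    uc = max(rule_log_lik(x1s, responses, c, s) for c in criteria for s in noises)
    # RR: responses ignore the stimulus; P("A") is the single free parameter
    p_a = sum(r == "A" for r in responses) / n
    rr = sum(_log_p(p_a, r) for r in responses)
    return {"OC": bic(oc, 1, n), "UC": bic(uc, 2, n), "RR": bic(rr, 1, n)}

x1s = list(range(21, 81, 2))                     # 30 hypothetical stimuli
rule_responses = ["A" if x < 50 else "B" for x in x1s]
bics = fit_block(x1s, rule_responses, 50, criteria=[40, 45, 50, 55, 60],
                 noises=[0.5, 1, 2, 4, 8, 16])
best = min(bics, key=bics.get)
```

In this sketch, a responder who applies the optimal criterion without error is best fit by the OC model, whereas responses unrelated to x1 favor the RR model.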

The percentage of participants best fit by each model is given in Fig. 4; for brevity, we focus this analysis on the final test block. There were no significant differences in the distribution of best-fitting models between patients and controls in any of the conditions, although the difference approached significance in the IDV condition [CON, χ²(3) = 3.06, p = .38; RDV, χ²(2) = 1.25, p = .54; IDV, χ²(3) = 7.53, p = .06]. Notably, an RR model provided the best fit for some PD patients in the CON and IDV conditions, but for none in the RDV condition. Thus, although the distribution of best-fitting models did not differ significantly between patients and controls, the numerically greater use of suboptimal strategies by PD patients in the CON and IDV conditions may partially explain these patients' lower test accuracy. Consistent with this interpretation, in the CON and IDV conditions, patients best fit by a UC (CON, M = 84.79, SEM = 8.33; IDV, M = 80.71, SEM = 14.25) or an RR (CON, M = 53.28, SEM = 5.19; IDV, M = 47.92, SEM = 5.51) model had lower accuracy than patients best fit by the OC (CON, M = 93.24, SEM = 1.41; IDV, M = 88.84, SEM = 5.78).
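These comparisons are Pearson chi-square tests of independence on a group × best-fitting-model table of counts. A minimal standard-library sketch follows. The counts themselves appear only in Fig. 4, so any table passed to `chi2_independence` here is hypothetical, and dropping models that fit no participant in a condition is an assumption that keeps every expected count nonzero. Applied to the χ² values above, the p-value routine reproduces the reported p values.

```python
import math

def chi2_sf(stat, df):
    """Upper-tail p value of a chi-square statistic, 1 - P(df/2, stat/2), where P is
    the regularized lower incomplete gamma function evaluated by its power series."""
    a, x = df / 2.0, stat / 2.0
    if x <= 0.0:
        return 1.0
    term = total = 1.0 / a
    n = 1
    while term > total * 1e-15 and n < 10_000:
        term *= x / (a + n)
        total += term
        n += 1
    lower = total * math.exp(-x + a * math.log(x) - math.lgamma(a))
    return max(0.0, 1.0 - lower)

def chi2_independence(table):
    """Pearson chi-square test of independence for a group x model table of counts."""
    # Assumption: drop models that fit nobody, so every expected count is nonzero.
    keep = [j for j in range(len(table[0])) if any(row[j] for row in table)]
    t = [[row[j] for j in keep] for row in table]
    row_tot = [sum(r) for r in t]
    col_tot = [sum(c) for c in zip(*t)]
    n = sum(row_tot)
    stat = sum((t[i][j] - row_tot[i] * col_tot[j] / n) ** 2 / (row_tot[i] * col_tot[j] / n)
               for i in range(len(t)) for j in range(len(keep)))
    df = (len(t) - 1) * (len(keep) - 1)
    return stat, df, chi2_sf(stat, df)

# Check the p-value routine against the statistics reported in the text.
for stat, df in [(3.06, 3), (1.25, 2), (7.53, 3)]:
    print(f"chi2({df}) = {stat}: p = {chi2_sf(stat, df):.2f}")  # 0.38, 0.54, 0.06
```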

Fig. 4 Percentage of participants in the control (CON), relevant dimension variation (RDV), and irrelevant dimension variation (IDV) conditions whose data were best fit by the optimal classifier (OC), the suboptimal unidimensional classifier on spatial frequency (UC), an information-integration model (II), or a model assuming that participants were responding randomly (RR). For ease of presentation, the unidimensional classifier on orientation has been omitted, since the data from only 1 participant (a PD participant in the RDV condition during the first test block) were best fit by this model.

The PD impairment in the CON and IDV conditions during test may have also been driven by instability in the representation of the decision rule. This could arise from an increase in trial-by-trial variability in the representation and/or application of the decision rule (i.e., internal noise; see Note 4). This was investigated by conducting a series of 2 group × 2 block (test block 2, test block 3) mixed ANOVAs on the average noise parameter estimate, excluding participants who were best fit by an RR model (Fig. 5). Consistent with the hypothesis of increased decision rule variability, the average noise parameter estimate was consistently higher for the PD patients than for the controls in the CON condition [group, F(1, 18) = 4.58, p < .05, ηp² = .20; block, F(1, 18) = 1.99, p = .87, ηp² = .002; group × block, F(1, 18) = 0.33, p = .56, ηp² = .02], but not in the RDV condition [group, F(1, 20) = 2.43, p = .14, ηp² = .11; block, F(1, 20) = 0.64, p = .43, ηp² = .03; group × block, F(1, 20) = 1.71, p = .21, ηp² = .08]. In the IDV condition, however, there were no group differences in rule variability [group, F(1, 16) = 0.08, p = .78, ηp² = .01; block, F(1, 16) = 2.09, p = .17, ηp² = .12; group × block, F(1, 16) = 0.01, p = .93, ηp² = .00]. Thus, consistent with previous work, PD patients demonstrated increased instability in the representation of the decision rule in a prototypical unidimensional task (i.e., the CON condition). In addition, PD patients who received targeted training did not significantly differ from controls.
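Partial eta squared can be recovered from each reported F ratio and its degrees of freedom, which offers a quick consistency check on an ANOVA summary. A minimal sketch (the function name is hypothetical):

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom:
    eta_p^2 = (F * df_effect) / (F * df_effect + df_error)."""
    return f_value * df_effect / (f_value * df_effect + df_error)

# Group effect in the CON condition, F(1, 18) = 4.58:
print(f"{partial_eta_squared(4.58, 1, 18):.2f}")  # 0.20
```

The same conversion gives .11 for the RDV group effect, F(1, 20) = 2.43.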

WCST accuracy

To test whether the benefit of targeted training generalized to another rule-based task, a subset of the PD patients in the nontargeted training conditions (CON and IDV, n = 8) and the targeted training condition (RDV, n = 7) were tested on a modified version of the WCST immediately after completing the test phase. Five measures were used to assess WCST performance; these are plotted in Fig. 6. PD patients who received targeted training numerically outperformed PD patients who received nontargeted training on all measures except the number of set-loss errors. These differences reached statistical significance for the number of trials needed to learn the first rule, t(13) = 2.12, p = .05, d = 1.10, the total number of errors, t(13) = 2.27, p = .04, d = 1.18, and the total number of sets, t(13) = 2.41, p = .03, d = 1.26. The number of rules learned, t(13) = 1.16, p = .27, d = .60, and the number of set-loss errors, t(13) = 0.93, p = .37, d = .48, did not differ significantly. Although limited by the small sample size, these preliminary data suggest that the benefits of targeted training on a unidimensional task may generalize to another task of rule-guided behavior.
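For two independent groups, Cohen's d can be obtained from a pooled-variance t statistic and the group sizes. The sketch below assumes d was computed with the pooled standard deviation (the text does not state the formula); for the trials-to-first-rule comparison it gives the reported d = 1.10.

```python
import math

def cohens_d_from_t(t, n1, n2):
    """Cohen's d for two independent groups from a pooled-variance t statistic:
    d = t * sqrt(1/n1 + 1/n2)."""
    return t * math.sqrt(1.0 / n1 + 1.0 / n2)

# Trials needed to learn the first rule: t(13) = 2.12, nontargeted n = 8, targeted n = 7.
print(f"{cohens_d_from_t(2.12, 8, 7):.2f}")  # 1.10
```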

General discussion

The impact of PD on cognition has received considerable attention in cognitive neuroscience. The majority of research has used PD as a model of dysfunction in frontostriatal circuitry, thereby emphasizing its contribution to cognition in neurologically healthy individuals. Often overlooked, however, are the implications of such research for identifying the specific manipulations most likely to promote cognitive functioning. The present study addressed this issue in the context of rule-guided behavior, using rule-based categorization as a model task. PD patients who received targeted training of the decision rule (i.e., an opportunity to learn a decision rule in the absence of selective-attention demand) performed similarly to healthy control participants during a test phase in which selective-attention demand was increased. These PD patients also outperformed PD patients who received nontargeted training (i.e., an opportunity to learn the decision rule in the presence of selective-attention demand). Moreover, patients who received targeted training outperformed patients who received nontargeted training on a subsequent task of rule-guided behavior. This study is an important step in comparing the efficacy of targeted versus nontargeted training and has the potential to inform the design of more targeted interventions for improving cognition in individuals with PD.

Consistent with previous work (Ashby et al., 2003; Ell et al., 2010; Filoteo et al., 2007; Filoteo et al., 2005; Price et al., 2009), PD patients were impaired relative to control participants throughout the training and test phases in the CON condition. As predicted from the results of Maddox and colleagues (Maddox et al., 1996), PD patients who received targeted training were able to learn the categorization rule. Importantly, PD patients who received targeted training continued to use the categorization rule during the test phase, when selective-attention demand was increased, resulting in test phase performance that was similar to that of control participants and that exceeded the performance of PD patients who received nontargeted training.

Targeted training may have shielded the decision rule from the interfering effects of irrelevant information introduced during the test phase. Recall that during targeted training there was no irrelevant dimension (i.e., stimuli varied only in spatial frequency); thus, it would seem reasonable to assume that the benefit of targeted training was mediated by enhanced selective attention to the relevant dimension. During the test phase, however, there was variation in orientation that would have been novel for participants who received targeted training. Given that at least some PD patients demonstrate enhanced sensitivity to novel stimuli (e.g., Djamshidian, O'Sullivan, Wittmann, Lees, & Averbeck, 2011), it seems unlikely that PD patients would have completely ignored the novel variation in orientation during the test phase. Rather, targeted training may have facilitated learning to ignore the irrelevant variation in orientation during the test phase.

Interestingly, training phase performance varied across the nontargeted training conditions. Patients in the CON condition, but not the IDV condition, were impaired relative to controls throughout the training phase, suggesting that the mere presence of selective-attention demand is not sufficient to impair PD patient performance. Recall that the category means for the IDV training categories were selected to equate the observed intercategory distance across conditions, because distance to the category boundary is a critical determinant of performance (Ashby & Maddox, 1994; Ell & Ashby, 2006, 2012). One consequence of this choice is that training accuracy was lower for control participants in the IDV condition than for control participants in the CON condition [average training accuracy, t(20) = 5.26, p < .05, d = 2.35]. Nevertheless, it would still have been possible to detect an impairment, given that control participant accuracy was well above chance (Fig. 3). During the IDV test phase, PD patients were impaired relative to control participants (when focusing on the feedback blocks), suggesting that simply reducing variance during training is not sufficient to benefit subsequent performance at test. It is possible, however, that PD patients would have benefited from a variant of IDV training in which task difficulty was reduced (e.g., by increasing intercategory distance).

The results of the model-based analyses suggest that the test phase accuracy impairment for patients who received nontargeted training may be related to the use of highly suboptimal decision rules by a subset of the patients. In addition, PD patients in one of the nontargeted training conditions (i.e., CON) demonstrated increased variability in the representation of the decision rule, relative to control participants, during the test phase [note, however, that the difference between PD patients in the CON and RDV conditions was not statistically significant; average variability across blocks, t(20) = 1.38, p = .18, d = 0.62]. Increased rule variability for PD patients in the CON condition could have been driven by impaired memory for the current rule, frequent adjustment of the decision rule, and/or impaired application of the decision rule. The relative contribution of these possible sources of increased rule variability cannot be directly assessed with the present data, but previous work on the impact of PD on cognition provides some guidance. For instance, working memory would seem to be an unlikely cause, given the absence of a general impairment in working memory in the present patient sample (see Table 1) and the fact that working memory is generally spared in medicated PD patients (Cools, Miyakawa, Sheridan, & D'Esposito, 2010; Kehagia, Barker, & Robbins, 2010; Lewis, Slabosz, Robbins, Barker, & Owen, 2005; Slabosz et al., 2006). In addition, the ability of PD patients to adjust a decision rule along a stimulus dimension in order to classify highly discriminable stimuli (i.e., intradimensional shifting) is also typically spared, but preliminary data suggest that PD patients may have an intradimensional shifting impairment when stimulus discriminability is reduced, as in the present tasks (Ell & Zilioli, 2010). Thus, PD patients in the CON condition may have had an impaired ability to shift and/or apply a decision rule, relative to control participants.

Potential limitations of the present study

With the exception of gender and self-reported depressive symptomatology, there were no significant differences in baseline demographic or neuropsychological variables between the patient and control groups. Neither of these differences, however, can explain the benefit of targeted training. For instance, gender was not predictive of performance during the critical test phase for either the patient, r(36) = .16, p = .36, or the control, r(34) = −.11, p = .54, group. In addition, depressive symptomatology did not differ by condition, as would have been expected if it accounted for the superior performance of the patients who received targeted training relative to the patients who received nontargeted training.

Given the small sample size, however, it is likely that the analyses were underpowered for detecting differences in demographic and neuropsychological variables. For example, the patients in the CON condition were older than the control participants in the CON condition and the patients who received targeted training, but the group × condition interaction did not reach statistical significance. A power analysis conducted using G*Power 3 (Faul, Erdfelder, Lang, & Buchner, 2007) indicated that 235 total participants (i.e., approximately 40 participants in each combination of group and condition) would be required to achieve an adequate level of power (i.e., 1 − β = .8) to detect the interaction, given the present effect size. The small sample size should also be considered when interpreting the statistically significant results, since the present data could represent extreme values (e.g., a sample drawn from an extreme region of the distribution of PD patients).
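For reference, the fixed-effects ANOVA power calculation behind this kind of estimate can be summarized as follows. This is a general sketch, assuming Cohen's f as the effect-size index, a 2 (group) × 3 (condition) design with 6 cells, and α = .05; the effect size used in the present analysis is not reported here, so no numerical solution is shown. N is increased until the computed power reaches .8.

```latex
\begin{aligned}
f^{2} &= \frac{\eta_p^{2}}{1-\eta_p^{2}}, \qquad \lambda = f^{2} N, \\
\nu_1 &= (2-1)(3-1) = 2, \qquad \nu_2 = N - 6, \\
F_{\text{crit}} &= F^{-1}_{\nu_1,\nu_2}(1-\alpha), \\
1-\beta &= 1 - F_{\nu_1,\nu_2,\lambda}\!\left(F_{\text{crit}}\right),
\end{aligned}
```

Here F_{ν1,ν2,λ} denotes the cumulative distribution function of the noncentral F distribution with noncentrality parameter λ.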

For the categorization data, it is possible that the relatively normal test phase performance of the PD patients who received targeted training was driven by a ceiling effect, since control participant accuracy was near perfect throughout the training and test phases. It is important to note, however, that the test categories were identical across conditions. Thus, a potential ceiling effect cannot account for the finding that PD patients who received targeted training outperformed PD patients who received nontargeted training.

The category structure used for targeted training was designed to equate objective accuracy (e.g., ideal observer accuracy) with the CON category structure. There was, however, a clear subjective task difficulty difference across conditions. It would have been possible to artificially increase task difficulty in the targeted training condition by increasing category overlap. Given that previous work has not investigated the impact of category overlap (Ashby et al., 2003; Ell et al., 2010; Filoteo et al., 2007; Filoteo et al., 2005; Price et al., 2009) and that category overlap can impact decision processes in healthy individuals (e.g., Ell & Ashby, 2006, 2012), such an option was not pursued in the present study.

Nevertheless, the difference in task difficulty suggests at least two alternative interpretations of the present data. First, differences between targeted and nontargeted training could be due to variability across conditions in categorization ability. To limit patient fatigue due to extended task performance, the patients were not pretested on the test categories prior to training. One consequence of this approach, however, is that it is unclear whether the apparent benefit of targeted training was driven by the structure of the training categories or by an inherent difference in categorization ability across conditions for these patients (i.e., a failure of random assignment). The latter interpretation seems unlikely, given that there were no significant differences between the targeted and nontargeted training groups in working memory and executive function, cognitive abilities shown to be critical for rule-based categorization (Ashby & Maddox, 2005).

Second, differences between targeted and nontargeted training could be due to nonspecific effects of task success. In other words, targeted training may have trained the patients to be successful, and this success may have carried over to subsequent performance. A similar concept, "learning-to-learn," has been described in the context of animal behavior (Harlow, 1949) and motor learning (Braun, Aertsen, Wolpert, & Mehring, 2009) and has been associated with a similar sort of nonspecific practice effect. A related argument has been made in the cognitive rehabilitation literature, in which it has been suggested that an effective method of rehabilitating individuals with neurological dysfunction is to begin with stimuli that minimize the likelihood of making an error and to increase task difficulty steadily (i.e., the method of errorless learning; Baddeley, 1992; Terrace, 1964; Wilson, Baddeley, Evans, & Shiel, 1994). The impact of successful performance cannot be completely ruled out, given that higher average training accuracy was associated with higher average test accuracy for PD patients in the CON, r(13) = .92, p < .05, and RDV conditions, r(12) = .82, p < .05. Nevertheless, successful performance does not provide a complete account of the present data, since the association between training and test accuracy was not significant for PD patients in the IDV condition, r(13) = .17, p = .56.

Targeted training of cognition

The efficacy of cognitive training has received considerable attention in recent years. In the context of neurologically healthy individuals, there is an ongoing debate on whether cognitive training benefits performance (e.g., Jaeggi, Buschkuehl, Jonides, & Shah, 2011; Redick et al., 2012). Many of the experimental design issues raised in this debate (e.g., selecting the appropriate comparison conditions) have been echoed in the neuropsychological literature on cognitive training (Hart, Fann, & Novack, 2008; Kennedy & Turkstra, 2006; Schutz & Trainor, 2007). Despite the numerous challenges of investigating the efficacy of cognitive training as a nonpharmacological tool for treating neurological dysfunction, there are examples of well-designed experiments that have used cognitive training (e.g., Constantinidou, Thomas, & Robinson, 2008; Constantinidou et al., 2005; Disbrow et al., 2012). For example, Constantinidou and colleagues demonstrated that an expanded training protocol (including perceptual discrimination, object recognition, and categorization) benefited categorization performance and generalized to a variety of tasks of rule-guided behavior in patients with traumatic brain injury.

It could be argued that many of the attempts to apply cognitive training to individuals with PD suffer from the experimental design limitations that have been discussed in the broader literature on the efficacy of cognitive training (see Hindle et al., 2013, for a review). Furthermore, the majority of these studies have used nontargeted approaches that make it unclear which cognitive processes should be the focus of future interventions. Consistent with a more recent intervention (Disbrow et al., 2012), the approach investigated in the present study was to use targeted training of a specific aspect of cognition. The emphasis on rule-guided behavior was driven by its importance for cognition in general (Bunge & Wallis, 2007) and the consistent findings of PD patient impairment on a model task of rule-guided behavior (i.e., rule-based categorization; Ashby et al., 2003; Ell et al., 2010; Filoteo et al., 2007; Filoteo et al., 2005; Price, 2006). The present data suggest that rule-guided behavior may be a cognitive process on which to focus future intervention work.

Practical considerations regarding the use of cognitive training in applied settings require that the effects of cognitive training protocols generalize beyond the specific context of training. This consideration was the motivation for including a preliminary investigation of the generalizability of targeted training to the WCST. The WCST was selected because successful performance depends upon rule-guided behavior in the presence of selective-attention demand and because of its frequent use in the study of frontostriatal dysfunction. In addition, the WCST is predictive of future cognitive decline (Woods & Tröster, 2003) and health status (Schieshser et al., in press) in PD patients, making it an important marker of functioning. Although limited by a small sample size, the preliminary WCST data suggest that targeted training may have a more general effect on rule-guided behavior and highlight the potential benefit of focusing future interventions on specific cognitive processes.

Implications for the study of rule-guided behavior

An assumption underlying the interpretation of the present data is that participants are, in fact, learning a rule in the unidimensional task. This is a potentially critical issue, given that computational models with quite different representational assumptions can provide equivalent accounts of the data in many categorization tasks (Ashby & Maddox, 1998; Townsend, 1992). The Fig. 2 category structures were not designed to test between competing theories of categorization, so the evidence supporting the use of rule-based strategies should be carefully considered. First, the model-based analyses indicate that rule-based models consistently provided a better account of individual response profiles than did an information-integration model that assumed that participants represented category prototypes (i.e., the minimum distance classifier; see the Appendix for details). Second, given that the category means for the CON and RDV training categories are identical, it seems reasonable to assume that the representation of the category prototypes would be similar. If so, in contrast with the results, prototype theory would seem to predict equivalent performance for these conditions during the test phase (but see Anderson, 1991; Love, Medin, & Gureckis, 2004; Minda & Smith, 2001; Pothos & Chater, 2002, for possible alternatives). Finally, if PD patients were representing exemplars (e.g., as assumed by the generalized context model; Nosofsky, 1986) rather than rules, test phase performance should have been greater in the CON condition than in the RDV condition, because the stimuli were identical. These arguments suggest that the assumption that PD patients are learning rules is reasonable, but the arguments themselves are indirect, and more experimentation will be necessary to rule out alternative representational assumptions.
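The second argument can be made concrete with a bare minimum-distance (prototype) classifier: if responses depend only on the distance to each category mean, two training sets with the same means yield the same classifier and, hence, the same test-phase predictions. The stimulus coordinates below are hypothetical, and the sketch omits the attention weights and noise parameters that a fitted model (see the Appendix) would include.

```python
import math

def prototype(exemplars):
    """Category prototype: the mean of the training exemplars (x, y) =
    (spatial frequency, orientation), in hypothetical units."""
    xs, ys = zip(*exemplars)
    return (sum(xs) / len(xs), sum(ys) / len(ys))

def classify(stimulus, prototypes):
    """Minimum-distance rule: choose the category with the nearest prototype."""
    return min(prototypes, key=lambda label: math.dist(stimulus, prototypes[label]))

# CON-like training varies on both dimensions; RDV-like training varies only on x.
con = {"A": prototype([(1, -1), (1, 1), (3, -1), (3, 1)]),
       "B": prototype([(5, -1), (5, 1), (7, -1), (7, 1)])}
rdv = {"A": prototype([(1, 0), (3, 0)]), "B": prototype([(5, 0), (7, 0)])}
test = [(2.5, 3.0), (4.2, -2.0), (6.0, 0.5)]
print(con == rdv)  # True: identical prototypes
print([classify(s, con) for s in test] == [classify(s, rdv) for s in test])  # True
```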

In the context of rule-based categorization, frontostriatal networks have been argued to support the maintenance and updating of rules necessary for learning (Ashby, Alfonso-Reese, Turken, & Waldron, 1998; Ashby, Ell, Valentin, & Casale, 2005; Hélie, Paul, & Ashby, 2012). For instance, the COVIS (competition between verbal and implicit systems; Ashby et al., 1998) model of category learning assumes that dopamine depletion resulting from PD would impair the switching of attention away from the currently attended stimulus dimension and the selection of a new stimulus dimension to which to attend, and would increase decision rule variability on the selected dimension (Hélie et al., 2012). The latter is most relevant to the present discussion, given that the PD impairment in unidimensional tasks has often been associated with increased decision rule variability (Ell et al., 2010; Filoteo et al., 2007). The PD impairment in the Fig. 1 task, however, does not appear to be sensitive to the withdrawal of dopaminergic medication in PD patients (Ell et al., 2010). Thus, it may be the case that the PD impairment in unidimensional tasks is associated with dysfunction in other neurotransmitter systems that are critical for normal frontostriatal functioning (Braak et al., 2003; Kehagia et al., 2010) and/or abnormalities in frontal functioning in PD patients (Monchi, Petrides, Mejia-Constain, & Strafella, 2007). Furthermore, because COVIS was not designed to model transfer across tasks with different stimulus sets, it does not make a prediction about the generalization of targeted training to the WCST that was observed in the present study.

These data are generally consistent with the idea that rule-guided behavior is mediated, in part, by frontostriatal networks (Badre & Frank, 2012; Bunge, 2004; Chudasama & Robbins, 2006; Pasupathy & Miller, 2005; Seger & Miller, 2010). More specifically, the present data suggest that targeted training benefits decision making in the presence of selective-attention demand, but the specific neural locus of this effect is unclear. Broadly speaking, targeted training could have facilitated the use of a fundamentally different neural network and/or increased the efficiency of the network that typically mediates rule-based categorization (DeGutis & D'Esposito, 2009; Kelly & Garavan, 2005). For instance, deconstructing the task (allowing participants first to learn the criterion with minimal selective-attention demand, then to apply the criterion as selective-attention demand increased, and finally to perform another rule-based task) may have facilitated a rule abstraction process that has been argued to depend upon the interaction of frontostriatal networks (Badre & Frank, 2012; Badre, Kayser, & D'Esposito, 2010).

Conclusions

Consistent with previous work, PD patients were impaired in rule-based categorization in the presence of selective-attention demand. This impairment, however, was not inevitable, since patients who received targeted training of the decision rule under conditions of reduced selective-attention demand outperformed patients who received nontargeted training during a test phase in which selective-attention demand was present. Moreover, in contrast to some of the patients who received nontargeted training, PD patients who received targeted training demonstrated variability in the representation of the decision rule that was similar to that of control participants, possibly reflecting an improved ability to shift and/or apply a decision rule. Patients who received targeted training also outperformed patients who received nontargeted training on a subsequent task of rule-guided behavior (i.e., the WCST). These data suggest the potential benefit of targeted training and may be useful in the development of large-scale intervention studies.

Notes

The data were screened for outliers by comparing each participant’s average accuracy across the test phase and final test block accuracy to the means for their condition (e.g., healthy controls in the IDV condition). Participants were excluded from further analysis if they were more than 2 SDs away from the mean on both accuracy measures. This criterion resulted in the exclusion of 1 control participant from the IDV condition.

A Huynh–Feldt correction for violations of the sphericity assumption was applied to all mixed ANOVAs when appropriate. Post hoc comparisons were Sidak corrected.

Welch’s t-test was used for these and all subsequent comparisons in which homogeneity of variance could not be assumed, resulting in noninteger degrees of freedom.

The models include a parameter to reflect trial-by-trial variability in perceptual and decisional processes. Given that the duration of stimulus presentation was unlimited and there was no response deadline, it is reasonable to assume that this parameter primarily reflects variability in the decision process.
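The screening and testing procedures described in these notes (the 2-SD outlier screen, the Sidak adjustment for post hoc comparisons, and Welch's t test with Welch–Satterthwaite degrees of freedom) can be sketched as follows. This is a minimal Python illustration, not the authors' analysis code: the accuracy values and participant IDs are hypothetical, and whether the screened participant was included when computing the condition mean and SD is not stated, so including them is an assumption.

```python
import math
import statistics

def flag_outliers(scores, k=2.0):
    """IDs of participants more than k SDs from the mean on BOTH accuracy measures.

    scores maps participant ID -> (mean test-phase accuracy, final-test-block accuracy)
    for one group in one condition. The screened participant is included when the
    mean and sample SD are computed (an assumption)."""
    measures = list(zip(*scores.values()))
    means = [statistics.mean(m) for m in measures]
    sds = [statistics.stdev(m) for m in measures]
    return [pid for pid, vals in scores.items()
            if all(abs(v - mu) > k * sd for v, mu, sd in zip(vals, means, sds))]

def sidak_adjust(p, m):
    """Sidak-adjusted p value for one of m post hoc comparisons."""
    return min(1.0, 1.0 - (1.0 - p) ** m)

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    va = statistics.variance(a) / len(a)
    vb = statistics.variance(b) / len(b)
    t = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

ids = [f"c{i:02d}" for i in range(1, 11)]
test_mean = [95, 96, 94, 97, 95, 96, 94, 95, 96, 50]    # hypothetical accuracies (%)
final_block = [93, 97, 95, 96, 94, 98, 95, 96, 97, 45]
print(flag_outliers(dict(zip(ids, zip(test_mean, final_block)))))  # ['c10']
print(round(sidak_adjust(0.01, 5), 4))                              # 0.049
t, df = welch_t([1, 2, 3, 4, 5], [2, 4, 6, 8, 10, 12])
print(round(t, 3), round(df, 2))                                     # -2.376 6.97
```

Requiring the criterion to hold on both measures makes the screen conservative: a participant who is extreme on only one accuracy measure is retained.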

References

Anderson, J. (1991). The adaptive nature of human categorization. Psychological Review, 98, 409–429.

Ashby, F. G. (1992a). Multidimensional models of categorization. In F. G. Ashby (Ed.), Multidimensional models of perception and cognition. Hillsdale: Erlbaum.

Ashby, F. G. (1992b). Multivariate probability distributions. In F. G. Ashby (Ed.), Multidimensional models of perception and cognition (pp. 1–34). Hillsdale: Lawrence Erlbaum Associates, Inc.

Ashby, F. G., Alfonso-Reese, L. A., Turken, A. U., & Waldron, E. M. (1998). A neuropsychological theory of multiple systems in category learning. Psychological Review, 105, 442–481.

Ashby, F. G., & Ell, S. W. (2001). The neurobiology of human category learning. Trends in Cognitive Sciences, 5(5), 204–210.

Ashby, F. G., Ell, S. W., Valentin, V. V., & Casale, M. B. (2005). FROST: A distributed neurocomputational model of working memory maintenance. Journal of Cognitive Neuroscience, 17, 1728–1743.

Ashby, F. G., & Gott, R. E. (1988). Decision rules in the perception and categorization of multidimensional stimuli. Journal of Experimental Psychology: Learning, Memory, and Cognition, 14, 33–53.

Ashby, F. G., & Lee, W. W. (1993). Perceptual variability as a fundamental axiom of perceptual science. In S. C. Masin (Ed.), Foundations of perceptual theory (pp. 369–399). Amsterdam: Elsevier.

Ashby, F. G., & Maddox, W. T. (1994). A response time theory of separability and integrality in speeded classification. Journal of Mathematical Psychology, 38, 423–466.

Ashby, F. G., & Maddox, W. T. (1998). Stimulus categorization. In M. H. Birnbaum (Ed.), Measurement, judgment, and decision making: Handbook of perception and cognition (pp. 251–301). San Diego: Academic Press.

Ashby, F. G., & Maddox, W. T. (2005). Human category learning. Annual Review of Psychology, 56, 149–178. doi:10.1146/annurev.psych.56.091103.070217

Ashby, F. G., Noble, S., Filoteo, J. V., Waldron, E. M., & Ell, S. W. (2003). Category learning deficits in Parkinson's disease. Neuropsychology, 17, 115–124.

Ashby, F. G., & Townsend, J. T. (1986). Varieties of perceptual independence. Psychological Review, 93, 154–179.

Ashby, F. G., & Waldron, E. M. (1999). The nature of implicit categorization. Psychonomic Bulletin & Review, 6, 363–378.

Bach, M. (1996). The Freiburg Visual Acuity test–automatic measurement of visual acuity. Optometry and Vision Science, 73(1), 49–53.

Baddeley, A. D. (1992). Implicit memory and errorless learning: A link between cognitive theory and neuropsychological rehabilitation? In L. R. Squire & N. Butters (Eds.), Neuropsychology of Memory (2nd ed., pp. 309–314). New York: Guilford.

Badre, D., & Frank, M. J. (2012). Mechanisms of hierarchical reinforcement learning in cortico-striatal circuits 2: Evidence from FMRI. Cerebral Cortex, 22, 527–536. doi:10.1093/cercor/bhr117

Badre, D., Kayser, A. S., & D'Esposito, M. (2010). Frontal cortex and the discovery of abstract action rules. Neuron, 66, 315–326. doi:10.1016/j.neuron.2010.03.025

Beck, A. T., Steer, R., & Brown, G. (1996). Beck Depression Inventory - Second edition manual. San Antonio: Psychological Corporation.

Braak, H., Del Tredici, K., Rüb, U., De Vos, R. A. I., Jansen Steur, E. N. H., & Braak, E. (2003). Staging of brain pathology related to sporadic Parkinson's disease. Neurobiology of Aging, 24, 197–210.

Brainard, D. H. (1997). Psychophysics software for use with MATLAB. Spatial Vision, 10, 433–436.

Braun, D., Aertsen, A., Wolpert, D. M., & Mehring, C. (2009). Motor task variation induces structural learning. Current Biology, 19, 352–357.

Bunge, S. A. (2004). How we use rules to select actions: A review of evidence from cognitive neuroscience. Cognitive, Affective, & Behavioral Neuroscience, 4, 564–579.

Bunge, S. A., & Wallis, J. D. (Eds.). (2007). Neuroscience of rule-guided behavior. New York: Oxford University Press.

Chudasama, Y., & Robbins, T. W. (2006). Functions of frontostriatal systems in cognition: Comparative neuropsychopharmacological studies in rats, monkeys and humans. Biological Psychology, 73, 19–38. doi:10.1016/j.biopsycho.2006.01.005

Constantinidou, F., Thomas, R. D., & Robinson, L. (2008). Benefits of categorization training in patients with traumatic brain injury during post-acute rehabilitation: Additional evidence from a randomized controlled trial. The Journal of Head Trauma Rehabilitation, 23(5), 312–328. doi:10.1097/01.HTR.0000336844.99079.2c

Constantinidou, F., Thomas, R. D., Scharp, V. L., Laske, K. M., Hammerly, M. D., & Guitonde, S. (2005). Effects of categorization training in patients with TBI during postacute rehabilitation: Preliminary findings. The Journal of Head Trauma Rehabilitation, 20(2), 143–157.

Cools, R., Miyakawa, A., Sheridan, M., & D'Esposito, M. (2010). Enhanced frontal function in Parkinson's disease. Brain, 133, 225–233. doi:10.1093/brain/awp301

DeGutis, J., & D'Esposito, M. (2009). Network changes in the transition from initial learning to well-practiced visual categorization. Frontiers in Human Neuroscience, 3, 44. doi:10.3389/neuro.09.044.2009

Delis, D. C., Kaplan, E., & Kramer, J. H. (2001). Delis-Kaplan Executive Function System. San Antonio: The Psychological Corporation.

Disbrow, E. A., Russo, K. A., Higginson, C. I., Yund, E. W., Ventura, M. I., Zhang, L., . . . Sigvardt, K. A. (2012). Efficacy of tailored computer-based neurorehabilitation for improvement of movement initiation in Parkinson's disease. Brain Research, 1452, 151–164. doi:10.1016/j.brainres.2012.02.073

Djamshidian, A., O'Sullivan, S. S., Wittmann, B. C., Lees, A. J., & Averbeck, B. B. (2011). Novelty seeking behaviour in Parkinson's disease. Neuropsychologia, 49(9), 2483–2488. doi:10.1016/j.neuropsychologia.2011.04.026

Ell, S. W., & Ashby, F. G. (2006). The effects of category overlap on information-integration and rule-based category learning. Perception & Psychophysics, 68, 1013–1026.

Ell, S. W., & Ashby, F. G. (2012). The impact of category separation on unsupervised categorization. Attention, Perception, & Psychophysics, 74, 466–475.

Ell, S. W., Ing, A. D., & Maddox, W. T. (2009). Criterial noise effects on rule-based category learning: The impact of delayed feedback. Attention, Perception, & Psychophysics, 71, 1263–1275.

Ell, S. W., Weinstein, A., & Ivry, R. B. (2010). Rule-based categorization deficits in focal basal ganglia lesion and Parkinson's disease patients. Neuropsychologia, 48(10), 2974–2986. doi:10.1016/j.neuropsychologia.2010.06.006

Ell, S. W., & Zilioli, M. (2010). The impact of Parkinson's disease on intra-dimensional shifts of the decision criterion in rule-based category learning. Poster presented at the annual meeting of the Society for Neuroscience, San Diego.

Fahn, S., Elton, R., & Members of the UPDRS Development Committee. (1987). Unified Parkinson’s Disease Rating Scale. In S. Fahn, C. D. Marsden, D. B. Calne, & M. Goldstein (Eds.), Recent developments in Parkinson's disease (Vol. 2, pp. 153–163, 293–304). Florham Park: Macmillan Health Care Information.

Faul, F., Erdfelder, E., Lang, A. G., & Buchner, A. (2007). G*Power 3: A flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39, 175–191.

Filoteo, J. V., Maddox, W. T., Ing, A. D., & Song, D. D. (2007). Characterizing rule-based category learning deficits in patients with Parkinson's disease. Neuropsychologia, 45, 305–320.

Filoteo, J. V., Maddox, W. T., Ing, A. D., Zizak, V., & Song, D. D. (2005). The impact of irrelevant dimensional variation on rule-based category learning in patients with Parkinson's disease. Journal of the International Neuropsychological Society, 11, 503–513.

Folstein, M., Folstein, S. E., & McHugh, P. R. (1975). "Mini-Mental State": A practical method for grading the cognitive state of patients for the clinician. Journal of Psychiatric Research, 12, 189–198.

Grant, D. A., & Berg, E. A. (1948). Behavioral analysis of degree of reinforcement and ease of shifting to new responses in a Weigl-type card-sorting problem. Journal of Experimental Psychology, 38, 404–411.

Green, D. M., & Swets, J. A. (1966). Signal detection theory and psychophysics. New York: Wiley.

Harlow, H. F. (1949). The formation of learning sets. Psychological Review, 56(1), 51–65.

Hart, T., Fann, J. R., & Novack, T. A. (2008). The dilemma of the control condition in experience-based cognitive and behavioural treatment research. Neuropsychological Rehabilitation, 18(1), 1–21. doi:10.1080/09602010601082359

Heaton, R. K., Chelune, G. J., Talley, J. L., Kay, G. G., & Curtiss, G. (1993). Wisconsin Card Sorting Test manual. Odessa: Psychological Assessment Resources, Inc.

Hélie, S., Paul, E. J., & Ashby, F. G. (2012). A neurocomputational account of cognitive deficits in Parkinson's disease. Neuropsychologia, 50, 2290–2302.

Hindle, J. V., Petrelli, A., Clare, L., & Kalbe, E. (2013). Nonpharmacological enhancement of cognitive function in Parkinson's disease: A systematic review. Movement Disorders. doi:10.1002/mds.25377

Hoehn, M. M., & Yahr, M. D. (1967). Parkinsonism: Onset, progression, and mortality. Neurology, 17, 427–442.

Horowitz, T. S., Choi, W. Y., Horvitz, J. C., Côté, L. J., & Mangels, J. A. (2006). Visual search deficits in Parkinson's disease are attenuated by bottom-up target salience and top-down information. Neuropsychologia, 44, 1962–1977.

Jaeggi, S. M., Buschkuehl, M., Jonides, J., & Shah, P. (2011). Short- and long-term benefits of cognitive training. Proceedings of the National Academy of Sciences of the United States of America, 108(25), 10081–10086. doi:10.1073/pnas.1103228108

Kehagia, A. A., Barker, R. A., & Robbins, T. W. (2010). Neuropsychological and clinical heterogeneity of cognitive impairment and dementia in patients with Parkinson's disease. Lancet Neurology.

Kelly, A. M., & Garavan, H. (2005). Human functional neuroimaging of brain changes associated with practice. Cerebral Cortex, 15, 1089–1102. doi:10.1093/cercor/bhi005

Kennedy, M. R., & Turkstra, L. (2006). Group intervention studies in the cognitive rehabilitation of individuals with traumatic brain injury: Challenges faced by researchers. Neuropsychology Review, 16(4), 151–159. doi:10.1007/s11065-006-9012-8

Lewis, S. J. G., Slabosz, A., Robbins, T. W., Barker, R. A., & Owen, A. M. (2005). Dopaminergic basis for deficits in working memory but not attentional set-shifting in Parkinson's disease. Neuropsychologia, 43, 823–830.

Love, B. C., Medin, D. L., & Gureckis, T. M. (2004). SUSTAIN: A network model of category learning. Psychological Review, 111, 309–332.

Maddox, W. T. (1992). Perceptual and decisional separability. In F. G. Ashby (Ed.), Multidimensional models of perception and cognition (pp. 147–180). Hillsdale: Lawrence Erlbaum Associates.

Maddox, W. T., & Ashby, F. G. (1993). Comparing decision bound and exemplar models of categorization. Perception & Psychophysics, 53, 49–70.

Maddox, W. T., & Ashby, F. G. (2004). Dissociating explicit and procedural-learning based systems of perceptual category learning. Behavioural Processes, 66, 309–332.

Maddox, W. T., Ashby, F. G., & Waldron, E. M. (2002). Multiple attention systems in perceptual categorization. Memory & Cognition, 30, 325–339.

Maddox, W. T., Filoteo, J. V., Delis, D. C., & Salmon, D. P. (1996). Visual selective attention deficits in patients with Parkinson's disease: A quantitative model-based approach. Neuropsychology, 10, 197–218.

Minda, J. P., & Smith, J. D. (2001). Prototypes in category learning: The effects of category size, category structure, and stimulus complexity. Journal of Experimental Psychology: Learning, Memory, and Cognition, 27, 775–799.

Monchi, O., Petrides, M., Mejia-Constain, B., & Strafella, A. P. (2007). Cortical activity in Parkinson's disease during executive processing depends on striatal involvement. Brain, 130(Pt 1), 233–244. doi:10.1093/brain/awl326

Nelson, H. E. (1982). National adult reading test (NART) test manual. Windsor: NFER-Nelson.

Nosofsky, R. M. (1986). Attention, similarity, and the identification–categorization relationship. Journal of Experimental Psychology: General, 115, 39–57.

Pasupathy, A., & Miller, E. K. (2005). Different time courses of learning-related activity in the prefrontal cortex and striatum. Nature, 433, 873–876.

Pelli, D. G. (1997). The VideoToolbox software for visual psychophysics: Transforming numbers into movies. Spatial Vision, 10, 437–442.

Pothos, E. M., & Chater, N. (2002). A simplicity principle in unsupervised human categorization. Cognitive Science, 26, 303–343.

Price, A. (2006). Explicit category learning in Parkinson's disease: Deficits related to impaired rule generation and selection processes. Neuropsychology, 20, 249–257.

Price, A., Filoteo, J. V., & Maddox, W. T. (2009). Rule-based category learning in patients with Parkinson's disease. Neuropsychologia, 47, 1213–1226.

Redick, T. S., Shipstead, Z., Harrison, T. L., Hicks, K. L., Fried, D. E., Hambrick, D. Z., . . . Engle, R. W. (2012). No evidence of intelligence improvement after working memory training: A randomized, placebo-controlled study. Journal of Experimental Psychology: General. doi:10.1037/a0029082

Rosenthal, R., Rosnow, R. L., & Rubin, D. B. (2000). Contrasts and effect sizes in behavioral research: A correlational approach. Cambridge: Cambridge University Press.

Sammer, G., Reuter, I., Hullmann, K., Kaps, M., & Vaitl, D. (2006). Training of executive functions in Parkinson's disease. Journal of the Neurological Sciences, 248, 115–119.

Schiehser, D. M., Han, S. D., Lessig, S., Song, D. D., Zizak, V., & Filoteo, J. V. (in press). Predictors of health status in nondepressed and nondemented individuals with Parkinson's disease. Archives of Clinical Neuropsychology.

Schutz, L. E., & Trainor, K. (2007). Evaluation of cognitive rehabilitation as a treatment paradigm. Brain Injury, 21(6), 545–557. doi:10.1080/02699050701426923

Schwarz, G. (1978). Estimating the dimension of a model. The Annals of Statistics, 6(2), 461–464.

Seger, C. A., & Miller, E. K. (2010). Category learning in the brain. Annual Review of Neuroscience, 33, 203–219. doi:10.1146/annurev.neuro.051508.135546

Sinforiani, E., Banchieri, L., Zucchella, C., Pacchetti, C., & Sandrini, G. (2004). Cognitive rehabilitation in Parkinson's disease. Archives of Gerontology and Geriatrics, 9, 387–391.

Slabosz, A., Lewis, S. J. G., Smigasiewicz, K., Szymura, B., Barker, R. A., & Owen, A. M. (2006). The role of learned irrelevance in attentional set-shifting impairments in Parkinson's disease. Neuropsychology, 20, 578–588.

Stroop, J. R. (1935). Studies of interference in serial verbal reactions. Journal of Experimental Psychology, 18, 643–662.

Terrace, H. S. (1964). Wavelength generalization after discrimination with and without errors. Science, 144, 78–80.

Townsend, J. T. (1992). Unified theories and theories that mimic each other's predictions. The Behavioral and Brain Sciences, 15, 458–459.

Wechsler, D. (1997a). Wechsler Adult Intelligence Scale (3rd ed.). San Antonio: Harcourt Assessment.

Wechsler, D. (1997b). Wechsler Memory Scale (3rd ed.). San Antonio: Harcourt Assessment.

Wickens, T. D. (1982). Models for behavior: Stochastic processes in psychology. San Francisco: W. H. Freeman.

Wilson, B. A., Baddeley, A. D., Evans, J., & Shiel, A. (1994). Errorless learning in the rehabilitation of memory impaired people. Neuropsychological Rehabilitation, 4, 307–326.

Woods, S. P., & Tröster, A. I. (2003). Prodromal frontal/executive dysfunction predicts incident dementia in Parkinson's disease. Journal of the International Neuropsychological Society, 9, 17–24.

Author notes

This research was supported in part by a grant from the Maine Institute for Human Genetics and Health. Thanks to the patients and their caregivers for their participation and ongoing commitment to research. Thanks to Dr. Ed Drasby, Dr. William Stamey, and the Maine Parkinson’s Disease Association for their assistance in the recruitment and/or assessment of the patients. Thanks to Dr. Barch, three anonymous reviewers, and Steve Hutchinson for their helpful comments on an earlier draft of the manuscript. Thanks to Lacey Favreau, Rena Lolar, and Monica Zilioli for assistance with data collection. Correspondence concerning this article should be addressed to Shawn W. Ell, Psychology Department, University of Maine, 5742 Little Hall, Room 301, Orono, ME 04469–5742 (email: shawn.ell@umit.maine.edu).

Appendix

To get a more detailed description of how participants categorized the stimuli, a number of different decision bound models (Ashby, 1992a; Maddox & Ashby, 1993) were fit separately to the data for each participant from every block. Decision bound models are derived from general recognition theory (Ashby & Townsend, 1986), a multivariate generalization of signal detection theory (Green & Swets, 1966). It is assumed that, on each trial, the percept can be represented as a point in a multidimensional psychological space and that each participant constructs a decision bound to partition the perceptual space into response regions. The participant determines which region the percept is in and then makes the corresponding response. While this decision strategy is deterministic, decision bound models predict probabilistic responding because of trial-by-trial perceptual and criterial noise (Ashby & Lee, 1993).
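How a deterministic decision rule can still produce probabilistic responding is easiest to see in a small simulation. The minimal Python sketch below assumes a linear bound written as h(x) = w·x + c, where the weight vector w has unit length so that h(x) is the signed distance from the percept to the bound. It also lumps perceptual and criterial noise into a single zero-mean Gaussian term added to h(x) on each trial. Under these assumptions, the predicted probability of an "A" response is Φ(h(x)/σ), and the code checks that closed form against a trial-by-trial simulation. The code takes σ, the noise standard deviation, rather than the variance σ². The function names, the bound, and the example stimulus are hypothetical and are not taken from the study.

```python
import math
import random


def normal_cdf(z: float) -> float:
    """Standard normal CDF, Phi(z), computed from the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def discriminant(x, w, c):
    """h(x) = w . x + c; with unit-length w this is the signed distance to the bound."""
    return sum(wi * xi for wi, xi in zip(w, x)) + c


def p_respond_a(x, w, c, sigma):
    """Closed-form P('A' | x) when N(0, sigma^2) noise is added to h(x) and 'A' means h > 0."""
    return normal_cdf(discriminant(x, w, c) / sigma)


def simulate_p_respond_a(x, w, c, sigma, n_trials=100_000, seed=1):
    """Monte Carlo version: draw fresh noise on each trial, then apply the deterministic rule."""
    rng = random.Random(seed)
    h = discriminant(x, w, c)
    n_a = sum(1 for _ in range(n_trials) if h + rng.gauss(0.0, sigma) > 0.0)
    return n_a / n_trials


# Hypothetical bound and percept in a two-dimensional stimulus space.
w = (0.6, 0.8)     # unit length: 0.6^2 + 0.8^2 = 1
c = -50.0
x = (50.0, 31.25)  # h(x) = 30 + 25 - 50 = 5
sigma = 5.0

print(round(p_respond_a(x, w, c, sigma), 4))  # 0.8413, i.e., Phi(1)
print(simulate_p_respond_a(x, w, c, sigma))   # should be close to 0.8413
```

A percept lying exactly on the bound yields a predicted probability of 0.5 for any σ. Percepts farther from the bound, or a smaller σ, push the prediction toward 0 or 1.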

This appendix briefly describes the decision bound models. For more details, see Ashby (1992a) or Maddox and Ashby (1993). The classification of these models as either rule-based or information-integration models is designed to reflect current theories of how these strategies are learned (e.g., Ashby et al., 1998) and has received considerable empirical support (see Ashby & Maddox, 2005; Maddox & Ashby, 2004, for reviews).

Rule-based models

Unidimensional classifier

The unidimensional classifier (UC) model assumes that the stimulus space is partitioned into two regions by setting a criterion on one of the stimulus dimensions. Two versions of the UC were fit to these data. One version assumes that participants attended selectively to spatial frequency, and the other version assumes participants attended selectively to orientation. The UC has two free parameters: one corresponds to the decision criterion on the relevant dimension, and the other corresponds to the variance of internal (perceptual and criterial) noise (σ²). For the unidimensional task, a special case of the UC, the optimal unidimensional classifier, assumes that participants use the unidimensional decision bound that maximizes accuracy. This special case has one free parameter (σ²).
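The following minimal sketch shows one way the UC's two free parameters might be estimated from a single participant's block. It assumes maximum-likelihood estimation by a coarse grid search; a real fit would more likely use a numerical optimizer. The data set, the grids, and the optimal criterion of 50 are all hypothetical. The sketch also assumes that "A" is the response for values above the criterion, and, as in the previous sketch, it works with the noise standard deviation σ rather than σ². Fitting the same data with the criterion placed on each dimension in turn corresponds to the two versions of the UC described above. Fixing the criterion gives the one-parameter optimal UC.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal CDF, Phi(z)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def uc_neg_log_lik(data, dim, criterion, sigma):
    """Negative log-likelihood of a unidimensional classifier (UC).

    data: list of ((x1, x2), response) pairs, response 'A' or 'B'.
    dim:  index of the attended dimension; the other dimension is ignored.
    'A' is assumed to be the response for values above the criterion.
    """
    nll = 0.0
    for x, resp in data:
        p_a = normal_cdf((x[dim] - criterion) / sigma)
        p_a = min(max(p_a, 1e-10), 1.0 - 1e-10)  # avoid log(0)
        nll -= math.log(p_a if resp == "A" else 1.0 - p_a)
    return nll


def fit_uc(data, dim, criteria, sigmas):
    """Grid-search MLE over the UC's two free parameters (criterion, sigma).

    Returns (negative log-likelihood, criterion, sigma) for the best grid point.
    """
    return min((uc_neg_log_lik(data, dim, c, s), c, s) for c in criteria for s in sigmas)


def fit_optimal_uc(data, dim, optimal_criterion, sigmas):
    """Optimal UC: criterion fixed at the accuracy-maximizing value; only sigma is free."""
    return min((uc_neg_log_lik(data, dim, optimal_criterion, s), optimal_criterion, s)
               for s in sigmas)


# Hypothetical block: responses track dimension 0 with a criterion near 50.
data = [((30, 70), "B"), ((40, 20), "B"), ((48, 60), "A"),
        ((52, 40), "B"), ((60, 80), "A"), ((70, 10), "A")]
criteria = range(30, 71)
sigmas = [0.5 * k for k in range(1, 101)]

for dim in (0, 1):
    print(dim, fit_uc(data, dim, criteria, sigmas))
print(fit_optimal_uc(data, 0, 50, sigmas))
```

In this hypothetical block, the version that attends to dimension 0 should reach a lower negative log-likelihood than the version that attends to dimension 1. The optimal UC is the full UC with its criterion fixed. When the fixed value lies on the same grid, its best negative log-likelihood therefore cannot be lower than the full model's.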

Information-integration models

The linear classifier

The linear classifier (LC) model assumes that a linear decision bound partitions the stimulus space into two regions. This produces an information-integration decision strategy because it requires linear integration of the perceived values on the stimulus dimensions. The LC has three parameters: the slope and intercept of the linear bound, and σ².
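The LC's predicted response probabilities can be sketched in the same way. The snippet below makes three assumptions: the bound is parameterized as x2 = slope·x1 + intercept, "A" is the region above the line, and σ applies to the perpendicular distance from the percept to the bound. It also includes a helper for the Bayesian information criterion (Schwarz, 1978). BIC is one common way to compare models that differ in their number of free parameters, such as the two-parameter UC and the three-parameter LC. This section does not state whether the study used that criterion, and all function names here are hypothetical.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal CDF, Phi(z)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def lc_p_respond_a(x, slope, intercept, sigma):
    """P('A' | x) for a linear bound x2 = slope * x1 + intercept.

    The signed perpendicular distance from the percept to the line is divided
    by sigma; points above the line are (by assumption) on the 'A' side.
    """
    x1, x2 = x
    dist = (x2 - (slope * x1 + intercept)) / math.sqrt(1.0 + slope ** 2)
    return normal_cdf(dist / sigma)


def lc_neg_log_lik(data, slope, intercept, sigma):
    """Negative log-likelihood of ((x1, x2), response) pairs under the LC."""
    nll = 0.0
    for x, resp in data:
        p_a = min(max(lc_p_respond_a(x, slope, intercept, sigma), 1e-10), 1.0 - 1e-10)
        nll -= math.log(p_a if resp == "A" else 1.0 - p_a)
    return nll


def bic(neg_log_lik, n_params, n_trials):
    """BIC = 2 * NLL + k * ln(n); lower values indicate a better fit/complexity trade-off."""
    return 2.0 * neg_log_lik + n_params * math.log(n_trials)


# Hypothetical comparison: with equal fit quality, the LC (k = 3) pays a larger
# complexity penalty than the UC (k = 2).
n = 80
print(bic(40.0, 2, n) < bic(40.0, 3, n))  # True
```

Under BIC, the LC is preferred over a UC only when the extra parameter improves the likelihood enough to offset its additional ln(n) penalty.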

The minimum distance classifier