Abstract

Previous comparative work has suggested that the mechanisms of object categorization differ importantly for birds and primates. However, behavioral and neurobiological differences do not preclude the possibility that at least some of those mechanisms are shared across these evolutionarily distant groups. The present study integrates behavioral, neurobiological, and computational evidence concerning the “general processes” that are involved in object recognition in vertebrates. We start by reviewing work implicating error-driven learning in object categorization by birds and primates, and also consider neurobiological evidence suggesting that the basal ganglia might implement this process. We then turn to work with a computational model showing that principles of visual processing discovered in the primate brain can account for key behavioral findings in object recognition by pigeons, including cases in which pigeons’ behavior differs from that of people. These results provide a proof of concept that the basic principles of visual shape processing are similar across distantly related vertebrate species, thereby offering important insights into the evolution of visual cognition.


Many species must visually recognize and categorize objects to successfully adapt to their environments. Considerable comparative research has been conducted in object recognition, especially involving pigeons and people, whose visual systems have independently evolved from a common ancestor, from which their lineages diverged more than 300 million years ago. The results of behavioral studies have sometimes disclosed striking similarities between these species, and at other times have disclosed notable disparities, especially pointing toward a lower ability of pigeons to recognize transformed versions of familiar objects (for reviews, see Kirkpatrick, 2001; Spetch & Friedman, 2006).

Similarly, the results of neurobiological studies have revealed both similarities and disparities in the structures that underlie visual object processing. The overall organization of the two visual systems is quite similar, with the most notable shared feature being their subdivision into parallel pathways. All amniotes (mammals, birds, and reptiles) have two main visual pathways from retina to telencephalon: the thalamofugal and tectofugal pathways (see Fig. 1; Shimizu & Bowers, 1999; Wylie, Gutierrez-Ibanez, Pakan, & Iwaniuk, 2009). Furthermore, the avian tectofugal pathway and its pallial targets seem to be separated into at least two parallel subdivisions, one specialized for processing motion and the other specialized for processing shape (Fredes, Tapia, Letelier, Marín, & Mpodozis, 2010; Laverghetta & Shimizu, 2003; Nguyen et al., 2004; Shimizu & Bowers, 1999; Wang, Jiang, & Frost, 1993)—similar to the organization of the primate thalamofugal system and its cortical targets (Mishkin, Ungerleider, & Macko, 1983; Ungerleider & Haxby, 1994).

On the other hand, in birds, the tectofugal pathway is more developed and plays a dominant role in visual discrimination tasks, whereas in primates, the thalamofugal pathway plays the dominant part (Shimizu & Bowers, 1999; Wylie et al., 2009). Thus, the visual pathways that are responsible for object recognition and categorization are not homologous in primates and birds. In addition, the optic tectum and other midbrain structures play a central role in visual processing in birds, but not in mammals.

How should these similarities and disparities be interpreted? Is it possible that similar cognitive and perceptual processes underlie object recognition by birds and primates, regardless of these behavioral and neuroanatomical disparities? Given the disparities, can studies in object recognition by birds provide any insights into human visual cognition? The present study integrates behavioral, neurobiological, and computational evidence within an evolutionary framework to offer answers to these three questions. We propose that differences between birds and primates should not be taken as evidence of completely specialized visual systems, that these two taxa likely share common processes of object recognition, and that birds can and should be used by the cognitive neuroscience community as an animal model for the study of some of the processes that are involved in object recognition by people.

To support these three claims, we begin by presenting an overview of contemporary thinking in comparative cognition, proposing that neither behavioral nor neuroanatomical differences between birds and primates should stop us from using these different animals to pursue the study of general processes of object categorization. Then, we review evidence that error-driven associative learning is involved in object categorization learning by birds and primates. Finally, we present new computational evidence that principles of computation discovered in the primate visual system can explain many aspects of avian object perception, including cases in which pigeons’ behavior differs from people’s behavior, thereby suggesting that processes other than categorization learning and visual shape processing might underlie these disparities.

A comparative approach to the study of object categorization

According to a comparative approach, cognition, like any other biological process, should be studied and understood within the context of evolutionary theory (Shettleworth, 2010b). Two different perspectives have arisen in comparative cognition as the consequence of such evolutionary thinking: one focusing on general processes, and a second focusing on adaptive specializations (Riley & Langley, 1993).

According to the general-processes approach (Bitterman, 2000; Macphail & Bolhuis, 2001; Wasserman, 1993), some principles of cognitive processing are both widely distributed across species and highly useful for the solution of various environmental tasks. The main evolutionary argument for this proposal is that even evolutionary diversity implies shared ancestry and that some selective pressures are spread so widely across environments that they affect the selection of characters above the species level (see Papini, 2002).

According to the specialized-adaptations approach (Gallistel, 1999; Shettleworth, 1993, 2000), many of the cognitive processes underlying adaptive behavior are better understood as species-specific mechanisms. The main evolutionary argument for this proposal is that there is so much variability in the ways in which information must be used by different species to adapt to their different environments that it is impossible for general processing mechanisms to solve all of these environmental tasks (Shettleworth, 2000).

Note that, according to these definitions, the issue of whether a process is relatively general or specialized has to do with how widespread the process is across species and with the kind of computational problem that the process might solve. General processes are relatively widespread across species; they are likely to solve computational problems that are shared by the environments to which such species have adapted. Adaptive specializations are relatively specific to one or a few species; they are likely to solve computational problems that are idiosyncratic to the particular environments to which such species have adapted. Importantly, the issue of generality and adaptive specialization, as it is defined here, is different from the issue of whether the mind is composed of domain-general or domain-specific mechanisms (e.g., Atkinson & Wheeler, 2004; Cosmides & Tooby, 1994).

Currently, it is accepted that the study of cognition from an evolutionary standpoint should encompass both general processes and adaptive specializations (Papini, 2002; Riley & Langley, 1993; Shettleworth, 2010b). The complex behavior and cognition that animals exhibit in their natural environments can be broken down into more basic processes, with some of them being quite general and others quite specific. Even if a complex behavior is unique to a single species, the subprocesses that underlie this behavior may be more widespread (Shettleworth, 2000). Thus, the study of complex cognition should be carried out within an atomistic and “bottom-up” approach (de Waal & Ferrari, 2010; Shettleworth, 2010b) focused on identifying and investigating the basic mechanisms that underlie complex cognition and how they may have evolved.

What this consideration means for interpreting studies of object recognition in birds is that this form of cognition is likely to arise from a number of processes, including mechanisms of visual shape extraction, attention, associative learning, perceptual learning, and decision making. Adaptive specializations in any of these systems could be responsible for behavioral differences between species, but it is likely that many other processes are shared, especially if they provide solutions to problems that are general to many visual environments. As for the neuroanatomical differences between birds and primates, two points must be considered. First, many processes other than visual perception are involved in high-level visual cognition. For example, object categorization might also involve the selection of those outputs from the visual system that are useful for the recognition of a class of objects. In the following section, we will propose that a common mechanism, implemented in homologous structures, carries out this selection process in birds and primates.

Second, even when the structures underlying object perception are not homologous in people and birds, it is still possible that they implement similar processes. If what defines a general process is that it is shared across many species and that it solves a common environmental problem, then any nondivergent form of evolutionary change can lead to the evolution of a general process. In contrast, adaptive specializations can be produced only by divergent evolution. Avian and primate visual systems have evolved from a common ancestor under a similar pressure to solve the computational problems that are posed by object recognition (see Rust & Stocker, 2010). Thus, similar mechanisms may have evolved in the two groups as homoplasies, for example through parallel or convergent evolution (see Papini, 2002). If similar mechanisms have evolved for object recognition in birds and primates, regardless of whether they are due to homology or homoplasy, then studying the neurobiological substrates of behavior in birds offers an opportunity to increase our understanding of the same processes in humans. A good example of the successful application of such an approach is the use of song learning in birds as an animal model of human language acquisition (for a recent review, see Bolhuis, Okanoya, & Scharff, 2010). Although most similarities between song learning and language acquisition are likely to be the result of convergent evolution, considerable insight has been gained about the latter by studying the former.

The neuroscience community has focused almost exclusively on the macaque monkey for studying the neuroscience of visual cognition, because this species has greater face validity than birds as an animal model of human vision. From the point of view of comparative cognition, however, birds are just as useful as nonhuman primates for the study of the general processes of object categorization. Only in the study of more specialized processes should nonhuman primates be considered a better animal model than birds. We next review evidence implicating the involvement of general processes in object recognition by primates and birds.

Object categorization and general learning processes

The psychological principles of associative learning have proven to be quite general (Bitterman, 2000; Macphail & Bolhuis, 2001; Siegel & Allan, 1996), regardless of the various tasks and species that have been used for their investigation. Studying object recognition from a general-processes view leads naturally to the question of whether associative-learning processes participate in this form of cognition.

Researchers generally agree that prediction error plays an important part in associative learning. We have recently proposed a model of object categorization that is based on error-driven associative learning (Soto & Wasserman, 2010a). The idea behind this model is simple: Each image is represented as a collection of “elements” that vary in their levels of specificity and invariance with respect to the stimuli that they represent. Some elements tend to be activated by a single image, representing its stimulus-specific properties; other elements tend to be activated by several different images depicting objects from the same category, representing category-specific properties. In any categorization task, both kinds of elements become associated with responses to the extent that they predict reward. Learning follows an error-driven rule, in which the change in the strength of the association between a stimulus element \( s_i \) and an action \( a_j \), or \( \Delta v_{ij} \), is determined by an equation similar to the following:

\[ \Delta v_{ij} = s_i \, a_j \, \delta_j \tag{1} \]

where \( \delta_j \) represents the reward prediction error for action \( a_j \), equal to the difference between the actual reward received, \( r \), and the prediction of reward estimated through the sum of the associative values of all of the active elements:

\[ \delta_j = r - \sum_i s_i \, v_{ij} \tag{2} \]

A consequence of making learning proportional to prediction error is that stimulus-specific and category-specific properties enter into a competition for learning; only those properties that are predictive of rewarded responses are selected to control performance.
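The competition described above can be illustrated with a minimal sketch. It assumes binary element activations, a single rewarded response, a fixed presentation order, and an illustrative learning rate `alpha`; the helper `train_elements` and the three-element coding are hypothetical and are not the published implementation. Two photographs from the same category each activate one stimulus-specific element and one shared category element.

```python
import numpy as np


def train_elements(patterns, rewards, n_trials, alpha=0.1):
    """Error-driven learning of element-to-response weights for a single response.

    patterns: (n_stimuli, n_elements) array, 1.0 where an element is active.
    rewards:  (n_stimuli,) array, reward obtained for the response to each stimulus.
    Stimuli are presented in a fixed cycle (a simplifying assumption).
    """
    v = np.zeros(patterns.shape[1])
    for t in range(n_trials):
        s = patterns[t % len(patterns)]
        r = rewards[t % len(patterns)]
        delta = r - s @ v          # reward minus the summed value of the active elements
        v += alpha * s * delta     # inactive elements (s = 0) do not change
    return v


# Hypothetical coding: columns are [A-specific, B-specific, category].
patterns = np.array([[1.0, 0.0, 1.0],    # image A
                     [0.0, 1.0, 1.0]])   # image B (same category as A)
v = train_elements(patterns, np.array([1.0, 1.0]), n_trials=2000)
```

With these settings the weights converge to about 1/3 for each stimulus-specific element and 2/3 for the category element: because the category element is paired with reward on every trial, it wins a larger share of the associative strength, while the summed prediction for each image still matches the reward of 1.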

The common-elements model can explain many key empirical results in the literature on object categorization by birds (as reviewed in Soto & Wasserman, 2010a). More importantly, the model generates precise predictions about the conditions that should foster or hinder categorization learning. For example, the error-driven learning rule predicts that learning about stimulus-specific properties can block subsequent category learning. In agreement with this prediction, when pigeons (Soto & Wasserman, 2010a) and people (Soto & Wasserman, 2010b) are first trained to solve a discrimination task by memorizing individual objects in photographs and their assigned responses, both species are impaired at detecting a subsequent change in the task, in which all of the presented objects are sorted according to their basic-level categories. This impairment arises because learning of the identification task promotes good performance and low prediction error in the subsequent categorization task, leaving little error to drive learning about category-specific properties.
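The blocking prediction follows directly from the error term. The sketch below is a textbook two-phase blocking design, not a simulation of the actual experiments: as a simplifying assumption, the category element is absent during pretraining, and the element coding, trial counts, `alpha`, and the helper `delta_rule` are all hypothetical.

```python
import numpy as np


def delta_rule(v, patterns, reward, n_trials, alpha=0.1):
    """Return a copy of v trained with the error-driven rule on cycled patterns."""
    v = v.copy()
    for t in range(n_trials):
        s = patterns[t % len(patterns)]
        v += alpha * s * (reward - s @ v)   # change proportional to prediction error
    return v


# Hypothetical coding: element 0 = stimulus-specific, element 1 = category-specific.
specific_only = np.array([[1.0, 0.0]])   # Phase 1 (assumption: category element absent)
whole_image = np.array([[1.0, 1.0]])     # Phase 2: both elements present

# Pretrained group: identification-like Phase 1, then Phase 2.
v_pre = delta_rule(np.zeros(2), specific_only, 1.0, n_trials=500)
v_pre = delta_rule(v_pre, whole_image, 1.0, n_trials=500)

# Control group: Phase 2 only.
v_ctl = delta_rule(np.zeros(2), whole_image, 1.0, n_trials=500)
```

After pretraining, the stimulus-specific element already predicts the reward, so the prediction error in Phase 2 is close to zero and the category element acquires almost no strength; in the control group the two elements share the prediction, each ending near 0.5.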

What we now know about the neurobiology of associative learning accords with the idea that birds and people rely on an evolutionarily conserved learning mechanism to solve object categorization tasks. There is considerable evidence of error-driven learning in the basal ganglia, which are homologous structures in birds and mammals (Reiner, 2002; Reiner, Yamamoto, & Karten, 2005). A diagram of the main structures in the basal ganglia is shown on the left of Fig. 2.

Fig. 2. Main structures of the basal ganglia (left) and a diagram of the cortical and nigral inputs to striatal neurons (right). A solid line represents an excitatory/glutamatergic projection, a dashed line represents an inhibitory/GABAergic projection, and a dotted line represents a modulatory/dopaminergic projection. VTA, ventral tegmental area; SNc, substantia nigra pars compacta; GPe, external globus pallidus; VP, ventral pallidum; GPi, internal globus pallidus; SNr, substantia nigra pars reticulata

The main input site to the basal ganglia is the striatum, which receives projections from most areas of the primate neocortex (Bolam, Hanley, Booth, & Bevan, 2000) and from most areas of the avian pallium (Veenman, Wild, & Reiner, 1995). Neurons in the striatum send projections to the output nuclei of the basal ganglia: the ventral pallidum, the internal segment of the globus pallidus, and the substantia nigra pars reticulata. These output nuclei influence motor control through descending projections to the motor thalamus.

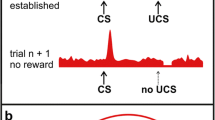

The basal ganglia might learn, through error-driven learning, which responses are appropriate given a pattern of sensory stimulation in visual categorization tasks (see Ashby & Ennis, 2006; Seger, 2008; Shohamy, Myers, Kalanithi, & Gluck, 2008). Work by Schultz and colleagues (Montague, Dayan, & Sejnowski, 1996; Schultz, 1998, 2002; Schultz, Dayan, & Montague, 1997; Waelti, Dickinson, & Schultz, 2001) has shown that the activity of dopaminergic neurons in the substantia nigra pars compacta (SNc) and the ventral tegmental area (VTA) is well described through error-driven learning algorithms. The striatum is highly enriched in dopaminergic terminals arising from VTA/SNc (Durstewitz, Kröner, & Güntürkün, 1999; Nicola, Surmeier, & Malenka, 2000), and it has been shown that the plasticity of cortical–striatal synapses depends on the presence of dopamine in the synapse (Centonze, Picconi, Gubellini, Bernardi, & Calabresi, 2001; Reynolds & Wickens, 2002). These observations suggest that cortical–striatal synapses may mediate error-driven learning of stimulus–response associations (Schultz, 1998, 2002), such as those proposed by the three-term learning algorithm in our model (see Eq. 1). Object classification learning in the striatum would require activity of the presynaptic visual neurons (\( s_i \)), activity of the postsynaptic striatal neurons (\( a_j \)), and the presence of a dopaminergic signal coming from VTA/SNc (\( \delta \); see the right panel of Fig. 2).
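An idealized version of the three-factor rule described above (presynaptic activity × postsynaptic activity × dopaminergic signal) can be written as a weight update over a small matrix of synapses. This is a sketch under simplifying assumptions: binary activities, a single scalar dopamine signal, and an arbitrary learning rate; `three_factor_update` is a hypothetical name.

```python
import numpy as np


def three_factor_update(v, pre, post, dopamine, alpha=0.1):
    """Return updated weights v (n_pre x n_post) under an idealized three-factor rule:
    change_ij = alpha * pre_i * post_j * dopamine."""
    return v + alpha * np.outer(pre, post) * dopamine


v = np.zeros((3, 2))                  # 3 presynaptic (visual) units x 2 postsynaptic (striatal) units
pre = np.array([1.0, 0.0, 1.0])       # visual units 0 and 2 are active
post = np.array([1.0, 0.0])           # only striatal unit 0 is active
v_reward = three_factor_update(v, pre, post, dopamine=0.5)    # positive error signal
v_none = three_factor_update(v, pre, post, dopamine=0.0)      # no dopamine signal
v_omit = three_factor_update(v, pre, post, dopamine=-0.5)     # negative error signal
```

Only synapses whose presynaptic and postsynaptic units are both active change, and only when a dopaminergic signal is present; its sign determines whether the synapse is strengthened or weakened.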

Most of the reviewed research has been done with mammals. But the avian basal ganglia may also play a role in visual discrimination learning (Izawa, Zachar, Aoki, Koga, & Matsushima, 2002) and in the prediction of reward from visual cues (Izawa, Aoki, & Matsushima, 2005; Yanagihara, Izawa, Koga, & Matsushima, 2001).

Although some researchers (Freedman, Riesenhuber, Poggio, & Miller, 2003; Jiang et al., 2007; Serre, Oliva, & Poggio, 2007) have suggested that the critical site for category learning in primates might be the prefrontal cortex (PFC), recent evidence has suggested that lateral PFC, the PFC area that has been most strongly related to categorization learning, is not necessary for learning and generalization in some object categorization tasks (Minamimoto, Saunders, & Richmond, 2010). The basal ganglia might be a more important site for categorization learning in primates than PFC, especially for learning processes that are shared with other species. An interesting possibility is that learning of abstract category representations in PFC is trained by the output of the basal ganglia (Antzoulatos & Miller, 2011).

In summary, evidence indicates that general principles of associative learning can explain object categorization learning in birds and people. We suggest that the basal ganglia may be a candidate site to implement such general processes. We now turn to the question of whether there might in addition be general visual processes involved in object categorization.

General visual processes? Hierarchical shape processing in the primate and avian brains

The ventral stream of the primate visual cortex is specialized for shape processing and object recognition (Mishkin et al., 1983; Ungerleider & Haxby, 1994). The ventral stream comprises several sequentially organized areas, starting at the primary visual cortex (V1), going to extrastriate areas V2 and V4, and then to the inferior temporal lobe (IT).

Several observations have indicated that the ventral pathway is hierarchically organized (Felleman & Van Essen, 1991; Grill-Spector & Malach, 2004). Areas along the pathway implement visual processing over information of increasing complexity, with each level integrating information from the previous one, discarding irrelevant information, and generating a more complex and abstract representation that is passed to the next level for further processing.

Hubel and Wiesel (1962, 1968) first proposed a hierarchical functional architecture to explain the neural response properties of cells in primary visual cortex. This model has received substantial empirical support over the years (Ferster & Miller, 2000; Martinez & Alonso, 2003; Reid & Usrey, 2004). Furthermore, it has been extended to explain the response properties of cells across the whole ventral pathway, including V2 (Anzai, Peng, & Van Essen, 2007; Boynton & Hegde, 2004), V4 (Cadieu et al., 2007), and IT neurons (Riesenhuber & Poggio, 1999). Consequently, a family of primate object recognition models has arisen (e.g., Fukushima, 1980; Perrett & Oram, 1993; Riesenhuber & Poggio, 1999, 2000; Rolls & Milward, 2000; Serre, 2006; Serre et al., 2005; Serre, Oliva, & Poggio, 2007; Wersing & Körner, 2003), each proposing that processing in the ventral stream is hierarchical and feedforward, involving an increase in the complexity of features to which neurons are selective and in the invariance of their responses to several variables from early to late stages of processing.

Although birds and primates use nonhomologous structures for visual pattern discriminations, there is direct evidence of hierarchical feedforward visual processing in the avian tectofugal pathway. The receptive fields of many neurons in the tectum have a center–surround structure similar to that of neurons in the mammalian lateral geniculate nucleus (Frost, Scilley, & Wong, 1981; Gu, Wang, & Wang, 2000; Jassik-Gerschenfeld & Guichard, 1972). Li, Xiao, and Wang (2007) found that the receptive fields of neurons in the nucleus isthmus, a structure that has reciprocal connections with the tectum, are constructed by feedforward convergence of tectal receptive fields, as proposed by the hierarchical model of Hubel and Wiesel (1962, 1968). Also in accord with hierarchical processing, there is a large increase in receptive field size from the optic tectum to the entopallium (Engelage & Bischof, 1996).

Thus, the main mechanism that is thought to be implicated in visual shape processing in the primate brain also seems to be at work in the avian tectofugal pathway. However, as noted earlier, comparative studies have found important behavioral disparities between people and pigeons in object recognition tasks. Here, we argue that these disparities arise from specialization of processes other than categorization learning and visual shape processing. Our argument is that it is plausible and in accord with the available behavioral and neurophysiological data that the avian tectofugal pathway, like the primate ventral stream, implements hierarchical and feedforward processing of shape information.

To support this claim, we present the results of several simulations with a “general-processes” model of object categorization in pigeons, which implements both the principles of visual processing discovered in studies of the primate ventral stream and the principles of error-driven categorization learning described earlier. The results of these simulations provide a proof of concept for the hypothesis that the basic principles of visual shape processing are alike in primates and birds, despite the known behavioral disparities.

Explaining avian object perception through principles of visual computation in the primate brain

Model

Visual shape processing in the tectofugal pathway

Here, we use a hierarchical model of object recognition in primate cortex recently proposed by Serre and colleagues (Serre, 2006; Serre et al., 2005; Serre et al., 2007), which extends previous work by Riesenhuber and Poggio (1999, 2000). This model has several advantages for our purposes.

First, it was developed with the goal of more faithfully reflecting the anatomy and physiology of primate visual cortex than had previous models. Second, the two main operations deployed by the model (see below) can be implemented by biologically plausible neural microcircuits (Carandini & Heeger, 1994; Kouh & Poggio, 2008; Yu, Giese, & Poggio, 2002); evidence suggests that these operations are used in the visual cortex (Gawne & Martin, 2002; Lampl, Ferster, Poggio, & Riesenhuber, 2004). Third, the model includes a dictionary of about \( 2.3 \times 10^{7} \) shape feature detectors, whose selectivities have been learned through exposure to natural images (see Serre, 2006). Finally, this model can explain empirical results at several levels of analysis, including behavioral data (Serre et al., 2007). This explanatory success is important, because the present work will focus on behavioral studies of avian object recognition.

Despite these advantages, we caution that this is neither a definitive model of the primate ventral pathway nor a realistic model of the avian tectofugal pathway. Some details of the mechanisms proposed in the model are debatable and may be incorrect (see Kayaert, Biederman, Op de Beeck, & Vogels, 2005; Kriegeskorte, 2009; Yamane, Tsunoda, Matsumoto, Phillips, & Tanifuji, 2006). However, we do believe that the model captures some of the processes that are implemented by the primate ventral pathway. Specifically, the model implements feedforward, hierarchical processing of visual shape information, which is the process that we wish to test here. If feedforward and hierarchical processing is important for extracting shape information in the avian tectofugal pathway, the model should be able to explain basic behavioral findings in avian object perception.

We will only sketch the model here. More detailed descriptions can be found in the original articles by Serre and colleagues (Serre, 2006; Serre et al., 2005; Serre et al., 2007) and in the supplementary materials. The model (Fig. 3) is organized into eight layers of two types: layers with “simple” units (S) and layers with “complex” units (C). The units in each layer perform different computations over their inputs.

Simple units perform a feature selection operation, responding to higher-level features that result from a combination of inputs from units in the prior layer tuned to various lower-level features. Each simple unit receives inputs from \( n_{S_k} \) units in the prior layer, and it has a preferred pattern of stimulation that is represented by the vector of weights \( \mathbf{w} \). Given a vector \( \mathbf{x} \) representing the actual input received by the unit, its response \( y \) is given by a Gaussian-like tuning operation:

\[ y = \exp\left( -\frac{1}{2\sigma^2} \sum_{j=1}^{n_{S_k}} \left( w_j - x_j \right)^2 \right) \tag{3} \]

where \( \sigma \) controls the sharpness of the tuning function. Thus, the response of a given unit is a function of the mismatch between the input to the unit and its preferred feature. The weights for units in the first layer of simple units (S1) take the form of a Gabor function, which has been shown to be a good model for the response properties of V1 simple neurons (Daugman, 1985; Jones & Palmer, 1987). A battery of such Gabor functions is used to directly filter the image that is presented to the model. The weights \( w_j \) for units in subsequent simple layers were learned from exposure to patches of natural images (see Serre, 2006, and the supplementary materials).
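As a rough illustration of the two kinds of simple-unit computation described above, the sketch below builds a Gabor filter of the kind used for S1 units and a Gaussian-like tuning function for higher S units. The filter size, wavelength, `sigma`, and `gamma` values, and the normalized dot product used for S1 filtering, are illustrative assumptions rather than the parameters of Serre and colleagues' model; the function names are hypothetical.

```python
import numpy as np


def gabor(size=11, wavelength=5.6, theta=0.0, sigma=4.5, gamma=0.3):
    """Zero-mean, unit-norm Gabor filter (illustrative parameter values)."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    xr = x * np.cos(theta) + y * np.sin(theta)     # rotate coordinates to orientation theta
    yr = -x * np.sin(theta) + y * np.cos(theta)
    g = np.exp(-(xr**2 + (gamma * yr)**2) / (2 * sigma**2)) * np.cos(2 * np.pi * xr / wavelength)
    g -= g.mean()                                  # no response to uniform luminance
    return g / np.linalg.norm(g)


def s1_response(patch, filt):
    """S1 unit: filter an image patch with a Gabor (normalized dot product)."""
    return float(np.sum(patch * filt) / (np.linalg.norm(patch) + 1e-12))


def s_unit(x, w, sigma=1.0):
    """Higher S unit: Gaussian-like tuning around the preferred pattern w."""
    x, w = np.asarray(x, float), np.asarray(w, float)
    return float(np.exp(-np.sum((w - x) ** 2) / (2 * sigma**2)))


vertical = gabor(theta=0.0)
horizontal = gabor(theta=np.pi / 2)
w = np.array([0.2, 0.8, 0.5])        # hypothetical preferred pattern of a higher S unit
```

An S1 unit responds most strongly to a patch matching its orientation, and a higher S unit gives its maximal response of 1 when its input equals its preferred pattern, falling off as the mismatch grows.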

Complex units carry out a pooling operation, combining inputs from \( n_{C_k} \) units in the prior layer tuned to the same feature over a range of positions and scales. The response \( y \) of the complex unit is equal to the maximum value among its inputs:

\[ y = \max_{j = 1, \ldots, n_{C_k}} x_j \tag{4} \]

For each simulation, 1,000 units were sampled randomly from each of the four C layers, and their responses were used as input to the classification learning system. Note that, although the pool of feature detectors had a size of 4,000 units, only a very small proportion of these detectors were strongly activated by any image (i.e., a sparse representation), because detectors that were tuned to simple and common features (e.g., C1) had high spatial and scale specificity, whereas detectors exhibiting spatial and scale invariance (e.g., C3) were sensitive to complex feature configurations that were present in only a limited number of objects.
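The pooling operation and the random sampling of units can be sketched as follows. The sketch assumes that S-unit outputs for one feature arrive as a list of 2-D response maps, one per scale; the layer sizes are made up for illustration (only the figure of 1,000 sampled units per layer comes from the text), the labels other than C1 and C3 are assumptions, and both helper names are hypothetical.

```python
import numpy as np


def c_unit_response(s_maps):
    """Complex-unit response: the maximum over S-unit responses to one feature,
    taken across positions (entries of each map) and scales (the list of maps)."""
    return max(float(np.max(m)) for m in s_maps)


def sample_c_units(layer_sizes, n_per_layer=1000, seed=0):
    """Randomly pick n_per_layer unit indices from each C layer, without replacement."""
    rng = np.random.default_rng(seed)
    return {name: rng.choice(n_units, size=n_per_layer, replace=False)
            for name, n_units in layer_sizes.items()}


# The same feature at two different positions yields the same C-unit response.
fine_scale = np.zeros((8, 8))
fine_scale[2, 3] = 0.9
shifted = np.zeros((8, 8))
shifted[6, 1] = 0.9
coarse_scale = np.zeros((4, 4))
r_original = c_unit_response([fine_scale, coarse_scale])
r_shifted = c_unit_response([shifted, coarse_scale])

# Hypothetical layer sizes; four layers x 1,000 sampled units = 4,000 inputs.
layer_sizes = {"C1": 20000, "C2": 8000, "C3": 4000, "C2b": 6000}
sampled = sample_c_units(layer_sizes)
```

Because the max operation discards where (and at which scale) the preferred feature occurred, the C-unit response is unchanged by the shift, which is the source of position and scale invariance in the hierarchy.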

Classification learning in the basal ganglia

For classification learning, complex units across the hierarchy project directly to a reinforcement learning stage, which is assumed to be implemented in the basal ganglia. This assumption accords with evidence indicating the existence of projections to the striatum from all areas in the pallium (Veenman et al., 1995).

The classification learning system that was used here is a rendition of our previous model (Soto & Wasserman, 2010a) in terms of a reinforcement learning architecture. Several of the functions that are ascribed to the basal ganglia, together with the role of prediction error in each of these functions, can be captured through reinforcement learning models (Chakravarthy, Joseph, & Bapi, 2010).

Here, we model animal behavior on a trial-by-trial basis. Each trial consists of presentation of a visual stimulus, selection of an action, and updating of weights depending on the reward received. At the beginning of a trial, the stimulus is processed by the visual system, which gives as its output a distributed representation in terms of shape features (the responses of selected complex units, as described earlier). The response of the \( i \)th feature detector to the stimulus is represented as \( x_i \), and the weight of the association between \( x_i \) and action \( j \) is represented as \( v_{ij} \). Each weight is multiplied by the response of the corresponding feature detector to determine the input to the response selection system. As in our prior model (Soto & Wasserman, 2010a), these inputs sum their influence to determine the value of performing action \( j \) given the presentation of stimulus \( s \), or \( Q_{sj} \):

\[ Q_{sj} = \sum_i x_i \, v_{ij} \tag{5} \]

Thus, action values can be computed by the activity of striatal neurons that additively combine the inputs from multiple pallial neurons. Then, these values can be used for action selection in the basal ganglia. In our model, once the value of each action is computed, it is transformed into a response probability through a softmax choice rule:

\[ P(a_j \mid s) = \frac{\exp\left( \beta Q_{sj} \right)}{\sum_k \exp\left( \beta Q_{sk} \right)} \tag{6} \]

where the parameter β (set to 3.0 in all of our simulations, as in our prior modeling work) represents the decisiveness of the choice rule, with higher values leading to stronger preferences for the choice with the larger incentive value. The probabilities that are obtained from Eq. 6 are used to probabilistically sample an action on a particular trial, in what is known as Boltzmann exploration (Kaelbling, Littman, & Moore, 1996).
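The value computation and softmax choice rule described above can be sketched in a few lines of NumPy. The feature responses and weights are made-up numbers, and `action_values`, `softmax_policy`, and `choose_action` are hypothetical helper names; only β = 3.0 comes from the text.

```python
import numpy as np


def action_values(x, v):
    """Q_sj = sum_i x_i * v_ij for every action j.
    x: (n_features,) feature-detector responses; v: (n_features, n_actions) weights."""
    return x @ v


def softmax_policy(q, beta=3.0):
    """Softmax choice probabilities; subtracting max(q) avoids overflow and leaves them unchanged."""
    z = beta * (np.asarray(q, float) - np.max(q))
    p = np.exp(z)
    return p / p.sum()


def choose_action(q, beta=3.0, rng=None):
    """Boltzmann exploration: sample an action index with its softmax probability."""
    rng = np.random.default_rng() if rng is None else rng
    p = softmax_policy(q, beta)
    return int(rng.choice(len(p), p=p))


x = np.array([0.9, 0.0, 0.3])              # responses of three hypothetical feature detectors
v = np.array([[0.5, 0.0],
              [0.2, 0.7],
              [0.0, 0.1]])                  # weights from each detector to two actions
q = action_values(x, v)                     # approximately [0.45, 0.03]
p = softmax_policy(q)
a = choose_action(q, rng=np.random.default_rng(1))
```

Subtracting the largest Q value before exponentiating is a standard numerical safeguard; it does not change the probabilities because the common factor cancels in the ratio.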

Once an action is selected, the animal receives either a reward (r = 1) if the choice is correct or no reward (r = 0) if the choice is incorrect. This information is used to compute an action-specific prediction error, which is the discrepancy between the actual reward received and the value that is computed for the selected action \( j \):

\[ \delta_j = r - Q_{sj} \tag{7} \]

It has recently been found that this type of error is indeed signaled by dopaminergic midbrain neurons (Morris, Nevet, Arkadir, Vaadia, & Bergman, 2006; Roesch, Calu, & Schoenbaum, 2007). Given that dopaminergic neurons send projections to striatal neurons, the error signal that they carry could be used for error-driven learning at the pallial–striatal synapses to which they project. This process is captured in our model by updating the weights \( v_{ij} \) of the selected action, and thereby its Q value, according to an error-driven learning rule:

\[ \Delta v_{ij} = \alpha \, x_i \, \delta_j \tag{8} \]

where α is a learning rate parameter that was set to 0.001 in all of our simulations. This learning rate was chosen because it allowed for fast learning of the tasks, with relatively stable trial-by-trial performance at the end of training.
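Putting the pieces together, one simulated trial (stimulus, Boltzmann choice, reward, action-specific prediction error, and weight update for the chosen action) might look like the sketch below. It uses the values β = 3.0 and α = 0.001 given in the text, but the toy stimuli (two non-overlapping blocks of 200 active features) and the `run_trial` helper are assumptions for illustration.

```python
import numpy as np


def run_trial(x, correct_action, v, alpha=0.001, beta=3.0, rng=None):
    """One trial: compute Q, sample an action, compute the error, update v in place.
    Returns (action, reward, delta)."""
    rng = np.random.default_rng() if rng is None else rng
    q = x @ v                                  # Q_sj for every action j
    p = np.exp(beta * (q - q.max()))
    p /= p.sum()                               # softmax choice probabilities
    a = int(rng.choice(len(q), p=p))           # Boltzmann exploration
    r = 1.0 if a == correct_action else 0.0    # reward only for the correct choice
    delta = r - q[a]                           # action-specific prediction error
    v[:, a] += alpha * x * delta               # only the chosen action's weights change
    return a, r, delta


# Toy task (assumption): stimulus 0 -> action 0, stimulus 1 -> action 1.
rng = np.random.default_rng(0)
stimuli = [np.r_[np.ones(200), np.zeros(200)],
           np.r_[np.zeros(200), np.ones(200)]]
v = np.zeros((400, 2))
for t in range(2000):
    s = t % 2
    run_trial(stimuli[s], s, v, rng=rng)
q_final = [stimuli[s] @ v for s in range(2)]
```

In this toy task the value of each correct response approaches the reward of 1, while an incorrect action that starts at a value of 0 yields zero prediction error when chosen and therefore keeps a value of 0.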

We did not attempt to fit the free parameters of the model to the data or to perform a systematic search of the parameter space to find those values that would yield the most accurate predictions. Rather, we ran all of the simulations with the model parameters fixed to specific values, with the aim of documenting the model’s ability to qualitatively reproduce the behavioral patterns that were observed in the experimental data, even with the constraint of using the same parameters in every simulation.

For each simulation, we present the average of 10 model runs. In general, the results varied little across runs of the model (standard errors for all of the simulations can be found in Supplementary Table 1).

Ideal-observer model

An important problem in vision research is determining whether the effect of a particular stimulus manipulation on performance could be due to stimulus and task factors rather than to any processing carried out by a visual system. In our case, if the behavior shown by pigeons in a particular task could be explained as arising from the similarities among the stimuli or from the structure of the experimental task, then the general-processes model's ability to reproduce that behavioral pattern would say little about whether it is an appropriate model of the avian visual system. To provide a benchmark against which to test the performance of the general-processes model, we also simulated each experiment using an ideal-observer model (Tjan, Braje, Legge, & Kersten, 1995). The behavior of this ideal observer represents the optimal performance in each experimental task for an agent that relies on the pixel-by-pixel similarities among the images to solve it. The ideal-observer model allowed us to determine which aspects of an experimental outcome could be explained as resulting directly from task and stimulus demands rather than from any visual processing carried out by the pigeons. A more detailed description of this model can be found in the supplementary materials.
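
The ideal observer actually used is described in the supplementary materials and is not reproduced here. As an illustration of the general idea only, the sketch below implements a pixel-based observer in the spirit of Tjan et al. (1995) under stated assumptions: a test image is treated as a training image corrupted by independent Gaussian pixel noise with a hypothetical standard deviation sigma, categories have equal prior probability, and each category is represented by its training images. The posterior for each category is then proportional to the average likelihood of the test image under that category's templates.

```python
import numpy as np

def ideal_observer_posteriors(test_image, templates_by_class, sigma=1.0):
    """Posterior over classes for a pixel-based observer that assumes i.i.d. Gaussian
    pixel noise (standard deviation sigma) and equal class priors."""
    x = np.asarray(test_image, dtype=float).ravel()
    log_evidence = []
    for templates in templates_by_class:
        t = np.asarray(templates, dtype=float).reshape(len(templates), -1)
        log_lik = -((t - x) ** 2).sum(axis=1) / (2 * sigma**2)
        m = log_lik.max()
        # Log of the mean likelihood over this class's templates (log-sum-exp for stability).
        log_evidence.append(m + np.log(np.exp(log_lik - m).mean()))
    log_evidence = np.array(log_evidence)
    post = np.exp(log_evidence - log_evidence.max())
    return post / post.sum()

rng = np.random.default_rng(1)
object_a = rng.random((2, 8, 8))                     # two hypothetical training views of object A
object_b = rng.random((2, 8, 8))                     # two hypothetical training views of object B
probe = object_a[0] + rng.normal(0.0, 0.1, (8, 8))   # a slightly degraded view of A
post = ideal_observer_posteriors(probe, [object_a, object_b], sigma=1.0)
print(post.round(3))
```

Under these assumptions, an observer that picks the class with the highest posterior makes the fewest errors; increasing sigma flattens the posteriors and makes the observer less certain.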

Simulations

Here, we present the results of five simulations with our general-processes model. Each simulated experiment represents a sample from a different line of research that has received considerable attention in the pigeon literature: view invariance, image properties that control object recognition, the role of spatial information in object recognition, invariance across changes in surface features, and size invariance. These examples have been chosen either because pigeons behave differently from humans in that experiment or because of the perceived importance of the experiment to characterize object recognition in birds.

Generalization to stimuli rotated in depth

Several experiments have tested whether pigeons that have seen an object from a single viewpoint can recognize it when it is later rotated in depth. These experiments have uniformly found that pigeons do not show one-shot viewpoint invariance (Cerella, 1977; Lumsden, 1977; Peissig, Young, Wasserman, & Biederman, 2000; Spetch, Friedman, & Reid, 2001; Wasserman et al., 1996). Yet pigeons do show above-chance generalization of performance to novel views of the training object after training with just one view, and they exhibit generalization behavior that is closer to true viewpoint invariance as the number of training views is increased (Peissig, Wasserman, Young, & Biederman, 2002; Peissig et al., 2000; Wasserman et al., 1996).

As is discussed by Kirkpatrick (2001), these results argue against structural description theories (e.g., Biederman, 1987; Hummel & Stankiewicz, 1998) as a full account of pigeons’ recognition of objects across viewpoints. Specifically, structural description theories assume that the identity of some simple volumes, called geons, should be recoverable by the visual system from almost any viewpoint. Contrary to this assumption, several studies in which pigeons have been trained to discriminate geons have found strongly viewpoint-dependent behavior (Peissig et al., 2002; Peissig et al., 2000; Spetch et al., 2001). These results accord better with image-based theories (e.g., Poggio & Edelman, 1990; Tarr & Pinker, 1989; Ullman, 1989), which predict both a drop in accuracy to novel views of the training object and a reduction of this generalization decrement as the number of training views is increased.

In a study conducted by Spetch et al. (2001), pigeons were trained to discriminate two objects in four conditions. In the zero-part condition, both objects were composed of five elongated cylinders that were joined at their ends at different angles (i.e., “paperclip” objects). All of the other conditions involved the replacement of one, three, or all five cylinders in each object by distinctive geons (see Fig. 4a); these were the one-, three-, and five-part conditions, respectively. The birds were trained with two views (0° and 90° of rotation) of each object, and they were tested with six different views (0°, 30°, 90°, 135°, 160°, and 225° of rotation). As discussed by Tarr, Bülthoff, Zabinski, and Blanz (1997), image-based theories predict viewpoint-invariant recognition when a diagnostic feature is available, as in the one-part condition, but not when a diagnostic feature is absent, as in all of the other conditions. Importantly, this pattern is exactly the result that was found with people. Structural description theories predict viewpoint-invariant recognition whenever an object can be easily decomposed into simple volumes that are arranged in a particular way, as in all of the conditions except the zero-part condition.

(a) Examples of the stimuli used by Spetch et al. (2001) to test the effect of adding distinctive parts to an object on the object’s recognition from novel viewpoints. (b) Pigeon data (top) and results simulated with the general-processes model (middle) and an ideal-observer model (bottom) for the experiment carried out by Spetch et al., testing the effect of adding distinctive parts to an object on its recognition from novel viewpoints

The experimental results are shown in the top panel of Fig. 4b. It can be seen that pigeons’ accuracy with novel views of the objects (30°, 135°, 160°, and 225° of rotation) progressively decreased as a function of distance from the training views in all four conditions. This result cannot be explained by either structural description models or image-based models, and it is different from what was found with human participants, who experienced the same experimental procedures. Is it possible that a model based on mechanisms known to function in primates could explain the pigeons’ results, despite their being different from humans’ results?

The answer is “yes,” as indicated by the results of a simulation with the general-processes model that is shown in the middle panel of Fig. 4b. Just as was observed for the birds, the model yielded essentially the same pattern of results in all of the conditions: high levels of performance at the training views, and a decrement in accuracy as a function of distance from the training views. The model did not yield generalization levels quite as high as those observed for the pigeons. Nor did it reproduce the ordering of the conditions within each test orientation; for example, in the pigeon data, performance in the five-part condition was higher than in the other conditions at 30° and 225° of rotation. The authors did not report statistical tests for these effects, so it is unknown whether any of them are reliable. The most important effect reported by Spetch et al. (2001), a decrement in performance as a function of rotational distance regardless of condition, is reproduced by the general-processes model. Thus, a model that is based on mechanisms that are known to function in primates behaved more similarly to pigeons than to people in this experiment.

The results of a simulation with the ideal observer, shown in the bottom panel of Fig. 4b, suggest that most aspects of the experimental results can arise from the pattern of low-level similarities in the stimuli. Other aspects of the experimental results, however, argue against this explanation. For example, the ideal observer cannot explain why pigeons showed above-chance performance with the 30° and 135° testing views in the zero-part condition. Thus, good performance with these testing views cannot be due to physical similarity between the training and testing stimuli.

More recent results have also argued against an explanation of object recognition in birds in terms of an image-based model involving either mental rotation (Tarr & Pinker, 1989) or interpolation between viewpoints in memory (Bülthoff & Edelman, 1992). Peissig et al. (2002) trained pigeons to discriminate grayscale images of four geons. They found that training with multiple views of an object along one axis of rotation enhanced generalization to novel views of the object along both the training axis and an orthogonal axis. The results from their most important test, involving novel views of the objects rotated along an orthogonal axis, are plotted in the left panel of Fig. 5. The radial coordinate in the polar plot represents the proportion of correct responses, whereas the angular coordinate represents the degree of rotation from the training image (assigned to 0° of rotation). The chance level for this test was .25 for correct responses, which is indicated by the small circular lines in the radial charts displayed in Fig. 5. It can be seen that training with multiple views of the objects enhanced recognition at all of the novel viewpoints. The pigeons could not have used direct interpolation between the training views to aid performance with novel views along the orthogonal axis. Also, the amount of mental rotation that was required to recognize novel views along the orthogonal axis from the nearest training view was the same for pigeons that were given either one view or five views of the objects along the training axis. Thus, this pattern of results cannot be captured by several traditional cognitive models of object recognition. Although data from adult humans are not available for this experimental design, human infants have exhibited the same pattern of results shown by the pigeons in this study (Mash et al., 2007).

Pigeon data (left) and results simulated with the general-processes model (middle) and an ideal-observer model (right) for the experiment performed by Peissig et al. (2002), testing the effect of training with views of an object rotated in depth along one axis on its recognition when rotated along an orthogonal axis. The radial coordinate in each polar plot represents the proportion of correct responses, whereas the angular coordinate represents the degree of rotation from the training image, which is assigned to 0° of rotation. The chance level for this test was .25 for correct responses and is indicated by the small circular line in each of the radial charts

The results of a simulation with the general-processes model are depicted in the middle panel of Fig. 5. Although the model again does not achieve the high levels of generalization that were shown by the pigeons, it does successfully reproduce the consistent increase in generalization performance after training with multiple views. This increase likely occurs because properties of objects that are invariant across training views are also present in many novel test views, regardless of the axis around which the object is rotated to produce them. Increasing the number of training views increases the likelihood that test and training objects share properties; it also increases behavioral control by properties that are common to several training images, because these properties are presented and associated with the correct responses more often than properties that are idiosyncratic to each image.
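
This explanation can be illustrated with a deliberately simplified, hypothetical toy that is not the general-processes model itself: each object has a pool of 40 binary features, each view shows a random 10 of them, and the delta rule associates every training view with the correct response. The pool size, view size, learning rate, and number of epochs are illustrative choices.

```python
import numpy as np

def make_view(rng, pool_size=40, n_active=10):
    """Hypothetical view: a random subset of an object's feature pool is visible."""
    x = np.zeros(pool_size)
    x[rng.choice(pool_size, size=n_active, replace=False)] = 1.0
    return x

def train_delta_rule(views, alpha=0.05, epochs=200):
    """Associate every training view with the correct response (target 1) using the delta rule."""
    w = np.zeros(views.shape[1])
    for _ in range(epochs):
        for x in views:
            w += alpha * (1.0 - x @ w) * x
    return w

rng = np.random.default_rng(0)
novel_views = np.array([make_view(rng) for _ in range(500)])
mean_output = {}
for n_train in (1, 5):
    train_views = np.array([make_view(rng) for _ in range(n_train)])
    w = train_delta_rule(train_views)
    mean_output[n_train] = float((novel_views @ w).mean())
    print(f"{n_train} training view(s): mean response strength to novel views = {mean_output[n_train]:.3f}")
```

With one training view, the response to a novel view is proportional to its chance overlap with the trained features (about 10/40 = 0.25 of the trained value). With five views, more of the pool has been associated with the response, and features seen in several views carry more strength, so novel views evoke a stronger response even though no test view was trained.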

The results of a simulation with the ideal observer, shown in the right panel of Fig. 5, suggest that low-level stimulus similarity may have boosted generalization performance in the multiple-views group to some of the novel views, but not to all of them. Specifically, performance levels were actually lower in the five-views condition than in the one-view condition at 72°, 108°, 144°, and –108° of rotation.

In summary, although the general-processes model does not promote the same high levels of generalization to novel object views that were exhibited by the birds, it does reproduce the qualitative patterns of results that were observed in studies of rotational invariance.

Bias to rely on nonaccidental properties of images for object recognition

Structural description theories of object recognition (e.g., Biederman, 1987; Hummel & Stankiewicz, 1998) propose that humans extract the three-dimensional structure of objects from nonaccidental edge properties in retinal images, such as parallelism and cotermination. Indeed, empirical results indicate that nonaccidental properties are particularly important features for object recognition by primates and birds (Biederman & Bar, 1999; Gibson, Lazareva, Gosselin, Schyns, & Wasserman, 2007; Kayaert, Biederman, & Vogels, 2003; Lazareva, Wasserman, & Biederman, 2008; Vogels, Biederman, Bar, & Lorincz, 2001).

Gibson et al. (2007) trained pigeons and people to discriminate four geons that were shown at a single viewpoint. Once high performance levels were reached, the researchers used the Bubbles procedure (Gosselin & Schyns, 2001) to determine which properties of the images were used for recognition. With this procedure, the information in images is partially revealed to an observer through a number of randomly located Gaussian apertures, or “bubbles,” and the observer’s response is used to determine which areas in an image are used to correctly recognize an object. Gibson et al. defined regions of interest in their images containing information about edges, shading, and edge cotermination. Figure 6a shows some examples of these different types of regions in one of the geons. This manipulation made it possible to determine the extent to which the information used by the subjects, as revealed through the Bubbles procedure, was contained in each region of interest. The left section of Fig. 6b shows the reanalyzed pigeon data from the Gibson et al. experiment. Pigeons relied on cotermination information more strongly than on other edge properties, and on those two edge properties much more strongly than on shading. The human data (not included in Fig. 6b) showed the same qualitative pattern of results.
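
The logic of the Bubbles procedure (Gosselin & Schyns, 2001) can be sketched as below: random Gaussian apertures reveal parts of an image, and a classification image contrasts where the apertures fell on correct versus incorrect trials. The aperture count, aperture width, image size, region of interest, and the simulated observer are all hypothetical choices for illustration; Gibson et al.'s (2007) region-of-interest analysis is not reproduced here.

```python
import numpy as np

def bubbles_mask(shape, n_bubbles, sigma, rng):
    """Sum of randomly centred Gaussian apertures, clipped to [0, 1]."""
    h, w = shape
    yy, xx = np.mgrid[0:h, 0:w]
    mask = np.zeros(shape)
    for cy, cx in zip(rng.uniform(0, h, n_bubbles), rng.uniform(0, w, n_bubbles)):
        mask += np.exp(-((yy - cy) ** 2 + (xx - cx) ** 2) / (2 * sigma**2))
    return np.clip(mask, 0.0, 1.0)

def reveal(image, mask, background=0.5):
    """What the observer sees: the image inside the apertures, a uniform background elsewhere."""
    return mask * image + (1.0 - mask) * background

# Hypothetical simulated observer in a four-alternative task: the more of one
# diagnostic region is revealed, the more likely a correct response.
rng = np.random.default_rng(0)
shape = (32, 32)
roi = np.zeros(shape, dtype=bool)
roi[4:10, 4:10] = True
masks, correct = [], []
for _ in range(2000):
    m = bubbles_mask(shape, n_bubbles=5, sigma=3.0, rng=rng)
    p_correct = 0.25 + 0.75 * m[roi].mean()
    masks.append(m)
    correct.append(rng.random() < p_correct)
masks, correct = np.array(masks), np.array(correct)

# Classification image: aperture placement on correct minus incorrect trials.
classification_image = masks[correct].mean(axis=0) - masks[~correct].mean(axis=0)
example_view = reveal(np.linspace(0.0, 1.0, shape[0] * shape[1]).reshape(shape), masks[0])
print("inside ROI:", classification_image[roi].mean().round(3),
      "outside ROI:", classification_image[~roi].mean().round(3))
```

Because this observer's accuracy depends only on the diagnostic region, the classification image is higher inside that region than elsewhere; averaging it within predefined regions is one way to ask how much of the information used falls in edges, coterminations, or shading.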

(a) Examples of regions of a geon that were classified as containing edges, edge coterminations, and shading information in the Gibson et al. (2007) study. (b) Relative uses of cotermination, edge, and shading regions by pigeons (left), the general-processes model (middle), and an ideal-observer model (right) when trained with the procedures and stimuli from the Gibson et al. study

As is illustrated in the center section of Fig. 6b, the general-processes model correctly reproduces the bias that was observed in pigeons and people to rely on cotermination information for the discrimination of simple volumes. The results from the ideal observer, shown in the right section of Fig. 6b, confirmed the conclusion reached in the original study that cotermination information was not the most informative nonaccidental property in this particular task. According to the ideal observer, in this task, the most diagnostic property for recognition was the objects’ edges. These results are particularly important, as they suggest that the bias shown by pigeons and people to rely on nonaccidental properties does not require the visual system to represent three-dimensional volumes explicitly, as proposed by structural description theories, but can instead emerge from quite simple principles of biological visual computation.

The general-processes model combines the inputs from units that are sensitive to oriented edges, which are located early in the hierarchy of feature detectors, to produce the receptive fields of units in later stages. Because the preferred stimuli for units in later stages have been learned through experience with natural images, it is likely that they include both coterminations and elongated edges, which are frequently found in natural objects.

It is less clear why cotermination information was preferred over other edge properties to solve the task, especially because, in natural images, elongated edges are the more common of those two properties (Geisler, Perry, Super, & Gallogly, 2001). One possibility is that tolerance to image translation and scaling in the model renders the elongated contours of an object less reliable for identification, but the same process does not affect object coterminations to the same extent. Whereas two parallel edges can be perfectly aligned after translation, two corners are less likely to have the same local configuration after translation. In other words, two close, approximately parallel edges are very likely to be confused as the same feature by an invariant edge detector. Two coterminations belonging to the same object, however, are more likely to have a different configuration (e.g., a corner pointing to the left vs. a corner pointing to the right), which would resolve the ambiguity added by invariant feature detection. Thus, translation-invariant detectors are more likely to confuse two edges than two coterminations if they are shown through slightly translated “bubbles.”
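
This argument can be checked with a crude, hypothetical stand-in for a translation-invariant detector: the best match of a template anywhere in the image, divided by the template's energy. This is not the model's actual feature hierarchy, and the images and templates are toy examples.

```python
import numpy as np

def invariant_response(image, template):
    """Best template match over all positions, divided by the template's energy.
    A crude, hypothetical stand-in for a translation-invariant feature detector."""
    th, tw = template.shape
    h, w = image.shape
    best = 0.0
    for y in range(h - th + 1):
        for x in range(w - tw + 1):
            best = max(best, float((image[y:y + th, x:x + tw] * template).sum()))
    return best / float((template * template).sum())

def blank():
    return np.zeros((12, 12))

# Two parallel horizontal edges at different heights.
edge_a, edge_b = blank(), blank()
edge_a[3, 2:9] = 1.0
edge_b[6, 2:9] = 1.0
edge_detector = np.ones((1, 7))

# Two mirror-image corners (coterminations) with different configurations.
corner_right = np.zeros((5, 5))
corner_right[0, :] = 1.0
corner_right[:, 0] = 1.0
corner_left = np.zeros((5, 5))
corner_left[0, :] = 1.0
corner_left[:, 4] = 1.0
img_right, img_left = blank(), blank()
img_right[3:8, 3:8] = corner_right
img_left[3:8, 3:8] = corner_left

r_edge = (invariant_response(edge_a, edge_detector), invariant_response(edge_b, edge_detector))
r_corner = (invariant_response(img_right, corner_right), invariant_response(img_left, corner_right))
print("edge detector:", r_edge)      # identical responses: the two edges are confused
print("corner detector:", r_corner)  # lower response to the mirror-image corner
```

Because position is discarded, the edge detector responds identically to both parallel edges, whereas the corner detector still responds less (5/9 of its maximum here) to the mirror-image corner.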

Recognition of complementary and scrambled object contours

The results of several experiments led Cerella (1986) to conclude that pigeons recognize objects using local features, while ignoring their spatial relations and other global properties. This proposal stimulated several studies showing that pigeons do in fact represent spatial relations among features (Kirkpatrick-Steger & Wasserman, 1996; Kirkpatrick-Steger, Wasserman, & Biederman, 1998; Van Hamme, Wasserman, & Biederman, 1992; Wasserman, Kirkpatrick-Steger, Van Hamme, & Biederman, 1993).

In one of these experiments (Van Hamme et al., 1992), pigeons were trained to recognize line drawings of four objects, such as those in the left and center of Fig. 7a. The stimuli were created by deleting half of an object’s contour, which allowed the investigators to train the pigeons to recognize one partial object contour and to test with the complementary contour. If the pigeons represented these objects using only the local lines and vertices that were available in the training images, then they should fail to accurately recognize the complementary contours, and their performance should be close to the chance level of .25 correct choices. However, as shown in Fig. 7b, pigeons recognized these complementary contours at a high level of accuracy, suggesting that their visual system could infer object structure from the partial contours that were seen during training. To control for the possibility that this result was due to similarity between local features in the original and complementary contours, the pigeons were also tested with stimuli in which the complementary contours were spatially scrambled, as in the image shown in the right part of Fig. 7a. Pigeons’ performance dropped precipitously with the scrambled stimuli (see Fig. 7b), although it remained slightly above chance, suggesting that both local features and spatial structure are important in pigeons’ recognition of object contours.

(a) Some of the stimuli used by Van Hamme et al. (1992) to study the transfer of performance across complementary contours of an image (left, middle), and the scrambled version of the contours (right). (b) Pigeon data (left) and results simulated with the general-processes model (middle) and an ideal-observer model (right) for Van Hamme et al.’s Experiment 2, in which they evaluated transfer of recognition performance across complementary contours of the training images and their scrambled versions. (c) Pigeon data (left) and results simulated with the general-processes model (middle) and an ideal-observer model (right) for Van Hamme et al.’s Experiment 3, testing the effect of different degrees of spatial scrambling on the recognition of object line drawings

A follow-up experiment, in which pigeons were tested with scrambled versions of the original training stimuli, strengthened this conclusion and further suggested that the intact spatial organization of an object contour might be more important for recognition than local features. As is shown in Fig. 7c, accuracy with scrambled versions of the original stimuli—which retained all of the local features and disrupted the drawings’ spatial organization—was lower than with their complementary contours (see Fig. 7b), which retained all of the spatial organization and disrupted the drawings’ local features. The test results also indicated that the effect of scrambling image features depended on whether the spatial translation of features was mild or heavy. Mild scrambling involved translating the original features within their original quadrants, whereas heavy scrambling involved translating half of the features to the adjacent (clockwise) quadrant. Heavier spatial translation of features led to larger drops in performance.

These and other experiments in which pigeons were tested with scrambled versions of object contours have led to the rejection of an explanation of avian object recognition via a “bag-of-features” representation, as proposed by Cerella (1986). Although the general-processes model used here also uses a bag-of-features representation, it retains information about the spatial structure of objects by using a large bank of overlapping features. The question is, however, whether using a bank of overlapping features is enough to support the generalization of performance across complementary contours, which do not share any local features.

As shown in Fig. 7b and c, the answer is “yes.” The general-processes model can reproduce all of the key aspects of the results reported by Van Hamme et al. (1992). In contrast, the ideal-observer model yielded performance that was at or near chance to all of the testing stimuli in both experiments. The high levels of generalization to complementary contours that were shown by the general-processes model could be due to two mechanisms. First, units at the end of the processing hierarchy are both selective to complex shapes and relatively invariant to spatial translation and scaling. Some line contours in the two complementary images were quite similar, but they appeared in slightly different positions in the image. In the example shown in Fig. 7a, the complementary contours in the chest and belly of the penguin are quite similar and are located in only slightly different spatial positions. Line contours such as these could have activated the same feature detectors in the two complementary images. Second, two contours in the complementary images could have moderately activated the same unit if they both overlapped with different sections of its preferred stimulus. In the example shown in Fig. 7a, a unit that is selective for a long vertical curve could have been activated by the two halves of the penguin’s back that were present in the two complementary images.

The effects of scrambling are reproduced because of the large number of overlapping feature detectors that were included in the general-processes model, which retain considerable information about the spatial organization of each object. Importantly, this model can also reproduce the above-chance performance that was observed with the scrambled stimuli, which results from the fact that some diagnostic visual features are not affected by scrambling.

The results of these simulations suggest a way to reconcile the contradictory results found by Cerella (1980), on the one hand, and by more recent studies (e.g., Kirkpatrick-Steger et al., 1998; Wasserman et al., 1993), on the other. One important aspect of Cerella’s (1980) experiment is that he divided cartoon objects into only three parts (legs, torso, and head) that preserved substantial local spatial information; furthermore, these parts were horizontally aligned when they were scrambled, which retained some global properties, such as the object’s aspect ratio. Thus, Cerella’s (1980) manipulation might have left untouched much of the spatial structure to which the pigeons were sensitive, and therefore might not have substantially affected their performance. In more recent studies, by contrast, objects were divided into many parts and the scrambled parts were misaligned. These stimulus manipulations might have disrupted spatial structure information at several different scales. If, as proposed here, pigeons represent objects through a large bank of overlapping feature detectors, the effects of the spatial rearrangement of an object’s parts would depend on exactly how the rearrangement was accomplished, because spatial information is implicitly represented and distributed across all of the feature detectors. Some of these detectors implicitly code for the arrangement of local features, whereas others code for the more global arrangement of object parts. Because all of these aspects of the stimulus can gain control over behavior during learning, the results of a scrambling test critically depend on what type of spatial information is affected by the scrambling procedure.

Effect of changes in surface features

Pigeons are very sensitive to transformations of an object that leave its outline intact, while changing only its internal surface features. Several studies (Cabe, 1976; Cook, Wright, & Kendrick, 1990; Young, Peissig, Wasserman, & Biederman, 2001) have provided evidence that the drop in object recognition performance that is caused by the deletion of surface features is larger when pigeons are tested with line drawings than when they are tested with silhouettes.

Young et al. (2001, Exp. 1) trained pigeons to discriminate four geons that were rendered from a single viewpoint. After they achieved high levels of performance in the task, the birds were tested with the same geons transformed in three ways (see Fig. 8a): (1) under a change in the direction of illumination; (2) with all of the internal shading removed and replaced by the average luminance of the object, producing a silhouette; or (3) with the object’s internal shading removed, but with all of the edge information retained, producing a line drawing. The results are presented in the left part of Fig. 8b and show that all of the transformations produced large drops in accuracy. Performance with the line drawings was the poorest, being close to the chance level of .25, whereas performance levels with the silhouette and light change stimuli were somewhat better and similar. This pattern of results is rather surprising, because both the line drawing and the light change stimuli contained much more information about the three-dimensional structure of the objects than did the silhouette stimuli, while conserving the same contour information.

(a) Examples of the types of testing stimuli used by Young et al. (2001) to test the recognition of geons by pigeons across variations in surface features: illumination change, silhouette, and line drawing versions of the original training stimuli. (b) Pigeon data (left) and results simulated with the general-processes model (middle) and an ideal-observer model (right) for Young et al.’s Experiment 1, which tested recognition of geons across variations in surface features: silhouette, illumination change, and line drawing versions of the original training stimuli

The middle part of Fig. 8b shows that this qualitative pattern of results is reproduced by the general-processes model, which produces large drops in accuracy to all of the testing stimuli, with the largest drop to the line drawings. The right part of Fig. 8b shows that large drops in performance were not invariably predicted by the ideal-observer model; indeed, this model predicted a large drop in performance only for the line drawings.

Why does the general-processes model produce such large drops in performance with these test stimuli? The answer lies in the fact that, at the beginning of the hierarchy of processing, the image is filtered using a battery of Gabor functions at several different scales. In this wavelet-like decomposition of the image (Field, 1999; Stevens, 2004), which is carried out by V1 neurons in the primate brain, filters at the largest scales can detect changes in luminance such as those produced by a gray object over a white background; filters at smaller scales can detect changes in luminance such as those produced by the reflection of light on the smooth surface of the object; and filters at the smallest scales can detect local changes in luminance such as those produced by the object’s edges. During learning, the model relies on all of this information for recognition. The more information about luminance changes that is removed from the original object, regardless of the spatial scale of these changes, the more performance will suffer.
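
The multiscale filtering stage can be sketched as a small bank of even-symmetric Gabor filters at several wavelengths and orientations. The wavelengths, envelope width, kernel size, the two test images, and the mean absolute response used as a summary are hypothetical choices, not the model's parameters. Removing each kernel's mean makes uniform regions produce no response, so only luminance changes at a filter's scale contribute.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def gabor_kernel(wavelength, theta):
    """Even-symmetric Gabor: cosine carrier under an isotropic Gaussian envelope
    (sigma = wavelength / 2), mean-subtracted and scaled to unit norm."""
    sigma = 0.5 * wavelength
    half = int(1.5 * wavelength)                  # kernel spans about three wavelengths
    y, x = np.mgrid[-half:half + 1, -half:half + 1]
    xr = x * np.cos(theta) + y * np.sin(theta)    # coordinate along the carrier
    g = np.exp(-(x**2 + y**2) / (2 * sigma**2)) * np.cos(2 * np.pi * xr / wavelength)
    g -= g.mean()                                 # zero DC: uniform regions give no response
    return g / np.linalg.norm(g)

def filter_energy(image, wavelengths=(4, 8, 16), n_orientations=4):
    """Mean absolute filter response at each wavelength, averaged over orientations."""
    energy = {}
    for lam in wavelengths:
        per_orientation = []
        for k in range(n_orientations):
            kern = gabor_kernel(lam, np.pi * k / n_orientations)
            windows = sliding_window_view(image, kern.shape)   # 'valid' correlation
            response = np.einsum("ijkl,kl->ij", windows, kern)
            per_orientation.append(np.abs(response).mean())
        energy[lam] = float(np.mean(per_orientation))
    return energy

# Hypothetical stimuli: a grey square on white, and the same square as a black outline.
size = 96
silhouette = np.ones((size, size))
silhouette[28:68, 28:68] = 0.5
line_drawing = np.ones((size, size))
line_drawing[[28, 67], 28:68] = 0.0
line_drawing[28:68, [28, 67]] = 0.0
for name, img in (("silhouette", silhouette), ("line drawing", line_drawing)):
    print(name, {lam: round(e, 3) for lam, e in filter_energy(img).items()})
```

The printed summaries can be used to compare how much response each hypothetical stimulus produces at each scale; in a model that relies on all scales, removing luminance changes at any of them removes information that learning may have come to depend on.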

One aspect of the results that was not reproduced by the general-processes model was that the pigeons’ performance with line drawings was close to chance. Because pigeons can readily discriminate line drawings of complex objects (Kirkpatrick, 2001), this result cannot be due to the birds’ inability to detect the fine-edge information in the images. Instead, this recognition failure might result from a bias, not reproduced by the model, toward relying more heavily on luminance changes at larger scales whenever this information is available. That is, pigeons may rely much more heavily than the model on the coarse disparities in luminance that exist between the inside and the outside of each object, so that when this information is removed, performance drops precipitously.

Effects of exponential and linear variations in size

Many studies have tested pigeons’ recognition of objects varying in size (Larsen & Bundesen, 1978; Peissig, Kirkpatrick, Young, Wasserman, & Biederman, 2006; Pisacreta, Potter, & Lefave, 1984). In general, pigeons show generalization of performance to known objects at novel sizes. Nevertheless, they also show decrements in accuracy to novel sizes, with larger decrements to more disparate sizes.

Peissig et al. (2006) also explored which types of size transformations yield symmetrical generalization gradients. Four geons were linearly varied in size, by increasing size in constant steps, or exponentially varied in size, by increasing size in steps approximately proportional to the current size. Examples of both transformations are shown in Fig. 9a. Pigeons were trained with the size in the middle of both scales (geons labeled “0” in Fig. 9a) in a four-alternative forced choice task until they reached high levels of recognition accuracy. Then they were tested with the linear and exponential size variations, producing the generalization gradients shown in the left part of Fig. 9b. The exponential scale produced a more symmetrical generalization gradient, whereas the linear scale yielded smaller drops in performance for stimuli that increased in size than for stimuli that decreased in size by the same amount. These results suggest that visual size conforms to Fechner’s law: namely, a logarithmic relation between physical and perceived size.
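
The Fechnerian reading of these gradients can be made concrete with a short calculation: if perceived size is proportional to the logarithm of physical size, then the perceived distance of a test size s from the training size s0 is |log(s/s0)|. The training size, the linear increment, and the exponential ratio below are hypothetical values, not those used by Peissig et al. (2006).

```python
import numpy as np

base = 100.0                      # hypothetical training size (arbitrary units)
steps = np.arange(-3, 4)          # scaling steps -3 ... +3 around the training size
sizes = {
    "linear": base + 20.0 * steps,        # hypothetical constant increment of 20 units
    "exponential": base * 1.25 ** steps,  # hypothetical constant ratio of 1.25
}
# Signed distance from the training size on a logarithmic (Fechnerian) scale.
log_distance = {name: np.log(s / base) for name, s in sizes.items()}
for name, d in log_distance.items():
    print(f"{name:>11}: {np.round(d, 3)}")
```

Constant linear steps produce larger logarithmic distances for decreases than for increases of the same magnitude (for example, |log 0.8| ≈ 0.223 versus log 1.2 ≈ 0.182), whereas constant ratios produce symmetric distances, in line with the asymmetric and symmetric gradients, respectively.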

(a) Examples of the types of stimuli used by Peissig et al. (2006) to test the recognition of geons by pigeons across linear and exponential size variations. Numbers represent scaling steps from the original training images (denoted by 0). (b) Pigeon data (left) and results simulated with the general-processes model (middle) and an ideal-observer model (right) for Experiment 2 in Peissig et al. (2006), which tested the recognition of geons across linear and exponential size variations

The gradients resulting from a simulation of this experiment with the general-processes model are shown in the middle part of Fig. 9b, and the ideal-observer results are shown in the right part of this figure. The general-processes model reproduced all of the important aspects of the behavioral results: above-chance performance for all sizes, which decreased monotonically as a function of the difference from the original size; a symmetrical gradient for stimuli that changed along an exponential scale; and an asymmetrical gradient for stimuli that changed along a linear scale. None of these results was predicted by the ideal observer. The general-processes model did not reproduce the small generalization decrement that was observed with sizes –1 and 1 in the linear transformation condition. However, the qualitative pattern of results was nicely reproduced, including the specific relation between the physical image changes and the corresponding changes in performance.

The complexity of the model makes it difficult to specify exactly why the experimental results were reproduced. One possibility is that, in a linear size transformation, more of the feature detectors that respond to the training image reduce their response with a decrease in size than with an increase in size. Imagine a simplified, unidimensional model in which a set of edge detectors, all of the same size and scale, are collinearly arranged. The model is presented with a line; a number of units respond vigorously and become associated with a response. If we linearly increase the size of this line, the same edge detectors will still respond, and high generalization will be observed. However, if we linearly decrease the size of this line, some of the detectors that had previously responded will stop doing so, and a response decrement will be observed. This process could be occurring, at a larger scale, with the linear size transformation. In the exponential size transformation, however, each size decrease is physically smaller than the corresponding size increase. In our example, the larger size increases might be dramatic enough to “misalign” the edges in the object with the original detectors, placing the object’s edges outside the detectors’ receptive fields and producing a stronger generalization decrement for increases than would otherwise be expected, which could offset the asymmetry and yield a symmetrical gradient.
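The unidimensional example above can be written down as a toy program. This is a hypothetical sketch, not the general-processes model: detectors are binary, every detector active during training receives a unit association with the response, and generalization is measured as the fraction of that trained associative strength recruited by a test line. All names and sizes (`detector_activity`, `learned_weights`, 41 detectors, a training half-width of 10, linear steps of 3) are illustrative assumptions.

```python
import numpy as np

# Hypothetical toy version of the example in the text: a row of identical,
# collinear detectors, each covering one position from -20 to 20.
N_DETECTORS = 41
POSITIONS = np.arange(N_DETECTORS) - N_DETECTORS // 2


def detector_activity(half_width: int) -> np.ndarray:
    """Binary responses to a line centered on position 0.

    A detector fires (1.0) when the line covers its position, so a line with
    the given half-width activates 2 * half_width + 1 detectors.
    """
    return (np.abs(POSITIONS) <= half_width).astype(float)


def learned_weights(train_half_width: int) -> np.ndarray:
    """Associate every detector active during training with the response.

    Simplification: each active detector gets weight 1, inactive ones 0.
    """
    return detector_activity(train_half_width)


def response_strength(weights: np.ndarray, test_half_width: int) -> float:
    """Fraction of the trained associative strength recruited by a test line."""
    return float(weights @ detector_activity(test_half_width) / weights.sum())


weights = learned_weights(train_half_width=10)  # 21 detectors active in training
for step in range(-3, 4):
    test_half_width = 10 + 3 * step             # linear steps of 3 positions
    print(step, round(response_strength(weights, test_half_width), 3))
```

Because a longer line still covers every trained detector, response strength stays at 1.0 for all linear increases, whereas each linear decrease silences trained detectors and lowers it. The toy captures only the linear-scale asymmetry; it does not implement the edge “misalignment” proposed for large exponential increases, so on its own it cannot produce the symmetrical exponential gradient.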

Discussion

This article integrates neurobiological, behavioral, and computational evidence for the involvement of “general processes” in object recognition by people and pigeons. Our main goal here was to present arguments and evidence suggesting (1) that differences between birds and primates should not be taken as evidence of completely specialized visual systems, (2) that it is likely that these two types of animals share common processes of object recognition, and (3) that birds can and should be used by the cognitive neuroscience community as a model for the study of some of the processes that are involved in object recognition by people.

Specifically, we reviewed prior work suggesting a role for error-driven associative learning in visual object categorization, and we presented new computational evidence that some of the principles of visual processing discovered in the primate ventral stream can account for a wide range of behavioral results from studies of avian object perception. The success of this series of simulations provides a proof of concept that general mechanisms of visual shape processing could underlie key aspects of object recognition across even distantly related vertebrate species. The computational evidence adds to a wealth of empirical results that point to the existence and significance of such general processes.

We do caution that these results should not be taken as more than a proof of concept, unless future research on the neurophysiology of the avian tectofugal visual pathway reveals that the mechanisms embedded in feedforward hierarchical object recognition architectures are indeed at work in birds, as they are in primates. As we noted earlier, there is some empirical support for this assertion (Li et al., 2007), but evidence from neural recordings across all stages of the tectofugal pathway and its pallial targets will be needed in order to reach a stronger conclusion.

Relatedly, we emphasize that we have used the model of Serre and colleagues (Serre, 2006; Serre et al., 2005; Serre et al., 2007) simply as a tool to provide such a proof of concept. It is likely that this model does not capture the full complexity of visual processing in the ventral stream. However, we have used this model because it implements feedforward and hierarchical processing of shape information, a mechanism that appears to be supported by a substantial body of evidence from primate neurophysiology (reviewed earlier). Our conclusions will remain valid unless new data disprove the central role that is currently assigned to feedforward and hierarchical processing in the construction of receptive-field selectivity and invariance for neurons in the ventral stream.
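The two operations that give this class of model its selectivity and invariance can be illustrated in one dimension. The sketch below is a simplified, hypothetical illustration in the spirit of the model of Serre and colleagues, not their implementation: a “simple” (S) stage computes Gaussian tuning to a stored template at every position, and a “complex” (C) stage takes the maximum over neighboring S units. The template, image length, sigma, and pool size are arbitrary assumptions, and `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def s_layer(signal: np.ndarray, template: np.ndarray, sigma: float = 1.0) -> np.ndarray:
    """'Simple' stage: Gaussian tuning to a stored template at every position.

    Selectivity: the response is 1.0 only where the input matches the template
    exactly and falls off with squared Euclidean distance elsewhere.
    """
    patches = sliding_window_view(signal, len(template))
    sq_dist = np.sum((patches - template) ** 2, axis=1)
    return np.exp(-sq_dist / (2 * sigma ** 2))


def c_layer(s_responses: np.ndarray, pool_size: int) -> np.ndarray:
    """'Complex' stage: max over non-overlapping pools of neighboring S units.

    Invariance: a feature that shifts within one pool yields the same output.
    """
    n_pools = len(s_responses) // pool_size
    trimmed = s_responses[: n_pools * pool_size]
    return trimmed.reshape(n_pools, pool_size).max(axis=1)


template = np.array([0.0, 1.0, 1.0, 0.0])  # hypothetical stored feature

image_a = np.zeros(16)
image_a[1:5] = template                    # feature at position 1
image_b = np.zeros(16)
image_b[2:6] = template                    # same feature shifted by one

s_a, s_b = s_layer(image_a, template), s_layer(image_b, template)
c_a, c_b = c_layer(s_a, pool_size=4), c_layer(s_b, pool_size=4)
print("S units differ:", not np.allclose(s_a, s_b))  # position-specific
print("C units equal: ", np.allclose(c_a, c_b))      # shift-tolerant
```

Shifting the feature by one position changes the S responses but leaves the C responses unchanged, because the shift stays inside a single pool. In models of this kind, stacking such S/C pairs is what builds up units that are progressively more selective and more invariant.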

Regardless of these considerations, it is noteworthy that a model rooted in primate neurobiology accounts so well for avian object recognition, given the behavioral and neurobiological differences in object recognition between birds and primates that were reviewed in the introduction. We hope that the present computational work, together with our previous empirical work, will encourage neuroscientists to further test the hypothesis of common neurocomputational mechanisms and to consider birds as potentially fruitful models for the study of biological object recognition.

If, as we have suggested, feedforward and hierarchical visual shape processing is common to such distantly related taxa as birds and primates, then two important questions immediately arise. The first and most obvious question, from a comparative standpoint, is whether these common processes are the result of conservation or of independent evolution. Some might propose that studies of avian object recognition can inform the study of human object recognition only if the underlying structures are homologous. We believe, on the contrary, that even if the neural structures underlying object recognition in birds and primates are not homologous, as long as they implement similar mechanisms of visual processing, the study of such mechanisms in birds at both the behavioral and neurobiological levels can give us important insights into biological vision in general and human vision in particular.

The fact that visual environments seem to select one particular form of visual computation and not others prompts the second question, which is even more important for our understanding of the evolution of cognition: Namely, what gives this form of computation its “generality” for solving visual problems across different environments? If feedforward hierarchical processing is truly a mechanism that is shared by many vertebrate species, then it will be critical for future work to provide insight into which aspects of the varied visual tasks that are faced by these species have shaped the way in which this form of visual processing works.

A key point of discussion is that the model that we have used yielded results that more closely approximated pigeon than human behavior in several experimental designs. The model was sensitive to a number of stimulus transformations to which pigeons, but not people, are sensitive. People exhibit viewpoint-invariant object recognition when they are tested with the appropriate stimuli (Biederman & Gerhardstein, 1993) and with interpolated novel views of an object (Spetch & Friedman, 2003; Spetch et al., 2001); they exhibit scale-invariant recognition (Biederman & Cooper, 1992), and they do not exhibit dramatic drops in performance after the deletion of internal surface features (Biederman & Ju, 1988; Hayward, 1998). If our interpretation of the simulation results presented here in terms of general processes is correct, then the behavioral disparities that have been found between pigeons and people are likely to be the consequence of differences in processes other than the extraction of visual shape information and error-driven categorization learning. That is, whereas these two mechanisms may be common to both birds and primates, each group may also possess more specialized processes that have an impact on object recognition. As was noted earlier, this pattern of results is exactly what we would expect when studying most forms of cognition from an evolutionary perspective.

For example, better recognition with interpolated views of an object, reported in people but not in pigeons (Spetch & Friedman, 2003; Spetch et al., 2001), might require the ability to manipulate representations of objects through either “mental rotation” (Tarr & Pinker, 1989) or view interpolation (Bülthoff & Edelman, 1992); these processes might be absent in birds.

Regarding specialized adaptations in categorization learning, Ashby and colleagues (e.g., Ashby, Alfonso-Reese, Turken, & Waldron, 1998; Ashby & Valentin, 2005) have hypothesized two categorization learning systems in people. The implicit system is based on procedural learning and is believed to be implemented by the circuitry of the basal ganglia, similar to the categorization system that we have proposed. The explicit system is based on logical reasoning, working memory, and executive attention, and is believed to be implemented by the frontal cortex. Recent evidence (Smith et al., 2011) has suggested that pigeons, unlike people and other primates, might only possess the implicit system, which they use to learn any categorization task. If this conclusion is correct, it is an open question how the explicit system might influence visual categorization tasks that are usually encountered in natural environments, such as object recognition across changes in viewpoint. The explicit categorization system, or any of its components, could underlie many of the disparities that have been observed between people and pigeons in object recognition.

Note, however, that we have found evidence suggesting that error-driven learning is involved in human categorization of natural objects, even when an explicit verbal rule can be used to learn a particular task (see Soto & Wasserman, 2010b). Thus, error-driven learning may underlie not only the strengthening of stimulus–response associations, but also the competition between the outputs of these different systems for control over performance.
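The competitive character of error-driven learning can be shown with the delta rule, the Rescorla–Wagner-style update in which all active inputs share a single prediction error. In this hypothetical Python sketch the two inputs stand in for the outputs of two categorization systems; that mapping, the learning rate, and the number of epochs are assumptions for illustration, not the model used by Soto and Wasserman (2010b).

```python
import numpy as np


def delta_rule(inputs, targets, weights=None, learning_rate=0.1, epochs=200):
    """Error-driven (delta-rule / Rescorla-Wagner-style) associative learning.

    All inputs active on a trial share one prediction error, so they compete
    for a limited amount of associative strength.
    """
    inputs = np.asarray(inputs, dtype=float)
    targets = np.asarray(targets, dtype=float)
    # Start from zero, or from a copy of previously learned weights.
    weights = np.zeros(inputs.shape[1]) if weights is None else np.array(weights, dtype=float)
    for _ in range(epochs):
        for x, target in zip(inputs, targets):
            error = target - weights @ x          # shared prediction error
            weights += learning_rate * error * x  # only active inputs change
    return weights


# Two hypothetical sources of evidence (e.g., the outputs of two systems),
# always active together and jointly reinforced: they split the strength.
shared = delta_rule([[1, 1]], [1.0])

# Blocking: source A is trained alone first, then A and B together;
# B gains almost nothing because A already predicts the outcome.
pretrained = delta_rule([[1, 0]], [1.0])
blocked = delta_rule([[1, 1]], [1.0], weights=pretrained)

print("shared:", shared.round(3), "blocked:", blocked.round(3))
```

When both inputs are always present, they end up sharing the available associative strength (about 0.5 each); when one input already predicts the outcome, the other gains almost nothing (blocking). Both outcomes follow from the shared error term, which is the sense in which error-driven learning could arbitrate between competing sources of control over responding.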

The strictly feedforward model that we have used does not take into account the fact that connections across the ventral pathway are bidirectional (Salin & Bullier, 1995). Whereas feedforward connections are thought to be responsible for driving the properties of neuronal responses to external stimuli, feedback connections—which are more diffuse—are thought to have a modulatory effect on the activity of neurons (Kveraga, Ghuman, & Bar, 2007).

One possibility is that feedback projections implement attentional processes that facilitate the processing of the information that is relevant for a particular task (see Gilbert & Sigman, 2007; Kastner & Ungerleider, 2000). Attention and other processes that are carried out through the feedback visual system might be very important for some aspects of human object recognition, such as the manipulation of object representations and the selective filtering of object information that is irrelevant for a particular task (such as surface features or viewpoint-dependent shape properties). This role of the feedback system might explain why the model that we used cannot account for some aspects of human behavior. It also suggests the interesting possibility that the model does so well in explaining pigeons’ behavior because the feedback system in these animals does not carry out the same functions that it does in primates.

Regardless of the actual underlying cause(s) of the behavioral disparities that were seen between primates and birds, our modeling results underscore the importance of adopting an atomistic and “bottom-up” approach (de Waal & Ferrari, 2010; Shettleworth, 2010a) to the comparative study of cognition. Even in the face of behavioral and neuroanatomical disparities between species, one cannot dismiss the possibility that some of the subprocesses underlying a complex behavior are shared. In the case of object recognition, some discrepancies suggest that the mechanisms of object recognition might be different in primates and birds. Our simulations suggest that this is not necessarily the case for visual shape processing mechanisms: Most aspects of object recognition that seem to be unique to birds can emerge from computational principles that are known to exist in the primate visual system.

The proposal that complex cognition can arise from the interaction of simple, basic processes is widespread among theories of human cognition. Comparative cognition can be instrumental in the discovery of the basic computational processes that bring about complex cognition in biological systems. We believe that this search should focus on general processes that are common to many species. Such processes, selected by diverse environments throughout evolution, are a solution to what are likely to be the most vital aspects of the computational problems that are faced by biological systems; these processes should be deemed the cornerstone on which biological cognition rests.

References

Antzoulatos, E. G., & Miller, E. K. (2011). Differences between neural activity in prefrontal cortex and striatum during learning of novel abstract categories. Neuron, 71, 243–249. doi:10.1016/j.neuron.2011.05.040.

Anzai, A., Peng, X., & Van Essen, D. C. (2007). Neurons in monkey visual area V2 encode combinations of orientations. Nature Neuroscience, 10, 1313–1321. doi:10.1038/nn1975.

Ashby, F. G., Alfonso-Reese, L. A., Turken, A. U., & Waldron, E. M. (1998). A neuropsychological theory of multiple systems in category learning. Psychological Review, 105, 442–481. doi:10.1037/0033-295X.105.3.442.

Ashby, F. G., & Ennis, J. M. (2006). The role of the basal ganglia in category learning. In B. H. Ross (Ed.), The psychology of learning and motivation (Vol. 46, pp. 1–36). New York: Academic Press.

Ashby, F. G., & Valentin, V. V. (2005). Multiple systems of perceptual category learning: Theory and cognitive tests. In H. Cohen & C. Lefebvre (Eds.), Categorization in cognitive science (pp. 548–572). New York: Elsevier.

Atkinson, A. P., & Wheeler, M. (2004). The grain of domains: The evolutionary–psychological case against domain-general cognition. Mind and Language, 19, 147–176. doi:10.1111/j.1468-0017.2004.00252.x.

Biederman, I. (1987). Recognition-by-components: A theory of human image understanding. Psychological Review, 94, 115–147. doi:10.1037/0033-295X.94.2.115.

Biederman, I., & Bar, M. (1999). One-shot viewpoint invariance in matching novel objects. Vision Research, 39, 2885–2899.

Biederman, I., & Cooper, E. E. (1992). Size invariance in visual object priming. Journal of Experimental Psychology: Human Perception and Performance, 18, 121–133. doi:10.1037/0096-1523.18.1.121.

Biederman, I., & Gerhardstein, P. C. (1993). Recognizing depth-rotated objects: Evidence and conditions for three-dimensional viewpoint invariance. Journal of Experimental Psychology: Human Perception and Performance, 19, 1162–1182. doi:10.1037/0096-1523.19.6.1162.

Biederman, I., & Ju, G. (1988). Surface versus edge-based determinants of visual recognition. Cognitive Psychology, 20, 38–64.

Bitterman, M. E. (2000). Cognitive evolution: A psychological perspective. In C. M. Heyes & L. Huber (Eds.), The evolution of cognition (pp. 61–79). Cambridge, MA: MIT Press.