Abstract

During scene viewing, semantic information in the scene has been shown to play a dominant role in guiding fixations compared to visual salience (e.g., Henderson & Hayes, 2017). However, scene viewing is sometimes disrupted by cognitive processes unrelated to the scene. For example, viewers sometimes engage in mind-wandering, or having thoughts unrelated to the current task. How do meaning and visual salience account for fixation allocation when the viewer is mind-wandering, and does it differ from when the viewer is on-task? We asked participants to study a series of real-world scenes in preparation for a later memory test. Thought probes occasionally occurred after a subset of scenes to assess whether participants were on-task or mind-wandering. We used salience maps (Graph-Based Visual Saliency; Harel, Koch, & Perona, 2007) and meaning maps (Henderson & Hayes, 2017) to represent the distribution of visual salience and semantic richness in the scene, respectively. Because visual salience and meaning were represented similarly, we could directly compare how well they predicted fixation allocation. Our results indicate that fixations prioritized meaningful over visually salient regions in the scene during mind-wandering just as during attentive viewing. These results held across the entire viewing time. A re-analysis of an independent study (Krasich, Huffman, Faber, & Brockmole Journal of Vision, 20(9), 10, 2020) showed similar results. Therefore, viewers appear to prioritize meaningful regions over visually salient regions in real-world scenes even during mind-wandering.

Similar content being viewed by others

During scene viewing, human attention prioritizes certain scene regions for in-depth processing. Which regions in the scene are selected and which are not? There is an extensive body of research on whether, and to what extent, bottom-up and top-down factors guide visual attention during scene perception (e.g., Itti & Koch, 2000; Henderson, 2003; Henderson, Brockmole, Castelhano, & Mack, 2007, Henderson, Malcolm, & Schandl, 2009; Parkhurst, Law, & Niebur, 2002). Some have argued that visual attention is guided by visual salience, that is, contrasts in low-level image features such as luminance, color, and orientation (e.g., Itti & Koch, 2000; Parkhurst et al., 2002; Theeuwes, 2010). Salience maps, as a computational implementation of this idea, quantify the distribution of visual salience across the entire scene (e.g., Harel et al., 2007; Itti & Koch, 2000). Regions that are more visually distinctive relative to others receive higher salience values. However, salience maps are only modest at predicting human fixations in real-world scenes (Tatler, Hayhoe, Land, & Ballard, 2011). It is well established that top-down factors such as scene semantics also affect eye movements and often override the influence of visual salience (e.g., Henderson et al., 2007; Anderson, Ort, Kruijne, Meeter, & Donk, 2015; Underwood, Foulsham, van Loon, Humphreys, & Bloyce, 2006).

In an attempt to directly compare the role of scene semantics and visual salience in guiding fixations, Henderson and Hayes (2017) introduced “meaning maps” to represent the semantic richness of scene regions. Specifically, they cut each scene into a large number of small patches and asked people to rate how informative or recognizable each patch is. These individual ratings were averaged, smoothed, and combined to produce a distribution of meaning for each scene. The resulting meaning maps represent the distribution of meaning in a way that is consistent with how salience maps represent the distribution of visual salience. This allows the researchers to directly compare how well meaning and visual salience could predict real fixations, as represented by fixation density maps. Across several studies (Hayes & Henderson, 2019; Henderson & Hayes, 2017; Peacock, Hayes, & Henderson, 2019b), meaning maps explained significantly more variance in fixation allocation than did salience maps (represented by the Graph-Based Visual Saliency (GBVS) model; Harel et al., 2007), even after controlling for the shared variance between meaning and visual salience. These results, according to the authors, support the idea that meaning plays a dominant role in guiding fixations during scene perception.

Scene perception during mind-wandering

Theories and models of scene perception often implicitly assume that the viewer is engaged in processing the scene without any interference. However, this assumption may be overly simplistic. That is, while people are actively looking around a scene, they may not necessarily be paying attention to the scene’s content. People spend 20–50% of their waking hours engaging in mind-wandering, or having thoughts unrelated to the current task (Kane, Brown, McVay, Silvia, Myin-Germeys, & Kwapil, 2007, Kane, Gross, Chun, Smeekens, Meier, Silvia, & Kwapil, 2017; Killingsworth & Gilbert, 2010). A prominent view in the literature characterizes mind-wandering as a state of “perceptual decoupling”, defined as a dissociation between the mind and perceptual input (Schooler, Smallwood, Christoff, Handy, Reichle, & Sayette, 2011; Smallwood, 2013). By this account, domain-general processes that normally support perceptual processing are recruited during mind-wandering to support the internal train of thought. Consistent with this idea, mind-wandering was found to be associated with weaker task-evoked potentials to the onset of external stimuli compared to when participants were on-task (e.g., Kam, Dao, Farley, Fitzpatrick, Smallwood, Schooler, & Handy, 2011). Presumably, mind-wandering may substantially impact eye movements during scene perception. Successful visual processing often depends on making moment-to-moment decisions of when and where to move the eyes. However, the “normal” gaze control mechanism may falter during mind-wandering as the same domain-general processes that support visual processing turns to the generation of task-unrelated thoughts.

Then, to what extent would the meaning-based allocation of fixations, as documented by Henderson and colleagues, be affected during mind-wandering? One possibility is that fixations are guided by meaning only during attentive viewing. Indeed, one study using a reading task found that lexical variables known to modulate fixations during normal reading (e.g., word frequency) ceased to modulate fixations during mind-wandering (Reichle, Reineberg, & Schooler, 2010; also see Foulsham, Farley, & Kingstone, 2013). This has been taken as evidence for perceptual decoupling in the context of reading comprehension (Schooler et al., 2011). Extending this logic to scene perception, it seems plausible to assume that meaning should cease to be associated with the allocation of fixations during mind-wandering. However, it is worth noting that several other studies (Frank, Nara, Zavagnin, Touron, & Kane, 2015; Steindorf & Rummel, 2020) with slight differences in experimental design did not replicate the exact finding by Reichle et al. (2010).

Suppose that fixations are less associated with meaning during mind-wandering, would they be more associated with visual salience instead? One possibility is that fixations during mind-wandering are globally less associated with meaning and visual salience. However, an alternative possibility is that fixations during mind-wandering are selectively less associated with meaning. There is some evidence, not specifically related to mind-wandering, that different tasks may lead to different reliance on low-level visual features. For example, in Anderson et al. (2015), an experiment in which participants were given no specific viewing task led to greater influence of visual salience on eye movements, compared to an experiment where participants were required to search for a target. The idea that top-down influence varies over time is not new (Tatler, Baddeley, & Gilchrist, 2005). The “strategic divergence” hypothesis posits that low-level image features continuously influence eye movement behavior, but top-down involvement varies over time. It could be, that in the absence of a specific task (i.e., during mind-wandering), visual salience plays a bigger role in guiding fixations compared to meaning.

Alternatively, there are reasons to believe that meaning would still outperform visual salience in predicting fixation allocation even during mind-wandering. Studies have shown that even in tasks where meaning is irrelevant and visual salience is relevant (e.g., brightness rating), fixations are still primarily guided by meaning rather than visual salience (Hayes & Henderson, 2019; Peacock, Hayes, & Henderson, 2019a). These results, according to the authors, suggest an involuntary viewing bias towards meaningful regions in the scene. Therefore, even when the viewer is mind-wandering and not actively processing the visual input, fixations may still be biased towards meaningful regions in the scene.

Existing studies looking at eye movement correlates of mind-wandering during scene perception mostly focused on global measures (i.e., the calculation of these measures does not depend on local scene semantics or visual salience; Faber, Krasich, Bixler, Brockmole, & D’Mello, 2020; Krasich, McManus, Hutt, Faber, D’Mello, & Brockmole, 2018; Zhang, Anderson, & Miller, 2021), so they provide limited information regarding the relationship between mind-wandering and fixation allocation to specific content in the scene. However, one exception is a recent study by Krasich et al. (2020, based on data from 2018). In their study, 51 participants were asked to study 12 urban scenes in preparation for a later memory test. A thought probe occasionally occurred after some trials, asking participants whether they were just on-task or mind-wandering. The authors used multiple ways to represent the distribution of meaning and visual salience in each scene. In particular, the meaning map approach and the GBVS salience model, which were used by Henderson and colleagues to compare meaning and visual salience, were both included. The authors extracted meaning and salience values at fixated locations and compared them between mind-wandering and on-task trials. Mind-wandering and on-task trials did not significantly differ in meaning values (as measured by meaning maps) or salience values (as measured by GBVS) at fixated locations. The authors did find that fixations during mind-wandering occurred in regions with higher visual salience when visual salience was measured by alternative models. A subsequent replication by the authors obtained universally null findings, although the significant results were retained by pooling data from both studies.

Overall, the results of Krasich et al. (2020) suggest that fixations are still associated with meaning during mind-wandering. However, these results are inconclusive in several aspects. First, in their study, meaning was not directly compared against visual salience, so it remains unclear which feature was a stronger predictor of fixations when participants were on-task, and whether it was the same feature during mind-wandering. Second, the usually high correlation between meaning and visual salience in real-world scenes was not addressed. Meaning and visual salience are intricately related (e.g., what is meaningful to the viewer is often visually conspicuous). Therefore, to assess the strength of the relationship between fixations and meaning, it is important to control for the variance explained by visual salience (and vice versa). Finally, some of the results in Krasich et al. (2020) rest on interpreting null findings; it may be useful to use Bayes factors to quantify support for or against the null hypothesis.

The current study

The primary goal of the current study is to examine if the meaning-based fixation guidance during scene perception, as suggested by Henderson and colleagues (e.g., Henderson & Hayes, 2017), depends on the viewer’s attentional state. We anticipate that fixations during attentive viewing prioritize meaningful regions over visually salient regions. However, previous research does not allow us to make clear hypotheses regarding how fixations would be associated with meaning and visual salience during mind-wandering. On the one hand, results like those in Reichle et al. (2010) and Foulsham et al. (2013) seem to suggest that the association between fixations and meaning would be reduced during mind-wandering. On the other hand, there is evidence that fixations are involuntarily biased towards meaningful regions, even when meaning is irrelevant to the task. Finally, the results of Krasich et al. (2020) seem to suggest that mind-wandering does not change how fixations are allocated based on meaning, but those results are inconclusive for the reasons listed above.

Given the various possibilities of fixation guidance during mind-wandering, we opted for an exploratory-confirmatory approach. In the main study, we shall explore how do meaning and visual salience predict fixations during attentive viewing and mind-wandering. In the second study, we seek to confirm key findings of the main study using identical analyses on an independent dataset. Specifically, we present a re-analysis of the first study in Krasich et al. (2020). Upon request, the authors kindly shared their full data, stimuli, and meaning maps. Because of the similarity in study designs between the present work and Krasich et al. (2020), we were able to perform identical analyses on their data. These analyses provide additional insight beyond those in Krasich et al. (2020) in that we (1) directly compare meaning and visual salience in predicting fixation allocation, (2) assess the unique predictive power of meaning and visual salience by controlling for their shared variance, and (3) use Bayes factors to assess evidence for or against the null hypothesis.

Method

Participants

Sixty-four undergraduate students (age mean = 18.84, SD = .79; 64% female; all with normal eyesight) from the University of Michigan participated in this study for course credits. After discarding data from seven participants who had low tracking ratios (75%), the final sample consisted of 57 participants. Note that analyses performed with the same sample, but which examined distinct research questions from those in the current study, are reported elsewhere (Zhang et al., 2021).

Apparatus and stimuli

We selected 180 real-world scenes (60 exteriors, 60 interiors, and 60 landscapes) as the encoding stimuli from the SUN database (Xiao, Hays, Ehinger, Oliva, & Torralba, 2010) and the LabelMe database (Russell, Torralba, Murphy, & Freeman, 2008). Another 72 scenes from the same databases (24 for each type) were presented as lures in the memory test. From the encoding stimuli, we randomly chose a fixed set of 36 scenes (12 for each type) as probed scenes (followed by a thought probe). These probed scenes were the same for all participants. All scenes were presented in 1024*768 pixels on a 20.1-inch screen at approximately 70 cm to the participant. Thus, each scene subtended about 32∘*25∘ of visual angle.

Monocular eye movements were recorded by an EyeLink 1000 tracker at a sampling rate of 500 Hz. No chin rest was used and head movement was adjusted by tracking a sticker on the participant’s forehead. The experiment was implemented by the OpenSesame software (Mathôt, Schreij, & Theeuwes, 2012) with functions from the PyGaze package (Dalmaijer, Mathôt, & van der Stigchel, 2014).

Procedure

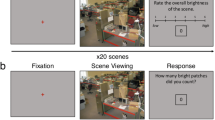

After signing the consent form, participants were asked to study scenes in preparation for a memory test. Participants learned that a thought probe would occasionally occur after some of the scenes and ask them whether they were mind-wandering or on-task during their viewing of the scenes just presented. The definitions of being on-task and mind-wandering were introduced (see Appendix for full instructions). Participants first completed a practice block that consisted of five example trials and an example recognition test. Participants also saw a thought probe after one of the five example trials. The experimenter instructed the participants on how to answer the thought probe and explained any remaining questions from the participants. Then, the experimental blocks began. Exteriors, interiors, and landscapes were presented in three separate blocks, with block order counterbalanced across participants. Each block had a “study-test” structure. In the study phase of each block, participants studied 60 scenes consecutively. Each trial started with a 500-ms display of “Next Picture”, and a 1-s display of a fixation cross. After the fixation cross, each scene was presented for 10 s. After that, the screen went black for 100 ms. A thought probe was presented after each of the to-be-probed scenes (Fig. 1). Trial order was randomized with the constraint that any two probed scenes were separated by at least three non-probed scenes. In the test phase of each block, participants indicated whether each scene was an old one or a new one from a set of 12 probed scenes, 12 randomly selected non-probed scenes, and 24 new scenes. The eye-tracker was calibrated using a 5-point calibration before each block. Eye movements were recorded during both the study and the test phase but only data from the 36 probed scenes during the study phase were analyzed and reported.

An example trial during the study phase. A trial started with a 500-ms display of “Next Picture”, followed by a 1-s fixation cross. Then the picture appeared for 10 s, followed by a black screen of 100 ms. For probed trials, a thought probe would then appear to ask whether participants were mind-wandering during the picture they just saw

Thought probes

At the beginning of the experiment, participants learned the definition of on-task (“you were focused on completing the task and were not thinking about anything unrelated to the task”) and mind-wandering (“you were thinking about something completely unrelated to the task”). Participants also learned intentional mind-wandering as “you intentionally decided to think about things that are unrelated to the task” and unintentional mind-wandering as “your thoughts drifted away despite your best intentions to focus on the task” (Seli, Risko, & Smilek, 2016). During the experiment, the thought probe asks “where was your attention during the last picture?”. Participants were asked to choose either “I was focusing on the picture” to indicate that they were on-task or “I was thinking about something else” to indicate that they were mind-wandering. If the latter was chosen, participants were further asked to indicate whether they were intentionally mind-wandering or unintentionally mind-wandering. In data analysis, intentional and unintentional mind-wandering were collapsed into a single “mind-wandering” category because intentional mind-wandering only took about 5% of the responses (in comparison, unintentional mind-wandering took about 22% of the responses).

Map creation

Salience maps

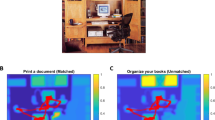

We generated salience maps for the 36 probed scenes using the GBVS toolbox with default parameter settings. For each scene, the toolbox computes an arbitrary salience value for each pixel from 0 to 1, with greater values indicating relatively higher visual salience. Figure 2 shows the salience map for an example picture. We chose to use GBVS to represent visual salience because it is a fairly successful salience model that is completely based on low-level features (Harel et al., 2007). It also allows us to compare our results with previous results by Henderson and colleagues.

Prediction map and fixation map examples. Panel a shows an example scene. Panel b shows the GBVS salience map. Panel c shows the meaning map (with the GBVS center bias). Panel d shows the fixation map based on all fixations of participants who reported being mind-wandering. Panel e shows the fixation map based on all fixations of participants who reported being on-task. Panel f shows the fixation map based on a random subset of “on-task” fixations, which has the same number of fixations as the total number of “mind-wandering” fixations associated with this scene. For panels d, e, and f, the squared linear correlations with meaning (\({R^{2}_{M}}\)) and visual salience (\({R^{2}_{S}}\)) are also shown

Meaning maps

Meaning maps were created following Henderson and Hayes (2017) using author-provided scripts. Each scene was cut into 300 fine (diameter = 87 pixels) and 108 coarse (diameter = 205 pixels) circular patches, producing 10800 unique fine patches and 3888 unique coarse patches in total. We recruited an independent sample of 147 people from Prolific.co to rate these patches for a 3 USD reward. Inclusion criteria included (1) age, 18–35, (2) nationality, US or Canada, (3) fluent language, English, (4) approval rate, >= 99%, (5) number of previous submissions, >= 100. As in Henderson and Hayes (2017), workers were asked to rate each scene patch on a six-point scale (1 - very low, 6 - very high) based on how informative or recognizable they thought it was. Patches were presented to the raters without showing the entire scene (i.e., context-free). Each worker rated a random set of 300 unique scene patches. Each patch was rated three times by three unique workers. Meaning map for each scene was created by averaging and smoothing the rating scores corresponding to the original pixels. As in Henderson and Hayes (2017), we applied the GBVS center bias (1 - “invCenterBias.mat” in the GBVS toolbox) to the meaning maps using pixel-wise multiplication so that the centers of the meaning and visual salience were equally weighted. The final meaning maps had each pixel taking a value from 0 to 1, with greater values indicating relatively higher meaningfulness. Figure 2 shows the meaning map for an example picture.

Fixation maps

For each scene, we created a fixation frequency matrix that represents how many times each pixel was fixated among participants who reported being on-task on that scene. Similarly, we created another fixation frequency matrix for each scene based on fixations of participants who reported mind-wandering. Both frequency matrices were smoothed using a Gaussian low-pass filter with a circular boundary and a cutoff frequency of -6 dB. These fixation maps represent the density of fixations during attentive viewing and mind-wandering, with each pixel taking a value between 0 to 1.

The construction of scene-level fixation density maps is affected by the number of fixations available. Fixation maps generated from a small number of fixations can be “noisier” compared to those generated from a large number of fixations. Thus, fixation maps based on a relatively small number of fixations may be more weakly associated with meaning and visual salience to begin with. In our case, the number of fixations available to construct an “on-task” fixation map was on average 1000 (SD = 128), whereas the number of fixations available to construct a “mind-wandering” fixation map for the same scene was on average 293 (SD = 92). This difference in total fixation count arose because (1) mind-wandering reports were relatively infrequent than were on-task reports and (2) scenes received fewer fixations if they were viewed during mind-wandering compared to when being on-task (more in the Results section).

To control for differences in the number of fixations, we created a third type of fixation map for each scene based on a subset of on-task fixations. This subset was randomly sampled without replacement from all on-task fixations, with its total number equal to the number of mind-wandering fixations on the same scene. The resulting fixation maps are directly comparable to the mind-wandering fixation maps because they are based on the same number of fixations. We hereafter refer to fixation maps based on all on-task fixations as “on-task (full)” and fixation maps based on a subset of fixations as “on-task (subset)”. Figure 2 shows the fixation maps created for an example picture.

Data analysis

We discarded fixations greater than 2000 ms or shorter than 80 ms (8.1% of data) and fixations outside of the screen (1.82% of data). Trials with no fixations (1.82% of trials) were also discarded. The remaining fixations were used to generate the fixation maps.

We used correlation-based metrics to evaluate the performance of prediction maps. The main advantages of correlation over other evaluation metrics are that it has a straightforward interpretation, treats false positives and false negatives equally, and makes relatively few assumptions about input format (Bylinskii, Judd, Oliva, Torralba, & Durand, 2017). It also allows us to compare our results to those of Henderson and colleagues.

We computed the linear correlations based on the https://github.com/cvzoya/saliency/blob/master/code_forMetrics/CC.mCC.m function from the MIT Saliency Benchmark project. The code was adapted to Python for the current study. The function first normalizes the to-be-correlated maps to have zero mean and unit variance. Then, it converts both maps into one-dimensional arrays and computes their Pearson correlation. Finally, we squared the resulted correlations to obtain the proportion of variance (R2) explained by the prediction map, as in Henderson and Hayes (2017).

After applying the GBVS center bias, meaning maps and salience maps were correlated at r = .84Footnote 1. Therefore, we also computed semipartial correlations to quantify the unique variance in fixation maps explained by meaning and visual salience. A semipartial correlation indicates the unique association between a predictor and a dependent variable while controlling for another predictor. The squared semipartial correlation thus indicates the proportion of variance in the dependent variable that is uniquely associated with one predictor. In our case, the squared semipartial correlation can be used to quantify the predictive power of meaning independent of visual salience, and vice versa. Semipartial correlations were calculated using the partial_corr function in the pingouin package.

Statistical analysis was conducted using R (Version 4.0.5; R Core Team, 2019)Footnote 2. All analyses were conducted at the scene level, as the creation of fixation maps requires pooling fixations across participants. For key results, we supplemented frequentist tests with Bayes factors (BF) to quantify support for or against the null hypothesis. BF s for t tests and ANOVAs were calculated using functions from the BayesFactor package with the default JZS priors. BF s for linear mixed models were calculated based on the BIC approximation (Wagenmakers, 2007). We interpret BF using the following criteria: 1: no evidence; 1–3: anecdotal evidence; 3–10: moderate evidence; 10–30: strong evidence; 30–100: very strong evidence; > 100: extreme evidence.

Results

Mind-wandering rate and global eye movement measures

On average across scenes, 26.50% (SD = 7.38%) of the participants reported that they were mind-wandering. There were fewer fixations on a scene when it was viewed during mind-wandering (Mind-wandering: M = 19.81, SD = 2.65; On-task: M = 24.59, SD = 1.78; t(35) = -12.40, p < .001, d = -2.12). Fixation durations during mind-wandering tended to be longer (Mind-wandering: M = 362.83 ms, SD = 47.52; On-task: M = 313.54 ms, SD = 27.16; t(35) = 6.38, p < .001, d = 1.27). Finally, a scene was less likely to be recognized later if it was viewed while mind-wandering (Mind-wandering: M = 71.02%, SD = 16.56%; On-task: M = 89.27%, SD = 6.54%; t(35) = -7.04, p < .001, d = -1.45). Therefore, mind-wandering differed from attentive viewing in several global eye movement measures and was associated with worse memory of the scenes.

Squared linear correlations (R 2)

Figure 3a shows squared linear correlations by prediction map (meaning/salience) and fixation map (mind-wandering/on-task: subset/on-task: full). The means and standard deviations of each group are presented in Table 1. Overall, meaning explained about 40% of the variance in the fixation maps, whereas visual salience explained about 30% of the variance. These results are comparable with those of Henderson and Hayes (2017), who also used a memory task and found that meaning explained 53% of the variance and visual salience explained 38% of the variance. Two-tailed t tests (see Table 1) confirmed that meaning explained significantly more variance than did visual salience in all the fixation maps.

Squared linear correlations (a) and squared semipartial correlations (b) by prediction map (meaning/salience) and fixation map (mind-wandering/on-task: subset/on-task: full). Each level on the x-axis displays individual data points (left), the smoothed probability distribution (right), and the mean value with 95% confidence interval (middle). “Meaning | Salience”: Meaning, controlling for visual salience. “Salience | Meaning”: Visual salience, controlling for meaning

To directly compare mind-wandering and attentive viewing, we conducted a 2 (prediction map: meaning/salience)*2 (fixation map: mind-wandering/on-task: subset) ANOVA. To reiterate, we used the on-task (subset) fixation maps to compare with the mind-wandering fixation maps because they were based on the same number of fixations. To assess support for the null hypotheses, we also conducted a Bayes factor top-down analysis: for each effect in the ANOVA, we calculated a Bayes factor for dropping the effect from the full model. A larger BF, then, would indicate stronger evidence for the absence of the effect. The results are shown in Table 2. There was a robust main effect of prediction map, such that meaning overall explained more variance compared to visual salience. The main effect of fixation map was not significant, with a BF indicating moderate evidence for the absence of the effect. Thus, fixations during mind-wandering did not seem to be globally less associated with meaning and visual salience compared to on-task fixations. Importantly, the interaction between fixation map and prediction map was not significant, with a BF indicating moderate evidence for the absence of the effect. This suggests that the advantage of meaning over visual salience in predicting fixations did not differ between attentive viewing and mind-wandering.

Squared semipartial correlations (unique R 2)

To examine the unique variance explained by meaning and visual salience while controlling for their shared variance, we computed the squared semipartial correlations. These data are presented in Fig. 3b and Table 1. Overall, meaning explained about 13% of unique variance in fixation maps over and above visual salience, and visual salience explained about 3% of unique variance in fixation maps over and above meaning. These results are also comparable with those in Henderson and Hayes (2017), who found that meaning and visual salience explained 19% and 4% of unique variance, respectively. Two-tailed t tests (see Table 1) revealed that meaning explained significantly more unique variance than did visual salience in all the fixation mapsFootnote 3.

Next, we conducted a 2 (prediction map: meaning/salience)*2 (fixation map: mind-wandering/on-task: subset) ANOVA on the squared semipartial correlations. The results are presented in Table 1. We again found a robust main effect of prediction map. The main effect of fixation map was not significant, with a BF indicating moderate evidence for the absence of the effect. The interaction between prediction map and fixation map was also not significant, with a BF indicating moderate evidence for the absence of the effect. Thus, when looking at the unique variance only, the advantage of meaning and visual salience still did not differ between attentive viewing and mind-wandering.

Overall, the results indicate that meaning, compared to visual salience, was a better predictor of fixation allocation during both attentive viewing and mind-wandering.

Temporal analyses

Previous studies suggest that the most robust changes in gaze parameters occur immediately before self-reported mind-wandering reports, and these changes diminish further back in time (Krasich et al., 2018; Reichle et al., 2010; Zhang, Miller, Sun, & Cortina, 2020). Thus, one could argue that the effect of mind-wandering only emerged towards the end of the trial (perhaps because participants finished processing the scene and started to mind-wander). If so, then analyses that summarized over the whole trial might have missed periods where mind-wandering-related changes were most likely to occur.

We assigned fixations to ten 1-s bins based on their end times in each trial. Then, a fixation map was generated for each time bin, each scene, and each attentional state. We constructed the fixation maps as previously described, but this time also for each time bin.

Data of the squared linear correlations are presented in Fig. 4a. We fit linear mixed models, separately for each fixation map, to estimate the variance explained by meaning and visual salience as a function of trial time. In each model, fixed effects included prediction map (meaning [reference level] vs. salience), time (continuous variable, z-scored), and their interaction term. (formula: PredMap ∗ time). Each model also included random intercepts by scene identity (formula: (1|Scene)). If the variance explained by meaning and visual salience followed different time trends, we would expect a significant interaction between prediction map and time.

Squared linear and semipartial correlations over time. Panel a shows results for the squared linear correlations. Panel b shows results for the squared semipartial correlations. “Meaning | Salience”: Meaning, controlling for visual salience. “Salience | Meaning”: Visual salience, controlling for meaning. Error bars indicate 95% confidence intervals

Results of the linear mixed models are presented in Table 3. In all three models, visual salience on average explained significantly less variance compared to meaning, replicating results summarized over the whole trial. More importantly, time did not significantly interact with prediction map in any of the models. Bayes factor top-down analyses indicate strong evidence in favor of dropping the interaction term from each model. Therefore, the advantage of meaning over visual salience in predicting fixation allocation seems to hold for the entire viewing time, during both attentive viewing and mind-wandering.

Data of the squared semipartial correlations are presented in Fig. 4b. We fit the same set of linear mixed models to estimate the unique variance explained by meaning and visual salience as a function of time. The results are presented in Table 4. In all models, visual salience on average explained less unique variance compared to meaning, replicating results summarized over the whole trial. Again, we did not find a significant interaction between prediction map and time in any of the models. Bayes factor top-down analyses indicate strong evidence in favor of dropping the interaction term from each model. Therefore, after controlling for their shared variance, the advantage of meaning over visual salience in predicting fixation allocation still held for the entire viewing time, during both attentive viewing and mind-wandering.

Overall, the temporal analyses show that meaning was consistently a better predictor compared to visual salience over the entire viewing time, during both mind-wandering and attentive viewing.

Re-analysis of Krasich et al. (2020)

To confirm findings in the main study, we performed the same analyses on part of the data from Krasich et al., (2020) that is related to meaning maps and GBVS salience maps. We anticipate a replication of two key findings of the main study: (1) meaning, compared to visual salience, was a better predictor of fixation allocation during both attentive viewing and mind-wandering, and (2) these patterns held for the entire viewing time.

In Krasich et al. (2020), 51 participants viewed 12 urban scenes in preparation for a later memory test. Each image was presented for 45 ˜ 75 s. A thought probe occurred after eight randomly selected trials, asking “In the moments right before this message, were you paying attention to the picture or were you zoning out?”. “Zoning out” was defined as “looking at the picture but thinking of something else entirely”. Data analyses included 40 s of data before the end of the trial.

We were able to obtain all data, stimuli, and unbiased meaning maps from the original authors. We opted to use the same analysis window (i.e., 40 s) in the current analyses. As in the main study, for each image, we created three types of fixation maps by pooling fixations across participants during the 40-s period: mind-wandering, on-task (full), and on-task (subset). The creation of on-task (subset) maps was necessary because in Krasich et al. (2020), mind-wandering was also infrequently reported (27%) and scenes associated with mind-wandering received fewer fixations.

GBVS salience maps were generated using the GBVS toolbox with default settings. The final salience maps had each pixel taking a value from 0 to 1, with greater values indicating relatively higher visual salience. Krasich et al. (2020) also followed the steps of Henderson and Hayes (2017) to create the meaning maps. One exception is that Krasich et al. (2020) did not apply the GBVS center bias to the meaning maps. However, this is necessary when the goal is to directly compare meaning and visual salience. Therefore, we applied the GBVS center bias to the meaning maps in Krasich et al. (2020). The final meaning maps had each pixel taking a value from 0 to 1, with greater values indicating relatively higher meaningfulness.

Squared linear correlations (R 2)

Results of squared linear correlations are presented in Fig. 5a and Table 5. As in the main study, two-tailed t tests showed that meaning explained significantly more variance than did visual salience in all types of fixation maps.

Squared linear and semipartial correlations associated with each scene, based on data from Krasich et al., (2020). a Raincloud plots showing the squared linear correlations. b Raincloud plots showing the squared semipartial correlations

To directly compare mind-wandering and attentive viewing, we conducted a 2 (prediction map: meaning/salience)*2 (fixation map: mind-wandering/on-task: subset) ANOVA. The results are presented in Table 6. As in the main study, we found a significant main effect of prediction map, a non-significant main effect of fixation map, and a non-significant interaction between prediction map and fixation map. Bayes factor top-down analysis found very strong evidence against the absence of prediction map, moderate evidence for the absence of fixation map, and anecdotal evidence for the absence of the interaction term.

Squared semipartial correlations (unique R 2)

Results of squared semipartial correlations are presented in Fig. 5b and Table 5. As shown in Table 5, after removing their shared variance, meaning was still a stronger predictor than visual salience; and this was the case for all types of fixation maps.

Next, we conducted a 2 (prediction map: meaning/salience)*2 (fixation map: mind-wandering/on-task: subset) ANOVA. The results are presented in Table 6. Again, we found a significant main effect of prediction map, a non-significant main effect of fixation map, and a non-significant interaction between prediction map and fixation map. Bayes factor top-down analysis found extreme evidence against the absence of the effect of prediction map, moderate evidence for the absence of the effect of fixation map. and anecdotal evidence for the absence of the interaction effect.

Temporal analyses

Next, we examined if the predictive power of meaning and visual salience changed as a function of time. We assigned fixations during the 40-s period to forty 1-s time bins based on their ending time. Then, we generated the three types of fixation maps for each bin and each scene. By using forty 1-s bins, we can examine the fine-grained time trends of meaning and visual salience on a broader time scale (vs. 10 s in the main study).

Figure 6a shows data for the squared linear correlations over time. As in the main study, we fit linear mixed models, separately for each fixation map, to estimate the variance explained by meaning and visual salience over time. The results are presented in Table 7. In all three models, visual salience explained significantly less variance compared to meaning, on average, across all time bins. Moreover, none of the models showed a significant interaction between prediction map and time. Bayes factor top-down analyses indicate strong to very strong evidence in favor of dropping the interaction term from each model. Therefore, the predictive power of meaning and visual salience did not seem to change over time (with meaning outperforming visual salience overall), regardless of which fixation map they were predicting.

The average of squared linear and semipartial correlations of meaning and visual salience over time, based on data from Krasich et al., (2020). Panel a shows results for the squared linear correlations. Panel b shows results for the squared semipartial correlations. “Meaning | Salience”: Meaning, controlling for visual salience. “Salience | Meaning”: Visual salience, controlling for meaning. Error bars indicate 95% confidence intervals

Figure 6b shows data for the squared semipartial correlations. We fit the same set of linear mixed models to estimate the unique variance explained by meaning and visual salience over time. The results, as presented in Table 8, show a robust overall difference between meaning and visual salience in all models. Moreover, the interaction between prediction map and time was not significant in any of the models. Bayes factor top-down analyses indicate strong to very strong evidence in favor of dropping the interaction term from each model.

Overall, the re-analysis of data from Krasich et al. (2020) confirmed that meaning, compared to visual salience, was a significantly better predictor of the allocation of fixations during both mind-wandering and attentive viewing. In addition, this pattern seems to hold for an extended viewing time (40 s).

Discussion

Results of two independent studies converged to show a clear pattern: viewers preferred meaningful regions over visually salient regions during both attentive viewing and mind-wandering. This relationship held even after controlling for the substantial shared variance between meaning and visual salience, and it did not seem to vary based on viewing time. These results are consistent with previous findings showing that attention is guided by meaning even in situations where meaning is irrelevant, such as in a brightness rating task and a brightness search task (Peacock et al., 2019a). Together, these results suggest an involuntary semantic bias in real-world scene perception regardless of the nature of the task or the attentional state of the viewer.

The current results provide some clues about the nature of perceptual decoupling (Schooler et al., 2011; Smallwood, 2013) in the context of scene perception. One of the potential ways the decoupling process could unfold is that it breaks down the association between fixation allocation and scene meaning. Put simply, participants may scan the scene haphazardly during mind-wandering. However, our results did not support this idea. Across two studies, both meaning and visual salience explained significant portions of unique variance in fixation allocation during mind-wandering, with meaning consistently outperforming visual salience. On the other hand, we found that mind-wandering was associated with changes in global measures, including fewer fixations and longer fixation durations (so did Krasich et al., 2018). Zhang et al. (2021), based on the same data set in the main study, found that mind-wandering (unintentional mind-wandering specifically) was associated with changes in several other global measures, including reduced fixation dispersion (but see Krasich et al., 2018), increased eye-blinks, and repetitive scanpaths. Thus, existing evidence seems to suggest that perceptual decoupling during scene perception may be best characterized as a change in global eye movement patterns rather than changes in associations with local features (at least defined by GBVS and meaning maps). For example, viewers during mind-wandering may scan the scene less actively (fewer fixations and longer durations). But whenever a saccade is made, its landing position will be biased towards meaningful regions in the scene just as during attentive viewing.

Why would scene semantics continue to affect landing positions even during mind-wandering? One possible explanation has to do with the rapid and automatic extraction of scene gist. A scene gist provides information about the scene’s category, its spatial layout, specific objects it may contain, etc., and this information has been shown to strongly bias the deployment of attention (e.g., Oliva & Torralba, 2006). There is ample evidence that scene gist can be extracted rapidly with minimal attention (e.g., Greene & Fei-Fei, 2014; Joubert, Rousselet, Fize, & Fabre-Thorpe, 2007; Oliva & Torralba, 2006; Torralba, Oliva, Castelhano, & Henderson, 2006; Võ & Henderson, 2010). Hayes and Henderson (2019) suggested that scene gist initially provides a coarse representation of where meaningful information is likely to occur, and subsequent fixations are used to refine this coarse semantic representation. We speculate that participants were able to extract scene gists even during mind-wandering. Once the gist of a scene is formed, it helps to constrain eye movements even in the presence of task-unrelated thoughtsFootnote 4.

The current study used GBVS to represent visual salience. GBVS is just one of the many models available to represent salience (Borji & Itti, 2012). Different salience models make different assumptions about how the visual system works and compute salience in unique ways. The GBVS model was selected because it is quite successful and has been used in previous research to compare against meaning (e.g., Henderson & Hayes, 2017). That said, it is unclear if idiosyncratic procedures for salience computation would affect the current results. In their main study, Krasich et al. (2020) found that fixations during mind-wandering tended to land on more visually salient regions compared to attentive viewing, but only when salience was measured by the Adaptive Whitening Saliency Model (AWS; Garcia-Diaz, Fdez-Vidal, Pardo, & Dosil, 2012) and RARE2012 (Riche, Mancas, Duvinage, Mibulumukini, Gosselin, & Dutoit, 2013). One way in which AWS and RARE2012 differ from GBVS is that they do not incorporate a center bias in their salience computation, among many other differences (Riche et al., 2013). Thus, these models may capture different aspects of salience that are processed differently depending on the viewer’s attentional state.

Similarly, there is an emerging debate on what kind of semantic information meaning maps can and cannot capture. The current study used context-free meaning maps, that is, scene patches were rated in isolation without seeing the full scene (Hayes & Henderson, 2019; Henderson & Hayes, 2017). However, the meaning of local scene regions can depend on the global context. For example, if a scene shows a pair of shoes on a dinner table, the pair of shoes will likely to be rated as highly meaningful if the full scene is given. However, context-free meaning maps are insensitive to object-scene semantic relationships (Pedziwiatr, Kümmerer, Wallis, Bethge, & Teufel, 2021), although this does not follow that meaning maps do not capture any semantic information at all (Henderson, Hayes, Peacock, & Rehrig, 2021). Then, it is possible that viewers during mind-wandering are less sensitive to certain aspects of scene meaningfulness that cannot be captured by context-free meaning maps (e.g., object-scene inconsistencies). In sum, it remains an open question if mind-wandering would alter certain aspects of scene perception that are not captured by the GBVS model or meaning maps.

Another question is whether meaning-based fixation guidance may falter only during some particular types of mind-wandering. The current study (main study) did measure intentional and unintentional mind-wandering (Seli et al., 2016). But the number of cases of intentional mind-wandering (5% of thought probe responses) was likely to be too low to produce any reliable findings. It is, however, good that the viewer usually does not deliberately disengage from the scene. Besides the intentionality of mind-wandering, other studies suggest that mind-wandering episodes may vary in their levels of absorbedness (Schad, Nuthmann, & Engbert, 2012), intensity (Jubera-García, Gevers, & Van Opstal, 2019), arousal (Unsworth & Robison, 2018), and the extent to which individuals are aware of their mind-wandering state (Schooler et al., 2011). It is unclear whether any of these variables would modulate the role of meaning and visual salience in predicting fixation allocation. To detect any of these effects reliably, a much larger sample size and more trials may be necessary.

Conclusion

Overall, the current study shows that viewers prioritize meaningful regions (as measured by context-free meaning maps) over visually salient regions (as measured by GBVS) in real-world scenes during both mind-wandering and attentive viewing. While most studies on mind-wandering focus on how it is different from when people are attentive, the current results suggest that certain aspects of human cognition remain intact. Assessing both what is changed and what is not changed during mind-wandering will help paint a more comprehensive picture of this intriguing phenomenon.

Notes

The unbiased meaning maps were correlated with salience maps at r = .60.

We, furthermore, used the R-packages BayesFactor (Version 0.9.12.4.2; Morey & Rouder, 2018), dplyr (Version 1.0.5; Wickham, François, Henry, & Müller, 2020), easystats (Version 0.4.0; Makowski, Ben-Shachar, & Lüdecke, 2020), knitr (Version 1.33.1; Xie, 2015), lme4 (Version 1.1.26; Bates, Mächler, Bolker, & Walker, 2015), papaja (Version 0.1.0.9997; Aust & Barth, 2020), patchwork (Version 1.1.1; Pedersen, 2019), raincloudplots (Version 0.2.0; Allen, Poggiali, Whitaker, Marshall, van Langen, & Kievit, 2021), and tidyr (Version 1.1.3; Wickham & Henry, 2020).

We also conducted one-sample t tests to examine if the unique variance explained by meaning and visual salience, after removing their shared variance, was significantly greater than zero. The results (see the Appendix) show that both meaning and visual salience explained statistically significant amount of unique variance in all fixation maps.

It is also worth noting that the extraction of scene gist may be facilitated by the fact that in the present work, the same type of scenes were presented in groups. We did so out of the concern that mixing different types of scenes would induce a novelty that reduce the mind-wandering rate (Faber, Radvansky, & D’Mello, 2018).

References

Allen, M., Poggiali, D., Whitaker, K., Marshall, T. R., van Langen, J., & Kievit, R. A. (2021). Raincloud plots: a multi-platform tool for robust data visualization [version 2; peer review: 2 approved] Wellcome Open Research 2021, 4:63. https://doi.org/10.12688/wellcomeopenres.15191.2

Anderson, N. C., Ort, E., Kruijne, W., Meeter, M., & Donk, M. (2015). It depends on when you look at it: Salience influences eye movements in natural scene viewing and search early in time. Journal of Vision, 15(5), 9. https://doi.org/10.1167/15.5.9

Aust, F., & Barth, M. (2020). papaja: Create APA manuscripts with R Markdown. https://github.com/crsh/papaja

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. https://doi.org/10.18637/jss.v067.i01

Borji, A., & Itti, L. (2012). State-of-the-art in visual attention modeling. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(1), 185–207.

Bylinskii, Z., Judd, T., Oliva, A., Torralba, A., & Durand, F. (2017). What do different evaluation metrics tell us about saliency models? arXiv:1604.03605[Cs].

Dalmaijer, E. S., Mathôt, S., & van der Stigchel, S. (2014). Pygaze: An open-source, cross-platform toolbox for minimal-effort programming of eyetracking experiments. Behavior Research Methods, 46(4), 913–921.

Faber, M., Krasich, K., Bixler, R., Brockmole, J., & D’Mello, S. (2020). The eye-mind wandering link: Identifying gaze indices of mind wandering across tasks. Journal of Experimental Psychology: Human Perception and Performance.

Faber, M., Radvansky, G. A., & D’Mello, S. K. (2018). Driven to distraction: A lack of change gives rise to mind wandering. Cognition, 173, 133–137. https://doi.org/10.1016/j.cognition.2018.01.007

Foulsham, T., Farley, J., & Kingstone, A. (2013). Mind wandering in sentence reading: Decoupling the link between mind and eye. Canadian Journal of Experimental Psychology/Revue Canadienne de Psychologie Experimentalé, 67(1), 51.

Frank, D. J., Nara, B., Zavagnin, M., Touron, D. R., & Kane, M. J. (2015). Validating older adults’ reports of less mind-wandering: An examination of eye movements and dispositional influences. Psychology and Aging, 30(2), 266–278. https://doi.org/10.1037/pag0000031

Garcia-Diaz, A., Fdez-Vidal, X. R., Pardo, X. M., & Dosil, R. (2012). Saliency from hierarchical adaptation through decorrelation and variance normalization. Image and Vision Computing, 30(1), 51–64.

Greene, M. R., & Fei-Fei, L. (2014). Visual categorization is automatic and obligatory: Evidence from Stroop-like paradigm. Journal of Vision, 14(1), 14–14.

Harel, J., Koch, C., & Perona, P. (2007). Graph-based visual saliency. Advances in Neural Information Processing Systems, 545–552.

Hayes, T. R., & Henderson, J. M. (2019). Scene semantics involuntarily guide attention during visual search. Psychonomic Bulletin & Review, 26(5), 1683–1689. https://doi.org/10.3758/s13423-019-01642-5

Henderson, J. M. (2003). Human gaze control during real-world scene perception. Trends in Cognitive Sciences, 7(11), 498–504.

Henderson, J. M., Brockmole, J. R., Castelhano, M. S., & Mack, M. (2007). Visual saliency does not account for eye movements during visual search in real-world scenes. In Eye movements (pp. 537–III): Elsevier.

Henderson, J. M., & Hayes, T. R. (2017). Meaning-based guidance of attention in scenes as revealed by meaning maps. Nature Human Behaviour, 1, 7.

Henderson, J. M., Hayes, T. R., Peacock, C. E., & Rehrig, G. (2021). Meaning maps capture the density of local semantic features in scenes: A reply to Pedziwiatr, Kümmerer, Wallis, Bethge & Teufel (2021). Cognition, 104742. https://doi.org/10.1016/j.cognition.2021.104742

Henderson, J. M., Malcolm, G. L., & Schandl, C. (2009). Searching in the dark: Cognitive relevance drives attention in real-world scenes. Psychonomic Bulletin & Review, 16(5), 850–856.

Itti, L., & Koch, C. (2000). A saliency-based search mechanism for overt and covert shifts of visual attention. Vision Research, 40(10), 1489–1506.

Joubert, O. R., Rousselet, G. A., Fize, D., & Fabre-Thorpe, M. (2007). Processing scene context: Fast categorization and object interference. Vision Research, 47(26), 3286–3297. https://doi.org/10.1016/j.visres.2007.09.013

Jubera-García, E., Gevers, W., & Van Opstal, F. (2019). Influence of content and intensity of thought on behavioral and pupil changes during active mind-wandering, off-focus and on-task states. Attention, Perception, & Psychophysics. https://doi.org/10.3758/s13414-019-01865-7

Kam, J. W. Y., Dao, E., Farley, J., Fitzpatrick, K., Smallwood, J., Schooler, J. W., & Handy, T. C. (2011). Slow fluctuations in attentional control of sensory cortex. Journal of Cognitive Neuroscience, 23(2), 460–470. https://doi.org/10.1162/jocn.2010.21443

Kane, M. J., Brown, L. H., McVay, J. C., Silvia, P. J., Myin-Germeys, I., & Kwapil, T. R. (2007). For whom the mind wanders, and when: An experience-sampling study of working memory and executive control in daily life. Psychological Science, 18(7), 614–621.

Kane, M. J., Gross, G. M., Chun, C. A., Smeekens, B. A., Meier, M. E., Silvia, P. J., & Kwapil, T. R. (2017). For whom the mind wanders, and when, varies across laboratory and daily-life settings. Psychological Science, 28(9), 1271–1289. https://doi.org/10.1177/0956797617706086

Killingsworth, M. A., & Gilbert, D. T. (2010). A wandering mind is an unhappy mind. Science, 330(6006), 932–932. https://doi.org/10.1126/science.1192439

Krasich, K., Huffman, G., Faber, M., & Brockmole, J. R. (2020). Where the eyes wander: The relationship between mind wandering and fixation allocation to visually salient and semantically informative static scene content. Journal of Vision, 20(9), 10. https://doi.org/10.1167/jov.20.9.10

Krasich, K., McManus, R., Hutt, S., Faber, M., D’Mello, S. K., & Brockmole, J. R. (2018). Gaze-based signatures of mind wandering during real-world scene processing. Journal of Experimental Psychology: General, 147(8), 1111–1124. https://doi.org/10.1037/xge0000411

Makowski, D., Ben-Shachar, M. S., & Lüdecke, D. (2020). The easystats collection of r packages. GitHub. https://github.com/easystats/easystats

Mathôt, S., Schreij, D., & Theeuwes, J. (2012). Opensesame: An open-source, graphical experiment builder for the social sciences. Behavior Research Methods, 44(2), 314–324.

Morey, R. D., & Rouder, J. N. (2018). BayesFactor: Computation of bayes factors for common designs. https://CRAN.R-project.org/package=BayesFactor

Oliva, A., & Torralba, A. (2006). Building the gist of a scene: The role of global image features in recognition. Progress in Brain Research, 155, 23–36.

Parkhurst, D., Law, K., & Niebur, E. (2002). Modeling the role of salience in the allocation of overt visual attention. Vision Research, 42(1), 107–123.

Peacock, C. E., Hayes, T. R., & Henderson, J. M. (2019a). Meaning guides attention during scene viewing, even when it is irrelevant. Attention, Perception, & Psychophysics, 81(1), 20–34. https://doi.org/10.3758/s13414-018-1607-7

Peacock, C. E., Hayes, T. R., & Henderson, J. M. (2019b). The role of meaning in attentional guidance during free viewing of real-world scenes. Acta Psychologica, 198, 102889. https://doi.org/10.1016/j.actpsy.2019.102889

Pedersen, T. L. (2019). Patchwork: The composer of plots. https://CRAN.R-project.org/package=patchwork

Pedziwiatr, M. A., Kümmerer, M., Wallis, T. S., Bethge, M., & Teufel, C. (2021). Meaning maps and saliency models based on deep convolutional neural networks are insensitive to image meaning when predicting human fixations. Cognition, 206, 104465.

R Core Team (2019). R: A language and environment for statistical computing. R Foundation for Statistical Computing. https://www.R-project.org/

Reichle, E. D., Reineberg, A. E., & Schooler, J. W. (2010). Eye movements during mindless reading. Psychological Science, 21(9), 1300–1310.

Riche, N., Mancas, M., Duvinage, M., Mibulumukini, M., Gosselin, B., & Dutoit, T. (2013). Rare2012: a multi-scale rarity-based saliency detection with its comparative statistical analysis. Signal Processing: Image Communication, 28(6), 642–658.

Russell, B. C., Torralba, A., Murphy, K. P., & Freeman, W. T. (2008). Labelme: A database and web-based tool for image annotation. International Journal of Computer Vision, 77(1), 157–173.

Schad, D. J., Nuthmann, A., & Engbert, R. (2012). Your mind wanders weakly, your mind wanders deeply: Objective measures reveal mindless reading at different levels. Cognition, 125(2), 179–194. https://doi.org/10.1016/j.cognition.2012.07.004

Schooler, J. W., Smallwood, J., Christoff, K., Handy, T. C., Reichle, E. D., & Sayette, M. A. (2011). Meta-awareness, perceptual decoupling and the wandering mind. Trends in Cognitive Sciences, 15(7), 319–326. https://doi.org/10.1016/j.tics.2011.05.006

Seli, P., Risko, E. F., & Smilek, D. (2016). On the necessity of distinguishing between unintentional and intentional mind wandering. Psychological Science, 27(5), 685–691. https://doi.org/10.1177/0956797616634068

Smallwood, J. (2013). Distinguishing how from why the mind wanders: A process–occurrence framework for self-generated mental activity. Psychological Bulletin, 139(3), 519–535. https://doi.org/10.1037/a0030010

Steindorf, L., & Rummel, J. (2020). Do your eyes give you away? a validation study of eye-movement measures used as indicators for mindless reading. Behavior Research Methods, 52(1), 162–176.

Tatler, B. W., Baddeley, R. J., & Gilchrist, I. D. (2005). Visual correlates of fixation selection: Effects of scale and time. Vision Research, 45(5), 643–659. https://doi.org/10.1016/j.visres.2004.09.017

Tatler, B. W., Hayhoe, M. M., Land, M. F., & Ballard, D. H. (2011). Eye guidance in natural vision: Reinterpreting salience. Journal of Vision, 11(5), 5–5.

Theeuwes, J. (2010). Top–down and bottom–up control of visual selection. Acta Psychologica, 135(2), 77–99. https://doi.org/10.1016/j.actpsy.2010.02.006

Torralba, A., Oliva, A., Castelhano, M. S., & Henderson, J. M. (2006). Contextual guidance of eye movements and attention in real-world scenes: The role of global features in object search. Psychological Review, 113(4), 766.

Underwood, G., Foulsham, T., van Loon, E., Humphreys, L., & Bloyce, J. (2006). Eye movements during scene inspection: A test of the saliency map hypothesis. European Journal of Cognitive Psychology, 18 (3), 321–342. https://doi.org/10.1080/09541440500236661

Unsworth, N., & Robison, M. K. (2018). Tracking arousal state and mind wandering with pupillometry. Cognitive, Affective, & Behavioral Neuroscience, 18 (4), 638–664. https://doi.org/10.3758/s13415-018-0594-4

Võ, M. L.-H., & Henderson, J. M. (2010). The time course of initial scene processing for eye movement guidance in natural scene search. Journal of Vision, 10(3), 14–14. https://doi.org/10.1167/10.3.14

Wagenmakers, E. -J. (2007). A practical solution to the pervasive problems of p values. Psychonomic Bulletin & Review, 14(5), 779–804. https://doi.org/10.3758/BF03194105

Wickham, H., François, R., Henry, L., & Müller, K. (2020). Dplyr: A grammar of data manipulation. https://CRAN.R-project.org/package=dplyr

Wickham, H., & Henry, L. (2020). Tidyr: Tidy messy data. https://CRAN.R-project.org/package=tidyr

Xiao, J., Hays, J., Ehinger, K. A., Oliva, A., & Torralba, A. (2010). Sun database: Large-scale scene recognition from abbey to zoo. 2010 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 3485–3492.

Xie, Y. (2015) Dynamic documents with R and knitr, (2nd edn.) Boca Raton: Chapman; Hall/CRC. https://yihui.org/knitr/

Zhang, H., Anderson, N. C., & Miller, K. F. (2021). Refixation patterns of mind-wandering during real-world scene perception. Journal of Experimental Psychology: Human Perception and Performance, 47(1), 36.

Zhang, H., Miller, K. F., Sun, X., & Cortina, K. S. (2020). Wandering eyes: Eye movements during mind wandering in video lectures. Applied Cognitive Psychology, 34(2), acp. 3632. https://doi.org/10.1002/acp.3632

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Open Practices Statement

Data, code, and stimuli for this paper are accessible at https://osf.io/jf65u/ (eye-tracking analyses) and https://github.com/HanZhang-psych/SceneMeaningMapping (creating meaning maps). None of the experiments were preregistered.

Appendices

Appendix A: One-sample t tests of the squared semipartial correlations (unique R 2)

Main Study

Krasich et al. (2020)

Appendix B: Performance indices of linear mixed models in the paper

The Main Study

The Re-analysis of Krasich et al. (2020)

Appendix C: Instructions

At the beginning of the study, the experimenter announced the following to the participant:

In this task, we will show you a series of pictures on the screen. Your task is to remember each picture for a later memory test. There will be three types of pictures: exteriors (outside of a building, for example, street views), interiors (inside of a building, for example, a bedroom), and natural views (for example, mountains). These three types of pictures will be divided into three blocks. In each block, you will see only one type of pictures. Each block has a study phase and a test phase. In the study phase, we will show you 60 pictures of the same type one by one. You will have 10 s to remember each picture. In the test phase, your memory on these pictures will be tested. We will present a series of pictures, and you need to indicate whether you just saw each picture. Then, we will move on to the next block (next type of pictures). You can have a rest between blocks.

During the study, your eye movements will be recorded. We would like you to reduce your body and head movement for better tracking quality.

One last thing: during the study phase, occasionally there will be a “thought-probe” asking if you were “mind-wandering”. Here is more information (Give the following to the participant, ask them to read it, and ask them if they have any questions).

Every once in a while, the task will temporarily stop and you will be presented with a screen asking you to indicate whether you were on-task or mind-wandering just before the screen appeared. Being on-task means that, just before the screen appeared, you were focused on completing the task and were not thinking about anything unrelated to the task. Some examples of on-task thoughts include thoughts about the picture, or thoughts about your performance on the task.

On the other hand, mind-wandering means that, just before the screen appeared, you were thinking about something completely unrelated to the task. Some examples of mind-wandering include thoughts about what to eat for dinner, thoughts about plans you have with friends, or thoughts about an upcoming test.

Importantly, mind-wandering can occur either because you intentionally decided to think about things that are unrelated to the task, or because your thoughts unintentionally drifted away to task-unrelated thoughts, despite your best intentions to focus on the task. When the thought-sampling screen is presented, we would like you to indicate whether any mind-wandering you might experience is intentional or unintentional.

Please be honest in reporting your thoughts. It is perfectly normal to mind-wander during the task. Your participation credit will not be affected by those mind-wandering reports. Also, the location of the thought probes is random. Please complete the task just as usual.

Do you have any remaining questions?

Appendix D: Meaning and salience maps for each probed picture

Rights and permissions

About this article

Cite this article

Zhang, H., Anderson, N.C. & Miller, K.F. Scene meaningfulness guides eye movements even during mind-wandering. Atten Percept Psychophys 84, 1130–1150 (2022). https://doi.org/10.3758/s13414-021-02370-6

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.3758/s13414-021-02370-6