Abstract

Theories of visual attention hypothesize that target selection depends upon matching visual inputs to a memory representation of the target – i.e., the target or attentional template. Most theories assume that the template contains a veridical copy of target features, but recent studies suggest that target representations may shift "off veridical" from actual target features to increase target-to-distractor distinctiveness. However, these studies have been limited to simple visual features (e.g., orientation, color), which leaves open the question of whether similar principles apply to complex stimuli, such as a face depicting an emotion, the perception of which is known to be shaped by conceptual knowledge. In three studies, we find confirmatory evidence for the hypothesis that attention modulates the representation of an emotional face to increase target-to-distractor distinctiveness. This occurs over-and-above strong pre-existing conceptual and perceptual biases in the representation of individual faces. The results are consistent with the view that visual search accuracy is determined by the representational distance between the target template in memory and distractor information in the environment, not the veridical target and distractor features.

Introduction

Our subjective experience of looking for something, such as a friend in a crowd, involves choosing a feature from the object of interest (e.g., the orange color of her jacket) and then scanning the scene until a match is found (Duncan & Humphreys, 1989b; Treisman & Gelade, 1980; Wolfe & Horowitz, 2017a). Models of visual search posit that the ability to do this involves the maintenance of the color “orange” in memory within a target (or attentional) template that is used to enhance sensory gain in task-relevant neurons (Chelazzi, Miller, Duncan, & Desimone, 1993; Desimone & Duncan, 1995; Treue & Martinez-Trujillo, 2003). Recent work has suggested, however, that the target template may not always contain veridical sensory features when distractors are linearly separable from targets (e.g., all more yellow than the orange target). Under these conditions, the target representation is shifted away from distractor features (i.e., is “redder”), increasing target-to-distractor discriminability (Geng & Witkowski, 2019).

Evidence for non-veridical information within the template has thus far come from studies using simple feature dimensions. For example, Navalpakkam and Itti (2007) found that observers were more likely to identify a 60° oriented line as the target when engaged in visual search for a 55° target line amongst 50° distractors. The remembered target was estimated using target identification “probe” trials interleaved with visual search trials. On probe trials, observers selected the target from an array of oriented lines that ranged from 30° to 80°. The shift in target representation was attributed to optimal modulations of sensory gain in neurons that selectively encode target, but not distractor, features (Navalpakkam & Itti, 2005). Others have argued that the shifts in target representation are due to encoding the relational property of the target, for example that the target is the “reddest” object (Becker, Folk, & Remington, 2010), but there is wide agreement that attention can be tuned towards “off-veridical” features and that doing so improves visual search accuracy (Bauer, Jolicoeur, & Cowan, 1996; D’Zmura, 1991; Geng, DiQuattro, & Helm, 2017; Hodsoll & Humphreys, 2001; Scolari, Byers, & Serences, 2012; Scolari & Serences, 2009; Yu & Geng, 2018).

These previous findings suggest that visual search performance is impacted by the representational distinctiveness of targets in memory and distractors in the visual environment, not just the veridical target features (Geng & Witkowski, 2019; Hout & Goldinger, 2015; Myers et al., 2015; Myers, Stokes, & Nobre, 2017). Distinctiveness has been shown to be an important principle in search for both simple features and complex objects (Bravo & Farid, 2009, 2016; Hout et al., 2016; Hout & Goldinger, 2015). However, it remains unknown if shifts in target representations in response to distractor stimuli occur when searching for "high level" objects such as a face depicting an emotion. Faces depicting emotion are an interesting test case because they vary in terms of perceptual information (e.g., the degree to which teeth are exposed or sclera are visible), but their perception is heavily influenced by conceptual knowledge (Gendron & Barrett, 2018; Lindquist & Gendron, 2013; Lindquist, Gendron, Barrett, & Dickerson, 2014; Lindquist et al., 2015). As a result, faces depicting emotions that are perceptually dissimilar (e.g., disgust, in which facial features are contracted, resulting in a reduced lip-nose space and squinted eyes, and fear, in which facial features are expanded, resulting in widened eyes and lips) may be more conceptually similar (e.g., representing negative, high-arousal states) than faces that are perceptually more similar but conceptually distant (e.g., fear and excitement/elation/joy, in which eyes are wide and the mouth is open). It therefore remains unclear if attentional shifting should occur on the perceptual features of an object that is so heavily determined by conceptual knowledge (Brooks & Freeman, 2018).

The current studies investigate whether the template representation of a face depicting emotions is systematically shifted in response to the distractor context despite perception being biased by pre-existing conceptual knowledge. In order to distinguish between the contents of the target template in memory and the use of that information during active visual search, we use the interleaved task procedure of Navalpakkam and Itti (2007) that mixes visual search “training” trials and target identification “probe” trials that query observer memory for the current target.

Using this procedure, we first acquire baseline “probe” trials interleaved with target-alone “visual search” trials (no distractors present). These data provide a baseline measurement of the target representation in isolation of distractor faces. This is important because we use face morphs that depict a combination of emotions and therefore expect the representation of each target face to be idiosyncratic and shaped by conceptual knowledge of the emotions depicted. In a separate block, we acquire data from probe trials interleaved with target search amongst distractor faces that always depict a more extreme emotion than the target (e.g., sadder). The critical question is whether the target representation will be shifted by the distractor faces.

By measuring the contents of a target template before and after the introduction of visual search distractors, we test the hypotheses that: (1) the attentional template for faces depicting emotions is shaped by conceptual knowledge, but is still subject to attentional shifting when distractors are present; and (2) that the distinctiveness of the target representation in memory and actual distractors (i.e., the template-to-distractor distinctiveness) will dictate visual search accuracy.

Experiment 1

Methods

Participants

Thirty undergraduates from UC Davis participated for course credit (mean age = 21.7 years, SD = 4.9, age range = 19–46; five males, two left-handed). Thirty participants were recruited for this and each subsequent experiment based on an estimate for Pearson’s correlation with a medium effect size (.5) with power of .8 and significance level of .05. This power analysis was used to anticipate the number of subjects necessary to estimate the correlation of interest in each study between an individual’s accuracy on visual search trials and their template representation estimated from face-wheel probe trials. All participants had normal or corrected-to-normal vision and provided informed consent in accordance with NIH guidelines provided through the UCD Institutional Review Board.
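The sample-size reasoning above can be sketched as follows. The text does not state which tool or formula was used, so this is only an illustration that assumes a two-sided test and the standard Fisher z approximation for the power of a Pearson correlation; the function name `n_for_correlation` is hypothetical.

```python
import math
from statistics import NormalDist

def n_for_correlation(r, alpha=0.05, power=0.80):
    """Approximate sample size needed to detect a correlation r.

    Assumption: two-sided test, Fisher z approximation
    n = ((z_{1-alpha/2} + z_{power}) / atanh(r))^2 + 3, rounded up.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    effect = math.atanh(r)                         # Fisher z of the target r
    return math.ceil(((z_alpha + z_beta) / effect) ** 2 + 3)

# Effect size, power, and alpha as stated in the text.
required_n = n_for_correlation(0.5, alpha=0.05, power=0.80)
print(required_n)
```

Under these assumptions the approximation gives a required sample of 30, which agrees with the number of participants recruited per experiment.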

Apparatus

Stimuli were displayed on a 24-in. Dell LCD monitor (1,920 × 1,080 pixels), with a refresh rate of 60 Hz. Stimuli were generated using PsychoPy (Peirce, 2007, 2009). Each participant sat in a sound-attenuated, dimly lit room, 60 cm from the monitor.

Stimuli

Stimuli were composed of a set of faces in which prototypes of the facial expressions representing happiness, sadness, and anger were morphed continuously. The stimulus set originated from a single individual taken from the Karolinska Directed Emotional Faces database (KDEF; Lundqvist et al., 1998) displaying three emotional expressions: angry, happy, and sad. The images were first gray-scaled and then morphed from one expression to the next using linear interpolation (MorphAge, version 4.1.3, Creaceed). This morphing procedure generated a circular distribution of 360 images going from angry to happy to sad and back to angry again (ZeeAbrahamsen & Haberman, 2018).

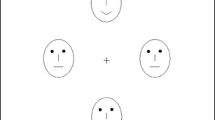

Visual search display

Face stimuli used during visual search “training” trials subtended 3.85° × 5° of visual angle. Stimuli always appeared in four locations, each centered 6° of horizontal and vertical visual angle from the fixation cross. The locations of the target and distractors were randomly determined on each trial.

The two target stimuli were chosen from a separate categorization task run as an online experiment (testable.org/t/9590fb61e) in which each of the 360 face images was presented one at a time and subjects selected one of three buttons (labeled happy, sad, angry) to describe the face. This resulted in selection of a happy-sad morph and a sad-angry morph, each of which had average classification rates of approximately 50% for each of the two emotion categories (HappySad target: happy = 50.9% ± .07%, sad = 47.0% ± .07%; SadAngry target: sad = 49.0% ± .07%, angry = 50.9% ± .07%). We used only a happy-sad morph and a sad-angry morph as the HappySad and SadAngry targets, respectively, and not a happy-angry morph, because categorization was ambiguous at that border: the face with the most equivalent categorization probabilities for happiness and anger was also just as likely to be categorized as sad (see Fig. 1B, face image 59: happy = 33.3% ± .07%, angry = 31.4% ± .06%, sad = 35.3% ± .07%).

(A) Illustration of a trial from the categorization task. (B) Results from the categorization task. Percentage of trials for each face classified as "happy” (green circles), "sad” (blue squares), or "angry” (red triangles). Target faces were chosen at points of greatest ambiguity between the happy and sad expressions (HappySad target) and sad and angry expressions (SadAngry target)

The three distractor stimuli used during visual search trials were 20°, 40°, and 60° away from the target face in the “rightward” direction – for the HappySad target, distractors were all sadder, and for the SadAngry target, distractors were all angrier (Fig. 2AB). The farthest distractor, at 60°, was the prototypical face depicting the full-strength emotion category. The directionality of the distractors was randomly chosen but kept consistent across all target faces and subjects to avoid overlap in the distractor sets across targets and to avoid spurious differences in performance due to inherent asymmetries in the perception and memory of emotional faces (see Introduction). Search targets were shown sequentially and counterbalanced across participants.

Face-wheel probe display

On face-wheel "probe" trials, 30 faces appeared in a face-wheel. The stimuli subtended 1.9° × 2.5° of visual angle and the wheel subtended a radius of 10.8° of visual angle. The 30 faces were selected from the 360 original stimuli and arranged to change from happy to sad to angry and back again to happy when moving clockwise around the wheel (Fig. 2C). The target face was always in the face-wheel (referred to as the 0° stimulus) and adjacent stimuli were separated by 12° to evenly sample the entire original wheel of 360 faces. While the wheel did not include the actual distractor faces, the nearest faces included within the face-wheel were within 1 JND (just noticeable difference) of the actual distractors (ZeeAbrahamsen & Haberman, 2018), and they were always located as “rightward” rotations from the target face on the face-wheel.

Experimental parameters for Experiment 1. (A) The relative positions of target (blue line) and three distractor faces (red lines) on the face-wheel. Black dotted lines indicate the location of faces depicting prototypical emotions. All face stimuli were morphs of equal steps between the prototypical stimuli. (B) Target and distractor face stimuli. (C) Illustration of the visual search “training” trials and face-wheel “probe” trials in target-alone and target w/distractor blocks. Visual search trials were interleaved with face-wheel trials at a 2:1 ratio throughout the experiment. On visual search trials, participants were asked to click on the target face and feedback was given for accuracy. Distractors on w/distractor trials were always 20°, 40°, and 60° from the target in one direction. Each face subtended 3.85° × 5° of horizontal and vertical visual angle. On probe trials, participants clicked on the remembered target face on the face-wheel. The wheel contained 30 faces, each subtending 1.9° × 2.5° of visual angle and the wheel subtended a radius of 10.8°. The target was always present within the wheel. No feedback was given. Selection of faces on probe trials that were to the left of the true target are labeled as “leftward” clicks; and selection of faces to the right of the true target are labeled as “rightward” clicks. Visual search distractors were all “rightward” rotations from the true target face. The face-wheel was randomly rotated on every trial to avoid motor regularities in selecting the target face

The face-wheel was randomly rotated on each trial in order to prevent strategic selection of the target based on spatial location on the screen, but the relative position of each face on the wheel was fixed (i.e., continuously morphed faces). The target face is always referred to as the 0° face, but its physical location was unpredictable on each face-wheel “probe” trial.
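The geometry of the probe wheel described above can be illustrated with a short sketch. It assumes that each of the 360 morphs corresponds to 1° on the continuum and that a random rotation is drawn uniformly from 0° to 360°; the helper names are hypothetical and the code is not the authors' PsychoPy implementation.

```python
import random

N_MORPHS = 360   # faces in the full morph continuum
WHEEL_STEP = 12  # spacing between adjacent wheel faces, in degrees

def wheel_offsets():
    """Offsets (deg) of the 30 wheel faces relative to the target at 0."""
    return list(range(0, N_MORPHS, WHEEL_STEP))

def signed_offset(offset):
    """Map 0..359 onto -180..179 so that leftward faces are negative."""
    return (offset + 180) % N_MORPHS - 180

def nearest_wheel_offset(offset):
    """Wheel face closest to an arbitrary morph offset."""
    return (round(offset / WHEEL_STEP) * WHEEL_STEP) % N_MORPHS

def screen_angles(rng):
    """Screen angle of every wheel face after one random rotation.

    The whole wheel rotates together, so relative positions are preserved
    while the physical location of the target is unpredictable.
    """
    rotation = rng.uniform(0, 360)  # assumed uniform; not specified in the text
    return [(rotation + off) % 360 for off in wheel_offsets()]

# Experiment 1 distractors sit 20, 40, and 60 degrees rightward of the target.
nearest = [nearest_wheel_offset(d) for d in (20, 40, 60)]
print(len(wheel_offsets()), nearest)
```

With a 12° step, the wheel faces nearest to the Experiment 1 distractors fall at 24°, 36°, and 60°, that is, no more than 4° from the actual distractor faces.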

Design and procedure

Similar to the design by Navalpakkam and Itti (2007) (see also Geng et al., 2017; Scolari & Serences, 2009; Yu & Geng, 2018), all experiments incorporated two trial types that were interleaved: visual search “training” trials provided a target-distractor search context; and face-wheel “probe” trials measured the remembered target face within a visual search context. The use of separate visual search “training” trials and the face-wheel “probe” trials was necessary for obtaining a measurement of an observer’s remembered target that is uncontaminated by concurrent attentional competition.

The experiment began with an image of the first target face and instructions to remember the face. Observers were then instructed to find the target face on each subsequent visual search trial and click on it using the computer mouse with their right hand as soon as possible without sacrificing accuracy. Upon response, the display was removed, and auditory feedback – a high-pitch tone (700 Hz) for correct responses and a low-pitch tone (300 Hz) for incorrect responses – was provided. The target face appeared alone on the first 20 visual search trials (interleaved with ten face-wheel trials). The purpose of the “baseline” block was twofold: to further familiarize subjects with the target face and to acquire baseline data from the face-wheel “probe” trials to estimate the representation of the target face on its own. After the baseline block, an instruction screen informing subjects that distractors would now appear with the target was displayed. The next 60 visual search trials in all experiments always contained the same target face with three distractor faces. Visual search trials were interspersed with 30 “probe” face-wheel trials. The second part of the experiment began with instructions for the new target face, but was otherwise identical to the first part. Ordering of the target face was counterbalanced across subjects.

On the face-wheel “probe” trials, subjects were instructed to click on the remembered target face among 30 faces arranged in a circle. No auditory feedback was provided on the probe trials. Visual search “training” and face-wheel “probe” trials were randomly interleaved at a 2:1 ratio in every block of the experiment (Fig. 2C).
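The block structure just described can be sketched as a simple trial list. This assumes that "randomly interleaved at a 2:1 ratio" means a free shuffle of the stated trial counts within each block; any additional ordering constraints used in the actual experiment are not described, and `build_block` is a hypothetical helper.

```python
import random

def build_block(n_search, n_probe, seed=None):
    """Return a shuffled list of trial labels for one block.

    Assumption: trial order is a free shuffle of the block's trials.
    """
    rng = random.Random(seed)
    trials = ["search"] * n_search + ["probe"] * n_probe
    rng.shuffle(trials)
    return trials

# Experiment 1 counts for one target face, as stated in the text.
baseline_block = build_block(20, 10, seed=0)    # target-alone block
distractor_block = build_block(60, 30, seed=1)  # target w/distractor block

print(baseline_block.count("search"), baseline_block.count("probe"))
print(distractor_block.count("search"), distractor_block.count("probe"))
```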

Results

Data from probe and visual search trials were analyzed separately for the two target faces depicting ambiguous emotions (i.e., a mildly sad and mildly angry “SadAngry” target; and a mildly happy and mildly sad “HappySad” target) (Fig. 2). The probe data were transformed into an index of template bias based on the average proportion of total "rightward" clicks (i.e., clicks on non-target faces to the right of the target, towards visual search distractors) minus the proportion of "leftward" clicks (i.e., clicks on non-target faces to the left of the target, away from distractors). Clicks on the actual target face were excluded from this analysis since they would not affect calculation of the relative rightward versus leftward bias. Positive template bias values indicate a bias in probe responses towards visual search distractors; negative values indicate a bias in the direction opposite to visual search distractors. A zero value would indicate no overall bias (Fig. 2).
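A minimal sketch of the template bias index follows. It assumes each probe response is coded as a signed offset in degrees from the target (positive = rightward, negative = leftward, 0 = target) and that proportions are taken over all probe clicks; the text does not specify the denominator, so that choice is an assumption. The click values are hypothetical and serve only to illustrate the arithmetic.

```python
def template_bias(clicks):
    """Proportion of rightward clicks minus proportion of leftward clicks.

    clicks: signed offsets (deg) from the target; 0 means the target itself.
    Target clicks add nothing to either count.
    Assumption: the denominator is the total number of probe clicks.
    """
    n_total = len(clicks)
    n_right = sum(1 for c in clicks if c > 0)
    n_left = sum(1 for c in clicks if c < 0)
    return (n_right - n_left) / n_total

# Hypothetical responses from ten probe trials (not data from the study).
example_clicks = [-12, -24, 0, 12, -12, 0, -36, 12, -12, -24]
bias = template_bias(example_clicks)
print(bias)  # 2 rightward, 6 leftward, 10 total -> -0.4
```

A negative value, as in this toy example, corresponds to a remembered target that lies away from the distractor side of the wheel.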

The template bias values were entered into a 2 target face (HappySad, SadAngry) × 2 visual search block (alone, w/distractor) repeated-measures ANOVA. First, there was an expected significant main effect of target face, F(1, 29) = 46.23, p < .001, η2 = .62. This main effect was due to large differences in the representation of the two target faces in probe responses during both the target alone and w/distractor blocks (i.e., there was an overall leftward bias for HappySad and a rightward bias for SadAngry) (Fig. 3). This face-idiosyncratic pattern is consistent with previously reported biases in perception and memory of emotional faces (e.g., Brooks & Freeman, 2018). Second, there was a main effect of visual search block, F(1, 29) = 19.38, p < .001, η2 = .41, which resulted from the remembered target face shifting away from distractors during the w/distractor block (Fig. 3A). This confirms the primary hypothesis tested that the target representation would shift away from distractor faces once they were introduced. The interaction between the target face and visual search block was not significant, F(1, 29) = 2.2, p = .15, η2 = .07.
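For readers who wish to relate the F statistics to the effect sizes, the sketch below converts an F value and its degrees of freedom into partial eta squared. The text does not state which variant of η2 was computed, so treating the reported values as partial eta squared is an assumption; the function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic.

    eta_p^2 = (F * df_effect) / (F * df_effect + df_error)
    """
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)

# Interaction term from the ANOVA above: F(1, 29) = 2.2.
eta_interaction = partial_eta_squared(2.2, 1, 29)
print(round(eta_interaction, 2))
```

Under this assumption the conversion agrees with the reported effect sizes to within about .01.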

Experiment 1 click probability on face-wheel “probe” trials for each of the two targets. (A) Average template bias, defined by probability of rightward clicks minus leftward clicks on face-wheel probe trials as a function of visual search block. Positive values indicate bias towards visual search distractors and negative values indicate bias away from distractors. Despite large differences between target faces, both showed a more negative shift once distractors were introduced. Error bars are standard error of the mean. (B) Histogram data from probe trials interleaved with target-alone visual search blocks. (C) Histogram data from probe trials interleaved with target w/distractor visual search blocks. Orange bars are leftward faces from the target on the face-wheel; purple bars are rightward faces from the target; the gray bar is the target face; the black vertical line is the location of the nearest prototypical emotion face; the pink horizontal bar denotes the range of faces that were distractors on visual search trials

The primary hypothesis of interest was confirmed by the finding that the probe responses for both target faces shifted away from distractors despite differences in the baseline probe performance during the target-alone block. We next examined the target-alone data more carefully in order to better understand the representational biases of each target face with and without distractors and to see if the absolute directionality of the bias would predict visual search performance. When the target appeared alone, the HappySad target was remembered with a negative bias compared to zero (no bias), mean ± SD: -0.35 ± 0.39, t(29) = -4.8, p < .001, Cohen’s d = -0.89 (i.e., remembered as being happier and further away from sadder distractors), but the SadAngry target was remembered with a stronger positive bias (i.e., angrier, towards distractors, mean ± SD: 0.36 ± 0.32, t(29) = 5.55, p < .001, Cohen’s d = 1.01). This difference in directionality reflects the idiosyncratic influence of emotional concepts on perception and memory of emotional face stimuli (e.g., Brooks & Freeman, 2018). However, for both targets, the overall directionality of the biases shifted leftward with the introduction of distractor faces. Specifically, the leftward bias for the HappySad target was exaggerated farther leftward, t(29) = -7.66, p < .001, Cohen’s d = -1.40; and the rightward bias for the SadAngry target was no longer different from zero, t(29) = 0.22, p = .83, Cohen’s d = 0.04. These differing baseline patterns in the two target faces lead to the prediction that visual search performance should be better for the HappySad target than the SadAngry target because the mental template of the former is more distinct from distractors, even at baseline.
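The relationship between the one-sample t statistics and the effect sizes reported above can be sketched as follows. It assumes Cohen's d was computed as the mean divided by the standard deviation, which for a one-sample test equals t divided by the square root of n; the text does not state the formula used, and the function name is hypothetical.

```python
import math

def cohens_d_from_t(t_value, n):
    """Cohen's d for a one-sample (or paired) t-test: d = t / sqrt(n)."""
    return t_value / math.sqrt(n)

# SadAngry target-alone bias versus zero: t(29) = 5.55 with n = 30.
d_sad_angry = cohens_d_from_t(5.55, 30)
print(round(d_sad_angry, 2))
```

Under this assumption the conversion is consistent, to within rounding, with the d values reported alongside each t statistic.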

To test this prediction, the accuracy data from the visual search trials were analyzed using a 2 target face (HappySad, SadAngry) × 2 visual search block (target-alone, w/distractor) repeated-measures ANOVA. Both main effects were significant, target face: F(1,29) = 52.06, p < .001, η2 = .64, visual search block: F(1,29) = 116.56, p < .001, η2 = .801, as was the interaction, F(1,29) = 52.72, p < .001, η2 = .65. The interaction was due to accuracy being at ceiling in both target-alone blocks (mean ± SD: HappySad = .998 ± .009, SadAngry = 1.0 ± 0.0), but significantly worse for the SadAngry target than the HappySad target during the w/distractor blocks (mean ± SD: HappySad = .84 ± .15, SadAngry = .53 ± .24; Fig. 4A). Poorer accuracy for the SadAngry target during the w/distractor visual search block is consistent with the greater overlap between the SadAngry probe responses and distractors. The pattern of results from RT was similar but only the main effect of distractor presence was significant, F(1,29) = 143.28, p < .001, η2 = .83 (mean ± SD: target-alone = 1,351.8 ± 503.3 ms, target w/distractor = 3,025.5 ± 1,136.2 ms; interaction: F(1,29) = 1.24, p = .27, η2 = .07). The fact that the effects of interest manifest mostly in accuracy indicates that the target template determined the ability to pick out the correct target rather than the time to discriminate the target.

(A) Experiment 1 accuracy and reaction time (RT) on w/distractor visual search trials only. Accuracy was substantially lower for the SadAngry target, as predicted by the overlap between the target representation, obtained on probe trials, and distractors. Chance performance = 25%. Performance on the target-alone blocks was at ceiling (see text). (B) Correlation between average click on face-wheel probe trials and visual search accuracy. The correlation was significant for the SadAngry target (right) but not the HappySad target (left)

To further test this relationship between accuracy and the template bias, we correlated individual visual search accuracy on w/distractor trials with the average click response on face-wheel probe trials for each target face. There was a significant negative correlation for the SadAngry face, Pearson's r = -.47, n = 30, p < .01 (Fig. 4B). The significant correlation was driven by both individuals with less of a positive bias (i.e., less angry representation) having higher visual search accuracy and individuals with more positive biases (i.e., target representations that were angrier) having lower visual search accuracy. Note that some subjects were performing near chance, suggesting that those subjects could not differentiate between the target and distractors; those same subjects had positive biases in target representations near distractor stimuli. This result is consistent with our expectations that a target representation that is shifted away from distractors will lead to better visual search performance, particularly when target-distractor confusability is high.
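The across-subject analysis above pairs one template estimate with one accuracy score per participant. A minimal sketch of the Pearson correlation is given below; the input values are artificial placeholders chosen to be perfectly linear, not data from the study, and `pearson_r` is a hypothetical helper.

```python
import math

def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

# Artificial example: average probe click (deg) and search accuracy for
# four imaginary subjects, constructed so that accuracy falls linearly.
toy_clicks = [0.0, 10.0, 20.0, 30.0]
toy_accuracy = [0.9, 0.7, 0.5, 0.3]
r_toy = pearson_r(toy_clicks, toy_accuracy)
print(round(r_toy, 3))
```

A negative coefficient in this arrangement means that templates lying farther towards the distractors go with lower search accuracy, which is the direction of the relationship reported for the SadAngry target.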

There was no significant correlation for the HappySad target face (Pearson's r = .22, n = 30, p = .24; Fig. 4B), but almost all individuals had target representations with a negative bias (i.e., towards happier and away from the sadder distractors) and also had high visual search accuracies (Fig. 4A). This is consistent with the notion that visual search accuracy will be high as long as the template representation is sufficiently distinct from distractors.

Discussion

The results from Experiment 1 demonstrated that each target face had an idiosyncratic representation that was biased by conceptual knowledge of emotions. The baseline template bias for each target face was related to visual search performance. The SadAngry target was overall perceived as being more angry than sad, which led to greater confusability with “angrier” distractors during visual search and poor search performance. However, individuals with template biases that overlapped less with distractors did better in visual search. Baseline representations of the HappySad target were already biased away from distractors and visual search accuracy was high. Despite differences between the baseline perception of the two target faces, there was a shift in the representation away from distractors for both faces. This provides evidence that attentional shifting of the target representation away from distractors occurs even for complex stimuli. The shift in representation could have been based on either a shift in low-level perceptual features away from the distractor set (e.g., eyebrow distance) or a conceptual shift away from the distractor emotion (e.g., sadder). Either way, the data suggest that templates that are more confusable with distractors result in poorer visual search performance and that, overall, the target representation is repelled away from distractor faces.

Experiment 2

Our original hypothesis was that the target representation would shift away from distractors, as an attentional mechanism to increase the psychological distinctiveness of the target from potentially confusable distractors. The results from Experiment 1 were consistent with this hypothesis despite large differences in the baseline representation of the two target faces. However, one alternative possibility is that the shift was actually a movement towards the prototypical emotion opposite to distractors, as a heuristic for remembering the target (e.g., the target is the “happy” face). In Experiment 2 we tested this alternative by selecting target and distractor faces that straddled a prototypical face depicting a single emotion (Fig. 5). If the previous results were a shift away from distractors in order to increase representational distinctiveness (as we hypothesized), then we should continue to see a leftward shift of the target representation (away from distractors) in this study. However, if the previous results reflected use of a category heuristic, then we should see a rightward shift once distractors appear, towards the nearest prototypical face, even though this means the target template shifts toward distractors, which decreases representational distinctiveness.

(A) The relative positions of the target (blue line) and three distractor faces (pink = Experiment 2; red = Experiment 3). The locations of faces with prototypical emotions on a continuous face-wheel are illustrated with black dotted lines. In both experiments, the nearest prototypical emotional face was located 30° from the target in the rightward direction (towards distractors). This example illustrates the Happy target face and the associated distractors in Experiments 2 and 3, but the same relative distances were used for “Angry” and “Sad” target faces and their distractor faces. (B) Target and distractor faces in Experiments 2 and 3

Methods

Participants

Thirty undergraduates from UC Davis participated for course credit (mean age = 21.5 years, SD = 1.8, age range = 19–25; 17 males, three left-handed). All participants had normal or corrected-to-normal vision and provided informed consent in accordance with NIH guidelines provided through the UCD Institutional Review Board.

Stimuli and apparatus

Experiment 2 was designed to test if the pattern of results in Experiment 1 could be due to a memory heuristic biased towards the face most prototypical of an emotional category (i.e., the prototypical face). The stimuli and procedures were identical to Experiment 1, but now the target and distractor faces were chosen to straddle the prototypical face depicting the full-strength prototype for one emotional category. Three target stimuli – “Happy,” “Sad,” and “Angry” – were chosen based on the categorization results in ZeeAbrahamsen and Haberman (2018). The target was always referred to as the 0° stimulus. The three distractor stimuli used per target were 60°, 75°, and 90° away from the target face in one direction. The distractors were farther away from the target compared to Experiment 1 (20°, 40°, and 60°) in order for the target and distractors to straddle the prototypical face (30° from the target and the nearest distractor) without being perceptually indistinguishable from the prototypical face. Despite the increase in physical distance, we expected the psychological distance to be closer because the target and distractors straddle a face with a prototypical expression and share the nearest emotional category. Figure 5 illustrates the relative position of one target (Happy) and its associated distractors in a continuous face wheel.

Design and procedure

The design and procedures were identical to Experiment 1 with the exception that the number of target face-alone trials was increased to the first 24 visual search trials (interleaved with 12 face-wheel trials).

Results

Using the same strategy for analysis as Experiment 1, the face-wheel click data were first used to calculate an index of template bias by taking the difference between the probability of making a rightward click and that of making a leftward click. Again, positive values indicate a template bias towards visual search distractors and negative values index a template bias away from distractors. The index of template bias was entered into a 3 target face (Happy, Sad, Angry) × 2 visual search block (alone, w/distractor) repeated-measures ANOVA. Both main effects and the interaction were significant.

The main effect of target face was significant, F(2,58) = 11.28, p < .001, η2 = .28, again showing that the perception of target faces was idiosyncratic and strongly biased by their emotional category. Bonferroni-corrected post hoc comparisons showed that the main effect of face was due to an overall stronger positive bias for the “Happy” face compared to the two others, t(29) > 3.70, p < .01, Cohen’s d = .67, but no difference between the “Sad” and “Angry” faces, t(29) = 1.42, Cohen’s d = .26. All three faces had numerically positive biases, likely reflecting the overall bias to remember the target face as being closer to the nearest prototypical emotional face (i.e., category bias). This effect was the most extreme for the Happy target, which predicts that it will be the most confusable with distractors during visual search (see below).

As before, there was also a main effect of visual search block, F(1,29) = 30.42, p < .001, η2 = .51, due to an overall negative shift in the template bias in the w/distractor block. This finding replicates Experiment 1 and suggests that experience with linearly separable distractors caused the target representation to shift away from confusable distractor emotions in order to increase conceptual distinctiveness of the target from distractor faces.

The interaction between target face and visual search block was also significant, F(2,58) = 9.52, p < .001, η2 = .25, and was due to a large significant change in the directionality of the template bias for the “Sad” target, t(29) = 6.90, p < .001, Cohen’s d = 1.26, a moderate change for the Happy face, t(29) = 2.50, p < .02, Cohen’s d = 0.46, and no significant change for the Angry face, t(29) = 1.55, p = 0.13, Cohen’s d = 0.28 (Fig. 6A) (mean ± SD: happy from 0.66 ± 0.25 to 0.52 ± 0.39; sad from 0.37 ± 0.50 to 0.07 ± 0.50; angry from 0.36 ± 0.42 to 0.25 ± 0.44). All faces were biased towards the prototypical face to begin with, but all shifted farther away from distractors once they appeared, albeit to different degrees (Fig. 6). Together, these results rule out the alternative hypothesis that shifting in Experiment 1 was due only to a movement in the target representation towards the prototypical face depicting the nearest emotion. Instead, they demonstrate that the target representation shifted away from visual search distractors irrespective of exactly where the target and distractors lie within an emotional category.
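
The Cohen’s d values reported for these within-subject comparisons are consistent with the paired-samples convention d = t / √n. The sketch below checks this, assuming that convention was used and that n = 30 (df + 1); `cohens_d_paired` is a hypothetical helper name.

```python
import math


def cohens_d_paired(t_value: float, n: int) -> float:
    """Paired-samples effect size d_z = t / sqrt(n) (assumed convention)."""
    if n <= 0:
        raise ValueError("n must be positive")
    return t_value / math.sqrt(n)


# t(29) values for the block effect on the Sad, Happy, and Angry targets
for label, t_value in [("Sad", 6.90), ("Happy", 2.50), ("Angry", 1.55)]:
    print(label, round(cohens_d_paired(t_value, 30), 2))
# Sad 1.26
# Happy 0.46
# Angry 0.28
```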

Experiment 2. (A) Template bias, calculated from the rightward minus leftward click probabilities on probe trials, for each of the three target faces. The template bias shifted in the negative direction between visual search blocks for all three target faces, although the absolute values of bias were very different. (B) Histogram of click distributions from probe trials occurring with target-alone visual search. (C) Histogram of click distributions from probe trials occurring with target w/distractor visual search. Orange bars are leftward faces from the target on the face-wheel; purple bars are rightward faces from the target; the gray bar is the target face; the black vertical line is the nearest prototypical facial emotion; the pink bar denotes the range of faces that were distractors on w/distractor visual search trials.

Next, we conducted a one-way ANOVA of visual search accuracy for the three target faces. The target-alone trials were not included in this analysis because accuracy was at ceiling for all three faces when no distractors were present (see Experiment 1). Consistent with the probe data showing the greatest positive bias for the “Happy” target, accuracy was significantly lower for the Happy face than the other two faces, F(2,58) = 86.80, p < .001, η2 = .75, both pairwise ts(29) > 9.7, ps < .001, Cohen’s ds > 1.7 with Bonferroni correction, although performance was still well above chance (25%) (Fig. 6). There was no difference between the Sad and Angry faces, t(29) = 0.43, p = 1, Cohen’s d = 0.08. A similar pattern was found in reaction time, F(2,58) = 4.84, p < .05, η2 = .14, with post hoc comparisons revealing marginal differences between the Happy target and the two other targets (both ts(29) > 2.35, ps = .07 with Bonferroni correction, Cohen’s ds > .43). There was no difference between RT for the Sad and Angry targets, t(29) = 0.62, p = 1.0, Cohen’s d = 0.11.
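
For readers wondering why some corrected p values above equal 1, the sketch below shows the simple multiply-and-cap form of the Bonferroni adjustment for three pairwise comparisons. That this exact form was used is an assumption, `bonferroni_adjust` is a hypothetical helper name, and the uncorrected p values in the example are made up for illustration.

```python
def bonferroni_adjust(p_uncorrected: float, n_comparisons: int) -> float:
    """Multiply the p value by the number of comparisons and cap it at 1."""
    if not 0.0 <= p_uncorrected <= 1.0:
        raise ValueError("p value must lie in [0, 1]")
    if n_comparisons < 1:
        raise ValueError("need at least one comparison")
    return min(1.0, p_uncorrected * n_comparisons)


# Illustrative uncorrected p values (not taken from the study)
print(bonferroni_adjust(0.67, 3))   # capped at 1.0
print(bonferroni_adjust(0.001, 3))  # stays well below .05 after correction
```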

Also, as in Experiment 1, we tested for a correlation between visual search accuracy and the average click on face-wheel probe trials for each target. The average click distance from the target was calculated from all the face-wheel probe data. The correlation was significant for the Happy and Sad targets, but not the Angry target (Happy: Pearson’s r = -.63, n = 30, p < .001; Sad: r = -.49, n = 30, p < .01; Angry: r = -.07, n = 30, p = .70). The negative correlations again indicated that individuals with target representations that were more distinct from distractors tended to perform better on visual search, particularly when the target was conceptually confusable with distractors.
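
The across-participant correlation described above can be sketched as follows. The data are made up purely to illustrate the direction of the relationship, and `pearson_r` is a hypothetical helper written with the standard library; it is not the software used in the study.

```python
import math
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)


# Made-up participants: average probe click (positive = toward distractors)
# and visual search accuracy on w/distractor trials.
avg_click = [5.0, 10.0, 15.0, 20.0, 25.0]
accuracy = [0.95, 0.90, 0.80, 0.70, 0.60]
r = pearson_r(avg_click, accuracy)
print(r < 0)  # True: clicks closer to the distractors go with lower accuracy
```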

Discussion

The purpose of Experiment 2 was to provide a conceptual replication for Experiment 1 and to differentiate between two alternative possibilities for the shift in target representation. Despite very different baseline profiles for these target stimuli, the results replicated Experiment 1 and ruled out the possibility that the leftward shift was due to the target memory being biased towards the most prototypical face for an emotional category. Instead, the results suggest that shifting occurred away from distractor stimuli, effectively increasing the distinctiveness of targets from distractors. The size of the shift was most important for search (accuracy) performance when the baseline target representation was most confusable with distractors, suggesting that the distance between the target representation and distractors has functional significance.

Experiment 3

An assumption of the previous studies was that shifts in the target representation only occur when targets and distractors are potentially confusable (e.g., depicting a shared emotional concept). To test this more directly, we conducted another experiment that was identical to Experiment 2 in all regards except that the distractors were now 105°, 120°, and 135° from the target stimulus (see Fig. 5). As in Experiment 2, the face with the prototypical emotion was always a 30° rightward rotation from the target face, in the same direction as the distractors, but now distractors were closer to a prototypical face depicting a different emotional category (e.g., target appears sadder but distractors angrier). We hypothesized that the shift-away on w/distractor trials seen in the previous two experiments would now disappear since the distance between the target and distractors is sufficiently large (i.e., across conceptual categories) that possible confusability between targets and distractors should be low.

Methods

Participants

Thirty undergraduates from UC Davis participated for course credit (mean age = 21.7 years, SD = 5.9, age range = 18–47; eight males, five left-handed). All participants had normal or corrected-to-normal vision and provided informed consent in accordance with NIH guidelines provided through the UCD Institutional Review Board.

Stimuli and apparatus

The stimuli and procedures for Experiment 3 were identical to those in Experiment 2 with the exception that distractors during the visual search trials were now 105°, 120°, and 135° from the target stimulus (Fig. 5). The prototypical expression was still 30° from the target face, in the same direction as the distractors.

Design and procedure

All aspects were identical to Experiment 2.

Results

As before, the template bias data from the probe trials (probability of positive clicks minus negative clicks) were entered into a 3 target face (happy, sad, angry) × 2 visual search block (target alone, w/distractor) repeated-measures ANOVA. Similar to Experiment 2, there was a main effect of target face, F(2, 58) = 10.13, p < .001, η2 = .26. The target faces were identical to those used in Experiment 2, and the pattern of the main effect was similar such that the template bias for the Happy face was the most extreme, and significantly more positive than the other two, both ts(29) > 3.6, ps < .01, with Bonferroni correction, Cohen’s ds > 0.67. The Sad and Angry faces were not different from each other, t(29) = .93, p = 1.0, Cohen’s d = 0.17 (Fig. 7A). These results replicate Experiment 2 and demonstrate that the idiosyncratic representation of target faces was stable across experiments.

(A) Visual search accuracy and RT for w/distractor visual search trials. Performance was substantially worse for the Happy target compared to the other two target faces suggesting that the rightward bias in the target face made it harder to distinguish targets from equally “happy” distractors. Chance = .25. (B) Correlations between an individual’s accuracy on visual search trials and average click on face-wheel probe trials. Gray line farthest left is the location of the target face; black middle line is the nearest prototypical emotional face; pink right line is the nearest distractor face on w/distractor visual search trials

Experiment 3. (A) Template bias, calculated from the rightward minus leftward click probabilities on probe trials, for each of the three target faces. There was no shift in the target template across blocks. Compare results with those from Experiment 2 (Fig. 6). (B) Histogram of face-wheel clicks during target-alone visual search. These data replicate Experiment 2, demonstrating stability in the representation of individual target faces. (C) Histogram of face-wheel clicks during the target w/distractor visual search block. Orange bars are leftward faces from the target on the face-wheel; purple bars are rightward faces from the target; the gray bar is target face; black vertical line is nearest prototypical emotional face; pink horizontal bar denotes range of distractor faces on w/distractor visual search trials

In contrast to Experiment 2, there was no main effect of visual search block, F(2, 58) = .16, p = .69, η2 = 0.006. The interaction was also not significant, F(2, 58) = .94, p = .40, η2 = 0.03. The target representation did not change when very distant distractors were introduced to the visual search displays. This evidence is consistent with the idea that target representations only shift in response to distractor presence when there is potential competition for attention between the target and distractors.

Further evidence that the distractors did not compete with targets in this experiment was found in ceiling performance in visual search accuracy on w/distractor trials, all > 98.5% ± 2.5% (Fig. 9). There were no differences between any targets based on an ANOVA of the three target faces, F(2,58) = 1.5, p = .22, η2 = 0.05. There was a significant effect in RT, F(2,58) = 7.01, p < .01, η2 = .20. The effect was due to significantly shorter RTs for the Sad target compared to Happy, t(29) = 4.2, p < .001 with Bonferroni correction, Cohen’s d = 0.78, a marginal difference between the Angry and Happy targets, t(29) = 2.05, p = .14 with correction, Cohen’s d = 0.38, and no difference between the Sad and Angry targets, t(29) = 1.46, p = .50, Cohen’s d = .27. Even though the target faces were identical to Experiment 2, performance was now at ceiling (ceiling accuracy and overall faster RT, cf. Figs. 7 and 9).

Experiment 3. (A) Visual search accuracy and RT. Scale is identical to plots from Experiment 2 to facilitate comparison (see Fig. 6). (B) Correlation between average click on the face-wheel probe trials and accuracy on visual search target w/distractor trials. Black left line indicates true target face; gray line indicates nearest prototypical emotional face; pink line (only visible on Happy target plot) indicates the nearest distractor expression from visual search trials. Note ceiling accuracy in performance despite variability in average click distance from the target (cf. Fig. 7)

As expected, given that attentional competition during visual search was low and there was no change in the target template, there were also no significant correlations between average click distance from the target (on probe trials) and visual search accuracy (on distractor present trials), all r > -.2 and < 1; all n = 30; all ps > .28 (Fig. 9). This demonstrates that when distractors are conceptually dissimilar, it does not matter if the target template is shifted slightly in the direction of the distractors as long as the template is sufficiently distinct from distractors to distinguish the target during visual search.

Discussion

The purpose of Experiment 3 was to test whether shifting was influenced by the distance between target and distractors. Consistent with expectations that attentional mechanisms serve to resolve competition, we found that shifting no longer occurred when distractors were sufficiently distant to be clearly categorically different. Additionally, the target faces were identical to those in Experiment 2 and reproduced the face-specific baseline representations, suggesting that emotional face stimuli are idiosyncratically, but stably, represented within the context of conceptual knowledge about emotions. The effect of attentional shifting, however, did not occur because distractors were sufficiently dissimilar as to produce little or no competition for selection. This suggests that the leftward shifting we observed in Experiments 1 and 2 was due to distractor competition.

General discussion

These studies demonstrate that target templates that contain perceptual and conceptual information may be shifted to increase the representational distinctiveness of targets from distractors to aid visual search. While the same principles have been previously shown for simple target features (e.g., color or orientation), these studies show that this also occurs for faces depicting emotions, the perception of which is strongly shaped by conceptual knowledge. Our results extend knowledge of what information is in the “attentional template” and how malleable that content is to attentional demands.

The idiosyncratic patterns of baseline probe data were consistent with the extant literature on the role of emotion concepts in emotion perception (Brooks & Freeman, 2018; Gendron & Barrett, 2018; Lindquist & Gendron, 2013; Lindquist et al., 2014; Lindquist et al., 2015) and the finding that emotions are treated as more similar if they share features related to affect, a neurophysiological state characterized by valence and arousal (for extended reviews and discussions, see Barrett & Bliss-Moreau, 2009; Barrett & Russell, 1999; Russell, 2003; Russell & Barrett, 1999). For example, when organized according to affective space, happiness and sadness are opposite in valence (positive versus negative) but similar in arousal; anger and sadness are more similar in valence but different in arousal. The organization along valence can explain why the HappySad target was very distinct from sad distractors and why the SadAngry face was confusable with angrier distractors in Experiment 1, but a more detailed understanding of how the baseline template representations map onto emotion concepts and affect requires further study.

The idiosyncratic differences in template shift across target faces suggest that the shift may occur in the conceptual aspect of facial expression (e.g., a “happier” face template) rather than in the local perceptual aspect of facial expression (e.g., a “shorter distance” between eyes and mouth). However, it is still unclear whether only the conceptual aspect of facial expression contributes to the shift of the face template or whether both conceptual and perceptual aspects contribute. Resolving this will require further research.

Most importantly, the target-alone probe trials served as a benchmark for change in the target template once distractors were introduced. All target faces, despite very different patterns in baseline representations, shifted away from distractors once they appeared in Experiments 1 and 2. The replication between Experiments 1 and 2 ruled out the possibility that shifting was an incidental memory heuristic to encode a prototypical emotion. Instead, they show that shifting is a mechanism that increases the distinctiveness of targets from distractors in support of visual search behaviors. The shifting could have been due to either changes in the representation of low-level perceptual features (e.g., at the edges of the mouth or eyes) or conceptual changes (e.g., the happiest or saddest face). Identifying the exact dimensions of change is a necessary next step in fully understanding how the shift is represented. For now, the results show a clear shift in the target representation away from distractors that are similar enough to be competitors for attention.

In addition to the global shift, for the most confusable target-distractor pairings (evident in the degree of overlap between the target probe responses and distractor faces), there was a negative correlation such that individuals with target representations more distinct from distractors had higher visual search accuracy. The fact that accuracy was affected, despite unlimited viewing time and feedback, suggests that target decisions were based on matching the visual search stimuli to the remembered template representation (Duncan & Humphreys, 1989a; Wolfe & Horowitz, 2017b). The distinctiveness of the internal representation (i.e., the target template) from external distractors was key to correct target decisions.

Finally, when distractors were no longer conceptually confusable (i.e., depicting little or no overlap in emotional category from the target) in Experiment 3, visual search accuracy was uniformly high and the target template shift no longer occurred. Presumably this occurred because there was no need for exaggerating the target representation for resolving distractor competition. Future work will be necessary to more fully characterize the relative strength of distractor competition necessary for target shifting to occur for different stimulus dimensions.

One might argue that shifting away from distractors is not due to an adjustment of the target template but to adaptation or contrast effects in the visual system. While it is possible that contrast effects play a role in creating a shift in the target representation, they are unlikely to account for the entire effect. First, Experiment 3 did not show a shift, but should have if shifting were solely a contrast effect. Second, when we further analyzed the click proportion data by dividing them into early and late phases, the shift was larger in the late phase than in the early phase, which suggests a gradual adjustment. Finally, previous research on ensemble perception with emotional faces demonstrated that when a face depicting an emotion is presented with other faces, the perceived facial expression moves toward (not away from) the other expressions (Corbin & Crawford, 2018).

Together, our findings suggest that context-driven template shifting is a general attentional mechanism that maximizes the distinctiveness of goal-relevant representations from task-irrelevant information. Template shifting complements other well-documented attentional mechanisms that increase the ratio of the target signal to distractor noise, such as feature-based gain enhancement (Liu, Larsson, & Carrasco, 2007; Treue & Martinez-Trujillo, 2003; Zhang & Luck, 2009). The context dependence of template representations suggests that attentional efficiency is determined by recursive interactions that update the attentional template based on the environmental context, which then increases the success of selecting the target within the environment (Bravo & Farid, 2012; 2016).

Notes

ZeeAbrahamsen and Haberman (2018) measured JNDs of the face stimuli that we used in the present study and showed that the average 75% JND was 27°, which was wider than the current increments (12°).

References

Barrett, L. F., & Bliss-Moreau, E. (2009). Chapter 4 Affect as a Psychological Primitive. In (pp. 167-218).

Barrett, L. F., & Russell, J. A. (1999). The structure of current affect: Controversies and emerging consensus. Current Directions in Psychological Science, 8(1), 10-14. doi:https://doi.org/10.1111/1467-8721.00003

Bauer, B., Jolicoeur, P., & Cowan, W. B. (1996). Visual search for colour targets that are or are not linearly separable from distractors. Vision Research, 36(10), 1439-1465.

Becker, S. I., Folk, C. L., & Remington, R. W. (2010). The role of relational information in contingent capture. J Exp Psychol Hum Percept Perform, 36(6), 1460-1476. doi:https://doi.org/10.1037/a0020370

Bravo, M. J., & Farid, H. (2009). The specificity of the search template. J Vis, 9(1), 34, 1-9. doi:https://doi.org/10.1167/9.1.34

Bravo, M. J., & Farid, H. (2016). Observers change their target template based on expected context. Atten Percept Psychophys, 78(3), 829-837. doi:https://doi.org/10.3758/s13414-015-1051-x

Brooks, J. A., & Freeman, J. B. (2018). Conceptual knowledge predicts the representational structure of facial emotion perception. Nature Human Behaviour, 2(8), 581-591. doi:https://doi.org/10.1038/s41562-018-0376-6

Chelazzi, L., Miller, E. K., Duncan, J., & Desimone, R. (1993). A neural basis for visual search in inferior temporal cortex. Nature, 363(6427), 345-347.

D’Zmura, M. (1991). Color in visual search. Vision Research, 31, 951–966.

Desimone, R., & Duncan, J. (1995). Neural mechanisms of selective visual attention. Annu Rev Neurosci, 18, 193-222. doi:https://doi.org/10.1146/annurev.ne.18.030195.001205

Duncan, J., & Humphreys, G. W. (1989a). Visual search and stimulus similarity. Psychological Review, 96(3), 433-458.

Duncan, J., & Humphreys, G. W. (1989b). Visual search and stimulus similarity. Psychological Review.

Gendron, M., & Barrett, L. F. (2018). Emotion perception as conceptual synchrony. Emotion Review, 10, 101-110. doi:https://doi.org/10.1177/1754073917705717

Geng, J. J., DiQuattro, N. E., & Helm, J. (2017). Distractor probability changes the shape of the attentional template. Journal of Experimental Psychology: Human Perception and Performance, 43(12), 1993-2007. doi:https://doi.org/10.1037/xhp0000430

Geng, J. J., & Witkowski, P. (2019). Template-to-distractor distinctiveness regulates visual search efficiency. Current Opinion in Psychology. doi:https://doi.org/10.1016/j.copsyc.2019.01.003

Hodsoll, J., & Humphreys, G. W. (2001). Driving attention with the top down: the relative contribution of target templates to the linear separability effect in the size dimension. Percept Psychophys, 63(5), 918-926. Retrieved from https://www.ncbi.nlm.nih.gov/pubmed/11521856

Hout, M. C., Godwin, H. J., Fitzsimmons, G., Robbins, A., Menneer, T., & Goldinger, S. D. (2016). Using multidimensional scaling to quantify similarity in visual search and beyond. Attention Perception & Psychophysics, 78(1), 3-20. doi:https://doi.org/10.3758/s13414-015-1010-6

Hout, M. C., & Goldinger, S. D. (2015). Target templates: the precision of mental representations affects attentional guidance and decision-making in visual search. Atten Percept Psychophys, 77(1), 128-149. doi:https://doi.org/10.3758/s13414-014-0764-6

Lindquist, K. A., & Gendron, M. (2013). What’s in a Word? Language Constructs Emotion Perception. Emotion Review, 5(1), 66-71. doi:https://doi.org/10.1177/1754073912451351

Lindquist, K. A., Gendron, M., Barrett, L. F., & Dickerson, B. C. (2014). Emotion perception, but not affect perception, is impaired with semantic memory loss. Emotion, 14(2), 375-387. doi:https://doi.org/10.1037/a0035293

Lindquist, K. A., Satpute, A. B., & Gendron, M. (2015). Does language do more than communicate emotion? Curr Dir Psychol Sci, 24(2), 99-108. doi:https://doi.org/10.1177/0963721414553440

Liu, T., Larsson, J., & Carrasco, M. (2007). Feature-based attention modulates orientation-selective responses in human visual cortex. Neuron, 55(2), 313-323. doi:https://doi.org/10.1016/j.neuron.2007.06.030

Myers, N. E., Rohenkohl, G., Wyart, V., Woolrich, M. W., Nobre, A. C., & Stokes, M. G. (2015). Testing sensory evidence against mnemonic templates. Elife, 4, e09000. doi:https://doi.org/10.7554/eLife.09000

Myers, N. E., Stokes, M. G., & Nobre, A. C. (2017). Prioritizing Information during Working Memory: Beyond Sustained Internal Attention. Trends Cogn Sci, 21(6), 449-461. doi:https://doi.org/10.1016/j.tics.2017.03.010

Navalpakkam, V., & Itti, L. (2005). Modeling the influence of task on attention. Vision Res, 45(2), 205-231. doi:https://doi.org/10.1016/j.visres.2004.07.042

Russell, J. A. (2003). Core Affect and the Psychological Construction of Emotion. Psychological Review, 110(1), 145-172.

Russell, J. A., & Barrett, L. F. (1999). Core affect, prototypical emotional episodes, and other things called emotion: Dissecting the elephant. Journal of Personality and Social Psychology, 76, 805-819.

Scolari, M., Byers, A., & Serences, J. T. (2012). Optimal deployment of attentional gain during fine discriminations. J Neurosci, 32(22), 7723-7733. doi:https://doi.org/10.1523/JNEUROSCI.5558-11.2012

Scolari, M., & Serences, J. T. (2009). Adaptive allocation of attentional gain. J Neurosci, 29(38), 11933-11942. doi:https://doi.org/10.1523/JNEUROSCI.5642-08.2009

Treisman, A., & Gelade, G. (1980). A feature-integration theory of attention. Cognitive Psychology.

Treue, S., & Martinez-Trujillo, J. (2003). Feature-based attention influences motion processing gain in macaque visual cortex. Nature, 399.

Wolfe, J. M., & Horowitz, T. S. (2017a). Five factors that guide attention in visual search. Nature Human Behaviour, 1(3), 0058. doi:https://doi.org/10.1038/s41562-017-0058

Wolfe, J. M., & Horowitz, T. S. (2017b). Five factors that guide attention in visual search. Nature Human Behaviour, 1(3). doi:https://doi.org/10.1038/s41562-017-0058

Yu, X., & Geng, J. J. (2018). The Attentional Template is Shifted and Asymmetrically Sharpened by Distractor Context. Journal of Experimental Psychology: Human Perception and Performance. doi:https://doi.org/10.1037/xhp0000609

ZeeAbrahamsen, E., & Haberman, J. (2018). Correcting "confusability regions" in face morphs. Behav Res Methods, 50(4), 1686-1693. doi:https://doi.org/10.3758/s13428-018-1039-2

Zhang, W., & Luck, S. J. (2009). Feature-based attention modulates feedforward visual processing. Nat Neurosci, 12(1), 24-25. doi:https://doi.org/10.1038/nn.2223

Acknowledgements

We would like to thank Naomi Conley for helpful early discussions, and Mary Kosoyan and Alexis Oyao for help with data collection. The work was supported by NSF BCS-201502778 and 1R01MH113855 to JJG.

Open practices statement

The data and materials for all experiments are available from the authors upon request.

Author information

Contributions

B.W. and J.J.G developed the study concept and design, collected data, and performed the data analysis and interpretation. J.H. provided the face stimuli. All authors contributed to writing the manuscript.

About this article

Cite this article

Won, BY., Haberman, J., Bliss-Moreau, E. et al. Flexible target templates improve visual search accuracy for faces depicting emotion. Atten Percept Psychophys 82, 2909–2923 (2020). https://doi.org/10.3758/s13414-019-01965-4

DOI: https://doi.org/10.3758/s13414-019-01965-4