Abstract

Objects in peripersonal space are of great importance for interaction with the sensory world. A variety of research exploring sensory processing in peripersonal space has produced extensive evidence for altered vision near the hands. However, visual representations of the peripersonal space surrounding the feet remain unexplored. In a set of four experiments, we investigated whether observers experience biases in visual processing for objects near the feet that mirror the alterations associated with near-hand space. Participants performed attentional-cueing tasks in which they detected targets appearing (1) near or far from a single visible foot, (2) near one of two visible feet, (3) near or far from a nonfoot visual anchor, or (4) near or far from an occluded foot. We found a temporal cost associated with detecting targets appearing far from a visible foot, but no biases were associated with targets appearing near versus far from either a nonfoot visual anchor or an occluded foot. These results provide the first evidence suggesting that objects within stepping or kicking distance are processed differently from objects outside of peripersonal foot space.

A variety of neurophysiological, neuropsychological, and psychophysical evidence suggests that when observers view objects that are within peripersonal space—objects they can easily reach with their hands—they experience alterations in visual processing. The hands’ proximity to visual stimuli introduces changes in perception (Cosman & Vecera, 2010), attention (e.g., Abrams, Davoli, Du, Knapp, & Paull, 2008; Reed, Grubb, & Steele, 2006), and memory (Davoli, Brockmole, & Goujon, 2012; Kelly & Brockmole, 2014; Thomas, Davoli, & Brockmole, 2013; Tseng & Bridgeman, 2011; see Brockmole, Davoli, Abrams, & Witt, 2013, and Tseng, Bridgeman, & Juan, 2012, for reviews). The hands need not be visible to an observer for their presence to influence visual processing (Abrams et al., 2008; Reed et al., 2006), but processing changes occur specifically within the hands’ grasping space (Adam, Bovend’Eert, van Dooren, Fischer, & Pratt, 2012; Reed, Betz, Garza, & Roberts, 2010), likely reflecting an adaptive interaction between the visual system and the body that successfully guides behavior (e.g., Graziano & Cooke, 2006). Recent work has supported the idea that vision in peripersonal space is specifically tuned to facilitate the processing of action-relevant information (Thomas, 2015).

To date, the investigation of altered vision in peripersonal space has focused exclusively on comparing observers’ performance on visual-processing tasks when they viewed stimuli near their hand(s) with conditions in which stimuli appear far from the hands. However, recent observations of interactions between tactile, proprioceptive, and visual inputs near the hands and feet have shown that the interplays between vision and touch are remarkably similar for these effectors, suggesting that a representation of peripersonal space exists for the feet (Flögel, Kalveram, Christ, & Vogt, 2016; Schicke, Bauer, & Röder, 2009). Like the hands, the feet are automatically driven by visual input, rapidly adjusting position when a target for action changes location (Reynolds & Day, 2005). And, like representations of the hands’ positions, representations of the positions of the feet are not strictly tied to anatomical location, but instead appear to be mapped in external coordinates based on a visual reference frame that may aid in action production (Schicke & Röder, 2006). Weeks before they are able to reach for seen toys with their hands, infants are able to use their feet to make contact with desired objects (Galloway & Thelen, 2004). Early primates displayed similar grasping functions in both hands and feet (MacNeilage, 1990), and evidence suggests that hominin hands and feet coevolved, with changes in the toes facilitating matching evolutionary changes in the hands that may have aided the development of tool use (Rolian, Lieberman, & Hallgrimsson, 2010). The parallels between the hands and feet—and the relevance that both have for action—raise the possibility that observers may show biases in processing stimuli presented near the feet. Are objects within stepping or kicking distance viewed differently than objects outside of this peripersonal space?

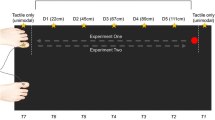

To examine visual processing near the feet, we adapted a classic attentional-orienting paradigm (Posner, 1980) that researchers have successfully used to study altered vision near the hands (Reed et al., 2010; Reed et al., 2006; Sun & Thomas, 2013; Thomas, 2013). These studies have shown that participants are faster to detect targets appearing near their hands than targets outside of the hands’ grasping space. We explored the possibility that similar biases in vision may occur near the feet. Participants detected targets appearing near or far from a single visible foot (Exp. 1), near one of two visible feet (Exp. 2), near or far from a visual anchor (Exp. 3), or near or far from an occluded foot (Exp. 4). Across experiments, we found evidence for altered vision specifically near a single visible foot.

Experiment 1

To investigate any differences in the processing of stimuli appearing near versus far from the feet, we asked participants to perform a standard covert-attention task on a display that rested in front of them on the floor. We compared participants’ performance on this task under conditions in which they rested their right or their left foot on the right or left side of the display, respectively, against a control condition in which participants instead kept both feet tucked out of view underneath their chair.

Method

Participants

Forty-eight undergraduate students from North Dakota State University participated for course credit.

Stimuli and apparatus

We used MATLAB and the Psychophysics Toolbox to present a typical Posner attentional-cueing paradigm on a 20.1-in. Dell 2007FPb monitor with a refresh rate of 60 Hz. The monitor rested face up on the floor. The stimuli were a central black fixation cross (~2°) flanked on the left and the right by black square frames (~2°) at a distance of approximately 7°, and a solid black target circle (~1°) on a white background. Participants made responses with a standard keyboard.

Procedure and design

Participants removed their shoes, were seated, and then were asked to lean forward to bring the screen into view (Fig. 1). The keyboard used for response was positioned in the lap of the participant. Participants performed a target detection task designed to match the conditions of previous experiments that had investigated visual processing near the hands (e.g., Reed et al., 2006). Each trial began with the presentation of a central fixation cross and two square frames, one on either side of fixation. After a random delay between 1,500 and 3,000 ms, the border of one of the square frames darkened, serving as a cue to the target location. On 70 % of trials the cue was valid, and the target appeared in the cued location 200 ms after cue onset. On 20 % of trials the cue was invalid, and the target instead appeared in the uncued location. The target remained on the display until participants indicated that they had detected it by pressing the “H” key on the keyboard. The remaining 10 % of trials were catch trials in which no target appeared and the cue display remained onscreen for 3,000 ms until the next trial began.
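
The trial structure described above can be sketched in a few lines of Python. This is only an illustration, not the authors' MATLAB/Psychophysics Toolbox code: the function name `build_block`, the dictionary keys, the random choice of cue side, and the uniform sampling of the pre-cue delay are all assumptions made for the example. Only the 70/20/10 split, the 1,500 to 3,000 ms delay range, and the 200-ms cue-to-target interval come from the text.

```python
import random


def build_block(n_trials=60, seed=None):
    """Build one block with a 70/20/10 valid/invalid/catch split (hypothetical helper)."""
    rng = random.Random(seed)
    n_valid = n_trials * 70 // 100      # 42 of 60 trials
    n_invalid = n_trials * 20 // 100    # 12 of 60 trials
    n_catch = n_trials - n_valid - n_invalid  # remaining 6 trials
    kinds = ["valid"] * n_valid + ["invalid"] * n_invalid + ["catch"] * n_catch
    rng.shuffle(kinds)

    trials = []
    for kind in kinds:
        # Assumption: the cued side is chosen at random on every trial.
        cue_side = rng.choice(["left", "right"])
        if kind == "valid":
            target_side = cue_side
        elif kind == "invalid":
            target_side = "left" if cue_side == "right" else "right"
        else:
            target_side = None  # catch trial: no target appears
        trials.append({
            "kind": kind,
            "cue_side": cue_side,
            "target_side": target_side,
            # Assumption: the delay is drawn uniformly from the stated range.
            "cue_delay_ms": rng.uniform(1500, 3000),
            "cue_target_interval_ms": None if kind == "catch" else 200,
        })
    return trials
```

Calling `build_block(60, seed=1)` returns 60 trial dictionaries, of which 42 are valid, 12 invalid, and 6 catch trials.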

Participants began the experiment by completing one block of 20 practice trials in which they made responses on the keyboard with the right hand and kept both feet tucked under their chair. Following practice, participants completed 12 blocks of 60 trials each in three different foot placement conditions. Participants performed four blocks of trials in which they rested their right foot on the right side of the display, placing the inner side of the foot adjacent to the right target location (right foot near); four blocks of trials in which they similarly rested their left foot on the left side of the display (left foot near); and four blocks of trials in which they instead kept both feet tucked under the chair and out of view (control). For each of the foot placement conditions, participants performed two blocks in which they made target detection responses with the right hand, and two blocks in which they responded with the left hand. Before a block of trials began, participants viewed a screen with the fixation cross, square frames, and written instructions indicating the foot position and response hand required for that block. On foot-adjacent blocks, this instruction screen also displayed a row of black dots between the left or the right square frame and the edge of the display, which served as a guide to ensure consistent foot placement across participants. An experimenter ensured that participants complied with the foot placement instructions. When the participant pressed a key to start the block, these dots disappeared. Trials within a block and blocks within the experiment were presented in a randomized order.
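
The block design above (three foot placements, two response hands, two blocks per combination, presented in random order) might be generated as follows. The names `FOOT_CONDITIONS`, `RESPONSE_HANDS`, and `build_block_order` are hypothetical, and the sketch assumes a simple unconstrained shuffle, which the text does not specify in detail.

```python
import itertools
import random

FOOT_CONDITIONS = ["right foot near", "left foot near", "control"]
RESPONSE_HANDS = ["right", "left"]
BLOCKS_PER_CELL = 2  # two blocks for each foot placement x response hand pairing


def build_block_order(seed=None):
    """Return the 12 experimental blocks in a random order (hypothetical helper)."""
    rng = random.Random(seed)
    blocks = [
        {"foot": foot, "hand": hand}
        for foot, hand in itertools.product(FOOT_CONDITIONS, RESPONSE_HANDS)
        for _ in range(BLOCKS_PER_CELL)
    ]
    rng.shuffle(blocks)  # assumption: plain shuffle with no ordering constraints
    return blocks
```

This yields 3 × 2 × 2 = 12 blocks, four per foot placement condition, matching the counts given in the procedure.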

Results and discussion

Five participants made response errors on more than 50 % of catch trials and were excluded from further analyses. The remaining 43 participants had a mean catch-trial error rate of 16.8 %. To eliminate anticipation and inattention errors, trials with a reaction time of less than 200 ms or greater than 1,000 ms (11.4 % of the total data) were also excluded from the analyses. Mean reaction times were calculated for targets on either side of the monitor under each of the three foot placement conditions and are displayed in Fig. 2 (see Note 1). To examine the influence of foot proximity on target detection, we analyzed the data using a 3 × 2 within-subjects analysis of variance (ANOVA) with the factors Foot Placement (right, left, or no foot on display) and Target Side (target near or far from the foot; see Note 2). Although the main effect of foot placement was not statistically significant [F(2, 84) = 1.697, p = .190], we did observe a significant main effect of target side [F(1, 42) = 7.910, p = .007, $\eta_p^2$ = .158]. More importantly, the interaction between foot placement and target side was also significant [F(2, 84) = 5.918, p = .004, $\eta_p^2$ = .123] (see Note 3). Bonferroni-adjusted post-hoc pairwise comparisons showed that participants were significantly slower to detect targets far from the right foot than targets near the right foot [t(42) = 3.318, p = .006], whereas the reaction time differences for near versus far targets in the left-foot and control conditions failed to reach significance (both adjusted p values > .17).
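
The exclusion and trimming rules described above can be expressed as a short Python sketch. The trial dictionaries and their keys (`kind`, `responded`, `rt_ms`, `foot`, `target`) are a hypothetical data layout chosen for illustration; only the 50 % catch-trial criterion and the 200 to 1,000 ms reaction time window are taken from the text.

```python
from statistics import mean


def catch_error_rate(trials):
    """Proportion of catch trials on which the participant responded."""
    catch = [t for t in trials if t["kind"] == "catch"]
    return sum(1 for t in catch if t["responded"]) / len(catch)


def keep_participant(trials, max_catch_error=0.50):
    """Exclude participants who erred on more than 50 % of catch trials."""
    return catch_error_rate(trials) <= max_catch_error


def trimmed_cell_means(trials, low_ms=200, high_ms=1000):
    """Mean reaction time per (foot placement, target side) cell after trimming."""
    cells = {}
    for t in trials:
        if t["kind"] == "catch":
            continue  # catch trials have no target and therefore no reaction time
        if low_ms <= t["rt_ms"] <= high_ms:  # drop RTs < 200 ms or > 1,000 ms
            cells.setdefault((t["foot"], t["target"]), []).append(t["rt_ms"])
    return {cell: mean(rts) for cell, rts in cells.items()}
```

In this layout, each participant's cell means would then feed the 3 × 2 within-subjects ANOVA; the ANOVA itself is not reproduced here.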

Fig. 2. Results from Experiment 1. Error bars represent ±1 SEM

The results of Experiment 1 provide the first evidence that the proximity of an observer’s feet to visual information has an impact on visual processing. We found evidence of a temporal processing cost for stimuli distant from the right foot. However, the presence of the left foot on the display did not seem to impact target detection in a similar manner. Investigations of altered vision near the hands have found that right-handed observers tend to show biases exclusively for the space around their dominant hand (Le Bigot & Grosjean, 2012; Lloyd, Azañón, & Poliakoff, 2010; Tseng & Bridgeman, 2011), consistent with the notion that these biases are specifically tied to the locations where action is most likely to occur. Although we did not collect foot preference information from our participants in this experiment, the rates of right foot dominance in the population are generally high (~80 %; e.g., Gentry & Gabbard, 1995), and it is reasonable to assume the majority of our participants would show a preference for acting with the right foot over the left. Our results are therefore consistent with a deprioritization of processing for visual information outside of the foot’s action space.

Experiment 2

The results of the first experiment suggest that observers may experience changes in visual processing when viewing objects near their feet. To further investigate these processing changes in peripersonal foot space, in Experiment 2 we examined how the presence of both feet on the display influenced target detection. Although research on altered vision near the hands has uncovered biases near a single hand (e.g., Reed et al., 2006), as well as biases when both hands are held near either side of a display (e.g., Abrams et al., 2008), the changes in visual processing associated with viewing information near one versus two hands are not necessarily the same (Bush & Vecera, 2014). In Experiment 2, we directly investigated how the presence of a single foot on one side of the display versus the presence of both feet on opposite sides of the display influenced visual processing.

Method

Participants

Forty-four volunteers from North Dakota State University, recruited from a summer e-mail list, who had not participated in the previous experiment took part in Experiment 2 for $10 in monetary compensation.

Stimuli and apparatus

Experiment 2 was a direct replication of Experiment 1, with a single exception: We replaced the control condition, in which participants kept both feet away from the display, with a two-feet condition in which participants rested their right foot on the right side of the display and their left foot on the left side of the display.

Results and discussion

One participant made response errors on more than 50 % of catch trials and was excluded from further analyses. The mean catch trial error rate for the remaining 43 participants was 7.0 %. As in Experiment 1, individual trials with a reaction time of less than 200 ms or greater than 1,000 ms (7.98 % of the total data) were eliminated. Figure 3 displays mean reaction times to near and far targets under the two-feet (see Note 4), right-foot, and left-foot conditions.

Fig. 3. Results from Experiment 2. Error bars represent ±1 SEM

We again analyzed the data using a 3 × 2 within-subjects ANOVA with the factors Foot Placement (right foot, left foot, or two feet) and Target Side (target near or far from the foot) to examine the influence of foot proximity on target detection. A significant main effect of target side emerged [F(1, 42) = 8.754, p = .005, $\eta_p^2$ = .172], but neither the main effect of foot placement [F(2, 84) = 0.373, p = .690] nor the interaction of foot placement and target side was significant [F(2, 84) = 1.389, p = .255]. The main effect of target side, in the absence of an interaction between this factor and foot placement, suggests that participants were generally faster to detect targets appearing near a single foot than targets appearing far from this foot and, in the two-feet condition, faster to detect targets appearing near their right foot than targets appearing near their left foot. Nevertheless, in the interest of fully replicating the analyses carried out for Experiment 1, we again performed Bonferroni-adjusted pairwise comparisons between the target-near and target-far conditions for each of the three foot placement conditions. These comparisons indicated that in the right-foot condition, participants were significantly faster to detect targets near their foot than targets far from their foot [t(42) = 3.441, p = .003], whereas in the two-feet and left-foot conditions, reaction times did not differ significantly between the near and far cases (both p values > .25).
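
As a reading aid, the partial eta squared effect sizes reported alongside the F tests can be recovered from each F value and its degrees of freedom through the standard identity partial eta squared = (F × df_effect) / (F × df_effect + df_error). The sketch below illustrates that identity only; the function name is hypothetical, and it is not a reconstruction of the authors' analysis code.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of target side in Experiment 2: F(1, 42) = 8.754
print(round(partial_eta_squared(8.754, 1, 42), 3))  # 0.172
```

Because the published F values are rounded, a value recomputed this way can differ from the reported effect size in the third decimal place.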

The results of Experiment 2 replicate the key findings of our first experiment, again showing that participants incur a processing cost in detecting targets appearing far from their right foot as compared to targets appearing near this foot. The participants in Experiment 2 were recruited from a somewhat different population than those in Experiment 1: Whereas the sample for the first experiment consisted primarily of young undergraduate students, the paid recruitment in the summer months for Experiment 2 ensured a broader sample of older students and community volunteers. This may in part explain why the mean reaction times for Experiment 2 were substantially slower than those for Experiment 1, as well as the lower rate of catch-trial errors in Experiment 2. However, it is important to note that despite these differences, the magnitudes of the near-foot effect for the right foot were similar across Experiments 1 and 2, pointing to the potential generality and robustness of this effect.

Experiment 3

The results of the first two experiments suggest that observers may experience changes in visual processing when viewing objects near their feet. However, an alternative explanation for our results is that the right foot served as a visual anchor, biasing visual processing not because of a special visual interaction in near-foot peripersonal space, but simply because it was a salient object on the display. Although research on altered vision near the hands has suggested that the presence of visual anchors such as boards or rods on a display does not create the same changes in processing as the presence of the hands (e.g., Cosman & Vecera, 2010; Reed et al., 2006), because of the somewhat unusual viewing conditions that we employed in Experiments 1 and 2, we chose to examine this issue with our floor display setup. In Experiment 3, we examined whether the bias against targets appearing far from the foot in the first two experiments was specifically tied to the foot’s presence, or if any similarly positioned visual anchor could create similar biases.

Method

Participants

Fifty-six undergraduate students from North Dakota State University who had not provided data for the first two experiments participated in Experiment 3 for course credit.

Stimuli, apparatus, procedure, and design

Experiment 3 was also a direct replication of Experiment 1, with a single exception: Participants performed the attentional-cueing paradigm with their feet always tucked beneath their chair and out of sight. On some blocks of trials, rather than asking participants to rest their right or left foot on the display, an experimenter placed a wooden block roughly equal in size to an average foot on the display in either the right or the left location (see Fig. 1c).

Results and discussion

Four participants made response errors on more than 50 % of catch trials and were excluded from further analyses. To ensure equal sample sizes across experiments, the data from the final nine participants were left unanalyzed, leaving data from 43 participants. The mean catch-trial error rate for these remaining participants was 18.1 %. As in the previous experiments, individual trials with a reaction time of less than 200 ms or greater than 1,000 ms (11.8 % of the total data) were eliminated. Figure 4 displays the mean reaction times to near and far targets under the right-block, left-block, and no-block control conditions.

Fig. 4. Results from Experiment 3. Error bars represent ±1 SEM

A 3 × 2 within-subjects ANOVA with the factors Block Placement (right, left, or no block) and Target Side (target near or far from the block) yielded significant main effects of both block placement [F(2, 84) = 4.683, p = .012, $\eta_p^2$ = .100] and target side [F(1, 42) = 7.504, p = .009, $\eta_p^2$ = .152], but no significant interaction between these factors [F(2, 84) = 0.449, p = .640]. Bonferroni-adjusted pairwise comparisons indicated that the block-absent control condition did not differ significantly from either of the block-present conditions (both p values > .30), but that participants were generally slower to respond on trials in which a block was present on the left side of the display than on trials in which a block was present on the right side of the display (mean difference = 10.17, p = .021). Although the main effect of target side was significant, Bonferroni-adjusted pairwise comparisons between target far and target near for the block placement conditions suggested that participants were not facilitated in detecting targets near a block [t(42) = 0.71, p > .7, for the right-block condition; t(42) = 1.90, p > .15, for the left-block condition].
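
For readers unfamiliar with the adjustment, a Bonferroni correction multiplies each uncorrected p value by the number of comparisons in the family and caps the result at 1. The sketch below assumes a family of three comparisons (one per placement condition); the function name and the p values are hypothetical and are not taken from the data reported here.

```python
def bonferroni(p_values):
    """Bonferroni-adjust a family of p values: multiply by family size, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical uncorrected p values for three near-versus-far comparisons
adjusted = bonferroni([0.002, 0.06, 0.40])
```

With these made-up inputs, the adjusted values are approximately .006, .18, and 1.0, so only the first comparison would remain significant at the .05 level.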

The results of Experiment 3 suggest that the findings of the first two experiments were indeed due to the presence of the participants’ feet on the display. Consistent with previous research investigating visual-processing biases associated with stimuli appearing near the hands (e.g., Reed et al., 2006), we found no evidence that participants were facilitated in detecting targets appearing near a non-body-part visual anchor.

Experiment 4

Although the results of Experiments 1 and 2 provided initial support for altered vision in near-foot peripersonal space, it was unclear whether the costs associated with detecting targets far from the foot were a result of proprioceptive or visual information about the foot’s proximity to a target. People gaze ahead of, rather than down at, their feet during typical locomotion, which raises the possibility that visual input about foot location is not a critical component of altered vision near the feet when observers can rely on proprioceptive information. In Experiment 4, we examined the influence of proprioceptive signals of foot proximity to a stimulus in the absence of a visual signal of the feet. As in Experiment 1, participants performed the covert-orienting task while resting their right or left foot on the side of the display or while keeping both feet away from the display. However, in Experiment 4, when a foot was positioned on the display, a curtain prevented participants from seeing the foot.

Method

Participants

Fifty undergraduate students from North Dakota State University who had not provided data in the first three experiments participated in Experiment 4 for course credit.

Stimuli, apparatus, procedure, and design

Experiment 4 was also a replication of Experiment 1, with a single exception: In the conditions in which participants rested a foot near the display, their view of the foot was occluded by a curtained box that fit around the thigh (see Fig. 1d).

Results and discussion

Six participants made response errors on more than 50 % of catch trials and were excluded from further analyses. To ensure equal sample sizes across experiments, the data from the 50th participant were left unanalyzed, leaving data from 43 participants. The mean catch-trial error rate for these participants was 18.0 %. As in the previous experiments, individual trials with a reaction time of less than 200 ms or greater than 1,000 ms (10.6 % of the total data) were eliminated. Figure 5 displays the mean reaction times to near and far targets under the occluded-right-foot, occluded-left-foot, and no-foot control conditions.

Fig. 5. Results from Experiment 4. Error bars represent ±1 SEM

We again analyzed the data using a 3 × 2 within-subjects ANOVA with the factors Foot Placement (occluded right foot, occluded left foot, or no-foot control) and Target Side (target near or far from the foot) to examine the influence of proprioceptive feedback of foot proximity on target detection. Although there was a significant main effect of foot placement [F(2, 84) = 5.113, p = .008, $\eta_p^2$ = .109], neither the main effect of target side [F(1, 42) = 0.189, p = .666] nor the interaction of foot placement and target side was significant [F(2, 84) = 0.793, p = .456]. Bonferroni-adjusted pairwise comparisons showed that the main effect of foot placement was driven by participants’ slower overall reaction times in the occluded-left-foot condition than in the control condition (mean difference = –9.56, p = .015; all other p values > .1).

The results of Experiment 4 suggest that proprioceptive feedback about an occluded foot’s proximity to a visual display is not sufficient to produce the costs associated with detecting targets appearing far from the foot that we observed in our first experiment. Although participants were generally slower to detect targets when they rested their left foot on the display than when their feet were tucked under the chair, whether a target was near or far from the occluded foot had no influence on reaction times. Given the facts that proprioceptive signals about the hands’ proximity to visual information are sufficient to produce altered vision near the hands (Abrams et al., 2008; Reed et al., 2006) and that one of the most common actions associated with the feet—walking—does not rely on foot visibility, this result is somewhat surprising. However, research on crossmodal congruency effects near the feet has shown that viewing the limb is important for these visual–tactile interactions (Schicke et al., 2009). And, although normal walking does not involve looking at the feet, in circumstances in which altered vision near the feet would potentially be most beneficial to action (e.g., when preparing to kick a ball or when locomoting over unsteady terrain), gaze is more likely to be directed down to the feet or to a place where they will fall (Hollands, Marple-Horvat, Henkes, & Rowan, 1995).

General discussion

Although investigation into the manner in which vision within peripersonal space is biased toward action-relevant information has flourished in the last decade, this work has focused exclusively on the region of peripersonal space near the hands. We expanded the consideration of peripersonal space to include locations near the feet, because the feet are also important effectors for action. Mirroring findings from the hand literature (e.g., Le Bigot & Grosjean, 2012), in Experiments 1 and 2 we found that participants were faster to detect simple targets appearing near their right foot than targets appearing far from this foot. In Experiment 3, we found no evidence that in our experimental setup participants prioritized targets appearing near a nonfoot visual anchor, a finding that was also consistent with previous research on altered vision near the hands (e.g., Reed et al., 2006). However, in Experiment 4, when a foot was placed behind an occluder, participants showed no facilitation in detecting targets near the foot. Although proprioceptive information about a hand’s proximity to visual information seems to be sufficient to introduce biases in processing (e.g., Abrams et al., 2008), altered vision near the feet may rely on an observer’s ability to see the relationship between the foot and the visual stimulus.

Why might observers deprioritize processing of visual information appearing outside the foot’s peripersonal space? When there are environmental constraints on foot placement, as is the case when hiking over difficult terrain or when moving to kick an object, focusing too much on visual information far from the feet could detract from processing information that has immediate relevance for maintaining balance or making contact with a target object. That is, objects outside the foot’s peripersonal space have the potential to interfere with effective action production. Although the necessity of foot–eye coordination may not be as prevalent as visually guided reaching with the hands, one need only bring to mind a hiker on a narrow suspension bridge to vividly illustrate how crucial visual control of accurate foot placement can be to successful action.

Although we have uncovered initial evidence pointing to altered vision near the feet that in some respects mirrors recent findings documenting changes in visual processing near the hands, it is unclear whether these peripersonal biases reflect a common underlying mechanism. The literature on vision near the hands lacks consensus: Some have advocated an attentional mechanism that prioritizes near-hand space (e.g., Reed et al., 2006), whereas others have advanced a theory that stimuli in near-hand space receive enhanced representation in the high-temporal-acuity magnocellular pathway (e.g., Gozli, West, & Pratt, 2012; for a recent review, see Goodhew, Edwards, Ferber, & Pratt, 2015). Our results are potentially consistent with an attentional-prioritization account: Participants may have been slower to respond to targets appearing far from the foot because stimuli in near-foot space experienced a baseline attentional prioritization. However, our results are also consistent with the magnocellular-enhancement account, since the target detection task that participants performed required rapid responses to a large luminance change to which the magnocellular pathway is sensitive. Although we favor an interpretation that altered vision near the hands and feet may reflect a more general interplay between the visual system and the body that facilitates effective action, the mechanism behind this interaction requires further investigation.

We have found preliminary evidence that the visual system may process objects in the environmental space proximal to the feet differently from more distant objects. Although altered vision near the feet may contribute to successful guidance of action, future work will be necessary to examine the specific links between visual biases and the affordances for actions associated with the feet. For example, planned locomotion over uneven terrain may influence visual processing along the planned path. A better understanding of visual processing in near-foot space may help define any role that visual processing plays in fall-related injuries in aging populations. Although research on fall-related injury has typically focused on decreased visual acuity as a result of aging, recent work has begun to identify a relationship between visual cognition and falls (Reed-Jones et al., 2013). If the aspects of visual cognition that contribute to falls are affected uniquely in the space proximal to the feet, then a better understanding of these processes could potentially lead to fall prevention strategies for older adults. Our work is only a first step in understanding vision in peripersonal space, more broadly defined, but it is clear that the relationship between the visual system and the body’s positioning extends beyond the hands’ grasping space.

Notes

1. Although we manipulated cue validity, we chose to collapse the data across valid and invalid trials to simplify presentation of the results. Across experiments, we found no strong evidence that cue validity influenced attentional prioritization of space near the foot. The supplemental materials to this article provide summary data and analyses incorporating the Cue Validity factor.

2. Note that for the control condition, in which no foot rested on the display, the assignments of the target-near and target-far designations were arbitrary. Across experiments, targets appearing on the right side of the display were assigned the label “target near,” and targets appearing on the left side of the display were assigned the label “target far” for the control condition.
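
The labeling rule in this note can be written out explicitly. The sketch below assumes the three foot placement conditions of Experiment 1 and uses a hypothetical function name; the mapping for the control condition follows the arbitrary convention stated in the note (right-side targets labeled "near").

```python
def label_target(foot_condition, target_side):
    """Return 'near' or 'far' for a target, given the foot placement condition."""
    if foot_condition == "right foot near":
        near_side = "right"
    elif foot_condition == "left foot near":
        near_side = "left"
    elif foot_condition == "control":
        # No foot on the display: the label is arbitrary, right is called "near".
        near_side = "right"
    else:
        raise ValueError(f"unknown foot condition: {foot_condition!r}")
    return "near" if target_side == near_side else "far"
```

For example, `label_target("left foot near", "right")` returns `"far"`, whereas `label_target("control", "right")` returns `"near"`.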

3. This critical interaction between foot placement and target side remained significant in an analysis in which the assignments of the “near” and “far” labels were swapped in the control condition [F(2, 84) = 10.705, p < .001, $\eta_p^2$ = .203], demonstrating that this result holds regardless of how the “near” and “far” labels are assigned in the control condition.

References

Abrams, R. A., Davoli, C. C., Du, F., Knapp, W. H., III, & Paull, D. (2008). Altered vision near the hands. Cognition, 107, 1035–1047. doi:10.1016/j.cognition.2007.09.006

Adam, J. J., Bovend’Eert, T. J. H., van Dooren, F. E. P., Fischer, M. H., & Pratt, J. (2012). The closer the better: Hand proximity dynamically affects letter recognition accuracy. Attention, Perception, & Psychophysics, 74, 1533–1538. doi:10.3758/s13414-012-0339-3

Brockmole, J. R., Davoli, C. C., Abrams, R. A., & Witt, J. K. (2013). The world within reach: Effects of hand posture and tool-use on visual cognition. Current Directions in Psychological Science, 22, 38–44. doi:10.1177/0963721412465065

Bush, W. S., & Vecera, S. P. (2014). Differential effect of one versus two hands on visual processing. Cognition, 133, 232–237.

Cosman, J. D., & Vecera, S. P. (2010). Attention affects visual perceptual processing near the hand. Psychological Science, 21, 1254–1258. doi:10.1177/0956797610380697

Davoli, C. C., Brockmole, J. R., & Goujon, A. (2012). A bias to detail: How hand position modulates visual learning and visual memory. Memory & Cognition, 40, 352–359. doi:10.3758/s13421-011-0147-3

Flögel, M., Kalveram, K. T., Christ, O., & Vogt, J. (2016). Application of the rubber hand illusion paradigm: Comparison between upper and lower limbs. Psychological Research, 80, 298–306. doi:10.1007/s00426-015-0650-4

Galloway, J. C., & Thelen, E. (2004). Feet first: Object exploration in young infants. Infant Behavior & Development, 27, 107–112. doi:10.1016/j.infbeh.2003.06.001

Gentry, V., & Gabbard, C. (1995). Foot preference behavior: A developmental perspective. Journal of General Psychology, 122, 37–45.

Goodhew, S. C., Edwards, M., Ferber, S., & Pratt, J. (2015). Altered visual perception near the hands: A critical review of attentional and neurophysiological models. Neuroscience & Biobehavioral Reviews, 55, 223–233. doi:10.3758/s13423-015-0844-1

Gozli, D. G., West, G. L., & Pratt, J. (2012). Hand position alters vision by biasing processing through different visual pathways. Cognition, 124, 244–250.

Graziano, M. S. A., & Cooke, D. F. (2006). Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia, 44, 845–859. doi:10.1016/j.neuropsychologia.2005.09.009

Hollands, M. A., Marple-Horvat, D. E., Henkes, S., & Rowan, A. K. (1995). Human eye movements during visually guided stepping. Journal of Motor Behavior, 27, 155–163.

Kelly, S. P., & Brockmole, J. R. (2014). Hand proximity differentially affects visual working memory for color and orientation in a binding task. Frontiers in Psychology, 5, 318. doi:10.3389/fpsyg.2014.00318

Le Bigot, N., & Grosjean, M. (2012). Effects of handedness on visual sensitivity in perihand space. PLoS ONE, 7, e43150. doi:10.1371/journal.pone.0043150

Lloyd, D. M., Azañón, E., & Poliakoff, E. (2010). Right hand presence modulates shifts of exogenous visuospatial attention near perihand space. Brain and Cognition, 73, 102–109. doi:10.1016/j.bandc.2010.03.006

MacNeilage, P. F. (1990). Grasping in modern primates: The evolutionary context. In M. A. Goodale (Ed.), Vision and action: The control of grasping (pp. 1–13). Norwood: Ablex.

Posner, M. I. (1980). Orienting of attention. Quarterly Journal of Experimental Psychology, 32, 3–25. doi:10.1080/00335558008248231

Reed, C. L., Betz, R., Garza, J. P., & Roberts, R. J., Jr. (2010). Grab it! Biased attention in functional hand and tool space. Attention, Perception, & Psychophysics, 72, 236–245. doi:10.3758/APP.72.1.236

Reed, C. L., Grubb, J. D., & Steele, C. (2006). Hands up: Attentional prioritization of space near the hand. Journal of Experimental Psychology: Human Perception and Performance, 32, 166–177. doi:10.1037/0096-1523.32.1.166

Reed-Jones, R. J., Solis, G. R., Lawson, K. A., Loya, A. M., Cude-Islas, D., & Berger, C. S. (2013). Vision and falls: A multidisciplinary review of the contributions of visual impairment to falls among older adults. Maturitas, 75, 22–28. doi:10.1016/j.maturitas.2013.01.019

Reynolds, R. F., & Day, B. L. (2005). Visual guidance of the human foot during a step. Journal of Physiology, 569, 677–684. doi:10.1113/jphysiol.2005.095869

Rolian, C., Lieberman, D. E., & Hallgrimsson, B. (2010). The coevolution of human hands and feet. Evolution, 64, 1558–1568. doi:10.1111/j.1558-5646.2010.00944.x

Schicke, T., Bauer, F., & Röder, B. (2009). Interactions of different body parts in peripersonal space: How vision of the foot influences tactile perception at the hand. Experimental Brain Research, 192, 703–715.

Schicke, T., & Röder, B. (2006). Spatial remapping of touch: Confusion of perceived stimulus order across hand and foot. Proceedings of the National Academy of Sciences, 103, 11808–11813.

Sun, H. M., & Thomas, L. E. (2013). Biased attention near another’s hand following joint action. Frontiers in Psychology, 4, 443. doi:10.3389/fpsyg.2013.00443

Thomas, L. E. (2013). Grasp posture modulates attentional prioritization of space near the hands. Frontiers in Psychology, 4, 312. doi:10.3389/fpsyg.2013.00312

Thomas, L. E. (2015). Grasp posture alters visual processing biases near the hands. Psychological Science, 26, 625–632.

Thomas, L. E., Davoli, C. C., & Brockmole, J. R. (2013). Interacting with objects compresses environmental representations in spatial memory. Psychonomic Bulletin & Review, 20, 101–107. doi:10.3758/s13423-012-0325-8

Tseng, P., & Bridgeman, B. (2011). Improved change detection with nearby hands. Experimental Brain Research, 209, 257–269.

Tseng, P., Bridgeman, B., & Juan, C. H. (2012). Take the matter into your own hands: A brief review of the effect of nearby-hands on visual processing. Vision Research, 72, 74–77.

Author Note

This material is based on work supported by the National Science Foundation, under Grant No. NSF BCS 1556336, and by a Google Faculty Research Award to L.E.T. We thank Hallie Anderson, Katelynn Cox, Leah Jesser, Emma Kramlich, Maren Lack, Miranda Schmidt, Jessica Stelter, and Sylvia Ziejewski for their assistance with data collection.

Electronic supplementary material

ESM 1 (DOCX 25 kb)

Cite this article

Stettler, B.A., Thomas, L.E. Visual processing is biased in peripersonal foot space. Atten Percept Psychophys 79, 298–305 (2017). https://doi.org/10.3758/s13414-016-1225-1

DOI: https://doi.org/10.3758/s13414-016-1225-1