Abstract

The Gesture as Simulated Action (GSA) framework was proposed to explain how gestures arise from embodied simulations of the motor and perceptual states that occur during speaking and thinking (Hostetter & Alibali, Psychonomic Bulletin & Review, 15, 495–514, 2008). In this review, we revisit the framework’s six main predictions regarding gesture rates, gesture form, and the cognitive cost of inhibiting gesture. We find that the available evidence largely supports the main predictions of the framework. We also consider several challenges to the framework that have been raised, as well as several of the framework’s limitations as it was originally proposed. We offer additional elaborations of the framework to address those challenges that fall within the framework’s scope, and we conclude by identifying key directions for future work on how gestures arise from an embodied mind.

Similar content being viewed by others

A growing number of scholars are emphasizing the critical role of the body in cognitive processes (e.g., Glenberg, Witt, & Metcalfe, 2013). Although the embodied-cognition framework is not without its critics (e.g., Goldinger, Papesh, Barnhart, Hansen, & Hout, 2016), it provides an account of cognition and behavior in many domains, including language (e.g., Glenberg & Gallese, 2012), mathematical reasoning (e.g., Hall & Nemirovsky, 2012; Lakoff & Núñez, 2000), and music perception (e.g., Leman & Maes, 2014). One source of evidence for the embodiment of cognition in these domains is the way that people use their bodies as they describe their thinking; that is, when people describe their mathematical thinking or their musical perception, they use their hands and arms to depict the ideas they express. Such movements (hereafter, gestures) seem to indicate that the hands naturally reflect what the mind is doing. Indeed, decades of research have shown that gestures are intricately tied to language and thought (e.g., Goldin-Meadow, 2003; McNeill, 1992).

Hostetter and Alibali (2008) proposed the Gesture as Simulated Action (GSA) framework as a theoretical account of how gestures arise from an embodied cognitive system. Drawing on research from language, mental imagery, and the relations between action and perception, the GSA framework proposes that gestures reflect the motor activity that occurs automatically when people think about and speak about mental simulations of motor actions and perceptual states. The framework has been influential since its publication. It has been applied to explain a range of findings about gesture (e.g., Wartenburger et al., 2010), and it has inspired models that explain the embodiment of other sorts of behaviors (e.g., Cevasco & Ramos, 2013; Chisholm, Risko, & Kingstone, 2014; Perlman, Clark, & Johansson Falck, 2015). Furthermore, the central idea proposed in the GSA framework—that gestures reflect embodied sensorimotor simulations—has been taken as a warrant for using gestures as evidence about the nature of underlying cognitive processes or representations in a wide range of tasks and domains (e.g., Eigsti, 2013; Gerofsky, 2010; Gu, Mol, Hoetjes, & Swerts, 2017; Perlman & Gibbs, 2013; Sassenberg, Foth, Wartenburger, & van der Meer, 2011; Yannier, Hudson, Wiese, & Koedinger, 2016). But, are such claims warranted? What is the evidence that gestures actually do express sensorimotor simulations in the ways specified in the GSA framework?

The purpose of this review is to revisit the GSA framework, with an eye toward both examining the evidence to date for its predictions and identifying its limitations. In the sections that follow, we first summarize the central tenets of the GSA framework. We then review the evidence for and against each of its predictions. In light of this groundwork, we address the primary challenges that have been raised against the framework. We conclude with a discussion of limitations and future directions.

Overview of the Gesture as Simulated Action framework

The GSA framework situates gesture production within a larger embodied cognitive system. Put simply, speakers gesture because they simulate actions and perceptual states as they think, and these simulations involve motor plans that are the building blocks of gestures. The GSA framework was developed specifically to describe gestures that occur with speech, although the basic tenets also apply to gestures that occur in the absence of speech (termed co-thought gestures; Chu & Kita, 2011).

The term simulation has been used in a variety of contexts within cognitive science and neuroscience. Simulation has been contrasted with motor imagery (e.g., O’Shea & Moran, 2017; Willems, Toni, Hagoort, & Casasanto, 2009) and with emulation (e.g., Grush, 2004), and there is debate about the roles of conscious representations and internal models in simulation (e.g., Hesslow, 2012; Pezzulo, Candidi, Dindo, & Barca, 2013). When we use the term simulation, we are not taking a stance regarding these issues; by simulation we simply mean the activation of motor and perceptual systems in the absence of external input. We do assume that simulations are predictive, in that they activate the corresponding sensory experiences that result from particular actions (see Hesslow, 2012; Pouw & Hostetter, 2016), but the framework does not hinge on either whether simulations are conscious or whether they are neurally distinct from mental imagery.

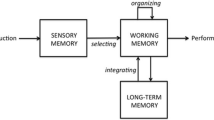

The basic architecture of the GSA framework is depicted in Fig. 1. According to this framework, the likelihood of a gesture at a particular moment depends on three factors: the producer’s mental simulation of an action or perceptual state, the activation of the motor system for speech production, and the height of the producer’s current gesture threshold. We consider each of these factors in turn.

First, if a speaker is not actively simulating actions or perceptual states as she speaks, a gesture is very unlikely to occur. This tenet is based on the idea that information can be represented in either a symbolic, verbal/propositional form or in a grounded, visuospatial or imagistic form (e.g., Paivio, 1991; Pecher, Boot, & Van Dantzig, 2011; Zwaan, 2014). The GSA framework contends that thinking that involves visuospatial or motor imagery requires activation of the motor system. Just as perception and action are intricately linked in cognition more generally (e.g., Gibson, 1979; Prinz, 1997; Profitt, 2006; Witt, 2011), people automatically engage the motor system when they activate mental images—for example, when thinking about how they would interact with imagined objects or imagining themselves moving in ways that embody particular actions. These simulations involve motor plans that can come to be expressed as gestures. In contrast, when speakers rely instead on a stored verbal or propositional code as the basis for speaking or thinking about an idea, their motor systems are activated to pronounce those codes as words, but the speakers are unlikely to produce gestures, because their motor systems are not actively engaged in simulating the spatial or motoric properties of the idea.

Under this view, the motor plan that gives rise to gesture is formed any time a thought involves the simulation of motor or perceptual information. However, people are most likely to produce gestures when they also express their thoughts in speech. Thus, the second factor that influences gesture production is the concurrent activation of the motor system for speech production. Although co-thought gestures can and do occur (e.g., Chu & Kita, 2016), the motor activation that accompanies simulations of actions and perceptual states is more likely to be expressed overtly in gestures when the motor system is also engaged in producing speech. Because the hands and the mouth are linked, both developmentally (e.g., Iverson & Thelen, 1999) and neurally (e.g., Rizzolatti et al., 1988), it may be difficult to initiate movements of the mouth and vocal articulators in the interest of speech production without also initiating movements of the hands and arms. When there is no motor plan that enacts a simulation of what is being described, this manual movement might take the form of a beat gesture (Hostetter, 2008), or a simple rhythmic movement that adds emphasis to a particular word or phrase. However, when there is an active, prepotent motor plan from the simulation of an action or perceptual state, this motor plan may be enacted by the hands and become a representational gesture. Thus, the simultaneous activation of the speech production system influences the likelihood of a gesture. Although gestures can occur in the absence of speech, they should be more prevalent when people activate simulations along with speech. This idea that it is difficult to inhibit the motor activity involved in simulation while simultaneously allowing motor activity for articulation has since been integrated into several other models that seek to explain language production more broadly (e.g., Glenberg & Gallese, 2012; Pickering & Garrod, 2013).

Third, even when the basic motor plan for a gesture has been formed, the plan will only be realized as gesture if the motor system has been activated with enough strength to surpass the speaker’s current gesture threshold. According to the GSA framework, the gesture threshold reflects a speaker’s own resistance to overtly producing a gesture. The height of this threshold can depend on a variety of dispositional and situational factors. For example, some people may hold strong beliefs that gestures are impolite or distracting, leading them to maintain a consistently high threshold. For such individuals, only very strongly activated motor plans will surpass the threshold and be realized as gesture. In contrast, in some situations speakers may believe that a gesture is likely to be particularly effective for the communicative exchange or for managing their own cognitive load, leading them to maintain a lower threshold temporarily, so that even a small amount of motor activation is strong enough to surpass the threshold. In sum, whether an individual will produce a gesture along with a particular utterance or thought depends on the dynamic relationship between how strongly the underlying simulation activates the individual’s motor system and how high the individual’s gesture threshold is at that moment.

These mechanisms give rise to several predictions regarding the rate, form, and cognitive cost of gestures. We next describe the six predictions made in the original statement of the framework (Hostetter & Alibali, 2008), and we review the relevant evidence to date for each.

Review of evidence

Prediction 1: Speakers gesture at higher rates when they activate visuospatial or motor simulations in service of language production than when they do not activate such simulations

The GSA framework contends that speakers form both imagistic representations and verbal/propositional representations, and that either can form the basis of an utterance. In some situations, speakers activate images, or grounded modal representations that resemble the experiences they represent. In other situations, speakers may activate abstract, amodal representations that symbolically represent the message, or they may activate verbal codes that instantiate the message.Footnote 1 When imagistic representations are activated, the motor and perceptual systems become activated, because sensorimotor properties of the image are simulated. By this, we mean that the same neural areas that are involved in producing the action, interacting with the object, or experiencing the perceptual state are activated. In the case of motor imagery, the motor system is activated as if to produce the action. In the case of visual imagery, the visual system is activated, but that activation can spread to motor areas as well, because of the close ties between perception and action (e.g., Prinz, 1997).

The key prediction stemming from this claim is that speakers should gesture more often when their speech is based on imagery than when their speech is based on verbal or propositional information. At the time the GSA framework was formulated, there was already some evidence for this claim (e.g., Hostetter & Hopkins, 2002). In the years since, additional studies have tested the claim more definitively. Hostetter and Skirving (2011) compared the gestures produced by speakers as they described vignettes that they had learned in one of two different ways: by listening to a description twice, or by listening to a description once and then watching the events in an animated cartoon. They found higher rates of gesture when speakers had seen the cartoon than when they had only heard the verbal description. Importantly, because speakers in both conditions heard words describing the vignette, the evidence favors the interpretation that speakers gesture more in the presence of a visuospatial image, rather than in the absence of easy lexical access to the words.

However, not all studies have supported the prediction. Parrill, Bullen, and Hoburg (2010) found that speakers both gestured and spoke more when they had seen a cartoon than when they had only read a description of its events, and speakers’ gesture rates (controlling for the amount of speech) was no higher when describing the cartoon than when describing the verbal text. This is in direct contrast to the results of Hostetter and Skirving (2011), who found a difference in gesture rate, even when controlling for the amount of speech.

Several methodological differences between the studies could account for this difference. Most notably, the audience in Parrill et al. (2010) was a friend of the speaker who was truly naïve about the events in the cartoon, whereas the audience in Hostetter and Skirving (2011) was the experimenter. There are likely motivational differences between communicating with a naïve friend and an unknown (and assumedly knowledgeable) experimenter. For example, increased motivation to be interesting and helpful to a friend who needs to understand the story could encourage speakers to generate rich images on the basis of the words they hear, thereby reducing the strength of the manipulation of words versus images. Indeed, Parrill et al. (2010) conclude that their results suggest that speakers can simulate images based on a verbal text, and when they do, their gestures look very similar to those that are produced when the events have been seen directly.

Although speculative, there is some empirical support for this explanation that the effect of stimulus presentation modality can depend on aspects of the communicative situation. Masson-Carro, Goudbeek, and Krahmer (2017) found that participants gestured more when describing an object to someone else when the object was indicated with a picture than when it was indicated with a verbal label. The effect disappeared, however, when the task was made more difficult by preventing participants from naming the object, perhaps because participants adopted a very different strategy when not allowed to use the name. Specifically, when participants could not name the object, they produced longer descriptions that included more detail about the appearance and function of the object. We argue that, to create these richer descriptions, people simulated the objects’ properties in more detail, and they then expressed these rich simulations as gestures (regardless of whether they had seen a picture of the object or a verbal label)—just as being more motivated to be clear in the Parrill et al. (2010) study may have encouraged people to form more richly detailed images, even in the text condition.

Thus far, the finding that people gesture at a higher rate when they have seen images than when they have read text could also be explained by arguing that people gesture at a higher rate when the speaking task is more difficult. Indeed, there is evidence that speakers gesture more when a task is more cognitively or linguistically complex (e.g., Kita & Davies, 2009) and that gestures can help manage increased cognitive load (e.g., Goldin-Meadow, Nusbaum, Kelly, & Wagner, 2001). Perhaps it is simply more difficult to describe information that was presented as images than to describe the same information when it was presented as words, and this increased difficulty leads to more gestures. Certainly, prohibiting speakers from naming the object they are describing (as in Masson-Carro et al., 2017) imposes an additional cognitive load that could result in more gestures.

However, converging evidence suggests that difficulty cannot fully account for speakers’ high rates of gesture when describing images. Sassenberg and van der Meer (2010) observed the gestures produced by participants as they described a route. They found that steps that were highly activated in speakers’ thinking (because they had been described before) were expressed with higher rates of gesture than steps that were newly activated, even though the highly activated steps should have been easier to describe because they had been described previously. Furthermore, Hostetter and Sullivan (2011) found that speakers gestured more on a task that involved manipulating images (i.e., how to assemble shapes to make a picture) than on a task that involved manipulating verbal information (i.e., how to assemble words to make a sentence), even though the verbal task was arguably more difficult for participants and resulted in more errors. Finally, Nicoladis, Marentette, and Navarro (2016) found that the best predictor of children’s gesture rates in a narrative task was the overall length of their narratives, and they argued that greater length indicated greater reliance on imagery. When children formed rich images of the story they were telling, they told longer stories and also gestured at higher rates. In contrast, narrative complexity—indexed by the number of discourse connectors (e.g., so, when, since)—did not predict gesture rates. Children who told more difficult and complex narratives did not necessarily gesture more, as would be expected if gestures were produced in order to manage cognitive difficulty. In all these cases, higher gesture rates did not coincide with higher difficulty, but with greater visuospatial imagery.

Individual differences in speakers’ likelihood of activating visuospatial imagery may also explain some dimensions of individual differences in gesture production. Speakers whose spatial skills outstrip their verbal skills tend to gesture more than speakers with other cognitive profiles (e.g., Hostetter & Alibali, 2007). One interpretation of this finding is that speakers who are especially likely to form an imagistic simulation of the information they are speaking about are also especially likely to produce gestures.

People also gesture more when visuospatial imagery is more strongly activated. In support of this view, Smithson and Nicoladis (2014) found that speakers gestured more as they retold a cartoon story while also watching an unrelated, complex visuospatial array than while watching a simpler array. The researchers suggested that watching the complex visuospatial array interfered with participants’ imagery of the cartoon, and therefore required them to activate that imagery more strongly. This additional activation resulted in more gesture about that imagery. Note that this explanation hinges on the fact that the cartoon retell task requires participants to activate imagery. In a different sort of task in which participants could shift to a non-imagery-based approach (i.e., a symbolic or propositional approach), participants might shift their approach to the task when confronted with visuospatial interference from the complex visuospatial array, rather than activating the imagery more strongly. In that case, they would gesture less with the complex array than with the simple array.

Whereas Smithson and Nicoladis (2014) showed participants motion unrelated to the story they were describing, an alternative approach would be to show participants a visual array that is related to what they are describing. If gestures emerge from activation in the sensorimotor system, then it may be possible to strengthen that activation through a concurrent perceptual task as participants are speaking. Hostetter, Boneff, and Alibali (2018) tested this hypothesis by asking speakers to describe vignettes about vertical motion (e.g., a rocket ship blasting off; an anchor dropping) while simultaneously watching a perceptual display of circles moving vertically across the computer screen. They found that speakers gestured about the vertical motion events at a higher rate when they were watching a perceptual display that moved in a congruent direction (e.g., watching circles moving up while describing a rocket moving up) than when watching a perceptual display that moved in an incongruent direction. Moreover, the difference could not be explained by differences in what speakers talked about, as they did not use more words to describe the vertical events when watching the congruent versus the incongruent display. It appears that the representations that give rise to gestures can also be activated through extraneous activity in the perceptual system.

In sum, the available evidence largely favors the claim that gesture rates increase when speakers describe information that is based on visuospatial images. Although this effect may be sensitive to aspects of the communicative situation, the increase in gesture rate that comes with imagery does not seem to be due to increased cognitive or lexical difficulty.

It is worth noting that, across studies, researchers operationalize gesture rate in several different ways (e.g., gestures per word, gestures per second, gestures per semantic attribute). These measures of rate are typically highly correlated, though they can also diverge (e.g., Hoetjes, Koolen, Goudbeek, Krahmer, & Swerts, 2015). If speakers produce more words in response to images than in response to verbal texts (as in Parrill et al., 2010), it may be particularly important for future research on this issue to examine gesture rates per semantic attribute rather than per word. Speakers may gesture more about each attribute they describe if they have encoded that attribute imagistically than if they have encoded it verbally, regardless of how many words they use to describe that attribute.

This important methodological issue aside, studies have yielded evidence consistent with the claim that gestures emerge with greater frequency when speakers are describing a visuospatial image than when describing a verbal text. We next explore the possibility that gesture rates are affected by the specific type of imagery involved in a simulation.

Prediction 2: Gesture rates vary depending on the type of mental imagery in which the gesturer engages

The GSA framework draws a distinction between visual imagery, which is the re-instantiation of a visual experience, and motor imagery, which is the reinstantiation of an action experience. Visual and motor imagery are conceptualized as endpoints of a continuum that varies in the strength of motor system involvement, with spatial imagery, which is thinking about the spatial relationships between points (e.g., orientation, location), at the midpoint on the continuum (see also Cornoldi & Vecchi, 2003). Importantly, all three types of imagery involve simulation of sensorimotor states, and all can activate motor plans and be expressed in gesture. In the case of motor imagery, the link to gesture is straightforward, as the simulation of the action forms the basis of the motor plan for the gesture. When speakers think about a particular action (that they performed, observed, or imagined), they create a neural experience of actually performing that action, which involves activation of the premotor and motor systems.

In the cases of visual and spatial imagery, the activation of a motor plan that can be expressed in gesture warrants more explanation. We contend that spatial imagery has close ties to action because imagining where points are located in space involves simulating how to move from one point to another. In support of this view, the ability to plan and produce movements to various locations is critical for encoding and maintaining information in spatial working memory (e.g., Lawrence, Myerson, Oonk, & Abrams, 2001; Pearson, Ball, & Smith, 2014). When someone thinks specifically about the spatial properties of an image (e.g., its location, its orientation), the motor system may be activated so as to reach and grasp the object. This activation could be manifest in gestures that indicate the general location of an object. Furthermore, when speakers imagine spatial relations between objects in a mental map or between parts of an object in a visual image, they shift their attention between locations (Kosslyn, Thompson, & Ganis, 2006). In our view, such attentional shifts among the components of an image can give rise to gestures that trace the relevant trajectories.

In contrast to spatial images that encode information about the orientation, location, and size of objects, visual images encode information about object identity (Kosslyn et al., 2006). When speakers imagine a particular visual experience, the neural experience is visual, but it can also activate the motor system because of the close ties between perception and action. There is ample evidence that perception involves automatic activation of corresponding action states—such as how to grasp, hold, or use the object that is perceived (e.g., Bub, Masson, & Cree, 2008; Masson, Bub, & Warren, 2008; Tucker & Ellis, 2004). Our claim is that these same links between perception and action also exist in the realm of mental simulation (see Hostetter & Boncoddo, 2017, for more discussion of this point).

Specifically, visual images can give rise to gesture when either the motor or spatial characteristics of the visual image are salient in the image or important for the task at hand. First, thinking about a visual image can activate motor imagery about how to interact with the imagined object or scene. For example, thinking about a hammer may activate a motor simulation of how to use a hammer, which would result in a gesture that demonstrates the pounding action one would perform with a hammer. Second, visual imagery may evoke spatial imagery about how the parts of the objects or scene are arranged in space. For example, thinking about the components of a particular hammer could evoke spatial simulations of how the various parts of the hammer are positioned relative to one another. As we described above, such spatial simulations rely on imagined movement between the locations and could result in a gesture that traces the hammer’s shape. Thus, visual images can give rise to gestures when the spatial or motor components of the image are salient, because thinking about the spatial or motor components of a visual image relies on simulations of movement. In contrast, thinking about nonspatial visual properties (e.g., color) of an imagined object should lead to very few gestures, because action is not a fundamental part of how we simulate those properties.

Under this view, the likelihood that a description is accompanied by gesture should depend on the extent to which the description evokes thoughts of action. Speakers should gesture more when their imagery is primarily motoric or primarily spatial than when it is primarily visual. Indeed, children gesture more when describing verbs than when describing nouns (Lavelli & Majorano, 2016). Moreover, speakers gesture more when describing manipulable objects (e.g., hammer) than when describing nonmanipulable ones (Hostetter, 2014; Masson-Carro, Goudbeek, & Krahmer, 2016a; Pine, Gurney, & Fletcher, 2010).

Furthermore, there is evidence that speakers’ gesture rates depend on their actual or imagined experience with the object they are describing. Hostetter and Alibali (2010) found that speakers gestured more about visual patterns when they had motor experience making those patterns (by arranging wooden disks) before describing them than when they had only viewed the patterns. Similarly, Kamermans et al. (2018) found that participants gestured more about a figure they had learned through haptic exploration than about a pattern they had learned visually. In both cases, participants gestured more when describing information they had learned through manual experience—that was thus more evocative of motor imagery—than when describing something they had learned through visual experience alone. Along similar lines, Chu and Kita (2016) found that speakers gestured less when they described the orientation of a mug that had spikes along its surface (so as to prevent grasping) than when they described the orientation of a mug without such spikes. Even imagined manual action (or the lack thereof) can affect the activation of motor imagery and, as a consequence, gesture.

Thus, it appears that visual images are particularly likely to evoke gestures when the action-relevant or spatial characteristics of the object that is seen or imagined are salient. To date, the studies testing this idea have manipulated the extent to which the motor affordances of a visual image are salient. To our knowledge, no studies have yet manipulated the salience of the spatial characteristics (such as size or location) of a visual image. We predict that gesture rates should increase when the spatial characteristics of a visual image are emphasized, just as gesture rates increase when motor affordances are emphasized. For example, learning a pattern by seeing its component parts appear sequentially might result in more gesture than would learning the pattern by viewing it holistically, because the sequential presentation would highlight the spatial relations among the parts. However, we suspect that the very act of describing the parts of a visual image likely emphasizes the spatial characteristics of the image, making it difficult to truly manipulate how strongly spatial characteristics are activated in a simulation without also changing what participants talk about.

In sum, the GSA framework explains the likelihood of gesture as being partially due to how strongly individuals activate action simulations as they think about images. People may activate action simulations more or less strongly depending on how they encode an image (i.e., as a motor, spatial, or purely visual image) and on how they think about the image when speaking or solving a problem (i.e., whether motor or spatial components are particularly salient).

What about other, nonvisual forms of imagery, such as auditory or tactile imagery? According to the GSA framework, if such imagery involves simulated actions, it should also give rise to gestures. Imagining a rabbit’s soft fur might evoke the action of petting the rabbit, and this action simulation could give rise to a gesture. Likewise, imagining the quiet sound that a gerbil makes may evoke a simulation of orienting the head or body so as to better hear the (imagined) sound. This action simulation might also give rise to a gesture. According to the GSA framework, any form of imagery that evokes action simulation is likely to be manifested in gesture.

Even when no direct action simulation is evoked by a particular image, the GSA framework proposes that imagining the image in motion may also give rise to gestures. We turn next to this issue.

Prediction 3: People gesture at higher rates when describing images that they mentally transform or manipulate than when describing static images

People sometimes imagine images in motion, such as when they mentally manipulate or transform an imagined object. This imagined motion may involve simulations of acting on the object or of the object moving, and the GSA framework proposes that this imagined manipulation or action can give rise to gesture.

One task that involves imagined manipulations of objects is mental rotation. Chu and Kita (2008, 2011, 2016) provide in-depth explorations of the gestures that people produce during mental rotation. They have found that people initially produce gestures that closely resemble actual actions on the objects, using grasping hand shapes as if physically grasping and rotating the objects. As participants gain experience with the task, their gestures shift to representing the objects themselves, with the hand acting as the object that is being rotated (Chu & Kita, 2008; see also Schwartz & Black, 1996). Furthermore, speakers who are better at the task of mental rotation rely on gestures less than speakers who are worse at it, and preventing gesture during mental rotation significantly increases reaction time (Chu & Kita, 2011). Thus, it appears that gestures play a functional role in mental rotation; enacting the mental simulation of rotation in gesture seems to improve people’s ability to perform the task.

According to the GSA framework, gestures occur with mental rotation because speakers mentally simulate the actions needed to rotate the object. As they gain experience with the task, the nature of their simulation changes, such that they no longer need to simulate the action of manipulating, and instead simulate the object as it moves. As a result, the form of the gesture changes from one that depicts acting on the object to one that depicts the object’s trajectory as it moves. In addition, as the internal computation of mental rotation becomes easier (e.g., with experience on the task), people activate the exact motion of the object and the mechanics of how to move it less strongly in their mental simulations, and they produce fewer gestures. Speakers who have extensive experience with the task may be able to imagine the end state of a given rotation, without imagining the motion or action involved in getting the object to that position. Indeed, the correlation between amount of rotation and reaction time disappears with repeated practice (Provost, Johnson, Karayanidis, Brown, & Heathcote, 2013), suggesting that mental simulation of the rotation is no longer involved.

The hypothesis that speakers gesture more when imagining how something moves than when imagining it in a static state has received some support. Hostetter, Alibali, and Bartholomew (2011) asked speakers to describe arrows either in their presented orientation or as they would look rotated a specified amount (e.g., 90 deg). Importantly, in the no-rotation condition, the arrays were presented in the orientation they would be at the end of the rotation in the rotation condition. Thus, speakers described the same information in both conditions, but in the rotation condition, they had to imagine mentally rotating the arrows in order to arrive at the correct orientation. Gesture rates were higher when describing the mentally rotated images than when describing the same images presented in final orientation, supporting the claim that gestures rates are higher when images are simulated in motion than when they are not.

Speakers sometimes need to mentally rotate their mental maps when they give directions. Sassenberg and van der Meer (2010) showed participants a map depicting several possible routes and then examined the gestures produced by speakers as they described the routes. Speakers were told to describe the routes from a “route perspective,” that is, from the point of view of a person walking through the route (e.g., using words like “left” and “right” rather than “up” or “down” to describe a turn that takes one vertically up or down the page). Sassenberg and van der Meer compared the frequency of gestures accompanying the descriptions of left and right turns in the routes. They found that gestures were more prevalent when the step had been shown on the map as a vertical move (e.g., up vs. down)—requiring mental rotation to fit the first-person perspective—than when it had been shown as a horizontal turn. This finding aligns with the prediction that gesture rates should be higher when images are mentally rotated than when they are not.

Thus far, we have considered predictions made by the GSA framework that apply exclusively to gesture rates, or the likelihood and prevalence of gestures in various situations. However, the framework also yields predictions about the form particular gestures should take. Specifically, the framework predicts that the form of a gesture should reflect particular aspects of the mental simulation that is being described.

Prediction 4: The form of a gesture should mirror the form of the underlying mental simulation

One hallmark of iconic co-speech gestures is that there is not a one-to-one correspondence between a particular representation and a particular gesture (McNeill, 1992). Gestures for the same idea can take many different forms: For example, gestures can be produced with one hand or two, they can take the perspective of a character or of an outside observer, and they can depict the shape of an object or how to use it. According to the GSA framework, the particular form a gesture takes should not be random, but instead should be a consequence of what is salient in the speaker’s simulation at the moment of speaking. As a result, there should be observable correspondences between the forms of speakers’ gestures and the specific experiences they describe or think about.

There is much evidence that the forms of speakers’ gestures reflect their experiences, and thus presumably the specifics of the simulations they have in mind as they are speaking. For example, the shape of the arc trajectory in a particular lifting gesture is related to whether the lifting action being described is a physical lifting action or a mouse movement; speakers produce more pronounced arcs in their gesture when they have had experience physically lifting objects rather than sliding them with a mouse (Cook & Tanenhaus, 2009). Furthermore, speakers often gesture about lifting small objects that are light in weight with one hand rather than two (Beilock & Goldin-Meadow, 2010), and speakers who estimate the weights of objects as heavier gesture about lifting them with a slower velocity, as though the objects are more difficult to lift (Pouw, Wassenburg, Hostetter, de Koning, & Paas, 2018). When speakers describe the motion of objects, the speed of their hands reflects the speed with which they saw the objects move (Hilliard & Cook, 2017). Thus, the specific experiences that speakers have with objects are often apparent in the forms of their gestures about those objects.

The form of gesture presumably depends on the nature of the underlying imagery. For example, when speakers form a spatial image of an object that emphasizes how the parts of the object are arranged in space, they should be particularly likely to produce gestures that move between the various parts of the shape. Indeed, Masson-Carro et al. (2017) found that such tracing or molding gestures were particularly likely to occur when speakers were describing nonmanipulable objects (e.g., a traffic light), particularly when they had seen a picture (rather than read the name) of the object. It seems likely the spatial characteristics of the objects were particularly salient after seeing the objects depicted. In contrast, Masson-Carro et al. (2017) found that participants were more likely to produce gestures that mimicked holding or interacting with objects when they described objects that are readily manipulable (e.g., a tennis racket), and they produced such gestures most often when they had read the name of the object rather than seen it depicted. It seems likely that manipulable objects activate motor imagery more strongly than spatial imagery, particularly when the objects’ spatial characteristics have not just been viewed.

The way events are imagined or simulated can also be affected by the way those events are verbally described. Reading about events in a particular way can encourage speakers to simulate those events accordingly, and thus affect the way they gesture about those events. Parrill, Bergen, and Lichtenstein (2013) presented participants with written stories that described events written either in the past perfect tense, which implied that the actions were complete (e.g., the squirrel had scampered across the path), or in the past progressive tense, which implied that the actions were ongoing (e.g., the squirrel was scampering across the path). When events were presented in the past progressive tense, speakers were more likely to use this tense in their speech than when the events were presented in past perfect tense. More to the current point, when speakers reproduced the past progressive tense in speech, they produced gestures that were longer in duration and more complex (i.e., contained repeated movements) than when they used the past perfect tense in speech. The authors argued that the past progressive tense signals a mental representation of the event that is focused on the details and internal structure of the event. This focus on the internal details leads to more detailed (i.e., complex and longer lasting) gestures. Thus, the specifics of how an individual simulates an event are reflected in the form of the corresponding gesture.

Experiences in the world often support multiple possible simulations during speaking. For example, when describing movements, speakers often describe both the path taken and the manner of motion along that trajectory. Languages differ in how they package this information syntactically (see Talmy, 1983). Satellite-framed languages, such as English, tend to express manner in the main verb and path in a satellite (e.g., he rolled down), whereas verb-framed languages, such as Turkish, tend to express path in the main verb and manner in the satellite (e.g., he descended by rolling). Investigations of how speakers of different languages gesture about path and manner suggest that adult speakers typically gesture in ways that mirror their speech (e.g., Özyürek, Kita, Allen, Furman, & Brown, 2005). If their language tends to use separate verbs to express manner and path (as in verb-framed languages), speakers typically segment path and manner into two separate gestures or leave manner information out of their gestures altogether. In contrast, if their language tends to express path and manner in a single clause with only one verb (as in satellite-framed languages), speakers typically produce gestures that express both path and manner simultaneously. This tendency to segment or conflate gestures for path and manner in the same way they are packaged in speech is present even among speakers who have been blind from birth, suggesting that experience seeing speakers gesture in a particular way is not necessary for the pattern to emerge (Özçalışkan, Lucero, & Goldin-Meadow, 2016b).

We suggest that the language spoken can bias speakers to simulate path and manner either separately or together as they are speaking. According to this account, speakers of satellite-framed languages tend to form a single simulation that involves both path and manner, and their gestures reflect this. In contrast, speakers of verb-framed languages tend to form a simulation of path followed by a simulation of manner, and they produce two separate gestures as a result. However, these biases also depend on how closely path and manner are causally related in the event itself. Kita et al. (2007) distinguished between events in which the manner of motion was inherent to the path (e.g., jumping caused the character to ascend) and events in which the manner of motion was incidental to the path (e.g., the character fell and just happened to be spinning as he went). They found that English speakers produced single-clause utterances most often for events in which manner was inherent to the path; when manner was incidental, English speakers often expressed manner and path in separate clauses. Moreover, speakers’ gestures coincided with their syntactic framing. People produced separate gestures for manner and path most often when they produced separate clauses, whereas they produced conflated gestures that expressed both path and manner most often when they produced single clauses that expressed path and manner together. Thus, whether path and manner are simulated together or separately—and thus whether they are expressed in speech and gesture together or separately—can depend on the nature of the event. Both features of the event itself and language-specific syntactic patterns can affect the ways that speakers simulate path and manner, and consequently, the gestures they produce.

It is also possible for a particular simulation to differentially emphasize path or manner, in which case gesture should reflect the dimension that is most salient. Akhavan, Nozari, and Göksun (2017) found that speakers of Farsi often gestured about path alone, regardless of whether or how they mentioned manner in speech. They argued that this is because path is a particularly salient aspect of the representation of motion events for Farsi speakers. Yeo and Alibali (2018) directly manipulated the salience of manner in motion events, by making a spider move in either a large, fast zigzag motion or a smaller, slower zigzag motion. English speakers produced more gestures that expressed the manner of the spider’s motion when that motion was more salient (i.e., when the zigzags were large and fast), and this difference was not attributable to differences in their mentioning manner in speech. Thus, how path and manner are expressed in gesture depends on what is most salient in the speaker’s simulation at the moment of speaking, which is shaped both by properties of the observed motion event and by syntactic patterns typical in the speaker’s language.

The relation between the form of a gesture and the form of the underlying simulation is also apparent in the perspective that speakers take when expressing manner information in gesture. Specifically, character viewpoint gestures are those in which the speaker’s hands and/or body represent the hands and/or body of the character they are describing. For example, a speaker who describes a character throwing a ball could produce a character viewpoint gesture by moving his hand as though throwing the ball. The GSA framework contends that such gestures arise from motor imagery, in which speakers imagine themselves performing the actions they describe. In contrast, observer viewpoint gestures are those that depict an action or object from the perspective of an outside observer. For example, a speaker who describes a character throwing a ball could produce an observer viewpoint gesture by moving her hand across her body to show the path of the ball as it moved. The GSA framework proposes that such gestures arise from action simulations activated during spatial imagery—for example, simulations that trace the trajectory of a motion or the outline of an object.

Whether a gesture is produced with a character or observer viewpoint thus depends on how the event is simulated, which may in turn depend on how the event was experienced and on what information about the event is particularly salient. For example, Parrill and Stec (2018) found that participants who saw a picture of an event from a first-person perspective were more likely to produce character viewpoint gestures about the event than participants who saw a picture of the same event from a third-person perspective. Moreover, certain kinds of events are particularly likely to be simulated from the point of view of a character. When a character performs an action with his hands, speakers very frequently gesture about such events from a character viewpoint (Parrill, 2010). Likewise, speakers tend to gesture about objects that are used with the hands by depicting how to handle the objects (character viewpoint), rather than by tracing their outlines (observer viewpoint; Masson-Carro et al., 2016a, 2017).

Of course, the relation between how an event is experienced and how that event is simulated as it is described is not absolute. Speakers can adjust their mental images of events, leading to differences in how frequently they adopt a character viewpoint in gesture. For example, speakers who have Parkinson’s disease have motor impairments that may prevent them from forming motor images (Helmich, de Lange, Bloem, & Toni, 2007). On this basis, one might expect that patients with Parkinson’s disease would be unlikely to produce character viewpoint gestures, and indeed, this is the case. One study reported that patients with Parkinson’s disease produced primarily observer viewpoint gestures when they described common actions, whereas age-matched controls produced primarily character viewpoint gestures (Humphries, Holler, Crawford, Herrera, & Poliakoff, 2016). The speakers with Parkinson’s disease also demonstrated significantly worse performance on a separate motor imagery task than the age-matched controls. The researchers concluded that, because patients with Parkinson’s disease are unable to form motor images that take a first-person perspective, they rely instead on visual images in third-person perspective, and these visual images result in gestures produced primarily from an observer viewpoint.

There is evidence of a similar relation between character viewpoint gesture and the ability to take a first-person perspective among children. Demir, Levine, and Goldin-Meadow (2015) observed variations in whether 5-year-old children adopted character viewpoint in their gestures as they narrated a cartoon. Interestingly, children who used character viewpoint at age 5 produced narratives that were more well-structured around the protagonist’s goals at ages 6, 7, and 8 than children who did not use character viewpoint gestures at age 5. The authors argued that the use of character viewpoint at age 5 indicated that the children took the character’s perspective in their mental imagery, and this ability to take another’s perspective is important for producing well-structured narratives.

In sum, much evidence has suggested that the form of people’s gestures about an event or object is related to how they simulate the event or object in question. Differences in gesture form, gesture content, gesture segmentation, and gesture viewpoint correspond (at least in part) to differences in how people have experienced what they are speaking or thinking about—a point we return to below.

One noteworthy complication in studies that examine gesture form is that it is not uncommon to find participants who do not gesture at all on a given task, and such participants cannot be included in studies examining factors that influence gesture form. However, some recent work has suggested that asking participants to gesture does not significantly alter the type or form of the gestures that they produce (Parrill, Cabot, Kent, Chen, & Payneau, 2016). Thus, we suggest that researchers who are primarily interested in questions about gesture form take steps to increase the probability that each participant produces at least some gestures, whether by giving them highly imagistic or motoric tasks to complete, instructing them to gesture, or creating a communicative context in which gestures are invaluable.

The idea that speakers adjust how much they gesture depending on the communicative context is realized in the GSA framework in terms of adjustments in the height of the gesture threshold. Next, we consider evidence for the claim that speakers can adjust their use of gesture depending on the cognitive and communicative situation.

Prediction 5: Gesture production varies dynamically as a function of both stable individual differences and more temporary aspects of the communicative and cognitive situation

To account for these variations, the framework incorporates a gesture threshold, which is the minimum level of activation that an action simulation must have in order to give rise to a gesture. If the threshold is high, even very strongly activated action simulations may not be expressed as gestures; if the threshold is low, even simulations that are weakly activated may be expressed as gestures. Some people may have a set point for their gesture threshold that is higher than other people, due to individual differences in stable characteristics, such as cognitive skills or personality, differences in cortical connectivity from premotor to motor areas, or differences in cultural beliefs about the appropriateness of gesture. In addition to these individual differences, the gesture threshold can also fluctuate around its set point in response to more temporary, situational factors, including the importance of the message, the potential benefit of the gesture for the listeners’ understanding, and aspects of the communicative situation or the cognitive task.

There are persistent individual differences in how much people gesture. According to the GSA framework, these individual differences are due in part to differences in the set points of people’s gesture thresholds. People’s set points for their gesture thresholds depend on a range of factors, including cognitive skills and personality characteristics. For example, people who tend to have difficulty maintaining visual images (e.g., Chu, Meyer, Foulkes, & Kita, 2014) or verbal information (e.g., Gillespie, James, Federmeier, & Watson, 2014) may maintain low set points for their gesture thresholds, so that they can regularly experience the cognitive benefits of gestures. Likewise, more extroverted people may tend to maintain lower set points, and consequently have higher gestures rates than less extroverted people (Hostetter & Potthoff, 2012; O’Carroll, Nicoladis, & Smithson, 2015), because extroverted people may believe (or have experienced) that gestures are generally engaging for their listeners.

Individual differences in gesture thresholds may also be evident in patterns of neural activation. Speakers who have low gesture thresholds may have more difficulty inhibiting the premotor plans involved in simulation from spreading to motor cortex, where they become expressed as gestures, and this may be manifested in neurological differences. In the only study to date to examine this issue, Wartenburger et al. (2010) found that cortical thickness in the left hemisphere of the temporal cortex and in Broca’s area was positively correlated with gesture production on a reasoning task that required imagining how geometric shapes would be repositioned. They argued that to succeed on the geometric task, speakers imagined moving the pieces, and therefore activated motor simulations. These motor simulations were particularly likely to be expressed as gesture for speakers who had high cortical thickness, because for these speakers, the motor activation involved in simulating the movement of the pieces spread more readily to motor areas. However, it is impossible to know from this study alone whether cortical thickness led to increased gesture, or whether a lifetime of increased gesturing led to increased cortical thickness. Nonetheless, the idea that neurological differences may relate to individual differences in gesture production is compatible with the predictions of the GSA framework.

There are also cultural differences in gesture rates (Kita, 2009), suggesting that speakers may have different set points for their gesture thresholds depending on norms regarding gesture in their culture. For example, monolingual speakers of Chinese gesture less than monolingual speakers of American English (So, 2010). This may be because Chinese speakers have different beliefs about the appropriateness of gesture, leading to relatively high set points for their gesture thresholds in comparison to American English speakers. Interestingly, however, learning a second language with a different cultural norm regarding gesture may lead to shifts in speakers’ thresholds; speakers who are bilingual in Chinese and American English gesture more when speaking Chinese than do monolingual Chinese speakers (So, 2010), suggesting that their experiences speaking English have led to lower thresholds across languages. Moreover, experience learning American Sign Language (ASL) may also lead to generally lower gesture thresholds; people who have a year of experience with ASL produce more speech-accompanying gestures when speaking English than do people who have a year of experience learning a second spoken language (Casey, Emmorey, & Larrabee, 2012).

Thus, there may be stable differences in the set points of people’s gesture thresholds, based on factors such as cognitive skills, personality, culture, and experience learning other languages. In addition to these more stable differences, people’s gesture thresholds also vary depending on temporary aspects of the cognitive or communicative situation. For example, bilingual French–English speakers gesture more when speaking in French, a high-gesture language, than when speaking in English (Laurent & Nicoladis, 2015), suggesting that speakers’ thresholds may shift depending on the communicative context.

Some aspects of the communicative context besides the language spoken also matter. For example, when speakers perceive the information they are communicating about to be particularly important, they gesture more than when the information is less important. This can occur across an entire conversation, with speakers gesturing more when they think the information will be highly relevant and useful to their audience than when the information has no obvious utility (Kelly, Byrne, & Holler, 2011). It can also occur within a conversation, with speakers being particularly likely to gesture about aspects of their message that are particularly important. For example, mothers gesture more when speaking to their children about safety information that is relevant to situations that the mothers perceive to be particularly unsafe (Hilliard, O’Neal, Plumert, & Cook, 2015). Likewise, teachers gesture more when describing information that is new for their students than when describing information that is being reviewed (Alibali & Nathan, 2007; Alibali, Nathan, et al., 2014). Furthermore, although speakers sometimes gesture less when they repeat old information to the same listener (e.g., Galati & Brennan, 2013; Jacobs & Garnham, 2007), speakers’ use of gesture increases with repeated information if they believe that their listeners did not understand the message the first time (e.g., Hoetjes, Krahmer, & Swerts, 2015). All of these phenomena can be conceptualized in terms of a temporary shift in speakers’ gesture thresholds.

Of course, for speakers’ gestures to be beneficial to listeners, the listeners must be able to see the gestures. Many studies have examined the effects of audience visibility on speakers’ gestures (see Bavelas & Healing, 2013, for a review), and several of these have reported that speakers gesture at higher rates when speaking to listeners who can see the gestures than when speaking to listeners who cannot see—and thus cannot benefit from—the gestures (e.g., Alibali, Heath, & Myers, 2001; Bavelas, Gerwing, Sutton, & Prevost, 2008; Hoetjes, Koolen, et al., 2015). From the perspective of the GSA framework, this effect occurs because speakers’ gesture thresholds are lower when their listeners are visible, thereby allowing more simulations to exceed threshold and be expressed as gestures. When listeners are not visible, speakers’ thresholds remain high, so that they produce gestures only for simulations that are very strongly activated. Indeed, the effect of visibility on speakers’ gestures seems to depend on speaking topic, with gestures persisting during descriptions of manipulable objects (which presumably include very strong simulations of action), even when listeners are not visible (Hostetter, 2014; Pine et al., 2010).

Although listener visibility effects are clearly predicted by the GSA framework, some recent studies have found no differences in gesture as a function of listener visibility (e.g., de Ruiter, Bangerter, & Dings, 2012; Hoetjes, Krahmer, & Swerts, 2015) or have found that speakers gesture more when their listeners cannot see them than when they can (e.g., O’Carroll et al., 2015). As Bavelas and Healing (2013) pointed out in their review, it is possible that these differences across studies can be understood by considering the tasks in more detail.

In experiments with confederates or experimenters as the audience, speakers generally receive no verbal feedback about the effectiveness of their communication. Without any feedback, speakers likely assume that their communication is effective. However, when speakers can see the audience, they may receive nonverbal feedback about the effectiveness of their communication, and this nonverbal feedback may prompt speakers to adjust their speech and gestures. As a result, studies that use confederates or experimenters as listeners are particularly likely to find audience visibility effects on gesture rate (Bavelas & Healing, 2013).

In contrast, when the audience in an experiment is a naïve participant who is allowed to engage in dialogue with the speaker, speakers receive feedback about listeners’ understanding in both the visible and the nonvisible conditions. If speakers perceive that listeners are not understanding, they may lower their thresholds—not for the listeners, who cannot see the gestures anyway—but for themselves, in order to reduce their own cognitive load as they attempt to give better, clearer verbal descriptions. The result is that gesture rates may remain high in such situations, even when speakers cannot see their listeners. Indeed, O’Carroll et al. (2015) found that speakers actually gestured more when their listeners were not visible, and they suggest that speakers increased their gesture rates in the nonvisible listener condition in order to help themselves describe the highly spatial task more effectively in speech.

The GSA framework proposes that another determinant of the height of the gesture threshold is the speaker’s own cognitive load. It takes effort to maintain a high threshold (as we discuss in detail in the following section), and actually producing gestures appears to have a number of cognitive benefits (see Kita, Alibali, & Chu, 2017). Thus, the gesture threshold may be lowered in situations in which externalizing a simulation as gesture can facilitate the speaker’s own thinking and speaking. In support of this view, speakers gesture more in description tasks that are more cognitively demanding (e.g., Hostetter, Alibali, & Kita, 2007), and they gesture more when they are under extraneous cognitive load (e.g., Hoetjes & Masson-Carro, 2017). Moreover, there is evidence that speakers gesture more when ideas are difficult to describe, either because the ideas are not easily lexicalized in their language (e.g., Morsella & Krauss, 2004; but see de Ruiter et al., 2012), because the speakers are bilingual (e.g., Nicoladis, Pika, & Marentette, 2009), or because they have a brain injury (e.g., Göksun, Lehet, Malykhina, & Chatterjee, 2015; Kim, Stierwalt, LaPointe, & Bourgeois, 2015). Although task difficulty does not guarantee an increase in gesture rates— as there must first be an underlying imagistic simulation that is being described—the GSA framework contends that increased cognitive load can result in lower thresholds and higher gesture rates.

This discussion raises the question of whether adjusting the gesture threshold is a conscious mechanism (one that speakers are aware of) or an unconscious one. The GSA framework is neutral with regard to this point. There is evidence that speakers can consciously adjust their gesture thresholds; for example, teachers can gesture more when instructed to do so (Alibali, Young, et al., 2013). Speakers also sometimes behave as though they intend for their gestures to communicate—for example, by omitting necessary information from their speech (e.g., Melinger & Levelt, 2004) or by gazing at their own gestures (e.g., Gullberg & Holmqvist, 2006; Gullberg & Kita, 2009). At the same time, speakers are often unaware of their gestures, and we propose that the gesture threshold often operates outside of conscious awareness. According to the GSA framework, gestures emerge automatically on the basis of the strength of action simulation and on the current level of the gesture threshold at the moment of speaking. Conscious intention to gesture can bring speakers’ attention to this process, but the process occurs even without conscious awareness. We generally agree with the position, articulated by Campisi and Mazzone (2016) and by Cooperrider (2018), that gestures can be produced either intentionally or unintentionally, depending on the situation. The unique contribution of the GSA framework to this discussion is that it provides a possible explanation for how general cognitive processes (action simulation during speaking) can give rise to gestures, regardless of intentionality.

In sum, the GSA framework posits that gestures are determined, in part, by the height of a dynamic gesture threshold that fluctuates around a particular set point. This gesture threshold helps to explain both stable individual differences in gesture rates across speakers and situational differences in gesture rates that depend on the communicative situation or on cognitive demands. Regardless of the set point, a speaker can maintain a high threshold and inhibit simulations from being expressed as gestures. For this reason, in studies testing how different factors affect the gesture threshold, nongesturers should be treated as having a gesture rate of zero, rather than being excluded from analysis. This is because the complete absence of any gesture in a highly imagistic task is meaningful, as it suggests that the gesture threshold of that individual was particularly high. However, under our view, maintaining a high threshold even while describing a strongly activated action simulation should be relatively uncommon, because it requires cognitive effort, a claim we turn to next.

Prediction 6: Gesture inhibition is cognitively costly

According to the GSA framework, action simulations are an automatic byproduct of thinking about visual, spatial, and motor images during speaking. These simulations involve activation of the premotor and motor systems, and this activation is expressed as gesture. Thus, gestures are the natural consequences of a mind that is actively engaged with modal, imagistic representations, and it takes effort to inhibit gestures from being expressed. People can maintain a high gesture threshold, thereby effectively inhibiting simulations from being expressed as gestures, but the GSA framework predicts that there should be an associated cognitive cost.

The cognitive effort involved in gesture production has been investigated using the dual-task paradigm (e.g., Cook, Yip, & Goldin-Meadow, 2012a; Goldin-Meadow et al., 2001; Marstaller & Burianova, 2013; Ping & Goldin-Meadow, 2010; Wagner, Nusbaum, & Goldin-Meadow, 2004). In this paradigm, speakers are presented with extraneous information to remember (e.g., digits, letters, or positions in a grid). They are then asked to speak about something, either with gesture or without. Finally, they are asked to recall as much of the extraneous information as they can. The general finding is that speakers have more cognitive resources available to devote to the memory task when they gesture during the speaking task than when they do not gesture during the speaking task. The interpretation is that producing gestures during speaking “frees up” cognitive resources that can then be devoted to remembering the information. Gestures reduce cognitive load only when they are meaningful; producing circular gestures that are temporally but not semantically coordinated with speech does not have the same effect (Cook et al., 2012a).

It is difficult to discern whether the results of these studies reflect a cognitive benefit of producing gestures, a cognitive cost to not producing gestures, or a combination of both effects. The argument in favor of facilitation has come from studies including a condition in which speakers were given no instructions about gesture, but they nonetheless spontaneously chose not to gesture on some trials (e.g., Goldin-Meadow et al., 2001). The cognitive load observed in these spontaneous nongesture trials is similar to that in instructed nongesture trials, casting doubt on the possibility that the increased cognitive load imposed by gesture inhibition is the result of consciously remembering not to gesture. According to the GSA framework, the cognitive cost associated with gesture inhibition should be present whenever a highly activated simulation underlies language or thinking but gesture is inhibited—regardless of whether gesture is inhibited consciously or not. Nonetheless, the dominant interpretation in the published literature using the dual-task paradigm has been that gesture production reduces cognitive load, rather than that gesture inhibition increases cognitive load (e.g., Goldin-Meadow et al., 2001; Ping & Goldin-Meadow, 2010).

One study has specifically favored the interpretation that gesture inhibition results in a cognitive cost, rather than that gesture production results in a cognitive benefit. Marstaller and Burianova (2013) found that the difference in cognitive load associated with gesture production versus inhibition was greater for participants with low working memory capacity than for those with high working memory capacity. They argued that individuals with low working memory capacity are less able to inhibit prepotent motor responses, such as gesture, and they are thus strongly affected by instructions to inhibit gesture. In contrast, individuals with high working memory capacity are better able to inhibit responses in general, and they are therefore able to inhibit gesture without negative effects on performance.

Although this is the interpretation offered by Marstaller and Burianova (2013), it is also possible to explain their results as stemming from a reduction of cognitive load when gestures are produced. Under this account, individuals with high working memory capacity experienced a manageable load on the speaking task, and they were therefore unable to be further helped by gesture; for this reason, only the individuals with low working memory capacity displayed benefits from gesture (see also Eielts et al., 2018). Indeed, Goldin-Meadow et al. (2001) found that adults who were under a very low working memory load (remembering only two letters) while speaking were not affected by whether or not they gestured, lending some support to the possibility that when cognitive load is very low, there is no “room” for gesture to further reduce load in a way that affects performance. However, it should be noted that the participants in the Marstaller and Burianova study were not at ceiling on the memory task; even the individuals with high working memory capacity recalled fewer than half of the letters presented. Thus, it is difficult to make the case that individuals with high working memory capacity could not have been further helped by gesture, as they did have room to improve. Regardless, with only a gesture and a no gesture condition included in the design, it is impossible to know whether any difference between conditions resulted from decreased cognitive load when gestures were produced, increased load when gestures were inhibited, or a combination of both effects. To disentangle these effects in the dual-task paradigm, studies are needed that include baseline measures of performance on the memory task.

If a cognitive cost is associated with inhibiting a simulation from being expressed as gesture, as we claim, then speakers who are asked to refrain from gesturing may adopt a cognitive strategy on the task, if possible, that does not involve active simulation. Alibali, Spencer, Knox, and Kita (2011) presented participants with gear movement prediction problems, in which they were asked to view a configuration of gears and to predict how the final gear would move, if the initial gear were turned in a particular direction. Participants who were prohibited from gesturing during the task were more likely to generate an abstract rule (i.e., the parity rule, which holds that all odd-numbered gears turn in the same direction as the initial gear) than participants who were allowed to gesture. Inhibiting gesture seemed to discourage participants from using the simulation-based strategy of thinking about the direction of movement of each gear in order, a strategy that was favored by participants who could gesture. One explanation for this finding is that simulating the direction of each gear in order without being able to express that simulation as gesture was too effortful, so participants adopted an easier, non-simulation-based strategy when they could not gesture.

In many cases, another strategy is not readily available for use on a particular task. In such situations, when gestures are prohibited, speakers may struggle to completely inhibit their simulations from being expressed as gestures, and they may overtly express them in other bodily movements. Indeed, when people are prevented from gesturing with their hands in a cartoon-retell task, they are more likely to produce gestures with other body parts, including their heads, trunks and feet, than when they are free to gesture (Alibali & Hostetter, 2018). Along similar lines, Pouw, Mavilidi, van Gog, and Paas (2016) found that participants who were not allowed to gesture as they explained the Tower of Hanoi task made more eye movements to the positions where the pieces should be placed than participants who were allowed to gesture. The effect was particularly pronounced for participants who had low visual working memory capacity, suggesting that individuals who experience high cognitive load on a task have difficulty inhibiting their simulations from being expressed overtly. Pouw et al. argue that this is because participants with low visual working memory capacity need the extra scaffolding that gestures or eye movements can provide, but an equally plausible interpretation is that they do not have the cognitive resources needed to inhibit their simulations from being expressed as motor plans. Because they have been told not to move their hands, they express their simulations in their eye movements instead.

It should be clear, then, that there is some ambiguity in the interpretation of results that compare the cognitive effects of producing versus inhibiting gestures. The interpretation usually proffered is that there is a cognitive benefit to gesture production (e.g., Goldin-Meadow et al., 2001), but the results are equally compatible with the explanation that a cost is associated with gesture inhibition. Importantly, however, the GSA framework does not discount that there might be both cognitive and communicative benefits to producing gestures (a point to which we return below); indeed, anticipating that gestures might make one’s speaking task easier is one factor that could affect a speaker’s gesture threshold—and rate of gesturing—in a particular situation. At the same time, the GSA framework predicts that inhibiting a simulation from being expressed as gesture should have a discernible cognitive cost (in addition to any benefit that may come from producing the gesture). More studies are needed to dissociate the benefits of gesture production from the costs of gesture inhibition, for example by including control conditions that can provide baseline estimates of cognitive effort that are independent of gesture production. Studies are also needed that compare how much speakers gesture on tasks as a function of whether they are under an extraneous cognitive load or not; if speakers are under extraneous load, they may not have cognitive resources available to devote to inhibiting gesture (see, e.g., Masson-Carro, Goudbeek, & Krahmer, 2016b).

Finally, it bears mentioning that our claim regarding the cognitive costs of inhibiting gesture is specifically about gestures that emerge automatically from simulations of actions and perceptual states. If a speaker is producing speech based on a verbal or propositional representation (e.g., reciting a memorized speech) and wishes to produce a gesture along with the speech (perhaps for communciative purposes), cognitive effort might be needed to generate that gesture (rather than to inhibit it). In this case, according to our view, the speaker must form an imagistic simulation of the event in order to produce a gesture, and generating this simulation may be effortful. Similarly, there may be situations in which speakers deliberately exaggerate or adjust the form or size of their gestures for communicative purposes, and the GSA framework is neutral about whether such additional crafting of gesture might require more cognitive resources than not gesturing at all (see Mol, Krahmer, Maes, & Swerts, 2009).

Conclusion

According to the GSA framework, the likelihood that a person will gesture on a given task is determined by the dynamic relation between the level of activation of the relevant action simulations and the current height of the gesture threshold, which depends on stable individual differences, as well as the communicative situation and the cognitive demands of the speaking task. Furthermore, the form of the gestures that are produced is dependent on the content of the underlying simulation. As such, many dynamic factors are relevant to understanding gesture, and each of these factors is difficult to measure with high certainty at any particular moment. Nonetheless, the framework does yield concrete predictions about when gestures should be most likely to occur and about the forms gestures should take.

In the decade since the GSA framework was formulated, each of its six predictions has been tested, either directly or indirectly. Although it is necessary to consider the entire cognitive and communicative situation that participants experienced when interpreting the results of any individual study, taken together, the evidence is largely in favor of the predictions. Thus, we believe the framework has largely stood up to empirical test. At the same time, we appreciate the perspectives of the framework’s critics (e.g., Pouw, de Nooijer, van Gog, Zwaan, & Paas, 2014) and of those who have offered alternative conceptualizations of gestures (e.g., Novack & Goldin-Meadow, 2017). In the next section, we address several specific challenges to the framework that have been raised.

Challenges to the GSA framework

Co-thought gestures also occur