Abstract

Categorical effects are found across speech sound categories, with the degree of these effects ranging from extremely strong categorical perception in consonants to nearly continuous perception in vowels. We show that both strong and weak categorical effects can be captured by a unified model. We treat speech perception as a statistical inference problem, assuming that listeners use their knowledge of categories as well as the acoustics of the signal to infer the intended productions of the speaker. Simulations show that the model provides close fits to empirical data, unifying past findings of categorical effects in consonants and vowels and capturing differences in the degree of categorical effects through a single parameter.

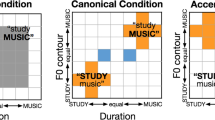

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Assigning categories to perceptual input allows people to sort the world around them into a meaningful and interpretable package. This ability to streamline processing applies to various types of input, both linguistic and non-linguistic in nature (Harnad 1987). Evidence that these categories affect listeners’ treatment of perceptual stimuli has been found in diverse areas such as color perception (Davidoff et al. 1999), facial expressions (Angeli et al. 2008; Calder et al. 1996), familiar faces (Beale and Keil 1995), artificial categories of objects (Goldstone et al. 2001), speech perception (Liberman et al. 1957; Kuhl 1991), and even emotions (Hess et al. 2009; Sauter et al. 2011). Two core tendencies are found across these domains: a sharp shift in the identification function between category centers, and higher rates of discrimination for stimuli from different categories than for stimuli from a single category. Nowhere is this more evident than in speech perception, where these perceptual effects are viewed as a core component of our ability to perceive a discrete linguistic system while still allowing for informative variation in the speech signal.

In speech perception, categorical effects are found in a wide range of phonemes. However, different phoneme classes differ in the degree to which the categories influence listeners’ behavior. At one end of the spectrum, discrimination of stop consonants is strongly affected by the categories to which they belong. Discrimination is little better than would be expected if listeners used only category labels to distinguish sounds, and between-category differences are extremely pronounced (Liberman et al. 1957; Wood 1976). At the other end of the spectrum, vowel discrimination is much more continuous, so much so that some early experiments seemed to suggest that vowels displayed no categorical effects at all (Fry et al. 1962). Since these classic studies, it has become evident that stop consonant perception is not purely categorical, while vowel perception can also exhibit categorical effects (Pisoni and Lazarus 1974; Pisoni 1975). In addition, there is evidence that rather than being purely perceptual, the influence of categories on discrimination behavior may arise later in processing (Toscano et al., 2010; but see Lago et al., 2015). Nevertheless, where the categorical effects do occur, the degree to which consonants are affected is much greater than that of vowels. Researchers have proposed a number of qualitative explanations for these differences. For example, the differences have been claimed to stem from the way each type of sound is stored in memory (Pisoni 1973), to be related to innate auditory discontinuities that could influence stop consonant perception (Pisoni 1977; Eimas et al. 1971), and to result from different processing mechanisms for steady state and rapidly changing spectral cues (Mirman et al. 2004). However, qualitatively the effects are very similar between consonants and vowels, with a sharp shift in the identification function and a peak in discrimination near the category boundary. These qualitative similarities suggest that these two cases may be interpretable as instantiations of the same phenomenon. That is, perceptual differences among different classes of sounds may be purely quantitative rather than qualitative.

Past models have focused on providing a mechanism by which strong categorical perception may arise for consonants, describing the origin of perceptual warping in vowel perception, or exploring general categorical effects without accounting for differences between stop consonants and vowels, but no model has provided a joint explanation of categorical effects together with an account of the variation in the degree of these effects. In this paper we show that categorical effects in consonant and vowel perception can be captured by a single model. We adapt a Bayesian model proposed by Feldman et al. (2009), which analyzes categorical effects as resulting from the optimal solution to the problem of perceiving the speech sound produced by the speaker. The model predicts that the strength of categorical effects is controlled by a single parameter, representing the degree to which within-category acoustic variability contains information that listeners want to recover. Thus, consonants and vowels may simply differ in how much of their variability is meaningful to listeners. Through simulations, we characterize several classes of sounds along this continuum and show the model can provide a unified framework for both strong and weak categorical effects.

We explore the possibility of a cohesive underlying model purely at the computational level, in the sense of Marr (1982). Marr proposed three possible levels at which one might approach this problem: computation, representation and algorithm, and physical implementation. For understanding speech perception, each level of analysis has a unique contribution. It would be impossible to paint a full picture of speech perception without being able to provide explanations at each level independently and show how these explanations relate to each other. However, it is not necessary to consider all three levels simultaneously, and specifying the model only at the computational level is advantageous in that it allows for the possibility of varying algorithmic and implementational levels of analysis for different sets of sounds, while still retaining the idea that all of these carry out the same basic computation. That is, while the perceptual dimensions that are relevant to perceiving stop consonants and vowels are not likely to have the same neural implementation, we show that the computations performed over those perceptual dimensions serve the same purpose.

In the remainder of the paper, we proceed as follows. First, we review previous findings on categorical effects in stop consonants, vowels, and fricatives, considering whether separate mechanisms are needed to account for the observed effects of categories. Next, we review a Bayesian model of speech perception that was originally proposed to capture categorical effects in vowels, and extend it for evaluating effects for various phonemes. We conduct simulations showing that the model provides a close match to behavioral findings from stop consonants and fricatives as well as vowels, capturing differences in the degree of categorical effects across consonants and vowels by varying a single parameter. We conclude by discussing the significance and implications of these findings.

Categorical effects in speech perception

In order to appreciate the differences and similarities between categorical effects for different phonemes, it is insightful to review the classic findings in these domains. In this section we introduce the methods that are used to study categorical effects in speech perception and review descriptions of categorical effects for the three classes of phonemes that we later consider using our unified model: stop consonants, fricatives, and vowels. This overview centers on two key models that have been put forward for characterizing the effect of categories on stop consonant and vowel discrimination: categorical perception (CP) and the perceptual magnet effect (PME) (Liberman et al. 1957; Kuhl 1991). Although we do not claim that either of these is an entirely accurate model of perception, they provide a useful historical context for introducing the basic phenomena of interest.

Behavioral measures and perceptual warping

Categorical effects in speech perception are typically studied through behavioral identification and discrimination tasks, which provide data on listeners’ ability to classify the sounds (identification) and to differentiate sounds along an acoustic continuum (discrimination). The stimuli that participants hear in each task typically lie along a one-dimensional continuum between two phonemes. For presentation purposes, we consider a continuum between two phonemes, c 1 and c 2, with seven equally spaced stimuli S 1...S 7. For example, if c 1 = /b/ and c 2 = /p/, stimuli might be created by varying the voice onset time (VOT) of the signal.

The identification task consists of choosing between two competing labels, c 1 and c 2, in a forced choice paradigm. Participants choose one of the two labels for every stimulus heard, even if they are unsure of the proper classification. By examining the frequency with which participants choose each category, we can observe an apparent boundary between the categories and can determine the sharpness of this boundary. The shape of the identification curve provides information about the distribution of sounds in the categories that the listener expects to hear. If the categories are sharply concentrated with little perceptual overlap, then we would expect more absolute identification and a sudden switch between category labels - resulting in a steep curve. Alternatively, if categories are more diffuse, we would see a shallower curve, i.e., a more gradual switch between category labels. An illustrative example in the presence of sharply concentrated categories can be seen in the solid line in Fig. 1.

Discrimination can be measured in a number of ways (e.g., AX, ABX, or triad presentation), but all of these methods test the ability of a listener to determine whether sounds are the same or different. Our simulations focus on studies employing the AX discrimination paradigm, where listeners are presented with two stimuli, A and X. Their task is to say whether the X stimulus is identical to the A stimulus or different from it. This task measures how likely discrimination is to occur, and therefore serves as a measure of perceptual distance between the stimuli. By considering all equidistant pairs of stimuli along the continuum we can see how listeners’ ability to discriminate sounds changes as we move from the category centers to the category boundary. With no categorical effects, we would expect uniform discrimination along the continuum, due to equal spacing of the stimuli (i.e., listeners should be just as good at telling S 1 and S 3 apart as S 3 and S 5). If perception is biased toward category centers, listeners’ ability to differentiate stimuli near category centers should go down while their ability to differentiate stimuli at the category boundary should go up. An illustrative example of discrimination performance in the presence of strong influences of categories can be seen in the dashed line in Fig. 1. The degree of warping in discrimination, corresponding roughly to the height of the discrimination peak near the category boundary, is a key indicator of how strong the category effects are. It will be used in our model to tease apart different sources of variability in the individual sound categories.

Identification and discrimination tasks have been used to investigate sound perception across a variety of phonemes. By considering both of these tasks together, we can determine the characteristics of listeners’ perceptual categories as well as the extent to which these categories affect their discrimination of sounds. The remainder of this section reviews categorical effects in perception that have been studied using these paradigms, focusing on the perception of stop consonants, vowels, and fricatives, the three classes of phonemes to whose behavioral data we fit our model.

Phonemes considered in this paper

The three phoneme classes we consider in this paper are stop consonants, vowels, and fricatives. The stop consonants we consider are along the voice onset time continuum between bilabial consonants /p/ and /b/. The primary acoustic cues used in the classification of these consonants are static temporal properties, specifically the amount of time between the release burst and the onset of voicing. The vowels we consider lie along the dimension between /i/ and /e/. These vowels are largely identified by the first and second formant, representing the steady state peaks of resonant energy with a certain bandwidth, a static spectral cue. Finally we consider the fricatives lying between /\(\int \)/ and /s/. In terms of cues to identification, these fricatives are identified largely by the static spectral peak locations, though there are many other cues that are also relevant to fricative identification (see (McMurray and Jongman 2011) for a review). Below we go through more details on the three classes of phonemes more broadly, considering behavioral findings, explanations for categorical effects, and models meant to capture the source of these effects.

Stop consonant effects

Stop consonants provide a prototypical example of a strong effect of categories on listeners’ perception of sounds. Liberman et al. (1957) showed that discrimination of stop consonants is only slightly better than would be predicted if listeners only used category labels produced during identification and ignored all acoustic detail. They labeled this observation categorical perception (CP). The core tenet of pure CP for stop consonants is that participants do not attend to small differences in the stimuli, rather treating them as coarse categories, ignoring some of the finer detail in the acoustic stream. Under the CP hypothesis, participants assign a category label to each stimulus and then make their discrimination judgment based on a comparison of these labels.

To model participants’ identification and discrimination data from a place of articulation continuum ranging from /b/ to /d/ to /g/, Liberman et al. (1957) formulated a probabilistic model which used the probabilities from the identification task to make predictions about how often the listener would be able to discriminate the sounds based only on category assignments. This produces the strongest possible categorical effect. They found that using this formula, they could predict overall discrimination behavior very well. The participants’ actual discrimination only out-performed the predictive model slightly. However, the fact that the participants did outperform the model suggests that they were able to use acoustic cues beyond pure category membership.

Since Liberman et al.’s initial findings, there has been extensive research into other phonetic environments with similar categorical effects. Critically for our work here, strongly categorical perception was found by Wood (1976) for the voicing dimension. Strong categorical effects have also been found for /b/-/d/-/g/ by other researchers (Eimas, 1963; Griffith, 1958; Studdert-Kennedy et al., 1963, 1989), as well as for /d/-/t/ (Liberman et al. 1961), /b/-/p/ in intervocalic position (Liberman et al. 1961), and the presence or absence of /p/ in slit vs. split (Bastian et al. 1959; Bastian et al. 1961; Harris et al. 1961). These strong categorical effects can be modulated by contextual effects and task-related factors (Pisoni 1975; Repp et al. 1979), but are generally viewed as robust.

In contrast with the original formulation of categorical perception, evidence has accumulated showing that perception of stop consonants is not purely categorical. Listeners pay attention to subphonemic detail, as evidenced by various behavioral and neural studies. Studies have shown that goodness ratings of stop consonants vary within categories and are prone to context effects based both on phonetic environment and speech rate (Miller 1994). Internal structure of consonant categories is further supported by studies of reaction time, with Pisoni and Tash (1974) showing that participants are slower to respond same to acoustically different within category pairs of sounds than for pairs of sounds that are acoustically identical. Further, priming studies by Andruski et al. (1994) found priming effects of within-category VOT differences for short inter-stimulus intervals of 50 ms. They showed that stimuli with initial stop consonant VOTs near the category center exhibited a stronger priming effect for semantically-related following stimuli, and that non-central values also elicited longer reaction times. Finally, at the neural level, an fMRI study by Blumstein et al. (2005) showed that there are robust neural correlates to subphonemic VOT differences in stimuli. In related work looking at event-related potentials during word categorization in an auditory oddball task, Toscano et al. (2010) showed that listeners are sensitive to fine acoustic differences in VOT independent of the categorization. Effects were found both at a pre-categorization late perceptual stage 100 ms post stimulus as well as in the post-perceptual categorization stage around 400 ms post stimulus, indicating that fine acoustic detail is carried through the entire perceptual process. Together, these studies strongly suggest that not all members of the category are perceived as truly equal and that identification and discrimination performance is not based on an all-or-none scheme. While this goes against the original CP hypothesis, it takes nothing away from the observation that in discrimination tasks, stop consonants are prone to perceptual warping, and that this warping appears to correlate closely with their classification into categories.

Vowel effects

Vowels exhibit less influence from categories and are perceived more continuously than stop consonants, with listeners exhibiting higher sensitivity to fine acoustic detail. Unlike consonants, vowel discriminability cannot be closely predicted from the identification data, which itself is much more prone to context effects (Eimas 1963). Relatively continuous perception has been found repeatedly in the /i/-/I/-/E/ continuum (Fry et al. 1962; Stevens et al. 1963; Stevens et al. 1964), as well as in perception of vowel duration (Bastian and Abramson 1962) and perception of tones in Thai (Abramson 1961). Further support for more continuous perception of vowels comes from mimicry experiments (Chistovich 1960; Kozhevnikov and Chistovich 1965): When participants were asked to mimic stop consonants and vowels, their ability to reproduce vowels accurately was much greater than for consonants, which tended to be reproduced with prototypical members of the category. It should be noted that these findings are all for steady-state vowels, and do not necessarily pertain to vowels in speech contexts that contain rapidly changing formant structures. Stevens (1966) found that one could obtain nearly categorical perception when looking at vowels between consonants pulled out of a rapidly articulated stream. However, for comparisons in this work, we will focus on findings in the perception of isolated vowels.

Kuhl (1991) took a different approach to investigating the role of categories in vowel perception by examining the relationship between discrimination and goodness ratings. In goodness rating tasks, participants give numerical ratings to indicate how good an example a stimulus is of a specific category. Goodness ratings collected by Kuhl (1991) along a vowel continuum near /i/ confirmed that there was variable within-category structure that people could represent and access; multiple participants shared the center of goodness ratings (i.e., the location where stimuli were rated highest on category fit) and had similar judgments for stimuli expanding radially from the center. These findings suggest that participants have a stable representation of the category for /i/ and that its structure does not represent an all-or-nothing judgment of category membership, but rather a gradient representation.

To determine how this gradient representation relates to the perception and discriminability of individual stimuli, adults, children, and monkeys were asked to discriminate sounds equally spaced around the prototypical and non-prototypical /i/ stimuli. Both adults and children were more likely to perceive stimuli around the prototypical category member as the same sound as compared to sounds around the non-prototype. However, monkeys did not show any effect of prototypicality. This suggested that humans’ discrimination abilities depended on linguistically informed representations of category structure and showed that perception of vowels is not entirely veridical, even if the precise nature of the effect differs from that of stop consonants.

These findings led Kuhl to propose the perceptual magnet effect. She described this effect as a within-category phenomenon, focusing on the relationship between category goodness ratings and discriminability. The claim was that stimuli that are judged to be better exemplars of a category act as “perceptual magnets”, making stimuli around them harder to discriminate. Meanwhile, stimuli judged to be poor exemplars exhibit very little effect on neighboring vowels. As a result, under the perceptual magnet hypothesis, there is a correlation between category goodness judgments and discriminability. Iverson and Kuhl (1995) showed a direct link between goodness ratings and discriminability, proposing this as a central tenet of the perceptual magnet effect. Critically, the effect resembles other categorical effects, but contains additional predictions regarding goodness ratings. If one considers an extreme version of perceptual magnets acting on the surrounding stimuli, we could get something akin to the original formulation of categorical perception. Hence, we can immediately see a possible relationship between this view of categorical effects in vowels and consonants.

The extent to which the perceptual magnet effect generalizes to other sound types is an open question. There were documented replications of the perceptual magnet effect in the /i/ category in German (Diesch et al. 1999) and Swedish (Kuhl et al. 1992; Aaltonen et al. 1997), but also failed replication attempts for American English (Lively and Pisoni 1997; Sussman and Gekas 1997) and Australian English (Thyer et al. 2000). Additionally, there was a failure to find evidence of the perceptual magnet effect for certain other vowel categories in English (Thyer et al. 2000). However, it was also found for the Swedish /y/ category (Kuhl et al. 1992; Aaltonen et al. 1997) as well as the lateral and retroflex liquids (/l/,/r/) in American English (Iverson and Kuhl 1996; Iverson et al. 2003). This suggests that it is a robust effect that extends to at least some types of consonants, even if the precise nature of the stimuli that elicit it is unclear

Fricative effects

Fricatives have spectral properties that make them interesting to consider in relation to work on other phonemes. They are consonants; however, they also share properties with vowels, in that they can largely be identified by their spectral properties. Specifically, sibilant fricatives [s] and [\(\int \)] are identified by their two primary frication frequencies, analogously to how vowels are identified by their two primary formants (F 1 and F 2). The precise spectral frequency cues are different in that fricatives have higher frequency aperiodic noise and vowels consist primarily of lower frequency periodic energy. However, they are qualitatively similar, in that these frequencies are key to the identification of particular sounds. Because of this similarity, fricatives serve as an interesting case to explore perception behavior that may fall intermediate between vowels and consonants.

Fricatives pattern with vowels in other aspects of perception as well. In their work on classifying the properties of perception of various forms of speech, Liberman et al., (1967) considered a sound’s tendency to show restructuring—exhibiting varying acoustic representations as a result of varying context—and how this related to observed categorical effects. Stop consonants were found to exhibit a large amount of restructuring, changing how they appear acoustically even though they have the same underlying phonemic status. This was found in both correlates of place of articulation (Liberman et al. 1967) as well as manner and voicing (Lisker and Abramson 1964b; Liberman et al. 1954). Steady state vowels, on the other hand, show no such restructuring, when accounting for speaker normalization and speaking rates (Liberman et al. 1967). Liberman et al. consider the noise produced at the point of constriction in both fricatives and stop consonants, and argue that for longer duration of the noise, precisely the kind found in fricatives, the cue does not change with context. This lack of restructuring was shown specifically for the perception of /s/ and /\(\int \)/ by Harris (1958) and Hughes and Halle (1956). Thus, if categorical effects are related to restructuring, fricatives may pattern with vowels rather than stop consonants in their categorical effects.

Experiments designed to evaluate the effects of categories on the perception of fricatives have led to mixed results. In behavioral experiments with fricatives, Repp (1981) found that participants’ behavior was similar to that originally found in experiments with stop consonants, indicating strong effects of categories for fricatives. However, during the course of the same study, some participants exhibited perception that was much more continuous. To accommodate this apparent contradiction in the findings, Repp proposed that participants were using two distinct processing strategies: acoustic and phonetic processing. Phonetic processing refers to a mode of perception where listeners are actively assigning phonetic category classifications, whereas acoustic processing refers to attention to the fine-grained acoustic variability of the signal.

More recently, Lago et al. (2015) investigated categorical effects in fricatives by focusing on the continuum between sibilant fricatives /s/ and /\(\int \)/. They conducted an identification task, an AX discrimination task, and a goodness judgment task. Their results showed a strong effect of categories on the perception of the stimuli with no strong correlation between discriminability and goodness ratings. Qualitatively, their identification findings showed a sharp change in identification near the category boundary, but a discrimination peak that was markedly shallower than expected. This suggested that fricatives employ a representation that retains more acoustic detail than pure category assignment, making them not as strongly categorical as stop consonants, but also not as continuous as vowel continua.

Models of categorical effects in consonant and vowel perception

Models that have been proposed have largely been split between those focused on strong categorical effects for stop consonants and those focused on weaker effects for vowels. Here we present a brief overview of the models covering a range of approaches. First we consider models focused on categorical perception and generally strong categorical effects. Various models have been put forward to explain the source of these strong categorical effects. Initially, researchers assumed that categorical perception resulted from psychophysical properties of processing speech and argued that it was specific to language processing (Macmillan et al. 1977). Other researchers tended to use more general views of either statistical properties or higher order cognitive processing to explain the effect. Massaro (1987a) used signal detection theory (SDT) to model the identification and discrimination tasks in two stages, sensory and decision operations, leading to a separation of sensitivity and response bias. In their model, categorical behavior can arise from classification behavior even if perception is continuous, with Massaro calling it categorical partition instead of categorical perception. The separation of perception and decision making processes was further investigated by Treisman et al. (1995), who applied criterion-setting theory (CST) (Treisman and Williams 1984) to categorical perception. Their work models the sensory system as able to reset the internal criterion for decision making based on most recently available data, much like Bayesian belief updating. Elman (1979) showed that such a model of criterion setting is able to capture the original stop consonant findings (Liberman et al. 1967) even better than their original Haskins model.

Other researchers considered the problem at a different level of analysis, focusing instead on the possible neural implementation of the categorical perception mechanism. Vallabha et al. (2007) proposed a multi-layer connectionist model that operates on Gaussian distributions of speech sounds as the input and produces categorical effects via interactions of three levels of representation: an acoustic input layer, an intermediate perceptual layer, and a categorical classification output layer. The key to their model is the presence of bidirectional connections between the output category level and hidden perceptual layer, whereby the perception influences the classification, but the classification simultaneously biases perception toward category centers. The setup of the model and use of bidirectional links to create top-down influences is similar to the TRACE model of speech perception proposed by McClelland and Elman (1986), where a feature level, phoneme level, and word level were used to explain various features of speech perception. Other neural network models have also been proposed to show a biologically plausible mechanism by which these categorical perception effect could arise. Damper & Harnad (2000) trained both a Brain-State-In-A-Box (BSB) (following Anderson et al., 1977) and a back-propogation neural network model to show how categorical perception arises through spontaneous generation after training on two endpoint stimuli. They were able to produce typical categorical effects and reproduce the discrepancy between VOT boundaries between different places of articulation found in human participants. Going for even greater biological plausibility, Salminen et al. (2009) exposed a self-organizing neural network to statistical distributions of speech sounds represented by neural activity patterns. Their resulting neural map showed strongly categorical effects from single neurons being maximally activated by prototypical speech sounds, along with the greatest degree of variability in the produced signal at the category boundaries. Kröger et al. (2007) showed that categorical perception arises when using distributions consisting of specific features (bilabial, coronal, dorsal) to train self-organizing maps to learn phonetic categories and discriminate between sounds.

These models suggest that there are many possible processes that underlie strong categorical effects. However, these models are poorly adapted to capture effects going beyond the case of strong categorical perception described above, such as the weaker categorical effects found in vowel perception. For vowels, we focus on models related to explaining the perceptual magnet effect. Several models at different levels of processing have been proposed to explain the source of the perceptual magnet effect. One such theoretical model is the Native Language Magnet Theory (Kuhl 1993), which proposed that prototypes exert a pull on neighboring sounds. However, this leaves open the question of why prototypes should exert a pull on neighboring speech sounds. An exemplar model was then proposed (Lacerda 1995) that showed how the perceptual magnet effect could be construed as an emergent property of an exemplar-based model of phonetic memory. In his model, sound perception is guided by a simple similarity metric that operates on collections of exemplars stored in memory, with no need to refer to special prototypes to derive the sorts of effects typical of the perceptual magnet effect. This then leaves open the question of how do we fully account for within-category discrimination. For this we can consider low-level neural network models that attempt to provide a potential explanation of the type of connectionist network that can give rise to these perceptual effects. One such neural network models was proposed by Guenther and Gjaja (1996), where sensory experience guided the development of an auditory perceptual neural map and the vector representing cell firing corresponded to the perceived stimulus. Another model that Vallabha and McClelland (2007) considered modeled learning via distributions of speech sounds and used online mixture estimation and Hebbian learning to derive the effect. Both models showed how the effect might be derived from a biologically plausible mechanism. Finally, Feldman et al. (2009) proposed a Bayesian model in which listeners infer the phonetic detail of a speaker’s intended target production through a noisy speech signal. It is this model that serves as the basis for the present work, where we try to show how both strong and weak categorical effects can be accounted for as a unified effect at the computational level.

Common ground in vowel and consonant perception

The existing evidence shows differing degrees of categorical effects across different phonemes. Stop consonant perception is characterized by very sharp identification shifts between two categories and a large peak in discrimination at the center between the categories. Vowel perception elicits more continuous identification functions, shallower peaks in discrimination at the category boundaries, and much greater within-category discrimination. Additionally, goodness ratings for vowels descend in gradient fashion from the center of the category outward (Iverson and Kuhl 1995), while for stop consonants the goodness ratings vary only slightly within the category (particularly for /p/), while they exhibit a sharp jump in goodness at the category boundary (Miller and Volaitis 1989). Models proposed for these effects only tend to work for subsets of categories. For stop consonants, the Liberman et al. (1967) model comes close to predicting discrimination based on the identification function, while this prediction fails in vowel perception experiments. Neural network models have been applied to either vowel and liquid perception (Guenther and Gjaja 1996; Vallabha and McClelland 2007) or to stop consonant perception (Damper and Harnad 2000), but the same models have not typically been used to account for perception of both classes. While we now know that no phonemes are perceived purely categorically, the literature has nevertheless continued to treat strongly categorical perception as a separate phenomena from more continuous perception of other sounds, particularly vowels (Table 1).

However, perception of the different sound classes also has much in common qualitatively. Both stop consonants and vowels exhibit greater discriminability at category boundaries, with the peak in discrimination being in close correspondence with the boundary found in identification. Stop consonants do exhibit some within-category discriminability, or at least within-category structure, as evidenced by reaction time measures (Pisoni & Tash, 1974; Massaro, 1987b). Lotto et al. (1998) also suggested that Kuhl’s (1991) failure in the ability to predict vowel discrimination from identification was due to faulty identification data rather than an inherent difference in perception between consonants and vowels: By retesting identification in paired contexts, they removed the need to appeal to goodness of stimuli to explain reduced discriminability near category centers. They argued based on their analysis that the perceptual magnet effect was nothing more than categorical perception. Furthermore, while Iverson and Kuhl (2000) found that the correlation between discriminability and goodness ratings (a key feature of the perceptual magnet effect model for vowel perception) could be dissociated from the relationship between identification and discriminability (a key feature of categorical perception model for stop consonants), Tomaschek et al. (2011) found that these two relationships co-occur. The fact that fricative perception was not found to be strongly categorical, nor as continuous as vowels, and different studies reached different results depending on the task and measurements involved further suggests that strongly categorical and largely continuous perception are merely two ends of a continuum, and not two separate modes of perception, and that there can be gradient degrees of categorical effects that fall between these two extremes.

The goal of our simulations is to show that a common explanation can account for the behavioral findings in both consonants and vowels. We adapt a model that was originally proposed to account for vowel perception and show that it also provides a close match to empirical data from stop consonants and fricatives. We further show how parametric variation within the model can lead to varying strengths in categorical effects. We argue that while perception of the cues to different sounds is implemented differently at a neural level, strong categorical effects in consonant perception and the largely continuous perception of vowels reflect solutions to the same abstract problem of speech perception at Marr’s 1982 computational level. Our analysis appeals to a kind of scientific Occam’s Razor to argue that our unified account is the more parsimonious theory; a similar argument was used to substantiate a unified account of cumulative exposure on selective adaptation and phonetic recalibration by Kleinschmidt and Jaeger (2015).

Bayesian model of speech perception

To show that it is possible to interpret categorical effects across speech perception as qualitatively similar processes, we model these effects using a Bayesian model developed by Feldman et al. (2009) that was originally proposed to account for the perceptual magnet effect along the /i/-/e/ continuum. We apply an extension of this model to a broader range of data, encompassing data from stop consonant and fricative perception. In doing so, we show that the categorical effects seen in consonant and vowel perception can be accounted for in a unified fashion.

Generative model

The model lays out the computational problems that listeners are solving as they perform identification and discrimination of sounds. In identification tasks, it assumes that the listener makes a choice among a set of categories while listening to sounds coming from the continuum between these categories. In discrimination tasks, the model assumes that the listener infers a continuous acoustic value that a speaker intended to produce, compensating for noise in the speech signal. For each of these two inference processes, the model formalizes the assumptions that the listener makes about the generative process that produced the sounds in order to determine how these assumptions affect their perception of the sounds. A graphical representation of the model appears in Fig. 2. For presentation purposes, and because of the nature of these particular studies, we restrict our attention to the case of two categories throughout the remainder of this paper. However, the model can in principle be applied to arbitrary numbers of categories, with Eqs. 2 and 9 being used to derive model predictions in the general case.

Bayesian model of production of phonemes used for optimal speech inference. a These are the distributions involved in the generative process. c is the underlying phonetic category chosen from the two possible categories, T is the intended target production chosen by the speaker, and S is the perceived speech sound heard by the listener. b This is the graphical model of the generative process for speech production under this Bayesian model. T is sampled from a Normal distribution with meaningful category variance \({\sigma _{c}^{2}}\) around the category mean μ c (i.e. \(p(T|c) = N(\mu _{c},{\sigma _{c}^{2}})\)). S is sampled from a normal distribution with noise variance \({\sigma _{S}^{2}}\) around the intended target production T (i.e. \(p(S|T) = N(T,{\sigma _{S}^{2}})\))

We begin with the listener’s knowledge of the two categories, which we call c 1 and c 2. The next steps concern the process that the listener presumes to have generated the sounds heard. First, the speaker chooses one of the two categories, which we refer to as c. We refer to this category as the underlying category. This is not to be confused with the term underlying category used in phonology to represent the abstract phonological category that underlies allophonic variation. Instead, we use this term to refer to a phonetic category that has not been corrupted by noise in the speech signal. This category can be represented in our model as a Gaussian distribution around the category mean μ c with variance \({\sigma _{c}^{2}}\). We are agnostic to the particular dimension for the mean and variance, but in practice it can represent any measure including VOT for stop consonants, F 1 and F 2 for vowels, and many others. This mean and variance of the category being used in the generative procedure by the speaker is known by the listener from previous exposure to sounds from this category in the language. For the purposes of our model, we don’t concern ourselves with how such categories are learned, but rather the perception that occurs once the categories are already acquired. Hence, the mean represents the center of the category in perceptual space. The variance here is assumed by the listener to be derived from processes that provide useful information about the nature of the sound, indexical variables such as speaker identity, or the identities of upcoming sounds. Because of this, we call the categorical variance of the underlying category ‘meaningful’. We consider in more detail in the General Discussion which types of factors might contribute to meaningful variance.

The next step in the generative process is the selection of an intended target production from the normal distribution \(N(\mu _{c},{\sigma _{c}^{2}})\). We refer to the intended target production as T. The probability of choosing a specific target production, T, from a phonetic category, c, is \(p(T|c) = N(\mu _{c}, {\sigma _{c}^{2}})\). Once the intended target production is chosen, it needs to be articulated by the speaker and perceived by the listener. This process introduces additional articulatory, acoustic, and perceptual noise that distorts the signal. We formalize this as an additional Gaussian distribution around the intended target production with mean T and variance \({\sigma _{S}^{2}}\). We refer to the actual speech sound that the listener perceives as S and assume that it is sampled from the distribution of speech signal noise, with probability \(p(S|T) = N(T,{\sigma _{S}^{2}})\). We can also consider the overall distribution of possible speech sounds related to the underlying category chosen by the speaker at the beginning of the generative procedure. If we integrate over all possible intended target productions, T, then we can describe the distribution of speech sounds as \(S|c = N(\mu _{c}, {\sigma _{c}^{2}}+{\sigma _{S}^{2}})\).

Given this generative model, we can consider how this model relates to the behavioral tasks described in the phoneme perception sections above. In those tasks, listeners are asked to either identify the category of the sound or to tell if two sounds are the same or different. In the model, the identification task relates to retrieving the underlying category, c. The discrimination task involves recovering the intended target production T for each of the stimuli heard by the listener and then comparing them to see if they are the same or different. The listener is presumed to be recovering both phonetic detail about the target production as well as category choice information when they perceive sounds. By fitting the model to the results of behavioral tasks performed by listeners, we find the optimal setting of parameters that best describes the data. We can then examine how these parameters relate to the degrees of categoricity seen in the perception of different phoneme continua.

Let us consider how the listener might be able to retrieve this information that they need. First, note that the listener does not have access to the intended target production, T, that the speaker meant to say. The listener does have knowledge of the underlying categories, \(N(\mu _{c1},\sigma _{c1}^{2})\) and \(N(\mu _{c2}, \sigma _{c2}^{2})\), noise variance along the relevant perceptual dimension, \({\sigma _{S}^{2}}\), and the actual speech stimulus that they perceived, S. This means that the listener will use a combination of actual perceived speech information and knowledge of underlying categories in inferring what the speaker intended. This relationship between the contribution of S and μ c1 and μ c2 will become important as we move forward in evaluating the varying effects of categories in identification and discrimination tasks. In terms of our model, the identification task will correspond to finding the probability of a given category given the speech sound. In other words, it entails computing p(c|S). The discrimination task corresponds to finding the probability of a given target production given the same speech stimulus, or computing p(T|S). For both of these inference procedures our model uses Bayes’ rule, which we discuss below in relation to each of these tasks.

Bayes’ rule

Bayes’ rule is derived from a simple identity in probability theory (Bayes 1763). It allows us to compute a belief in a hypothesis based on observed data, stating that the posterior probability of a hypothesis given some data can be calculated from the probability of the data given the hypothesis multiplied by the prior belief in the hypothesis and then normalized by the overall (marginal) probability of the data given all possible hypotheses,

The denominator on the right-hand side of the equation is the marginalized representation of the overall probability p(d). The term of the left-hand side, p(h|d) is called the posterior probability. The term p(d|h) is called the likelihood, since it describes how likely the data are under a certain hypothesis. The final term, p(h) is called the prior probability, since it is the probability of the hypothesis (i.e., belief in the hypothesis) before seeing data. Often these prior probabilities are uninformed and are set using a heuristic, or are uniformly distributed among all possible hypotheses, as is the case in our simulations of identification data below.

The hypotheses and data are different depending on what behavior we are modeling (identification vs. discrimination). In the section below we go through in detail how the inference procedure for the listener is structured, and what parameters we can extract by fitting the model to the listener’s behavioral data.

Bayes’ rule for identification

First we consider the behavioral task of identification. For a listener, the task of identifying a sound involves picking the correct category label for the sound. In our generative model, this means inferring the category c from the speech sound S (Table 2). Bayes’ rule for identification is

If we rewrite this equation using the probability distributions for the prior probability and the likelihood given in our generative model, we can see the critical parameters that can be recovered by fitting the model. We make the simplifying assumption that both categories are equally probable before any speech sound is heard, substituting 0.5 for p(c) in the equation. In other words, we are not taking into account different phoneme frequencies. Although vowel frequencies (Gimson 1980; Wioland 1972; Fok 1979; Fry 1947) and consonant frequencies (Crawford and Wang 1960; Mines et al. 1978) differ greatly, it is possible that expectations about phoneme frequency are diminished in a laboratory setting where participants merely choose between two particular phonemes. We thus proceed with this simplifying assumption in our simulations.

The resulting expression for identification, using probability distributions from the model, is

The values that appear in this equation are: μ c1, μ c2, \(\sigma _{c1}^{2}+{\sigma _{S}^{2}}\), and \(\sigma _{c2}^{2}+{\sigma _{S}^{2}}\). It is these values that we would be able to recover via a fit of our model to the behavioral data produced by the listener. The Simulations section below shows how this fits into the overall process of extracting parameters via model fitting, and how these parameters guide our understanding of the gradient effects of categoricity.

Equation 3 can be used to derive the equation that we will fit to the data. In the original application of this model to vowel data in Feldman et al. (2009), there was a simplifying assumption that underlying category variances for the two categories c 1 and c 2 were equal. This meant that only one sum of variances would need to be considered, \({\sigma _{c}^{2}} + {\sigma _{S}^{2}}\). However, this is an inaccurate assumption for stop consonants, because voiced and voiceless stop consonants have substantial differences in their variances along the voice onset time (VOT) dimension (Lisker and Abramson 1964a). Because of this, we extend the model to allow for different variances for the two categories. The expression for the probability of the sound having been generated from category 1 given the perceived stimulus is

where \({\sigma _{1}^{2}} = \sigma _{c_{1}}^{2} + {\sigma _{S}^{2}}\) and \({\sigma _{2}^{2}} = \sigma _{c_{2}}^{2} + {\sigma _{S}^{2}}\). A full derivation of this identification function is given in Appendix A.

In the simulations below, the optimal fit of this model to behavioral identification data was found by computing an error function between this model and the behavioral data and then running an error minimization routine in MATLAB to find the best-fitting parameters.

The identification portion of this model is compatible with several previous models that focus on identification behavior, including NAPP (Nearey and Hogan 1986) and HICAT (Smits 2001), which assume listeners are performing posterior inference on Gaussian categories. However, these previous models do not contain a variable analogous to a target production T, which in our model denotes the continuous phonetic detail that listeners aim to recover in discrimination tasks. It is this discrimination model that will be critical in accounting for differences between consonants and vowels in the strength of categorical effects.

Bayes’ rule for discrimination

Next, we consider the behavioral task of discrimination. Previous models of categorical perception have assumed that discrimination tasks primarily involved listeners’ inferences of category labels (e.g., (Liberman et al. 1957), (Damper and Harnad 2000)). Our model instead posits that listeners are primarily focused on recovering continuous phonetic detail in discrimination tasks, and recruit their knowledge of categories only because it helps them solve this inference problem. As we show below, inference of continuous phonetic detail is predicted to give rise to a pattern that has many of the same properties as categorical perception. Specifically, listeners perceiving sounds through a noisy channel are predicted to bias their perception toward peaks in their prior distribution over sounds. Because sounds occur most often near category centers, and less often near category edges, this results in a perceptual bias toward category centers.

For the listener, the task of discrimination involves inferring the most likely value of the target production, T, for a pair of stimuli and then comparing the values to see if the intended target productions were the same or different (Table 2). The further apart the pair of Ts are judged to be, the higher the probability that the listener will decide that the stimuli are different.

Given the values of the likelihood and prior probability distribution from Table 2, we can calculate the posterior probability p(T|S), which the listener needs to infer in order to perform the discrimination task during behavioral trials. Because the target production, T, could have derived from either underlying category, we can express the posterior distribution as a weighted sum over the two categories. The posterior has the form

The first term is the posterior distribution on target productions, given that a sound came from a specific category c. The weighting term is the probability of the category being the underlying one chosen given the speech sound heard, p(c|S), which was computed above in the identification section (4).

We can compute the posterior for a specific category, p(T|S, c), using the values introduced above. Bayes’ rule for discrimination is

The summation term from Eq. 1 has been replaced with an integral term because, unlike the category variable in the identification task, the target production is a continuous variable. If we rewrite using the probability distributions from the generative model, we can see the critical parameters that can be recovered via model fitting. The expression is

Plugging this back into Eq. 5 and expanding the summation term yields

The values that appear in this equation are: (1) p(c 1|S), (2) p(c 2|S), (3) T, (4) \({\sigma _{S}^{2}}\), (5) \(\sigma _{c1}^{2}\), and (6) \(\sigma _{c2}^{2}\). Of these values, the noise variance, \({\sigma _{S}^{2}}\), is the only one that needs to be fit in our simulations of discrimination data. This is because the first two, p(c 1|S) and p(c 2|S), are known from the identification part of the model fitting, T is the value being calculated, and the last two, \(\sigma _{c1}^{2}\) and \(\sigma _{c2}^{2}\), can be calculated by subtracting \({\sigma _{S}^{2}}\) from the two sums of variance terms inferred in the identification stage above. We also need a ratio constant, which we refer to as K, to relate the model discrimination predictions to the discriminability metrics used in the behavioral experiments. This additional parameter is merely a constant term that stretches the range of the model values to the range of behavioral findings, and does not provide critical information about the structure of the problem. Hence, we have two free parameters in the model that are estimated via fitting the behavioral discrimination data: \({\sigma _{S}^{2}}\) and K.

Equation 8 can be used to derive the model equation that we can fit to the discrimination data, calculating the optimal value under the posterior distribution for the target production given the speech sound. Specifically, we compute the mean value of the posterior distribution. The posterior distribution is a mixture of Gaussians obtained from the Gaussian prior, p(T|c), and the Gaussian likelihood, p(S|T), via Bayes’ rule. The basic equation can be seen in Eq. 9, which holds for arbitrary numbers of categories. The expanded form for the case of two categories with different category variances is given in Eq. 10. The full derivation can be found in Appendix B.

The expected value for the target production is a weighted average of contributions from each possible underlying category. The contribution from each category is itself a linear combination of the speech sounds, S, and the underlying category mean, μ c .

As with the identification model, the optimal fit of this model to the behavioral discrimination data was found by computing an error function between this model and the behavioral discrimination data and then running an error minimization routine in MATLAB to find the best-fitting parameters.

Degrees of warping: a critical ratio

In Eq. 9 (and expanded upon in Eq. 10), the acoustic value of S is multiplied by the meaningful category variance, \({\sigma _{c}^{2}}\). This means that a higher meaningful variance term yields a greater contribution from S in the inferred target production. Meanwhile, the acoustic value for the category mean, μ c , is multiplied by the noise variance term, \({\sigma _{S}^{2}}\). This means that a higher noise variance term leads to a greater contribution from μ c , the category mean, in the inferred target production. As a result, varying the ratio between these two variance terms leads to varying influences from the category mean and the speech sound, effectively controlling categorical effects. It is this ratio of the meaningful to noise variance that we show to correspond with the degree of categorical effects in phoneme perception. When fitting the behavioral identification data, we are able to extract the sum of the two variances. Then, after fitting the behavioral discrimination curves, we obtain the independent contribution of these two parameters. We call this ratio of variances τ and show that the τ values for different phonemes fall on a continuum that corresponds to the degree of categorical effects, giving us a parametric explanation of the differences within the same model. We do not claim that this measure for τ is explicitly represented or associated with different phonemes, but instead use it as a notational convenience that represents the degree to which the listener is attending to the acoustics of the speech signal based on the two variances associated with the phoneme or phonetic category. An appealing aspect of the τ statistic is that it is dimensionless, which allows us to compare phonemes that fall on continua defined over different acoustic dimensions.

We can gain insight into the continuum of τ values by looking at the extremes. As τ approaches zero, either the meaningful variance of a category approaches zero, or the noise variance grows very large, and in either case listeners have to depend entirely on their existing knowledge of categories. In this case, the entire judgment will be determined by the means of the underlying categories. Perception would look extremely categorical, as the listener discards any contribution of fine acoustic detail and instead uses purely the category mean. At the other extreme, as the ratio approaches infinity, either the meaningful category variance grows large or the noise variance goes to zero. In both cases, the contribution of the underlying category means shrinks to nothing and perception is guided purely by the details in the speech stimulus, S. This means that perception would be entirely continuous and veridical to the acoustic signal. Overall, this relationship represents the degree to which perception is biased by the effect of category membership, and can account for the gradient effects of categoricity we observe in various behavioral tasks.

Figure 3 illustrates the degree of warping along a given continuum with the produced acoustic values of the individual stimuli on the top and the perceived values on the bottom. This process provides an appealing visual perspective of the degree of warping for any given value of τ, showing what happens as we move from a situation with no noise (ratio of infinity) to a condition where there is ten times more noise than meaningful variance (ratio of 0.1). Along with the warping of actual to perceived stimuli, each chart is overlaid on top of the categorical variances that make up the meaningful variance component of the overall variance (we hold the sum of the meaningful and noise variances constant in these simulations).

These simulations illustrate the effect of varying the ratio of meaningful to noise variance. Warping from actual to perceived stimuli is shown in the dispersion of the vertical bars toward category centers. Total variance is held constant throughout the simulations, with the amount of variance attributed to underlying category variance shown in the two Gaussian distributions overlaid over the perceptual warping bars. Ratios presented include: a infinity, b 5.0, c 1.0, and d 0.1

In Fig. 3a, with no noise, all stimuli are perceived veridically and there is no warping of the signal. This is the extreme case with an infinite ratio, but is instructive since this is what would happen if we had full faith in the acoustic signal. In terms of our model, this means that the contribution of the perceived speech stimulus, S, completely trumps the mean of the underlying category, μ c . This graph also serves as a good reference point, with the Gaussian distribution in this case the same as the sum of the two variances in all other graphs (i.e. since there is no noise, the entire variance is due to the meaningful variance). We then see what happens in cases where more and more of the variance is attributed to perceptual noise, thereby shrinking the meaningful:noise variance ratio.

As we move down on the τ continuum, the relative size of the meaningful category variance gets smaller. Holding the sum of the variances constant, greater noise variance means a smaller meaningful variance. Consequently, we see that the Gaussians in the graphs get narrower around the category centers as we reduce the ratio. More importantly, with a decreasing ratio we see greater warping as the individual stimuli get pulled more and more strongly toward the centers of the categories. The contribution of the perceived speech stimulus, S, is decreased compared to the contribution of the mean of the underlying category, μ c . To motivate why this might happen, we can consider the explanation above in terms of perception in a noisy channel. The meaningful variance going down means that there is a greater contribution from the noise variance. Listeners cannot rely on the information coming in through the noisy channel. Instead, they must rely on their prior knowledge of categories. As a result, the perceived stimuli get pulled into the centers of the categories, of whose structure the listener has prior knowledge. At the last step, with a ratio of 0.1, many of the stimuli are almost completely pulled in by the category center.

In the remainder of the paper we examine whether the phonemes that we have been considering thus far can be interpreted as falling at different points along this continuum of categoricity. We fit the model to each type of phoneme’s identification data and discrimination data to get the relevant ratio of meaningful to noise variance. We then compare these ratios to the warping continuum that we just described. If the phonemes map onto the τ continuum in a way that correlates with the respective behavioral findings, this would indicate that the model captures the range of categorical effects, which had previously been described independently, via parametric variation within a single framework. If all of these effects can be captured by the same model, it may not be necessary to appeal to two independent effects to describe categorical effects for vowels and consonants. The categorical effects would be interpretable as instantiations of the same phenomenon.

Simulations

In this section we describe simulations with vowels, stop consonants, and fricatives. First, we show that the model provides a good fit to the behavioral data with an appropriate setting of the parameters. We evaluate this by examining the fit of the model to both the identification and discrimination curves from behavioral experiments. Second, we show that the derived parameters are precisely the type that yield the proposed single qualitative source of categorical effects proposed above. Particularly, we examine the derived τ values and where they fall on the continuum. All of our simulations follow the procedure that is shown in Table 3 and described in more detail below.

Modeling steps

Setting a category mean

First we set one of the category means. This is necessary because the model is otherwise underspecified; an infinite number of category means provide the same fit to the identification data (i.e., the parameters are not identifiable). This is because fitting the model derives the equivalent of the sum of the category means, so moving one mean up would just move the other one down. Since we need to have a specific set of means in order to get the proper variance ratio in the following steps, we fix one of the means. In our simulations we set the mean based on production data from native speakers. We choose which of the two means to set arbitrarily, since the simulation is symmetric and it does not matter which mean we set. We are able to verify that the mean that is found for the second category is reasonable based on production data, since the mean for the underlying and production categories is the same, with the only difference being the variance. Further, it should be noted that we can’t set one of the variances in order to further simplify the calculation since there is no behavioral data that corresponds to the underlying category. Production data serves as a close approximation to the underlying category since it avoids the perceptual noise, but it still includes articulatory noise, leaving us only able to set a category mean and deriving the rest.

Identification fitting

To fit the model’s identification predictions to the behavioral data, we use Eq. 4. We compute the mean squared error between this equation and the actual values from behavioral identification data to find the set of parameters \({\sigma _{1}^{2}}\), \({\sigma _{2}^{2}}\), μ c1, and μ c2 that give the optimal fit. In effect, this recovers the sum of the variances that the listener in our generative model assumes, leading to the structure of the Gaussian distributions for \(p(S|c) = N(\mu _{c},{\sigma _{c}^{2}}+{\sigma _{S}^{2}})\). This is the structure of the perceived category, as opposed to the underlying category.

Discrimination fitting

At this point we have the means and the sums of variances, so we need to pull apart the contribution of the meaningful category variance and the articulatory and perceptual noise variance to the overall variance derived in the previous step. In effect, \({\sigma _{S}^{2}}\) is the only free parameter that is inferred in this step. Separating out the noise variance serves a dual purpose. First, it allows us to calculate the ratio that we show to correspond with degree of categorical effects. Second, we get an independent test of the model’s ability to fit behavioral data. Having separated the two sources of variance, we can also see the shape of the underlying category distributions that are known to the listener in our generative model and can see if the finding is reasonable by comparing this against production data, which as mentioned above serves as a rough approximation to the underlying category with meaningful variance since it removes the perceptual part of the noise variance. This sanity check is especially relevant in the case of stop consonants, where the distributions of the phonemes at the two ends of the continuum exhibit very different variances that may be reflected in those categories’ perceptual signatures.Footnote 1

Calculate the variance ratio τ

As the final step of the simulation process, we compute the value of τ for each phoneme by dividing the meaningful category variance, \({\sigma _{c}^{2}}\), by the noise variance, \({\sigma _{S}^{2}}\). τ quantifies the strength of the pull toward category centers. We find an independent meaningful category variance for each phoneme, so for each phoneme category we actually find two τ values; τ c1 and τ c2. These τ values characterize the degree of perceptual bias toward category centers for either of the phonemes at the ends of the fricative, stop consonant, and vowel continua. In effect, then, they characterize the warping for an idealized continuum if it were to consist of two identical categories at its ends. Looking at the individual warping parameters allows our model to capture varying category structure and varying within-category discrimination, even within a single continuum. This is different from previous investigations of categorical perception, which typically looked across a whole continuum, not at individual phonemes on that continuum. Here, we do not quantify the amount of warping on the stop consonant continuum, but rather for /b/ and /p/ independently, and likewise for /s/ and /\(\int \)/ independently. In our simulations, the τ values end up being very close for the phonemes at the two ends of the continuum for all phonetic categories except stop consonants, for which the within category variance for the voiced stop consonant /b/ is much lower than that for /p/.

Vowels

The simulations we consider for vowel perception were conducted by Feldman et al. (2009), who examined the perceptual magnet effect as a case study in categorical effects in cognition. Their model made the simplifying assumption that the two categories that define the continuum endpoints have equal variance, but was otherwise identical to the model described above. We use the parameters of their simulation to compute a ratio of variances along the τ continuum for vowels and use this value as a basis for comparison with stop consonants and fricatives.

Feldman et al. (2009) used their model to simulate data from a paper by Iverson and Kuhl (1995) that used a multi-dimensional scaling technique to examine discrimination performance by participants on the /i/-/e/ continuum. The formant values for the continuum are reproduced in Table 4 below, as reported by Iverson and Kuhl (2000). Although the parameters here are given in Hertz, the continuum was based on equal sized steps in Mels, a psychoacoustic frequency scale (Stevens et al. 1937). While d’ data were available for the same stimuli, the multidimensional scaling data represented the entire range of stimuli and therefore provided a broader range upon which the model could be fit. Identification data were taken from Lotto et al. (1998), who pointed out that there was a discrepancy in how the identification and discrimination data were collected by Iverson and Kuhl (1995). Whereas the stimuli in discrimination trials were presented in pairs, stimuli in the identification trials were presented in isolation. Because of known effects of context on perception, this meant that the category of the same stimulus might be perceived differently in the two experiments. To circumvent this issue, Lotto et al. (1998), repeated the identification experiment from Iverson and Kuhl (1995), but presented the stimuli for both experiments in pairs. Using the identification data from Lotto et al. (1998) to fit the category means and the sum of the meaningful and noise variances, Feldman et al. showed that the model provided a close fit to the behavioral findings from Iverson and Kuhl (1995).

Feldman et al. (2009) then conducted an experiment to examine how noise affects discrimination judgments and whether the model captures these effects via the noise variance parameter. Listeners made same-different judgments for pairs of stimuli, modeled after those from Iverson and Kuhl (1995) (Table 4), in either quiet or noisy listening conditions. Feldman et al. generated confusion matrices for the stimuli in the experiment and modeled this confusion data using a variant of their model that predicted same-different judgments directly. In effect, they were computing the probability that the distance between the inferred target productions was less than or equal to 𝜖 given the two perceived speech stimuli, S 1 and S 2. Since the same contrast was presented a total of n times across all participants during the experiment, the overall confusion of the two stimuli is measured by the binomial distribution B(n, p), where p is the probability mentioned above and presented below in Eq. 11.

The role of this parameter in the extended model is similar to that of the observer response criterion in signal detection theory (Green and Swets 1966), where 𝜖 determined the size of the judged distance between stimuli necessary to yield a positive response, in their case a response of different.

In order to minimize free parameters in the model, they held constant all other parameters. To do this, they used the category means, μ /i/ and μ /e/, as well as the categorical variance, \({\sigma _{c}^{2}}\), from the multidimensional scaling simulations. They found that the model was able to find close fits to both conditions and, more importantly, that the noise parameter was independently a good predictor above and beyond the setting for the threshold parameter, 𝜖. For our current experiment we are not interested in perception in the presence of additional artificial noise, so we use the noise variance term from the no-noise condition, which was found to be \({\sigma _{S}^{2}} = 878\) (σ S = 30 mels), as the appropriate noise variance to capture discrimination performance for the /i/-/e/ continuum.

The parameters obtained in this discrimination study suggested that the noise parameters derived from fitting the multidimensional scaling analysis were inaccurate, due to skewing introduced via the multi-dimensional scaling procedure. We therefore focus our analysis on the the parameters derived directly from same-different judgments in their discrimination study, and avoid using multidimensional scaling data for the analyses in our simulations below.

The full set of parameters inferred through fitting the model can be found in Table 7 in the row for vowels. With the values for the meaningful and noise variance set to \({\sigma _{c}^{2}} = 5,873\) and \({\sigma _{S}^{2}}=878\), we derive the critical ratio for vowels \(\tau _{V} = \frac {5,873}{878} \approx 6.69\). The corresponding graphical warping picture for this τ value is shown in Fig. 8a together with analogous graphs for other phoneme categories. There is very little warping between the actual and perceived stimuli. There is, however, a small effect of categories, with stimuli closer to the categorical centers pulled together and slightly greater distances at the category boundary as the stimuli are pulled apart. This value and warping picture serve as baselines to consider behavioral data for stop consonants and fricatives.

Stop consonants

Stop consonants have been found to exhibit very strong categorical effects in perception during behavioral experiments. In our model, this would be formalized as a low meaningful to noise variance ratio. As such, in the presence of noise, listeners would rely much more on their knowledge of underlying categories rather than the detail available in the acoustic stream. However, even if the relative contribution of category means and acoustic detail does contribute to consonant perception, factors such as innate phonetic boundaries (Eimas et al. 1971) and auditory discontinuities (Pisoni 1977) may continue to play a role. If they exert an additional effect on stop consonant perception above and beyond the model we have proposed here, this would prevent our model from fully explaining the behavioral findings. Because of this, a good fit to the behavioral data would be a particularly strong argument in favor of positing a unified account for categorical effects in phoneme perception.

For stop consonants we consider both identification and discrimination data derived from Wood (1976). Their experiments focused on the perception of /b/ and /p/ along a voice onset time (VOT) continuum. Their stimuli were synthetically created along the continuum ranging from −50 to + 70 ms VOT. Their identification task was a classic two-alternative forced choice task. For discrimination, they administered both a 10-ms and 20-ms difference AX discrimination task, in which participants heard one stimulus, A, and then had to decide whether the second stimulus, X, was the same or different as the first. For our simulations below, we used data from their 20-ms discrimination condition. Values for both identification and discrimination can be found in Table 5. We use d-prime as the measure of perceptual distance, which we computed from hit and false alarm values reported in Fig 5 in their paper.Footnote 2

As a first step, we need to set the mean for one of the categories in our simulation. Based on production data in Lisker and Abramson (1964a), we set μ /p/ at 60 ms VOT. Once again, the choice of which mean to set is arbitrary so there is no deeper reason for setting one mean over the other. We then ran the error minimization procedure in Matlab in order to determine the optimal fit of the free parameters involved in the identification simulation: μ /b/ = −0.3 m s, \(\sigma _{/b/}^{2} + {\sigma _{S}^{2}} = 96.3\), and \(\sigma _{/p/}^{2} + {\sigma _{S}^{2}} = 336.2\) (Table 7).

The fit of the model to identification data can be seen in Fig. 4a. The figure also shows the category structure of the perceived categories, reflecting the two means as well as sums of variances for each category. The value of -0.3 ms that the model inferred as the /b/ category mean is very close to that found in the production data from Lisker and Abramson (1964a) (Fig. 5).

Production data for the /b/-/p/ continuum (reprinted from Lisker and Abramson (1964a)) overlaid with underlying categories found by the model

Fitting the discrimination data we get the following values for the individual variances in our model: \(\sigma _{/b/}^{2} = 16.3\), \(\sigma _{/p/}^{2} = 256.2\), and \({\sigma _{S}^{2}} = 80.0\) The fit of the model to the discrimination data can be seen in Fig. 4b. In addition to providing a good overall fit, the model is able to accurately predict the lower within-category discriminability of voiced stops relative to voiceless stops. This can be seen in Fig. 4b, where the left side of the distribution is substantially lower than the tail on the right.