Abstract

Optimally recruiting cognitive control is a key factor in efficient task performance. In line with influential cognitive control theories, earlier work assumed that control is relatively slow. We challenge this notion and test whether control can also be implemented more rapidly by investigating the time course of cognitive control. In two experiments, a visual discrimination paradigm was applied in which a reward cue was presented at variable intervals relative to target onset. The results showed that reward cues can rapidly improve performance. Importantly, the reward manipulation was orthogonal to the response, ensuring that the reward effect was due to fast cognitive control implementation rather than to automatic activation of rewarded S-R associations. We also specify the temporal limits of cognitive control empirically: the reward cue had no effect when it was presented shortly after target onset, during task execution.

Introduction

Humans are cognitive beings with intentions and goals. To achieve those goals, they monitor actions and their outcomes to adjust attention and effort levels to suit the situation (Botvinick, Cohen, & Carter, 2004). This set of top-down processes is referred to as “cognitive control,” because it allows basic cognitive processes to be controlled. Control improves task performance but carries a cost (Kool, McGuire, Rosen, & Botvinick, 2010). To decide whether enhancing control is useful, humans integrate cues for difficulty and reward. Evidence for such cue integration has been reported in several fMRI studies (Krebs, Boehler, Roberts, Song, & Woldorff, 2012; Vassena et al., 2014), and the influence of these cues on control implementation has been formalized in computational reinforcement learning models (Verguts, Vassena, & Silvetti, 2015).

Classical models of cognitive control conceptualize control as a serial and thus relatively slow process (Posner & Presti, 1987; Shiffrin & Schneider, 1977), as do more recent models in which reactive control is updated between trials in response to experienced task difficulty (Botvinick, Braver, Barch, Carter, & Cohen, 2001; Botvinick et al., 2004). Proactive control (in response to cues before task onset) is likewise conceptualized as rather slow (Braver, 2012). These models have inspired experimental designs exploring relatively slow control, such as the investigation of between-trial adaptation (Gratton, Coles, & Donchin, 1992). In contrast, recent associative models argue that control is implemented via associations between perceptual, motor, and control representations (Egner, 2014; Verguts & Notebaert, 2008, 2009). In this view, a difficult or potentially rewarding stimulus triggers a control representation, which subsequently improves the signal-to-noise ratio of current processing pathways. From such a point of view, control might be implemented more rapidly, perhaps even during task execution. Yet its exact time course has not been specified in such models, perhaps because empirical data on this time course are lacking.

Research has recently started to look at the time course of control. Evidence for fast control implementation was reported in an EEG frequency tagging experiment (Scherbaum, Fischer, Dshemuchadse, & Goschke, 2011) showing that on difficult, incongruent trials, attention towards task-relevant information increases continuously throughout the trial. A large literature shows that cues that are directly relevant for task execution are processed more efficiently (Kunde, Kiesel, & Hoffmann, 2003; Spruyt, De Houwer, Everaert, & Hermans, 2012). Item congruency (as in Scherbaum et al., 2011) is in this sense directly relevant for task execution and can thus be expected to be processed efficiently. However, it remains unclear to what extent task-irrelevant cues, such as reward cues, which are uninformative about the upcoming task, can induce control enhancements on a fast time scale, as predicted by associative models.

The influence of reward on performance has been studied extensively. Beneficial effects of reward on cognitive control have been found consistently (Bijleveld, Custers, & Aarts, 2010; Botvinick & Braver, 2015; Padmala & Pessoa, 2010, 2011), strongly suggesting that reward motivates participants to intensify control. This earlier work mostly demonstrated relatively slow adjustments. In many studies, reward was manipulated between subjects (Huebner & Schloesser, 2010) or between blocks (Leotti & Wager, 2010; Padmala & Pessoa, 2010). This allows participants to increase control deliberately, but leaves the time scale of the adjustment unknown. Another common procedure is to present cues indicating upcoming reward before task onset. Here, too, there is ample time for cue processing, because the cue is always presented a long interval (several seconds) before task onset (Aarts et al., 2014; Bijleveld et al., 2010; Knutson, Taylor, Kaufman, Peterson, & Glover, 2005; Krebs et al., 2012; Padmala & Pessoa, 2011; Schevernels, Krebs, Santens, Woldorff, & Boehler, 2014).

Studies investigating faster reward-based control implementation are scarce. Krebs, Boehler, and Woldorff (2010) used a Stroop task in which trials with certain ink colors were rewarded and showed that responses were faster for those trials than for non-rewarded ones. Because reward information was available only at task onset, this suggests that control can be implemented on a very short time scale. A similar fast reward effect was shown for response inhibition (Boehler, Hopf, Stoppel, & Krebs, 2012). In both studies, however, specific rewarded stimuli were linked to specific responses. Hence, when a stimulus and subsequent response were rewarded, the S-R link was possibly strengthened. When the rewarded stimulus was then presented again, the associated response was automatically activated, possibly speeding task performance (Damian, 2001). Studies avoiding this issue by using an orthogonal S-R mapping are rare and report only evidence for slow control (Neely, 1977). The latter priming study concluded that control implementation takes at least 400 ms.

As mentioned above, associative models theoretically allow fast control, but as the literature review illustrates, an empirical specification of its time course is currently lacking. Filling this gap is the aim of the current study. A visual discrimination task was used in combination with symbolic reward cues unrelated to the target stimulus and response. Three different fast cue timings allowed us to investigate the time course of cognitive control implementation. The reward cue was presented either 200 ms before, simultaneously with, or 200 ms after target onset. This third condition was included to study ultra-rapid control enhancement during a trial, when task execution has already been initiated. Note that for all timing conditions, the cue-target interval was considerably shorter than in the reward studies discussed above (Aarts et al., 2014; Bijleveld et al., 2010; Krebs et al., 2012; Schevernels et al., 2014). Crucially, the cues were uncorrelated with responses, so no S-R learning could occur for the cue. This ensures that we measured control rather than automatic S-R effects.

Experiment 1

Method

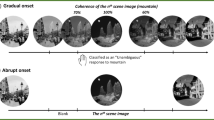

Eighteen paid subjects participated. Reward consisted of points linked to winning a gift voucher. Stimuli were presented centrally on a black background in 18 blocks of 48 trials. A trial (Fig. 1) consisted of a full grey circle (1000 ms), the target, an opening at the top and at the bottom of the grey circle (400 ms), a fixation cross (600 ms), and feedback (600 ms). Participants indicated the larger of the two openings with a button press. There were two difficulty levels, determined by the size difference between the openings. A reward cue was presented, indicating no information (+# in white, 50 % of trials), reward (+4 in green, 25 % of trials), or no reward (+0 in red, 25 % of trials). Cue timing was variable: 200 ms before (pre), simultaneously with (at), or 200 ms after (post) target onset (all timings equally probable). Feedback depended on the reward manipulation and the response (+4 or +0 in green for correct trials and −4 or −0 for error trials). Fifty percent of all trials were rewarded (if correct). All trial types were presented randomly intermixed.
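The trial composition described above can be made concrete with a short Python sketch. It assumes, beyond what is stated in the Method, that each 48-trial block fully crosses the three cue timings, the cue types (in a 2:1:1 neutral/reward/no-reward ratio), the two difficulty levels, and the two locations of the larger opening (3 × 4 × 2 × 2 = 48), and that the stated 50 % overall reward rate arises because a random half of neutral-cue trials are rewarded. Both the crossing and this neutral-trial payoff rule are our inferences, and the names `make_block`, `CUE_SOA_MS`, and `CUE_SLOTS` are hypothetical.

```python
import itertools
import random
from collections import Counter

# Event durations (ms) as listed in the Method section.
CIRCLE_MS, TARGET_MS, FIXATION_MS, FEEDBACK_MS = 1000, 400, 600, 600
# Cue onset relative to target onset (ms) for each timing condition.
CUE_SOA_MS = {"pre": -200, "at": 0, "post": 200}
# Two neutral slots per reward and no-reward slot: 50 % / 25 % / 25 %.
CUE_SLOTS = ["neutral", "neutral", "reward", "no_reward"]


def make_block(rng):
    """Return one shuffled block of 48 trial dicts (hypothetical layout)."""
    trials = []
    for timing, cue, difficulty, location in itertools.product(
        CUE_SOA_MS, CUE_SLOTS, ["easy", "hard"], ["top", "bottom"]
    ):
        trials.append({
            "timing": timing,
            "cue": cue,
            "difficulty": difficulty,
            "location": location,  # location of the larger opening
            # Cue onset measured from grey-circle onset; target appears at CIRCLE_MS.
            "cue_onset_ms": CIRCLE_MS + CUE_SOA_MS[timing],
        })
    # Assumed payoff rule: reward cues always pay if correct, no-reward cues
    # never do, and a random half of neutral trials do (50 % overall).
    neutral_idx = [i for i, t in enumerate(trials) if t["cue"] == "neutral"]
    paid = set(rng.sample(neutral_idx, len(neutral_idx) // 2))
    for i, t in enumerate(trials):
        t["rewarded_if_correct"] = t["cue"] == "reward" or i in paid
    rng.shuffle(trials)  # all trial types randomly intermixed
    return trials


block = make_block(random.Random(1))
print(len(block))  # 48
print(Counter(t["cue"] for t in block)["neutral"])  # 24
print(sum(t["rewarded_if_correct"] for t in block) / len(block))  # 0.5
print(CIRCLE_MS + TARGET_MS + FIXATION_MS + FEEDBACK_MS)  # 2600
```

Under this layout, cue onset falls at 800, 1000, or 1200 ms after grey-circle onset, and the listed event durations sum to a nominal 2600 ms per trial (not counting any response window or intertrial interval, which are not reported here).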

A linear mixed effects (LME) model was fitted for reaction times (RTs) with several predictors: reward (reward vs. no reward vs. neutral), cue timing (pre- vs. at vs. posttarget onset), location of the largest opening (location; top vs. bottom), and difficulty (easy vs. hard). A by-subject random intercept was also included. Although a maximal random effects structure has been proposed as optimal (Barr, Levy, Scheepers, & Tily, 2013), it has recently been argued that this often results in overparameterized models that fail to converge (Bates, Kliegl, Vasishth, & Baayen, 2015). Therefore, a model building strategy was applied. The added value of a by-subject random slope was tested by comparing the basic model to a model with a random slope for one of the predictors. This was then repeated for every predictor. Significant random slopes were obtained for location and difficulty, which were added to the final model. Effects in this final model were tested with type III ANOVAs; F-statistics were calculated with the Kenward-Roger adjustment of the degrees of freedom (Kenward & Roger, 1997).
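The random-slope selection procedure can be sketched in Python as follows. The callable `fit` is a hypothetical stand-in for the mixed-model software (which is not named here); it is assumed to return the maximized log-likelihood and the parameter count of a model with a by-subject random intercept plus the requested random slopes. Each slope is tested against the intercept-only model with a likelihood-ratio test, which is our assumption about how significance was assessed, and `chi2_sf` computes the chi-square upper tail in closed form for integer degrees of freedom.

```python
import math


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square(df) variable, integer df >= 1."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k=0}^{df/2-1} (x/2)^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= half / k
            total += term
        return math.exp(-half) * total
    # Odd df: erfc(sqrt(x/2)) plus a finite series (empty when df == 1).
    p = math.erfc(math.sqrt(half))
    term = math.sqrt(2.0 * x / math.pi) * math.exp(-half)
    for j in range(1, (df - 1) // 2 + 1):
        p += term
        term *= x / (2 * j + 1)
    return p


def select_random_slopes(fit, predictors, alpha=0.05):
    """Return the predictors whose by-subject random slope improves fit.

    `fit(slopes)` is a hypothetical callable returning (log_likelihood,
    n_params) for a model with a by-subject random intercept plus the
    random slopes named in the tuple `slopes`.
    """
    base_ll, base_k = fit(())
    kept = []
    for name in predictors:
        ll, k = fit((name,))           # basic model + one random slope
        lr = 2.0 * (ll - base_ll)      # likelihood-ratio statistic
        if chi2_sf(lr, k - base_k) < alpha:
            kept.append(name)
    return kept


# Invented log-likelihoods and parameter counts, only to exercise the code.
FAKE = {
    (): (-100.0, 10),
    ("reward",): (-99.0, 15),      # 3-level slope: +5 parameters
    ("timing",): (-98.5, 15),
    ("location",): (-90.0, 12),    # 2-level slope: +2 parameters
    ("difficulty",): (-95.0, 12),
}
final = select_random_slopes(FAKE.__getitem__, ["reward", "timing", "location", "difficulty"])
print(final)  # ['location', 'difficulty']
```

The numbers in `FAKE` are not data from this study. A correlated random slope for a two-level predictor adds two parameters (a variance and a covariance), and one for a three-level predictor adds five, which sets the degrees of freedom of each test. The naive chi-square reference is known to be conservative when the tested variance lies on the boundary of its parameter space.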

A generalized linear mixed effects (GLME) model for binary data was fitted for accuracy with the same predictors and model selection procedure as for RT analysis. The final model included a random slope for location. Because no small-sample adjustments of the degrees of freedom for binary responses have been proposed in the literature, chi-square statistics rather than F-statistics are reported.
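In equation form, the final Experiment 1 models can be written as follows, where i indexes trials and j subjects. The vector x_ij collects the fixed-effect codes for reward, cue timing, location, and difficulty (the exact set of interaction terms and the contrast coding are not reported here and are left generic), loc_ij and diff_ij are the location and difficulty codes, RT is shown untransformed, and y_ij = 1 denotes an error. These last two choices are our assumptions.

```latex
% RT model: by-subject random intercept and random slopes for location and difficulty
\mathrm{RT}_{ij} = \mathbf{x}_{ij}^{\top}\boldsymbol{\beta}
  + u_{0j} + u_{1j}\,\mathrm{loc}_{ij} + u_{2j}\,\mathrm{diff}_{ij} + \varepsilon_{ij},
\qquad \mathbf{u}_j \sim \mathcal{N}(\mathbf{0}, \boldsymbol{\Sigma}_{\mathrm{RT}}),
\quad \varepsilon_{ij} \sim \mathcal{N}(0, \sigma^{2})

% Accuracy model (logit link): by-subject random intercept and random slope for location
\operatorname{logit} \Pr(y_{ij} = 1) = \mathbf{x}_{ij}^{\top}\boldsymbol{\gamma}
  + v_{0j} + v_{1j}\,\mathrm{loc}_{ij},
\qquad \mathbf{v}_j \sim \mathcal{N}(\mathbf{0}, \boldsymbol{\Sigma}_{\mathrm{acc}})
```

For a model term with q coefficients, the test statistic is referred to a distribution with q (numerator) degrees of freedom: q = 2 for a three-level main effect such as reward or cue timing, and q = 4 for the reward × cue timing interaction in Experiment 1.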

Results

RT

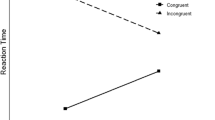

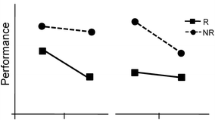

Results showed a main effect of difficulty, F(1, 17) = 127.66, p < 0.001 (slower RTs for difficult trials), and of cue timing, F(1, 13336) = 22.36, p < 0.001. RTs were slowest in the at condition (compared to post: t(17) = 6.00, p < 0.001; compared to pre: t(17) = 5.17, p < 0.001) and fastest in the pre condition (compared to post: t(17) = 2.46, p = 0.03). Crucially, there was a significant main effect of reward information, F(2, 13335) = 4.04, p = 0.02, which interacted with cue timing, F(2, 13335) = 7.20, p < 0.001 (Fig. 2a). To investigate this interaction, the effect of reward was tested for each cue timing separately. This revealed no significant effect in the post condition, F(2, 16) = 0.43, p = 0.66, a marginally significant effect in the at condition, F(2, 16) = 2.77, p = 0.09, and a significant effect in the pre condition, F(2, 16) = 6.54, p = 0.008. To characterize the effect of reward in the pre condition further, paired t-tests were performed, revealing a difference between reward and no-reward cues, t(17) = 4.52, p < 0.001, and between reward cues and neutral cues, t(17) = 2.30, p = 0.03, but not between no-reward cues and neutral cues, t(17) = 1.76, p = 0.10.

Fig. 2 Experiment 1: RTs (A) and error rates (B) were significantly influenced by reward in the pre condition. The reward effect is mainly driven by response speeding for reward trials relative to neutral trials (plotted in yellow and orange, A) and by an increase in error rate for no-reward trials compared to neutral trials (plotted in red and orange, B). Experiment 2: RTs (C) were significantly influenced by reward in the pre and at conditions; error rates (D) were influenced by reward only in the pre condition. *p < 0.05, **p < 0.01, ***p < 0.001
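A paired t-test across the 18 subjects has a single degrees-of-freedom parameter, n − 1 = 17. The Python sketch below uses the standard finite-series form of Student's t distribution for integer degrees of freedom (our choice, to avoid external dependencies; `t_two_tailed_p` is a hypothetical helper name) to recover two-tailed p-values for the pre-condition comparisons reported in the RT results.

```python
import math


def t_two_tailed_p(t, df):
    """Two-tailed p-value of Student's t for integer df >= 1 (finite series)."""
    theta = math.atan(abs(t) / math.sqrt(df))
    s, c = math.sin(theta), math.cos(theta)
    if df % 2 == 1:
        # Odd df: P(|T| < t) = (2/pi) * (theta + sin(theta) * series in cos(theta))
        series, term = 0.0, c
        for n in range(1, (df - 1) // 2 + 1):
            series += term
            term *= c * c * (2 * n) / (2 * n + 1)
        inside = 2.0 / math.pi * (theta + s * series)
    else:
        # Even df: P(|T| < t) = sin(theta) * series in cos^2(theta)
        series, term = 0.0, 1.0
        for n in range(df // 2):
            series += term
            term *= c * c * (2 * n + 1) / (2 * n + 2)
        inside = s * series
    return 1.0 - inside


# Pre-condition comparisons in Experiment 1 (reward vs. no-reward,
# reward vs. neutral, no-reward vs. neutral), each with df = 17.
for t_value in (4.52, 2.30, 1.76):
    print(t_value, round(t_two_tailed_p(t_value, 17), 3))
```

The recomputed values fall below 0.001, near 0.03, and near 0.10, respectively, in line with the reported p-values.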

Accuracy

There was a main effect of difficulty, χ2(1, N = 18) = 74.76, p < 0.001 (more errors for difficult trials), and of cue timing, χ2(1, N = 18) = 20.82, p < 0.001. Fewest errors were made in the post condition (compared to at: t(17) = 3.18, p < 0.01; compared to pre: t(17) = 3.61, p < 0.01). There was no difference between the at and pre conditions, t(17) = 1.28, p = 0.22. There was no main effect of reward, χ2(2, N = 18) = 2.46, p = 0.29, but there was an interaction of reward and cue timing, χ2(4, N = 18) = 10.02, p = 0.04 (Fig. 2b). To investigate this interaction, the reward effect was modeled for each cue timing separately. There was no significant reward effect in the post and at conditions, χ2(2, N = 18) = 1.32, p = 0.52, and χ2(2, N = 18) = 1.04, p = 0.60, respectively, but there was a reward effect in the pre condition, χ2(2, N = 18) = 15.37, p < 0.001.
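The chi-square p-values in this paragraph can be checked directly. For even degrees of freedom, the chi-square upper tail has the closed form exp(−x/2) Σ_{k < df/2} (x/2)^k / k!, which the Python sketch below implements. `chi2_sf_even` is a hypothetical helper name, and `REPORTED` simply transcribes the even-df statistics from this paragraph.

```python
import math


def chi2_sf_even(x, df):
    """Upper-tail chi-square probability for a positive even df (closed form)."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    half = x / 2.0
    term, total = 1.0, 1.0
    for k in range(1, df // 2):
        term *= half / k   # builds (x/2)^k / k! incrementally
        total += term
    return math.exp(-half) * total


# (statistic, df, reported p) for the Experiment 1 accuracy tests.
REPORTED = [(2.46, 2, 0.29), (10.02, 4, 0.04), (1.32, 2, 0.52), (1.04, 2, 0.60)]
for stat, df, p_reported in REPORTED:
    print(stat, df, round(chi2_sf_even(stat, df), 3), p_reported)
```

The recomputed values agree with the reported p-values to within 0.01.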

Discussion

We investigated how rapidly reward prospect can modulate task performance. The beneficial reward effect was clear when the cue preceded target onset, both for RTs and accuracy, indicating truly enhanced processing efficiency rather than a shift in speed-accuracy tradeoff. The reward effect was less clear when cue and target appeared simultaneously, with only a marginally significant effect for RTs and no effect for accuracy, and disappeared altogether when the cue followed target onset.

To explore the marginally significant effect in the simultaneous condition and to push the timing limits of the fast control adjustments observed in Experiment 1, cue processing was reduced to its simplest form in Experiment 2. In Experiment 1, participants distinguished among three intermixed cue types (neutral, reward, and no-reward). In Experiment 2, we confined neutral cues and informative cues (reward or no-reward) to separate, alternating blocks, thus reducing the number of cue types within a block and making them easier to distinguish. Furthermore, we increased statistical power by testing a larger number of subjects.

Experiment 2

Method

Twenty-seven paid subjects participated. The method was nearly identical to that of Experiment 1 (Fig. 1), except that trials with neutral cues and trials with informative cues appeared in separate alternating blocks.

The predictors and model selection procedure were identical to those of Experiment 1. The final models for both RTs and accuracy included random slopes for location and difficulty.

Results

In a preliminary analysis, neutral blocks were compared to informative blocks by fitting an LME model for RTs with block type (neutral vs. informative) as the fixed factor and a random slope for block type. Results showed an effect of block type, with faster RTs in neutral blocks than in informative blocks, F(1, 26) = 7.49, p = 0.01. There was no block difference for error rates (tested with a GLME model for binary responses), χ2(1, N = 27) = 2.48, p = 0.11. Because of this block effect, neutral trials cannot be straightforwardly compared to reward and no-reward trials. Hence, in the remainder of the Results, we focus on informative blocks only.

RT

There was a main effect of difficulty, F(1, 26) = 115, p < 0.001 (slower RTs for difficult trials), and of cue timing, F(1, 26) = 74.24, p < 0.001. RTs were slowest in the at condition (compared to post: t(26) = 5.16, p < 0.001; compared to pre: t(26) = 7.68, p < 0.001) and fastest in the pre condition (compared to post: t(26) = 3.15, p < 0.01). There was a main effect of reward, F(1, 9871) = 14.88, p < 0.001. The interaction of reward and cue timing was marginally significant, F(1, 9872) = 3.65, p = 0.056 (Fig. 2c; note that the F-test is non-directional). To investigate the interaction further, separate models were fitted for each timing condition. These revealed no effect of reward in the post condition, F(1, 3372) = 0.56, p = 0.46, but did reveal an effect in the pre and at conditions, F(1, 3250) = 11.69, p < 0.001, and F(1, 3260) = 4.72, p = 0.03, respectively.

Accuracy

There was a main effect of difficulty, χ2(1, N = 27) = 133.87, p < 0.0001 (more errors for difficult trials), and of cue timing, χ2(1, N = 27) = 29.37, p < 0.001. Fewest errors were made in the post condition (compared to at: t(26) = 3.37, p < 0.01; compared to pre: t(26) = 2.20, p = 0.04). There was no difference between the at and pre conditions, t(26) = 0.22, p = 0.82. There was a main effect of reward, χ2(1, N = 27) = 6.35, p = 0.01, and an interaction of reward and cue timing, χ2(4, N = 27) = 7.93, p < 0.01 (Fig. 2d). Tests for each cue timing separately revealed no significant reward effect in the post or at condition, χ2(1, N = 27) = 0.005, p = 0.94, and χ2(1, N = 27) = 0.34, p = 0.56, respectively, but there was an effect in the pre condition, χ2(1, N = 27) = 15.37, p < 0.001.

General discussion

In two experiments, we demonstrated that control can be rapidly enhanced in response to reward. The use of three timing conditions also provides novel insights into the nature and time course of cognitive control implementation. When a reward-predictive cue was presented 200 ms before target onset, it improved processing efficiency. The effect of reward diminished as less time was available for cue processing, with smaller effects for simultaneous cue and target presentation, and no effect for cues presented after target onset.

One might argue that difficulty was unmatched across timing conditions: the less time there was to process the cue, the more difficult the task might have become. Because reward and difficulty cues are weighted in the decision to increase control, increased difficulty might eliminate a reward effect. However, RTs were faster and fewer errors were made in the post condition than in the simultaneous condition, indicating that, if anything, the task was more difficult in the simultaneous condition, where we did find a reward effect.

A broad research effort is uncovering the fast and far-reaching influences of reward on cognition. Visual attention research has extensively shown that rewarded stimuli capture attention automatically, even when this is counterproductive (Hickey & van Zoest, 2012; Pearson, Donkin, Tran, Most, & Le Pelley, 2015). Interestingly, this might imply that the currently reported reward effects underestimate the enhancement of control: if the reward cues automatically attracted attention away from the actual discrimination target, this should have slowed responses rather than produced the observed speeding.

Our findings challenge models that conceptualize cognitive control as a slow process (Botvinick et al., 2001, 2004; Braver, 2012; Posner & Presti, 1987; Shiffrin & Schneider, 1977). Such models have been challenged before in congruency tasks. In these tasks, the magnitude of the congruency effect depends on the proportion of incongruent trials. This proportion congruency effect (PCE) is typically ascribed to a slow process that tonically enhances control in the context of high proportions of incongruent trials (Braver, 2012). In contrast, Crump, Gong, and Milliken (2006) showed that the PCE also occurs if the proportion congruency only becomes apparent at stimulus onset, suggesting a fast, stimulus-driven control enhancement. Our research shows that task-irrelevant reward cues (i.e., cues that are uninformative about the task) can also induce such rapid adjustments.

The current research supports more recent accounts that conceptualize cognitive control from an associative learning viewpoint (Egner, 2014; Verguts & Notebaert, 2008, 2009) and extends these models by specifying the time constraints of cognitive control. Because we took care to exclude stimulus-response learning, the question arises of what, from the associative learning point of view, is learned. We argue that subjects learn associations between perceptual (in this case, reward cue) and control (rather than motor) representations, which are automatically activated when the cue next appears (e.g., in event files: Hommel, 1998; Waszak, Hommel, & Allport, 2004) and quickly trigger appropriate levels of control. Future research is needed to determine whether such cueing requires training at all (instruction-based control implementation) and whether its timing changes with extensive training.

References

Aarts, E., Wallace, D. L., Dang, L. C., Jagust, W. J., Cools, R., & D’Esposito, M. (2014). Dopamine and the cognitive downside of a promised bonus. Psychological Science, 25(4), 1003–1009. doi:10.1177/0956797613517240

Barr, D. J., Levy, R., Scheepers, C., & Tily, H. J. (2013). Random effects structure for confirmatory hypothesis testing: Keep it maximal. Journal of Memory and Language, 68(3), 255–278. doi:10.1016/j.jml.2012.11.001

Bates, D. M., Kliegl, R., Vasishth, S., & Baayen, H. (2015). Parsimonious mixed models. Submitted to Journal of Memory and Language, 1–27.

Bijleveld, E., Custers, R., & Aarts, H. (2010). Unconscious reward cues increase invested effort, but do not change speed-accuracy tradeoffs. Cognition, 115(2), 330–335. doi:10.1016/j.cognition.2009.12.012

Boehler, C. N., Hopf, J.-M., Stoppel, C. M., & Krebs, R. M. (2012). Motivating inhibition - reward prospect speeds up response cancellation. Cognition, 125(3), 498–503. doi:10.1016/j.cognition.2012.07.018

Botvinick, M., & Braver, T. (2015). Motivation and cognitive control: From behavior to neural mechanism. Annual Review of Psychology, 66, 83–113. doi:10.1146/annurev-psych-010814-015044

Botvinick, M. M., Braver, T. S., Barch, D. M., Carter, C. S., & Cohen, J. D. (2001). Conflict monitoring and cognitive control. Psychological Review, 108(3), 624–652. doi:10.1037//0033-295X.108.3.624

Botvinick, M. M., Cohen, J. D., & Carter, C. S. (2004). Conflict monitoring and anterior cingulate cortex: An update. Trends in Cognitive Sciences, 8(12), 539–546. doi:10.1016/j.tics.2004.10.003

Braver, T. S. (2012). The variable nature of cognitive control: A dual mechanisms framework. Trends in Cognitive Sciences, 16(2), 106–113. doi:10.1016/j.tics.2011.12.010

Crump, M. J. C., Gong, Z., & Milliken, B. (2006). The context-specific proportion congruent Stroop effect: Location as a contextual cue. Psychonomic Bulletin & Review, 13(2), 316–321. doi:10.3758/BF03193850

Damian, M. F. (2001). Congruity effects evoked by subliminally presented primes: Automaticity rather than semantic processing. Journal of Experimental Psychology: Human Perception and Performance, 27(1), 154–165. doi:10.1037/0096-1523.27.1.154

Egner, T. (2014). Creatures of habit (and control): A multi-level learning perspective on the modulation of congruency effects. Frontiers in Psychology, 5, 1247. doi:10.3389/fpsyg.2014.01247

Gratton, G., Coles, M. G., & Donchin, E. (1992). Optimizing the use of information: Strategic control of activation of responses. Journal of Experimental Psychology: General, 121(4), 480–506. Retrieved from http://www.ncbi.nlm.nih.gov/pubmed/1431740

Hickey, C., & van Zoest, W. (2012). Reward creates oculomotor salience. Current Biology, 22(7), 219–220. doi:10.1016/j.cub.2012.02.007

Hommel, B. (1998). Event files: Evidence for automatic integration of stimulus-response episodes. Visual Cognition, 5(1-2), 183–216. doi:10.1080/713756773

Huebner, R., & Schloesser, J. (2010). Monetary reward increases attentional effort in the flanker task. Psychonomic Bulletin & Review, 17(6), 821–826. doi:10.3758/PBR.17.6.821

Kenward, M. G., & Roger, J. H. (1997). Small sample inference for fixed effects from restricted maximum likelihood. Biometrics, 53(3), 983. doi:10.2307/2533558

Knutson, B., Taylor, J., Kaufman, M., Peterson, R., & Glover, G. (2005). Distributed neural representation of expected value. The Journal of Neuroscience, 25(19), 4806–4812. doi:10.1523/JNEUROSCI.0642-05.2005

Kool, W., McGuire, J. T., Rosen, Z. B., & Botvinick, M. M. (2010). Decision making and the avoidance of cognitive demand. Journal of Experimental Psychology: General, 139(4), 665–682. doi:10.1037/a0020198

Krebs, R. M., Boehler, C. N., Roberts, K. C., Song, A. W., & Woldorff, M. G. (2012). The involvement of the dopaminergic midbrain and cortico-striatal-thalamic circuits in the integration of reward prospect and attentional task demands. Cerebral Cortex, 22(3), 607–615. doi:10.1093/cercor/bhr134

Krebs, R. M., Boehler, C. N., & Woldorff, M. G. (2010). The influence of reward associations on conflict processing in the Stroop task. Cognition, 117(3), 341–347. doi:10.1016/j.cognition.2010.08.018

Kunde, W., Kiesel, A., & Hoffmann, J. (2003). Conscious control over the content of unconscious cognition. Cognition, 88(2), 223–242. doi:10.1016/S0010-0277(03)00023-4

Leotti, L. A., & Wager, T. D. (2010). Motivational influences on response inhibition measures. Journal of Experimental Psychology: Human Perception and Performance, 36(2), 430–447. doi:10.1037/a0016802

Neely, J. H. (1977). Semantic priming and retrieval from lexical memory: Roles of inhibitionless spreading activation and limited-capacity attention. Journal of Experimental Psychology: General, 106(3), 226–254. doi:10.1037/0096-3445.106.3.226

Padmala, S., & Pessoa, L. (2010). Interactions between cognition and motivation during response inhibition. Neuropsychologia, 48(2), 558–565. doi:10.1016/j.neuropsychologia.2009.10.017

Padmala, S., & Pessoa, L. (2011). Reward reduces conflict by enhancing attentional control and biasing visual cortical processing. Journal of Cognitive Neuroscience, 23(11), 3419–3432. doi:10.1162/jocn_a_00011

Pearson, D., Donkin, C., Tran, S. C., Most, S. B., & Le Pelley, M. E. (2015). Cognitive control and counterproductive oculomotor capture by reward-related stimuli. Visual Cognition, 23(1-2), 41–66. doi:10.1080/13506285.2014.994252

Posner, M. I., & Presti, D. E. (1987). Selective attention and cognitive control. Trends in Neurosciences, 10(1), 13–17. doi:10.1016/0166-2236(87)90116-0

Scherbaum, S., Fischer, R., Dshemuchadse, M., & Goschke, T. (2011). The dynamics of cognitive control: Evidence for within-trial conflict adaptation from frequency-tagged EEG. Psychophysiology, 48(5), 591–600. doi:10.1111/j.1469-8986.2010.01137.x

Schevernels, H., Krebs, R. M., Santens, P., Woldorff, M. G., & Boehler, C. N. (2014). Task preparation processes related to reward prediction precede those related to task-difficulty expectation. NeuroImage, 84, 639–647. doi:10.1016/j.neuroimage.2013.09.039

Shiffrin, R. M., & Schneider, W. (1977). Controlled and automatic human information processing: II. Perceptual learning, automatic attending and a general theory. Psychological Review, 84(2), 127–190. doi:10.1037/0033-295X.84.2.127

Spruyt, A., De Houwer, J., Everaert, T., & Hermans, D. (2012). Unconscious semantic activation depends on feature-specific attention allocation. Cognition, 122(1), 91–95. doi:10.1016/j.cognition.2011.08.017

Vassena, E., Silvetti, M., Boehler, C. N., Achten, E., Fias, W., & Verguts, T. (2014). Overlapping neural systems represent cognitive effort and reward anticipation. PLoS ONE, 9(3), e91008. doi:10.1371/journal.pone.0091008

Verguts, T., & Notebaert, W. (2008). Hebbian learning of cognitive control: Dealing with specific and nonspecific adaptation. Psychological Review, 115(2), 518–525. doi:10.1037/0033-295X.115.2.518

Verguts, T., & Notebaert, W. (2009). Adaptation by binding: A learning account of cognitive control. Trends in Cognitive Sciences, 13(6), 252–257. doi:10.1016/j.tics.2009.02.007

Verguts, T., Vassena, E., & Silvetti, M. (2015). Adaptive effort investment in cognitive and physical tasks: A neurocomputational model. Frontiers in Behavioral Neuroscience, 9, 57. doi:10.3389/fnbeh.2015.00057

Waszak, F., Hommel, B., & Allport, A. (2004). Semantic generalization of stimulus-task bindings. Psychonomic Bulletin & Review, 11(6), 1027–1033. doi:10.3758/BF03196732

Acknowledgments

This work was supported by the Ghent University Multidisciplinary Research Partnership “The integrative neuroscience of behavioral control” and by grant P6/29 of the Interuniversity attraction poles program from the Belgian Federal Government. Clio Janssens is a PhD fellow with the Ghent University BOF research council; Esther De Loof is a PhD fellow with the Research Foundation Flanders (FWO). Thanks are due to Elger Abrahamse for useful discussion on this topic.

Cite this article

Janssens, C., De Loof, E., Pourtois, G. et al. The time course of cognitive control implementation. Psychon Bull Rev 23, 1266–1272 (2016). https://doi.org/10.3758/s13423-015-0992-3

DOI: https://doi.org/10.3758/s13423-015-0992-3