Abstract

Recent research has shown that being able to interact with an object causes it to be perceived as closer than an object that cannot be interacted with. In the present study, we examined whether this compression of perceived space would also be experienced by people who simply observed such interactions by others, with no intention of performing the action themselves. Participants judged the distance to targets after observing an actor reach to an otherwise unreachable target with a tool (Experiment 1) or illuminate a distant target with a laser pointer (Experiment 2). Observing either type of interaction caused a compression of perceived space, revealing that a person’s perception of space can be altered through mere observation. These results indicate that shared representations between an actor and observer are engaged at the perceptual level easily and perhaps automatically, even in the absence of cooperation or an observer’s own intention to interact.


Humans use tools to interact with objects that would otherwise be beyond reach, whether knocking a piece of fruit out of a tree or changing the TV channel from across the room. Importantly, using a tool not only enables different behavioral outcomes but can also fundamentally alter one’s perception of the physical environment. For example, extending one’s physical or functional reach with a tool makes otherwise out-of-reach objects appear closer (e.g., Witt, Proffitt, & Epstein, 2005), even in the absence of visual feedback about the interaction (Davoli, Brockmole, & Witt, in press). This spatial compression is thought to reflect a perceptual system that represents the environment in terms of one’s ability to act within it (e.g., Witt, 2011a); hence, objects that are more easily reachable are perceived to be closer. As social animals, however, we rarely act in isolation; rather, we act in the presence of others or watch others as they act. This raises the question of whether observing an interaction such as tool use can cause the same perceptual changes in the observer as it does in the actor. We examined this question in the present study.

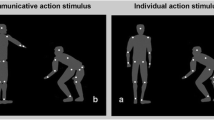

What reasons might there be to suspect that one who observes tool use would perceive space in the same way as an actor? First, there is precedent for observer–actor mimicry in the visual system: Spatial attention is known to be automatically directed by social cues such as eye gaze (Friesen & Kingstone, 1998), head gaze (Langton & Bruce, 2000), pointing (Langton, O’Malley, & Bruce, 1996), and implied body motion (Gervais, Reed, Beall, & Roberts, 2010). Furthermore, when performing collaborative tasks, people tailor their attention and perception in response to what their partner is doing (see Sebanz, Bekkering, & Knoblich, 2006). For example, people spontaneously coordinate the deployment of their own visual attention with that of a partner when conversing about a common object (Richardson, Dale, & Kirkham, 2007) or searching through the same display (Brennan, Chen, Dickinson, Neider, & Zelinsky, 2008; Welsh et al., 2007). Representation of a partner’s task occurs even when it is unnecessary for completing one’s own task (Sebanz, Knoblich, & Prinz, 2003), and people seem to automatically take into account what a co-viewer sees when considering their own perspective (Samson, Apperly, Braithwaite, Andrews, & Bodley Scott, 2010). At a neural level, these behavioral effects are mirrored by evidence that brain areas that are active while performing a goal-directed action are also active during observation of that same action (e.g., Iacoboni et al., 2005; Rizzolatti & Craighero, 2004), including areas that are responsible for perception–action integration and that may be involved in action-induced perceptual modulation. Taken together, these findings support the notion that socially contextualized tasks engender a “common ground,” or shared representation, among viewers that reflects an awareness of what the other person is doing (cf. Sebanz et al., 2006; but compare Hommel, Colzato, & van den Wildenberg, 2009, and Vlainic, Liepelt, Colzato, Prinz, & Hommel, 2010).

Although individuals may engage shared representations when performing cooperative tasks that require mutual effort and participation with a partner, it is unknown whether these shared representations extend to perception itself. It is also unknown whether cooperation is necessary to form such shared representations. In the present study, we directly investigated both issues. To determine whether cooperation is necessary, we asked participants to observe the actions of another individual without any intention of performing the action themselves. In fact, observers did not even need to attend to the actor to complete their own task successfully. To determine whether observer effects are perceptual, we asked whether the compression of perceived distance seen in tool users is also present among participants observing tool use. In Experiment 1, participants watched as their partners either reached (unsuccessfully) to an out-of-reach target with their arms or reached (successfully) to the target with a reach-extending tool. Previous studies employing this type of paradigm have demonstrated that actors estimate targets that can be reached as closer than those that are out of reach (Witt, 2011b; Witt et al., 2005; Witt & Proffitt, 2008). If observing an interaction is sufficient to cause spatial compression, then observers’ estimates should mimic that pattern. Previewing our results, we found that people who observe another person using a tool do show the same perceptual distortions as the tool user.

In Experiment 2, we considered that not all tools extend a user’s physical reach; some act instead as a functional extension of the body by allowing the user to affect a distant object remotely, as when a person turns off the TV with a remote control or illuminates a projection screen with a laser pointer. Despite the mechanistic differences between direct and remote tool use, both cause spatial compression for the user (Davoli et al., in press; Witt et al., 2005). We thus sought to test the limits of spatial compression for observers by asking whether the effect could also result from watching remote tool use.

Experiment 1: Observed direct tool use

In Experiment 1, we examined whether distance perception is modulated among participants who observe another person reach for an object. Previous studies have found that using a tool to reach an object that is beyond the reach of one’s arm reduces the perceived distance of that object (Witt et al., 2005). To determine whether observing tool use causes a similar compression of perceived space, we asked one participant in each pair to estimate the distance to a target while watching a partner reach toward it either with an arm or with a reach-extending tool. If observation has the same perceptual consequences as action, then observers should perceive targets to be closer on trials in which their partners can reach them with the tool, even though the physical distance of the targets is unchanged. If the spatial compression associated with tool use occurs only for actors, however, then observers should give equivalent distance estimates regardless of whether their partners can reach the target, and thus regardless of whether they reach with an arm or a tool.

Method

Participants

Thirty-two right-handed Washington University undergraduates participated for course credit.

Apparatus, stimuli, procedure, and design

Participants completed the experiment in pairs; in each session, one participant was randomly assigned the role of actor (16 total) and the other the role of observer (16 total). The pair sat side by side at the end of a table that was 152 cm wide and 152 cm long. The table was placed at a diagonal in the room and was covered with a black cloth to minimize environmental anchors by which participants could judge distances. All participants used a chin rest to maintain head position across trials. The target stimulus was a metal ball 2.54 cm in diameter. The ball could be placed at 10 possible locations, all beyond the reach of participants’ arms: 75, 80, 85, 90, 95, 100, 105, 110, 115, or 120 cm from the table edge nearest the participants’ bodies. One judgment at each of these 10 locations constituted a set. Eight sets were presented sequentially, with the order of distances within each set independently randomized. There were two conditions, arm pointing and tool pointing. In the arm pointing condition, actors pointed toward the target with the index finger of their dominant hand. In the tool pointing condition, actors used a 65-cm-long pointing stick to point toward the target.

At the beginning of each trial, participants were asked to close their eyes while the experimenter placed the ball at one location. When the ball was in place, actors then pointed to it with either their arm or the tool while the observer watched the movement. After the pointing was completed, observers provided a written estimate of the target’s distance in whatever units they were most comfortable using (inches or centimeters). All measurements were converted to centimeters for the analysis. The pointing conditions were presented in two blocks of four sets, with the pointing condition fixed within a block and with condition order counterbalanced across participants.
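The trial structure described above (eight sets of the ten distances, each set shuffled independently, run as two single-condition blocks of four sets with condition order counterbalanced across pairs) can be sketched in Python as follows. This is an illustrative reconstruction, not the authors’ software; the function name `build_schedule` and the rule that even-numbered pairs begin with arm pointing are hypothetical.

```python
import random

# The ten target distances in Experiment 1 (cm from the table edge nearest the participants).
DISTANCES_CM = [75, 80, 85, 90, 95, 100, 105, 110, 115, 120]
SETS_PER_BLOCK = 4  # two blocks of four sets = eight sets of ten judgments


def build_schedule(pair_index, seed=None):
    """Return (block, condition, distance_cm) tuples for one actor-observer pair.

    Hypothetical counterbalancing rule: even-numbered pairs start with arm
    pointing and odd-numbered pairs start with tool pointing.
    """
    rng = random.Random(seed)
    conditions = ["arm", "tool"] if pair_index % 2 == 0 else ["tool", "arm"]
    schedule = []
    for block, condition in enumerate(conditions, start=1):
        for _ in range(SETS_PER_BLOCK):
            one_set = DISTANCES_CM[:]  # every distance appears once per set
            rng.shuffle(one_set)       # order randomized independently for each set
            schedule.extend((block, condition, d) for d in one_set)
    return schedule


trials = build_schedule(pair_index=0, seed=1)
print(len(trials))                  # 80
print(trials[0][1], trials[-1][1])  # arm tool
```

Keeping the pointing condition fixed within a block, as the procedure specifies, means only the set order needs randomizing; the counterbalancing is handled entirely by which condition list a pair receives.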

Results and discussion

Data are depicted in Fig. 1. We excluded the three farthest target distances (110, 115, and 120 cm) from analysis because some actors were unable to reach those targets even when using the tool; the remaining distances are thus ones that all actors could reach with the tool. Distance estimates were submitted to a 2 (observation type: arm pointing, tool pointing) × 7 (distance: 75, 80, 85, 90, 95, 100, 105 cm) repeated measures ANOVA. Not surprisingly, distance estimates increased with physical distance, F(6, 90) = 156.36, p < .001, ηp² = .91. More importantly, observers judged targets to be closer after watching actors reach with the tool than after watching them reach with their arms, F(1, 15) = 5.78, p < .05, ηp² = .22. The regression equation for tool pointing was y = 5.89x + 63.65, R² = .997, and that for arm pointing was y = 6.07x + 65.40, R² = .999. The effects of distance and observation type did not interact, F(6, 90) = 6.16, p > .30, ηp² = .02.
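Partial eta squared, the effect size reported alongside each F test, is a simple function of F and its degrees of freedom, so it can be recomputed as a quick check on reported values. The minimal Python sketch below is an illustration (the function name is ours, not from a statistics package); applied to the main effect of distance, F(6, 90) = 156.36, it reproduces ηp² = .91.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F ratio and its degrees of freedom.

    Because F = (SS_effect / df_effect) / (SS_error / df_error),
    eta_p^2 = SS_effect / (SS_effect + SS_error)
            = (F * df_effect) / (F * df_effect + df_error).
    """
    numerator = f_value * df_effect
    return numerator / (numerator + df_error)


# Main effect of distance in Experiment 1: F(6, 90) = 156.36
print(round(partial_eta_squared(156.36, 6, 90), 2))  # 0.91
```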

Results indicate that simply observing another person use a tool to reach toward an otherwise unreachable object is sufficient to cause a compression of perceived space, mimicking the pattern of perceptual distortion experienced by actors (e.g., Witt et al., 2005). This finding suggests that individuals who observe others directly interacting with objects share a common perceptual representation with the actors themselves. In Experiment 2, we tested the limits of this shared representation by examining whether the same compression could occur when observing remote interactions—ones in which a user’s reach is still functionally extended, but not physically extended.

Experiment 2: Observed remote tool use

In Experiment 2, we examined whether perceptual compression would be found when participants observed another person perform a remote interaction with a distant object (i.e., illuminating a target with a laser pointer). Although compression of perceived space has been demonstrated in participants who themselves interact remotely with an object (Davoli et al., in press), it is unclear whether that perceptual distortion would also be experienced by someone who simply observes a remote interaction. Thus, in the present experiment, participants judged the distance between themselves and a target while another person either illuminated the target or simply pointed at it. If observing a remote interaction is sufficient to influence the observer’s perception, then participants who watch another person illuminate a target with a laser pointer should perceive it to be closer than should participants who watch another person merely point at it. If spatial compression in this case is restricted to the person who performs the interaction, however, then distance judgments should not depend on whether participants observe an interaction between another person and the target.

Method

Participants

Thirty-six experimentally naive University of Notre Dame undergraduates participated in exchange for course credit.

Apparatus, stimuli, procedure, and design

The experiment was conducted in a basement hallway (42.5 m long and 2.6 m wide) of the psychology building at the University of Notre Dame. The target was a standard shooting target, mounted on a wooden stand. The center of the target was 127 cm above the floor. The target could be placed at eight possible locations: 1.8 m, 5.2 m, 8.8 m, 13.4 m, 19.2 m, 22.6 m, 26.2 m, or 30.5 m away from the participant. There were two observations per location, for a total of 16 experimental trials. The order of locations was randomized with the exceptions that each of the eight unique locations had to be used before a location could be repeated, and a location could not be repeated within three trials of its first occurrence.
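One way to generate a 16-trial order satisfying the two constraints above is sketched below: shuffle the eight locations once, then reshuffle a second pass until every location’s repeat falls far enough after its first occurrence. This is a hypothetical implementation, since the article does not describe the authors’ procedure, and it rests on an assumed reading of “within three trials”: the repeat must come more than three positions after the first occurrence (the `MIN_GAP` constant).

```python
import random

# The eight target locations in Experiment 2 (meters from the participant).
LOCATIONS_M = [1.8, 5.2, 8.8, 13.4, 19.2, 22.6, 26.2, 30.5]
# Assumed reading of the spacing rule: the repeat of a location must occur
# more than MIN_GAP trial positions after its first occurrence.
MIN_GAP = 3


def build_trial_order(rng):
    """Return a 16-trial order: all eight locations once, then all eight again."""
    first_pass = LOCATIONS_M[:]
    rng.shuffle(first_pass)
    n = len(LOCATIONS_M)
    while True:  # rejection sampling on the second pass until the spacing rule holds
        second_pass = LOCATIONS_M[:]
        rng.shuffle(second_pass)
        if all(n + second_pass.index(loc) - first_pass.index(loc) > MIN_GAP
               for loc in LOCATIONS_M):
            return first_pass + second_pass


order = build_trial_order(random.Random(7))
print(order)
```

The loop always terminates because at least one valid second pass exists (repeating the first pass exactly places every repeat eight positions later), and rejection sampling leaves every valid order possible rather than hand-building a single pattern.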

Each experimental session involved a confederate, played by the same undergraduate research assistant in all sessions. The experimenter explained that the participant and the confederate would be working together to complete the experiment. The confederate’s role was first to point to the target with either a metal baton or a laser pointer, and the participant’s role was then to estimate the distance to the target. Although the assignment of these roles was presented to participants as random, in actuality it never varied. Equal numbers of participants observed the confederate use the laser pointer (observed interaction condition) or the baton (observed pointing condition), and assignment to these conditions was random. The laser pointer and baton were both cylindrical, 15 cm long and 1.4 cm in diameter, and both were made of metal so that they looked as similar as possible.

The participant and the confederate stood next to each other at a fixed location at one end of the hallway. Before each trial, both turned around so that they could not see the experimenter position the target. Each trial began with the confederate either pointing the baton at the center of the target or illuminating the target with the laser pointer. The confederate said aloud when he believed he was pointing as close to the center as possible, at which point (while he held his aim) the participant estimated the distance to the target. Participants were encouraged to use whatever unit of measurement they believed to be most accurate (feet, yards, etc.). For analysis, all estimates were converted to meters.
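Converting the verbal estimates to a common unit is a simple table lookup. The sketch below uses the exact international definitions of the inch (0.0254 m), foot (0.3048 m), and yard (0.9144 m); the unit codes and the function name are hypothetical, since the article does not say how estimates were recorded.

```python
# Exact international conversion factors from common length units to meters.
METERS_PER_UNIT = {
    "m": 1.0,
    "cm": 0.01,
    "in": 0.0254,
    "ft": 0.3048,
    "yd": 0.9144,
}


def estimate_to_meters(value, unit):
    """Convert one verbal distance estimate to meters.

    The unit codes ("ft", "yd", ...) are hypothetical labels for whatever
    unit a participant chose to report.
    """
    try:
        factor = METERS_PER_UNIT[unit]
    except KeyError:
        raise ValueError(f"unsupported unit: {unit!r}") from None
    return value * factor


print(round(estimate_to_meters(30, "ft"), 3))  # 9.144
print(round(estimate_to_meters(10, "yd"), 3))  # 9.144
```

Raising an error for an unrecognized unit, rather than silently passing the value through, keeps a mislabeled estimate from entering the analysis in the wrong unit.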

Results and discussion

Data are depicted in Fig. 2. Mean distance estimates were submitted to a 2 (observation condition: interaction, pointing) × 8 (physical distance to target: 1.8, 5.2, 8.8, 13.4, 19.2, 22.6, 26.2, 30.5 m) mixed factors ANOVA.

As would be expected, verbal estimates increased as the distance to the target increased, F(7, 238) = 217.45, p < .001, ηp² = .87. Importantly, participants in the observed interaction condition estimated the targets to be closer than did participants in the observed pointing condition, F(1, 34) = 5.73, p < .05, ηp² = .14. Observation condition also interacted with physical distance, such that the difference in estimates between the two observation conditions increased with physical distance, F(7, 238) = 4.21, p < .05, ηp² = .11. Planned comparisons revealed significantly shorter distance estimates in the observed interaction condition than in the observed pointing condition at the four farthest target locations, ts(34) > 2.07, ps < .05. At 8.8 m and 13.4 m, the differences did not reach statistical significance but showed a trend in the same direction, ts(34) > 1.85, ps < .075. The regression equation for observed pointing was y = 1.144x + .698, R² = .997, and the regression equation for observed interaction was y = .869x + .021, R² = .999. These results suggest that when the target was farther than 13.4 m away, participants who observed a remote interaction between another person and a target perceived the target to be significantly closer than did participants who observed another person pointing at, but not interacting with, the target. The spatial compression found here for observed remote tool use parallels the compression previously found during actual remote tool use (Davoli et al., in press).
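The two regression equations above can be evaluated at each tested distance to show how the predicted gap between conditions grows. This sketch assumes x is the physical distance in meters, which the text implies but does not state; subtracting the equations gives a difference of 0.275x + 0.677 m, about 1.17 m at 1.8 m and about 9.06 m at 30.5 m.

```python
# Regression lines reported for Experiment 2, assuming x is physical distance in meters.
DISTANCES_M = [1.8, 5.2, 8.8, 13.4, 19.2, 22.6, 26.2, 30.5]


def pointing_fit(x):
    """Fitted mean estimate (m) in the observed pointing condition."""
    return 1.144 * x + 0.698


def interaction_fit(x):
    """Fitted mean estimate (m) in the observed interaction condition."""
    return 0.869 * x + 0.021


def fitted_gaps(distances):
    """Predicted pointing-minus-interaction difference at each distance (m)."""
    return [pointing_fit(d) - interaction_fit(d) for d in distances]


for d, gap in zip(DISTANCES_M, fitted_gaps(DISTANCES_M)):
    print(f"{d:5.1f} m: predicted difference {gap:.2f} m")
```

These are descriptive fits to the condition means; the inferential support for the interaction comes from the ANOVA and planned comparisons, not from this calculation.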

It is worth noting that although spatial compression was found during observed tool use in both Experiments 1 and 2, the pattern of compression appears to differ. In Experiment 1, compression manifests as a roughly constant underestimation across distances, whereas in Experiment 2, compression increases with target distance. This difference likely reflects the nature of spatial compression effects. Compression appears to be proportional to target distance, so a graded pattern can emerge only over a large range of distances. The restricted range in Experiment 1 makes the absolute size of a proportional reduction nearly identical across all distances, whereas the larger range in Experiment 2 allows the graded pattern to become apparent.
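To make the range argument concrete, the sketch below assumes a hypothetical fixed compression of 5% of physical distance (an illustrative value, not one estimated in either experiment) and computes the absolute underestimation at the nearest and farthest analyzed targets. Under that assumption the gap changes by only 1.5 cm across Experiment 1’s analyzed range (75 to 105 cm) but by more than 1.4 m across Experiment 2’s range (1.8 to 30.5 m).

```python
PROPORTION = 0.05  # hypothetical 5% compression, chosen only for illustration


def gap_spread(near_cm, far_cm, proportion=PROPORTION):
    """Absolute underestimation at the nearest and farthest targets, and their difference.

    Assumes estimates are reduced by a fixed proportion of physical distance.
    """
    near_gap = proportion * near_cm
    far_gap = proportion * far_cm
    return near_gap, far_gap, far_gap - near_gap


ranges_cm = {
    "Experiment 1 (75-105 cm analyzed)": (75, 105),
    "Experiment 2 (1.8-30.5 m)": (180, 3050),
}
for label, (near, far) in ranges_cm.items():
    near_gap, far_gap, spread = gap_spread(near, far)
    print(f"{label}: {near_gap:.2f} to {far_gap:.2f} cm (spread {spread:.2f} cm)")
```

The same proportional rule thus looks like a near-constant offset over a short range and like a widening gap over a long one.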

General discussion

Efficient perception of the actions of others is of fundamental importance for humans. For instance, it is through observation that we generate and refine our own motor vocabularies, from basic movements at the earliest stages of development (Meltzoff & Moore, 1977; Piaget, 1952) to socially relevant behaviors (Bandura, 1977; Reed & McIntosh, 2008) and complex new skills (Hayes, Ashford, & Bennett, 2008) throughout adulthood. Indeed, our visual system is well suited to the task of observing what others do: we are able to rapidly and accurately extract human motion from otherwise ambiguous environments (e.g., Blake & Shiffrar, 2007). In the present study, we found that participants who merely observed an actor interact with a target using a tool, either directly or remotely, showed the same pattern of spatial compression that has previously been found in actors. This demonstrates not only that we are expert processors of human action, but also that our own perception can be altered by observing such action.

Why might it be the case that observing an action can alter perception in the same way as performance? One possible explanation is that some brain regions that are active when performing an action are also active when observing it (Buccino, Binkofski, & Riggio, 2004; Chong, Cunnington, Williams, Kanwisher, & Mattingley, 2008). There are some reasons why such dual influence may be beneficial. One is that by forming representations of others’ actions, we are better able to understand their intentions (e.g., Brass & Heyes, 2005; Iacoboni, 2005). It has also been suggested that representing the actions of others during observation aids in the selection of appropriate actions for ourselves (Hickok & Hauser, 2010). Regardless of the purpose, the results of the present study are consistent with an account positing shared activation for both action and observation. The neural activity that leads to modulated distance perception for actors using a tool may be similar for participants merely observing tool use, causing spatial compression in both groups.

Another possible explanation for why observers showed similar perceptual effects to those expected among actors relates to the deployment of attention for both the actor and observer. Davoli et al. (in press) found spatial compression in participants performing remote interactions by shining a laser pointer on a distant target, and suggested that this may have occurred because participants engaged more attentional resources during the interaction. In order to interact successfully, participants likely needed to focus attention on the distant target, leading to a simultaneous altering of the perceptual attributes of the target. This alteration may arise due to a common coding of perception and action (Hommel, Müsseler, Aschersleben, & Prinz, 2001). According to this view, cognitive representations inform not only perception, but action planning as well. Thus, the ability to act on an object may also change the corresponding perception of that object. In the present studies, the observer did not need to interact with the targets him- or herself. However, people are known to coordinate the deployment of their own visual attention with that of a partner (Brennan et al., 2008; Richardson et al., 2007; Welsh et al., 2007) and represent a partner’s task even when it is unnecessary for completing their own task (Sebanz et al., 2003). This type of joint attention has been shown to lead to shared perceptual input (Sebanz et al., 2006). Thus, it is possible that if the actor in our experiments engaged more attentional resources during interaction, the observer may have done so as well. It remains to be seen to what extent attentional resources account for the mechanisms that underlie perceptual alterations during interaction.

In the present study, participants who were assigned to observe the actor never had any intention of performing the action themselves, and in fact they did not even need to attend to the actor to successfully complete their task. This eliminated two important reasons for the observer to form a shared representation with the actor: intention to perform the action and cooperation needed to complete the task. It has been shown that merely holding a tool without the intention to use it does not alter perception (Witt et al., 2005), and collaborative tasks cause participants to tailor their perception to their partner (Sebanz et al., 2006). In spite of this lack of cooperation and intention, observers still established a shared representation with the actors that showed effects at a perceptual level. This indicates that shared representations between an actor and observer are easily, and perhaps even automatically, established.

There is convincing evidence that humans understand the world in terms of action and interaction (e.g., Witt, 2011a). Watching someone perform an action not only provides information about the object of the action, but also informs the observer of what is necessary for that interaction to be successful. Additionally, as the present experiments have shown, observing an interaction can change the perception of an object, perhaps increasing the likelihood of a successful interaction by the observer in the future. These findings provide support for the notion that we not only learn about the world through our own physical experiences, but that we also have the ability to learn through the experiences of others.

References

Bandura, A. (1977). Social learning theory. Oxford: Prentice-Hall.

Brass, M., & Heyes, C. (2005). Imitation: Is cognitive neuroscience solving the correspondence problem? Trends in Cognitive Sciences, 9, 489–495.

Brennan, S. E., Chen, X., Dickinson, C. A., Neider, M. B., & Zelinsky, G. J. (2008). Coordinating cognition: The costs and benefits of shared gaze during collaborative search. Cognition, 106, 1465–1477.

Buccino, G., Binkofski, F., & Riggio, L. (2004). The mirror neuron system and action recognition. Brain and Language, 89, 370–376.

Chong, T. T.-J., Cunnington, R., Williams, M. A., Kanwisher, N., & Mattingley, J. B. (2008). fMRI adaptation reveals mirror neurons in human inferior parietal cortex. Current Biology, 18, 1576–1580.

Davoli, C. C., Brockmole, J. R., & Witt, J. K. (in press). Compressing perceived distance with remote tool-use: Real, imagined, and remembered. Journal of Experimental Psychology: Human Perception and Performance.

Friesen, C. K., & Kingstone, A. (1998). The eyes have it! Reflexive orienting is triggered by nonpredictive gaze. Psychonomic Bulletin & Review, 5, 490–495.

Gervais, W. M., Reed, C. L., Beall, P. M., & Roberts, R. J. (2010). Implied body action directs spatial attention. Attention, Perception, & Psychophysics, 72, 1437–1443.

Hayes, S. J., Ashford, D., & Bennett, S. J. (2008). Goal-directed imitation: The means to an end. Acta Psychologica, 127, 407–415.

Hickok, G., & Hauser, M. (2010). (Mis)understanding mirror neurons. Current Biology, 20, R593–R594.

Hommel, B., Colzato, L. S., & van den Wildenberg, W. P. M. (2009). How social are task representations? Psychological Science, 20, 794–798.

Hommel, B., Müsseler, J., Aschersleben, G., & Prinz, W. (2001). The Theory of Event Coding (TEC): A framework for perception and action planning. The Behavioral and Brain Sciences, 24, 849–937.

Iacoboni, M. (2005). Neural mechanisms of imitation. Current Opinion in Neurobiology, 15, 632–637.

Iacoboni, M., Molnar-Szakacs, I., Gallese, V., Buccino, G., Mazziotta, J. C., & Rizzolatti, G. (2005). Grasping the intentions of others with one's own mirror neuron system. PLoS Biology, 3(3), e79.

Langton, S. R. H., & Bruce, V. (2000). You must see the point: Automatic processing of cues to the direction of social attention. Journal of Experimental Psychology: Human Perception and Performance, 26, 747–757.

Langton, S. R. H., O'Malley, C., & Bruce, V. (1996). Actions speak no louder than words: Symmetrical cross-modal interference effects in the processing of verbal and gestural information. Journal of Experimental Psychology: Human Perception and Performance, 22, 1357–1375.

Meltzoff, A. N., & Moore, M. K. (1977). Imitation of facial and manual gestures by human neonates. Science, 198, 75–78.

Piaget, J. (1952). Play, dreams and imitation in childhood. New York: W. W. Norton & Co.

Reed, C. L., & McIntosh, D. N. (2008). The social dance: On-line body perception in the context of others. New York: Psychology Press.

Richardson, D. C., Dale, R., & Kirkham, N. Z. (2007). The art of conversation is coordination: Common ground and the coupling of eye movements during dialogue. Psychological Science, 18, 407–413.

Rizzolatti, G., & Craighero, L. (2004). The mirror-neuron system. Annual Review of Neuroscience, 27, 169–192.

Samson, D., Apperly, I. A., Braithwaite, J. J., Andrews, B. J., & Bodley Scott, S. E. (2010). Seeing it their way: Evidence for rapid and involuntary computation of what other people see. Journal of Experimental Psychology: Human Perception and Performance, 36, 1255–1266.

Sebanz, N., Bekkering, H., & Knoblich, G. (2006). Joint action: Bodies and minds moving together. Trends in Cognitive Sciences, 10, 71–76.

Sebanz, N., Knoblich, G., & Prinz, W. (2003). Representing others' actions: Just like one's own? Cognition, 88, B11–B21.

Vlainic, E., Liepelt, R., Colzato, L. S., Prinz, W., & Hommel, B. (2010). The virtual co-actor: The Social Simon effect does not rely on online feedback from the other. Frontiers in Psychology, 1, 208.

Welsh, T. N., Lyons, J., Weeks, D. J., Anson, J. G., Chua, R., Mendoza, J., & Elliott, D. (2007). Within- and between-nervous-system inhibition of return: Observation is as good as performance. Psychonomic Bulletin & Review, 14, 950–956.

Witt, J. K. (2011a). Action’s effect on perception. Current Directions in Psychological Science, 20, 201–206.

Witt, J. K. (2011b). Tool use influences perceived shape and parallelism: Indirect measures of perceived distance. Journal of Experimental Psychology: Human Perception and Performance, 37, 1148–1156.

Witt, J. K., & Proffitt, D. R. (2008). Action-specific influences on distance perception: A role for motor simulation. Journal of Experimental Psychology: Human Perception and Performance, 34, 1479–1492.

Witt, J. K., Proffitt, D. R., & Epstein, W. (2005). Tool use affects perceived distance, but only when you intend to use it. Journal of Experimental Psychology: Human Perception and Performance, 31, 880–888.

Additional information

Noam Roth is now affiliated with the Department of Neuroscience, University of Pennsylvania

We would like to thank Emily Ehrman, Bryan Golubski, and Sarah Wiesen for their help with data collection.

About this article

Cite this article

Bloesch, E.K., Davoli, C.C., Roth, N. et al. Watch this! Observed tool use affects perceived distance. Psychon Bull Rev 19, 177–183 (2012). https://doi.org/10.3758/s13423-011-0200-z

DOI: https://doi.org/10.3758/s13423-011-0200-z