Abstract

The story of information processing is a story of great success. Today's microprocessors are devices of unprecedented complexity, and MOSFET transistors are considered the most widely produced artifact in the history of mankind. The ongoing miniaturization of electronic circuits has been pushed almost to the physical limit and is beginning to suffer from various parasitic effects. These facts stimulate intense research on neuromimetic devices. This feature article is devoted to various in materio implementations of neuromimetic processes, including neuronal dynamics, synaptic plasticity, and higher-level signal and information processing, along with more sophisticated applications, including signal processing, speech recognition, and data security. Due to the vast number of papers in the field, only a subjective selection of topics is presented in this review.


1. Introduction

The story of information processing is a story of great success. Today's microprocessors are integrated circuits of unprecedented complexity, and MOSFET transistors are considered the most widely produced component in the history of mankind, with a total number of fabricated devices easily exceeding several sextillion (10²¹).1) A contemporary microprocessor contains approximately 3.95 × 10⁹ transistors.2) In humans, there are an estimated 10–20 billion neurons in the cerebral cortex and 55–70 billion neurons in the cerebellum.3) The human brain is, therefore, the most complex information processing structure, as each of its ca. 8 × 10¹⁰ neurons may form up to 10⁴ synaptic connections with other neurons. The brain's ability to learn and adapt is a consequence of its dynamically changing topology of synaptic connections, the plasticity of individual connections, high redundancy, and multilevel dynamics at various spatial and temporal scales.

These facts stimulate intense research on neuromimetic devices. At the present stage of development, their performance cannot be compared with that of natural systems, but they provide stimulation for other fields of investigation, including chemistry, physics, electronics, and computer science.

This feature article is devoted to various in materio implementations of neuromimetic processes, including neuronal dynamics, synaptic plasticity, and higher-level signal and information processing. From the plethora of in materio implementations of information processing, involving inorganic and organic materials, polymers, various molecular species, biopolymers, and even living organisms,4) we have chosen a handful of wet photochromic systems and semiconducting materials. This selection is by no means exhaustive, but it is sufficient to illustrate the main research directions as well as current trends in in materio neuromimetic computing.

2. Mimicking neural dynamics

Human intelligence emerges from the complex structural and dynamical properties of our nervous system. The primary cellular elements of our nervous system are neurons, and its ultimate computational power relies on the dynamical properties of neurons and their networks. Every neuron is a nonlinear dynamic system,5,6) and according to some theoretical analyses it can be regarded as a biological memristive element.7–9) Some neurons operate in the oscillatory regime. They are called pacemaker neurons and fire action potentials periodically. Pacemaker neurons generate rhythmic activity in neural networks in the neocortex, basal ganglia, thalamus, locus coeruleus, hypothalamus, ventral tegmental area, hippocampus, and amygdala.10) These structures are associated with sleep, wakefulness, arousal, motivation, addiction, memory consolidation, cognition, and fear.

Excitable neurons are another type of neuron present in the nervous system. Excitability can be of two kinds: "tonic" or "phasic". Neurons that react to a constant excitatory signal by firing a sequence of spikes are classified as "tonic". On the other hand, excitability is "phasic" when neurons react in an analog manner and fire only once upon receiving a sharp excitatory signal. Tonic excitable neurons are present, e.g., in the cortex, whereas phasic excitable neurons act, e.g., in the auditory brainstem (involved in precise timing computations) and in the spinal cord.11,12)

Finally, there are chaotic neurons. Chaotic neurons are quite common in the nervous system because the intrinsic dynamic instability facilitates the extraordinary ability of neural networks to adapt.6,13)

It is possible to mimic the dynamics of neurons by selecting specific chemical systems and maintaining them out-of-equilibrium.14) One of the most widespread examples is the Belousov–Zhabotinsky (BZ) reaction. Other popular models are memristive elements and circuits,7–9) as well as some (photo)electrochemical systems, especially those with self-excitable oscillations.15)

The dynamics of neural networks can be mimicked by reservoir computing systems: unconventional computational systems characterized by internal dynamics, fading memory, and the echo state property. They usually consist of four parts. The first is a nonlinear node (e.g. a memristor), which also provides memory. The second is an input layer, which feeds in the information or signal to be processed. The third is a delayed feedback loop, which provides the internal dynamics of the system. The fourth is a readout layer, which reflects the internal state of the reservoir and thus provides the echo state property. The readout layer is the only part of the system that is trained. Details on the theory, construction, and properties of reservoir systems can be found in numerous specialized papers and are beyond the scope of this review.16–21)
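
To make this division of labor concrete, the sketch below emulates a delay-based reservoir. A single tanh node is time-multiplexed into 20 virtual nodes, and only a linear readout is trained (by ridge regression) to recall the previous input. The update rule, the parameters a, b, c, the ±1 input mask, and the one-step memory task are illustrative assumptions. They do not model any device discussed in this review.

```python
import math
import random


def run_reservoir(inputs, n_nodes=20, a=0.5, b=0.2, c=0.3, seed=1):
    """Delay-based reservoir: one tanh node time-multiplexed into n_nodes virtual nodes.

    Assumed update rule (illustrative, not a model of a specific device):
        x_i(k) = tanh(a * x_i(k-1) + b * m_i * u(k) + c * x_{i-1}(k)),
    where the left neighbour of virtual node 0 is the last virtual node of the
    previous cycle, which closes the delayed feedback loop.
    """
    rng = random.Random(seed)
    mask = [rng.choice((-1.0, 1.0)) for _ in range(n_nodes)]  # fixed input mask m_i
    prev = [0.0] * n_nodes
    states = []
    for u in inputs:
        cur = []
        left = prev[-1]  # delayed feedback from the previous cycle
        for i in range(n_nodes):
            x = math.tanh(a * prev[i] + b * mask[i] * u + c * left)
            cur.append(x)
            left = x
        states.append(cur)
        prev = cur
    return states


def solve(matrix, rhs):
    """Gaussian elimination with partial pivoting (small dense systems only)."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        w[r] = (aug[r][n] - sum(aug[r][k] * w[k] for k in range(r + 1, n))) / aug[r][r]
    return w


def train_readout(states, targets, ridge=1e-6):
    """Ridge regression on [state, 1]; the readout is the only trained part."""
    feats = [s + [1.0] for s in states]
    d = len(feats[0])
    gram = [[sum(f[i] * f[j] for f in feats) + (ridge if i == j else 0.0)
             for j in range(d)] for i in range(d)]
    rhs = [sum(f[i] * y for f, y in zip(feats, targets)) for i in range(d)]
    return solve(gram, rhs)


def predict(w, states):
    return [sum(wi * fi for wi, fi in zip(w, s + [1.0])) for s in states]


def nmse(y_true, y_pred):
    """Mean squared error normalized by the variance of the target."""
    mean = sum(y_true) / len(y_true)
    var = sum((y - mean) ** 2 for y in y_true) / len(y_true)
    return sum((y - p) ** 2 for y, p in zip(y_true, y_pred)) / len(y_true) / var


# Assumed benchmark: reproduce the previous input u(k-1) from the state at step k.
rng = random.Random(0)
u = [rng.uniform(-0.5, 0.5) for _ in range(1200)]
states = run_reservoir(u)
washout, split = 50, 900
w = train_readout(states[washout:split], u[washout - 1:split - 1])
test_error = nmse(u[split - 1:-1], predict(w, states[split:]))
print(f"test NMSE = {test_error:.3f}")
```

The reservoir itself is never modified. The same states could therefore be reused to train readouts for other tasks, which is the practical advantage of confining learning to the readout layer.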

2.1. The case of the BZ reaction

The BZ reaction is a catalyzed oxidative bromination of malonic acid in aqueous acidic solution (1):

Various metal ions or metal complexes, such as cerium ions, ferroin [i.e. tris(1,10-phenanthroline)iron(II)], or tris(2,2'-bipyridyl)dichlororuthenium(II) (Ru(bpy)₃²⁺), can serve as the catalyst. The mechanism of the BZ reaction is quite complicated because it consists of many elementary steps. Briefly, when the concentration of the intermediate bromide (Br−) is higher than its critical value, the reaction proceeds through a set of elementary steps in which the catalyst remains in its reduced state, and the solution is red in the presence of ferroin. During these steps, bromide is consumed. As soon as the concentration of Br− falls below its critical value (which corresponds to 5 × 10⁻⁶ [BrO3−]), the reaction proceeds through another set of elementary steps involving one-electron transformations, and the catalyst goes from the reduced to the oxidized state. In the presence of the ferroin indicator, the solution becomes blue. When the concentration of the oxidized form of the catalyst becomes high, another set of reactions, in which bromide is produced, becomes important. As soon as the bromide concentration again exceeds its critical value, the solution switches from blue to red, and the cycle repeats. A BZ reaction in its reduced state is like a resting neuron in its hyperpolarized state. When the BZ reaction undergoes a small perturbation, it maintains its reduced state. On the other hand, when the perturbation is sufficiently strong, i.e. slightly above a critical threshold value, the reaction responds by moving temporarily to its oxidized state and then recovering its original reduced state. By a careful choice of the boundary conditions (i.e. concentrations of the reagents, temperature, and flow rate, if the reaction is performed in an open system), it is possible to set the BZ reaction in the oscillatory, the tonic excitable, or the chaotic regime.22) The BZ reaction in the oscillatory regime is a good model of real pacemaker cells.
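
The oscillatory regime can be explored numerically with the two-variable Oregonator of Tyson and Fife. This is a standard reduced model of the BZ reaction in which bromide is eliminated as a fast variable and enters only through the term f z (x − q)/(x + q). The choice of this model, its scaled parameters (f = 1, q = 0.002, ε = 0.04), the explicit Euler integrator, and the spike threshold are illustrative assumptions, not the conditions of the cited experiments. In the scaled variables, x tracks the autocatalytic intermediate HBrO2 and z the oxidized catalyst.

```python
def oregonator_spikes(f=1.0, q=0.002, eps=0.04, dt=1e-4, t_end=30.0,
                      x0=0.1, z0=0.1, threshold=0.3):
    """Integrate the two-variable Oregonator with explicit Euler; return spike times.

    eps * dx/dt = x(1 - x) - f z (x - q)/(x + q)   (x ~ scaled [HBrO2])
          dz/dt = x - z                             (z ~ scaled oxidized catalyst)
    A 'spike' is an upward crossing of x through `threshold`.
    """
    x, z = x0, z0
    spikes = []
    above = x > threshold
    for step in range(int(round(t_end / dt))):
        dx = (x * (1.0 - x) - f * z * (x - q) / (x + q)) / eps
        dz = x - z
        x += dt * dx
        z += dt * dz
        if x > threshold and not above:
            spikes.append((step + 1) * dt)  # onset of an oxidation burst
        above = x > threshold
    return spikes


spikes = oregonator_spikes()
periods = [t2 - t1 for t1, t2 in zip(spikes, spikes[1:])]
print(len(spikes), "spikes; periods:", [round(p, 2) for p in periods])
```

Each upward crossing of x marks a burst of catalyst oxidation (the blue phase when ferroin is present). The nearly constant interval between crossings plays the role of the pacemaker period T0.
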
Pacemaker cells have their own internal rhythm, but external stimuli can alter their timing. In pacemaker cells, information about a stimulus is encoded by changes in the timing of individual action potentials, and it is used to govern proprioception and motor coordination for running, swimming, and flying.23) Similarly to neurons, the timing of the BZ reaction in the oscillatory regime can be perturbed by both inhibitors and activators. Bromide is an example of an inhibitor. Ag+ is an example of an activator, or, more precisely, of an anti-inhibitor, because its addition removes bromide as an AgBr precipitate. The effect of injecting either Br− or Ag+ is immediate. If the reaction is carried out in an open system such as a continuous-flow stirred tank reactor (CSTR), the BZ reaction quickly restores its initial period, after one or a few more cycles. The CSTR guarantees the replenishment of fresh reagents and the removal of the products. The response of the system is phase-dependent, the phase of addition φ being defined as the ratio

$$\varphi = \frac{\tau}{T_0} \qquad (2)$$

In (2), τ is the "time delay", i.e. the time elapsed since the most recent spike, and T0 is the period of the preceding oscillations. The addition of Br− always delays the appearance of the next spike. In other words, ΔT = Tpert − T0 (Tpert is the period of the perturbed oscillation) is always positive. The higher the phase of addition of Br−, the larger ΔT.24) The addition of silver ions decreases the period, unless they are injected in small quantities and at a low phase, in which case they induce a slight lengthening of the period of oscillations.
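
Eq. (2) and the definition of ΔT translate directly into a few lines of code. The sketch below assumes a sorted list of spike times. It takes Tpert as the interval from the last spike before the injection to the first spike after it, which is our reading of "the period of the perturbed oscillation". The spike times are hypothetical numbers chosen to mimic a Br− injection that delays the next spike.

```python
def phase_and_delta_t(spike_times, t_add):
    """Return (phi, delta_T) for a perturbation injected at time t_add.

    phi     = tau / T0: tau = time since the last spike before t_add,
              T0 = interval between the two spikes preceding t_add.
    delta_T = T_pert - T0, with T_pert taken (assumption) as the interval from
              the last spike before t_add to the first spike after it.
    spike_times must be sorted in increasing order.
    """
    before = [t for t in spike_times if t <= t_add]
    after = [t for t in spike_times if t > t_add]
    if len(before) < 2 or not after:
        raise ValueError("need two spikes before and one spike after the addition")
    t0 = before[-1] - before[-2]
    tau = t_add - before[-1]
    t_pert = after[0] - before[-1]
    return tau / t0, t_pert - t0


# Hypothetical spike times (s) with T0 = 60 s; Br- injected at t = 150 s.
spikes = [0.0, 60.0, 120.0, 195.0, 255.0]
phi, delta_t = phase_and_delta_t(spikes, 150.0)
print(f"phase = {phi:.2f}, delta T = {delta_t:+.1f} s")  # phase = 0.50, delta T = +15.0 s
```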

Since the information within our brain is encoded as patterns of activity of neural networks, it is compelling to study the coupling between artificial neurons and the resulting dynamics. The coupling among real neurons takes place through discrete chemical pulses of neurotransmitters released at the synapses of one neuron and collected by the dendrites of other neurons. Therefore, it is useful to focus on pulse-coupled oscillators. Two pulse-coupled BZ oscillators, implemented in two physically separated CSTRs, may be viewed as the chemical analog of two pulse-coupled pacemaker cells. The dynamics of two such pseudo-neurons has been investigated under symmetrical inhibitory and excitatory coupling.25,26) The latency caused by the propagation of action potentials has been emulated by introducing a delay between the appearance of a spike and the release of a pseudo-neurotransmitter. Both symmetrical and asymmetrical coupling can give rise to many temporal patterns. For example, mutual and symmetrical inhibitory coupling generates anti-phase, in-phase, or irregular oscillations depending on the time delay and the concentration of the inhibitor. When τ is zero and [Br−] is large, oscillations are suppressed in one of the artificial neuron models, which is maintained in its reduced state. Mutual and symmetrical excitatory coupling also generates different dynamical regimes: the so-called master-and-slave condition, bursting behavior, fast anti-phase oscillations, and suppression of oscillations, with the suppressed oscillator maintained in its oxidized state. Further patterns have been achieved with mixed excitatory-inhibitory coupling and with the symmetrical coupling of two unequal BZ oscillators.27) All these dynamical patterns emulate the reasoning code of pairs of real neurons. However, the main drawback of these artificial systems is their hybrid nature: the chemical coupling is controlled by a silicon-based computer (see Fig. 1).
To contrive chemical oscillators that can couple autonomously, it is useful to focus on optical signals and photo-sensitive oscillators.

Fig. 1. (Color online) Scheme of a computer-controlled coupling between two BZ reactions performed in two distinct CSTRs.

2.2. Optical communication among artificial neuron models

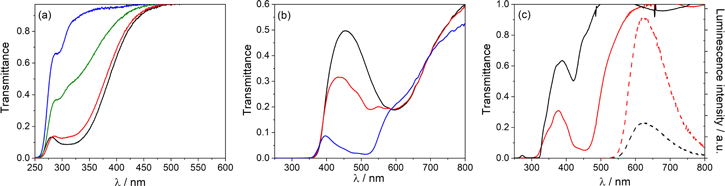

The oscillatory BZ reaction with cerium ions as catalysts gives rise to appreciable transmittance oscillations in the UV region of the electromagnetic spectrum [see Fig. 2(a)]. If UV radiation of constant intensity passes through the BZ reaction mixture, its transmitted intensity is modulated. In other words, the BZ reaction transmits a UV output whose intensity oscillates at the intrinsic frequency of the BZ reaction. When ferroin is introduced as either the catalyst or the redox indicator, large transmittance oscillations are recordable throughout the visible region of the spectrum [see Fig. 2(b)]. Therefore, the BZ reaction becomes suitable for transmitting a visible oscillatory signal. Transmittance oscillations in the visible spectrum and oscillatory red luminescence are recordable when Ru(bpy)₃²⁺ is chosen as the catalyst [see Fig. 2(c)].

Fig. 2. (Color online) Transmittance oscillations for the BZ reaction with cerium ions (a), with ferroin (b), and with Ru(bpy)₃²⁺ (c). The dashed spectra in (c) represent the oscillations in the red luminescence of [Ru(bpy)₃]²⁺.

The periodic UV or visible radiation transmitted or emitted by the BZ reaction can be sent to luminescent and photochromic compounds.28) Luminescent and thermally reversible photochromic compounds are good models of phasic excitable neurons. They respond to a steady excitatory signal with an analog output of emitted light or color saturation. Moreover, they "relax", i.e. they recover their initial state upon cessation of the excitatory signal. Suppose the BZ reaction transmits a periodic excitatory optical signal to a photo-excitable receiver, constituted by either a luminescent or a photochromic compound. In that case, a master-and-slave relationship is always established: the light emitted by the luminescent compound, or the color saturation generated by the photochromic compound, oscillates with the same frequency as the BZ reaction. The oscillations of the optical signals of the transmitter and the receiver are in phase or out of phase depending on the response rate of the "slave". If the slave is fast, the synchronization is in phase, whereas if it is slow, it is out of phase.
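
The dependence of the synchronization phase on the receiver's response rate can be illustrated with a deliberately simple model. Assume that the receiver's normalized emission or coloration A relaxes linearly toward the incoming intensity, dA/dt = k[I(t) − A]. For this first-order model the steady-state lag is atan(ω/k). A fast receiver (k ≫ ω) therefore follows the transmitter almost in phase, whereas a slow one lags by up to a quarter of a period. The linear kinetics, the 60 s period, and the two rate constants are hypothetical. Real photochromic and luminescent receivers have nonlinear, wavelength-dependent kinetics.

```python
import math


def receiver_phase_lag(k, period=60.0, dt=0.01, n_periods=10):
    """Lag (degrees) of a first-order receiver driven by a periodic optical signal.

    Assumed linear kinetics: dA/dt = k * (I(t) - A), where A is the receiver's
    normalized emission or coloration and k (1/s) its response rate.
    """
    omega = 2.0 * math.pi / period
    steps_per_period = int(round(period / dt))
    a = 0.0
    best_t, best_a = 0.0, -math.inf
    for n in range(steps_per_period * n_periods):
        t = n * dt
        intensity = 0.5 * (1.0 + math.sin(omega * t))  # transmitter output, peaks at T/4
        a += dt * k * (intensity - a)                  # explicit Euler step
        if n >= steps_per_period * (n_periods - 1) and a > best_a:
            best_t, best_a = t + dt, a                 # receiver maximum in the last cycle
    t_drive_peak = period * (n_periods - 1) + period / 4.0
    return (best_t - t_drive_peak) / period * 360.0


for k in (1.0, 0.02):  # fast vs slow receiver (hypothetical rates, 1/s)
    analytic = math.degrees(math.atan((2.0 * math.pi / 60.0) / k))
    print(f"k = {k}: simulated lag = {receiver_phase_lag(k):.1f} deg, "
          f"analytic atan(omega/k) = {analytic:.1f} deg")
```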

Photochromic materials find further applications in neuromimetic systems based on optical signals. Photo-reversible photochromic compounds allow memory effects to be implemented: for direct photo-reversible photochromes, UV and visible signals promote and inhibit their coloration, respectively. A photochromic compound can also receive excitatory optical signals at the bottom of a liquid column in which a vertical thermal gradient generates laminar or turbulent convective motions of the solvent. In this configuration it gives rise to a hydrodynamic photochemical oscillator that produces chaotic spectrofluorimetric signals.29–31) A hydrodynamic photochemical oscillator can mimic a chaotic neuron. If it sends its chaotic excitatory signal to a luminescent compound, the latter synchronizes in phase and emits an aperiodic fluorescence signal with the same chaotic features as the transmitter.32)

Finally, every photochromic compound that transforms from one form to the other under irradiation undergoes an intrinsic spectral evolution, which generates either positive or negative feedback actions. The optical feedback produced by every photochromic compound acts both on itself and on other photo-sensitive neuromimetic systems that are optically connected to it. Therefore, recurrent networks can be implemented by using photochromic compounds.28) The feedback actions of every photochrome are wavelength-dependent because its photo-excitability, which depends on the product εΦ, is also wavelength-dependent; here ε is its absorption coefficient and Φ is its photochemical quantum yield. Therefore, photochromic compounds allow the implementation of neuromodulation,33) i.e. the alteration of neuronal and synaptic properties in the context of neuronal circuits. Neuromodulation allows anatomically defined circuits to produce multiple outputs by reconfiguring networks into different functional circuits.34)

When photochromic and luminescent compounds are combined with luminescent oscillatory reactions, such as the chemiluminescent Orbán transformation, they even allow the implementation of feed-forward networks in which optical signals travel unidirectionally.28) The recurrent and feed-forward networks implemented so far with oscillatory and photo-excitable chemical systems consist of two or, at most, three nodes that communicate through an optical code. The UV and visible signals can exert both excitatory and inhibitory effects. Within the networks, in-phase, out-of-phase, anti-phase, and phase-locking synchronization phenomena have been observed, as occurs in networks of real neurons that communicate through the chemical code of neurotransmitters. Three communication architectures have been devised, labeled α, β, and γ. In the α architecture, the transmitter and the receiver are in the same cuvette and in the same phase, possibly with one component chemically protected by micelles. In the β architecture, the transmitter and the receiver are in the same cuvette but in two immiscible phases. In the γ architecture, the transmitter and the receiver are in two distinct cuvettes. The networks have been obtained by hybridizing or upgrading the α, β, and γ architectures.28) None of these optical communications needs an external source of information, such as computer software, to guide it. They are spontaneous and maintained out of equilibrium by chemical and electromagnetic energy.

2.3. An artificial neuron in a photoelectrochemical system

Various photoelectrochemical processes have been considered as a computational platform for quite a long time.35) The basis of these processes was the photoelectrochemical photocurrent switching (PEPS) effect.36,37) It has been observed in numerous surface-modified semiconductors38) as well as in highly defective bulk semiconductors such as cadmium sulfide,39) bismuth sulfoiodide40) and bismuth oxyiodide.41) The effect was utilized for the implementation of various binary logic gates, reconfigurable logic gates,42,43) combinatorial logic circuits44–47) and arithmetic systems.48) Later, PEPS-based devices were used to implement ternary logic functions,49) ternary combinatorial circuits50) and fuzzy logic systems (FLSs).38,50) The analysis of the PEPS effect and its applications was based solely on the thermodynamics of modified semiconducting materials, whereas the kinetic aspects of photocurrent generation were neglected. Taking kinetic factors into account leads to new switching phenomena with potential applications in information processing.51)

Detailed photoelectrochemical studies of a cadmium sulfide/multiwalled carbon nanotube (CdS-MWCNT) composite have revealed a peculiar dynamic behavior of this material when it is subjected to short pulses of light.52) Photoelectrodes prepared from this composite generate photocurrent pulses when subjected to a series of light pulses. The intensity of these pulses depends on the past illumination history of the photoelectrode: the second pulse yields a higher photocurrent when the interval between pulses is short enough. The response of the electrode to trains of pulses of various frequencies is shown in Fig. 3. Quite surprisingly, the dependence of the photocurrent spike intensity on the time interval between pulses is described by a bi-exponential equation, as in the case of living plastic neurons.53)

Fig. 3. (Color online) The response of the artificial synapse upon illumination (450 nm) with 2 s (a) and 50 ms (b) time intervals between light pulses. The plasticity of the studied synaptic system with the fit line described by Eq. (4) (c) is compared to the plasticity of hippocampal glutamatergic neurons in culture (d) (data are taken from Ref. 53). Reproduced from Ref. 52 with permission from Wiley.

Hebbian learning54) is based on the plastic properties of synapses. Synaptic plasticity is the process that strengthens or weakens the connection between neurons as a consequence of the time sequence of firing events. It has been figuratively described as "neurons wire together if they fire together".55) This process is usually described by the bi-exponential Eq. (3):56,57)

$$\Delta w(\Delta t) = \begin{cases} A_{+}\,\exp(-\Delta t/\tau_{+}), & \Delta t > 0 \\ -A_{-}\,\exp(\Delta t/\tau_{-}), & \Delta t < 0 \end{cases} \qquad (3)$$

where Δt is the time interval between post- and presynaptic signals, τ± are the time constants, and A± are parameters determined by the synaptic weights. The upper part of the formula (the Δt > 0 case) describes the potentiation mode, i.e. an increase of synaptic weight as the time interval between events decreases. The lower one (the Δt < 0 case) describes the depression mode. In the case described here, only the potentiation mode has been observed; therefore, the response function can be simplified to (4):52)

where α1,2 and T1,2 are fitting parameters, while β is only a scale factor, which is irrelevant for the data interpretation. The result of the fitting procedure is presented in Fig. 3(c). A good match was found (χ² ≈ 1.1 × 10⁻³) with the following set of fitting parameters: α1 = 3.014 ± 0.034, α2 = 4.80 ± 0.21, T1 = 116.4 ± 3.7 ms, T2 = 6.88 ± 0.31 ms, and β = 1.722 ± 0.024. It is noteworthy that these time constants are comparable to those obtained for biological structures.56,58)
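
The piecewise-exponential spike-timing-dependent plasticity (STDP) rule introduced with Eq. (3) can be written as a small function. In this sketch Δt = t_post − t_pre: positive intervals give potentiation and negative intervals give depression. The amplitudes A± and time constants τ± are illustrative defaults, not the fitted values reported above. The Δt = 0 case is set to zero by a convention chosen here.

```python
import math


def stdp_weight_change(delta_t_ms, a_plus=1.0, a_minus=0.5,
                       tau_plus_ms=20.0, tau_minus_ms=20.0):
    """Pair-based STDP window with the piecewise-exponential form of Eq. (3).

    delta_t_ms = t_post - t_pre (ms). Positive -> potentiation, negative -> depression.
    Default amplitudes and time constants are illustrative, not fitted values.
    """
    if delta_t_ms > 0:
        return a_plus * math.exp(-delta_t_ms / tau_plus_ms)    # potentiation branch
    if delta_t_ms < 0:
        return -a_minus * math.exp(delta_t_ms / tau_minus_ms)  # depression branch
    return 0.0  # simultaneous spikes: convention chosen for this sketch


for dt in (-40, -10, 10, 40):
    print(f"dt = {dt:+d} ms -> dw = {stdp_weight_change(dt):+.3f}")
```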

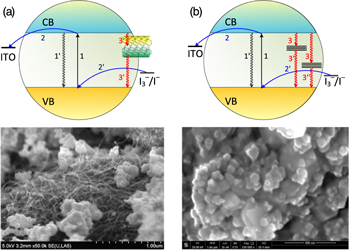

The mechanism of the plastic processes observed in the CdS-MWCNT composite is relatively simple. Photoexcitation of the material within the fundamental absorption band of cadmium sulfide results in the promotion of electrons from the valence band to the conduction band (process 1 in Fig. 4). Some electrons may undergo thermal relaxation to the valence band (1'). The observed photocurrent is a consequence of interfacial electron transfer to the conducting substrate (2), accompanied by the redox reaction with the redox mediator (iodide anions in the studied case) present in the electrolyte (2'). In the presence of carbon nanotubes, which act as additional electron traps, trapping (3) competes with photocurrent generation (2). The recombination process (3') must be significantly slower, so that the lifetime of trapped electrons is of the order of tenths of a second. Partial filling of the nanotube-related trap states results in increased photocurrent intensities.
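
The trapping mechanism can be caricatured with a toy model of paired-pulse facilitation. In this model, empty nanotube-related traps divert part of the photogenerated electrons (process 3), each light pulse fills some of them, and they empty slowly between pulses (process 3'). The fill fraction, the competition factor, and the single 0.2 s trap lifetime are hypothetical values, chosen only to be consistent with the "tenths of a second" scale mentioned above. This is not the authors' quantitative model.

```python
import math


def paired_pulse_ratio(interval_s, fill=0.3, competition=0.5, tau_trap_s=0.2):
    """Toy trap-filling model of paired-pulse facilitation (all parameters hypothetical).

    - Empty traps divert photogenerated electrons (process 3), so the
      photocurrent is taken as 1 - competition * (1 - occupancy).
    - Each light pulse fills a fraction `fill` of the empty traps.
    - Trapped electrons recombine slowly (process 3') with lifetime tau_trap_s.
    Returns I2 / I1, the photocurrent of the second pulse relative to the first.
    """
    occupancy = 0.0                                   # traps empty before pulse 1
    i_first = 1.0 - competition * (1.0 - occupancy)
    occupancy += fill * (1.0 - occupancy)             # trapping during pulse 1
    occupancy *= math.exp(-interval_s / tau_trap_s)   # slow de-trapping between pulses
    i_second = 1.0 - competition * (1.0 - occupancy)
    return i_second / i_first


for interval in (0.05, 0.2, 0.5, 2.0):
    print(f"interval {interval:>4} s -> I2/I1 = {paired_pulse_ratio(interval):.3f}")
```

With a single trap lifetime, the facilitation decays mono-exponentially with the pulse interval. Reproducing the bi-exponential dependence of the real electrodes would require at least two trap populations with different lifetimes.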

Fig. 4. (Color online) The mechanism responsible for the synaptic behavior of the CdS/MWCNT-based device (a) and hexagonal/tetragonal CdS mixtures (b) along with SEM images of studied materials. Adapted from Refs. 52, 59.

Download figure:

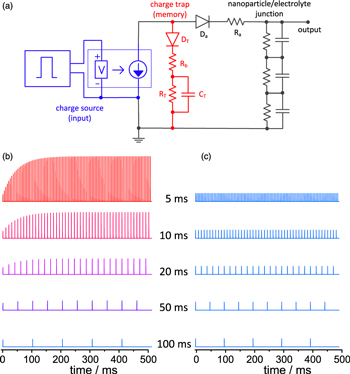

Standard image High-resolution imageThe SPICE model [Fig. 5(a)] proves the correctness of the proposed mechanism. Photoelectrode based on the CdS-MWCNT material was modeled by three RC loops, which simulate the behavior of a nanoparticulate electrode as suggested in previous reports.37,60) The nanotube trapping site was modeled by an additional RTCT loop. The capacitance of this part was set to be 50 times larger (500 nF) than the ones used in the other RC loops. This choice is justified by a very high electric capacitance of carbon nanotubes.61) The equivalent circuit contains also other elements: Ra describes the ohmic resistance of the electrolyte, whereas Rb is the electron transfer resistance of the CdS-MWCNT junction. Two diodes in the circuit represent Schottky junctions between conducting support and the material (Da) and between carbon nanotubes and semiconductor nanoparticles (DT). These diodes provide unidirectional electron transfer from the conduction band to the conducting substrate (Da) and from the conduction band to the nanotube traps (DT).

Fig. 5. (Color online) The equivalent electronic circuit employed to describe the ITO/MWCNTs/CdS photoelectrodes (a) and the simulated time-dependent response of the equivalent circuit to the pulsed stimulation characterized by various repetition intervals. Two cases were taken into consideration: in the first one the branch responsible for charge trapping/de-trapping events (related to the presence of MWCNTs) was introduced (b) and in the second one it was removed (c). Reproduced from Ref. 52 with permission from Wiley.

The application of charge pulses results in trains of output potential with characteristics very close to the experimental ones [cf. Fig. 5(b)]. Removal of the charge-trapping subcircuit (red in Fig. 5) results in the disappearance of the learning effect [Fig. 5(c)]. Very recently, it was found that similar effects can be observed in other highly defective nanocrystalline CdS modifications. Very similar photoelectrochemical neuromimetic devices were based on a 1:1 mixture of hexagonal and tetragonal polymorphs of cadmium sulfide [Fig. 4(b)]. In this particular case, the bi-exponential learning curve was also observed (χ² = 6.23 × 10⁻⁵), but the fitting parameters were significantly different (α1 = 0.218 ± 0.013, α2 = 0.339 ± 0.014, T1 = 167 ± 23 ms, T2 = 20 ± 4 ms, and β = 1.00 ± 0.01).59)

3. Processing fuzzy logic with molecules

Human intelligence has the remarkable power of handling both accurate and vague information. Vague information is coded through the words of our natural languages. We are able to reason, speak, discuss, and make rational decisions without any quantitative measurement or numerical computation, in an environment of uncertainty, partiality, and relativity of truth. A major, still open challenge is the design of neuromimetic devices that share this capability of human intelligence to compute with words.62) One approach that can offer a satisfactory approximation is based on fuzzy logic models.

3.1. Some key concepts of fuzzy logic

Fuzzy logic has been defined as a rigorous logic of vague and approximate reasoning.63) It is based on the theory of fuzzy sets proposed by the engineer Lotfi Zadeh in 1965.64) A fuzzy set differs from a classical Boolean set because it breaks the law of the excluded middle: an item may belong to a fuzzy set and to its complement at the same time, with the same or different degrees of membership. The degree of membership (μ) of an element to a fuzzy set can be any number between 0 and 1. It follows that fuzzy logic is an infinite-valued logic. Fuzzy logic can be used to describe any nonlinear cause-and-effect relation by building an FLS. The construction of an FLS requires three fundamental steps. The first step is the granulation of all the variables into fuzzy sets; the number, position, and shape of the fuzzy sets are context-dependent. The second step is the graduation of all the variables: each fuzzy set is labeled by a linguistic variable, often an adjective. The third step is the description of the relations between input and output variables through syllogistic statements of the type "If..., Then...", which are called fuzzy rules.
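
A short numerical check shows how a fuzzy set breaks the classical laws. The sketch assumes a hypothetical triangular fuzzy set "warm" on a temperature axis (10–30 °C, peak at 20 °C), together with the standard complement 1 − μ and the min/max operators for AND/OR. Under these assumptions, a temperature of 17 °C is warm to degree 0.7 and not warm to degree 0.3.

```python
def triangular(x, left, peak, right):
    """Triangular membership function (0 outside [left, right], 1 at peak)."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fuzzy_not(mu):
    return 1.0 - mu  # standard (Zadeh) complement


# Hypothetical fuzzy set "warm" on a temperature axis (deg C).
mu_warm = triangular(17.0, 10.0, 20.0, 30.0)  # degree of membership to "warm"
mu_not_warm = fuzzy_not(mu_warm)               # degree of membership to "not warm"
both = min(mu_warm, mu_not_warm)               # AND (min): "warm and not warm"
either = max(mu_warm, mu_not_warm)             # OR (max): "warm or not warm"
print(mu_warm, mu_not_warm, both, either)
```

In Boolean logic, "warm AND not warm" would be 0 and "warm OR not warm" would be 1. Here they evaluate to about 0.3 and 0.7.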

The "If..." part is called the antecedent and involves the labels chosen for the input fuzzy sets. The "Then..." part is called the consequent and involves the labels chosen for the output fuzzy sets. When there are multiple inputs, they are connected through the AND, OR, and NOT operators.65) In formulating the fuzzy rules, all possible scenarios must be considered, i.e. all possible combinations of input fuzzy sets. At the end of this three-step procedure, an FLS is built; it is a predictive tool or a decision support system for the particular phenomenon it describes.

Every FLS consists of three components: a fuzzifier, a fuzzy inference engine, and a defuzzifier. The fuzzifier is based on the partition of all the input variables into fuzzy sets, and it transforms the crisp values of the input variables into degrees of membership to the input fuzzy sets. The fuzzy inference engine is based on the fuzzy rules; it activates all the rules that involve the fuzzy sets activated by the crisp input values. Finally, the defuzzifier is based on the fuzzy sets of the output variables, and it transforms the collection of output fuzzy sets activated by the rules into crisp output values. Fuzzy logic is a good model of the human ability to compute with words because there are structural and functional analogies between any FLS and the human nervous system (HNS).66)
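
The three components of an FLS map onto three small functions. The sketch below is a Mamdani-type system, one common design chosen here for illustration. Two hypothetical inputs, normalized to [0, 1], are each granulated into LOW and HIGH, and the rule base covers all four input combinations with AND implemented as min. Consequents are clipped and aggregated with max, and the defuzzifier returns the centroid of the aggregated output set. The variable names, set shapes, and rules are assumptions, not taken from a specific molecular implementation.

```python
def triangular(x, left, peak, right):
    """Triangular membership function (0 outside [left, right], 1 at peak)."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fuzzify(x):
    """Fuzzifier: a normalized input in [0, 1] granulated into LOW and HIGH."""
    x = min(1.0, max(0.0, x))
    return {"LOW": 1.0 - x, "HIGH": x}


# Output universe [0, 10] granulated into three triangular fuzzy sets.
OUTPUT_SETS = {"LOW": (-5.0, 0.0, 5.0), "MEDIUM": (0.0, 5.0, 10.0), "HIGH": (5.0, 10.0, 15.0)}

# Rule base covering every input combination: If x1 is A AND x2 is B, Then y is C.
RULES = [
    (("LOW", "LOW"), "LOW"),
    (("LOW", "HIGH"), "MEDIUM"),
    (("HIGH", "LOW"), "MEDIUM"),
    (("HIGH", "HIGH"), "HIGH"),
]


def infer(x1, x2):
    """Inference engine: firing strength per consequent (AND = min, merged by max)."""
    m1, m2 = fuzzify(x1), fuzzify(x2)
    strengths = {}
    for (a, b), consequent in RULES:
        firing = min(m1[a], m2[b])
        strengths[consequent] = max(strengths.get(consequent, 0.0), firing)
    return strengths


def defuzzify(strengths, lo=0.0, hi=10.0, n=1001):
    """Defuzzifier: centroid of the aggregated (max of clipped) output sets."""
    num = den = 0.0
    for i in range(n):
        y = lo + (hi - lo) * i / (n - 1)
        mu = max(min(s, triangular(y, *OUTPUT_SETS[label])) for label, s in strengths.items())
        num += y * mu
        den += mu
    return num / den if den > 0.0 else 0.5 * (lo + hi)


for inputs in [(0.0, 1.0), (0.5, 0.5), (1.0, 1.0)]:
    print(inputs, "->", round(defuzzify(infer(*inputs)), 3))
```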

3.2. The fuzziness of the HNS

The HNS comprises three elements: (I) the sensory system; (II) the central nervous system; (III) the effector system. The sensory system captures physical and chemical signals and transduces them into electrochemical information that is sent to the brain. In the brain, information is integrated, stored, and processed. The outputs of the cerebral computations are electrochemical commands sent to the components of the effector system, i.e. glands and muscles.67) The sensory system includes the visual, auditory, somatosensory, olfactory, and gustatory subsystems. Each sensory subsystem encodes four features of a stimulus: modality, intensity, time evolution, and spatial distribution.

The power of distinguishing these features derives from the hierarchical structure of every sensory system. In fact, for each sensory system, we have, at the smallest level, a collection of distinct molecular switches. At an upper level, we have a set of distinct sensory cells: each cell contains many replicas of a specific molecular switch. Finally, at the highest level, we have many replicas of the different receptor cells that are organized in a tissue whose structure depends on the architecture of the sensory organ. For instance, in the case of the visual system, we have four types of photoreceptor proteins (each one absorbing a specific portion of the visible spectrum); four types of cells (one rod and three types of cones), each one having many replicas of one of the four types of photoreceptor proteins. Finally, we have many replicas of the different cells disposed on a photo-sensitive tissue, the retina, with the rods spread on the periphery, and the cones concentrated in the fovea.

Consequently, the information of a stimulus is encoded hierarchically. The collection of four types of photoreceptor proteins acts as an ensemble of four distinct molecular fuzzy sets. The information regarding the modality of the stimulus is encoded as degrees of membership of the stimulus to the four molecular fuzzy sets; that is, it is encoded as fuzzy information at the molecular level. The four types of cells act as cellular fuzzy sets. The information regarding the intensity is encoded as degrees of membership of the stimulus to the cellular fuzzy sets, that is, as fuzzy information at the cellular level. Finally, the array of the many replicas of the receptor cells acts as an array of cellular fuzzy sets, and the information regarding the spatial distribution of the stimulus is encoded as degrees of membership to the array of cells, that is, as fuzzy information at the organ level. The total information of the stimulus is the composition of the fuzzy information encoded at the three levels. For instance, in the case of the photoreceptor system, the total information is a matrix of data reproducing the array of cells on the retina. Each element of the matrix is the product of two terms: the fuzzy information encoded at the molecular level times that encoded at the cellular level.
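The hierarchical encoding described above can be sketched numerically. This is a minimal illustration under stated assumptions, not a model from the cited work: the four absorption bands are taken as Gaussians with illustrative peak wavelengths and a common width, the cellular response to intensity is taken as a hyperbolic saturation, and the receptor array is a tiny hypothetical grid. Each matrix element is the product of the molecular-level and cellular-level memberships, as stated in the text.

```python
import math

# Illustrative (assumed) absorption peaks in nm: S cone, rod, M cone, L cone.
PEAKS = {"S": 420.0, "rod": 498.0, "M": 534.0, "L": 564.0}
BAND_WIDTH = 40.0  # assumed common band width (nm)


def molecular_membership(wavelength):
    """Degree of membership of a monochromatic stimulus to each absorption band."""
    return {name: math.exp(-((wavelength - peak) / BAND_WIDTH) ** 2)
            for name, peak in PEAKS.items()}


def cellular_membership(intensity, half_saturation=1.0):
    """Saturating (hyperbolic) response of a receptor cell to stimulus intensity."""
    return intensity / (intensity + half_saturation)


def organ_matrix(wavelength, intensity_map, cell_type):
    """Organ-level matrix for one cell type over a small receptor array.

    Each element is the molecular-level membership times the cellular-level membership.
    """
    mu_mol = molecular_membership(wavelength)[cell_type]
    return [[mu_mol * cellular_membership(i) for i in row] for row in intensity_map]


if __name__ == "__main__":
    stimulus = [[0.0, 1.0], [3.0, 9.0]]  # hypothetical intensity pattern
    for cell in PEAKS:
        print(cell, organ_matrix(550.0, stimulus, cell))
```

Replacing the Gaussian bands with measured absorption spectra would change the numbers but not the structure of the computation.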

The sensory cells produce graded potentials, which are analog signals. The information carried by such signals is usually converted into the firing rate of trains of action potentials. Often, the action potentials are produced by an architecture of afferent neurons that integrate the information regarding the spatial distribution of the stimuli. Every afferent neuron has a receptive field that works as a fuzzy set encompassing specific receptor cells.68) The action potentials generated by the afferent neurons are the ideal code for sending the information up to the brain. In the cerebral cortex, various areas have different intrinsic rhythms.69) They form a neural dynamic space partitioned into overlapping cortical fuzzy compartments. Such cortical fuzzy sets are activated to different degrees by separate attributes of the perceptions and produce a meaningful experience of the external and internal worlds.

3.3. The best strategies to implement FLSs

In electronics, the best implementations of FLSs have been achieved with analog circuits, although fuzzy logic is routinely processed in digital electronic circuits. More recently, fuzzy logic has also been processed by using molecules, macromolecules, and chemical transformations. All the methods proposed for processing fuzzy logic can be sorted into three main strategies.68) The first strategy imitates the "fuzzy parallelism" of the sensory subsystems described in the previous section. The second exploits the "conformational fuzziness" of molecules and macromolecules that exist as ensembles of conformers whose distribution is context-dependent. The third relies on "quantum fuzziness": when large numbers of molecules are involved, the decoherence of overlapping quantum states gives rise to continuous, smooth, analog input-output relationships between macroscopic variables. Examples of the three strategies are described in the following sections.

3.3.1. The "fuzzy parallelism" of the biologically inspired photochromic FLSs

As we have seen in Sect. 3.2, the human visual system relies on four photoreceptor proteins: three for daytime color vision and one for night vision in black and white. All of them have 11-cis retinal as the chromophore. However, the four photoreceptors have different absorption spectra in the visible region, because they differ in their amino acid composition. The absorption spectra of the four photoreceptor proteins behave as "molecular fuzzy sets". The spectral composition of a light stimulus is encoded as degrees of membership of the light to these "molecular fuzzy sets". Moreover, the millions of replicas of each photoreceptor protein within every photoreceptor cell allow the intensity of the signal at every wavelength to be determined. Imitating the way we distinguish colors has allowed the design of chemical systems that extend human vision to the UV.70) Such chemical systems are based on direct thermally reversible photochromic compounds, which usually absorb only in the UV. Biologically inspired photochromic fuzzy logic (BIPFUL) systems, which extend the human ability to distinguish electromagnetic frequencies to the UV region, have been generated by mixing direct photochromic compounds according to the following criteria. First, the absorption bands of the closed uncolored (Un) forms were assumed to be input fuzzy sets. Second, the absorption bands of the open colored (Col) forms were assumed to be output fuzzy sets. Third, the degree of membership $\mu_i$ of UV radiation having intensity $I_{0,\lambda_{\mathrm{irr}}}$ at the wavelength $\lambda_{\mathrm{irr}}$ to the absorption band of the ith compound is given by Eq. (5):

$$\mu_i = \Phi_{\mathrm{col},i}\, I_{0,\lambda_{\mathrm{irr}}}\, \varepsilon_{\lambda_{\mathrm{irr}},i}\, C_{0,i} \tag{5}$$

In Eq. (5), $\Phi_{\mathrm{col},i}$ is the photochemical quantum yield of photocoloration, $\varepsilon_{\lambda_{\mathrm{irr}},i}$ the absorption coefficient at $\lambda_{\mathrm{irr}}$ of the ith photochromic species, and $C_{0,i}$ its analytical concentration. Finally, the activation of the ith output fuzzy set is expressed by Eq. (6):

$$A_{\lambda_{\mathrm{vis}},i} = \frac{\varepsilon^{\mathrm{Col}}_{\lambda_{\mathrm{vis}},i}\, \mu_i}{k_{\Delta,i}} \tag{6}$$

In Eq. (6), $A_{\lambda_{\mathrm{vis}},i}$ is the absorbance at the wavelength $\lambda_{\mathrm{vis}}$ in the visible region due to the colored form of the ith photochromic species, $\varepsilon^{\mathrm{Col}}_{\lambda_{\mathrm{vis}},i}$ is its absorption coefficient, $\mu_i$ is the degree of membership given by Eq. (5), and $k_{\Delta,i}$ is the rate constant of its bleaching reaction. Each absorption spectrum recorded at the photostationary state is the sum of as many terms of the form of Eq. (6) as there are photochromic components in the BIPFUL system. BIPFUL systems consisting of three to five photochromic compounds have been proposed.71) They allow the three regions of the UV spectrum, UV-A, UV-B, and UV-C, to be discriminated because wavelengths belonging to the three UV regions produce distinct colors.
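The roles of the input and output fuzzy sets in a BIPFUL system can be illustrated with a small simulation. The sketch assumes the optically thin limit with unit path length, takes the input activation of species i as Φ·I0·ε(λirr)·C0, and takes the photostationary absorbance of its colored form as ε_col(λvis)·μi/ki, summed over all species. The three components, their Gaussian band shapes, and all numerical parameters are hypothetical, chosen only so that each UV region maps onto a distinct visible band.

```python
import math


def gaussian(x, center, width):
    return math.exp(-((x - center) / width) ** 2)


def make_component(uv_center, vis_center, eps_uv=1.0e4, eps_col=2.0e4,
                   phi=0.3, c0=1.0e-4, k_bleach=0.05):
    """Hypothetical photochromic component; every number here is illustrative."""
    return {"uv": uv_center, "vis": vis_center, "eps_uv": eps_uv,
            "eps_col": eps_col, "phi": phi, "c0": c0, "k": k_bleach}


# Assumed ternary mixture: one component per UV region, each with a distinct color.
MIXTURE = [make_component(360.0, 620.0),   # responds mainly to UV-A
           make_component(300.0, 550.0),   # responds mainly to UV-B
           make_component(260.0, 450.0)]   # responds mainly to UV-C
UV_WIDTH, VIS_WIDTH = 15.0, 50.0


def input_membership(comp, wl_irr, intensity):
    """Optically thin limit: coloration rate Phi * I0 * eps(lambda_irr) * C0."""
    eps = comp["eps_uv"] * gaussian(wl_irr, comp["uv"], UV_WIDTH)
    return comp["phi"] * intensity * eps * comp["c0"]


def pss_spectrum(wl_irr, intensity, vis_grid):
    """Photostationary absorbance: sum over species of eps_col(lambda_vis) * mu_i / k_i."""
    spectrum = []
    for wl in vis_grid:
        total = 0.0
        for comp in MIXTURE:
            eps_col = comp["eps_col"] * gaussian(wl, comp["vis"], VIS_WIDTH)
            total += eps_col * input_membership(comp, wl_irr, intensity) / comp["k"]
        spectrum.append(total)
    return spectrum


def dominant_color_nm(wl_irr, intensity=1.0e-6, vis_grid=range(400, 701, 5)):
    """Visible wavelength at which the photostationary spectrum peaks."""
    grid = list(vis_grid)
    spectrum = pss_spectrum(wl_irr, intensity, grid)
    return grid[spectrum.index(max(spectrum))]


if __name__ == "__main__":
    for wl in (360.0, 300.0, 260.0):
        print(wl, "nm ->", dominant_color_nm(wl), "nm")
```

Because the output spectrum is a linear superposition of per-species terms, adding a fourth or fifth component only means appending to `MIXTURE`.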

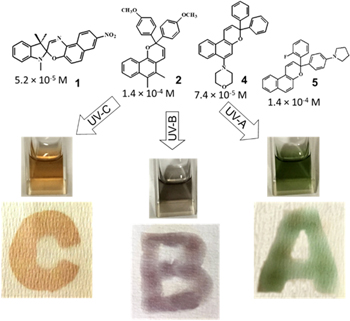

An example of a quaternary BIPFUL system is shown in Fig. 6. It consists of four direct thermally reversible photochromic compounds (labeled 1, 2, 3, and 5).70) It turns green, gray, and orange when irradiated with frequencies belonging to the UV-A, UV-B, and UV-C regions, respectively. It works both in the liquid phase (the concentrations of the species involved are reported in Fig. 6) and on white cellulose paper.

Fig. 6. (Color online) A quaternary BIPFUL system constituted by the direct thermally reversible photochromic compounds 1, 2, 4, and 5; this BIPFUL system turns green, gray, and orange when irradiated with frequencies belonging to the UV-A, UV-B, and UV-C regions, respectively. Its sensory activity works both in the liquid phase and on white paper made of cellulose.

The imitation of other sensory subsystems, described as hierarchical fuzzy systems in which distinct molecular and cellular fuzzy sets work in parallel, will allow the design of new artificial sensory systems. These systems will be able to extract the essential features of stimuli and will contribute to the recognition of variable patterns.

3.3.2. The fuzziness of conformers

Every molecular or macromolecular compound that exists as an ensemble of conformers works as a fuzzy set.68,72) The types and amounts of the different conformers depend on the physical and chemical context. Every compound is like a word in natural language, whose meaning is context-dependent. Conformational dynamism and heterogeneity enable context-specific functions to emerge in response to changing environmental conditions and allow the same compound to be used in multiple settings. The fuzziness of every compound can be quantified by determining its fuzzy entropy, Eq. (7):

$$H = -\frac{1}{n}\sum_{i=1}^{n}\left[w_i \ln w_i + (1 - w_i)\ln(1 - w_i)\right] \tag{7}$$

where n is the number of conformers and $w_i$ is the relative weight of the ith conformer.

The fuzziness of a macromolecule is usually more pronounced than that of a simpler molecule. Among proteins, those that are completely or partially disordered are the fuzziest.73) Their remarkable fuzziness makes them multifunctional and suited to moonlighting, i.e., playing distinct roles depending on their context.74)
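The fuzzy entropy of a conformer ensemble can be evaluated directly from the conformer weights. The sketch below assumes a De Luca–Termini-type expression normalized by 1/n (an assumption about the exact form), with 0 ln 0 taken as 0. It vanishes for a single fully populated conformer, and each term is largest when its weight equals 0.5.

```python
import math


def fuzzy_entropy(weights):
    """Fuzzy entropy of a conformer ensemble (assumed De Luca-Termini form).

    weights: relative weights w_i of the n conformers, each between 0 and 1.
    Returns -(1/n) * sum[w ln w + (1 - w) ln(1 - w)], with 0 ln 0 taken as 0.
    """
    def xlogx(x):
        return x * math.log(x) if x > 0.0 else 0.0

    n = len(weights)
    return -sum(xlogx(w) + xlogx(1.0 - w) for w in weights) / n


if __name__ == "__main__":
    print(fuzzy_entropy([1.0]))        # a single conformer: no fuzziness
    print(fuzzy_entropy([0.9, 0.1]))   # one dominant conformer
    print(fuzzy_entropy([0.5, 0.5]))   # two equally populated conformers
```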

3.3.3. From quantum to fuzzy logic

The elementary unit of quantum information is the qubit. The qubit, $|\psi\rangle$, is a quantum system that has two accessible states, labeled $|0\rangle$ and $|1\rangle$, and it exists as a superposition of them, Eq. (8):

$$|\psi\rangle = a|0\rangle + b|1\rangle \tag{8}$$

In Eq. (8), a and b are complex numbers that satisfy the normalization condition $|a|^2 + |b|^2 = 1$. The two states, $|0\rangle$ and $|1\rangle$, work as two fuzzy sets. The $|\psi\rangle$ state belongs to both $|0\rangle$ and $|1\rangle$ with degrees $|a|^2$ and $|b|^2$, respectively. Any logic operation on a qubit manipulates both states simultaneously. If a molecular system is a superposition of n qubits, any operation on it manipulates $2^n$ states simultaneously. This is the alluring parallelism of quantum logic. However, deleterious interactions between the quantum system and the surrounding environment can cause decoherence of the quantum states.75) Decoherence induces the collapse of any qubit into one of its two accessible states, either $|0\rangle$ or $|1\rangle$, with probabilities $|a|^2$ and $|b|^2$, respectively.
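The link between the superposition amplitudes and the outcome of decoherence can be illustrated by sampling. The sketch assumes an ensemble of independent, identically prepared qubits, each collapsing into |1⟩ with probability |b|²; the fraction found in |1⟩ then approaches |b|² as the ensemble grows, which is how a large population turns discrete collapses into a continuous macroscopic variable. The function name and the fixed random seed are illustrative choices.

```python
import random


def collapse_fraction(a, b, n_molecules, seed=0):
    """Fraction of n independent qubits a|0> + b|1> found in |1> after decoherence."""
    if abs(abs(a) ** 2 + abs(b) ** 2 - 1.0) > 1e-9:
        raise ValueError("amplitudes must satisfy |a|^2 + |b|^2 = 1")
    p_one = abs(b) ** 2          # probability of collapsing into |1>
    rng = random.Random(seed)    # fixed seed for reproducibility
    ones = sum(1 for _ in range(n_molecules) if rng.random() < p_one)
    return ones / n_molecules


if __name__ == "__main__":
    a, b = 0.7 ** 0.5, 1j * 0.3 ** 0.5   # complex amplitudes are allowed
    for n in (10, 1_000, 100_000):
        print(n, collapse_fraction(a, b, n))
```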

Whenever decoherence is unavoidable, the single microscopic units can be used to process discrete logics, i.e., binary or multi-valued crisp logics depending on the original number of qubits.76,77) Advanced microscopic techniques reaching atomic resolution are required to carry out computations with single particles. Alternatively, large assemblies of particles, e.g., molecules, can be used to make computations. However, vast collections of molecules (on the order of Avogadro's number) constitute bulk materials. The inputs and outputs of the computation then become macroscopic variables that can change continuously. The functions linking input and output variables can be either steep or smooth. Steep sigmoid functions are suitable for implementing discrete logic, whereas both linear and nonlinear smooth functions are suitable for building FLSs.78)
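The difference between steep and smooth input-output functions can be made concrete with a Hill-type curve, used here only as a generic, hypothetical model of a macroscopic response. A large Hill coefficient n gives an almost step-like curve suited to crisp logic, whereas n = 1 gives a graded curve suited to fuzzy logic.

```python
def hill(x, k=1.0, n=1.0):
    """Hypothetical Hill-type response y = x^n / (k^n + x^n) for x >= 0."""
    return x ** n / (k ** n + x ** n)


def to_crisp(y, threshold=0.5):
    """Read a macroscopic output as a binary value."""
    return 1 if y >= threshold else 0


if __name__ == "__main__":
    for x in (0.5, 0.9, 1.1, 2.0):
        steep, smooth = hill(x, n=20), hill(x, n=1)
        print(f"x={x}: steep={steep:.3f} (crisp {to_crisp(steep)}), smooth={smooth:.3f}")
```

With n = 20 the outputs cluster near 0 or 1 and thresholding loses little information; with n = 1 the intermediate values carry the graded (fuzzy) information.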

Many FLSs have been built using light emission as the preferred output, because it bridges the gap between the microscopic and the macroscopic worlds. For instance, the fluorescence of 6(5H)-phenanthridinone depends smoothly on the hydrogen-bond donating (HBD) ability of the solvent and on the temperature.79) The fluorescence of tryptophan, both as an isolated molecule and bound to serum albumin, depends smoothly on the temperature and on the amount of the quencher flindersine.80) Further examples are a ruthenium complex whose fluorescence depends on Fe2+ and F−,81) and europium bound to a metal-organic framework, whose emission depends on metal cations such as Hg2+ and Ag+.82)

With a multi-responsive chromogenic compound belonging to the spirooxazine class, all the fundamental fuzzy logic gates, AND, OR, and NOT, have been implemented.83) Protons, Cu2+, and Al3+ ions were used as inputs, and the color coordinates (R, G, B) or the colorability of the chromogenic compound as outputs. Other platforms have since been proposed, for example, a multi-state tantalum oxide memristive device84) and an anthraquinone-modified titanium dioxide electrode.50) All these case studies demonstrate that fuzzy logic can be processed by unconventional chemical systems showing analog physicochemical input-output relationships in either the liquid or the solid phase. They offer an alternative to the conventional way of processing fuzzy logic, which is based on electronic circuits and signals.
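Fuzzy AND, OR, and NOT are commonly realized with Zadeh's operators (minimum, maximum, and complement). The sketch below assumes these operators and a simple linear normalization of a macroscopic variable, such as an ion concentration or an RGB coordinate, onto [0, 1]. The chemical gates cited above need not follow exactly these operators (product and probabilistic-sum operators are common alternatives), and the range limits in the example are hypothetical.

```python
def normalize(value, low, high):
    """Linearly map a macroscopic variable onto a degree of truth in [0, 1] (clipped)."""
    return min(1.0, max(0.0, (value - low) / (high - low)))


def fuzzy_and(*degrees):
    return min(degrees)   # Zadeh conjunction


def fuzzy_or(*degrees):
    return max(degrees)   # Zadeh disjunction


def fuzzy_not(degree):
    return 1.0 - degree   # Zadeh complement


if __name__ == "__main__":
    # Hypothetical input levels (arbitrary units) and their assumed full-scale ranges.
    cu = normalize(3.0, 0.0, 10.0)
    al = normalize(8.0, 0.0, 10.0)
    print(fuzzy_and(cu, al), fuzzy_or(cu, al), fuzzy_not(cu))
```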

4. Classification and transformation of simple signals

All real material-based devices operating under realistic conditions are best described by fractional differential equations, as demonstrated for a capacitor by Svante Westerlund in 1991 in a seminal paper with the mind-twisting title "Dead matter has memory!".85) This concept was further extended to other fundamental devices.86,87) It implies that in-materio components exhibit some form of memory, which is a consequence of their internal dynamics. These systems and devices are therefore naturally suited to signal processing and can also be incorporated, as active nodes, into signal classification devices. The following sections present selected applications in this field that also relate to neuromorphic information processing.
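The memory implied by fractional-order dynamics can be seen in the Grünwald–Letnikov approximation of a fractional derivative, in which past samples contribute with slowly decaying weights. The sketch below evaluates that approximation and applies it to a fractional capacitor law of the form i(t) = C·d^α u/dt^α, the type of relation discussed by Westerlund. The function names, the step h, and the zero lower terminal are assumptions of this illustration.

```python
import math


def gl_derivative(f, t, alpha, h=1e-3):
    """Grünwald-Letnikov estimate of the order-alpha derivative of f at time t.

    The lower terminal is fixed at 0, so the sum runs over the sampled history
    f(t), f(t - h), ..., f(0); for non-integer alpha every past value contributes.
    """
    n = int(round(t / h))
    weight = 1.0                 # w_0 = 1
    total = weight * f(t)
    for k in range(1, n + 1):
        weight *= 1.0 - (alpha + 1.0) / k   # w_k = (-1)^k * binom(alpha, k)
        total += weight * f(t - k * h)
    return total / h ** alpha


def capacitor_current(voltage, t, capacitance, alpha, h=1e-3):
    """Fractional capacitor law i(t) = C * d^alpha u / dt^alpha (0 < alpha <= 1)."""
    return capacitance * gl_derivative(voltage, t, alpha, h)


if __name__ == "__main__":
    ramp = lambda s: s   # hypothetical voltage ramp u(t) = t
    for alpha in (0.5, 0.8, 1.0):
        print(alpha, capacitor_current(ramp, 1.0, 1.0, alpha))
```

For α = 1 the weights beyond k = 1 vanish and the estimate reduces to a backward difference (an ideal capacitor with no memory of earlier history); for 0 < α < 1 the whole history enters the current.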

4.1. Generation of higher harmonics in memristive devices

Second harmonic generation (SHG) is the generation of signals (e.g. optical or electrical) whose frequency is twice the fundamental frequency; hence, this effect is often called frequency doubling. Higher harmonics are also generated in nonlinear resistors, but in this case most of the spectral weight remains at the fundamental frequency.88) One of the simplest electronic circuits capable of electrical frequency doubling is a diode bridge. Fourier analysis shows that the diode bridge achieves 4.5% efficiency for SHG and 18.9% for higher harmonics relative to the input power.89) Oskoee et al.90) first suggested the potential of strongly memristive systems for SHG. To improve SHG efficiencies, Cohen et al.88) performed a quantitative analysis based on a memristor model for a single element as well as for a memristor bridge. The results show a significant improvement in SHG efficiency: 16.9% for a single element and 40.3% for a memristor bridge. These simulations demonstrate the potential of memristive structures for SHG applications.

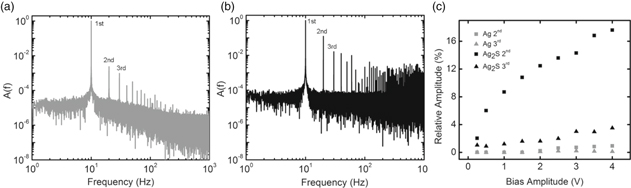

Experimental reports on SHG in hardware memristive structures are sparse. Majzoub et al. analyzed the influence of the higher harmonic components of the recorded current on the pinched hysteresis loop.91) The authors used a commercially available device (KNOWM Inc.) to show that higher harmonic components are crucial for forming the pinched hysteresis loop. By filtering out the components above the second harmonic, they obtained a distortionless response of the device without losing functionality in digital applications, namely simulated AND/OR logic gates. To benefit from higher system complexity and approach the high interconnectivity of biological nervous structures, Avizienis et al.92) studied a neuromorphic atomic switch network (ASN). The ASN was fabricated by electroless self-assembly of silver nanowires from an AgNO3 solution added to SU-8 (an epoxy-based negative resist) with patterned Cu posts. The oxidation of the Cu seeds by the AgNO3 solution leads to the formation of a highly interconnected network (10⁹ junctions per cm²) of silver nanowires with variable morphology. Gas-phase sulfurization of this network yields memristive metal-insulator-metal (Ag∣Ag2S∣Ag) junctions. Analysis of the network properties revealed the memristive nature of the junctions after activation by unidirectional electrical sweeps, which was associated with the formation of conducting filaments (CFs). Moreover, the ASN exhibited a pronounced increase in the magnitude of higher harmonic generation (Fig. 7) after functionalization, owing to an increase in the number of hard-switching junctions.90)

Fig. 7. Fourier transform spectra for the control device (a) and the functionalized network (b), both subjected to 10 Hz, 2 V stimulation. The relative amplitudes of the second and third harmonics as a function of bias voltage show a significant increase in SHG for the functionalized network (black) compared with the control device [grey, (c)]. Reproduced from Ref. 92 with permission from the Public Library of Science.
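Relative harmonic amplitudes such as those in Fig. 7(c) can be obtained from one period of a sampled current with a discrete Fourier transform. The sketch uses a hypothetical memoryless polynomial I–V curve as a stand-in for the device, so it reproduces harmonic generation but not the pinched hysteresis of a real memristor; the coefficients are illustrative.

```python
import cmath
import math


def harmonic_amplitudes(samples, max_harmonic=3):
    """Amplitudes of harmonics 1..max_harmonic from samples covering exactly one period."""
    n = len(samples)
    amps = []
    for k in range(1, max_harmonic + 1):
        x_k = sum(s * cmath.exp(-2j * math.pi * k * m / n) for m, s in enumerate(samples))
        amps.append(2.0 * abs(x_k) / n)   # single-sided amplitude of harmonic k
    return amps


def device_current(v):
    """Hypothetical weakly nonlinear I-V curve: i = v + 0.5 v^2 + 0.1 v^3."""
    return v + 0.5 * v ** 2 + 0.1 * v ** 3


def sampled_response(n=256):
    voltage = [math.sin(2 * math.pi * m / n) for m in range(n)]
    return [device_current(v) for v in voltage]


if __name__ == "__main__":
    a1, a2, a3 = harmonic_amplitudes(sampled_response())
    print("relative 2nd harmonic:", a2 / a1, "relative 3rd harmonic:", a3 / a1)
```

For this polynomial, the v² term feeds only the second harmonic, while the v³ term feeds both the fundamental and the third harmonic, so the amplitudes are 1.075, 0.25, and 0.025.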

4.2. Amplitude discrimination

Amplitude discrimination of voltage is an inherent property of memristors: only pulses of sufficient amplitude can affect the resistive state of a memristive device. In general, the resistive state depends on the history of the applied current and voltage, which enables storage and adjustable changes of the device's conductance. A voltage pulse of suitable amplitude and polarity can switch the memristor from one state [e.g. the high resistance state (HRS)] to another [e.g. the low resistance state (LRS)].

Memristors employed in a reservoir system can act as simple amplitude classifiers. Exploiting the nonlinear I–V dependence, one can gradually switch the resistance to the other state with pulses of sufficient amplitude and proper polarity. These inherent properties can be utilized for amplitude classification by employing the memristor in a feedback loop. It has been reported that lead iodide (PbI2) incorporated in a single-node echo state network with a delayed feedback loop can efficiently discriminate input voltage pulses on the basis of their amplitude.93) The PbI2∣Cu device shows a distinct rectifying characteristic, which leads to the amplification of forward-bias pulses and the reduction of reverse-bias pulses. In this simplified reservoir system, the output signal is routed back to the input after a delay time. As the number of feedback cycles increases, some signals gain intensity and others are attenuated, leading to amplitude classification. If the signal voltage exceeded the threshold value of 1.85 Vpp, the signal was amplified; lower voltage amplitudes decreased over the course of subsequent feedback cycles. As another example, [SnI4((C6H5)2SO)2]/Cu in an echo state machine can perform a similar classification based on both the amplitude and the duration of the input voltage.94) The higher the amplitude and the longer the duration, the longer the signal propagated in the feedback loop before full attenuation.
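The amplitude classification in a delayed feedback loop can be sketched as an iterated map: on each round trip the pulse amplitude is multiplied by a net gain that exceeds 1 above a threshold and falls below 1 under it. The default threshold of 1.85 is taken from the PbI2 example above, but the linear gain model, its slope, and the saturation level are hypothetical.

```python
def round_trip(amplitude, threshold=1.85, slope=0.2, a_max=5.0):
    """One pass through the hypothetical nonlinear node and delay line.

    Net gain is above 1 for amplitudes above the threshold and below 1 under it;
    the result is clipped to [0, a_max] to mimic amplifier saturation.
    """
    gain = 1.0 + slope * (amplitude - threshold) / threshold
    return min(a_max, max(0.0, gain * amplitude))


def after_cycles(amplitude, cycles=50, **params):
    """Amplitude of a pulse after a given number of feedback cycles."""
    for _ in range(cycles):
        amplitude = round_trip(amplitude, **params)
    return amplitude


def classify(amplitude, **params):
    """'high' if the pulse grew over the feedback cycles, 'low' if it decayed."""
    return "high" if after_cycles(amplitude, **params) > amplitude else "low"


if __name__ == "__main__":
    for a in (1.5, 1.8, 1.9, 2.0):
        print(a, classify(a), round(after_cycles(a), 3))
```

The threshold is an unstable fixed point of the map, which is why amplitudes slightly above and below it diverge toward saturation and extinction, respectively.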

4.3. Frequency discrimination

The most basic form of frequency discrimination in neuromorphic memristor-based devices is the spike-rate-dependent plasticity (SRDP) learning strategy. If the frequency of a spike train exceeds a threshold value, short-term effects such as signal intensity decay can be overcome, and each subsequent spike has an increased intensity until a plateau level is reached. This way of processing frequency has inherent limits: it operates only within the range of frequencies between the threshold and plateau values. The resolution of frequency detection also depends on the length of individual pulses. These ideas can be realized with several memristive materials, for example, organolead trihalide perovskites (or organic–inorganic perovskites).95) Even transistors, such as nanoparticle/organic memory transistors, which are equivalent to leaky memory devices and show short-term plasticity (STP)-like characteristics, can be used as very simple frequency discriminators.96,97) This strategy is, unfortunately, insufficient for a wide variety of frequencies and lacks scientific elegance: dedicated software and von Neumann architecture-based hardware are still needed to detect, measure, and correctly categorize output signals.
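A minimal way to see why spike rate matters is a leaky, saturating state variable: each spike adds a potentiation step and the state relaxes between spikes, so higher rates leave less time for decay. The step size, time constant, saturation level, and classification threshold below are hypothetical and not fitted to any of the cited devices.

```python
import math


def state_after_train(rate_hz, n_spikes=20, step=0.1, tau_s=0.01, g_max=1.0):
    """Hypothetical short-term state after a regular spike train.

    Each spike adds a saturating potentiation step; between spikes the state
    relaxes exponentially with time constant tau_s (seconds).
    """
    decay = math.exp(-1.0 / (rate_hz * tau_s))   # relaxation over one inter-spike interval
    g = 0.0
    for _ in range(n_spikes):
        g += step * (g_max - g)   # potentiation, bounded by g_max (plateau)
        g *= decay                # relaxation until the next spike
    return g


def is_potentiated(rate_hz, threshold=0.25, **params):
    """Binary readout: does the train drive the state above a hypothetical threshold?"""
    return state_after_train(rate_hz, **params) > threshold


if __name__ == "__main__":
    for rate in (10, 100, 1000, 10000):
        print(rate, round(state_after_train(rate), 4), is_potentiated(rate))
```

The useful frequency range of such a discriminator lies between the rate at which decay stops dominating and the rate at which the state approaches its plateau, mirroring the limitation noted above.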

An advance on the above methodology is to use STP or LTP (short- and long-term plasticity) effects associated with a threshold frequency. This behavior was shown experimentally by He98) in 2014 for a sandwich-like Pt/FeOx/Pt structure. To observe this effect, one must apply a spike train similar to the biological firing curve. The spikes are inspired by the firing behavior of biological neurons and consist of two pulses: a very short, high-amplitude pulse followed by a wide, low-amplitude pulse of the opposite polarity. This is a necessary condition for bidirectional weight change. At the same time, it is a limitation of the method, as the input pulses must be properly shaped for their frequencies to be distinguished. Low-frequency spikes (below 10 kHz) tend to induce long-term depression by decreasing the conductance of the memristor. In contrast, 20 kHz spikes accumulate the positive constituent pulses, causing an increase in conductance; cf. Figs. 8(a) and 8(b).

Fig. 8. (Color online) Spike waveforms, similar to biological neural spikes, at different presynaptic firing frequencies. Signal intensification is detected after crossing the frequency threshold (a). Emulated spike-rate-dependent plasticity (SRDP) learning rule for a memristor built from iron oxide (b). Such behavior is also reported for real-life synapses. Reproduced from Ref. 98 according to the Creative Commons CC-BY-NC-ND licence.

Similarly, better frequency resolution than that of mere SRDP can be obtained by directly using the Bienenstock–Cooper–Munro (BCM) learning rule, according to which a synaptic weight can be either strengthened (potentiation) or weakened (depression) even when subjected to the same spike trains. Memristive devices with WOx between metal (Pt) electrodes, in addition to STP/LTP regimes, utilize the so-called "sliding threshold frequency" as a frequency classification rule.99) Historical synaptic activity influences device performance: periods of increased activity are followed by a higher frequency threshold for synaptic weight potentiation, and periods of lighter activity by a lower one, as presented in Fig. 9(a) for biological neurons.

Fig. 9. (Color online) Activity-dependent plasticity (STP/LTP rules) and sliding threshold effects in memristors. (a) Relative change in synaptic weight as a function of stimulation frequency for two different cases. Low stimulation frequency results in depression and high stimulation frequency results in facilitation. The threshold moves to a lower frequency for filled symbols (low-activity period) compared with the normal condition (open symbols). Data obtained in rat visual cortex.100) (b) Memristor response to consecutive programming pulse trains at different frequencies. The 10 Hz pulse train caused a decrease in current in step 2 following strong stimulation in step 1, but a current increase in step 4 following weak stimulation in step 3. Black squares: simulation results from the memristor model using experimental parameters. (c) Memristor current change as a function of the stimulation frequency after the memristor has been exposed to different levels of activity. Pulse trains consisting of five pulses (1.2 V, 1 ms) with different repetition frequencies were used to program the memristor. Black squares, red circles, and blue triangles represent experimental data. The solid lines are simulation results from the memristor model using experimental parameters. (d) Measured threshold voltage as a function of previous activity (represented by different pulse frequencies). The device was stimulated by pulse trains with the same repetition frequency of 50 Hz but different amplitudes. Black squares: experimental data. Solid line: simulation results from the memristor model using experimental parameters. Reprinted from Ref. 99 with the permission of Wiley.

This behavior was reproduced artificially [Fig. 9(b)]: after 200 Hz stimulation the synaptic weight increased, and the subsequent 10 Hz stimulation caused a current drop [step 2 in Fig. 9(b)]. After a weak 1 Hz stimulation, the same 10 Hz stimulation caused an increase in the output current [step 4 in Fig. 9(b)]. Figure 9(c) shows the results of pre-stimulation at different frequencies (20, 50, and 100 Hz) followed by five probing pulses. Depending on their own frequency, these probing pulses result in either weakening or strengthening of the synaptic weight. The higher the pre-stimulation frequency, the higher the frequency of the probing pulses needed to strengthen the synapse, an effect similar to that observed in biological systems. In addition to the frequency threshold, pretreatment of the sample also influences the amplitude threshold [Fig. 9(d)].
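The sliding threshold of the BCM rule can be sketched in a few lines. The sketch assumes the common BCM form Δw = η·y·(y − θM), treats the stimulation frequency as the postsynaptic activity y, and lets θM grow with the square of the prior activity divided by a reference rate y0; η and y0 are hypothetical. With these choices, the same 10 Hz probe depresses the weight after strong (200 Hz) prior activity and potentiates it after weak (1 Hz) prior activity, qualitatively matching the sequence in Fig. 9(b).

```python
def sliding_threshold(prior_rate_hz, y0=100.0):
    """Modification threshold set by prior activity: theta_M = <y>^2 / y0 (assumed form)."""
    return prior_rate_hz ** 2 / y0


def weight_change(rate_hz, prior_rate_hz, eta=1e-4, y0=100.0):
    """BCM rule dw = eta * y * (y - theta_M): depression below theta_M, potentiation above."""
    theta = sliding_threshold(prior_rate_hz, y0)
    return eta * rate_hz * (rate_hz - theta)


if __name__ == "__main__":
    for prior in (1.0, 50.0, 200.0):
        print(prior, sliding_threshold(prior), weight_change(10.0, prior))
```

Because θM rises faster than linearly with prior activity, strong stimulation raises the bar for further potentiation, which is the stabilizing feature of the BCM rule.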

The learning/forgetting effect with a frequency threshold was also reported for ZnO memristive devices.101) Biorealistic rate-dependent synaptic plasticity, mimicking that of biological systems, was demonstrated in rectifying, diode-like Pt/n-ZnO/SiO2–x/Pt synaptic heterostructures. The SRDP rule, STP and LTP retention, and a sliding frequency threshold coexist in these devices. Paired-pulse facilitation, along with the SRDP learning rule, was found to follow the plasticity behavior of actual synapses. The sliding frequency threshold was explored to show the dynamic stability of the synaptic weight as a function of spike-train time spacing and frequency. Overall, frequency-discriminating signal processing helped to emulate human-like "Learning-Forgetting-Relearning" synaptic behavior. These findings will serve as cornerstones for dynamic hardware-based neuromorphic systems.

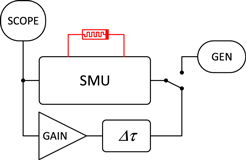

Discrimination of periodic signals of various waveforms according to their frequency should also be possible. Owing to their switching dynamics, memristors are inherently frequency-sensitive elements. At sufficiently high frequencies they may behave like linear resistors, whereas their nonlinear features emerge in the low-frequency range. Therefore, it should be possible to build a reservoir system similar to those described above that selectively amplifies signals with frequencies below a threshold value and attenuates signals of higher frequencies (or vice versa). Such behavior is not unique: in classical analog electronics, an appropriate (active or passive) filter can perform similarly. The expected advantage of reservoir memristive devices over analog filters is a very steep slope of their frequency characteristics, especially if they are embedded in a single-node echo state machine,93,94) as shown in Fig. 10.

Fig. 10. (Color online) Scheme of a memristor-based single-node echo state machine: SMU stands for a source-measure unit, GAIN for an amplifier, SCOPE for an oscilloscope/signal recorder, Δτ for a delay line, and GEN for an arbitrary function generator.

In such systems, low-frequency signals should be amplified, whereas signals with frequencies above the characteristic cut-off frequency should be gradually attenuated. A signal matching the cut-off frequency should remain unchanged. The same type of device can also be used for more advanced signal processing, owing to a gradual change in the signals' symmetry caused by partial rectification at the Schottky junction of the memristor.
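Why a feedback loop should sharpen the frequency characteristic can be seen from a simple argument: if one pass through the loop multiplies a signal of frequency f by a gain g(f) with g(fc) = 1, then after n passes the net gain is g(f)ⁿ, so any deviation from the cut-off frequency is amplified exponentially with n. The single-pass gain below is a hypothetical first-order roll-off, and the model ignores amplifier saturation.

```python
import math


def single_pass_gain(f, f_cut=100.0, f_pole=50.0):
    """Hypothetical per-round-trip gain with a first-order roll-off; gain(f_cut) == 1."""
    return math.sqrt((1.0 + (f_cut / f_pole) ** 2) / (1.0 + (f / f_pole) ** 2))


def loop_response(f, cycles, **params):
    """Net amplitude gain after `cycles` passes through the loop; saturation is ignored."""
    return single_pass_gain(f, **params) ** cycles


if __name__ == "__main__":
    for n in (1, 5, 20):
        print(n, [round(loop_response(f, n), 3) for f in (50.0, 90.0, 100.0, 110.0, 200.0)])
```

The printed rows show the transition around 100 Hz becoming steeper as the number of passes grows, while the 100 Hz response stays at exactly 1.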

Some of the above-described effects have been implemented in patented solutions,102) in which memristive devices perform frequency discrimination and amplitude discrimination alongside other time-oriented functions. The sum of the output currents, and thus the overall resistance of the aggregated memristor network, depends on the frequency of the input signal, which makes it possible to switch the device between states when the input signal frequency exceeds a threshold.

5. Classification of complex acoustic patterns

The dynamics of resistance changes in memristors, together with their highly nonlinear characteristics, seem to be key features for their application in signal processing and classification. Furthermore, memristors can be incorporated into feedback loops, yielding single-node echo state machines (or other types of reservoir computers) with superb performance in signal classification. In reservoir computing, the reservoir itself does not require training; the classification of the input signal relies on the internal dynamics of the reservoir. The only part that requires training is the readout layer: a simple (software-based) artificial neural network, a single-layer perceptron, or a simple signal processing circuit. Despite the obvious utility of memristive elements in such computational tasks, experimental demonstrations of memristors in signal classification and processing are scarce, although the number of theoretical works, including numerical simulations, is increasing. The reason is purely technological: analog memristors (vide infra) are an emerging class of devices and require extensive fundamental and technological studies.

There are two principal categories of memristors, which can tentatively be called analog and digital.103,104) Analog memristors gradually change their internal state due to interfacial switching processes (e.g. Schottky barrier height modulation) or dopant migration. As a result, these devices can store not only binary data but also analog data. Materials that show interface-switching behavior are still under development. Moreover, the accuracy of controlling the memristance value in analog memristors is still considered a major concern; however, recent studies on pulse and signal classification define the safe limits of their applicability.93,94,105) In contrast, most memristors reported so far operate according to the filamentary switching mechanism. In filamentary switching, memristors can be either in an HRS or in an LRS, and therefore systems based on these devices are considered error-tolerant. On the other hand, the use of binary memristors significantly limits the computational performance of memristive systems. The computational power of memristive devices can be further improved by exploiting their dynamic properties, e.g. in reservoir computing systems.106)

The concept of signal classification based on the dynamic behavior of memristors was reported by Tanaka et al.107) The authors demonstrated the applicability of linear-drift-based memristors in discriminating between sine and triangular waves of the same amplitude within a defined range of frequencies. The system used two different reservoir network topologies, as shown in Fig. 11: a ring and a small-world topology (a network in which most nodes are not neighbors of one another, but the neighbors of any given node are likely to be neighbors of each other, and most nodes can be reached from every other node in a small number of hops or steps).

Fig. 11. (Color online) Schematic representation of a memristive reservoir computing system in two different topologies: ring (only black memristors) and small-world (black and red memristors). In the studied case, the readout layer was a one-layer perceptron with a sigmoidal activation function. Adapted from Ref. 107.

Such a system computes an output potential at each node, and these values are fed into the two nodes of a perceptron. The input h_i (i = 1, 2) of each perceptron node is a weighted sum of the output potentials V_j of the N reservoir nodes coupled to the readout, with weights w_{ij}:

$$h_i = \sum_{j=1}^{N} w_{ij} V_j, \qquad i = 1, 2 \qquad (9)$$

A sigmoidal activation function was used to compute the output state y_i of each perceptron node:

$$y_i = \frac{1}{1 + \exp(-h_i)} \qquad (10)$$

Training involves optimization of the readout weights to achieve the (0,1) output state for a sine-wave input and the (1,0) state for a triangular one; the reservoir itself is not trained. The systems provided good separability of the waveforms when a sufficiently large number of reservoir nodes was coupled to the perceptron: 2 in the case of the ring topology and 5 in the case of the small-world topology. On the other hand, the ring topology works efficiently only with identical elements: even a small variation in memristor characteristics significantly reduces the performance of the system. Introducing additional connections in the circuit (small-world topology) results in a variability-tolerant system; however, a larger number of readout connections (blue arrows in Fig. 11) is required for optimal performance.
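A minimal sketch of the readout described by Eqs. (9) and (10): two sigmoidal output nodes, each fed with a weighted sum of node potentials, with only the weights trained. Gradient descent on a cross-entropy loss is an assumed training rule; the original work may have used a different optimizer. Because no memristor model is included, the node potentials come from a hypothetical stand-in reservoir: a bank of fixed tanh nodes with random gains and biases, time-averaged over one noisy period of the waveform. The feature standardization, noise level and data-set size are likewise assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
T = 100                                  # samples per waveform period (assumed)
N_NODES = 8                              # size of the stand-in reservoir (assumed)
GAINS = rng.uniform(0.5, 3.0, N_NODES)   # fixed, untrained node parameters
BIASES = rng.uniform(-1.0, 1.0, N_NODES)

def waveform(kind, noise=0.05):
    """One period of a unit-amplitude sine or triangle wave with random phase and noise."""
    s = (np.arange(T) / T + rng.uniform()) % 1.0
    u = np.sin(2 * np.pi * s) if kind == "sine" else 1.0 - 4.0 * np.abs(s - 0.5)
    return u + rng.normal(0.0, noise, T)

def node_potentials(u):
    """Hypothetical stand-in reservoir: time-averaged outputs of fixed nonlinear nodes."""
    return np.tanh(np.outer(u, GAINS) + BIASES).mean(axis=0)

def sigmoid(h):
    return 1.0 / (1.0 + np.exp(-h))       # Eq. (10)

# Data set: triangle -> target (1, 0), sine -> target (0, 1).
kinds = ["triangle", "sine"] * 100
V = np.array([node_potentials(waveform(k)) for k in kinds])
Y = np.array([[1.0, 0.0] if k == "triangle" else [0.0, 1.0] for k in kinds])
V = (V - V.mean(axis=0)) / V.std(axis=0)  # standardize each node potential (assumption)

# Train only the readout weights w_ij; the reservoir parameters stay fixed.
W = np.zeros((2, N_NODES))
for _ in range(500):
    out = sigmoid(V @ W.T)                # Eq. (9) weighted sum, then Eq. (10)
    W -= 0.5 * (out - Y).T @ V / len(V)   # cross-entropy gradient for sigmoid outputs

pred = np.argmax(sigmoid(V @ W.T), axis=1)
accuracy = np.mean(pred == np.argmax(Y, axis=1))
print(f"training accuracy: {accuracy:.2f}")
```

With standardized, class-balanced features, no bias term is needed, which keeps the readout identical in form to Eq. (9).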

Similar capabilities, even without a trained readout layer, should also be observed in a single-node echo state machine with a nonlinear node of appropriate characteristics. Composite waveforms, with Fourier spectra covering a sufficiently broad range of frequencies, should yield complex dynamic behavior: some spectral components should be amplified, whereas others are attenuated. This may lead to a binary classification of waveform shapes, which can be further extended by an appropriate readout layer.
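A minimal sketch of this idea, under assumed parameters: a single tanh node whose output is fed back to its own input after a fixed delay, i.e. a discrete-time delay-based reservoir rather than a model of any specific device. The feedback reinforces components whose period divides the loop delay and partially cancels those at odd half-multiples, so the loop behaves like a nonlinear comb filter. With a 10 ms loop, a 400 Hz tone (exactly 4 periods per loop) is amplified, whereas a 450 Hz tone (4.5 periods per loop) is attenuated.

```python
import numpy as np

FS = 8000        # sampling rate in Hz (assumed)
DELAY = 80       # loop delay in samples, i.e. 10 ms (assumed)
GAIN = 0.8       # feedback gain; a value below 1 keeps the loop stable (assumed)

def delay_loop_gain(freq_hz, amplitude=0.1, n_samples=8000):
    """RMS output/input ratio of a single nonlinear node with delayed self-feedback."""
    n = np.arange(n_samples)
    u = amplitude * np.sin(2 * np.pi * freq_hz * n / FS)
    x = np.zeros(n_samples)
    for k in range(n_samples):
        fed_back = x[k - DELAY] if k >= DELAY else 0.0
        x[k] = np.tanh(GAIN * fed_back + u[k])
    tail = slice(n_samples // 2, None)    # discard the transient
    return np.sqrt(np.mean(x[tail] ** 2)) / np.sqrt(np.mean(u[tail] ** 2))

for f in (400.0, 450.0):
    print(f"{f:.0f} Hz: gain {delay_loop_gain(f):.2f}")
```

Feeding the loop with a composite waveform instead of a pure tone shows the selective amplification described above, since each spectral component sees a different loop gain.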

5.1. Classification of musical objects

Music is one of the most ubiquitous human activities, independent of social and cultural attributes or intellectual abilities. Music is one of the human universals;108) however, according to some views, it does not convey any biologically relevant information.109) According to Guerino Mazzola, music provides a platform of communication between symbolic and emotional layers.110) Music, like information, is a notion that is very difficult to define in precise terms. Different cultures have developed different concepts of music, in which different elements determine the identity of a particular music. However, at the fundamental level, one may indicate some components common to all music, such as the use of discrete pitches or the presence of rhythmic patterns.111) Unlike speech, music is not meant for explicit communication, but it triggers various emotional responses in listeners through aesthetic feelings. On the other hand, music is a very well-organized structure, as not every combination of sounds should be considered music; however, the modern musicological approach provides evidence that any purposeful combination of sounds can be considered music.112,113) Going to the extreme, even silence (a lack of purposeful sound) can be considered music, with John Cage's famous 4′33″ as the most prominent example.114) In the simplest approach, however, music can be defined as an appropriate time sequence of quantized acoustic frequencies (Fig. 12). These frequencies are called steps in a musical scale and, along with rhythm and timbre, are the principal constituents of any musical piece. In music of European origin, an octave (the interval between frequencies f and 2f) is divided into 12 steps, called semitones, but other musical systems (e.g. in the Middle East and India) use smaller intervals (microtones). There also exist many different tuning systems and octave divisions (such as the Balinese and Javanese gamelan systems).
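The division of the octave can be made concrete with a short calculation. The sketch assumes 12-tone equal temperament, where each semitone multiplies the frequency by 2^(1/12), and compares it with the just-intonation ratios of the intervals used later in this section (2/1, 3/2, 4/3 and 45/32 for the tritone). Interval sizes are expressed in cents (1200 per octave). The reference pitch of 220 Hz is chosen to match the simulations discussed below.

```python
import math

def et_frequency(f_ref, semitones):
    """Frequency `semitones` steps above f_ref in 12-tone equal temperament."""
    return f_ref * 2 ** (semitones / 12)

def cents(ratio):
    """Size of a frequency ratio in cents (1200 cents per octave)."""
    return 1200 * math.log2(ratio)

# Just-intonation intervals above 220 Hz vs. their equal-tempered counterparts.
for name, ratio, steps in [("octave", 2 / 1, 12), ("perfect fifth", 3 / 2, 7),
                           ("perfect fourth", 4 / 3, 5), ("tritone", 45 / 32, 6)]:
    print(f"{name:15s} just {220 * ratio:8.3f} Hz  ET {et_frequency(220, steps):8.3f} Hz  "
          f"({cents(ratio) - 100 * steps:+.2f} cents)")
```

Only the octave coincides exactly in both systems; the equal-tempered fifth (329.63 Hz) lies about 2 cents below the just 330 Hz, and the tritone differs by roughly 10 cents.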
A characteristic feature of European music is its specific concept of musical harmony, which may be considered a key component of its theory and practice. Musical harmony is a complex notion reflected in: (i) the pure content of the ensemble of frequencies heard at a given time (including the timbre of an individual note), (ii) the musical content, i.e. the verticality of the chord (a set of notes played simultaneously), and (iii) the position of a chord and its relation to the melody at a given moment.115,116) Despite well-established musical theories,115) automated classification of intervals, chords and clusters and recognition of consonance and dissonance have not yet been achieved. The reason for this may be that three types of factors are responsible for the phenomenon of musical harmony: physical (acoustical), physiological (the mechanism of auditory perception), and culturally determined cognitive processes. There are many different styles of musical harmony (culturally and historically determined), but fundamental to all of them is the basic notion of dissonance and consonance, which is psychoacoustic in nature, although different dissonant or consonant sonorities may be aesthetically evaluated differently within a particular musical style or genre. Furthermore, the physical nature of dissonance and consonance is still not fully understood,109) although various approaches beyond the classical Helmholtz curve of sensory dissonance have been developed.117–119)
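As one concrete example of a sensory-dissonance model, the sketch below uses the Plomp–Levelt roughness curve in the exponential parameterization popularized by Sethares. It is not the method used in this work. The constants are those commonly quoted for that fit and should be treated as assumptions; the product of amplitudes is one of several weighting conventions, an overall scale factor is omitted, and the six-partial harmonic tones are idealized. For such tones, the model ranks the octave and the perfect fifth as less dissonant than the tritone.

```python
import itertools
import math

# Constants of the Plomp-Levelt curve as commonly quoted for Sethares' fit (assumed).
D_STAR, S1, S2 = 0.24, 0.0207, 18.96
B1, B2 = 3.51, 5.75

def pair_dissonance(f1, a1, f2, a2):
    """Sensory dissonance of two pure partials (frequency in Hz, linear amplitude)."""
    f_min, df = min(f1, f2), abs(f2 - f1)
    s = D_STAR / (S1 * f_min + S2)       # scales the curve to the critical bandwidth
    return a1 * a2 * (math.exp(-B1 * s * df) - math.exp(-B2 * s * df))

def harmonic_tone(f0, n_partials=6, decay=0.88):
    """Idealized harmonic tone: partials k*f0 with geometrically decaying amplitudes."""
    return [(k * f0, decay ** (k - 1)) for k in range(1, n_partials + 1)]

def dissonance(*tones):
    """Sum of pairwise dissonance over all partials of all tones sounded together."""
    partials = [p for tone in tones for p in tone]
    return sum(pair_dissonance(f1, a1, f2, a2)
               for (f1, a1), (f2, a2) in itertools.combinations(partials, 2))

low = harmonic_tone(220.0)
for name, f in [("octave", 440.0), ("perfect fifth", 330.0), ("tritone", 309.375)]:
    print(f"{name:14s} {dissonance(low, harmonic_tone(f)):.3f}")
```

Coinciding partials (as in the octave) contribute nothing, while partials a few tens of hertz apart (as in the tritone) fall near the maximum of the roughness curve.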

Fig. 12. (Color online) 3D representation of music as a time sequence of tones of different frequency (pitch) and spectral characteristics (timbre). Adapted from Ref. 115.

Photoelectrochemical reservoir systems based on wide-bandgap semiconductors (neat or modified with simple coordination compounds) cannot compete with photonic devices in terms of speed or efficiency. They do, however, operate in a frequency domain corresponding to the audible range. Therefore, we turned our attention to the classification of acoustic signals. There is a plethora of data sets that require advanced processing techniques, including ECG and EEG signals, automated speech analysis and the classification of music. We found the latter the most suitable task for the photoelectrochemical system because of its internal, well-defined structure based on Pythagorean geometry.109,115,121)

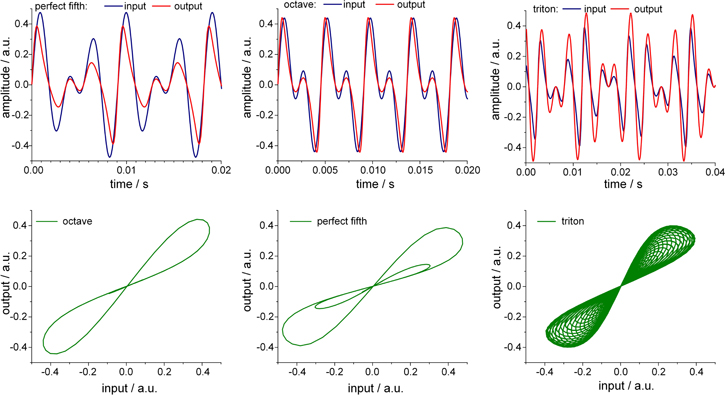

Before the experimental verification of this idea, a series of numerical simulations was performed for a simple memristive device (a memristor in series with a resistor). As the numerical model, an example given by Song et al. was implemented in Multisim (Fig. 13), in which a nonlinear circuit element (a diode) is converted into a memristor by a mutator circuit based on a capacitor and an inductor connected by a set of voltage- and current-controlled voltage and current sources.120) Pure tones (single sine waves with frequencies belonging to the natural scale) yield simple memristor-like pinched hysteresis loops (Fig. 14). When a sum of two signals forming a consonant combination (e.g. a perfect octave, perfect fifth or perfect fourth) is applied to the circuit, a stable loop is observed as well, but with an increased number of lobes (Fig. 15). In the case of an interval considered dissonant (e.g. a tritone), the observed characteristic becomes quasi-random (the simulation was too short to determine whether the signal is truly chaotic), but the trajectory is confined within the hysteresis loop defined by the lower-frequency tone [Fig. 15(c)]. Therefore, the memristor hysteresis loop can be considered an attractor for the unstable behavior of the memristor-based circuit.
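A simple arithmetic observation may help explain why a short simulation cannot settle this question. A sum of two sine waves with commensurate frequencies is periodic, with a period equal to the inverse of the greatest common divisor of the two frequencies. The sketch below computes this divisor exactly with rational arithmetic for the three intervals of Fig. 15. The octave and the fifth repeat after one and two cycles of the 220 Hz tone, respectively, whereas the tritone (309.375/220 = 45/32) repeats only after 32 cycles, i.e. about 145 ms. This concerns the input signal only and says nothing by itself about whether the circuit response is chaotic.

```python
import math
from fractions import Fraction

def common_frequency(f1: Fraction, f2: Fraction) -> Fraction:
    """Greatest common divisor of two rational frequencies in Hz.

    A sum of sine waves at f1 and f2 repeats with period 1 / common_frequency(f1, f2).
    """
    den = f1.denominator * f2.denominator // math.gcd(f1.denominator, f2.denominator)  # lcm
    return Fraction(math.gcd(int(f1 * den), int(f2 * den)), den)

low = Fraction(220)
for name, f_high in [("octave", "440"), ("perfect fifth", "330"), ("tritone", "309.375")]:
    g = common_frequency(low, Fraction(f_high))
    cycles = low / g                     # periods of the 220 Hz tone before the sum repeats
    print(f"{name:14s} repeats every {float(1000 / g):7.3f} ms = {cycles} cycles of 220 Hz")
```

Using `Fraction` avoids floating-point round-off, which would otherwise make almost any pair of frequencies appear incommensurate.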

Fig. 13. (Color online) Circuit by Song et al. transforming a diode into a memristor.120) Two sine-wave current sources are used as an input, whereas the voltage drop on resistor R1 is used as an output.

Fig. 14. (Color online) Simulated responses of a resistor-memristor circuit subjected to a voltage signal modulated with a 220 Hz sine wave (a) and the corresponding I–V (input/output) hysteresis loops for 200 and 440 Hz sine waves (b).

Fig. 15. (Color online) Simulated responses of a resistor-memristor circuit subjected to a voltage signal modulated with two sine waves: 220 Hz + 440 Hz [octave, (a)], 220 Hz + 330 Hz [perfect fifth, (b)] and 220 Hz + 309.375 Hz [tritone, (c)].

This result is fully consistent with the chaotic behavior of memristive circuits, especially the so-called Chua circuit (a circuit containing two capacitors, an inductor, a linear resistor and a nonlinear resistive element, which in memristive variants is replaced by a memristor).122) This numerical experiment supports the utility of reservoir computing in the processing of acoustic signals, which has recently been postulated on the basis of theoretical models.123) Surprisingly, the results are consistent with those of neurophysiological studies on the perception of music by humans and monkeys.124,125) In conclusion, this experiment opens a new path into a field of interdisciplinary investigations: the application of molecular systems to the analysis of music in the short term, and perhaps also to its creation in the future. This idea has recently been successfully developed by Professor Eduardo R. Miranda in a series of biocomputing experiments with Physarum slime mold126–129) and follows the cross-boundary research at the interface of information theory, music, and the physical sciences.130) The analogy between the human perception of music and the reservoir perception of simple intervals may be misleading. It does not mean that a simple reservoir is as sensitive as a human ear; rather, it may suggest that memristive systems provide universal problem-solving power and, with appropriate operation, can solve numerous problems that cannot easily be addressed using other approaches. The combination of reservoirs with logic devices (Boolean or fuzzy) may lead to a substantial increase in complexity and computing efficiency. The first reports on practical combinations of Boolean logic and reservoir computing are already available.131,132)
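For readers unfamiliar with the Chua circuit, the following sketch integrates its classic dimensionless form with a piecewise-linear Chua diode. This is not a memristive variant, in which the nonlinear resistor is replaced by a flux-controlled memristor. The parameter values α = 15.6, β = 28, m0 = −1.143, m1 = −0.714 and the initial state are common textbook choices assumed here. With them, the trajectory is expected to stay bounded without settling to an equilibrium, tracing the well-known double-scroll attractor.

```python
import numpy as np

# Dimensionless Chua's circuit with a piecewise-linear Chua diode (assumed parameters).
ALPHA, BETA = 15.6, 28.0
M0, M1 = -1.143, -0.714

def chua_diode(x):
    """Piecewise-linear characteristic: slope M0 for |x| < 1, slope M1 outside."""
    return M1 * x + 0.5 * (M0 - M1) * (abs(x + 1) - abs(x - 1))

def rhs(state):
    x, y, z = state
    return np.array([ALPHA * (y - x - chua_diode(x)), x - y + z, -BETA * y])

def integrate(state, dt=0.01, n_steps=10000):
    """Classical fourth-order Runge-Kutta integration; returns the trajectory."""
    traj = np.empty((n_steps, 3))
    for k in range(n_steps):
        k1 = rhs(state)
        k2 = rhs(state + 0.5 * dt * k1)
        k3 = rhs(state + 0.5 * dt * k2)
        k4 = rhs(state + dt * k3)
        state = state + dt / 6 * (k1 + 2 * k2 + 2 * k3 + k4)
        traj[k] = state
    return traj

traj = integrate(np.array([0.7, 0.0, 0.0]))
x = traj[len(traj) // 2:, 0]          # second half, after the initial transient
print(f"x range on the attractor: [{x.min():.2f}, {x.max():.2f}]")
```

Plotting `traj[:, 0]` against `traj[:, 1]` shows two interlinked spirals between which the state jumps irregularly.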

As a result of experiments with the memristive reservoir, a set of musical compositions was designed. In one of them, titled Reservoir Study No. 1, a feedback loop with a length of 555 ms was used to transform music played by an ensemble of musicians. The process resulted in harmonic and timbral fluctuations of a particular aesthetic quality. The repetitive character of these fluctuations reflects the continuous processes taking place within the reservoir. The piece starts with short improvisations on the keyboard, followed by a slowly evolving tune with many almost identical bars; this evolution illustrates the evolution of a signal within a single-node echo state machine. In the middle of the piece, the changes accumulate and drive the reservoir into a chaotic state, which is illustrated by improvisations and a series of glissandi giving an impression of musical chaos. This acoustic chaos then calms down, a musical illustration of amplitude death in dynamic systems, in which a set of coupled oscillators reaches a quiet stationary state.133) Finally, a constant pace is established again, indicating the spontaneous rebirth of oscillations,134) which leads to the final chords: a musical embodiment of the results of reservoir computation. The piece was designed, completed and performed by The Nano Consort (Konrad Szaciłowski, cello; Dawid Przyczyna and Kacper Pilarczyk, guitars; Marcin Strzelecki, keyboard; Dominika Peszko and Piotr Zieliński, piano) during a concert at the Krakow Opera House on September 16th, 2019 (Fig. 16; a supplementary video file of the world premiere recording of this composition is available online at stacks.iop.org/JJAP/59/050504/mmedia).

Fig. 16. (Color online) Photos taken on September 16th, 2019 during the rehearsal (left) and the world premiere (right) of Reservoir Study No. 1.

5.2. Speech processing and classification

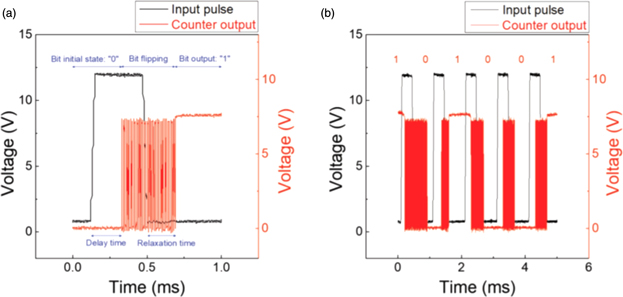

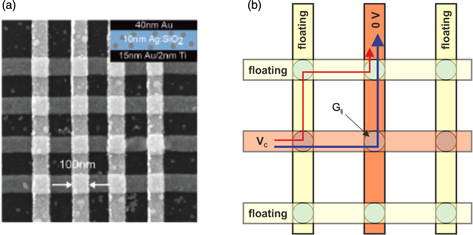

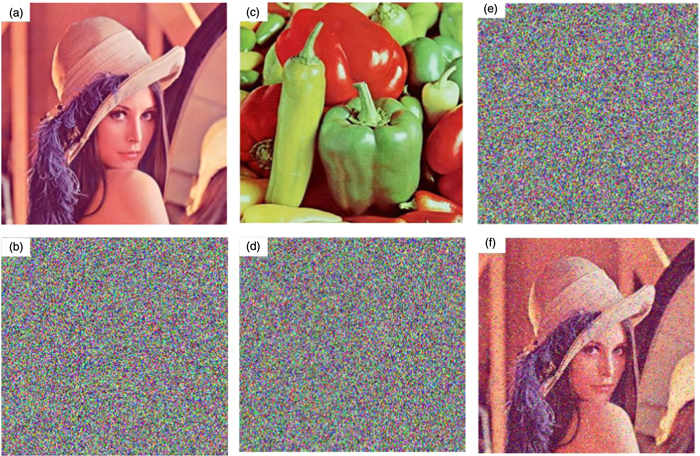

Speech recognition is a fundamental yet complex problem for modern AI systems. Speech is the most common means of communication among humans; therefore, automated speech recognition systems find numerous applications. Verbal communication is a trivial task in everyday life, but it becomes a complex problem when ported to machines. The complexity of this task originates from the extremely rich vocabulary of a single language (hundreds of thousands of words), variations in the pronunciation of the same words across speakers and dialects, and variations in timbre, rhythm of speech and personal characteristics. The speech of children and non-native speakers adds further complications to this already very complicated task.135) Automated speech recognition thus remains a challenging problem in the field of artificial intelligence.136) Software solutions include Fourier and wavelet analysis followed by artificial-neural-network-based classification of spectral features.137) The most promising approach, however, is neuro-inspired speech recognition involving reservoir computing.138–140) Although many advances have been reported on this front with software simulations, these solutions cannot easily be scaled and ported to the hardware of an intelligent machine. Therefore, hardware solutions based on memristors and other nonlinear elements are considered potential candidates for embedded analysis and classification of acoustic-frequency signals (vide supra). The addition of oscillatory characteristics (or other dynamic features) should increase the performance of computing systems based on small networks.141,142)
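The conventional software front end mentioned above can be sketched as a short-time Fourier transform. The signal is cut into overlapping frames, each frame is multiplied by a Hann window, and the magnitude of its discrete Fourier transform becomes one feature vector for a subsequent classifier, which is not shown. Frame length, hop size, sampling rate and the 440 Hz test tone are illustrative assumptions; wavelet analysis would replace the FFT step with a multiresolution decomposition.

```python
import numpy as np

def spectral_features(signal, fs, frame_len=256, hop=128):
    """Magnitude short-time Fourier transform: one Hann-windowed spectrum per frame."""
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[k * hop:k * hop + frame_len] * window
                       for k in range(n_frames)])
    spectra = np.abs(np.fft.rfft(frames, axis=1))   # (n_frames, frame_len // 2 + 1)
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / fs)  # bin centre frequencies in Hz
    return freqs, spectra

fs = 8000
t = np.arange(fs) / fs                               # 1 s test tone (assumed input)
freqs, spectra = spectral_features(np.sin(2 * np.pi * 440 * t), fs)
peak_hz = freqs[np.argmax(spectra.mean(axis=0))]
print(f"{spectra.shape[0]} frames x {spectra.shape[1]} bins, "
      f"dominant component near {peak_hz:.1f} Hz")
```

The frequency resolution here is fs/frame_len = 31.25 Hz, so the 440 Hz tone is reported at the nearest bin; longer frames trade time resolution for finer frequency resolution.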