Explainable Artificial Intelligence for Developing Smart Cities Solutions

Abstract

1. Introduction

2. Literature Review

- (1) For a company or a service provider: to understand and explain how their system works, to identify the root cause of problems, and to see whether the system is working well or not, and why.

- (2) For end-users: human users need to trust AI systems to meet their needs, but what should be the basis for this trust? In addition to giving end-users knowledge of the system’s prediction accuracy and other aspects of its performance, providing an effective explanation of the AI system’s behaviour, using semantic rules derived from domain experts, can enhance their trust in the system.

- (3) For society: it is important to consider the possible impact of AI in terms of increased inequality (bias) and unethical behaviours. We believe it is not acceptable to deploy an AI system that could have a negative impact on society.

3. Flood Monitoring in Smart Cities

3.1. Major Objects and Their Significance

- i. Leaves: Leaves were raised as one of the most prevalent causes of blockages. Once leaves enter the drainage, they become less of a problem, as they can pass through the sewage system relatively easily. The real problem arises when leaves gather on top of a drainage system and, unable to pass through, begin to form dams, as shown in Figure 2.

- ii. Silt (Mud): Silt is solid, dust-like sediment that water, ice and wind transport and deposit. Silt is made up of rock and mineral particles that are larger than clay but smaller than sand, as shown in Figure 3. During the discussion, silt was identified as a major cause of drainage and gully blockage if drains are not cleaned regularly enough and the silt is allowed to build up. Furthermore, if silt accumulates over a long period, it can become fertile enough for vegetation to grow relatively easily, which can cause further problems with the drainage system.

- iii. Plastic and Bottles: Plastic and bottles were identified as another major risk to the drainage system because these objects can cover the drainage and restrict the water flow into the sewage system, as shown in Figure 4. Further discussions revealed that bottles by themselves are not an issue, but in combination with other litter or debris they raise the risk of blockage. As discussed with experts, bottles are typically pushed up against the entryways to the drainage and gully, leaving the access way either blocked or restricted.

- iv. Water: Finally, water was identified as one of the four major objects to be monitored when deciding on drainage and gully blockage. The presence of water along with other objects and their coverage, as shown in Figure 5, is the key factor in deciding the blockage level.
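The way the four objects and their coverage combine into a blockage level can be illustrated with a minimal sketch. The coverage scale, the rule thresholds, the class labels and the names `Coverage` and `blockage_level` are all assumptions made for illustration; they are not the rules elicited from the domain experts.

```python
from enum import IntEnum
from typing import Dict, Tuple


class Coverage(IntEnum):
    """Hypothetical ordinal coverage scale for one object type."""
    NONE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# The four major objects named in the text.
OBJECTS = ("leaves", "silt", "plastic_and_bottles", "water")


def blockage_level(coverage: Dict[str, Coverage]) -> Tuple[str, str]:
    """Return (blockage label, explanation) from per-object coverage levels.

    The thresholds below are illustrative assumptions only.
    """
    # Objects missing from the input are treated as not present.
    levels = {name: coverage.get(name, Coverage.NONE) for name in OBJECTS}
    water = levels["water"]
    debris = {name: lvl for name, lvl in levels.items() if name != "water"}

    # The debris type with the highest coverage drives the decision.
    worst_name, worst = max(debris.items(), key=lambda item: item[1])

    if worst == Coverage.HIGH and water >= Coverage.MEDIUM:
        label = "fully blocked"
    elif worst >= Coverage.MEDIUM or (worst >= Coverage.LOW and water >= Coverage.MEDIUM):
        label = "partially blocked"
    else:
        label = "no blockage"

    # The explanation names the evidence that triggered the rule.
    explanation = (
        f"{worst_name} coverage is {worst.name}, "
        f"water coverage is {water.name}"
    )
    return label, explanation


if __name__ == "__main__":
    print(blockage_level({"leaves": Coverage.HIGH, "water": Coverage.MEDIUM}))
```

Returning the explanation alongside the label reflects the idea that a classification should be traceable to the objects and coverage levels that produced it.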

3.2. Convolutional Neural Network for Object Coverage Detection

3.3. Semantics for Flood Monitoring

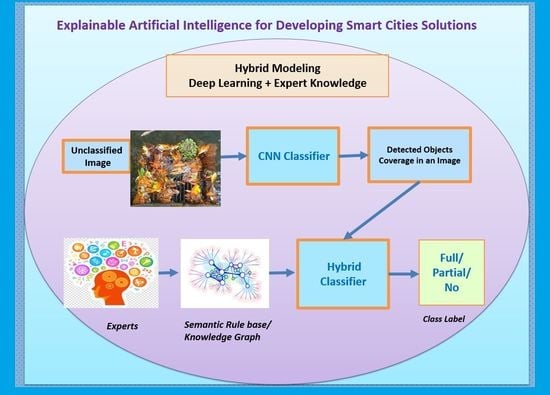

4. Hybrid Image Classification Models with Object Coverage Detectors and Semantic Rules

4.1. Object Coverage Detection

4.2. Semantic Representation and Rule Base Formulation

4.3. Inferencing and Image Classification

5. Methodology

5.1. Data Construction

5.2. Image Augmentation

5.3. Image Annotation and Coverage Level

5.4. Coverage Detector Implementation

5.4.1. Convolutional Neural Network

5.4.2. Model Regularisation and Parameter Selection

5.5. Semantic Representation

5.6. Rule-Based Formulation

6. Experimental Design and Result Analysis

6.1. Object Coverage Detection Training

6.2. Analysis of Semantic Rules Implementation

6.3. Hybrid Class Performance Analysis

6.3.1. Accuracy of the Object Coverage Detector

6.3.2. Accuracy of the Hybrid Image Classifier

7. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Parameter | Value (Range) |
|---|---|
| Rotation Range | 5–20 |
| Width Shift Range | 0.1–0.25 |
| Height Shift Range | 0.1–0.25 |
| Shear Range | 0.05–0.2 |
| Zoom Range | 0.05–0.15 |
| Horizontal Flip | True |
| Fill Mode | Nearest |
| Data Format | Channel Last |
| Brightness Range | 0.05–1.5 |
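The augmentation settings in the table above can be written down as a small configuration sketch. This is only an illustration: the key names mirror the arguments of the Keras `ImageDataGenerator` class, which is an assumption about the tooling, and drawing one value uniformly from each range is likewise an assumed way of using the ranges. `sample_augmentation_config` is a hypothetical helper.

```python
import random

# Tunable ranges copied from the table. The key names follow Keras
# ImageDataGenerator arguments, which is an assumption about the tooling.
AUGMENTATION_RANGES = {
    "rotation_range": (5, 20),
    "width_shift_range": (0.1, 0.25),
    "height_shift_range": (0.1, 0.25),
    "shear_range": (0.05, 0.2),
    "zoom_range": (0.05, 0.15),
}

# Settings that the table lists as single values. The brightness entry is
# kept as a (min, max) pair rather than sampled.
FIXED_SETTINGS = {
    "horizontal_flip": True,
    "fill_mode": "nearest",
    "data_format": "channels_last",
    "brightness_range": (0.05, 1.5),
}


def sample_augmentation_config(rng):
    """Draw one value per tunable range and merge in the fixed settings."""
    config = {
        name: rng.uniform(low, high)
        for name, (low, high) in AUGMENTATION_RANGES.items()
    }
    config.update(FIXED_SETTINGS)
    return config


if __name__ == "__main__":
    # A seeded generator keeps the sampled configuration reproducible.
    print(sample_augmentation_config(random.Random(0)))
```

Keeping the ranges in one dictionary makes it straightforward to try several sampled configurations and compare the resulting detectors.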

| Coverage Level | Coverage Percentage |
|---|---|
| Zero | Coverage Percentage < 5% |
| One | 5% <= Coverage Percentage < 20% |
| Two | 20% <= Coverage Percentage < 50% |
| Three | Coverage Percentage >= 50% |
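The thresholds in the table above amount to a simple step function from coverage percentage to coverage level. A minimal sketch, where the function name `coverage_level` and the input validation are illustrative assumptions:

```python
def coverage_level(coverage_pct):
    """Map a coverage percentage (0-100) to the level names in the table."""
    if not 0 <= coverage_pct <= 100:
        raise ValueError("coverage percentage must be between 0 and 100")
    if coverage_pct < 5:
        return "Zero"
    if coverage_pct < 20:
        return "One"
    if coverage_pct < 50:
        return "Two"
    return "Three"


if __name__ == "__main__":
    for pct in (0, 14.19, 40.25, 50):
        print(pct, coverage_level(pct))
```

Each boundary value belongs to the higher level, so a coverage of exactly 5%, 20% or 50% maps to One, Two or Three respectively.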

| Figure | Leaf Coverage (%) | Leaf Level | Plastic & Bottle Coverage (%) | Plastic & Bottle Level | Mud Coverage (%) | Mud Level | Water Coverage (%) | Water Level |
|---|---|---|---|---|---|---|---|---|
| 9.a | 40.25 | Two | 0 | Zero | 0 | Zero | 43.92 | Two |
| 9.b | 14.19 | One | 0 | Zero | 15.02 | One | 42.95 | Two |
| 9.c | 22.54 | Two | 5.25 | One | 0 | Zero | 8.40 | One |

| Object Detector | Training Loss | Training Accuracy | Validation Loss | Validation Accuracy |
|---|---|---|---|---|
| Leaves | 0.2081 | 0.9633 | 1.4371 | 0.8421 |
| Mud | 0.0335 | 0.9880 | 1.1784 | 0.7717 |
| Plastic & Bottle | 0.1250 | 0.9626 | 1.5632 | 0.7976 |
| Water | 0.1208 | 0.9983 | 0.9052 | 0.8955 |

| Object | Level | Zero | One | Two | Three |
|---|---|---|---|---|---|
| Leaves | Zero | 75% | 25% | 0% | 0% |
| Leaves | One | 20% | 80% | 0% | 0% |
| Leaves | Two | 0% | 0% | 60% | 40% |
| Leaves | Three | 0% | 0% | 33.14% | 66.34% |
| Plastic and Bottles | Zero | 71.42% | 14.28% | 7.15% | 7.15% |
| Plastic and Bottles | One | 0% | 50% | 0% | 50% |
| Plastic and Bottles | Two | 0% | 20% | 80% | 0% |
| Plastic and Bottles | Three | 0% | 0% | 33.33% | 66.67% |
| Mud | Zero | 92.3% | 7.7% | 0% | 0% |
| Mud | One | 33.33% | 33.33% | 0% | 33.34% |
| Mud | Two | 50% | 0% | 50% | 0% |
| Mud | Three | 25% | 25% | 0% | 50% |
| Water | Zero | 75% | 0% | 25% | 0% |
| Water | One | 50% | 50% | 0% | 0% |
| Water | Two | 33.33% | 0% | 50% | 16.67% |
| Water | Three | 0% | 0% | 33.33% | 66.67% |
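Each row of the table above sums to roughly 100%, which is what a row-normalized confusion matrix looks like. The sketch below shows how such percentages could be computed from paired level labels. It assumes that rows are reference levels and columns are predicted levels, which the table itself does not state; the function name and the sample labels are hypothetical and are not data from the paper.

```python
from collections import Counter

LEVELS = ["Zero", "One", "Two", "Three"]


def row_normalized_confusion(reference, predicted):
    """Return {reference_level: {predicted_level: percent}}.

    Every non-empty row sums to 100; a level with no reference
    samples yields a row of zeros.
    """
    counts = Counter(zip(reference, predicted))
    matrix = {}
    for ref in LEVELS:
        row_total = sum(counts[(ref, pred)] for pred in LEVELS)
        matrix[ref] = {
            pred: (100.0 * counts[(ref, pred)] / row_total if row_total else 0.0)
            for pred in LEVELS
        }
    return matrix


if __name__ == "__main__":
    # Hypothetical labels for illustration only.
    reference = ["Zero", "Zero", "Zero", "Zero", "One", "One"]
    predicted = ["Zero", "Zero", "Zero", "One", "One", "One"]
    for level, row in row_normalized_confusion(reference, predicted).items():
        print(level, row)
```

Under that reading, the diagonal entries give the share of images at each level that were assigned the same level, and the off-diagonal entries show which neighbouring levels absorb the errors.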

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Thakker, D.; Mishra, B.K.; Abdullatif, A.; Mazumdar, S.; Simpson, S. Explainable Artificial Intelligence for Developing Smart Cities Solutions. Smart Cities 2020, 3, 1353-1382. https://doi.org/10.3390/smartcities3040065
