1. Introduction

Polylactide (PLA) is a bioresorbable polymer derived from plant sources, which breaks down in vivo to H₂O and CO₂ over time. PLA biodegrades because of its hydrolysable backbone, which is subject to chemical hydrolysis in the presence of water at elevated temperature [1]. The biodegradability of PLA has made it an attractive material for packaging, textile, and medical applications. In medical applications, PLA has been used in temporary implantable devices such as bone fixation or local drug-delivery devices. PLA is one of the strongest bioresorbable polymers approved by the FDA (Food and Drug Administration), and so is of particular interest in applications where initial mechanical support is needed. The implant then degrades safely over time as the host tissue heals, possibly also releasing a drug such as an antibiotic or anti-inflammatory. However, the industry faces issues in processing these polymers and in assuring their quality once processed. The high temperatures and pressures required to form the product also tend to degrade the material, which can lead to quality issues such as insufficient mechanical properties [2]. Identifying and controlling suitable manufacturing process conditions is extremely challenging, and suitable conditions can vary from batch to batch of raw material [3]. For applications requiring mechanical strength, the yield stress of the product is viewed as a critical quality characteristic. The laboratory characterisation of yield stress is a protracted, destructive test, and deficiencies in strength typically cannot be detected until several hours after production, resulting in high scrap rates. Medical- or pharmaceutical-grade PLA can cost in the region of thousands of euros per kilogram, so this slow feedback on product quality results in an expensive process with limited production rates and risks to the reliability of supply.

Pressure and temperature sensors are routinely applied for continuous real-time monitoring of extrusion processes, but they provide limited information on physicochemical changes, such as polymer degradation, that may occur during the manufacturing process. Over the last decade, many researchers have exploited the development of robust fibre-optic probes to apply vibrational spectroscopy techniques such as near-infrared (NIR), Raman, and UV–Vis spectroscopy, which are sensitive to changes in the molecular bonds present in polymeric materials, while the materials are being extruded [4,5,6,7]. NIR is particularly attractive, as it is available at a lower cost than Raman but has greater sensitivity and specificity to molecular changes than UV–Vis. NIR spectra, however, have broad absorption bands dominated by overlapping overtone and combination bands, and hence can be difficult to interpret. Usually, multivariate statistical approaches are applied to the spectral data in so-called ‘soft sensors’ to predict material properties that are otherwise measured offline. Due to its cost relative to conventional process instrumentation, NIR spectroscopy is most commonly applied in high-value polymer extrusion for monitoring blends/mixtures. For example, its use has been widely reported for quantifying the drug content of pharmaceutical products under fixed processing conditions, using the classical chemometric regression technique of partial least squares (PLS) [8].

NIR spectral data contain a very high number of variables (absorbance of light at hundreds of wavelengths); however, the absorbances at adjacent wavelengths are highly correlated, so many of these variables are redundant. Hence, some form of feature reduction is required for regression. The classical approach is dimension reduction via a subset of features derived from a linear transformation of the original variables, as in principal component regression (PCR) or PLS. PLS dominates as a soft-sensor method for spectroscopy data in polymer extrusion processes, with very little exploration of other methods in the field to date [8,9]. PLS has achieved a level of industrial acceptance due to its tried-and-tested effectiveness in chemometric applications, its relative simplicity, and its suitability for relatively small data sets, as is usually the case in process development for pharmaceutical and medical device products. However, PLS does not perform well where relationships in the data are nonlinear [10]. Further, PLS may in some cases reduce the interpretability of the model, as it obscures which regions of the spectrum are responsible for most of the variation. Research studies in other fields have examined the use of PLS together with direct selection of a subset of the original wavelength variables (e.g., GA-PLS, bi-PLS, SiPLS), which can result in a simpler and more interpretable model [11]. However, these methods can be sensitive to the selection of training and validation data and may perform poorly on new samples, especially where the training data set is small [12]. An alternative is to reduce the dimensionality of the data set by summarising intervals of the spectra with various statistics [12]; however, this adds significant complexity in processing the data and in tuning additional hyperparameters, such as the number of segments, which segments to retain in the regression model, and which statistics/features to use.
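The following sketch illustrates why redundancy among adjacent wavelengths makes linear dimension reduction effective. It builds synthetic "spectra" from three broad, overlapping bands. These are illustrative assumptions, not NIR data from this study. A singular value decomposition then shows how the variance across 300 wavelength variables is distributed over the principal components.

```python
import numpy as np

def explained_variance_ratio(X):
    """Fraction of total variance carried by each principal component of X."""
    Xc = X - X.mean(axis=0)                   # mean-centre each wavelength
    s = np.linalg.svd(Xc, compute_uv=False)   # singular values, in descending order
    return s**2 / np.sum(s**2)

rng = np.random.default_rng(0)
n_samples, n_wavelengths = 40, 300
wl = np.linspace(0.0, 1.0, n_wavelengths)
# Three broad Gaussian bands (synthetic): neighbouring wavelengths are strongly correlated
bands = np.stack([np.exp(-((wl - c) / 0.15) ** 2) for c in (0.3, 0.5, 0.7)])
amounts = rng.uniform(0.0, 1.0, size=(n_samples, 3))
X = amounts @ bands + 0.01 * rng.standard_normal((n_samples, n_wavelengths))

ratio = explained_variance_ratio(X)
print(f"first 3 PCs carry {ratio[:3].sum():.4f} of the variance in {n_wavelengths} variables")
```

PCR would then regress the response on these few component scores. PLS instead chooses its components so that they also covary with the response.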

Nonlinear soft sensors using, e.g., artificial neural networks (ANNs) or kernel-based methods such as support vector regression (SVR) or Gaussian process regression (GPR) have commonly been applied in other fields and can outperform linear approaches [13,14]. With costly raw materials, as in this application, training data are expensive to generate, and hence methods that can be trained with relatively small amounts of data are required. Neural-network-based approaches typically require a large amount of training data, whereas SVR, the most commonly explored nonlinear alternative to PLS for spectral data, can perform well with a small number of samples [15,16]. Random forest (RF) regression has more recently gained interest for soft sensing with spectral data in industrial processes and appears promising for application with small amounts of data [17,18]. Zhang et al. [17] found that RF regression outperformed SVR and PLS in quantifying multiple elements in 14 steel samples using laser-induced breakdown spectroscopy (LIBS). Kneale and Brown [18] compared five soft-sensor methodologies involving different implementations of PLS and RF regression on small data sets. The RF method, together with a hybrid RF–PLS method (in which PLS predictions are included as inputs to the RF regression model), offered the best performance on all data sets with non-monotonic response variables, and the hybrid model yielded the best one-step-ahead prediction on all data sets.

There has recently been increasing interest in monitoring or predicting parameters such as polymer degradation and mechanical properties in the melt processing of bioresorbable and medical polymers. McKinley et al. [19] used an ANN with an evolutionary algorithm to explore the effect of extrusion conditions on the mechanical properties of a drug-delivery vaginal film; however, the aim was to develop insight for process optimisation rather than for quality monitoring. Montano-Herrera et al. [6] used NIR spectroscopy with PLS to predict the degree of thermal degradation induced during the extrusion of four different grades of the biodegradable polymer polyhydroxyalkanoate (PHA). Muroga et al. [20] used NIR hyperspectral imaging on compression-moulded PLA samples to predict mechanical properties under different melting and annealing times, and achieved reasonable predictions of the flexural properties and crystallinity of the samples using PLS regression. In that case, the NIR imaging was applied to room-temperature samples after they had been processed. In our previous work [21], we explored a low-cost method that used only in-process pressure and temperature data to predict the yield stress of extruded PLA sheet with ensemble decision tree methods (bagging, random forest). If such soft-sensor approaches are to be used for quality assurance, an appropriately rigorous performance evaluation is required to satisfy regulatory requirements. FDA guidelines for the validation of qualitative analyses based on NIR models state that the evaluation of such methods should include precision, accuracy, linearity, and robustness on an independent (‘external’) test data set [22]. None of the methods proposed so far have been evaluated on an independent test set.

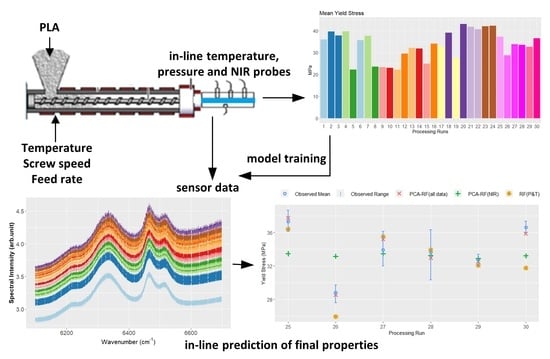

Hence, while both NIR and conventional pressure and temperature sensors appear to yield useful information on the subsequent mechanical properties of melt-processed PLA, the validity of using such sensor data for in-line monitoring of product quality in an industrial process has not yet been explored. It should be noted that in the previous NIR-based studies, process conditions were constant or the NIR imaging was performed on samples post-processing. However, NIR is sensitive to changes in process conditions, including melt temperature, screw speed, and polymer rheology [23], and this may mask the effect of chemical changes in the material. In this paper, we explore for the first time the application of both in-process NIR and pressure/temperature data to predict the mechanical properties of an extruded product, and we investigate whether such a sensing method can satisfy the performance demands of quality assurance in the medical/pharmaceutical industries. Because spectral data are involved, the modelling complexity increases significantly relative to our previous low-cost method (the number of available input features increases from eight to several hundred). This is a significant challenge when it is too expensive and time-consuming to generate a large data set for model training (the so-called ‘curse of dimensionality’), and it risks producing a model with poor generalisation performance on independent data. Hence, in this work we compare the established regression algorithms for spectral data (PLS, PCR, ridge regression) with two promising nonlinear methods (SVR and random forest) and evaluate their performance against FDA guidelines. This evaluation includes an independent external test set with perturbations in the processing conditions relative to the conditions used in model training. A multi-rate data set comprising in-process NIR spectra together with data from pressure and temperature probes was captured from a small number of process trials covering a wide range of processing conditions, following a design of experiments methodology. For the nonlinear methods, we explore a preceding dimension reduction step via principal component (PC) analysis, in which only the number of PCs to be included must be tuned. Dimension reduction via principal components is preferred here over methods that select a subset of the original variables, which, as discussed, are more interpretable but too sensitive to the training/validation split in small data sets [12]. We show that it is possible to meet regulatory demands for accuracy and robustness, but only if NIR and pressure/temperature data are combined. Only the nonlinear methods preceded by a PC step satisfy the quality assurance requirements. The results significantly outperform those of earlier studies that used NIR imaging of post-processed PLA samples alone to estimate mechanical properties [20]. A further advantage is that the predictions are available continuously during processing, allowing corrective action to be taken if the product is not meeting specification.

4. Model Training

The data split for training and testing the models was prepared as follows. The data from the 24 initial experiments were split in a ratio of 65:35. The 65% set was used as the training data for each of the models. The 35% set, referred to as the ‘internal validation’ set (following the language of the FDA guidance for NIR-based soft sensors [22]), was used to tune the model hyperparameters. The data from the six processing runs in the second set of experiments were withheld as an independent ‘external’ test set. Model training involved minimising the root mean squared error (RMSE) over all upsampled observations (as described in Section 2) in the training set. The model hyperparameters were then tuned according to the lowest RMSE on the internal validation set.
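The split described above can be sketched as follows. Two points are assumptions rather than details given in the text. First, the 65:35 split is taken here to be made over whole process runs rather than individual observations, so that observations from one run never appear in both sets. Second, the helper name `split_runs` is hypothetical. Runs 25–30 are kept out of both fitting and tuning.

```python
import random

def split_runs(run_ids, train_frac=0.65, seed=0):
    """Hypothetical helper: shuffle run IDs, then split into training / internal validation."""
    ids = list(run_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(train_frac * len(ids))
    return sorted(ids[:n_train]), sorted(ids[n_train:])

calibration_runs = range(1, 25)        # the 24 initial experiments
external_runs = list(range(25, 31))    # second set of experiments: held out entirely
train_runs, val_runs = split_runs(calibration_runs)
print(len(train_runs), len(val_runs))  # 16 training runs, 8 internal validation runs
```

Each high-rate observation would then be assigned to a set by looking up its run ID.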

For PLS and PCR, the number of components included was increased in increments of 1 until a clear minimum in the RMSE on the internal validation set was identified. PLS performed optimally on the internal validation set with 30 components, while the optimal PCR model had 290 components (from the original set of 612 features). For ridge regression, the regularisation parameter was varied between 0 and 3 in increments of 0.01, and the value giving the lowest RMSE on the internal validation set was selected.

Multiple SVR models were trained using either the original features or principal components. An SVR model using the original features was trained with the default hyperparameter values for C, ε, and the kernel parameter γ (γ = 1/n, where n is the number of training features). This model performed reasonably on the internal validation data but did not generalise well to the external test set, so further hyperparameter tuning with the original features was not pursued. Several SVR models were then trained on the principal components. A bottom-up approach was taken to determine the optimal number of PCs: beginning with the first five PCs, a model was trained, and on each iteration the next five PCs were added. An SVR model using the first 25 PCs was found to be best. The hyperparameters ε and C were then tuned, while γ remained at its default value of 1/n, i.e., 0.04 for 25 PCs.
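The bottom-up PC scan for SVR can be sketched with scikit-learn. The sketch makes several assumptions. scikit-learn's default `gamma` is `'scale'` rather than 1/n, so γ is set explicitly to 1/n here. C = 1.0 and ε = 0.1 are scikit-learn's defaults and may differ from those of the software used in the study. The PC scores are not standardised. The data are synthetic.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.svm import SVR

def pca_svr_scan(X_tr, y_tr, X_val, y_val, step=5, max_pcs=30, C=1.0, epsilon=0.1):
    """Add `step` PCs at a time; gamma is held at 1/n for n PCs."""
    pca = PCA(n_components=max_pcs).fit(X_tr)   # projection fitted on training data only
    T_tr, T_val = pca.transform(X_tr), pca.transform(X_val)
    rmse = {}
    for n in range(step, max_pcs + 1, step):
        model = SVR(kernel="rbf", C=C, epsilon=epsilon, gamma=1.0 / n)
        model.fit(T_tr[:, :n], y_tr)
        pred = model.predict(T_val[:, :n])
        rmse[n] = float(np.sqrt(np.mean((pred - y_val) ** 2)))
    return min(rmse, key=rmse.get), rmse

rng = np.random.default_rng(2)
X = rng.standard_normal((90, 100))
y = np.tanh(X[:, :3].sum(axis=1)) + 0.05 * rng.standard_normal(90)
best_n, rmse = pca_svr_scan(X[:60], y[:60], X[60:], y[60:])
print("best number of PCs:", best_n)
```

Once the number of PCs is fixed, ε and C would be tuned in the same way, while γ stays at 1/n.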

Random forest models were trained using all 612 original features, all PCs, or subsets of PCs. For each of the models using either the original features or all PCs, an m value (the number of features randomly sampled as split candidates at each node) of 204 was used, i.e., one third of the number of features, which is the default value. To reduce the dimensionality, model performance with a subset of PCs was investigated, similarly to the approach used in the SVR model training. The number of PCs to include and the m value were both tuned in a two-step process. First, an RF model was trained for each of several numbers n of the major PCs: n = 5, 10, 15, 20, 30, and 50. One hundred trees were used in each model, with m set by an accepted heuristic [35] (rounded up to an integer where necessary). The models with 15, 20, and 30 PCs had similarly low RMSE values on the internal validation set; however, the model with 20 PCs performed best on the external test set. Hence, PCs 1 to 20 were chosen as the subset of features for the final model. In the second step, using the subset of 20 major PCs, models with 100 trees were trained for every value of the tuning parameter m from 1 to 20. The number of trees was fixed at 100, as no performance gain was observed above that threshold. An m value of 5 resulted in the lowest RMSE on the internal validation set, and this model was selected for performance evaluation.
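The two-step PCA–RF tuning can be sketched with scikit-learn, where m corresponds to `max_features`. This is a sketch under stated assumptions. The step-1 heuristic is taken to be m = ⌈n/3⌉, by analogy with the one-third default mentioned above; the heuristic actually used in the study is cited but not given. The demo passes a shortened candidate list to keep the run time short. The data are synthetic. Also unlike the study, which used the external test set to choose between near-equal n values, this sketch selects n on the internal validation set only.

```python
import math
import numpy as np
from sklearn.decomposition import PCA
from sklearn.ensemble import RandomForestRegressor

def rmse(pred, obs):
    return float(np.sqrt(np.mean((np.asarray(pred) - np.asarray(obs)) ** 2)))

def tune_pca_rf(X_tr, y_tr, X_val, y_val, n_candidates=(5, 10, 15, 20, 30, 50), seed=0):
    """Step 1 picks the number of major PCs; step 2 picks m (max_features)."""
    pca = PCA(n_components=max(n_candidates)).fit(X_tr)   # fitted on training data only
    T_tr, T_val = pca.transform(X_tr), pca.transform(X_val)

    def score(n, m):
        rf = RandomForestRegressor(n_estimators=100, max_features=m, random_state=seed)
        rf.fit(T_tr[:, :n], y_tr)
        return rmse(rf.predict(T_val[:, :n]), y_val)

    # Step 1: one model per candidate n, with m from the assumed heuristic ceil(n / 3)
    step1 = {n: score(n, math.ceil(n / 3)) for n in n_candidates}
    best_n = min(step1, key=step1.get)
    # Step 2: with n fixed, try every m from 1 to n
    step2 = {m: score(best_n, m) for m in range(1, best_n + 1)}
    best_m = min(step2, key=step2.get)
    return best_n, best_m, step1, step2

rng = np.random.default_rng(4)
X = rng.standard_normal((120, 150))
y = np.sin(X[:, 0]) + X[:, 1] * X[:, 2] + 0.1 * rng.standard_normal(120)
best_n, best_m, step1, step2 = tune_pca_rf(X[:80], y[:80], X[80:], y[80:],
                                           n_candidates=(5, 10, 20))
print("n =", best_n, "m =", best_m)
```

Tuning in two steps keeps the search small: one fit per candidate n, then at most n fits for m. A full grid over n and m would need many more fits.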

It is worth noting that we also investigated whether there was an advantage to using an RF model to select a subset from all the PCs (not just the major ones), as an alternative feature selection step. Here, an RF model was trained using all 612 PCs, and an internal feature importance score was generated by the model. The model ranked features by permuting the last feature in each subset used to split tree nodes and measuring the effect on the model error during the training phase; the features with the greatest effect on the model error were ranked as most important. RF models were then generated using the top 30 and the top 50 features from this ranking, with the heuristic m value applied. Neither of these models outperformed the PCA–RF soft sensor using only the first 20 major PCs. We therefore saw no advantage in this approach, which was also significantly more time-consuming than selecting from the major PCs.
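A related importance-based selection can be sketched with scikit-learn. This sketch swaps in a different technique from the internal, training-phase importance described above. It uses scikit-learn's `permutation_importance`, evaluated on held-out data. The helper name `top_k_features` is hypothetical, and the synthetic columns stand in for PC scores.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor
from sklearn.inspection import permutation_importance

def top_k_features(X_fit, y_fit, X_eval, y_eval, k, seed=0):
    """Rank columns by RF permutation importance on held-out data; return top-k indices."""
    rf = RandomForestRegressor(n_estimators=100, random_state=seed).fit(X_fit, y_fit)
    result = permutation_importance(rf, X_eval, y_eval, n_repeats=5, random_state=seed)
    return np.argsort(result.importances_mean)[::-1][:k]

rng = np.random.default_rng(3)
T = rng.standard_normal((200, 10))   # synthetic stand-in for PC scores
y = 3.0 * T[:, 0] + 3.0 * T[:, 3] + 0.1 * rng.standard_normal(200)
top = top_k_features(T[:150], y[:150], T[150:], y[150:], k=2)
print("selected columns:", sorted(top.tolist()))
```

A new RF would then be refitted on the selected columns only. The text reports that, in this application, the selected subsets did not beat simply taking the first 20 major PCs.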

5. Performance Evaluation

FDA guidelines endorse the use of predictive models to realise the potential of in-line analytical sensors for quality assurance, provided the model reliability is adequate for the purpose [22]. Assessment of the reliability should consider: accuracy (predictive errors on calibration and independent test data, as well as biases in prediction); precision (standard deviation of predictions); linearity (of predicted vs. measured values over the full range of the analytical procedure); and robustness (e.g., to variations in environmental conditions, operating conditions, raw materials, etc.). Here, we treat the initial 24 experimental process runs, which cover the full process window of the material, as the calibration data set and assess the calibration performance on the internal validation data. Experimental runs 25–30 were conducted months later, allowing for variations in the environmental conditions and raw material. These runs also introduced variations in the temperature settings relative to the calibration runs. These six later runs were used as an external test set to assess robustness. Note that similar studies on the validation of soft sensors for monitoring drug content using in-process NIR, Raman, or UV–Vis spectra typically have internal validation sets of fewer than ten runs and external test sets of three to five runs (e.g., [5,36]).

Model accuracy was evaluated based on the mean predicted value of the yield stress for each process run, as given by Equation (6). That is, while a yield stress prediction was made for every observation in the process data (at a frequency of 5–10 Hz), the observed mean yield stress in the product was representative of the material processed over the entire four-minute run at each process condition. Averaging the predictions over the equivalent period therefore evaluates the model at a rate appropriate to the mechanical property measurements. In the equation below, $\hat{\bar{\sigma}}_{y,i}$ is the mean predicted value of the yield stress for process run $i$, $\bar{\sigma}_{y,i}$ is the mean value of the yield stress observed for the corresponding process run, and $N$ is the number of process runs:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(\hat{\bar{\sigma}}_{y,i} - \bar{\sigma}_{y,i}\right)^{2}} \tag{6}$$
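Equation (6) first averages the high-rate predictions within each run and then takes the RMSE across runs. A minimal sketch follows. The helper name and the run IDs and values in the example are hypothetical.

```python
import math
from collections import defaultdict

def run_mean_rmse(run_ids, predictions, observed_means):
    """RMSE between per-run mean predictions and per-run mean observed yield stress.

    run_ids and predictions are aligned per observation; observed_means maps each
    run ID to its measured mean yield stress. Every run in observed_means must have
    at least one prediction.
    """
    totals, counts = defaultdict(float), defaultdict(int)
    for run, pred in zip(run_ids, predictions):
        totals[run] += pred
        counts[run] += 1
    sq_errors = [(totals[r] / counts[r] - obs) ** 2 for r, obs in observed_means.items()]
    return math.sqrt(sum(sq_errors) / len(sq_errors))   # N = number of process runs

# Hypothetical example: run "A" averages 52 (observed 50), run "B" averages 61 (observed 64)
runs = ["A", "A", "A", "B", "B"]
preds = [50.0, 52.0, 54.0, 60.0, 62.0]
print(run_mean_rmse(runs, preds, {"A": 50.0, "B": 64.0}))  # sqrt((2**2 + 3**2) / 2) ≈ 2.55
```

Each run contributes one squared error regardless of how many observations it contains. A run sampled at 10 Hz therefore does not outweigh one sampled at 5 Hz.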

Table 4 lists the RMSE values of all models for the internal validation set and the external test set. A normalised root mean squared error (NRMSE) was also calculated by dividing the RMSE by the range of the mean yield stress values in the data set, to show the magnitude of the errors relative to the range of variation in the true values. The NRMSE is illustrated for all models in Figure 2, together with the standard deviation (Sd) of the model errors and the relative bias of the predictions. Note that while high numbers of components gave the best performance for both PCR and PLS on the internal validation set, these models performed poorly on the external test set and were clearly overfitting to the data captured in the initial 24 experiments. In similar applications, performance on the external test set is used to select the number of components (e.g., [36]). Here, the best performance on the external test set was achieved with a PCR model with five PCs and a PLS model with four latent variables. This resulted in lower external test errors than internal validation errors, as is also seen in other studies using this approach [36]. In this case, however, the number of model components was tuned to the ‘external’ test set, which was therefore no longer truly independent. High errors on several of the process runs in the internal validation data were evident in the linearity plots for PCR and PLS (Figure 3c,d), giving poor confidence in the reliability of these models over the full range of processing conditions. The PCA–RF hybrid model outperformed all other models on both sets of unseen data for all error metrics.
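The run-level error summaries can be computed as follows. The NRMSE follows the definition above, here using the range of the observed run means passed in. The text does not define the other two metrics, so their definitions here are assumptions. Sd is taken as the sample standard deviation of the run-level errors. Relative bias is taken as the mean error divided by the mean observed value. The run means in the example are hypothetical.

```python
import math
import statistics

def error_summary(pred_means, obs_means):
    """Run-level error metrics; the Sd and relative-bias definitions are assumptions."""
    errors = [p - o for p, o in zip(pred_means, obs_means)]
    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return {
        "rmse": rmse,
        # NRMSE: RMSE divided by the range of the observed mean yield stress values
        "nrmse": rmse / (max(obs_means) - min(obs_means)),
        # Sd: sample standard deviation of the run-level errors
        "sd": statistics.stdev(errors),
        # relative bias: mean error as a fraction of the mean observed value
        "rel_bias": statistics.mean(errors) / statistics.mean(obs_means),
    }

summary = error_summary([52.0, 61.0, 70.0], [50.0, 64.0, 70.0])   # hypothetical run means
print(summary)
```

Reporting Sd and bias alongside NRMSE separates random scatter from a systematic offset. Two models with the same NRMSE can differ markedly in these.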

Model linearity was determined by plotting the predicted yield stress against the measured yield stress, and a coefficient of determination (R²) value and y-intercept were calculated for each model, as shown in Figure 3. Both the PCA–RF and the PCA–SVR models exhibited excellent linearity relative to the conventional linear methods.
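The linearity check can be sketched as an ordinary least-squares line of predicted against measured values. The text does not say exactly how R² was computed. Here it is assumed to be the R² of that fitted line, which equals the squared Pearson correlation. A perfect model gives a slope of 1, an intercept of 0, and an R² of 1. The values in the example are hypothetical.

```python
def linearity(measured, predicted):
    """Least-squares line predicted = intercept + slope * measured, and its R^2."""
    n = len(measured)
    mx = sum(measured) / n
    my = sum(predicted) / n
    sxx = sum((x - mx) ** 2 for x in measured)
    syy = sum((y - my) ** 2 for y in predicted)
    sxy = sum((x - mx) * (y - my) for x, y in zip(measured, predicted))
    slope = sxy / sxx
    intercept = my - slope * mx
    r2 = sxy ** 2 / (sxx * syy)   # squared Pearson correlation = R^2 of the fitted line
    return slope, intercept, r2

slope, intercept, r2 = linearity([40.0, 50.0, 60.0, 70.0], [41.0, 49.0, 61.0, 69.0])
print(f"slope={slope:.3f}, intercept={intercept:.3f}, R^2={r2:.4f}")
```

A high R² alone does not guarantee agreement: a model with a consistent offset or scale error can still fit a straight line well. The intercept (and slope) therefore complement R².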

As outlined in the Introduction, our previous work examined the use of low-cost pressure and temperature instrumentation for the prediction of yield stress in PLA extrusion [21]; however, the robustness of this method on an external test set was not previously analysed. The RMSE of the PCA–RF model proposed in [21] on the internal validation and external test sets is also reported in Table 4, and it is evident that this model has poor accuracy on the external test set. The performance of an RF model (without a prior PC step), as well as of PCR and PLS models, on the same data set is also given for comparison. The ability to predict product yield stress using NIR data alone was also investigated for the RF, PCA–RF, PCR, and PLS models (with hyperparameters tuned in the same fashion as for the full data set described above). All models based solely on NIR data performed poorly on both the internal validation and external test sets. As NIR is known to be sensitive to process conditions, particularly temperature, it is unsurprising that NIR data without information on the physical state of the melt are insufficient to predict the mechanical properties of the extruded product. The predictions of the best-performing model (in all cases a random forest model) on each subset of sensor data are shown in Figure 4, together with the range and mean of the observed yield stress values for the external test set.

6. Discussion and Conclusions

The use of in-process vibrational spectroscopy tools coupled with intelligent sensing concepts is gaining acceptance for product quality monitoring in the extrusion of medical and pharmaceutical products. However, validated applications are so far limited to quantifying mixture concentrations (e.g., drug content) under fixed processing conditions, almost always using the linear method of partial least squares. In this paper, we showed that combining in-process NIR with pressure and temperature sensors can allow reliable prediction of the mechanical properties of a bioresorbable polymer product under different processing conditions. Previous work indicated the potential of low-cost pressure and temperature instrumentation for soft sensing of mechanical properties in PLA extrusion. Here, we showed that model robustness is significantly improved by adding NIR data, which provide information on molecular bond activity in the polymer melt as well as on its physical state (temperature, viscosity, etc.). The results of the low-cost method may be satisfactory for process control in non-critical applications such as biodegradable packaging, but they are not sufficient to satisfy quality assurance demands in highly regulated industries. Due to the cost of the NIR equipment, the method proposed here is most suitable for high-value PLA applications with stringent quality demands, such as pharmaceutical and medical device products. Further, the approach described here achieves significantly better predictive accuracy than other studies that predicted the mechanical properties of PLA using PLS regression applied to hyperspectral NIR images of post-processed samples [20]. Imaging post-processed samples at room temperature removes the complication of the sensitivity of NIR spectra to changes in processing temperature, melt viscosity, etc. However, a further advantage of the approach presented here is that the mechanical property predictions are available in real time and continuously during processing, rather than only for post-processed samples of the product.

We showed that a nonlinear regression method is needed in this application to satisfy the reliability demands outlined in regulatory guidelines for quality analysis in the medical and pharmaceutical industries. Both support vector regression and random forest regression worked well when preceded by a principal component dimension reduction step. While the PCA–RF approach gave the best performance, further tuning of the PCA–SVR method is possible. However, an advantage of the random forest method is its simplicity of tuning. Only the number of PCs to be included and a single hyperparameter (m) require tuning, compared with SVR, where the number of PCs and three hyperparameters require tuning. It should also be noted that other nonlinear methods have been proposed in the literature and may perform well for such a task. In particular, kernel-PLS has been found to perform similarly to SVR and may be a more attractive alternative for the industry, which is familiar with PLS methods and their interpretability [10].

A significant constraint on model development in medical and pharmaceutical applications is the cost of generating training data using very high-value raw materials. Nevertheless, the nonlinear methods proposed here perform well against all industry-standard reliability metrics for analytical procedures, even with limited training data. Further reliability testing should be carried out if the method is to be applied outside the range of conditions analysed in the external test set used here. Even so, the results indicate that in-process measurements could give rapid feedback for process control purposes and reduce the amount of offline destructive testing required for quality assurance. A challenge to industrial acceptance in highly conservative, regulated industries such as medical devices is the ‘black box’ nature of such models. The interpretability of nonlinear approaches should be addressed in future work.