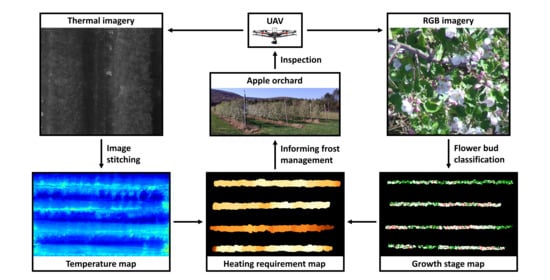

UAV-Based Heating Requirement Determination for Frost Management in Apple Orchard

Abstract

1. Introduction

2. Study Site, Equipment, and Data Collection

3. Methods

3.1. Thermal Camera Radiometric Calibration

3.2. Thermal Image Stitching

3.3. Thermal Mosaic Georeferencing

3.4. Flower Bud Growth Stage Classification

- For stages at or before tight cluster, enclose the whole bud in the bounding box. For stages at or after pink, exclude leaves from the bounding box (Figure 6).

- For stages at or before tight cluster, each bounding box should contain only one bud (Figure 6a–c). For the pink and petal fall stages, if the flowers belonging to the same bud are recognizable and relatively close to each other, label all of them with a single bounding box (Figure 6d,g); otherwise, label each flower with its own bounding box (Figure 6e,h). For the bloom stage, each bounding box should contain only one flower (Figure 6f).

- If a bud or flower is known to exist but its complete shape cannot be identified because of image blurriness or dense flower bud distribution, do not label it.

- If it is unclear whether a bud is a leaf bud or a flower bud, do not label it.
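The labeling rules above amount to a small decision procedure. The following is a minimal sketch of that procedure in Python, written only to make the rules explicit; the `Stage` enum, the `labeling_unit` function, and its boolean flags are hypothetical names, not part of the authors' annotation tooling.

```python
from enum import Enum
from typing import Optional


class Stage(Enum):
    TIP = "tip"
    HALF_INCH_GREEN = "half-inch green"
    TIGHT_CLUSTER = "tight cluster"
    PINK = "pink"
    BLOOM = "bloom"
    PETAL_FALL = "petal fall"


# Stages at or before tight cluster.
EARLY_STAGES = {Stage.TIP, Stage.HALF_INCH_GREEN, Stage.TIGHT_CLUSTER}


def labeling_unit(
    stage: Stage,
    shape_identifiable: bool = True,
    certainly_flower_bud: bool = True,
    flowers_recognizable_and_close: bool = False,
) -> Optional[str]:
    """Return what one bounding box should enclose, or None to leave the object unlabeled.

    Hypothetical helper that encodes the labeling guidelines as written.
    """
    # Skip objects whose complete shape cannot be identified (blur, dense buds)
    # and objects that might be leaf buds rather than flower buds.
    if not shape_identifiable or not certainly_flower_bud:
        return None
    # At or before tight cluster: one whole bud per box.
    if stage in EARLY_STAGES:
        return "one whole bud"
    # Bloom: one flower per box, leaves excluded.
    if stage is Stage.BLOOM:
        return "one flower, leaves excluded"
    # Pink or petal fall: group a bud's flowers only when they are
    # recognizable and relatively close; otherwise box each flower.
    if flowers_recognizable_and_close:
        return "all flowers of one bud, leaves excluded"
    return "one flower, leaves excluded"
```

For example, `labeling_unit(Stage.PINK, flowers_recognizable_and_close=True)` corresponds to the grouped case in Figure 6d, while any call with `shape_identifiable=False` returns `None`, matching the "do not label" rules.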

3.5. Flower Bud Location Calculation

3.6. Regional Heating Requirement Determination

4. Results and Discussion

4.1. Thermal Camera Calibration Results

4.2. Thermal Mosaic and Orchard Temperature Map

4.3. Flower Bud Growth Stage Classifier Performance

4.4. Orchard Flower Bud Growth Stage Map

4.5. Orchard Heating Requirement Map

5. Implications and Future Work

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Moeletsi, M.E.; Tongwane, M.I. Spatiotemporal variation of frost within growing periods. Adv. Meteorol. 2017, 2017, 1–11.

2. Papagiannaki, K.; Lagouvardos, K.; Kotroni, V.; Papagiannakis, G. Agricultural losses related to frost events: Use of the 850 hPa level temperature as an explanatory variable of the damage cost. Nat. Hazards Earth Syst. Sci. 2014, 14, 2375–2386.

3. Perry, K.B. Basics of frost and freeze protection for horticultural crops. HortTechnology 1998, 8, 10–15.

4. Savage, M.J. Estimation of frost occurrence and duration of frost for a short-grass surface. S. Afr. J. Plant Soil 2012, 29, 173–181.

5. Snyder, R.L.; de Melo-Abreu, J.P. Frost Protection: Fundamentals, Practice, and Economics; Food and Agriculture Organization (FAO): Rome, Italy, 2005; Volume 1, ISBN 92-5-105328-6.

6. Yue, Y.; Zhou, Y.; Wang, J.; Ye, X. Assessing wheat frost risk with the support of GIS: An approach coupling a growing season meteorological index and a hybrid fuzzy neural network model. Sustainability 2016, 8, 1308.

7. Pearce, R.S. Plant freezing and damage. Ann. Bot. 2001, 87, 417–424.

8. Lindow, S.E. The role of bacterial ice nucleation in frost injury to plants. Annu. Rev. Phytopathol. 1983, 21, 363–384.

9. Teitel, M.; Peiper, U.M.; Zvieli, Y. Shading screens for frost protection. Agric. For. Meteorol. 1996, 81, 273–286.

10. Eccel, E.; Rea, R.; Caffarra, A.; Crisci, A. Risk of spring frost to apple production under future climate scenarios: The role of phenological acclimation. Int. J. Biometeorol. 2009, 53, 273–286.

11. Ribeiro, A.C.; de Melo-Abreu, J.P.; Snyder, R.L. Apple orchard frost protection with wind machine operation. Agric. For. Meteorol. 2006, 141, 71–81.

12. Ballard, J.K.; Proebsting, E.L. Frost and Frost Control in Washington Orchards; Washington State University Extension: Pullman, WA, USA, 1972.

13. Cisternas, I.; Velásquez, I.; Caro, A.; Rodríguez, A. Systematic literature review of implementations of precision agriculture. Comput. Electron. Agric. 2020, 176, 105626.

14. Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A review on UAV-based applications for precision agriculture. Information 2019, 10, 349.

15. Tsouros, D.C.; Triantafyllou, A.; Bibi, S.; Sarigiannidis, P.G. Data acquisition and analysis methods in UAV-based applications for precision agriculture. In Proceedings of the 2019 15th International Conference on Distributed Computing in Sensor Systems (DCOSS), Santorini Island, Greece, 29–31 May 2019; pp. 377–384.

16. Zarco-Tejada, P.J.; González-Dugo, V.; Berni, J.A.J. Fluorescence, temperature and narrow-band indices acquired from a UAV platform for water stress detection using a micro-hyperspectral imager and a thermal camera. Remote Sens. Environ. 2012, 117, 322–337.

17. Santesteban, L.G.; Di Gennaro, S.F.; Herrero-Langreo, A.; Miranda, C.; Royo, J.B.; Matese, A. High-resolution UAV-based thermal imaging to estimate the instantaneous and seasonal variability of plant water status within a vineyard. Agric. Water Manag. 2017, 183, 49–59.

18. Matese, A.; Di Gennaro, S.F. Practical applications of a multisensor UAV platform based on multispectral, thermal and RGB high resolution images in precision viticulture. Agriculture 2018, 8, 116.

19. García-Tejero, I.F.; Ortega-Arévalo, C.J.; Iglesias-Contreras, M.; Moreno, J.M.; Souza, L.; Tavira, S.C.; Durán-Zuazo, V.H. Assessing the crop-water status in almond (Prunus dulcis Mill.) trees via thermal imaging camera connected to smartphone. Sensors 2018, 18, 1050.

20. Zhang, L.; Niu, Y.; Zhang, H.; Han, W.; Li, G.; Tang, J.; Peng, X. Maize canopy temperature extracted from UAV thermal and RGB imagery and its application in water stress monitoring. Front. Plant Sci. 2019, 10, 1270.

21. Crusiol, L.G.T.; Nanni, M.R.; Furlanetto, R.H.; Sibaldelli, R.N.R.; Cezar, E.; Mertz-Henning, L.M.; Nepomuceno, A.L.; Neumaier, N.; Farias, J.R.B. UAV-based thermal imaging in the assessment of water status of soybean plants. Int. J. Remote Sens. 2020, 41, 3243–3265.

22. Quebrajo, L.; Perez-Ruiz, M.; Pérez-Urrestarazu, L.; Martínez, G.; Egea, G. Linking thermal imaging and soil remote sensing to enhance irrigation management of sugar beet. Biosyst. Eng. 2018, 165, 77–87.

23. Ezenne, G.I.; Jupp, L.; Mantel, S.K.; Tanner, J.L. Current and potential capabilities of UAS for crop water productivity in precision agriculture. Agric. Water Manag. 2019, 218, 158–164.

24. Liu, T.; Li, R.; Zhong, X.; Jiang, M.; Jin, X.; Zhou, P.; Liu, S.; Sun, C.; Guo, W. Estimates of rice lodging using indices derived from UAV visible and thermal infrared images. Agric. For. Meteorol. 2018, 252, 144–154.

25. Sankaran, S.; Quirós, J.J.; Miklas, P.N. Unmanned aerial system and satellite-based high resolution imagery for high-throughput phenotyping in dry bean. Comput. Electron. Agric. 2019, 165, 104965.

26. Zhou, J.; Khot, L.R.; Boydston, R.A.; Miklas, P.N.; Porter, L. Low altitude remote sensing technologies for crop stress monitoring: A case study on spatial and temporal monitoring of irrigated pinto bean. Precis. Agric. 2018, 19, 555–569.

27. Ren, P.; Meng, Q.; Zhang, Y.; Zhao, L.; Yuan, X.; Feng, X. An unmanned airship thermal infrared remote sensing system for low-altitude and high spatial resolution monitoring of urban thermal environments: Integration and an experiment. Remote Sens. 2015, 7, 14259–14275.

28. Quaritsch, M.; Kruggl, K.; Wischounig-Strucl, D.; Bhattacharya, S.; Shah, M.; Rinner, B. Networked UAVs as aerial sensor network for disaster management applications. Elektrotechnik und Informationstechnik 2010, 127, 56–63.

29. Brown, M.; Lowe, D.G. Automatic panoramic image stitching using invariant features. Int. J. Comput. Vis. 2007, 74, 59–73.

30. Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

31. Girshick, R. Fast R-CNN. In Proceedings of the 2015 IEEE International Conference on Computer Vision (ICCV), Santiago, Chile, 7–15 December 2015; pp. 1440–1448.

32. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

33. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2980–2988.

34. Pang, J.; Chen, K.; Shi, J.; Feng, H.; Ouyang, W.; Lin, D. Libra R-CNN: Towards balanced learning for object detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 821–830.

35. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

36. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525.

37. Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

38. Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

39. Grimm, J.; Herzog, K.; Rist, F.; Kicherer, A.; Töpfer, R.; Steinhage, V. An adaptable approach to automated visual detection of plant organs with applications in grapevine breeding. Biosyst. Eng. 2019, 183, 170–183.

40. Chen, Y.; Lee, W.S.; Gan, H.; Peres, N.; Fraisse, C.; Zhang, Y.; He, Y. Strawberry yield prediction based on a deep neural network using high-resolution aerial orthoimages. Remote Sens. 2019, 11, 1584.

41. Koirala, A.; Walsh, K.B.; Wang, Z.; Anderson, N. Deep learning for mango (Mangifera indica) panicle stage classification. Agronomy 2020, 10, 143.

42. Milicevic, M.; Zubrinic, K.; Grbavac, I.; Obradovic, I. Application of deep learning architectures for accurate detection of olive tree flowering phenophase. Remote Sens. 2020, 12, 2120.

43. Ärje, J.; Milioris, D.; Tran, D.T.; Jepsen, J.U.; Raitoharju, J.; Gabbouj, M.; Iosifidis, A.; Høye, T.T. Automatic flower detection and classification system using a light-weight convolutional neural network. In Proceedings of the EUSIPCO Workshop on Signal Processing, Computer Vision and Deep Learning for Autonomous Systems, A Coruña, Spain, 2–6 September 2019.

44. Davis, C.C.; Champ, J.; Park, D.S.; Breckheimer, I.; Lyra, G.M.; Xie, J.; Joly, A.; Tarapore, D.; Ellison, A.M.; Bonnet, P. A new method for counting reproductive structures in digitized herbarium specimens using Mask R-CNN. Front. Plant Sci. 2020, 11, 1129.

45. Ponn, T.; Kröger, T.; Diermeyer, F. Identification and explanation of challenging conditions for camera-based object detection of automated vehicles. Sensors 2020, 20, 3699.

46. Helala, M.A.; Zarrabeitia, L.A.; Qureshi, F.Z. Mosaic of near ground UAV videos under parallax effects. In Proceedings of the 6th International Conference on Distributed Smart Cameras (ICDSC), Hong Kong, China, 30 October–2 November 2012; pp. 1–6.

47. Feng, L.; Tian, H.; Qiao, Z.; Zhao, M.; Liu, Y. Detailed variations in urban surface temperatures exploration based on unmanned aerial vehicle thermography. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 13, 204–216.

48. Sagan, V.; Maimaitijiang, M.; Sidike, P.; Eblimit, K.; Peterson, K.T.; Hartling, S.; Esposito, F.; Khanal, K.; Newcomb, M.; Pauli, D.; et al. UAV-based high resolution thermal imaging for vegetation monitoring, and plant phenotyping using ICI 8640 P, FLIR Vue Pro R 640, and thermomap cameras. Remote Sens. 2019, 11, 330.

49. Osroosh, Y.; Peters, R.T.; Campbell, C.S. Estimating actual transpiration of apple trees based on infrared thermometry. J. Irrig. Drain. Eng. 2015, 141, 1–13.

50. Masuda, K.; Takashima, T.; Takayama, Y. Emissivity of pure and sea waters for the model sea surface in the infrared window regions. Remote Sens. Environ. 1988, 24, 313–329.

51. Wang, Y.; Camargo, A.; Fevig, R.; Martel, F.; Schultz, R.R. Image mosaicking from uncooled thermal IR video captured by a small UAV. In Proceedings of the 2008 IEEE Southwest Symposium on Image Analysis and Interpretation, Santa Fe, NM, USA, 24–26 March 2008; pp. 161–164.

52. Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the Seventh IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 1150–1157.

53. Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. In Proceedings of the 9th European Conference on Computer Vision (ECCV 2006), Graz, Austria, 7–13 May 2006; pp. 404–417.

54. Leutenegger, S.; Chli, M.; Siegwart, R.Y. BRISK: Binary robust invariant scalable keypoints. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2548–2555.

55. Rosten, E.; Drummond, T. Machine learning for high-speed corner detection. In Proceedings of the 9th European Conference on Computer Vision (ECCV 2006), Graz, Austria, 7–13 May 2006; pp. 430–443.

56. Tareen, S.A.K.; Saleem, Z. A comparative analysis of SIFT, SURF, KAZE, AKAZE, ORB, and BRISK. In Proceedings of the 2018 International Conference on Computing, Mathematics and Engineering Technologies (iCoMET), Sukkur, Pakistan, 3–4 March 2018; pp. 1–10.

57. OpenCV. Available online: https://opencv.org/ (accessed on 25 October 2020).

58. Scikit-Image. Available online: https://scikit-image.org/ (accessed on 25 October 2020).

59. Hamming, R.W. Error detecting and error correcting codes. Bell Syst. Tech. J. 1950, 29, 147–160.

60. Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

61. Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

62. Yuen, H.K.; Princen, J.; Illingworth, J.; Kittler, J. Comparative study of Hough transform methods for circle finding. Image Vis. Comput. 1990, 8, 71–77.

63. Ballard, J.K.; Proebsting, E.L.; Tukey, R.B. Apples: Critical Temperatures for Blossom Buds; Washington State University Extension: Pullman, WA, USA, 1971.

64. Meier, U.; Graf, M.; Hess, W.; Kennel, W.; Klose, R.; Mappes, D.; Seipp, D.; Stauss, R.; Streif, J.; van den Boom, T. Phänologische Entwicklungsstadien des Kernobstes (Malus domestica Borkh. und Pyrus communis L.), des Steinobstes (Prunus-Arten), der Johannisbeere (Ribes-Arten) und der Erdbeere (Fragaria x ananassa Duch.). Nachrichtenblatt des Deutschen Pflanzenschutzdienstes 1994, 46, 141–153.

65. Koutinas, N.; Pepelyankov, G.; Lichev, V. Flower induction and flower bud development in apple and sweet cherry. Biotechnol. Biotechnol. Equip. 2010, 24, 1549–1558.

66. Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common objects in context. In Proceedings of the 13th European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 740–755.

67. Wang, C.-Y.; Liao, H.-Y.M.; Yeh, I.-H.; Wu, Y.-H.; Chen, P.-Y.; Hsieh, J.-W. CSPNet: A new backbone that can enhance learning capability of CNN. arXiv 2019, arXiv:1911.11929.

68. Misra, D. Mish: A self regularized non-monotonic neural activation function. arXiv 2019, arXiv:1908.08681.

69. He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

70. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path aggregation network for instance segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768.

71. Yolo_Label. Available online: https://github.com/developer0hye/Yolo_Label (accessed on 30 September 2020).

72. Everingham, M.; Van Gool, L.; Williams, C.K.I.; Winn, J.; Zisserman, A. The pascal visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

73. Veness, C. Calculate Distance, Bearing and More between Latitude/Longitude Points. Available online: https://www.movable-type.co.uk/scripts/latlong.html (accessed on 5 October 2020).

74. Han, X.; Thomasson, J.A.; Siegfried, J.; Raman, R.; Rajan, N.; Neely, H. Calibrating UAV-based thermal remote-sensing images of crops with temperature controlled references. In Proceedings of the 2019 ASABE Annual International Meeting, Boston, MA, USA, 7–10 July 2019; p. 1900662.

75. Harvey, M.C.; Hare, D.K.; Hackman, A.; Davenport, G.; Haynes, A.B.; Helton, A.; Lane, J.W.; Briggs, M.A. Evaluation of stream and wetland restoration using UAS-based thermal infrared mapping. Water 2019, 11, 1568.

76. Collas, F.P.L.; van Iersel, W.K.; Straatsma, M.W.; Buijse, A.D.; Leuven, R.S.E.W. Sub-daily temperature heterogeneity in a side channel and the influence on habitat suitability of freshwater fish. Remote Sens. 2019, 11, 2367.

77. Deane, S.; Avdelidis, N.P.; Ibarra-Castanedo, C.; Zhang, H.; Nezhad, H.Y.; Williamson, A.A.; Mackley, T.; Maldague, X.; Tsourdos, A.; Nooralishahi, P. Comparison of cooled and uncooled IR sensors by means of signal-to-noise ratio for NDT diagnostics of aerospace grade composites. Sensors 2020, 20, 3381.

78. Torres-Rua, A. Vicarious calibration of sUAS microbolometer temperature imagery for estimation of radiometric land surface temperature. Sensors 2017, 17, 1499.

79. Zhao, T.; Niu, H.; Anderson, A.; Chen, Y.; Viers, J. A detailed study on accuracy of uncooled thermal cameras by exploring the data collection workflow. In Proceedings of the Autonomous Air and Ground Sensing Systems for Agricultural Optimization and Phenotyping III, Orlando, FL, USA, 18–19 April 2018; p. 106640F.

80. Berni, J.A.J.; Zarco-Tejada, P.J.; Suárez, L.; Fereres, E. Thermal and narrowband multispectral remote sensing for vegetation monitoring from an unmanned aerial vehicle. IEEE Trans. Geosci. Remote Sens. 2009, 47, 722–738.

81. Aubrecht, D.M.; Helliker, B.R.; Goulden, M.L.; Roberts, D.A.; Still, C.J.; Richardson, A.D. Continuous, long-term, high-frequency thermal imaging of vegetation: Uncertainties and recommended best practices. Agric. For. Meteorol. 2016, 228–229, 315–326.

82. Teza, G. THIMRAN: MATLAB toolbox for thermal image processing aimed at damage recognition in large bodies. J. Comput. Civ. Eng. 2014, 28, 04014017.

83. Sartinas, E.G.; Psarakis, E.Z.; Lamprinou, N. UAV forest monitoring in case of fire: Robustifying video stitching by the joint use of optical and thermal cameras. In Advances in Service and Industrial Robotics: Proceedings of the 27th International Conference on Robotics in Alpe-Adria-Danube Region (RAAD 2018); Springer: Cham, Switzerland, 2019; pp. 163–172.

84. Evangelidis, G.D.; Psarakis, E.Z. Parametric image alignment using enhanced correlation coefficient maximization. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 1858–1865.

85. Semenishchev, E.; Agaian, S.; Voronin, V.; Pismenskova, M.; Zelensky, A.; Tolstova, I. Thermal image stitching for examination industrial buildings. In Proceedings of the Mobile Multimedia/Image Processing, Security, and Applications 2019, Baltimore, MD, USA, 15 April 2019; p. 109930M.

86. Semenishchev, E.; Voronin, V.; Zelensky, A.; Shraifel, I. Algorithm for image stitching in the infrared. In Proceedings of the Infrared Technology and Applications XLV, Baltimore, MD, USA, 14–18 April 2019; p. 110022H.

87. Yahyanejad, S.; Misiorny, J.; Rinner, B. Lens distortion correction for thermal cameras to improve aerial imaging with small-scale UAVs. In Proceedings of the 2011 IEEE International Symposium on Robotic and Sensors Environments (ROSE), Montreal, QC, Canada, 17–18 September 2011; pp. 231–236.

88. Tian, Y.; Yang, G.; Wang, Z.; Li, E.; Liang, Z. Instance segmentation of apple flowers using the improved mask R–CNN model. Biosyst. Eng. 2020, 193, 264–278.

89. Wu, D.; Lv, S.; Jiang, M.; Song, H. Using channel pruning-based YOLO v4 deep learning algorithm for the real-time and accurate detection of apple flowers in natural environments. Comput. Electron. Agric. 2020, 178, 105742.

90. Sun, H.; Slaughter, D.C.; Ruiz, M.P.; Gliever, C.; Upadhyaya, S.K.; Smith, R.F. RTK GPS mapping of transplanted row crops. Comput. Electron. Agric. 2010, 71, 32–37.

| Hardware | Model | Specification |
|---|---|---|
| UAV | DJI Matrice 600 Pro (with TB47S batteries) | 6 kg payload, 16 to 32 min hovering time, ±0.5 m vertical and ±1.5 m horizontal hovering accuracy |
| Thermal camera | DJI Zenmuse XT2 (with a 19 mm lens) | −25 to 135 °C scene range, 7.5 to 13.5 µm spectral range, 32° × 26° FOV, 640 × 512 resolution |
| RGB camera | DJI Zenmuse Z30 | 30× optical zoom, 63.7° × 38.52° wide-end FOV, 2.3° × 1.29° tele-end FOV, 1920 × 1080 resolution |
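The FOV and resolution values in the hardware table determine the ground footprint and ground sampling distance (GSD) at a given flight altitude. The sketch below assumes an ideal pinhole camera looking straight down (nadir) over flat ground and ignores lens distortion; `footprint_and_gsd` is a hypothetical helper, and the 10 m altitude is an illustrative input, not the altitude flown in this study.

```python
import math
from typing import Tuple


def footprint_and_gsd(
    altitude_m: float, hfov_deg: float, vfov_deg: float, width_px: int, height_px: int
) -> Tuple[float, float, float, float]:
    """Return (footprint width m, footprint height m, GSD x m/px, GSD y m/px).

    Assumes a nadir-pointing pinhole camera over flat terrain.
    """
    # Half the FOV spans from the optical axis to the image edge.
    footprint_w = 2.0 * altitude_m * math.tan(math.radians(hfov_deg) / 2.0)
    footprint_h = 2.0 * altitude_m * math.tan(math.radians(vfov_deg) / 2.0)
    return footprint_w, footprint_h, footprint_w / width_px, footprint_h / height_px


# DJI Zenmuse XT2 (19 mm lens) values from the table; the altitude is hypothetical.
fw, fh, gsd_x, gsd_y = footprint_and_gsd(10.0, 32.0, 26.0, 640, 512)
print(f"footprint {fw:.2f} m x {fh:.2f} m, GSD {gsd_x * 100:.2f} cm/px x {gsd_y * 100:.2f} cm/px")
```

Under these assumptions the XT2 covers roughly 5.7 m × 4.6 m per frame at 10 m, or about 0.9 cm per pixel; the Z30 tele end can be evaluated the same way with `(2.3, 1.29, 1920, 1080)`.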

| Growth Stage | Tip | Half-Inch Green | Tight Cluster | Pink | Bloom | Petal Fall |
|---|---|---|---|---|---|---|
| BBCH identification code [64] | 01–09 | 10–11 | 15–19 | 51–59 | 60–67 | 69 |
| Critical temperature (°C) | −8.89 | −5.00 | −2.78 | −2.22 | −2.22 | −1.67 |
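The growth stage table can be used as a lookup from a BBCH code to a critical temperature. The sketch below transcribes the table; BBCH codes that fall between the listed ranges (for example 12–14, 20–50, or 68) return `None`. The `heating_requirement` rule, which takes the temperature rise needed to bring a bud up to its stage's critical temperature, is an assumption for illustration and is not necessarily the rule used in Section 3.6; all function names are hypothetical.

```python
from typing import Optional

# (stage, lowest BBCH code, highest BBCH code, critical temperature in °C),
# transcribed from the growth stage table.
STAGES = [
    ("Tip", 1, 9, -8.89),
    ("Half-inch green", 10, 11, -5.00),
    ("Tight cluster", 15, 19, -2.78),
    ("Pink", 51, 59, -2.22),
    ("Bloom", 60, 67, -2.22),
    ("Petal fall", 69, 69, -1.67),
]


def critical_temperature(bbch_code: int) -> Optional[float]:
    """Return the critical temperature (°C) for a BBCH code, or None if the
    code is not covered by any range in the table."""
    for _stage, low, high, t_crit in STAGES:
        if low <= bbch_code <= high:
            return t_crit
    return None


def heating_requirement(bud_temp_c: float, t_crit_c: float) -> float:
    """ASSUMED rule: temperature rise (°C) needed to lift a bud from its measured
    temperature to its critical temperature; 0 if it is already at or above it."""
    return max(0.0, t_crit_c - bud_temp_c)


# Example: a bud at BBCH 57 (pink) measured at -4.0 °C.
t_crit = critical_temperature(57)
if t_crit is not None:
    print(f"critical {t_crit} °C, required rise {heating_requirement(-4.0, t_crit):.2f} °C")
```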

| Statistic | Growth stage | 320 × 320 (validation) | 320 × 320 (test) | 480 × 480 (validation) | 480 × 480 (test) | 640 × 640 (validation) | 640 × 640 (test) |
|---|---|---|---|---|---|---|---|
| AP | Tip | 31.74% | 51.65% | 48.34% | 65.72% | 50.08% | 61.72% |
| AP | Half-inch green | 39.48% | 50.46% | 48.25% | 57.08% | 45.95% | 56.68% |
| AP | Tight cluster | 85.07% | 86.98% | 85.50% | 87.65% | 82.83% | 85.48% |
| AP | Pink | 71.41% | 69.29% | 72.83% | 71.79% | 71.50% | 70.18% |
| AP | Bloom | 81.54% | 81.33% | 84.58% | 84.52% | 84.12% | 83.49% |
| AP | Petal fall | 57.34% | 56.75% | 63.46% | 62.68% | 63.69% | 62.88% |
| mAP | All stages | 61.09% | 66.08% | 67.16% | 71.57% | 66.36% | 70.07% |
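The mAP row is the unweighted mean of the six per-stage AP values in each column. The short check below recomputes it from the transcribed AP values and compares it with the reported figures; `mean_ap` and the constant names are illustrative, not from the authors' code.

```python
from typing import Dict, List

# Per-stage AP (%) transcribed from the table above.
COLUMNS = [
    (320, "validation"), (320, "test"),
    (480, "validation"), (480, "test"),
    (640, "validation"), (640, "test"),
]
AP: Dict[str, List[float]] = {
    "Tip": [31.74, 51.65, 48.34, 65.72, 50.08, 61.72],
    "Half-inch green": [39.48, 50.46, 48.25, 57.08, 45.95, 56.68],
    "Tight cluster": [85.07, 86.98, 85.50, 87.65, 82.83, 85.48],
    "Pink": [71.41, 69.29, 72.83, 71.79, 71.50, 70.18],
    "Bloom": [81.54, 81.33, 84.58, 84.52, 84.12, 83.49],
    "Petal fall": [57.34, 56.75, 63.46, 62.68, 63.69, 62.88],
}
REPORTED_MAP = [61.09, 66.08, 67.16, 71.57, 66.36, 70.07]


def mean_ap(ap_by_stage: Dict[str, List[float]]) -> List[float]:
    """Unweighted mean of the per-class AP values in each column (mAP)."""
    n_stages = len(ap_by_stage)
    n_cols = len(next(iter(ap_by_stage.values())))
    return [
        sum(values[i] for values in ap_by_stage.values()) / n_stages
        for i in range(n_cols)
    ]


computed = mean_ap(AP)
for (size, split), calc, reported in zip(COLUMNS, computed, REPORTED_MAP):
    print(f"{size} x {size} {split:<10} computed {calc:6.2f}%  reported {reported:6.2f}%")
```

All six recomputed values match the reported mAP to within 0.01 percentage points; the small residual in the 320 × 320 validation column is presumably due to rounding of the per-stage AP values. The check also confirms that the 480 × 480 network has the highest mAP on both the validation and test sets.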

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Yuan, W.; Choi, D. UAV-Based Heating Requirement Determination for Frost Management in Apple Orchard. Remote Sens. 2021, 13, 273. https://doi.org/10.3390/rs13020273
