Flood Detection Using Multi-Modal and Multi-Temporal Images: A Comparative Study

Abstract

1. Introduction

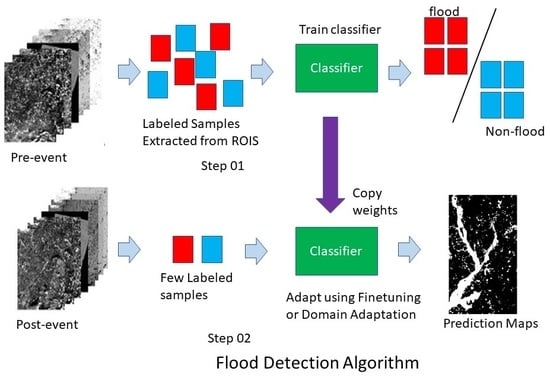

- We implemented domain adaptation methods and compared them with traditional machine-learning algorithms for flood detection. To the best of our knowledge, we are the first to implement a deep learning-based domain adaptation approach for flood detection.

- Our experimental results showed that domain adaptation-based methods can achieve competitive performance for flood detection while requiring far fewer labeled samples from the post-event images for model fine-tuning.

- We recommend domain adaptation methods to the community as the better tools for flood detection because they require less labeling labor.

2. Dataset and Experiment Design

2.1. Dataset

2.2. Data Augmentation with Morphological Operation

2.3. Models for Comparison

2.3.1. Multi-Layer Perceptron (MLP)

2.3.2. Support Vector Machine (SVM)

2.3.3. Source Only (SO)

2.3.4. Unsupervised Domain Adaptation (UDA)

2.3.5. Semi-Supervised Domain Adaptation (SSDA)

2.3.6. SVM, MLP and DCNN Fine-Tuned with 1, 3, 5, 10, and 20 Samples/Shots

2.3.7. MLP and SVM with 20 Samples from Post-Event Images

2.4. Evaluation Metrics

2.5. Hyper-Parameter Determination

3. Results

Original Predicted Probability (OPP) Results

Results after Morphological Operation with Disk Structure on OPP (MO-DS)

4. Discussions

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Guha-Sapir, D.; Vos, F.; Below, R.; Ponserre, S. Annual Disaster Statistical Review 2014: The Numbers and Trends. 2015. Available online: https://reliefweb.int/report/world/annual-disaster-statistical-review-2014-numbers-and-trends (accessed on 28 July 2020).

- Jonkman, S. Loss of life due to floods: General overview. In Drowning; Springer: Berlin, Germany, 2014; pp. 957–965.

- Ashley, S.T.; Ashley, W.S. Flood fatalities in the United States. J. Appl. Meteorol. Climatol. 2008, 47, 805–818.

- Malinowski, R.; Groom, G.; Schwanghart, W.; Heckrath, G. Detection and delineation of localized flooding from WorldView-2 multispectral data. Remote Sens. 2015, 7, 14853–14875.

- Wang, Y. Using Landsat 7 TM data acquired days after a flood event to delineate the maximum flood extent on a coastal floodplain. Int. J. Remote Sens. 2004, 25, 959–974.

- Ireland, G.; Volpi, M.; Petropoulos, G.P. Examining the capability of supervised machine learning classifiers in extracting flooded areas from Landsat TM imagery: A case study from a Mediterranean flood. Remote Sens. 2015, 7, 3372–3399.

- Ahmed, M.R.; Rahaman, K.R.; Kok, A.; Hassan, Q.K. Remote sensing-based quantification of the impact of flash flooding on the rice production: A case study over Northeastern Bangladesh. Sensors 2017, 17, 2347.

- Longbotham, N.; Pacifici, F.; Glenn, T.; Zare, A.; Volpi, M.; Tuia, D.; Christophe, E.; Michel, J.; Inglada, J.; Chanussot, J.; et al. Multi-modal change detection, application to the detection of flooded areas: Outcome of the 2009–2010 data fusion contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 331–342.

- Twele, A.; Cao, W.; Plank, S.; Martinis, S. Sentinel-1-based flood mapping: A fully automated processing chain. Int. J. Remote Sens. 2016, 37, 2990–3004.

- Sun, D.; Yu, Y.; Zhang, R.; Li, S.; Goldberg, M.D. Towards operational automatic flood detection using EOS/MODIS data. Photogramm. Eng. Remote Sens. 2012, 78, 637–646.

- Mason, D.C.; Giustarini, L.; Garcia-Pintado, J.; Cloke, H.L. Detection of flooded urban areas in high resolution Synthetic Aperture Radar images using double scattering. Int. J. Appl. Earth Obs. Geoinf. 2014, 28, 150–159.

- Chowdhury, E.H.; Hassan, Q.K. Use of remote sensing data in comprehending an extremely unusual flooding event over southwest Bangladesh. Nat. Hazards 2017, 88, 1805–1823.

- Hong Quang, N.; Tuan, V.A.; Le Hang, T.T.; Manh Hung, N.; Thi Dieu, D.; Duc Anh, N.; Hackney, C.R. Hydrological/Hydraulic Modeling-Based Thresholding of Multi SAR Remote Sensing Data for Flood Monitoring in Regions of the Vietnamese Lower Mekong River Basin. Water 2020, 12, 71.

- Sun, D.; Yu, Y.; Goldberg, M.D. Deriving water fraction and flood maps from MODIS images using a decision tree approach. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 814–825.

- Liu, Z.; Li, G.; Mercier, G.; He, Y.; Pan, Q. Change detection in heterogenous remote sensing images via homogeneous pixel transformation. IEEE Trans. Image Process. 2017, 27, 1822–1834.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; 2012; pp. 1097–1105. Available online: https://papers.nips.cc/paper/4824-imagenet-classification-with-deep-convolutional-neural-networks.pdf (accessed on 28 July 2020).

- Islam, K.A.; Pérez, D.; Hill, V.; Schaeffer, B.; Zimmerman, R.; Li, J. Seagrass detection in coastal water through deep capsule networks. In Proceedings of the Chinese Conference on Pattern Recognition and Computer Vision (PRCV), Guangzhou, China, 23–26 November 2018; pp. 320–331.

- Islam, K.A.; Hill, V.; Schaeffer, B.; Zimmerman, R.; Li, J. Semi-supervised Adversarial Domain Adaptation for Seagrass Detection in Multispectral Images. In Proceedings of the 2019 IEEE International Conference on Data Mining (ICDM), Beijing, China, 8–11 November 2019; pp. 1120–1125.

- Banerjee, D.; Islam, K.; Xue, K.; Mei, G.; Xiao, L.; Zhang, G.; Xu, R.; Lei, C.; Ji, S.; Li, J. A deep transfer learning approach for improved post-traumatic stress disorder diagnosis. Knowl. Inf. Syst. 2019, 60, 1693–1724.

- Islam, K.A.; Perez, D.; Li, J. A Transfer Learning Approach for the 2018 FEMH Voice Data Challenge. In Proceedings of the 2018 IEEE International Conference on Big Data (Big Data), Seattle, WA, USA, 10–13 December 2018; pp. 5252–5257.

- Chowdhury, M.M.U.; Hammond, F.; Konowicz, G.; Xin, C.; Wu, H.; Li, J. A few-shot deep learning approach for improved intrusion detection. In Proceedings of the 2017 IEEE 8th Annual Ubiquitous Computing, Electronics and Mobile Communication Conference (UEMCON), New York, NY, USA, 19–21 October 2017; pp. 456–462.

- Ning, R.; Wang, C.; Xin, C.; Li, J.; Wu, H. DeepMag+: Sniffing mobile apps in magnetic field through deep learning. Pervasive Mob. Comput. 2020, 61, 101106.

- Li, F.; Tran, L.; Thung, K.H.; Ji, S.; Shen, D.; Li, J. A robust deep model for improved classification of AD/MCI patients. IEEE J. Biomed. Health Inform. 2015, 19, 1610–1616.

- Kussul, N.; Lavreniuk, M.; Skakun, S.; Shelestov, A. Deep learning classification of land cover and crop types using remote sensing data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 778–782.

- Li, W.; Fu, H.; Yu, L.; Cracknell, A. Deep learning based oil palm tree detection and counting for high-resolution remote sensing images. Remote Sens. 2017, 9, 22.

- Chi, J.; Walia, E.; Babyn, P.; Wang, J.; Groot, G.; Eramian, M. Thyroid nodule classification in ultrasound images by fine-tuning deep convolutional neural network. J. Digit. Imaging 2017, 30, 477–486.

- Jung, H.; Lee, S.; Yim, J.; Park, S.; Kim, J. Joint fine-tuning in deep neural networks for facial expression recognition. In Proceedings of the IEEE International Conference on Computer Vision, Las Condes, Chile, 11–15 December 2015; pp. 2983–2991.

- Reyes, A.K.; Caicedo, J.C.; Camargo, J.E. Fine-tuning Deep Convolutional Networks for Plant Recognition. CLEF (Working Notes) 2015, 1391, 467–475.

- Costache, R.; Ngo, P.T.T.; Bui, D.T. Novel Ensembles of Deep Learning Neural Network and Statistical Learning for Flash-Flood Susceptibility Mapping. Water 2020, 12, 1549.

- Jain, P.; Schoen-Phelan, B.; Ross, R. Automatic flood detection in Sentinel-2 images using deep convolutional neural networks. In Proceedings of the 35th Annual ACM Symposium on Applied Computing, Brno, Czech Republic, 15 September 2020; pp. 617–623.

- Nogueira, K.; Fadel, S.G.; Dourado, Í.C.; Werneck, R.d.O.; Muñoz, J.A.; Penatti, O.A.; Calumby, R.T.; Li, L.T.; dos Santos, J.A.; Torres, R.d.S. Exploiting ConvNet diversity for flooding identification. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1446–1450.

- Sarker, C.; Mejias, L.; Maire, F.; Woodley, A. Flood mapping with convolutional neural networks using spatio-contextual pixel information. Remote Sens. 2019, 11, 2331.

- Gebrehiwot, A.; Hashemi-Beni, L.; Thompson, G.; Kordjamshidi, P.; Langan, T.E. Deep Convolutional Neural Network for Flood Extent Mapping Using Unmanned Aerial Vehicles Data. Sensors 2019, 19, 1486.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2672–2680.

- Tzeng, E.; Hoffman, J.; Saenko, K.; Darrell, T. Adversarial discriminative domain adaptation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7167–7176.

- Motiian, S.; Piccirilli, M.; Adjeroh, D.A.; Doretto, G. Unified deep supervised domain adaptation and generalization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5715–5725.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Chang, C.C.; Lin, C.J. LIBSVM: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 1–27.

- Davis, J.; Goadrich, M. The relationship between Precision-Recall and ROC curves. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 233–240.

- Saito, T.; Rehmsmeier, M. The precision-recall plot is more informative than the ROC plot when evaluating binary classifiers on imbalanced datasets. PLoS ONE 2015, 10, e0118432.

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Scikit-learn: Machine Learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830.

| Methods | Recall (OPP) | Recall (MO-DS) | Precision (OPP) | Precision (MO-DS) | F1-Score (OPP) | F1-Score (MO-DS) | PR-AUC (OPP) | PR-AUC (MO-DS) |
|---|---|---|---|---|---|---|---|---|
| SVM-SO | 0.9761 | 0.9740 | 0.1950 | 0.2108 | 0.3251 | 0.3466 | 0.7904 | 0.8132 |
| MLP-SO | 0 | 0 | 0 | 0 | NA | NA | 0.2032 | 0.2203 |
| CNN-SO | 0.8853 | 0.8571 | 0.3653 | 0.4201 | 0.5172 | 0.5638 | 0.7681 | 0.8070 |
| UDA | 0.8764 | 0.8554 | 0.8246 | 0.8754 | 0.8497 | 0.8652 | 0.8508 | 0.8833 |
| MLP 20 shots | 0.9007 | 0.8593 | 0.2017 | 0.2206 | 0.3295 | 0.3510 | 0.2460 | 0.2605 |
| DCNN 1-shot FT | 0.8076 | 0.7757 | 0.5683 | 0.6201 | 0.6671 | 0.6892 | 0.8281 | 0.8524 |
| MLP 1-shot FT | 0.9311 | 0.9279 | 0.4871 | 0.5305 | 0.6396 | 0.6751 | 0.7900 | 0.8617 |
| SVM 1-shot FT | 0.0723 | 0.0654 | 0.0115 | 0.0105 | 0.0199 | 0.0181 | 0.0485 | 0.0484 |
| SSDA 1-shot | 0.8407 | 0.8111 | 0.8623 | 0.8972 | 0.8513 | 0.8520 | 0.7155 | 0.8433 |
| DCNN 3-shot FT | 0.8699 | 0.8514 | 0.7772 | 0.8259 | 0.8209 | 0.8385 | 0.7783 | 0.8115 |
| MLP 3-shot FT | 0.9168 | 0.9118 | 0.6045 | 0.6539 | 0.7286 | 0.7616 | 0.8470 | 0.8829 |
| SVM 3-shot FT | 0.9092 | 0.9023 | 0.7182 | 0.7631 | 0.8025 | 0.8269 | 0.8780 | 0.8969 |
| SSDA 3-shot | 0.9341 | 0.9306 | 0.5409 | 0.5899 | 0.6851 | 0.7221 | 0.8906 | 0.9062 |
| DCNN 5-shot FT | 0.7934 | 0.7600 | 0.8975 | 0.9261 | 0.8422 | 0.8349 | 0.8349 | 0.8577 |
| MLP 5-shot FT | 0.8404 | 0.8199 | 0.8765 | 0.9055 | 0.8580 | 0.8605 | 0.8910 | 0.9064 |
| SVM 5-shot FT | 0.8792 | 0.8650 | 0.8244 | 0.8614 | 0.8509 | 0.8632 | 0.8797 | 0.8984 |
| SSDA 5-shot | 0.8493 | 0.8216 | 0.8989 | 0.9293 | 0.8734 | 0.8721 | 0.8209 | 0.8901 |
| DCNN 10-shot FT | 0.8349 | 0.8088 | 0.8635 | 0.9004 | 0.8490 | 0.8521 | 0.8163 | 0.8439 |
| MLP 10-shot FT | 0.8505 | 0.8313 | 0.8515 | 0.8862 | 0.8510 | 0.8578 | 0.8813 | 0.9019 |
| SVM 10-shot FT | 0.8720 | 0.8562 | 0.8460 | 0.8798 | 0.8588 | 0.8678 | 0.8820 | 0.8998 |
| SSDA 10-shot | 0.8750 | 0.8518 | 0.8515 | 0.9027 | 0.8631 | 0.8765 | 0.8506 | 0.9035 |
| DCNN 20-shot FT | 0.8812 | 0.8619 | 0.7472 | 0.8317 | 0.8087 | 0.8466 | 0.8663 | 0.8925 |
| MLP 20-shot FT | 0.8756 | 0.8502 | 0.7879 | 0.8465 | 0.8294 | 0.8484 | 0.8194 | 0.8966 |
| SVM 20-shot FT | 0.8901 | 0.8704 | 0.8012 | 0.8566 | 0.8433 | 0.8634 | 0.8532 | 0.8960 |
| SSDA 20-shot | 0.8831 | 0.8704 | 0.8681 | 0.8992 | 0.8755 | 0.8846 | 0.8669 | 0.9173 |
| SVM [8] | 0.9393 | 0.9333 | 0.6263 | 0.7126 | 0.7515 | 0.8082 | 0.8442 | 0.8727 |
| MLP [8] | 0.9406 | 0.9287 | 0.5472 | 0.7139 | 0.6919 | 0.8073 | 0.8567 | 0.8949 |
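
As a quick consistency check on the table above, the F1-Score column can be recomputed from the Recall and Precision columns. The sketch below assumes the standard harmonic-mean definition of F1, which is not spelled out next to the table. The function name `f1_score` and the `rows` list are illustrative only; the numbers are copied from the OPP columns. When precision and recall are both zero, F1 is undefined, which is how this sketch reads the NA reported for MLP-SO.

```python
from typing import Optional


def f1_score(precision: float, recall: float) -> Optional[float]:
    """Harmonic mean of precision and recall.

    Returns None when both inputs are zero, since F1 is undefined there
    (assumed here to correspond to the table's "NA" entry).
    """
    if precision + recall == 0:
        return None
    return 2 * precision * recall / (precision + recall)


# (method, recall, precision, reported F1), copied from the OPP columns
rows = [
    ("SVM-SO", 0.9761, 0.1950, 0.3251),
    ("CNN-SO", 0.8853, 0.3653, 0.5172),
    ("UDA", 0.8764, 0.8246, 0.8497),
    ("SSDA 20-shot", 0.8831, 0.8681, 0.8755),
]

for method, recall, precision, reported in rows:
    recomputed = f1_score(precision, recall)
    print(f"{method}: recomputed F1 = {recomputed:.4f}, reported = {reported:.4f}")

# MLP-SO has recall 0 and precision 0, so F1 is undefined
print("MLP-SO:", f1_score(0.0, 0.0))
```

Small differences in the last digit are to be expected, because the tabulated recall and precision are themselves rounded to four decimals.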

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Islam, K.A.; Uddin, M.S.; Kwan, C.; Li, J. Flood Detection Using Multi-Modal and Multi-Temporal Images: A Comparative Study. Remote Sens. 2020, 12, 2455. https://doi.org/10.3390/rs12152455