1. Introduction

With the development of science and technology, surface remote sensing systems have been widely developed in recent decades. Through high-altitude imaging analysis, surface remote sensing systems can play an important role in environmental pollution monitoring, post-disaster damage assessment, crop monitoring, disaster detection, and other fields. Depending on the imaging mechanism, remote sensing produces different types of images, which can be divided into infrared images, natural optical images, and radar images [1,2]. Among them, synthetic aperture radar (SAR) has been widely used in various military and civil fields because of its high spatial resolution and all-weather image acquisition ability [3,4]. However, SAR also has obvious shortcomings. Due to the reflection mechanism in the imaging process, speckle-induced noise always appears in SAR images [5,6]. Speckle noise is a multiplicative non-Gaussian noise with a random distribution, which leads to nonlinearity in SAR image data and an abnormal data distribution [7]. Therefore, analysis and processing methods designed for common natural optical images or infrared images cannot be applied directly to SAR images, which makes SAR image analysis and processing challenging.

Image analysis and processing is a complex process. As a basic preprocessing step in computer vision, image segmentation plays an important role in image analysis and understanding [8]. The main purpose of image segmentation is to divide the image into several regions with similar characteristics and mark them with different color labels [9]. This simplifies the representation of the image and makes it easier to understand and analyze [10,11]. There are four common types of SAR image segmentation methods: morphological strategies [12,13,14,15], graph segmentation methods [16,17], clustering methods [1,18], and model-based methods [19,20]. In recent years, with the development of deep learning, more and more image segmentation methods have been based on deep learning [21]. They are usually supervised image segmentation methods. Although deep learning segmentation methods can achieve quite good results, they require a large amount of labeled data for training. In contrast, unsupervised segmentation methods need little prior information and can obtain segmentation results easily and quickly. They are not only conducive to rapid image analysis but can also serve as an auxiliary means for deep learning data labeling, helping to improve the efficiency of labeling an image's ground truth. Therefore, it is still necessary to study unsupervised image segmentation algorithms.

In recent years, many single-channel image segmentation methods have been proposed [22,23,24,25]. However, they do not perform well on some single-channel SAR images because of the presence of speckle noise. Therefore, many unsupervised segmentation algorithms for SAR gray images have been proposed. A SAR image segmentation method based on a level-set approach and a statistical distribution model was proposed in [26]. The method incorporated the distribution parameters of that model into the level-set framework and performed well on SAR image segmentation. The MKJS-graph algorithm [19] used an over-segmentation algorithm to obtain the image superpixels, and a multi-kernel sparse representation (MKSR) model was used to conduct high-dimensional characterization of the superpixel features. The local spatial correlation and global similarity of the superpixels are used together to improve the segmentation accuracy. The CTMF framework [27] is an unsupervised segmentation algorithm proposed for SAR images. This framework takes into account the non-stationary characteristics of SAR images, directly models the posterior distribution, and achieves a good segmentation effect.

In addition, clustering methods such as fuzzy c-means (FCM) are among the most commonly used segmentation methods. By modifying the objective function to exploit the spatial information of the image, clustering methods can achieve a relatively simple and fast image segmentation process. The ILKFCM algorithm [28] uses features from wavelet decomposition to distinguish different pixels and measures feature similarity using a kernel distance. As a result, the algorithm can effectively reduce the effect of speckle noise and obtain good segmentation results. The NSFCM algorithm [1] uses a non-local spatial filtering method to reduce the noise of SAR images. In addition, fuzzy inter-class variation is introduced into the improved objective function to further improve the results. To address the long running time of common clustering segmentation methods, FKPFCM [29] uses key points to reduce the computation time. By first segmenting the extracted key points and then assigning labels to the pixels around the key points according to their similarity, it can quickly realize SAR image segmentation.

Since each image pixel carries only single-channel information, segmenting the image effectively and accurately requires making more use of the spatial feature information near each pixel. Many algorithms use polynomials to express the spatial information of each pixel and then cluster the polynomial results to obtain the final segmentation result. Through experimental analysis, we found that this kind of segmentation method is approximately equivalent to smoothing the image first and then clustering it. ILKFCM [28] and NSFCM [1] use wavelet and non-local mean methods, respectively, to smooth the image and then use clustering to obtain better results.

In order to realize this kind of SAR image segmentation more efficiently, a SAR image segmentation method using region smoothing and label correction (RSLC) is proposed in this paper. RSLC uses region smoothing to obtain results similar to those of the spatial information polynomials. A region-constrained majority voting algorithm is also used to correct wrong class labels and thereby improve the segmentation accuracy of RSLC. SAR images usually contain homogeneous regions and texture regions. Homogeneous regions are regions where the pixel distribution has no specific directionality and the pixel values are close. Texture regions are regions where the pixel distribution has a specific directionality and the pixel values change according to a certain rule. Texture regions are usually composed of smaller homogeneous regions and edge regions. Edge regions are the regions around the edges of the image. They usually have the largest changes in pixel values and are most prone to segmentation errors. We therefore deal with the edge regions and the homogeneous regions separately.

RSLC firstly constructs eight direction templates to detect the gradient direction of each coordinate. According to the gradient direction, the corresponding smoothing template is used to smooth the edge regions. Thus, effective edge region smoothing is achieved while preserving the edge information. Better smoothing results can be achieved by performing iterative smoothing operations. The direction results of multiple iterations can be used to calculate the direction-difference map, which indirectly represents the homogeneous regions and edge regions of the image. The value of the direction-difference map is taken as the standard deviation of the Gaussian kernel function to convolve the image. It achieves the smoothing of the image’s homogeneous regions. Then, RSLC combines the results of the two smoothing methods and uses a K-means algorithm to obtain the preliminary segmentation results. RSLC uses the image edge detected by the Canny algorithm as the constraint and uses the image sliding window as the scope to perform a region growth algorithm at each coordinate point. RSLC selects the majority class label as the current point’s class label. Finally, RSLC marks the label of the edge pixel as the label of the most similar pixel in its neighborhoods. Thus, the final segmentation results are obtained.

The main contributions of this paper are:

- (1) By detecting the direction at every pixel in the image and smoothing the image with the corresponding template, the edge regions of the image can be effectively smoothed to reduce the influence of noise. The iterative smoothing process further improves the smoothing results.

- (2) The direction-difference map of the image is calculated from the directions detected in successive iterations. It is used as the standard deviation of the isotropic Gaussian kernel to smooth the homogeneous regions of the image. This allows the homogeneous regions to be smoothed quickly while leaving the edge regions as unaffected as possible.

- (3) The region growth algorithm with an image edge constraint and majority voting are used so that a small number of wrong class labels can be corrected. This step improves accuracy at a small time cost.

The main structure of this paper is as follows: Section 2 gives the specific implementation steps of the proposed algorithm. Section 3 presents and analyzes the experimental results. Section 4 presents a discussion of the experimental results and the proposed algorithm. Section 5 draws conclusions and suggests further work.

2. The Proposed Method

2.1. Edge Region Smoothing

Removing speckle noise on the basis of its mathematical model is complicated, so we instead address its effect on pixels. Speckle noise mainly causes the pixel values of the same target to fluctuate, so that the pixels of one target wrongly receive many different labels. For an image denoising task, the original values of the pixels need to be restored as much as possible. However, for an image segmentation task, it is not necessary to restore the original image. As long as the pixels of a target have similar values, they will eventually be assigned the same label. We therefore perform a smoothing operation on SAR images with speckle noise to achieve this goal.

Many image segmentation methods require special processing of image edges. Because the edges of the image are the junctions of different objects, segmentation errors are most likely to occur there. In general, the final results can have high segmentation accuracy only when the image edge regions are segmented well. Therefore, this paper also deals with the pixels in the edge regions separately. First, it is necessary to reduce the influence of SAR image noise while preserving image edge information. We analyze the directional characteristics of regions in the image, as shown in Figure 1.

Through the analysis of the common Gaussian filter and mean filter, we found that these filters achieve effective smoothing in the homogeneous regions because the gray distribution in these regions is basically isotropic (see Figure 1a). This matches the spatial characteristics of the Gaussian filter and mean filter, so these filters smooth such regions well. However, in the image edge regions (see Figure 1b), the distribution of pixel values has different characteristics: the values remain similar along the homogeneous direction but change sharply along the gradient direction. If an isotropic smoothing method is still used, pixels from the neighboring region with very different values are included in the calculation, resulting in image edge degradation.

Directional smoothing can be carried out by exploiting the directional characteristic of the edge regions. To achieve simpler and more effective anisotropic smoothing, the proposed algorithm smooths edge regions by using a template perpendicular to the gradient direction (see Figure 2c).

To detect different directions in the images, eight direction templates are used for direction detection, as shown in Figure 2a. The corresponding smoothing templates are also built, as shown in Figure 2b. The eight direction templates are built by rotating the first template about its center, as shown in Figure 2c.

The angle difference between two adjacent templates is 22.5 degrees. Different templates have different response values in different directions, so the direction at each point can be detected. As can be seen from Figure 2a, the directions of the templates do not cover a full 360 degrees. This is because of the symmetry of the templates: two centrally symmetric templates give responses of opposite sign and the same smoothing direction, so there is no need to compute both. The construction formula of the first template is as follows:

where the symbols denote the first direction template, the corresponding coordinates in the template, and the radius of the direction templates.

In Figure 2c, the value of the white area is 1, the value of the black area is −1, and the value of the gray area is 0. The regions with values of 1 and −1 are centrally symmetric, and the mean value of the whole template is 0. The areas of 1 and −1 in the template have an important impact on the smoothness of the results. When region 1 and region −1 overlap at the center of the template, as shown in Figure 2c, the resulting image edges are much smoother. The first template is rotated to obtain the template in each direction. The formula is as follows:

where the symbols denote the kth generated template, the counterclockwise rotation function, and the first direction template.

The generation of smoothing templates is also based on a similar principle. Firstly, the first smoothing template is generated as follows:

where the symbols denote the first smoothing template and the radius of the smoothing template. The following formula is used to generate the smoothing templates in the other directions:

where the symbols denote the kth smoothing template, the counterclockwise rotation function, and the first smoothing template.
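The rotation step described above can be sketched as follows. This is only an illustration under stated assumptions: the exact shape of the first direction template and the first smoothing template is not reproduced here, so `first_direction_template` (two half-disks of +1 and −1) and `first_smoothing_template` (a single line through the center) are hypothetical stand-ins, and `rotate_bank` is a hypothetical helper built on `scipy.ndimage.rotate`.

```python
import numpy as np
from scipy import ndimage


def first_direction_template(r):
    """Assumed shape: +1 in one half of a disk of radius r, -1 in the other, 0 elsewhere."""
    y, x = np.mgrid[-r:r + 1, -r:r + 1]
    t = np.zeros((2 * r + 1, 2 * r + 1))
    inside = x ** 2 + y ** 2 <= r ** 2
    t[inside & (y < 0)] = 1.0
    t[inside & (y > 0)] = -1.0
    return t


def first_smoothing_template(r):
    """Assumed shape: a one-pixel-wide line through the center, normalized to sum to 1."""
    t = np.zeros((2 * r + 1, 2 * r + 1))
    t[r, :] = 1.0
    return t / t.sum()


def rotate_bank(first, n_dirs=8, step_deg=22.5, kind="direction"):
    """Rotate the first template about its center in 22.5 degree steps."""
    bank = []
    for k in range(n_dirs):
        t = ndimage.rotate(first, k * step_deg, reshape=False,
                           order=1, mode="constant", cval=0.0)
        if kind == "direction":
            # Keep the two halves centrally antisymmetric so the template mean stays 0.
            t = 0.5 * (t - t[::-1, ::-1])
        else:
            # Keep smoothing weights non-negative and normalized.
            t = np.clip(t, 0.0, None)
            t = t / t.sum()
        bank.append(t)
    return bank


direction_bank = rotate_bank(first_direction_template(3), kind="direction")
smoothing_bank = rotate_bank(first_smoothing_template(3), kind="smoothing")
```

Eight steps of 22.5 degrees span 180 degrees, which matches the observation that centrally symmetric templates do not need to be computed twice.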

The process of edge region smoothing is shown in Figure 3. From left to right are the original image, the process, and the results, respectively.

As can be seen from Figure 3, different points match different direction templates, and the corresponding smoothing templates are used for the smoothing operation. The process is described in detail below:

The direction templates are convolved with the image in turn, and the direction with the maximum response is taken as the final direction. The calculation formula is as follows:

where D is the direction-index map of the whole image, whose value indicates which template is used; the remaining symbols denote the kth direction template, the convolution operation ∗, and the original SAR image I.

According to the calculated direction index, the corresponding smoothing template is used to smooth the edge regions. The formulas are as follows:

where the symbols denote the result generated by the kth iteration, the original SAR image, the smoothing template, the maximum number of iterations M, and the Gaussian standard deviation. In order to get a better smoothing effect on edge regions, we run the smoothing operator several times.
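A minimal sketch of direction detection followed by iterative directional smoothing is given below. It is not the exact formulation of this section: the templates are built analytically by the hypothetical helper `make_templates`, the strongest absolute response is used to pick the direction (an assumption motivated by the template symmetry), and the Gaussian term mentioned above is omitted.

```python
import numpy as np
from scipy import ndimage


def make_templates(r=2, n_dirs=8):
    """Assumed direction and smoothing templates for n_dirs angles over 180 degrees."""
    y, x = np.mgrid[-r:r + 1, -r:r + 1].astype(float)
    direction, smoothing = [], []
    for k in range(n_dirs):
        theta = np.pi * k / n_dirs  # 22.5 degree steps when n_dirs = 8
        # Signed offset of each template cell along the assumed gradient direction.
        across = np.round(x * np.cos(theta) + y * np.sin(theta), 9)
        direction.append(np.sign(across))            # +1 / -1 halves, zero mean
        line = (np.abs(across) < 0.5).astype(float)  # cells perpendicular to the gradient
        smoothing.append(line / line.sum())
    return direction, smoothing


def detect_direction(image, direction):
    """Direction-index map: the template with the strongest absolute response per pixel."""
    responses = np.stack([ndimage.convolve(image, t, mode="reflect") for t in direction])
    return np.argmax(np.abs(responses), axis=0)


def smooth_edges(image, r=2, n_dirs=8, max_iter=3):
    """Repeat: detect the direction, then smooth each pixel with the matching template."""
    direction, smoothing = make_templates(r, n_dirs)
    current = image.astype(float)
    direction_maps = []
    for _ in range(max_iter):
        d = detect_direction(current, direction)
        candidates = np.stack([ndimage.convolve(current, s, mode="reflect")
                               for s in smoothing])
        # Pick, per pixel, the output of the smoothing template indexed by d.
        current = np.take_along_axis(candidates, d[None, ...], axis=0)[0]
        direction_maps.append(d)
    return current, direction_maps


step = np.zeros((32, 32))
step[:, 16:] = 1.0  # noiseless vertical edge
smoothed, maps = smooth_edges(step)
```

On this noiseless step image the smoothing runs along the edge, so the edge is left unchanged; the per-iteration direction maps are kept because the next subsection compares them.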

2.2. Homogeneous Region Smoothing

From the principle of edge region smoothing, it can be seen that if a region is not affected by noise, it should keep the same direction over multiple iterations. However, in actual tests, only the edge regions kept the detected direction unchanged, while the homogeneous regions usually showed different directions over multiple iterations. The reason is that the directions in the edge regions usually correspond to a real gradient, which is detected consistently in every iteration, so the detection results remain unchanged. In contrast, the gradients in the homogeneous regions are usually caused by noise rather than by real structure. Since the gray levels of these regions usually change during iteration, the detected directions change as well. By using this property, the homogeneous regions can be distinguished from the edge regions. The calculation formulas are as follows:

where the symbols denote the kth difference map, two consecutive direction-index maps (one of which is the kth), the direction-difference map S, and the operation of taking the modulus. The generation process of the direction-difference map is shown in Figure 4.

In Figure 4, several direction-index maps are used to generate a direction-difference map through Equations (8) and (9). It can be seen from the direction-difference map that the homogeneous regions and the edge regions can be clearly distinguished. Some regions have a darker grayscale, indicating that the directions in these regions are consistent. They are usually the edge regions. Other regions are brighter, indicating more variation in direction during the iteration. They are usually homogeneous regions. Therefore, the value of the direction-difference map can be used to determine whether a region is an edge region or a homogeneous region.
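One way to compute such a map is sketched below. The exact form of Equations (8) and (9) is not reproduced here, so this is an assumption: consecutive direction-index maps are compared with a circular (modulus-style) difference over the eight directions, and the differences are summed. The function name `direction_difference_map` is hypothetical.

```python
import numpy as np


def direction_difference_map(direction_maps, n_dirs=8):
    """Sum of circular differences between consecutive direction-index maps."""
    maps = np.asarray(direction_maps, dtype=int)
    diff = np.abs(np.diff(maps, axis=0))     # difference between adjacent iterations
    circular = np.minimum(diff, n_dirs - diff)  # indices 0 and n_dirs - 1 are neighbours
    return circular.sum(axis=0).astype(float)


# Two pixels over three iterations: the first keeps direction 0,
# the second jumps 0 -> 7 -> 1 (circular steps of 1 and 2).
maps = [np.array([[0, 0]]), np.array([[0, 7]]), np.array([[0, 1]])]
S = direction_difference_map(maps)
```

A pixel whose direction never changes gets the value 0 (edge-like), while a pixel whose direction keeps changing gets a larger value (homogeneous-like).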

In the process of smoothing the edge regions, the homogeneous regions are also partially smoothed. However, because the homogeneous regions are isotropic, the anisotropic smoothing operator has only a limited effect there. Since the direction-difference map indirectly distinguishes the homogeneous regions from the edge regions, its values can be used for isotropic smoothing of the homogeneous regions. The smoothing operation is shown in Figure 5.

As shown in Figure 5, the values of the direction-difference map are taken as the variances of the Gaussian kernel function to smooth the homogeneous regions. Where the value is large, the region is considered to be strongly affected by noise, and the smoothing effect is close to mean smoothing. Where the value is small, the noise effect is considered small, a small variance is used, and the smoothing effect is close to keeping the original pixel value. In this way, smoothing adapts to the characteristics of different regions. The smoothing process is calculated as follows:

where the symbols denote the isotropic Gaussian smoothing result, the original SAR image I, the convolution operation ∗, the Gaussian kernel function, the Gaussian normalization term H, and the direction-difference map S.

To eliminate the salt-and-pepper noise in the smoothing results, a median filter with the same window size as the Gaussian function can be used for secondary smoothing. Since its window size is fixed, a fast median filtering algorithm can be used to complete the smoothing. The median-filtered image is taken as the result of homogeneous region smoothing. Iteration can be used to increase the smoothing effect in homogeneous regions.
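The spatially adaptive smoothing can be sketched as below, under assumptions: each value of the direction-difference map is treated as the Gaussian standard deviation (as stated in the introduction), the map is assumed to take only a few distinct values so that one fixed-window kernel per value is affordable, and pixels with a value of 0 keep their original intensity. The names `gaussian_kernel` and `homogeneous_smoothing` are hypothetical.

```python
import numpy as np
from scipy import ndimage


def gaussian_kernel(sigma, r):
    """Isotropic Gaussian on a fixed (2r+1) x (2r+1) window, normalized to sum to 1."""
    y, x = np.mgrid[-r:r + 1, -r:r + 1]
    g = np.exp(-(x ** 2 + y ** 2) / (2.0 * sigma ** 2))
    return g / g.sum()  # division by the normalization term


def homogeneous_smoothing(image, S, r=2):
    """Gaussian smoothing whose width follows S, then a median filter of the same window."""
    image = image.astype(float)
    out = image.copy()
    for s in np.unique(S):
        if s <= 0:
            continue  # no direction change: keep the original pixel value
        blurred = ndimage.convolve(image, gaussian_kernel(float(s), r), mode="reflect")
        mask = S == s
        out[mask] = blurred[mask]
    return ndimage.median_filter(out, size=2 * r + 1)


rng = np.random.default_rng(0)
noisy = rng.standard_normal((32, 32))
result = homogeneous_smoothing(noisy, np.full((32, 32), 4.0))
```

With a large map value and a small window, the Gaussian kernel is nearly flat, which is the "close to mean smoothing" behavior described above.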

2.3. Class Label Correction

Edge region smoothing has a better effect on the edge regions. Homogeneous region smoothing has a better effect on the homogeneous regions. Use the following formula to fuse the two results:

where the symbols denote the fusion result, the result of homogeneous region smoothing, the direction-difference map S, and the result of edge region smoothing.

In the homogeneous regions, the values of the direction-difference map are large, so the homogeneous region smoothing result receives a larger weight in the fusion. In the edge regions, the values are small, so it receives a smaller weight. After the fusion operation, the smoothing result of the whole image is obtained. Regarding this result as an approximation of the spatial information polynomials, a K-means clustering algorithm is used to obtain the preliminary segmentation result, denoted L.
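The fusion and clustering step might look like the following sketch. The fusion formula itself is not reproduced here, so the weighting is an assumption: the direction-difference map is scaled to [0, 1] and used as the weight of the homogeneous-region result. The small 1-D K-means with quantile initialization is a plain Lloyd iteration written for self-containment; `fuse` and `kmeans_1d` are hypothetical names.

```python
import numpy as np


def fuse(edge_result, homogeneous_result, S):
    """Weighted blend: large S favours the homogeneous-region smoothing result."""
    peak = S.max()
    w = S / peak if peak > 0 else np.zeros_like(S, dtype=float)
    return w * homogeneous_result + (1.0 - w) * edge_result


def kmeans_1d(values, k, iters=50):
    """Lloyd's algorithm on pixel intensities with deterministic quantile initialization."""
    v = values.ravel().astype(float)
    centers = np.quantile(v, (np.arange(k) + 0.5) / k)
    for _ in range(iters):
        labels = np.argmin(np.abs(v[:, None] - centers[None, :]), axis=1)
        new_centers = np.array([v[labels == j].mean() if np.any(labels == j) else centers[j]
                                for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels.reshape(values.shape), centers


E = np.zeros((4, 4))   # stand-in for the edge region smoothing result
G = np.ones((4, 4))    # stand-in for the homogeneous region smoothing result
S = np.zeros((4, 4))
S[:, 2:] = 2.0         # right half behaves like a homogeneous region
F = fuse(E, G, S)
L, centers = kmeans_1d(F, k=2)
```

In this toy case the left half keeps the edge-smoothing value and the right half takes the homogeneous-smoothing value, and the two clusters separate them.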

Through the observation and analysis of the clustering results, we found that due to slight edge degradation, a small number of pixels in the edge regions have class label errors. These errors can be corrected in some way. In image edge detection, usually only real targets have completely surrounded edges, while the error targets do not. This difference can be used for error class label correction.

In the process of class label correction, the edges should form closed regions as far as possible in order to obtain a better correction effect. Fortunately, the Canny algorithm connects the edge lines when performing edge detection, which improves the closedness of the regions surrounded by the edges. In addition, the Canny algorithm is simple and fast to implement, which helps preserve the efficiency of the proposed algorithm. Because the Canny algorithm is susceptible to noise, it cannot be applied directly to the original SAR image. Since the previous steps have greatly reduced the influence of noise, the Canny algorithm can be used effectively to extract the image edges. In this paper, the adaptive Canny function provided in MATLAB 2018 is used for edge extraction. Its output is the image edge map.

Then, the following formula is used to mark the image edge in the clustering results:

where the symbols denote the label map with marked edge pixels, the image edge map, and the result map L of K-means clustering.

Then, the majority voting method is used to correct the wrong class labels. The process is shown in Figure 6.

A fixed-size window slides over the image. In each window, the region growth algorithm is performed with the image edges as the constraint, and the class label of the center pixel is updated. During region growth, the number of pixels with each label is counted, and the class label with the largest count becomes the final class label of the center pixel. The calculation formula is as follows:

where the symbols denote the corrected result, the four-neighborhood region growth operation, the size W of the sliding window, and the image label map with marked edge pixels.
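The edge-constrained majority vote can be sketched as follows. This is an illustrative reading of the step, with assumptions: the edge map is passed as a separate boolean mask rather than being marked inside the label map, region growth uses the four-neighborhood and never crosses edge pixels or the window border, and votes are counted on the uncorrected labels. The function name `correct_labels` is hypothetical.

```python
from collections import deque

import numpy as np


def correct_labels(labels, edges, W=5):
    """For each non-edge pixel, grow a region inside a W x W window and take the majority label."""
    h, w = labels.shape
    half = W // 2
    out = labels.copy()
    for cy in range(h):
        for cx in range(w):
            if edges[cy, cx]:
                continue  # edge pixels are labelled in a later step
            y0, y1 = max(0, cy - half), min(h - 1, cy + half)
            x0, x1 = max(0, cx - half), min(w - 1, cx + half)
            seen = {(cy, cx)}
            queue = deque([(cy, cx)])
            counts = {}
            while queue:
                y, x = queue.popleft()
                counts[labels[y, x]] = counts.get(labels[y, x], 0) + 1
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (y0 <= ny <= y1 and x0 <= nx <= x1
                            and (ny, nx) not in seen and not edges[ny, nx]):
                        seen.add((ny, nx))
                        queue.append((ny, nx))
            out[cy, cx] = max(counts, key=counts.get)
    return out


labels = np.zeros((7, 9), dtype=int)
labels[:, 5:] = 1
labels[3, 5] = 0                  # one wrong label next to the edge
edges = np.zeros((7, 9), dtype=bool)
edges[:, 4] = True                # a closed edge separating the two regions
corrected = correct_labels(labels, edges, W=5)
```

Because growth stops at the edge column, the wrong pixel is outvoted only by pixels on its own side of the edge, and labels on the other side are not affected.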

As can be seen from Figure 6, under the constraint of the image edge, using region growth for class label correction will not affect other normal class labels, while the wrong pixel labels can be effectively corrected. Finally, it is necessary to assign the class label of the edge pixels. The calculation formula is as follows:

where

R is the final segmentation result;

is the fusion result; and

represents the eight neighborhoods. The formula is to mark the class label of the edge pixels as that of the most similar pixel in the neighborhoods. The specific execution steps of the proposed algorithm are shown in the flowchart in

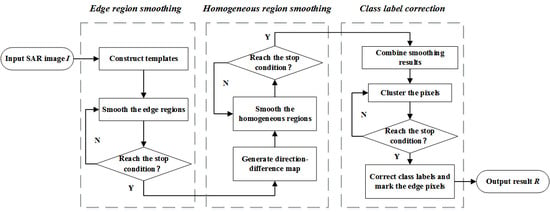

Figure 7.
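The final edge-pixel labelling step can be sketched as below. It assumes that similarity is measured as the absolute difference of fused intensities and that only non-edge pixels in the eight-neighborhood are candidates; an edge pixel with no such neighbor keeps its current label. The function name `assign_edge_labels` is hypothetical.

```python
import numpy as np


def assign_edge_labels(labels, edges, fused):
    """Give each edge pixel the label of its most similar non-edge 8-neighbour."""
    h, w = labels.shape
    out = labels.copy()
    for y, x in zip(*np.nonzero(edges)):
        best_label, best_diff = None, np.inf
        for dy in (-1, 0, 1):
            for dx in (-1, 0, 1):
                ny, nx = y + dy, x + dx
                if (dy or dx) and 0 <= ny < h and 0 <= nx < w and not edges[ny, nx]:
                    diff = abs(fused[ny, nx] - fused[y, x])
                    if diff < best_diff:
                        best_label, best_diff = labels[ny, nx], diff
        if best_label is not None:
            out[y, x] = best_label
    return out


fused = np.tile([0.0, 0.4, 1.0], (3, 1))   # middle column is closer to the left side
edges = np.zeros((3, 3), dtype=bool)
edges[:, 1] = True
labels = np.tile([1, 0, 2], (3, 1))        # 0 marks the still-unlabelled edge pixels
final = assign_edge_labels(labels, edges, fused)
```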

4. Discussion

ALFCM and CKSFCM are not robust to noise, so their results vary considerably under different levels of noise. The segmentation results of these two algorithms usually contain obvious noise, which decreases their accuracy. The accuracy of FKPFCM, NSFCM, and ILKFCM does not change much under different noise intensities, so they are more robust to noise. The problem with these algorithms is that pixel segmentation errors are more likely to occur at the edges, resulting in a decrease in accuracy. The proposed algorithm maintains high accuracy under different levels of noise, and the edges of its results are much clearer and smoother. There is almost no noise in the results of the proposed algorithm. However, the proposed method has an insufficient segmentation ability for small targets, such as thin-line targets. When using the proposed algorithm, it is best to ensure that the width of the target is larger than the width of the smoothing template. Alternatively, the width of the smoothing template and the parameter W of the class label correction process can be appropriately reduced to better detect small targets. In terms of time consumption, CKSFCM, ALFCM, and ILKFCM take the longest time. The time consumption of NSFCM and FKPFCM is relatively short, but the proposed algorithm has the shortest time consumption in all experiments. Therefore, considering both segmentation accuracy and time consumption, the proposed algorithm has obvious advantages over the comparison algorithms, which indicates its effectiveness.

Through experiments, we found that two parameters are important for the segmentation of small targets in an image: one is the width of the smoothing template, and the other is the parameter W in the class label correction process. To ensure effective smoothing without affecting small-target segmentation, a smoothing template with a width of 5 was used in the experiments. Smoothing can be performed efficiently without degrading edges only when the minimum width of the target is greater than this value. Although a template with a width of 5 is already very small, targets narrower than this may still degrade the segmentation result. Therefore, the proposed algorithm may be more suitable for SAR image segmentation where the minimum target width is greater than 5 pixels. In addition, the parameter W further affects the result. Because the edges of small targets are more easily degraded by smoothing, the edge detection algorithm cannot always extract a closed region around them. In this case, a value of W that is too large will cause the few correct labels inside small targets to be modified, leading to a further decrease in accuracy. Therefore, when the image contains targets of small width, a smaller W is the better choice.

Since most of the processing in the proposed algorithm is serial, memory freed in earlier steps can be reused in later steps. Therefore, the memory demand of the algorithm is determined by the step with the greatest memory demand. We found that the memory requirements were greatest when generating the direction-difference map. Since only adjacent direction maps are needed for the calculation, memory of twice the image size is enough to store intermediate results. Extra memory of twice the image size is also required to store the direction-difference map and the results of the edge region smoothing. Therefore, a total of four times the image size is required. The direction-detection process needs to store the direction map, response results, and detection templates. The edge region smoothing process needs to store the direction map, smoothing results, and smoothing templates. The homogeneous region smoothing process needs to store the direction-difference map and the smoothing results. However, the memory requirements of each of these processes are less than four times the image size. Edge detection, K-means clustering, and majority voting are performed sequentially, and each of them uses less memory than that. Therefore, at peak memory usage, four times the image size is required. Considering the storage of the original image and some other small intermediate variables, the proposed algorithm requires at least five to six times the memory size of the image.
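The arithmetic above can be turned into a rough estimate for a concrete image size. This is only a back-of-the-envelope sketch: the number of bytes per stored value is an assumption (8 bytes, i.e., double precision), and `peak_memory_bytes` is a hypothetical helper, not part of the proposed algorithm.

```python
def peak_memory_bytes(height, width, bytes_per_value=8):
    """Estimate the peak memory range from the five-to-six image-size multiples in the text."""
    image_bytes = height * width * bytes_per_value
    working = 2 + 2           # two adjacent direction maps + difference map and edge result
    low = (working + 1) * image_bytes   # plus the original image
    high = (working + 2) * image_bytes  # plus small intermediate variables
    return low, high


low, high = peak_memory_bytes(1000, 1000)
print(f"{low / 1e6:.0f} MB to {high / 1e6:.0f} MB")
```

For a 1000 × 1000 image stored at 8 bytes per value, this gives roughly 40 MB to 48 MB.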