Detection of Pine Shoot Beetle (PSB) Stress on Pine Forests at Individual Tree Level using UAV-Based Hyperspectral Imagery and Lidar

Abstract

1. Introduction

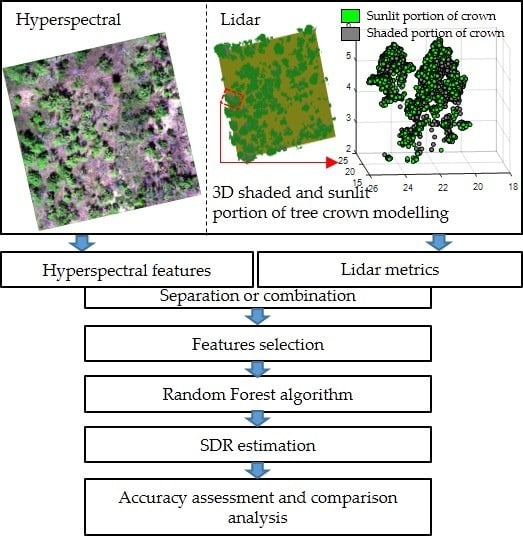

2. Materials and Methods

2.1. Study Area and Field Measurements

2.2. Remote Sensing Data Acquisition and Processing

2.2.1. Hyperspectral Imagery

2.2.2. Tree Crown Segmentation from Hyperspectral Imagery

2.2.3. Lidar Data

2.2.4. Individual Tree Segmentation from Lidar

2.2.5. 3D Modelling of Shaded and Sunlit Portions of Tree Crowns

2.3. Feature Extraction

2.3.1. Hyperspectral Feature Extraction

2.3.2. Lidar Metrics Extraction

2.3.3. Retrieval of Leaf Chlorophyll Content (Cab) from Hyperspectral Images

2.4. Feature Selection and Prediction Model for SDR

- (1) Hyperspectral approach (using only HI variables for prediction): the tree crowns were segmented using HI data only; then hyperspectral indices and Cab were derived from the selected sunlit pixels within each tree crown. Finally, 11 hyperspectral features (10 indices plus Cab) were chosen for estimating SDR.

- (2) Lidar approach (using only lidar variables for prediction): the tree crowns were segmented using lidar data, and 14 lidar metrics were then chosen for estimating the SDR of each tree crown.

- (3) Combined approach (using both HI and lidar variables): the crowns delineated by lidar segmentation were applied to the hyperspectral images, so that both lidar metrics and hyperspectral features were derived for each tree crown. The combination of 11 hyperspectral features and 14 lidar metrics was chosen for estimating the SDR.
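The three approaches differ in which per-crown predictors enter the SDR model (and in which segmentation supplies the crowns). A minimal Python sketch of the three predictor sets follows, using the feature names from the hyperspectral-index and lidar-metric tables. The function names and the dict-based per-crown inputs are hypothetical; crown segmentation and the regression model itself are outside the scope of the sketch.

```python
# Predictor names as listed in the hyperspectral-index and lidar-metric tables.
HS_FEATURES = [
    "MSR", "SR_680", "SR_705", "NDVI", "ACI", "PSI",
    "RVSI1", "RVSI2", "PSSR", "NWI", "Cab",
]
LIDAR_FEATURES = [
    "Int_mean_first", "Int_CV", "Int_P25", "Int_P75", "Int_C25", "Int_C50",
    "Int_CV_SE_Top", "Int_mean_NW_Top", "Int_mean_NE&SW_Top",
    "Int_CV_first_E_Top", "Int_mean_N&SE_Top", "Int_Shd_Top", "CD", "GF",
]


def feature_names(approach):
    """Return the ordered predictor names for one of the three approaches."""
    if approach == "hyperspectral":
        return list(HS_FEATURES)          # 11 features
    if approach == "lidar":
        return list(LIDAR_FEATURES)       # 14 metrics
    if approach == "combined":
        return HS_FEATURES + LIDAR_FEATURES  # 25 predictors
    raise ValueError(f"unknown approach: {approach!r}")


def feature_vector(approach, hs_values, lidar_values):
    """Build one crown's predictor vector in a fixed column order.

    hs_values / lidar_values: dicts of per-crown features (hypothetical
    format). A KeyError means a feature required by the approach is missing.
    """
    merged = {**hs_values, **lidar_values}
    return [merged[name] for name in feature_names(approach)]
```

Keeping a fixed column order per approach lets the same regression code be trained on each feature set and the results compared. The reference list cites random forests (Breiman; Liaw and Wiener), but which model consumes these vectors is an assumption here, not something this list states.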

3. Results

3.1. Inversion Results of Tree Crown Cab

3.2. Feature Selection

3.3. Estimation of Shoot Damaged Ratio (SDR)

3.4. Shoot Damaged Ratio (SDR) Mapping

4. Discussion

4.1. Error Sources

- (1) The poor performance of individual tree crown segmentation using HI data only (STDR = 48%) increased the uncertainty of hyperspectral feature extraction. Without vertical information, hyperspectral images alone could hardly separate overlapping crowns or distinguish trees from the understory [79]. Furthermore, image classification techniques could hardly distinguish the damaged parts of tree crowns, red-attack crowns, and gray-attack crowns from bare soil.

- (2) The underestimation of tree crown SDR was mainly caused by the overestimation of Cab (Figure 5a). When crowns were delineated from HI data, the damaged parts of the crowns were severely underestimated, which changed the crown-level canopy reflectance: the reflectance of damaged crowns became similar (or close) to that of healthy crowns.

- (3) Excluding the shaded pixels of tree crowns may lead to underestimating the severity of PSB damage, because the shaded pixels may contain damaged shoots.
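Point (3) can be illustrated with a toy crown. The sketch below assumes a plain NIR-brightness threshold as a stand-in for the sunlit/shaded separation (not the authors' method) and uses made-up pixel labels. It only shows how dropping shaded pixels that happen to hold damaged shoots lowers the crown-level damaged fraction; all names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CrownPixel:
    nir: float      # NIR reflectance of the pixel (hypothetical input)
    damaged: bool   # True if the pixel shows a damaged shoot


def damaged_fraction(pixels):
    """Share of pixels labelled damaged; 0.0 for an empty crown."""
    if not pixels:
        return 0.0
    return sum(p.damaged for p in pixels) / len(pixels)


def sunlit_pixels(pixels, nir_threshold):
    """Keep pixels at or above an NIR brightness threshold.

    A plain threshold is an assumed stand-in for sunlit/shaded separation,
    not the method used in the paper.
    """
    return [p for p in pixels if p.nir >= nir_threshold]


# Toy crown: 6 sunlit pixels (1 damaged) and 4 shaded pixels (3 damaged).
crown = ([CrownPixel(0.40, False)] * 5 + [CrownPixel(0.40, True)]
         + [CrownPixel(0.10, True)] * 3 + [CrownPixel(0.10, False)])

print(damaged_fraction(crown))                       # 0.4 (all pixels)
print(damaged_fraction(sunlit_pixels(crown, 0.25)))  # 0.16666666666666666 (sunlit only)
```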

4.2. Contributions of Lidar

4.3. Possible Improvements of Inversion

4.4. SDR at Individual Tree Level

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Waring, R.H.; Pitman, G.B. Modifying Lodgepole Pine Stands to Change Susceptibility to Mountain Pine Beetle Attack. Ecology 1985, 66, 889–897.

- Ayres, M.P.; Lombardero, M.J. Assessing the consequences of global change for forest disturbance from herbivores and pathogens. Sci. Total Environ. 2000, 262, 263–286.

- Wingfield, M.J.; Brockerhoff, E.G.; Wingfield, B.D.; Slippers, B. Planted forest health: The need for a global strategy. Science 2015, 349, 832–836.

- Kautz, M.; Anthoni, P.; Meddens, A.J.H.; Pugh, T.A.M.; Arneth, A. Simulating the recent impacts of multiple biotic disturbances on forest carbon cycling across the United States. Glob. Chang. Biol. 2017, 24, 2079–2092.

- Yu, L.; Huang, J.; Zong, S.; Huang, H.; Luo, Y. Detecting Shoot Beetle Damage on Yunnan Pine Using Landsat Time-Series Data. Forests 2018, 9, 39.

- Coulson, R.N.; McFadden, B.A.; Pulley, P.E.; Lovelady, C.N.; Fitzgerald, J.W.; Jack, S.B. Heterogeneity of forest landscapes and the distribution and abundance of the southern pine beetle. For. Ecol. Manag. 1999, 114, 471–485.

- Townsend, P.A.; Singh, A.; Foster, J.R.; Rehberg, N.J.; Kingdon, C.C.; Eshleman, K.N.; Seagle, S.W. A general Landsat model to predict canopy defoliation in broadleaf deciduous forests. Remote Sens. Environ. 2012, 119, 255–265.

- Foster, J.R.; Mladenoff, D.J. Spatial dynamics of a gypsy moth defoliation outbreak and dependence on habitat characteristics. Landsc. Ecol. 2013, 28, 1307–1320.

- Senf, C.; Seidl, R.; Hostert, P. Remote sensing of forest insect disturbances: Current state and future directions. Int. J. Appl. Earth Obs. Geoinf. 2017, 60, 49–60.

- Spruce, J.P.; Sader, S.; Ryan, R.E.; Smoot, J.; Kuper, P.; Ross, K.; Prados, D.; Russell, J.; Gasser, G.; McKellip, R.; et al. Assessment of MODIS NDVI time series data products for detecting forest defoliation by gypsy moth outbreaks. Remote Sens. Environ. 2011, 115, 427–437.

- Somers, B.; Verbesselt, J.; Ampe, E.M.; Sims, N.; Verstraeten, W.W.; Coppin, P. Spectral mixture analysis to monitor defoliation in mixed-aged Eucalyptus globulus Labill plantations in southern Australia using Landsat 5-TM and EO-1 Hyperion data. Int. J. Appl. Earth Obs. Geoinf. 2010, 12, 270–277.

- Kantola, T.; Vastaranta, M.; Yu, X.W.; Lyytikäinen-Saarenmaa, P.; Holopainen, M.; Talvitie, M.; Kaasalainen, S.; Solberg, S.; Hyyppä, J. Classification of defoliated trees using tree-level airborne laser scanning data combined with aerial images. Remote Sens. 2010, 2, 2665–2679.

- Oumar, Z.; Mutanga, O. Integrating environmental variables and WorldView-2 image data to improve the prediction and mapping of Thaumastocoris peregrinus (bronze bug) damage in plantation forests. ISPRS J. Photogramm. Remote Sens. 2014, 87, 39–46.

- Meigs, G.W.; Kennedy, R.E.; Cohen, W.B. A Landsat time series approach to characterize bark beetle and defoliator impacts on tree mortality and surface fuels in conifer forests. Remote Sens. Environ. 2011, 115, 3707–3718.

- Senf, C.; Pflugmacher, D.; Wulder, M.A.; Hostert, P. Characterizing spectral–temporal patterns of defoliator and bark beetle disturbances using Landsat time series. Remote Sens. Environ. 2015, 170, 166–177.

- Amman, G.D. Mountain pine beetle—Identification, biology, causes of outbreaks, and entomological research needs. In BC-X-Canadian Forestry Service; Pacific Forest Research Centre: Victoria, BC, Canada, 1982.

- Coops, N.C.; Johnson, M.; Wulder, M.A.; White, J.C. Assessment of QuickBird high spatial resolution imagery to detect red attack damage due to mountain pine beetle infestation. Remote Sens. Environ. 2006, 103, 67–80.

- Wulder, M.A.; Dymond, C.C.; White, J.C.; Leckie, D.G.; Carroll, A.L. Surveying mountain pine beetle damage of forests: A review of remote sensing opportunities. For. Ecol. Manag. 2006, 221, 27–41.

- Assal, T.J.; Sibold, J.; Reich, R. Modeling a Historical Mountain Pine Beetle Outbreak Using Landsat MSS and Multiple Lines of Evidence. Remote Sens. Environ. 2014, 155, 275–288.

- Walter, J.A.; Platt, R.V. Multi-temporal analysis reveals that predictors of mountain pine beetle infestation change during outbreak cycles. For. Ecol. Manag. 2013, 302, 308–318.

- West, D.R.; Briggs, J.S.; Jacobi, W.R.; Negrón, J.F. Mountain pine beetle-caused mortality over eight years in two pine hosts in mixed-conifer stands of the southern Rocky Mountains. For. Ecol. Manag. 2014, 334, 321–330.

- Sprintsin, M.; Chen, J.M.; Czurylowicz, P. Combining land surface temperature and shortwave infrared reflectance for early detection of mountain pine beetle infestations in western Canada. J. Appl. Remote Sens. 2011, 5, 53566.

- Coops, N.C.; Gillanders, S.N.; Wulder, M.A.; Gergel, S.E.; Nelson, T.; Goodwin, N.R. Assessing changes in forest fragmentation following infestation using time series Landsat imagery. For. Ecol. Manag. 2010, 259, 2355–2365.

- Wulder, M.A.; White, J.C.; Coops, N.C.; Butson, C.R. Multi-temporal analysis of high spatial resolution imagery for disturbance monitoring. Remote Sens. Environ. 2008, 112, 2729–2740.

- Wulder, M.A.; Ortlepp, S.M.; White, J.C.; Coops, N.C.; Coggins, S.B. Monitoring the impacts of mountain pine beetle mitigation. For. Ecol. Manag. 2009, 258, 1181–1187.

- Lin, Q.; Huang, H.; Yu, L.; Wang, J. Detection of shoot beetle stress on Yunnan pine forest using a coupled LIBERTY2-INFORM simulation. Remote Sens. 2018, 10, 1133.

- Meddens, A.J.H.; Hicke, J.A.; Vierling, L.A. Evaluating the potential of multispectral imagery to map multiple stages of tree mortality. Remote Sens. Environ. 2011, 115, 1632–1642.

- Näsi, R.; Honkavaara, E.; Lyytikäinen-Saarenmaa, P.; Blomqvist, M.; Litkey, P.; Hakala, T.; Viljanen, N.; Kantola, T.; Tanhuanpää, T.; Holopainen, M. Using UAV-Based Photogrammetry and Hyperspectral Imaging for Mapping Bark Beetle Damage at Tree-Level. Remote Sens. 2015, 7, 15467–15493.

- Shendryk, I.; Broich, M.; Tulbure, M.G.; McGrath, A.; Keith, D.; Alexandrov, S.V. Mapping individual tree health using full-waveform airborne laser scans and imaging spectroscopy: A case study for a floodplain eucalypt forest. Remote Sens. Environ. 2016, 187, 202–217.

- Cook, B.D.; Corp, L.A.; Nelson, R.F.; Middleton, E.M.; Morton, D.C.; McCorkel, J.T.; Masek, J.G.; Ranson, K.J.; Ly, V.; Montesano, P.M. NASA Goddard's lidar, hyperspectral and thermal (G-LiHT) airborne imager. Remote Sens. 2013, 5, 4045–4066.

- Asner, G.P.; Martin, R.E.; Knapp, D.E.; Tupayachi, R.; Anderson, C.B.; Sinca, F.; Vaughn, N.R.; Llactayo, W. Airborne laser-guided imaging spectroscopy to map forest trait diversity and guide conservation. Science 2017, 355, 385.

- Meng, R.; Dennison, P.E.; Zhao, F.; Shendryk, I.; Rickert, A.; Hanavan, R.P.; Cook, B.D.; Serbin, S.P. Mapping canopy defoliation by herbivorous insects at the individual tree level using bi-temporal airborne imaging spectroscopy and lidar measurements. Remote Sens. Environ. 2018, 215, 170–183.

- Hanavan, R.P.; Pontius, J.; Hallett, R. A 10-Year Assessment of Hemlock Decline in the Catskill Mountain Region of New York State Using Hyperspectral Remote Sensing Techniques. J. Econ. Entomol. 2015, 108, 339–349.

- Donoghue, D.N.M.; Watt, P.J.; Cox, N.J.; Wilson, J. Remote sensing of species mixtures in conifer plantations using lidar height and intensity data. Remote Sens. Environ. 2007, 110, 509–522.

- Hovi, A.; Korhonen, L.; Vauhkonen, J.; Korpela, I. Lidar waveform features for tree species classification and their sensitivity to tree- and acquisition-related parameters. Remote Sens. Environ. 2016, 173, 224–237.

- Liu, L.; Coops, N.C.; Aven, N.W.; Pang, Y. Mapping urban tree species using integrated airborne hyperspectral and lidar remote sensing data. Remote Sens. Environ. 2017, 200, 170–182.

- Höfle, B.; Pfeifer, N. Correction of laser scanning intensity data: Data and model-driven approaches. ISPRS J. Photogramm. Remote Sens. 2007, 62, 415–433.

- Solberg, S.; Næsset, E.; Hanssen, K.H.; Christiansen, E. Mapping defoliation during a severe insect attack on Scots pine using airborne laser scanning. Remote Sens. Environ. 2006, 102, 364–376.

- Hanssen, K.H.; Solberg, S. Assessment of defoliation during a pine sawfly outbreak: Calibration of airborne laser scanning data with hemispherical photography. For. Ecol. Manag. 2007, 250, 9–16.

- Senf, C.; Campbell, E.M.; Pflugmacher, D.; Wulder, M.A.; Hostert, P. A multi-scale analysis of western spruce budworm outbreak dynamics. Landsc. Ecol. 2017, 32, 501–514.

- Ashton, E.A.; Wemett, B.D.; Leathers, R.A.; Downes, T.V. A novel method for illumination suppression in hyperspectral images. Proc. SPIE 2008, 6966, 69660C.

- Huang, H.; Qin, W.; Liu, Q. RAPID: A Radiosity Applicable to Porous IndiviDual Objects for directional reflectance over complex vegetated scenes. Remote Sens. Environ. 2013, 132, 221–237.

- Yuan, J.; Wang, D.L.; Li, R. Remote Sensing Image Segmentation by Combining Spectral and Texture Features. IEEE Trans. Geosci. Remote Sens. 2014, 52, 16–24.

- Sauvola, J.; Pietikäinen, M. Adaptive document image binarization. Pattern Recogn. 2000, 33, 225–236.

- Zarco-Tejada, P.J.; Hornero, A.; Beck, P.S.A.; Kattenborn, T.; Kempeneers, P. Chlorophyll content estimation in an open-canopy conifer forest with Sentinel-2A and hyperspectral imagery in the context of forest decline. Remote Sens. Environ. 2019, 223, 320–335.

- Zhao, X.; Guo, Q.; Su, Y.; Xue, B. Improved progressive TIN densification filtering algorithm for airborne lidar data in forested areas. ISPRS J. Photogramm. Remote Sens. 2016, 117, 79–91.

- Li, W.; Guo, Q.; Jakubowski, M.K.; Kelly, M. A new method for segmenting individual trees from the lidar point cloud. Photogramm. Eng. Remote Sens. 2012, 78, 75–84.

- Janoutová, R.; Homolová, L.; Malenovský, Z.; Hanuš, J.; Lauret, N.; Gastellu-Etchegorry, J. Influence of 3D Spruce Tree Representation on Accuracy of Airborne and Satellite Forest Reflectance Simulated in DART. Forests 2019, 10, 292.

- MacArthur, R.H.; Horn, H.S. Foliage profile by vertical measurements. Ecology 1969, 50, 802–804.

- Almeida, D.R.A.D.; Stark, S.C.; Shao, G.; Schietti, J.; Nelson, B.W.; Silva, C.A.; Gorgens, E.B.; Valbuena, R.; Papa, D.D.A.; Brancalion, P.H.S. Optimizing the Remote Detection of Tropical Rainforest Structure with Airborne lidar: Leaf Area Profile Sensitivity to Pulse Density and Spatial Sampling. Remote Sens. 2019, 11, 92.

- Martin, R.; Chadwick, K.; Brodrick, P.; Carranza-Jimenez, L.; Vaughn, N.; Asner, G. An Approach for Foliar Trait Retrieval from Airborne Imaging Spectroscopy of Tropical Forests. Remote Sens. 2018, 10, 199.

- Shi, Y.; Skidmore, A.K.; Wang, T.; Holzwarth, S.; Heiden, U.; Pinnel, N.; Zhu, X.; Heurich, M. Tree species classification using plant functional traits from lidar and hyperspectral data. Int. J. Appl. Earth Obs. Geoinf. 2018, 73, 207–219.

- Shi, Y.; Wang, T.; Skidmore, A.K.; Heurich, M. Important lidar metrics for discriminating forest tree species in Central Europe. ISPRS J. Photogramm. Remote Sens. 2018, 137, 163–174.

- Lin, Y.; Hyyppä, J. A comprehensive but efficient framework of proposing and validating feature parameters from airborne lidar data for tree species classification. Int. J. Appl. Earth Obs. Geoinf. 2016, 46, 45–55.

- Rivera, J.; Verrelst, J.; Leonenko, G.; Moreno, J. Multiple Cost Functions and Regularization Options for Improved Retrieval of Leaf Chlorophyll Content and LAI through Inversion of the PROSAIL Model. Remote Sens. 2013, 5, 3280–3304.

- Verrelst, J.; Rivera, J.P.; Leonenko, G.; Alonso, L.; Moreno, J. Optimizing LUT-Based RTM Inversion for Semiautomatic Mapping of Crop Biophysical Parameters from Sentinel-2 and -3 Data: Role of Cost Functions. IEEE Trans. Geosci. Remote Sens. 2014, 52, 257–269.

- Ferreira, M.P.; Féret, J.; Grau, E.; Gastellu-Etchegorry, J.; Do Amaral, C.H.; Shimabukuro, Y.E.; de Souza Filho, C.R. Retrieving structural and chemical properties of individual tree crowns in a highly diverse tropical forest with 3D radiative transfer modeling and imaging spectroscopy. Remote Sens. Environ. 2018, 211, 276–291.

- Jacquemoud, S.; Verhoef, W.; Baret, F.; Bacour, C.; Zarco-Tejada, P.J.; Asner, G.P.; François, C.; Ustin, S.L. PROSPECT+SAIL models: A review of use for vegetation characterization. Remote Sens. Environ. 2009, 113, S56–S66.

- Jacquemoud, S.; Baret, F. PROSPECT: A model of leaf optical properties spectra. Remote Sens. Environ. 1990, 34, 75–91.

- Verhoef, W.; Jia, L.; Xiao, Q.; Su, Z. Unified Optical-Thermal Four-Stream Radiative Transfer Theory for Homogeneous Vegetation Canopies. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1808–1822.

- Féret, J.B.; Gitelson, A.A.; Noble, S.D.; Jacquemoud, S. PROSPECT-D: Towards modeling leaf optical properties through a complete lifecycle. Remote Sens. Environ. 2017, 193, 204–215.

- Ali, A.M.; Skidmore, A.K.; Darvishzadeh, R.; Duren, I.V.; Holzwarth, S.; Mueller, J. Retrieval of forest leaf functional traits from HySpex imagery using radiative transfer models and continuous wavelet analysis. ISPRS J. Photogramm. Remote Sens. 2016, 122, 68–80.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Mutanga, O.; Adam, E.; Cho, M.A. High density biomass estimation for wetland vegetation using WorldView-2 imagery and random forest regression algorithm. Int. J. Appl. Earth Obs. Geoinf. 2012, 18, 399–406.

- Archer, K.J.; Kimes, R.V. Empirical characterization of random forest variable importance measures. Comput. Stat. Data Anal. 2008, 52, 2249–2260.

- Verikas, A.; Gelzinis, A.; Bacauskiene, M. Mining data with random forests: A survey and results of new tests. Pattern Recogn. 2011, 44, 330–349.

- Abdel-Rahman, E.M.; Ahmed, F.B.; Ismail, R. Random forest regression and spectral band selection for estimating sugarcane leaf nitrogen concentration using EO-1 Hyperion hyperspectral data. Int. J. Remote Sens. 2013, 34, 712–728.

- Immitzer, M.; Atzberger, C.; Koukal, T. Tree Species Classification with Random Forest Using Very High Spatial Resolution 8-Band WorldView-2 Satellite Data. Remote Sens. 2012, 4, 2661–2693.

- Liaw, A.; Wiener, M. Classification and Regression by randomForest. R News 2002, 2, 18–22.

- Chen, J.M. Evaluation of Vegetation Indices and a Modified Simple Ratio for Boreal Applications. Can. J. Remote Sens. 1996, 22, 229–242.

- Sims, D.A.; Gamon, J.A. Relationships between leaf pigment content and spectral reflectance across a wide range of species, leaf structures and developmental stages. Remote Sens. Environ. 2002, 81, 337–354.

- Rouse, J.W., Jr.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 1, 309–317.

- Gamon, J.A.; Surfus, J.S. Assessing Leaf Pigment Content and Activity with a Reflectometer. New Phytol. 1999, 143, 105–117.

- Carter, G.A.; Miller, R.L. Early detection of plant stress by digital imaging within narrow stress-sensitive wavebands. Remote Sens. Environ. 1994, 50, 295–302.

- Blackburn, G.A. Quantifying Chlorophylls and Carotenoids at Leaf and Canopy Scales: An Evaluation of Some Hyperspectral Approaches. Remote Sens. Environ. 1998, 66, 273–285.

- Babar, M.A.; Reynolds, M.P.; van Ginkel, M.; Klatt, A.R.; Raun, W.R.; Stone, M.L. Spectral Reflectance Indices as a Potential Indirect Selection Criteria for Wheat Yield under Irrigation. Crop Sci. 2006, 46, 578.

- Pontius, J.; Martin, M.; Plourde, L.; Hallett, R. Ash decline assessment in emerald ash borer-infested regions: A test of tree-level, hyperspectral technologies. Remote Sens. Environ. 2008, 112, 2665–2676.

- Ma, Y.; Min, H.; Bao, Y.; Zhu, Q. Automatic threshold method and optimal wavelength selection for insect-damaged vegetable soybean detection using hyperspectral images. Comput. Electron. Agric. 2014, 106, 102–110.

- Tochon, G.; Féret, J.B.; Valero, S.; Martin, R.E.; Knapp, D.E.; Salembier, P.; Chanussot, J.; Asner, G.P. On the use of binary partition trees for the tree crown segmentation of tropical rainforest hyperspectral images. Remote Sens. Environ. 2015, 159, 318–331.

- Stereńczak, K.; Mielcarek, M.; Modzelewska, A.; Kraszewski, B.; Fassnacht, F.E.; Hilszczański, J. Intra-annual Ips typographus outbreak monitoring using a multi-temporal GIS analysis based on hyperspectral and ALS data in the Białowieża Forests. For. Ecol. Manag. 2019, 442, 105–116.

- Wermelinger, B. Ecology and management of the spruce bark beetle Ips typographus—A review of recent research. For. Ecol. Manag. 2004, 202, 67–82.

- Jönsson, A.M.; Appelberg, G.; Harding, S.; Bärring, L. Spatio-temporal impact of climate change on the activity and voltinism of the spruce bark beetle, Ips typographus. Glob. Chang. Biol. 2009, 15, 486–499.

- Kautz, M.; Dworschak, K.; Gruppe, A.; Schopf, R. Quantifying spatio-temporal dispersion of bark beetle infestations in epidemic and non-epidemic conditions. For. Ecol. Manag. 2011, 262, 598–608.

| Variable | Mean | Standard Deviation | Maximum | Minimum | Range |
|---|---|---|---|---|---|
| H (m) | 4.5 | 1.6 | 9.8 | 1.2 | 8.6 |
| CBH (m) | 2.5 | 1.2 | 5.8 | 0.5 | 5.3 |
| DBH (cm) | 8.9 | 4.0 | 25 | 2.5 | 22.5 |
| CD (m) | 2.2 | 1.0 | 7.3 | 0.5 | 6.8 |
| Cab (μg/cm²) | 32.3 | 14.9 | 42.8 | 0.5 | 42.3 |
| SDR (%) | 26 | 35 | 100 | 0 | 100 |

| Parameter | Description | Unit | Range |
|---|---|---|---|
| N | Structure parameter | - | 1.5–2.5 |
| Cm | Leaf mass per area | g cm−2 | 0.005–0.035 |
| Cab | Leaf chlorophyll content | μg cm−2 | 0.5–43 |
| Cw | Equivalent water thickness | cm | 0.01 |
| Car | Carotenoid content | μg cm−2 | 3–12 |
| Canth | Anthocyanin content | μg cm−2 | 0.1–4 |
| LAI | Leaf area index | - | 0.25–3.5 |
| ALA | Average leaf angle | degree | 30–70 |
| hspot | Hot spot size | - | 0.01 |
| tts | Solar zenith angle | degree | 25 |
| tto | Observer zenith angle | degree | 0 |
| psi | Relative azimuth angle | degree | 0 |
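These ranges define the parameter space for retrieving Cab. The reference list includes look-up-table (LUT) inversion studies with cost functions (Rivera et al. 2013; Verrelst et al. 2014), so the sketch below assumes that kind of scheme: uniform random sampling within the tabulated ranges, an RMSE cost, and the mean Cab of the n best-matching entries. The forward model is passed in as a function because no radiative transfer code is given here; in practice it would be a PROSPECT+SAIL-type model (the Canth parameter suggests PROSPECT-D). All function names are hypothetical.

```python
import math
import random

# Parameter ranges from the table above; fixed parameters use (v, v).
PARAM_RANGES = {
    "N": (1.5, 2.5),
    "Cm": (0.005, 0.035),   # g cm-2
    "Cab": (0.5, 43.0),     # ug cm-2
    "Cw": (0.01, 0.01),     # cm
    "Car": (3.0, 12.0),     # ug cm-2
    "Canth": (0.1, 4.0),    # ug cm-2
    "LAI": (0.25, 3.5),
    "ALA": (30.0, 70.0),    # degree
    "hspot": (0.01, 0.01),
    "tts": (25.0, 25.0),    # degree
    "tto": (0.0, 0.0),      # degree
    "psi": (0.0, 0.0),      # degree
}


def sample_parameters(n, seed=0):
    """Draw n parameter sets uniformly within PARAM_RANGES (assumed scheme)."""
    rng = random.Random(seed)
    return [{k: rng.uniform(lo, hi) for k, (lo, hi) in PARAM_RANGES.items()}
            for _ in range(n)]


def build_lut(param_sets, forward_model):
    """Pair each parameter set with the spectrum simulated by forward_model.

    forward_model(params) -> list of reflectances; a placeholder for a
    PROSPECT+SAIL-type model, which is not implemented here.
    """
    return [(p, forward_model(p)) for p in param_sets]


def rmse(a, b):
    """Root-mean-square difference between two equally long spectra."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def invert_cab(measured, lut, n_best=10):
    """Mean Cab of the n_best LUT entries closest (by RMSE) to measured."""
    ranked = sorted(lut, key=lambda entry: rmse(measured, entry[1]))
    best = ranked[:n_best]
    return sum(p["Cab"] for p, _ in best) / len(best)
```

Averaging several best-matching entries rather than taking the single closest one is a common way to stabilise LUT inversion against the ill-posedness of the problem; the number of entries averaged, the cost function, and the LUT size are all tunable choices, not values taken from this paper.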

| Variable | Index or Description | Formula | Reference |
|---|---|---|---|
| MSR | Modified simple ratio | MSR = (R800/R670 − 1) / sqrt(R800/R670 + 1) | [70] |
| SR_680 | Narrowband simple ratio 680 | SR_680 = R800/R680 | [71] |
| SR_705 | Narrowband simple ratio 705 | SR_705 = R750/R705 | [71] |
| NDVI | Normalized Difference Vegetation Index | NDVI = (R800 − R670)/(R800 + R670) | [72] |
| ACI | Anthocyanin content index | | [73] |
| PSI | Plant stress index | PSI = R695/R760 | [74] |
| RVSI1 | Ratio vegetation stress index | RVSI1 = R600/R760 | [74] |
| RVSI2 | Ratio vegetation stress index | RVSI2 = R710/R760 | [74] |
| PSSR | Pigment specific simple ratio | PSSR = R800/R635 | [75] |
| NWI | Normalized water index | NWI = (R970 − R850)/(R970 + R850) | [76] |
| Cab | Leaf chlorophyll content | Retrieved from hyperspectral images (Section 2.3.3) | |

Rλ denotes the reflectance at wavelength λ (nm).
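The formulas above translate directly into code. This sketch assumes the reflectance spectrum is a dict mapping band-centre wavelength (nm) to reflectance (for example, a crown's sunlit pixels averaged, which is itself an assumption) and reads each Rλ from the nearest available band, since sensor bands rarely fall exactly on these wavelengths. ACI is omitted because its formula is not given in the table, and Cab comes from the separate inversion step. The function name is hypothetical.

```python
import math


def vegetation_indices(spectrum):
    """Compute the tabulated indices from one reflectance spectrum.

    spectrum: dict mapping band-centre wavelength (nm) -> reflectance
    (hypothetical format). Each R<wl> in the formulas is read from the
    band closest to wl.
    """
    def r(wl):
        nearest = min(spectrum, key=lambda band: abs(band - wl))
        return spectrum[nearest]

    ratio = r(800) / r(670)
    return {
        "MSR": (ratio - 1.0) / math.sqrt(ratio + 1.0),
        "SR_680": r(800) / r(680),
        "SR_705": r(750) / r(705),
        "NDVI": (r(800) - r(670)) / (r(800) + r(670)),
        "PSI": r(695) / r(760),
        "RVSI1": r(600) / r(760),
        "RVSI2": r(710) / r(760),
        "PSSR": r(800) / r(635),
        "NWI": (r(970) - r(850)) / (r(970) + r(850)),
        # ACI omitted: its formula is not given in the table.
    }
```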

| Variable | Definition |
|---|---|
| Int_mean_first | Mean first-return intensity of the crown |
| Int_CV | Coefficient of variation of crown return intensity |
| Int_P25 | 25th percentile of crown return intensity |
| Int_P75 | 75th percentile of crown return intensity |
| Int_C25 | 25th cumulative percentile of crown return intensity |
| Int_C50 | 50th cumulative percentile of crown return intensity |
| Int_CV_SE_Top | Coefficient of variation of return intensity in the top of the SE part of the crown |
| Int_mean_NW_Top | Mean return intensity in the top of the NW part of the crown |
| Int_mean_NE&SW_Top | Mean return intensity in the top of the NE and SW parts of the crown |
| Int_CV_first_E_Top | Coefficient of variation of first-return intensity in the top of the E part of the crown |
| Int_mean_N&SE_Top | Mean return intensity in the top of the N and SE parts of the crown |
| Int_Shd_Top | Mean return intensity in the top of the shaded part of the crown |
| CD | Crown density |
| GF | Gap fraction |
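The first four metrics in the table have direct definitions and can be sketched as below. Assumptions: each crown's points arrive as (intensity, return number) pairs, CV uses the population standard deviation, and percentiles use linear interpolation between sorted values (the convention NumPy's `percentile` uses by default). The cumulative percentiles, the directional "_Top" metrics, CD and GF are left out because their exact definitions are not given here; function names are hypothetical.

```python
import statistics


def percentile(values, q):
    """q-th percentile (0-100), linearly interpolated between sorted values."""
    s = sorted(values)
    if not s:
        raise ValueError("percentile of empty data")
    pos = (len(s) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def crown_intensity_metrics(returns):
    """Basic intensity metrics for one segmented crown.

    returns: list of (intensity, return_number) tuples for the crown's points
    (hypothetical input format); return_number == 1 marks first returns.
    """
    intensities = [i for i, _ in returns]
    first = [i for i, n in returns if n == 1]
    mean_all = statistics.mean(intensities)
    return {
        "Int_mean_first": statistics.mean(first),
        # CV uses the population standard deviation (an assumption).
        "Int_CV": statistics.pstdev(intensities) / mean_all,
        "Int_P25": percentile(intensities, 25),
        "Int_P75": percentile(intensities, 75),
    }
```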

| Approach | Healthy (SDR 0–10%) | Slightly (SDR 10–30%) | Moderately (SDR 30–50%) | Severely (SDR 50–80%) | Dead (SDR 80–100%) |
|---|---|---|---|---|---|
| Combined approach | 70 | 69 | 16 | 29 | 88 |
| Lidar approach | 41 | 66 | 8 | 21 | 70 |
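The column headers give SDR bounds for five damage classes. A minimal sketch mapping a field-measured or predicted SDR value to these classes follows; the handling of boundary values (each bound belongs to the higher class, and 100% is Dead) is an assumption, since the table does not specify it, and the function name is hypothetical.

```python
# Upper bounds (%) of each class, taken from the table's column headers.
SDR_CLASSES = [
    (10.0, "Healthy"),
    (30.0, "Slightly"),
    (50.0, "Moderately"),
    (80.0, "Severely"),
    (100.0, "Dead"),
]


def sdr_class(sdr):
    """Map an SDR percentage to a damage class (lower bound inclusive)."""
    if not 0.0 <= sdr <= 100.0:
        raise ValueError(f"SDR must be within 0-100%, got {sdr}")
    for upper, label in SDR_CLASSES:
        if sdr < upper:
            return label
    return "Dead"  # only reached for sdr == 100
```

Applied per crown, this turns continuous SDR predictions into the categorical classes used for mapping and for the per-class comparison between approaches.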

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Lin, Q.; Huang, H.; Wang, J.; Huang, K.; Liu, Y. Detection of Pine Shoot Beetle (PSB) Stress on Pine Forests at Individual Tree Level using UAV-Based Hyperspectral Imagery and Lidar. Remote Sens. 2019, 11, 2540. https://doi.org/10.3390/rs11212540
