1. Introduction

Near-real-time satellite-based precipitation estimation is of great importance for hydrological and meteorological applications because of its high spatiotemporal resolution and global coverage. The accuracy of precipitation estimates can likely be improved by exploiting recent advances in technology and data with higher temporal, spatial and spectral resolution. Equally important for characterizing these natural phenomena and their future behavior more efficiently and accurately is the use of proper methodologies to extract applicable information and exploit it in the precipitation estimation task [1].

Despite the availability of high-quality information, precipitation estimation from remotely sensed data still suffers from methodological deficiencies [2]. For example, a single spectral band does not provide comprehensive information for accurate precipitation retrieval [3,4,5]. However, combining multiple channels of data has been shown to be valuable for cloud detection and for improving precipitation estimation [6,7,8,9]. Another popular source of satellite-based information is passive microwave (PMW) imagery from sensors onboard Low-Earth-Orbiting (LEO) satellites. This information is more directly related to the vertical hydrometeor distribution and surface rainfall, because microwave frequencies respond to the ice particles or droplets associated with precipitation. Although PMW observations from LEO satellites have broader spatial and spectral resolutions, less frequent sensing can result in uncertainty in the spatial and temporal accumulation of rainfall estimates [10,11]. Data from geostationary (GEO) satellites are a unique means of providing cloud-rain information continuously over space and time for weather forecasting and precipitation nowcasting.

An example of using LEO-PMW satellite data along with GEO-IR-based data to provide near-real-time global precipitation estimates is the Global Precipitation Measurement (GPM) mission. The NASA GPM program provides a key dataset called Integrated Multi-satellite Retrievals for GPM (IMERG). IMERG was developed to provide half-hourly global precipitation monitoring at 0.1° × 0.1° [12]. The satellite-based estimation of IMERG consists of three groups of algorithms: the Climate Prediction Center (CPC) morphing technique (CMORPH) from the NOAA CPC [10], the Tropical Rainfall Measuring Mission (TRMM) Multi-satellite Precipitation Analysis (TMPA) from NASA Goddard Space Flight Center [13] and the microwave-calibrated Precipitation Estimation from Remotely Sensed Information using Artificial Neural Networks-Cloud Classification System (PERSIANN-CCS) [14]. PERSIANN-CCS is a data-driven algorithm based on an unsupervised neural network. It uses exponential regression to estimate precipitation from cloud patches at 0.04° by 0.04° spatial resolution [14].

Effective use of the available big data from multiple sensors is one direction for improving the accuracy of precipitation estimation products [15]. Recent developments in Machine Learning (ML) techniques from computer science have been extended to the geosciences community and offer another direction for improving the accuracy of satellite-based precipitation estimation products [9,15,16,17,18,19,20,21,22,23]. Deep Neural Networks (DNNs) are a specific type of ML framework with great capability to handle huge amounts of data. DNNs make it possible to extract high-level features from raw input data and obtain the desired output through end-to-end training of a neural network [24]. This is an important advantage of DNNs over simpler models, allowing them to better extract and utilize the spatial and temporal structures in the huge amounts of geophysical data available from a wide variety of sensors and satellites [25,26].

The application of DNNs in science and in weather and climate studies is expanding and includes short-term precipitation forecasting [22], statistical downscaling for climate models [27], precipitation estimation from bispectral satellite information [28], extreme weather detection [29], precipitation nowcasting [30] and precipitation estimation [8,28]. Significant advances in DNNs include Convolutional Neural Networks (CNNs) (LeCun et al. [31]), Recurrent Neural Networks (RNNs) (Elman [32], Jordan [33]) and generative models. Each type of network has strengths in dealing with different types of datasets. CNNs benefit from the convolution transformation to deal with spatially and temporally coherent datasets [31,34]. RNNs can effectively process information in the form of time series and learn from a range of temporal dependencies in datasets. Generative models are capable of producing detailed results from limited information and provide a better match to the distribution of the observation data by updating the conventional loss function in DNNs. The Variational AutoEncoder (VAE) [35,36] and the Generative Adversarial Network (GAN) [37] are among the popular types of generative models. In this paper, the conventional loss function used to train DNNs is replaced by a combination of a conditional GAN (cGAN) term and the mean squared error (MSE), specifically to demonstrate that generative models are better able to handle the complex properties of precipitation.

This study explores the application of conditional GANs, a type of generative neural network, to estimate precipitation using multiple sources of input, including multispectral geostationary satellite information. It is an investigation toward the development of an advanced satellite-based precipitation estimation product driven by state-of-the-art deep learning algorithms and using information from multiple sources. The objectives of this study are to report on: (1) applying CNNs instead of fully connected networks to extract useful features from GEO satellite imagery and better capture the spatial and temporal dependencies in images; (2) demonstrating the advantage of a more sophisticated loss function for capturing the complex structure of precipitation; (3) evaluating the performance of the proposed algorithm under different scenarios of multiple channel combinations and elevation data as input; and (4) evaluating the effectiveness of the proposed algorithm by comparing its performance with PERSIANN-CCS as an operational product and with a baseline model that uses a conventional loss function. The remainder of this paper is organized as follows. Section 2 briefly describes the study region and the datasets used for this study. Section 3 explains the methodologies and details of the experiments in each step of the process. Section 4 presents the results and discussion and, finally, Section 5 presents the conclusions.

3. Methodology

With the constellation of a new generation of satellites, an enormous amount of remotely sensed measurements is available. However, it is still a challenge to understand how these measurements should best be used to improve the precipitation estimation task. Specifically, we explore the application of CNNs and GANs in step-by-step phases of our experiment to provide a data-driven framework for near-real-time precipitation estimation.

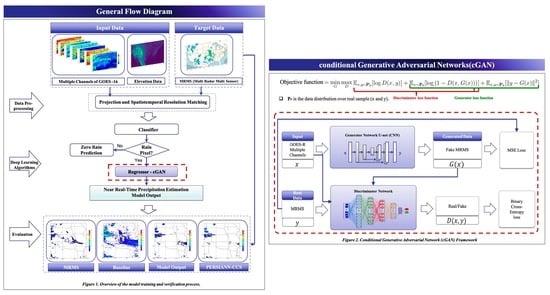

Figure 1 illustrates an overview of our framework, which consists of three main components: data pre-processing, deep learning algorithms and evaluation.

Data pre-processing is an essential part of our framework because measurements collected from different spectral bands have different value ranges. For example, the 0.86 µm (“reflective”) band contains measurements ranging from 0 to 1, while the 8.4 µm (“cloud-top phase”) band contains measurements ranging from 181 to 323. Normalizing the input is common practice in machine learning because models tend to be biased toward data with the largest value ranges. We assume that all remotely sensed measurements are equally important, so we normalize the data of each channel to range from 0 to 1. Observations of each channel are normalized using the parameters shown in Table 1, by subtracting the min value from the channel value and dividing by the difference between the max and min values. Moreover, all the datasets are matched in spatiotemporal resolution to qualify for image-to-image translation. As a result, both the MRMS data and the GOES-16 imagery were up-scaled to match PERSIANN-CCS, the baseline, at 30 min temporal and 4 km by 4 km spatial resolution.
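The per-channel scaling described above can be sketched as follows. This is a minimal illustration that assumes each channel is held as a nested list and that the bounds are supplied by the caller; the function name `min_max_normalize`, the sample patch and the clipping of out-of-range values are assumptions made for the example and are not taken from Table 1.

```python
def min_max_normalize(channel, vmin, vmax):
    """Scale every pixel of a 2-D channel to [0, 1] using fixed bounds."""
    if vmax <= vmin:
        raise ValueError("vmax must be greater than vmin")
    span = vmax - vmin
    # Clipping is an assumption of this sketch: it keeps values that fall
    # outside the supplied bounds inside [0, 1].
    return [[min(max((v - vmin) / span, 0.0), 1.0) for v in row]
            for row in channel]


# Hypothetical 2x2 patch; 181 and 323 are the value range quoted in the
# text for the 8.4 µm band.
patch = [[181.0, 252.0], [300.0, 323.0]]
normalized = min_max_normalize(patch, vmin=181.0, vmax=323.0)
print(normalized)
```

Using fixed bounds per channel, rather than the minimum and maximum of each image, keeps the scaling identical across time steps, so the same physical value always maps to the same normalized value.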

The pre-processed data are then used as input to the deep learning algorithms. In this paper, we explore the application of CNNs to learn the relation between input satellite imagery and target precipitation observations. Specifically, we use the U-net architecture, which has become popular in recent years in computer vision, with applications ranging from image-to-image translation to biomedical image segmentation. An illustration of the U-net architecture is presented in Figure 2, which shows an encoder-decoder network with additional “skip” connections between the encoder and decoder. The bottlenecking of information in the encoder helps capture global spatial information; however, local spatial information is lost in the process. The idea behind the U-net architecture is that decoder accuracy can be improved by passing the lost local spatial information through the skip connections. Accurately capturing local information is important for precipitation estimation because rainfall is generally quite sparse, which makes pixel-level accuracy all the more important. For more information regarding U-nets, please refer to Ronneberger et al. [43].

U-net is used to extract features from the pre-processed input data, which are then used to predict the quantity of rainfall and the rain/no-rain classification for each pixel. The extracted feature has the same height and width as the input and target data and a single channel; the number of channels was selected through separate cross-validation experiments not discussed in this paper. The single-channel feature is then fed into a shallow regression network that predicts a quantity of rain for each pixel. The specific details of each network are shown in Table 2.

Performance verification measurements for precipitation amount estimation and rain/no-rain (R/NR) classification are presented in Table 3 and Table 4, respectively.

Two baselines are compared with the output of our framework. The first is the operational PERSIANN-CCS product; the second is a framework with the same structure as the proposed one, except that the loss term is calculated using only MSE. This baseline model was chosen to show the benefit of including the cGAN term in the objective function when training the network for precipitation estimation.

The first phase of the methodology considers the most common scenario: one channel of IR from the GOES-16 satellite is used as input to predict target precipitation estimates. In this phase, the networks in our framework (feature extractor and regressor) are trained using the mean squared error (MSE) loss, optimizing the objective:

$$\min_{G} \; \mathbb{E}_{(x,y)\sim P_r}\left[\lVert y - G(x)\rVert_2^2\right] \qquad (1)$$

where $P_r$ is the data distribution over real samples ($x$ and $y$), $G$ is the feature extractor and regressor, $x$ is the input GOES satellite imagery, and $y$ is the target precipitation observation. In the experiments of this phase, the regressor predicts small quantities of rain where the target indicates no-rain pixels. Instead of deciding on an arbitrary threshold at which to truncate values, we follow the work of Tao et al. [15] and use a shallow classification network to predict a rain/no-rain label for each pixel, that is, a binary mask. Tao et al. (2018) applied Stacked Denoising Autoencoders (SDAEs) to delineate the rain/no-rain precipitation regions from bispectral satellite information. SDAEs are common and simple DNNs consisting of an autoencoder that extracts representative features and learns from the input to predict the output. The binary mask in our study is used to update the regression network’s prediction: pixels where the classification network predicts no-rain are set to zero. The classifier uses the same single-channel feature from the feature extractor as the regressor (details of the classifier are shown in Table 2). This gives us an updated objective of:

$$\min_{G,\,C} \; \mathbb{E}_{(x,y)\sim P_r}\left[\lVert y - G(x)\rVert_2^2\right] + \mathbb{E}_{(x,y)\sim P_r}\left[-y_b \log C(x) - (1-y_b)\log\left(1 - C(x)\right)\right] \qquad (2)$$

where $C$ is the feature extractor and classifier and $y_b$ is the binarized version of $y$. Here the feature extractor in $C$ shares the same weights as that in $G$.
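The mask update described above can be sketched in a few lines. This is an illustrative example only: the function name `apply_rain_mask`, the sample values, the mm/h unit and the 0.5 probability cut-off used to turn classifier outputs into a binary mask are assumptions of the sketch, not details given in the text.

```python
def apply_rain_mask(rain_rate, rain_prob, prob_threshold=0.5):
    """Zero the regressor output wherever the classifier predicts no-rain."""
    masked = []
    for rate_row, prob_row in zip(rain_rate, rain_prob):
        masked.append([
            rate if prob >= prob_threshold else 0.0
            for rate, prob in zip(rate_row, prob_row)
        ])
    return masked


# Hypothetical regressor output (assumed mm/h) and classifier rain
# probabilities for a 2x2 patch.
regressed = [[0.2, 3.1], [0.1, 7.5]]
rain_prob = [[0.1, 0.9], [0.3, 0.8]]
masked = apply_rain_mask(regressed, rain_prob)
print(masked)
```

In this toy patch the two small spurious rain rates are removed because the classifier marks those pixels as no-rain, while the rates at the rainy pixels pass through unchanged.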

Because mean squared error (MSE) is a commonly used objective for precipitation estimation, we use it as our optimization objective in the first phase. Using MSE, however, we find the outputs of the precipitation estimators to be highly skewed toward smaller values because of the dominance of no-rain pixels and the rarity of pixels with heavy rain. This means that MSE by itself is insufficient to drive the model to capture the true underlying distribution of precipitation values. Since one of the main purposes of satellite-based precipitation estimation is to track extreme events with negative environmental consequences, this behavior is problematic.
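A toy calculation illustrates why MSE alone pulls estimates toward small values. The numbers below are hypothetical and are not drawn from the study data: 95 no-rain pixels and 5 heavy-rain pixels, compared against two constant predictions.

```python
def mse(pred, target):
    """Mean squared error between two equally long sequences."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


# Hypothetical scene: 95 no-rain pixels and 5 pixels of heavy rain.
target = [0.0] * 95 + [20.0] * 5
mean_rate = sum(target) / len(target)

mse_zero = mse([0.0] * len(target), target)        # predict no rain anywhere
mse_mean = mse([mean_rate] * len(target), target)  # predict the scene mean

print(mean_rate, mse_zero, mse_mean)
```

Among constant predictions, the scene mean minimizes MSE, and here that mean is twenty times smaller than the heavy-rain value. Predicting no rain at all is only marginally worse, so the loss gives the model little incentive to reproduce the rare extremes.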

The second phase of our methodology seeks to address this problematic behavior. We follow the same line as Tao et al. [15], who tried to remedy this behavior by adding a Kullback-Leibler (KL) divergence term to the optimization objective. KL divergence measures how one probability distribution $p$ diverges from a second, expected probability distribution $q$:

$$D_{KL}(p \,\|\, q) = \int_x p(x) \log\frac{p(x)}{q(x)}\,dx \qquad (3)$$

$D_{KL}$ achieves its minimum of zero when $p(x)$ and $q(x)$ are equal everywhere. The formula shows that KL divergence is asymmetric. In cases where $p(x)$ is close to zero but $q(x)$ is significantly non-zero, the effect of $q$ is disregarded. This makes optimization difficult when using gradient methods, as there is no gradient to update parameters in such cases [44].

We consider instead a different measure, the Jensen-Shannon (JS) divergence:

$$D_{JS}(p \,\|\, q) = \frac{1}{2} D_{KL}\left(p \,\Big\|\, \frac{p+q}{2}\right) + \frac{1}{2} D_{KL}\left(q \,\Big\|\, \frac{p+q}{2}\right) \qquad (4)$$

JS divergence is not only symmetric but also smoother than KL divergence, making it better suited to gradient methods. Huszár [45] demonstrated the superiority of JS divergence over KL divergence for quantifying the similarity between two probability distributions. A generative adversarial network (GAN) can be viewed as an implementation of JS divergence: it adds a discriminator network that works against a generator network. The discriminator network decides whether a given input is a real sample from the true distribution (ground truth) or a fake sample from a fake distribution (output of the generator network), and the generator network attempts to fool the discriminator. The GAN concept is illustrated in Figure 3, where $G$ is a generator network and $D$ is a discriminator network. For further detail on the structure of GANs, please refer to Goodfellow et al. [37] and Goodfellow [46].
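The contrast between the KL and JS divergences can be checked numerically on small discrete distributions. The sketch below uses the standard discrete forms of both measures; the two example distributions are hypothetical and were chosen so that one assigns no mass to a bin where the other is concentrated.

```python
import math


def kl_divergence(p, q):
    """Discrete D_KL(p || q) in nats; bins with p_i = 0 contribute nothing."""
    total = 0.0
    for pi, qi in zip(p, q):
        if pi > 0.0:
            if qi == 0.0:
                return math.inf  # p has mass where q has none
            total += pi * math.log(pi / qi)
    return total


def js_divergence(p, q):
    """Discrete JS divergence: average KL of p and q to their mixture."""
    m = [(pi + qi) / 2.0 for pi, qi in zip(p, q)]
    return 0.5 * kl_divergence(p, m) + 0.5 * kl_divergence(q, m)


# Hypothetical distributions: q puts most of its mass where p has none.
p = [0.9, 0.1, 0.0]
q = [0.1, 0.1, 0.8]

print(kl_divergence(p, q), kl_divergence(q, p))
print(js_divergence(p, q), js_divergence(q, p))
```

Here `kl_divergence(p, q)` stays finite because the third bin, where `q` holds most of its mass, is ignored entirely, while the reverse direction is infinite. The JS divergence gives the same finite value in both directions and never exceeds log 2.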

In our setup, the generator consists of the previously mentioned networks (feature extractor, classifier and regressor), and a fake sample is an output from the regressor that has been updated using the binary mask from the classifier. Updating Equation (2) to include the discriminator network for GAN gives the following equation:

$$\min_{G,\,C}\,\max_{D} \; \mathbb{E}_{(x,y)\sim P_r}\left[\lVert y - G(x)\rVert_2^2\right] + \mathbb{E}_{(x,y)\sim P_r}\left[-y_b \log C(x) - (1-y_b)\log\left(1 - C(x)\right)\right] + \mathbb{E}_{(x,y)\sim P_r}\left[\log D(x,y)\right] + \mathbb{E}_{x\sim P_r}\left[\log\left(1 - D(x, G(x))\right)\right] \qquad (5)$$

where $D$ is the discriminator, with $G$, $C$ and $y_b$ as defined for Equation (2). Unlike the previously discussed discriminator, which looks only at the target $y$ or the simulated target $G(x)$, here we use a discriminator that also looks at the corresponding input $x$ as a reference. This is known as a conditional generative adversarial network (cGAN), because the discrimination between the true and fake distributions is now conditioned on the input $x$. cGANs have been shown to perform even better than GANs but require paired $(x, y)$ data, which are not always readily available (Mirza and Osindero [47]). In this study, however, the paired data are provided by matching the spatiotemporal resolution of the inputs (GOES-R bands) and the observation data (MRMS). Our setup closely follows that of Isola et al. [48], as we treat pixel-wise precipitation estimation from satellite imagery as an image-to-image translation problem from computer vision. The notable differences between our setup and that of Isola et al. [48] are the generator network structure and the objective function. While the objective function of Isola et al. [48] contains only two parts, L1 on the generator and binary cross-entropy on the discriminator, our final objective function (Equation (5)) contains three parts: L2 on the generator, binary cross-entropy on the discriminator and binary cross-entropy on the output of the classifier. The optimal point of the min-max problem is known from game theory: it is reached when the discriminator and the generator are at a Nash equilibrium. That is the point at which the discriminator can no longer tell the difference between the fake samples and the ground truth data.
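The three parts of the objective described above can be illustrated with a small loss calculation. This is a sketch under stated assumptions rather than the training code of the study: all function and argument names are hypothetical, the three terms are weighted equally, discriminator outputs are passed in as plain probabilities, and the generator's adversarial term uses the common non-saturating form (maximizing log D on fake samples) in place of the literal min-max term.

```python
import math

EPS = 1e-7  # guards against log(0); an implementation detail assumed here


def bce(prob, label):
    """Binary cross-entropy of one predicted probability against a 0/1 label."""
    prob = min(max(prob, EPS), 1.0 - EPS)
    return -(label * math.log(prob) + (1.0 - label) * math.log(1.0 - prob))


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def generator_loss(y, y_fake, rain_prob, y_b, d_fake):
    """L2 on the estimate + BCE on the classifier + adversarial term."""
    l2 = mean((t - f) ** 2 for t, f in zip(y, y_fake))
    cls = mean(bce(c, b) for c, b in zip(rain_prob, y_b))
    adv = mean(bce(d, 1.0) for d in d_fake)  # generator wants D to say "real"
    return l2 + cls + adv


def discriminator_loss(d_real, d_fake):
    """BCE on the discriminator: real pairs labeled 1, fake pairs labeled 0."""
    return mean(bce(d, 1.0) for d in d_real) + mean(bce(d, 0.0) for d in d_fake)


# Hypothetical two-pixel example; d_real and d_fake stand for the
# discriminator's outputs on (input, observation) and (input, estimate) pairs.
g_loss = generator_loss(y=[0.0, 4.0], y_fake=[0.0, 3.0],
                        rain_prob=[0.1, 0.9], y_b=[0.0, 1.0],
                        d_fake=[0.5, 0.5])
d_loss = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
print(g_loss, d_loss)
```

When the discriminator outputs 0.5 for every pair, as in this example, it cannot distinguish estimates from observations and its loss equals 2 log 2, which corresponds to the equilibrium described in the text.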

The last phase of the methodology considers the infusion of other channels of GOES-16 satellite data and GTOPO30 elevation information as ancillary data. We first evaluate selected GOES-16 channels individually, with and without elevation data, to establish a baseline for how informative each individual channel is for precipitation estimation. We then evaluate combinations of GOES-16 channels to see how well different channels complement each other.

4. Results

In this section, we evaluate the performance of the proposed algorithm over the verification period for the continental United States. We compare it with the operational product PERSIANN-CCS and with a baseline model trained using the conventional and commonly used MSE metric as its objective function. The MRMS data are used as the ground truth to investigate the performance improvement in both detecting rain/no-rain pixels and estimating precipitation amounts.

Table 5 provides the overall statistical performance of the cGAN model compared to PERSIANN-CCS with reference to the MRMS data. Multiple channels are considered individually as stand-alone inputs to the proposed model, including channel 13, which has a wavelength similar to that used by PERSIANN-CCS, to make the comparison fair.

Elevation data are also considered as an additional input to the model, along with single bands of the GOES-16 ABI, to investigate the effect of infusing elevation data as auxiliary information. All evaluation metrics show improved results for the proposed cGAN model over the operational PERSIANN-CCS product during the verification period using band 13. Moreover, combining elevation data with single spectral bands yields a further performance improvement. Besides channel 13, using channel 11 (“Cloud Top Phase”) as a stand-alone input to the model also shows good performance according to the evaluation metrics. It can be concluded that channel 11, like channel 13, plays an important role in providing useful information for precipitation estimation, whether used stand-alone or combined with elevation information.

Multiple scenarios are considered, as shown in Table 6, to investigate the benefit that channels 11 and 13 provide to the model in combination with other spectral bands, including different levels of water vapor. The evaluation metrics indicate that using more spectral bands as input to the proposed model (Sc. 9) leads to lower MSE and higher correlation and CSI.

Predicted precipitation values from the proposed cGAN model and the operational PERSIANN-CCS product are visualized in Figure 4 to emphasize the performance improvement, specifically over regions covered by warm clouds. Capturing rainfall from clouds with higher temperatures is an important issue and is considered the main drawback of precipitation retrieval algorithms such as PERSIANN-CCS. This inherent shortcoming is associated with the temperature-threshold-based segmentation part of the algorithm, which is incapable of fully extracting warm raining clouds [9].

Figure 4 shows two sample IR band types and the half-hourly precipitation maps from the proposed model, using the inputs listed in scenario 9 in Table 6, for 31 July at 22:00 UTC, along with the PERSIANN-CCS output and MRMS data for the same time step.

Daily and monthly values for all the models are also provided in Figure 5. As shown in the red-circled regions of the daily-scale precipitation values in the left panel, the proposed cGAN model output captures more of the precipitation than the PERSIANN-CCS output. Although both models overestimate relative to MRMS at the monthly scale, precipitation values from the proposed model are closer to the ground truth extreme values than those of PERSIANN-CCS.

Figure 6 presents R/NR identification results for the proposed cGAN model and the PERSIANN-CCS model for 20 July 2018. Only small sections of rainfall are correctly identified by PERSIANN-CCS, while the cGAN model reduces the number of missed rainy pixels and shows a significant improvement in delineating the precipitation area, represented by green pixels. More false detections of rainfall are observed in the cGAN model output than in PERSIANN-CCS, but these are small compared with the much higher detection rate and lower miss rate for rainy pixels.

Figure 7 presents the maps of POD, FAR and CSI values for the cGAN model compared to PERSIANN-CCS and the baseline model with MSE as the loss function. As explained in the methodology section, the cGAN model’s loss term includes a part in addition to MSE that must be optimized as a min-max problem in order to better capture the complex precipitation distribution.

Figure 7 shows the common verification measurements in Table 3 for regression performance of all three models during the verification period. High measurement values are represented by warm colors and low measurement values by cold colors. Note that high values are desirable for POD and CSI, while low values are desirable for FAR.
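For readers unfamiliar with these scores, the sketch below computes POD, FAR and CSI from per-pixel rain/no-rain labels. It uses the standard contingency-table definitions of probability of detection, false alarm ratio and critical success index, which are assumed here to match those listed in the verification tables; the function name and the six-pixel example are hypothetical.

```python
def contingency_scores(pred_rain, obs_rain):
    """Return (POD, FAR, CSI) from paired predicted/observed rain flags."""
    hits = misses = false_alarms = 0
    for predicted, observed in zip(pred_rain, obs_rain):
        if predicted and observed:
            hits += 1
        elif observed:
            misses += 1
        elif predicted:
            false_alarms += 1
    nan = float("nan")  # returned when a score's denominator is zero
    pod = hits / (hits + misses) if hits + misses else nan
    far = false_alarms / (hits + false_alarms) if hits + false_alarms else nan
    total = hits + misses + false_alarms
    csi = hits / total if total else nan
    return pod, far, csi


# Hypothetical six-pixel example: 2 hits, 1 miss, 1 false alarm.
pred = [1, 1, 0, 0, 1, 0]
obs = [1, 0, 1, 0, 1, 0]
pod, far, csi = contingency_scores(pred, obs)
print(pod, far, csi)
```

Correct no-rain pixels do not enter any of the three scores, which is why they remain informative even when no-rain pixels dominate the scene.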

Figure 7 shows that the cGAN model outperforms PERSIANN-CCS over almost all of the CONUS and also performs better than the baseline model. For FAR, the higher values observed for the cGAN model are negligible considering the significant improvement in POD over the baseline model and PERSIANN-CCS. In the CSI maps, values increase in order from PERSIANN-CCS to the baseline model to the cGAN model.

Correlation and MSE values are also visualized in Figure 8 to help explain the performance improvement of the cGAN model over PERSIANN-CCS during the verification period.