Memristor Neural Network Training with Clock Synchronous Neuromorphic System

Abstract

1. Introduction

2. Materials and Methods

2.1. Clock Synchronous Neuromorphic Hardware System

2.2. Memristor Neural Network Array

2.3. Hebbian Training Method

2.4. Guide Training Method

2.5. Training and Inference Dataset

3. Results

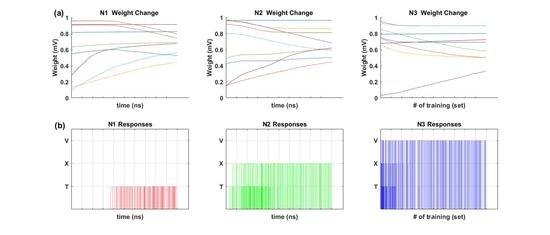

3.1. Inference Results after Hebbian Training

3.2. Inference Results after Guide Training

3.2.1. Inference Results of 9 × 6 Memristor Neural Network

3.2.2. Inference Results of 100 × 20 Memristor Neural Network

4. Discussion

Author Contributions

Funding

Conflicts of Interest


| Symbol | Value | Symbol | Value |
|---|---|---|---|
| a1 | 0.05 | An | 6 × 10³ |
| a2 | 0.05 | xp | 0.5 |
| b | 0.05 | xn | 0.5 |
| Vp | 0.75 V | αp | 10 |
| Vn | 0.75 V | αn | 10 |
| Ap | 6 × 10³ | xo | 0.5 |
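The parameter names in the table above match the generalized memristor device model of Yakopcic et al. (IEEE Electron Device Letters, 2011). The sketch below assumes that model: current I = a·x·sinh(bV), a threshold function g(V) that is nonzero only outside [−Vn, Vp], and window functions f(x) that slow the state x near 0 and 1. The forward-Euler integrator, the direction factor η = 1, the pulse amplitudes and durations, and reading "xo" as the initial state x(0) are our assumptions, not details confirmed by this article.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class YakopcicParams:
    # Values from the parameter table; names follow the Yakopcic model (assumed).
    a1: float = 0.05
    a2: float = 0.05
    b: float = 0.05
    vp: float = 0.75      # positive switching threshold (V)
    vn: float = 0.75      # magnitude of the negative switching threshold (V)
    ap: float = 6e3
    an: float = 6e3
    xp: float = 0.5
    xn: float = 0.5
    alpha_p: float = 10.0
    alpha_n: float = 10.0
    x0: float = 0.5       # "xo" in the table, assumed to be the initial state


def current(x: float, v: float, p: YakopcicParams) -> float:
    """I = a1*x*sinh(b*V) for V >= 0, and a2*x*sinh(b*V) for V < 0."""
    a = p.a1 if v >= 0 else p.a2
    return a * x * math.sinh(p.b * v)


def g(v: float, p: YakopcicParams) -> float:
    """Threshold function: the state only moves when V > Vp or V < -Vn."""
    if v > p.vp:
        return p.ap * (math.exp(v) - math.exp(p.vp))
    if v < -p.vn:
        return -p.an * (math.exp(-v) - math.exp(p.vn))
    return 0.0


def f(x: float, v: float, p: YakopcicParams) -> float:
    """Window function; decays to 0 at x = 1 (for V > 0) and at x = 0 (for V <= 0)."""
    if v > 0:
        if x >= p.xp:
            wp = (p.xp - x) / (1 - p.xp) + 1
            return math.exp(-p.alpha_p * (x - p.xp)) * wp
        return 1.0
    if x <= 1 - p.xn:
        wn = x / (1 - p.xn)
        return math.exp(p.alpha_n * (x + p.xn - 1)) * wn
    return 1.0


def step(x: float, v: float, dt: float, p: YakopcicParams, eta: float = 1.0) -> float:
    """One forward-Euler step of dx/dt = eta * g(V) * f(x), clamped to [0, 1]."""
    x_new = x + dt * eta * g(v, p) * f(x, v, p)
    return min(1.0, max(0.0, x_new))


def apply_pulse(x: float, v: float, duration: float, p: YakopcicParams,
                n_steps: int = 1000) -> float:
    """Hold a constant voltage v for `duration` seconds and return the final state."""
    dt = duration / n_steps
    for _ in range(n_steps):
        x = step(x, v, dt, p)
    return x


p = YakopcicParams()
x_set = apply_pulse(p.x0, 1.0, 100e-6, p)     # above Vp: state increases
x_reset = apply_pulse(p.x0, -1.0, 100e-6, p)  # below -Vn: state decreases
x_read = apply_pulse(p.x0, 0.5, 100e-6, p)    # sub-threshold read: state unchanged
print(x_set, x_reset, x_read)
```

Because g(V) is zero between −Vn and Vp, a 0.5 V read pulse leaves the state untouched, which is what allows inference voltages to be kept separate from programming voltages in a scheme of this kind.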

| Input | Output | Modification |
|---|---|---|
| 1 | 1 | Unchanged |
| 1 | 0 | Increased |
| 0 | 1 | Decreased |
| 0 | 0 | Unchanged |
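The four rows of the Hebbian rule table collapse into a single expression, ΔW(i, j) = δ·(x_i − y_j), where x_i is the input bit and y_j the output bit: it is +δ for (1, 0), −δ for (0, 1), and 0 otherwise. The NumPy sketch below assumes binary inputs and outputs; the step size δ and the clipping bounds are hypothetical values, and in the hardware each update would correspond to a programming pulse rather than an arithmetic addition.

```python
import numpy as np


def hebbian_update(w, x, y, delta=1.0, w_min=0.0, w_max=1000.0):
    """Apply the input/output rule from the table to every weight W(i, j).

    delta, w_min and w_max are hypothetical values chosen for illustration.
    """
    x = np.asarray(x, dtype=float)[:, None]  # column vector of input bits (rows i)
    y = np.asarray(y, dtype=float)[None, :]  # row vector of output bits (columns j)
    # x - y is +1 for (1, 0), -1 for (0, 1), and 0 for (1, 1) and (0, 0).
    dw = delta * (x - y)
    return np.clip(w + dw, w_min, w_max)


w = np.full((3, 2), 500.0)
w_new = hebbian_update(w, x=[1, 0, 1], y=[1, 0], delta=10.0)
print(w_new)
```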

| Input Image | Predefined Output Neuron | i-th Input | W(i, 2j − 1), j = T | W(i, 2j), j = T | W(i, 2j − 1), j ≠ T | W(i, 2j), j ≠ T |
|---|---|---|---|---|---|---|
| K | T | 1 | Increased | Decreased | Decreased | Increased |
| K | T | 0 | Unchanged | Unchanged | Unchanged | Unchanged |
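The guide-training table indexes weights as W(i, 2j − 1) and W(i, 2j), which suggests each output neuron j owns a pair of columns; under that reading a 9 × 6 array would have three output neurons and a 100 × 20 array ten. The sketch below assumes this pairing. The differential read-out used by `infer` (score_j = Σ x_i·(W(i, 2j − 1) − W(i, 2j)), winner = largest score), the step size, the bounds, and the random initialization are all hypothetical choices for illustration, not the article's confirmed circuit.

```python
import numpy as np


def guide_update(w, x, target, delta=1.0, w_min=0.0, w_max=1000.0):
    """Update a (n_inputs, 2 * n_outputs) weight matrix per the guide-training table.

    0-based columns 2j and 2j + 1 correspond to W(i, 2j - 1) and W(i, 2j)
    in the table's 1-based notation. delta and the bounds are hypothetical.
    """
    w = w.copy()
    active = np.asarray(x, dtype=bool)  # only pixels equal to 1 are updated
    n_out = w.shape[1] // 2
    for j in range(n_out):
        sign = 1.0 if j == target else -1.0  # target pair: (+, -); other pairs: (-, +)
        w[active, 2 * j] += sign * delta
        w[active, 2 * j + 1] -= sign * delta
    return np.clip(w, w_min, w_max)


def infer(w, x):
    """Hypothetical differential read-out: argmax_j of sum_i x_i * (W_pos - W_neg)."""
    x = np.asarray(x, dtype=float)
    scores = x @ (w[:, 0::2] - w[:, 1::2])
    return int(np.argmax(scores))


rng = np.random.default_rng(0)
w = rng.uniform(0.0, 1000.0, size=(9, 6))  # 9 inputs, 3 output neurons (assumed)
pattern = np.array([1, 0, 1, 0, 1, 0, 1, 0, 1])
for _ in range(50):
    w = guide_update(w, pattern, target=1, delta=20.0)
print(infer(w, pattern))
```

After 50 updates of 20 units each, every active weight has moved far enough to saturate at a bound, so the target pair reads maximally positive and every other pair maximally negative, independent of the random starting values.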

| i | W(i, 1) | W(i, 2) | W(i, 3) |
|---|---|---|---|
| 1 | 814.7 | 964.8 | 792.0 |
| 2 | 905.7 | 157.6 | 959.4 |
| 3 | 126.9 | 970.5 | 655.7 |
| 4 | 913.3 | 957.1 | 35.7 |
| 5 | 632.3 | 485.3 | 849.1 |
| 6 | 97.5 | 800.2 | 933.9 |
| 7 | 278.4 | 141.8 | 678.7 |
| 8 | 546.8 | 421.7 | 757.7 |
| 9 | 957.5 | 915.7 | 743.1 |
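As a worked example of why these initial weights must be trained, the sketch below computes the weighted column sums Σ x_i·W(i, j) of the tabulated 9 × 3 weights for a hypothetical 3 × 3 "cross" input (pixels 2, 4, 5, 6, 8 on). It assumes a simple weighted-sum, winner-take-all read-out; the article's actual output circuit is not described in this section.

```python
import numpy as np

# Initial weights W(i, j) from the table above (rows i = 1..9, columns j = 1..3).
W0 = np.array([
    [814.7, 964.8, 792.0],
    [905.7, 157.6, 959.4],
    [126.9, 970.5, 655.7],
    [913.3, 957.1, 35.7],
    [632.3, 485.3, 849.1],
    [97.5, 800.2, 933.9],
    [278.4, 141.8, 678.7],
    [546.8, 421.7, 757.7],
    [957.5, 915.7, 743.1],
])


def column_sums(w, x):
    """Weighted sum per output column: sum_i x_i * W(i, j)."""
    return np.asarray(x, dtype=float) @ w


# Hypothetical 3 x 3 "cross" pattern flattened row by row.
cross = [0, 1, 0,
         1, 1, 1,
         0, 1, 0]
sums = column_sums(W0, cross)
winner = int(np.argmax(sums)) + 1  # 1-based column index, as in the table
print(sums, winner)
```

With these untrained weights the sums are about 3095.6, 2821.9 and 3535.8, so column 3 wins purely because of its random initial values; training is what makes the winner depend on the input pattern.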

| Noise (%) | Digit 0 | Digit 1 | Digit 2 | Digit 3 | Digit 4 | Digit 5 | Digit 6 | Digit 7 | Digit 8 | Digit 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 100% | 100% | 100% | 100% | 100% | 98% | 100% | 96% | 100% | 100% |
| 3 | 100% | 100% | 97% | 96% | 100% | 91% | 95% | 100% | 84% | 100% |
| 5 | 99% | 100% | 95% | 93% | 100% | 84% | 88% | 86% | 84% | 92% |
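The per-digit rates above can be summarized as an average over the ten digits: 99.4% at 0% noise, 96.3% at 3%, and 92.1% at 5%. The sketch below computes those averages and shows one way noisy test inputs could be produced. It assumes 10 × 10 binary images (consistent with, but not confirmed by, the 100-input network) and a noise model that flips a fixed percentage of randomly chosen pixels; the function name `add_flip_noise` and the noise model itself are hypothetical.

```python
import numpy as np

# Per-digit recognition rates (%) from the table above, keyed by noise level (%).
RATES = {
    0: [100, 100, 100, 100, 100, 98, 100, 96, 100, 100],
    3: [100, 100, 97, 96, 100, 91, 95, 100, 84, 100],
    5: [99, 100, 95, 93, 100, 84, 88, 86, 84, 92],
}


def add_flip_noise(image, noise_percent, rng):
    """Flip a fixed percentage of pixels in a binary image (hypothetical noise model)."""
    flat = np.asarray(image, dtype=np.uint8).ravel().copy()
    n_flip = int(round(flat.size * noise_percent / 100))
    idx = rng.choice(flat.size, size=n_flip, replace=False)  # distinct pixel positions
    flat[idx] ^= 1                                           # 0 -> 1 and 1 -> 0
    return flat.reshape(np.shape(image))


def mean_rate(noise_percent):
    """Average recognition rate over digits 0-9 at one noise level."""
    return float(np.mean(RATES[noise_percent]))


rng = np.random.default_rng(42)
clean = np.zeros((10, 10), dtype=np.uint8)
noisy = add_flip_noise(clean, 5, rng)
print(int(noisy.sum()))  # 5: five of the 100 pixels flipped from 0 to 1
print({p: mean_rate(p) for p in RATES})
```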

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Jo, S.; Sun, W.; Kim, B.; Kim, S.; Park, J.; Shin, H. Memristor Neural Network Training with Clock Synchronous Neuromorphic System. Micromachines 2019, 10, 384. https://doi.org/10.3390/mi10060384