Deep Learning Algorithms with Demographic Information Help to Detect Tuberculosis in Chest Radiographs in Annual Workers’ Health Examination Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Ethics Statement

2.2. Cohort Data and Definitions

2.3. Tuberculosis Definition

2.4. Preprocessing: Lung Segmentation

2.5. Deep Learning Methods

2.6. Statistical Analysis

3. Results

3.1. Basic Characteristics

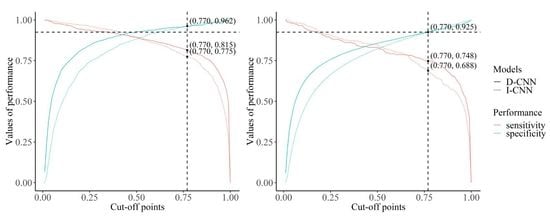

3.2. Image-Only Convolutional Neural Network Model Performance

4. Discussion

Author Contributions

Funding

Conflicts of Interest

References

- Lin, Z.Q.; Xu, X.Q.; Zhang, K.B.; Zhuang, Z.G.; Liu, X.S.; Zhao, L.Q.; Lin, C.Y.; Li, Y.; Hua, X.L.; Zhao, H.L.; et al. Chest X-ray and CT findings of early H7N9 avian influenza cases. Acta Radiol. 2015, 56, 552–556.

- Doi, K. Computer-aided diagnosis in medical imaging: Historical review, current status and future potential. Comput. Med. Imaging Graph. 2007, 31, 198–211.

- Song, H.; Nguyen, A.-D.; Gong, M.; Lee, S. A review of computer vision methods for purpose on computer-aided diagnosis. J. Int. Soc. Simul. Surg. 2016, 3, 1–8.

- Nam, S.Y.; Ko, E.S.; Lim, Y.; Han, B.K.; Ko, E.Y.; Choi, J.S.; Lee, J.E. Preoperative dynamic breast magnetic resonance imaging kinetic features using computer-aided diagnosis: Association with survival outcome and tumor aggressiveness in patients with invasive breast cancer. PLoS ONE 2018, 13, e0195756.

- Maeda, I.; Kubota, M.; Ohta, J.; Shinno, K.; Tajima, S.; Ariizumi, Y.; Doi, M.; Oana, Y.; Kanemaki, Y.; Tsugawa, K.; et al. Effectiveness of computer-aided diagnosis (CADx) of breast pathology using immunohistochemistry results of core needle biopsy samples for synaptophysin, oestrogen receptor and CK14/p63 for classification of epithelial proliferative lesions of the breast. J. Clin. Pathol. 2017, 70, 1057–1062.

- Komeda, Y.; Handa, H.; Watanabe, T.; Nomura, T.; Kitahashi, M.; Sakurai, T.; Okamoto, A.; Minami, T.; Kono, M.; Arizumi, T.; et al. Computer-Aided Diagnosis Based on Convolutional Neural Network System for Colorectal Polyp Classification: Preliminary Experience. Oncology 2017, 93 (Suppl 1), 30–34.

- Guo, L.H.; Wang, D.; Qian, Y.Y.; Zheng, X.; Zhao, C.K.; Li, X.L.; Bo, X.W.; Yue, W.W.; Zhang, Q.; Shi, J.; et al. A two-stage multi-view learning framework based computer-aided diagnosis of liver tumors with contrast enhanced ultrasound images. Clin. Hemorheol. Microcirc. 2018, 69, 343–354.

- Lakhani, P.; Sundaram, B. Deep Learning at Chest Radiography: Automated Classification of Pulmonary Tuberculosis by Using Convolutional Neural Networks. Radiology 2017, 284, 574–582.

- Abidin, A.Z.; Deng, B.; AM, D.S.; Nagarajan, M.B.; Coan, P.; Wismuller, A. Deep transfer learning for characterizing chondrocyte patterns in phase contrast X-Ray computed tomography images of the human patellar cartilage. Comput. Biol. Med. 2018, 95, 24–33.

- Kumar, A.; Agarwala, S.; Dhara, A.K.; Nandi, D.; Mukhopadhyay, S.; Garg, M.; Khandelwal, N.; Kalra, N. Localization of lung fields in HRCT images using a deep convolution neural network. In Proceedings of the Medical Imaging 2018, Houston, TX, USA, 10–15 February 2018; p. 1057535.

- Xue, Z.; Antani, S.; Long, L.R.; Thoma, G.R. Using deep learning for detecting gender in adult chest radiographs. In Proceedings of the Medical Imaging 2018: Imaging Informatics for Healthcare, Research, and Applications, Houston, TX, USA, 10–15 February 2018; p. 105790.

- Korea. Occupational Safety and Health Act of Korea, Korea Ministry of Employment and Labor. Available online: http://www.moleg.go.kr/english (accessed on 1 August 2018).

- Korea. Framework Act on Health Examinations, Ministry of Health and Welfare. Available online: http://www.mohw.go.kr/eng/ (accessed on 1 August 2018).

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

- Gulshan, V.; Peng, L.; Coram, M.; Stumpe, M.C.; Wu, D.; Narayanaswamy, A.; Venugopalan, S.; Widner, K.; Madams, T.; Cuadros, J. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 2016, 316, 2402–2410.

- Li, Q.; Cai, W.; Wang, X.; Zhou, Y.; Feng, D.D.; Chen, M. Medical image classification with convolutional neural network. In Proceedings of the 13th International Conference on Control Automation Robotics & Vision (ICARCV), Singapore, 10–12 December 2014; pp. 844–848.

- Lopes, U.; Valiati, J. Pre-trained convolutional neural networks as feature extractors for tuberculosis detection. Comput. Biol. Med. 2017, 89, 135–143.

- Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. Breast cancer histopathological image classification using convolutional neural networks. In Proceedings of the 2016 International Joint Conference on Neural Networks (IJCNN), Vancouver, BC, Canada, 24–29 July 2016; pp. 2560–2567.

- Apou, G.; Schaadt, N.S.; Naegel, B.; Forestier, G.; Schönmeyer, R.; Feuerhake, F.; Wemmert, C.; Grote, A. Detection of lobular structures in normal breast tissue. Comput. Biol. Med. 2016, 74, 91–102.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv, 2014; arXiv:1409.1556.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2818–2826.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Johnson, J.; Karpathy, A.; Fei-Fei, L. Densecap: Fully convolutional localization networks for dense captioning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 4565–4574.

- Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017; p. 12.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- DeLong, E.R.; DeLong, D.M.; Clarke-Pearson, D.L. Comparing the areas under two or more correlated receiver operating characteristic curves: A nonparametric approach. Biometrics 1988, 44, 837–845.

- The R Development Core Team. R: A Language and Environment for Statistical Computing; The R Development Core Team: Vienna, Austria, 2013.

- Hontani, H. A computer aided diagnosis system for malignant tumors using FDG-PET/CT images. Nihon Hoshasen Gijutsu Gakkai Zasshi 2007, 63, 1460–1463.

- Shen, D.; Wu, G.; Suk, H.I. Deep Learning in Medical Image Analysis. Ann. Rev. Biomed. Eng. 2017, 19, 221–248.

- Kim, J.; Kim, J. The impact of imbalanced training data on machine learning for author name disambiguation. Scientometrics 2018, 117, 511–526.

| Variables | Training: Tuberculosis Positive (n = 1000) | Training: Tuberculosis Negative (n = 1000) | Training: p Value * | Test: Tuberculosis Positive (n = 202) | Test: Tuberculosis Negative (n = 37,475) | Test: p Value * |
|---|---|---|---|---|---|---|
| Age (years) | 50.08 ± 10.74 | 40.33 ± 11.07 | <0.001 | 50.42 ± 10.48 | 40.30 ± 10.86 | <0.001 |
| Gender, n (%) | | | <0.001 | | | <0.001 |
| Male | 682 (68.20) | 561 (56.10) | | 125 (61.88) | 20,445 (54.56) | |
| Female | 318 (31.80) | 439 (43.90) | | 77 (38.12) | 17,030 (45.44) | |
| Height (cm) | 168.36 ± 8.33 | 167.85 ± 8.43 | 0.170 | 168.04 ± 8.53 | 167.54 ± 8.37 | 0.401 |
| Weight (kg) | 63.76 ± 11.42 | 64.98 ± 12.99 | 0.025 | 62.51 ± 10.74 | 64.43 ± 12.99 | 0.006 |
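The counts in the table above show that the two sets differ sharply in class balance: the training set was sampled 1:1, whereas the test set keeps the much lower proportion of positive cases. Working only from the reported counts:

$$
\text{proportion positive}_{\text{test}} = \frac{202}{202 + 37{,}475} = \frac{202}{37{,}677} \approx 0.0054 \;(\approx 0.54\%)
$$

AUC depends only on how positive cases are ranked against negative cases, so it is not directly affected by this proportion; metrics such as positive predictive value, by contrast, would be.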

| Models | Training AUC: I-CNN * | Training AUC: D-CNN ** | Training: Difference | Training: p Value | Test AUC: I-CNN * | Test AUC: D-CNN ** | Test: Difference | Test: p Value |
|---|---|---|---|---|---|---|---|---|
| VGG19 | 0.9570 | 0.9714 | 0.0144 | <0.001 | 0.9075 | 0.9213 | 0.0138 | 0.049 |
| InceptionV3 | 0.9523 | 0.9616 | 0.0093 | 0.014 | 0.8821 | 0.9045 | 0.0224 | 0.033 |
| ResNet50 | 0.9219 | 0.9250 | 0.0031 | 0.434 | 0.8780 | 0.8955 | 0.0175 | 0.051 |
| DenseNet121 | 0.9315 | 0.9472 | 0.0157 | 0.002 | 0.8605 | 0.8893 | 0.0288 | 0.011 |
| InceptionResNetV2 | 0.9482 | 0.9455 | 0.0027 | 0.407 | 0.8851 | 0.8864 | 0.0013 | 0.888 |
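The p values in the table above compare two AUCs measured on the same cases, so the two estimates are correlated. The reference list includes DeLong et al.'s nonparametric test for correlated ROC curves, which is a plausible basis for these comparisons, but the Statistical Analysis text is not available here, so treat that as an assumption. The following is a minimal pure-Python sketch of the DeLong test under that assumption; the function name `delong_test` and its argument layout are illustrative, not taken from the paper.

```python
import math
from typing import List, Sequence, Tuple


def _psi(pos_score: float, neg_score: float) -> float:
    """Mann-Whitney kernel: 1 if the positive case scores higher, 0.5 on ties, else 0."""
    if pos_score > neg_score:
        return 1.0
    if pos_score == neg_score:
        return 0.5
    return 0.0


def _components(pos: Sequence[float], neg: Sequence[float]) -> Tuple[float, List[float], List[float]]:
    """Return the AUC and DeLong's structural components V10 (per positive) and V01 (per negative)."""
    m, n = len(pos), len(neg)
    v10 = [sum(_psi(x, y) for y in neg) / n for x in pos]
    v01 = [sum(_psi(x, y) for x in pos) / m for y in neg]
    auc = sum(v10) / m  # the mean of v10 (and of v01) equals the AUC
    return auc, v10, v01


def _cov(a: Sequence[float], b: Sequence[float], mean_a: float, mean_b: float) -> float:
    """Sample covariance with an (N - 1) denominator."""
    return sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b)) / (len(a) - 1)


def delong_test(labels: Sequence[int], scores_1: Sequence[float],
                scores_2: Sequence[float]) -> Tuple[float, float, float]:
    """Two-sided DeLong test for the difference between two correlated AUCs.

    labels: 1 for tuberculosis-positive, 0 for negative; both score lists
    (e.g. hypothetical I-CNN and D-CNN outputs) refer to the same cases.
    Returns (auc_1, auc_2, p_value).
    """
    pos_idx = [i for i, y in enumerate(labels) if y == 1]
    neg_idx = [i for i, y in enumerate(labels) if y == 0]
    m, n = len(pos_idx), len(neg_idx)

    auc1, v10_1, v01_1 = _components([scores_1[i] for i in pos_idx], [scores_1[i] for i in neg_idx])
    auc2, v10_2, v01_2 = _components([scores_2[i] for i in pos_idx], [scores_2[i] for i in neg_idx])

    # Var(AUC1 - AUC2) = (S10_11 + S10_22 - 2 S10_12) / m + (S01_11 + S01_22 - 2 S01_12) / n
    var_pos = _cov(v10_1, v10_1, auc1, auc1) + _cov(v10_2, v10_2, auc2, auc2) \
        - 2 * _cov(v10_1, v10_2, auc1, auc2)
    var_neg = _cov(v01_1, v01_1, auc1, auc1) + _cov(v01_2, v01_2, auc2, auc2) \
        - 2 * _cov(v01_1, v01_2, auc1, auc2)
    var_diff = var_pos / m + var_neg / n

    if var_diff <= 0:
        # Identical rankings or degenerate data: no detectable difference.
        return auc1, auc2, 1.0
    z = (auc1 - auc2) / math.sqrt(var_diff)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided standard-normal p value
    return auc1, auc2, p_value
```

For example, `delong_test([1, 1, 1, 0, 0, 0], [0.9, 0.8, 0.7, 0.3, 0.2, 0.1], [0.9, 0.4, 0.7, 0.5, 0.2, 0.1])` yields AUCs of 1.0 and 8/9. This sketch compares every positive with every negative, so its cost grows with the product of the two class sizes; for a test set of this size a vectorized or rank-based implementation, such as `roc.test(..., method = "delong")` in the R package pROC, is more practical.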

| Input Variables | AUC | p Value * |
|---|---|---|
| I-CNN | 0.9075 | - |
| I-CNN + Gender | 0.9107 | 0.657 |
| I-CNN + Age | 0.9111 | 0.602 |
| I-CNN + Weight | 0.9122 | 0.468 |
| I-CNN + Height | 0.9091 | 0.802 |
| I-CNN + Weight + Age | 0.9212 | 0.039 |
| I-CNN + Weight + Age + Gender | 0.9207 | 0.023 |
| I-CNN + Weight + Age + Gender + Height | 0.9213 | 0.049 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Heo, S.-J.; Kim, Y.; Yun, S.; Lim, S.-S.; Kim, J.; Nam, C.-M.; Park, E.-C.; Jung, I.; Yoon, J.-H. Deep Learning Algorithms with Demographic Information Help to Detect Tuberculosis in Chest Radiographs in Annual Workers’ Health Examination Data. Int. J. Environ. Res. Public Health 2019, 16, 250. https://doi.org/10.3390/ijerph16020250
