A Machine Learning Method for the Fine-Grained Classification of Green Tea with Geographical Indication Using a MOS-Based Electronic Nose

Abstract

1. Introduction

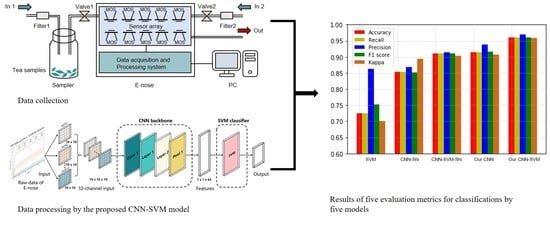

- A preprocessing scheme for the 10-channel E-nose data is proposed, inspired by the multi-channel input of the CNN models used in image processing. Each channel’s sensor data are converted into a single matrix, and the 10 matrices are combined to form the multi-channel input.

- The network structure of the proposed CNN-SVM, a CNN backbone with an SVM classifier, is analyzed. In this framework, the deep CNN is designed to automatically mine the volatile compound information of the tea samples, and the SVM, which is well suited to small sample sizes, classifies the extracted features to improve the classification performance.

- A comprehensive study on the fine-grained classification of green tea with geographical indication is presented, demonstrating the high accuracy and strong robustness of the proposed CNN-SVM framework.
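The preprocessing idea in the first contribution can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes that each sensor contributes 100 sampled points that are reshaped row by row into a 10 × 10 matrix (a size chosen to agree with the 10 × 10 spatial size listed for Conv 1 in the structure table), and the names `series_to_matrix` and `build_multichannel_input` are hypothetical.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def series_to_matrix(series: Sequence[float], side: int = 10) -> Matrix:
    """Reshape one sensor's response curve (side * side points) row by row."""
    if len(series) != side * side:
        raise ValueError(f"expected {side * side} points, got {len(series)}")
    return [list(series[r * side:(r + 1) * side]) for r in range(side)]


def build_multichannel_input(sensor_series: Sequence[Sequence[float]],
                             side: int = 10) -> List[Matrix]:
    """Stack one matrix per sensor, giving a channels x side x side input."""
    return [series_to_matrix(s, side) for s in sensor_series]


# Synthetic readings (assumed layout): 10 sensors, 100 points each.
readings = [[ch + t / 100 for t in range(100)] for ch in range(10)]
x = build_multichannel_input(readings)
shape = (len(x), len(x[0]), len(x[0][0]))  # channels, rows, columns
```

Each sensor keeps its own channel, so the convolution can combine information across sensors at the same time index, which is where the cross-sensitivity of the array shows up.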

2. Materials and Methods

2.1. General Information of Tea Samples

2.2. Experimental Samples and Conditions

2.3. Principal Component Analysis (PCA)

2.4. Convolutional Neural Network (CNN)

2.5. ResNeXt

2.6. Support Vector Machine (SVM)

2.7. Model Evaluation Metrics

3. Proposed Method

3.1. Data Preprocessing

3.2. Proposed CNN-SVM Framework

Algorithm 1 CNN-SVM

Input: training data.

Output: predicted category.

Begin

- Step 1: train the CNN backbone with the CNN classifier on the training data

- Step 2: save the parameters of the CNN backbone

- Step 3: replace the CNN classifier with the SVM classifier

- Step 4: test the CNN-SVM model on the test data

- Step 5: output the classification results

End
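Steps 3 to 5 of Algorithm 1 can be illustrated with a small, dependency-free sketch. Several simplifications are assumptions made for brevity and are not taken from the paper: the trained backbone of Steps 1 and 2 is replaced by a stand-in that only averages each channel, the SVM is a linear, two-class model trained by sub-gradient descent on the hinge loss (the kernel and solver used by the authors are not given in this section), and all function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Sample = List[List[List[float]]]  # channels x rows x columns


def backbone_features(sample: Sample) -> List[float]:
    """Stand-in for the saved CNN backbone: one global-average value per channel."""
    return [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in sample]


def train_linear_svm(feats: Sequence[Sequence[float]], labels: Sequence[int],
                     epochs: int = 50, lr: float = 0.1,
                     lam: float = 0.01) -> Tuple[List[float], float]:
    """Sub-gradient descent on the L2-regularised hinge loss; labels are +1 or -1."""
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(feats, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # inside the margin: hinge term contributes a gradient
                w = [wi - lr * (lam * wi - y * xi) for wi, xi in zip(w, x)]
                b += lr * y
            else:           # correctly classified with margin: only shrink w
                w = [wi - lr * lam * wi for wi in w]
    return w, b


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> int:
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0 else -1


def constant_sample(a: float, c: float) -> Sample:
    """Toy 2-channel, 2 x 2 sample with constant values per channel."""
    return [[[a, a], [a, a]], [[c, c], [c, c]]]


train = [(constant_sample(2, 0), 1), (constant_sample(3, 1), 1),
         (constant_sample(-2, 0), -1), (constant_sample(-3, 1), -1)]
feats = [backbone_features(s) for s, _ in train]   # frozen backbone output
labels = [y for _, y in train]
w, b = train_linear_svm(feats, labels)             # Step 3: SVM replaces the CNN head
test_pred = [predict(w, b, backbone_features(constant_sample(v, 0.5)))
             for v in (3, -3)]                     # Steps 4 and 5
```

The point of the design is that the backbone parameters stay fixed after Step 2, so only the small classifier on top is refit. A 12-class problem such as the one in this study would need a multi-class scheme (for example one-vs-rest) on top of this binary sketch.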

4. Results and Discussion

4.1. Principal Component Analysis

4.2. Comparison of the Classification Results of Five Models

5. Conclusions

- PCA, an unsupervised method, was used to assess the separability of the stable-value data of the 12 tea samples obtained from the E-nose. As expected, the PCA results showed relatively high overlap among the classes, so this method was ineffective for classifying the samples. The stable values combined with an SVM model were also used to distinguish the teas, but this approach performed poorly. These results indicate that the static characteristics alone were not sufficient for the fine-grained classification of the 12 green teas because meaningful feature information was lost.

- A 10-channel input matrix was constructed by converting the raw data of the 10 MOS-based sensors in the E-nose system (one sensor per channel), so that the CNN model could mine the deep features. The multi-channel design accounted for the cross-sensitivity of the sensors and retained sufficient detail for the classification tasks, providing a novel feature extraction method for E-nose signals in practical applications.

- A CNN-SVM framework consisting of the CNN backbone and the SVM classifier was proposed to perform the classification using the 10-channel input matrix. The novel structure of the CNN backbone based on ResNeXt was effective for extracting the deep features from the different channels automatically. The SVM classifier improved the generalization ability of the CNN model and increased the classification accuracy from 91.39% (CNN backbone + CNN classifier) to 96.11% (CNN backbone + SVM classifier) among the 12 green teas due to its good discrimination ability for small sample sizes.

- Compared with the other four machine learning models (SVM, CNN-Shi, CNN-SVM-Shi, and CNN), the proposed CNN-SVM achieved the highest scores on all five evaluation metrics for the classification of the GTSGI: accuracy of 96.11%, recall of 96.11%, precision of 96.86%, F1 score of 96.03%, and Kappa score of 95.76%. Excellent performance was also obtained for identifying the FGTSGI, with the highest F1 scores of 97.77% (for HSMF) and 99.31% (for XYMJ). These experimental results demonstrated the effectiveness of the CNN-SVM for the classification of the GTSGI and the identification of the FGTSGI.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| GTSGI | Green Tea with Geographical Indication |

| FGTSGI | Famous Green Tea with Geographical Indication |

| CNN | Convolutional Neural Network |

| SVM | Support Vector Machine |

| CNN-SVM | a CNN backbone combined with an SVM classifier |

| CNN-Shi | a CNN model proposed by Shi et al. [41] |

| CNN-SVM-Shi | CNN-Shi combined with an SVM classifier |

| E-nose | Electronic Nose |

| MOS | Metal Oxide Semiconductor |

| HSMF | Huangshan Maofeng |

| YDMF | Yandang Maofeng |

| EMMF | Emei Maofeng |

| LXMF | Lanxi Maofeng |

| QSMF | Qishan Maofeng |

| MTMF | Meitan Maofeng |

| XYMJ | Xinyang Maojian |

| GLMJ | Guilin Maojian |

| DYMJ | Duyun Maojian |

| GZMJ | Guzhang Maojian |

| QSMJ | Queshe Maojian |

| ZYMJ | Ziyang Maojian |

| PCA | Principal Component Analysis |

| RBF | Radial Basis Function |

| TP | True Positive |

| FP | False Positive |

| TN | True Negative |

| FN | False Negative |

| R | Recall |

| P | Precision |

| SGD | Stochastic Gradient Descent |

| PC1 | Principal Component 1 |

| PC2 | Principal Component 2 |

References

- The State of the U.S. Tea Industry 2019–20. Available online: http://en.ctma.com.cn/index.php/2019/12/09/green-tea-market-expected-to-grow (accessed on 15 November 2020).

- Chacko, S.M.; Thambi, P.T.; Kuttan, R.; Nishigaki, I. Beneficial effects of green tea: A literature review. Chin. Med. 2010, 5, 1–9.

- Wang, X.; Huang, J.; Fan, W.; Lu, H. Identification of green tea varieties and fast quantification of total polyphenols by near-infrared spectroscopy and ultraviolet-visible spectroscopy with chemometric algorithms. Anal. Methods 2015, 7, 787–792.

- Graham, H.N. Green tea composition, consumption, and polyphenol chemistry. Prev. Med. 1992, 21, 334–350.

- Huang, D.; Qiu, Q.; Wang, Y.; Wang, Y.; Lu, Y.; Fan, D.; Wang, X. Rapid Identification of Different Grades of Huangshan Maofeng Tea Using Ultraviolet Spectrum and Color Difference. Molecules 2020, 25, 4665.

- Ye, N.; Zhang, L.; Gu, X. Classification of Maojian teas from different geographical origins by micellar electrokinetic chromatography and pattern recognition techniques. Anal. Sci. 2011, 27, 765.

- Hu, L.; Yin, C. Development of a new three-dimensional fluorescence spectroscopy method coupling with multilinear pattern recognition to discriminate the variety and grade of green tea. Food Anal. Methods 2017, 10, 2281–2292.

- Zeng, X.; Tian, J.; Cai, K.; Wu, X.; Wang, Y.; Zheng, Y.; Cui, L. Promoting osteoblast differentiation by the flavanes from Huangshan Maofeng tea is linked to a reduction of oxidative stress. Phytomedicine 2014, 21, 217–224.

- Guo, G.Y.; Hu, K.F.; Yuan, D. The chemical components of xinyang maojian tea. Food Sci. Technol. 2006, 9, 298–301.

- Lv, H.P.; Zhong, Q.S.; Lin, Z.; Wang, L.; Tan, J.F.; Guo, L. Aroma characterisation of Pu-erh tea using headspace-solid phase microextraction combined with GC/MS and GC–olfactometry. Food Chem. 2012, 130, 1074–1081.

- Zhu, H.; Ye, Y.; He, H.; Dong, C. Evaluation of green tea sensory quality via process characteristics and image information. Food Bioprod. Process. 2017, 102, 116–122.

- Sasaki, T.; Koshi, E.; Take, H.; Michihata, T.; Maruya, M.; Enomoto, T. Characterisation of odorants in roasted stem tea using gas chromatography–mass spectrometry and gas chromatography-olfactometry analysis. Food Chem. 2017, 220, 177–183.

- Tan, H.R.; Lau, H.; Liu, S.Q.; Tan, L.P.; Sakumoto, S.; Lassabliere, B.; Yu, B. Characterisation of key odourants in Japanese green tea using gas chromatography-olfactometry and gas chromatography-mass spectrometry. LWT 2019, 108, 221–232.

- Boeker, P. On ‘electronic nose’ methodology. Sens. Actuators B Chem. 2014, 204, 2–17.

- Wei, H.; Gu, Y. A Machine Learning Method for the Detection of Brown Core in the Chinese Pear Variety Huangguan Using a MOS-Based E-Nose. Sensors 2020, 20, 4499.

- Yu, D.; Wang, X.; Liu, H.; Gu, Y. A Multitask Learning Framework for Multi-Property Detection of Wine. IEEE Access 2019, 7, 123151–123157.

- Liu, H.; Yu, D.; Gu, Y. Classification and evaluation of quality grades of organic green teas using an electronic nose based on machine learning algorithms. IEEE Access 2019, 7, 172965–172973.

- Russo, M.; Serra, D.; Suraci, F.; Di Sanzo, R.; Fuda, S.; Postorino, S. The potential of e-nose aroma profiling for identifying the geographical origin of licorice (Glycyrrhiza glabra L.) roots. Food Chem. 2014, 165, 467–474.

- Russo, M.; di Sanzo, R.; Cefaly, V.; Carabetta, S.; Serra, D.; Fuda, S. Non-destructive flavour evaluation of red onion (Allium cepa L.) Ecotypes: An electronic-nose-based approach. Food Chem. 2013, 141, 896–899.

- Liu, H.; Li, Q.; Gu, Y. Convenient and accurate method for the identification of Chinese teas by an electronic nose. IEEE Access 2019, 11, 79–88.

- Banerjee, M.B.; Roy, R.B.; Tudu, B.; Bandyopadhyay, R.; Bhattacharyya, N. Black tea classification employing feature fusion of E-Nose and E-Tongue responses. J. Food Eng. 2019, 244, 55–63.

- Lu, X.; Wang, J.; Lu, G.; Lin, B.; Chang, M.; He, W. Quality level identification of West Lake Longjing green tea using electronic nose. Sens. Actuators B Chem. 2019, 301, 127056.

- Gao, T.; Wang, Y.; Zhang, C.; Pittman, Z.A.; Oliveira, A.M.; Fu, K.; Willis, B.G. Classification of tea aromas using multi-nanoparticle based chemiresistor arrays. Sensors 2019, 19, 2574.

- Ruengdech, A.; Siripatrawan, U. Visualization of mulberry tea quality using an electronic sensor array, SPME-GC/MS, and sensory evaluation. Food Biosci. 2020, 36, 100593.

- Wang, X.; Gu, Y.; Liu, H. A transfer learning method for the protection of geographical indication in China using an electronic nose for the identification of Xihu Longjing tea. IEEE Sens. J. 2021, 21, 8065–8077.

- Le Maout, P.; Wojkiewicz, J.L.; Redon, N.; Lahuec, C.; Seguin, F.; Dupont, L.; Pud, A. Polyaniline nanocomposites-based sensor array for breath ammonia analysis. Portable e-nose approach to non-invasive diagnosis of chronic kidney disease. Sens. Actuators B Chem. 2018, 274, 616–626.

- Estakhroyeh, H.R.; Rashedi, E.; Mehran, M. Design and construction of electronic nose for multi-purpose applications by sensor array arrangement using IBGSA. J. Intell. Robot. Syst. 2018, 92, 205–221.

- Zhang, S.; Xie, C.; Hu, M.; Li, H.; Bai, Z.; Zeng, D. An entire feature extraction method of metal oxide gas sensors. Sens. Actuators B Chem. 2008, 132, 81–89.

- Liu, H.; Li, Q.; Li, Z.; Gu, Y. A Suppression Method of Concentration Background Noise by Transductive Transfer Learning for a Metal Oxide Semiconductor-Based Electronic Nose. Sensors 2020, 20, 1913.

- Yan, J.; Guo, X.; Duan, S.; Jia, P.; Wang, L.; Peng, C. Electronic nose feature extraction methods: A review. Sensors 2015, 15, 27804–27831.

- Wei, Z.; Wang, J.; Zhang, W. Detecting internal quality of peanuts during storage using electronic nose responses combined with physicochemical methods. Food Chem. 2015, 177, 89–96.

- Setkus, A.; Olekas, A.; Senulienė, D.; Falasconi, M.; Pardo, M.; Sberveglieri, G. Analysis of the dynamic features of metal oxide sensors in response to SPME fiber gas release. Sens. Actuators B Chem. 2010, 146, 539–544.

- Llobet, E.; Brezmes, J.; Vilanova, X.; Sueiras, J.E.; Correig, X. Qualitative and quantitative analysis of volatile organic compounds using transient and steady-state responses of a thick-film tin oxide gas sensor array. Sens. Actuators B Chem. 1997, 41, 13–21.

- Yang, Y.; Liu, H.; Gu, Y. A Model Transfer Learning Framework with Back-Propagation Neural Network for Wine and Chinese Liquor Detection by Electronic Nose. IEEE Access 2020, 8, 105278–105285.

- Zhang, S.; Xie, C.; Zeng, D.; Zhang, Q.; Li, H.; Bi, Z. A feature extraction method and a sampling system for fast recognition of flammable liquids with a portable E-nose. Sens. Actuators B Chem. 2007, 124, 437–443.

- Distante, C.; Leo, M.; Siciliano, P.; Persaud, K.C. On the study of feature extraction methods for an electronic nose. Sens. Actuators B Chem. 2002, 87, 274–288.

- Roussel, S.; Forsberg, G.; Steinmetz, V.; Grenier, P.; Bellon-Maurel, V. Optimisation of electronic nose measurements. Part I: Methodology of output feature selection. J. Food Eng. 1998, 37, 207–222.

- Lecun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.

- Liang, S.; Liu, H.; Gu, Y.; Guo, X.; Li, H.; Li, L.; Tao, L. Fast automated detection of COVID-19 from medical images using convolutional neural networks. Commun. Biol. 2020, 4, 1–13.

- Kwon, S. A CNN-assisted enhanced audio signal processing for speech emotion recognition. Sensors 2020, 20, 183.

- Shi, Y.; Gong, F.; Wang, M.; Liu, J.; Wu, Y.; Men, H. A deep feature mining method of electronic nose sensor data for identifying beer olfactory information. J. Food Eng. 2019, 263, 437–445.

- Haddi, Z.; Mabrouk, S.; Bougrini, M.; Tahri, K.; Sghaier, K.; Barhoumi, H.; Bouchikhi, B. E-Nose and e-Tongue combination for improved recognition of fruit juice samples. Food Chem. 2014, 150, 246–253.

- GB/T 19460-2008 Product of Geographical Indication-Huangshan Maofeng Tea. Available online: http://openstd.samr.gov.cn/bzgk/gb/newGbInfo?hcno=74D2547878A48B143A55D6C3722B6DA7 (accessed on 15 November 2020).

- GB/T 22737-2008 Product of Geographical Indication-Xinyang Maojian Tea. Tea Industry 2019–2020. Available online: http://openstd.samr.gov.cn/bzgk/gb/newGbInfo?hcno=3776C98AEFD24228A55810EDDEBA073B (accessed on 15 November 2020).

- Cheng, S.; Hu, J.; Fox, D.; Zhang, Y. Tea tourism development in Xinyang, China: Stakeholders’ view. Tour. Manag. Perspect. 2012, 2, 28–34.

- AIRSENSE Analytics. Available online: https://airsense.com/en (accessed on 19 March 2021).

- Shi, Y.; Yuan, H.; Xiong, C.; Zhang, Q.; Jia, S.; Liu, J.; Men, H. Improving performance: A collaborative strategy for the multi-data fusion of electronic nose and hyperspectral to track the quality difference of rice. Sens. Actuators B Chem. 2021, 333, 129546.

- Abdi, H.; Williams, L.J. Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2012, 2, 433–459.

- Shlens, J. A tutorial on principal component analysis. arXiv 2014, arXiv:1404.1100.

- Liu, H.; Li, Q.; Yan, B.; Zhang, L.; Gu, Y. Bionic electronic nose based on MOS sensors array and machine learning algorithms used for wine properties detection. Sensors 2019, 19, 45.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Li, Y.; Zhang, J.; Li, T.; Liu, H.; Li, J.; Wang, Y. Geographical traceability of wild Boletus edulis based on data fusion of FT-MIR and ICP-AES coupled with data mining methods (SVM). Spectrochim. Acta Part A 2017, 177, 20–27.

| No. | Maofeng Tea Name | Maofeng Tea Producing Area | Maofeng Tea Price ($/50 g) | Maojian Tea Name | Maojian Tea Producing Area | Maojian Tea Price ($/50 g) |

|---|---|---|---|---|---|---|

| 1 | HSMF | Huangshan City, Anhui Province | 47.2 | XYMJ | Xinyang City, Henan Province | 42.1 |

| 2 | YDMF | Wenzhou City, Zhejiang Province | 39.4 | GLMJ | Guilin City, Guangxi Province | 17.7 |

| 3 | EMMF | Ya’an City, Sichuan Province | 15.5 | DYMJ | Qiannan Prefecture, Guizhou Province | 39.8 |

| 4 | LXMF | Jinhua City, Zhejiang Province | 26.0 | GZMJ | Changde City, Hunan Province | 35.5 |

| 5 | QSMF | Chizhou City, Anhui Province | 22.6 | QSMJ | Zunyi City, Guizhou Province | 16.8 |

| 6 | MTMF | Zunyi City, Guizhou Province | 13.1 | ZYMJ | Ankang City, Shaanxi Province | 27.3 |

| No. | Sensor | Main Performance |

|---|---|---|

| 1 | W1C | Sensitive to aromatic compounds |

| 2 | W5S | High sensitivity to nitrogen oxides, broad range sensitivity |

| 3 | W3C | Sensitive to ammonia and aromatic compounds |

| 4 | W6S | Sensitive mainly to hydrogen |

| 5 | W5C | Sensitive to alkanes and aromatic components and less sensitive to polar compounds |

| 6 | W1S | Sensitive to methane, broad range sensitivity |

| 7 | W1W | Sensitive primarily to sulfur compounds, and also to many terpenes and organic sulfur compounds |

| 8 | W2S | Sensitive to ethanol and less sensitive to aromatic compounds |

| 9 | W2W | Sensitive to aromatic compounds and organic sulfur compounds |

| 10 | W3S | Highly sensitive to alkanes |

| Stage | Output | Structure Details |

|---|---|---|

| Conv 1 | 10 × 10 × 16 | 1 × 1, 16, stride=1 |

| Layer 1 | 10 × 10 × 32 | |

| Layer 2 | 5 × 5 × 64 | |

| Pool 1 | 1 × 1 × 64 | global average pool |
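The spatial sizes in the table above can be checked with the standard convolution output formula, out = floor((n + 2p − k) / s) + 1. The sketch below is only illustrative. The 1 × 1, stride-1 kernel of Conv 1 comes from the table, but the structure details of Layer 1 and Layer 2 are not listed, so the 3 × 3 kernels, the padding of 1, and the stride of 2 in Layer 2 are assumptions chosen because they reproduce the listed sizes.

```python
def conv_out(n: int, kernel: int, stride: int = 1, padding: int = 0) -> int:
    """Spatial output size of a convolution along one dimension."""
    return (n + 2 * padding - kernel) // stride + 1


input_size = 10                                                # 10 x 10 input per channel
conv1 = conv_out(input_size, kernel=1, stride=1)               # from the table: 1 x 1, stride 1
layer1 = conv_out(conv1, kernel=3, stride=1, padding=1)        # assumed 3 x 3, stride 1
layer2 = conv_out(layer1, kernel=3, stride=2, padding=1)       # assumed 3 x 3, stride 2
pooled = 1                                                     # global average pool collapses to 1 x 1
feature_length = pooled * pooled * 64                          # 64 channels after Layer 2
```

Under these assumptions the backbone hands a 64-element feature vector to the classifier, whichever classifier is attached.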

| Model | Accuracy | Recall | Precision | F1 Score | Kappa Score |

|---|---|---|---|---|---|

| SVM | 0.7250 | 0.7250 | 0.8624 | 0.7524 | 0.7000 |

| CNN-Shi [41] | 0.8527 | 0.8527 | 0.8683 | 0.8516 | 0.8939 |

| CNN-SVM-Shi [41] | 0.9111 | 0.9111 | 0.9142 | 0.9104 | 0.9030 |

| The proposed CNN | 0.9139 | 0.9139 | 0.9375 | 0.9152 | 0.9061 |

| The proposed CNN-SVM | 0.9611 | 0.9611 | 0.9686 | 0.9603 | 0.9576 |
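The five metrics in the table above can all be derived from a confusion matrix. The following sketch shows one common way to do this; the macro averaging of recall, precision, and F1 over classes is an assumption, since the exact definitions are not reproduced in this section, and the function name and the toy matrix are hypothetical.

```python
from typing import Dict, List


def classification_metrics(cm: List[List[int]]) -> Dict[str, float]:
    """cm[i][j] = number of samples of true class i predicted as class j."""
    k = len(cm)
    total = sum(sum(row) for row in cm)
    row_sums = [sum(row) for row in cm]                               # TP + FN per class
    col_sums = [sum(cm[i][j] for i in range(k)) for j in range(k)]    # TP + FP per class
    recalls, precisions, f1s = [], [], []
    for c in range(k):
        tp = cm[c][c]
        r = tp / row_sums[c] if row_sums[c] else 0.0
        p = tp / col_sums[c] if col_sums[c] else 0.0
        recalls.append(r)
        precisions.append(p)
        f1s.append(2 * p * r / (p + r) if p + r else 0.0)
    accuracy = sum(cm[c][c] for c in range(k)) / total
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(row_sums[c] * col_sums[c] for c in range(k)) / total ** 2
    kappa = (accuracy - expected) / (1 - expected)
    return {
        "accuracy": accuracy,
        "recall": sum(recalls) / k,
        "precision": sum(precisions) / k,
        "f1": sum(f1s) / k,
        "kappa": kappa,
    }


# Toy 3-class confusion matrix with 10 samples per class (made-up numbers).
toy = [[8, 2, 0],
       [1, 9, 0],
       [0, 0, 10]]
m = classification_metrics(toy)
```

If every class has the same number of test samples (an assumption here), the chance agreement is 1/K, macro recall equals accuracy, and Kappa reduces to (K × accuracy − 1) / (K − 1). With K = 12 and an accuracy of 0.9611 this gives 0.9576, in agreement with the values listed for the proposed CNN-SVM.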

| Class | SVM | CNN-Shi [41] | CNN-SVM-Shi [41] | The Proposed CNN | The Proposed CNN-SVM |

|---|---|---|---|---|---|

| HSMF | 0.8302 | 0.9310 | 0.9474 | 0.9474 | 0.9777 |

| YDMF | 0.5000 | 0.8462 | 0.9667 | 0.9355 | 0.9731 |

| EMMF | 0.8000 | 0.9375 | 0.9677 | 1.0000 | 1.0000 |

| LXMF | 0.8000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |

| QSMF | 0.8000 | 0.6441 | 0.7234 | 0.6957 | 0.8924 |

| MTMF | 0.8000 | 0.8333 | 0.8364 | 0.8519 | 0.9108 |

| XYMJ | 0.7532 | 0.8150 | 0.9166 | 0.9474 | 0.9931 |

| GLMJ | 0.8000 | 0.7937 | 0.8824 | 0.8696 | 0.9375 |

| DYMJ | 0.8000 | 0.9231 | 1.0000 | 1.0000 | 1.0000 |

| GZMJ | 0.8000 | 0.8824 | 0.8955 | 0.9016 | 0.9438 |

| QSMJ | 0.5455 | 0.6129 | 0.7887 | 0.8333 | 0.8955 |

| ZYMJ | 0.8000 | 1.0000 | 1.0000 | 1.0000 | 1.0000 |
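As a quick consistency check, the per-class F1 scores above can be averaged and compared with the overall F1 scores in the model comparison table. The snippet assumes that the overall F1 score is the unweighted (macro) mean over the 12 classes, which is not stated in this section; the numbers are copied from the last two columns of the table.

```python
# Per-class F1 scores, in table order (HSMF ... ZYMJ).
f1_cnn = [0.9474, 0.9355, 1.0000, 1.0000, 0.6957, 0.8519,
          0.9474, 0.8696, 1.0000, 0.9016, 0.8333, 1.0000]
f1_cnn_svm = [0.9777, 0.9731, 1.0000, 1.0000, 0.8924, 0.9108,
              0.9931, 0.9375, 1.0000, 0.9438, 0.8955, 1.0000]


def macro_mean(scores):
    """Unweighted mean over classes."""
    return sum(scores) / len(scores)


macro_cnn = round(macro_mean(f1_cnn), 4)
macro_cnn_svm = round(macro_mean(f1_cnn_svm), 4)
# Largest per-class gain from swapping the CNN classifier for the SVM.
gains = [round(b - a, 4) for a, b in zip(f1_cnn, f1_cnn_svm)]
```

Both means reproduce the overall F1 scores reported for the proposed CNN (0.9152) and the proposed CNN-SVM (0.9603), and the largest per-class gain falls on QSMF, the class with the lowest F1 score under the CNN classifier.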

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, D.; Gu, Y. A Machine Learning Method for the Fine-Grained Classification of Green Tea with Geographical Indication Using a MOS-Based Electronic Nose. Foods 2021, 10, 795. https://doi.org/10.3390/foods10040795
