Robot Imitation Learning of Social Gestures with Self-Collision Avoidance Using a 3D Sensor

Abstract

1. Introduction

1.1. Related Work

1.1.1. Imitation Learning

1.1.2. Imitation Learning with Self-Collision Avoidance

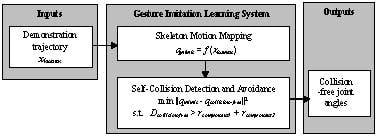

2. Gesture Imitation Learning System

2.1. Skeleton Motion Mapping Module

2.2. Self-Collision Detection and Avoidance Module

subject to D > r1 + r2

2.2.1. Self-Collision Detection

Collision Checks between a Sphere and a Capsule

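- (1) If the intersection of the perpendicular line, drawn from the sphere center to the capsule axis, lies on the extended line of the axis beyond Point Mt, the shortest distance is the distance from the sphere center to Mt.

- (2) If the intersection of the perpendicular line lies on the extended line of the axis beyond Point Mb, the shortest distance is the distance from the sphere center to Mb.

- (3) If the intersection of the perpendicular line lies on the axis segment between Mt and Mb, the shortest distance is the length of the perpendicular.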
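The three cases above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names and the projection parameter `t` are assumptions introduced here, and the collision test simply applies the constraint D > r1 + r2 stated earlier. Clamping `t` to the interval [0, 1] covers all three cases at once (`t` below 0 is the case near Mt, `t` above 1 is the case near Mb, and anything in between is the perpendicular case).

```python
import numpy as np

def point_segment_distance(p, m_t, m_b):
    """Shortest distance from point p to the segment from m_t to m_b."""
    p, m_t, m_b = (np.asarray(v, dtype=float) for v in (p, m_t, m_b))
    axis = m_b - m_t
    length_sq = float(axis @ axis)
    if length_sq == 0.0:
        # Degenerate capsule: the axis collapses to a single point.
        return float(np.linalg.norm(p - m_t))
    # Normalized position of the foot of the perpendicular along the axis.
    t = float((p - m_t) @ axis) / length_sq
    # t < 0: foot lies beyond m_t; t > 1: foot lies beyond m_b.
    t = min(1.0, max(0.0, t))
    return float(np.linalg.norm(p - (m_t + t * axis)))

def sphere_capsule_collide(center, r_sphere, m_t, m_b, r_capsule):
    """True when the separation constraint D > r1 + r2 is violated."""
    d = point_segment_distance(center, m_t, m_b)
    return d <= r_sphere + r_capsule

# Example with made-up geometry: capsule axis along z from (0,0,1) to (0,0,-1).
print(point_segment_distance((0, 0, 2), (0, 0, 1), (0, 0, -1)))   # case (1)
print(point_segment_distance((0, 4, -4), (0, 0, 1), (0, 0, -1)))  # case (2)
print(point_segment_distance((3, 0, 0), (0, 0, 1), (0, 0, -1)))   # case (3)
```

The example coordinates are arbitrary and chosen only so that each call exercises one of the three cases.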

Collision Checks between Two Capsules

- (1) If the two axis lines, one through Mt and Mb and the other through Nt and Nb, are skew lines, as shown in Figure 4, there are two situations:

  - (i) If both intersections of the common perpendicular, Mh and Nh, lie on their respective segments, the shortest distance is the perpendicular distance between Mh and Nh.

  - (ii) If at least one of the two intersections, Mh or Nh, does not lie on its segment, the shortest distance is the smallest of the candidate distances, which include d1, d2, d3 and d4, as shown in Figure 4. Here d1, d2, d3 and d4 are the perpendicular distances from Mt to the segment NtNb, from Mb to NtNb, from Nt to the segment MtMb, and from Nb to MtMb, respectively.

- (2) If the direction vectors of the two lines are proportional, i.e., the vector from Mt to Mb equals k times the vector from Nt to Nb, where k ≠ 0, the two lines are parallel, as shown in Figure 5. The shortest distance is the smaller of d1 and d2.

- (3) If the two lines are neither skew nor parallel, they intersect at a point P, as shown in Figure 6. There are two situations:

  - (i) If P lies on both segments, the shortest distance is zero. The position of P is found by writing the two lines in vector form and solving for the point common to both.

  - (ii) If P does not lie on at least one of the two segments, the shortest distance is the smallest among d1, d2, d3 and d4.
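For comparison, the shortest distance between two capsule axes can also be computed without enumerating the skew, parallel and intersecting cases explicitly. The sketch below uses the standard clamped closest-point method for two line segments, which is a swapped-in technique and not the case-by-case procedure described above; all function and variable names are assumptions made for illustration. It should return the same distance in each of the situations listed.

```python
import numpy as np

def segment_segment_distance(m_t, m_b, n_t, n_b, eps=1e-12):
    """Shortest distance between segment m_t-m_b and segment n_t-n_b."""
    m_t, m_b, n_t, n_b = (np.asarray(v, dtype=float)
                          for v in (m_t, m_b, n_t, n_b))
    u = m_b - m_t            # direction of the first axis
    v = n_b - n_t            # direction of the second axis
    r = m_t - n_t
    a, e = float(u @ u), float(v @ v)
    f = float(v @ r)
    if a <= eps and e <= eps:        # both segments are points
        s = t = 0.0
    elif a <= eps:                   # first segment is a point
        s, t = 0.0, np.clip(f / e, 0.0, 1.0)
    else:
        c = float(u @ r)
        if e <= eps:                 # second segment is a point
            t, s = 0.0, np.clip(-c / a, 0.0, 1.0)
        else:
            b = float(u @ v)
            denom = a * e - b * b    # zero when the axes are parallel
            s = np.clip((b * f - c * e) / denom, 0.0, 1.0) if denom > eps else 0.0
            t = (b * s + f) / e
            if t < 0.0:              # closest point falls before n_t
                t, s = 0.0, np.clip(-c / a, 0.0, 1.0)
            elif t > 1.0:            # closest point falls beyond n_b
                t, s = 1.0, np.clip((b - c) / a, 0.0, 1.0)
    return float(np.linalg.norm((m_t + s * u) - (n_t + t * v)))

def capsules_collide(m_t, m_b, r1, n_t, n_b, r2):
    """True when the separation constraint D > r1 + r2 is violated."""
    return segment_segment_distance(m_t, m_b, n_t, n_b) <= r1 + r2

# Made-up skew axes whose common perpendicular has length 1.
print(segment_segment_distance((-1, 0, 0), (1, 0, 0), (0, -1, 1), (0, 1, 1)))
```

The absolute tolerance `eps` is an arbitrary choice for this sketch; a production check would likely scale it with the segment lengths.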

2.2.2. Self-Collision Avoidance

3. Imitation Learning with the Tangy Robot

4. Experimental Section

4.1. Simulation Results

4.2. Real-World Results with Tangy

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

Appendix A. Kinematics Model of the Tangy Robot Arm

| Frames | θi (rad) | di (mm) | ai (mm) | αi (rad) |
|---|---|---|---|---|
| Frame {m} | 0 | 0 | −t1 | 0 |
| Frame {0} | π | 0 | 0 | π |
| Frame {1} | θ1 − π | 0 | 0 | π |
| Frame {2} | −θ2 + π | 0 | 0 | π |
| Frame {3} | −θ3 + π | d1 | 0 | −π |
| Frame {4} | θ4 − π | 0 | d2 | π |

| Frames | θi (rad) | di (mm) | ai (mm) | αi (rad) |
|---|---|---|---|---|
| Frame {m} | 0 | 0 | t1 | 0 |
| Frame {0} | π | 0 | 0 | π |
| Frame {1} | θ5 − π | 0 | 0 | π |
| Frame {2} | θ6 + π | 0 | 0 | π |
| Frame {3} | −θ7 + π | d1 | 0 | −π |
| Frame {4} | θ8 − π | 0 | d2 | π |
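As an illustration of how parameter rows such as those tabulated above are typically used, the sketch below chains one homogeneous transform per row. It assumes the classic Denavit-Hartenberg convention (rotation about z by θ, translation along z by d, translation along x by a, rotation about x by α), which this appendix does not state explicitly. The function names, the joint angles, and the numeric values of d1, d2 and t1 are hypothetical placeholders.

```python
import numpy as np

def dh_transform(theta, d, a, alpha):
    """Homogeneous transform for one row (classic DH convention assumed)."""
    ct, st = np.cos(theta), np.sin(theta)
    ca, sa = np.cos(alpha), np.sin(alpha)
    return np.array([
        [ct, -st * ca,  st * sa, a * ct],
        [st,  ct * ca, -ct * sa, a * st],
        [0.0,      sa,       ca,      d],
        [0.0,     0.0,      0.0,    1.0],
    ])

def first_table_rows(q, d1, d2, t1):
    """Rows (theta, d, a, alpha) of the first table for joint angles q."""
    th1, th2, th3, th4 = q
    pi = np.pi
    return [
        (0.0,       0.0, -t1, 0.0),   # Frame {m}
        (pi,        0.0, 0.0, pi),    # Frame {0}
        (th1 - pi,  0.0, 0.0, pi),    # Frame {1}
        (-th2 + pi, 0.0, 0.0, pi),    # Frame {2}
        (-th3 + pi, d1,  0.0, -pi),   # Frame {3}
        (th4 - pi,  0.0, d2,  pi),    # Frame {4}
    ]

def chain(rows):
    """Multiply the per-row transforms in order, base frame first."""
    T = np.eye(4)
    for row in rows:
        T = T @ dh_transform(*row)
    return T

# Hypothetical joint angles (rad) and link dimensions (mm), for illustration only.
T_end = chain(first_table_rows((0.1, 0.2, 0.3, 0.4), d1=250.0, d2=200.0, t1=150.0))
print(np.round(T_end, 3))
```

The same `chain` function applies to the second table once its rows are entered with the signs shown there.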


© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhang, T.; Louie, W.-Y.; Nejat, G.; Benhabib, B. Robot Imitation Learning of Social Gestures with Self-Collision Avoidance Using a 3D Sensor. Sensors 2018, 18, 2355. https://doi.org/10.3390/s18072355
