1. Introduction

Forest ecosystems are important for maintaining life on our planet: they secure food for local populations, contribute to soil conservation, mitigate the effects of climate change, provide habitats for species, and regulate water flow [1]. In particular, tropical forests play a fundamental role in maintaining biodiversity. For example, the Amazon rainforest hosts about a quarter of the world’s terrestrial species and accounts for

of global terrestrial photosynthesis [2]. Furthermore, tropical forests play a key role in mitigating climate change; for example, the mature Amazon rainforest absorbed the carbon emissions of all Amazonian countries over two decades (1980 to 2000) [3]. To build knowledge of tropical forest processes, it is crucial to develop methods for large-scale forest inventories at the individual tree level that gather information on tree species, crown size, tree height, and diameter in order to estimate biomass and quantify its change through time. These tree metrics are critical for supporting applied research on tropical forest conservation, as these forests are globally threatened by widespread deforestation [4].

Traditional techniques such as field inventories, especially in tropical regions, are costly and time-consuming; they cover small areas (approximately 1 hectare (

)) when compared with inventories produced using a combination of remote sensing and field techniques [5,6]. For example, in the Atlantic rainforest, an important Brazilian biome, only

of its total area has been inventoried so far [7]. Forest inventories are fundamental for conservation and sustainable management, and remotely sensed aerial or satellite information could be applied to produce them [6].

With the evolution of satellite imaging technologies, remote sensing has been providing increasingly accurate information over larger areas [8], allowing this information to be applied to forestry and to monitoring deforested regions [9,10,11]. The recent availability of satellite images with very high spatial resolution (1 pixel < 1 meter (m)) allows us to observe each individual tree crown (ITC), which could enable the development of algorithms for obtaining metrics (for example, crown size) from them; this information could then assist the production of forest inventories [12,13]. The advantage of using metrics obtained from remote sensing data to produce forest inventories is that they could cover large areas at a lower cost than inventories produced by field campaigns [13,14,15,16].

A precondition for producing a forest inventory with relevant information is the application of a tree crown detection and delineation (TCDD) technique [17]. TCDD techniques help with collecting information about the number of trees in an area, the size of each tree crown, and the distance between crowns [13,18,19]. In addition, accurate TCDD allows a better characterization of each crown’s spectral signature, which can be used to develop algorithms for species recognition [13,18,19,20]. To perform TCDD using remotely sensed images from passive optical sensors, the tree crown must be visually distinguishable, implying that the spatial resolution of the image must be finer than the tree’s crown size (that is, the pixel size must be smaller than the crown) [18]. Therefore, remotely sensed aerial or satellite images with a spatial resolution in the range of 0.1–1 m/pixel allow TCDD techniques to be developed [21]. WorldView-2 (WV-2), for example, is among the satellites that provide optical images with very high spatial resolution, which have been applied in different studies, such as those on species identification and TCDD [13,20,22].

Another promising remote sensing technology applied to TCDD is light detection and ranging (LiDAR). Several studies that used LiDAR sensors have obtained promising results in TCDD [23,24]. However, data acquisition by LiDAR sensors is expensive and its processing is complex, which limits the reproducibility of the methods [25]. When the cost of data collected by airborne campaigns is compared with that of data acquired by satellites with high spatial resolution, the latter is more affordable and provides multi-spectral information [13].

TCDD algorithms perform two distinct operations: the first is crown detection, that is, determining the location that the crown occupies; the second is crown delineation, that is, determining which pixels compose the crown in order to establish its borders with other trees or other elements of the scene [26]. There is a great variety of algorithms for TCDD, which can be categorized into four groups: local maximum/minimum detection [27], edge detection [28], region growing [29], and template matching [30].

TCDD approaches that use local maximum/minimum detection and edge detection are based on the assumption that a tree crown has a mountain-like structure, with a bright region at the top and a shaded region between crowns [27,28]. Algorithms based on finding the brightest pixels (called local maximum algorithms) may be useful in temperate forest regions, but this category of algorithm may not be suitable for tropical forest regions due to the large variety of tree crown shapes [13]. In addition, pixels with maximum brightness may not be at the top of the crown but in a region close to its edge; this situation occurs mainly in rounded tree crowns [13].
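The idea behind local maximum algorithms can be illustrated with a minimal sketch. It is not taken from any of the cited studies: the function name `local_maxima`, the `window` parameter, and the toy brightness grid are assumptions made for illustration only.

```python
def local_maxima(image, window=1):
    """Return (row, col) positions strictly brighter than all neighbours within `window` pixels."""
    rows, cols = len(image), len(image[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            # Collect every neighbour inside the square window, clipped at the image border.
            neighbours = [
                image[rr][cc]
                for rr in range(max(0, r - window), min(rows, r + window + 1))
                for cc in range(max(0, c - window), min(cols, c + window + 1))
                if (rr, cc) != (r, c)
            ]
            if all(image[r][c] > value for value in neighbours):
                peaks.append((r, c))
    return peaks


# Hypothetical brightness values: two bright treetops separated by a shaded column.
brightness = [
    [1, 2, 1, 0, 1],
    [2, 9, 2, 0, 2],
    [1, 2, 1, 0, 7],
    [0, 0, 0, 0, 2],
]
treetops = local_maxima(brightness)
```

In this toy grid the second peak sits on the image border, which loosely mirrors the situation described above where the brightest pixel lies near the crown edge rather than at its center.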

Techniques that use region growing are based on the spectral characteristics of the crown. Their results depend on the density of the forest, the tree position, and the dataset resolution [29,31]. Region growing is a segmentation approach that splits an image into different areas and recognizes objects within each sub-image. This technique relies on the assumption that the color intensity is high at the top of the tree crown and decreases gradually until the border, which lies in a shaded area, is reached [32].
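The brightness assumption described above can be sketched as a simple region growing routine. This is an illustrative simplification, not the method of the cited works: the function `grow_region`, the 4-connected neighbourhood, and the `shade_threshold` parameter are assumptions.

```python
from collections import deque


def grow_region(image, seed, shade_threshold):
    """Grow a crown from `seed`, accepting neighbours that are no brighter than
    the pixel they are reached from and brighter than the shade threshold."""
    rows, cols = len(image), len(image[0])
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):  # 4-connected neighbours
            rr, cc = r + dr, c + dc
            if not (0 <= rr < rows and 0 <= cc < cols) or (rr, cc) in region:
                continue
            # Intensity must decrease (or stay equal) away from the top and stay above the shade level.
            if shade_threshold < image[rr][cc] <= image[r][c]:
                region.add((rr, cc))
                queue.append((rr, cc))
    return region


# Hypothetical brightness values: one crown on the left, a shaded gap, another crown on the right.
brightness = [
    [1, 2, 1, 0, 1],
    [2, 9, 2, 0, 2],
    [1, 2, 1, 0, 7],
    [0, 0, 0, 0, 2],
]
crown = grow_region(brightness, seed=(1, 1), shade_threshold=0)
```

The region stops at the shaded column, so the neighbouring crown is not absorbed. In dense canopies without a clear shaded border this stopping rule would fail, which is consistent with the dependence on forest density noted above.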

The template matching approach is based on the tree crown’s shape [31]. Generally, this approach models a tree crown using an ellipsoid (the template equation), and different tree crown shapes can be modeled by varying the ellipsoid surface (changing the parameters of the ellipsoid equation). Image regions that correlate highly with the template are then considered likely to be tree crowns [31]. Artificial neural networks (ANNs) have also been applied as a template matching step in TCDD algorithms [33,34].
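As a rough illustration of ellipsoid-based template matching, the sketch below builds a brightness template from the upper half of an ellipsoid and scores an image patch by its Pearson correlation with that template. The parameterization (`a`, `b`, `height`) and the function names are assumptions chosen for the example, not the template equation used in the cited studies.

```python
import math


def ellipsoid_template(size, a, b, height=1.0):
    """Sample the upper surface of an ellipsoid with semi-axes `a` (rows) and `b` (columns)."""
    center = (size - 1) / 2
    template = []
    for r in range(size):
        row = []
        for c in range(size):
            d = ((r - center) / a) ** 2 + ((c - center) / b) ** 2
            row.append(height * math.sqrt(1 - d) if d < 1 else 0.0)
        template.append(row)
    return template


def correlation(patch, template):
    """Pearson correlation between two equally sized 2D grids (0.0 if either is constant)."""
    xs = [v for row in patch for v in row]
    ys = [v for row in template for v in row]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


template = ellipsoid_template(size=5, a=2.5, b=2.5)
# Hypothetical patches: a brighter, rescaled copy of the template and a featureless patch.
crown_like = [[3 * v + 1 for v in row] for row in template]
flat = [[5.0] * 5 for _ in range(5)]
crown_score = correlation(crown_like, template)
flat_score = correlation(flat, template)
```

Because correlation is invariant to brightness offset and scale, a crown that is brighter or darker than the template still scores highly, while changing `a` and `b` would be needed to match crowns of other shapes.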

Recently, a type of ANN called the convolutional neural network (CNN) has become the state of the art for solving different computer vision problems, such as face recognition [35], object detection [36], human pose estimation [37], and tree species detection [38]. Owing to their promising results in image processing, CNNs have been used to solve different problems within remote sensing, such as land cover classification [39], scene classification [40], object extraction [41], species classification (e.g., oil palm tree detection in a region in the south of Malaysia [42]), fine-grained mapping of vegetation species and communities in central Chile [43], tree crown detection [44], and very high-resolution regional tree species mapping [13].

The CNN (a deep learning algorithm) is a feed-forward neural network trained in a supervised way, which has gained prominence due to its application in computer vision, notably for solving instance segmentation problems in a scene [45]. Instance segmentation aims to identify each object at the pixel level and perform its complete delineation. Among CNN architectures, Mask R-CNN stands out, having outperformed other architectures designed for instance segmentation tasks [45]. Despite the promising results reached by this CNN in recent studies, such as hangar detection [46], livestock farming management [47], and ship detection [48], its application to high spatial resolution satellite images has been little studied.

One of the main problems faced when applying CNNs (including Mask R-CNN) is composing a training set with enough training examples (training patterns) for the network to learn and, hence, to solve the problem satisfactorily [44,45]. Deep neural networks, including CNNs, require a large training set because of the number of free parameters (weights and biases) in the network architecture, which must be adjusted during the learning process. The number of training patterns required grows in direct proportion to the number of free parameters [49,50]. Gathering patterns for CNN training so that the algorithm can solve object detection or instance segmentation problems is costly and difficult because training sets must be composed of thousands of images, all containing objects of interest with correct delineations [44]. In addition, the quality and quantity of training patterns can affect the prediction accuracy. When using a CNN for TCDD, collecting training samples may become more difficult because, even in high-resolution images, and especially over tropical forest regions, identifying ITC samples is not trivial. One solution proposed for this problem is the use of an unsupervised algorithm to select the training patterns, but inaccuracy of this algorithm during sample selection may negatively impact the CNN’s performance [44,51]. Another alternative is the use of LiDAR point cloud information to help with manual delineation [44].

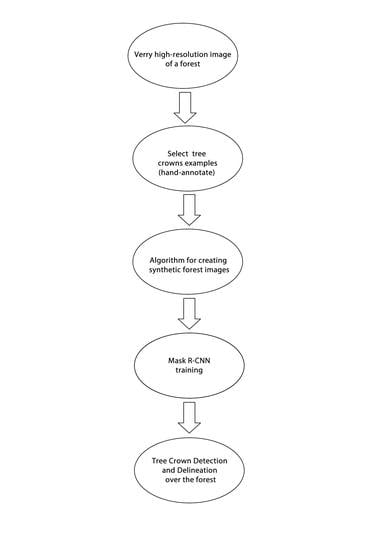

In this context, this research proposes the application of Mask R-CNN to perform TCDD in very high spatial resolution WV-2 images (

m per pixel) from a highly diverse tropical forest area. To construct the training set, an algorithm was implemented to generate synthetic images. This algorithm produces each synthetic image from a small number of hand-annotated crowns, and its implementation has two main objectives: first, to overcome the need to delineate by hand a large training set composed of images with all ITCs delineated, and, second, to evaluate its use as an alternative to other techniques (such as LiDAR and unsupervised algorithms) for training set construction. The main objective of the study is to present a new use of deep learning to delineate each tree crown individually in tropical forests. The main innovation is the use of a deep learning-based algorithm to perform TCDD over a tropical forest image, producing the tree crown delineation as output. In addition, according to Weinstein et al. [44], TCDD results are difficult to compare because of variation in the measurements applied; therefore, this research provides a set of metrics and graphical analyses that can serve as a guideline for the analysis of other research that performs TCDD. For these reasons, this methodology can be applied as an auxiliary tool in the development of forest inventories of tropical regions.

4. Discussion

Our research proposes the application of one of the most recently developed deep learning techniques for image instance segmentation, known as Mask R-CNN [45], to perform tree crown detection and delineation in a tropical forest with a very high-resolution image. The study site was the forest of the Santa Genebra Reserve, a well-preserved fragment of Atlantic rainforest with a heterogeneous canopy cover [20].

One of the main difficulties when working with CNNs for image segmentation is building the training set, since properly training the network requires thousands of images with the objects of interest manually delineated. For TCDD within a tropical forest, obtaining images with all tree crowns hand-delineated is very difficult due to the complexity of the environment (e.g., the number of tree crowns and species), even with very high spatial resolution images. As an alternative, this research uses an algorithm for the creation of synthetic forests. Using the proposed algorithm together with a set of hand-delineated tree crowns, it was possible to construct thousands of synthetic forest images, as the algorithm creates images with overlapping tree crowns while keeping the correct delineation. The number of tree crowns within an image can also be varied, thereby allowing the creation of regions with varying canopy density. Furthermore, each new tree crown overlay is a new pattern to be presented to the neural network during training, which could increase its capacity to detect tree crowns within a forest and helps to avoid overfitting during the learning process.
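The general idea of composing synthetic forests from hand-delineated crowns can be sketched as follows. This is a simplified illustration under stated assumptions, not the algorithm implemented in this research: crowns are represented as small grids in which `None` marks pixels outside the crown outline, placement is uniformly random, and the names `make_synthetic_forest`, `small`, and `large` are hypothetical.

```python
import random


def make_synthetic_forest(crowns, height, width, n_crowns, seed=0):
    """Paste randomly chosen crowns onto a blank canvas.

    Returns the composed image and a label grid in which each pixel holds the
    id of the crown visible at that position (0 means background). Crowns pasted
    later occlude earlier ones, so overlapping crowns keep a correct delineation.
    """
    rng = random.Random(seed)
    image = [[0] * width for _ in range(height)]
    labels = [[0] * width for _ in range(height)]
    for instance_id in range(1, n_crowns + 1):
        crown = rng.choice(crowns)
        crown_h, crown_w = len(crown), len(crown[0])
        top = rng.randint(0, height - crown_h)
        left = rng.randint(0, width - crown_w)
        for r in range(crown_h):
            for c in range(crown_w):
                value = crown[r][c]
                if value is not None:  # skip pixels outside the crown outline
                    image[top + r][left + c] = value
                    labels[top + r][left + c] = instance_id
    return image, labels


# Two hypothetical hand-delineated crowns (single-band brightness for brevity).
small = [[None, 5, None], [5, 6, 5], [None, 5, None]]
large = [[None, 7, 7, None], [7, 8, 8, 7], [7, 8, 8, 7], [None, 7, 7, None]]
image, labels = make_synthetic_forest([small, large], height=10, width=10, n_crowns=6, seed=42)
```

Varying `n_crowns` changes the canopy density of the generated image, and changing `seed` yields a new arrangement of overlaps from the same set of annotated crowns.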

The main advantages of the method proposed in this article include the ability to detect and delineate tree crowns within a tropical forest with high accuracy while working only with an RGB satellite image, which facilitates its reproduction in other areas of interest. Our findings confirmed that the CNN-based model is able to accurately detect and delineate tree crowns in a highly heterogeneous tropical forest. In the sub-sections below, we discuss the model’s performance, limitations, and perspectives in greater detail.

4.1. TCDD Detection Performance

With a Kappa index and global accuracy values for tree crown detection of

and

, respectively (Table 5), the proposed method proves useful for application over tropical forests. Using a number of correctly detected pixels over

, a total of 345 tree crowns were considered true positives, and the Kappa index and the global accuracy were

and

, respectively. Instead of using the number of correctly detected pixels to evaluate the detection accuracy, the

value can be used for this purpose. The research developed by [44] computed its metrics using the results with

minimum value of

, which is more stringent. Our research detected 349 tree crowns with an

value

and 79 had an

value

or were not detected. Using these values, the Kappa index obtained was

and the global detection accuracy was

. Compared with a recent study on the same region, our research achieved better results, as Wagner et al. [13] obtained (for crown detection) a Kappa index value of

and a global accuracy of

. Wagner et al. [13] proposed an algorithm based on edge detection and region growing to perform TCDD.
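For readers who want to reproduce this kind of detection assessment, the sketch below computes global accuracy and Cohen's Kappa index from a confusion matrix using their standard definitions. The layout of the matrix and the counts are purely illustrative assumptions; they are not the values of this study or of the studies compared here.

```python
def global_accuracy(matrix):
    """Fraction of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[i][i] for i in range(len(matrix))) / total


def cohen_kappa(matrix):
    """Cohen's Kappa: agreement corrected for the agreement expected by chance."""
    total = sum(sum(row) for row in matrix)
    observed = global_accuracy(matrix)
    expected = sum(
        sum(matrix[i]) * sum(row[i] for row in matrix)  # row total times column total
        for i in range(len(matrix))
    ) / total ** 2
    if expected == 1:
        return 0.0  # degenerate case: every sample falls in a single class
    return (observed - expected) / (1 - expected)


# Hypothetical counts: rows are reference classes, columns are predicted classes
# (for example, "crown" and "not crown").
confusion = [
    [40, 10],
    [5, 45],
]
accuracy = global_accuracy(confusion)
kappa = cohen_kappa(confusion)
```

With these illustrative counts the global accuracy is 0.85 and the Kappa index is 0.70, which shows how Kappa discounts the share of agreement that would occur by chance.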

In the research developed by Larsen et al. [63], which compared six different tree crown detection algorithms, global accuracy results ranged from

(region with high crown density) to

(tree planting region). The six algorithms used by Larsen et al. [63] were region growing, the treetop technique, template matching (but not a machine learning approach), scale-space, Markov random fields, and marked point process. Thus, our detection results are close to the best values obtained, with the difference that our results were obtained over a highly diverse tropical forest region, while those of Larsen et al. [63] were obtained over coniferous forests.

Table 8 summarizes the detection results obtained by Larsen et al. [63] in the region with high crown density, the detection result obtained by Wagner et al. [13], and the detection results of our research.

Another important result concerns the robustness of Mask R-CNN in avoiding tree crown over-segmentation and under-segmentation. Over-segmentation occurs when Mask R-CNN splits one example from the evaluation set into two or more tree crowns. Under-segmentation occurs when two or more examples from the evaluation set are detected by the neural network as just one tree crown. Of the 395 tree crowns detected by Mask R-CNN,

were intersected by one segment. In other words, they were composed of only one tree crown (one segment); hence, they did not suffer from over-segmentation (see Table 6). Only

were intersected by (that is, composed of) two or more segments (tree crowns). As shown in Figure 9, the number of segments that intersect a tree crown tends to increase with the dimensions of the crown. This occurs because the great majority of tree crowns obtained for the neural network training have a crown area smaller than 20 m². One way to circumvent this problem would be to introduce larger tree crowns into the training set. Under-segmentation did not occur in this research.
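The over- and under-segmentation checks described above can be sketched by counting intersections between reference crowns and predicted segments. The representation of crowns as sets of pixel coordinates and the function names below are assumptions made for illustration; they are not the evaluation code of this research.

```python
def segments_per_crown(reference_crowns, predicted_segments):
    """For each reference crown, count the predicted segments that share at least one pixel with it."""
    return {
        name: sum(1 for segment in predicted_segments if crown & segment)
        for name, crown in reference_crowns.items()
    }


def crowns_per_segment(reference_crowns, predicted_segments):
    """For each predicted segment (by index), count the reference crowns it intersects."""
    return [
        sum(1 for crown in reference_crowns.values() if crown & segment)
        for segment in predicted_segments
    ]


# Hypothetical pixel sets: crown_a is split into two segments, crown_b is matched by one.
reference = {
    "crown_a": {(0, 0), (0, 1), (1, 0), (1, 1)},
    "crown_b": {(5, 5), (5, 6)},
}
predicted = [
    {(0, 0), (1, 0)},
    {(0, 1), (1, 1), (0, 2)},
    {(5, 5), (5, 6), (6, 6)},
]

counts = segments_per_crown(reference, predicted)
over_segmented = [name for name, n in counts.items() if n >= 2]
under_segmented = [i for i, n in enumerate(crowns_per_segment(reference, predicted)) if n >= 2]
```

A reference crown intersected by two or more segments is flagged as over-segmented, and a segment covering two or more reference crowns is flagged as under-segmented; in this toy case only `crown_a` is flagged.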

4.2. TCDD Delineation Performance

In this research,

of tree crowns from the evaluation dataset obtained

values over

(the overall accuracy of delineation); from these tree crowns, the

,

, and

score values were

,

, and

, respectively. The values of

,

, and

score considering all tree crowns detected by Mask R-CNN were, respectively,

,

, and

. For semantic segmentation problems using deep learning, this result is considered significant. In the research developed by Weinstein et al. [44], a pipeline was proposed using hand-annotated examples and LiDAR data to train a CNN to perform TCDD. The authors evaluated three different approaches to CNN training: the first used the hand-annotated data during the training phase, the second used a self-supervised model, and the third, called the full model, combined the two previous strategies. The hand-annotated model obtained a

of

and a Precision value of

. The full model obtained a better result with

,

of

, and

, respectively, with the difference that the study site used is an open woodland located in California, USA.

The study developed by Gomes et al. [64] performed TCDD over sub-meter satellite imagery and also applied the metrics

,

, and

to evaluate the results. The algorithm proposed by [64] used a marked point process (MPP) to detect and delineate individual tree crowns, and the research obtained the average values

,

, and

for

,

, and

score, respectively, but none of the study sites used has the tree crown complexity of a tropical rainforest.

Table 9 summarizes the values of

,

, and

score from recent research that performed TCDD over sub-meter satellite imagery.
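Pixel-level delineation scores of this kind are commonly computed as in the sketch below, which assumes the standard definitions of precision, recall, F1 score, and intersection over union (IoU) applied to a predicted crown and its reference crown. The set-of-pixels representation, the function name, and the example coordinates are illustrative assumptions.

```python
def delineation_scores(predicted, reference):
    """Compare two crowns given as sets of (row, col) pixels."""
    true_positive = len(predicted & reference)
    precision = true_positive / len(predicted) if predicted else 0.0
    recall = true_positive / len(reference) if reference else 0.0
    if precision + recall == 0:
        f1 = 0.0
    else:
        f1 = 2 * precision * recall / (precision + recall)
    union = len(predicted | reference)
    iou = true_positive / union if union else 0.0
    return {"precision": precision, "recall": recall, "f1": f1, "iou": iou}


# Hypothetical crowns: the prediction is shifted one column to the left of the reference.
predicted_crown = {(0, 0), (0, 1), (1, 0), (1, 1)}
reference_crown = {(0, 1), (1, 1), (0, 2), (1, 2)}
scores = delineation_scores(predicted_crown, reference_crown)
```

In this example half of the predicted pixels fall inside the reference crown, so precision, recall, and F1 are all 0.5 while IoU is 1/3, which illustrates why IoU-based thresholds are more stringent than the other scores.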

With an overall accuracy rate of

, our method proves to be useful for performing TCDD, as recent research on TCDD algorithm development has obtained similar accuracy values: Tochon et al. [25] and Singh et al. [15] reported accuracies of

and

, respectively; Wagner et al. [13] reported

and Dalponte et al. [66] achieved

. Moreover, the relationship between the tree crown area (in pixels) of examples from the evaluation set and the tree crown area (in pixels) obtained by the proposed segmentation algorithm (Figure 10) has a value of

of

, which demonstrates the robustness of Mask R-CNN in estimating crown dimensions and hence in performing tree crown delineation.

Dalponte et al. [66] reported that the size of small treetops tends to be underestimated when optical images are applied in the TCDD process. In our study, this problem did not occur since there is no relationship between pixel deficit and tree crown area (see Figure 11). Pixel deficit occurs mainly in larger tree crowns (see Figure 11). In addition, our results show that the tree crowns with the smallest area have the best value of

; see Figure 15A.

However, the proposed algorithm tended to overestimate the tree crown area: the number of crowns with excess pixels is greater than the number with a pixel deficit (see Figure 11 and Figure 12). The overestimated areas occur mainly in tree crowns with an area of less than

m² (see Figure 12), but the crown delineation was not significantly impaired because the pixel difference between the segmented tree crowns and the evaluation tree crowns had a normal distribution with a mean of –6 pixels (see Figure 13) and an

value distribution greater than

for

of examples. In addition, the

score achieved significant values, over

.

In this research, the total number of tree crowns detected and delineated by Mask R-CNN was

, and the tree crown area ranges from 2 m

to

m

(see

Figure S1 in the Supplementary Materials). The average crown area was

m

and most crown areas ranged from

m

to 34 m

(the 5th to 95th percentiles, respectively). In the research developed by Wagner et al. [13], which implemented a traditional TCDD algorithm over the Santa Genebra Forest Reserve, only 23,278 tree crowns were detected and delineated.

4.3. Algorithm Requirements

4.3.1. Shade Effect—Limitations and How to Resolve Them

One of the main issues for the accuracy of TCDD algorithms is the shade effect [13,66], and, in our analysis, some tree crowns located in shadowed regions were ignored by the model (Figure 14). According to Dalponte et al. [66], the shadow effect in TCDD algorithms could be solved using LiDAR data. For TCDD performed by a neural network with multi-spectral images, another strategy to reduce the shadow effect could be to include labeled images of trees in shadowed regions in the training set.

4.3.2. How to Deal with the Leaf Fall Effect

The image used in this study was taken during the wet season, when all crowns are likely to be foliated. However, the Santa Genebra Reserve is a seasonal semi-deciduous forest with leaf loss ranging from

to

during the dry season [20], plus seasonal changes in spectral characteristics [67]. These characteristics could increase the presence of shadows and decrease the algorithm accuracy. As the algorithm presented in this research is based on a supervised neural network, presenting images of leafless trees during training is one way to handle leafless tree crowns and reduce algorithm errors. Moreover, with this alternative, the algorithm could operate on images taken throughout the year. Further work is needed to improve the training sample of the model and limit the effects of shade and seasonal changes in reflectance.

4.3.3. Algorithm Limitations

The prediction was made on patches of pixels arranged in a grid; adjacent patches overlap by two columns and two rows of pixels, and the predictions for the patches were then merged. After the prediction, a tree crown merging algorithm is applied to join the parts of crowns that were split by the grid.
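The grid of overlapping patches can be sketched as follows. The two-pixel overlap comes from the description above; everything else (the function names, the handling of the image border, and the toy image and patch sizes) is an assumption for illustration and does not reproduce the patch size or merging step used in this research.

```python
def tile_origins(length, tile, overlap=2):
    """Start offsets of tiles along one axis so that consecutive tiles share `overlap` pixels."""
    if tile <= overlap:
        raise ValueError("tile size must be larger than the overlap")
    step = tile - overlap
    origins = list(range(0, max(length - tile, 0) + 1, step))
    if origins[-1] + tile < length:
        origins.append(length - tile)  # extra tile so the far border is covered
    return origins


def tiles(height, width, tile, overlap=2):
    """Return (top, left, bottom, right) boxes covering the whole image."""
    return [
        (r, c, min(r + tile, height), min(c + tile, width))
        for r in tile_origins(height, tile, overlap)
        for c in tile_origins(width, tile, overlap)
    ]


# Hypothetical sizes: an 11 x 10 pixel image cut into 4 x 4 pixel patches.
patches = tiles(height=11, width=10, tile=4)
covered = {(r, c) for top, left, bottom, right in patches
           for r in range(top, bottom) for c in range(left, right)}
```

Each patch would be passed to the network separately, and crowns lying in the shared rows and columns are the ones a merging step would need to reunite.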

The grid size (

pixels) is not a requirement of the algorithm but was chosen to accommodate the limitations of the GPU hardware, mainly its memory (see the hardware configuration in Table 3). However, more recent hardware could make the prediction with a larger grid size.

Through a visual analysis of Figure 14B, it is possible to observe that the tree crown merging algorithm works correctly. However, if a different grid size is adopted, this algorithm must be modified because it was developed to solve the merging under the constraints of this research.

4.4. Algorithm’s Advance

Since the launch of Ikonos in 1999, a great number of very high-resolution optical images from different satellites (e.g., WorldView, GeoEye, and QuickBird) have become available for the study of ITCs. The development of automatic techniques to perform TCDD using imagery from these satellites has attracted attention from researchers in image processing, remote sensing, and forestry [26,64].

Most of the algorithms that perform TCDD on very high-resolution satellite imagery were developed to work on specific regions of temperate forests [26]. Typically, these algorithms use techniques such as region growing, edge detection, local maximum detection, and template matching (with a specific geometric form, such as an ellipse) [64]. The use of these algorithms over tropical forests may be very difficult, as they could need several configurations because, in these regions, the ITCs are not homogeneous (sizes and shapes differ) and their spectral characteristics and texture vary widely. However, our technique could be extended to any type of region (for example, temperate forests or other tropical forests) because it depends only on some hand-annotated ITCs to feed the synthetic image creation algorithm.

Tropical forests can be composed of a great number of tree crowns with an extensive variety of colors, sizes, and shapes, and even with very high-resolution images it is difficult (or impossible) to hand-annotate all ITCs within a specific region. For the training of a CNN-based delineation algorithm, all the ITCs within the imagery of the training set must be annotated, or else the algorithm does not converge to the desired response. Therefore, our approach, which uses the creation of synthetic images, could be an alternative to overcome the need to hand-annotate all tree crowns within a tropical forest region. Besides that, our technique proved to be useful because it achieved the score and the average value of and , respectively (considering the Mask R-CNN response with ).

4.5. Application Perspectives

An important aspect of tropical forests is that their biomass is concentrated in large trees [68]. Blanchard et al. [69] show that the relationship between diameter at breast height and crown area for individual trees within tropical regions is stable, with no significant variation. From a biomass estimation perspective, our delineation algorithm could be applied to large-scale assessments using optical imagery. However, more detailed work applying our method and validation analyses over different forested areas are still needed to support this idea. For example, field data can be used to calibrate our algorithm, and its output can then be used to estimate the biomass and biomass change of large areas using high-resolution satellite images.

Another possible application of our TCDD algorithm is species mapping. In research conducted at the same forest site by Wagner et al. [13] and Ferreira et al. [67], support vector machines (SVMs) were successfully applied after delineation to determine the species of each delineated tree crown, showing that spectral information could be used to predict the species. Mask R-CNN could also be applied to mapping species. Mask R-CNN works with two distinct modules: (i) one to determine the bounding box of the object of interest; and (ii) another to determine the pixels belonging to each object of interest (see Figure 4). These two modules are formed by a set of convolutional layers, also known as convolutional filters. In the module formed by Faster R-CNN, the convolutional filters closest to the input layer are responsible for detecting low-level features, whereas the last convolutional filters are more specialized and are responsible for detecting high-level features. Thus, an analysis of the response of the high-level filters of Mask R-CNN (mainly those that compose the Faster R-CNN) could identify the filters that are activated during prediction; the feature set obtained from these filters can be applied to perform a more specialized image segmentation, such as species classification. A review of CNN filter analysis can be found in Bilal et al. [70]. For example, after the delineation, the high-level feature information can be obtained from the high-level filters and used for species recognition, since each tree is delineated as a unique object by our algorithm, and another machine learning technique (another CNN or even Mask R-CNN) could be used to analyze that filter information and identify the tree species. However, as with the biomass estimation perspective, more detailed work on this issue is needed to support this assumption.

Another potential application of our CNN-based TCDD algorithm is in forest dynamics, specifically tree mortality and logging detection (either legal or illegal). As an example, Dalagnol et al. [71] demonstrated that multi-temporal very high-resolution optical imagery (e.g., WV-2 and GeoEye-1) allows the semi-automatic detection of individual tree crown loss with moderate accuracy (>

). However, their tree loss detection was based on applying a simple watershed-based method for TCDD and analyzing the spectral difference between the images from the two dates. Therefore, if such a study used an improved TCDD method such as ours, it could produce more reliable estimates of spectral difference between images because of the improved tree detection and segmentation and the minimal inclusion of shadows in the tree crown segments. Moreover, a more precise TCDD would even allow a direct spatial comparison between dates, which was not conducted in that study, by comparing which objects are present or absent between dates.