Development and Validation of a Novel Methodological Pipeline to Integrate Neuroimaging and Photogrammetry for Immersive 3D Cadaveric Neurosurgical Simulation

- 1Department of Neurosurgery, Barrow Neurological Institute, St. Joseph’s Hospital and Medical Center, Phoenix, Arizona

- 2Department of Neurosurgery, Hacettepe University Faculty of Medicine, Ankara, Turkey

- 3BTech Innovation, METU Technopark, Ankara, Turkey

- 4Department of Neurosurgery, University of Health Sciences, Sisli Hamidiye Etfal Training and Research Hospital, Istanbul, Turkey

- 5Department of Anatomy, Hacettepe University Faculty of Medicine, Ankara, Turkey

Background: Visualizing and comprehending 3-dimensional (3D) neuroanatomy is challenging. Cadaver dissection is limited by low availability, high cost, and the need for specialized facilities. New technologies, including 3D rendering of neuroimaging, 3D pictures, and 3D videos, are filling this gap and facilitating learning, but they also have limitations. This proof-of-concept study explored the feasibility of combining the spatial accuracy of 3D reconstructed neuroimaging data with realistic texture and fine anatomical details from 3D photogrammetry to create high-fidelity cadaveric neurosurgical simulations.

Methods: Four fixed and injected cadaver heads underwent neuroimaging. To create 3D virtual models, surfaces were rendered using magnetic resonance imaging (MRI) and computed tomography (CT) scans, and segmented anatomical structures were created. A stepwise pterional craniotomy procedure was performed with synchronous neuronavigation and photogrammetry data collection. All points acquired in 3D navigational space were imported and registered in a 3D virtual model space. A novel machine learning-assisted monocular-depth estimation tool was used to create 3D reconstructions of 2-dimensional (2D) photographs. Depth maps were converted into 3D mesh geometry, which was merged with the 3D virtual model’s brain surface anatomy to test its accuracy. Quantitative measurements were used to validate the spatial accuracy of 3D reconstructions of different techniques.

Results: Successful multilayered 3D virtual models were created using volumetric neuroimaging data. The monocular-depth estimation technique created qualitatively accurate 3D representations of photographs. When the 2 models were merged, 63% of surface maps were perfectly matched (mean [SD] deviation 0.7 ± 1.9 mm; range −7 to 7 mm). Maximal distortions were observed at the epicenter and toward the edges of the imaged surfaces. Virtual 3D models provided accurate virtual measurements (margin of error <1.5 mm) as validated by cross-measurements performed in a real-world setting.

Conclusion: The novel technique of co-registering neuroimaging and photogrammetry-based 3D models can (1) substantially supplement anatomical knowledge by adding detail and texture to 3D virtual models, (2) meaningfully improve the spatial accuracy of 3D photogrammetry, (3) allow for accurate quantitative measurements without the need for actual dissection, (4) digitalize the complete surface anatomy of a cadaver, and (5) be used in realistic surgical simulations to improve neurosurgical education.

Introduction

One of the most challenging aspects of neurosurgery is visualizing and comprehending 3-dimensional (3D) neuroanatomy. The acquisition of this knowledge and its successful clinical application require years of practice in the operating room and anatomical dissection laboratory. However, access to both of these training settings may be limited by institutional resources. Furthermore, residents’ work-hour restrictions, decreases in cadaver availability, and the interruption of hands-on training courses as a result of the COVID-19 pandemic have increased the difficulty of acquiring appropriate anatomical training for neurosurgeons. At the same time, the advent of new technology that enables 3D rendering of neuroimaging has allowed the development of 3D virtual models that can be used to augment neurosurgical education. Such tools have been shown to improve performance and have become a complementary part of neurosurgical education when access to cadaveric specimens is restricted; they will likely become more integrated into, and have a greater impact on, neurosurgical training (1–4).

New advances in imaging, computer vision, image-processing technologies, and multidimensional rendering of neuroanatomical models have introduced extended-reality educational tools, such as virtual and augmented reality, into neurosurgeon training (2–6). Photogrammetry and related technologies may complement advanced tomographic neuroimaging studies (e.g., magnetic resonance imaging [MRI] and computed tomography [CT]) by providing detailed surface information of neuroanatomical structures. Stereoscopy, which is commonly used for neurosurgical education (7–9), is the process by which two 2-dimensional (2D) photographs of the same object taken at slightly different angles are viewed together, creating an impression of depth and solidity. Photogrammetry is a technique in which 2D photographs of an object are taken at varying angles (up to 360°) and then overlaid using computer software to generate a 3D reconstruction (10). In a previous study (1), a 3D model of a real cadaveric brain specimen was created using photogrammetry and 360° scanning. Although this technique is very practical and useful, it is limited to objects that can be scanned in 360°. Unfortunately, in most surgical scenarios, the surgeon or trainee can only visualize a portion of the surgical anatomy, usually through a narrow corridor. Thus, more practical photogrammetric approaches are needed to overcome this barrier. Recently, an artificial intelligence (AI)-based tool was developed to estimate monocular depth, which potentially makes it possible to convert 2D photographs into 3D images. We believe that this tool can be used to overcome these limitations and bridge the gap between neuroimaging and photogrammetry scanning technologies.

In this proof-of-concept study, we brought together the spatial accuracy of 3D reconstruction of cadaveric neuroimaging data with the realistic texture of 3D photogrammetry to create high-fidelity neurosurgical simulations for education and surgical planning. The method sought to merge the spatial accuracy of medical MRI and CT images with 3D textural features obtained using advanced photogrammetry techniques. We hypothesized that current imaging, photography, computer vision, and rendering technologies could be effectively combined to maximize the use of cadaveric specimens for education, surgical planning, and research. This innovative approach aimed to not only enhance neurosurgical anatomy training but also create a new digital interactive cadaveric imaging database for future anatomical research. If this attempt is successful, a cadaveric specimen would no longer have to be discarded after fixation and dissection. A cadaver could instead be digitalized and passed along from generation to generation in the same condition in which it was first dissected. Herein, we describe and validate our novel methodological paradigm to create qualitatively and quantitatively accurate 3D models.

Materials and Methods

Study Design

No institutional review board approval was required for this study. This proof-of-concept study included two phases: development and validation. In the first phase, a methodological pipeline was developed to combine various imaging, modeling, and visualization techniques to create integrated, multilayered, immersive 3D models for cadaveric research and education (Figure 1). The second phase validated this novel methodological approach by measuring the agreement between surface maps. Four embalmed and injected cadaver heads were used for method development (n = 3) and quantitative validation (n = 1). We initially used 3 heads to optimize the methodological pipeline. Once the pipeline was optimized, we applied it to produce the virtual model in 1 head for this proof-of-concept study. Importantly, all heads used in this study were of the highest anatomical quality.

Figure 1. The methodological pipeline to fully digitalize the cadavers. Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

Neuroimaging

All cadaver heads underwent MRI and CT scans. A 3T MR-system machine (Ingenia, Philips Healthcare, Best, Netherlands) was used for volumetric imaging (1.0 mm), with the 3DT1 MPRAGE and 3DT2 FSE sequences. A LightSpeed VCT 64-slice CT scanner (General Electric Company, Boston, MA, USA) was used for thin-cut (0.65-mm) axial CT images.

3D Rendering

All 3D planning and modeling studies were performed with Mimics Innovation Suite 22.0 Software (Materialise, Leuven, Belgium). DICOM files (in all axial, coronal, and sagittal planes) were imported into Mimics. The masking process was undertaken using Hounsfield unit (HU) values on 2D radiological images, and segmentation of various anatomical structures was performed according to defined anatomical borders visualized on MRI scans. Bone was segmented based on CT scans, whereas MRI was used for all other structures (i.e., soft tissue). The 2 imaging modalities were merged and aligned with the Align Global Registration module.
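The HU-based masking step can be illustrated with a minimal sketch. This is not the Mimics workflow itself: it assumes the CT volume is already available as a NumPy array of HU values, and the function name `threshold_mask` and the 300-HU lower bound are hypothetical choices made only for illustration.

```python
import numpy as np

def threshold_mask(ct_hu, lower_hu, upper_hu=None):
    """Return a boolean mask of voxels whose HU value lies in [lower_hu, upper_hu]."""
    mask = ct_hu >= lower_hu
    if upper_hu is not None:
        mask &= ct_hu <= upper_hu
    return mask

# Synthetic 3 x 3 x 3 "CT volume": soft tissue (~40 HU) with one bone-like slab (~1000 HU).
volume = np.full((3, 3, 3), 40.0)
volume[1, :, :] = 1000.0

BONE_LOWER_HU = 300.0  # illustrative assumption, not the threshold used in the study
bone_mask = threshold_mask(volume, BONE_LOWER_HU)
bone_voxel_count = int(bone_mask.sum())
```

In practice, a threshold mask of this kind is only a starting point; the segmentation described above was refined against anatomical borders on MRI.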

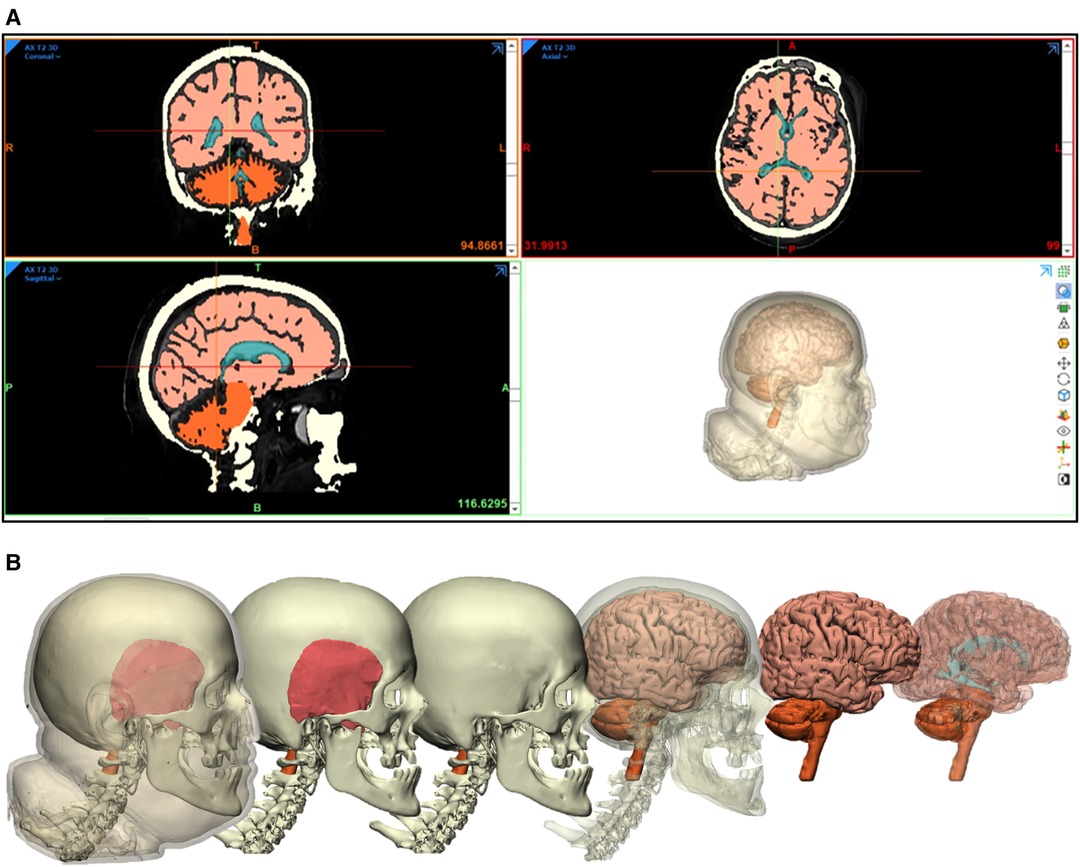

Three-dimensional surface-rendered models of different anatomical structures were created. The design module feature (3-matic 14.0, Materialise, Leuven, Belgium) was used for fine-tuning and model details. Segmented structures included the skin, temporal muscle, bone, cerebrum, cerebellum, brainstem, and ventricles (Figure 2).

Figure 2. Segmentation and rendering process. (A) Neuroimaging data used for 3D rendering of segmented anatomical structures. (B) 3D rendering of segmented anatomical structures. Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

Pterional Craniotomy Model

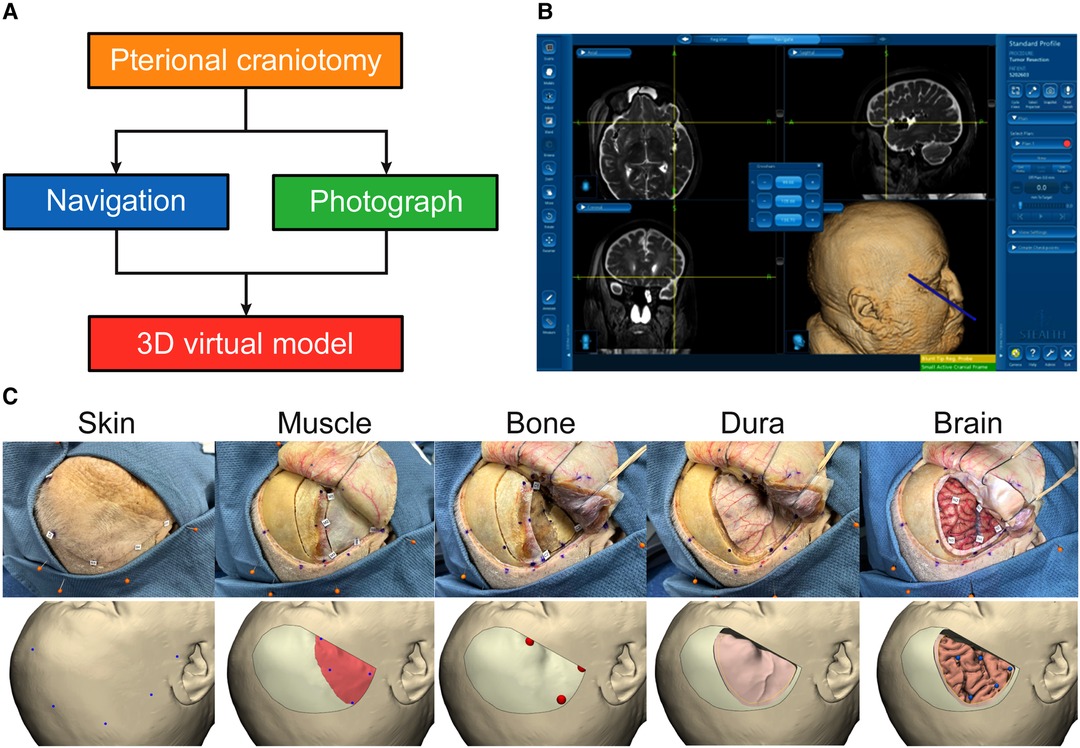

To create a 3D pterional craniotomy model, neuronavigation used during a stepwise surgical procedure was combined with photogrammetry (Figure 3A).

Figure 3. Creation of virtual pterional craniotomy model. (A) Steps to create 3D pterional craniotomy model. (B) Synchronous data acquisition through neuronavigation. (C) Stepwise pterional craniotomy with images in the real-world setting (top panels) and 3D virtual model (bottom panels). Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

Surgical Procedure

A cadaver head was placed and fixed in the Mayfield 3-pin head holder. Neuronavigation registration was performed using surface landmarks (Figure 3B). A pterional craniotomy was performed in a stepwise manner. Surgery was divided into 5 steps: (1) skin incision and dissection, (2) muscle incision and dissection, (3) burr holes and craniotomy, (4) durotomy, and (5) the intradural phase (exposure of brain) (Figure 3C).

Neuronavigation

Medtronic StealthStation S7 (Medtronic PLC, Minneapolis, MN, USA) was used for neuronavigation. Volumetric MRI scans were imported. Registration was performed using surface landmarks. Cartesian coordinates (x, y, z) were acquired and recorded for all registration points and assigned points in 3D space using the navigation probe. Each step of the surgical procedure included the acquisition of 3 to 8 different points corresponding to anatomical structures in the surgical field. For each point, screenshots of the navigation system were obtained verifying and illustrating the corresponding coordinates on axial, coronal, and sagittal planes as well as the 3D reconstructed image on the neuronavigation system (Figure 3B).

Photography

All stages of surgery were photographed with a professional camera (Canon EOS 5DS R, Canon Inc., Ota City, Tokyo, Japan) and a smartphone (iPhone 12, Apple Inc., Cupertino, CA, USA). Each acquired point in the surgical field was tagged with a 5 × 5-mm paper marker indicating an alphabetic-numeric code (e.g., S1 for the first point of skin incision) and photographed for further cross-validation.

Importing Neuronavigation Data to the 3D-Rendered Virtual Model

After the surgery was performed and the Cartesian coordinates for each point were obtained and verified with navigation, all points were imported into a 3D virtual model environment. The original landmarks for neuronavigation registration were used to register the point cloud of the 3D virtual model space. Integrating neuronavigation coordinates and photography data allowed surgical steps to be simulated on the 3D model. All pertinent surgical steps and layers of a pterional craniotomy were included, such as skin incision, muscle incision, burr holes and craniotomy, dura incision, and brain exposure (Figure 3C). Points relevant to each step were added to the 3D virtual environment.
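One common way to carry out this kind of landmark-based registration is a least-squares rigid fit (the Kabsch method). The source does not state which algorithm the software used, so the sketch below is an assumption; the function names and the synthetic landmark coordinates are hypothetical.

```python
import numpy as np

def rigid_register(source, target):
    """Least-squares rigid transform (R, t) mapping source landmarks onto target landmarks."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    src_centroid = source.mean(axis=0)
    tgt_centroid = target.mean(axis=0)
    # Cross-covariance of the centered landmark sets.
    H = (source - src_centroid).T @ (target - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection so that R is a proper rotation.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_centroid - R @ src_centroid
    return R, t

def apply_transform(points, R, t):
    """Map (N, 3) points with rotation R and translation t."""
    return np.asarray(points, dtype=float) @ R.T + t

# Synthetic landmarks (mm) in navigation space and the same landmarks in model space.
nav_landmarks = np.array([[0, 0, 0], [80, 0, 0], [0, 60, 0], [0, 0, 40], [50, 50, 10]], dtype=float)
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta),  np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([10.0, -5.0, 20.0])
model_landmarks = nav_landmarks @ R_true.T + t_true

R, t = rigid_register(nav_landmarks, model_landmarks)
# Residual at each landmark after registration (fiducial registration error).
fre = np.linalg.norm(apply_transform(nav_landmarks, R, t) - model_landmarks, axis=1)
# Any navigation-space point acquired during surgery can now be mapped into model space.
model_points = apply_transform([[20.0, 30.0, 5.0]], R, t)
```

With noise-free synthetic landmarks the residuals are numerically zero; with real landmarks, the residual at each fiducial gives a first indication of registration quality.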

Machine Learning–Assisted 3D Reconstruction of 2D Photographs

Traditional photogrammetry tools use multiple photographs of an object to create 3D representations. In contrast, a novel AI-based depth-estimation tool, developed to produce accurate 3D reconstructions of single 2D photographs, provides a new form of photogrammetry. Intel ISL MiDaS v2.1 (Intel Labs, Santa Clara, CA, USA), a neural network–based platform for robust depth estimation from 2D images, was used to create depth maps. Depth maps are images in which every pixel is assigned an intensity value on a specific color-range scale according to its location on the z-axis (i.e., depth axis). The open-source Open3D library (11) was used to create a point cloud from the depth maps. MeshLab software (Visual Computing Lab, Pisa, Italy) was used for quality improvement and simplification of 3D modeling and surface reconstruction. The Sketchfab (Epic Games, Cary, NC, USA) web platform was used to visualize and share the 3D reconstructed photographic images.
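The conversion of a depth map into a point cloud can be sketched with a simple pinhole-camera back-projection. The sketch uses plain NumPy rather than Open3D calls, and it rests on assumptions not stated in the source: that the depth map has already been rescaled to millimeters (monocular networks typically return relative rather than metric depth) and that the camera intrinsics `fx`, `fy`, `cx`, and `cy` are known. All names and values are hypothetical.

```python
import numpy as np

def depth_map_to_points(depth, fx, fy, cx, cy):
    """Back-project an (H, W) depth map into an (H*W, 3) point cloud with a pinhole model."""
    h, w = depth.shape
    # u holds the column index and v the row index of every pixel.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    z = depth.astype(float)
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=-1).reshape(-1, 3)

# Toy example: a flat 4 x 4 surface 100 mm from the camera (assumed intrinsics).
depth_mm = np.full((4, 4), 100.0)
points = depth_map_to_points(depth_mm, fx=2.0, fy=2.0, cx=1.5, cy=1.5)
```

Each row of `points` is an (x, y, z) coordinate in the camera frame; a surface-reconstruction step would then turn such a cloud into mesh geometry.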

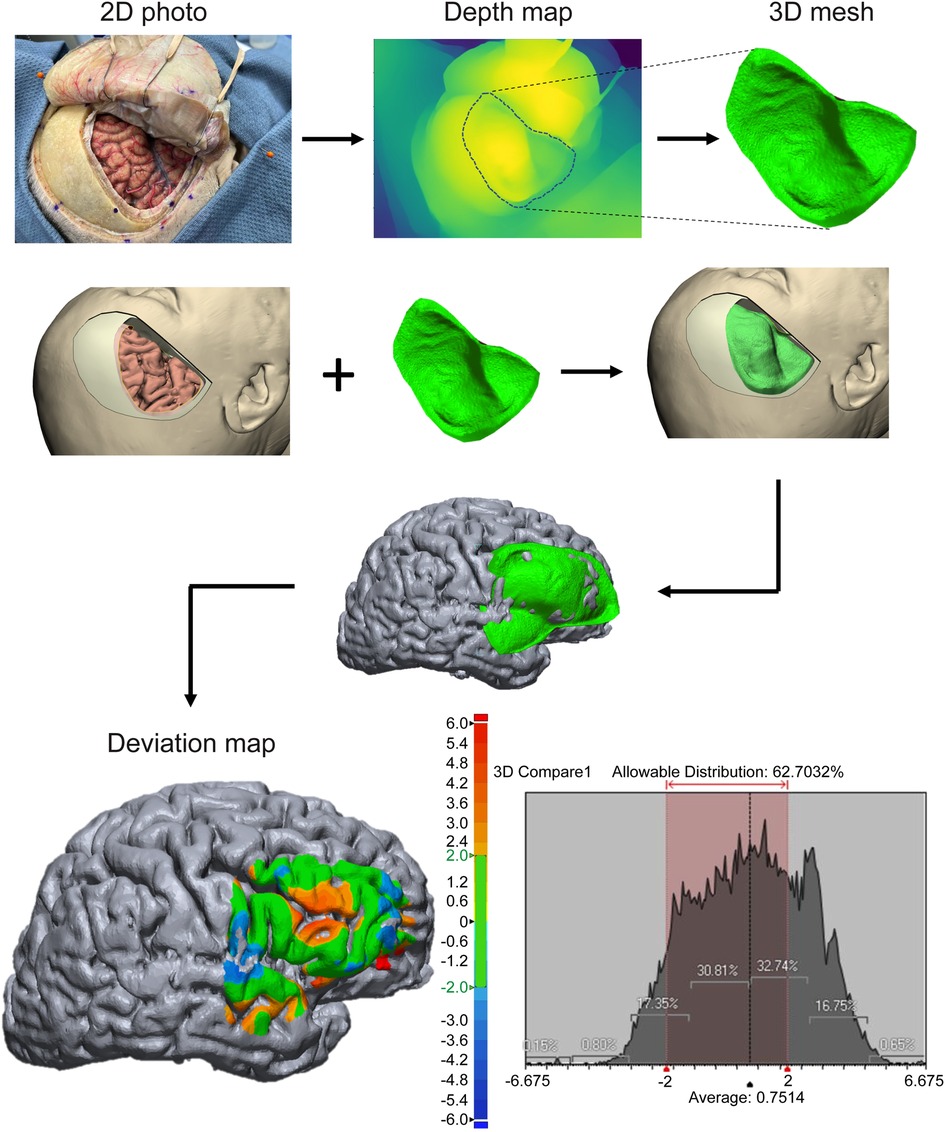

Quantitative Validation Studies

In theory, neuronavigation and imaging-based 3D modeling should demonstrate inherently excellent spatial accuracy because of their reliance on high-resolution volumetric MRI and CT imaging. However, the validity of 3D reconstructed photographs has not been reported previously in the neuroanatomical literature. Therefore, we used the 3D space of the image-based reconstructed model as the ground truth and validation for other modalities (e.g., neuronavigation coordinates of the surgical field and depth maps of 3D reconstructed images) (Figure 4). In other words, the 3D reconstructed surface rendered from standard medical imaging served as the true reference values.

Figure 4. Validation of monocular-depth estimation technique using the 3D virtual model. A single operative photograph was used to create a depth map and corresponding 3D mesh. This mesh was then imported into the 3D virtual model space as an object. Two models (3D mesh of the photographic depth map and 3D virtual model) were aligned, and the deviation map between 2 surfaces was created. The deviation map shows that almost two-thirds of the entire surface area matches almost perfectly between the 2 models (within 2-mm limits). The epicenter and edges show the highest degrees of distortion. Colored scale bar indicates the degree of distortion in millimeters. Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

Cross-Validation Studies

Pairwise comparisons of the two modalities were used for cross-validation. Geomagic software (3D Systems, Rock Hill, SC, USA) was used to quantitatively measure how well two surfaces (neuroimaging-based 3D model vs. depth map of 3D rendered photograph) matched. The software also produced a color-scale deviation map (Figure 4). The use of neuronavigation also served as a valuable conduit to accurately rebuild the “invisible” anatomical details on the 3D virtual model (e.g., superficial sylvian vein and cortical arteries) by converting qualitative information of photography into quantitative data.
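A surface-to-surface comparison of this kind can be approximated, under simplifying assumptions, by nearest-neighbor distances between two point sets. The sketch below is not the Geomagic algorithm: it returns unsigned distances, whereas a deviation map of the kind shown in Figure 4 is signed, and it uses a brute-force search that suits only small point sets. Function names and sample coordinates are hypothetical.

```python
import numpy as np

def nearest_distances(test_points, reference_points):
    """Unsigned distance from each test point to its nearest reference point (brute force)."""
    diff = test_points[:, None, :] - reference_points[None, :, :]
    return np.linalg.norm(diff, axis=2).min(axis=1)

def deviation_summary(deviations, tolerance_mm=2.0):
    """Mean, SD, range, and the fraction of deviations inside the tolerance."""
    deviations = np.asarray(deviations, dtype=float)
    return {
        "mean_mm": deviations.mean(),
        "sd_mm": deviations.std(ddof=1),
        "min_mm": deviations.min(),
        "max_mm": deviations.max(),
        "fraction_within_tolerance": np.mean(np.abs(deviations) < tolerance_mm),
    }

# Reference surface: a flat 5 x 5 grid with 1-mm spacing at z = 0.
xs, ys = np.meshgrid(np.arange(5.0), np.arange(5.0))
reference = np.column_stack([xs.ravel(), ys.ravel(), np.zeros(xs.size)])
# Test surface: four points lying 0.5, 1.0, 3.0, and 1.5 mm above grid nodes.
test = np.array([[1.0, 1.0, 0.5], [2.0, 2.0, 1.0], [3.0, 3.0, 3.0], [1.0, 3.0, 1.5]])

distances = nearest_distances(test, reference)
summary = deviation_summary(distances)
```

The 2-mm tolerance mirrors the matching criterion used in this study; the fraction of points inside it plays the role of the matched-surface percentage.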

Results

Feasibility of the Methodological Pipeline

A variety of alternative photogrammetry and 3D-rendering methods were tested. Figure 1 demonstrates our proposed pipeline, which was found to be feasible and effective after a few iterations on different cadaver heads during the development phase.

Generation of Neuroimaging-Based 3D Virtual Models

Three-dimensional virtual models were successfully reconstructed and segmented using volumetric imaging data (Figure 2). Highly accurate and realistic skin, bone, dura, and brain surface models were created. The sulcal and gyral anatomy of the brain models mirrors that seen in photographic views.

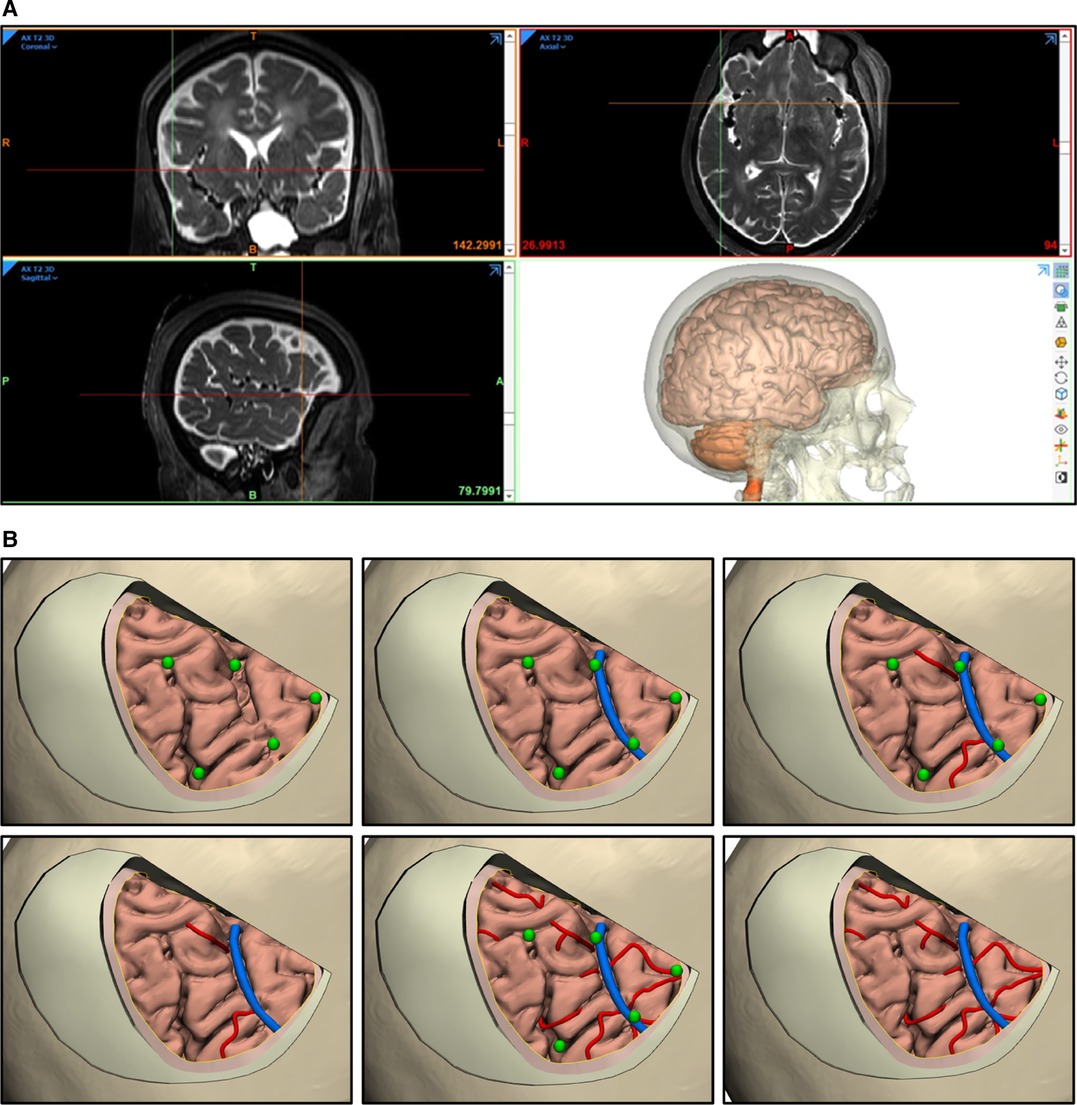

Unlike in vivo neuroimaging, cadaver neuroimaging lacks a clear visualization of vascular structures; therefore, this 3D virtual model does not show vasculature. However, the casts of injected major arteries and veins can still be traced and rendered manually with the software, or a contrast medium can be used for injection if desired. Alternatively, microvasculature or other fine anatomical details can be added manually by using photographic information (Figure 5).

Figure 5. Adding vasculature to 3D virtual models. (A) Vascular structures are visible on MRI (coronal, top left; axial, top right; sagittal, bottom left). However, the delineation of vascular structures in 3D models (bottom right) is not practical or accurate unless a contrast medium is used before the vessels are injected. (B) An alternative method is to artificially add or draw arteries (red lines) or veins (blue lines) using certain anatomical landmarks acquired via neuronavigation and photography (green dots). Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

Cross-Validation of Neuronavigation with a Neuroimaging-Based 3D Model

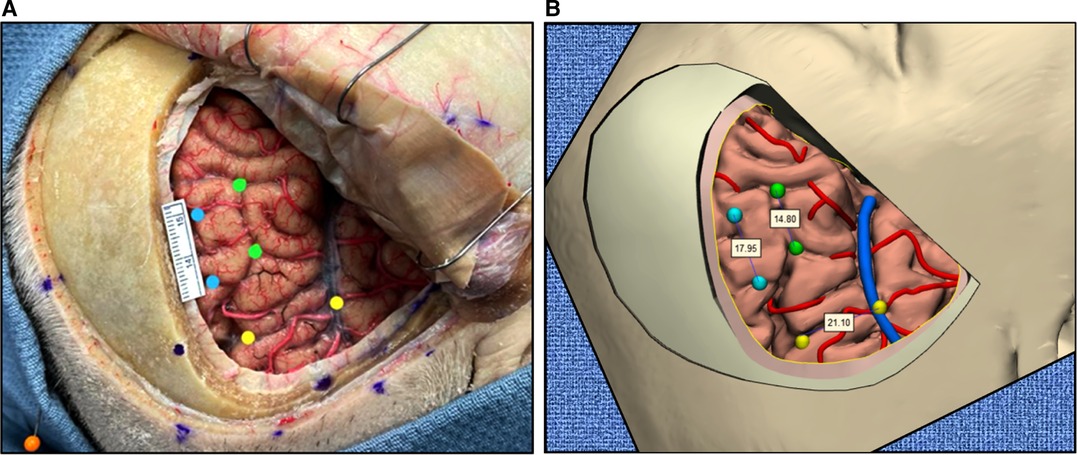

After we constructed an imaging-based 3D model, we assessed the accuracy and validity of the model using the neuronavigation tool in the real-world setting. Co-registration of the 3D space from the neuronavigation system with the 3D virtual model allowed us to quantitatively measure the distortions arising from the surgical procedures on a cadaver (i.e., brain shifting). We measured the differences between the “true” model (image-based 3D reconstruction) and the measured (navigation) coordinates of prespecified points at each step of the surgical procedure. Overall, mean distortion was calculated as 3.3 ± 1.5 mm (range, 0–7 mm) for all 29 points acquired during the surgical procedure. Measurements in the 3D virtual model perfectly matched (defined as deviation <2 mm) those of real measurements on cadaveric specimens (Figure 6).

Figure 6. Measurements in the real-world setting using a photograph (A) correspond to the 3D virtual model (B). Ruler and values shown are millimeters. Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

Validation of 3D Images Reconstructed from Single 2D Photograph

The validity of machine learning–based 3D reconstruction (a novel form of photogrammetry, using monocular-depth estimation) of a single 2D photograph was assessed. The first validation step involved analyzing the depth map (seen in Figure 4). The results showed that the depth map accurately represented relative depths of anatomical structures in the surgical field.

The second step was a quantitative analysis of the same depth map with reference to the 3D model’s cortical surface exposed through the pterional craniotomy. The mean (SD) distortion was 0.7 ± 1.9 mm (range, −6.7 to 6.7 mm). Overall, within the craniotomy window, 63% of surface maps were perfectly matched (deviation <2 mm). Notably, the central area (around the inferior frontal sulcus) showed the largest distortion, whereas the match at the peripheral cortical surface was nearly perfect (<2 mm distortions). However, distortions increased again toward the edges.

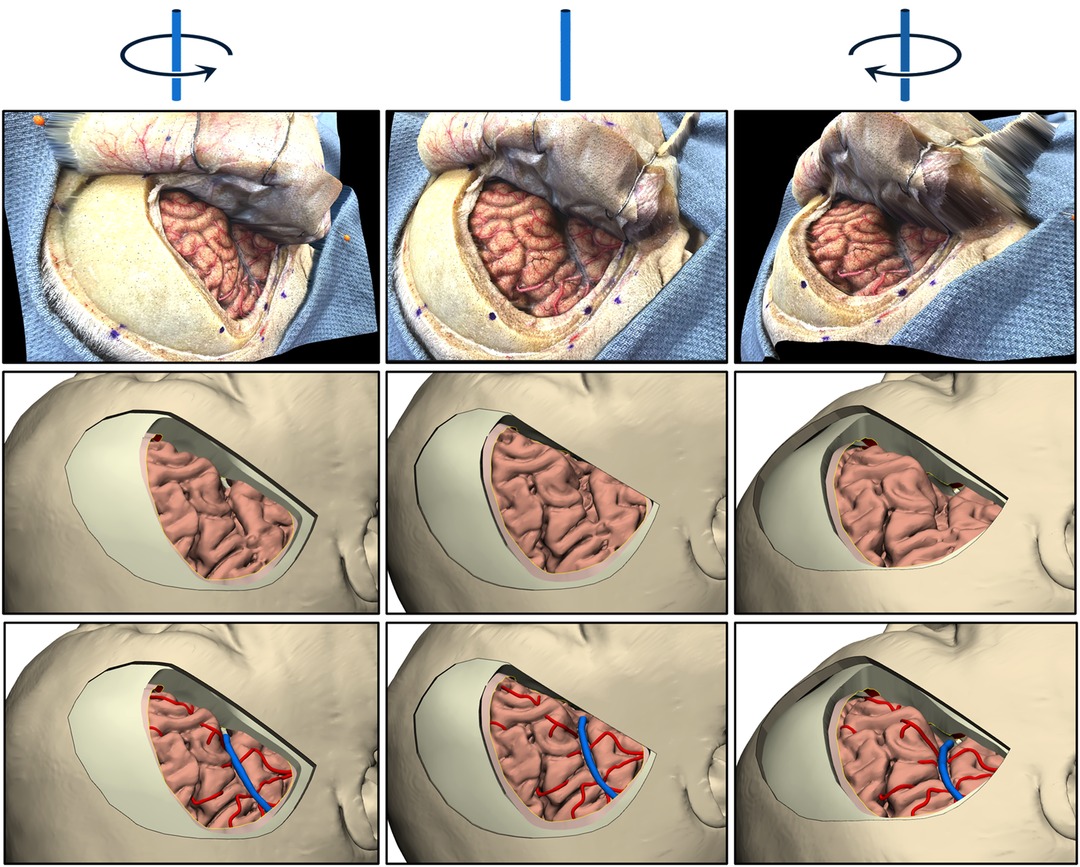

The third step was qualitative validation. Real-time inspection of the 3D images created by texture mapping yielded accurate 3D perception in a wide range (up to 45° in all directions from the perpendicular axis of neutral image). Furthermore, qualitative validation was demonstrated objectively by comparing the same angle visualization of both the 3D photograph and the 3D virtual model (Figure 7).

Figure 7. Three rotations (columns) of real-world and two 3D models. Cadaveric photographs are shown in the top row. Both the 3D images obtained from a single 2D photograph via monocular-depth estimation technique (middle row) and the 3D virtual model generated from neuroimaging data (bottom row) can be rotated and matched in 3D space. Used with permission from Barrow Neurological Institute, Phoenix, Arizona.

Feasibility of Multimodality Integration

Our findings support the feasibility and applicability of the virtual models to be used as simulation tools for education, research, and surgical planning. The capability of supplementing the 3D model with anatomical details invisible to neuroimaging is demonstrated in Figures 5–7.

Discussion

In this study, we introduced and validated a novel methodological pipeline that integrates various imaging and modeling technologies to create an immersive cadaveric simulation. We combined cadaveric dissections, virtual reality, surgical simulation, and postdissection analysis and quantification. Our findings suggest the following. First, neuroimaging and 3D modeling technologies can successfully digitalize precious cadaveric materials with a breadth of volumetric information and segmentation options. Second, various forms of photogrammetry technology can supplement these 3D models with more realistic surface information, such as fine anatomical details, color, and texture. Third, neuronavigation can be used as a medium to connect, communicate, calibrate, and eventually combine these two 3D technologies. Lastly, all these technologies could potentially pave the way for more integrated and immersive neurosurgical simulations. To the best of our knowledge, ours is the first neuroanatomical study to show the feasibility of combining the spatial accuracy provided by standard medical imaging (i.e., CT and MRI) with overlaid realistic textural features to a 3D reconstructed model.

Cadaveric Dissection as a Training Tool

Historically, cadavers have been considered the best material to use for studying human anatomy from both training and research perspectives. Also, cadaveric dissection is the gold standard for training neurosurgeons because it allows surgical techniques to be demonstrated, provides a unique experience with a wide range of sensory inputs, and creates necessary surgical skills (12, 13). These skills include depth perception, 2D and 3D vision orientation, sensitive movements in limited environments (14), bimanual coordination, and hand-eye coordination (15, 16). In fields such as chess, music, sports, and mathematics, studies on human performance have shown that attaining an expert performance level requires about 10,000 hours of focused practice (17, 18). To obtain 10,000 hours of practice in a certain field, a person would have to dedicate 5 hours/day, 6 days/week, 48 weeks/year for 6.9 years (18). This equates to the time needed to graduate from a neurosurgical residency in the United States (7 years). A significant percentage of hours can be efficiently spent in a cadaveric dissection laboratory. However, access to such laboratories is limited due to a global shortage of cadavers, increasing costs of cadaver materials, and fewer dedicated anatomy laboratories, as well as the recent coronavirus pandemic. All these factors have contributed to decreased availability and use of cadavers worldwide (19–23). As a result, these recent limitations have paralleled the rapid adoption of new technologies to replace cadavers in anatomical training and international collaborations to maximize the use of the existing cadaver supply (19, 20, 24–26).
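The arithmetic behind the 6.9-year estimate can be checked directly from the figures quoted above:

$$
5\ \tfrac{\text{hours}}{\text{day}} \times 6\ \tfrac{\text{days}}{\text{week}} \times 48\ \tfrac{\text{weeks}}{\text{year}} = 1440\ \tfrac{\text{hours}}{\text{year}},
\qquad
\frac{10{,}000\ \text{hours}}{1440\ \text{hours/year}} \approx 6.9\ \text{years}.
$$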

Virtual 3D Models and Simulators for Training

Three-dimensional virtual models are not unique to the field of neurosurgery or medicine. Use of these models began in the 1950s and 1960s as computer-aided design systems for military simulators and the aerospace and automobile industries (27–29). Later, mathematical algorithms were created to define virtual 3D solid models (27, 30). Now, with the emergence of advanced neuroimaging modalities and volumetric reconstruction, the means of teaching neurosurgical anatomy has been markedly influenced, opening the possibility for learning anatomy and simulating surgical interventions through virtual reality (13, 31, 32).

Neurosurgical education and training have been gradually moving toward virtual reality (5, 33). The COVID-19 pandemic further accelerated this trend. In fact, distant (i.e., remote) learning has become the mainstay of education during the pandemic (34). As a result, we believe now is the most opportune time to supplement neurosurgical training worldwide with 3D virtual reality.

The process of creating 3D virtual models in medicine requires the segmentation of CT or MRI, then volume rendering (35), which produces surface models with high spatial resolution. This technique is currently useful for identifying a pathologic location and surgical planning; however, the current 3D models lack color, texture, and fine anatomical details, such as fine blood vessels, cranial nerves, and arachnoid membranes, which cannot be reproduced (5). The next step in the evolution of 3D anatomical and virtual simulations is the development of models that are both spatially accurate and have fine anatomical detail and realistic textures.

Photogrammetry as a Supplement to Anatomy Training

Neuroimaging-based 3D reconstruction models capture anatomical features with high spatial accuracy, but as noted above, they lack fine anatomical details and realistic textures. A possible solution to this limitation is to incorporate photogrammetry into building the models. Photogrammetry creates 3D representations using multiple 2D photographs of an object and has long been used in anatomy to bring 3D perception to 2D photographs or to create virtual 3D representations of anatomical specimens (1, 5, 10, 36–38). De Benedictis et al. (36) reported quantitative validation of photogrammetry for the study of white matter connectivity of the human brain. Although their study used a relatively sophisticated setup, our group recently showed the applicability of a freely available 360° photogrammetry tool for neuroanatomy studies (1). In addition, Roh et al. (5) also incorporated photogrammetry into a virtual 3D environment to create realistic texture details of cadaveric brain specimens, but their study lacked quantitative validity. Nonetheless, these studies clearly show the potential of this rapidly developing technology in enhancing neurosurgical and neuroanatomy training.

Monocular-Depth Estimation as a Novel Form of Photogrammetry

Although photogrammetric technology has advanced tremendously in recent years, the various tools that are available continue to involve very complex hardware or software. In contrast, the monocular-depth estimation tool detailed in this study (Intel ISL MiDaS v2.1), which can also be regarded as a novel form of photogrammetry, may represent a substantial advance. The technique provides spatially accurate features by AI-based and machine learning–guided 3D reconstruction of a single 2D image. When this tool is trained with a massive database of 3D films, it outperforms competing methods across diverse data sets (39). Indeed, we have demonstrated that this tool has a surprisingly high accuracy in estimating depth, even on a complex surface like the human brain. The potential for applying this novel photogrammetry tool in neuroanatomy training and research is enormous, and researchers will likely explore this technology in the near future.

Integrating Various Technologies for Immersive Simulations

All the technologies mentioned in this study are valuable instruments on their own, but each has its own limitations. Nevertheless, each technology can potentially complement another’s weaknesses; therefore, when combined, they may offer an immersive, realistic simulation that is both quantitatively and qualitatively accurate. Thus, we believe the proposed methodological pipeline can be used not only for cadaveric dissections but also for real surgical scenarios. Images taken from surgical microscopes can be exported and implemented in our proposed pipeline. Some microscopes even support robotic and tracked controlled movements that can acquire stepwise stereoscopic imaging to postprocess into a 3D environment showing real tissue color, texture, and surgical approach anatomy (40). In addition, one can supplement the fine anatomical details that are usually missed when reconstructing standard MRI or CT images. This refinement is achieved using neuronavigation as a registration tool during cadaveric dissections and pinpointing the exact location of a structure that is “invisible” on neuroimaging.

Overall, we believe our proposed integrative approach can maximize the utility of cadavers and offers endless virtual dissection possibilities. In other words, a cadaver intended for dissection can be digitalized, so that it can remain an educational instrument forever rather than simply being destroyed when it can no longer be used. Physical models provide the advantage of real anatomical substances, but they are subject to decay and manipulation and require preservation and constant maintenance. In contrast, virtual models can be shared electronically, yield joint diagnostics and collective expertise, and eliminate geographical limitations (27). High-quality neurosurgical training and education can be continued in the comfort of a training neurosurgeon’s home or office rather than a cadaveric dissection laboratory.

Supplementing 3D Virtual Models with Cadaveric Dissection and Live Surgery

Given the proposed pipeline, in what ways can 3D virtual models supplement neurosurgical training through cadaveric dissection and live surgery in real patients? In theory, the 3D methods can apply to both cadaveric heads and live heads, but one is inherently more pragmatic. The construction of an accurate and realistic 3D virtual model requires time, physical (i.e., surgical) dissection, and multiple measurements of different anatomical structures using neuronavigation. Unless an anatomical structure is related to the underlying pathology of a live patient, it will not be exposed in surgery, and under no circumstance will unnecessary dissection be performed on a live patient to expose distant structures. In addition, live patients will have blood and cerebrospinal fluid (CSF) in the surgical field, brain shift due to CSF removal, and brain pulsations that can interfere with the quality and precision of intraoperative imaging and registration. Fixed, injected cadaveric heads do not have limitations created by the real surgical environment. Working with cadaveric heads creates no immediate time limit. Cadavers can be dissected to show all desired anatomical structures. Also, using a cadaver creates no interference in measurements or registration caused by blood, CSF, brain shift, or pulsations as in live surgery.

Cadaveric tissue also has inherent limitations. Digitalizing a cadaver head before the irreversible physical dissection process allows for its repeated use via virtual dissections. This digital copy also provides an opportunity to continually improve the virtual model by acquiring cadaveric photos, even in standard 2D format with smartphones, professional cameras, or microscopes. While the actual cadaveric specimen is damaged from repeated use over time, it will give rise to a more complete and enriched digital cadaveric model dataset. Having such a dataset of cadaveric images and models will also enable novel anatomical measurements, simulation of different surgical approaches, and virtual dissections.

Although the purpose of surgical dissection in a live patient is to treat a certain pathology and not to create a realistic 3D model, we believe that our proposed pipeline can be used effectively in certain intraoperative situations without interfering with treatment. For example, serial surgical exposure images (both macroscopic and microscopic) can be co-registered with neuroimaging data using either neuronavigation registration points or more reliable anatomical landmarks (e.g., superficial veins). Machine learning algorithms can then be trained on the large volume of intraoperative photographic data so that they can accurately predict the texture and surface details of MRI-based 3D-rendered virtual models.
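As an illustration of how such a landmark-based co-registration might be checked, the following minimal sketch computes the root-mean-square distance between paired landmarks after registration. It is not the implementation used in this study; the function name `landmark_rms_error` and the coordinates are hypothetical and chosen only for illustration.

```python
import math


def landmark_rms_error(navigation_points, model_points):
    """Return the RMS Euclidean distance between paired 3D landmarks.

    Both arguments are equally long sequences of (x, y, z) tuples that are
    already expressed in the same coordinate space (i.e., after registration).
    The result is in the same unit as the input coordinates.
    """
    if len(navigation_points) != len(model_points) or not navigation_points:
        raise ValueError("need two non-empty, equally long lists of paired points")
    squared = [math.dist(p, q) ** 2 for p, q in zip(navigation_points, model_points)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical coordinates in millimeters (assumed values, not study data).
nav = [(10.0, 20.0, 30.0), (15.0, 22.0, 28.0), (12.0, 25.0, 35.0)]
model = [(10.0, 20.0, 31.0), (15.0, 24.0, 28.0), (12.0, 25.0, 35.0)]
rms = landmark_rms_error(nav, model)
print(f"RMS landmark error: {rms:.2f} mm")
```

A low residual on landmarks that were not used to compute the registration would suggest that the photographs and the neuroimaging-based model are well aligned; an acceptable threshold would depend on the application.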

However, it should be noted that surgical exposure will always be limited by a craniotomy performed at one specific time in a live patient, whereas a cadaveric specimen can be freely explored through many craniotomies and approaches at different time points. Furthermore, when using a cadaveric specimen, more aggressive retraction and dissection can be performed to reach distant anatomical targets that are deemed not safe in a real patient or are being tested for feasibility. Virtual models can support learning by allowing numerous rehearsals, with skills then confirmed by actual dissections in a laboratory. Eventually, with optimization of our pipeline and future advances in 3D virtual technology, we believe that accurate and realistic 3D virtual models can be effectively applied to both cadaveric and real-life specimens. Our technology is developed and exists to augment neurosurgical training; we have no doubt that considerable practice dissecting the cranium and brain is mandatory to achieve technical excellence for the progressing neurosurgeon.

Study Limitations

This study describes and validates a novel methodological pipeline for neurosurgeons and neuroanatomists worldwide. It includes both modifications of established technologies (3D modeling, navigation, and photogrammetry) and the introduction of a new technology (AI-based monocular-depth estimation) into the field. However, the pipeline has certain limitations. It is supported only by our preliminary, proof-of-concept study and should be validated with a larger number of specimens and by other groups. The process requires some expensive materials and tools, such as cadavers, neuroimaging, neuronavigation, and modeling software. However, we believe this methodology can be implemented in laboratories that already have these capabilities and resources, and those laboratories could then share their resources and outputs with others across the world who are in need of these valuable and innovative educational materials. Although merging 2 technologies to complement each other provides an exciting opportunity, this process may be more difficult than anticipated because of the complexity of the underlying technical substrates and operational principles of each technology. This potential training tool will require the collaborative effort of surgeons, anatomists, imaging scientists, software developers, computer vision and image recognition specialists, and even graphic designers. The image processing procedures described in this study still require human effort and expertise, which restricts their efficiency but allows for personalization, revision, and modification. AI applications will likely generate more automatic or semiautomatic processes in the near future.

Conclusions

Our report represents the first time multiple 3D rendering technologies have been integrated and validated for the fields of neuroanatomy and neurosurgery. We were able to successfully merge the 3D reconstructions from standard medical imaging (CT and MRI) and photogrammetry (including a novel technique not yet applied to any form of anatomy) with high accuracy. As a result, we produced a 3D virtual model that is both quantitatively and qualitatively accurate. We believe our methodological pipeline can supplement both neurosurgical training and education globally, especially in a setting where cadaveric dissection or operating room access is limited. With 3D virtual technology, we envision a future where neurosurgeons can learn relevant anatomy and practice surgical procedures in the comfort of their own homes and at the pace of their choosing.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author Contributions

SH: Conceptualization, Methodology, Investigation, Formal analysis, Data Curation, Visualization, Writing – Original draft preparation; NGR: Methodology, Software, Resources, Visualization; GM-J: Methodology, Investigation, Writing – Original draft preparation; OT: Software, Formal analysis, Resources, Visualization; MEG: Validation, Investigation, Data Curation; IA: Validation, Data Curation; YX: Validation, Data Curation; BS: Validation, Formal analysis; II: Validation, Resources; IT: Validation, Resources; MB: Supervision; MTL: Supervision; MCP: Resources, Writing – Review & Editing, Supervision, Project administration. All authors contributed to the article and approved the submitted version.

Acknowledgments

This study was supported by the Newsome Chair in Neurosurgery Research held by Dr. Preul and funds from the Barrow Neurological Foundation. We thank the staff of Neuroscience Publications at Barrow Neurological Institute for assistance with manuscript preparation.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Gurses ME, Gungor A, Hanalioglu S, Yaltirik CK, Postuk HC, Berker M, et al. Qlone®: a simple method to create 360-degree photogrammetry-based 3-dimensional model of cadaveric specimens. Oper Neurosurg (Hagerstown). (2021) 21(6):E488–93. doi: 10.1093/ons/opab355

2. Nicolosi F, Rossini Z, Zaed I, Kolias AG, Fornari M, Servadei F. Neurosurgical digital teaching in low-middle income countries: beyond the frontiers of traditional education. Neurosurg Focus. (2018) 45(4):E17. doi: 10.3171/2018.7.Focus18288

3. Cheng D, Yuan M, Perera I, O’Connor A, Evins AI, Imahiyerobo T, et al. Developing a 3D composite training model for cranial remodeling. J Neurosurg Pediatr. (2019) 24(6):632–41. doi: 10.3171/2019.6.Peds18773

4. Benet A, Plata-Bello J, Abla AA, Acevedo-Bolton G, Saloner D, Lawton MT. Implantation of 3D-printed patient-specific aneurysm models into cadaveric specimens: a new training paradigm to allow for improvements in cerebrovascular surgery and research. Biomed Res Int. (2015) 2015:939387. doi: 10.1155/2015/939387

5. Roh TH, Oh JW, Jang CK, Choi S, Kim EH, Hong CK, et al. Virtual dissection of the real brain: integration of photographic 3D models into virtual reality and its effect on neurosurgical resident education. Neurosurg Focus. (2021) 51(2):E16. doi: 10.3171/2021.5.FOCUS21193

6. Condino S, Montemurro N, Cattari N, D'Amato R, Thomale U, Ferrari V, et al. Evaluation of a wearable AR platform for guiding complex craniotomies in neurosurgery. Ann Biomed Eng. (2021) 49(9):2590–605. doi: 10.1007/s10439-021-02834-8

7. Henn JS, Lemole GM, Ferreira MA, Gonzalez LF, Schornak M, Preul MC, et al. Interactive stereoscopic virtual reality: a new tool for neurosurgical education. Technical note. J Neurosurg. (2002) 96(1):144–9. doi: 10.3171/jns.2002.96.1.0144

8. Balogh AA, Preul MC, László K, Schornak M, Hickman M, Deshmukh P, et al. Multilayer image grid reconstruction technology: four-dimensional interactive image reconstruction of microsurgical neuroanatomic dissections. Neurosurgery. (2006) 58(1 Suppl):ONS157–65. doi: 10.1227/01.NEU.0000193514.07866.F0

9. Balogh A, Czigléczki G, Papal Z, Preul MC, Banczerowski P. [The interactive neuroanatomical simulation and practical application of frontotemporal transsylvian exposure in neurosurgery]. Ideggyogy Sz. (2014) 67(11–12):376–83. PMID: 25720239

10. Petriceks AH, Peterson AS, Angeles M, Brown WP, Srivastava S. Photogrammetry of human specimens: an innovation in anatomy education. J Med Educ Curric Dev. (2018) 5:2382120518799356. doi: 10.1177/2382120518799356

11. Zhou Q-Y, Park J, Koltun V. Open3D: a modern library for 3D data processing. arXiv preprint arXiv:1801.09847. (2018).

12. Cappabianca P, Magro F. The lesson of anatomy. Surg Neurol. (2009) 71(5):597–8. doi: 10.1016/j.surneu.2008.03.015

13. de Notaris M, Topczewski T, de Angelis M, Enseñat J, Alobid I, Gondolbleu AM, et al. Anatomic skull base education using advanced neuroimaging techniques. World Neurosurg. (2013) 79(2 Suppl):S16.e9–3. doi: 10.1016/j.wneu.2012.02.027

14. Rodrigues SP, Horeman T, Blomjous MS, Hiemstra E, van den Dobbelsteen JJ, Jansen FW. Laparoscopic suturing learning curve in an open versus closed box trainer. Surg Endosc. (2016) 30(1):315–22. doi: 10.1007/s00464-015-4211-0

15. Cagiltay NE, Ozcelik E, Isikay I, Hanalioglu S, Suslu AE, Yucel T, et al. The effect of training, used-hand, and experience on endoscopic surgery skills in an educational computer-based simulation environment (ECE) for endoneurosurgery training. Surg Innov. (2019) 26(6):725–37. doi: 10.1177/1553350619861563

16. Oropesa I, Sánchez-González P, Chmarra MK, Lamata P, Pérez-Rodríguez R, Jansen FW, et al. Supervised classification of psychomotor competence in minimally invasive surgery based on instruments motion analysis. Surg Endosc. (2014) 28(2):657–70. doi: 10.1007/s00464-013-3226-7

17. Ericsson KA, Hoffman RR, Kozbelt A, Williams AM. The Cambridge Handbook of Expertise and Expert Performance. Cambridge, UK: Cambridge University Press (2018).

18. Omahen DA. The 10,000-hour rule and residency training. CMAJ. (2009) 180(12):1272. doi: 10.1503/cmaj.090038

19. Chen D, Zhang Q, Deng J, Cai Y, Huang J, Li F, et al. A shortage of cadavers: The predicament of regional anatomy education in mainland China. Anat Sci Educ. (2018) 11(4):397–402. doi: 10.1002/ase.1788

20. Tatar I, Huri E, Selcuk I, Moon YL, Paoluzzi A, Skolarikos A. Review of the effect of 3D medical printing and virtual reality on urology training with ‘MedTRain 3D Modsim’ Erasmus + European Union Project. Turk J Med Sci. (2019) 49(5):1257–70. doi: 10.3906/sag-1905-73

21. Brassett C, Cosker T, Davies DC, Dockery P, Gillingwater TH, Lee TC, et al. COVID-19 and anatomy: stimulus and initial response. J Anat. (2020) 237(3):393–403. doi: 10.1111/joa.13274

22. Jones DG. Anatomy in a post-COVID-19 world: tracing a new trajectory. Anat Sci Educ. (2021) 14(2):148–53. doi: 10.1002/ase.2054

23. Patra A, Asghar A, Chaudhary P, Ravi KS. Integration of innovative educational technologies in anatomy teaching: new normal in anatomy education. Surg Radiol Anat. (2022) 44(1):25–32. doi: 10.1007/s00276-021-02868-6

24. Cikla U, Sahin B, Hanalioglu S, Ahmed AS, Niemann D, Baskaya MK. A novel, low-cost, reusable, high-fidelity neurosurgical training simulator for cerebrovascular bypass surgery. J Neurosurg. (2019) 130(5):1663–71. doi: 10.3171/2017.11.Jns17318

25. Pears M, Konstantinidis S. The future of immersive technology in global surgery education. Indian J Surg. (2021):1–5. doi: 10.1007/s12262-021-02998-6

26. Boscolo-Berto R, Tortorella C, Porzionato A, Stecco C, Picardi EEE, Macchi V, et al. The additional role of virtual to traditional dissection in teaching anatomy: a randomised controlled trial. Surg Radiol Anat. (2021) 43(4):469–79. doi: 10.1007/s00276-020-02551-2

27. Narang P, Raju B, Jumah F, Konar SK, Nagaraj A, Gupta G, et al. The evolution of 3D anatomical models: a brief historical overview. World Neurosurg. (2021) 155:135–43. doi: 10.1016/j.wneu.2021.07.133

28. Vernon T, Peckham D. The benefits of 3D modeling and animation in medical teaching. J Audiov Media Med. (2003) 25:142–8. doi: 10.1080/0140511021000051117

29. Raju B, Jumah F, Narayan V, Sonig A, Sun H, Nanda A. The mediums of dissemination of knowledge and illustration in neurosurgery: unraveling the evolution. J Neurosurg. (2020) 135(3):955–61. doi: 10.3171/2020.7.Jns201053

30. Torres K, Staśkiewicz G, Śnieżyński M, Drop A, Maciejewski R. Application of rapid prototyping techniques for modelling of anatomical structures in medical training and education. Folia Morphol (Warsz). (2011) 70(1):1–4. PMID: 21604245

31. Chen JC, Amar AP, Levy ML, Apuzzo ML. The development of anatomic art and sciences: the ceroplastica anatomic models of La Specola. Neurosurgery. (1999) 45(4):883–91. doi: 10.1097/00006123-199910000-00031

32. Lukić IK, Gluncić V, Ivkić G, Hubenstorf M, Marusić A. Virtual dissection: a lesson from the 18th century. Lancet. (2003) 362(9401):2110–3. doi: 10.1016/s0140-6736(03)15114-8

33. Davids J, Manivannan S, Darzi A, Giannarou S, Ashrafian H, Marcus HJ. Simulation for skills training in neurosurgery: a systematic review, meta-analysis, and analysis of progressive scholarly acceptance. Neurosurg Rev. (2021) 44(4):1853–67. doi: 10.1007/s10143-020-01378-0

34. Sahin B, Hanalioglu S. The continuing impact of coronavirus disease 2019 on neurosurgical training at the 1-year mark: results of a nationwide survey of neurosurgery residents in Turkey. World Neurosurg. (2021) 151:e857–e70. doi: 10.1016/j.wneu.2021.04.137

35. Bücking TM, Hill ER, Robertson JL, Maneas E, Plumb AA, Nikitichev DI. From medical imaging data to 3D printed anatomical models. PLoS One. (2017) 12(5):e0178540. doi: 10.1371/journal.pone.0178540

36. De Benedictis A, Nocerino E, Menna F, Remondino F, Barbareschi M, Rozzanigo U, et al. Photogrammetry of the human brain: a novel method for three-dimensional quantitative exploration of the structural connectivity in neurosurgery and neurosciences. World Neurosurg. (2018) 115:e279–91. doi: 10.1016/j.wneu.2018.04.036

37. Shintaku H, Yamaguchi M, Toru S, Kitagawa M, Hirokawa K, Yokota T, et al. Three-dimensional surface models of autopsied human brains constructed from multiple photographs by photogrammetry. PLoS One. (2019) 14(7):e0219619. doi: 10.1371/journal.pone.0219619

38. Rubio RR, Chae R, Kournoutas I, Abla A, McDermott M. Immersive surgical anatomy of the frontotemporal-orbitozygomatic approach. Cureus. (2019) 11(11):e6053. doi: 10.7759/cureus.6053

39. Ranftl R, Lasinger K, Hafner D, Schindler K, Koltun V. Towards robust monocular depth estimation: mixing datasets for zero-shot cross-dataset transfer. IEEE PAMI. (2019) 44:1623–37. doi: 10.1109/TPAMI.2020.3019967

Keywords: 3D rendering, depth estimation, neuroanatomy, neuroimaging, neurosurgical training, photogrammetry, virtual model

Citation: Hanalioglu S, Romo NG, Mignucci-Jiménez G, Tunc O, Gurses ME, Abramov I, Xu Y, Sahin B, Isikay I, Tatar I, Berker M, Lawton MT and Preul MC (2022) Development and Validation of a Novel Methodological Pipeline to Integrate Neuroimaging and Photogrammetry for Immersive 3D Cadaveric Neurosurgical Simulation. Front. Surg. 9:878378. doi: 10.3389/fsurg.2022.878378

Received: 17 February 2022; Accepted: 25 April 2022;

Published: 16 May 2022.

Edited by:

Bipin Chaurasia, Neurosurgery Clinic, Nepal

Reviewed by:

S. Ottavio Tomasi, Paracelsus Medical University, Austria

Nicola Montemurro, Azienda Ospedaliera Universitaria Pisana, Italy

Copyright © 2022 Hanalioglu, Romo, Mignucci-Jiménez, Tunc, Gurses, Abramov, Xu, Sahin, Isikay, Tatar, Berker, Lawton and Preul. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mark C. Preul, Neuropub@barrowneuro.org

Specialty section: This article was submitted to Neurosurgery, a section of the journal Frontiers in Surgery

Abbreviations: 2D, 2-dimensional; 3D, 3-dimensional; AI, artificial intelligence; CSF, cerebrospinal fluid; CT, computed tomography; HU, Hounsfield unit; MRI, magnetic resonance imaging.
