- Deakin University, Geelong, VIC, Australia

Motivation for the study: Workplaces are changing with employees increasingly working remotely and flexibly, which has created larger physical distance between team members. This shift has consequences for trust research and implications for how trust is built and maintained between employees and leaders.

Research design: Three studies collectively aimed to demonstrate how employee trust in leaders has adapted to a hybrid work environment. A validation of a seminal multidimensional employee trust in leaders measure was conducted. Also, an alternative multidimensional measure was developed, piloted, and then validated using exploratory and confirmatory factor analyses.

Main findings: Findings showed the Affective and Cognitive Trust scale not to be sufficiently reliable or valid after testing with a sample working in a hybrid model of virtual and face to face work environments. However, the new measure demonstrated good reliability and validity.

Implication: Findings reinforced that there are behavioural and relational elements to organisational trust, and there are two discrete dimensions to trustworthy behaviour: communication and authenticity.

Introduction

In 2020, a global pandemic changed the workplace forever. Regardless of organisational policy and culture, employers were forced into flexible and remote working arrangements under the weight of public health orders to reduce or prevent infection with COVID-19 (Spurk and Straub, 2020). As the workplace changed, so did the understanding of how organisational culture was built and maintained (Spicer, 2020). Organisational trust is a key aspect of culture at work (Page et al., 2019). However, during the COVID-19 pandemic, organisational trust was strongly challenged, especially between managers and their employees (Sharieff, 2021).

It is hard to deny that the COVID-19 pandemic has changed the modern workplace globally [Coronavirus: How the world of work may change forever (Internet), 2022]. The main change is the workplace itself. Working from home, having a home that is fit for work, and the technological expectations for working, are key features of that change (Arruda, 2021). One of the significant issues that affected employees was the burden of responsibilities of the home, like childcare and the care of family members who would otherwise have used services that closed amidst state and nation-wide lockdowns (Chung et al., 2019; Power, 2020). These changes put new stresses on the employee-leader relationship, as expectations for ways of working and performance had to change (Kniffin et al., 2021). This new reality has an impact on organisational trust in the modern workplace (Sharieff, 2021).

The theory development of multidimensional employee trust in leaders

Organisational trust has been the subject of much research (McEvily and Tortoriello, 2011; Agote et al., 2016) and there is a discourse in the literature regarding whether organisational trust is unidimensional or multidimensional (McAllister, 1995; Dirks and Ferrin, 2002; Schaubroeck et al., 2011). The seminal tool measuring multidimensional employee trust in leaders is McAllister’s (1995) Affective and Cognitive Trust scale (McEvily and Tortoriello, 2011; Fischer et al., 2020). According to McAllister (1995), affective trust is defined as the employee’s emotional connection that forms through a relationship with the leader, while cognitive trust is defined as the employee’s thoughts about the leader’s trustworthy behaviour. Despite its prominence, McAllister’s (1995) scale may not be appropriate for the modern workplace. It has been nearly 30 years since the items have been reviewed for relevancy (Fischer et al., 2020). In addition, the work environment has changed substantially from traditional face-to-face to a blended mode, if not wholly remote working (Graham, 2020; Shine, 2020). A recent meta-analysis (Fischer et al., 2020) found that most of the multidimensional employee trust in leaders research uses McAllister’s (1995) scale. Within the context of great change in how and where work occurs, there is likely to be an impact on employee trust in leaders. Therefore, an updated measure that is reflective of these workplace context changes may be more appropriate.

Measurement of multidimensional employee trust in leaders

This research presents an in-depth analysis of the reliability and validity of McAllister’s (1995) Affective and Cognitive Trust scale to determine its suitability in the contemporary workplace. There is an important consideration worth exploring in the development of McAllister’s (1995) scale, and thus its construct validity in the contemporary workplace (McEvily and Tortoriello, 2011; Fischer et al., 2020). To design the scale, McAllister (1995) reviewed the available literature and measures of interpersonal trust to devise a list of items. Eleven “organisational behaviour scholars” (p. 35) were employed to review the items against McAllister’s (1995) definitions of affect- and cognition-based trust and classify them accordingly. McAllister (1995) did not provide further clarity about how the definitions of trust were created beyond this in the paper.

It is sound scale development practice to conduct qualitative research to attempt to understand the construct in question before designing the measure (O’Brien, 1993; DeVellis, 2016). There is no overt indication of the use of qualitative research methods to test the content of the items against the real-world work experience of employees trusting their leaders in McAllister’s (1995) scale paper. Contemporary scale development practices consider lack of qualitative research foundations a limitation (Morgado et al., 2017). Even at the time of development of McAllister’s (1995) Affective and Cognitive Trust scale, the use of focus groups was a common practice before developing structured questionnaires (Steckler et al., 1992; O’Brien, 1993). In McAllister’s (1995) original validation study, exploratory factor analysis was used on a data set obtained from a group of postgraduate and undergraduate students to reduce the measure to the 11 items. Although McAllister (1995) states that the reliability scores for each dimension were good (α = 0.91 and 0.89; p. 36), the selection of participants in 1995 may not reflect the workplace, as it cannot be confirmed that students’ experience of employment is generalisable to all employees’ experiences.

Measurement needs to advance with new context

Given it has been almost 30 years since the Affective and Cognitive Trust scale was first designed, it may not be fully applicable in the contemporary workplace context. It is important to consider the possibility that trust at work may develop and be sustained differently in virtual, hybrid and face-to-face work environments. This is key as technology can mediate interpersonal relationships differently (Carrigan et al., 2020; Bodó, 2021).

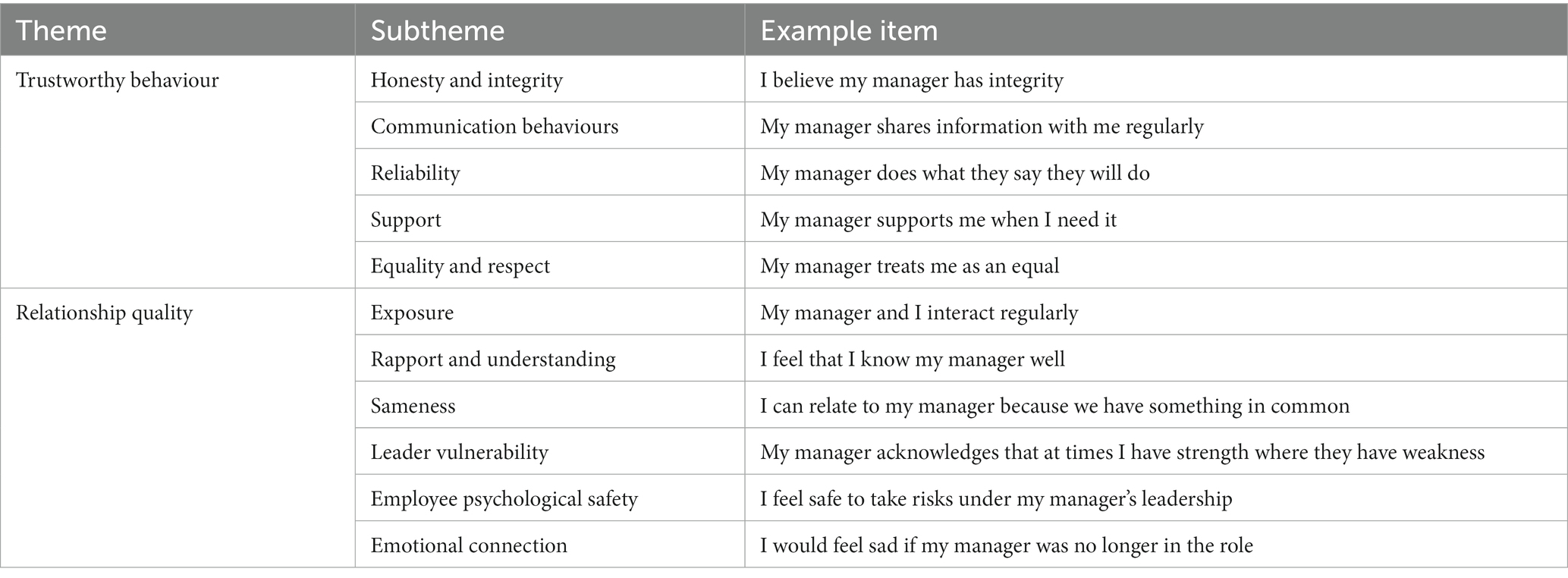

A more recent qualitative analysis (Fischer and Walker, 2022) of employees working in a mix of virtual, hybrid and face-to-face work environments showed that employee multidimensional trust in leaders comprised an explicit behavioural component and an interpersonal relationship component. The findings also discussed the role of communication, exposure, and relationships, which were highlighted as critical in virtual work environments.

Despite the growth of literature exploring how changes in work environment influence interpersonal interaction at work, the measurement of multidimensional employee trust in leaders has not kept pace.

Research rationale and hypotheses

The boundary between the home and workplace was becoming a more flexible and fluid environment before the COVID-19 pandemic (Atkinson and Hall, 2011; Galea et al., 2014), but once governments started to impose major restrictions on travel and leaving the home (McLaren and Wang, 2020) the line between home and work virtually disappeared for working adults (Graham, 2020; Power, 2020; Shine, 2020; Arruda, 2021). This change had major impacts on the relationships between employees and their leaders (Kniffin et al., 2021). Based on the considerations highlighted about McAllister’s Affective and Cognitive Trust scale, and the work environment significantly evolving as the world continues to live with the COVID-19 virus and its influence on society, an exploration into the scale’s reliability and validity is warranted. The hypotheses of this research were:

H1: McAllister’s (1995) Affective and Cognitive Trust scale, the most often used measure of multidimensional employee trust in leaders in the literature, is less reliable and valid in the contemporary work environment (Study 1).

H2: An alternative measure of multidimensional employee trust in leaders, developed using appropriate scale design protocols (Studies 2 and 3), will demonstrate good psychometric properties.

To test these hypotheses, the three studies used a participant sample of the adult population who were working during the COVID-19 pandemic. All three studies were conducted during the COVID-19 pandemic (February–April 2021) when most participants were employed in remote or hybrid working arrangements (Spurk and Straub, 2020), experienced new stresses on the virtual employer–employee working relationship (Power, 2020; Kniffin et al., 2021), and faced major changes to the work environment due to increased infection prevention and control requirements (Mayer, 2021).

Study 1: Affective and Cognitive Trust Scale Validation

Method

Participants

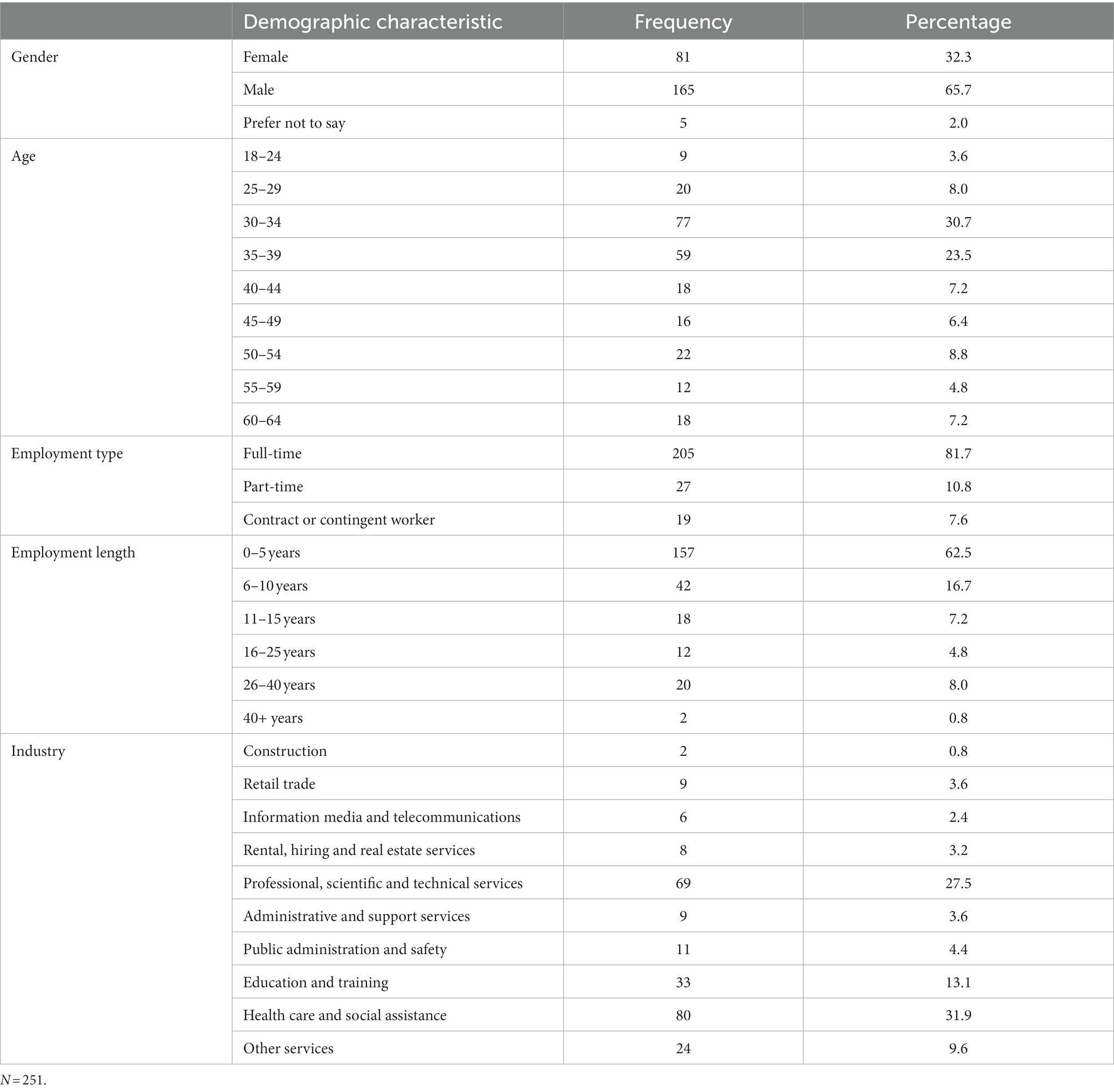

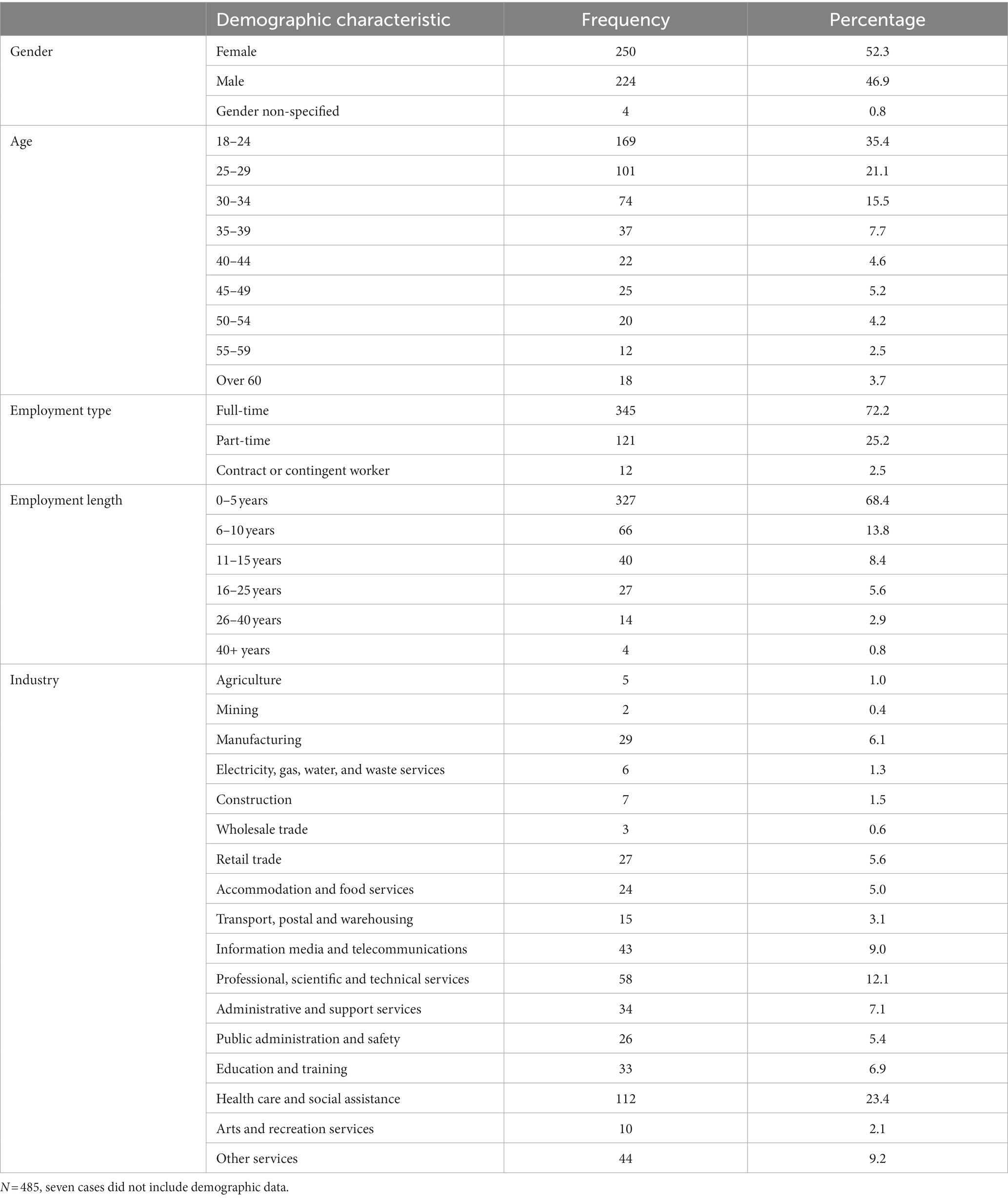

Study 1 sampled from populations of employed adults working in a range of industries (see Table 1 for detail), with most identifying as aged between 30–39, male, and from health and social assistance fields or professional, scientific and technical services. During April 2021, half of the participants were recruited using nonprobability snowball sampling via email and social media through the researcher’s and participants’ communication networks (Leighton et al., 2021; Darko et al., 2022). An advertisement outlined the nature of the study and invited voluntary participation. The remainder of participants were recruited through the online research panel Prolific using convenience sampling. Prolific is a platform to connect academic researchers and participants associated with the University of Oxford (Palan and Schitter, 2018). Consent was provided by submitting the online questionnaire. To ensure the target population for the study, participants were screened by asking if they were currently employed and reported to a manager. The online questionnaire received a total of 251 complete responses.

Data handling

Data were screened through IBM SPSS Statistics Version 27 (SPSS; IBM Corp, 2020) to assess accuracy of input, missing values, univariate outliers, linearity, and normality. No skew or kurtosis was identified and there was no missing data.

Analysis

To assess the internal consistency of the items and subscales of McAllister’s (1995) scale, item analysis was conducted using the following process, recommended by DeVellis (2003), Fishman and Galguera (2003), Kline (1999), Spector (1992), and Walker (2010). The negatively phrased items in McAllister’s (1995) scale were reverse scored to ensure positive correlations. Descriptive statistics were then reviewed. Items would be considered as contributing little to internal consistency if they showed a low item-total correlation coefficient (< 0.60), a large standard deviation (> 0.30), or a large skew index (> 6.00; Spector, 1992; Kline, 1999; DeVellis, 2003; Fishman and Galguera, 2003; Walker, 2010).
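The screening steps described above (reverse scoring, then flagging items with a low item-total correlation) can be sketched in a few lines of Python. This is an illustrative sketch only, not the SPSS procedure used in the study: the response data, the five-point scale bounds, and the function names are all hypothetical, and the correlation shown is the corrected item-total correlation (each item against the sum of the remaining items).

```python
from statistics import mean

def reverse_score(value, low=1, high=5):
    """Reverse a Likert response so negatively phrased items point the same way."""
    return low + high - value

def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / (var_x * var_y) ** 0.5

def corrected_item_total(responses):
    """Correlate each item with the sum of the remaining items."""
    n_items = len(responses[0])
    result = []
    for i in range(n_items):
        item = [row[i] for row in responses]
        rest = [sum(row) - row[i] for row in responses]
        result.append(pearson(item, rest))
    return result

# Hypothetical data: rows are participants, columns are items.
# The item at index 2 is negatively phrased, so it is reverse scored first.
raw = [
    [5, 4, 1, 5],
    [4, 4, 2, 2],
    [2, 3, 4, 4],
    [1, 2, 5, 1],
    [3, 3, 3, 5],
]
scored = [[r[0], r[1], reverse_score(r[2]), r[3]] for r in raw]

correlations = corrected_item_total(scored)
# Flag items below the 0.60 item-total threshold stated in the text.
flagged = [i for i, r in enumerate(correlations) if r < 0.60]
```

With this toy data only the last item falls below the 0.60 threshold; in practice the flagged items would then be reviewed alongside the other criteria rather than dropped automatically.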

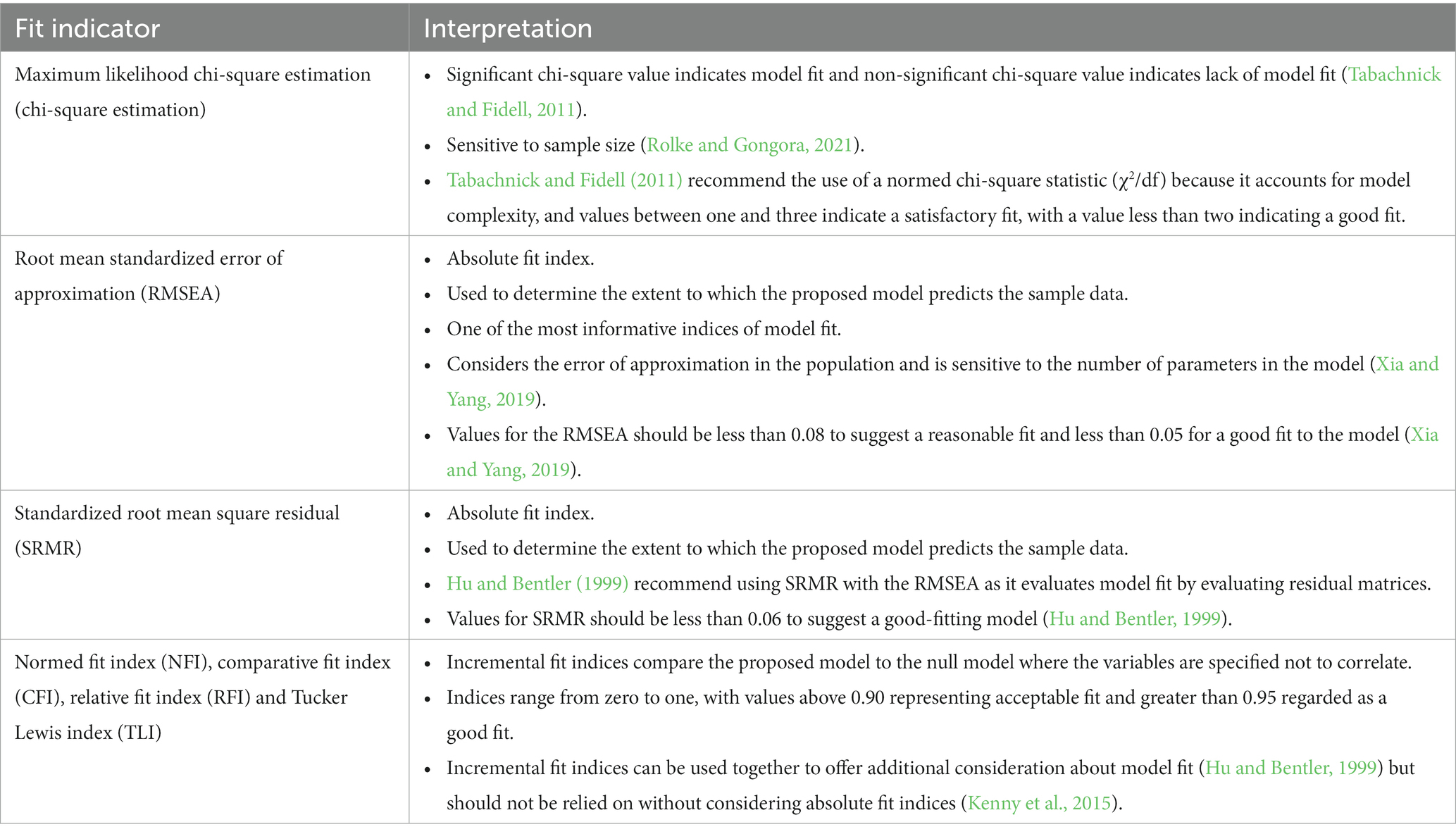

Confirmatory Factor Analysis was performed next using SPSS AMOS Version 27 (AMOS; IBM Corp, 2020) to determine if the McAllister’s (1995) items and subscales were a good fit to the scale model. This analysis was conducted to determine if the scale’s factor structure holds within the context of the sample population of working adults during the COVID-19 pandemic. This analysis involved proposing a set of relationships and evaluating the consistency of this model in an observed covariance-matrix (Hu and Bentler, 1999). Interpretation of the model fit indices used in this analysis are shown in Table 2.

Results

Internal consistency reliability

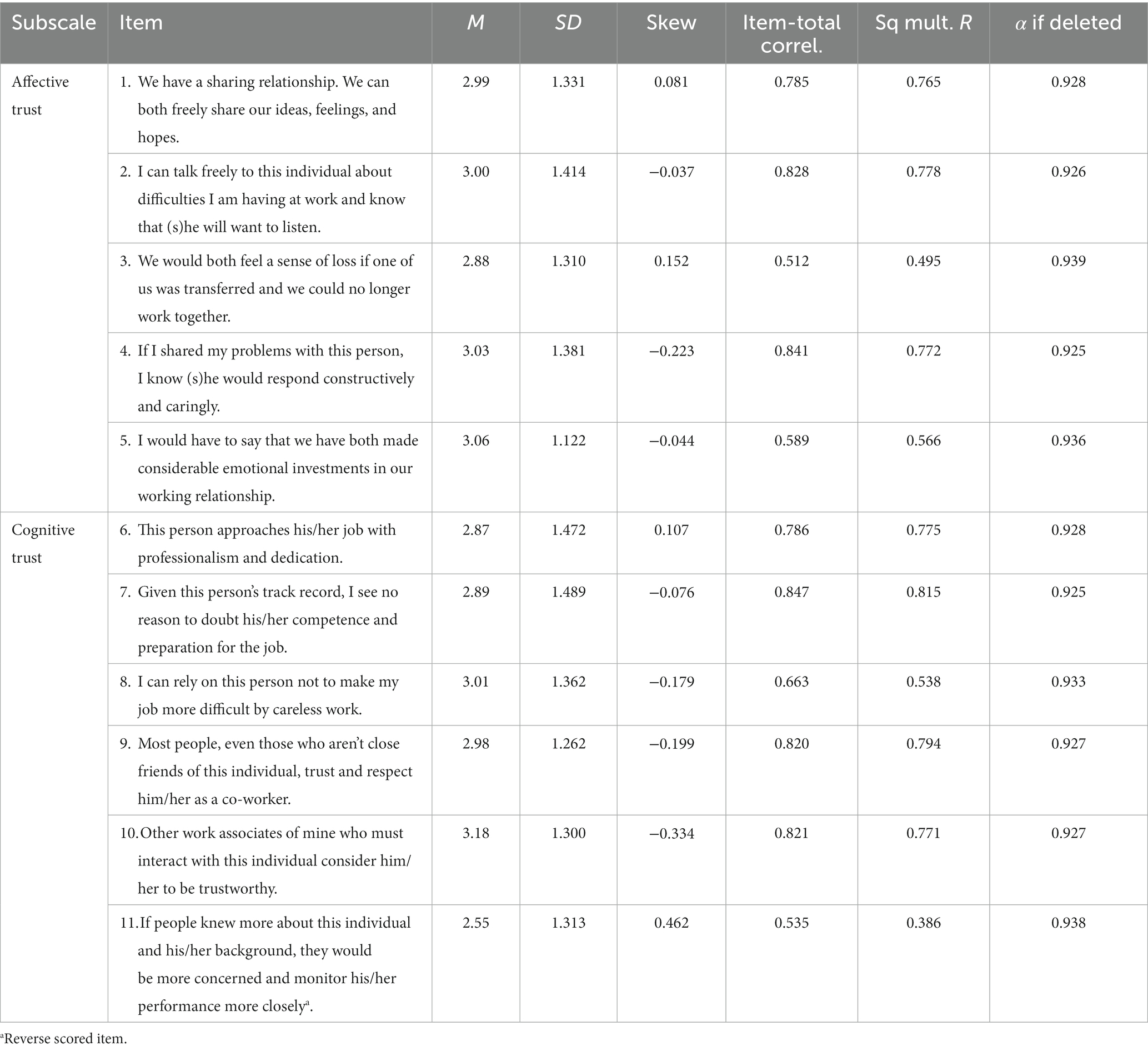

The 11-item Affective and Cognitive Trust scale (McAllister, 1995) was separated into its affective (items 1–5) and cognitive (items 6–11) subscales to conduct reliability analyses. The reliability analyses showed that both subscales had high Cronbach alpha scores (affective trust α = 0.89; cognitive trust α = 0.91). However, examination at the item level for each subscale showed that three of the items (items 3, 5 and 11; see Table 3) had low inter-item correlations (< 0.65), well below what would be considered acceptable for a well-validated scale (DeVellis, 2016). Many of the items (2, 4, 7, 9, and 10) also suggested multicollinearity (inter-item total correlations > 0.80; Grewal et al., 2004). Taken together, these findings suggest that the scale has less than optimal internal consistency: although the subscales appeared internally consistent, the item analyses indicated that there were some unnecessary and some redundant items for this sample.

Table 3. Item analysis of McAllister’s (1995) affective and cognitive trust subscales.

Confirmatory factor analysis

Confirmatory factor analysis was employed to confirm the item structure of the Affective and Cognitive Trust scale (McAllister, 1995). Despite indications from the internal consistency analysis that some items were unnecessary, all items were retained in their original factor structure in the confirmatory factor analysis to further examine whether McAllister’s (1995) scale is valid in the contemporary work environment. The analysis revealed that the data was not an optimal fit to the model, χ2 (43, N = 255) = 239.50, p < 0.001; PCMIN/DF = 5.57; NFI = 0.90; CFI = 0.92; RFI = 0.87; TLI = 0.89; RMSEA = 0.14 and RMSEA confidence interval = 0.12–0.15; SRMR = 0.06. Although CFI was acceptable, the NFI, RFI, TLI, and RMSEA indices fell below the criterion for a satisfactory fit. The NFI, RFI, and TLI violations suggested this model was not better than the null model (Hu and Bentler, 1999; Kenny et al., 2015), whereas the outcomes of RMSEA suggested this model was different from the perfect model (Hu and Bentler, 1999; Xia and Yang, 2019). Although the purpose of this analysis was to test the current model’s validity, an attempt was made to improve fit by changing the model based on the outcomes of the modification indices test using AMOS. Two affective items appeared to be predicted by the cognitive trust factor with this sample. Those items were moved from the affective trust factor to cognitive trust, but the model did not improve. Therefore, the model was deemed not fit for the sample and H1 was supported.

Study 2: alternative measure pilot

Study 2 aimed to determine if an alternative multidimensional employee trust in leaders scale could be designed that was reliable and valid for the contemporary work environment.

Item development

Items were developed based on a qualitative study on Australian corporate employees’ experience trusting their direct managers and senior leaders (Fischer and Walker, 2022). The study was conducted to explore trust in traditional face-to-face and virtual work environments and used critical incident technique (Flanagan, 1954; Dugar, 2018). Qualitative research methods (Braun and Clarke, 2020, 2021) using real-world examples enabled greater clarity of the individual behaviours that constitute multidimensional employee trust in leaders, thereby ensuring the measure’s potential items were relevant for the contemporary workplace.

The following guidelines were observed in generating the items (Spector, 1992; Kline, 1999; DeVellis, 2016):

1. All items were written in brief, plain English (e.g., no jargon or colloquialisms).

2. No items were written in a double-barrelled manner, so that it was clear to the respondent what they were being asked to rate.

3. As much as possible, negatively phrased items were avoided.

Likert-type scales of agreement are useful in behavioural research (DeVellis, 2016), where items are presented as statements and participants are asked their level of agreement with each statement. The literature suggests that five-point scales are useful to increase response rate (Babakus and Mangold, 1992; Hayes, 1992; Devlin et al., 2003), response reliability and variability (Lissitz and Green, 1975; McKelvie, 1978; Jenkins and Taber, 1997). The verbal anchors were displayed going from the positive “Strongly agree” and ending with the negative “Strongly disagree”, which is the preferred method (Sheluga et al., 1978; Yan and Keusch, 2015). Items for the new scale were reviewed by two experts with experience in psychometrics and scale development before finalising the measure for the Alternative Measure Pilot study.

Method

Participants

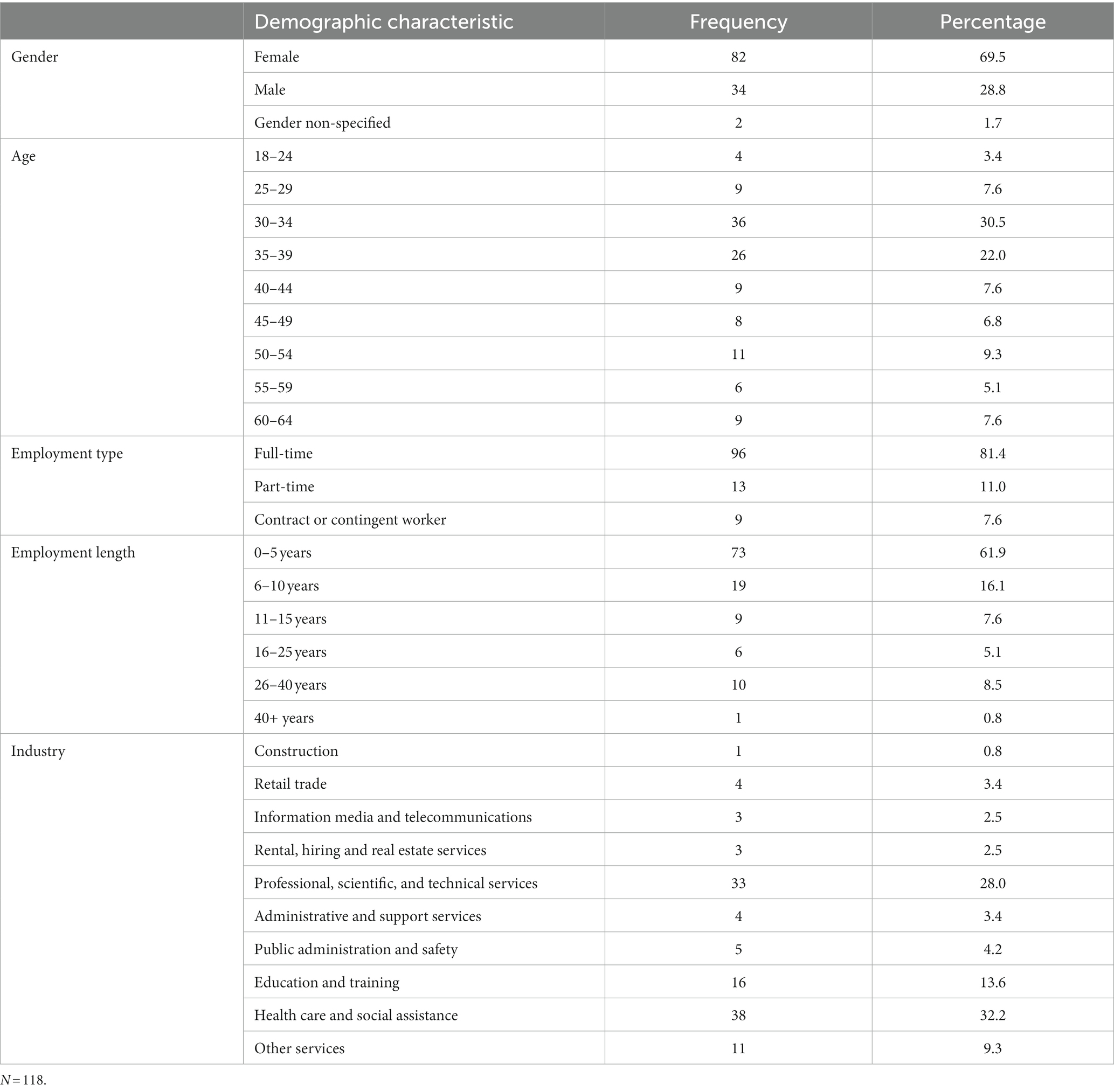

Pilot Study participants were employed adults with a manager to whom they reported. The ideal number of participants for pilot testing measures ranges from 75 to 200 (Hertzog, 2008; Johanson and Brooks, 2010). The sample in this pilot study was N = 118, and therefore considered acceptable (Thompson, 2004). Participant demographics are shown in Table 4, with most aged 30–39, identifying as female, and from health and social assistance fields or professional, scientific and technical services.

Procedure

During February 2021, participants were recruited using nonprobability snowball sampling via email and social media through the researcher’s and participants’ communication networks (Leighton et al., 2021; Darko et al., 2022). An advertisement outlined the nature of the study and invited voluntary participation. Consent was provided by submitting the online questionnaire. Participants were screened by asking if they were currently employed and reported to a manager.

Measure

The developed measure contained 49 items generated from a review of the literature and a qualitative study on multidimensional employee trust in leaders (Fischer and Walker, 2022). Table 5 illustrates the type of trustworthy behaviours and facets of relationship quality explored in this new measure of organisational trust with an example item for each.

Analysis

A process recommended by DeVellis (2003), Fishman and Galguera (2003), Kline (1999), Spector (1992), and Walker (2010) was followed to determine item and scale reliability. Negatively phrased items were initially reverse scored to ensure positive correlations. Descriptive statistics and item analyses were conducted to refine the multidimensional employee trust in leaders scale. This process involved inspecting item means and standard deviations to identify floor or ceiling effects and to ensure a range in responses; skew indices, corrected item-total correlations, squared multiple correlations, and α if item deleted were also examined. Items were considered for deletion if they met at least one of the following criteria: a low item-total correlation coefficient (< 0.60), a large standard deviation (> 0.30), or a large standardised skew index (> 6.0). Finally, a review of the items was carried out to identify ambiguous or redundant items.

Exploratory factor analysis was conducted to investigate underlying constructs using principal component analysis with varimax rotation. Principal component analysis was selected because the goal of the analysis was to reduce the number of items and determine which items were most salient to the final scale and subscales (Tabachnick and Fidell, 2011). Varimax was selected as it is a popular orthogonal rotation method used in Exploratory Factor Analysis (Tabachnick and Fidell, 2007). Finally, reliability analyses using Cronbach’s alpha were conducted to determine internal consistency.
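For readers unfamiliar with the internal consistency statistic mentioned above, Cronbach’s alpha can be computed directly from the item variances and the variance of the total score. The sketch below is illustrative only (the study used SPSS); the function name and the small response table are hypothetical.

```python
from statistics import variance

def cronbach_alpha(responses):
    """Cronbach's alpha for a participants-by-items table of scored responses."""
    k = len(responses[0])  # number of items
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)

# Hypothetical scored responses: rows are participants, columns are items.
scored = [
    [5, 4, 5, 5],
    [4, 4, 4, 2],
    [2, 3, 2, 4],
    [1, 2, 1, 1],
    [3, 3, 3, 5],
]
alpha = cronbach_alpha(scored)
```

Alpha approaches 1 as items covary more strongly relative to their individual variances, which is why very high values can also point to redundant items.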

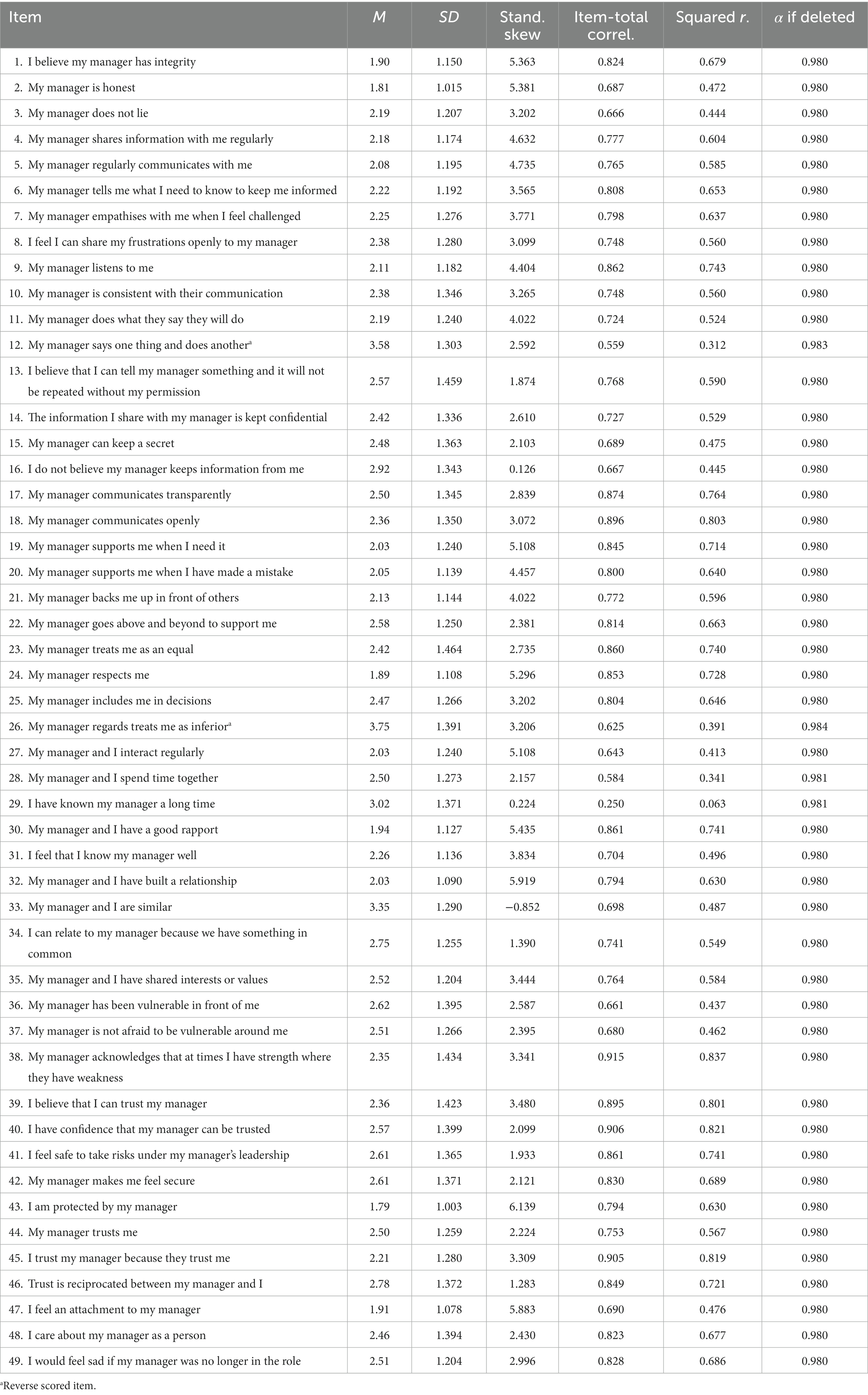

Results

Table 6 presents the descriptive statistics and item analysis for the 49 items comprising the multidimensional employee trust in leaders scale. An examination of the descriptive statistics and item analyses identified item 29 for deletion from the scale due to its extremely low item correlation. At the conclusion of the item analysis, the scale retained 48 items and Cronbach’s alpha indicated high internal consistency (α = 0.98).

Table 6. Descriptive statistics, item analysis, and reworded items for multidimensional employee trust in leaders scale.

Factor analysis with varimax rotation was carried out on the remaining 48 items. Factorability was established by examining the correlation matrix, the Kaiser-Meyer-Olkin Measure of Sampling Adequacy (KMO) and Bartlett’s Test of Sphericity (Browne, 2001). The correlation matrix revealed that all correlations were more than the recommended 0.30; the obtained KMO value was more than the minimal 0.60 at 0.951; and Bartlett’s Test of Sphericity was significant (p < 0.001), thus indicating a data set suitable for factor analysis.
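As a rough illustration of the factorability check, Bartlett’s test statistic can be computed from the determinant of the correlation matrix as χ2 = −[(n − 1) − (2p + 5)/6] ln|R|, with p(p − 1)/2 degrees of freedom, where n is the sample size and p the number of items. The sketch below is not the SPSS routine used in the study; the three-item correlation matrix is hypothetical, and only the sample size (N = 118) is taken from the pilot study.

```python
import math

def determinant(matrix):
    """Determinant by Gaussian elimination with partial pivoting."""
    m = [row[:] for row in matrix]
    size = len(m)
    det = 1.0
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            return 0.0  # singular matrix
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det  # a row swap flips the sign
        det *= m[col][col]
        for r in range(col + 1, size):
            factor = m[r][col] / m[col][col]
            for c in range(col, size):
                m[r][c] -= factor * m[col][c]
    return det

def bartlett_sphericity(corr, n):
    """Return (chi-square statistic, degrees of freedom) for Bartlett's test."""
    p = len(corr)
    statistic = -((n - 1) - (2 * p + 5) / 6) * math.log(determinant(corr))
    df = p * (p - 1) // 2
    return statistic, df

# Hypothetical correlation matrix for three items.
corr = [
    [1.0, 0.6, 0.5],
    [0.6, 1.0, 0.4],
    [0.5, 0.4, 1.0],
]
statistic, df = bartlett_sphericity(corr, n=118)
```

A large statistic relative to its degrees of freedom indicates that the correlation matrix differs from an identity matrix, which is the condition for proceeding with factor analysis.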

A principal components extraction was used to produce the initial unrotated solution for the scale. Five Eigenvalues greater than one accounted for 74.7% of the variance. The scree plot also indicated the presence of five factors. The following criteria (Browne, 2001; Sellbom and Tellegen, 2019) were used to determine the items that load onto a factor and the stability of a factor: (1) An assigned value of >0.40 was used to identify items with substantive loadings on a factor. (2) Items with loadings >0.40 were also required to load at least 0.20 less on other factors to be considered as distinctive items. Items loading onto more than one factor were included in the factor with the highest loading if the items were distinctive. Items loading onto more than one factor that were not distinctive were not included in any factor. (3) The stability of a factor was determined by the factor having at least three items loading onto it both substantively and distinctively. The rotated factor structure did not yield distinguishable factors. Many items had cross-loadings that were not distinctive, and the factors lacked stability. Therefore, based on the results of Study 2, the wording of several items (6, 8, 19, 26, 31, 32, 36, 41, 47, 49) was revised to determine clearer differences and definitions of factors.
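The three retention criteria above can be expressed as a small decision rule. The following sketch is one possible reading of those criteria, not the authors’ procedure: it assumes absolute loadings are compared, that an item is distinctive when its highest loading exceeds every other loading by at least 0.20, and the item labels and loading values are hypothetical.

```python
def assign_items(loadings, substantive=0.40, gap=0.20, min_items=3):
    """Apply the three criteria to a table of rotated loadings.

    loadings maps an item label to its list of loadings, one per factor.
    Returns (assignment, stable): item -> factor index, and the set of
    factor indices that keep at least min_items distinctive items.
    """
    assignment = {}
    for item, row in loadings.items():
        magnitudes = [abs(v) for v in row]
        best = max(range(len(row)), key=lambda f: magnitudes[f])
        if magnitudes[best] <= substantive:
            continue  # criterion 1: no substantive loading
        others = [m for f, m in enumerate(magnitudes) if f != best]
        if others and magnitudes[best] - max(others) < gap:
            continue  # criterion 2: cross-loading is not distinctive
        assignment[item] = best
    counts = {}
    for factor in assignment.values():
        counts[factor] = counts.get(factor, 0) + 1
    # criterion 3: a factor is stable with at least min_items items
    stable = {f for f, c in counts.items() if c >= min_items}
    return assignment, stable

# Hypothetical rotated loadings on three factors.
loadings = {
    "item_a": [0.72, 0.15, 0.10],
    "item_b": [0.68, 0.20, 0.05],
    "item_c": [0.55, 0.48, 0.12],  # cross-loads without a 0.20 gap
    "item_d": [0.31, 0.22, 0.18],  # no loading above 0.40
    "item_e": [0.81, 0.10, 0.30],
    "item_f": [0.12, 0.66, 0.09],
}
assignment, stable = assign_items(loadings)
```

In this toy example only the first factor retains three distinctive items and would count as stable; a solution in which most items behave like `item_c` corresponds to the indistinct structure reported for the pilot.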

Study 3: alternative measure preliminary validation

Given the reliability and overall model fit problems seen in Study 1’s examination of McAllister’s (1995) Affective and Cognitive Trust scale, Study 3 was run on the revised battery of items from Study 2 on a new sample to address the second hypothesis.

Method

Participants

As with Studies 1 and 2, the sample consisted of employed adults with a manager to whom they reported. Participant demographics are shown in Table 7. The participant demographic profile for this sample was more evenly split across the genders than the samples from Studies 1 and 2. There was a greater skew to the younger ages of 18–34. Whilst the industry range was more even, there remained a greater number of health and social assistance participants.

Procedure

Participant recruitment was conducted in two phases. First, in March 2021 via email and social media through the researcher’s and participants’ communication networks using nonprobability snowball sampling (Leighton et al., 2021; Darko et al., 2022). Second, in April 2021 through the online research panel Prolific using convenience sampling. All participants were screened to ensure they were currently employed and reported to a manager. Thirty-three cases were removed due to substantial missing data, with the remaining number of participants being 485.

Measure

The 48 items identified and revised from Study 2 were used to measure multidimensional employee trust in leaders (see Table 6).

Data handling

Data were screened through IBM SPSS Statistics Version 27 (SPSS; IBM Corp, 2020) to assess accuracy of input, missing values, univariate outliers, linearity, and normality. No skew or kurtosis was identified and there was no missing data.

To run both planned analyses for Study 3, the full data set (N = 485) was randomly split into two data sets using IBM SPSS Statistics Version 27 (SPSS; IBM Corp, 2020). A set of cases (N = 241) were analysed using exploratory factor analysis. The remainder of the cases (N = 244) was used for the confirmatory factor analysis.
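A random split of this kind can be reproduced outside SPSS with a seeded shuffle. The sketch below is illustrative only: the case identifiers, the seed value, and the function name are hypothetical, and only the subsample sizes (241 and 244 out of 485) come from the text.

```python
import random

def split_sample(case_ids, first_size, seed=2021):
    """Shuffle case identifiers with a fixed seed and cut them into two subsamples."""
    rng = random.Random(seed)  # a fixed seed keeps the split reproducible
    shuffled = list(case_ids)
    rng.shuffle(shuffled)
    return shuffled[:first_size], shuffled[first_size:]

# Hypothetical case identifiers 1..485.
efa_cases, cfa_cases = split_sample(range(1, 486), first_size=241)
```

Because every case lands in exactly one subsample, the exploratory and confirmatory analyses are run on non-overlapping participants.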

Analysis

The same process used in the pilot study was used in the validation study to determine item and scale reliability. The sample of N = 241 was considered sound for exploratory factor analysis (Thompson, 2004). Items were again considered for deletion from the scales if they met at least one of the following criteria: a low item-total correlation coefficient (< 0.60), a large standard deviation (> 0.30), or a large standardised skew index (> 6.00; Spector, 1992; Kline, 1999; DeVellis, 2003; Fishman and Galguera, 2003; Walker, 2010).

Exploratory factor analysis was used to determine the factor structure of the scale using principal component analysis. Direct oblimin rotation was selected based on the high number of items and high internal consistency, being a preferred rotation method when variables are correlated (Kline, 2014). Cronbach’s alpha was used to determine the internal consistency of the subscales.

Confirmatory factor analysis was undertaken following the exploratory factor analysis to determine if the factor structure observed could be confirmed in an equivalent sample. The sample size (N = 244) was considered sound for confirmatory factor analysis (Thompson, 2004). This analysis involved proposing a set of relationships and evaluating the consistency of this model in an observed covariance-matrix (Hu and Bentler, 1999). Interpretation of the model fit indices used in this analysis can be found in Table 2.

Results

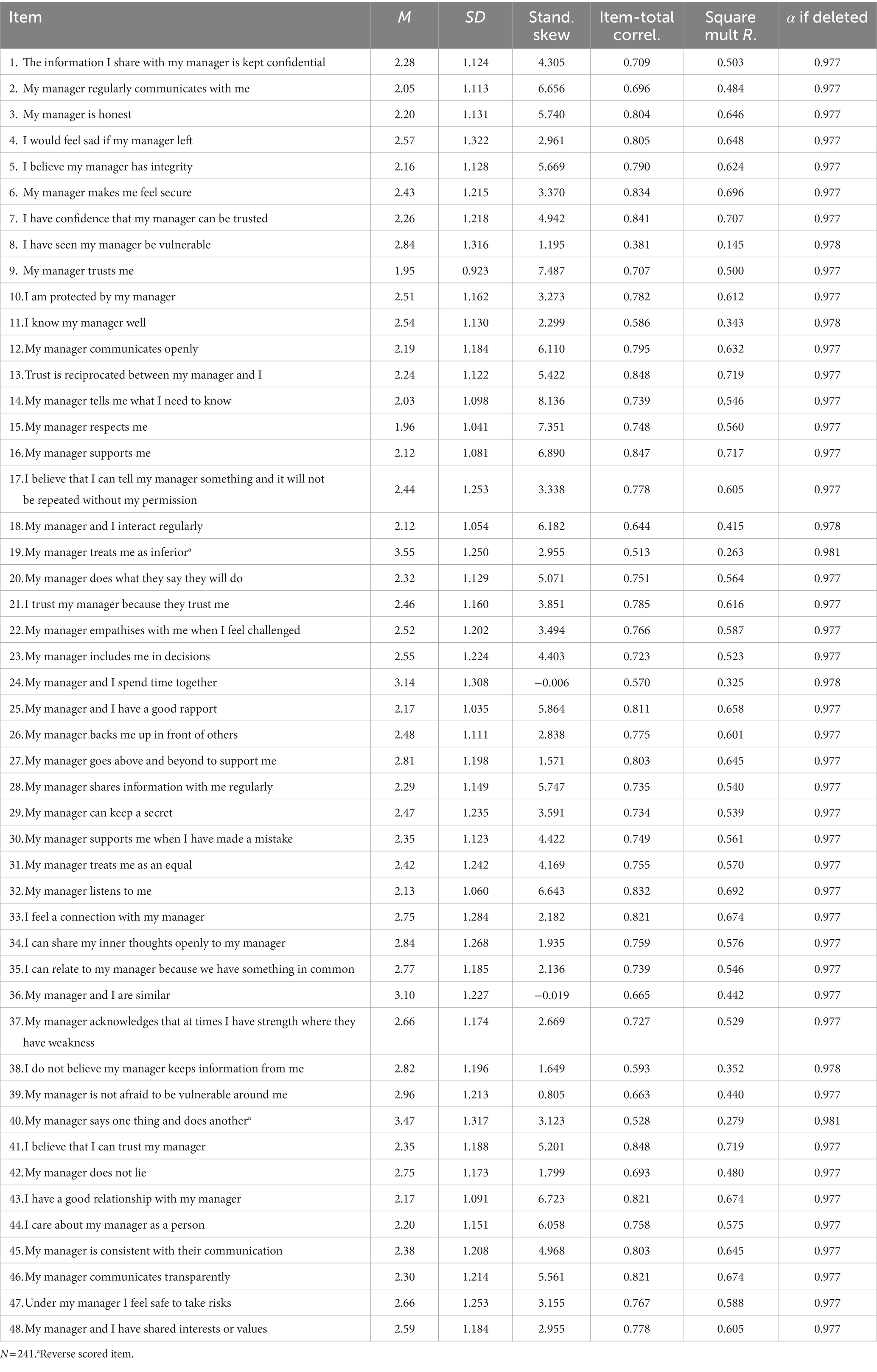

Item analysis

Table 8 presents the descriptive statistics and item analysis prior to conducting exploratory factor analysis with the 48 items comprising the organisational trust scale. An examination of the descriptive statistics and item analyses identified six items (8, 11, 19, 24, 38, and 40) for deletion from the trust scale based on low item-total correlations (0.65). High skewness was originally included in the analysis to identify items for deletion; however, no items were selected for deletion based on skewness alone. This choice was made because participants’ responses may have been influenced by the drastic global experience of forced virtual work environments (Kaushik and Guleria, 2020; Trougakos et al., 2020) as the data was collected during the height of the COVID-19 pandemic.

Table 8. Descriptive statistics and item analysis for multidimensional employee trust in leaders scale.

Exploratory factor analysis

Exploratory factor analysis using principal component analysis with direct oblimin rotation was carried out on the remaining 42 items (Kline, 2014). Direct oblimin rotation was selected due to the high number of items and high internal consistency as it is a preferred rotation method when variables are correlated (Browne, 2001). Factorability of each data set was established by examining the correlation matrix, the Kaiser-Meyer-Olkin Measure of Sampling Adequacy (KMO) and Bartlett’s Test of Sphericity (Browne, 2001). The correlation matrix revealed that all correlations were above the recommended 0.30; the obtained KMO value exceeded the minimal 0.60 at 0.97 (Kaiser, 1970; Kaiser, 1974); and Bartlett’s Test of Sphericity was significant (Bartlett, 1954) (p < 0.001), thus indicating a data set suitable for factor analysis.

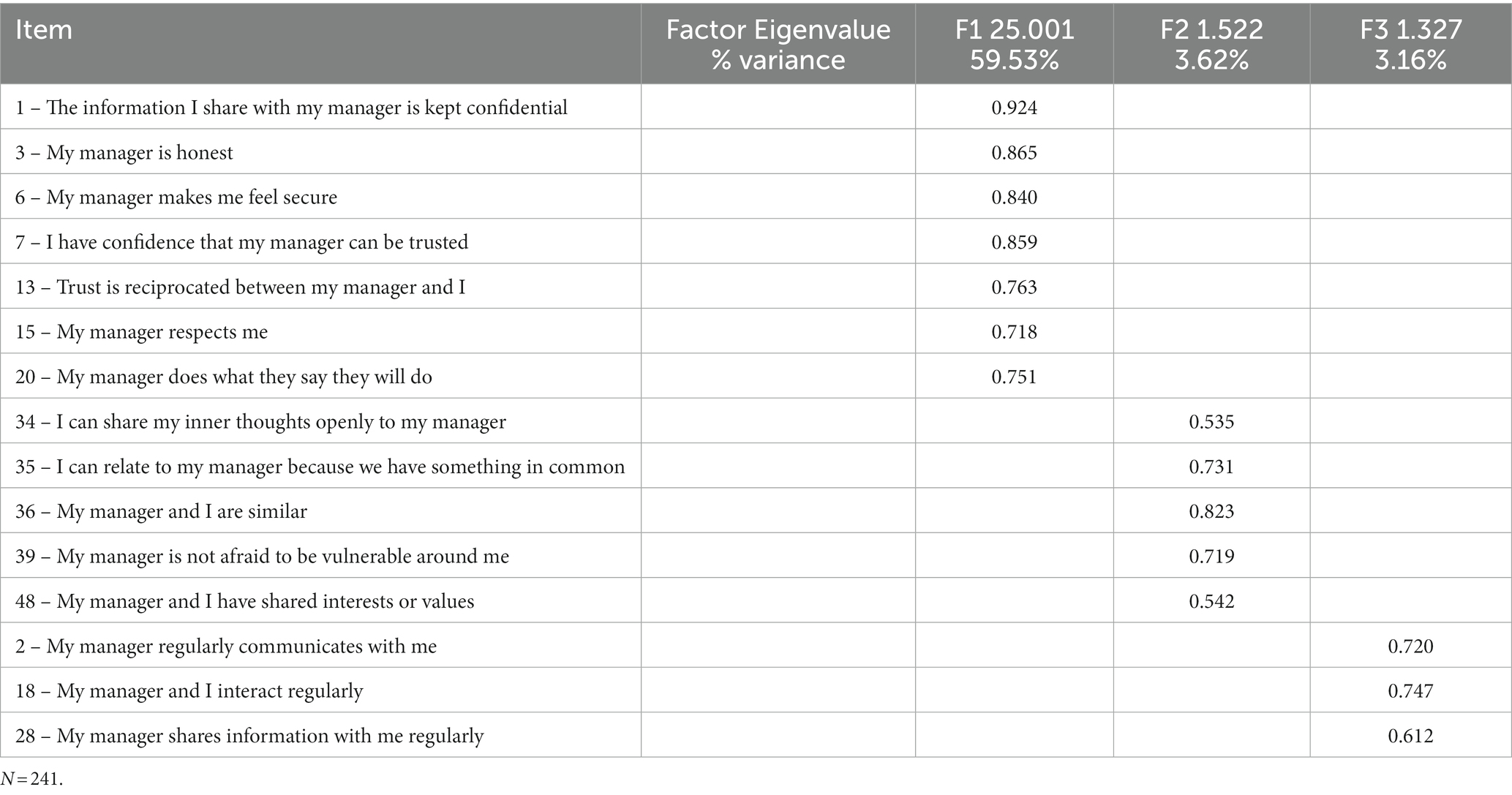

A principal components extraction was used to produce the initial unrotated solution for the scale. Three Eigenvalues greater than one accounted for 66.3% of the variance. The scree plot also indicated the presence of three factors. The pattern matrix, produced from the direct oblimin rotation, was explored to identify which items loaded onto the three factors (Kline, 2014). The same criteria as used in the Pilot Study determined the items that loaded onto a factor and the stability of each factor.

Table 9 shows the three distinguishable factors that emerged, as well as the items associated with each factor. These factors were labelled Authentic Behaviours (F1), Interpersonal Connection and Care (F2), and Consistent Communication (F3). The rationale for the names ascribed to each factor was based on the nature of the items that emerged and the discussion of behavioural and relational elements to multidimensional employee trust in leaders (Dirks and Ferrin, 2002; Schoorman et al., 2007; McEvily and Tortoriello, 2011). Reliability analysis showed strong Cronbach alphas (Authentic Behaviours α = 0.94; Interpersonal Connection and Care α = 0.87; Consistent Communication α = 0.83).

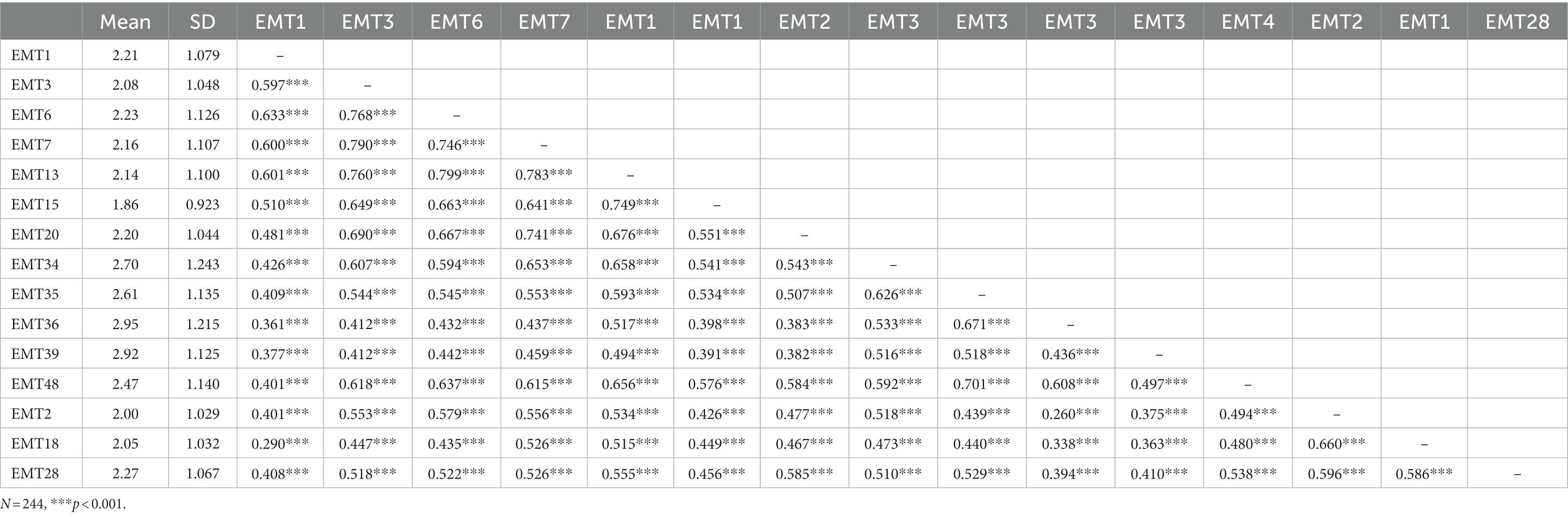

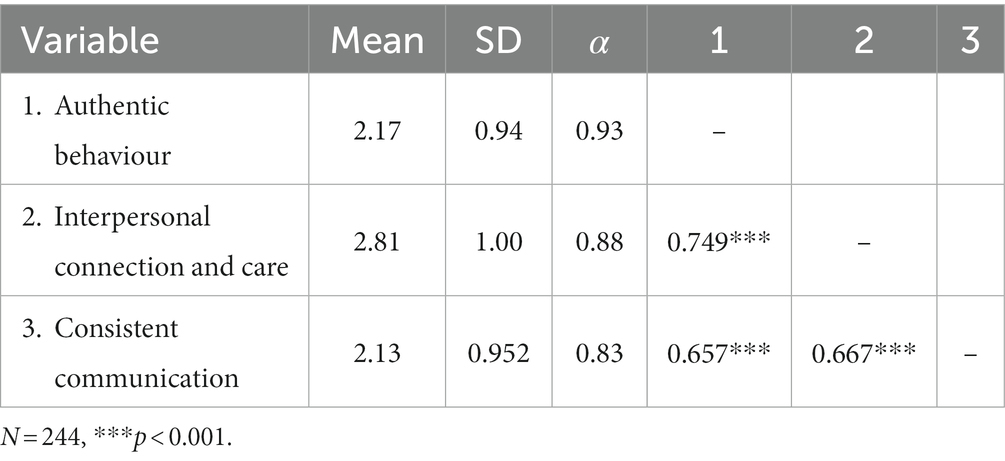

Confirmatory factor analysis

Finally, confirmatory factor analysis was conducted (N = 244) and all items’ correlation values were in an acceptable range (Tabachnick and Fidell, 2011) of ≥0.30 and ≤ 0.80. See Table 10 for the item descriptives and correlations and Table 11 for descriptive statistics, reliability scores and correlations of the factors.

To ensure comparability between the two subsamples of the original data set used for the exploratory factor analysis and the confirmatory factor analysis, a series of one-way ANOVAs and chi-square tests were conducted. The ANOVAs and chi-square tests were based on the factors identified in the exploratory factor analysis (see Table 9: F1, F2 and F3), Age and Gender. No significant differences between the sample used for the exploratory factor analysis and the sample used for the confirmatory factor analysis were found for any variable.

The results showed a good fit to the model (see Figure 1) based on a range of indices (see Table 2 for interpretation), χ2 (87, N = 244) = 171.56, p < 0.001; PCMIN/DF = 1.97; NFI = 0.95; CFI = 0.97; RFI = 0.94; TLI = 0.96; RMSEA = 0.06 and RMSEA confidence interval = 0.05–0.08; SRMR = 0.04. While the χ2 result was significant, the χ2 statistic is sensitive to sample size and is no longer relied upon as the main source for acceptance or rejection of a model (Schermelleh-Engel et al., 2003; Vandenberg, 2006). Rather, the χ2 significant result gives cause to refer to additional fit indices, which is why the full range of reported fit indices were explored. In addition, the model showed excellent fit after the initial item and factor analyses and no further model manipulation was required. Therefore, H2 was supported.
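Two of the reported indices can be checked by hand from the χ2 value, its degrees of freedom, and the sample size. The sketch below uses the common point-estimate formula RMSEA = sqrt(max(χ2 − df, 0) / (df (N − 1))); it is an illustrative calculation with hypothetical function names, not the AMOS output itself, and it does not reproduce the confidence interval or the other indices.

```python
import math

def chi_square_ratio(chi_square, df):
    """Ratio of the model chi-square to its degrees of freedom."""
    return chi_square / df

def rmsea(chi_square, df, n):
    """Point estimate of RMSEA from the model chi-square, df and sample size."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))

# Values reported for the Study 3 confirmatory factor analysis.
ratio = chi_square_ratio(171.56, 87)
estimate = rmsea(171.56, 87, 244)
print(round(ratio, 2), round(estimate, 2))  # prints: 1.97 0.06
```

Both values agree with the figures reported above, which illustrates why a significant χ2 can coexist with acceptable fit: the ratio and RMSEA scale the statistic by model complexity and sample size.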

Discussion

The aims of these studies were to examine the validity of McAllister’s (1995) Affective and Cognitive Trust scale in the contemporary work environment (H1; Study 1) and develop and preliminarily validate an alternate measure of employee multidimensional trust in leaders (EMT) appropriate for the blended workplace using appropriate scale design protocols (H2; Studies 2 and 3). Study 1’s examination of McAllister’s (1995) Affective and Cognitive Trust scale achieved the first aim. The item analysis showed that three of the items (3, 5, 11) had low inter-item correlations, well below what would be considered acceptable for a well-validated scale (DeVellis, 2016; ≤ 0.65). Many of the items (2, 4, 7, 9, 10) also suggested multicollinearity (inter-item total correlations > 0.80). These findings in total suggest that the scale has less than optimal internal consistency. Confirmatory factor analysis reported suboptimal fit of the data to the scale’s structural model. McAllister’s (1995) Affective and Cognitive Trust scale has been used often in trust research (McEvily and Tortoriello, 2011). Given the current findings, there is a case to suggest that McAllister’s (1995) scale may not be ideally suited to the contemporary work environment, or at least not for the type of post-COVID workplace studied here, and this may impact future research employing that scale.

This study’s findings give cause for reconsidering the measurement of multidimensional employee trust in leaders. The workplace has evolved substantially since 1995. Generational differences (Twenge, 2010), gender representation (Martin and Phillips, 2007), and the influence of globalisation (Alemanno and Cabedoche, 2011) are changes in the workplace identified in the literature. The work environment has also changed, and been improved, by technology (Andreeva and Kianto, 2012), flexible work practices (Spreitzer et al., 2017) and a focus on employee wellbeing (Guest, 2017). The alternative EMT scale reflects these changes in its reference to the importance of consistent communication, perhaps across distance and mediums, and to interpersonal caring relationships, which link to a focus on wellbeing. Scales measuring complex psychological constructs must be checked for relevancy in a fast-moving society where the work environment may look very different decade by decade.

Studies 2 and 3, a pilot and a preliminary validation of an alternative EMT scale, addressed the second aim. This 15-item scale, with the subscales of Authentic Behaviours, Interpersonal Connection and Care, and Consistent Communication, was shown to have strong psychometric properties. A main outcome of the current series of studies concerns the multidimensional structure of employee trust in leaders in the contemporary workplace. Consistent with McAllister (1995) and other trust researchers (Dirks and Ferrin, 2002; Schoorman et al., 2007; McEvily and Tortoriello, 2011; Fischer et al., 2020), Study 3 observed a relational dimension and two behavioural dimensions to multidimensional employee trust in leaders. The relational dimension of the alternative EMT scale contains items that describe interpersonal connection and care. This includes vulnerability, sameness, and shared experiences and values. The two behavioural dimensions of the alternative EMT scale describe (1) authentic behaviours, including maintaining confidentiality, honesty, respect, reliability, and reciprocity, and (2) key communication behaviours. The identification of three dimensions in the EMT scale in Study 3 suggests that theory about trust in organisations needs to advance with the evolving workplace context.

Communication has always been essential to organisational performance (Engelbrecht et al., 2017; Fulmer and Ostroff, 2017; Costa et al., 2018), but now it appears to be more important than ever. Although it could be categorised as a behaviour, communication appeared to have a substantial impact on employee trust in leaders in the contemporary work environment and it emerged as its own factor in the analyses. In McAllister’s (1995) Affective and Cognitive Trust scale the construct of ‘communication’ was embedded across the dimensions and their items. This study highlighted the essential element of communication as a unique factor to be measured separately from relationship quality and other trustworthy behaviours. This second emergent behavioural dimension was called “Consistent Communication”. These findings suggest communication is at the core of trust in the contemporary work environment, as employees working physically together and virtually with their teams and leaders is now commonplace.

Implications

These preliminary findings showed promise for an alternative EMT scale that is applicable to the blended work environment of the contemporary workplace. The behavioural and relational elements of multidimensional employee trust in leaders were upheld, and the findings also showed the significance of communication as its own dimension of trust in the contemporary workplace. This has implications for future trust measurement, as previous research has not accounted for three distinct dimensions. McAllister’s (1995) Affective and Cognitive Trust scale only contains two dimensions and does not measure communication as its own dimension. Previous literature on multidimensional employee trust in leaders that used McAllister’s (1995) scale must be interpreted with caution in light of this limitation, and future research should consider measuring all three organisational trust dimensions to be applicable to the contemporary work environment.

The following analyses are proposed to advance the findings of this preliminary analysis. First, discriminant validity analysis is warranted to explore EMT’s association with different constructs, such as job satisfaction or employee engagement. Divergent validity analysis can be used to ensure EMT does not correlate too strongly with measurements of a similar but distinct trait, such as Leader Member Exchange. Construct validity analysis is useful to assess whether a different sample’s EMT CFA results align with the findings of this study. This would also be helpful to replicate the assessment of McAllister’s (1995) Affective and Cognitive Trust scale CFA against the findings of this study with a new sample. Next, predictive validity analysis could be used to determine if EMT predicts an outcome, such as employee engagement or organisational citizenship behaviour. Finally, test–retest reliability analysis should be done to ensure stability in the measure.

Limitations

Although the findings of this study are promising, there are some limitations. Whilst data collection during the COVID-19 pandemic has been positioned as key to the outcomes of the research, it must also be acknowledged that the experience of forced remote working might have affected the findings in a way that is less generalisable should remote work become less prominent. Next, while gender was relatively balanced in Study 3, Study 1 had greater male representation and Study 2 had greater female representation. There was a larger sample of younger employees under the age of 34 than of those between 35 and retirement age (approximately 65 and older) in Study 3, while more participants aged 30–39 participated in Studies 1 and 2. Also, there were greater numbers of responses from the Healthcare and Social Assistance and the Professional, Scientific and Technical Services industries than from other industries. This potential homogeneity in the population may have influenced the findings in unknown ways and may make the findings more relevant to younger professional adults. Lastly, the use of two recruitment methods may have influenced the findings. Part of the sample was recruited via email, social media, and snowballing through the researcher’s and participants’ communication networks based in Australia, although the use of social media did help obtain responses from outside Australia. The second part of the sample was recruited through a research panel via the data collection agency Prolific. Those participants were recruited from a variety of countries. Work location was not asked of participants, so it is not possible to determine if place influenced outcomes. Nevertheless, the potential issue regarding recruitment strategy differences was partially dealt with by exploring comparability through a series of inferential tests, and no significant differences between the participant groups were observed.

Future research

This study has demonstrated the significance of communication as a trustworthy behaviour that requires focus in a blended environment of virtual and face-to-face work contexts. The findings regarding the relationships between the EMT scale dimensions and organisational outcomes indicate important cross-sectional associations and reinforce organisational trust’s multidimensionality. The EMT scale showed three unique dimensions of organisational trust: one relational dimension and two behavioural dimensions. Consistent communication emerged from other trustworthy behaviours as a significant behavioural element of organisational trust. The EMT scale appears to be reliable and valid, but more research is needed to continue to assess the psychometric performance of the scale in other samples.

Data availability statement

The datasets presented in this article are not readily available because the plain language statement used to invite participation explained that the data would only be accessed by the research team, and that the data would be securely stored online within Deakin University and then destroyed after 7 years. This was in the approved ethics application and aligns with the Australian Psychological Society’s Code of Ethics. Requests to access the datasets should be directed to the article’s first author, SF, fischerlsarah@gmail.com.

Ethics statement

The studies involving human participants were reviewed and approved by Deakin University Human Research Ethics Committee Project ID HEAG-H 179_2019. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author contributions

SF designed, conducted, and wrote up the studies into this manuscript. AW and SH supervised SF through this process as part of SF’s PhD. AW was the principal supervisor and contributed to conception and design of the study. SF performed the statistical analysis under the supervision of SH. SF wrote the first draft of the manuscript. All authors contributed to the article and approved the submitted version.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Agote, L., Aramburu, N., and Lines, R. (2016). Authentic leadership perception, trust in the leader, and followers’ emotions in organizational change processes. J. Appl. Behav. Sci. 52, 35–63. doi: 10.1177/0021886315617531

Alemanno, S. P., and Cabedoche, B. (2011). Suicide as the ultimate response to the effects of globalisation? France Télécom, psychosocial risks, and communicational implementation of the global workplace. Intercult. Commun. Stud. 20, 24–40.

Andreeva, T., and Kianto, A. (2012). Does knowledge management really matter? Linking knowledge management practices, competitiveness and economic performance. J. Knowl. Manag. 16, 617–636. doi: 10.1108/13673271211246185

Arruda, W. (2021) 6 ways COVID-19 will change the workplace forever [Internet]. Forbes. Available at: https://www.forbes.com/sites/williamarruda/2020/05/07/6-ways-covid-19-will-change-the-workplace-forever/?sh=70eb0b8f323e (Accessed April 8).

Atkinson, C., and Hall, L. (2011). Flexible working and happiness in the NHS. Empl. Relat. 33, 88–105. doi: 10.1108/01425451111096659

Babakus, E., and Mangold, W. G. (1992). Adapting the SERVQUAL scale to hospital services: an empirical investigation. Health Serv. Res. 26, 767–786.

Bartlett, M. S. (1954). A note on the multiplying factors for various χ2 approximations. J. R. Stat. Soc. B. Methodol. 16, 296–298. doi: 10.1111/j.2517-6161.1954.tb00174.x

Bodó, B. (2021). Mediated trust: a theoretical framework to address the trustworthiness of technological trust mediators. New Media Soc. 23, 2668–2690. doi: 10.1177/1461444820939922

Braun, V., and Clarke, V. (2020). One size fits all? What counts as quality practice in (reflexive) thematic analysis? Qual. Res. Psychol. 18, 328–352. doi: 10.1080/14780887.2020.1769238

Browne, M. W. (2001). An overview of analytic rotation in exploratory factor analysis. Multivar. Behav. Res. 36, 111–150. doi: 10.1207/S15327906MBR3601_05

Carrigan, M., Magrizos, S., Lazell, J., and Kostopoulos, I. (2020). Fostering sustainability through technology-mediated interactions: conviviality and reciprocity in the sharing economy. Inf. Technol. People 33, 919–943. doi: 10.1108/ITP-10-2018-0474

Chung, H., Birkett, H., Forbes, S., and Seo, H. (2019). Covid-19, flexible working, and implications for gender equality in the United Kingdom. Gend. Soc. 35, 218–232. doi: 10.1177/08912432211001304

Coronavirus: How the world of work may change forever [Internet] (2022) British Broadcasting Corporation. 19 January. Available at: https://www.bbc.com/worklife/article/20201023-coronavirus-how-will-the-pandemic-change-the-way-we-work

Costa, A. C., Fulmer, C. A., and Anderson, N. R. (2018). Trust in work teams: an integrative review, multilevel model, and future directions. J. Organ. Behav. 39, 169–184. doi: 10.1002/job.2213

Darko, E. M., Kleib, M., and Olson, J. (2022). Social media use for research participant recruitment: integrative literature review. J. Med. Internet Res. 24:e38015. doi: 10.2196/38015

DeVellis, R. (2003) Scale development: theory and applications, 2nd Edition. Thousand Oaks, CA: Sage Publications.

DeVellis, R. F. (2016) Scale development: theory and applications (Vol. 26). Thousand Oaks, CA: Sage Publications.

Devlin, S. J., Dong, H. K., and Brown, M. (2003). Selecting a scale for measuring quality. Mark. Res. 15, 13–16.

Dirks, K. T., and Ferrin, D. L. (2002). Trust in leadership: meta-analytic findings and implications for research and practice. J. Appl. Psychol. 87, 611–628. doi: 10.1037/0021-9010.87.4.611

Dugar, K. (2018) To renounce or not to renounce that is the question: an exploratory sequential scale development study of consumers’ perceptions of voluntary Deconsumption. AMA Winter Educators’ Conference Proceedings, Volume 29, pp. 31–34.

Engelbrecht, A. S., Heine, G., and Mahembe, B. (2017). Integrity, ethical leadership, trust and work engagement. Leadersh. Organ. Dev. J. 38, 368–379. doi: 10.1108/LODJ-11-2015-0237

Fischer, S., Hyder, S., and Walker, A. (2020). The effect of employee affective and cognitive trust in leadership on organisational citizenship behaviour and organisational commitment: meta-analytic findings and implications for trust research. Aust. J. Manag. 45, 662–679. doi: 10.1177/0312896219899450

Fischer, S., and Walker, A. (2022). A qualitative exploration of trust in the contemporary workplace. Aust. J. Psychol. 74:2095226. doi: 10.1080/00049530.2022.2095226

Fishman, J. A., and Galguera, T. (2003) Introduction to test construction in the social and behavioral sciences: a practical guide. Lanham, MD: Rowman & Littlefield.

Flanagan, J. C. (1954). The critical incident technique. Psychol. Bull. 51, 327–358. doi: 10.1037/h0061470

Fulmer, C. A., and Ostroff, C. (2017). Trust in direct leaders and top leaders: a trickle-up model. J. Appl. Psychol. 102, 648–657. doi: 10.1037/apl0000189

Galea, C., Houkes, I., and De Rijk, A. (2014). An insider’s point of view: how a system of flexible working hours helps employees to strike a proper balance between work and personal life. Int. J. Hum. Resour. Manag. 25, 1090–1111. doi: 10.1080/09585192.2013.816862

Graham, B. (2020). How COVID-19 will reshape the Australian workplace [Internet]. 9news.com.au. Available at: https://www.9news.com.au/national/coronavirus-work-from-home-office-hygiene-post-covid19/28bc5559-e4bb-4097-8616-95d44c2096b0 (Accessed May 20, 2020).

Grewal, R., Cote, J. A., and Baumgartner, H. (2004). Multicollinearity and measurement error in structural equation models: implications for theory testing. Mark. Sci. 23, 519–529. doi: 10.1287/mksc.1040.0070

Guest, D. E. (2017). Human resource management and employee well-being: towards a new analytic framework. Hum. Resour. Manag. J. 27, 22–38. doi: 10.1111/1748-8583.12139

Hayes, B. E. (1992) The measurement and meaning of work social support. Paper presented at the eight annual conference of the Society for Industrial and Organizational Psychology, San Francisco, CA.

Hertzog, M. A. (2008). Considerations in determining sample size for pilot studies. Res. Nurs. Health 31, 180–191. doi: 10.1002/nur.20247

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Model. Multidiscip. J. 6, 1–55. doi: 10.1080/10705519909540118

Jenkins, G. D., and Taber, T. D. (1997). A Monte Carlo study of factors affecting three indices of composite scale reliability. J. Appl. Psychol. 62, 392–398. doi: 10.1037/0021-9010.62.4.392

Johanson, G. A., and Brooks, G. P. (2010). Initial scale development: sample size for pilot studies. Educ. Psychol. Meas. 70, 394–400. doi: 10.1177/0013164409355692

Kaiser, H. F. (1970). A second generation little jiffy. Psychometrika 35, 401–415. doi: 10.1007/BF02291817

Kaiser, H. F. (1974). An index of factorial simplicity. Psychometrika 39, 31–36. doi: 10.1007/BF02291575

Kaushik, M., and Guleria, N. (2020). The impact of pandemic COVID-19 in workplace. Eur. J. Bus. Manag. 12, 9–18. doi: 10.7176/EJBM/12-15-02

Kenny, D. A., Kaniskan, B., and McCoach, D. B. (2015). The performance of RMSEA in models with small degrees of freedom. Sociol. Methods Res. 44, 486–507. doi: 10.1177/0049124114543236

Kline, R. B. (1999). Book review: psychometric theory. J. Psychoeduc. Assess. 17, 275–280. doi: 10.1177/073428299901700307

Kniffin, K. M., Narayanan, J., Anseel, F., Antonakis, J., Ashford, S. P., Bakker, A. B., et al. (2021). COVID-19 and the workplace: implications, issues, and insights for future research and action. Am. Psychol. 76, 63–77. doi: 10.1037/amp0000716

Leighton, K., Kardong-Edgren, S., Schneidereith, T., and Foisy-Doll, C. (2021). Using social media and snowball sampling as an alternative recruitment strategy for research. Clin. Simul. Nurs. 55, 37–42. doi: 10.1016/j.ecns.2021.03.006

Lissitz, R. W., and Green, S. B. (1975). Effect of the number of scale points on reliability: a Monte Carlo approach. J. Appl. Psychol. 60, 10–13. doi: 10.1037/h0076268

Martin, A. E., and Phillips, K. W. (2007). What “blindness” to gender differences helps women see and do: implications for confidence, agency, and action in male-dominated environments. Organ. Behav. Hum. Decis. Process. 142, 28–44. doi: 10.1016/j.obhdp.2017.07.004

Mayer, B. (2021) Why you may feel anxious about a post-pandemic return to ‘Normal’. Healthline. 29 July. Available at: https://www.healthline.com/health/mental-health/why-you-may-feel-anxious-about-a-post-pandemic-return-to-normal.

McAllister, D. J. (1995). Affect-and cognition-based trust as foundations for interpersonal cooperation in organizations. Acad. Manag. J. 38, 24–59. doi: 10.2307/256727

McEvily, B., and Tortoriello, M. (2011). Measuring trust in organisational research: review and recommendations. J. Trust Res. 1, 23–63. doi: 10.1080/21515581.2011.552424

McKelvie, S. J. (1978). Graphic rating scales—how many categories? Br. J. Psychol. 69, 185–202. doi: 10.1111/j.2044-8295.1978.tb01647.x

McLaren, J., and Wang, S. (2020) Effects of reduced workplace presence on COVID-19 deaths: an instrumental-variables approach. Cambridge, MA: National Bureau of Economic Research.

Morgado, F. F., Meireles, J. F., Neves, C. M., Amaral, A., and Ferreira, M. E. (2017). Scale development: ten main limitations and recommendations to improve future research practices. Psicologia: Reflexão e Crítica. 30, 3–20. doi: 10.1186/s41155-016-0057-1

O’Brien, K. (1993) Improving survey questionnaires through focus groups. In D. L. Morgan (Ed.), Successful focus groups: advancing the state of the art, 105–117, London: SAGE Publications, Inc.

Page, L., Boysen, S., and Arya, T. (2019). Creating a culture that thrives: fostering respect, trust, and psychological safety in the workplace. OD Practitioner. 51, 28–35. doi: 10.12968/bjom.2003.11.12.11861

Palan, S., and Schitter, C. (2018). Prolific. Ac—a subject pool for online experiments. J. Behav. Exp. Financ. 17, 22–27. doi: 10.1016/j.jbef.2017.12.004

Power, K. (2020). The COVID-19 pandemic has increased the care burden of women and families. Sustain. Sci. Pract. Policy 16, 67–73. doi: 10.1080/15487733.2020.1776561

Rolke, W., and Gongora, C. G. (2021). A chi-square goodness-of-fit test for continuous distributions against a known alternative. Comput. Stat. 36, 1885–1900. doi: 10.1007/s00180-020-00997-x

Schaubroeck, J., Lam, S. S., and Peng, A. C. (2011). Cognition-based and affect-based trust as mediators of leader behavior influences on team performance. J. Appl. Psychol. 96, 863–871. doi: 10.1037/a0022625

Schermelleh-Engel, K., Moosbrugger, H., and Müller, H. (2003). Evaluating the fit of structural equation models: tests of significance and descriptive goodness-of-fit measures. Methods Psychol. Res. Online 8, 23–74.

Schoorman, F. D., Mayer, R. C., and Davis, J. H. (2007). An integrative model of organisational trust: past, present, and future. Acad. Manag. Rev. 32, 344–354. doi: 10.5465/amr.2007.24348410

Sellbom, M., and Tellegen, A. (2019). Factor analysis in psychological assessment research: common pitfalls and recommendations. Psychol. Assess. 31, 1428–1441. doi: 10.1037/pas0000623

Sharieff, G. Q. (2021). Building organizational trust during a pandemic. NEJM Catalyst Innovations in Care Delivery. 2:19. doi: 10.1056/CAT.20.0599

Sheluga, D., Jacoby, J., and Major, B. (1978). Whether to agree-disagree or disagree-agree: the effects of anchor order on item response. ACR North Am. Adv.

Shine, R. An unexpected upside from the coronavirus pandemic may change the face of our workforce [Internet] (2020). Abc.net.au. 5 September. Available at: https://www.abc.net.au/news/2020-05-20/coronavirus-job-losses-hit-women-hard-amid-covid-19-work-changes/12260446.

Spector, P. E. (1992) Summated rating scale construction: an introduction. Thousand Oaks, CA: Sage Publications.

Spicer, A. (2020). Organizational culture and COVID-19. J. Manag. Stud. 57, 1737–1740. doi: 10.1111/joms.12625

Spreitzer, G. M., Cameron, L., and Garrett, L. (2017). Alternative work arrangements: two images of the new world of work. Annu. Rev. Organ. Psych. Organ. Behav. 4, 473–499. doi: 10.1146/annurev-orgpsych-032516-113332

Spurk, D., and Straub, C. (2020). Flexible employment relationships and careers in times of the COVID-19 pandemic. J. Vocat. Behav. 119:103435. doi: 10.1016/j.jvb.2020.103435

Steckler, A., McLeroy, K. R., Goodman, R. M., Bird, S. T., and McCormick, L. (1992). Toward integrating qualitative and quantitative methods: an introduction. Health Educ. Q. 19, 1–8. doi: 10.1177/109019819201900101

Tabachnick, B. G., and Fidell, L. S. (2007) Using multivariate statistics, 7th Ed. Boston, MA: Pearson.

Tabachnick, B. G., and Fidell, L. S. (2011) Using multivariate statistics. Needham Heights, MA: Allyn & Bacon.

Thompson, B. (2004) Exploratory and confirmatory factor analysis: understanding concepts and applications. Washington, DC: American Psychological Association.

Trougakos, J. P., Chawla, N., and McCarthy, J. M. (2020). Working in a pandemic: exploring the impact of COVID-19 health anxiety on work, family, and health outcomes. J. Appl. Psychol. 105, 1234–1245. doi: 10.1037/apl0000739

Twenge, J. M. (2010). A review of the empirical evidence on generational differences in work attitudes. J. Bus. Psychol. 25, 201–210. doi: 10.1007/s10869-010-9165-6

Vandenberg, R. J. (2006). Introduction: statistical and methodological myths and urban legends: where, pray tell, did they get this idea? Organ. Res. Methods 9, 194–201. doi: 10.1177/1094428105285506

Walker, A. (2010). The development and validation of a psychological contract of safety scale. J. Saf. Res. 41, 315–321. doi: 10.1016/j.jsr.2010.06.002

Xia, Y., and Yang, Y. (2019). RMSEA, CFI, and TLI in structural equation modeling with ordered categorical data: the story they tell depends on the estimation methods. Behav. Res. Methods 51, 409–428. doi: 10.3758/s13428-018-1055-2

Keywords: trust, scale development, multidimensional organisational trust, employee trust in leaders, psychometric validation

Citation: Fischer S, Walker A and Hyder S (2023) The development and validation of a multidimensional organisational trust measure. Front. Psychol. 14:1189946. doi: 10.3389/fpsyg.2023.1189946

Edited by:

Jolita Vveinhardt, Vytautas Magnus University, Lithuania

Reviewed by:

Deon Kleynhans, North-West University, South Africa

Lucia Zbihlejova, University of Prešov, Slovakia

Copyright © 2023 Fischer, Walker and Hyder. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sarah Fischer, fischerlsarah@gmail.com