- 1 Department of Psychiatry and Psychotherapy, Tübingen Center for Mental Health (TüCMH), University of Tübingen, Tübingen, Germany

- 2 Emotion Neuroimaging Lab, Max Planck Institute for Human Cognitive and Brain Sciences, Leipzig, Germany

- 3 International Max Planck Research School on Neuroscience of Communication: Function, Structure, and Plasticity, Leipzig, Germany

- 4 Graduate Training Centre of Neuroscience, University of Tübingen, Tübingen, Germany

- 5 Department of Psychiatry, Psychotherapy and Psychosomatics, RWTH Aachen University, Aachen, Germany

- 6 Institute of Neuroscience and Medicine, JARA-Institute Brain Structure Function Relationship (INM 10), Research Center Jülich, Jülich, Germany

- 7 Biopsychology and Cognitive Neuroscience, Faculty of Psychology and Sports Science, Bielefeld University, Bielefeld, Germany

- 8 Department of Human Perception, Cognition and Action, Max Planck Institute for Biological Cybernetics, Tübingen, Germany

- 9 LEAD Graduate School & Research Network, University of Tübingen, Tübingen, Germany

- 10 International Max Planck Research School for Cognitive and Systems Neuroscience, University of Tübingen, Tübingen, Germany

- 11 Tübingen Neuro Campus, University of Tübingen, Tübingen, Germany

The categorization of dominant facial features, such as sex, is a highly relevant function for social interaction. It has been found that attributes of the perceiver, such as their biological sex, influence the perception of sexually dimorphic facial features, with women showing higher recognition performance for female faces than men. However, evidence on how aspects closely related to biological sex influence face sex categorization is scarce. Using a previously validated set of sex-morphed facial images (morphed from male to female and vice versa), we aimed to investigate the influence of participants’ gender role identification and sexual orientation on face sex categorization, in addition to their biological sex. Image ratings and questionnaire data on gender role identification and sexual orientation were collected from 67 adults (34 females). Contrary to previous literature, biological sex per se was not significantly associated with image ratings. However, an influence of participants’ sexual attraction and gender role identity became apparent: participants identifying with masculine gender attributes and reporting attraction toward females perceived masculinized female faces as more male and feminized male faces as more female than participants identifying with feminine gender attributes and attraction toward males. Considering that we found these effects in a predominantly cisgender and heterosexual sample, investigating face sex perception in individuals identifying with a gender different from their assigned sex (i.e., transgender people) might provide further insights into how assigned sex and gender identity are related.

Introduction

As social beings, humans communicate with each other on an almost daily basis and research has found that even before communication is initiated, we are able to derive crucial information about our fellow human beings solely based on their faces – regarding characteristics such as age (Bruce and Young, 1986; Wiese et al., 2008; Rhodes, 2009; Carbon et al., 2013), identity (Haxby et al., 2000; Schyns et al., 2002; Calder and Young, 2005), sexual orientation (Rule et al., 2008, 2009; Tskhay et al., 2013) and biological sex (Bruce et al., 1993), and also regarding emotions (Ekman et al., 1987; Prkachin, 2003; Bombari et al., 2013), personality traits (Winston et al., 2002; Willis and Todorov, 2006) and attractiveness (Perrett et al., 1998; Little et al., 2011). Extraction of this socially relevant information might guide our behavior in interpersonal situations and determine the way we approach our counterparts. However, to be able to perform categorization tasks from faces, facial information first needs to be encoded. For sex categorization specifically, the encoding process, and thus accuracy in performing the task, seems to be strongly influenced by featural cues of the face itself, but is also influenced by several attributes related to the individual perceiving the face (Smith et al., 2007; Hillairet de Boisferon et al., 2019).

The literature consistently indicates that face processing relies on diagnostic information both from configurations of individual facial features (e.g., the distance between mouth and nose) and from the facial features themselves, such as the mouth and nose (McKelvie, 1976; Rakover, 2012). With regard to sex categorization, various studies provide evidence that featural information from the eye region is particularly relevant for performance in sex categorization tasks (Schyns et al., 2002; Armann and Bülthoff, 2009; Dupuis-Roy et al., 2009). Using Bubbles (Gosselin and Schyns, 2001), a frequently applied technique that partially masks face stimuli to isolate the facial information used to resolve a categorization task, Schyns et al. (2002) demonstrated in a sample of students that information from certain facial features is used selectively depending on the categorization task. They found that information contained in the eye region, especially in the left eye, is particularly important for sex categorization, whereas for identity judgements participants relied on information from both the eye and the mouth region. Similar results were obtained with morphed face stimuli by Armann and Bülthoff (2009), whose participants were presented with two face images simultaneously, varying along either an identity or a sex morphing continuum, and had to perform sex and identity judgements. Eye-tracking showed that in both discrimination tasks fixations were mainly directed toward the eyes, an effect that was even stronger in the sex discrimination task. Although accuracy rates reported by Dupuis-Roy et al. (2009) confirm the eye-eyebrow area as the most important region for sex categorization, they also suggest that observers rely on luminance cues from the eyes only when color information from the mouth region is not available.
Additional evidence that luminance carries diagnostic information for sex classification is provided by Russell (2009), who found that female faces exhibit greater luminance contrast between the eyes and lips and the surrounding skin than male faces. In addition, an androgynous face was perceived as more feminine when its luminance contrast was increased, and the application of makeup increased the luminance contrast of female faces even further. Apart from featural information, sex categorization also depends decisively on diagnostic information from configurations and structure within a face. For example, Roberts and Bruce (1988) showed that categorization was impaired most when the nose was obscured in whole faces; however, when the nose was presented in isolation, it conveyed the least information. The authors concluded that reliable information about the sex of a face is gained not from the nose alone but from configurations that are lost when the nose region is obscured. Taken together, diagnostic information regarding the sex of a face is conveyed by the local features, by the configurations between those features, and by structural cues (see also Bruce et al., 1993).

Besides the influence of featural and configurational information, sex categorization from faces has been related to certain attributes of the perceiver, such as their biological sex. A common finding is that girls and women show an own-sex bias, i.e., higher accuracy in recognizing female faces than male faces, whereas evidence for an equivalent own-sex bias in male perceivers is rather scarce (Cross et al., 1971; Lewin and Herlitz, 2002; Rehnman and Herlitz, 2006, 2007). A frequently discussed explanation for why this effect usually occurs only in girls and women is that both infant girls and infant boys have greater exposure to female caregivers in early childhood, leading to greater familiarity with female faces (Herlitz and Lovén, 2013). This female face advantage is further strengthened in girls because they generally attend to faces more than boys do (Connellan et al., 2000). In combination with gender-specific imitation, this results in a more prominent orientation toward other females as interaction partners (Lewin and Herlitz, 2002; Herlitz and Lovén, 2013) and thus in even more experience with female than with male faces. Another effect frequently reported in the face perception literature on sex categorization is the so-called male bias, i.e., the misclassification of female faces as male in sex categorization tasks. It has been observed in experimental setups in which adult participants categorize sex-unambiguous faces of neonates, children, or adults displayed with eyes facing forward (Kaminski et al., 2011; Daniel and Bentin, 2012; Hillairet de Boisferon et al., 2019), as well as in setups in which adults categorize sex-ambiguous (Armann and Bülthoff, 2012) or profile-view silhouette faces (Davidenko, 2007).
Researchers usually explain this finding by the absence of diagnostic external sex cues, especially hair: a face without hair may be perceived as bald, and baldness is more strongly associated with maleness, resulting in a male response bias (Davidenko, 2007). However, given that there are some general sex differences in how face stimuli are perceived and processed (Heisz et al., 2013; Proverbio, 2017), and the suggestion that male faces require less information to be correctly recognized (Wild et al., 2000; Cellerino et al., 2004), further research is needed to explore potential reasons for the female own-sex bias and the male response bias more extensively.

As yet, little is known about the potential influence of perceiver attributes that are closely related to biological sex, such as gender, sexual orientation, and gender roles. Gender, defined as an individual’s identification as male or female (VandenBos, 2015), has, along with perceivers’ age and ethnicity, been found not to significantly influence face gender categorization (Simpkins, 2011). One study examined sexual orientation in the context of attractiveness judgements and showed that sexual orientation indeed affected these judgements: homosexual men judged masculine faces as more attractive than heterosexual men did, whereas no significant group differences were found between homosexual and heterosexual female participants (Hou et al., 2019). Another study assessed the influence of sexual orientation on voice perception and showed that heterosexual men and women categorized voices of the opposite sex with higher accuracy, whereas homosexual individuals categorized same-sex voices with higher accuracy (Smith et al., 2019). Given the paucity of studies on the relationship between face sex categorization and perceiver attributes closely related to biological sex, the aim of the present study was to investigate how biological sex, gender, sexual orientation, and gender role influence face sex categorization of sex-ambiguous face stimuli. Regarding biological sex, we hypothesized that female participants would recognize original female faces and male faces morphed to female with higher accuracy than original male faces and female faces morphed to male, thereby replicating the own-sex bias. Furthermore, we expected to replicate the previously reported male bias, defined as a tendency to misclassify female faces as male.
Our hypotheses regarding gender, sexual orientation, and gender roles were nondirectional because, to our knowledge, this is the first study assessing how the perceiver’s gender, gender roles, and sexual orientation might influence sex categorization of sex-ambiguous face stimuli.

Materials and Methods

Sample Description

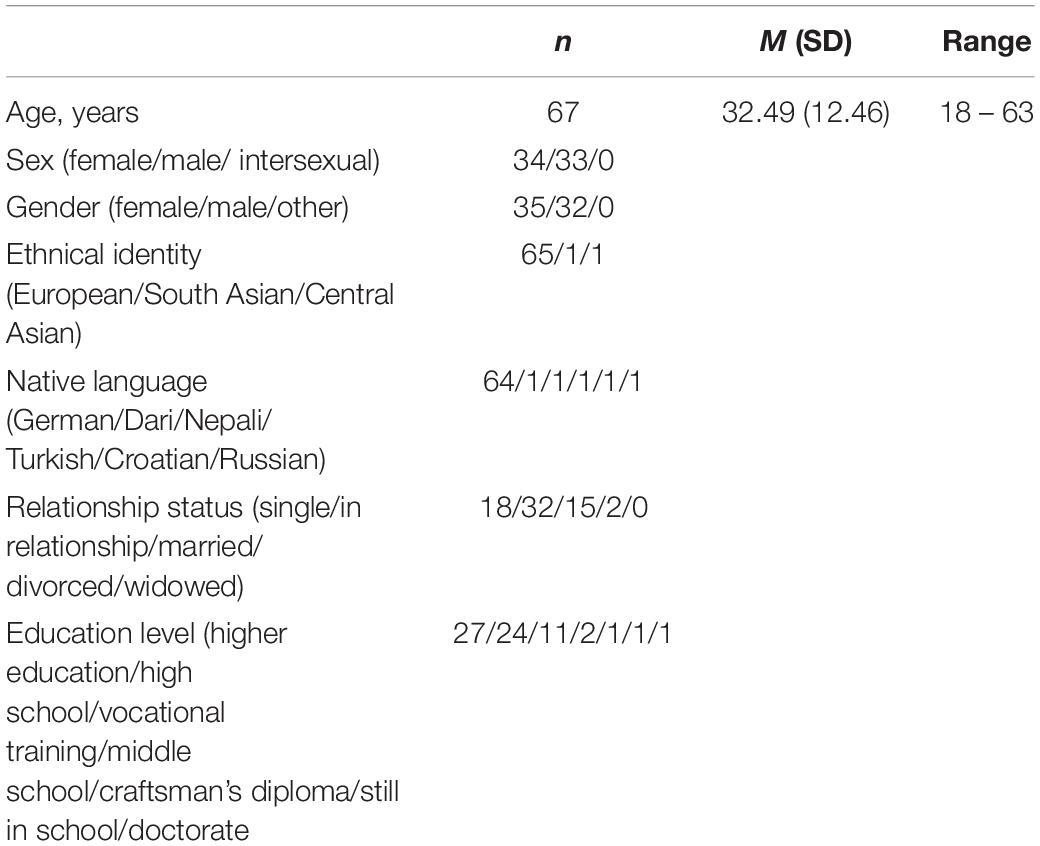

A total of 86 individuals (35 female, 33 male, and 18 not indicating their sex) participated in the study. Data of 19 participants were excluded from analysis because they either did not provide complete sociodemographic and questionnaire data (n = 1) or rated fewer than the minimum of 150 images specified prior to the experiment (n = 18). The final sample consisted of the remaining 67 participants aged 18–63 years (M = 32.49 years, SD = 12.46 years), of whom 34 were female and 33 male. Of these 67 participants, 55 rated all 300 images and 12 rated at least 255 images (85% of images). Female and male participants did not differ significantly in age [female: M = 29.97 years, SD = 10.54 years; male: M = 35.09 years, SD = 13.53 years; t(65) = −1.70, p = 0.093]. All participants explicitly identified their gender as either “male” or “female”; one participant who reported being biologically male indicated her gender as female. All participants had normal vision or used visual aids during the experiment, and they provided written informed consent prior to participation. Participation was voluntary and no reimbursement was offered. All participants were asked to take part individually on their own computers or laptops. The study protocol was approved by the ethical review board of the Max Planck Society (2016_02). Detailed information on sample characteristics is provided in Table 1.

Procedure

The experiment was conducted online using the software SoSci Survey (Leiner, 2020), and the questionnaire was available in German only via www.soscisurvey.de. Participants received the link to the experiment by email and, after reading written information about the study, provided their written informed consent. Each participant then provided sociodemographic information regarding sex, gender, and gender roles before the actual experiment began. Each participant underwent 300 trials; in each trial, one face stimulus, randomly selected from the pool of 300 faces, and a Visual Analog Scale (VAS) were presented simultaneously in the center of the display. For each image, participants indicated how male or female they perceived it to be by moving a slider along the VAS, whose endpoints were labeled male and female. Stimuli were drawn without replacement, so each face was presented exactly once to each participant. Although participants were instructed to respond spontaneously, the experiment was self-paced and no reaction times were recorded.

Facial Stimuli

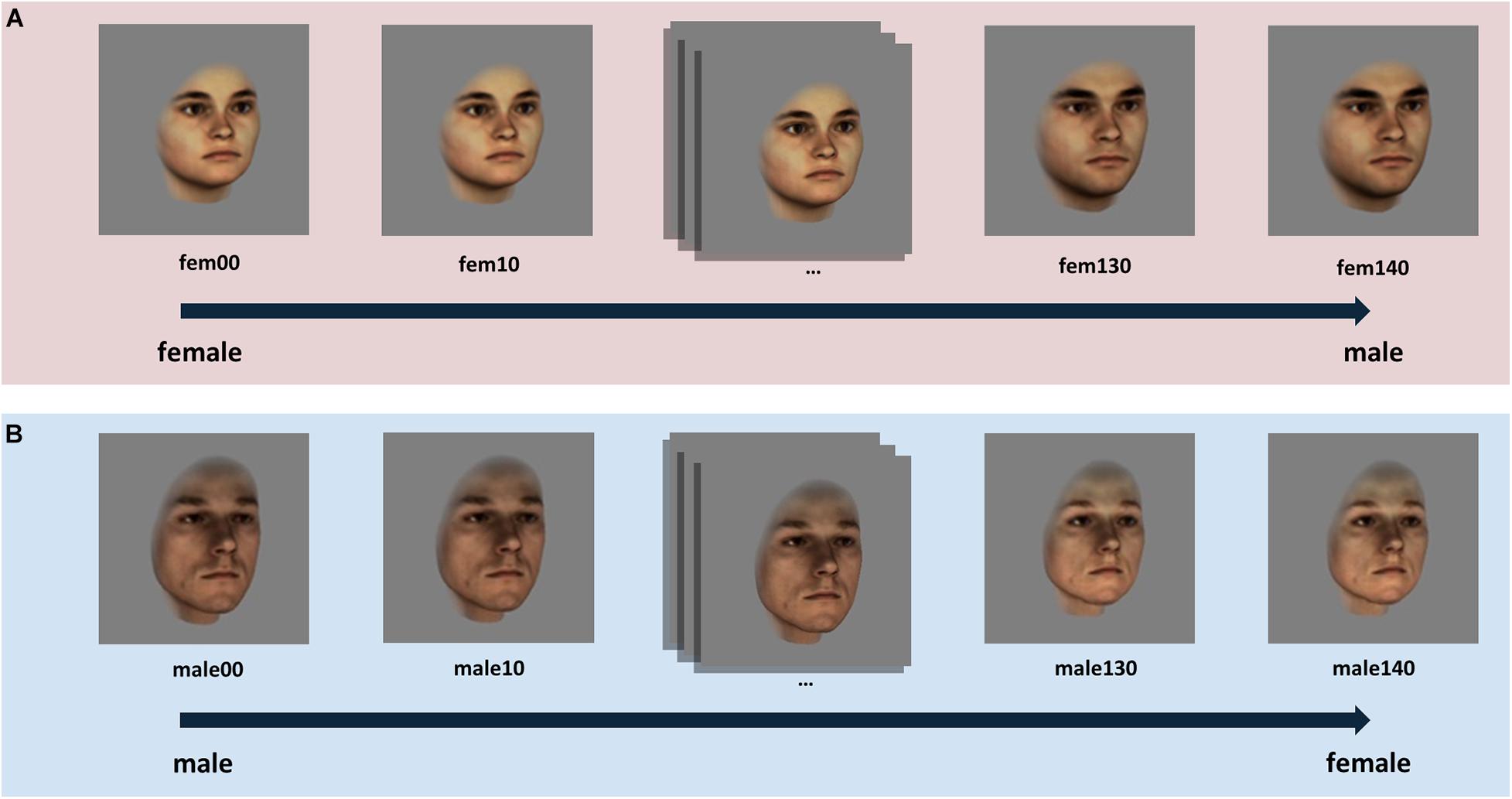

To investigate how female and male participants perceive sex-ambiguous faces, we used a previously validated set of sex-morphed facial images (morphed from male to female and vice versa), created from 3-dimensional laser scans of real heads from the database of the Max Planck Institute for Biological Cybernetics in Tübingen (Blanz and Vetter, 1999; Armann and Bülthoff, 2012). The stimulus set consisted of 10 original female and 10 original male face identities, each morphed toward the opposite-sex endpoint in 14 morphing steps. In addition, the original female faces were morphed toward a same-sex “superfemale” version, because a previous rating experiment had shown that the original female faces were not perceived as female to the same degree as the original male faces were perceived as male (see also Armann and Bülthoff, 2012). These super-feminized faces were created only for the original female faces, in order to obtain similar sex ratings for male and female faces, both for the original images and for the opposite-sex versions. In total, 300 faces were presented, with the hair cropped at the hairline and free of makeup, glasses, and facial hair. Faces were turned to the right by 20° and presented in 24-bit color on a gray background. Each image measured 330 × 330 pixels. See Figure 1 for an illustration of the face stimuli used.

Figure 1. Illustration of the morphing procedure for (A) original female face identities and (B) original male face identities, exemplified by one female and one male identity. The image captioned “fem00” represents a “superfemale” image, created by feminizing the original face scan (fem40) of the corresponding female identity. Both the original female face (fem40) and the original male face (male00) were morphed in 10% intervals along the sex morph continua, which were created based on a sex vector, up to the opposite-sex endpoint face.
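The stimulus count can be checked with a short enumeration. The sketch below is not the authors' code; it assumes that every identity contributes 15 images at morphing levels 0 to 140 in steps of 10 (the range shown in Figures 2 and 3), with female identities running from the superfemale image (fem00) through the original scan (fem40) and male identities starting at the original scan (male00). The identity codes F01 to F10 and M01 to M10 and the function name are hypothetical.

```python
# Hypothetical enumeration of the stimulus set (not the original database IDs).
# Assumption: 15 morphing levels per identity, 0 to 140 in 10% steps,
# labelled like the Figure 1 examples ("fem00", "fem40", "male00").

FEMALE_IDS = [f"F{i:02d}" for i in range(1, 11)]  # 10 original female identities
MALE_IDS = [f"M{i:02d}" for i in range(1, 11)]    # 10 original male identities
MORPH_LEVELS = list(range(0, 150, 10))            # 0, 10, ..., 140 -> 15 levels


def build_stimulus_set():
    """Return a list of (identity, image_sex, label) tuples."""
    stimuli = []
    for identity in FEMALE_IDS:
        for level in MORPH_LEVELS:
            # fem00 = superfemale, fem40 = original scan, higher = masculinized
            stimuli.append((identity, "female", f"fem{level:02d}"))
    for identity in MALE_IDS:
        for level in MORPH_LEVELS:
            # male00 = original scan, higher levels = feminized
            stimuli.append((identity, "male", f"male{level:02d}"))
    return stimuli


stimuli = build_stimulus_set()
print(len(stimuli))  # 300 = 20 identities x 15 levels
```

Under these assumptions, the 300 presented faces follow directly from 20 identities with 15 images each.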

Sex Categorization of Face Stimuli

We used a VAS to assess the extent to which each presented face stimulus was perceived as male or female. In each trial, a VAS with the left endpoint labeled male and the right endpoint labeled female was displayed underneath the centrally presented face image, and participants were asked to indicate the degree of perceived maleness or femaleness by moving a slider, initially located at the center, with the cursor. For analyses, each response on the sliding scale was translated into a rating ranging from 0 (male) to 100 (female). Two cut-off values were set a priori: ratings from 0 to 20 corresponded to “unambiguously male” and ratings from 80 to 100 to “unambiguously female.” A rating of 50 was defined as “sex-ambiguous.” We set these cut-offs at more extreme values than those used in a previous study (unambiguous maleness and femaleness at 28.57 and 71.43, respectively; Armann and Bülthoff, 2012) to allow for greater differentiation of the ratings.
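The mapping from slider responses to ratings and the a priori cut-offs can be expressed as two small functions. This is a sketch under stated assumptions, not the SoSci Survey implementation: the slider coordinates are hypothetical screen positions, the linear mapping is assumed, and the labels for intermediate ratings (other than exactly 50) are hypothetical because the text does not name them. The cut-offs are parameters so that the earlier values of 28.57 and 71.43 can be plugged in for comparison.

```python
def slider_to_rating(position, left_end, right_end):
    """Map a slider position linearly onto the 0 (male) to 100 (female) scale.

    Assumption: position, left_end and right_end are hypothetical screen
    coordinates of the slider handle and of the two VAS endpoints.
    """
    if right_end <= left_end:
        raise ValueError("right_end must lie to the right of left_end")
    rating = 100.0 * (position - left_end) / (right_end - left_end)
    return min(max(rating, 0.0), 100.0)  # clamp to the scale range


def classify_rating(rating, male_cutoff=20.0, female_cutoff=80.0):
    """Label a rating using the a priori cut-offs (0-20 male, 80-100 female, 50 ambiguous)."""
    if not 0.0 <= rating <= 100.0:
        raise ValueError("rating must lie between 0 and 100")
    if rating <= male_cutoff:
        return "unambiguously male"
    if rating >= female_cutoff:
        return "unambiguously female"
    if rating == 50.0:
        return "sex-ambiguous"
    # Intermediate ranges are not named in the text; these labels are hypothetical.
    return "male section" if rating < 50.0 else "female section"


rating = slider_to_rating(150, 100, 500)
print(rating, classify_rating(rating))  # 12.5 unambiguously male
```

For example, a rating of 25 would count as unambiguously male under the earlier cut-offs (`classify_rating(25, 28.57, 71.43)`) but only as falling in the male section under the stricter cut-offs used here.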

Questionnaires

Sociodemographic information was obtained from the participants using a self-compiled questionnaire. In addition, subjects completed two self-report inventories: The Gender-Related Attributes Survey (GERAS; Gruber et al., 2019) and the Gender Inclusive Scale (Galupo et al., 2017, 2018).

Gender-Related Attributes Survey

The GERAS is a self-report measure that assesses gender role identity based on positive and negative attributes typically associated with the female or male gender in middle European cultures (Gruber et al., 2019; Pletzer et al., 2019). The inventory consists of 50 items in three subscales: Personality (20 items), Cognition (14 items), and Interests & Activities (16 items). In the subscale Personality, participants indicate how often certain traits apply to them on a 7-point Likert scale ranging from 1 (never) to 7 (always). In the subscale Cognition, they rate how well they are able to solve certain problems, and in the subscale Interests & Activities, they indicate how much they like certain activities. Responses to the latter two subscales are given on a 7-point Likert scale ranging from 1 (not at all) to 7 (very). In each subscale, half of the items describe feminine attributes and the other half masculine attributes. Masculinity and femininity scores can be calculated either for each subscale individually, by averaging the ratings of the masculine and feminine items, or for the inventory as a whole, by averaging the masculinity and femininity scores of the three subscales. In this study, masculinity and femininity scores were analyzed both at the subscale level and overall. Due to technical issues, the subscale Cognition comprised 13 instead of 14 items in this study (one masculinity item was missing), and the subscale Interests & Activities comprised 13 instead of 16 items (two masculinity items and one femininity item were missing). The reduced number of items was accounted for in the calculation of scores.
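The scoring rule, together with the dichotomization into “feminine” and “masculine” participants described under Data Analysis, can be sketched as follows. The sketch assumes that accounting for the reduced number of items means averaging over the items actually administered (7 feminine and 6 masculine items for Cognition and for Interests & Activities); the item values are invented for illustration, and assigning ties to “masculine” is an assumption because the text does not say how ties were handled.

```python
from statistics import mean


def subscale_scores(responses):
    """Average the administered feminine and masculine items of one subscale.

    responses maps "fem" and "masc" to lists of 1-7 ratings; missing items are
    simply absent, so each mean uses however many items were administered.
    """
    return mean(responses["fem"]), mean(responses["masc"])


def geras_scores(subscales):
    """Return per-subscale scores and overall scores (mean of the subscale means)."""
    per_scale = {name: subscale_scores(r) for name, r in subscales.items()}
    overall_fem = mean(f for f, _ in per_scale.values())
    overall_masc = mean(m for _, m in per_scale.values())
    return per_scale, overall_fem, overall_masc


def gender_role_group(overall_fem, overall_masc):
    # Higher overall femininity -> "feminine"; ties go to "masculine" (assumption).
    return "feminine" if overall_fem > overall_masc else "masculine"


# Invented responses of one participant, with the item counts used in this study.
example = {
    "Personality": {"fem": [5] * 10, "masc": [4] * 10},
    "Cognition": {"fem": [6] * 7, "masc": [3] * 6},               # 13 of 14 items
    "Interests & Activities": {"fem": [4] * 7, "masc": [5] * 6},  # 13 of 16 items
}

per_scale, fem, masc = geras_scores(example)
print(fem, masc, gender_role_group(fem, masc))  # 5 4 feminine
```

Averaging subscale means (rather than pooling all items) keeps each subscale's weight equal in the overall score even though the subscales now contain different numbers of items.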

Gender Inclusive Scale

The Gender Inclusive Scale is a self-report inventory assessing sexual orientation by taking both sex and gender aspects into account (Galupo et al., 2017, 2018). The scale consists of six items: two address the dimension of sex (attraction to females and to males), whereas the other four address dimensions of gender (attraction toward masculine, feminine, androgynous, and gender nonconforming individuals). All items are rated on a 7-point Likert scale ranging from 1 (not at all) to 7 (very). Each item is analyzed individually; no overall score is calculated for this inventory.

Data Analysis

Analyses were conducted using R version 3.5.1 (R Core Team, 2018). Visual inspection suggested that ratings and questionnaire data were normally distributed; therefore, all analyses were conducted using parametric statistical methods with two-tailed significance at p < 0.05. Mean differences in femininity and masculinity traits and in sexual orientation between female and male participants were analyzed using t-tests for unpaired samples. To compare the proportions of ratings in the margin areas (0–20 and 80–100), we used a chi-square goodness-of-fit test. To investigate the potential impact of various perceiver attributes, we used a linear mixed-effects model approach provided by the R package lme4 version 1.1.23 (Bates et al., 2015). First, to check whether model assumptions were met, we inspected the residual distribution. As this indicated normally distributed residuals, we proceeded with the mixed-model approach. We set up linear mixed-effects models with the rating of female- and maleness of the images as the outcome variable and the fixed effects of sex of image, morphing level, and their interaction (basic model). The responses on the GERAS and the Gender Inclusive Scale were dichotomized. Participants reporting higher overall identification with feminine/masculine attributes were classified as “feminine”/“masculine.” Given the similar distribution of responses to the items “attraction to females” and “attraction to feminine individuals” and to the items “attraction to males” and “attraction to masculine individuals,” responses to these items were collapsed into the categories “attraction to females” and “attraction to males,” respectively. We therefore use the term sexual attraction instead of sexual orientation when referring to the results of the Gender Inclusive Scale.
To account for the repeated measurements and random variability across participants and stimuli, random intercepts for participants and for the male and female images were included. The variable gender was not analyzed separately because only one participant reported identifying with a gender different from their biological sex. We conducted a sensitivity power analysis using G∗Power version 3.1.9.7 (Faul et al., 2007) to calculate the critical population effect size for detecting interaction effects with a type II error probability of 20% (i.e., a power of 80%). Our sample (N = 67) was sufficiently powered to detect an effect of f2 = 0.255, which lies between the conventional benchmarks for medium (f2 = 0.15) and large (f2 = 0.35) effects.

Results

Gender Role Identity and Sexual Attraction

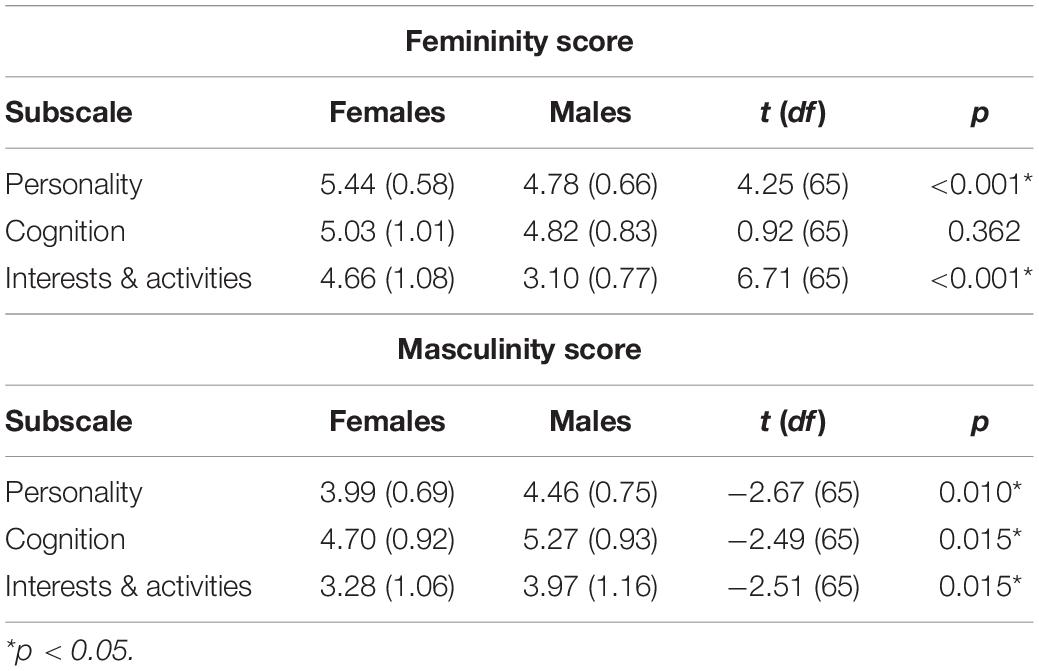

We found significant differences between male and female participants with regard to gender role identity. On average, female participants indicated significantly higher overall femininity scores than male participants [females: M = 5.05, SD = 0.58; males: M = 4.24, SD = 0.54; and t(65) = 5.90, p < 0.001] and male participants obtained significantly higher overall masculinity scores than female participants [males: M = 4.57, SD = 0.66; females: M = 3.99, SD = 0.68; and t(65) = −3.55, p < 0.001]. Furthermore, as can be seen in Table 2, female participants obtained significantly higher femininity scores on subscales Personality (p < 0.001) and Interests & Activities (p < 0.001) compared to male participants, whereas male participants obtained significantly higher masculinity scores on all three subscales compared to females (all ps < 0.05).
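The reported t values can be checked from the summary statistics. The sketch assumes Student's pooled-variance t-test, which is consistent with the reported 65 degrees of freedom (34 + 33 − 2) but is not stated explicitly in the text. Because the published means and SDs are rounded, the recomputed values differ slightly from the reported ones.

```python
from math import sqrt


def pooled_t(m1, sd1, n1, m2, sd2, n2):
    """Student's independent-samples t from summary statistics (equal variances assumed)."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / df
    se = sqrt(pooled_var * (1 / n1 + 1 / n2))  # standard error of the mean difference
    return (m1 - m2) / se, df


# Overall GERAS femininity: females (n = 34) vs. males (n = 33)
t, df = pooled_t(5.05, 0.58, 34, 4.24, 0.54, 33)
print(f"t({df}) = {t:.2f}")  # t(65) = 5.91 (reported: 5.90)
```

The same function applied to the masculinity scores (males 4.57, SD 0.66; females 3.99, SD 0.68) gives an absolute t of about 3.54, close to the reported 3.55; the sign depends only on which group is entered first.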

Table 2. Mean femininity and masculinity scores (and standard deviations) for female and male participants for the Gender-Related Attributes Survey (GERAS) subscales.

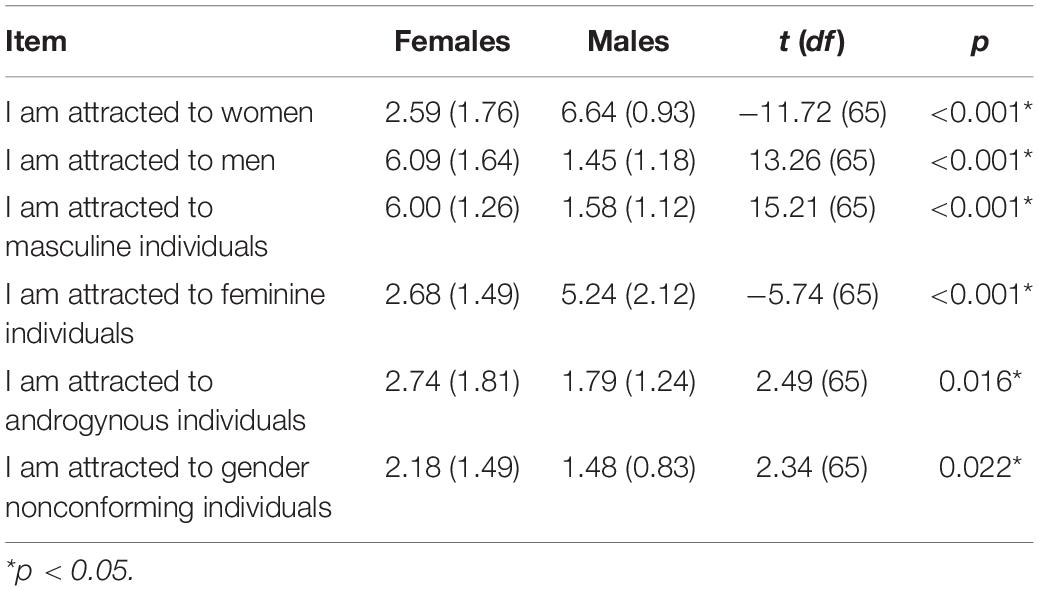

Results from the Gender Inclusive Scale suggest that the majority of participants were heterosexual. Male participants reported greater attraction toward women and feminine individuals than female participants did. Conversely, female participants reported greater attraction toward men and masculine individuals than male participants did. Female participants also reported greater attraction toward androgynous and gender nonconforming individuals than male participants. All of these differences between female and male participants were statistically significant (all ps ≤ 0.02). For statistical details, see Table 3.

Table 3. Mean differences (and standard deviations) in female and male participants regarding items of the Gender Inclusive Scale.

Image Ratings

Overall, across all identities and participants, images received a mean rating of 39.07, indicating a general bias toward the male end of the rating continuum. Thirty-three percent of ratings fell into the female section (i.e., above 50 on the rating scale) and 67% into the male section (i.e., below 50). Regarding the margins, a significantly greater proportion of ratings fell between 0 and 20 inclusive than between 80 and 100 inclusive, χ2(1) = 1677.70, p < 0.001. Original male images were rated more male on average (M = 17.30, SD = 17.77) than original female faces were rated female (M = 57.38, SD = 29.46), and even the mean rating of the superfemale images did not reach the “unambiguously female” section (M = 71.86, SD = 25.48). Thus, the finding that it is generally harder to classify original female faces correctly as female than to classify original male faces correctly as male was replicated.
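The margin comparison can be illustrated with a chi-square goodness-of-fit test for two categories. The sketch assumes equal expected counts under the null hypothesis (the text does not state the expected proportions) and uses invented counts, not the study data. With one degree of freedom the chi-square variable is a squared standard normal, so the p-value can be computed from the complementary error function without a statistics library.

```python
from math import erfc, sqrt


def chisq_gof_two_bins(n_low, n_high):
    """Chi-square goodness-of-fit test of two counts against equal expected counts (df = 1)."""
    total = n_low + n_high
    if total == 0:
        raise ValueError("at least one observation is required")
    expected = total / 2
    chi2 = ((n_low - expected) ** 2 + (n_high - expected) ** 2) / expected
    # For df = 1, P(chi2_1 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    p = erfc(sqrt(chi2 / 2))
    return chi2, p


# Hypothetical counts of ratings in 0-20 and in 80-100 (not the study data)
chi2, p = chisq_gof_two_bins(60, 40)
print(f"chi2(1) = {chi2:.2f}, p = {p:.4f}")  # chi2(1) = 4.00, p = 0.0455
```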

Influencing Factors on Ratings

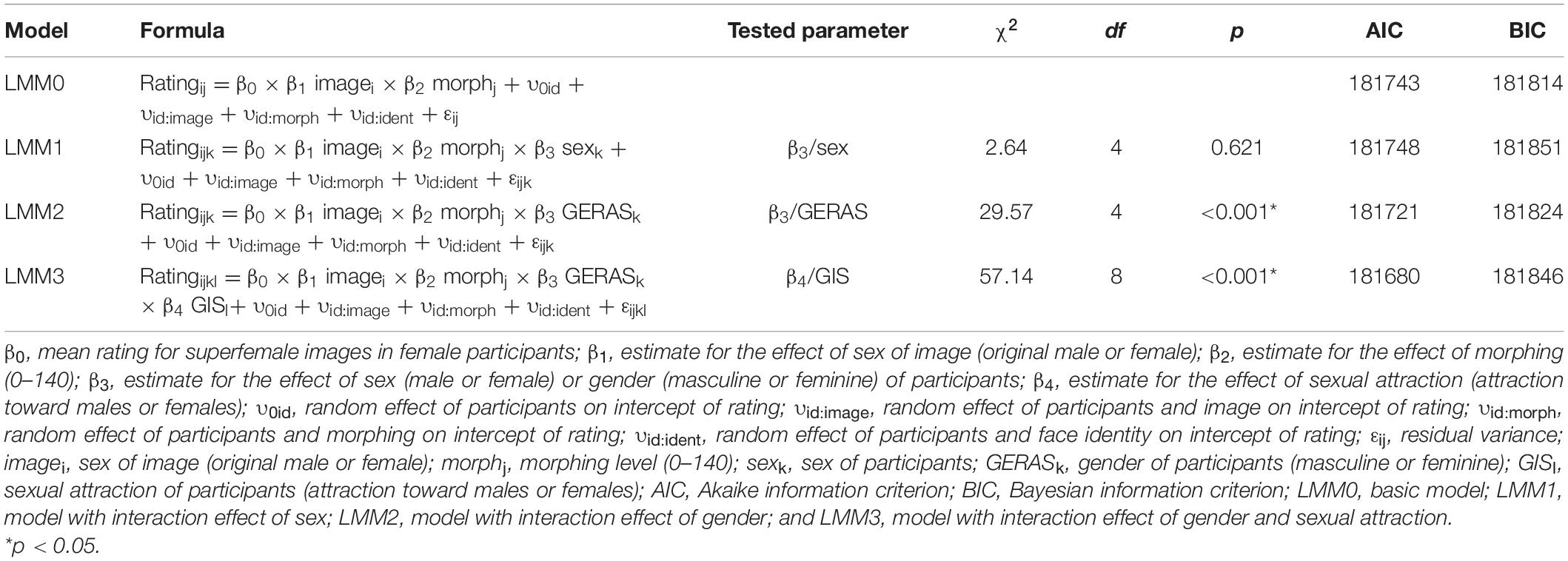

Image Characteristics

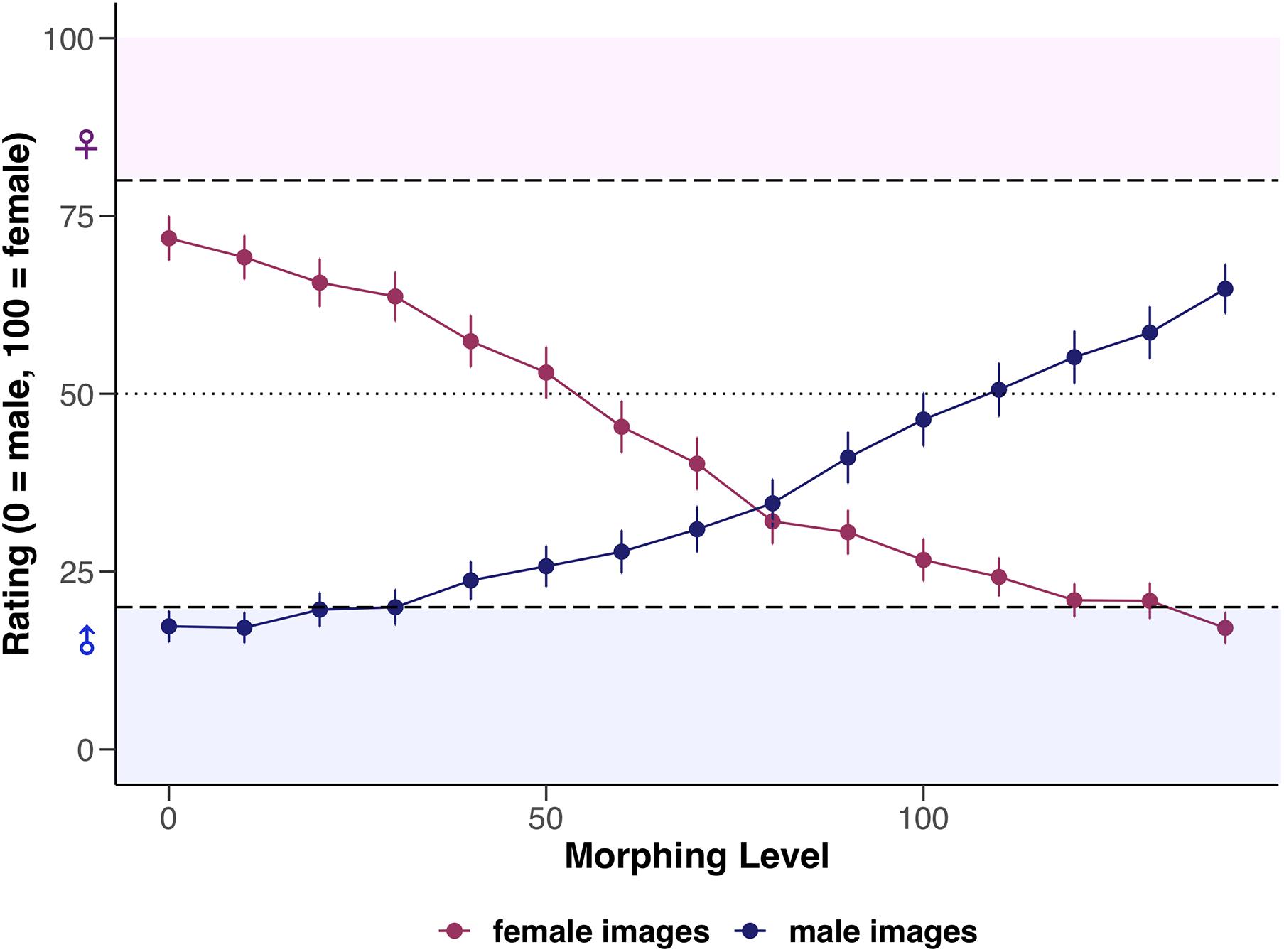

The basic model (LMM0, see Table 4) revealed that original male images were rated significantly lower, by 61.77 units on the rating scale, than the feminized female faces (i.e., superfemale images). Furthermore, ratings of superfemale images decreased significantly by 4.30 units (i.e., the images were rated more male) with each additional 10% morphing interval, whereas ratings of original male images increased significantly by 3.52 units with each 10% increase in morphing. Statistical details of the basic model are reported in the Supplementary Material. For an illustration of the interaction between the factors sex of image and morphing level, see Figure 2.

Figure 2. Mean ratings of female and male images depending on morphing level (0–140), visualized separately for female and male participants. Error bars represent standard error (SE). Dashed horizontal lines represent the a priori defined cut-off values (ratings between 0 and 20 = unambiguously male, ratings between 80 and 100 = unambiguously female, and ratings of 50 = sex-ambiguous).

Perceiver Attributes: Sex, Gender Role, and Sexual Attraction

To investigate the potential influence of perceiver attributes on image ratings, the following factors were successively added to the fixed interaction as additional predictor variables: sex, gender role (femininity or masculinity as indicated on the GERAS), and sexual attraction (attraction toward females or males as indicated on the Gender Inclusive Scale). First, we tested the basic model against two models each containing one additional interaction effect (LMM1 and LMM2, Table 4). We then tested the model including the interaction effect of gender role against a model with an additional interaction effect of sexual attraction (LMM3, Table 4). Models were compared via likelihood ratio tests.
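The likelihood ratio tests used for model comparison can be sketched as below. The statistic is twice the difference in log-likelihood between the fuller and the reduced model, referred to a chi-square distribution with degrees of freedom equal to the number of added parameters. The sketch assumes both models were fitted by maximum likelihood rather than REML (lme4's `anova()` refits REML models with ML by default); the log-likelihood values and the 4 added parameters in the example are hypothetical, e.g., a two-level perceiver attribute plus its three interactions with sex of image and morphing level.

```python
from math import erfc, exp, lgamma, log, sqrt


def chi2_sf(x, df):
    """Upper-tail probability of a chi-square distribution with integer df >= 1.

    Uses Q(1) = erfc(sqrt(x/2)), Q(2) = exp(-x/2) and the recurrence
    Q(k + 2) = Q(k) + (x/2)**(k/2) * exp(-x/2) / Gamma(k/2 + 1).
    """
    if df < 1 or int(df) != df:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    k = 1 if df % 2 else 2
    q = erfc(sqrt(x / 2)) if k == 1 else exp(-x / 2)
    while k < df:
        # Term computed on the log scale to avoid overflow for large x or df.
        q += exp((k / 2) * log(x / 2) - x / 2 - lgamma(k / 2 + 1))
        k += 2
    return q


def likelihood_ratio_test(loglik_reduced, loglik_full, df_diff):
    """Return the LR statistic and its chi-square p-value for nested ML fits."""
    stat = 2.0 * (loglik_full - loglik_reduced)
    return stat, chi2_sf(max(stat, 0.0), df_diff)


# Hypothetical log-likelihoods, not values from Table 4
stat, p = likelihood_ratio_test(-1000.0, -994.0, 4)
print(f"LR = {stat:.2f}, p = {p:.4f}")  # LR = 12.00, p = 0.0174
```

The recurrence keeps the sketch dependency-free; in practice, a library routine for the chi-square survival function would serve the same purpose.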

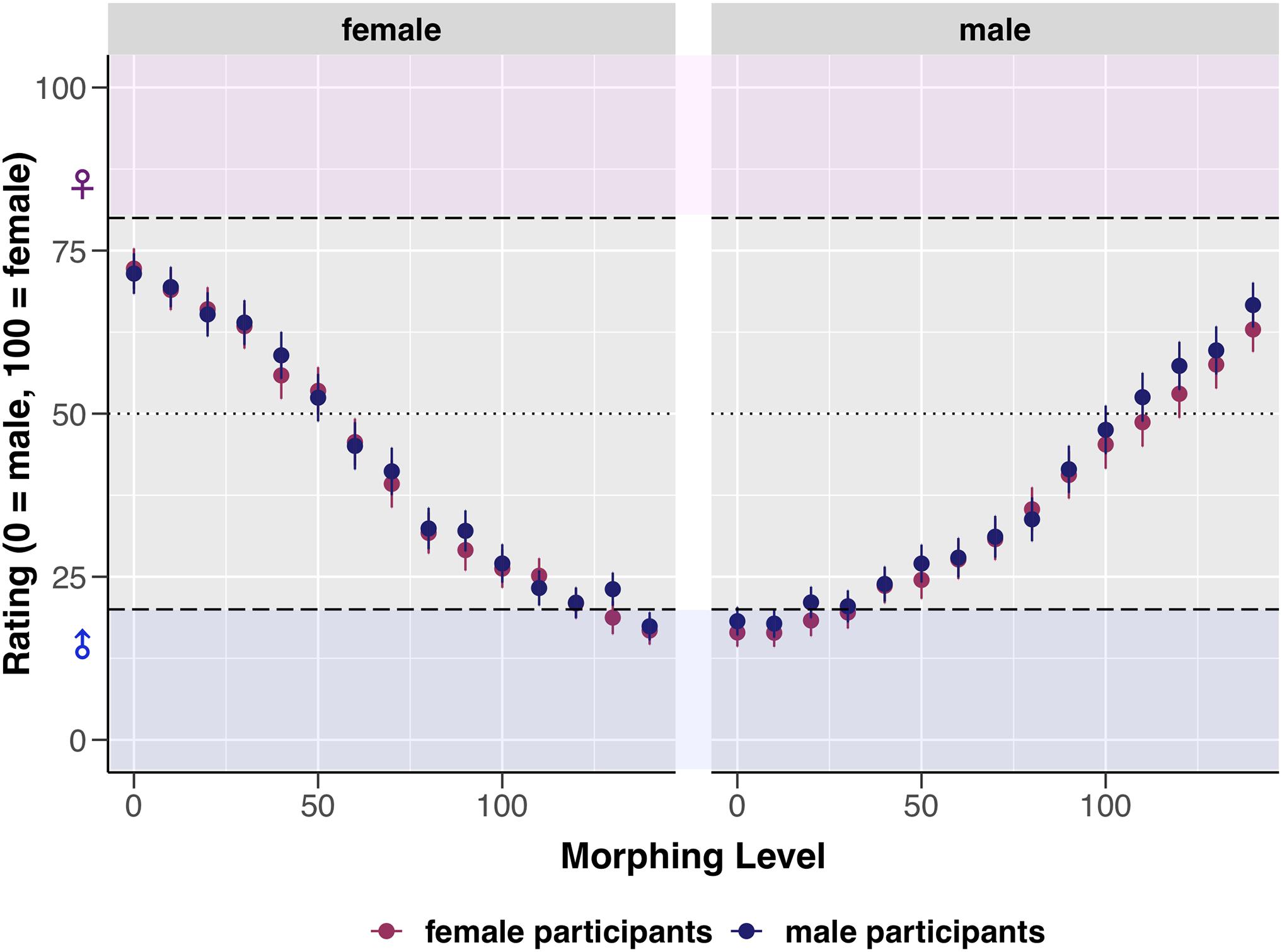

Model comparisons revealed no significant effect of participants’ sex on image ratings (p = 0.621); see Table 4, model LMM1, for statistical details and Figure 3 for a visualization. However, participants’ gender role and sexual attraction contributed significantly to predicting the ratings (see Table 4, models LMM2 and LMM3). With each 10% increase in morphing, perceivers with predominantly masculine attributes who indicated attraction toward females rated original male images 21.66 units higher (i.e., more female) and superfemale images 12.13 units lower (i.e., more male). Participants with predominantly feminine attributes who indicated attraction toward males rated original male images 18.61 units higher (i.e., more female) and superfemale images 10.60 units lower (i.e., more male). The last two items of the Gender Inclusive Scale were not included in these models as additional predictor variables because only a small number of participants indicated attraction, with a score of four or above, toward androgynous (n = 8) and gender nonconforming individuals (n = 1). For statistical details of the models, see the Supplementary Material.

Figure 3. Visualization of the effect of morphing level (0–140) and sex of image (male or female) on the mean ratings. Error bars represent SE. Dashed horizontal lines represent the a priori defined cut-off values (ratings between 0 and 20 = unambiguously male, ratings between 80 and 100 = unambiguously female, and ratings of 50 = sex-ambiguous).

Discussion

The aim of the present study was to investigate how certain attributes of the perceiver influence sex categorization of faces with varying degrees of sex ambiguity. Participants were presented with original facial stimuli of 10 female and 10 male identities, as well as with facial images created by morphing the sex information in these original faces, and were asked to rate the extent to which they perceived each image as female or male. Because we were particularly interested in how perceiver attributes affect the ratings of original male and female faces as well as of sex-ambiguous (i.e., morphed) facial images, we assessed participants’ sex, gender, gender role identification, and sexual orientation and related them to the ratings. Besides exploring the effects of gender roles and sexual orientation, we hypothesized a sex effect, with females outperforming males for female faces, as well as a male bias, which numerous previous face perception studies have described as the tendency to misclassify female faces as male in sex categorization tasks.

Contrary to our hypothesis and to various previous findings, we did not find a significant effect of participants’ sex on ratings in our sex categorization task. This discrepancy with reports of an own-sex bias, found primarily in female participants, is likely due in large part to methodological differences: Cross et al. (1971) and Rehnman and Herlitz (2006) used yearbook portraits and frontal-view images, respectively, without controlling for external features such as clothing and hairstyle, whereas Lewin and Herlitz (2002) used full-face images as well as faces from which hair, ears, jewelry, and face contour had been removed. Further, all three studies used sex-unambiguous faces and focused on recognition memory for male and female faces rather than on face categorization.

We did not run separate analyses of how participants’ gender might affect ratings because only one participant, who reported being biologically male, identified as female. We would therefore expect a null finding similar to that for participants’ sex. To our knowledge, only one study (Simpkins, 2011) has so far examined the impact of observers’ gender on face sex categorization, and it used unambiguous male and female face stimuli. Future research specifically recruiting transgender and gender nonconforming individuals is therefore needed to investigate whether and how the interaction between sex and gender manifests behaviorally in the rating of morphed, sex-ambiguous facial images. Given that transgender individuals do not identify with their assigned sex, and given brain-imaging studies demonstrating similarities between transgender individuals and individuals of their desired gender (e.g., Junger et al., 2014), it would be particularly interesting to assess whether an own-gender bias, analogous to the own-sex bias often reported in cisgender individuals (e.g., Lewin and Herlitz, 2002), can be found in transgender individuals asked to discriminate sex-ambiguous facial images. Such a research approach would not only provide further insight into how assigned sex and gender identity are related, but also yield more knowledge about the implications of an experienced discrepancy between assigned sex and desired gender.

Regarding gender role identification, male participants reported identifying significantly more with masculine attributes than female participants did on all three subscales of the GERAS. In contrast, female participants reported identifying significantly more with feminine attributes than male participants did on the GERAS subscales Personality and Interests & Activities. Interestingly, female and male participants did not differ significantly in their femininity scores on the Cognition subscale. These similarly high scores may be explained by a presumably large overlap between some items coding for feminine cognitions and academic skills (“to find the right words to express a certain content,” “to phrase a text,” “to find synonyms for a word in order to avoid repetitions,” “to explain foreign words”).

Based on our fitted models, we found that the rating of sex-ambiguous face images is significantly influenced by certain attributes related to gender role identification and sexual attraction. For both masculine participants attracted to females and feminine participants attracted to males, the rating of superfemale images decreased with morphing, whereas the rating of original male images increased with morphing. This effect was more pronounced in masculine than in feminine participants.

In line with previous studies and in accordance with our expectations, we found an overall bias toward lower ratings (below 50), i.e., toward classifying faces more often as male than as female. Only one third of images (33.4%) received a rating above 50, while two thirds (66.6%) were rated below 50. Furthermore, original male faces were rated more strongly as male than original female faces were rated as female. Prior studies have discussed various potential factors underlying this male bias, most of them related to the specific stimulus material. It could be argued that our stimulus material did not adequately represent sexually dimorphic facial features. However, the unidirectionality of this response bias most likely results from an interplay of several factors and cannot be explained by this general assumption alone. More specifically, physiognomic features prototypically associated with female faces might not have been distinctive enough in the original female faces. Such features include a less protrusive, more rounded nose and more protruding eyes than in male faces (Enlow and Moyers, 1982). In addition, the morphing of original male faces might not have worked as well as the morphing of original female faces. Another sexually dimorphic feature that might have been less visible in our stimuli is facial contrast (Russell, 2009), even though the morphing technique used to create the images (Blanz and Vetter, 1999) allows facial texture to be adjusted. Naturally, luminance contrast between the eyes and lips and the surrounding skin is greater in female than in male faces (Russell, 2009). Applying makeup has been shown to increase facial contrast further, making the face look even more feminine (Cox and Glick, 1986; Russell, 2011).
Because the face stimuli in our study were free of makeup, facial contrast probably could not serve as a reliable cue for distinguishing female from male faces. This might at least partly account for the replication of the male bias. Note also that, due to cropping at the hairline, several other cues such as hair style, clothing, and jewelry were not visible in our face stimuli. Such external features might be particularly relevant for recognizing faces an individual has no prior experience with, i.e., unfamiliar faces (Ellis et al., 1979). They might be especially relevant for categorizing female faces (e.g., Burton et al., 1993). People construct mental representations of faces through experience, and faces that are more similar to these prototypes are perceived as more familiar and attractive (e.g., Langlois and Roggman, 1990; Rhodes et al., 2003; Halberstadt, 2006). At least some of the female faces used in our study might therefore not have resembled the prototypical female face our participants had formed through experience. This experience may include female faces in their personal surroundings (e.g., their girlfriends or spouses), encounters with women in everyday life, or female faces displayed in media. Moreover, since we did not select female faces for our study on the basis of their femaleness, such expectations formed by the participants might have contributed to the misclassification of female faces.

Another potential factor that might have contributed to our results is the frequently reported link between face femininity and perceived attractiveness. Both male and female observers judge female faces with exaggerated female features, as well as feminized male faces, as more attractive (e.g., Rhodes et al., 2000). The misclassification of female faces as male might therefore have been further enhanced in one of two ways. First, the stimuli may have been perceived as less attractive because they displayed features associated with femininity only to a lesser degree or not at all. Second, reduced attractiveness may have resulted from the greater cognitive effort associated with classifying sex-ambiguous faces compared to the original male and (super-)female faces (Owen et al., 2016). It is also possible that a small proportion of the men whose faces were included in the database displayed a beard shadow. This would also have been reflected in the sex vector, i.e., the difference vector between the average male and the average female face. Female faces morphed along the sex vector toward the male end of the continuum would then display this slightly male feature already at a low morphing level. Such visible male cues would explain the remark by a few participants that some faces seemed to have beard hair. They could not by themselves account for the male bias, however, as already suggested by Bruce et al. (1993).
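The beard-shadow argument can be illustrated with a minimal Python (NumPy) sketch. Purely for illustration, it assumes that each face is a short vector of feature values, with the last entry standing in for a hypothetical beard-shadow texture value. The toy values and the `morph` helper are hypothetical. They do not reproduce the 3D morphable model of Blanz and Vetter (1999), which operates on full shape and texture data. The sketch only shows that any component present in the average male face is carried by the sex vector into every face morphed toward the male end.

```python
import numpy as np

# Hypothetical toy "faces": each row is one face, each column one feature.
# The last column stands in for a beard-shadow texture value (0 = none).
male_faces = np.array([
    [1.0, 0.8, 0.2, 0.6],   # this face has a visible beard shadow
    [0.9, 0.7, 0.3, 0.0],
    [1.1, 0.9, 0.1, 0.3],
])
female_faces = np.array([
    [0.4, 0.3, 0.8, 0.0],
    [0.5, 0.2, 0.9, 0.0],
    [0.3, 0.4, 0.7, 0.0],
])

# Sex vector: average male face minus average female face.
sex_vector = male_faces.mean(axis=0) - female_faces.mean(axis=0)

def morph(face, level):
    """Shift a face along the sex vector; positive level = more male-looking."""
    return face + level * sex_vector

female = female_faces[0]
slightly_masculinized = morph(female, 0.2)  # low morphing level

print("sex vector:", sex_vector)
print("beard-shadow value before/after:", female[3], slightly_masculinized[3])
```

Even at a small morphing level (0.2), the previously clean female face acquires a non-zero beard-shadow component. This happens because that component is part of the difference between the two averages.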

Limitations

Because we did not explicitly control for facial expressions in the images, some of the faces used in our study may not have displayed a fully neutral expression. However, it remains an open question whether this would have been due to the face stimuli themselves or whether factors such as lighting or individual face shape could have induced the perception of facial expressions. Several studies indicate an overlap of sex cues and emotional facial expressions. In particular, recognition of angry male and happy female faces is enhanced, whereas classification of female faces expressing anger is impaired (Becker et al., 2007; Bayet et al., 2015). Depending on which emotions were expressed or perceived in some of the faces, perceived anger could thus have led participants to judge faces as male more frequently.

Another important aspect that we could not control for, because the experiment was conducted online, was image size and quality. All images were uploaded in 24-bit color format at 330 × 330 pixels. Nevertheless, the actual (physical) size at which each image was displayed on participants’ devices strongly depended on the pixel density (resolution) of the respective device. The perception of fine facial features such as luminance contrast might therefore have been impaired in some participants, which could have influenced their ratings.
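As a rough illustration of how much the displayed size can vary, consider an image shown at native resolution. Its physical width equals its pixel count divided by the display's pixel density in pixels per inch (ppi). The sketch below assumes that one image pixel maps to one device pixel, i.e., no browser zoom or operating-system scaling. The device labels and ppi values are hypothetical examples, not data from our participants.

```python
IMAGE_WIDTH_PX = 330  # stimulus width in pixels, as uploaded
MM_PER_INCH = 25.4

def physical_width_mm(width_px: int, ppi: float) -> float:
    """Physical width in mm, assuming 1 image pixel = 1 device pixel (no scaling)."""
    return width_px / ppi * MM_PER_INCH

# Hypothetical pixel densities, for illustration only.
example_devices = [("desktop monitor", 96), ("laptop", 140), ("smartphone", 460)]

for device, ppi in example_devices:
    width = physical_width_mm(IMAGE_WIDTH_PX, ppi)
    print(f"{device:16s} {ppi:4d} ppi -> {width:5.1f} mm")
```

In practice, browsers on high-density displays typically scale images by a device pixel ratio. This reduces, but does not eliminate, these differences, so the numbers illustrate the mechanism rather than the sizes participants actually saw.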

Finally, our assessment of sexual orientation has limitations. We designed the study to allow sexual diversity to be represented by implementing the Gender Inclusive Scale, an instrument that incorporates aspects of sexual attraction based on both sex and gender expression. Even so, our final sample consisted predominantly of heterosexual individuals.

Conclusion

The present study did not show a significant effect of participants’ sex on the classification of sex-ambiguous facial stimuli. It does, however, demonstrate that sex classification of sex-ambiguous faces is more strongly influenced by perceiver attributes closely related to biological sex, such as gender role identification (i.e., gender-specific traits) and sexual attraction. Given the crucial role of sex categorization for social behavior and interaction, these findings highlight the importance of further investigating the association between perceiver attributes and face sex perception, particularly with regard to the perceiver’s gender and gender role. Applying a similar research design in a sample of transgender and gender nonconforming individuals might provide more insight into how assigned sex and gender identity are related. This could in turn allow us to derive potential implications for how to support these individuals.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Ethical Review Board of the Max Planck Society (2016_02). The participants provided their written informed consent to participate in this study.

Author Contributions

UH and BD devised the study and the main conceptual ideas. IB and CF developed and validated the stimulus material. TL collected the data and prepared the first draft of the manuscript. TL, CL, and MG performed the data analyses. All authors contributed to designing the study, critically revising and editing the content of the manuscript, and approved the final version of the manuscript for submission.

Funding

CL, MG, and BD are supported by the German Research Foundation (DFG 2319/2-4). PH and UH are supported by the German Research Foundation (DFG 3202/7-4).

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We acknowledge support by the Open Access Publishing Fund of the University of Tübingen.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fpsyg.2021.718004/full#supplementary-material

References

Armann, R., and Bülthoff, I. (2009). Gaze behavior in face comparison: the roles of sex, task, and symmetry. Attent. Percept. Psychophys. 71, 1107–1126. doi: 10.3758/APP.71.5.1107

Armann, R., and Bülthoff, I. (2012). Male and female faces are only perceived categorically when linked to familiar identities – and when in doubt he is a male. Vision Res. 63, 69–80. doi: 10.1016/j.visres.2012.05.005

Bates, D., Mächler, M., Bolker, B., and Walker, S. (2015). Fitting linear mixed-effects models using lme4. J. Stat. Softw. 67, 1–48. doi: 10.18637/jss.v067.i01

Bayet, L., Pascalis, O., Quinn, P. C., Lee, K., Gentaz, É., and Tanaka, J. W. (2015). Angry facial expressions bias gender categorization in children and adults: behavioral and computational evidence. Front. Psychol. 6:346. doi: 10.3389/fpsyg.2015.00346

Becker, D. V., Kenrick, D. T., Neuberg, S. L., Blackwell, K. C., and Smith, D. M. (2007). The confounded nature of angry men and happy women. J. Pers. Soc. Psychol. 92, 179–190. doi: 10.1037/0022-3514.92.2.179

Blanz, V., and Vetter, T. (1999). “A morphable model for the synthesis of 3D faces,” in Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques, ed. A. P. Rockwood (Los Angeles, CA: ACM Press), 187–194.

Bombari, D., Schmid, P. C., Schmid Mast, M., Birri, S., Mast, F. W., and Lobmaier, J. S. (2013). Emotion recognition: the role of featural and configural face information. Q. J. Exp. Psychol. 66, 2426–2442. doi: 10.1080/17470218.2013.789065

Bruce, V., Burton, A. M., Hanna, E., Healey, P., Mason, O., Coombes, A., et al. (1993). Sex discrimination: how do we tell the difference between male and female faces? Perception 22, 131–152. doi: 10.1068/p220131

Bruce, V., and Young, A. (1986). Understanding face recognition. Br. J. Psychol. 77, 305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x

Burton, A. M., Bruce, V., and Dench, N. (1993). What’s the difference between men and women? Evidence from facial measurement. Perception 22, 153–176. doi: 10.1068/p220153

Calder, A. J., and Young, A. W. (2005). Understanding the recognition of facial identity and facial expression. Nat. Rev. Neurosci. 6, 641–651. doi: 10.1038/nrn1724

Carbon, C.-C., Grüter, M., and Grüter, T. (2013). Age-dependent face detection and face categorization performance. PLoS One 8:e79164. doi: 10.1371/journal.pone.0079164

Cellerino, A., Borghetti, D., and Sartucci, F. (2004). Sex differences in face gender recognition in humans. Brain Res. Bull. 63, 443–449. doi: 10.1016/j.brainresbull.2004.03.010

Connellan, J., Baron-Cohen, S., Wheelwright, S., Batki, A., and Ahluwalia, J. (2000). Sex differences in human neonatal social perception. Infant Behav. Dev. 23, 113–118. doi: 10.1016/S0163-6383(00)00032-1

Cox, C. L., and Glick, W. H. (1986). Resume evaluations and cosmetics use: when more is not better. Sex Roles 14, 51–58. doi: 10.1007/BF00287847

Cross, J. F., Cross, J., and Daly, J. (1971). Sex, race, age, and beauty as factors in recognition of faces. Percept. Psychophys. 10, 393–396. doi: 10.3758/BF03210319

Daniel, S., and Bentin, S. (2012). Age-related changes in processing faces from detection to identification: ERP evidence. Neurobiol. Aging 33, 206.e1–206.e28. doi: 10.1016/j.neurobiolaging.2010.09.001

Davidenko, N. (2007). Silhouetted face profiles: a new methodology for face perception research. J. Vis. 7:6. doi: 10.1167/7.4.6

Dupuis-Roy, N., Fortin, I., Fiset, D., and Gosselin, F. (2009). Uncovering gender discrimination cues in a realistic setting. J. Vis. 9:10. doi: 10.1167/9.2.10

Ekman, P., Friesen, W. V., O’Sullivan, M., Chan, A., Diacoyanni-Tarlatzis, I., Heider, K., et al. (1987). Universals and cultural differences in the judgments of facial expressions of emotion. J. Pers. Soc. Psychol. 53, 712–717. doi: 10.1037/0022-3514.53.4.712

Ellis, H. D., Shepherd, J. W., and Davies, G. M. (1979). Identification of familiar and unfamiliar faces from internal and external features: some implications for theories of face recognition. Perception 8, 431–439. doi: 10.1068/p080431

Enlow, D. H., and Moyers, R. E. (1982). Handbook of Facial Growth. Philadelphia, PA: W. B. Saunders Company.

Faul, F., Erdfelder, E., Lang, A.-G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi: 10.3758/BF03193146

Galupo, M. P., Lomash, E., and Mitchell, R. C. (2017). “All of my lovers fit into this scale”: sexual minority individuals’ responses to two novel measures of sexual orientation. J. Homosex. 64, 145–165. doi: 10.1080/00918369.2016.1174027

Galupo, M. P., Mitchell, R. C., and Davis, K. S. (2018). Face validity ratings of sexual orientation scales by sexual minority adults: effects of sexual orientation and gender identity. Arch. Sex. Behav. 47, 1241–1250. doi: 10.1007/s10508-017-1037-y

Gosselin, F., and Schyns, P. G. (2001). Bubbles: a new technique to reveal the use of information in recognition tasks. J. Vis. 1, 333–333. doi: 10.1167/1.3.333

Gruber, F. M., Distlberger, E., Scherndl, T., Ortner, T. M., and Pletzer, B. (2019). Psychometric properties of the multifaceted gender-related attributes survey (GERAS). Eur. J. Psychol. Assess. 36, 612–623. doi: 10.1027/1015-5759/a000528

Halberstadt, J. (2006). The generality and ultimate origins of the attractiveness of prototypes. Pers. Soc. Psychol. Rev. 10, 166–183. doi: 10.1207/s15327957pspr1002_5

Haxby, J. V., Hoffman, E. A., and Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends Cogn. Sci. 4, 223–233. doi: 10.1016/S1364-6613(00)01482-0

Heisz, J. J., Pottruff, M. M., and Shore, D. I. (2013). Females scan more than males: a potential mechanism for sex differences in recognition memory. Psychol. Sci. 24, 1157–1163. doi: 10.1177/0956797612468281

Herlitz, A., and Lovén, J. (2013). Sex differences and the own-gender bias in face recognition: a meta-analytic review. Vis. Cogn. 21, 1306–1336. doi: 10.1080/13506285.2013.823140

Hillairet de Boisferon, A., Dupierrix, E., Uttley, L., DeBruine, L. M., Jones, B. C., and Pascalis, O. (2019). Sex categorization of faces: the effects of age and experience. i-Perception 10, 1–13. doi: 10.1177/2041669519830414

Hou, J., Sui, L., Jiang, X., Han, C., and Chen, Q. (2019). Facial attractiveness of Chinese college students with different sexual orientation and sex roles. Front. Hum. Neurosci. 13:132. doi: 10.3389/fnhum.2019.00132

Junger, J., Habel, U., Bröhr, S., Neulen, J., Neuschaefer-Rube, C., Birkholz, P., et al. (2014). More than just two sexes: the neural correlates of voice gender perception in gender dysphoria. PLoS One 9:e111672. doi: 10.1371/journal.pone.0111672

Kaminski, G., Méary, D., Mermillod, M., and Gentaz, E. (2011). Is it a he or a she? Behavioral and computational approaches to sex categorization. Attent. Percept. Psychophys. 73, 1344–1349. doi: 10.3758/s13414-011-0139-1

Langlois, J. H., and Roggman, L. A. (1990). Attractive faces are only average. Psychol. Sci. 1, 115–121. doi: 10.1111/j.1467-9280.1990.tb00079.x

Leiner, D. J. (2020). SoSci Survey (Version 3.2.06) [Computer Software]. Available online at: https://www.soscisurvey.de (accessed June 24, 2020).

Lewin, C., and Herlitz, A. (2002). Sex differences in face recognition—women’s faces make the difference. Brain Cogn. 50, 121–128. doi: 10.1016/S0278-2626(02)00016-7

Little, A. C., Jones, B. C., and DeBruine, L. M. (2011). Facial attractiveness: evolutionary based research. Philos. Trans. R. Soc. B Biol. Sci. 366, 1638–1659. doi: 10.1098/rstb.2010.0404

McKelvie, S. J. (1976). The role of eyes and mouth in the memory of a face. Am. J. Psychol. 89, 311–323. doi: 10.2307/1421414

Owen, H. E., Halberstadt, J., Carr, E. W., and Winkielman, P. (2016). Johnny Depp, reconsidered: how category-relative processing fluency determines the appeal of gender ambiguity. PLoS One 11:e0146328. doi: 10.1371/journal.pone.0146328

Perrett, D. I., Lee, K. J., Penton-Voak, I., Rowland, D., Yoshikawa, S., Burt, D. M., et al. (1998). Effects of sexual dimorphism on facial attractiveness. Nature 394, 884–887. doi: 10.1038/29772

Pletzer, B., Steinbeisser, J., van Laak, L., and Harris, T. (2019). Beyond biological sex: interactive effects of gender role and sex hormones on spatial abilities. Front. Neurosci. 13:675. doi: 10.3389/fnins.2019.00675

Prkachin, G. C. (2003). The effects of orientation on detection and identification of facial expressions of emotion. Br. J. Psychol. 94, 45–62. doi: 10.1348/000712603762842093

Proverbio, A. M. (2017). Sex differences in social cognition: the case of face processing. J. Neurosci. Res. 95, 222–234. doi: 10.1002/jnr.23817

R Core Team (2018). R: a Language and Environment for Statistical Computing. (Version 4.0.4) [Computer Software]. R Foundation for Statistical Computing. Available online at: https://www.r-project.org (accessed February 21, 2021).

Rakover, S. S. (2012). A feature-inversion effect: can an isolated feature show behavior like the face-inversion effect? Psychon. Bull. Rev. 19, 617–624. doi: 10.3758/s13423-012-0264-4

Rehnman, J., and Herlitz, A. (2006). Higher face recognition ability in girls: magnified by own-sex and own-ethnicity bias. Memory 14, 289–296. doi: 10.1080/09658210500233581

Rehnman, J., and Herlitz, A. (2007). Women remember more faces than men do. Acta Psychol. (Amst.) 124, 344–355. doi: 10.1016/j.actpsy.2006.04.004

Rhodes, G., Hickford, C., and Jeffery, L. (2000). Sex-typicality and attractiveness: are supermale and superfemale faces super-attractive? Br. J. Psychol. 91, 125–140. doi: 10.1348/000712600161718

Rhodes, G., Jeffery, L., Watson, T. L., Clifford, C. W. G., and Nakayama, K. (2003). Fitting the mind to the world: face adaptation and attractiveness aftereffects. Psychol. Sci. 14, 558–566. doi: 10.1046/j.0956-7976.2003.psci_1465.x

Rhodes, M. G. (2009). Age estimation of faces: a review. Appl. Cogn. Psychol. 23, 1–12. doi: 10.1002/acp.1442

Roberts, T., and Bruce, V. (1988). Feature saliency in judging the sex and familiarity of faces. Perception 17, 475–481. doi: 10.1068/p170475

Rule, N. O., Ambady, N., Adams, R. B., and Macrae, C. N. (2008). Accuracy and awareness in the perception and categorization of male sexual orientation. J. Pers. Soc. Psychol. 95, 1019–1028. doi: 10.1037/a0013194

Rule, N. O., Ambady, N., and Hallett, K. C. (2009). Female sexual orientation is perceived accurately, rapidly, and automatically from the face and its features. J. Exp. Soc. Psychol. 45, 1245–1251. doi: 10.1016/j.jesp.2009.07.010

Russell, R. (2009). A sex difference in facial contrast and its exaggeration by cosmetics. Perception 38, 1211–1219. doi: 10.1068/p6331

Russell, R. (2011). “Why cosmetics work,” in The Science of Social Vision, eds R. B. Adams, N. Ambady, K. Nakayama, and S. Shimojo (New York, NY: Oxford University Press), 186–203.

Schyns, P. G., Bonnar, L., and Gosselin, F. (2002). Show me the features! Understanding recognition from the use of visual information. Psychol. Sci. 13, 402–409. doi: 10.1111/1467-9280.00472

Simpkins, J. J. (2011). The Role of Facial Appearance in Gender Categorization. Bachelor’s thesis. Florida: University of Central Florida.

Smith, E., Junger, J., Pauly, K., Kellermann, T., Derntl, B., and Habel, U. (2019). Cerebral and behavioural response to human voices is mediated by sex and sexual orientation. Behav. Brain Res. 356, 89–97. doi: 10.1016/j.bbr.2018.07.029

Smith, M. L., Gosselin, F., and Schyns, P. G. (2007). From a face to its category via a few information processing states in the brain. Neuroimage 37, 974–984. doi: 10.1016/j.neuroimage.2007.05.030

Tskhay, K. O., Feriozzo, M. M., and Rule, N. O. (2013). Facial features influence the categorization of female sexual orientation. Perception 42, 1090–1094. doi: 10.1068/p7575

VandenBos, G. R. (2015). APA Dictionary of Psychology. Washington, DC: American Psychological Association.

Wiese, H., Schweinberger, S. R., and Neumann, M. F. (2008). Perceiving age and gender in unfamiliar faces: brain potential evidence for implicit and explicit person categorization. Psychophysiology 45, 957–969. doi: 10.1111/j.1469-8986.2008.00707.x

Wild, H. A., Barrett, S. E., Spence, M. J., O’Toole, A. J., Cheng, Y. D., and Brooke, J. (2000). Recognition and sex categorization of adults’ and children’s faces: examining performance in the absence of sex-stereotyped cues. J. Exp. Child Psychol. 77, 269–291. doi: 10.1006/jecp.1999.2554

Willis, J., and Todorov, A. (2006). First impressions: making up your mind after a 100-ms exposure to a face. Psychol. Sci. 17, 592–598. doi: 10.1111/j.1467-9280.2006.01750.x

Keywords: face perception, sex categorization, gender role, gender, sexual orientation

Citation: Luther T, Lewis CA, Grahlow M, Hüpen P, Habel U, Foster C, Bülthoff I and Derntl B (2021) Male or Female? - Influence of Gender Role and Sexual Attraction on Sex Categorization of Faces. Front. Psychol. 12:718004. doi: 10.3389/fpsyg.2021.718004

Received: 05 June 2021; Accepted: 02 September 2021;

Published: 21 September 2021.

Edited by:

Marco Salvati, Sapienza University of Rome, Italy

Reviewed by:

Lijun Zheng, Southwest University, China

Marcello Passarelli, National Research Council (CNR), Italy

Copyright © 2021 Luther, Lewis, Grahlow, Hüpen, Habel, Foster, Bülthoff and Derntl. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Teresa Luther, Teresa.Luther@med.uni-tuebingen.de
