- The University of Auckland, Auckland, New Zealand

The problem of academic dishonesty is as old as it is widespread – dating back millennia and perpetrated by the majority of students. Attempts to promote academic integrity, by comparison, are relatively new and rare – stretching back only a few hundred years and implemented by a small fraction of schools and universities. However, the past decade has seen an increase in efforts among universities to promote academic integrity among students, particularly through the use of online courses or tutorials. Previous research has found this type of instruction to be effective in increasing students’ knowledge of academic integrity and reducing their engagement in academic dishonesty. The present study contributes to this literature with a natural experiment on the effects of the Academic Integrity Course (AIC) at The University of Auckland, which became mandatory for all students in 2015. In 2012, a convenience sample of students (n = 780) had been asked to complete a survey on their perceptions of the University’s academic integrity policies and their engagement in several forms of academic dishonesty over the past year. In 2017, the same procedures and survey were used to collect data from a second sample of students (n = 608). After establishing measurement invariance across the two samples on all latent factors, analysis of variance revealed mixed support for the study’s hypotheses. Unexpectedly, students who completed the AIC (i.e., the 2017 sample) reported: (1) significantly lower (not higher) levels of understanding, support, and effectiveness with respect to the University’s academic integrity policies; (2) statistically equivalent (not higher) levels of peer disapproval of academic misconduct; and (3) significantly higher (not lower) levels of peer engagement in academic misconduct.
However, results related to participants’ personal engagement in academic misconduct offered partial support for hypotheses – those who completed the AIC reported significantly lower rates of engagement on three of the eight behaviors included in the study. The implications and limitations of these findings are discussed as well as possible future directions for research.

Introduction

The problem of academic dishonesty is an ancient one, dating back millennia (Lang, 2013). For the past 50 years, the problem has been epidemic – with the majority of students reporting that they have cheated, plagiarized, or otherwise behaved dishonestly at least once in the past year (for a review, see Murdock et al., 2016). By comparison, attempts to promote academic integrity are relatively new, stretching back only a few hundred years. The past decade, in particular, has seen an increase in efforts among universities to promote academic integrity among students. These efforts include face-to-face workshops and online courses as well as blended learning approaches (Stoesz and Yudintseva, 2018). The most common (and growing) approach appears to be requiring (or at least encouraging) incoming students to complete a short, web-based course or tutorial on academic integrity.

Previous research has shown that such tutorials can be effective not only in increasing students’ knowledge of academic integrity (e.g., Curtis et al., 2013; Cronan et al., 2017) but also reducing their engagement in academic dishonesty (e.g., Belter and du Pré, 2009; Dee and Jacob, 2010; Owens and White, 2013; Zivcakova et al., 2014). However, there are important differences in how these academic integrity tutorials have been implemented and assessed in the existing research and the way in which they are being used (and often left unassessed) by an increasing number of universities. At the University of Auckland, for example, all new students are required to complete the Academic Integrity Course (AIC) during their first semester. Like many of the tutorials studied in previous research, the AIC is a short online course that students are expected to complete by themselves in their own time.

However, unlike the tutorials in extant studies (which were completed by students as a requirement in one of their academic courses), the AIC is not connected to any academic courses or programs. Moreover, existing studies also limited the assessment of tutorial effects to the specific course in which the tutorial was required; whereas a comparable assessment of the AIC (and other courses like it) would necessitate looking for effects across all applicable courses. Finally, in prior research, assessment of the tutorial was completed either immediately following completion of the tutorial or within weeks thereafter. In contrast, an assessment of an existing tutorial or course (like the AIC) may involve students who completed it years earlier.

The present investigation seeks to broaden the research on academic integrity tutorials with a natural experiment on the effects of the AIC. That is, by assessing the impact of the course “in the everyday (i.e., real life) environment of the participants, [where] the experimenter has no control over the IV as it occurs naturally in real life” (McLeod, 2012), the present investigation extends the methodological approaches that have been used to assess tutorial effects. In doing so, the present study hopes to offer important contributions to the growing literature on academic integrity tutorials, particularly concerning the extent to which the positive effects previously reported can be replicated under different conditions.

Literature Review

A Long History of Academic Dishonesty

Academic dishonesty (also termed “academic misconduct” or, most succinctly, “cheating”) has a long history. Although the first documented report of cheating pertained to sport rather than academia, the contextual elements typically at play in decisions to cheat in the two domains are similar – extended periods of intense preparation leading up to a comparatively brief moment of competition (often against one’s peers, if not the clock or other criteria as well) resulting in immense pressure to perform near perfection (Lang, 2013). Such were the circumstances of the Thessalian boxer, Eupolos, who was apprehended while trying to bribe his opponents during the early fourth century Olympic Games in Greece (Guttman, 2004; Spivey, 2004).

The setting of the first known case(s) of academic cheating – involving China’s stringent civil service examinations during the Sui dynasty in the seventh century – was not much different. The exams required a thorough knowledge of Confucius’ works, skill in poetry writing in Confucian style, and memorization of the complete Imperial documents on education. Years of preparation were necessary, and failure (the lot of most candidates) resulted in vastly reduced life opportunities, misery for many, and suicide for some. Cheating abounded, with the use of model answers secreted about candidates’ persons, and bribery as examples (Lang, 2013).

Other records of academic dishonesty – dating from the 1760s to the present day – confirm that young people who typically displayed impeccable honor in other aspects of their lives have engaged in cheating in academic contexts (e.g., Hartshorne and May, 1928; Bertram Gallant, 2008). The first large-scale study of cheating occurred in the United States in the 1960s and indicated that 75% of United States tertiary students had cheated at least once in their academic careers (Bowers, 1964). Remarkably, this very high percentage has only fluctuated modestly over the past five decades – the majority of tertiary students (the world over, wherever asked) report having cheated in the past year (e.g., Lupton et al., 2000; Lupton and Chapman, 2002; McCabe, 2005; Stephens et al., 2010; Ma et al., 2013).

While digital technologies have made some forms of dishonesty much quicker and easier (from do-it-yourself cut-n-paste plagiarism to paying a “shadow scholar” to write a bespoke paper for you), they are not the reason why students cheat. A sober look at our evolution and ontogeny makes it clear that cheating is “natural and normal” even if – with respect to academic dishonesty – “unethical and evitable” (for an overview of evidence, see Stephens, 2019).

With this in mind, the important question is not who is most likely to cheat and under what circumstances, but rather what conditions are most likely to mitigate cheating. If the history of cheating has a lesson to teach us about dishonesty, it is less about the weakness of humans and more about the power of circumstances. It is the same lesson that contemporary empirical research teaches us – contextual factors outweigh individual characteristics in explaining variance in cheating behavior (e.g., Leming, 1978; McCabe and Trevino, 1997; Eisenberg, 2004; Day et al., 2011). Among the contextual factors that seem to matter most in reducing academic dishonesty are efforts to promote academic integrity.

The (Relatively) Brief History of Promoting Academic Integrity

Punishment and prevention are distinct, and it is a mistake to believe that threats of the former (if only severe enough) equate with the latter. As described above, academic dishonesty dates back millennia and is epidemic today. This is despite the fact that early “deterrents” for cheating included flogging, public ridicule, stripping of academic credentials, banishment from one’s hometown, and even death (Lang, 2013). The history of using severe punishment as both penalty and prevention is as long as the history of cheating itself, and that history has taught us that it is neither ethical nor effective. In short, punishment is not a very good teacher – it arrives late and is quite primitive (seeking to condition a basic stimulus-response association rather than a mindful understanding).

Efforts to promote academic integrity – attempts to advance a conscious understanding and active commitment to honesty in one’s scholarly endeavors – are comparatively recent (relative, that is, to police and punish approaches of deterrence). The first such effort dates back to 1736 with the establishment of the Honor Code at The College of William and Mary1. The code is run by students, who are responsible for its administration and maintenance, including the adjudication of suspected violations. Today, there are over a hundred colleges and universities (mostly in North America and most private, highly selective institutions) with honor codes. Nonetheless, research has shown significant differences in academic dishonesty between honor code institutions and their non-code counterparts (McCabe and Trevino, 1993; McCabe et al., 1999; Schwartz et al., 2013). In their oft-cited study, McCabe and Trevino (1993) found that only 29% of students at the former reported cheating in the past year compared to 53% at the latter. More importantly, research also suggests that the lower level of cheating at honor code institutions is attributable to the “culture of integrity” they engender and not the threat of punishment (McCabe et al., 1999).

While honor codes and other systems-based, multi-level approaches to creating cultures of integrity (e.g., Bertram Gallant, 2011; Stephens, 2015) offer the most comprehensive approach to promoting academic integrity, they require more time and commitment than most institutions are willing or able to invest. However, the past decade has seen numerous high profile cases of cheating in the headlines (e.g., Perez-Pena and Bidgood, 2012; Visentin, 2015; Medina et al., 2019) and with them an increase in the number of colleges and universities seeking to do something, even if small(er) in scope and scale. At a minimum, these efforts include the adoption of online methods of self-checking for plagiarism – allowing students to see their “mistakes” and learn from them (Walker, 2010). More pro-active still is the creation of face-to-face workshops, online modules, or blended approaches that provide opportunities to develop the knowledge and skills needed to achieve with integrity before mistakes are made (e.g., York University2).

The adoption of such approaches has spread widely and numerous universities around the world now require their students to complete a course or tutorial on academic integrity during or shortly after matriculation. Australia appears to be leading the way in terms of implementing a national framework and requirements. In the wake of several high profile cheating scandals (e.g., McNeilage and Visentin, 2014), the Tertiary Education Quality and Standards Agency (the national quality assurance and regulatory agency for education) revised its Higher Education Standards Framework to include four broad requirements (of all tertiary education providers) related to academic and research integrity. The third requirement is “to provide students and staff with guidance and training on what constitutes academic or research misconduct and the development of good practices in maintaining academic and research integrity” (Universities Australia, 2017, p. 1). As a result, over half of all Australian universities offer (but not always require students to complete) online tutorials on academic integrity. Despite similar high profile cheating scandals in New Zealand (e.g., Jones, 2014; Weeks, 2019), there is not (yet) a comparable framework or set of requirements in place there; and, perhaps not incidentally, only one university in the country that requires its students to complete a course on academic integrity (i.e., the AIC at the University of Auckland described below).

Empirical research over the past decade suggests such approaches can be effective not only in increasing knowledge and improving attitudes (e.g., Curtis et al., 2013; Cronan et al., 2017) but also in reducing dishonesty (e.g., Belter and du Pré, 2009; Dee and Jacob, 2010; Owens and White, 2013; Zivcakova et al., 2014). For example, results from field-based quasi-experimental (Belter and du Pré, 2009) and experimental (Dee and Jacob, 2010) research showed that completion of online tutorials related to plagiarism greatly reduced its occurrence – by 75.4% in the former study and an estimated 41% in the latter. However, as noted in the introduction, there is no evidence to date that the effects of these tutorials extend beyond the immediate context (i.e., the academic course) in which they are implemented (Stoesz and Yudintseva, 2018). Additionally, the empirical research on the effects of academic integrity instruction has yet to include any delayed testing. To date, all of the studies have assessed the effects of such instruction immediately or shortly after it was delivered.

In short, it is not yet clear whether the learning and behavioral changes associated with these tutorials transfer to other contexts (i.e., courses beyond the one in which the learning occurred) or across longer periods of time (i.e., greater than a few minutes or a few weeks). There are both theoretical and empirical reasons to believe that the more we move away – in place and time – from something we’ve learned, the less likely we are to recall or utilize that learning. Specifically, from a learning transfer perspective (e.g., Perkins and Salomon, 1992), extant studies have required near transfer of learning (i.e., demonstration of learning in the same or highly similar context) and not far transfer (i.e., impact in a context outside of or remote from the context of learning). Similarly, research on recency effects has provided strong evidence that people are better able to remember recent experiences than those that happened further back in time (e.g., Morgeson and Campion, 1997; Jones and Sieck, 2003). The present investigation on attitudinal and behavioral differences associated with completing the AIC extends the boundaries (contextual and temporal) of existing research on the effect of academic integrity instruction.

The Academic Integrity Course at the University of Auckland

Developed by professional library staff at The University of Auckland (UOA) in 2013, the Academic Integrity Course (AIC) has been compulsory for all matriculating students since 2015. Students are enrolled in the AIC during their first term (semester or quarter), and failure to complete the course results in automatic re-enrolment for the next term. Although a student may forestall completion of the course, it is required for the conferral of any degree, diploma or certificate. According to a university official (personal communication), “most students” complete the AIC within their first of study at the university.

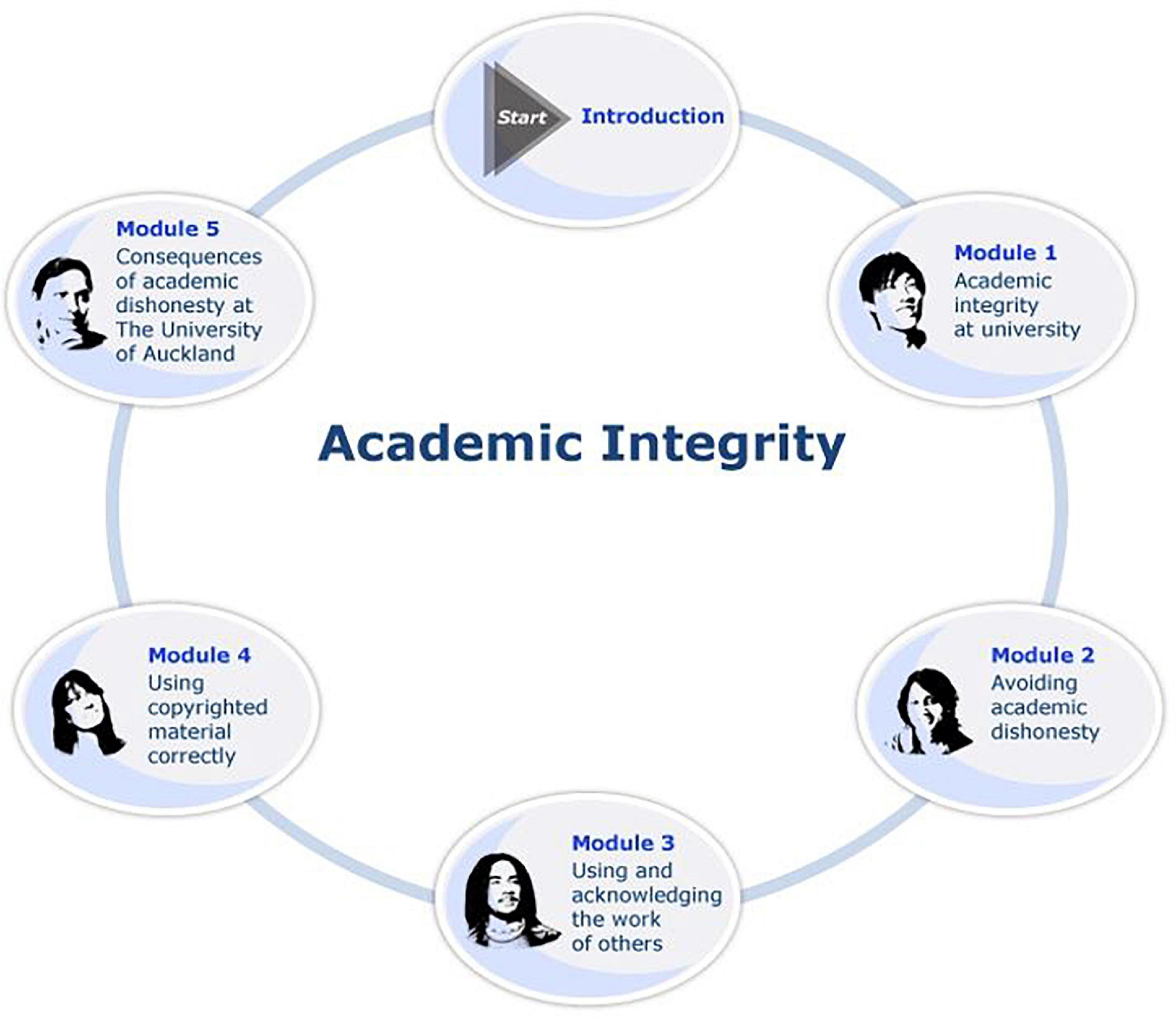

As depicted in Figure 1, the AIC comprises five modules, each consisting of readings that teach students about the different facets of academic integrity and each ending with a test that students must complete. The AIC is online and self-paced, allowing students to complete the modules and tests in their own time. The modules and tests can be completed in any order the student prefers, but students must achieve 100% on all five tests (with unlimited attempts to do so). Completion time for the whole course is between one and two hours.

Figure 1. The Academic Integrity Course (AIC) at the University of Auckland. The AIC is a self-paced online course in which students are automatically enrolled during their first semester at the university.

Finally, with respect to its goals, the AIC was “designed to increase student knowledge of academic integrity, university rules relating to academic conduct, and the identification and consequences of academic misconduct3.” As described by the University’s Deputy Vice-Chancellor (Academic) John Morrow, the AIC is “not about dishonesty as such, it’s about students learning how they can use printed and published resources in an effective way in their own work” (as quoted in van Beynen, 2015). For a topical outline of the AIC, please see Appendix A.

The Present Investigation

The primary purpose of the present investigation was to test the effects of the AIC on students’ perceptions of and engagement in academic dishonesty. Specifically, compared to students who did not do so, it was hypothesized that students who completed the AIC would report:

H1: greater perceived understanding, support for, and effectiveness of university policies related to academic integrity;

H2: greater perceived peer disapproval of academic dishonesty;

H3: lower levels of perceived peer engagement in academic dishonesty; and

H4: lower levels of personal engagement in three types of academic dishonesty (i.e., assignment cheating, plagiarism, and test/exam cheating).

The foregoing hypotheses are based on prior research indicating that tutorials (similar to the AIC) increase students’ knowledge of academic integrity (e.g., Curtis et al., 2013; Cronan et al., 2017) and reduce their engagement in academic dishonesty (e.g., Belter and du Pré, 2009; Dee and Jacob, 2010; Owens and White, 2013; Zivcakova et al., 2014). However, as described above, the literature on learning transfer (e.g., Brown, would suggest that any effects in the present study (with its demand for far rather than near transfer) are likely to be smaller than those found in previous research.

Finally, based on the existing literature on the recency effect, it was hypothesized (H5) that all of the foregoing effects would be strongest among participants who completed the AIC within the past month and weakest among those who completed it two or more years ago.

Materials and Methods

The effects of the AIC on students’ perceptions of and engagement in academic dishonesty were assessed using a quasi-experimental research design; namely, a natural experiment. Unlike a true experiment, a natural experiment is a type of observational study in which individuals (or groups of them) are naturally (i.e., determined by nature or other factors beyond the control of researchers) exposed to control and experimental conditions. The processes determining participants’ exposures only approximate random assignment. In the present investigation, survey data related to students’ academic integrity perceptions and behavior were collected as part of a course-based research exercise carried out in 2012 and 2017. When the AIC became mandatory for all students in 2015, it rendered (approximately) the 2012 participants a control or comparison group (i.e., AIC non-existent) and the 2017 participants an experimental or treatment group (i.e., AIC compulsory). To be clear, the data collected in 2012 were not collected for the purposes of publication. It was not until 2016 that the authors thought about using the 2012 data as part of a natural experiment to test the effects of the AIC, deciding to replicate the research exercise (and data collection associated with it) in 2017. This research was approved by The University of Auckland Human Participants Ethics Committee in 2012 (Reference Number 2009/C/026) and again in 2017 (Reference Number 019881).

Procedures

As noted above, data for this investigation were collected under the auspices of a course-based research project. The course was an advanced level undergraduate course in educational psychology that required students to take on the role of researchers. Specifically, all students enrolled in the course in 2012 (n = 108) and 2017 (n = 48) were asked to recruit 8 (in 2012) or 15 (in 2017) other UOA students (not enrolled in the course) to complete a short anonymous survey (detailed below in Measures). In 2012, 93.5% of students (101 of the 108 enrolled) completed the assignment and, in 2017, 95.8% (46 of 48) did so. Importantly, with the exception of the number of participants students were asked to recruit for the study (owing to the different enrolment numbers), the instructions provided to students and the sampling procedures used by them were identical in 2012 and 2017. Please see Appendix B for a copy of the assignment instructions provided to students.

Participants

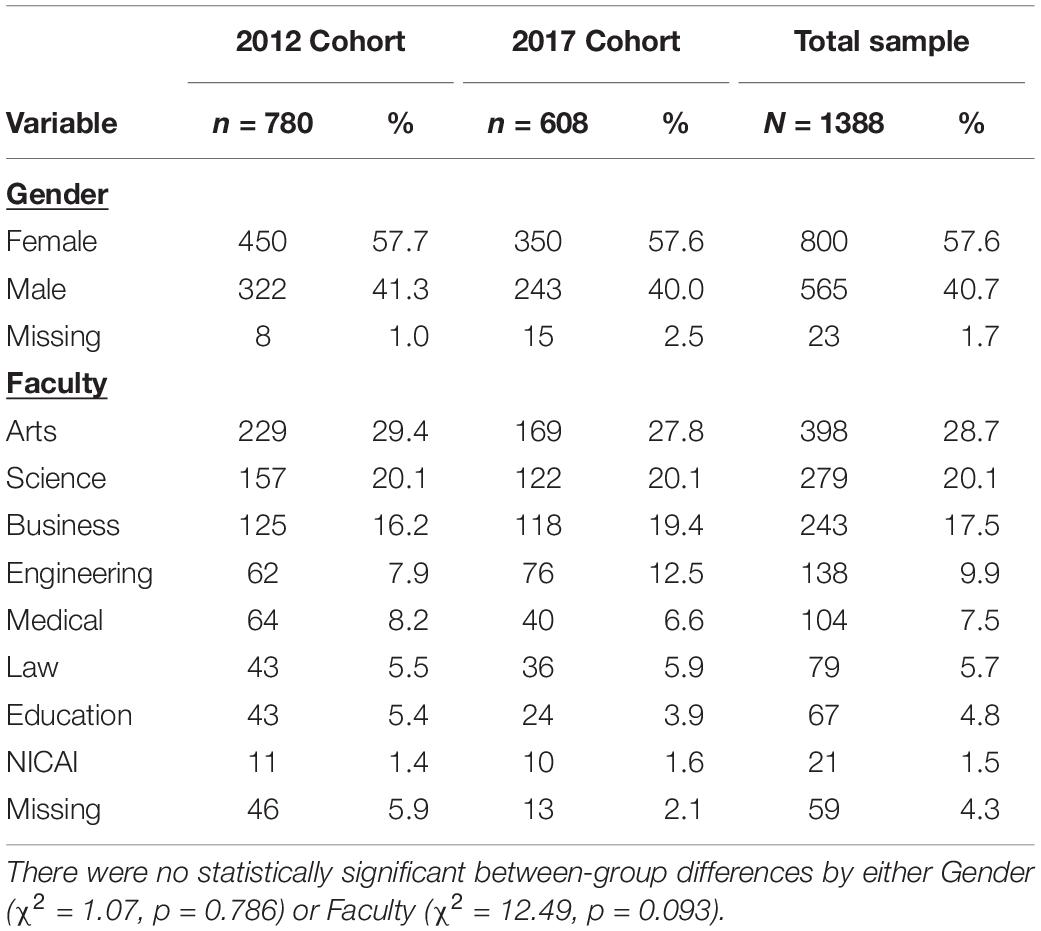

In 2012, the 101 students who completed the course research activity recruited a total of 803 students to participate in the study; 23 of whom (2.9%) were subsequently removed from the sample for missing data. In 2017, the 46 students who completed the same activity recruited a total of 674 students to participate in the study; 31 of whom (4.6%) were subsequently removed from the sample for missing data and an additional 41 (6.1%) because they indicated that they had not yet completed the AIC. Thus, the final sample included 1,388 university students: 780 in 2012 and 608 in 2017. As detailed in Table 1, the majority of participants in both cohorts were female (57.7% and 57.6% in 2012 and 2017, respectively), and participants were drawn from all eight faculties within the university. Importantly, although percentages varied slightly between cohorts, the differences in observed versus expected counts (given marginal frequencies) were not statistically significant for either gender (χ² = 1.07, p = 0.786) or faculty (χ² = 12.49, p = 0.093).
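The comparison of observed versus expected counts described above is a chi-square test of independence. The following is a minimal sketch of how that statistic is computed, not the authors' SPSS procedure. The function name `chi_square_independence` and the counts are hypothetical and are not the study's data (the actual counts appear in Table 1).

```python
def chi_square_independence(table):
    """Return (chi-square statistic, degrees of freedom) for a contingency table.

    `table` is a list of rows, each a list of observed counts
    (e.g., one row per cohort, one column per category).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence, given the marginal frequencies.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical counts for illustration only (NOT the study's data).
hypothetical_counts = [
    [60, 40],  # cohort A: category 1, category 2
    [55, 45],  # cohort B: category 1, category 2
]
stat, dof = chi_square_independence(hypothetical_counts)
print(f"chi-square = {stat:.2f}, df = {dof}")
```

A p-value would then be read from the chi-square distribution with `dof` degrees of freedom; a non-significant result indicates that the category distribution does not differ detectably between cohorts.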

Measures

The survey used in the present investigation was comprised of measures adapted from the Academic Motivation and Integrity Survey (AMIS; Stephens and Wangaard, 2013). In addition to the measures and items, participants in 2012 and 2017 were asked to report their sex (where 0 = Female, 1 = Male, and 3 = Other/prefer not to say) and faculty affiliation (e.g., Arts, Sciences, Business, Engineering).

Perceptions

Students’ perceptions of their peers’ attitudinal and behavioral norms related to academic integrity were assessed with three measures:

USE of Academic Integrity Policies

Participants’ perceptions of the understanding, support and effectiveness (USE) of the university’s policies related to academic integrity were assessed with a 5-point Likert-type scale ranging from 1 (very low) to 5 (very high). Specifically, participants used this scale to rate three statements: “The average student’s understanding of policies concerning cheating,” “The average student’s support of policies concerning cheating,” and “The effectiveness of these policies.”

Peer Disapproval of Academic Dishonesty

Participants’ perceptions related to peer disapproval of academic dishonesty were assessed with a 5-point Likert-type scale that ranged from 1 (not at all) to 5 (very strongly). Specifically, participants were asked to report how strongly their peers would disapprove if they knew they had engaged in three types of academic dishonesty: “homework cheating,” “plagiarism,” and “test or exam cheating.”

Peer Engagement in Academic Dishonesty

Participants’ perceptions related to peer engagement in academic dishonesty were assessed with a 5-point Likert-type scale ranging from 1 (never) to 5 (almost daily). Specifically, students were asked to report about how often, during the past year, they “thought” other students had engaged in three types of cheating behavior: “copying each other’s homework,” “plagiarism,” and “cheating on tests or exams.”

Behaviors

Personal engagement in academic dishonesty was assessed with a 5-point Likert-type scale that ranged from 1 (never) to 5 (almost daily). Specifically, participants were asked to use that scale to indicate how often they had engaged in eight “academic behaviors” that comprised three types of academic dishonesty:

Assignment Cheating

1. Copied all or part of another student’s work and submitted it as your own.

2. Worked on an assignment with others when the instructor asked for individual work.

Plagiarism

3. From a book, magazine, or journal (not on the Internet): Paraphrased or copied a few sentences or paragraphs without citing them in a paper you submitted.

4. From an Internet Website: Paraphrased or copied a few sentences or paragraphs without citing them in a paper you submitted.

Test or Exam Cheating

5. Used unpermitted notes or textbooks during a test or exam.

6. Used unpermitted electronic notes (stored in a PDA, phone or calculator) during a test or exam.

7. From a friend or another student: Copied from another’s paper during a test or exam with his or her knowledge.

8. Used digital technology such as text messaging to “copy” or get help from someone during a test or exam.

9. Got questions or answers from someone who has already taken a test or exam.

Finally, the 2017 survey included one extra question, which asked participants to indicate when they completed the AIC (where 1 = Within past 3 months, 2 = 4 to 6 months ago, 3 = 7 to 12 months ago, 4 = 1 to 2 years ago, 5 = 2 or more years ago, and 6 = I have not yet completed it).

Data Analyses

Data were first screened for missing or invalid responses, and then subjected to confirmatory factor analysis (CFA) to confirm the structure and fit of the two three-factor measurement models. Based on recommendations by Hu and Bentler (1999) and Ullman and Bentler (2003), normed chi-square values and several other indices were used to determine model fit, where χ²/df < 3.0, CFI > 0.95, TLI > 0.95, RMSEA < 0.06, and SRMR < 0.05 indicated a “good” fit and χ²/df < 5.0, CFI > 0.90, TLI > 0.90, RMSEA < 0.08, and SRMR < 0.08 indicated an “acceptable” fit. Given the need to compare cohorts of participants sampled 5 years apart, multi-group confirmatory factor analysis (MGCFA) was employed to test for measurement invariance across groups/time (to ensure the psychometric equivalence of the constructs). Based on recommendations by Chen (2007), changes in CFI, RMSEA, and SRMR values were used to determine the level of invariance achieved: a decrease in CFI of less than 0.01 and ΔRMSEA of < 0.015 for each successive level, and ΔSRMR of < 0.030 for metric invariance and < 0.015 for scalar or residual invariance. After establishing acceptable model fit and measurement invariance, Cronbach’s alphas were calculated to assess the internal consistency of the six factors and Pearson correlation coefficients were calculated to assess their convergent and discriminant validity. Finally, ANOVA and cross-tabulations with Chi-square analyses were employed to test study hypotheses. All analyses were conducted using version 25 of SPSS and its AMOS programme.
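The model fit cutoffs listed above can be expressed as a simple decision rule. The sketch below is illustrative only: the function name `classify_fit` is hypothetical, and it assumes that every index must meet its cutoff for a label to apply, which is stricter than the holistic judgment researchers often exercise.

```python
def classify_fit(chi2_df, cfi, tli, rmsea, srmr):
    """Label model fit as 'good', 'acceptable', or 'poor'.

    Cutoffs follow those stated in the text. Assumption: all five
    indices must satisfy their cutoff for a label to apply.
    """
    good = (chi2_df < 3.0 and cfi > 0.95 and tli > 0.95
            and rmsea < 0.06 and srmr < 0.05)
    acceptable = (chi2_df < 5.0 and cfi > 0.90 and tli > 0.90
                  and rmsea < 0.08 and srmr < 0.08)
    if good:
        return "good"
    if acceptable:
        return "acceptable"
    return "poor"


# Indices reported in the Results for the re-specified Behaviors model.
print(classify_fit(chi2_df=2.00, cfi=0.994, tli=0.989, rmsea=0.040, srmr=0.024))
```

Under this strict all-indices rule, a model that narrowly misses a single cutoff is labeled "poor" even when the remaining indices are strong, which is why borderline cases are usually weighed across indices rather than decided mechanically.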

Results

Results from the CFA and MGCFA are reported first, followed by the descriptive statistics of and intercorrelations among the six latent factors. Subsequently, results pertaining to the four hypotheses are described.

Confirmatory Factor Analysis of the Two Three-Factor Measurement Models

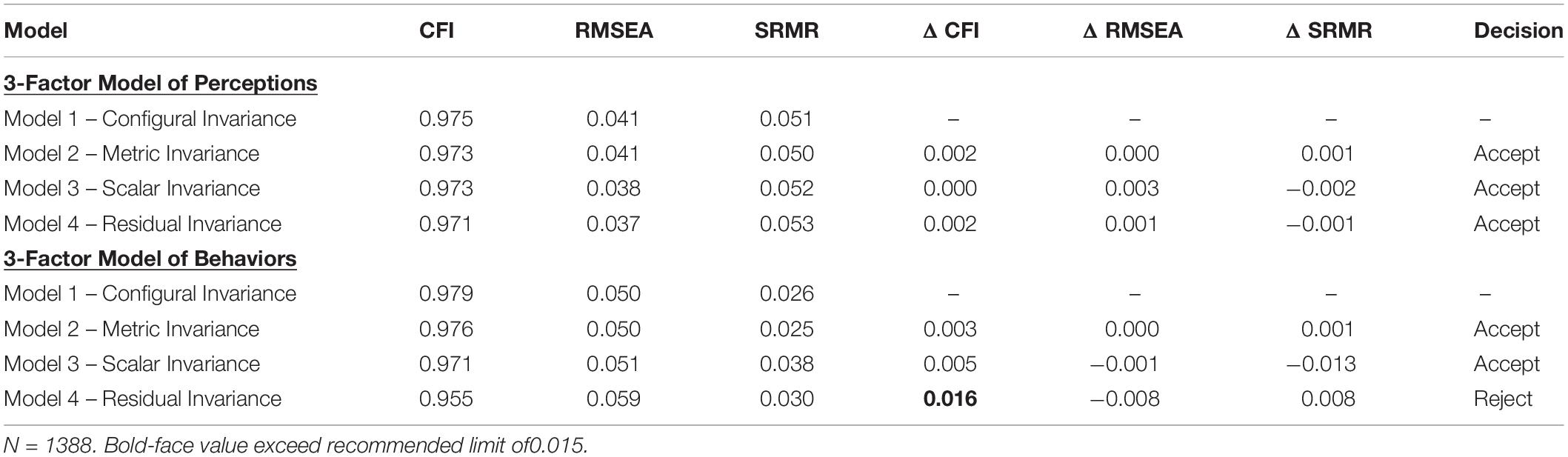

Confirmatory factor analysis was used to test the validity of the two three-factor measurement models for both cohorts of participants. That is, before proceeding to MGCFA to test for measurement invariance, CFA was conducted to ensure that the factor structure (configuration of paths) was equivalent across cohorts. As detailed in Table 2, whether combined or tested independently, the data from both cohorts offered a good (or at least acceptable) fit to both models. The only exception was Model 1.3 for Behaviors, with a normed Chi-square (χ²/df) value of 5.02 and an RMSEA value of 0.082 (both slightly above the recommended threshold values of 5.00 and 0.080, respectively). Examination of the modification indices suggested freeing the covariance between two error terms (i.e., the two forms of digital test cheating). Doing so significantly improved the model fit (χ²/df = 2.00, TLI = 0.989, CFI = 0.994, RMSEA = 0.040, and SRMR = 0.024). However, given the sensitivity of χ² in large samples, and that the other indices (i.e., the TLI, CFI, and SRMR) indicated a “good” fit, the decision was made to deem the model acceptable as hypothesized (without freeing the covariance between error terms).

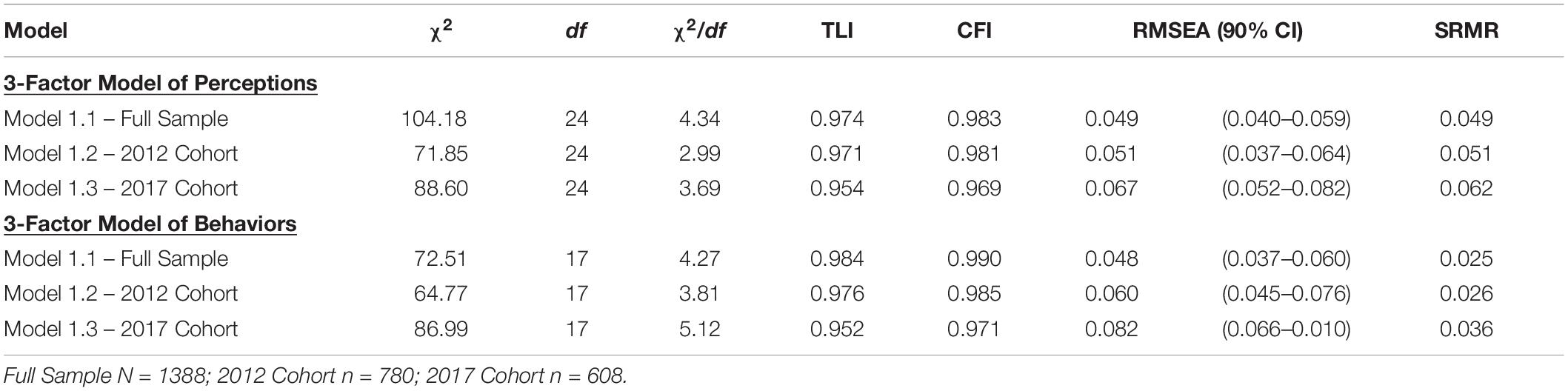

Table 2. Model fit statistics for the two three-factor measurement models of perceptions and behaviors.

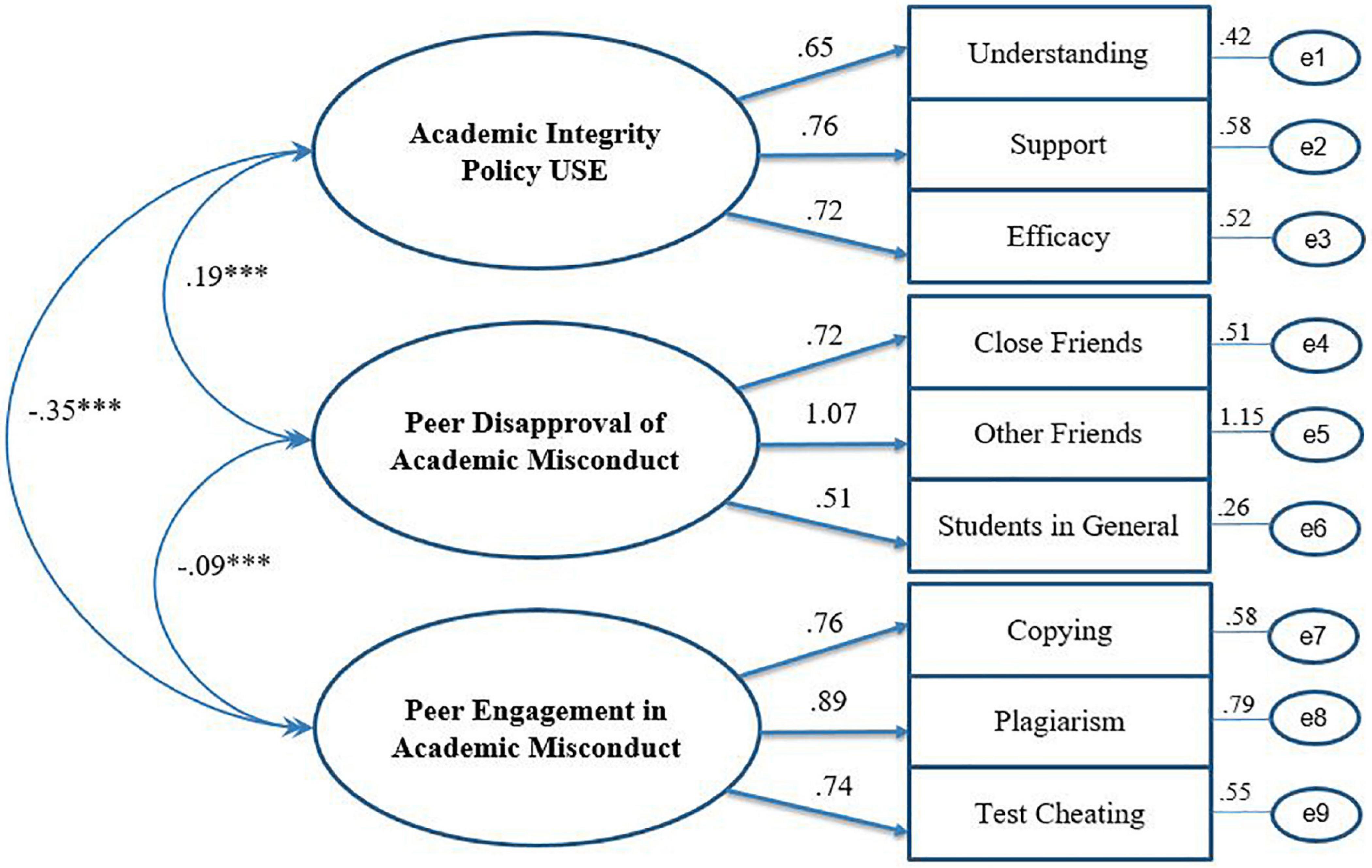

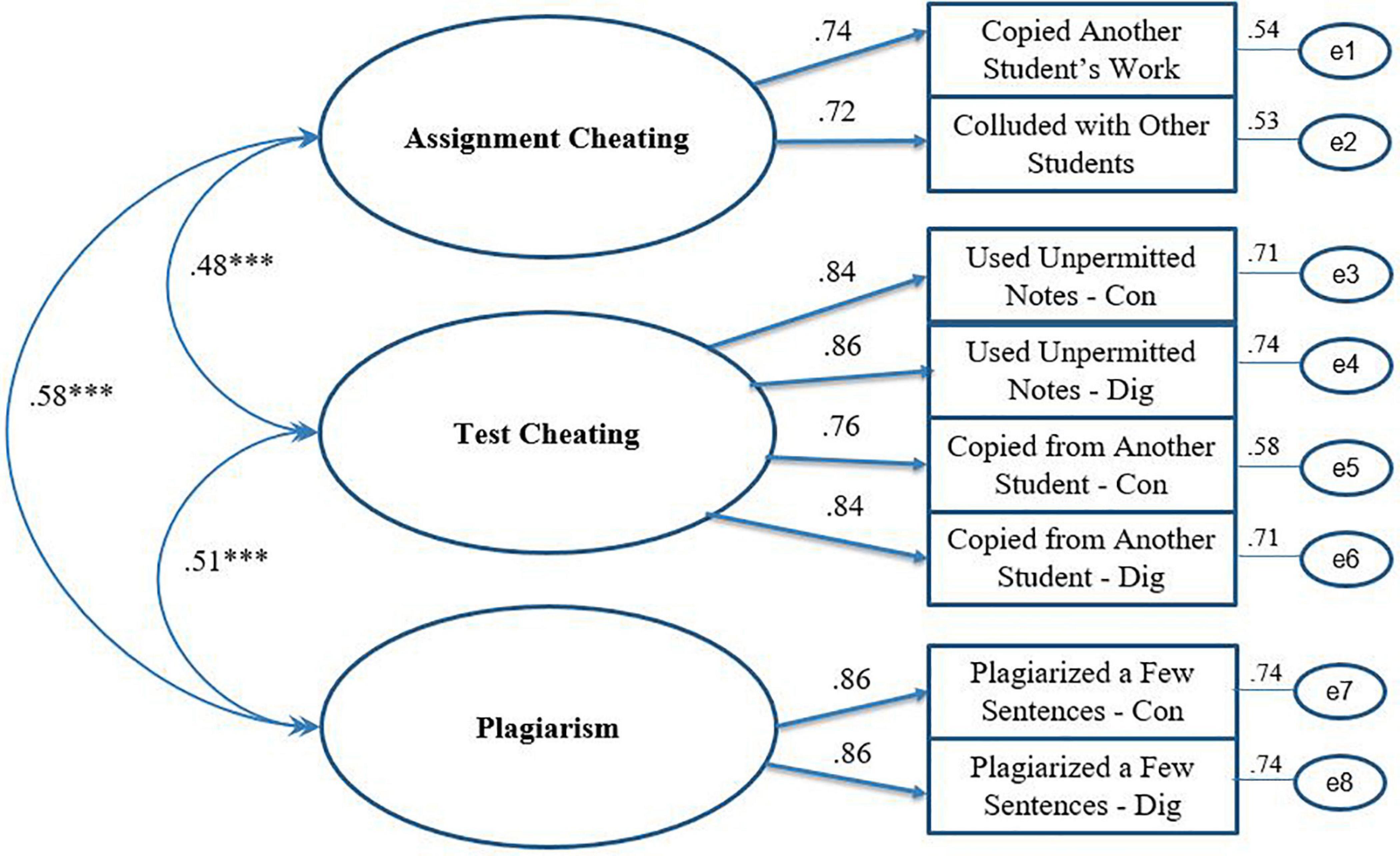

Figures 2 and 3 offer schematic representations of the two models (perceptions and behaviors, respectively) along with standardized estimates using the full sample (i.e., 2012 and 2017 combined; N = 1388). As depicted, all items loaded significantly onto their respective factors for both models (λ’s = 0.51 to 1.07 for Perceptions and 0.72 to 0.86 for Behaviors). As hypothesized, the intercorrelations among the three factors in both models were statistically significant (all p’s < 0.001).

Figure 2. Standardized estimates of the three-factor measurement model of participants’ perceptions related to academic integrity policies and peer norms. Model 1.1 – Full Sample. ∗∗∗p < 0.001.

Figure 3. Standardized estimates of the three-factor measurement model of academic dishonesty. Model 1.1 – Full Sample. ∗∗∗p < 0.001.

Multi-Group Confirmatory Factor Analysis

Having established that the models for both cohorts had equivalent path configurations with respect to the two three-factor models, MGCFA was conducted to progressively test both models for metric, scalar, and then residual invariance. As detailed in Table 3, based on change (Δ) in CFI, RMSEA, and SRMR values, the three-factor model for perceptions achieved residual (or “strict”) invariance, and the three-factor model for behaviors achieved scalar (or “strong”) invariance. In the latter case, the decision to “reject” invariance of residuals was based on the high change in CFI (Δ = 0.016). Importantly, however, only measurement invariance at the configural, metric, and scalar levels (not the residual level) is necessary to infer model equivalence and proceed with between-cohort comparisons on the latent factors.
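The stepwise invariance decision can be sketched as a small rule based on the change criteria given in the Data Analyses section. This is an illustrative sketch, not the AMOS procedure itself. The function name `invariance_holds` is hypothetical, and two assumptions are made: all three criteria must be met at a given step, and the CFI change is compared in absolute value because sign conventions for ΔCFI vary.

```python
def invariance_holds(delta_cfi, delta_rmsea, delta_srmr, level):
    """Return True if invariance is retained at the given step.

    `level` is 'metric', 'scalar', or 'residual'. Cutoffs follow the
    criteria described in the text; requiring all three criteria and
    using the absolute CFI change are assumptions of this sketch.
    """
    if level not in ("metric", "scalar", "residual"):
        raise ValueError(f"unknown invariance level: {level!r}")
    # The SRMR cutoff is more lenient for the metric step.
    srmr_cutoff = 0.030 if level == "metric" else 0.015
    return (abs(delta_cfi) < 0.01
            and delta_rmsea < 0.015
            and delta_srmr < srmr_cutoff)


# The CFI change of 0.016 reported for the residual step of the behaviors
# model exceeds the cutoff. The RMSEA and SRMR changes here are placeholders.
print(invariance_holds(delta_cfi=0.016, delta_rmsea=0.0, delta_srmr=0.0,
                       level="residual"))
```

Because each level is tested only after the previous one is retained, a failure at the residual step leaves scalar invariance as the highest level achieved.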

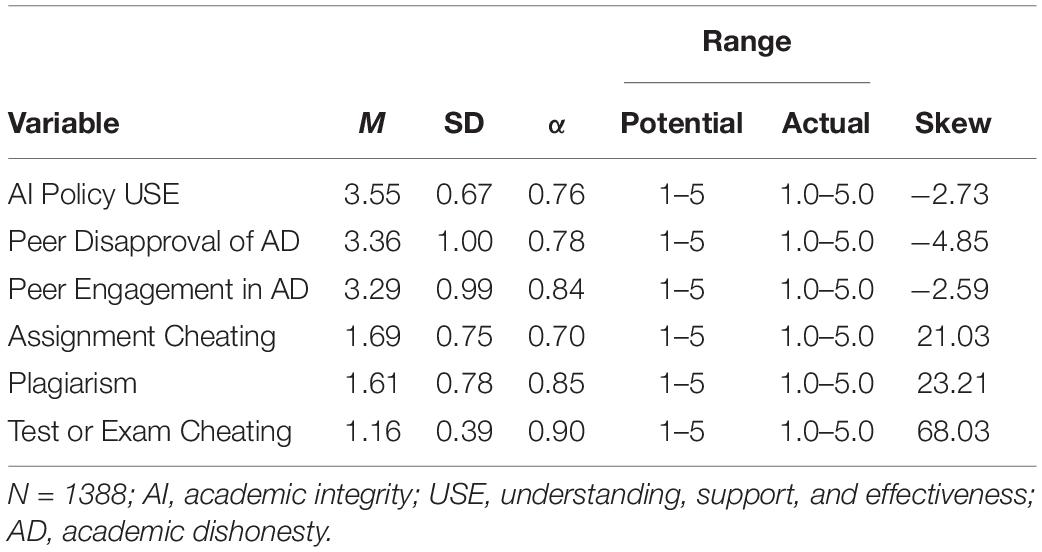

Descriptive Statistics of the Six Latent Factors

Full sample descriptive statistics (i.e., means, standard deviations, Cronbach’s alphas, potential and actual range of responses, and skew) of the six latent factors measured in this study are detailed in Table 4. Two details are most notable. First, although Cronbach’s alphas varied widely among the six factors – from a low of 0.70 for Assignment Cheating to a high of 0.90 for Test or Exam Cheating – all were either acceptable, good, or very good. Second, the three factors related to personal engagement in academic dishonesty were significantly skewed (where skew = skewness/standard error of skewness, and values >5.0 are considered large). In other words, for these three factors, a significantly greater proportion of participants used the first two points of the five-point scale (i.e., Never and Once or twice this year) relative to the latter end of the scale (i.e., About weekly and Almost daily). This was not unexpected as significant positive skews are typical on the AMIS (e.g., Stephens, 2018) as well as other measures of academic dishonesty (e.g., Anderman et al., 1998).
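The skew ratio defined above (skewness divided by its standard error) can be sketched as follows. This sketch assumes the bias-adjusted sample skewness and the standard error formula conventionally reported by statistical packages. The function name `skew_ratio` and the response data are hypothetical and are not the study's data.

```python
import math


def skew_ratio(values):
    """Return sample skewness divided by its standard error."""
    n = len(values)
    if n < 3:
        raise ValueError("at least three observations are required")
    mean = sum(values) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in values) / (n - 1))
    if sd == 0:
        raise ValueError("skewness is undefined for constant data")
    # Bias-adjusted sample skewness.
    skewness = n / ((n - 1) * (n - 2)) * sum(((x - mean) / sd) ** 3 for x in values)
    # Standard error of skewness, which depends only on n.
    se = math.sqrt(6 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))
    return skewness / se


# Hypothetical responses on a 1-5 scale (NOT the study's data): most
# respondents choose "never", which produces a strong positive skew.
hypothetical_responses = [1] * 80 + [2] * 15 + [5] * 5
ratio = skew_ratio(hypothetical_responses)
print(f"skew ratio = {ratio:.2f}; large skew: {ratio > 5.0}")
```

Because the standard error shrinks as the sample grows, even moderate asymmetry yields a large ratio in samples of this study's size.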

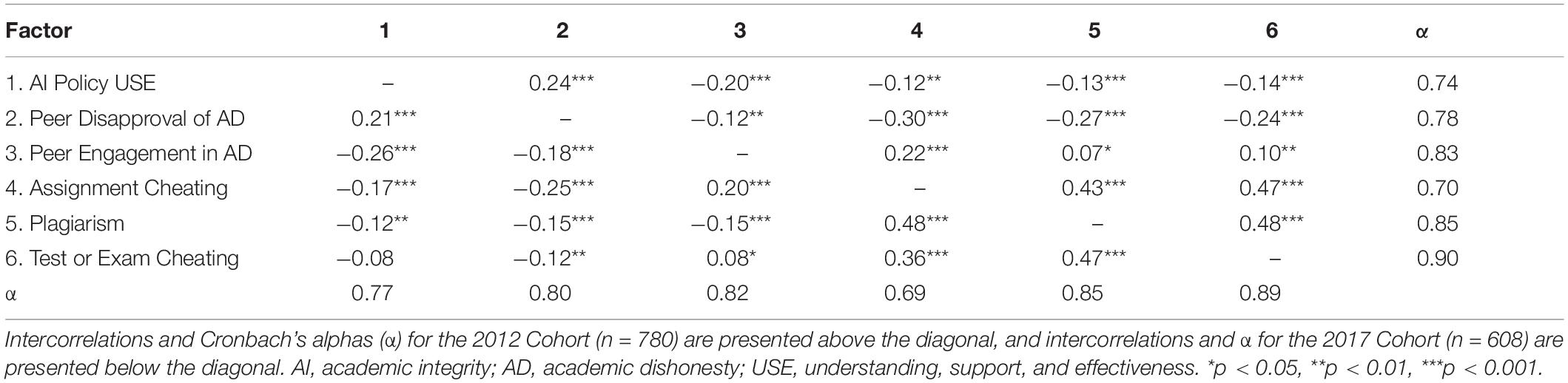
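The skew index defined above (skewness divided by its standard error) can be sketched in a few lines of Python. This is a minimal illustration, assuming the adjusted Fisher-Pearson skewness coefficient and the usual large-sample formula for its standard error; the section does not state which estimator was used, and the response data below are invented for the example.

```python
import math


def skew_ratio(values):
    """Return sample skewness divided by its standard error.

    Assumes the adjusted Fisher-Pearson coefficient (G1) and the standard
    error sqrt(6n(n-1) / ((n-2)(n+1)(n+3))); requires n > 2 and some variance.
    """
    n = len(values)
    mean = sum(values) / n
    m2 = sum((x - mean) ** 2 for x in values) / n  # second central moment
    m3 = sum((x - mean) ** 3 for x in values) / n  # third central moment
    g1 = m3 / m2 ** 1.5                            # population-style skewness
    adjusted = g1 * math.sqrt(n * (n - 1)) / (n - 2)
    std_error = math.sqrt(6 * n * (n - 1) / ((n - 2) * (n + 1) * (n + 3)))
    return adjusted / std_error


# Invented responses on a five-point scale, piled up at 1 ("Never").
responses = [1] * 70 + [2] * 20 + [3] * 6 + [4] * 3 + [5] * 1
print(skew_ratio(responses) > 5.0)  # compares against the >5.0 benchmark above
```

A distribution concentrated at the low end of the scale, as in the invented data, yields a large positive ratio, whereas a symmetric distribution yields a ratio near zero.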

Bivariate Correlations Among Latent Factors by Cohort

As detailed in Table 5, most of the intercorrelations among the six latent factors were statistically significant. The magnitude of the associations, however, was often small (r < 0.30), with the exception of those among the three types of academic dishonesty – assignment cheating, plagiarism, and test or exam cheating (r’s = 0.36 to 0.48, p < 0.001). Importantly, the pattern of results (i.e., the direction and strength of the correlation coefficients) was very similar for the two cohorts – all differences were < 0.10 with the exception of the association between assignment and test cheating (r = 0.47 in 2012 and 0.36 in 2017). Finally, as evidenced in the last column and row, the Cronbach’s alphas for all six latent factors were also very similar across the two cohorts.

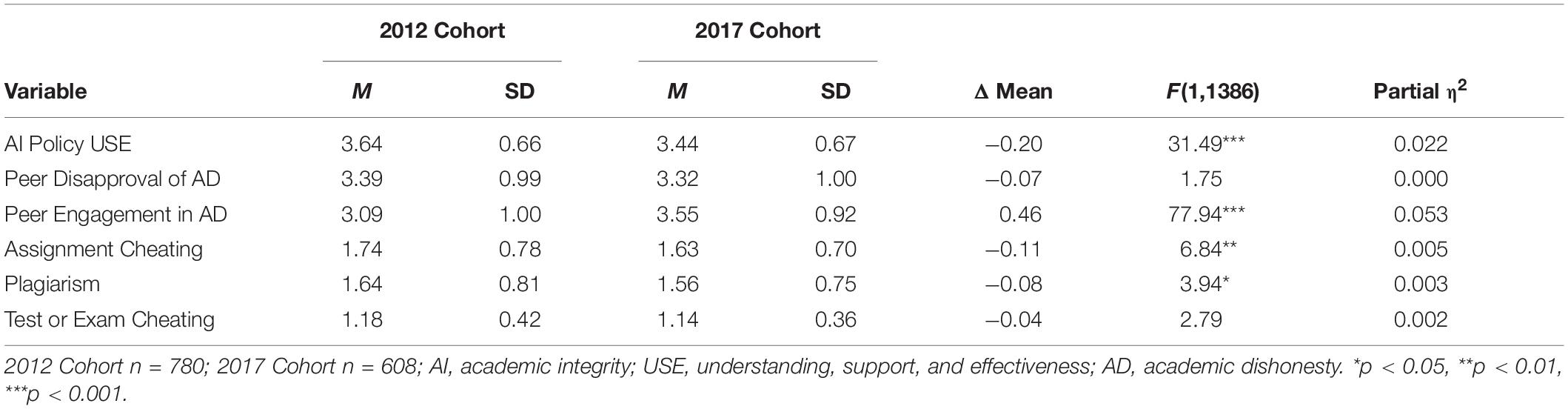

Hypothesis Testing

A series of ANOVAs was conducted to test the study’s four hypotheses. As detailed in Table 6, results offered mixed support. Contrary to the first hypothesis, the level of perceived understanding, support for, and effectiveness (USE) of university policies related to academic integrity was significantly lower (not higher) for the 2017 Cohort (i.e., participants who completed the AIC) compared to the 2012 Cohort (M’s = 3.44 and 3.66, respectively). Also contrary to hypotheses, there was no significant between-cohort difference in peer disapproval of academic dishonesty, and the level of perceived peer engagement in academic dishonesty was significantly higher (not lower) for the 2017 Cohort compared to the 2012 Cohort (M’s = 3.55 and 3.09, respectively). As hypothesized, participants in the 2017 Cohort reported significantly lower levels of engagement in both assignment cheating and plagiarism compared to participants in the 2012 Cohort; however, there was no significant difference in test/exam cheating. Finally, as indicated by the partial η² values, all of the observed differences were small in magnitude (η² values < 0.06).

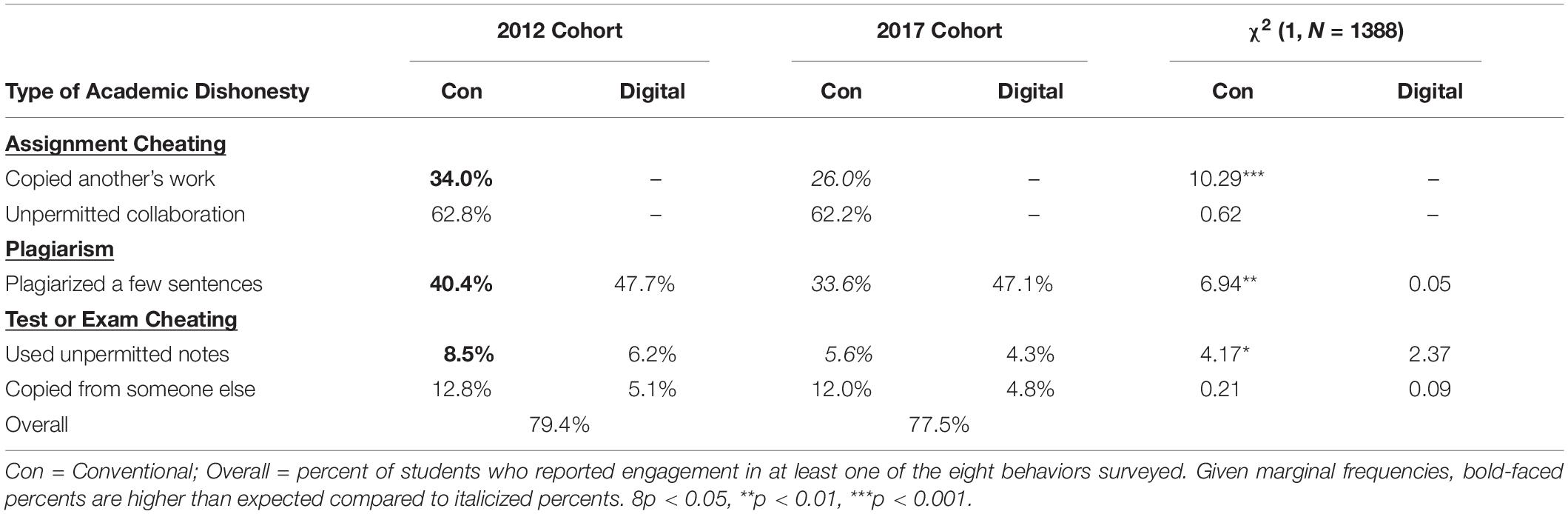
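For readers less familiar with the statistics reported above, the following Python sketch shows how an F statistic and η² can be computed for a one-way, between-groups comparison. It is a generic illustration rather than the authors' analysis script: the function name and the scores are hypothetical, and with a single factor partial η² is identical to η².

```python
def one_way_anova(*groups):
    """Return (F, eta_squared) for a one-way between-groups ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = sum(all_values) / len(all_values)

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - sum(g) / len(g)) ** 2 for g in groups for x in g)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)

    f_stat = (ss_between / df_between) / (ss_within / df_within)
    eta_squared = ss_between / (ss_between + ss_within)
    return f_stat, eta_squared


# Hypothetical factor scores for two cohorts (not data from the study).
cohort_2012 = [4, 3, 4, 5, 3, 4]
cohort_2017 = [3, 4, 3, 4, 2, 3]
f_stat, eta_squared = one_way_anova(cohort_2012, cohort_2017)
print(f"F = {f_stat:.2f}, eta squared = {eta_squared:.3f}")
```

Because η² is the share of total variance attributable to cohort membership, a difference can be statistically significant in a large sample and still correspond to a small η².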

In order to examine more closely the between-cohort differences in academic dishonesty, each of the eight behaviors was dichotomized (where 0 = Never did it and 1 = Did it at least once) and cross-tabulated. As detailed in Table 7, Chi-square analyses indicated significant between-cohort differences on three of the eight behaviors. With respect to assignment cheating, compared to the participants in the 2012 Cohort, significantly fewer participants in the 2017 Cohort reported that they had “copied another student’s work and submitted it as their own” (34 to 26%, respectively; a 23.5% reduction). A similar reduction (from 40.4% in 2012 to 33.6% in 2017; a 16.8% reduction) was observed for conventional plagiarism – “From a book, magazine, or journal (not on the Internet): Paraphrased or copied a few sentences or paragraphs without citing them in a paper you submitted.” There was no corresponding decrease for digital plagiarism, which nearly half of all students from both cohorts reported doing at least once in the past year.
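The dichotomize-and-cross-tabulate step described above amounts to a 2 × 2 chi-square test for each behavior. The sketch below is a minimal illustration, assuming an uncorrected Pearson chi-square with one degree of freedom; whether the authors applied a continuity correction is not stated here, and the counts are hypothetical round numbers rather than values from Table 7.

```python
import math


def chi_square_2x2(yes_a, no_a, yes_b, no_b):
    """Pearson chi-square (no continuity correction) for a 2 x 2 table.

    Rows are the two cohorts; columns are "did it at least once" and "never".
    Returns (chi2, p_value) with one degree of freedom.
    """
    n = yes_a + no_a + yes_b + no_b
    numerator = n * (yes_a * no_b - no_a * yes_b) ** 2
    denominator = (yes_a + no_a) * (yes_b + no_b) * (yes_a + yes_b) * (no_a + no_b)
    chi2 = numerator / denominator
    # With df = 1, the upper-tail probability reduces to erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Hypothetical counts: 34% of 1,000 versus 26% of 1,000 reporting a behavior.
chi2, p_value = chi_square_2x2(340, 660, 260, 740)
print(f"chi2 = {chi2:.2f}, p = {p_value:.5f}")
```

The same function returns a chi-square of zero when the two cohorts report a behavior at identical rates.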

The third (statistically) significant difference also concerned a decrease in a conventional form of dishonesty but not in its corresponding digital analog. Specifically, compared to the participants in the 2012 Cohort, significantly fewer participants in the 2017 Cohort reported that they had used “unpermitted notes or textbooks during a test or exam” (8.5 to 5.6%, respectively; a 34.1% reduction). Although the difference was not statistically significant, a lower percentage of 2017 participants than 2012 participants also reported using “unpermitted electronic notes (stored in a PDA, phone or calculator) during a test or exam” (6.2 to 4.3%, respectively; a 30.6% reduction). Finally, it is worth noting that nearly four out of five participants (regardless of cohort) reported engaging at least once in at least one of the eight behaviors described.

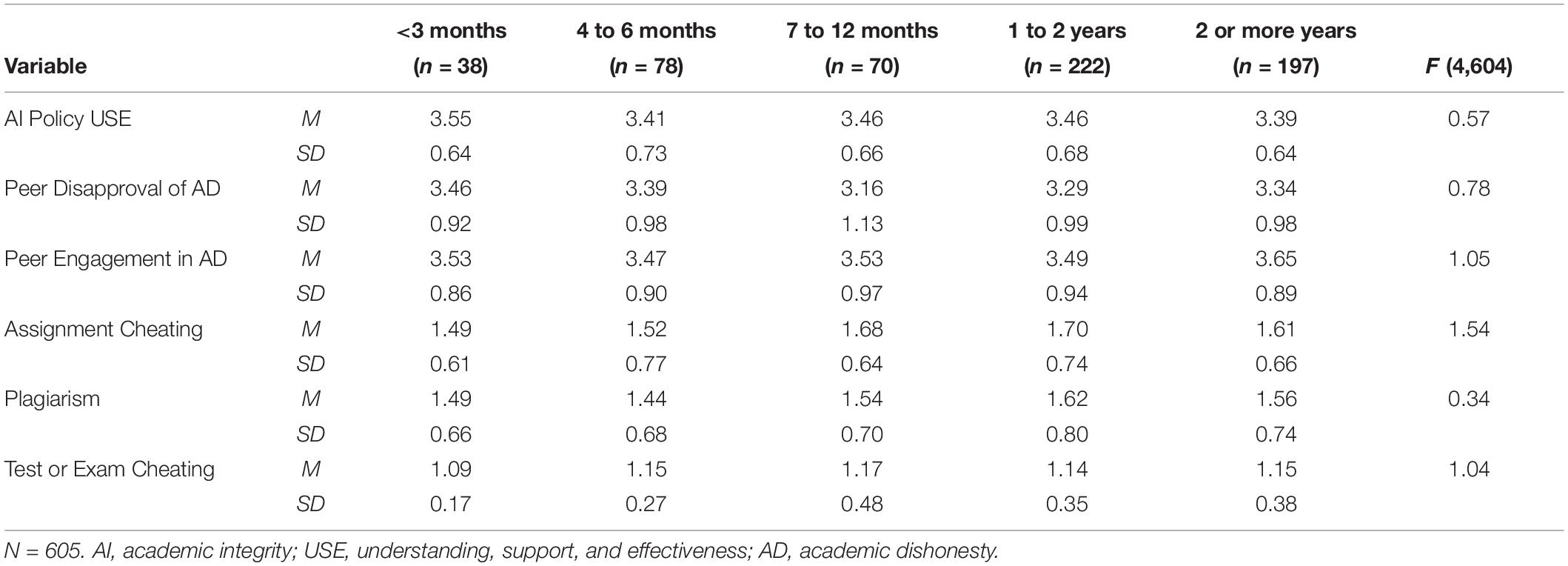
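The percentage reductions reported above are relative (not absolute) changes, that is, the drop in prevalence expressed as a share of the 2012 rate. The short Python sketch below reproduces that arithmetic from the percentages given in the text; the function name and the dictionary labels are introduced here for illustration only.

```python
def relative_reduction(before_pct, after_pct):
    """Return the percentage drop relative to the earlier rate."""
    return (before_pct - after_pct) / before_pct * 100


# Prevalence (% reporting the behavior at least once) in 2012 and 2017,
# as reported in the text above.
reported = {
    "copied another student's work": (34.0, 26.0),
    "conventional plagiarism": (40.4, 33.6),
    "unpermitted notes or textbooks": (8.5, 5.6),
    "unpermitted electronic notes": (6.2, 4.3),
}

for label, (pct_2012, pct_2017) in reported.items():
    change = relative_reduction(pct_2012, pct_2017)
    print(f"{label}: {change:.1f}% reduction")
```

For example, the fall from 34 to 26% is an eight-point absolute drop, which corresponds to a relative reduction of about 23.5%.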

Finally, ANOVA was used to determine if participants (from the 2017 cohort) who completed the AIC more recently (i.e., relative to the completion date of the survey) reported significantly different perceptions or behaviors compared to other participants. As detailed in Table 8, there were no significant differences on any of the six factors based on time elapsed between participants’ completion of the survey and the AIC. In short, contrary to the fifth hypothesis, participants who had completed the AIC within the past 3 months did not report greater understanding or peer disapproval nor lower levels of perceived peer or personal engagement in academic dishonesty, compared to participants who completed the AIC at any time further in the past (i.e., from 4 to 6 months or two or more years ago).

Discussion

The present study sought to extend the existing research on the effects of online academic integrity instruction on university students’ perceptions of and engagement in academic dishonesty. Previous research had shown such instruction to be effective in increasing knowledge and decreasing cheating, but results from the present investigation offered mixed evidence. Contrary to hypotheses, participants who completed the AIC reported: (1) significantly lower (not higher) levels of understanding, support, and effectiveness with respect to the University’s academic integrity policies; (2) statistically equivalent (not higher) levels of peer disapproval of academic dishonesty; and (3) significantly higher (not lower) levels of peer engagement in academic dishonesty. However, results related to participants’ personal engagement in academic dishonesty offered partial support for hypotheses – those who completed the AIC reported significantly lower rates of engagement on three of the eight behaviors included in the study (copying another student’s work, conventional plagiarism, and use of unpermitted notes during a test or exam). The effect sizes associated with all differences were small. Finally, contrary to hypotheses, there was no evidence of a recency effect on any of the six latent factors; participant responses did not vary as a function of the time elapsed between completion of the AIC and completion of the survey. In short, to the extent that the AIC had any effects on the perceptions and behaviors of those who completed it, they were not always as predicted and always modest.

Significance of Findings

The present investigation offers some potentially important insights concerning the implementation or delivery of online instruction related to academic integrity. While previous research had shown such courses or tutorials to be effective in increasing knowledge (e.g., Curtis et al., 2013; Cronan et al., 2017) and decreasing dishonesty (e.g., Belter and du Pré, 2009; Dee and Jacob, 2010; Owens and White, 2013; Zivcakova et al., 2014), their implementation and assessment were confined to a single course and a short period of time. The findings of this study suggest that online courses or tutorials may not be effective when delivered outside of or abstracted from a specific course. In other words, when delivered as a stand-alone registration requirement, online academic integrity instruction appears limited (if not ineffective) in changing students’ perceptions and behaviors related to academic integrity.

From a learning transfer perspective (e.g., Perkins and Salomon, 1992), the difference in results between previous studies and the present investigation is not unexpected. Specifically, while the former required only near transfer (a demonstration of learning in the same or similar context), the AIC (and others like it) demand far transfer (an impact in a context outside of or remote from the context of learning). The idea of far transfer – and disagreement about its possibilities – dates back to the early 1900s (cf. Woodworth and Thorndike, 1901; Judd, 1908; for a brief review, see Barnett and Ceci, 2002). This long-running disagreement over far transfer has been sustained for over a century because the empirical evidence for it has also been divided, particularly for knowledge and skills associated with critical thinking and problem-solving (cf. Brown, 1989; Sala and Gobet, 2017). Importantly, research has shown that far transfer is more likely to occur when participants are provided support, such as hints or other cues that prompt recall of the previous learning and its potential for generalization or applicability in the new situation (e.g., Gick and Holyoak, 1980; Halpern, 1998).

Transfer of learning, of course, presupposes that learning occurred in the first instance. Given that most of the hypotheses of the present study were not supported (i.e., there were no meaningful differences between participants who did and did not complete the AIC, combined with the absence of any recency effects), it is not clear how much was actually learned (i.e., processed and stored into memory) from completing the AIC. That said, much like the research on far transfer, recency effects (themselves a type of near “temporal” transfer, see Barnett and Ceci, 2002) are also aided and amplified by prompts and reinforcement (e.g., Lambert and Yanson, 2017). Jones et al. (2006), for example, provided evidence of a “decisional recency effect,” whereby individuals weigh “recent information more heavily, which produces a tendency to choose responses or actions that have recently been reinforced” (p. 316). In retrospect, given the context in which the AIC was completed (as a registration requirement and not integrated into any academic course or program of study), the failure to find support for this study’s hypotheses is not so surprising (even if somewhat disappointing).

With this in mind, the null findings of this study do not mean that the AIC is useless and unnecessary, but rather that it is insufficient when delivered independent of any other efforts to strengthen and support the use of the principles and skills it hopes to teach. Such efforts would include requiring (or at least encouraging) university instructors to reference the AIC in their courses, not only to remind students of the principles and practices associated with academic integrity but to offer specific guidance on how those principles and practices are relevant to their courses. More generally, as argued elsewhere (e.g., Bertram Gallant, 2011; Wangaard and Stephens, 2011; Seider, 2012; Alzona, 2014; Stephens, 2015, 2019), more holistic approaches are needed to create a culture of integrity and reduce academic dishonesty. One-off interventions, however well-designed, are unlikely to ameliorate the long-standing epidemic of academic dishonesty.

Limitations and Future Directions

The present study used an observational research design, a “natural experiment” without true random assignment or control over the administration of the intervention. While convenient and cost-effective, this research design presents limits with respect to making firm causal claims (Campbell and Fiske, 1959). Accordingly, where possible, future studies should use longitudinal or (true) experimental designs to assess with more certainty the effects of interventions such as the AIC. Secondly, these future studies should assess for more and/or different potential outcomes. The present study measured only a handful of perceptions and self-reported behaviors related to academic integrity. Future research should include assessment of other factors such as future behavioral intentions as well as actual knowledge gains associated with completing courses like the AIC and the effects of those gains on demonstrable outcomes (including but not limited to academic dishonesty).

Conclusion

The results of the present study suggest that short, stand-alone courses on academic integrity have only modest, if any, effects on students’ perceptions and behavior. While previous research has shown such courses capable of producing substantial reductions in academic dishonesty (plagiarism, in particular), these courses were required in the context of a specific course. The AIC, in contrast, was not linked to any specific course or program, and its underwhelming effects were likely caused by a failure to transfer. The findings suggest that requiring students to complete a course like the AIC may be useful, but only as part of a more comprehensive approach to promoting academic integrity.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the University of Auckland Human Participants Ethics Committee in 2012 (Reference Number 2009/C/026) and again in 2017 (Reference Number 019881). The patients/participants provided their written informed consent to participate in this study.

Author Contributions

JS: conceptualization, formal analysis, writing – original draft of methods, results, discussion, writing, review, and editing of complete manuscript. PW: project administration, writing – original draft of literature review, writing, review, and editing of complete manuscript. MA: project administration, data entry, and formal analysis. GL: data entry, research for literature review, and writing – original draft of literature review. ST: data entry and research for literature review. All authors contributed to the article and approved the submitted version.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

- ^ https://www.wm.edu/offices/deanofstudents/services/communityvalues/honorcodeandcouncils/honorcode/index.php

- ^ https://spark.library.yorku.ca/academic-integrity-module-objectives/

- ^ https://www.auckland.ac.nz/en/students/forms-policies-and-guidelines/student-policies-and-guidelines/academic-integrity-copyright/academic-integrity-course.html

References

Alzona, R. (2014). Creating A Culture of Honesty, Integrity, Article. Business Mirror. Available online at: http://go.galegroup.com.ezproxy.auckland.ac.nz/ps/i.do?id=GALE%7CA379785136&v=2.1&u=learn&it=r&p=ITOF&sw=w&asid=2ddf9b0c98cf1e20f510f3fe6cafb04f (accessed August 23, 2014).

Anderman, E. M., Griesinger, T., and Westerfield, G. (1998). Motivation and cheating during early adolescence. J. Educ. Psychol. 90, 84–93. doi: 10.1037/0022-0663.90.1.84

Barnett, S. M., and Ceci, S. J. (2002). When and where do we apply what we learn? A taxonomy for far transfer. Psychol. Bull. 128, 612–637. doi: 10.1037/0033-2909.128.4.612

Belter, R. W., and du Pré, A. (2009). A strategy to reduce plagiarism in an undergraduate course. Teach. Psychol. 36, 257–261. doi: 10.1080/00986280903173165

Bertram Gallant, T. (2008). Academic Integrity in the Twenty-First Century: A Teaching and Learning Imperative. San Francisco, CA: Jossey-Bass.

Bertram Gallant, T. (2011). Creating the Ethical Academy: A Systems Approach to Understanding Misconduct and Empowering Change in Higher Education. Abingdon: Routledge.

Bowers, W. J. (1964). Student Dishonesty and its Control in College. New York, NY: Columbia University Bureau of Applied Social Research.

Brown, A. L. (1989). “Analogical learning and transfer: what develops?,” in Similarity and Analogical Reasoning, eds S. Vosniadou and A. Ortony (New York, NY: Cambridge University Press), 369–412. doi: 10.1017/cbo9780511529863.019

Campbell, D. T., and Fiske, D. W. (1959). Convergent and discriminant validation by the multitrait-multimethod matrix. Psychol. Bull. 56, 81–105. doi: 10.1037/h0046016

Chen, F. F. (2007). Sensitivity of goodness of fit indexes to lack of measurement invariance. Struct. Equ. Modeling 14, 464–504. doi: 10.1080/10705510701301834

Cronan, T. P., McHaney, R., Douglas, D. E., and Mullins, J. K. (2017). Changing the academic integrity climate on campus using a technology-based intervention. Ethics Behav. 27, 89–105. doi: 10.1080/10508422.2016.1161514

Curtis, G. J., Gouldthorp, B., Thomas, E. F., O’Brien, G. M., and Correia, H. M. (2013). Online academic-integrity mastery training may improve students’ awareness of, and attitudes toward, plagiarism. Psychol. Learn. Teach. 12, 282–289. doi: 10.2304/plat.2013.12.3.282

Day, N., Hudson, D., Dobies, P., and Waris, R. (2011). Student or situation? Personality and classroom context as predictors of attitudes about business school cheating. Soc. Psychol. Educ. 14, 261–282. doi: 10.1007/s11218-010-9145-8

Dee, T. S., and Jacob, B. A. (2010). Rational Ignorance in Education: A Field Experiment in Student Plagiarism (Working Paper No. 15672). Cambridge, MA: National Bureau of Economic Research.

Eisenberg, J. (2004). To cheat or not to cheat: effects of moral perspective and situational variables on students’ attitudes. J. Moral Educ. 33, 163–178. doi: 10.1080/0305724042000215276

Gick, M. L., and Holyoak, K. J. (1980). Analogical problem solving. Cogn. Psychol. 12, 306–355. doi: 10.1016/0010-0285(80)90013-4

Guttman, A. (2004). Sports: The First Five Millennia. Amherst, MA: University of Massachusetts Press.

Halpern, D. F. (1998). Teaching critical thinking for transfer across domains: disposition, skills, structure training, and metacognitive monitoring. Am. Psychol. 53, 449–455. doi: 10.1037/0003-066X.53.4.449

Hartshorne, H., and May, M. A. (1928). Studies in the Nature of Character: Studies in Deceit, Vol. 1. New York, NY: Macmillan.

Hu, L. T., and Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Struct. Equ. Modeling 6, 1–55. doi: 10.1080/10705519909540118

Jones, M., and Sieck, W. R. (2003). Learning myopia: an adaptive recency effect in category learning. J. Exp. Psychol. Learn. Mem. Cogn. 29, 626–640. doi: 10.1037/0278-7393.29.4.626

Jones, M., Love, B. C., and Maddox, W. T. (2006). Recency effects as a window to generalization: separating decisional and perceptual sequential effects in category learning. J. Exp. Psychol. Learn. Mem. Cogn. 32, 316–332. doi: 10.1037/0278-7393.32.3.316

Jones, N. (2014). Uni Cheats: Hundreds Punished. New Zealand Herald. Available online at: https://www.nzherald.co.nz/nz/news/article.cfm?c_id=1&objectid=11240751 (accessed April 19, 2014).

Judd, C. H. (1908). The relation of special training and general intelligence. Educ. Rev. 36, 28–42.

Lambert, A., and Yanson, R. (2017). E-learning for professional development: preferences in learning method and recency effect. J. Appl. Bus. Econ. 19, 51–63.

Lang, J. M. (2013). Cheating Lessons: Learning From Academic Dishonesty. Cambridge, MA: Harvard University Press.

Leming, J. S. (1978). Cheating behavior, situational influence, and moral development. J. Educ. Res. 71, 214–217. doi: 10.1080/00220671.1978.10885074

Lupton, R. A., and Chapman, K. J. (2002). Russian and American college students’ attitudes, perceptions and tendencies towards cheating. Educ. Res. 33, 17–27. doi: 10.1080/00131880110081080

Lupton, R. A., Chapman, K. J., and Weiss, J. E. (2000). A cross-national exploration of business students’ attitudes, perceptions, and tendencies toward academic dishonesty. J. Educ. Bus. 75, 231–235. doi: 10.1080/08832320009599020

Ma, Y., McCabe, D., and Liu, R. (2013). Students’ academic cheating in Chinese Universities: prevalence, influencing factors, and proposed action. J. Acad. Ethics 11, 169–184. doi: 10.1007/s10805-013-9186-7

McCabe, D. L. (2005). Cheating among college and university students: a North American perspective. Int. J. Educ. Integr. 1, 1–11.

McCabe, D. L., and Trevino, L. K. (1993). Academic dishonesty: honor codes and other contextual influences. J. High. Educ. 64, 522–538. doi: 10.1080/00221546.1993.11778446

McCabe, D. L., and Trevino, L. K. (1997). Individual and contextual influences on academic dishonesty: a multicampus investigation. Res. High. Educ. 38, 379–396.

McCabe, D. L., Trevino, L. K., and Butterfield, K. D. (1999). Academic integrity in honor code and non-honor code environments: a qualitative investigation. J. High. Educ. 70, 211–234. doi: 10.2307/2649128

McLeod, S. A. (2012). Experimental Method: Simply Psychology. Available online at: https://www.simplypsychology.org/experimental-method.html (accessed January 14, 2012).

McNeilage, A., and Visentin, L. (2014). Students Enlist MyMaster Website to Write Essays, Assignments. Sydney Morning Herald. Available online at: https://www.smh.com.au/education/students-enlist-mymaster-website-to-write-essays-assignments-20141110-11k0xg.html (accessed March 21, 2020).

Medina, J., Benner, K., and Taylor, K. (2019). Actresses, Business Leaders and Other Wealthy Parents Charged in U.S. College Entry Fraud. New York Times, (Section A), 1. Available online at: https://www.nytimes.com/2019/03/12/us/college-admissions-cheating-scandal.html (accessed March 21, 2020).

Morgeson, F. P., and Campion, M. A. (1997). Social and cognitive sources of potential inaccuracy in job analysis. J. Appl. Psychol. 82, 627–655. doi: 10.1037/0021-9010.82.5.627

Murdock, T. B., Miller, A., and Kohlhardt, J. (2004). Effects of classroom context variables on high school students’ judgments of the acceptability and likelihood of cheating. J. Educ. Psychol. 96, 765–777. doi: 10.1037/0022-0663.96.4.765

Murdock, T. B., Stephens, J. M., and Grotewiel, M. M. (2016). “Student dishonesty in the face of assessment: who, why, and what we can do about it,” in Handbook of Human and Social Conditions in Assessment, eds G. T. L. Brown and L. R. Harris (Abingdon: Routledge), 186–203.

Owens, C., and White, F. A. (2013). A 5-year systematic strategy to reduce plagiarism among first-year psychology university students. Aust. J. Psychol. 65, 14–21. doi: 10.1111/ajpy.12005

Perez-Pena, R., and Bidgood, J. (2012). Harvard Says 125 Students May Have Cheated on A Final Exam. The New York Times, A18. Available online at: http://www.nytimes.com/2012/08/31/education/harvard-says-125-students-may-have-cheated-on-exam.html?ref=harvarduniversity (accessed March 21, 2020).

Perkins, D. N., and Salomon, G. (1992). “Transfer of learning,” in International Encyclopedia of Education, Vol. 2 (Oxford: Pergamon Press), 6452–6457.

Sala, G., and Gobet, F. (2017). Does far transfer exist? Negative evidence from chess, music, and working memory training. Curr. Dir. Psychol. Sci. 26, 515–520. doi: 10.1177/0963721417712760

Schwartz, B. M., Tatum, H. E., and Hageman, M. C. (2013). College students’ perceptions of and responses to cheating at traditional, modified, and non-honor system institutions. Ethics Behav. 23, 463–476. doi: 10.1080/10508422.2013.814538

Seider, S. (2012). Character Compass: How Powerful School Culture can Point Students Towards Success. Cambridge, MA: Harvard Education Press.

Stephens, J. M. (2015). “Creating cultures of integrity: a multi-level intervention model for promoting academic honesty,” in Handbook of Academic Integrity, ed. T. A. Bretag (Singapore: Springer), 995–1007. doi: 10.1007/978-981-287-098-8_13

Stephens, J. M. (2018). Bridging the divide: the role of motivation and self-regulation in explaining the judgment-action gap related to academic dishonesty. Front. Psychol. 9:246. doi: 10.3389/fpsyg.2018.00246

Stephens, J. M. (2019). Natural and normal, but unethical and evitable: the epidemic of academic dishonesty and how we end it. Change Mag. High. Learn. 51, 8–17. doi: 10.1080/00091383.2019.1618140

Stephens, J. M., and Gehlbach, H. S. (2007). “Under pressure and under-engaged: motivational profiles and academic cheating in high school,” in Psychology of Academic Cheating, eds E. M. Anderman and T. B. Murdock (Amsterdam: Academic Press), 107–139. doi: 10.1016/b978-012372541-7/50009-7

Stephens, J. M., and Nicholson, H. (2008). Cases of incongruity: exploring the divide between adolescents’ beliefs and behaviors related to academic cheating. Educ. Stud. 34, 361–376. doi: 10.1080/03055690802257127

Stephens, J. M., Romakin, V., and Yukhymenko, M. (2010). Academic motivation and misconduct in two cultures: a comparative analysis of U.S. and Ukrainian undergraduates. Int. J. Educ. Integr. 6, 47–60. doi: 10.21913/IJEI.v6i1.674

Stephens, J. M., and Wangaard, D. B. (2013). Using the epidemic of academic dishonesty as an opportunity for character education: a three-year mixed methods study (with mixed results). Peabody J. Educ. 88, 159–179. doi: 10.1080/0161956X.2013.775868

Stoesz, B. M., and Yudintseva, A. (2018). Effectiveness of tutorials for promoting educational integrity: a synthesis paper. Int. J. Educ. Integr. 14:6. doi: 10.1007/s40979-018-0030-0

Ullman, J. B., and Bentler, P. M. (2003). “Structural equation modeling,” in Handbook of Psychology, ed. I. B. Weiner (New Jersey, NJ: John Wiley & Sons), 607–634.

Universities Australia (2017). UA Academic Integrity Best Practices Principles. Deakin, ACT: Universities Australia.

Visentin, L. (2015). Macquarie University Revokes Degrees for Students Caught Buying Essays in MyMaster Cheating Racket. The Sydney Morning Herald. Available online at: http://www.smh.com.au/national/education/macquarie-university-revokes-degrees-for-students-caught-buying-essays-in-mymaster-cheating-racket-20150528-ghba3z.html (accessed March 21, 2020).

Walker, J. (2010). Measuring plagiarism: researching what students do, not what they say they do. Stud. High. Educ. 35, 41–59. doi: 10.1080/03075070902912994

Wangaard, D. B., and Stephens, J. M. (2011). Creating A Culture of Academic Integrity: A Tool Kit for Secondary Schools. Minneapolis, MN: Search Institute.

Weeks, J. (2019). More Cheating Cases at University of Auckland, Union Warns of Ghostwriting Threat. Stuff. Available online at: https://www.stuff.co.nz/national/education/113545622/more-cheating-cases-at-university-of-auckland-union-warns-of-ghostwriting-threat (accessed June 27, 2019).

Woodworth, R. S., and Thorndike, E. L. (1901). The influence of improvement in one mental function upon the efficiency of other functions. Psychol. Rev. 8, 247–261. doi: 10.1037/h0074898

Appendix A

Topical Outline of The University of Auckland’s Academic Integrity Course

Module 1: Academic integrity at the University

1.1 What is academic integrity?

1.2 Understanding the academic environment

1.3 The University of Auckland Graduate Profiles

Module 2: Avoiding academic dishonesty

2.1 Examples of academic dishonesty

2.2 When working in groups…

2.3 When getting and giving help…

Module 3: Using and acknowledging the work of others

3.1 Quoting, paraphrasing and summarizing

3.2 Citing and referencing

3.3 Avoid plagiarism

Module 4: Using copyrighted material correctly

4.1 What is copyright?

4.2 How to use copyrighted material?

4.3 What is Creative Commons? Intellectual property (IP)

Module 5: Consequences of academic dishonesty at university

5.1 What happens if someone is academically dishonest?

5.2 What happens if someone cheats during an exam?

5.3 Equip yourself

Appendix B

Assignment Instructions Provided to Students (abbreviated)

The purpose of this assignment is to provide you with an opportunity to learn about research methods and reporting practices as well as to deepen your understanding related to some of the theories we have been (or will be) reading about and discussing in this course. To do so, we have decided to structure this assignment around the principles of project-based learning and social constructivism more broadly (e.g., active participation, self-regulation, social interaction and construction of knowledge). The project explored in this assignment concerns academic dishonesty – a serious problem in schools and universities around the world (e.g., Murdock et al., 2016). We have created a shortened form of a questionnaire (the AMIS-SF) comprising “measures” related to academic motivation and moral judgment that have been used in the research literature to investigate the problem of academic dishonesty (Anderman et al., 1998; Murdock et al., 2004; Stephens and Gehlbach, 2007; Stephens and Nicholson, 2008; Stephens et al., 2010). As indicated above, this Assignment has two parts.

You are asked to recruit a total of [8 in 2012, 15 in 2017] UOA students (from at least two different faculties) to complete the AMIS-SF. This questionnaire comprises approximately 30 items that measure various perceptions and behaviors related to academic integrity. These topics are covered in your textbook and will be discussed in lectures 3 and 7 during the semester. In addition, you will also receive readings and in-course training related to the research procedures (e.g., when, where, and how to approach potential participants) and ethical principles (e.g., autonomy, confidentiality, and non-maleficence) inherent in conducting survey research. These procedures and principles are of the utmost importance in conducting “good” research; research that not only produces valid and reliable data but also demonstrates respect for study participants throughout the research process.

Below is a summary of the procedures and principles you must follow when carrying-out this part of the assignment:

• All potential participants must be approached in public spaces on or around the UOA city campus.

• As you approach potential participants, please introduce yourself and state your purpose clearly:

∘ Hello, my name is ______ and I’m conducting some research for an assignment in my educational psychology course. If you’re a student at the UOA, like me, I’m hoping you might have a few minutes to complete an anonymous survey. Here [hand over PIS] is an information sheet detailing the study and your rights as a participant. [Allow time to read]. Are you willing to participate?

∘ [If no] No worries, thanks for considering it. Have a great day [move on].

∘ [If yes]. Thanks for your willingness to participate. Here’s the Questionnaire [hand it over, along with the sealable envelope and a pen, if needed]. It should take approximately 5 min to complete. Please remember that the survey is anonymous, so please do not write your name or any identification numbers on it or the envelope. Please also recall that your participation is voluntary, and you may stop at any time without giving a reason. After you’ve completed the survey, please place it in the envelope and seal it before returning it to me. Please let me know if you have any questions.

∘ NOTE: Please give the participant time and space to enter responses privately – do not attempt to look at their response, and only receive questionnaires once they have been sealed in the envelope.

• After you have collected the sealed envelope, it should be mixed amongst the other envelopes you’ve collected and remain sealed until all Questionnaires are collected.

Keywords: natural experiment, tertiary students, academic dishonesty, online instruction and learning, academic integrity initiatives

Citation: Stephens JM, Watson PWStJ, Alansari M, Lee G and Turnbull SM (2021) Can Online Academic Integrity Instruction Affect University Students’ Perceptions of and Engagement in Academic Dishonesty? Results From a Natural Experiment in New Zealand. Front. Psychol. 12:569133. doi: 10.3389/fpsyg.2021.569133

Received: 03 June 2020; Accepted: 26 January 2021;

Published: 17 February 2021.

Edited by:

Douglas F. Kauffman, Medical University of the Americas – Nevis, United States

Reviewed by:

Michael S Dempsey, Boston University, United States

Meryem Yilmaz Soylu, University of Nebraska–Lincoln, United States

Copyright © 2021 Stephens, Watson, Alansari, Lee and Turnbull. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jason Michael Stephens, jm.stephens@auckland.ac.nz

Jason Michael Stephens

Penelope Winifred St John Watson

Mohamed Alansari

Grace Lee

Steven Martin Turnbull