- ¹Department of Communication, Stanford University, Stanford, CA, United States

- ²Department of Psychiatry and Behavioral Sciences, School of Medicine, Stanford University, Stanford, CA, United States

Virtual reality (VR) has been proposed as a methodological tool to study the basic science of psychology and other fields. One key advantage of VR is that sharing of virtual content can lead to more robust replication and representative sampling. A database of standardized content will help fulfill this vision. This study has two objectives. First, we seek to establish, and allow public access to, a database of immersive VR video clips that can serve as a resource for studies on emotion induction using virtual reality. Second, because a large sample of participants was needed to obtain reliable valence and arousal ratings for our videos, we were able to explore possible links between observers' head movements and the emotions they feel while viewing immersive VR. To accomplish these goals, we sourced and tested 73 immersive VR clips, which participants rated on valence and arousal using self-assessment manikins. We also tracked participants' rotational head movements as they watched the clips, allowing us to correlate head movements with affect. Based on past research, we predicted relationships between the standard deviation of head yaw and valence and arousal ratings. Results showed that the stimuli varied reasonably well along the dimensions of valence and arousal, with a slight underrepresentation of clips that are of negative valence and highly arousing. The standard deviation of yaw correlated positively with valence, and a significant positive relationship was found between head pitch and arousal. The immersive VR clips tested are available online as supplemental material.

Introduction

Blascovich et al. (2002) proposed the use of virtual reality (VR) as a methodological tool to study the basic science of psychology and other fields. Since then, there has been a steady increase in the number of studies that use VR as a tool (Schultheis and Rizzo, 2001; Fox et al., 2009). Some studies use VR to examine how humans respond to virtual social interactions (Dyck et al., 2008; Schroeder, 2012; Qu et al., 2014) or as a tool for exposure therapy (Difede and Hoffman, 2002; Klinger et al., 2005), while others employ VR to study phenomena that might otherwise be impossible to recreate or manipulate in real life (Slater et al., 2006; Peck et al., 2013). In recent years, the cost of a typical hardware setup has decreased dramatically, allowing researchers to implement compelling VR for less than the typical price of a laptop. One of the key advantages of VR for the study of social science is that sharing of virtual content will allow "not only for cross-sectional replication but also for more representative sampling" (Blascovich et al., 2002). What is needed to fulfill this vision is a database of standardized content.

The immersive video (or immersive VR clip) is one powerful and realistic aspect of VR. It shows a photorealistic video of a scene that updates based on head orientation but is not otherwise interactive (Slater and Sanchez-Vives, 2016). When a viewer watches an immersive VR clip, he sees a 360° view from the point where the video was originally recorded; changes in head orientation are rendered accurately, but these videos typically do not allow for head translation. Such a video is recorded using multiple cameras, and the footage is stitched together in software to form a complete surround scene. In this sense, creating content for immersive video is fairly straightforward, and consequently a wealth of content is publicly available on social media sites (Multisilta, 2014).

To accomplish the goal of a VR content database, we sourced and created a library of immersive VR clips that can act as a resource for scholars, paralleling the design used in prior studies on affective picture viewing (e.g., International Affective Picture System, IAPS; Lang et al., 2008). The IAPS is a large set of photographs developed to provide emotional stimuli for psychological and behavioral studies on emotion and mood induction. Participants are shown photographs and asked to rate each on the dimensions of valence and arousal. While the IAPS and its acoustic counterpart, the International Affective Digitized Sounds (IADS; Bradley and Lang, 1999), are well established and used extensively in emotion research, to our knowledge no database of immersive VR content that can potentially induce emotions exists. We therefore set out to explore whether we could establish a database of immersive VR clips for emotion induction based on the affective responses of participants.

Most VR systems allow a user a full 360° view through head rotation, such that the content updates based on the particular orientation of the head. In this sense, the so-called field of regard is larger in VR than in traditional media such as television, whose image does not change when a viewer moves her head away from the screen. This often allows VR to trigger strong emotions in individuals (Riva et al., 2007; Parsons and Rizzo, 2008). However, few studies have examined the relationship between head movements in VR and emotions. Darwin (1965) discussed the idea of head postures representing emotional states. When one is happy, he holds his head up high; conversely, when he is sad, his head tends to hang low. Indeed, more recent research has provided empirical evidence for these relationships (Schouwstra and Hoogstraten, 1995; Wallbott, 1998; Tracy and Matsumoto, 2008).

An early study that investigated the influence of body movements on presence in virtual environments found a significant positive association between head yaw and reported presence (Slater et al., 1998). In a study on head movements in VR, participants saw themselves in a virtual classroom and took part in a learning experience (Won et al., 2016). Results showed a relationship between lateral head rotations and anxiety: the standard deviation of head yaw significantly correlated with the awareness and concern individuals had regarding other virtual people in the room. Livingstone and Palmer (2016) asked vocalists to speak and sing passages of varying emotions (e.g., happy, neutral, sad) and tracked their head movements using motion capture technology. Findings revealed a significant relationship between head pitch and emotions. Participants raised their heads when vocalizing passages that conveyed happiness and excitement and lowered their heads for those of a sad nature. Understanding the link between head movements in VR and emotions may be key to the development and implementation of VR in the study and treatment of psychological disorders (Wiederhold and Wiederhold, 2005; Parsons et al., 2007).

This study has two objectives. First, we seek to establish, and allow public access to, a database of immersive VR clips that can serve as a resource for studies on emotion induction using virtual reality. Second, because we need a large sample of participants to obtain reliable valence and arousal ratings for our videos, we are in a unique position to explore possible links between head movements and the emotions one feels while viewing immersive VR. To accomplish these goals, we sourced and tested 73 immersive VR clips, which participants rated on valence and arousal using self-assessment manikins. These clips are available online as supplemental material. We also tracked participants' rotational head movements as they watched the clips, allowing us to correlate the observers' head movements with affect. Based on past research (Won et al., 2016), we predicted significant relationships between the standard deviation of head yaw and valence and arousal ratings.

Methods

Participants

Participants were undergraduates from a medium-sized West Coast university who received course credit for their participation. In total, 95 participants (56 female) between the ages of 18 and 24 took part in the study.

Stimulus and Measures

The authors spent 6 months searching for immersive VR clips that they thought would effectively induce emotions. Sources included personal contacts and internet searches on websites such as YouTube, Vrideo, and Facebook. In total, more than 200 immersive VR clips were viewed and assessed, of which 113 were shortlisted for further analysis. The experimenters evaluated these clips and conducted a further round of selection based on the criteria employed by Gross and Levenson (1995). First, the clips had to be relatively short; this is especially important because longer clips may induce fatigue and nausea among participants. Second, the clips had to be understandable on their own without further explanation, so clips that were sequels or part of an episodic series were excluded. Third, the clips had to be likely to induce responses along the valence and arousal dimensions, the aim being a good spread of videos across both dimensions. A final set of 73 immersive VR clips was selected for the study. They ranged from 29 to 668 s in length, with an average of 188 s per clip.

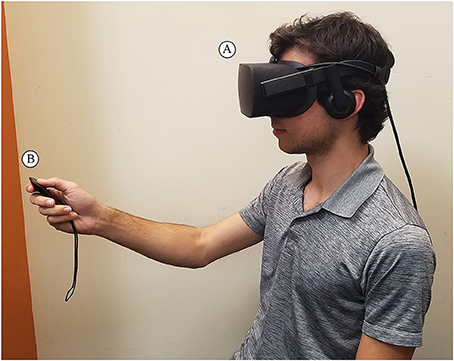

Participants viewed the immersive VR clips through an Oculus Rift CV1 (Oculus VR, Menlo Park, CA) head-mounted display (HMD). The Oculus Rift has a resolution of 2,160 × 1,200 pixels, a 110° field of view and a refresh rate of 90 Hz. The low-latency tracking technology determines the relative position of the viewer's head and adjusts his view of the immersive video accordingly. Participants interacted with on-screen prompts and rated the videos using an Oculus Rift remote. Vizard 5 software (Worldviz, San Francisco, CA) was used to program the rating system. The software ran on a 3.6 GHz Intel i7 computer with an Nvidia GTX 1080 graphics card. The experimental setup is shown in Figure 1.

Figure 1. The experimental setup depicting a participant (A) wearing an Oculus Rift HMD to view the immersive VR clips, and (B) holding an Oculus Rift remote to select his affective responses to his viewing experience.

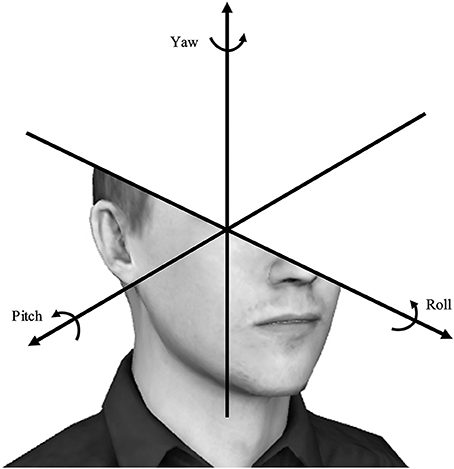

The Oculus Rift HMD features a magnetometer, gyroscope, and accelerometer, which combine to track rotational head movement. The data were captured digitally and comprised the pitch, yaw, and roll of the head. These are standard terms for rotations around the respective axes and are measured in degrees. Pitch refers to rotation of the head around the X-axis, as in nodding. Yaw refers to rotation around the Y-axis, as in turning the head side to side to indicate "no." Roll refers to rotation around the Z-axis, as in tilting the head from one shoulder toward the other. These movements are presented in Figure 2. As discussed earlier, Won et al. (2016) found a relationship between lateral head rotations and anxiety: scanning behavior, defined as the standard deviation of head yaw, significantly correlated with the awareness and concern people had of virtual others. In this study, we similarly assessed how much participants moved their heads by calculating the standard deviations of the pitch, yaw, and roll of their head movements while they watched each clip, and included these as our variables.
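The head-movement variables described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' pipeline: each viewing is assumed to be a list of (pitch, yaw, roll) samples in degrees, and per-viewing standard deviations are averaged across all viewers of the same clip, which is our reading of the clip-level analysis reported in the Results (df = 71 for 73 clips). Because participants sat on a swivel chair and could turn past ±180°, the sketch optionally unwraps yaw before taking its standard deviation; whether the original analysis did this is not stated, so `unwrap_yaw` is a hypothetical option.

```python
"""Hypothetical sketch: per-clip averages of head-movement SDs (pitch, yaw, roll)."""
from collections import defaultdict
from statistics import mean, stdev

AXES = ("pitch", "yaw", "roll")


def unwrap_degrees(angles):
    """Remove artificial 360-degree jumps so a turn through +/-180 stays continuous."""
    if not angles:
        return []
    out = [angles[0]]
    for a in angles[1:]:
        # Map the raw step into [-180, 180) before accumulating it.
        step = (a - out[-1] + 180.0) % 360.0 - 180.0
        out.append(out[-1] + step)
    return out


def movement_sds(samples, unwrap_yaw=True):
    """Sample SD of each axis for one viewing; samples are (pitch, yaw, roll) in degrees."""
    pitch, yaw, roll = (list(axis) for axis in zip(*samples))
    if unwrap_yaw:  # assumption: the paper does not say how yaw wrap-around was handled
        yaw = unwrap_degrees(yaw)
    return {"pitch": stdev(pitch), "yaw": stdev(yaw), "roll": stdev(roll)}


def clip_averages(viewings, unwrap_yaw=True):
    """viewings: iterable of (clip_id, samples). Returns clip_id -> mean SD per axis."""
    per_clip = defaultdict(list)
    for clip_id, samples in viewings:
        per_clip[clip_id].append(movement_sds(samples, unwrap_yaw))
    return {
        clip_id: {axis: mean(sd[axis] for sd in sds) for axis in AXES}
        for clip_id, sds in per_clip.items()
    }
```

For a viewer whose yaw drifts from 170° through 180° to -170°, the unwrapped series is 170, 179, 181, 190, giving a yaw SD of about 8°; without unwrapping the same small movement would appear as an SD of about 200°.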

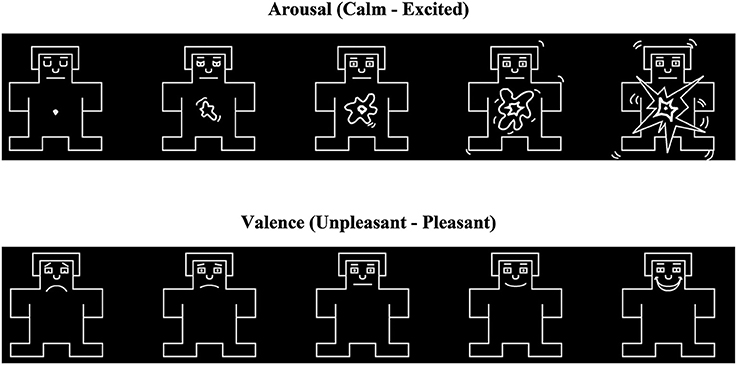

Participants made their ratings using the self-assessment manikin (SAM; Lang, 1980). SAM shows a series of graphical figures that range along the dimensions of valence and arousal, with the figures' expressions varying across a continuous scale. For valence, the SAM scale shows a sad, unhappy figure at one end and a smiling, happy figure at the other. For arousal, it depicts a calm, relaxed figure at one end and an excited, interested figure at the other. A 9-point rating scale is presented at the bottom of each SAM. While wearing the HMD, participants selected one of the options by scrolling among them with the Oculus Rift remote. Studies have shown that SAM ratings of valence and arousal are similar to those obtained with the verbal semantic differential scale (Lang, 1980; Ito et al., 1998). The SAM figures are presented in Figure 3.

Procedure

Pretests were conducted to determine how long participants were comfortable watching immersive videos before experiencing fatigue or simulator sickness. Results revealed that some participants experienced fatigue and/or nausea if they watched for more than 15 min without a break, while most were at ease with a duration of around 12 min. The 73 immersive VR clips were therefore divided into clusters of approximately 12 min each, resulting in 19 groups of videos. Based on the experimenters' judgment, no more than two clips of a particular valence (negative/positive) or arousal (low/high) were shown consecutively (Gross and Levenson, 1995). This was to discourage participants from becoming too involved in any particular affect, which could influence their judgment in subsequent ratings. Each video clip was viewed by a minimum of 15 participants.
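The grouping and ordering rules above were applied by experimenter judgment; the sketch below shows one hypothetical way to check them programmatically. It assumes each clip carries a provisional valence category (`"neg"`/`"pos"`), an arousal category (`"low"`/`"high"`), and a length in seconds; these field names, and the greedy packing into groups of at most about 12 min, are illustrative assumptions rather than the authors' procedure.

```python
"""Hypothetical sketch: check clip ordering and pack clips into ~12-min groups."""
from itertools import groupby


def max_run(labels):
    """Length of the longest run of identical consecutive labels."""
    return max((len(list(run)) for _, run in groupby(labels)), default=0)


def ordering_ok(clips, limit=2):
    """True if no more than `limit` consecutive clips share a valence or an arousal category."""
    return (max_run([c["valence"] for c in clips]) <= limit
            and max_run([c["arousal"] for c in clips]) <= limit)


def pack_groups(clips, target_s=12 * 60):
    """Greedily split an ordered clip list into groups of at most ~target_s seconds."""
    groups, current, total = [], [], 0
    for clip in clips:
        # Start a new group when the next clip would push this one past the target.
        if current and total + clip["length_s"] > target_s:
            groups.append(current)
            current, total = [], 0
        current.append(clip)
        total += clip["length_s"]
    if current:
        groups.append(current)
    return groups
```

A greedy pass keeps the original viewing order intact, so an ordering that passes `ordering_ok` still passes after packing; a real design would also need to balance group lengths, which this sketch does not attempt.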

When participants first arrived, the experimenter briefed them that the purpose of the study was to examine how people respond to immersive videos. Participants were told that they would wear an HMD to view the immersive videos, and that they could ask to stop participating at any time if they felt discomfort, nausea, or any other form of simulator sickness. Participants were then shown a printout of the SAM measures for valence and arousal and told that they would rate the immersive videos on these dimensions. Finally, participants were introduced to the Oculus Rift remote and shown how to use it to rate the immersive VR clips.

The procedure was as follows. Participants sat on a swivel chair, which allowed them to turn around 360° if they wished. They first watched a test immersive VR clip and made a mock rating to become accustomed to the viewing and rating process. They then watched and rated three groups of video clips, each comprising two to four clips. A 5 s preparation screen was presented before each clip. After each clip, participants were presented with the SAM scale for valence. After they selected a rating using the Oculus Rift remote, the SAM scale for arousal was presented and participants made their rating. The 5 s preparation screen was then presented to get participants ready for the next clip. After each group of immersive VR clips, participants took a short break of about 5 min before continuing with the next group. This was done to reduce the chance of fatigue or nausea by letting participants rest between groups of videos. With each group of videos lasting about 12 min, the entire rating process lasted around 40 min.
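The design figures reported in this section are mutually consistent, which a few lines of arithmetic confirm. The sketch uses only numbers stated in the text (73 clips averaging 188 s, groups of about 12 min, 19 groups, 95 participants each watching three groups); the assumption that all 95 participants completed all three groups is ours.

```python
"""Arithmetic check of the viewing design, using figures stated in the Methods."""


def design_check(n_clips=73, mean_clip_s=188, group_min=12,
                 n_groups=19, participants=95, groups_each=3):
    total_s = n_clips * mean_clip_s  # total footage across all clips, in seconds
    return {
        "total_min": total_s / 60,
        # Number of ~12-min groups the footage fills.
        "implied_groups": total_s / (group_min * 60),
        # Average number of viewings per group if every participant watched three groups.
        "mean_viewings_per_group": participants * groups_each / n_groups,
    }


if __name__ == "__main__":
    for key, value in design_check().items():
        print(f"{key}: {value:.2f}")
```

The 13,724 s of footage fills about 19.06 twelve-minute groups, matching the 19 groups reported, and 95 × 3 = 285 group viewings spread over 19 groups gives 15 viewings per group on average, in line with each clip being seen by at least 15 participants.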

Results

Affective Ratings

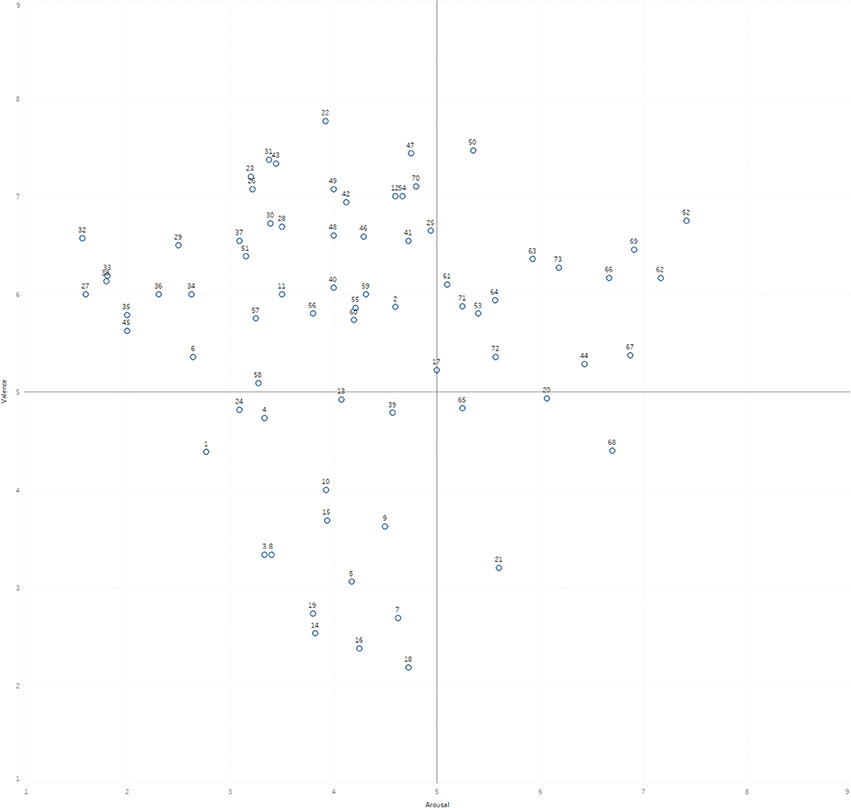

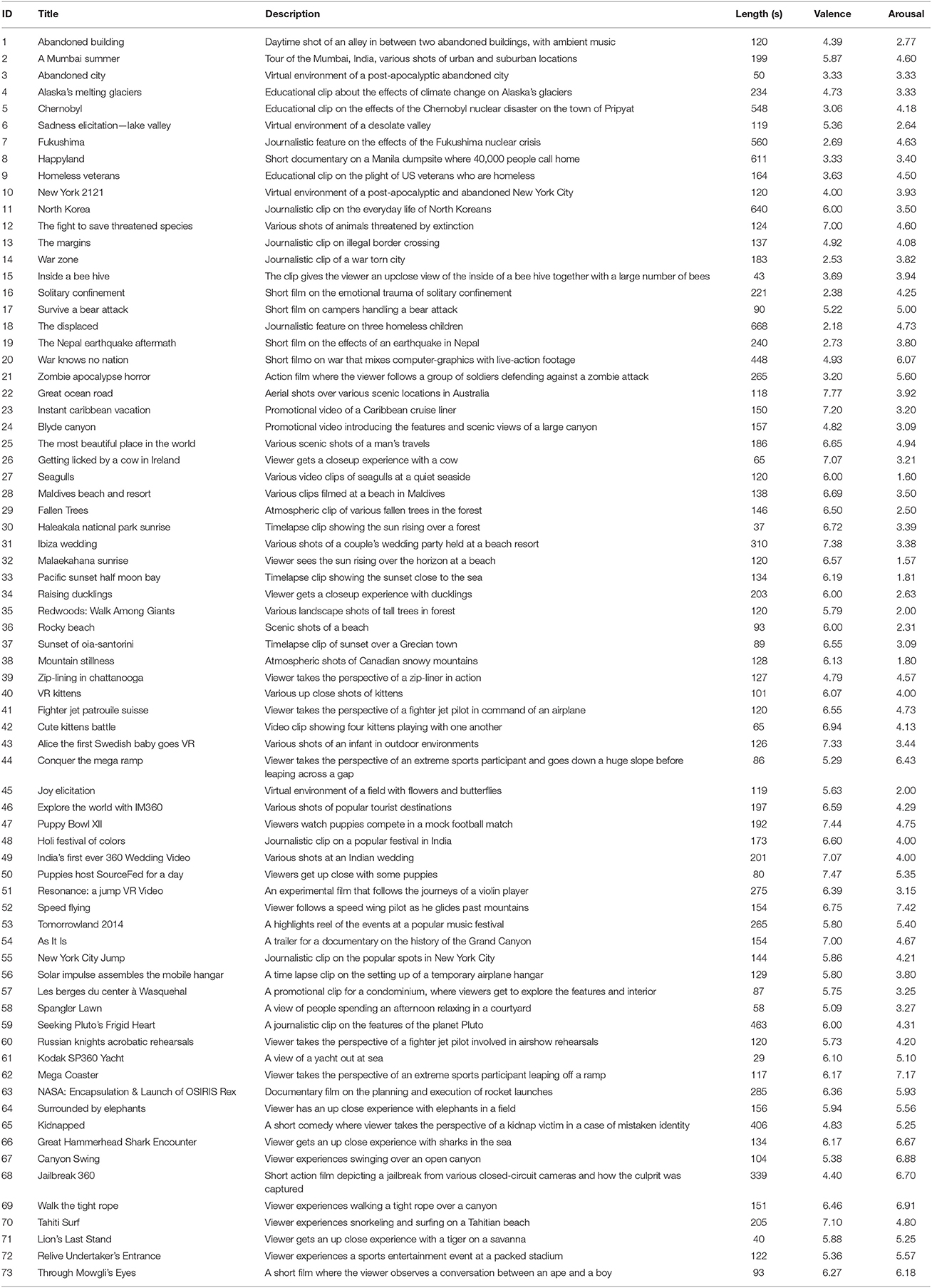

Figure 4 plots the immersive video clips (labeled by their ID numbers) by their mean ratings of valence and arousal. Clips above the valence midpoint (5) are well distributed across arousal ratings. However, despite our efforts to locate and shortlist immersive VR clips for the study, there appears to be an underrepresentation of clips that both induce negative valence and are highly arousing. Table 1 lists all the clips in the database, together with a short description, their length, and their corresponding valence and arousal ratings.
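The quadrant gap noted above can be made explicit by counting clips on each side of the 9-point scale midpoint. The following is a minimal sketch assuming per-clip mean ratings are available as a mapping from clip ID to (mean valence, mean arousal); the example values are hypothetical, not ratings from Table 1, and assigning means exactly at 5 to the negative/low side is an arbitrary choice.

```python
"""Hypothetical sketch: count clips per valence/arousal quadrant around the midpoint."""
from collections import Counter


def quadrant(valence, arousal, midpoint=5.0):
    """Label one clip; means exactly at the midpoint count as negative/low."""
    v = "positive" if valence > midpoint else "negative"
    a = "high" if arousal > midpoint else "low"
    return (v, a)


def quadrant_counts(clip_means):
    """clip_means: mapping clip_id -> (mean_valence, mean_arousal)."""
    return Counter(quadrant(v, a) for v, a in clip_means.values())


if __name__ == "__main__":
    example = {"a": (6.1, 3.0), "b": (7.0, 6.5), "c": (3.2, 2.9)}  # hypothetical means
    print(quadrant_counts(example))
```

A `Counter` returns 0 for a missing key, so an empty quadrant such as `("negative", "high")` shows up directly as a zero count.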

The immersive VR clips varied on arousal ratings (M = 4.20, SD = 1.39), ranging from a low of 1.57 to a high of 7.4. This compares favorably with arousal ratings on the IAPS, which range from 1.72 to 7.35 (Lang et al., 2008). The video clips also varied on valence ratings (M = 5.59, SD = 1.40), with a low of 2.2 and a high of 7.7. This compares reasonably well with valence ratings on the IAPS, which range from 1.31 to 8.34.

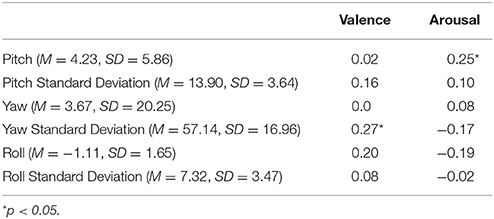

Head Movement Data

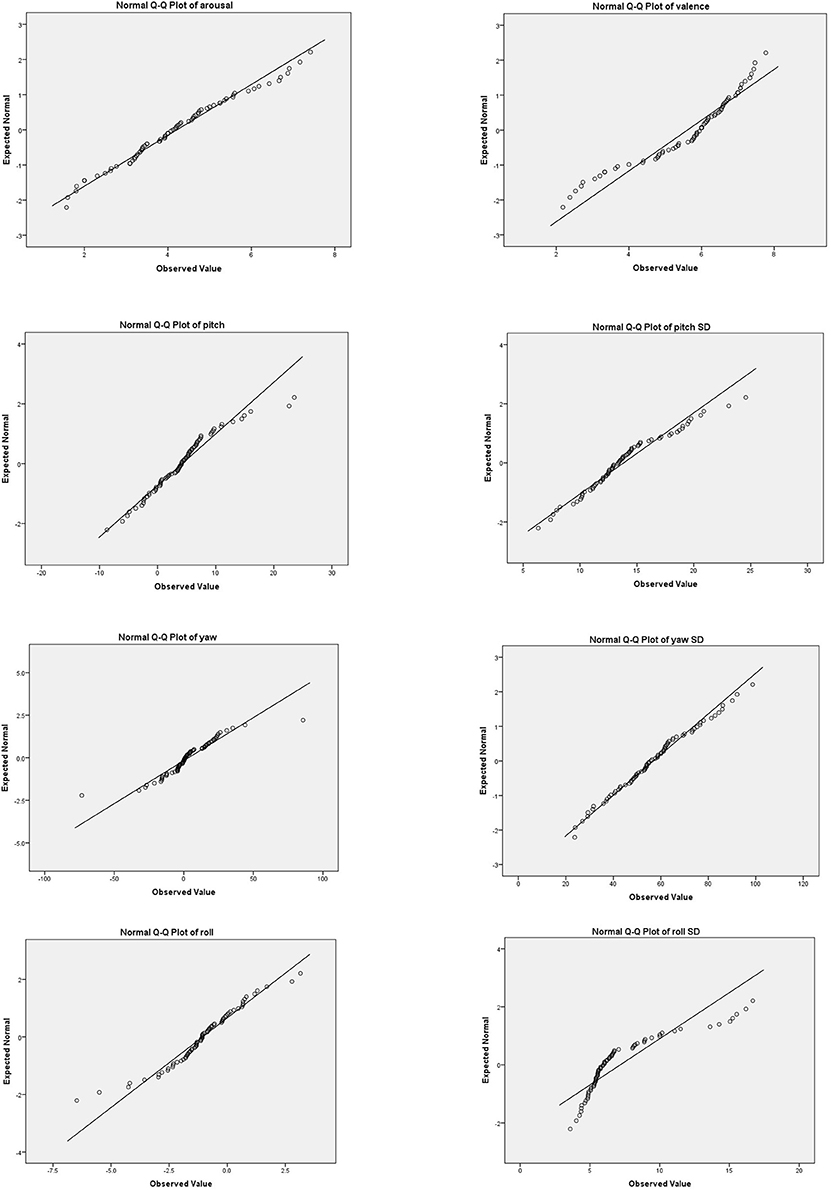

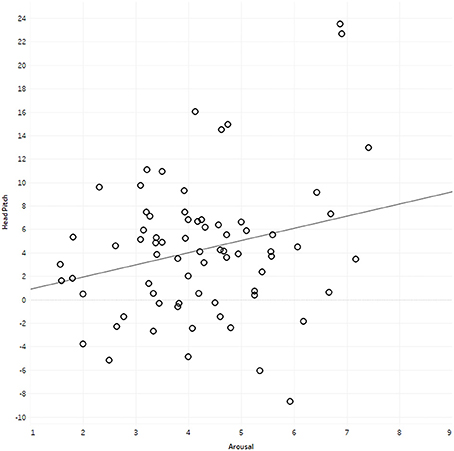

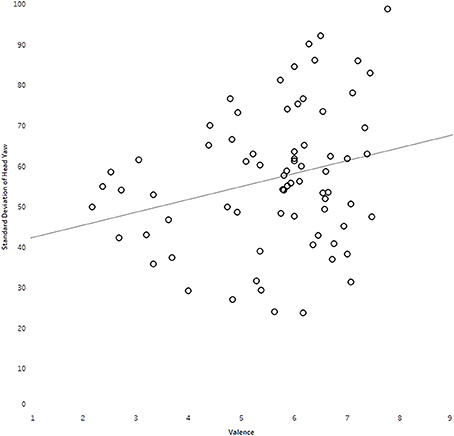

Pearson's product-moment correlations between observers' head movement data and their affective ratings are presented in Table 2. Most scores appeared to be normally distributed, as assessed by visual inspection of normal Q-Q plots (see Figure 5). Analyses showed that the average standard deviation of head yaw significantly predicted valence [F(1, 71) = 5.06, p = 0.03, r = 0.26, adjusted R² = 0.05], although the direction was in contrast to our hypothesis. There was no significant relationship between the standard deviation of head yaw and arousal [F(1, 71) = 2.02, p = 0.16, r = 0.17, adjusted R² = 0.01]. However, there was a significant relationship between average head pitch movement and arousal [F(1, 71) = 4.63, p = 0.04, r = 0.25, adjusted R² = 0.05]. The assumptions of the F-test were met for the significant relationships, with analyses showing homoscedasticity and normality of the residuals. The significant relationships are plotted in Figures 6 and 7.
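With a single predictor, the reported F, r, and adjusted R² are linked by closed-form identities: F(1, n − 2) = r²(n − 2)/(1 − r²) and adjusted R² = 1 − (1 − r²)(n − 1)/(n − 2). The sketch below applies them with n = 73 clips, which we infer from the reported 71 residual degrees of freedom; it is a consistency check on the published numbers, not a re-analysis.

```python
"""Relating a Pearson r across n clips to F(1, n - 2) and adjusted R^2."""
import math
from statistics import mean


def pearson_r(x, y):
    """Pearson product-moment correlation of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simple_regression_stats(r, n):
    """One-predictor OLS: F = t^2 = r^2 (n - 2) / (1 - r^2)."""
    df_resid = n - 2
    r2 = r * r
    f_stat = r2 * df_resid / (1 - r2)
    adj_r2 = 1 - (1 - r2) * (n - 1) / df_resid
    return {
        "df": (1, df_resid),
        "t": math.copysign(math.sqrt(f_stat), r),
        "F": f_stat,
        "adj_R2": adj_r2,
    }


if __name__ == "__main__":
    # r values as rounded in the Results text.
    for label, r in [("yaw SD vs valence", 0.26), ("pitch vs arousal", 0.25)]:
        s = simple_regression_stats(r, n=73)
        print(f"{label}: F{s['df']} = {s['F']:.2f}, adjusted R2 = {s['adj_R2']:.3f}")
```

For r = 0.26 this gives F(1, 71) ≈ 5.15 and adjusted R² ≈ 0.054, close to the reported 5.06 and 0.05; the small gap in F is expected because r is rounded to two decimals. If SciPy is available, `scipy.stats.f.sf(F, 1, 71)` returns the corresponding upper-tail p-value.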

Figure 6. Plot illustrating relationship between standard deviation of head yaw and valence ratings.

Discussion

The first objective of the study was to establish and introduce a database of immersive video clips that can serve as a resource for emotion induction research through VR. We sourced and tested a total of 73 video clips. Results showed that the stimuli varied reasonably well along the dimensions of valence and arousal. However, there appears to be a lack of representation for videos that are of negative valence yet highly arousing. In the IAPS and IADS, stimuli in this quadrant tend to represent gory or violent themes, such as a victim of an attack whose face has been mutilated, or a woman being held hostage with a knife to her throat. The majority of our videos are in the public domain and readily viewable on popular websites such as YouTube, which has a strict policy on the types of content that can be uploaded. Hence, it is not surprising that stimuli of negative valence and high arousal were not captured in our selection of immersive videos. Regardless, the collection of video clips (available online as supplementary material) should serve as a good launching pad for researchers interested in examining the links between VR and emotion.

Although not a key factor of interest for this paper, we observed variance in the length of the video clips, and length was confounded with video content. Long video clips in our database tend to feature serious journalistic content (e.g., nuclear fallout, homeless veterans, a dictatorial regime) and naturally evoke negative valence. Length is a property that distinguishes videos from photographs, the standard emotional stimuli in databases such as the IAPS. Because we had difficulty sourcing long video clips of positive valence, future studies should examine the influence of video clip length on affective ratings.

The second objective was to explore the relationship between observers' head movements and their emotions. We found a significant relationship between the amount of head yaw and valence ratings, suggesting that greater side-to-side head movement was associated with higher ratings of pleasure. However, this positive relationship contrasts with the findings of Won et al. (2016), who showed a significant relationship between the amount of head yaw and reported anxiety. It appears that content and context are important differentiating factors when it comes to the effects of head movements. Participants in the former study explored their virtual environment and may have felt anxious in the presence of other virtual people, whereas participants in our study simply viewed the content presented to them without the need for navigation. Although there was no significant relationship between the standard deviation of head yaw and arousal ratings, we found a correlation between head pitch and arousal, suggesting that tilting the head upwards while watching immersive videos was associated with reporting more excitement. This parallels the work of Lhommet and Marsella (2015), who compiled data from various studies on head positions and emotional states and showed that tilting the head up corresponds to feelings of excitement such as surprise and fear. The links between head movement and emotion are important findings and deserve further investigation.

The effect sizes in our study were small (adjusted R² = 0.05). Although we tried to balance efficient data collection against participant fatigue, some participants may not have been used to watching VR clips at length and may have felt uncomfortable or distressed without overtly expressing it. This may have influenced their ratings of clips toward the end of their session and may partly explain the small effect sizes. Future studies could examine when participant fatigue is likely to set in and adjust the viewing duration accordingly to minimize its impact on ratings.

Self-perception theory posits that people determine their attitudes based on their behavior (Bem, 1972). Future research could explore whether directing participants to move their heads in certain directions or patterns leads to changes in their affect or attitudes. For example, imagine placing a participant in a virtual garden filled with colorful flowers and lush greenery. Since our study shows a positive link between the amount of head yaw and valence ratings, would participants tasked with keeping their gaze on a butterfly fluttering around them (thereby increasing the amount of head movement) report more positive valence than those who see a stationary butterfly resting on a flower? Results from this and similar studies could aid the development of virtual environments that assist patients undergoing technology-assisted therapy.

Our study examined the rotational head movements made by participants as they watched the video clips. Participants sat on a swivel chair, which allowed them to swing around for a full surround view of the immersive video. Future studies could incorporate translational head movements, which refer to movements along the horizontal, lateral, and vertical (x-, y-, and z-) axes. This could be achieved by allowing participants to sit, stand, or walk freely, or even by programming depth field elements into the immersive videos, and then examining how participants' rotational and translational head movements correlate with their affect. Exploring the effects of these added degrees of freedom will contribute to a deeper understanding of the connection between head movements and emotions.

Ethics Statement

This study was carried out in accordance with the recommendations of the Human Research Protection Program, Stanford University Administrative Panel on Human Subjects in Non-Medical Research with written informed consent from all subjects. All subjects gave written informed consent in accordance with the Declaration of Helsinki. The protocol was approved by the Stanford University Administrative Panel on Human Subjects in Non-Medical Research.

Author Contributions

The authors worked as a team and made contributions throughout. BL and JB conceptualized and conducted the study. AP contributed in the sourcing and shortlisting of immersive VR clips and in revising the manuscript. WG and LW acted as domain consultants for the subject and contributed in writing and revisions.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Funding

This work is part of the RAINBOW-ENGAGE study supported by NIH Grant 1UH2AG052163-01.

Supplementary Material

The Supplementary videos are available online at: http://vhil.stanford.edu/360-video-database/

References

Bem, D. (1972). “Self perception theory,” in Advances in Experimental Social Psychology, ed L. Berkowitz (New York, NY: Academic Press), 1–62.

Blascovich, J., Loomis, J., Beall, A. C., Swinth, K. R., Hoyt, C. L., and Bailenson, J. N. (2002). Immersive virtual environment technology as a methodological tool for social psychology. Psychol. Inq. 13, 103–124. doi: 10.1207/S15327965PLI1302_01

Bradley, M. M., and Lang, P. J. (1999). International Affective Digitized Sounds (IADS): Stimuli, Instruction Manual and Affective Ratings. Technical Report B-2, The Center for Research in Psychophysiology, University of Florida, Gainesville, FL.

Darwin, C. (1965). The Expression of the Emotions in Man and Animals. Chicago, IL: University of Chicago Press.

Difede, J., and Hoffman, H. G. (2002). Virtual reality exposure therapy for World Trade Center post-traumatic stress disorder: a case report. Cyberpsychol. Behav. 5, 529–535. doi: 10.1089/109493102321018169

Dyck, M., Winbeck, M., Leiberg, S., Chen, Y., Gur, R. C., and Mathiak, K. (2008). Recognition profile of emotions in natural and virtual faces. PLoS ONE 3:e3628. doi: 10.1371/journal.pone.0003628

Fox, J., Arena, D., and Bailenson, J. N. (2009). Virtual reality: a survival guide for the social scientist. J. Media Psychol. 21, 95–113. doi: 10.1027/1864-1105.21.3.95

Gross, J. J., and Levenson, R. W. (1995). Emotion elicitation using films. Cogn. Emot. 9, 87–108. doi: 10.1080/02699939508408966

Ito, T. A., Cacioppo, J. T., and Lang, P. J. (1998). Eliciting affect using the international affective picture system: trajectories through evaluative space. Pers. Soc. Psychol. Bull. 24, 855–879. doi: 10.1177/0146167298248006

Klinger, E., Bouchard, S., Légeron, P., Roy, S., Lauer, F., Chemin, I., et al. (2005). Virtual reality therapy versus cognitive behavior therapy for social phobia: a preliminary controlled study. Cyberpsychol. Behav. 8, 76–88. doi: 10.1089/cpb.2005.8.76

Lang, P. J. (1980). "Behavioral treatment and bio-behavioral assessment: computer applications," in Technology in Mental Health Care Delivery Systems, eds J. B. Sidowski, J. H. Johnson, and T. A. Williams (Norwood, NJ: Ablex), 119–137.

Lang, P. J., Bradley, M. M., and Cuthbert, B. N. (2008). International Affective Picture System (IAPS): Affective Ratings of Pictures and Instruction Manual. Technical Report A-6, University of Florida, Gainesville, FL.

Lhommet, M., and Marsella, S. C. (2015). “Expressing emotion through posture and gesture,” in The Oxford Handbook of Affective Computing, eds R. Calvo, S. D'Mello, J. Gratch, and A. Kappas (Oxford; New York, NY: Oxford University Press), 273–285.

Livingstone, S. R., and Palmer, C. (2016). Head movements encode emotions during speech and song. Emotion 16:365. doi: 10.1037/emo0000106

Multisilta, J. (2014). Mobile panoramic video applications for learning. Educ. Inform. Technol. 19, 655–666. doi: 10.1007/s10639-013-9282-8

Parsons, T. D., Bowerly, T., Buckwalter, J. G., and Rizzo, A. A. (2007). A controlled clinical comparison of attention performance in children with ADHD in a virtual reality classroom compared to standard neuropsychological methods. Child Neuropsychol. 13, 363–381. doi: 10.1080/13825580600943473

Parsons, T. D., and Rizzo, A. A. (2008). Affective outcomes of virtual reality exposure therapy for anxiety and specific phobias: a meta-analysis. J. Behav. Ther. Exp. Psychiatry 39, 250–261. doi: 10.1016/j.jbtep.2007.07.007

Peck, T. C., Seinfeld, S., Aglioti, S. M., and Slater, M. (2013). Putting yourself in the skin of a black avatar reduces implicit racial bias. Conscious. Cogn. 22, 779–787. doi: 10.1016/j.concog.2013.04.016

Qu, C., Brinkman, W.-P., Ling, Y., Wiggers, P., and Heynderickx, I. (2014). Conversations with a virtual human: synthetic emotions and human responses. Comput. Hum. Behav. 34, 58–68. doi: 10.1016/j.chb.2014.01.033

Riva, G., Mantovani, F., Capideville, C. S., Preziosa, A., Morganti, F., Villani, D., et al. (2007). Affective interactions using virtual reality: the link between presence and emotions. CyberPsychol. Behav. 10, 45–56. doi: 10.1089/cpb.2006.9993

Schouwstra, S. J., and Hoogstraten, J. (1995). Head position and spinal position as determinants of perceived emotional state. Percept. Mot. Skills 81, 673–674. doi: 10.1177/003151259508100262

Schroeder, R. (2012). The Social Life of Avatars: Presence and Interaction in Shared Virtual Environments. New York, NY: Springer Science & Business Media.

Schultheis, M. T., and Rizzo, A. A. (2001). The application of virtual reality technology in rehabilitation. Rehabil. Psychol. 46:296. doi: 10.1037/0090-5550.46.3.296

Slater, M., Antley, A., Davison, A., Swapp, D., Guger, C., Barker, C., et al. (2006). A virtual reprise of the Stanley Milgram obedience experiments. PLoS ONE 1:e39. doi: 10.1371/journal.pone.0000039

Slater, M., Steed, A., McCarthy, J., and Maringelli, F. (1998). The influence of body movement on subjective presence in virtual environments. Hum. Factors 40, 469–477. doi: 10.1518/001872098779591368

Slater, M., and Sanchez-Vives, M. V. (2016). Enhancing our lives with immersive virtual reality. Front. Robot. AI 3:74. doi: 10.3389/frobt.2016.00074

Tracy, J. L., and Matsumoto, D. (2008). The spontaneous expression of pride and shame: evidence for biologically innate nonverbal displays. Proc. Natl. Acad. Sci. U.S.A. 105, 11655–11660. doi: 10.1073/pnas.0802686105

Wallbott, H. G. (1998). Bodily expression of emotion. Eur. J. Soc. Psychol. 28, 879–896. doi: 10.1002/(SICI)1099-0992(1998110)28:6<879::AID-EJSP901>3.0.CO;2-W

Wiederhold, B. K., and Wiederhold, M. D. (2005). Virtual Reality Therapy for Anxiety Disorders: Advances in Evaluation and Treatment. Washington, DC: American Psychological Association.

Keywords: virtual reality, database, immersive VR clips, head movement, affective ratings

Citation: Li BJ, Bailenson JN, Pines A, Greenleaf WJ and Williams LM (2017) A Public Database of Immersive VR Videos with Corresponding Ratings of Arousal, Valence, and Correlations between Head Movements and Self Report Measures. Front. Psychol. 8:2116. doi: 10.3389/fpsyg.2017.02116

Received: 08 September 2017; Accepted: 20 November 2017;

Published: 05 December 2017.

Edited by:

Albert Rizzo, USC Institute for Creative Technologies, United States

Reviewed by:

Andrej Košir, University of Ljubljana, Slovenia

Hongying Meng, Brunel University London, United Kingdom

Copyright © 2017 Li, Bailenson, Pines, Greenleaf and Williams. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Benjamin J. Li, benjyli@stanford.edu

Benjamin J. Li, Jeremy N. Bailenson, Walter J. Greenleaf, Leanne M. Williams