Researcher Perspectives on Ethical Considerations in Adaptive Deep Brain Stimulation Trials

- 1Center for Medical Ethics and Health Policy, Baylor College of Medicine, Houston, TX, United States

- 2Department of Neuroscience, Rice University, Houston, TX, United States

- 3Evans School of Public Policy & Governance, University of Washington, Seattle, WA, United States

- 4Department of Anatomy & Neurobiology, University of Puerto Rico School of Medicine, San Juan, Puerto Rico

- 5Program in Bioethics, University of California, San Francisco, San Francisco, CA, United States

Interest and investment in closed-loop or adaptive deep brain stimulation (aDBS) systems have quickly expanded due to this neurotechnology’s potential to more safely and effectively treat refractory movement and psychiatric disorders compared to conventional DBS. A large neuroethics literature outlines potential ethical concerns about conventional DBS and aDBS systems. Few studies, however, have examined stakeholder perspectives about ethical issues in aDBS research and other next-generation DBS devices. To help fill this gap, we conducted semi-structured interviews with researchers involved in aDBS trials (n = 23) to gain insight into the most pressing ethical questions in aDBS research and any concerns about specific features of aDBS devices, including devices’ ability to measure brain activity, automatically adjust stimulation, and store neural data. Using thematic content analysis, we identified eight central themes in researcher responses. The need to measure and store neural data for aDBS raised concerns among researchers about data privacy and security issues (noted by 91% of researchers), including the avoidance of unintended or unwanted third-party access to data. Researchers reflected on the risks and safety (83%) of aDBS due to the experimental nature of automatically modulating and then observing stimulation effects outside a controlled clinical setting and in relation to the need for surgical battery changes. Researchers also stressed the importance of ensuring informed consent and adequate patient understanding (74%). Concerns related to automaticity and device programming (65%) were discussed, including current uncertainties about biomarker validity. Additionally, researchers discussed the potential impacts of automatic stimulation on patients’ autonomy and control over stimulation (57%). Lastly, researchers discussed concerns related to patient selection (defining criteria for candidacy) (39%), challenges of ensuring post-trial access to care and device maintenance (39%), and potential effects on personality and identity (30%). To help address researcher concerns, we discuss the need to minimize cybersecurity vulnerabilities, advance biomarker validity, promote the balance of device control between patients and clinicians, and enhance ongoing informed consent. The findings from this study will help inform policies that will maximize the benefits and minimize potential harms of aDBS and other next-generation DBS devices.

Introduction

Adaptive deep brain stimulation (aDBS) devices are part of the emerging field of personalized neurointerventions that are responsive to a patient’s neural activity. In contrast to conventional DBS, the promise of aDBS systems is that they will identify neural activity associated with symptoms and adjust stimulation delivery in real time to alter neural activity and manage symptoms accordingly (Arlotti et al., 2016; Shute et al., 2016). The goal of aDBS systems is to deliver stimulation only when pathological brain activity is detected in order to prevent overtreatment, decrease side effects (e.g., hypomania), and slow battery depletion, which requires surgical replacement of the battery (Hosain et al., 2014; Beudel and Brown, 2016; Shukla et al., 2017). In addition to these safety advantages, aDBS may lead to better outcomes for patients because it adjusts automatically, thus avoiding the delay between suboptimal symptom management and adjustment of stimulation in a clinical encounter (Klein, 2020, p.336).

However, some have suggested that these defining features, which make aDBS promising, may also exacerbate certain neuroethics concerns (Klein, 2020, p.336; Aggarwal and Chugh, 2020, p.158). In particular, aDBS could exacerbate concerns about felt authenticity of affective states and patient agency because devices adjust stimulation automatically, and these adjustments likely occur outside of a patient’s conscious awareness (Gilbert et al., 2018, p.9; Gilbert et al., 2018, p.323–324; Goering et al., 2017, p.59–70). Moreover, advancements in aDBS technology depend largely on measuring and storing neural data for programming, raising novel challenges related to patient privacy. Addressing ethical concerns related to these defining features of closed-loop DBS may help to promote safety and efficacy, with potentially broader implications for other next-generation DBS devices with similar features.

In an effort to understand researchers’ perspectives on the key neuroethics considerations related to the development of aDBS devices, we conducted interviews with researchers working in aDBS studies, who provided critical insights into the concerns raised by the capabilities and limitations of these devices. Drawing from these interviews, we identify pressing neuroethics issues and concerns, some of which apply to conventional DBS, but many of which are distinctive of or exacerbated by aDBS devices. We contextualize these findings within the existing neuroethics literature and discuss potential responses to these concerns as technologies with adaptive features become more prevalent in the future.

Materials and Methods

We interviewed researchers (n = 23) involved in aDBS trials using a semi-structured, open-ended interview format. Understanding this stakeholder group’s perspectives about ethical issues related to the development of aDBS systems is essential because these individuals possess expert knowledge about these devices, have direct experience developing and implementing them, and/or have expertise related to conditions with characteristics (e.g., treatment-resistance, severity of symptoms) that are similar to the intended users of these technologies. Thus, they are in an ideal position to identify ethical issues and inform resultant discussions related to these devices (Lázaro-Muñoz et al., 2019).

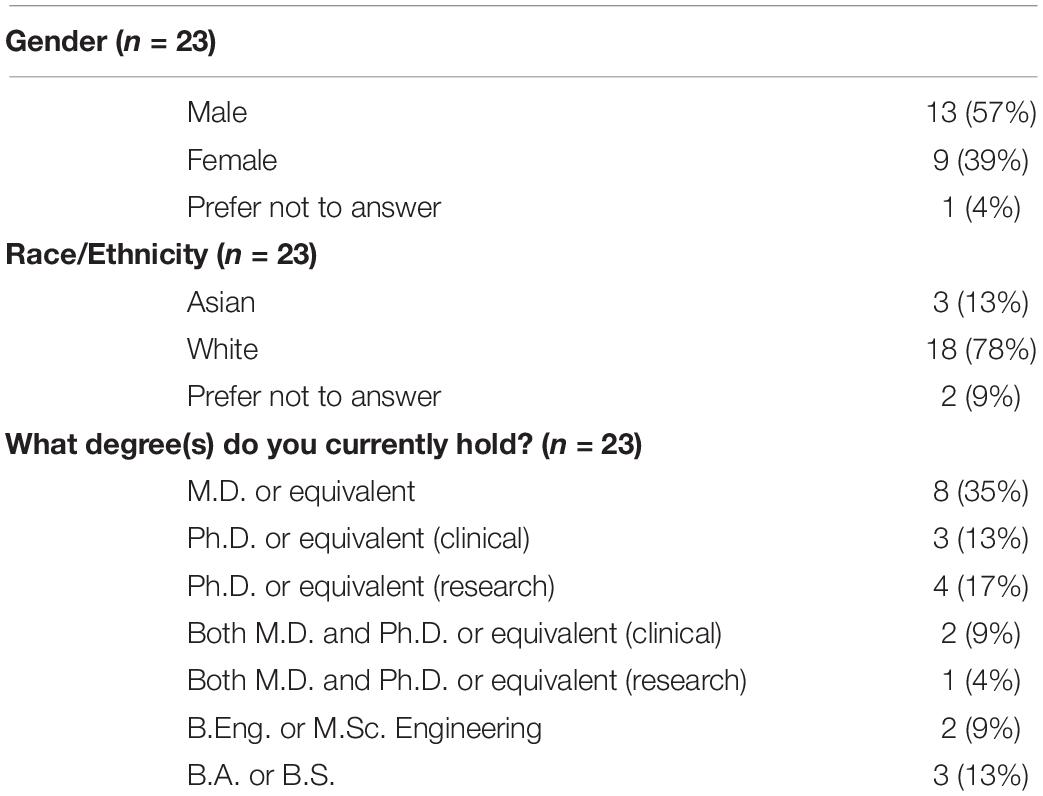

Participants were recruited from funded aDBS trials. Purposeful sampling with a snowball strategy was employed (Palinkas et al., 2015) in order to ensure recruitment of researchers in different project roles within aDBS trials (e.g., trial coordinators, neurologists, neurosurgeons, psychiatrists, and engineers) (See Table 1). Our sample also represents a diverse group of researchers targeting different disorders, including Parkinson’s disease, dystonia, essential tremor, Tourette syndrome, depression, and obsessive-compulsive disorder (OCD). One participant was not specifically involved in aDBS but in conventional DBS and other next-generation DBS.

Participants were asked about their perspectives on pressing ethical issues in aDBS research and challenges they personally face in their research. We also asked researchers specifically about concerns pertaining to distinctive features of aDBS devices, including the device’s ability to measure brain activity, automatically adjust stimulation, and store neural data. Our interview guide was developed based on a review of key issues raised in bioethics and neuroethics literature, during participant observation in a lab conducting aDBS research, and in discussions with experts in the aDBS field. Respondents were also asked questions about other topics, including several questions related to aDBS data sharing. We report those results elsewhere (Zuk et al., unpublished). The study was approved by the Institutional Review Board at Baylor College of Medicine.

Interviews were conducted via phone and Zoom, and were audio-recorded, transcribed verbatim, and analyzed using MAXQDA 2018 software (Kuckartz, 2014). Each interview transcript was coded independently by at least two members of the research team to identify researcher responses to six questions related to neuroethical concerns in aDBS research. Inconsistencies in coding were discussed to reach consensus among the research team. Utilizing thematic content analysis (Boyatzis, 1998; Schilling, 2006, p.28–37), information from coded segments was progressively abstracted to identify the content and frequency of emergent themes.

Results

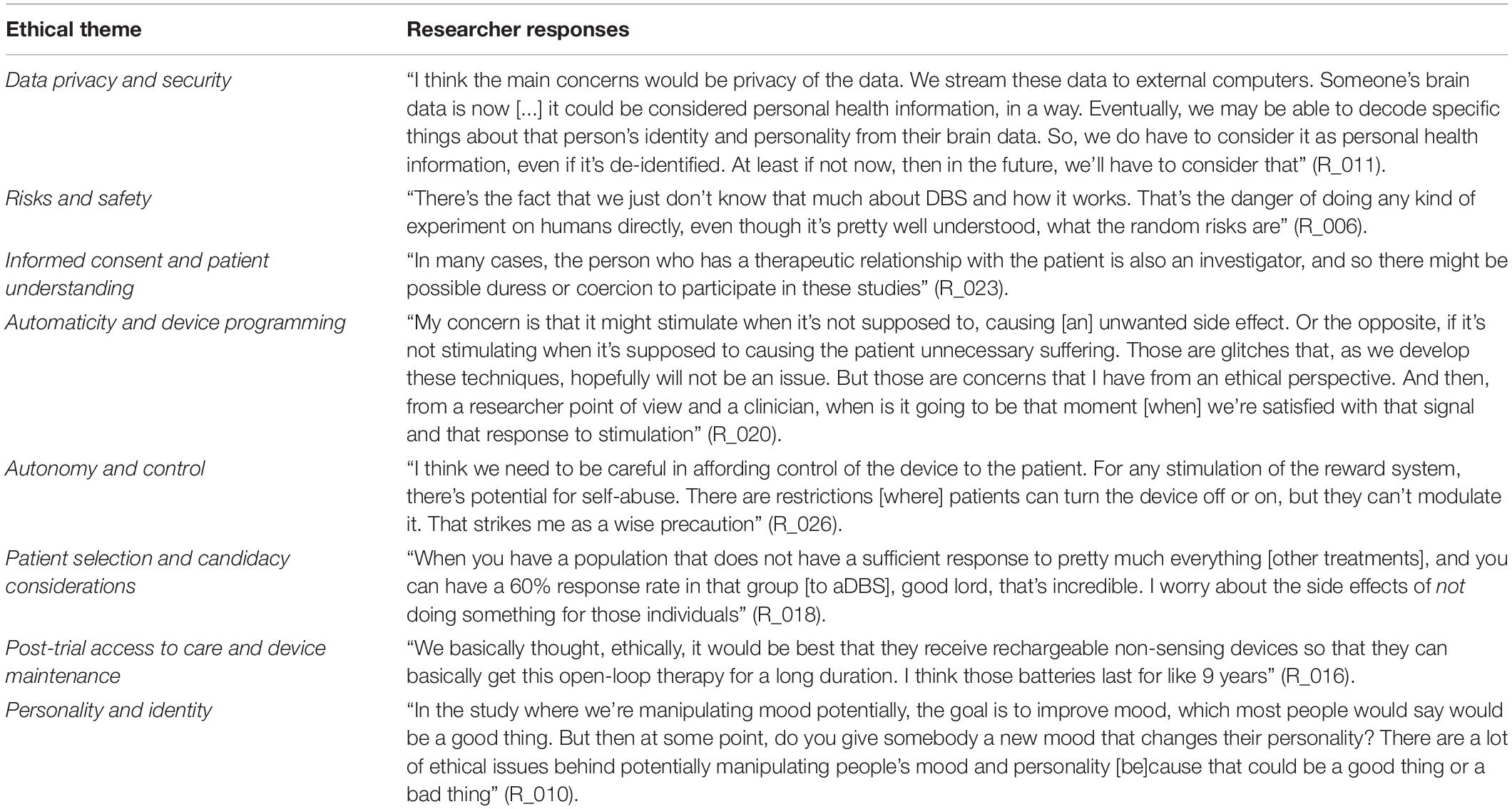

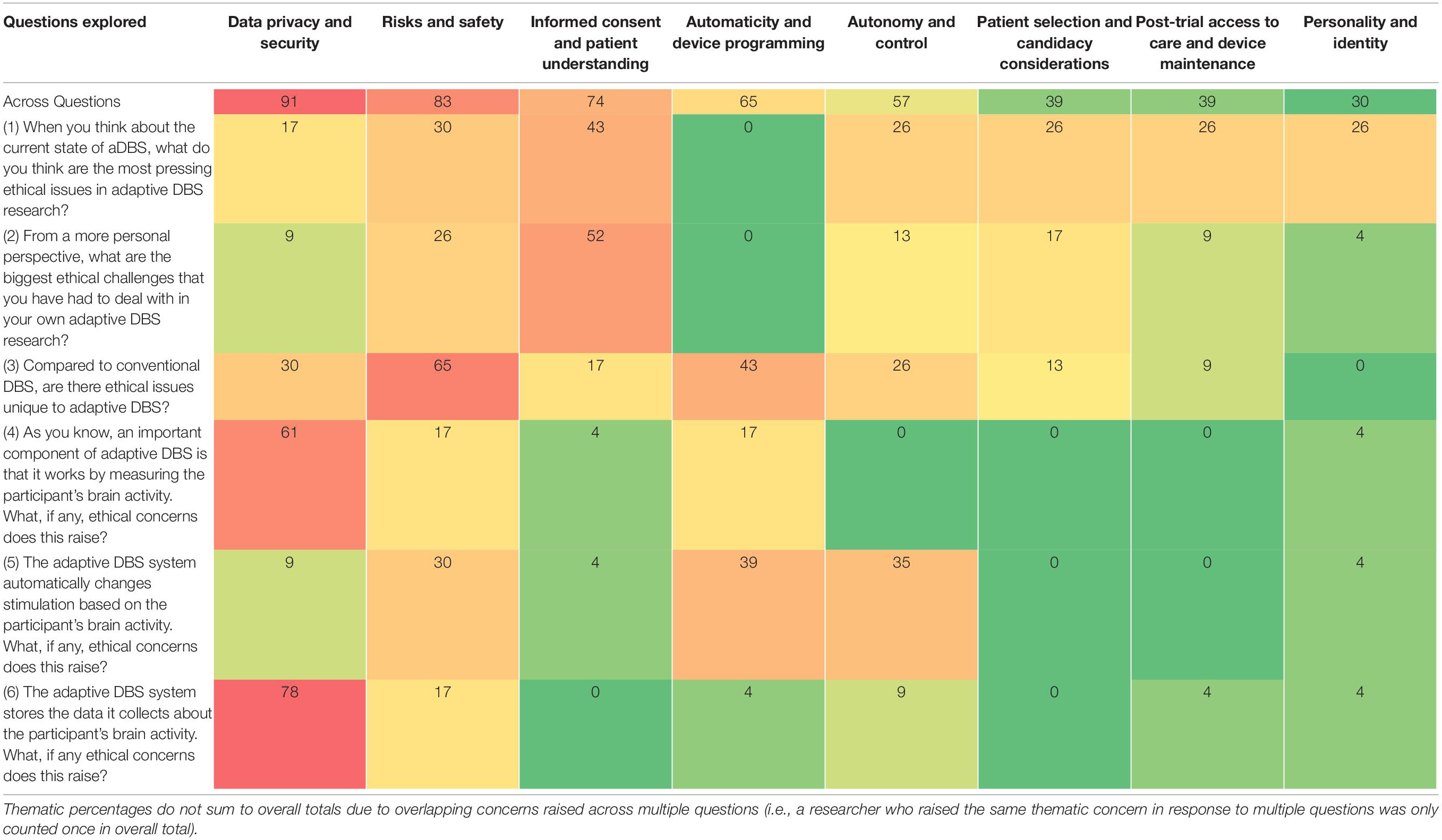

We identified eight overarching themes in researchers’ responses to six questions about neuroethical concerns and challenges in aDBS research (Table 2). Starting with the most frequent, these include concerns related to (1) data privacy and security (noted by 91% of researchers); (2) risks and safety (83%); (3) informed consent and adequate patient understanding (74%); (4) automaticity and device programming (65%); (5) patient autonomy and control over stimulation (57%); (6) patient selection for aDBS candidacy (39%); (7) post-trial access to care and device maintenance (39%); and (8) potential effects on personality and identity (30%). While some of these ethical concerns may be broadly relevant to both conventional and adaptive DBS, most were identified by our respondents as being exacerbated by certain characteristics distinctive of aDBS, particularly its capacity to measure and store brain activity and to respond using automatic stimulation. The ways in which these concerns were specifically raised in response to our six questions are illustrated in Table 2 and elaborated below.

Table 2. Percentage (%) of respondents (n = 23) who discussed main ethical concerns related to aDBS.
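As a reading aid, the reported percentages can be converted back into approximate respondent counts. The sketch below assumes that each percentage is a count out of n = 23 rounded to the nearest whole number; the counts it prints are inferred under that assumption and are not stated in the source. The theme labels and the helper name `implied_counts` are illustrative only.

```python
# Hypothetical reconstruction: counts are inferred from the reported
# percentages and n = 23. They are NOT reported in the source.
N = 23

reported = {
    "data privacy and security": 91,
    "risks and safety": 83,
    "informed consent and patient understanding": 74,
    "automaticity and device programming": 65,
    "autonomy and control": 57,
    "patient selection": 39,
    "post-trial access": 39,
    "personality and identity": 30,
}


def implied_counts(percent, n=N):
    """Return every count k in 0..n whose share of n rounds to `percent`."""
    return [k for k in range(n + 1) if int(k * 100 / n + 0.5) == percent]


counts = {theme: implied_counts(p) for theme, p in reported.items()}

for theme, ks in counts.items():
    print(f"{theme}: {reported[theme]}% -> {ks} of {N}")
```

With n = 23, one respondent corresponds to roughly 4.3 percentage points, so each reported percentage maps to a single candidate count under the rounding assumption.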

Data Privacy and Security

Nearly all (91%) respondents expressed concerns about data privacy and security in relation to the capacity of aDBS systems to measure and store neural activity data (NAD). There was disagreement about the sensitivity of NAD. Some researchers felt that “brain recordings themselves [are] not identifiable” (R_013) because researchers currently do not know enough about what the recordings mean to be able to identify sensitive information; however, this could change in the future (See Table 3). Researchers also pointed out that stored data could be inappropriately used or shared: “The fact that we have the ability to report this data suggests that perhaps it can be used as evidence. Could this be forensic evidence that’s used in lawsuits, in courts, or to settle discussions or arguments?” (R_022). We explore researchers’ views on the sensitivity of NAD as they relate specifically to data sharing elsewhere (Zuk et al., unpublished; see also Naufel and Klein, 2020).

Researchers also discussed device “hacking,” including the potential for stored data or algorithms to be manipulated to disrupt therapy or control patients. One researcher suggested, “We’d have to make sure that there are lots of safety measures in place […] so that the algorithm can’t be adjusted. Or if we have someone controlling stimulations remotely, like the clinicians… if someone were to steal that control and send the person into a manic state or something maliciously, that would be really bad” (R_017). Some respondents felt that data security risks are minimal because aDBS systems are HIPAA-compliant and researchers who study them are required to submit plans to protect patient information to the FDA. Others, however, emphasized that data security risks could grow as researchers learn more about recordings, and that further plans should be put in place to anticipate future challenges in protecting NAD.

Risks and Safety

Most (83%) respondents raised ethical challenges surrounding risks and safety, particularly in relation to unique features of aDBS devices (i.e., capacity to measure and store neural activity and automatically adjust stimulation) compared to conventional DBS. In some cases, researchers are inserting additional electrodes in different brain regions (cortical and subcortical) to identify biomarkers that allow for automatic or responsive stimulation. One researcher explained, “Whenever we are pushing the envelope of neuromodulation with new, additional implanted devices, [there is] increased risk of hemorrhage, seizure, stroke, any kind of additional manipulation or extension of the surgery. So, I always have to weigh what the risks and benefits [are] for this specific person” (R_021). A number of researchers discussed unknown risks and unintended effects of aDBS, particularly in relation to automatic delivery of stimulation in new environments outside of the controlled clinical setting (See Table 3). One researcher wondered, “Are there any spot gaps that need to be in place in certain contextual situations that it could fire and do something in a way that we haven’t imagined yet? We haven’t actually thought through and imagined all the potential situations that could play out” (R_015). Unanticipated effects were especially concerning because researchers do not constantly monitor devices, which also raises the “challenge of when to intervene as a clinician taking care of this patient [when] these systems are supposed to be autonomous” (R_020). As a way to potentially mitigate unforeseen risks, respondents emphasized the importance of working within safe stimulation parameters and maintaining researchers’ ability to intervene when necessary.

Other researchers raised concerns that stimulation could inadvertently and unknowingly affect other neural circuits, potentially causing side effects. The risk of overlooking these side effects may be exacerbated in aDBS because researchers – and aDBS systems operating autonomously – could be overly focused on therapeutic outcomes. As one researcher described, “We’ve always looked at therapeutic outcomes, but then became increasingly aware of the side effects… So the potential is to cause more side effects unknowingly, especially in an adaptive system that’s not tuned to the right outcome” (R_023). Respondents also discussed risks specific to certain subpopulations of aDBS patients, such as overstimulation leading to hypomanic states in patients with OCD.

Informed Consent and Patient Understanding

A majority (74%) of researchers said that one of the most pressing ethical concerns in aDBS studies is ensuring that patients understand and are able to provide informed consent to aDBS. Over half of respondents reported having encountered related challenges in their own aDBS research. Some researchers raised the concern that patients may feel pressured to participate, particularly because “some of the patients who are looking at this kind of procedure don’t really have other helpful interventions” (R_005). Patients may also feel pressured to participate in aDBS research due to an established therapeutic relationship with clinician-researchers leading aDBS research studies (See Table 3). Further potential compromises to informed consent can stem from therapeutic misconception and therapeutic misestimation. One respondent explained patients can potentially “lose track of the investigational nature of the study” (R_011), and another respondent suggested that it is challenging to ensure realistic patient expectations about aDBS during the consent process: “DBS…seems, to them – because it’s so risky, but can have such promise – that it’s like a silver bullet, so to speak” (R_005). Patients must understand that aDBS is a complex intervention and not “one size fits all” (R_015).

Researchers also reflected on how the automatic nature of aDBS raises unique ethical concerns about patient consent. One researcher wondered whether patients can robustly consent to automatic, moment-to-moment changes in stimulation, explaining that “it’s almost as though the intervention is changing at each time point” (R_018). Researchers stressed the need to ensure that patients who explicitly consent to the adaptive component of aDBS at the beginning of their treatment are continuing to implicitly consent to ongoing stimulation changes, which evolve as device recognition of a patient’s neural activity improves and likely occur outside of a patient’s conscious awareness. To address this concern about patient consent, one researcher suggested that devices could be designed to notify patients when they detect symptom-related brain activity: “It would be interesting to have a device be able to […] give the patient an alert somehow. ‘[If] I [the device] think you’re dyskinetic, I’m going to turn myself down.’ The patient could override it” (R_013). Researchers conveyed that improving patient understanding about when and how the device adjusts stimulation can help to ensure that patients are continuing to consent to the device’s automatic changes. Consent challenges may be especially pronounced among certain subpopulations of patients, including those with severe psychiatric symptoms that potentially influence decisional capacity.

Automaticity and Device Programming

Researchers (65%) raised unique concerns related to automaticity and device programming for aDBS systems. They stressed the importance of using validated, reliable biomarkers given that researchers are relying upon a device designed to make autonomous decisions to affect patients’ mood, behavior, or movement. One researcher wondered, “How validated does a biomarker have to be before you start deploying a system like this and letting it deliver therapy in real time?” (R_023). Researchers explained that a biomarker lacking validity could cause devices to respond to false positives or negatives, leading to over- or under-stimulation. These “misreadings” of neural biomarkers could result in patients experiencing suboptimal symptom management or undesirable effects (See Table 3). One researcher shared,

“Let’s say we come across… a good biomarker for hypomania, and it misreads the patient just having a really great weekend, because they’re at a family member’s wedding. Now all of a sudden, they’re depressed again or they’re feeling more of their OCD symptoms come on at that time. That’s obviously a problematic situation we want to avoid” (R_007).

Respondents said that to avoid stimulation errors, devices would ideally be programmed so they could recognize when “the patient’s behavior and mood is elevated beyond where it is beneficial to the patient” and subsequently “turn down the system” (R_019). Researchers were also concerned that patients may be unaware of inappropriate stimulation changes because the changes are occurring automatically, impacting patients’ and clinicians’ ability to actively intervene to mitigate negative consequences. As one researcher described, “There is still a decision being made on a second-by-second basis out in the field, in the wild, by an algorithm that may change that person’s current mental status” (R_025). Another researcher stressed the ethical implications of this unique feature of aDBS, saying, “[Normally], we always have a physician intervening and assessing, [but aDBS] is an autonomous system making decisions about the delivery of therapy” (R_023).
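The concern about “misreadings” can be made concrete with a deliberately simplified sketch. It is not the algorithm of any actual aDBS device or trial: the class name `AdaptiveController`, the single-threshold rule, the parameter names, and all numeric values are hypothetical and chosen only for illustration. The sketch assumes a biomarker whose elevation is taken to signal an over-stimulated state, a clinician-set amplitude range, and a patient-accessible switch from adaptive to conventional (fixed) stimulation.

```python
from dataclasses import dataclass


@dataclass
class AdaptiveController:
    """Toy threshold controller; all names and values are hypothetical."""

    threshold: float       # biomarker level treated as symptom-related
    step: float            # amplitude change per update (arbitrary units)
    floor: float           # lowest amplitude the clinician allows
    baseline: float        # highest amplitude the clinician allows
    amplitude: float       # current stimulation amplitude
    adaptive: bool = True  # False = conventional (fixed) stimulation

    def update(self, biomarker: float) -> float:
        """Adjust amplitude from one biomarker reading and return it."""
        if not self.adaptive:
            # Patient or clinician has switched adaptive mode off.
            return self.amplitude
        if biomarker > self.threshold:
            # The reading looks like the target state: turn stimulation down.
            self.amplitude -= self.step
        else:
            # Otherwise drift back toward the baseline setting.
            self.amplitude += self.step
        # Never leave the clinician-set range.
        self.amplitude = max(self.floor, min(self.baseline, self.amplitude))
        return self.amplitude


ctrl = AdaptiveController(threshold=0.7, step=0.5, floor=1.0,
                          baseline=3.0, amplitude=3.0)

# The second and third readings exceed the threshold. The controller cannot
# tell whether they reflect hypomania or simply an unusually good day.
trace = [ctrl.update(b) for b in [0.2, 0.9, 0.95, 0.3]]

# Switching adaptive mode off freezes the amplitude despite a high reading.
ctrl.adaptive = False
held = ctrl.update(0.99)
```

In this toy model, any reading above the threshold lowers stimulation regardless of its cause, which is the false-positive scenario respondents described. The clamp to a fixed range and the override switch correspond to the safeguards respondents mentioned, namely working within safe stimulation parameters and preserving the ability to intervene.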

Autonomy and Control

Related to the concerns about automaticity described above, over half (57%) of respondents raised concerns related to patient autonomy and control over stimulation. One researcher explained how “people’s sense of autonomy may be altered by the use of a computer unit” if they believe their “motor state or their mental state… are being controlled by an external source or by a computer” (R_008). Researchers felt this concern could be particularly exacerbated for aDBS patients because changes in stimulation occur automatically. Another researcher commented, imagining from a patient’s perspective, “Even with open loop, there’s the issue that now I have a device in my brain that’s modulating and controlling some of my brain activity. I think as we develop closed-loop, that concern about allowing a device to take some command over your activity will be extenuated” (R_009). This researcher speculated that automatic device control may be even more concerning for psychiatric patients if they view the targets of aDBS adjustment – e.g., mood and anxiety – as central to their sense of self and identity. They said, imagining the perspective of a patient, “‘A tremor doesn’t represent me. It’s a dysfunction.’ [But] when you have a device that’s modulating your mood or your anxiety level, your energy level, that’s much more your core sense of being” (R_009) (See also Personality and Identity below).

Alternatively, one researcher noted, “[patients] seem to have an awareness of how the device is being set. They trust the researchers that are controlling it. They don’t feel like there’s any questionable agency to be concerned with” (R_008). According to another researcher, concerns about patients’ sense of control “are mitigated substantially by the design of these protocols, where patients do have a controller and at any point can flip themselves out of adaptive stimulation into conventional stimulation” (R_011). While some researchers highlighted this need to allow patients to override unwanted stimulation, others alluded to potential risks of giving patients substantive control over their stimulation. Some respondents noted that determining how much control patients should have over stimulation may depend on which areas of the brain are being stimulated. In cases like aDBS for OCD, in which part of the brain’s reward circuit is stimulated, some researchers said they feel hesitant to provide too much patient control due to the potential for stimulation abuse (See Table 3). Researchers said that other patients, such as those with essential tremor who receive stimulation elsewhere in the brain, could be given greater unilateral discretion to adjust stimulation. Overall, researchers urged caution in deciding whether and how much patient control to allow.

Some researchers offered similar cautions against giving physicians too much control, advocating for limits to physician access to stimulation. As one respondent commented, “We still don’t want the clinician to be kind of messing with it whenever they want to. How do you put in the safeguard so that only authorized people can access it, and even they can only do so with the patient’s permission every time?” (R_022). Another respondent highlighted a tension between ensuring patient safety and respecting their autonomy, saying, “In the future, it would be important to have a button that the doctor could press remotely if they hear something is going on, like turn everything off or turn it down…But then that’s like a doctor controlling remotely” (R_017). One researcher suggested that a potential solution to finding an ethical balance is to integrate all stakeholder groups – including patients and caregivers – in the development of control and safety policies.

Patient Selection and Candidacy Considerations

Over a third of researchers (39%) raised ethical concerns related to patient selection and candidacy for aDBS treatment. Because DBS treatment is an invasive therapy typically offered to patients who are treatment-resistant, some researchers said they want to be sure that patients have “tried enough different treatments, even some of the ones that are a bit more experimental” (R_009) in order to warrant taking on the challenges and risks of aDBS. Other researchers noted that the treatment-resistant nature of a patient’s disorder supports not only their fit as an aDBS candidate but also the ethical imperative to make aDBS treatment available to them (See Table 3). Respondents pointed out that deciding whether and when a patient may benefit from aDBS requires that multiple clinical and demographic factors be taken into consideration. For example, researchers discussed the difficulty of defining “normal” versus “abnormal” thoughts, moods, and behaviors in the context of aDBS. Ideally, aDBS treatment will be able to appropriately distinguish between “normal” and “abnormal”; however, some researchers said it may be problematic to expect a human, let alone a machine, to make these fine distinctions. One researcher said,

“I think the most interesting, challenging question to me is, what are we defining as our set point or as our ‘normal?’ I think for a movement disorder [like] tremors, for example, ‘normal’ is not having tremors. When you’re talking about mood and anxiety, like with OCD, how much time you spend thinking about whatever is your concern – contamination or orderliness or symmetry… Is that normal? Is that abnormal?” (R_022).

Post-Trial Access to Care and Device Maintenance

Over a third (39%) of researchers considered post-trial access to care and device maintenance to be an additional pressing ethical issue. Researchers said that some patients who want to continue aDBS are unable to access aDBS care and device maintenance after a research study ends: “I think, honestly, the biggest issue right now is the amount of money that it costs patients to maintain the device, or obtain a replacement after the study is over” (R_004). Reasons for this include the fact that conventional DBS and aDBS have not yet been approved by the FDA for some of the conditions targeted in trials, which may result in insurance providers not covering costs associated with battery or hardware replacements, thereby limiting post-trial access. Post-trial access to care may be particularly problematic for certain patients, such as those whose batteries need replacing early. For example, one researcher said, “For Tourette’s therapy, amplitudes are really high, and [batteries] get depleted really quickly. And then it’s not FDA approved, so they don’t get it covered by insurance companies. We can’t promise to provide them again with the study devices, even if they convert to standard DBS batteries” (R_016). Some researchers recommended giving patients conventional DBS with rechargeable batteries at the end of studies to extend these patients’ access to DBS (See Table 3).

Personality and Identity

Researchers (30%) also discussed the important ethical challenge of mitigating potential unwanted effects of aDBS on personality and identity, including mania or hypomania caused by aDBS stimulation in patients with OCD. One researcher commented, “One [concern] is, changing someone’s personality, and their behavior and how that can be manipulated through deep brain stimulation, either inadvertently or maliciously. That’s one of my concerns” (R_021). Another researcher felt that the brain was a unique organ and different from, for example, the heart. They explained that altering brain activity and “directly stimulating reward tracks in the brain, generat[e] both hedonic responses” and other responses “that are really part of the fabric of personality.” On the other hand, “for someone with heart irregularities, a cardiac pacemaker may be beneficial, expanding their range of motion and activity, but only very indirectly, if at all, affecting them as an individual” (R_026). While manipulating and improving mood could be the goal for some uses of aDBS, researchers expressed concern over lasting changes on personality (See Table 3). Furthermore, navigating these situations could be particularly challenging for researchers in cases where patients do not understand or acknowledge that their mental state is negatively affecting their functioning.

Discussion

Minimizing Vulnerabilities in Cybersecurity

In this paper, we identified potential ethical issues and challenges that are heightened in or unique to aDBS research relative to conventional DBS, drawn from the perspectives of aDBS researchers working at the forefront of their field. Our findings suggest that the technical features that give aDBS distinct advantages over conventional DBS systems also raise distinct issues that should be addressed in order to ensure that patients receive the full benefits of these neurotechnologies while minimizing potential medical and non-medical harms. Among the most pressing concerns raised by researchers was the potential for aDBS systems to compromise patient privacy and data security. Researchers pointed out that while NAD that is recorded and stored by aDBS systems may not itself contain identifiers or other sensitive information presently, this could change in the future, which is a concern frequently raised in the theoretical neuroethics literature (Klein, 2016, p.1310; Zuk et al., 2018, p.45–46; Aggarwal and Chugh, 2020, p.160). Theoretical work further predicts that privacy concerns will increase as larger amounts of data are collected, advances in technologies make it easier to integrate data, and DBS devices interface with other devices in the future (Hendriks et al., 2019, p.1508; Klein, 2020, p.335). Researchers should therefore maintain awareness of advances in neuroscience and technology that could change the degree of NAD sensitivity and implement additional data protections if and when necessary. Researchers also have a responsibility to inform participants of what information could and could not be extrapolated from their neural recordings (Pugh et al., 2018, p.221). Moreover, researchers and clinicians will need to determine participants’ desired boundaries around neural privacy and preferences around how their NAD is used in the future, which could require researchers to not collect or to filter certain kinds of neural recordings (Klein, 2020, p.335).

To reduce the possibility of device hacking, data manipulation, and therapy interruption, researchers and clinicians can incorporate additional security patches and upgrade software systems to reinforce the cybersecurity of both hospital-wide networks and patient devices linked to those networks (Jaret, 2018; Pugh et al., 2018, p.221). The FDA should also hold device manufacturers accountable for identifying and addressing vulnerabilities in medical devices and ensure that the responsibility to safeguard devices is shared among providers and manufacturers. Currently, the FDA is exploring the development of a CyberMed Safety (Expert) Analysis Board, which is “a public-private partnership that would complement existing device vulnerability coordination and response mechanisms and serve as a resource for device makers and FDA” (U.S. Food & Drug Administration, 2018). This board would function to assess vulnerabilities, patient safety concerns, and mitigation plans, which could play a large role in supporting aDBS researchers and addressing device security concerns.

Mitigating Risks and Advancing Biomarker Validity

A second highly salient concern raised by researchers is the need to mitigate risks and ensure safety for patients being treated with aDBS. Identifying valid neurophysiological biomarkers is an enduring challenge for researchers that involves a variety of strategies, depending on the disorder. For example, with essential tremor, researchers record NAD while patients perform a motor task (e.g., clasping a cup and bringing it toward their mouth) (Opri et al., 2019). With Parkinson’s disease, researchers record NAD when patients are on and off medication and on and off therapeutic DBS (Swann et al., 2016).

Identifying biomarkers for psychiatric disorders, however, is especially challenging because there are often no external, visible symptoms as in motor disorders, and psychiatric symptoms involve highly complex and dynamic cognitive states and behaviors. Currently, researchers developing aDBS for OCD can utilize video recording of facial expressions and physiological measurements (e.g., heart rate) collected while patients perform psychophysical tasks (e.g., unscripted social interactions with strangers) (Girard et al., 2015; Provenza et al., 2019). However, the validity of biomarkers identified during these tasks depends on the extent to which they elicit the same brain processes associated with OCD symptomology as it manifests in everyday life. To help improve biomarker validity, particularly for psychiatric disorders, further research is needed into the translatability of clinic-derived biomarkers to neural processing and patient functioning in less controlled and more naturalistic settings (Provenza et al., 2019). This research could help address researchers’ concerns and fill contextual blind spots that could cause aDBS devices to “misread” brain activity and either over- or under-stimulate. Improving biomarkers may also help to mitigate potential unwanted effects of aDBS on personality and identity, another significant concern raised by respondents, by avoiding device settings associated with any such effects.

Promoting Autonomy and Balancing Device Control

Researchers from our sample recognized that determining when clinicians should intervene to ensure patient safety is challenging for a number of reasons, including that researchers and patients may not be aware of when the device begins stimulating inappropriately, and researchers may feel uneasy about potentially violating patient privacy or undermining patient autonomy. Researchers’ reflections on autonomy and patient control illuminate the challenging and complex nature of these issues and suggest possible tension between patient safety and patient autonomy. On one hand, some researchers suggested that patient autonomy requires that clinicians do not have too much control and that patients have adequate control over aDBS functionality, such as having the ability to reject an upcoming change in stimulation (Fins, 2009; Goering et al., 2017, p.65). One way to manage this and respect patient autonomy would be to engage patients and clinicians early in the consent process to discuss preferences and conditions for patient versus physician intervention within the larger context of a patient’s treatment goals. Patients could also identify a close caregiver to provide assistance in adjusting stimulation parameters or finding appropriate medical care when clinicians or caregivers identify a concern during treatment, thus supporting patient autonomy in a relational way (Baylis, 2013, p.516–519; De Haan et al., 2015, p.22; Goddard, 2017, p.332–334; Goering et al., 2017, p.67; Gallagher, 2018).

On the other hand, some researchers suggested patients may trust or prefer clinicians to have a substantial amount of control, and for certain patients, providing them with too much control could lead to autonomy being undermined. Despite concerns about autonomy and control being raised frequently in theoretical neuroethics and sometimes in empirical work, some researchers believed that, at least in general, autonomy concerns are not highly problematic in the context of aDBS research because patients trust clinicians to manage treatment modifications (Lipsman and Glannon, 2013, p.468; De Haan et al., 2015, p.6–16; Klein, 2016, p.1311; Gilbert et al., 2017, p.96; Gilbert et al., 2018). A study by Klein in 2016 found that the majority of patients receiving open-loop DBS expressed a preference for primarily clinician-controlled rather than patient-controlled stimulation settings, were such control to become available (Klein, 2016, p.3). Additionally, empirical work indicates that the brain region targeted is also an important consideration when examining potential effects that DBS could have on patient autonomy and control (Gilbert et al., 2017, p.101). Researchers in our sample similarly stated that it would be wise to limit the degree of control of patients with OCD given that they receive stimulation in the reward system (i.e., ventral striatum), which could lead to stimulation abuse. Over-stimulation of this brain region could result in mania and increased risk-taking behaviors, which could alter judgment or diminish the degree of control patients have over their actions, thus undermining autonomy (De Haan et al., 2017, p.23; Gilbert et al., 2017, p.98–99).

One can foresee a potential conflict between the above considerations if, for example, a patient receiving aDBS for OCD in the ventral striatum is limited in their ability to adjust stimulation and feels on that basis that they lack adequate control. These considerations are further complicated by the positive impact of symptom relief, which could outweigh potential diminishments in autonomy resulting from a lack of control over device functionality (Lázaro-Muñoz et al., 2017, p.74). Ideally, a balance between patient and clinician control over stimulation will be achieved through the assessment of individual patient preferences, targeted brain region, and different means of device control. All relevant stakeholders will need to be involved in these discussions, including patients, caregivers, clinicians, programmers, and engineers. This process may be assisted by the development of multi-faceted empirical measures incorporating different conceptions of autonomy, which will be particularly useful given that patients have been found to use the idea of “becoming a new person” inconsistently and that not all researchers in our sample made a clear distinction between autonomy and sense of autonomy (Roskies, 2015, p.6; Sullivan, 2015, p.25; De Haan et al., 2017, p.17–18; Zuk and Lázaro-Muñoz, 2019).

Enhancing Patient Knowledge and Ongoing Informed Consent

Researchers pointed out that issues related to safety and autonomy highlight the need for patients to adequately understand and provide informed consent to aDBS treatment, which is a concern that is frequently raised in empirical and theoretical neuroethics literature (Cabrera et al., 2014, p.37–42; De Haan et al., 2015, p.25; Chiong et al., 2018, p.32–33; Klein, 2020, p.330). Pre- and post-operative counseling and psychosocial support could provide opportunities for patients to learn about aDBS, including how aDBS works, the role and rationale behind automaticity, and what the unique features of aDBS imply for ongoing consent. These forums would provide patients with multiple opportunities to voice any concerns or uncertainties about their treatment so that problems may be mitigated or avoided early on and at different time points throughout a patient’s treatment trajectory (De Haan et al., 2015, p.20).

Severe, refractory symptoms combined with a lack of treatment alternatives suggest that patients considering aDBS are in a more vulnerable position than most and may perceive research participation as their only option, a situation that could be further influenced by the presence of a therapeutic relationship between the patient and a study investigator (Cabrera et al., 2014, p.39–42; Chiong et al., 2018, p.32, p.34; Zuk et al., 2018, p.48; Morain et al., 2019, p.11; Klein, 2020, p.333). Researchers shared these same concerns around patient consent and acknowledged that they have a responsibility to ensure that consent is not inadvertently biased by a patient’s perceptions or expectations. More specifically, researchers felt that patients should be adequately informed of aDBS devices’ unique ability to automatically adjust stimulation, which could potentially prevent some autonomy-related concerns (Aggarwal and Chugh, 2020, p.156). More research is needed to clarify patient understandings about what they believe they are consenting to when they agree to participate in an aDBS trial, how consent may change over the span of the trial, and how understandings affecting consent may differ among certain patient subpopulations (Chiong et al., 2018, p.33–34).

Adequate patient understanding of aDBS research participation will also require that patients are informed about potential post-study uncertainties and issues (Lázaro-Muñoz et al., 2018, p.317–318; Hendriks et al., 2019, p.1511; Sierra-Mercado et al., 2019, p.760). Researchers expressed the need to help ensure post-trial access to care and device maintenance. They were concerned that patients who wanted to continue DBS might be unable to do so because of a lack of FDA approval for certain indications, which in some cases leads insurance providers not to cover costs associated with battery or hardware replacements and clinical visits. Ensuring that patients understand these potential limitations to post-trial access to aDBS or conventional DBS was viewed by respondents as a critical aspect of informed consent procedures for these trials (Klein, 2016, p.1308). In addition to informing patients of the current realities of post-trial access, ongoing discussions are needed to determine different stakeholders’ obligations and potential responses, such as funders making supplementary funds available and device manufacturers covering costs to help improve post-trial access to care and device maintenance (Zuk et al., 2018, p.46; Hendriks et al., 2019, p.1511).

Our results should be considered within the limitations of our study. The lack of representation of all clinical applications of developing aDBS systems limits the generalizability of our findings. Our sample includes researchers working on aDBS systems for six different disorders; however, a more robust sample size could enhance insights into different uses of aDBS systems and closed-loop devices more generally. Furthermore, the researchers interviewed are experts working on the development of these technologies in a translational research context; thus, their perspectives may not capture the range of ethical considerations that could arise if aDBS systems are adopted more widely in clinical care. Researchers are just one of the key stakeholder groups involved in the development of aDBS systems. Other groups, such as patients and caregivers, may have different perspectives, which are critical to understand to promote the responsible use and development of these technologies. Although we ensured recruitment of researchers who have various professional roles in aDBS trials, 78% of the sample identified as white, reflecting a lack of racial and ethnic representation in our sample, which could be addressed through more purposeful sampling. Other limitations of qualitative research include potential ambiguity in interview responses, which could lead to misinterpretation of data. To mitigate the potential impact of this limitation, thematic content analysis was performed by at least two independent team members, and inconsistencies in abstracted coded segments were discussed to reach a consensus among the research team.

Conclusion

Drawing on the perspectives of expert stakeholders working at the forefront of aDBS research, we identified potential ethical issues and challenges that are heightened in or unique to aDBS research relative to conventional DBS. Due to the need to measure and store neural data, aDBS researchers raised concerns about protecting the privacy of neural data and preventing unwanted third-party access to data. The automatic nature of stimulation sparked risk and safety concerns associated with the experimental nature of identifying biomarkers to automatically adjust stimulation outside the clinic. Additionally, researchers discussed challenges of determining the degree of control researchers and patients should have over adaptive stimulation and challenges of ensuring that patients provide appropriate consent to continuous alterations in stimulation. Our findings therefore suggest that the technical features that give aDBS advantages over conventional DBS systems also raise distinct issues. We identified four areas where researcher concerns can begin to be addressed, including minimizing cybersecurity vulnerabilities, advancing biomarker validity, promoting the balance of device control between patients and clinicians, and enhancing ongoing informed consent. Further research and ethical analysis of these pressing issues are needed to better ensure that patients receive the full benefits of these neurotechnologies while minimizing potential medical and non-medical harms.

Data Availability Statement

The datasets presented in this article are not readily available because full datasets must remain unavailable in order to ensure de-identification of interview participants.

Ethics Statement

The studies involving human participants were reviewed and approved by Baylor College of Medicine Institutional Review Board. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements. Written informed consent was not obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article. Verbal consent was obtained from each research participant before beginning interviews.

Author Contributions

All authors contributed to project design. SO, LT, DSM, and RH conducted interviews and contributed to data collection. KAM, KK, CS, and LK completed data analysis. RH provided assistance in identifying global themes that emerged during data analysis. KAM, KK, PZ, and GLM conceptualized the manuscript. KAM drafted the manuscript. KK, PZ, and GLM made substantial revisions to the manuscript. SP, JOR, and AM also provided feedback and suggested edits on the manuscript. DSM provided insight on the technical points raised in the paper. SP, AM, GLM, JOR, and BAK provided senior-level leadership for the project.

Funding

Research for this article was funded by the BRAIN Initiative-National Institutes of Health (NIH), parent grant R01MH114854 and supplemental grant R01MH114854-01S1 (Lázaro-Muñoz, McGuire, Goodman). The views expressed are those of the authors and do not necessarily reflect views of the NIH, Baylor College of Medicine, Rice University, University of Washington, Seattle, University of Puerto Rico, or University of California, San Francisco.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

We thank the researcher-participants for their time and thoughtful responses during interviews.

References

Aggarwal, S., and Chugh, N. (2020). Ethical implications of closed loop brain device: 10-year review. Minds Mach. 30, 145–170. doi: 10.1007/s11023-020-09518-7

Arlotti, M., Rosa, M., Marceglia, S., Barbieri, S., and Priori, A. (2016). The adaptive deep brain stimulation challenge. Parkinson. Relat. Disord. 28, 12–17. doi: 10.1016/j.parkreldis.2016.03.020

Baylis, F. (2013). “I Am Who I Am”: on the perceived threats to personal identity from deep brain stimulation. Neuroethics 6, 513–526.

Beudel, M., and Brown, P. (2016). Adaptive deep brain stimulation in Parkinson’s disease. Parkinson. Relat. Disord. 22, S123–S126.

Boyatzis, R. E. (1998). Transforming Qualitative Information: Thematic Analysis and Code Development. London: SAGE Publications.

Cabrera, L., Evans, E., and Hamilton, R. (2014). Ethics of the electrified mind: defining issues and perspectives on the principled use of brain stimulation in medical research and clinical care. Brain Topogr. 27, 33–45. doi: 10.1007/s10548-013-0296-8

Chiong, K., Leonard, F., and Chang, F. (2018). Neurosurgical patients as human research subjects: ethical considerations in intracranial electrophysiology research. Neurosurgery 83, 29–37. doi: 10.1093/neuros/nyx361

De Haan, S., Rietveld, E., Stokhof, M., and Denys, D. (2017). Becoming more oneself? Changes in personality following DBS treatment in psychiatric disorders: experiences of OCD patients and general considerations. PLoS One 12:e0175748. doi: 10.1371/journal.pone.0175748

De Haan, S., Rietveld, E., Stokhof, M., and Denys, D. (2015). Effects of deep brain stimulation on the lived experience of obsessive-compulsive disorder patients: in-depth interviews with 18 patients. PLoS One 10:e0135524. doi: 10.1371/journal.pone.0135524

Fins, J. (2009). Deep brain stimulation, deontology and duty: the moral obligation of non-abandonment at the neural interface. J. Neural Eng. 6:050201. doi: 10.1088/1741-2552/6/5/050201

Gallagher, S. (2018). Deep brain stimulation, self and relational autonomy. Neuroethics 18, 1–13. doi: 10.1007/s12152-018-9355-x

Gilbert, F., Goddard, E., Viaña, J., Carter, A., and Horne, M. (2017). I Miss being me: phenomenological effects of deep brain stimulation. AJOB Neurosci. 8, 96–109. doi: 10.1080/21507740.2017.1320319

Gilbert, F., O’Brien, T., and Cook, M. (2018). The effects of closed-loop brain implants on autonomy and deliberation: what are the risks of being kept in the loop? Camb. Q. Healthc. Ethics 27, 316–325. doi: 10.1017/S0963180117000640

Gilbert, F., Viaña, J., and Ineichen, C. (2018). Deflating the “DBS causes personality changes” bubble. Neuroethics 18, 1–17. doi: 10.1007/s12152-018-9373-8

Girard, J., Cohn, J., Jeni, L., Sayette, M., and Torre, F. (2015). Spontaneous facial expression in unscripted social interactions can be measured automatically. Behav. Res. Methods 47, 1136–1147. doi: 10.3758/s13428-014-0536-1

Goddard, E. (2017). Deep brain stimulation through the “lens of agency”: clarifying threats to personal identity from neurological intervention. Neuroethics 10, 325–335.

Goering, S., Klein, E., Dougherty, D. D., and Widge, A. S. (2017). Staying in the loop: relational agency and identity in next-generation DBS for psychiatry. AJOB Neurosci. 8, 59–70. doi: 10.1080/21507740.2017.1320320

Hendriks, S., Grady, C., Ramos, K., Chiong, W., Fins, J., Ford, P., et al. (2019). Ethical challenges of risk, informed consent, and posttrial responsibilities in human research with neural devices: a review. JAMA Neurol. 76, 1506–1514. doi: 10.1001/jamaneurol.2019.3523

Hosain, M. K., Kouzani, A., and Tye, S. (2014). Closed loop deep brain stimulation: an evolving technology. Australas Phys. Eng. Sci. Med. 4, 619–634.

Jaret, P. (2018). Exposing Vulnerabilities: How Hackers Could Target Your Medical Devices. Available online at: https://www.aamc.org/news-insights/exposing-vulnerabilities-how-hackers-could-target-your-medical-devices (accessed June 5, 2020).

Klein, E. (2016). Informed consent in implantable BCI research: identifying risks and exploring meaning. Sci. Eng. Ethics 22, 1299–1317. doi: 10.1007/s11948-015-9712-7

Klein, E. (2020). Ethics and the emergence of brain-computer interface medicine. Handbook Clin. Neurol. 168, 329–339. doi: 10.1016/B978-0-444-63934-9.00024-X

Klein, E., Goering, S., Gagne, J., Shea, C., Franklin, R., Zorowitz, S., et al. (2016). Brain-computer interface-based control of closed-loop brain stimulation: attitudes and ethical considerations. Brain Comput. Interfaces 3, 140–148. doi: 10.1080/2326263X.2016.1207497

Kuckartz, U. (2014). Qualitative Text Analysis: A Guide to Methods, Practice & Using Software. London: SAGE Publications Ltd, 1–15. doi: 10.4135/9781446288719

Lázaro-Muñoz, G., McGuire, A., and Goodman, W. (2017). Should we be concerned about preserving agency and personal identity in patients with adaptive deep brain stimulation systems? AJOB Neurosci. 8, 73–75.

Lázaro-Muñoz, G., Yoshor, D., Beauchamp, M., Goodman, W., and McGuire, A. (2018). Continued access to investigational brain implants. Nat. Rev. Neurosci. 19, 317–318. doi: 10.1038/s41583-018-0004-5

Lázaro-Muñoz, G., Zuk, P., Pereira, S., Kostick, K., Torgerson, L., Sierra-Mercado, D., et al. (2019). Neuroethics at 15: keep the kant but add more bacon. AJOB Neurosci. 10, 97–100. doi: 10.1080/21507740.2019.1632960

Lipsman, N., and Glannon, W. (2013). Brain, mind and machine: what are the implications of deep brain stimulation for perceptions of personal identity, agency and free will? Bioethics 27, 465–470. doi: 10.1111/j.1467-8519.2012.01978.x

Morain, S. R., Joffe, S., and Largent, E. A. (2019). When is it ethical for physician-investigators to seek consent from their own patients? Am. J. Bioethics AJOB 19, 11–18. doi: 10.1080/15265161.2019.1572811

Naufel, S., and Klein, E. (2020). Brain-computer interface (BCI) researcher perspectives on neural data ownership and privacy. J. Neural Eng. 17:016039. doi: 10.1088/1741-2552/ab5b7f

Opri, E., Cernera, S., Okun, M., Foote, K., and Gunduz, A. (2019). The functional role of thalamocortical coupling in the human motor network. J. Neurosci. 39, 8124–8134. doi: 10.1523/JNEUROSCI.1153-19.2019

Palinkas, L. A., Horwitz, S. M., Green, C. A., Wisdom, J. P., Duan, N., and Hoagwood, K. (2015). Purposeful sampling for qualitative data collection and analysis in mixed method implementation research. Administrat. Policy Mental Health 42, 533–544. doi: 10.1007/s10488-013-0528-y

Provenza, N., Matteson, E., Allawala, A., Barrios-Anderson, A., Sheth, S., Viswanathan, A., et al. (2019). The case for adaptive neuromodulation to treat severe intractable mental disorders. Front. Neurosci. 13:152. doi: 10.3389/fnins.2019.00152

Pugh, J., Pycroft, L., Sandberg, A., Aziz, T., and Savulescu, J. (2018). Brainjacking in deep brain stimulation and autonomy. Ethics Inform. Technol. 20, 219–232. doi: 10.1007/s10676-018-9466-4

Roskies, A. (2015). Agency and intervention. Philos. Transact. R. Soc. Lond. Ser. B Biol. Sci. 370:20140215. doi: 10.1098/rstb.2014.0215

Schilling, J. (2006). On the pragmatics of qualitative assessment: designing the process for content analysis. Eur. J. Psychol. Assess. 22, 28–37. doi: 10.1027/1015-5759.22.1.28

Shukla, A. W., Zeilman, P., Fernandez, H., Bajwa, J. A., and Mehanna, R. (2017). DBS programming: an evolving approach for patients with Parkinson’s disease. Parkinsons Dis. 2017:11. doi: 10.1155/2017/8492619

Shute, J. B., Okun, M. S., Opri, E., Molina, R., Rossi, P. J., Martinez-Ramirez, D., et al. (2016). Thalamocortical network activity enables chronic tic detection in humans with Tourette syndrome. Neuroimage Clin. 12, 165–172.

Sierra-Mercado, D., Zuk, P., Beauchamp, M., Sheth, S., Yoshor, D., Goodman, W., et al. (2019). Device removal following brain implant research. Neuron 103, 759–761. doi: 10.1016/j.neuron.2019.08.024

Sullivan, S. L. (2015). Do implanted brain devices threaten autonomy or the “sense” of autonomy? AJOB Neurosci. 6, 24–26. doi: 10.1080/21507740.2015.1094551

Swann, N., de Hemptinne, C., Miocinovic, S., Qasim, S., Wang, S., Ziman, N., et al. (2016). Gamma oscillations in the hyperkinetic state detected with chronic human brain recordings in Parkinson’s disease. J. Neurosci. 36, 6445–6458. doi: 10.1523/JNEUROSCI.1128-16.2016

U.S. Food & Drug Administration (2018). Medical Devices Safety Action Plan: Protecting Patients, Promoting Public Health. Available online at: https://www.fda.gov/files/aboutfda/published/Medical-Device-Safety-Action-Plan–Protecting-Patients–Promoting-Public-Health-(PDF).pdf (accessed June 5, 2020).

Zuk, P., and Lázaro-Muñoz, G. (2019). DBS and autonomy: clarifying the role of theoretical neuroethics. Neuroethics 19, 1–11. doi: 10.1007/s12152-019-09417-4

Keywords: ethics, neuroethics, bioethics, interviews, neuromodulation, deep brain stimulation, ELSI, closed-loop

Citation: Muñoz KA, Kostick K, Sanchez C, Kalwani L, Torgerson L, Hsu R, Sierra-Mercado D, Robinson JO, Outram S, Koenig BA, Pereira S, McGuire A, Zuk P and Lázaro-Muñoz G (2020) Researcher Perspectives on Ethical Considerations in Adaptive Deep Brain Stimulation Trials. Front. Hum. Neurosci. 14:578695. doi: 10.3389/fnhum.2020.578695

Received: 01 July 2020; Accepted: 19 October 2020; Published: 12 November 2020.

Edited by:

Michael S. Okun, University of Florida Health, United States

Reviewed by:

Manish Ranjan, West Virginia University, United States

Alik Sunil Widge, University of Minnesota Twin Cities, United States

Copyright © 2020 Muñoz, Kostick, Sanchez, Kalwani, Torgerson, Hsu, Sierra-Mercado, Robinson, Outram, Koenig, Pereira, McGuire, Zuk and Lázaro-Muñoz. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Gabriel Lázaro-Muñoz, glazaro@bcm.edu
