Epileptic focus localization using transfer learning on multi-modal EEG

- 1Chongqing Institute of Green and Intelligent Technology, Chinese Academy of Sciences, Chongqing, China

- 2Chengdu Institute of Computer Application, Chinese Academy of Sciences, Chengdu, China

- 3Chongqing School, University of Chinese Academy of Sciences, Chongqing, China

- 4Department of Neurology, The First Affiliated Hospital of Chongqing Medical University, Chongqing, China

The standard treatments for epilepsy are drug therapy and surgical resection. However, around 1/3 of patients with intractable epilepsy are drug-resistant, requiring surgical resection of the epileptic focus. To address the issue of drug-resistant epileptic focus localization, we have proposed a transfer learning method on multi-modal EEG (iEEG and sEEG). A 10-fold cross-validation approach was applied to validate the performance of the pre-trained model on the Bern-Barcelona and Bonn datasets, achieving accuracy rates of 94.50 and 97.50%, respectively. The experimental results have demonstrated that the pre-trained model outperforms the competitive state-of-the-art baselines in terms of accuracy, sensitivity, and negative predictive value. Furthermore, we fine-tuned our pre-trained model using the epilepsy dataset from Chongqing Medical University and tested it using the leave-one-out cross-validation method, obtaining an impressive average accuracy of 90.15%. This method shows significant feature differences between epileptic and non-epileptic channels. By extracting data features using neural networks, accurate classification of epileptic and non-epileptic channels can be achieved. Therefore, the superior performance of the model has demonstrated that the proposed method is highly effective for localizing epileptic focus and can aid physicians in clinical localization diagnosis.

1 Introduction

Epilepsy is a worldwide nervous system disease caused by sudden abnormal discharges of nerve cells in the brain. According to statistics, 70 million people worldwide suffer from epilepsy. Clinical manifestations of epileptic seizures include impaired consciousness, limb spasms, urinary incontinence, frothing, and other symptoms. Although short-term epileptic seizures have minimal impact, frequent long-term seizures severely affect patients’ physical, mental and intellectual health (Kwan and Brodie, 2000; Rakhade and Jensen, 2009; Rasheed et al., 2021).

The characteristics of EEG (electroencephalogram) data during epileptic seizure period are related to the original localization and the cause of epilepsy. Different nervous system diseases or brain conditions can cause various epileptic seizures (Babb et al., 1987; Fisher et al., 2017). In the treatment of epilepsy, around 1/3 of patients with intractable epilepsy are drug-resistant. Therefore, precise localization of the epileptic focus during presurgical assessment is necessary for the successful resection of epileptic focus.

There are four clinical methods for epileptic focus localization: observing clinical symptoms, analyzing fMRI (functional magnetic resonance imaging) data, examining sEEG (scalp electroencephalogram) signals, and studying iEEG (intracranial electroencephalogram) signals. Each method has its advantages and limitations. Observing clinical symptoms is the most direct method but can only localize the functional brain areas. Analyzing fMRI data is expensive and has low temporal resolution. Moreover, if the seizures of epileptic patients are not caused by structural brain lesions, this method will not be able to accurately localize the epileptic focus (Morgan et al., 2004; Stufflebeam et al., 2011; Zhang et al., 2015). Examining sEEG signals is widely used in the detection and prediction of epilepsy (Zhang et al., 2021; Wan et al., 2023a; Yang et al., 2023a,b). This method is non-invasive and has high temporal resolution, but it requires time-consuming expert review, and the judgements of different physicians may vary. Finally, iEEG requires electrodes to be implanted in the appropriate target areas of the brain for signal acquisition and analysis, which is costly, complex, and carries a risk of infection.

Patient-independent methods, which involve joint training with data from multiple patients, face challenges in eliminating significant differences between patients (mainly caused by multiple factors such as physical condition, pathogenesis, seizure intensity, seizure type, etc.). Moreover, the sEEG and iEEG signals are multi-modal data with significantly different characteristics. sEEG, or scalp EEG, is severely attenuated by the skull, leading to signals that are not an accurate representation of the region due to volume conduction effects. iEEG, or intracranial EEG, offers high quality signals that truly reflect the activity of the region. Combining the advantages of sEEG and iEEG data offers a promising approach for epileptic focus localization.

The main contributions of our study can be summarized as follows:

(1) In the pre-trained model, the style-feature randomization module and the domain adversarial network were introduced to enhance the generalization ability of the model, achieving the best test results on the Bern-Barcelona dataset and the Bonn dataset;

(2) We have proposed a novel transfer learning method for epileptic focus localization, which uses the Bern-Barcelona dataset to pre-train the model. We then fine-tuned this pre-trained model with the epilepsy dataset from Chongqing Medical University and conducted extensive experiments to validate the practical applicability of our method.

2 Related works

So far, a number of epileptic focus localization technologies have been developed, primarily transforming the epileptic focus localization problem into a classification task. For example, Chen et al. (2017) used discrete wavelet transform (DWT) to extract feature metrics such as Max, Min, Mean, STD, Skewness of wavelet coefficients at all levels, achieving an accuracy of 83.07% on sym6 wavelet coefficients. Daoud and Bayoumi (2020) proposed a method based on semi-supervised learning, achieving an accuracy of 93.21% on the Bern-Barcelona dataset. Zhao et al. (2020) extracted the entropy features of six frequency bands, used STFT to extract time-frequency features for EEG, and combined two features into a CNN network for feature extraction and classification, achieving an accuracy of 88.77%. Zhao et al. (2021) combined entropy, STFT, and 1D-CNN, achieving an accuracy of 93.44%. Sui et al. (2021) proposed TF-HybridNet, incorporating a 1D convolutional network and STFT for time-frequency feature extraction, achieving an accuracy of 94.3%.

In addition, the characteristics of the EEG signal offer valuable information for the localization of the epileptic focus. Staljanssens et al. (2017) used brain functional connectivity metrics to calculate weighted adaptive orientation transfer functions, achieving an accuracy of 88.6% on the University Hospital of Geneva epilepsy dataset. Amirsalar et al. (2019) used the Pearson correlation coefficient between signals in each lead to calculate the mean number of connections and connection strength, finally achieving a sensitivity of 80% on the Karunya University EEG dataset. Gunnarsdottir et al. (2022) proposed an algorithm to identify two groups of nodes (“source” nodes and “sink” nodes) in a resting-state iEEG network. They validated the SSI (source-sink index) in a retrospective analysis of 65 patients, achieving an accuracy of 79%.

The analysis shows that the existing methods have the following disadvantages:

(1) The Bern-Barcelona dataset and the Bonn dataset only contain channel category information. Therefore, existing methods transformed the localization problem into a classification task, which does not achieve accurate epileptic focus localization;

(2) The Bern-Barcelona dataset contains only five patients, and the existing literature does not consider the negative impact of multi-patient differences;

(3) The dataset for epileptic focus localization is limited and the accuracy of epileptic focus localization is low.

Therefore, a method with low cost and high detection accuracy is needed to solve the above issues.

3 Materials and methodology

3.1 EEG data

In this study, we utilized three datasets, including the Bern-Barcelona dataset, the Bonn dataset and the Chongqing Medical University Epilepsy dataset. The Bern-Barcelona and the Bonn datasets were used for pre-training and model performance evaluation. The parameters of the pre-trained model were obtained by training with the Bern-Barcelona dataset, and the Chongqing Medical University epilepsy dataset was used for fine-tuning and testing.

3.1.1 Bern-Barcelona dataset

Recordings from the Department of Neurology, University of Bern, Switzerland were used as the first iEEG dataset in this study. To the best of our knowledge, this is the only open dataset that provides clear annotation of focal and non-focal signals during seizure-free periods (Ralph et al., 2012). It includes data from five patients with drug-resistant temporal lobe epilepsy who were candidates for epilepsy surgery. The dataset contains 7,500 focal samples and 7,500 non-focal samples, each lasting 20 s with a sampling rate of 512 Hz. The signals were filtered by a fourth-order Butterworth bandpass filter with cutoff frequencies at 0.5 and 150 Hz.

3.1.2 Bonn dataset

The second iEEG dataset used in this study, obtained from the Epileptology Department of Bonn University (Andrzejak et al., 2001), consists of five sets of EEG recordings labeled A to E. Each set consists of data from five subjects. Set A represents healthy subjects with open eyes. Set B is recorded from healthy subjects with closed eyes. Set C is recorded from non-epileptogenic zone of the epileptic patients’ brain, while Set D is recorded from epileptogenic zone. Lastly, Set E represents epileptic patients during ictal period. Each set contains a total of 100 EEG segments. Each segment is 23.6 s long with a sampling rate of 173.61 Hz. The iEEG signals were filtered using fourth-order Butterworth bandpass filter with cutoff frequency at 0.5 and 85 Hz. In this study, we focus on set C and D as they represent the non-focal and focal iEEG signals, respectively.

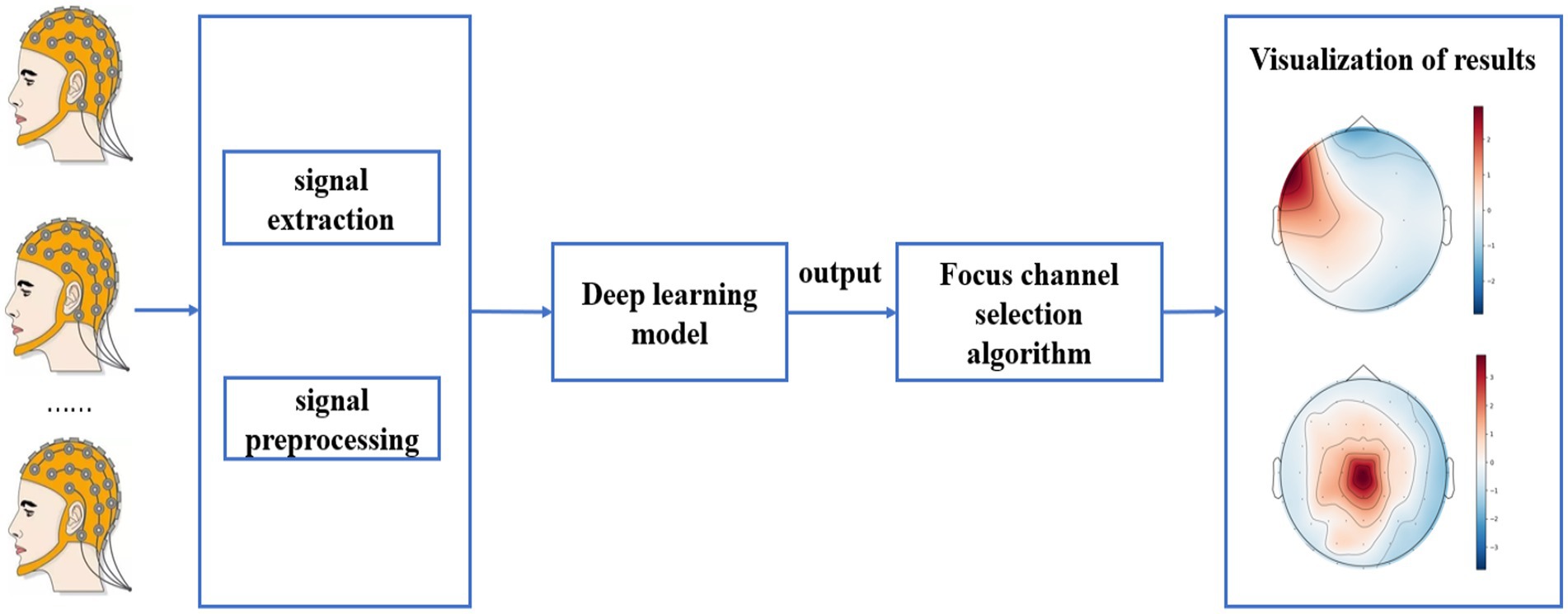

3.1.3 Chongqing Medical University epilepsy dataset

The third dataset used in this study is an sEEG dataset obtained from Chongqing Medical University (hereafter the CQMUE dataset), including data from six patients. To expand the sample size, we selected patients with multiple seizures. The dataset comprises 16 channels. Each sample is 20 s long with a sampling rate of 512 Hz, filtered by a fourth-order Butterworth bandpass filter with cutoff frequencies at 0.5 and 150 Hz. Details of the dataset are given in Table 1.

3.2 Methodology

Because the Bern-Barcelona dataset is large, containing 7,500 focal and 7,500 non-focal samples, we have proposed a transfer learning method that makes full use of these data during the pre-training phase. In this approach, we utilize the CQMUE dataset to fine-tune and test the model. Notably, the Bern-Barcelona dataset includes data from five epilepsy patients, with significant differences between them (mainly due to multiple factors such as physical condition, pathogenesis, seizure intensity, and seizure type). If we train the model directly with multi-patient data, it will quickly lead to model underfitting. To address this issue, we implemented a style-feature randomization block, a multi-level temporal-spectral feature extraction network, and a domain adversarial network to enhance the generalization ability of the pre-trained model.

3.2.1 Pre-trained model

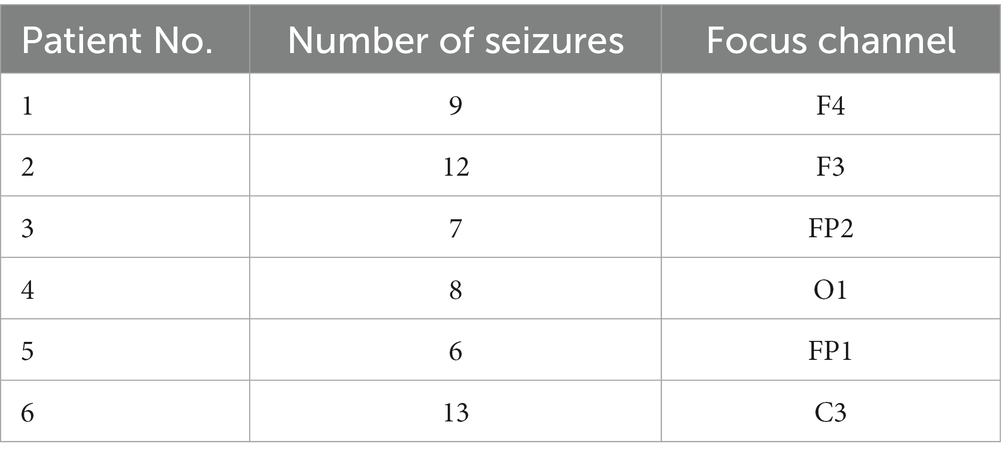

The pre-trained model consists of an embedding block, a style-feature randomization block, a multi-level temporal-spectral feature extraction network (Hu et al., 2018; Li et al., 2020; Wan et al., 2023b), a category classifier, and a patient discriminator, as illustrated in Figure 1. The embedding block extends the data across multiple channels to enhance the discriminative properties. The style-feature randomization module disrupts data features within a training batch, enhancing the generalization ability of the model. The multi-level temporal-spectral feature extraction network utilizes temporal-spectral features to enhance feature discrimination. The category classifier completes the classification of the data. The patient discriminator employs DANN (domain-adversarial training of neural networks; Yaroslav et al., 2016) to extract the essential data features.

3.2.1.1 Embedding block

Before the data is fed into the embedding block, necessary data preprocessing is required (Liu et al., 2015; Versaci et al., 2022). The embedding block, i.e., successive temporal convolution and batch normalization (BN) operations, was initially employed to derive an optimal filter band for subsequent analysis [since convolution operators are essentially equivalent to a low-pass filter (Azimi et al., 2019)]. As a result, after stacking the original data and the output embeddings with a channel-wise concatenation function, the embedding block obtained sub-band matrices that provided a subsequent network with adaptive sub-band responses and actual data. Finally, the data was fed into the multi-level temporal-spectral feature extraction module for feature extraction.

3.2.1.2 Style-feature randomization

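Within a training batch, the sub-band matrices are computed by the embedding block. Due to significant style-feature differences between the data of each sub-band for different patients, an enhancement of the model’s generalization ability is necessary. To achieve this, we computed the mean $\mu_{bc}$ and the standard deviation $\sigma_{bc}$ across spatial dimensions independently for each sub-band (Oren et al., 2021):

$$\mu_{bc}(x) = \frac{1}{HW}\sum_{h=1}^{H}\sum_{w=1}^{W} x_{bchw} \tag{1}$$

$$\sigma_{bc}(x) = \sqrt{\frac{1}{HW}\sum_{h=1}^{H}\sum_{w=1}^{W}\left(x_{bchw}-\mu_{bc}(x)\right)^{2}+\epsilon} \tag{2}$$

where $x \in \mathbb{R}^{B \times C \times H \times W}$, $B$ represents the batch size, $C$ represents the number of channels, $H$ represents the height of the data matrix, and $W$ represents the width of the data matrix. $x_{bchw}$ represents an element in the data matrix, $\mu_{bc}$ and $\sigma_{bc}$ represent the mean and standard deviation for each sub-band, and $\epsilon$ is a small constant for numerical stability.

Then, we randomly permute $\mu$ and $\sigma$ across the samples of the batch to obtain $\hat{\mu}$ and $\hat{\sigma}$; $\hat{x}$ is finally obtained by the following equation:

$$\hat{x} = \hat{\sigma}\,\frac{x-\mu(x)}{\sigma(x)}+\hat{\mu} \tag{3}$$

where $x$ is the sub-band matrix obtained by the embedding block.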
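The statistic-swapping step described above can be illustrated with a minimal, standard-library sketch. It is not the authors' implementation: the function names, the flattened list layout (`batch[b][c]` is one sub-band of one sample), and the `eps` constant are assumptions made for illustration.

```python
import math
import random


def channel_stats(band, eps=1e-5):
    """Mean and standard deviation of one flattened sub-band."""
    mean = sum(band) / len(band)
    var = sum((v - mean) ** 2 for v in band) / len(band)
    return mean, math.sqrt(var + eps)


def style_randomize(batch, rng):
    """Swap per-sub-band statistics between samples of a batch.

    batch[b][c] is the flattened sub-band c of sample b (hypothetical layout).
    """
    order = list(range(len(batch)))
    rng.shuffle(order)  # random permutation of the batch index
    mixed = []
    for b, sample in enumerate(batch):
        donor = batch[order[b]]  # sample that lends its statistics
        new_sample = []
        for c, band in enumerate(sample):
            mu, sigma = channel_stats(band)
            mu_hat, sigma_hat = channel_stats(donor[c])
            # Normalize with own statistics, re-scale with the donor's.
            new_sample.append([sigma_hat * (v - mu) / sigma + mu_hat for v in band])
        mixed.append(new_sample)
    return mixed, order


rng = random.Random(0)
# Four synthetic "patients" whose sub-bands differ in offset and scale.
batch = [
    [[rng.gauss(5.0 * b, 1.0 + b) for _ in range(64)] for _ in range(2)]
    for b in range(4)
]
mixed, order = style_randomize(batch, rng)
```

After the call, each `mixed[b][c]` keeps the waveform shape of sample `b` but carries the mean and standard deviation of sample `order[b]`, which is the sense in which style features are randomized within the batch.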

3.2.1.3 Multi-level temporal-spectral feature extraction network

To prevent deformation of the boundary data caused by zero padding in the convolution operation, the head and tail of the data are filled according to Eq. (4) (Li et al., 2020):

where | is a concatenating operator, $x_i$ represents the i-th element of the input $x$, and $k$ represents the kernel-size parameter of the convolution operation.

To expedite calculation time, the proposed method adopted convolution operation to perform multi-level wavelet decomposition, which is defined as follows (Li et al., 2020):

where $*$ is the convolution operation, $g$ and $h$ represent a pair of scaling and wavelet filters, $s$ represents the stride parameter of the convolution operation, $A$ represents the approximation (low-pass) coefficients, and $D$ represents the detail (high-pass) coefficients.
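As a rough illustration of wavelet decomposition expressed as strided convolution, the sketch below filters a signal with a low-pass and a high-pass kernel at stride 2 and repeats the step on the approximation branch. For brevity it uses the two-tap Haar filter pair rather than the Db4 filters used in the paper, and it omits the boundary padding of Eq. (4); the function names are hypothetical.

```python
import math


def strided_conv(signal, kernel, stride):
    """Valid 1-D correlation of `signal` with `kernel`, sampled every `stride` steps."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(0, len(signal) - k + 1, stride)
    ]


def multilevel_decompose(signal, low, high, levels, stride=2):
    """Return the final approximation and the detail coefficients of each level."""
    approx, details = list(signal), []
    for _ in range(levels):
        details.append(strided_conv(approx, high, stride))  # high-pass branch
        approx = strided_conv(approx, low, stride)           # low-pass branch
    return approx, details


# Haar pair, swapped in here for simplicity (the paper uses Db4).
HAAR_LOW = [1 / math.sqrt(2), 1 / math.sqrt(2)]
HAAR_HIGH = [1 / math.sqrt(2), -1 / math.sqrt(2)]

signal = [math.sin(2 * math.pi * 5 * n / 64) for n in range(64)]
approx, details = multilevel_decompose(signal, HAAR_LOW, HAAR_HIGH, levels=3)
```

Each level halves the length of the sequence, and because the Haar pair is orthonormal the total energy of the coefficients equals the energy of the input, which gives a quick sanity check for the sketch.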

In the multi-level temporal feature extraction module, we adopted five independent convolution, batch normalization, and exponential linear unit (ELU) operations to capture multi-level temporal feature information within different receptive fields. The convolution kernel size is set to [S, 1], with S taking a different value in each branch, and ultimately the multi-level temporal features are derived.

In the multi-level spectral feature extraction module, we selected the Daubechies order-4 (Db4) wavelet function to extract the corresponding wavelet coefficients within the standard physiological sub-bands δ(0 ~ 4 Hz), θ(4 ~ 8 Hz), α(8 ~ 16 Hz), β(16 ~ 32 Hz), γ(32 ~ 64 Hz), and high-γ(65 ~ 128 Hz). Finally, the frequency features of these six sub-bands are derived.
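The link between decomposition level and frequency band can be made explicit with a short calculation. The sketch below lists the nominal (idealized) band edges of a dyadic decomposition, assuming the 512 Hz sampling rate stated for the Bern-Barcelona and CQMUE data; real wavelet filters overlap at the band edges, and the function name is hypothetical.

```python
def dyadic_bands(fs, levels):
    """Nominal frequency range of each detail level and the final approximation."""
    bands = []
    upper = fs / 2  # Nyquist frequency
    for level in range(1, levels + 1):
        lower = upper / 2
        bands.append((f"D{level}", lower, upper))  # detail coefficients at this level
        upper = lower
    bands.append((f"A{levels}", 0.0, upper))       # remaining low-pass approximation
    return bands


bands = dyadic_bands(fs=512, levels=6)
for name, low_hz, high_hz in bands:
    print(f"{name}: {low_hz:g} to {high_hz:g} Hz")
```

Under these assumptions, levels D2 to D6 and A6 correspond to the high-γ, γ, β, α, θ, and δ ranges respectively, while D1 (128 to 256 Hz) lies above the bands used in the text.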

To further extract discriminative feature information, the features extracted by the multi-level temporal feature extraction module and the multi-level spectral feature extraction module were combined according to the feature dimensions:

The combined features were fed into a multi-level squeeze-and-excitation network (Hu et al., 2018) to enhance the discriminability of the features.
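For readers unfamiliar with the channel-attention idea of Hu et al. (2018), the following is a minimal single-level sketch: each feature channel is averaged (squeeze), passed through two small fully connected layers (excitation), and the resulting gate rescales the channel. The weights, the layer sizes, and the list-based layout are illustrative assumptions, not the configuration used in the paper.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense(vec, weights, bias):
    """Fully connected layer: one output per weight row."""
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]


def squeeze_excite(features, w1, b1, w2, b2):
    """features[c] is one flattened feature channel (hypothetical layout)."""
    squeezed = [sum(ch) / len(ch) for ch in features]        # global average pooling
    hidden = [max(0.0, h) for h in dense(squeezed, w1, b1)]  # bottleneck + ReLU
    gates = [sigmoid(g) for g in dense(hidden, w2, b2)]      # per-channel gate in (0, 1)
    rescaled = [[g * v for v in ch] for g, ch in zip(gates, features)]
    return rescaled, gates


features = [[1.0, 2.0, 3.0], [0.5, 0.5, 0.5], [-1.0, 0.0, 1.0], [4.0, 4.0, 4.0]]
w1 = [[0.5, -0.5, 0.0, 0.25], [0.1, 0.2, 0.3, 0.4]]     # 4 channels -> 2 hidden units
b1 = [0.0, 0.0]
w2 = [[1.0, 0.0], [0.0, 1.0], [-1.0, 1.0], [0.5, 0.5]]  # 2 hidden units -> 4 gates
b2 = [0.0, 0.0, 0.0, 0.0]
rescaled, gates = squeeze_excite(features, w1, b1, w2, b2)
```

In a trained network the two weight matrices are learned, so channels that help the classifier receive gates close to 1 and less informative channels are attenuated.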

3.2.1.4 Category classifier

For the category classifier, the method utilized data from each channel to achieve binary classification of epileptic and non-epileptic focus channels. A 3-layer fully connected network was employed for the category classifier. We applied the CrossEntropy loss to achieve accurate classification and the MSE (Mean Squared Error) loss to minimize the output differences between source data and style-feature randomization data. The loss functions of the classification network are as follows:

where $\mathcal{L}_{CE}$ is the CrossEntropy loss function, $E$ represents the embedding block, $S$ represents style-feature randomization, $F$ represents the multi-level temporal-spectral feature extraction network, $C$ represents the category classifier, $y$ represents the category label, $x$ represents input samples, and $\mathcal{D}$ represents a dataset.

3.2.1.5 Patient discriminator

Since the dataset contains data from multiple patients, we have proposed a method based on the DANN (Yaroslav et al., 2016) to enhance the generalization ability of the model. Features from each patient were extracted according to the marginal distribution by the global adversarial network. The global adversarial loss function is as follows:

where $\mathcal{L}_{CE}$ is the CrossEntropy loss function, $F$ represents the multi-level temporal-spectral feature extraction network, $G_d$ represents the patient discriminator, $d$ represents the patient label, and $\mathcal{D}_p$ represents the patient sample set.

3.2.1.6 Training details

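We proposed an adversarial training strategy to train the loss functions jointly. The patient discriminator is trained through a special layer called the Gradient Reversal Layer (GRL). The GRL acts as an identity mapping during forward propagation, and the gradient is reversed in backpropagation. Finally, we searched for the optimal parameters to meet the following requirements:

$$(\hat{\theta}_f, \hat{\theta}_y) = \arg\min_{\theta_f,\,\theta_y} L(\theta_f, \theta_y, \hat{\theta}_d), \qquad \hat{\theta}_d = \arg\max_{\theta_d} L(\hat{\theta}_f, \hat{\theta}_y, \theta_d)$$

where $L$ denotes the joint loss, $\theta_f$ represents the parameters of the multi-level temporal-spectral feature extraction network, $\theta_y$ represents the parameters of the category classifier, and $\theta_d$ represents the parameters of the patient discriminator.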
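The behaviour of a gradient reversal layer can be shown without any deep learning framework. The toy class below is a sketch under stated assumptions: the class name, the explicit `backward` method, and the scaling factor `lam` are hypothetical, and in PyTorch the same idea is usually written as a custom `torch.autograd.Function`.

```python
class GradientReversal:
    """Identity in the forward pass, sign-flipped (and scaled) gradient in the backward pass."""

    def __init__(self, lam=1.0):
        self.lam = lam  # strength of the adversarial signal (assumed hyperparameter)

    def forward(self, x):
        # Features pass through unchanged on the way to the patient discriminator.
        return list(x)

    def backward(self, grad_output):
        # The gradient coming back from the discriminator is reversed before it
        # reaches the feature extractor.
        return [-self.lam * g for g in grad_output]


grl = GradientReversal(lam=0.5)
features = [0.2, -1.0, 3.0]
passed = grl.forward(features)
reversed_grad = grl.backward([1.0, -2.0, 0.5])
```

Because of the sign flip, a gradient step that improves the discriminator's loss pushes the feature extractor in the opposite direction, toward features that carry less patient-specific information.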

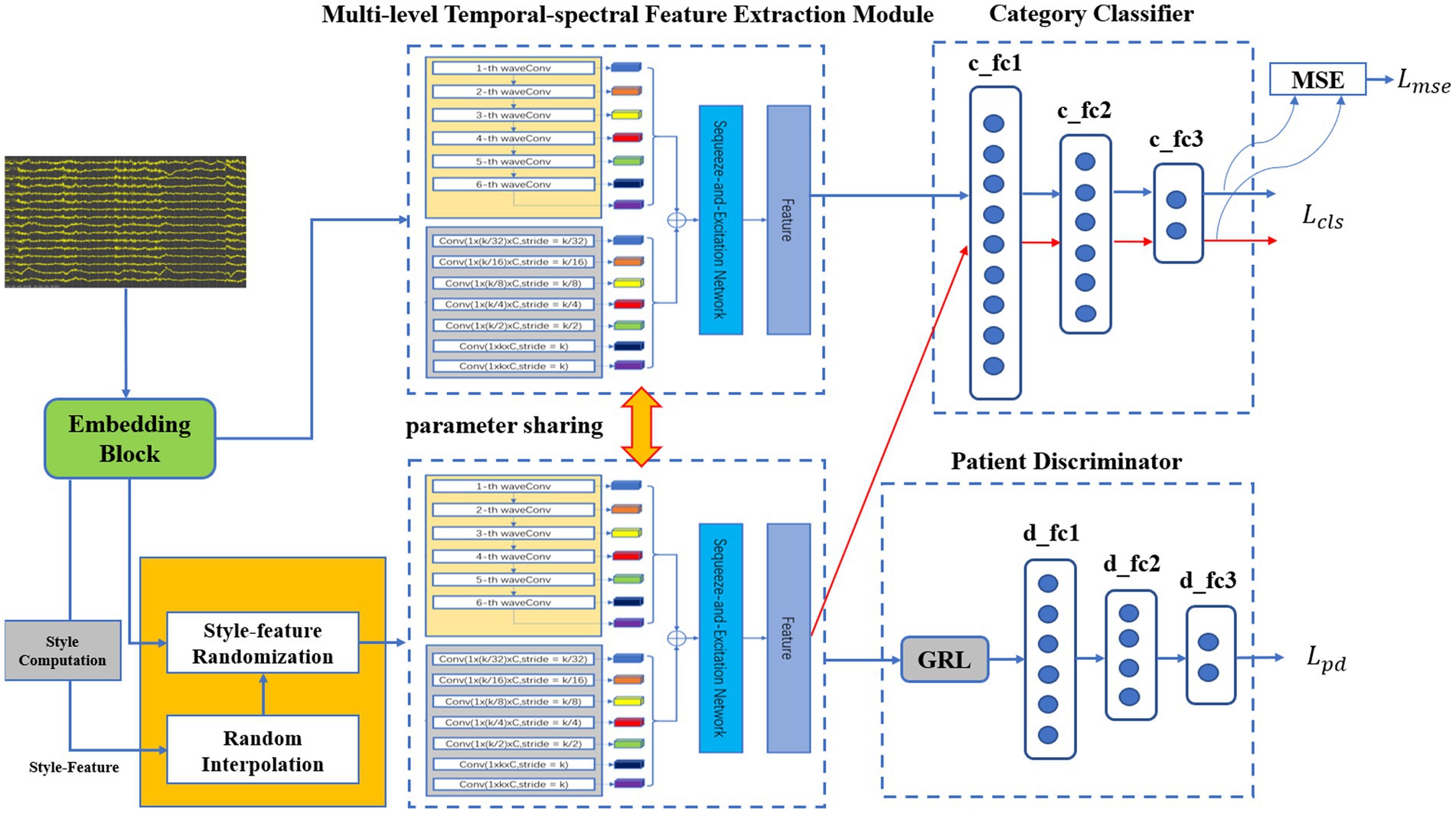

3.2.2 Model fine-tuning

The parameters of feature extraction module in the pre-trained model were frozen, and then the category classifier was fine-tuned using the CQMUE dataset. In the CQMUE dataset, only one channel is a seizure channel, causing an imbalance between the positive and negative samples. To address this issue, we have introduced the weighted CrossEntropy loss function (Rezaei-Dastjerdehei et al., 2020):

$$L_{wce} = -\sum_{i=1}^{K} w_i\, y_i \log(p_i)$$

where $w_i$ is the weight parameter for each category within the dataset, $N$ represents the total number of samples in the dataset, $N_i$ represents the number of samples for each category within the dataset, $K$ represents the number of categories, $p_i$ represents the model output probability, and $y_i$ represents the label for each category.

In the fine-tuning phase, the CrossEntropy loss function must be replaced with the weighted CrossEntropy loss function. The parameters of the feature extraction module were frozen, while the parameters of the category classifier were trainable. The transfer learning model is shown in Figure 2.
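To make the effect of class weighting concrete, the sketch below computes a weighted cross-entropy for a single sample with a one-hot label. The inverse-frequency weights `N / (K * N_i)` are an assumed scheme chosen for illustration and may differ from the weights used in the paper; the 15:1 class counts mirror a 16-channel recording with one focal channel.

```python
import math


def class_weights(counts):
    """Inverse-frequency weights N / (K * N_i); an assumed weighting scheme."""
    total, k = sum(counts), len(counts)
    return [total / (k * n) for n in counts]


def weighted_cross_entropy(probs, label, weights):
    """Weighted cross-entropy of one sample whose true class index is `label`."""
    return -weights[label] * math.log(probs[label])


counts = [15, 1]  # illustrative: 15 non-focal channels, 1 focal channel
weights = class_weights(counts)

# The same predicted probability (0.7) for the true class costs more for the rare class.
loss_focal = weighted_cross_entropy([0.3, 0.7], label=1, weights=weights)
loss_nonfocal = weighted_cross_entropy([0.7, 0.3], label=0, weights=weights)
```

In PyTorch the same effect is typically obtained by passing a weight tensor to `torch.nn.CrossEntropyLoss` through its `weight` argument.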

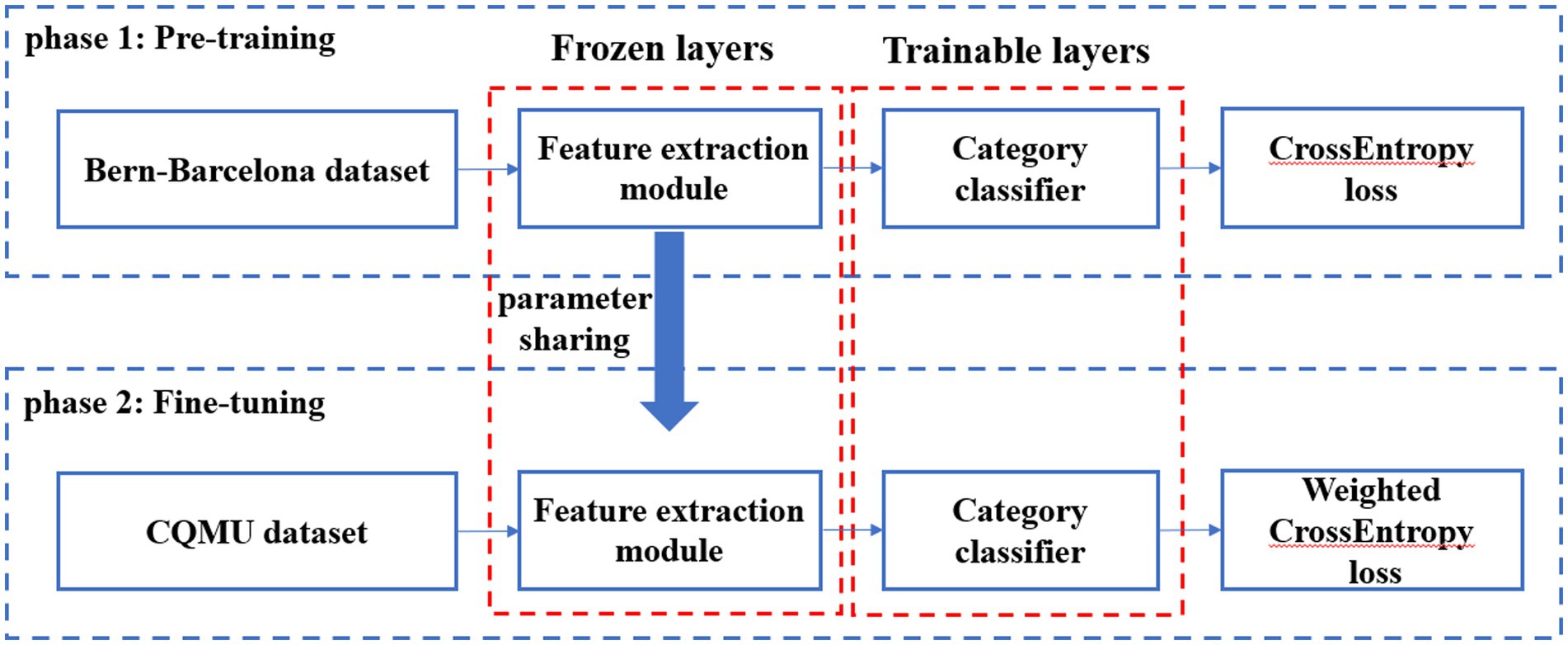

3.2.3 Result visualization

For the test data, first the output probability of each channel was calculated, then the channel with the highest output probability was selected as the epileptic focus channel, and finally the output probability of each channel was visualized by whole brain topography. The test procedure is shown in Figure 3.
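The selection rule described above amounts to an argmax over per-channel probabilities. A minimal sketch follows; the channel names and probability values are hypothetical and serve only to show the rule.

```python
def localize_focus(channel_probs):
    """Return the channel with the highest predicted focal probability."""
    return max(channel_probs, key=channel_probs.get)


def rank_channels(channel_probs):
    """Channels sorted from most to least likely focal, e.g. for a topographic plot."""
    return sorted(channel_probs, key=channel_probs.get, reverse=True)


# Hypothetical classifier outputs for four scalp channels.
channel_probs = {"Fp1": 0.08, "F7": 0.91, "T3": 0.35, "O1": 0.02}
focus = localize_focus(channel_probs)
ranking = rank_channels(channel_probs)
```

Keeping the full ranking, not only the top channel, is what allows the probabilities to be rendered as a whole-brain topography.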

3.3 Evaluation

To evaluate the pre-trained models, a 10-fold cross-validation was performed on the Bern-Barcelona dataset and the Bonn dataset. All data were randomly scrambled and divided into 10 parts, one part of which was used for testing and the others for training.

Moreover, the robustness of our approach on the CQMUE dataset was validated through leave-one-out cross-validation, i.e., data from one patient are used for testing and data from the remaining patients are used for fine-tuning the model. The test results were averaged to obtain the final result.
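The two evaluation protocols can be sketched as index generators. This is an illustrative outline rather than the authors' evaluation code: the function names, the fixed random seed, and the toy patient labels are assumptions.

```python
import random


def k_fold_indices(n_samples, k, seed=0):
    """Shuffle sample indices and yield (train, test) index lists for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [idx for j, fold in enumerate(folds) if j != i for idx in fold]
        yield train, folds[i]


def leave_one_patient_out(patient_ids):
    """Yield (held_out, tune, test): one patient for testing, the rest for fine-tuning."""
    for held_out in sorted(set(patient_ids)):
        tune = [i for i, p in enumerate(patient_ids) if p != held_out]
        test = [i for i, p in enumerate(patient_ids) if p == held_out]
        yield held_out, tune, test


folds = list(k_fold_indices(n_samples=200, k=10))

# Toy labels: which patient each sample came from (six patients, as in the CQMUE data).
patient_ids = ["P1", "P1", "P2", "P3", "P3", "P3", "P4", "P5", "P6", "P6"]
lopo = list(leave_one_patient_out(patient_ids))
```

Splitting by patient rather than by sample keeps every recording of the held-out patient out of fine-tuning, which is what makes the reported accuracy a patient-independent estimate.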

3.3.1 Experimental parameters

The experimental environment included the Windows 10 operating system, Python 3.7.4 as the programming language, and PyTorch (version 11.1) as the deep learning framework. The graphics card used was a GeForce RTX 3060.

The number of training epochs was set to 100 and the batch size was set to 100. The loss function consists of the CrossEntropy loss function, the weighted CrossEntropy loss function and the MSE loss function. The model adopted the Adam optimizer, and the learning rate was set to 0.0005. All parameters were optimized using grid search.

3.3.2 Evaluation metrics

The experiment employed accuracy (ACC), sensitivity (SN), specificity (SP), positive predictive value (PPV) and negative predictive value (NPV) to quantify the performance of the proposed method (Chen et al., 2017):

$$ACC = \frac{TP+TN}{TP+TN+FP+FN}, \quad SN = \frac{TP}{TP+FN}, \quad SP = \frac{TN}{TN+FP}, \quad PPV = \frac{TP}{TP+FP}, \quad NPV = \frac{TN}{TN+FN}$$

where TP, TN, FP, and FN are true positive, true negative, false positive and false negative, respectively.
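The five metrics follow directly from the confusion-matrix counts using their standard definitions. The sketch below computes them for made-up counts; the function name and the numbers are illustrative and are not results from the paper.

```python
def classification_metrics(tp, tn, fp, fn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    return {
        "ACC": (tp + tn) / (tp + tn + fp + fn),
        "SN": tp / (tp + fn),   # sensitivity: share of focal channels found
        "SP": tn / (tn + fp),   # specificity: share of non-focal channels rejected
        "PPV": tp / (tp + fp),  # positive predictive value
        "NPV": tn / (tn + fn),  # negative predictive value
    }


# Illustrative counts only.
metrics = classification_metrics(tp=90, tn=95, fp=5, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```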

4 Experiments and discussions

4.1 Overall comparison

In this section, we computed a number of performance metrics, namely accuracy, sensitivity, specificity, positive predictive value (PPV) and negative predictive value (NPV), on the Bern-Barcelona and Bonn datasets to evaluate our pre-trained model. The classification accuracy of the proposed method was 94.50% on the Bern-Barcelona dataset and 97.50% on the Bonn dataset. We attribute the high accuracy to the use of convolutional layers, style-feature randomization, the squeeze-and-excitation network, and domain adversarial training. The robustness of our approach has been validated via the 10-fold cross-validation method.

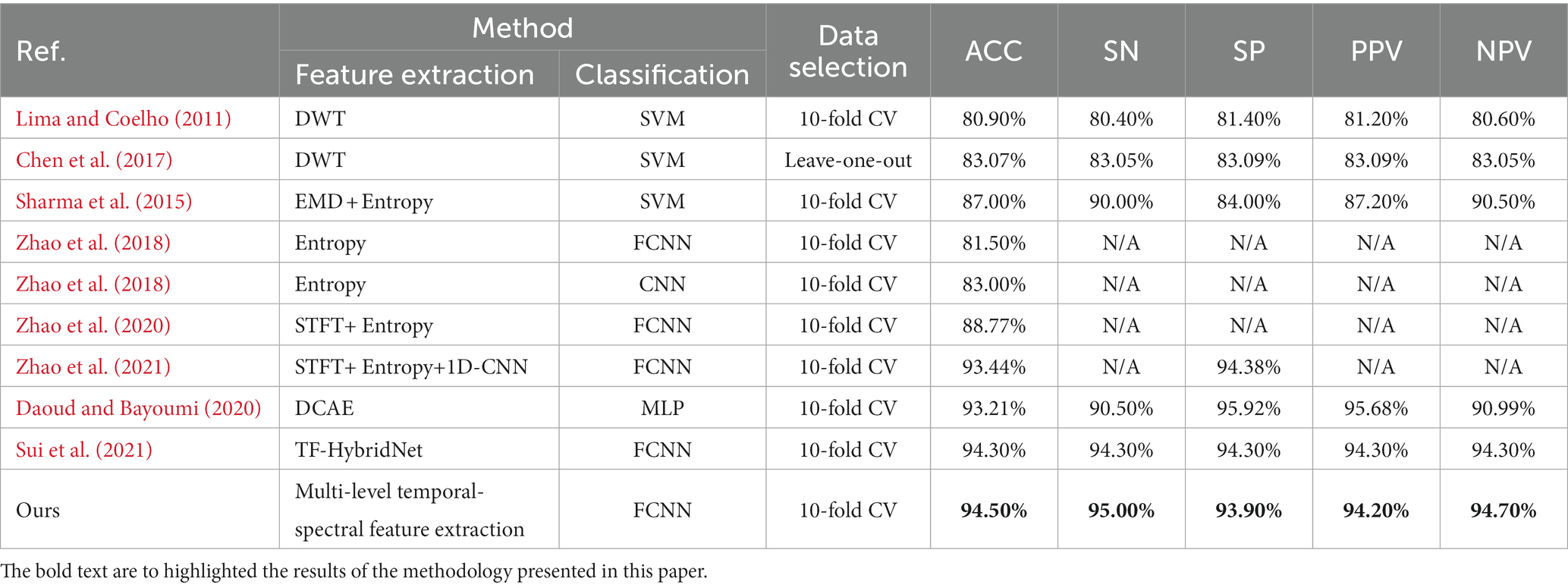

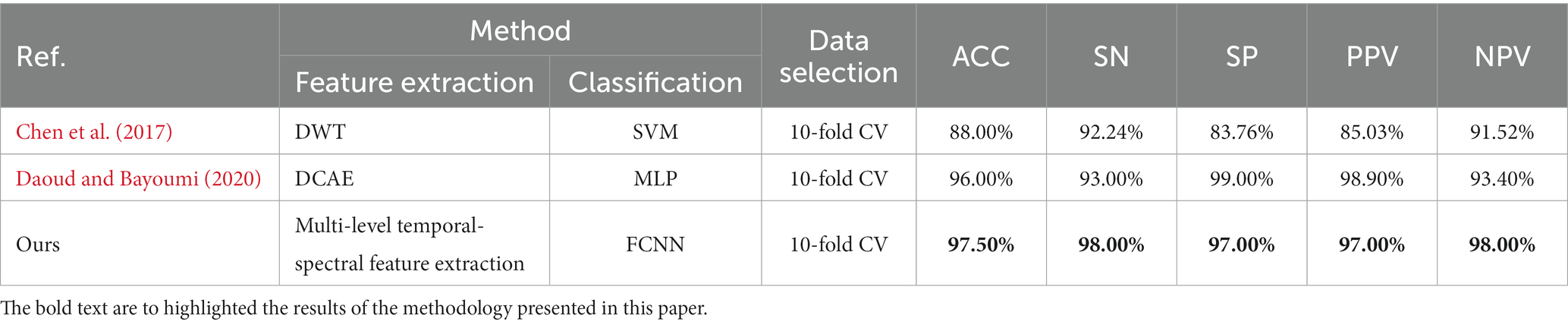

For an additional evaluation of our method, we performed a comparison experiment on the same dataset. Table 2 shows the results on the Bern-Barcelona dataset, and Table 3 shows the results on the Bonn dataset.

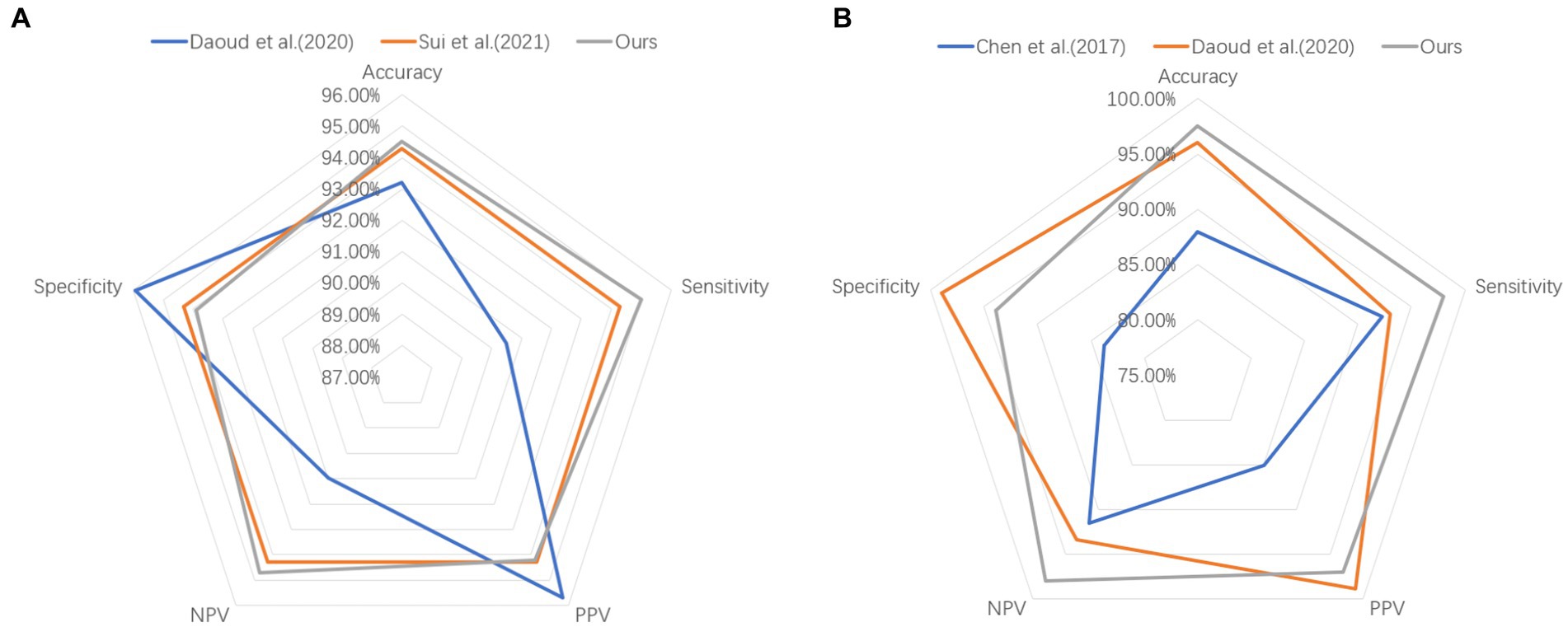

To better illustrate the comparison between our proposed method and other methods on both datasets, we adopt radar charts, which compare the methods across several indicators (accuracy, sensitivity, specificity, PPV, NPV). Our method covers a larger pentagon on both datasets, which shows that our approach outperforms previous work in localizing the epileptic focus. The radar charts are illustrated in Figure 4.

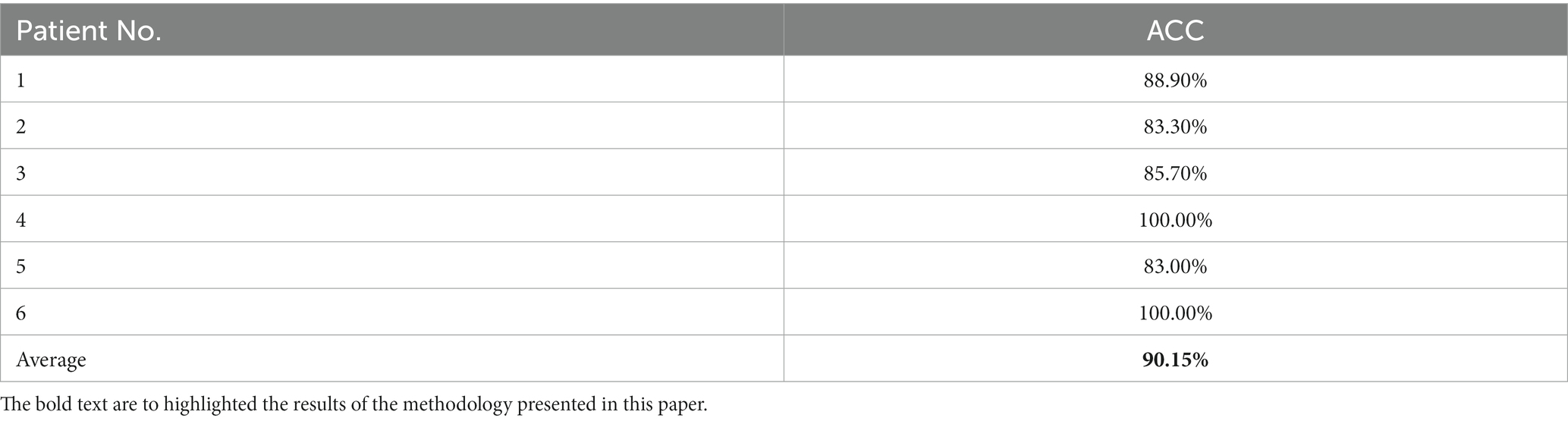

We employed the CQMUE dataset to validate the performance of the pre-trained model. The CQMUE dataset contains EEG data from only six patients, but each patient has multiple epileptic seizures. In the fine-tuning and validation experiment, we used the leave-one-out cross-validation method, i.e., data from five patients are used for model fine-tuning and data from the remaining patient are used for validation. To avoid model overfitting, we increased the number of fine-tuning samples by segmenting the recordings into 20 s EEG samples with 90% overlap between adjacent segments. For the patient used for validation, we took only one sample (20 s) from each epileptic seizure. Experimentally, the average accuracy of epileptic focus localization was 90.15%. The results are shown in Table 4.
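The overlapping segmentation used to enlarge the fine-tuning set can be sketched as a computation of window start positions. The 20 s length, 512 Hz sampling rate, and 90% overlap come from the text; the function name and the one-minute example recording are assumptions.

```python
def window_starts(n_samples, fs=512, win_sec=20.0, overlap=0.9):
    """Window length, hop size, and start index of every full window in a recording."""
    win = int(round(win_sec * fs))           # samples per window
    step = int(round(win * (1.0 - overlap)))  # hop between adjacent windows
    starts = list(range(0, n_samples - win + 1, step))
    return win, step, starts


# Hypothetical one-minute, single-channel recording at 512 Hz.
win, step, starts = window_starts(n_samples=60 * 512)
print(f"window = {win} samples, hop = {step} samples, windows = {len(starts)}")
```

With 90% overlap the hop is one tenth of the window, so a recording yields roughly ten times as many samples as non-overlapping segmentation would, at the cost of strong correlation between neighbouring samples.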

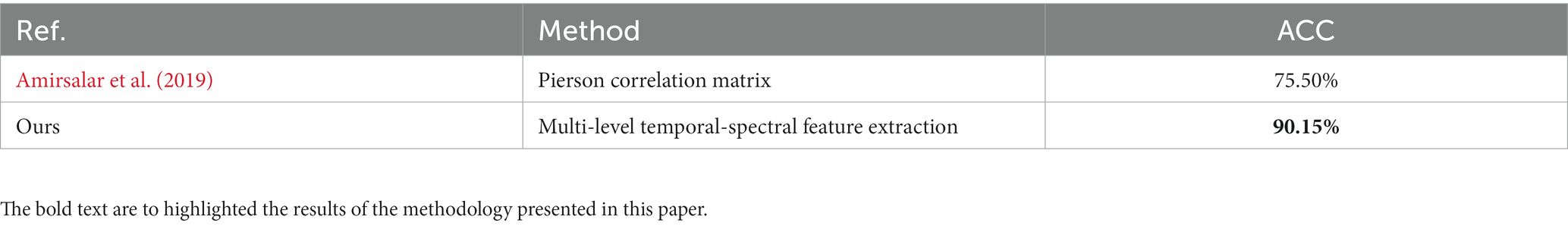

Table 5 compares our proposed method with the related literature (Amirsalar et al., 2019) on the CQMUE dataset; our method achieved a high accuracy of 90.15%.

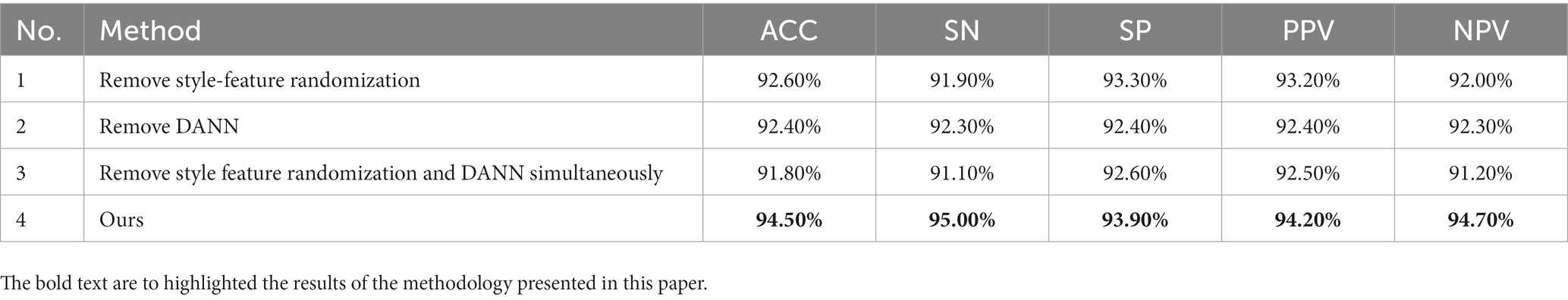

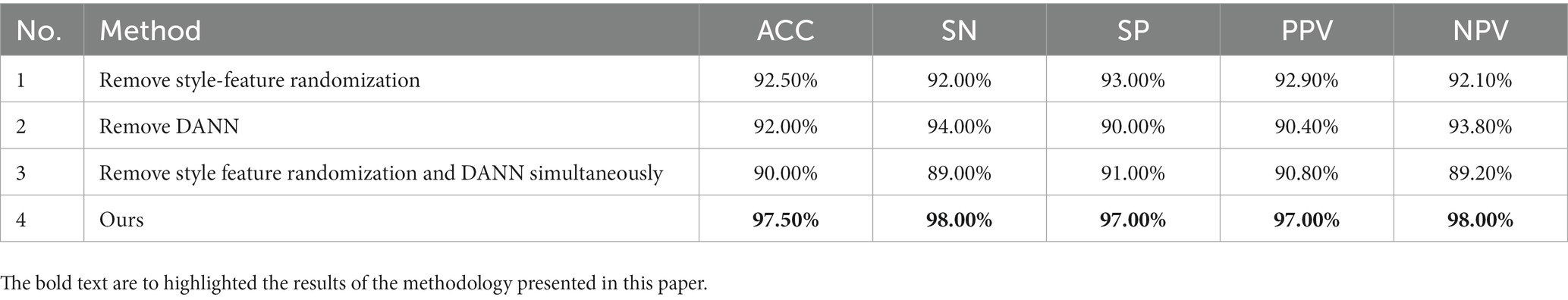

4.2 Ablation experiments with pre-trained models

To validate the performance of the style-feature randomization module and the DANN in the pre-trained model, we performed ablation experiments on the Bern-Barcelona and Bonn datasets. We tested the performance of removing the style-feature randomization module, removing the DANN, and removing both the style-feature randomization and DANN modules, and compared them with the proposed method. Tables 6, 7 show that the performance of the model decreased after removing the style-feature randomization and DANN modules on the Bern-Barcelona and Bonn datasets, respectively.

5 Conclusion

In this paper, we have proposed a deep learning model for the localization of epileptic focus. This method includes a pre-training phase and a fine-tuning phase. In the pre-training phase, the model adopted a multi-level temporal-spectral feature extraction model and an attention mechanism to enhance the feature extraction ability, achieving an average focus localization accuracy of 94.5% on the Bern-Barcelona dataset and 97.5% on the Bonn dataset, respectively. When compared with related methods, the experimental results have demonstrated that the pre-trained model outperforms competitive state-of-the-art baselines in accuracy, sensitivity, and negative predictive value. To validate the model’s actual performance, we fine-tuned our pre-trained model using the epilepsy dataset from Chongqing Medical University and conducted tests, obtaining an impressive average accuracy of 90.15%. Therefore, the superior performance of the model has demonstrated that the proposed method is highly effective for localizing epileptic focus. Next, we will develop a medical device that incorporates the proposed method to assist physicians’ clinical localization diagnosis of epileptic focus.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://www.upf.edu/web/ntsa/downloads/-/asset_publisher/xvT6E4pczrBw/content/2012-nonrandomness-nonlinear-dependence-and-nonstationarity-of-electroencephalographic-recordings-from-epilepsy-patients.

Ethics statement

The studies involving humans were approved by the First Affiliated Hospital of Chongqing Medical University. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and institutional requirements.

Author contributions

YY: Methodology, Software, Writing – original draft. FL: Data curation, Resources, Writing – original draft. JL: Data curation, Resources, Writing – original draft. XQ: Supervision, Writing – review & editing. DH: Supervision, Validation, Writing – review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This study was supported in part by Sichuan Science and Technology Plan of China under Grants 2019ZDZX0006 and 2020YFQ0056.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Amirsalar, M., Sanjay, P. S., and Khalid, S. (2019). Online EEG seizure detection and localization. Algorithms 12:176. doi: 10.3390/a12090176

Andrzejak, R. G., Lehnertz, K., Mormann, F., Rieke, C., David, P., and Elger, C. E. (2001). Indications of nonlinear deterministic and finite-dimensional structures in time series of brain electrical activity: dependence on recording region and brain state. Phys. Rev. E 64, 1–8. doi: 10.1103/PhysRevE.64.061907

Azimi, S. M., Fischer, P., Körner, M., and Reinartz, P. (2019). Aerial LaneNet: lane-marking semantic segmentation in aerial imagery using wavelet-enhanced cost-sensitive symmetric fully convolutional neural networks. IEEE Trans. Geosci. Remote Sens. 57, 2920–2938. doi: 10.48550/arXiv.1803.06904

Babb, T. L., Wilson, C. L., and Isokawa-Akesson, M. (1987). Firing patterns of human limbic neurons during stereoencephalography (SEEG) and clinical temporal lobe seizures. Electroencephalogr. Clin. Neurophysiol. 66, 467–482. doi: 10.1016/0013-4694(87)90093-9

Chen, D., Wan, S., and Bao, F. S. (2017). Epileptic focus localization using discrete wavelet transform based on interictal intracranial EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 25, 413–425. doi: 10.1109/TNSRE.2016.2604393

Daoud, H., and Bayoumi, M. (2020). Deep learning approach for epileptic focus localization. IEEE Trans. Biomed. Circ. Syst. 14, 209–220. doi: 10.1109/TBCAS.2019.2957087

Fisher, R. S., Cross, J. H., French, J. A., Higurashi, N., Hirsch, E., Jansen, F. E., et al. (2017). Operational classification of seizure types by the international league against epilepsy: position paper of the ILAE commission for classification and terminology. Epilepsia 58, 522–530. doi: 10.1111/epi.13670

Gunnarsdottir, K. M., Li, A., Smith, R. J., Kang, J. Y., Korzeniewska, A., Crone, N. E., et al. (2022). Source-sink connectivity: a novel interictal EEG marker for seizure localization. Brain 145, 3901–3915. doi: 10.1093/brain/awac300

Hu, J., Shen, L., and Sun, G. (2018). “Squeeze-and-excitation networks.” in 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition. pp. 7132–7141.

Kwan, P., and Brodie, M. J. (2000). Early identification of refractory epilepsy. N. Engl. J. Med. 342, 314–319. doi: 10.1056/NEJM200002033420503

Li, Y., Liu, Y., Cui, W., Guo, Y., Huang, H., and Hu, Z. (2020). Epileptic seizure detection in EEG signals using a unified temporal-spectral squeeze-and-excitation network. IEEE Trans. Neural Syst. Rehabil. Eng. 28, 782–794. doi: 10.1109/TNSRE.2020.2973434

Lima, C. A. M., and Coelho, A. L. V. (2011). Kernel machines for epilepsy diagnosis via EEG signal classification: A comparative study. Artif. Intell. Med. 53, 83–95. doi: 10.1016/j.artmed.2011.07.003

Liu, Z. B., Qu, W. Y., Liu, W. J., Li, Z. Y., and Xu, Y. J. (2015). Resource preprocessing and optimal task scheduling in cloud computing environments. Concurrency Computat. Pract. Exper. 27, 3461–3482. doi: 10.1002/cpe.3204

Morgan, V. L., Price, R. R., Arain, A., Modur, P., and Abou-Khalil, B. (2004). Resting functional MRI with temporal clustering analysis for localization of epileptic activity without EEG. NeuroImage 21, 473–481. doi: 10.1016/j.neuroimage.2003.08.031

Oren, N., Sagie, B., and Lior, W. (2021). “Permuted AdaIN: reducing the bias towards global statistics in image classification.” in 2021 IEEE/CVF conference on computer vision and pattern recognition (CVPR).

Rakhade, S. N., and Jensen, F. E. (2009). Epileptogenesis in the immature brain: emerging mechanisms. Nat. Rev. Neurol. J. 5, 380–391. doi: 10.1038/nrneurol.2009.80

Ralph, G. A., Kaspar, S., and Christian, R. (2012). Nonrandomness, nonlinear dependence, and nonstationarity of electroencephalographic recordings from epilepsy patients. Phys. Rev. E. 86:046206. doi: 10.1103/PhysRevE.86.046206

Rasheed, K., Qayyum, A., Qadir, J., Sivathamboo, S., Kwan, P., Kuhlmann, L., et al. (2021). Machine learning for predicting epileptic seizures using EEG signals: a review. IEEE Rev. Biomed. Eng. 14, 139–155. doi: 10.1109/RBME.2020.3008792

Rezaei-Dastjerdehei, M.R., Mijani, A., and Fatemizadeh, E. (2020). “Addressing imbalance in multi-label classification using weighted Cross entropy loss function.” in 27th national and 5th international Iranian conference on biomedical engineering (ICBME). pp. 333–338.

Sharma, R., Pachori, R. B., and Acharya, U. R. (2015). Application of entropy measures on intrinsic mode functions for the automated identification of focal electroencephalogram signals. Entropy 17, 669–691. doi: 10.3390/e17020669

Staljanssens, W., Strobbe, G., Holen, R. V., Birot, G., Gschwind, M., Seeck, M., et al. (2017). Seizure onset zone localization from ictal high-density EEG in refractory focal epilepsy. Brain Topogr. 30, 257–271. doi: 10.1007/s10548-016-0537-8

Stufflebeam, S. M., Liu, H., Sepulcre, J., Tanaka, N., Buckner, R. L., and Madsen, J. R. (2011). Localization of focal epileptic discharges using functional connectivity magnetic resonance imaging. J. Neurosurg. 114, 1693–1697. doi: 10.3171/2011.1.JNS10482

Sui, L., Zhao, X., Zhao, Q., Tanaka, T., and Cao, J. (2021). Hybrid convolutional neural network for localization of epileptic focus based on iEEG. Neural Plast. 2021:6644365. doi: 10.1155/2021/6644365

Versaci, M., Angiulli, G., Foresta, F. L., Crucitti, P., Laganá, F., Pellicanó, D., et al. (2022). Innovative soft computing techniques for the evaluation of the mechanical stress state of steel plates. Appl. Intellig. Inform. 1724, 14–28. doi: 10.1007/978-3-031-24801-6_2

Wan, Z., Cheng, W., Li, M., Zhu, R., and Duan, W. (2023b). GDNet-EEG: an attention-aware deep neural network based on group depth-wise convolution for SSVEP stimulation frequency recognition. Front. Neurosci. 17:1160040. doi: 10.3389/fnins.2023.1160040

Wan, Z., Li, M., Liu, S., Huang, J., Tan, H., and Duan, W. (2023a). EEGformer: a transformer-based brain activity classification method using EEG signal. Front. Neurosci. 17:1148855. doi: 10.3389/fnins.2023.1148855

Yang, Y., Li, F., Qin, X., Wen, H., Lin, X., and Huang, D. (2023b). Feature separation and adversarial training for the patient-independent detection of epileptic seizures. Front. Comput. Neurosci. 17:1195334. doi: 10.3389/fncom.2023.1195334

Yang, Y., Qin, X., Wen, H., Li, F., and Lin, X. (2023a). Patient-specific approach using data fusion and adversarial training for epileptic seizure prediction. Front. Comput. Neurosci. 17:1172987. doi: 10.3389/fncom.2023.1172987

Yaroslav, G., Evgeniya, U., Hana, A., Pascal, G., Hugo, L., François, L., et al. (2016). Domain-adversarial training of neural networks. J. Mach. Learn. Res. 17, 2096–2030. doi: 10.48550/arXiv.1505.07818

Zhang, Q., Ding, J., Kong, W., Liu, Y., Wang, Q., and Jiang, T. (2021). Epilepsy prediction through optimized multidimensional sample entropy and Bi-LSTM. Biomed. Signal Process. Control. 64:102293. doi: 10.1016/j.bspc.2020.102293

Zhang, C. H., Lu, Y., Brinkmann, B., Welker, K., Worrell, G., and He, B. (2015). Lateralization and localization of epilepsy related hemodynamic foci using presurgical fMRI. Clin. Neurophysiol. 126, 27–38. doi: 10.1016/j.clinph.2014.04.011

Zhao, X., Solé-Casals, J., Zhao, Q., Cao, J., and Tanaka, T. (2021). “Multi-feature fusion for epileptic focus localization based on tensor representation.” in 2021 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC). pp. 1323–1327.

Zhao, X., Sui, L., Tanaka, T., Cao, J., and Zhao, Q. (2020). “Epileptic focus localization based on iEEG plot images by using convolutional neural network.” in The 12th international conference on bioinformatics and computational biology. 70: 173–181.

Keywords: epileptic focus localization, multi-modal EEG, transfer learning, adversarial training, patient-independent

Citation: Yang Y, Li F, Luo J, Qin X and Huang D (2023) Epileptic focus localization using transfer learning on multi-modal EEG. Front. Comput. Neurosci. 17:1294770. doi: 10.3389/fncom.2023.1294770

Edited by:

Zhijiang Wan, Nanchang University, China

Reviewed by:

Mario Versaci, Mediterranea University of Reggio Calabria, Italy

Hai Tan, Nanjing Audit University, China

Guoqiang Li, Linyi University, China

Copyright © 2023 Yang, Li, Luo, Qin and Huang. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yong Yang, yangyong@cigit.ac.cn; Feng Li, 954036024@qq.com
