Spatiotemporal Patterns of Adaptation-Induced Slow Oscillations in a Whole-Brain Model of Slow-Wave Sleep

- 1Department of Software Engineering and Theoretical Computer Science, Technische Universität Berlin, Berlin, Germany

- 2Bernstein Center for Computational Neuroscience Berlin, Berlin, Germany

- 3Department of Neurology, University Medicine, Greifswald, Germany

- 4German Center for Neurodegenerative Diseases, Greifswald, Germany

During slow-wave sleep, the brain is in a self-organized regime in which slow oscillations (SOs) between up- and down-states travel across the cortex. While an isolated piece of cortex can produce SOs, the brain-wide propagation of these oscillations are thought to be mediated by the long-range axonal connections. We address the mechanism of how SOs emerge and recruit large parts of the brain using a whole-brain model constructed from empirical connectivity data in which SOs are induced independently in each brain area by a local adaptation mechanism. Using an evolutionary optimization approach, good fits to human resting-state fMRI data and sleep EEG data are found at values of the adaptation strength close to a bifurcation where the model produces a balance between local and global SOs with realistic spatiotemporal statistics. Local oscillations are more frequent, last shorter, and have a lower amplitude. Global oscillations spread as waves of silence across the undirected brain graph, traveling from anterior to posterior regions. These traveling waves are caused by heterogeneities in the brain network in which the connection strengths between brain areas determine which areas transition to a down-state first, and thus initiate traveling waves across the cortex. Our results demonstrate the utility of whole-brain models for explaining the origin of large-scale cortical oscillations and how they are shaped by the connectome.

Introduction

Slow oscillations (SOs) are a hallmark of slow-wave sleep (SWS), during which neuronal activity slowly (<1 Hz) transitions between up-states of sustained firing and down-states in which the neurons remain almost completely silent (Steriade et al., 1993; Neske, 2016). During SWS, large cortical networks collectively depolarize and hyperpolarize, producing high-amplitude oscillations of the local field potential which can be measured in electroencephalography (EEG) (Massimini et al., 2004). These oscillations play a crucial role for memory consolidation during deep sleep (Diekelmann and Born, 2010).

Intracranial in-vivo recordings (Nir et al., 2011) of the human brain, as well as EEG (Vyazovskiy et al., 2011; Malerba et al., 2019), show that SOs can cover a wide range of participation of brain areas. While the majority of SOs remain locally confined in a few brain regions, some can recruit the entire brain. They preferably originate in anterior parts and propagate to posterior parts of the cortex, like a traveling wave (Massimini et al., 2004; Nir et al., 2011; Mitra et al., 2015; Malerba et al., 2019). In-vitro recordings of isolated cortical tissue (Sanchez-Vives and McCormick, 2000; Capone et al., 2019) demonstrate that SOs can be generated in the absence of any external neural inputs. Taken together, these observations support the idea that SOs are generated locally in an individual brain region, while the synchronized propagation of SOs across the cortex is shaped by the global structure of the human connectome.

While not all details of the cellular processes underlying SOs are known, hyperpolarizing spike-frequency adaptation currents, mediated by activity-dependent potassium concentrations, are thought to play a major role in terminating the up-state (Sanchez-Vives and McCormick, 2000; Neske, 2016). This mechanism has been explored in models of isolated cortical masses where activity-dependent adaptation lead to slow oscillations between high-activity up-states and a low-activity down-states (Tartaglia and Brunel, 2017; Cakan and Obermayer, 2020; Nghiem et al., 2020).

In this paper, we address the question of how brain-wide spatiotemporal SO patterns emerge using a large-scale network model of the human brain during SWS. Whole-brain models have been shown to be capable of reproducing functional resting-state patterns of the awake brain (Deco et al., 2011; Breakspear, 2017) from functional magnetic resonance imaging (fMRI) (Deco et al., 2009; Cabral et al., 2017), EEG (Endo et al., 2020) and MEG (Cabral et al., 2014; Deco et al., 2017). While a pioneering study (Deco et al., 2014) showed that a whole-brain model with adaptation-induced SOs can be fitted to resting-state fMRI, following modeling work did not include SO activity (Jobst et al., 2017; Ipiña et al., 2020) and, thus, did not explore the spatiotemporal activity patterns present during slow-wave sleep on a brain-wide scale.

To address the emergence of brain-wide SO activity during sleep, we construct a whole-brain model in which cortical brain areas are represented by a mean-field neural mass model (Augustin et al., 2017; Cakan and Obermayer, 2020) with a bistability of up- and down-states. Adding a local spike-frequency adaptation mechanism to each brain area induces transitions between these states which in turn affect the global brain dynamics. A state-space analysis of the whole-brain model reveals several possible dynamical attractors of the system. To determine optimal model parameters, and hence, the optimal location of the model in state space, we then use a multi-objective evolutionary optimization framework to fit the whole-brain model to resting-state fMRI and sleep EEG recordings simultaneously. This procedure identifies an operating point in which the whole-brain model produces realistic sleep-like SO activity, which we characterize in detail.

Specifically, we find that N3 sleep EEG power spectra are only accurately reproduced if spike-frequency adaptation is included and the adaptation strength is at values close to the boundary of the bifurcation to a state of globally synchronous and self-sustained SOs. Only in this regime, a continuum of locally confined and globally synchronous SOs can be observed, which is consistent with reports of in-vivo SO statistics during SWS (Massimini et al., 2004; Nir et al., 2011; Kim et al., 2019; Nghiem et al., 2020) and can therefore be considered as a condition for creating a realistic model of whole-brain SOs. In line with these experiments, we also find that local oscillations are shorter, more frequent, and have a lower amplitude, compared to global ones.

Global oscillations that involve multiple brain areas travel as "waves of silence" across the cortex with a preferred origin of waves in prefrontal areas. The heterogeneous distribution of connection strengths in the human connectome guides the propagation of these waves from anterior to posterior regions. Finally, we explore the role of spike-frequency adaptation of the underlying neurons in affecting brain-wide SO statistics, a mechanism which is subject to cholinergic modulation when the real brain falls asleep (McCormick and Williamson, 1989; Nghiem et al., 2020).

Our results demonstrate the utility of whole-brain models for representing a wider range of brain dynamics beyond the resting-state, which highlights their potential for elucidating the origin and the dynamical properties of large-scale brain oscillations in general.

Results

Neural Mass Model of a Cortical Brain Region

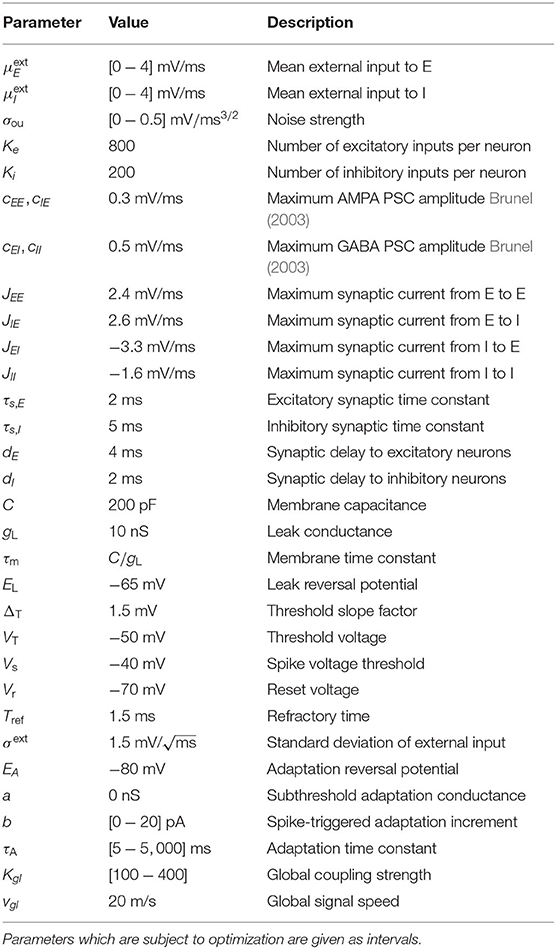

A whole-brain network model is constructed by combining a model of an isolated cortical region with information regarding the structural connectivity of the human brain (Figure 1). A mean-field neural mass model (Augustin et al., 2017; Cakan and Obermayer, 2020) derived from populations of excitatory (E) and inhibitory (I) adaptive exponential integrate-and-fire (AdEx) neurons represents a single cortical region as a node in the whole-brain network. The neural mass model has been previously validated against detailed simulations of spiking neural networks (Augustin et al., 2017; Cakan and Obermayer, 2020). The excitatory subpopulations of every brain area are equipped with an activity-dependent adaptation mechanism.

Figure 1. Construction of the whole-brain model. Structural connectivity is obtained from probabilistic DTI tractography, and the nodes of the brain network are defined by 80 cortical regions of the AAL2 atlas. The neural mass model represents a single brain area and consists of an excitatory (red) and an inhibitory (blue) population. Long-range connections between areas are via the excitatory populations. All nodes receive a background input currents with means and noise variance σou. The excitatory firing rate of each region is converted to a BOLD signal using the hemodynamic Balloon-Windkessel model. For model optimization, the power spectrum of the average excitatory firing rate is compared to the mean EEG power spectrum during sleep stage N3. The functional connectivity (FC) and its temporal dynamics (FCD) are compared to empirical FC and FCD matrices. For details see Methods.

For a sparsely connected random network of N → ∞ AdEx neurons, the distribution p(V) of membrane potentials and the mean population firing rate rα of population α ∈ {E, I} can be calculated using the Fokker-Planck equation (Brunel, 2000). Here, we use a low-dimensional linear-nonlinear cascade model (Fourcaud-Trocmé et al., 2003; Ostojic and Brunel, 2011) of the Fokker-Planck equation which captures its steady-state and transient dynamics via a set of ordinary differential equations and nonlinear transfer functions Φ(μα, σα) with the mean membrane current μα and standard deviation σα.

Model Equations

Every brain area is represented by a node which consists of an excitatory (E) and inhibitory (I) population. For every node, the dynamics of the mean membrane currents are governed by the differential equation:

Here, μα describes the total mean membrane currents, the currents induced by synaptic activity from internal connections within a brain area and external connections from other brain areas, represents the currents from external input sources, and represents an external noise source which is described by an Ornstein-Uhlenbeck process with standard deviation, i.e., noise strength, σou, simulated independently for each subpopulation α. The synaptic currents depend on the structural connectivity, i.e., the connection strengths and delays between brain regions, and the global coupling strength parameter Kgl which scales the strengths of all connections globally. The input-dependent adaptive timescale τα = Φτ(μα), the mean membrane potential , and the instantaneous population spike rate rα = Φr(μα) of every subpopulation are determined at every time step using precomputed transfer functions (Supplementary Figure 1).

Every excitatory subpopulation is equipped with a somatic spike-frequency adaptation mechanism. The population mean of the adaptation currents ĪA is given by Augustin et al. (2017)

The adaptation currents effectively act like an additional inhibitory membrane current, i.e., Φr,τ,V(μα − ĪA/C). The full set of model equations, including the synaptic equations and the equations for the second statistical moments of all dynamical variables are provided in detail in the Methods section.

State-Space Description of the Whole-Brain Model

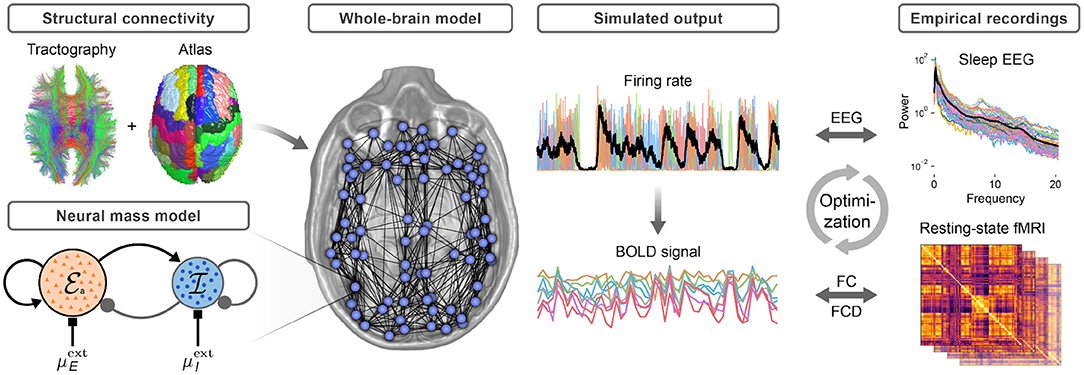

Figure 2 a shows the state space of an isolated E-I node and the coupled whole-brain network in terms of the mean background input currents to all E and I subpopulations. The state space of the whole-brain model is closely related to the state space of the isolated E-I system. The oscillatory and the bistable states of the brain network are inherited from the isolated nodes and the transitions between states take place at similar locations in parameter space. Due to the heterogeneous connection strengths for different brain regions, in the whole-brain model, transitions between the depicted states happen gradually for one brain region at a time as the input currents to all brain regions are increased simultaneously. However, the range of the input current strengths at which these gradual transitions happen are so small such that when noise is added to the system, we only observe homogeneous states across all areas (see the Methods section for a more detailed discussion).

Figure 2. State space of the brain network. (A) Single node (top row) and the whole-brain network (bottom row) without (b = 0 pA, left column) and with spike-frequency adaptation (b = 20 pA, right column). Horizontal and vertical axes denote the mean input to the excitatory (E), , and to the inhibitory (I) populations, . Colors denote the maximum firing rate rE of all E populations. Regions of low-activity down-states (down) and high-activity up-states (up) are indicated. Dashed green contours indicate bistable (bi) regions where both states coexist. Solid white contours indicate oscillatory states with fast E-I (LCEI) and slow excitation-adaptation (LCEA) limit cycles. (B) Time series of the firing rate rE (black) and the adaptation current IA (red) of one node (left precentral gyrus) in the whole-brain network at several locations in the state space: (1) Bistable up- and down-states are reached through a decaying stimulus (blue) that is delivered to all nodes. (2) Finite adaptation causes slow oscillations in the LCEA region. (3) When external noise is added, fast oscillations already occur outside but close to the LCEI region. (4) With adaptation, fast oscillations are slowly modulated. (5) Noise-induced down-to-up transitions with adaptation occur close to the LCEI region. (6) Up-to-down transitions with down-states shown as shaded areas. Parameters are Kgl = 300, τA = 600ms, σou = 0 mV/ms3/2 in (A, b1, b2), and σou = 0.1 mV/ms3/2 else. , of marked locations are 1: (2.3, 2.8), 2: (2.5, 2.0), 3: (0.3, 0.5), 4: (0.4, 0.5), 5: (2.6, 2.5), and 6: (2.5, 2.0) mV/ms. All other parameters are given in Table 1.

Without adaptation, the system can occupy several dynamical states, depending on the mean external inputs to E and I: a down-state with almost no activity, an up-state with a constant high firing rate corresponding to an irregular asynchronous firing state on the microscopic level (Brunel, 2000; Cakan and Obermayer, 2020), a bistable region where these two fixed-point states coexist (Figure 2B1), and a fast oscillatory limit-cycle region LCEI (Figure 2B3) that arises from the coupling of the E and I subpopulations with frequencies between 15 and 35 Hz. Without noise, oscillations in different brain areas are at near-perfect synchrony with only the inter-areal signal transmission delays and slight differences in oscillation frequency counteracting perfect synchrony.

Adaptation, Bistability, and Slow Oscillations

The activity-dependent adaptation mechanism in the excitatory subpopulations lead to hyperpolarizing currents that destabilize the high-activity up-state in the bistable regime. As we increase the adaptation strength, a Hopf bifurcation (Tartaglia and Brunel, 2017; Cakan and Obermayer, 2020) gradually replaces the bistable regime with a second limit cycle LCEA (Figure 2B2,4) that produces slow oscillations between the (formerly stable) up- and the down-state. The oscillation frequencies range from around 0.5–2 Hz, depending on the external inputs and the adaptation parameters. As with the fast limit cycle, when the whole-brain network is parameterized in the slow adaptation limit cycle, without noise, the resulting oscillations are at near-perfect synchrony across all brain regions.

The feedback mechanism leading to this oscillation can be summarized as follows: Without adaptation, in the region of bistability, the up- and down-states remain stable for an arbitrarily long time. With adaptation, the high firing rate in the up-state leads to an increase of the adaptation currents (controlled by the adaptation strength parameter b). These inhibitory currents weaken the response of active neurons, which causes the population activity to decay to the down-state. In the down-state, the activity-driven adaptation currents decrease (with a slow timescale τA) until the recurrent excitation is strong enough to drive the system back to the up-state, ultimately causing a slow oscillation between the two originally bistable states.

Therefore, when the system is placed in the bistable region, the interplay of the adaptation strength b, the adaptation timescale τA, the strength of mean external inputs, and fluctuations in the form of external noise can lead to stochastic switching between up- and down-states (Figure 2B5,6). The resulting pattern of state transitions resembles the bistable neural activity often observed experimentally and in computational models (Sanchez-Vives and McCormick, 2000; D'Andola et al., 2018; Capone et al., 2019; Nghiem et al., 2020).

Multi-Objective Evolutionary Optimization

Key parameters such as the mean external inputs and , the global coupling strength Kgl, the adaptation strength b, the adaptation time scale τA, and the strength of the external noise σou determine the system's dynamics. We perform an evolutionary optimization on all of these parameters simultaneously in order to produce a model with an optimal fit to empirical brain recordings.

Evolutionary algorithms are stochastic optimization methods that are inspired by the mechanisms of biological evolution and natural selection. The use of a multi-objective optimization method, such as the NSGA-II algorithm (Deb et al., 2002), is crucial in a setting in which a model is fit to multiple independent targets or to data from multiple modalities. In our case, these are features from fMRI and EEG recordings. In a multi-objective setting, not one single solution but a set of solutions can be considered optimal, called the Pareto front, which refers to the set of solutions that cannot be improved in any one of the objectives without diminishing its performance in another one.

For each parameter configuration, a goodness of fit is determined between the simulated model output and the empirical dataset. The simulated BOLD functional connectivity (FC) and the functional connectivity dynamics (FCD) matrices are compared to the empirical fMRI data. While fMRI recorded during deep sleep would be preferable, only awake resting-state fMRI recordings were available. However, as we elaborate in more detail in the Discussion section, fMRI FC recorded during sleep and awake show a remarkable similarity justifying the use of resting-state fMRI for the purpose of the present study. The frequency spectrum of the simulated firing rate is compared to EEG power spectra recorded during sleep stage N3. Fitness scores are then averaged across all subjects in the empirical dataset.

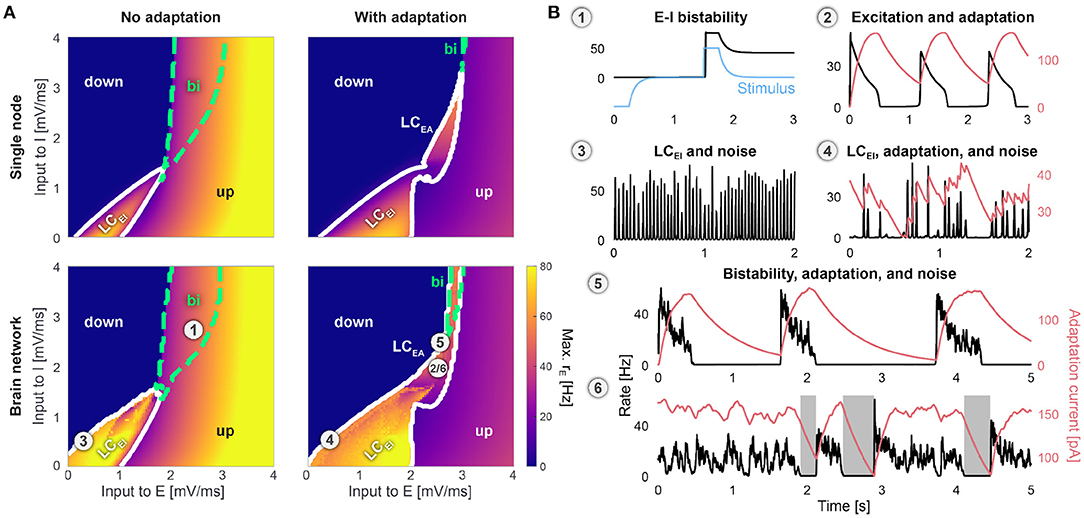

The optimization is carried out in incremental steps such that the contribution of each step can be assessed individually. The model is first fit to fMRI data only and the optimization is carried out without adaptation (b = 0) and with adaptation (b ≥ 0, τA are allowed to vary) separately. The quality of the fitting results to fMRI data is comparable to previous works (Cabral et al., 2017; Jobst et al., 2017; Demirta et al., 2019) (Supplementary Figure 3B). We then include EEG data as an additional objective in the optimization to derive models with realistic firing rate power spectra. The resulting parameters that produce a good fit to either optimization scheme are shown in Figure 3B and Supplementary Figure 3A. Details on the optimization procedure are provided in the Methods.

Figure 3. Optimization results. (A) Regions of good fits in the multi-objective optimization. Shown are fMRI fits with FC correlation > 0.35 with the empirical data, FCD fits with KS distance < 0.5, and EEG fits with a power spectrum correlation > 0.7. fMRI fits are shown without adaptation (bright green) and with adaptation (dark green). All EEG fits with down-to-up (blue) and up-to-down oscillations (red) are shown separately. Lines indicate boundaries between the four different dynamical states of the brain network in Figure 2A with b = 0pA (top panel) and 20pA (bottom panel). (B) Best values of input current parameters to the E and I populations. The colors represent the results from different optimization setups. Good fMRI-only fits appear at the state transition line of the fast limit cycle LCEI without adaptation, b = 0pA (bright green) and close to the bistable region with adaptation b ≥ 0pA (dark green). Good fMRI+EEG fits are found close to the adaptation limit cycle LCEA (blue and red). The star symbol indicates the parameters of the sleep model in Figure 4. (C–F) Example average firing rate time series of good fits for all different optimizations. (C) Fit to fMRI data only with b = 0pA (D) and with adaptation b ≥ 0pA. Fit to fMRI+EEG reveals two classes of solutions (E) with long down-states and (F) with in-vivo-like long up-states. All parameters are given in Table 1.

Fit to Empirical Data: Up-to-Down and Down-to-up Oscillations

Without adaptation, the region of good fMRI fits lies close to the line that marks the transition from a silent down-state to the fast E-I limit cycle LCEI (Figure 3A, top panel and Figure 3B, bright green dots). The activity in this region shows noisy oscillations with frequencies between 15 and 35 Hz and brief excursions to the silent down-state (Figure 3C).

The region of good EEG fits, however, lies in the bistable regime at the boundary to the down-state. Here, noise-induced transitions between up- and down-states produce low-frequency components necessary for a good fit to the empirical power spectrum. Therefore, when no adaptation is present, the regions of good fit to fMRI and EEG are disjoint in state space making it impossible to produce a model that fits to both data modalities simultaneously.

With adaptation, however, the bistable region is replaced by a new oscillatory limit cycle LCEA around which new regions of good fMRI fits appear (Figure 3A, bottom panel). In these solutions, the firing rates slowly oscillate between up- and down-states (Figures 3D–F). The power spectrum is dominated by this slow oscillation with a 1/f-like falloff, similar to the empirical EEG power spectrum during SWS (Supplementary Figure 5). It should be noted that this 1/f-like falloff is not caused by the noise input to the system alone, since it is not present when the system is parameterized in the fast limit cycle, for example, but originates from the slow transitions between up- and down-states with a stochastic frequency and state duration.

As a result of the optimization, two classes of good solutions that simultaneously fit well to fMRI and EEG data can be observed: In down-to-up solutions (Figures 2B5, 3E), all nodes remain silent for most of the time and the network exhibits short and global bursts of up-state activity. In up-to-down solutions (Figures 2B6, 3F), however, the activity of the network is in the up-state most of the time and slow oscillations are caused by irregular transitions to the down-state with a varying degree of synchrony across brain areas. Compared to down-to-up solutions, up-to-down solutions require stronger external inputs to the excitatory populations (medians 3.36 vs. 2.73 mV/ ms, Figure 3B), stronger noise σou (0.36 vs. 0.27 mV/ ms 3/2, Supplementary Figure 3A), and a weaker adaptation b (3.8 vs. 9.9 pA).

Sleep Model

Tonic firing with irregular transitions to the down-state is indeed typically observed during deep sleep in intracranial in-vivo recordings of humans and other mammals (Vyazovskiy et al., 2009; Nir et al., 2011). To present the results from the optimization, we randomly pick one of the parameter configurations from the set of up-to-down solutions that resulted from the evolutionary optimization (star in Figure 3B and Supplementary Figure 3A) as our reference sleep model. This model was randomly selected from a uniform distribution of all candidate up-to-down fits (red dots in Figure 3B). We did not consider the down-to-up fits since they show mostly down-state activity. To ensure that the chosen model is representative for the remaining up-to-down candidates, we confirmed the results reported below for the 100 best up-to-down individuals resulting from the evolution (Supplementary Figure 6).

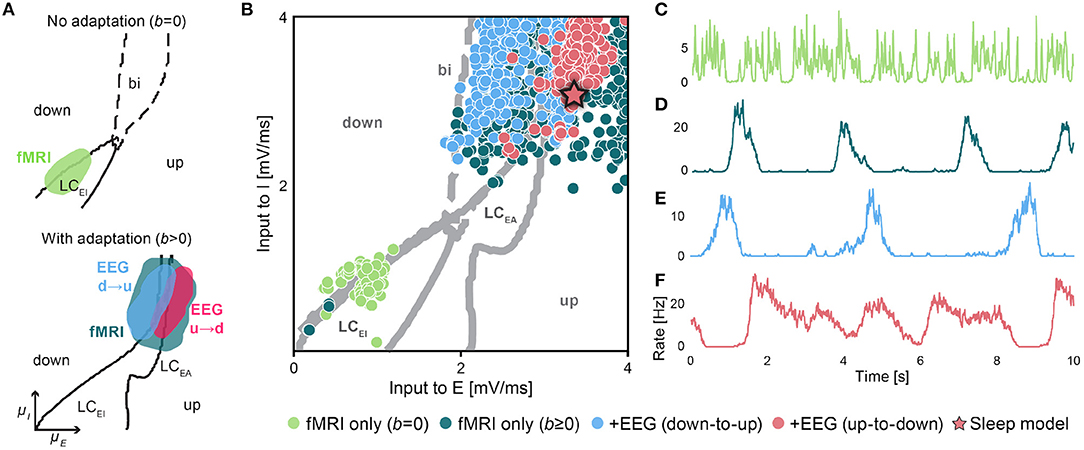

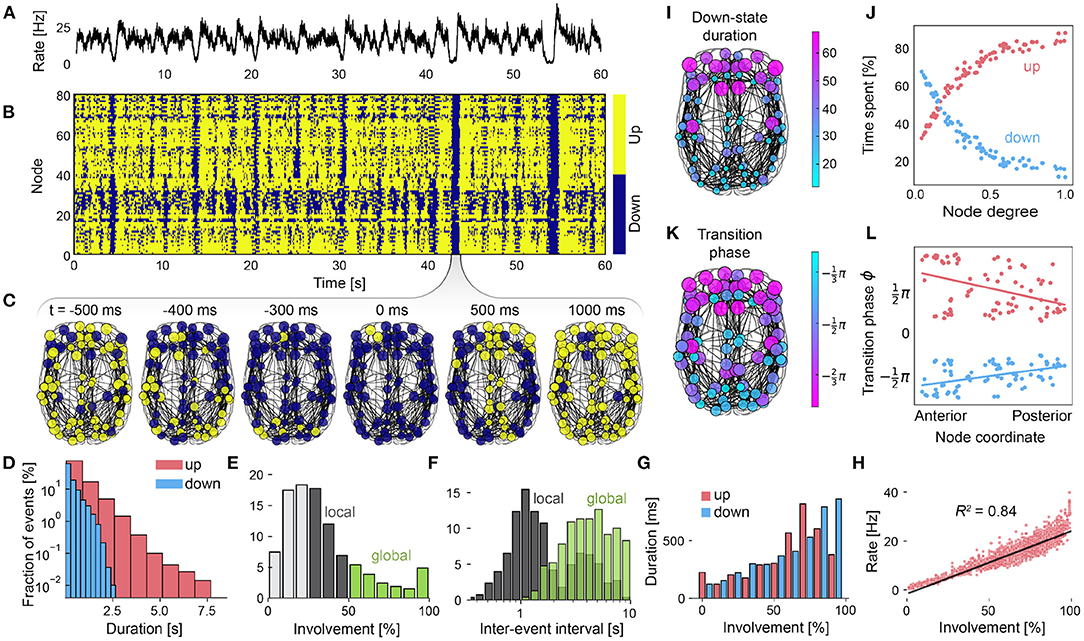

In Figure 4A, SOs are visible in the average firing rate across all brain areas. In the corresponding node-wise state time series in Figure 4B, global oscillations that involve the entire brain (cf. Figure 4C) are visible as vertical lines, and spatially confined oscillations appear as small vertical patches.

Figure 4. Dynamics of the whole-brain sleep model. (A) Firing rate averaged across all nodes of the whole brain network. (B) State time series of all nodes with active up- (yellow) and silent down-states (blue). (C) Snapshots of state time series plotted on the brain network centered around a global slow wave at t=43 s. (D) The durations of up- (red) and down-states (blue) are exponentially distributed. (E) Distribution of the down-state involvement which measures the fraction of participating brain regions. (F) Inter-event interval distributions of local (gray) and global oscillations (green). (G) Mean state durations depend on the involvement in up- and down-states. (H) The excitatory firing rate depends on the up-state involvement of brain regions. Every dot represents a time point of the simulation. Linear regression line is shown in black with regression coefficients R2 = 0.84, p < 0.001. (I) Mean down-state duration of areas. Color indicates relative time spent in the down-state. (J) State durations depend on each brain area's degree. (K) Mean of the up-to-down transition phases of all areas (relative to the global oscillation phase ϕ, see Methods). Down-states are preferably initiated in anterior areas. (L) Down-state transition phases are positively correlated with the anterior-posterior coordinates of brain areas, i.e., they travel from anterior to posterior regions. Up-state transitions behave in an opposite manner. Parameters are , , b = 3.2pA, τA = 4765ms, Kgl = 265, . All other parameters are given in Table 1.

Up- and down-state durations follow a close to exponential distribution with up-states having longer durations overall (Figure 4D), which is in agreement with human in-vivo data (Nghiem et al., 2020). The involvement of brain areas in down-state oscillations, i.e., the proportion of nodes that simultaneously take part in the down-state, is skewed toward lower values (Figure 4E), meaning that most oscillations remain local. Only a small fraction of down-state oscillations involve the whole brain, similar to statistics from human intracranial recordings (Nir et al., 2011). The mean down-state involvement of all brain regions was 32% with 83% of oscillations involving less than half of the brain. Comparing the inter-event intervals (Figure 4F) of local (e.g., oscillations that involve between 25 and 50% of areas) and global oscillations (involvement above 50%), we see that local oscillations happen more frequently than global oscillations, which was also observed experimentally (Kim et al., 2019).

Down-states last longer, when more brain areas are involved (Figure 4G), i.e., when areas receive less input on average. Due to the adaptation mechanism of excitatory populations, up-states follow a non-monotonic relationship: when more than 65% of areas are involved, excessive excitation leads to a faster increase of adaptation currents which, in turn, shorten up-states. The firing rates across all regions also depend on the involvement of brain areas (Nir et al., 2011) which means that the more nodes participate, the larger the average firing rate amplitudes of SOs become (Figure 4H). This relation holds for the average firing rate across all areas, as well the firing rates of individual brain areas, as each of them receives more input when other brain areas are in the up-state as well (not shown). In summary, due to the interactions of different brain areas, global oscillations are longer, slower, and have a larger amplitude compared to local ones.

In our computational model, the dynamics of SOs and their propagation across the cortex are shaped by the structural properties of the connectome. Since anterior regions have a lower node degree compared to posterior ones (Supplementary Figure 2E), areas which spent the most time in the down-state are part of the frontal and the temporal lobe (Figure 4I and Supplementary Figure 7A). Nodes with a higher degree receive stronger inputs from other areas, lengthening the time spent in up-states and shortening down-states (Figure 4J).

Traveling Waves of Silence

SOs are known to appear as traveling waves which tend to originate in frontal regions and travel to posterior parts of the brain within a few 100 ms, recruiting multiple brain regions during their propagation (Massimini et al., 2004; Nir et al., 2011). Our next goal is to determine whether our model displays a directionality in the propagation of SOs. Since the only differences between brain areas are due to their connectivity, network properties are expected to play an important role in how oscillations propagate.

Figure 4K shows the average timing of the individual up-to-down transitions for every brain area with respect to the global down-state measured by a transition phase as determined from the involvement time series (see Methods and Supplementary Figures 8A,B). The phases for both up-to-down and down-to-up transitions strongly correlate with the brain areas coordinates along the anterior to posterior axis (Figure 4L). Down-states tend to appear earlier in the anterior brain and propagate to posterior areas (Figure 4L, blue line. Linear regression slope m = 3.6 × 10−3, R = 0.46, p < 10−3). Subsequent transitions to the up-state happen earlier in posterior and later in anterior areas (Figure 4L, red line, m = −6.0 × 10−3, R = −0.41, p < 10−3). Brain areas that transition to the down-state last, tend to initiate up-states first (Supplementary Figure 8C), leading to a reversed down-to-up wave front propagation. The respective mean transition phases of all regions are shown in Supplementary Figure 7B.

Adaptation and Noise Determine State Statistics

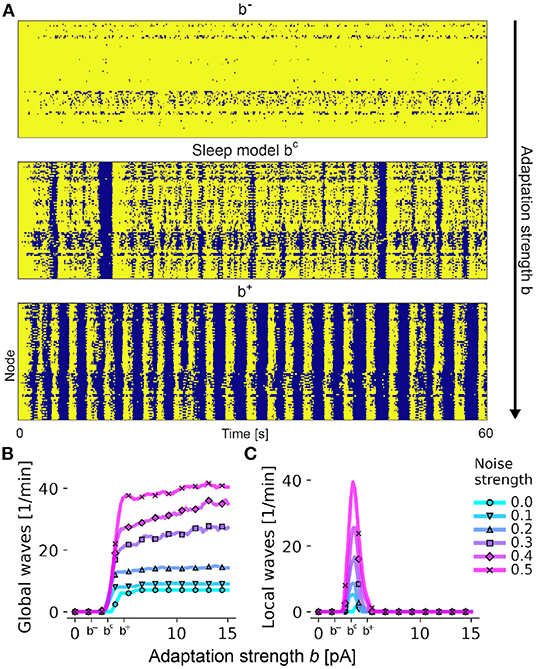

The interplay of noise and adaptation, which are both subject to cholinergic modulation in the brain (Nghiem et al., 2020), determines whether SOs remain local or recruit the entire cortex, greatly affecting the SO statistics. Taking the sleep model of Figure 4 with intermediate adaptation strength b = 3.2 pA as a reference, a reduction of the adaptation strength by 50% (b = 1.6 pA) leads to the disappearance of global oscillations (Figure 5A). On the contrary, higher adaptation strengths (b = 4.8 pA) lead to maximum involvement of brain areas for almost every SO. This transition is accompanied by an increase of synchrony across all brain areas (Supplementary Figure 9B).

Figure 5. Adaptation shapes the spatial pattern of slow-wave activity. (A) State time series across all nodes in the brain network, with up-states in bright yellow and down-states in dark blue. Values for the adaptation strength b from the top panel to the bottom panel are 1.6, 3.2, and 4.8pA. (B) Number of global waves in which at least 50% of all brain regions participate in an SO down-state as a function of the adaptation strength b and the input noise strength σou (measured in mV/ms3/2, colored lines). Tick marks b−, bc, and b+ indicate values for each in (A) respectively. (C) Number of local waves per minute with an involvement of 25–50% of brain areas. All other parameters are as in Figure 4.

The adaptation strength b acts as a bifurcation parameter, and small adjustments around its threshold value cause a sudden change of the network's dynamics. Figure 5B shows how the number of global oscillations per minute quickly increases after a critical value bc close to 3.2 pA is crossed, which is equal to the adaptation strength of the optimized sleep model in Figure 4. Beyond the threshold, the number of global waves does not significantly increase. For values lower than bc, all nodes stay in the up-state indefinitely (Supplementary Figure 9A). Local waves are observed only around bc (Figure 5C), indicating that the system is only able to generate a wide range of local and global oscillations in this regime. Here, the system is in a state of maximum metastability (Supplementary Figure 9C) which is an indication for the emergence of complex dynamical patterns. All up-to-down solutions with a good fit to the empirical data are close to the threshold value bc (Supplementary Figure 3A).

Discussion

We presented a biophysically grounded human whole-brain model of slow-wave sleep (SWS) that reproduces the observed resting-state fMRI functional connectivity (FC) and its dynamics (FCD), and that captures the EEG frequency spectrum during SWS which is dominated by low frequencies. The model was fitted to multimodal data from fMRI and EEG by the use of a multi-objective evolutionary algorithm (Deb et al., 2002). Good fits to both measurements were only achieved if an activity-dependent adaptation mechanism to the excitatory subpopulations was included. At critical values of the adaptation strength, this resulted in a model in which the interplay of adaptation currents and noise creates a dynamically rich activity with irregular switching between up- and down-states, similar to the underlying brain activity of slow oscillations (SOs) during SWS (Massimini et al., 2004; Nir et al., 2011; Nghiem et al., 2020).

Comparison to Human SWS

As a result of the optimization procedure, two classes of well-fitting models emerged (Figure 3), which we named down-to-up and up-to-down solutions. Down-to-up solutions did not produce realistic in-vivo SO statistics, since here the simulated brain activity was silent for most of the time. These solutions were more similar to in-vitro recordings of SOs (Sanchez-Vives and McCormick, 2000; D'Andola et al., 2018) where down-states of longer duration than observed during in-vivo SWS (Nir et al., 2011; Nghiem et al., 2020) are interrupted by short bursts of up-state activity.

In up-to-down solutions, up-states were of longer duration and SOs were produced by transitions to down-states which represent brief off-periods in neuronal activity. Up-to-down solutions require stronger excitatory input currents, stronger noise fluctuations, and a weaker adaptation strength (Figure 3B and Supplementary Figure 3A), which all facilitate the initiation of the up-state and help sustain it. Only up-to-down solutions reproduced in-vivo SO statistics during SWS. Intracranial in-vivo data from humans (Nir et al., 2011; Nghiem et al., 2020) and other mammals (Holcman and Tsodyks, 2006; Vyazovskiy et al., 2009) show that up-states in the cortex are longer than down-states, i.e., that cortical activity is in a tonic and irregular firing state for the most time.

One explanation for this difference might be that the up-state transitions observed in-vivo are foremost driven by the convergence of external inputs to brain areas (Chauvette et al., 2010; Nir et al., 2011) and fluctuations thereof (Jercog et al., 2017), which are both absent in isolated in-vitro tissue. This is supported by our modeling results where external mean inputs and noise strengths were key parameters separating both classes.

Balance Between Local and Global Oscillations at Critical Values of the Adaptation Strength

Up-to-down transitions can remain local because transitions of individual brain regions have only a weak effect on other brain areas. Global up-to-down transitions happen only when the local adaptation feedback currents of a large fraction of brain areas synchronize. Down-to-up transitions, however, spread by exciting other brain areas in an all-or-nothing fashion. In fact, most up-to-down transitions remain local (Figure 4E), which is a well-described property of SOs (Nir et al., 2011; Vyazovskiy et al., 2011; Malerba et al., 2019).

Global waves last longer, have a higher amplitude (Supplementary Figures 8A,B), and occur less frequently (Figure 4F) than local waves. In the experimental literature these different characteristics of local and global waves have been used to discern delta waves from SOs (Kim et al., 2019). However, there is still no consensus on whether delta waves and SOs are generated by the same or a qualitatively different underlying neural mechanism (Dang-Vu et al., 2008). In the model, local oscillations have these properties because participating regions receive more input from neighboring areas. Here, oscillations with similar characteristics of delta waves do not emerge because of a different generating mechanism, but solely due to the fact that the individual brain areas are embedded in a network and that the model operates in a regime with a broad involvement distribution.

Only at these critical values bc of the adaptation strength parameter where the model is parameterized close but not inside the adaptation-driven low-frequency limit cycle a tight balance between local and global oscillations is maintained (Figures 5B,C). The best fits to the empirical data were found in this regime. This optimal working point also coincides with states in which the metastability of the whole-brain dynamics was maximal (Supplementary Figure 9C).

Slow Waves are Guided by the Connectome

In the model, up-to-down transitions can originate in many different brain areas and propagate in different directions. However, when averaged over many events, a preferred direction from anterior regions to posterior brain regions becomes evident. This is a well-known feature of SOs (Massimini et al., 2004; Nir et al., 2011; Mitra et al., 2015; Malerba et al., 2019) and emerges from the model without any specific adjustments.

While down-to-up transitions spread through excitatory coupling of brain areas, up-to-down transitions initiate periods of silence. In the latter case, these oscillations represent a "window of opportunity" in which other brain areas can transition to the down-state due to a relative lack of inputs. Consistent with prior studies (Hagmann et al., 2008), we measured a positive correlation between the coordinate of a brain region on the anterior-posterior axis and its in-degree (Supplementary Figure 2E). This means that frontal regions receive less input on average and, therefore, spend more time in the down-state, as was also observed experimentally (Malerba et al., 2019). As a consequence, up-to-down transitions tend to be initiated in frontal areas and spread more easily to other low-degree nodes, ultimately producing wave fronts that travel, on average, from front to back. A mirrored directionality for down-to-up transitions (Figure 4J and Supplementary Figure 8C) can be observed as well which is in agreement with a previous computational study (Roberts et al., 2019) that considered cortical waves as spreading activation only. Other heterogeneities that might affect wave propagation, such as a differential sensitivity of brain areas to neuromodulators (Kringelbach et al., 2020), have not been addressed here.

In summary, the increased likelihood of slow waves to be initiated in the prefrontal cortex and the directionality of propagation is determined by the structural properties of the whole-brain model alone. Hence, recognizing that slow waves are propagating as periods of silence explains the possibly unintuitive fact that, although frontal areas are not strongly connected, they become sources of global waves exactly due to their low network degree.

Underlying Neuronal Activity of Resting-State fMRI is Underdetermined

Our findings confirm other studies in that best fits to empirical data are found when the system is parameterized close to a bifurcation (Deco et al., 2013; Cabral et al., 2017; Jobst et al., 2017; Demirta et al., 2019) which usually separates an asynchronous firing state from an oscillatory state. In accordance with these studies, we find that low-gamma frequencies (25–35 Hz) can produce good fits to fMRI data. However, by including an adaptation mechanism, we also found good fits at the state transition to the slow limit cycle with very low oscillation frequencies (0.5–2 Hz). In some cases, a single up-state burst from an otherwise silent brain was enough to produce a good FC fit.

This shows that a wide range of parameters and oscillation frequencies can reproduce empirical resting-state (rs) fMRI patterns, primarily because BOLD models act like an infraslow (0.005–0.1 Hz) low-pass filter. The wide range of possible solutions ultimately poses the question which of these regimes is the most suitable candidate for the underlying neuronal activity of rs-fMRI. This problem is particularly evident in sleep. SOs during SWS are profoundly distinct from the tonic firing activity observed in the awake state. Strikingly, however, fMRI FCs during both states are very similar and differ primarily in the average strength of correlations between regions but not significantly in the spatial structure of the FC matrix itself (Dang-Vu et al., 2008; Mitra et al., 2015; Jobst et al., 2017). In agreement with another whole-brain modeling study with adaptive neurons (Deco et al., 2014), we observed that while the strength of adaptation has a profound effect on the global activity, it did not lead to significantly different FC patterns. FC matrices resulting from the optimization (Supplementary Figure 4A) were largely independent of adaptation strength (not shown) and produced good model fits in either case (Supplementary Figure 3B). Therefore, we think that it is justified to use awake rs-fMRI data for calibrating a whole-brain model of slow-wave sleep, as we do not expect a significant difference compared to fMRI recorded during sleep.

We conclude that it is necessary to incorporate data from faster modalities, such as MEG or EEG, when validating whole-brain models and that the use of fMRI data alone is not sufficient to constrain the vast space of possible models well enough.

Adaptation, Cholinergic Modulation, and Slow Oscillations

The emergence of SOs caused by activity-dependent adaptation has been thoroughly studied in the past in models of isolated cortical networks (Bazhenov et al., 2002; Jercog et al., 2017; Cakan and Obermayer, 2020; Nghiem et al., 2020), and our findings suggest that adaptation plays a major role in the organization of SOs across the whole brain. This mechanism for generating SOs should generalize to a wider class of cortical E-I population models, as long as a bistability between up- and down-states and a suitable activity-dependent adaptation mechanism is present, such as in Deco et al. (2014). This is further supported by the fact that a link of this bistable dynamics in large spiking networks with adaptive neurons and their respective mean-field representations was previously established (Tartaglia and Brunel, 2017; Cakan and Obermayer, 2020).

These modeling results are in line with experimental evidence that activity-dependent hyperpolarizing potassium conductances, which contribute to spike-frequency adaptation of pyramidal cells, are in fact responsible for the termination of up-states in the cortex (Neske, 2016). Furthermore, experiments show that increased levels of acetylcholine (ACh) effectively lead to a weakening of adaptation (McCormick and Williamson, 1989). Analogously, a higher ACh concentration corresponds to a lower adaptation strength b in our model, enabling cholinergic modulation of SO statistics.

The application of carbachol, a drug which causes a blockage of potassium channels and which is an agonist of ACh, can be used to transition a cortical slice from a sleep-like state with SOs to an awake-like state with tonic firing (D'Andola et al., 2018), same as predicted by the model (Supplementary Figure 9A). Indeed, in-vitro experiments have shown that carbachol application leads to a lengthening of up-states and a shortening of down-states (Nghiem et al., 2020). In line with this, endogenous ACh levels are found to be significantly higher during wakefulness and sharply decrease during SWS (Hasselmo, 1999) and, vice-versa, that the administration of carbachol enhances transitions from SWS to REM sleep (Carrera-Cañas et al., 2019).

In humans, the frequency with which SOs occur changes during a night of sleep and increases with the depth of the sleep stage, ranging from no SOs during the awake stage to around 20 SOs per minute in the deepest sleep stage (Massimini et al., 2004). Similar changes of SO event frequency and durations of up- and down-states were reported in rats (Vyazovskiy et al., 2009). Measuring changes of SO event frequency in the EEG, in addition to the analysis of the power spectrum could potentially provide a way to better calibrate whole-brain models to in-vivo data and to characterize transitions between sleep stages. One advantage of the evolutionary optimization method is that the fitness function can be extended to include these more specific features which could help determine differences in model parameters when fitted to multiple sleep stages independently, for instance.

The transitioning of neural dynamics from awake to deep sleep stages as a function of ACh concentrations was previously explored in models of single populations of thalamocortical networks (Bazhenov et al., 2002; Hill and Tononi, 2005; Krishnan et al., 2016). Combined with the experimental evidence of the significance of cholinergic modulation during sleep, this strongly suggests that the same principles can be applied on the level of the whole brain. In our model, increasing the adaptation strength b, which is akin to lowering ACh concentrations in the cortex, also leads to an increase of the number of global oscillations per minute (Figure 5B). This highlights the importance of the adaptation mechanism of individual neurons during the transitioning from superficial to deeper sleep stages where SOs are abundant and can involve the whole brain.

In accordance with this, it has been shown that transitions into deeper sleep stages that are accompanied by an increase of SO power also lead to a decrease of delta oscillation power (Rosenblum et al., 2001). A similar transition can be observed in the model where increased adaptation values lead to a replacement of faster local oscillations by slower global oscillations (Figure 5).

Outlook

Despite the abundance of macroscopic waves in the human cortex (Muller et al., 2018), computational models have only recently been used to study them on a whole-brain scale in order to understand how the connectome shapes these oscillations (Atasoy et al., 2016; Robinson et al., 2016; Roberts et al., 2019). Our work is a step in this direction, linking the emergence of cortical waves to the underlying neural mechanism that governs adaptation in the human brain during SWS. We have shown how the interplay of local properties of brain areas and the global connectivity of the brain shapes these oscillations. The present study also confirms that whole-brain models can represent a wider range of brain activity beyond the resting-state.

Apart from oscillations during SWS, which was the main focus of this paper, cortical waves can be observed in many other scenarios, for example evoked by external stimuli (Stroh et al., 2013), during sensory processing (Davis et al., 2020), and during the propagation of epileptic seizures (Proix et al., 2018). They can range from the mesoscopic (Muller et al., 2018) to the whole-brain scale (Burkitt et al., 2000; Mitra et al., 2015). Therefore, we have reason to believe that a whole-brain modeling approach, that builds on biophysically grounded and computationally efficient neural mass models, in combination with advanced optimization methods, can help to address questions about the origin and the spatio-temporal patterns of cortical waves in the healthy as well as the diseased human brain.

Methods

Neural-Mass Model

We construct a mean-field neural mass model of a network of coupled adaptive exponential integrate-and-fire (AdEx) neurons. The neural mass model (Augustin et al., 2017) has been previously validated against detailed simulations of spiking neural networks (Cakan and Obermayer, 2020). The AdEx model successfully reproduces the sub- and supra-threshold voltage traces of single pyramidal neurons found in cerebral cortex (Jolivet et al., 2008; Naud et al., 2008) while offering the advantage of having interpretable biophysical parameters. The dimensionality reduction by means of a mean-field approximation provides an increase in simulation speed of about four orders of magnitude over the large spiking neural network while still retaining the same set of biophysical parameters and reproducing all of its dynamical states.

For a sparsely connected random network of N → ∞ AdEx neurons, the distribution of membrane potentials p(V) and the mean population firing rate rα of population α can be calculated using the Fokker-Planck equation in the thermodynamic limit N → ∞ (Brunel, 2000). Determining the distribution involves solving a partial differential equation, which is computationally demanding. Instead, a low-dimensional linear-nonlinear cascade model (Fourcaud-Trocmé et al., 2003; Ostojic and Brunel, 2011) captures the steady-state and transient dynamics of a population in the form of a set of ordinary differential equations. For a given mean membrane current μα with standard deviation σα, the mean of the membrane potentials as well as the population firing rate rα in the steady-state can be calculated from the Fokker-Planck equation (Richardson, 2007) and can be captured by a set of simple nonlinear transfer functions Φr,τ,V(μα, σα). These transfer functions can be precomputed (once) for a specific set of single AdEx neuron parameters (Supplementary Figure 1).

For the construction of the mean-field model, a set of conditions need to be fulfilled: We assume (1) random connectivity (within and between populations), (2) sparse connectivity (Holmgren et al., 2003; Laughlin and Sejnowski, 2003), but each neuron having a large number of inputs (Destexhe et al., 2003) K with 1 ≪ K ≪ N, (3) and that each neuron's input can be approximated by a Poisson spike train (Fries et al., 2001; Wang, 2010) where each incoming spike causes a small (c/J ≪ 1) and quasi-continuous change of the postsynaptic potential (PSP) (Williams and Stuart, 2002) (diffusion approximation).

Model Equations

A detailed mathematical derivation of the model equations was provided before in Refs. (Augustin et al., 2017; Cakan and Obermayer, 2020). Here, we only present the equations of the linear-nonlinear cascade model which were used to simulate the whole-brain network model. Every brain area is represented by a node consisting of an excitatory (E) and inhibitory (I) population α ∈ {E, I}. All parameters of the whole-brain model are listed in Table 1. For every node, the following equations govern the dynamics of the membrane currents:

Here, μα describe the total mean membrane currents, the currents induced by synaptic activity, the currents from external input sources, and the external noise input. Means and variances are across all neurons within each population. Note that mean currents measured in units of mV/ms can be expressed in units of nA by multiplying with the membrane capacity C = 200 pF, i.e., 1 mV/ms ·C = 0.2 nA. is the variance of the membrane currents. The parameters Jαβ determine the maximum synaptic current when all synapses from population β to population α are active. The synaptic dynamics is given by:

Here, represents the mean of the fraction of all active synapses, which is bounded between 0 (no active synapses) and 1 (all synapses active), and represents its variance.

The input-dependent adaptive timescale τα = Φτ(μα, σα), the mean membrane potential and the instantaneous population spike rate rα = Φr(μα, σα) are determined at every time step using the precomputed transfer functions (Supplementary Figure 1). The mean rαβ and the variance ραβ of the effective input rate from population β to α for a spike transmission delay dα are given by

rα is the instantaneous population spike rate, cαβ defines the amplitude of the post-synaptic current caused by a single spike (at rest, i.e., for ), and Jαβ sets the maximum membrane current generated when all synapses are active (at ). Kgl is the global coupling strength parameter, Cij are elements from the whole-brain fiber count matrix connecting region j with region i, and Dij are elements from the fiber length delay matrix. Here, the Kronecker delta δαβE is 1 if α = β = E and 0 otherwise, restricting inter-areal coupling to the excitatory subpopulations only.

Adaptation Currents

For a single AdEx neuron, the hyperpolarizing adaptation current is increased after ever single spike (Benda and Herz, 2003) which leads to a slowly-decreasing spike frequency in response to a constant input (Naud et al., 2008). In the mean-field limit of a large population, an adiabatic approximation can be used to express the mean adaptation current in terms of the mean firing rate of the population. The mean adaptation current is given by ĪA and acts as an inhibitory membrane current, Φr,τ,V(μα − ĪA/C, σα), with its slow dynamics (compared to the membrane time constant) given by (Augustin et al., 2017):

We set the subthreshold adaptation parameter a to 0 and only consider finite spike-triggered adaptation b. In Cakan and Obermayer (2020), it was shown that a finite a mainly shifts the state space in the positive direction of the excitatory input and produces no new states compared to when only b is allowed to vary. All parameters are listed in Table 1.

Noise and External Input

Each subpopulation α of every brain area receives an external input current with the same mean and standard deviation σext with respect to all neurons of the subpopulation. We set the value of to draw the state space diagrams in Figure 2. The origin of these mean currents is not further specified and can be thought of as a tonic mean background input, for example, from subcortical areas to the cortex. Additionally, every subpopulation also receives an independent noise input which also originates from unidentified neural sources such as subcortical brain areas and which is modeled as an Ornstein-Uhlenbeck process with zero mean,

where ξ(t) is a white noise process sampled from a normal distribution with zero mean and unitary variance. The noise strength parameter σou determines the amplitude of fluctuations around the mean of the process.

BOLD Model

The firing rate output rE(t) of the excitatory population of each brain area is converted to a BOLD signal using the Balloon-Windkessel model (Friston et al., 2000; Deco et al., 2013) with parameters taken from Friston et al. (2003). The BOLD signal is represented by a set of differential equations that model the hemodynamic response of the brain caused by synaptic activity. After simulation, the BOLD signal is subsampled at 0.5 Hz to match the sampling rate of the empirical fMRI recordings.

State Space Diagrams

Due to the semi-analytic nature of the model, the state space diagrams in Figure 2 a were computed numerically by simulating each point in the diagrams for 20 s. Corresponding to the resolution of the diagrams, this resulted in a total of 161 × 161 simulations for a single node and 101 × 101 simulations for the whole-brain network. State transition lines which mark the bifurcations of the system were drawn by classifying the state of each simulated point and thresholding certain measures to identify abrupt state changes in parameter space. Every simulation was initialized randomly. The state space diagrams were computed without adaptation (b = 0) and with spike-triggered adaptation (b= 20 pA). All nodes in the brain network received the same mean background input .

To classify a state as bistable, a decaying stimulus in negative and subsequently in positive direction was applied to the excitatory population of all nodes. An example of this stimulus can be seen in Figure 2B1. This ensured that, if the system was in the bistable regime, it would reach the down-state and subsequently the up-state. If a difference in the 2 s-mean firing rate in any brain area of at least 10 Hz was detected, after the stimulus had relaxed to zero after 8 s, it was classified as bistable. A threshold of 10 Hz was chosen because it was less than the smallest difference in firing rate between any down- and up-state in the state space.

It should be noted that the state space diagrams of the whole-brain network in Figure 2 a necessarily provide a reduced description disregarding heterogeneous states that can arise at the depicted state transition boundaries. In the absence of noise, transitions from one network state to another, e.g., from down-state to up-state, happen gradually as we change the corresponding bifurcation parameter, such as the excitatory external input. Close to the bistable regime some nodes effectively receive more input than others, depending on the in-degree of each node, and can transition to the up-state before others do. However, these regions are so narrow that they do not play any meaningful role in the dynamics, i.e., the width of these regions is much smaller than the standard deviation of the noise input. Examples of these states are shown in Supplementary Figure 10 which we could find only by fine-tuning the input strengths of the system without noise. When noise is added, the fluctuating activity of the individual nodes is typically enough to drive the entire network into one of the states in an all-or-nothing fashion.

Oscillatory regions were classified as such if the oscillation amplitude of the firing rate of at least one brain area was larger than 10 Hz after 8 s of stimulation after which all transients vanished. An amplitude threshold of the firing rate oscillations of 10 Hz was chosen because all oscillatory states had a larger amplitude across the entire state space. This was confirmed by choosing a smaller threshold and comparing the transition lines with the former one, which yielded the same results. Note that the output of the mean-field model is a firing rate, describing the average number of spikes per second measured in Hertz. Therefore, when the system is in a limit cycle, the firing rate oscillates with an amplitude measured in Hertz. At the same time, the frequency of the oscillations is also measured in Hertz. In the whole-brain network, states were classified as oscillatory, if at least one node was in an oscillatory state. This criterion was compared with a stricter version in which all brain regions had to be simultaneously classified as oscillatory, which again yielded almost the same results with only minimal differences. This leaves us with the conclusion that, in the brain network, the regions in which state transitions from non-oscillatory to oscillatory states happen gradually for each individual brain region, are so narrow that they can be disregarded in our analysis. This is especially true, once noise was added to the system, which made these regions practically unnoticeable.

Neuroimaging Data

Participants

Structural and resting-state functional MRI data were acquired from 27 older adults (15 females: age range = 51–77 years, mean age = 62.93 years; 12 males: age range = 50–78 years, mean age = 64.17 years) at the Universitätsmedizin Greifswald. For 18 out of the 27 participants, subsequent daytime sleep EEG recordings were also acquired. Nine participants were excluded from the EEG phase due to an inability to sleep in the laboratory. The study was approved by the local ethics committee at Universitätsmedizin Greifswald and was in accordance with the Declaration of Helsinki. All participants gave their written informed consent prior to taking part in the study and were reimbursed for their participation.

Structural Imaging Data

Acquisition

Data acquisition was performed using a 3T Siemens MAGNETOM Verio syngo B17 MR scanner with a 32-channel head coil. High-resolution anatomical T1 images were acquired using a gradient echo sequence (TR = 1,690 ms, TE = 2.52 ms, TI = 900 ms, flip angle (FA) = 9°, FOV = 250 x 250, matrix size = 246 x 256, slice thickness = 1 mm, number of slices = 176), while for diffusion data a single-shot echo-planar imaging (EPI) sequence (TR = 11,100 ms, TE = 107 ms) was used. For every participant, there were 64 gradient directions (b = 1,000 s/mm2) and one non-diffusion-weighted acquisition (b = 0 s/mm2) acquired over a field of view of 230 x 230 x 140 mm, with a slice thickness of 2 mm and no gap, and a voxel size of 1.8 x 1.8 x 2 mm.

Preprocessing and Connectome Extraction.

Preprocessing of T1- and diffusion-weighted images was conducted using a semi-automatic pipeline implemented in the FSL toolbox (www.fmrib.ox.ac.uk/fsl, FMRIB, Oxford). Preprocessing of anatomical T1-weighted images involved the removal of non-brain tissue using the brain extraction toolbox (BET) implemented in FSL and the generation of a brain mask. The quality of the brain-extracted images was assessed manually, and, subsequently, 80 cortical regions were defined based on the automatic anatomical labeling (AAL2) atlas introduced in Rolls et al. (2015). Brain extraction was also conducted for the diffusion-weighted images, and it was followed by head motion and eddy current distortion correction. Subsequently, a probabilistic diffusion model was fitted to the data using the Bayesian Estimation of Diffusion Parameters Obtained using Sampling Techniques (BEDPOSTX) FSL toolbox. Individual connectomes were obtained by linearly registering each subject's b0 image to the corresponding T1-weighted image, transforming the high-resolution mask volumes from MNI to the individual's diffusion space and running probabilistic tractography with 5,000 random seeds per voxel using FSL's PROBTRACKX algorithm (Behrens et al., 2007). Furthermore, as probabilistic tractography contains no directionality information but is dependent on the seeding location, the connection strength Cij between regions i and j was considered equal to the connection strength Cji between regions j and i and was obtained by averaging the corresponding entries in the connectivity matrix. Each connectivity matrix was normalized by dividing every matrix entry by the maximum value of the matrix.

Finally, all subject-specific matrices were averaged to yield a single structural connectivity (Supplementary Figure 2A) and a single fiber length matrix (Supplementary Figure 2B). The resulting connectivity matrix, when multiplied by a coupling parameter Kgl, determines the coupling strength between any two regions. For a given simulation, the fiber length matrix is divided by the signal propagation speed vgl to yield a time-delay matrix. The average inter-subject Pearson correlation of individual structural connectivity (fiber length) matrices was 0.96 (0.68) (cf. Supplementary Figures 2C,D).

Resting-State Functional MRI Data

Acquisition

Resting-state functional MRI data were acquired using the same 3T Siemens MAGNETOM Verio syngo B17 MR scanner, with the following parameters: TR = 2000 ms, TE = 30, slice thickness = 3 mm, spacing between slices = 3.99 mm, FA = 90°, matrix size = 64 x 64, FOV = 192 x 192, voxel size = 3 x 3 x 3 mm. Participants were scanned for 12 min, leading to an acquisition of 360 volumes per participant.

Preprocessing and Network Construction

Preprocessing of rsfMRI data was conducted using the FSL FEAT toolbox (Woolrich et al., 2001). The first five volumes of each dataset were discarded. Data were corrected for head motion using the FSL McFLIRT algorithm, and high-passed filtered with a filter cutoff of 100 s. Functional images were linearly registered to each subject's anatomical image using FLIRT. A brain mask was created from the mean volume of the data using BET. MELODIC ICA was conducted and artifactual components (including motion, non-neuronal physiological artifacts, scanner artifacts and other nuisance sources) were removed using the ICA FIX FSL toolbox (Griffanti et al., 2014; Salimi-Khorshidi et al., 2014). Subsequently, the high-resolution mask volumes were transformed from MNI to individual subject functional space and average BOLD time courses for each cortical region were extracted using the fslmeants command included in Fslutils.

Sleep EEG Data

Acquisition

Electroencephalography (EEG) recordings were obtained during afternoon naps with a duration of 90 min as part of a larger study in which slow oscillatory transcranial direct current stimulation (so-tDCS) was also applied. For the purposes of the current study, however, only the two baseline recordings and one sham (no so-tDCS stimulation) were used. In the baseline sessions, EEG was recorded from 28 scalp sites using Ag/AgCl active ring electrodes placed according to the extended 10–20 international EEG system, while in the sham session, 26 scalp electrodes were used. Data were recorded at a sampling rate of 500 Hz using the Brain Vision Recorder software and referenced to an electrode attached to the nose. Additionally, chin electromyography (EMG) and electrooculography (EOG) data were acquired according to the standard sleep monitoring protocol.

Preprocessing

Preprocessing of EEG data was conducted using custom scripts implemented in the FieldTrip toolbox (Oostenveld et al., 2011), in two parts: in the first part, raw data were prepared for an independent component analysis (ICA), which was conducted in order to identify and remove artefactual components, while in the second part, different filtering settings, as required by our main data analysis, were applied again to the raw data and previously identified artifactual independent components (ICs) were removed. In preparation for ICA, data were bandpass-filtered between 1 and 100 Hz using a finite impulse response filter. In addition, a bandstop filter centered at 50 Hz with a bandwidth of 4 Hz was applied. Subsequently, a manual inspection of the data was conducted in order to remove gross noise artifacts affecting all channels, and ICA was performed using the runica algorithm (Makeig et al., 1997). EMG channels were excluded from the ICA. The resulting 30 maximally independent components (ICs) were visually inspected and those corresponding to muscle artifacts, heart beat, and, where applicable, eye movements were marked for rejection based on scalp topography (Jung et al., 1998, 2000) and power spectrum density (Criswell, 2010; Berry and Wagner, 2014).

For the main analysis, raw data were bandpass-filtered between 0.1-100 Hz using a finite impulse response filter, then segmented in 10 s epochs. Previously identified artifactual ICs were removed from the data, together with the EOG channels. Next, a two-step procedure was employed for detecting remaining artifact-contaminated channels: the first step was based on kurtosis, as well as low- (0.1–2 Hz) and high-frequency (30–100 Hz) artifacts, while in the second step, the FASTER algorithm was used (Nolan et al., 2010); these channels were afterwards interpolated. In a final step, any 10 s epochs still containing artifacts which could not be removed in the previous steps were manually removed from the analysis and the data were linearly detrended.

Sleep stage classification was conducted manually on the raw data, according to the criteria described in Rechtschaffen and Kales (1968), using the Schlafaus software (Steffen Gais, Lübeck, Germany). Here, 30 s epochs were used, and each was classified as belonging to one of seven categories: wakefulness, non-REM sleep stage 1, 2, 3, or 4, REM sleep, or movement artifact.

Model Optimization

Functional Connectivity (FC)

From each subject's resting-state fMRI recording, functional connectivity (FC) matrices are obtained by computing the Pearson correlation between the BOLD time series of all brain areas, yielding a symmetric 80 × 80 FC matrix per subject. The FC matrix of the simulated BOLD activity (simulation time T= 12 min) is computed the same way. In order to determine the similarity of the simulated and the empirical FC matrices, the Pearson correlation of the lower-triangular elements (omitting the diagonal) between both matrices is computed. For each simulation, this is done for all subjects, and the average of all FC correlation coefficients is taken to determine the overall FC fit. A higher FC correlation coefficient means a better correspondence of simulated and empirical data, with values ranging from –1 to 1 (higher is better). This ensures that the simulated activity produces realistic spatial correlation patterns.

For the four fitting scenarios, the best subject-averaged FC fit scores were the following: 0.57 for fMRI-only fits without and 0.56 with adaptation. With EEG data included in the optimization, fits were 0.57 for both up-to-down and down-to-up solutions (Supplementary Figure 3B). For individual subjects, best fits reached up to 0.75 (Supplementary Figures 4A,B).

Functional Connectivity Dynamics (FCD)

Functional connectivity dynamics (FCD) matrices quantify the cross-correlation between time-dependent FC matrices FC(t). In contrast to computing the grand-average FC alone, this ensures that the temporal dynamics of the simulated and empirical FCs is similar. A rolling window of length 60 s and a step length 10 s is applied to the BOLD time series of length T = 12 min to determine FC(t) (Hutchison et al., 2013). For all t1, t2 < T, the element-wise Pearson correlation of the lower diagonal entries of the matrices FC(t1) and FC(t2) is computed, yielding a symmetric T × T FCD matrix. In order to determine the similarity of the simulated FCD to the empirical FCD, the Kolmogorov-Smirnov (KS) distance of the distributions of lower-triangular elements (omitting the diagonal) of simulated and empirical FCD matrices is calculated. This value ranges from 0 for maximum similarity to 1 for maximum dissimilarity (lower is better). The KS distance is determined for each subject, and an average of all values is taken to determine the overall FCD fit for a simulation. Average FCD fits for all four optimization scenarios were 0.26, 0.26, 0.25, and 0.25, respectively. The best subject-specific fits reached 0.05 (Supplementary Figures 4C,D).

Fit to EEG Power Spectrum

Lastly, the frequency spectrum of the firing rate of the excitatory populations of all brain areas is compared to the mean power spectrum of the EEG data during sleep stage N3.

14 out of the 18 subjects with sleep EEG recordings reached the deep sleep stage N3 in a total of 29 sleep sessions. This resulted in a EEG dataset of 1,034 non-overlapping epochs of 10 s each. The power spectrum of each epoch was computed using the implementation of Welch's method (Welch, 1967) scipy.signal.welch in SciPy (v1.4.1) (Virtanen et al., 2020). A rolling Hanning window of length 10 s was used to compute each spectrum. For each channel, the mean of all epoch-wise power spectra was computed. All channel-wise power spectra were then averaged across all channels to yield a subject-specific N3 EEG power spectrum. The power spectra of all subjects were then averaged across all subjects to yield a single empirical EEG power spectrum of N3 sleep. The average and subject-wise EEG power spectra are shown in Supplementary Figure 5.

Based on the results of Martínez-Cañada et al. (2021), who showed that in a network of point neurons both the total synaptic current and the average firing rate correlate well with the LFP time series and its power spectrum, we used the firing rate output of the model as a proxy for comparison with the power spectrum of the empirical EEG data. The firing rate of the last 60 s of every simulation was averaged across all N brain regions and the power spectrum was computed using the same method as for the empirical data. We then computed the Pearson correlation coefficient between the simulated and the empirical power spectra between 0 and 40 Hz, with correlation values ranging from –1 to 1 (higher is better). During deep sleep, low frequency components (< 1 Hz) are particularly strong due to the underlying slow oscillations (SO) between up- and down-states (Massimini et al., 2004). Using the power spectrum correlation between the simulated model and the empirical data, we were able to find model parameters that produce SOs with a frequency spectrum similar to EEG during SWS. Average EEG spectrum correlations for the two scenarios in which the model was fitted to EEG data were 0.93 when we allowed only up-to-down solutions during the optimization, and 0.97 when down-to-up solutions were allowed as well. In addition, we confirmed that the power spectra of the excitatory synaptic activity variable (Equation 6) and the firing rate (Equation 8) were similar (Pearson correlation > 0.99) leading to the same optimization results.

In Supplementary Figure 5C, the correlation between the power spectrum of the model (Supplementary Figure 5A) and the EEG power spectrum during sleep stage N3 (which was the optimization target) is the highest and decreases from N3 to the awake state (Wa). The lower correlation with stages Wa-N2 (which were not used in the optimization) is mainly due to the emergence of a strong peak in the alpha range between 8 and 12 Hz in addition to the emergence of the SO peak in N3 at around 1 Hz. In order to be more sensitive toward these differences in the data at low frequencies, the EEG cost function used here could be extended. One possibility could be using an event-based SO detection algorithm to extract more granular sleep stage-dependent features from the EEG time series, such as the event frequency, event duration, and the event involvement distribution, instead of relying on the power spectra alone.

Evolutionary Algorithm

An evolutionary algorithm is used to find suitable parameters for a brain network model that fits to empirical resting-state fMRI and sleep EEG recordings. A schematic of the evolutionary algorithm is shown in Supplementary Figure 11. The use of a multi-objective optimization method, such as the NSGA-II algorithm (Deb et al., 2002), is crucial in a setting in which a model is fit to multiple independent targets or data from multiple modalities. Algorithms designed to optimize for a single objective usually rely on careful adjustment of the weights of each objective to the overall cost function. In a multi-objective setting, not one single solution but a set of solutions, called the first Pareto front, can be considered optimal. This refers to the set of solutions that cannot be improved in any direction of the multi-objective cost function without diminishing its performance in another direction. These solutions are also called non-dominated.

In the evolutionary framework, a single simulation is called an individual and its particular set of parameters are called its genes, which are represented as a six-dimensional vector, i.e., one element for each free parameter (listed below). A set of individuals is called a population. For every evolutionary round, also called a generation, the fitness of every new individual is determined by simulating the individual and computing the similarity of its output to the empirical data, resulting in a three-dimensional fitness vector with FC, FCD, and EEG fits, determined as described above. Then, a subset of individuals is selected as parents from which a new set of offspring are generated. Finally, these offspring are mutated, added to the total population, and the procedure is repeated until a stopping condition is reached, such as reaching a maximum number of generations. The multi-objective optimization is based on non-dominated sorting and several other evolutionary operators as introduced in Deb et al. (2002) which are implemented in our software package neurolib (Cakan et al., 2021) using the evolutionary algorithm framework DEAP (Fortin et al., 2012).

The evolutionary algorithm consists of two blocks, the initialization block and the evolution block (Supplementary Figure 11). For initialization, a random population of Ninit = 320 (in the fMRI-only case) or Ninit = 640 (in the fMRI and EEG case) individuals is generated from a uniform distribution across the following intervals of the model parameters: and ∈ [0.0, 4.0] mV/ms, b ∈ [0.0, 20.0] pA, τA ∈ [0.0, 5000.0] ms, Kgl ∈ [100, 400], and σou ∈ [0.0, 0.5] mV/ms3/2. Since the parameter space of good fits in the fMRI and EEG case is smaller compared to the fMRI-only case (see Figure 3A), a larger initial population was used to ensure that the algorithm is able to find these regions. The initial population is simulated, and the fitness scores are evaluated for all individuals.

We then start the evolutionary block which will repeat until the stopping condition of a maximum number of 20 (fMRI-only) or 50 (fMRI and EEG) generations is reached. Using a non-dominated sorting operator, the initial population is reduced to the population size Npop = 80 (fMRI-only) or Npop = 160 (fMRI and EEG). From this population, using a tournament selection operator based on dominance and crowding distance, a set of parents is chosen. A simulated binary crossover is used as a mating operator and is applied on the parent population to generate Npop new offspring. Finally, a polynomial mutation operator is applied on the offspring population, which introduces randomness and thus aids the exploration of the parameter space. After all offspring have been evaluated and a fitness is assigned to each of them, the population is merged with the parent population. The process is then repeated for each generation such that the algorithm produces improving fits in every new generation.

The evolutionary process was significantly accelerated by avoiding long (12 min) simulations with almost no activity. This was achieved by a stage-wise simulation scheme. In the first stage, every run was simulated for 10 s with a transient time of 1 s. If the maximum firing rate of any brain area did not exceed 10 Hz, the run was omitted and marked as invalid. Then, in the second stage, each valid run was simulated for the full length of 12 min and the fitness of that run was evaluated.

When fitting to fMRI and EEG data simultaneously, we filtered for up-to-down solutions by thresholding the median firing rate in the first stage of each simulation. If the median firing rate (across all nodes and time) was below 1 Hz, the simulation was omitted and marked as invalid to avoid finding down-to-up solutions. If the median firing rate was above 15 Hz, the simulation was omitted, to avoid solutions that showed excessive up-state activity. No filtering was necessary when the model was optimized for down-to-up solutions, since the algorithm had a strong tendency to find these solutions without any intervention. The second stage was not subject to such filtering.

Sleep Model Analysis

Up- and Down-State Detection