Researching Pre-school Teachers’ Knowledge of Oral Language Pedagogy Using Video

- University of Oxford, Oxford, United Kingdom

The Observing Language Pedagogy (OLP) tool uses videos of authentic classroom interactions to elicit the procedural knowledge which pre-school teachers can access, activate and use to support classroom decision-making. Three facets are captured: perceiving (the ability to identify salient language-supporting strategies); naming (the use of specific professional vocabulary to describe interactions); and interpreting (the ability to interpret the interactions observed). Prior research has shown that the OLP predicts classroom quality, with naming and interpreting proving the strongest predictors. This study examines OLP responses from 104 teachers to consider the nature of their pedagogical knowledge (perceiving, naming, interpreting), and describes differences between expert teachers (those leading language-supporting classrooms) and non-expert teachers (those leading lower quality classrooms). It offers insight into the nature of language-related expertise and guidance for the design of teacher professional development, suggesting a tri-fold focus on knowledge of linguistic input, relational pedagogy and cognitively challenging interactions.

Introduction

Pre-school oral language skills predict literacy and broader outcomes at school entry (Morgan et al., 2015; Roulstone et al., 2011), which in turn predict later school achievement (Duncan et al., 2007). However, many children, particularly those from disadvantaged households, start school without the language skills they need (Waldfogel and Washbrook, 2010). While attending high-quality pre-school can help children catch up with their peers (Sylva et al., 2010), not all early education providers offer language-rich environments for children, particularly in the disadvantaged areas where this is most needed (Mathers and Smees, 2014). Further, the in-service professional development which might strengthen practice is often inconsistent in quality (Cordingley et al., 2015) and in its impact on teaching quality or child outcomes (Markussen-Brown et al., 2017). To support more effective workforce development, it is necessary to understand the processes which underpin professional growth (Sheridan et al., 2009).

Effective teaching is understood to require knowledge of both subject content (what is to be taught) and pedagogy (how to represent content for learners) (Shulman, 1986, 1987). There is empirical evidence that pre-school teachers’ language-and-literacy content knowledge predicts classroom quality and child outcomes (Piasta et al., 2009; Schachter et al., 2016). However, equivalent evidence does not exist for pedagogical knowledge: studies are scarcer and have identified small or null effects (Phillips et al., 2020; Spear et al., 2018; Schachter et al., 2016). This is puzzling, given evidence from later educational phases that pedagogical knowledge does matter (Baumert et al., 2010). More work is needed to establish the role of teachers’ language-related pedagogical knowledge in early childhood classrooms, and to establish what teachers currently know (or do not know).

Recent studies of pedagogical knowledge have focused on effective measurement, on the basis that prior null results may have resulted from the use of de-contextualised data collection methods (i.e. questionnaires) to assess knowledge which is inherently context-specific and highly situated (Schachter et al., 2016). This is particularly true for procedural pedagogical knowledge, which is generated and applied in the classroom context (Eraut, 2004; Stürmer et al., 2013). Procedural knowledge involves knowing how as opposed to knowing that (Anderson and Krathwohl, 2001). This dynamic knowledge allows teachers to orchestrate information about children, pedagogy and instructional goals “in the moment”, and apply strategies flexibly to maximise child learning (Knievel et al., 2015; Putnam and Borko, 2000; Shulman, 1987).

Several recent studies have used videos of real classroom interactions to offer a more authentic and contextualised means of assessing procedural knowledge (Jamil et al., 2015; Kersting et al., 2010). Teachers watch video clips and identify or interpret teaching practice or children’s responses, allowing assessment not just of what they know but of the knowledge they are able to access, activate and use in classroom situations (Kersting et al., 2012). This approach is underpinned by theory and empirical evidence showing that: 1) expert teachers are more skilled than non-experts in focusing on the features of an interaction which are salient for child learning, and that 2) this ability to perceive and interpret classroom situations (also termed “noticing” or “professional vision”) supports effective decision-making and practice (Goodwin, 1994; Sherin and Van Es, 2009; Wiens et al., 2020). Teachers’ professional vision also provides a window onto their procedural knowledge because, in order to perceive strategies in a video, teachers must have cognitive scripts to represent those strategies (Jamil et al., 2015). Since the scripts of expert teachers have been deeply and holistically codified through repeated rehearsal and observation, they can be efficiently retrieved to support fast, accurate and comprehensive understanding and interpretation of new situations (Berliner, 1988; Sabers et al., 1991; van Es and Sherin, 2002; Glaser and Chi, 2014), whether on video or in the classroom. Teachers’ ability to interpret video interactions thus offers a proxy for their expertise and, specifically, for the dynamic pedagogical knowledge which underpins the enactment of effective strategies in real classroom interactions.

The Observing Language Pedagogy (OLP) tool uses video assessment to capture pre-school teachers’ knowledge of oral language pedagogy. It is designed to elicit knowledge of teaching strategies and – specifically – teachers’ ability to identify and interpret salient language-supporting strategies in video interactions. A recent pilot study conducted with 104 pre-school teachers (Mathers, 2021) showed that OLP scores predict classroom quality as measured by observational rating scales, and that knowledge may also mediate improvements in quality through teacher professional development. Further evidence of promise for using video to capture pre-school teachers’ pedagogical knowledge is provided by the Video Assessment of Interactions and Learning (VAIL). This tool, which captures teachers’ ability to identify effective, non-domain-specific adult-child interactions, has been shown to predict the observed quality of teachers’ emotional and instructional support (Jamil et al., 2015; Wiens et al., 2020). Together, these studies offer evidence that pedagogical knowledge matters for pre-school classroom quality, and that video assessment may offer a more sensitive measure of contextualised knowledge than de-contextualised methods such as questionnaires.

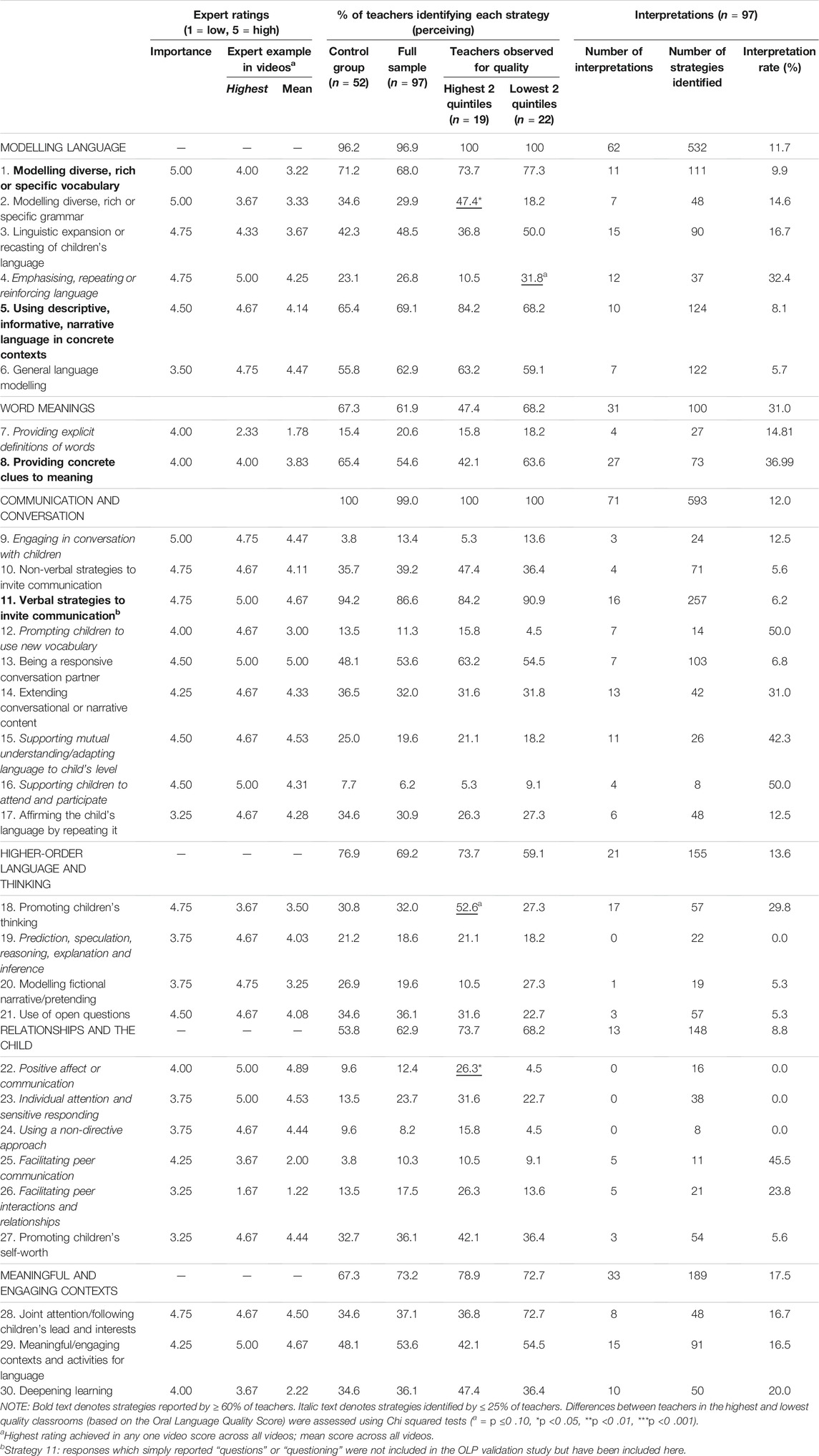

The OLP also provides some information about which facets of pedagogical knowledge matter. Three empirically-distinct cognitive facets are captured: perceiving, naming and interpreting. Perceiving reflects the ability to identify salient strategies in video interactions. It is understood to reflect the existence of codified cognitive scripts which can be recalled to support use of appropriate strategies in specific classroom interactions (Anderson and Krathwohl, 2001). These scripts may be informal or implicit, developed through (near) unconscious observation during classroom experience (Eraut, 2004). Thirty pedagogical strategies are included in the OLP coding framework, on the basis of empirical evidence of benefits for language and literacy outcomes (Table 1). Strategies were derived from a literature review and affirmed through expert review (Mathers, 2020). More detailed descriptions, and examples of underpinning literature, are shown in the Supplementary Material. While most strategies (e.g. modelling vocabulary) relate directly to oral language support, others (e.g. sensitive responding) address the broader nurturing of positive relationships and child affect. Although evidence of a direct impact on child language is somewhat mixed (e.g. Burchinal et al., 2010; Curby et al., 2013; Leyva et al., 2015), these “relational” strategies are included based on their recognised importance in the early years.

TABLE 1. The OLP Framework: expert ratings, strategy identification rates and strategy interpretation rates.

Naming (the use of professional vocabulary to describe video interactions) and interpreting (the ability to interpret the interactions observed) are theorised to reflect explicit, higher-order pedagogical knowledge. Such knowledge is more deeply codified and accessible for deliberate manipulation to support classroom decision-making and the articulation of knowledge to others. For example, alongside an informal script governing when to use questions beginning with “how” or “why”, a teacher might also possess an explicit, overarching script identifying these as open questions, understand when and why open questions might be appropriate, and be able to analyse the effectiveness of a specific open question in eliciting a child’s thinking.

Of the three facets, naming and interpreting most strongly predicted classroom quality in the OLP validation study (Mathers, 2021). Having the vocabulary with which to name a concept may allow teachers to engage in explicit reflection and discussion on that concept, supporting deeper understanding and intentional practice. Use of specialist vocabulary may also indicate prior professional development, and thus be a proxy for wider formal knowledge. Reasoning about classroom situations may further support teachers in deliberate practice aimed at promoting specific child language outcomes (Kind, 2009). Both naming and interpreting may also aid teachers in pedagogical leadership; for example, in articulating their knowledge to others and explaining why certain practices are important. The importance of classroom reasoning is further supported by research in primary and secondary education, showing that expert teachers are more able to reason about pedagogical intentions, alternative approaches, or children’s thinking and outcomes (Blömeke et al., 2015; Sabers et al., 1991; Seidel and Stürmer, 2014; van Es and Sherin, 2002) and that pedagogical reasoning predicts child learning gains (Kersting et al., 2010, 2012).

The OLP validation study was the first pre-school video study to examine knowledge of oral language pedagogy and the roles played by naming and interpreting. It offered important information about the broad facets of pedagogical knowledge which matter for classroom quality. Findings suggest that pre-school teachers need learning opportunities which stimulate explicit, higher-order procedural knowledge (Eraut, 2004), including the development of pedagogical reasoning and a rich professional lexicon. However, detailed information on precisely what such expertise “looks like”, and on what pre-school teachers currently know and do not know, is lacking.

The OLP validation study did not analyse which strategies teachers could identify, name and interpret – or the nature of their interpretations – and few other studies exist which can provide such information. One study by Kersting et al. (2012) examined different aspects of teachers’ classroom reasoning in response to videos of teaching interactions, including their analysis of mathematical content, student thinking, suggestions for improvement and overall depth of interpretation. All facets predicted observed teaching quality (with content analysis dominating in multi-variate models) and teachers’ spontaneous suggestions for improvement predicted child learning gains. However, since the participants were secondary-school mathematics teachers, the findings may not hold for the pre-school context. The work of Dwyer and Schachter on pedagogical reasoning in pre-school classrooms offers more contextually relevant information. Their studies show that teachers draw on multiple sources of contextualised knowledge to inform their reasoning about language and literacy instruction, including knowledge of how children learn; knowledge of specific children, their learning goals for them and their instructional history with them; factors related to the school context; and ideas about themselves as teachers (Schachter, 2017; Dwyer and Schachter, 2020). However, since relationships with teaching quality and child outcomes were not analysed, this work cannot shed light on which facets matter for effective pre-school teaching. Similarly, although efforts have been made to detail the professional lexicon of mathematics teachers (Clarke et al., 2017), no such work exists for pre-school language pedagogy. Further work is needed to expand our detailed understanding of teachers’ pedagogical knowledge, in order to inform practice and professional development.

The Current Study

The current study aims to fill these gaps in understanding through additional analysis of the OLP validation study dataset (Mathers, 2021). In particular, it conducts a detailed examination of teachers’ naming and interpreting (the facets most predictive of classroom quality), with the aim of providing concrete information to guide practice and professional development relating to oral language teaching. It directly compares the responses of teachers leading the most and least language-supporting classrooms, to examine differences. Finally, it examines the strategies identified by teachers (perceiving) to consider which were most commonly reported (and thus known) and which less so. Although perceiving was not associated with higher classroom quality in multi-variate models (i.e. when entered alongside the more predictive naming and interpreting), strategy knowledge did show small positive correlations with classroom quality in bivariate tests. There is, therefore, something to be gained from analysing teachers’ strategy identification and, in particular, from comparing responses of teachers leading the highest and lowest quality classes.

Analysis is based on a sample of 104 teachers from 72 schools. Data were collected in the context of a wider randomised controlled trial (RCT) designed to evaluate a professional development intervention for pre-school teachers (Mathers, 2020). Data were also available on observed classroom quality for 55 of the 104 teachers. Four research questions are addressed:

1) Perceiving: which language-supporting strategies did teachers notice?

2) Naming: which specialist vocabulary terms did teachers use to describe the strategies they identified?

3) Interpreting: what was the nature of interpretations made by teachers?

4) Experts vs non-experts: were there differences in perceiving, naming and interpreting between teachers leading the highest and lowest quality classrooms?

Implications are drawn regarding teachers’ professional knowledge and its development.

Methods

Sample

A total of 120 schools participated in the wider RCT between September 2017 and June 2018 (61 intervention, 59 control). All were state-funded and recruited from disadvantaged regions of England: in the lowest 3 deciles as defined by the Indices of Multiple Deprivation (IMD) (DCLG, 2011). Participating teachers were from nursery (age 3) or reception (age 4) classes in these schools. After attrition, the post-test sample included 115 schools and 283 teachers.

The current sample comprised 104 teachers who responded to an online survey at the post-test stage of the RCT, which assessed procedural pedagogical knowledge using the OLP (knowledge was not assessed at pre-test). Approximately two-thirds of teachers (n = 68) taught in reception classes (age 4), 30 taught in nursery (age 3) and six were coded as “other” (teachers in combined classes, leaders with no direct teaching role). All held, or were working towards, the graduate level “Qualified Teacher Status”. On average, participants had 10.7 years of teaching experience (range = 0.5–33, SD = 7.6) and 8.2 years of pre-school teaching experience (range = 0.5–33, SD = 7.0). Most (n = 99) were female. Slightly under half (n = 50) were from schools (N = 35) receiving the intervention. Schools were largely in disadvantaged areas, with four fifths (N = 84) below the 50th IMD percentile, and more than half (N = 59) below the 20th percentile.

The modest survey response rate (63% school response, 37% teacher response) must be noted. Although study teachers reflected the wider RCT sample on many dimensions (Mathers, 2021), they were more likely than RCT participants to be teaching in reception [F (1,281) = 4.16, p=.04] and to have participated in the RCT since inception [F (1,279) = 4.23, p=.04], and it was not possible to compare samples on all features. As such, the possibility of response bias cannot be dismissed.

Procedures

Ethical approval was provided by the Education Research Ethics Committee of the University of Oxford (for the RCT) and the UCL Institute of Education (for the current study). Both the OLP knowledge survey and the observations of classroom quality took place post-intervention, between October 2017 and February 2018. The quality assessments were conducted in one reception class per school, and were available for 55 of the 104 study teachers.

Measures of Procedural Knowledge

Procedural knowledge (perceiving, naming, interpreting) was assessed using the OLP tool (see Mathers, 2020, 2021 for further detail on rationale and development). Teachers were asked to watch two short (2–3 min) videos of authentic, pre-school classroom interactions. The videos were selected from a wider pool, via piloting and expert review, as reflecting good-quality examples of the 30 OLP strategies. In Video 1, a teacher and child interact in the block area; in Video 2 a teacher supports a small group to discuss and draw a shopping list. Although using videos of this length and number is supported by previous studies (Bruckmeier et al., 2016; Jamil et al., 2015), it must be noted that the OLP does not reflect the full range of classroom contexts.

Data were also gathered for a third video, which was dropped during development and excluded from the validation study. It shows a teacher telling a whole-class story and returning to story themes during subsequent play. The third video is included in the current analysis, as it provides additional information about teacher responses. The extent to which experts considered each of the 30 OLP strategies to be reflected in each of the three videos is shown in Table 1.

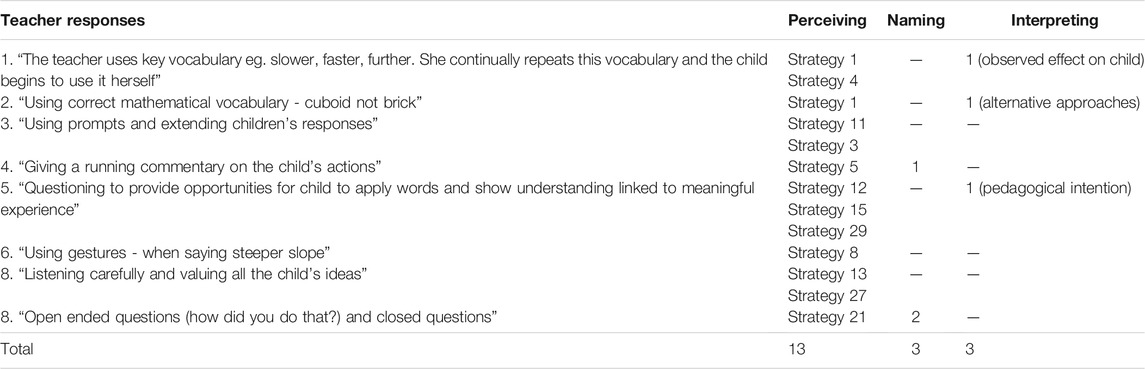

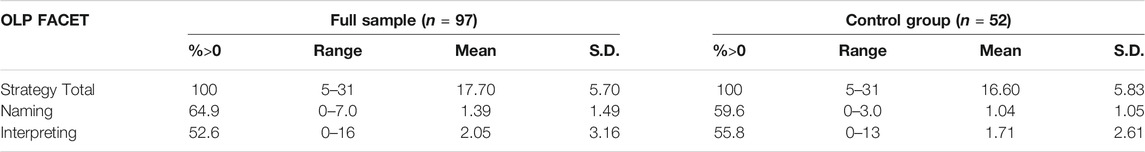

To assess perceiving, teachers were prompted to identify up to eight strategies in each video which might support children’s oral language. Responses were considered valid if they matched at least one strategy in the OLP framework (Table 1; Supplementary Material). A single response could be credited as reflecting multiple strategies, and strategies could be described using informal language. Coding was conducted by the author. A second researcher was trained to reliability on 35% of actual responses and then independently coded a further 35%, with high levels of exact agreement (82–89%). Discrepancies were resolved through discussion. Table 2 presents an illustrative set of coded responses, and Table 3 presents descriptive statistics for the full sample and the control group (as reflecting a population unaffected by intervention). Strategy totals were normally distributed with a broad range. For the OLP validation study, a perceiving score was generated by multiplying each valid strategy by the relevant “expert example” rating for the video. Since the current study uses the raw strategy scores, this process is not shown here; details can be found in Mathers (2021).
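As a rough illustration of the weighting step used in the validation study, the sketch below sums the expert “example” rating for every strategy credited to a teacher in each video. It is a minimal sketch under stated assumptions, not the study’s scoring procedure: the dictionary layout, the function name `perceiving_score` and the rating values are hypothetical, and it assumes each strategy is counted once per video (the exact treatment of repeats is documented in Mathers, 2021).

```python
from typing import Dict, List, Tuple

# Hypothetical expert "example" ratings keyed by (video, strategy number).
# Real ratings appear in Table 1; the values used below are illustrative only.
Ratings = Dict[Tuple[int, int], int]


def perceiving_score(coded: Dict[int, List[int]], ratings: Ratings) -> int:
    """Sum expert ratings over the strategies credited in each video.

    coded maps a video number to the OLP strategy numbers credited to a
    teacher's responses for that video (one response may yield several).
    Assumption: a strategy counts once per video, so repeats are collapsed.
    Strategies with no rating for that video contribute zero.
    """
    total = 0
    for video, strategies in coded.items():
        for strategy in set(strategies):  # collapse repeats within a video
            total += ratings.get((video, strategy), 0)
    return total


# Illustrative usage with made-up ratings and codes.
example_ratings = {(1, 1): 5, (1, 11): 4, (2, 21): 3}
example_codes = {1: [1, 11, 11], 2: [21, 5]}
print(perceiving_score(example_codes, example_ratings))  # 5 + 4 + 3 + 0 = 12
```

Weighting by expert ratings rewards teachers who notice the strategies that the videos exemplify most clearly, whereas the raw totals used in the current study treat every valid strategy equally.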

Informed by the work of Kersting and colleagues (2010, 2012), the higher-order facets of naming and interpreting were not directly prompted. Although this risked under-representing teachers who could have offered an interpretation if prompted, it was reasoned that spontaneous use would reflect the knowledge most likely to be mobilised in real classroom situations, and thus most closely associated with actual practice. Though undeniably light-touch as a means of examining pedagogical reasoning, the resulting responses did reflect awareness of pedagogical intention, observed effects on the children and possible alternative approaches (Table 2).

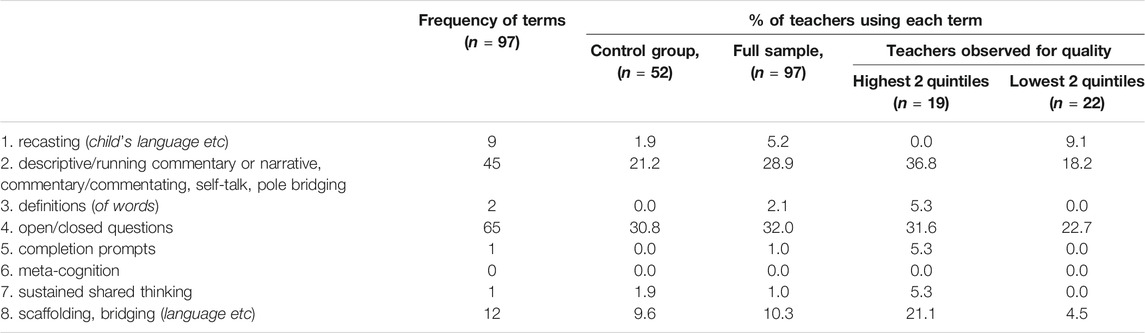

All valid responses (≤8 per video) were coded to reflect instances of professional vocabulary (naming) using a vocabulary list derived through expert review (Table 6). Terms credited were defined prior to coding, refined following coding and then subjected to expert review. Details of the terms reviewed but not selected are shown in the Supplementary Material. Multiple vocabulary terms could be credited within an individual response. Table 2 shows an illustrative coded example for one set of responses. Half of responses were double-coded, with 100% exact agreement for all videos. The current analysis is based on the raw number of vocabulary terms credited, allowing multiple terms per response, to provide the richest data on terms reported1.

Valid responses (≤8 per video) were also coded to reflect instances of interpretation: either a possible pedagogical intention, an observed effect on the child, or a possible alternative approach (Table 2, Supplementary Material). Half of responses were double-coded, with high levels of exact agreement (naming: 100%; interpreting: 96–98%). The interpretation score is based on the number of responses for which a valid interpretation was provided.
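The reliability figures reported for perceiving, naming and interpreting are percentages of exact agreement: the share of double-coded responses to which both coders assigned identical codes. A minimal sketch of that calculation follows; the function name and the coded values are hypothetical, and each element may be any comparable code (for example, 1/0 for “interpretation present”). Note that exact agreement is not corrected for chance, unlike statistics such as Cohen’s kappa.

```python
from typing import Hashable, Sequence


def exact_agreement(coder_a: Sequence[Hashable], coder_b: Sequence[Hashable]) -> float:
    """Percentage of double-coded responses given identical codes by both coders."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of responses")
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return 100.0 * matches / len(coder_a)


# Illustrative usage: 1 = valid interpretation coded, 0 = none (made-up data).
print(exact_agreement([1, 0, 1, 1], [1, 0, 0, 1]))  # 75.0
```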

Due to a high proportion of zero responses, both naming and interpreting displayed narrow ranges, low means and a positive skew (Table 3). Nonetheless, scores did discriminate between respondents: 64.9% used at least one expert term (naming) and 52.6% gave at least one interpretation. As noted, the OLP validation study identified both naming and interpreting as predictors of classroom quality [standardised βs ranging from 0.29 (p < .05) to 0.57 (p < .001)].

Measures of Practice Quality

Three observational measures of classroom quality were used in the wider RCT: the Early Childhood Environment Rating Scale Third Edition (ECERS-3; Harms et al., 2014), the literacy subscale from the curricular extension to the ECERS (ECERS-E; Sylva et al., 2003) and the Sustained Shared Thinking and Emotional Wellbeing (SSTEW) scale (Siraj et al., 2015). All are known to predict children’s development (e.g. Sylva et al., 2010; Howard et al., 2018).

The ECERS-3 comprises 35 items which assess the global education and care environment. The six items of the ECERS-E literacy subscale assess support for language and emergent literacy; and the fourteen items of the SSTEW assess adult-child interactions supporting children’s thinking, language, emotional well-being and self-regulation. All items are scored on a seven-point scale from 1 (inadequate) to 7 (excellent). Data collectors were experienced observers blind to treatment allocation, with training and reliability conducted according to author guidance. All scales were completed in 1 day: the ECERS-3 over 3 hours, and the SSTEW and ECERS-E over the full day.

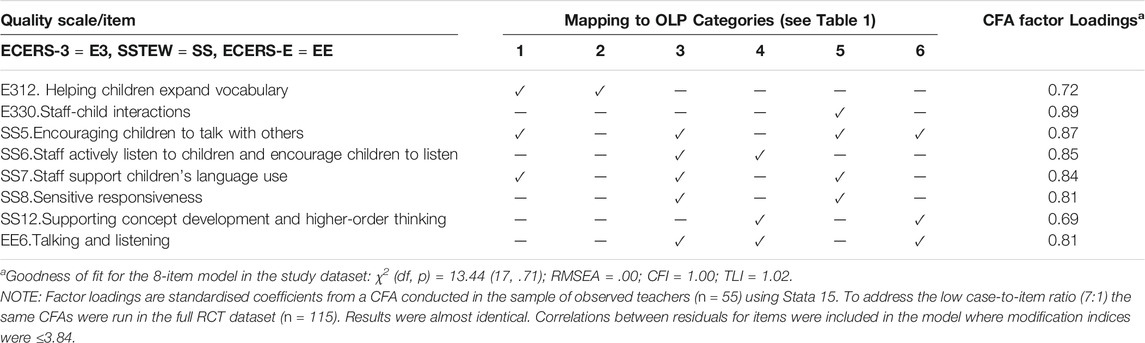

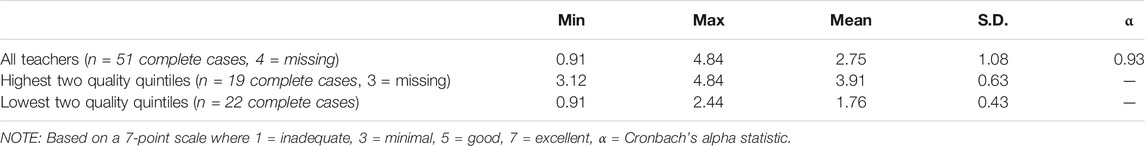

An Oral Language Quality Score (OLQS) was generated using relevant items from all three scales. Although this departs from traditional use of these tools, it was necessary because they capture many practices not directly relevant to language pedagogy. Items were selected based on their alignment with the OLP pedagogical categories and subjected to confirmatory factor analysis (CFA) to establish whether they formed a coherent latent construct (Schreiber et al., 2006). Full details can be found in Mathers, 2021. The eight items included in the OLQS are shown in Table 4, alongside factor loadings for the final CFA model, model fit statistics and alignment with the OLP. Descriptive data are shown in Table 5. Quality was low on average, although there was variation between schools. The OLQS was normally distributed. For the purposes of the current study, observed respondents were divided into quintiles based on their OLQS (Table 5) to enable knowledge data for the top and bottom two quintiles to be compared.
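The expert/non-expert split can be sketched as ranking observed teachers by OLQS, assigning quintiles, and retaining the top two and bottom two. This is a hedged illustration rather than the SPSS procedure used in the study: the function name `quintile_groups` and the teacher identifiers are hypothetical, and ties are broken arbitrarily by sort order, so group sizes will not necessarily match the uneven groups reported here (n = 19 and n = 22), which also reflect tied scores and missing OLP data.

```python
from typing import Dict, List, Tuple


def quintile_groups(scores: Dict[str, float]) -> Tuple[List[str], List[str]]:
    """Rank teachers by OLQS and return (top two quintiles, bottom two quintiles).

    Quintile membership is assigned by rank position (1 = lowest quality,
    5 = highest). Assumption: ties are broken by sort order, not averaged.
    """
    ranked = sorted(scores, key=lambda teacher: scores[teacher])
    n = len(ranked)
    quintile = {teacher: (i * 5) // n + 1 for i, teacher in enumerate(ranked)}
    experts = [t for t, q in quintile.items() if q >= 4]      # quintiles 4 and 5
    non_experts = [t for t, q in quintile.items() if q <= 2]  # quintiles 1 and 2
    return experts, non_experts


# Illustrative usage with ten made-up teachers and scores.
made_up = {f"t{i}": float(i) for i in range(10)}
experts, non_experts = quintile_groups(made_up)
print(sorted(experts), sorted(non_experts))
```

Dropping the middle quintile sharpens the contrast between groups at the cost of a smaller analytic sample, which is one reason the comparisons reported below are treated as exploratory.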

The Intervention

The intervention aimed to improve children’s oral language skills via improvements in language-supporting teaching practice. It comprised 6 days of training and up to 3 days of in-class mentoring per school over a period of just less than 1 year. In intervention schools, at least one nursery and reception class teacher participated, with two reception teachers participating in larger schools. Teachers attended the training and were supported to engage their wider team in implementation. The programme was designed to develop theoretical and procedural knowledge and to provide direct examples of effective practice. During training, teachers were introduced to a set of language-learning principles (e.g. “be a magnet for communication”, “be a language radiator”) which reflected all six of the OLP pedagogical categories (Table 1). They were taught how to use these principles and a range of research tools (including ECERS-3 and SSTEW) to observe practice seen in videos, and were provided with strategies and resources for refining practice. Between training days, they were supported to use these resources to evaluate their practice and make changes.

Data Preparation and Analysis

Seven teachers (6.7%) failed to complete Video 3, with three of these (2.9%) also omitting Video 2. In the validation study, a number of data preparation techniques were employed, including use of Full Information Maximum Likelihood estimation, Winsorisation of outliers and use of clustered robust standard errors to account for schools with multiple responses (Mathers, 2021). In the current study, with its focus on descriptive examination of teachers’ knowledge, only complete responses (n = 97) were used and no adjustments made. Since data were collected post-intervention, all analyses are conducted for the control group alone (n = 54, complete n = 52) as well as for the full sample, to provide information about a population unaffected by intervention. Data from all three OLP videos were used, but analyses were also conducted using only Videos 1 and 2 (i.e. those included in the final OLP measure) as a robustness check.

For analyses exploring relationships between teacher knowledge and classroom quality, the sample was restricted to teachers whose classes were observed (n = 55, complete n = 51), including both intervention and control groups to provide an adequate sample. OLP data for teachers in the highest two quality quintiles (n = 19, missing = 3) and lowest two quintiles (n = 22, missing = 0) were compared, and taken to reflect the knowledge of expert and non-expert teachers, respectively.

Analyses were conducted using SPSS V.27 (IBM Corp, 2020) and, given the modest sample, are mainly descriptive. The modest sample also means that findings must be treated as exploratory.

Results

Teachers generated a mean of 19.1 responses across the three videos: slightly over six responses per video on average (of a maximum eight per video). More detailed analysis is presented below, with data for the control group (i.e. a population unaffected by language-specific professional development) shown first, followed by the full sample, unless otherwise specified.

Perceiving: Which Language-Supporting Strategies did Teachers Know?

On average, teachers identified just less than twenty valid strategies across the three videos, with rates similar for the full sample and control group (Table 3). These totals may not reflect unique strategies, since the same strategy could be identified in more than one video.

Table 1 shows the proportion of teachers identifying each individual strategy. Overall, teachers displayed highest awareness of strategies to model language and to facilitate communication and conversation, with assignment rates ≥96%. The most frequently identified individual strategies (>60% of teachers) were: modelling rich vocabulary (1); using descriptive, information or narrative language in concrete contexts (5); providing concrete clues to meaning (8); and verbal strategies to invite communication (11). Illustrative responses are shown in the Supplementary Material. All four strategies had been rated by external experts as being important (≥4/5) and well represented in at least one video (≥4/5). One further point is worth noting regarding verbal strategies to invite communication (11). The majority of responses coded to this category (90.4%/80.4%) related to the use of questioning, indicating a high awareness of this technique. However, the higher-level strategy of open questions (21) was identified by fewer than 40% of teachers, despite being rated by external experts as being both important and present in the videos.
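A reporting rate of the kind shown in Table 1 can be read as the percentage of teachers who identified a strategy at least once. The sketch below computes that quantity from coded responses; it assumes (as a hypothetical simplification) that a teacher counts as reporting a strategy if it was credited in any of the videos, and the nested dictionary layout and function name are illustrative rather than taken from the study.

```python
from typing import Dict, List


def reporting_rates(
    coded: Dict[str, Dict[int, List[int]]], n_strategies: int = 30
) -> Dict[int, float]:
    """Percentage of teachers crediting each OLP strategy in at least one video.

    coded maps a teacher ID to {video number: [strategy numbers credited]}.
    Assumption: repeats across videos count once per teacher.
    """
    n_teachers = len(coded)
    rates = {}
    for strategy in range(1, n_strategies + 1):
        count = sum(
            1
            for videos in coded.values()
            if any(strategy in credited for credited in videos.values())
        )
        rates[strategy] = 100.0 * count / n_teachers
    return rates


# Illustrative usage with two made-up teachers.
made_up = {"A": {1: [1, 11], 2: [21]}, "B": {1: [1], 2: [5]}}
rates = reporting_rates(made_up)
print(rates[1], rates[11], rates[2])  # 100.0 50.0 0.0
```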

Strategies under “Relationships and the child” were identified least often, with reporting rates ≤25% for all except Strategy 27. Reporting rates tended to be higher for the full sample than the control group, suggesting the intervention may have raised awareness of relational strategies.

In all, twelve of the 30 strategies were reported by ≤ 25% of teachers. In some cases, this accurately reflected low strategy presence in the videos. However, six of the twelve strategies were considered by the external expert panel to be well-reflected in at least one video (≥4/5) and important for language development (≥4/5). These include: emphasising, repeating or reinforcing language (4); engaging in conversation with children (9); prompting children to use new vocabulary (12); supporting mutual understanding/adapting language to the child’s level (15); supporting children to attend and participate (16); and positive affect and communication (22).

Perceiving: Were There Differences Between What Experts and Non-experts Noticed?

Table 1 compares reporting rates for expert teachers (n = 19) and non-experts (n = 22) based on their Oral Language Quality Score. Chi-squared tests were used to assess differences between groups. Given the challenges of detecting statistically significant results in a small sample, findings significant at the 10% level (p ≤ 0.10) are reported as well as those significant at the 5% level.
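For a single strategy, the expert/non-expert comparison amounts to a 2 × 2 table (group by reported/not reported). The sketch below computes a Pearson chi-squared statistic without continuity correction, and its p-value for one degree of freedom using the identity P(χ² > x) = erfc(√(x/2)). It is an illustration, not a reproduction of the SPSS output: the counts are invented, and the text does not state whether a continuity correction was applied. With expected cell counts below about 5, Fisher’s exact test is often preferred.

```python
import math
from typing import Tuple


def chi_squared_2x2(a: int, b: int, c: int, d: int) -> Tuple[float, float]:
    """Pearson chi-squared (no continuity correction) and p-value, df = 1.

    Table layout:            reported   not reported
        expert                   a            b
        non-expert               c            d
    Assumes no row or column total is zero.
    """
    n = a + b + c + d
    observed = ((a, b), (c, d))
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # Survival function of the chi-squared distribution with 1 degree of freedom.
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Illustrative usage: invented counts for 19 experts and 22 non-experts.
chi2, p = chi_squared_2x2(10, 9, 5, 17)
print(round(chi2, 2), round(p, 3))
```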

Expert teachers reported 66.8 valid strategies on average, compared with a mean of 59.2 for teachers leading the lowest quality classrooms. In the “Modelling Language” category, experts were more likely to identify modelling diverse, rich or specific grammar (2) (χ² = 4.01, p=.045) but less likely to report emphasising, repeating or reinforcing language (4) (χ² = 2.70, p=.10).

Expert teachers were also more likely than non-experts to identify promoting children’s thinking (18) (χ² = 2.76, p=.097). Although the differences for other strategies were not statistically significant, reporting rates across the “Higher-order Language and Thinking” category tended to be higher for experts than non-experts, with the exception of Strategy 20. Finally, experts were more likely than non-experts to identify positive affect or communication (22) (χ² = 3.87, p=.049) and, although no other differences were statistically significant, reporting rates for all strategies under “Relationships and the Child” were higher in the expert group.

When the analysis was rerun based on the mean number of strategies reported within each code rather than the proportion of teachers reporting each code, a difference between experts and non-experts was also identified for linguistic expansion or recasting of children’s language (3), with experts reporting the strategy more often (non-experts: 0.68; experts: 0.95; χ² = 11.03, p=.026).

Lexicon: Which Words Did Teachers Know and Use?

Tables 3 and 6 present data on teachers’ use of professional vocabulary to describe the interactions they observed. Use of specialist vocabulary was low overall. Although 59.6% of teachers in the control group used at least one professional term, they used only 1.04 terms on average across a mean of 19.08 responses (full sample: 1.39 terms across 19.07 responses). The most commonly used terms related to open and closed questioning (control group/full sample: 30.8%/32.0%) and descriptive commentary (21.2%/28.9%). Reporting rates for the remaining vocabulary terms were low.

To some extent, teachers’ use of professional vocabulary accurately reflects the content of the videos and the strategies teachers reported in response to them. For example, open questions and commentary were both prominent in the videos and were identified by a third of teachers or more, while word definitions were less well reflected and less frequently reported. However, the findings also reveal widespread use of informal language to describe strategies which were present in the clips. For example, 48.5% of all teachers reported seeing linguistic expansion or recasting in the video interactions, but the majority used informal descriptions such as “repeating sentences correctly or in more detail when the child has finished”. Only 5.2% used a professional term such as “recasting”. Within Strategies 11 and 12, thirteen teachers explicitly reported observing a completion prompt to invite communication or use of new vocabulary. Of these, twelve used informal descriptions such as “leaving time for child to fill in the blanks” or “gaps for the child to fill in the word”, and only one used the specific term “completion prompt”.

The OLP validation study had already identified naming as a predictor of classroom quality; that is, teachers who used more professional vocabulary led classrooms which offered higher quality support for language development than teachers who used fewer professional terms. Descriptive examination of the individual terms used by teachers leading the highest and lowest quality classrooms (Table 6) suggests this pattern applied across the board. Although absolute numbers are small, the proportion of teachers using each term within their responses was higher among experts than non-experts for almost all vocabulary terms.

Analysis: What Was the Nature of Teachers’ Interpretations?

On average, teachers offered approximately two interpretations (Table 3) across an average of 19 responses, with rates marginally lower in the control group than in the full sample. Slightly under half provided no interpretations at all. Among teachers who provided at least one interpretation, the mean number reported was between three and four (control group = 3.1, full sample = 3.9).
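
The two averages in the paragraph above are linked by the law of total expectation. Teachers who offered no interpretations contribute zero, so the overall mean equals the conditional mean scaled by the proportion π of teachers offering at least one interpretation. The worked illustration below assumes, for simplicity, that π is about one half. The text says only that slightly under half offered none, so 0.5 is an approximation.

```latex
\bar{x}_{\text{all}}
  = \pi \,\bar{x}_{\text{any}} + (1 - \pi) \cdot 0
  = \pi \,\bar{x}_{\text{any}}
  \approx 0.5 \times 3.9
  \approx 2.0
```

This is in line with the figure of approximately two interpretations per teacher.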

In all, teachers in the sample offered 199 interpretations of the OLP videos. Table 1 shows the absolute number of interpretations coded to each pedagogical strategy and the “interpretation rate” for each (ie, the proportion of identified strategies that were interpreted).² Given the small number of interpretations within each pedagogical code, it was not considered appropriate to present data for the control group alone.

All six broad categories (Modelling Language, Word Meanings, etc) were interpreted to some degree. Interpretation rates were highest for “Word Meanings”, particularly for concrete clues to meaning (8) (37%). Interpretations typically referenced the use of gestures, for example “using hand gestures to clarify the meaning of words”. Other strategies with relatively frequent rates of interpretation (40–50%) include prompting children to use new vocabulary (12), supporting mutual understanding (15), supporting children to attend/participate (16) and facilitating peer communication (25). Illustrative responses are shown in the Supplementary Material. To some degree, these trends are a product of the coding system, whereby responses are coded to certain categories only if enough information is provided to support assignment. For example, the response “questioning” would be coded to verbal strategies to invite communication (11), whereas “used missing word questions to encourage vocabulary?” would be coded to prompting children to use new vocabulary (12) because of the additional detail provided.

More interesting, perhaps, are the strategies for which few interpretations were made. For example, 257 responses were coded to verbal strategies to invite communication (11), and the majority of these (205) referenced the use of questions. Yet only 16 interpretations were offered across them (6%). Interpretation rates were similarly low (5%) for use of open questions (21). As noted, the interpretation rate may have been somewhat reduced because questions with an explicitly stated pedagogical intention were coded elsewhere. Nonetheless, these findings indicate low levels of pedagogical interpretation in relation to the use of questioning techniques. Low interpretation rates (0–7%) were also seen for strategies relating to adult-child relationships (Strategies 22, 23, 24 and 27), responsive conversation techniques and non-verbal communication (Strategies 10 and 13), and the Higher-order Language and Thinking category (Strategies 19, 20 and 21).
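
The interpretation rate is simply the number of interpretations attached to a strategy divided by the number of times that strategy was identified. The sketch below applies this to the Strategy 11 counts given in the text. The helper name `interpretation_rate` is hypothetical. The denominator is assumed to be the count of responses coded to the strategy, as the paragraph above describes.

```python
def interpretation_rate(interpreted: int, identified: int) -> float:
    """Proportion of identified strategy instances that carried an interpretation."""
    if identified <= 0:
        raise ValueError("identified must be a positive count")
    return interpreted / identified


# Strategy 11 (verbal strategies to invite communication), counts from the text
identified_11 = 257       # responses coded to Strategy 11
question_responses = 205  # of which referenced the use of questions
interpreted_11 = 16       # interpretations attached to those responses

print(f"interpretation rate: {interpretation_rate(interpreted_11, identified_11):.1%}")
print(f"share referencing questions: {question_responses / identified_11:.1%}")
```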

Finally, a supplementary analysis considered the types of interpretation made. Responses were coded according to whether they highlighted a potential pedagogical intention, an inferred effect on the child, or a possible alternative approach or pedagogical decision, and a response could be coded to more than one category. By far the most common were inferred pedagogical intentions (93%, n = 185), for example “using hand gestures to clarify the meaning of words”. Only a small proportion explicitly inferred an effect on the child (5%, n = 10; eg, “Teacher models how to use narrative skills to describe what the child is doing. This is copied by the child and she is able to narrate what she is doing and the effect it was having on the speed of the car”) or a possible pedagogical decision (2.5%, n = 5; eg, “using correct mathematical vocabulary, cuboid not brick”).
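
Because a single interpretation could be coded to more than one type, the shares above are each computed against the 199 interpretations and need not sum to 100%. The sketch below reproduces the reported percentages from the counts in the text and makes the multiple-coding point explicit. The variable names are illustrative only.

```python
TOTAL_INTERPRETATIONS = 199

# Counts per interpretation type, as reported in the text. A response could be
# coded to more than one type, so the counts may sum to more than the total.
type_counts = {
    "pedagogical intention": 185,
    "effect on the child": 10,
    "pedagogical decision": 5,
}

shares = {kind: n / TOTAL_INTERPRETATIONS for kind, n in type_counts.items()}
for kind, share in shares.items():
    print(f"{kind}: {share:.1%}")

# The codes outnumber the interpretations, so at least one interpretation
# must have been coded to more than one type.
extra_codes = sum(type_counts.values()) - TOTAL_INTERPRETATIONS
print("codes in excess of interpretations:", extra_codes)
```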

The validation study findings (Mathers, 2021) had already shown that teachers who provided interpretations tended to lead classrooms offering higher quality language-supporting practice. In line with this, a greater proportion of teachers leading classrooms in the highest two quality quintiles reported at least one interpretation (63.2%), compared with those leading the lowest quality classrooms (50.0%). Given the small number of teachers providing interpretations for each pedagogical strategy, it was not considered appropriate to compare expert and non-expert teachers at any greater level of detail.

Discussion

The OLP tool captures teachers’ professional vision as a means of eliciting the dynamic knowledge they can access, activate and use in classroom situations to support real-time decision-making (Kersting et al., 2012). It is the first pre-school video measure to focus on knowledge of oral language pedagogy and to consider the role of higher-order knowledge for pre-school teachers. The OLP validation study (Mathers, 2021) demonstrated the importance of pedagogical knowledge, with higher scores (ie, greater knowledge) predicting the quality of classroom practice.

The current study conducts additional analysis of the OLP validation study dataset (Mathers, 2021), examining responses in greater depth to generate guidance for practice and teacher professional development. It considers which language-supporting strategies teachers noticed (perceiving), which professional vocabulary terms they used (naming) and the nature of the interpretations they made (interpreting), and examines differences between expert and non-expert teachers. This section presents conclusions, interpretations and implications. Although the small sample means findings must be treated with caution, they nonetheless provide valuable information to guide workforce development and future research.

Knowledge of Language-Supporting Strategies

Teachers displayed high awareness of many important strategies, particularly those relating to language modelling and the facilitation of communication and conversation. The ability to perceive these strategies in videos is theorised to reflect teachers’ procedural knowledge of them (ie, the existence of scripts which can be recalled to support enactment in real classroom interactions). Given the open-ended nature of the task, the identification of these strategies as salient (over and above others present in the videos) suggests two further things. First, it indicates that teachers considered these strategies important for language learning. Second, it suggests that teachers’ codified scripts for these strategies were “close to the surface” and accessible for activation, potentially because they had been deeply codified through repeated cycles of classroom application and observation. Thus, what teachers notice may reflect not only what they know, but also what they are likely to do in specific classroom situations. This suggests pre-school teachers both value, and hold accessible and usable knowledge of, the modelling of vocabulary, description and narration, verbal prompts to communicate, and the use of concrete clues to meaning (eg, gestures). However, findings also indicate some potential gaps in pedagogical knowledge, with six strategies (4, 9, 12, 15, 16, 22) displaying low reporting rates despite high expert “importance” and “presence” ratings.

Comparison of expert and non-expert teachers suggested that teachers leading the most language-supporting classrooms were more cognisant of the need to model grammar (2). Reporting rates for more general language modelling (6) and modelling vocabulary (1) were similar across groups. Experts were also more aware of the need to target children’s thinking (18) alongside their language. These findings may reflect the more specialist linguistic knowledge of expert teachers. This interpretation is consistent with a non-significant trend in the “Communication and Conversation” category. Reporting rates for verbal strategies to invite communication (11) were similar across groups, but expert teachers were somewhat more likely (15.8% vs 4.5%) to identify the specific strategy of prompting children to use new vocabulary (12). The modest sample means these expert comparisons rest on differences of only a few teachers, but they nonetheless provide insights to guide further research.

Expert teachers were also more conscious of the role played by positive affect or communication (22) in eliciting oral language. They also showed a trend towards higher reporting across the strategies representing relational and responsive pedagogy in “Relationships and the Child” (23, 24, 26, 27) and “Facilitating Communication and Conversation” (10, 13). There is strong evidence that responsive communication facilitation (eg, being warm and receptive to encourage interaction) is beneficial. In fact, one recent study concluded that only communication facilitation predicted child vocabulary growth when considered alongside the “data-providing” features of teachers’ talk such as language modelling (Justice et al., 2018). Direct evidence of links between teacher emotional support and oral language growth is more equivocal. However, there is strong evidence from research on parent-child interactions that positive relationships matter for language development. These findings point to a dual focus for professional development on relational pedagogy alongside specialist linguistic knowledge, particularly given the low reporting rates for some of these strategies (eg, 12, 22).

A final point of interest is the supplementary finding that teachers in the lowest-quality classrooms placed more emphasis on linguistic expansion and recasting. This is puzzling, given that experts were more likely than non-experts to report the modelling of grammar, a closely related strategy. An examination of responses suggests a possible explanation: many referred, explicitly or implicitly, to “correcting” children’s language. Examples include “Feedback on the child’s language - rephrasing their words in a more grammatically correct way” and “The child said upper and the practitioner then modelled the word steeper by repeating the sentence”. Some of the differences between expert and non-expert teachers may stem from fundamental differences in their beliefs about pedagogy and children. For example, experts may be more likely to focus on the teacher’s role in providing a rich language model for children, while non-experts may focus on children’s “errors” and the need to correct them. This is speculation and cannot be confirmed from the data available. However, it is worthy of exploration in future studies, and may reflect the relevance of teachers’ conceptions about learning and teaching and the close relationship between knowledge and beliefs (Hoy, Davis and Pape, 2006). It is a pertinent reminder of the complexity of professional expertise and the multi-faceted influences on teachers’ practices.

Use of Professional Vocabulary

The use of professional vocabulary (naming) to describe the strategies reported is assumed to reflect explicit knowledge of the relevant concepts, and spontaneous access to this knowledge in context. Findings indicate some use of specialist terms among participating teachers, for example “open questions” or terms relating to descriptive commentary. In general, however, use of specialist terminology was low compared with use of informal descriptions. In fact, very few of the terms used by teachers were identified by experts as representing “specialist professional vocabulary” at all: only eight concepts were represented in the coding scheme (Table 6). This may reflect a generally low level of formal oral-language-related professional knowledge in the early childhood workforce, and the absence of a specialist professional lexicon.

Building a professional lexicon is important, since learning the language of a discipline is part of learning the discipline itself. In the OLP validation study, use of professional vocabulary (naming) was the strongest predictor of classroom quality. Having the vocabulary with which to name a concept may help teachers to sharpen their professional reflection and discussion upon that concept, supporting deeper understanding and intentional teaching. It may also aid them in pedagogical leadership; for example, in articulating knowledge to others and explaining why certain practices are important. However, simply improving the vocabulary of pre-school teachers will not ensure improved knowledge and practice. Use of professional vocabulary likely predicts classroom quality because it is a proxy for prior language-specific professional development, and reflects wider formal knowledge. To become expert language pedagogues, pre-school teachers require learning opportunities which support their explicit understanding and application of pedagogical concepts relating to oral language development.

Interpretations

Despite not being directly prompted, more than half the teachers offered an interpretation of the video interactions. These responses were not as rich as in studies which directly elicit pedagogical analysis (eg, Kersting et al., 2012). Even so, they provide evidence that some teachers were spontaneously connecting the strategies they noticed in the videos with a potential pedagogical intention or child outcome, or inferring possible pedagogical decision-making. This may be due to their highly interconnected cognitive schemata (Blömeke et al., 2015; Sabers et al., 1991; Seidel and Stürmer, 2014; van Es and Sherin, 2002). The analytic expertise of these teachers was “close to the surface” and readily activated, perhaps having become embedded through the frequent exercising of their analytic muscles in the classroom. We can infer that they were, therefore, also the most likely to reason during real classroom interactions, in support of intentional teaching practice. The relevance of interpretation as an indicator of teaching expertise was confirmed by the predictive validity of the interpretation score in the OLP validation study (Mathers, 2021). In the current study, closer examination of these interpretations provides valuable information to guide practice and professional development.

The first significant finding is that most interpretations connected observed teaching behaviours to a broader pedagogical purpose without explicitly referring to the child’s behaviour or response, indicating a focus on the teacher rather than the child. In support of this conclusion, a softer analysis of interpretations inferring a pedagogical intention showed that only a minority referenced the development of a specific child outcome such as vocabulary, listening, comprehension or narrative skills, confidence or thinking (eg, “questioning to extend children’s thinking, vocabulary and explanations”). More common was the linking of the strategy identified to a broader pedagogical intention without explicit reference to child outcomes (eg, “comments used to start and continue the conversation”). This would seem to contradict recent work showing that pre-school teachers draw on multiple sources to support their pedagogical reasoning during language and literacy activities (Dwyer and Schachter, 2020). These sources include knowledge of child development and learning, knowledge about specific children, and teachers’ learning goals for those children. However, it is unsurprising that teachers in the current study focused on adult pedagogy rather than child learning, since they were prompted to focus on teaching strategies and did not know the children. In Dwyer and Schachter’s work, by contrast, teachers were reflecting on their own practice. Nonetheless, the observation is worthy of further research, as it may indicate a need to support teachers in explicitly connecting pedagogy to child learning.

The findings also indicate some specific targets for professional development. Notably, despite high awareness among teachers of questioning as a technique, there were low levels of pedagogical reasoning in relation to this strategy, perhaps indicating an “unquestioning use of questioning”. This suggests pre-school teachers would benefit from support in using questions in a more nuanced and intentional manner to fulfil a specific pedagogical purpose.

The same was also true for some of the “softer” responsive and relational techniques, and for several strategies under “Higher-order Language and Thinking”. The lack of interpretation of such strategies suggests teachers may not fully understand the value of cognitively challenging techniques such as inferential language, open questions and storytelling in developing higher-order language and thought. They may also not fully understand the role of responsive and relational pedagogy in encouraging children to communicate. Much may be gained from addressing these aspects through professional development. More specifically, professional development could explicitly nurture teachers’ ability to link knowledge of relevant pedagogical techniques with knowledge of child development, promoting their intentional use in fostering oral language. The OLP validation study (Mathers, 2021) showed that such “classroom reasoning” does not develop naturally through experience, nor necessarily through professional development which does not have an explicit focus on doing so. Given that interpretation predicted the quality of classroom practice, this suggests teachers need access to learning opportunities which explicitly nurture their classroom reasoning. These might include, for example, structured opportunities to reflect on practice (one’s own, that of colleagues, or video examples) in order to identify and discuss child learning needs, strategies which might support learning, and the success (or otherwise) of strategies employed. Such an approach already has a long history, reflected in the work of van Es, Sherin and others (eg, van Es and Sherin, 2010). The current study offers valuable guidance on the potential focus of such analytical professional development (eg, questioning, responsive practice, higher-order language and thought).

Limitations

This study faced a number of limitations. Both the OLP survey response rate and the resulting sample size were modest, which limits the generalisability of results and opportunities for robust sub-group analysis. The OLP itself cannot be said to reflect all domains of pedagogical knowledge, or assess knowledge across a representative range of early childhood contexts. It was based on responses to a small number of short videos, and focuses on knowledge of teaching strategies while excluding other facets (eg, knowledge of child development).

Because naming and interpreting were not directly prompted, the task allowed examination of teachers’ spontaneous use of pedagogical knowledge in response to real classroom interactions. However, it also meant that reporting rates were relatively low, and interpretations less rich than in studies which explicitly prompt teachers to analyse video interactions. Gathering qualitative data from expert teachers alongside quantitative responses would have provided richer material for a deeper examination of reasoning.

Finally, the quality of language support in classrooms was relatively low overall. Scores were below 5 on the 7-point ERS scale, the benchmark for “good quality” (Table 5). Thus, even the “expert teachers” may not have reflected the levels of expertise one might wish for when studying expert knowledge and practice. Future studies should pay close attention to sample selection to ensure learning can be drawn from studying teachers who lead the very highest quality language-supporting classrooms.

Conclusion

The OLP captures a “slice” of pre-school teachers’ pedagogical knowledge, eliciting the strategies which they consider most salient for child language in selected video interactions. While it cannot claim to assess all aspects of knowledge needed for classroom practice, it offers important insight into the nature of expert knowledge in relation to oral language pedagogy. Specifically, it allows assessment of the knowledge which is “close to the surface” and accessible for classroom application, and of where there may be gaps in procedural knowledge. This study confirms that pre-school teachers have rich informal knowledge of language-supporting strategies. However, they need learning opportunities which support them to develop greater explicit knowledge and help them connect knowledge of child development and pedagogy (knowing why as well as how) in order to engage in intentional practice. The study offers new insights to guide the content of such professional development, suggesting a tri-fold focus on linguistic input (both grammar and vocabulary), relational pedagogy and cognitively challenging interactions. That these three facets correspond to the features of adult language input identified as benefiting children’s oral language growth (Rowe and Snow, 2019) lends credence to the findings. Finally, this study reminds us that professional development must explicitly support teachers in developing procedural as well as theoretical knowledge to support their live decision-making during classroom interactions.

Data Availability Statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by Education Research Ethics Committee of the University of Oxford (for the RCT) and the UCL Institute of Education (for the current study). The patients/participants provided their written informed consent to participate in this study.

Author Contributions

SM conceived of the presented idea, developed the theory and OLP tool, conducted the analysis and wrote the manuscript. IS provided critical feedback and helped shape the research, its interpretation and the manuscript.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/feduc.2021.748347/full#supplementary-material

Footnotes

1 In comparison, the naming score in the OLP validation study reflected the number of responses credited as including a valid professional term.

2 The absolute number of interpretations (231) is somewhat higher than 199 because the data reflect the number of valid pedagogical strategies which had an interpretation attached, rather than the number of responses which had a pedagogical code attached. Teachers’ individual responses could be awarded more than one pedagogical code.

References

Baumert, J., Kunter, M., Blum, W., Brunner, M., Voss, T., Jordan, A., et al. (2010). Teachers' Mathematical Knowledge, Cognitive Activation in the Classroom, and Student Progress. Am. Educ. Res. J. 47, 133–180. doi:10.3102/0002831209345157

Berliner, D. C. (1988). “The Development of Expertise in Pedagogy,” in Charles W. Hunt Memorial Lecture Presented at the Annual Meeting of the American Association of Colleges for Teacher Education (New Orleans, LA), February 1988.

Blömeke, S., Gustafsson, J.-E., and Shavelson, R. J. (2015). Beyond Dichotomies. Z. für Psychol. 223 (1), 3–13. doi:10.1027/2151-2604/a000194

Bruckmaier, G., Krauss, S., Blum, W., and Leiss, D. (2016). Measuring Mathematics Teachers' Professional Competence by Using Video Clips (COACTIV Video). ZDM Math. Edu. 48, 111–124. doi:10.1007/s11858-016-0772-1

Burchinal, M., Vandergrift, N., Pianta, R., and Mashburn, A. (2010). Threshold Analysis of Association between Child Care Quality and Child Outcomes for Low-Income Children in Pre-kindergarten Programs. Early Child. Res. Q. 25, 166–176. doi:10.1016/j.ecresq.2009.10.004

Clarke, D., Mesiti, C., Cao, Y., and Novotna, J. (2017). “The Lexicon Project: Examining the Consequences for International Comparative Research of Pedagogical Naming Systems from Different Cultures,” in Proceedings of the Tenth Congress of the European Society for Research in Mathematics Education. Editors T. Dooley, and G. Gueudet (Dublin, Ireland: DCU Institute of Education and ERME). CERME10, February 1 – 5, 2017.

Cordingley, P., Higgins, S., Greany, T., Buckler, N., Coles-Jordan, D., Crisp, B., et al. (2015). Developing Great Teaching: Lessons from the International Reviews into Effective Professional Development. London: Teacher Development Trust.

Curby, T. W., Brock, L. L., and Hamre, B. K. (2013). Teachers' Emotional Support Consistency Predicts Children's Achievement Gains and Social Skills. Early Edu. Dev. 24 (3), 292–309. doi:10.1080/10409289.2012.665760

Department for Communities and Local Government (2011). English Indices of Deprivation 2010. Available at: http://www.communities.gov.uk/documents/statistics/pdf/1871208.pdf (Accessed March 2019).

Duncan, G. J., Dowsett, C. J., Claessens, A., Magnuson, K., Huston, A. C., Klebanov, P., et al. (2007). School Readiness and Later Achievement. Dev. Psychol. 43, 1428–1446. doi:10.1037/0012-1649.43.6.1428

Dwyer, J., and Schachter, R. E. (2020). Going beyond Defining: Preschool Educators' Use of Knowledge in Their Pedagogical Reasoning about Vocabulary Instruction. Dyslexia 26, 173–199. doi:10.1002/dys.1637

Eraut, M. (2004). Informal Learning in the Workplace. Stud. Cont. Edu. 26 (2), 247–273. doi:10.1080/158037042000225245

Gamoran Sherin, M., and van Es, E. A. (2009). Effects of Video Club Participation on Teachers' Professional Vision. J. Teach. Edu. 60, 20–37. doi:10.1177/0022487108328155

Glaser, R., and Chi, M. T. H. (2014). “Overview,” in The Nature of Expertise. Editors M. T. H. Chi, R. Glaser, and M. J. Farr (New York/London: Psychology Press).

Goodwin, C. (1994). Professional Vision. Am. Anthropologist 96 (3), 606–633. doi:10.1525/aa.1994.96.3.02a00100

Hamre, B. K., Pianta, R. C., Burchinal, M., Field, S., LoCasale-Crouch, J., Downer, J. T., et al. (2012). A Course on Effective Teacher-Child Interactions. Am. Educ. Res. J. 49 (1), 88–123. doi:10.3102/0002831211434596

Harms, T., Clifford, R. M., and Cryer, D. (2014). Early Childhood Environment Rating Scale. Third Edition. New York: Teachers College Press.

Hindman, A. H., Wasik, B. A., and Snell, E. K. (2016). Closing the 30 Million Word Gap: Next Steps in Designing Research to Inform Practice. Child. Dev. Perspect. 10 (2), 134–139. doi:10.1111/cdep.12177

Howard, S. J., Siraj, I., Melhuish, E., Kingston, D., Neilsen-Hewett, C., de Rosnay, M., et al. (2018). Measuring Interactional Quality in Pre-School Settings: Introduction and Validation of the SSTEW Scale. Early Child Development and Care, 1–14.

Hoy, A. W., Davis, H., and Pape, S. J. (2006). “Teacher Knowledge and Beliefs,” in Handbook of Educational Psychology. Editors P. A. Alexander, and P. H. Winne (Lawrence Erlbaum Associates Publishers), 715–737.

Jamil, F. M., Sabol, T. J., Hamre, B. K., and Pianta, R. C. (2015). Assessing Teachers' Skills in Detecting and Identifying Effective Interactions in the Classroom. Elem. Sch. J. 115 (3), 407–432. doi:10.1086/680353

Kersting, N. B., Givvin, K. B., Thompson, B. J., Santagata, R., and Stigler, J. W. (2012). Measuring Usable Knowledge. Am. Educ. Res. J. 49 (3), 568–589. doi:10.3102/0002831212437853

Kersting, N., Givvin, K. B., Sotelo, F. L., and Stigler, J. W. (2010). Teachers’ Analyses of Classroom Video Predict Student Learning of Mathematics: Further Explorations of a Novel Measure of Teacher Knowledge. J. Teach. Educ. 61 (1–2), 172–181. doi:10.1177/0022487109347875

Kind, V. (2009). Pedagogical Content Knowledge in Science Education: Perspectives and Potential for Progress. Stud. Sci. Edu. 45, 169–204. doi:10.1080/03057260903142285

Knievel, I., Lindmeier, A. M., and Heinze, A. (2015). Beyond Knowledge: Measuring Primary Teachers' Subject-specific Competences in and for Teaching Mathematics with Items Based on Video Vignettes. Int. J. Sci. Math. Educ. 13, 309–329. doi:10.1007/s10763-014-9608-z

Leyva, D., Weiland, C., Barata, M., Yoshikawa, H., Snow, C., Treviño, E., et al. (2015). Teacher-child Interactions in Chile and Their Associations with Prekindergarten Outcomes. Child. Dev. 86 (3), 781–799. doi:10.1111/cdev.12342

L. W. Anderson, and D. R. Krathwohl (Editors) (2001). A Taxonomy for Learning, Teaching, and Assessing: A Revision of Bloom’s Taxonomy of Educational Objectives (New York: Longman).

Markussen-Brown, J., Juhl, C. B., Piasta, S. B., Bleses, D., Højen, A., and Justice, L. M. (2017). The Effects of Language- and Literacy-Focused Professional Development on Early Educators and Children: A Best-Evidence Meta-Analysis. Early Child. Res. Q. 38, 97–115. doi:10.1016/j.ecresq.2016.07.002

Mathers, S. (2020). Observing Language Pedagogy (OLP): Developing and Piloting a Contextualised Video-based Measure of Early Childhood Teachers’ Pedagogical Language Knowledge. Doctoral dissertation. Available at: https://discovery.ucl.ac.uk/id/eprint/10094735/.

Mathers, S. (2021). Using Video to Assess Preschool Teachers’ Pedagogical Knowledge: Explicit and Higher-Order Knowledge Predicts Quality. Early Child. Res. Q. 55, 64–78.

Mathers, S., and Smees, R. (2014). Quality and Inequality: Do Three and Four Year Olds in Deprived Areas Experience Lower Quality Early Years Provision? London: Nuffield Foundation.

Morgan, P. L., Farkas, G., Hillemeier, M. M., Hammer, C. S., and Maczuga, S. (2015). 24-Month-old Children with Larger Oral Vocabularies Display Greater Academic and Behavioral Functioning at Kindergarten Entry. Child. Dev. 86, 1351–1370. doi:10.1111/cdev.12398

Peterson, C., Jesso, B., and McCabe, A. (1999). Encouraging Narratives in Preschoolers: An Intervention Study. J. Child. Lang. 26, 49–67. doi:10.1017/s0305000998003651

Phillips, B. M., Oliver, F., Tabulda, G., Wood, C., and Funari, C. (2020). Preschool Teachers' Language and Vocabulary Knowledge: Development and Predictive Associations for a New Measure. Dyslexia 26, 153–172. doi:10.1002/dys.1644

Pianta, R. C., La Paro, K. M., and Hamre, B. (2008). Classroom Assessment Scoring System. Baltimore: Paul H. Brookes Publishing Company.

Piasta, S., Connor, C. M., Fishman, B., and Morrison, F. (2009). Teachers' Knowledge of Literacy Concepts, Classroom Practices, and Student Reading Growth. Scientific Stud. Reading 13 (3), 224–248. doi:10.1080/10888430902851364

Putnam, R. T., and Borko, H. (2000). What Do New Views of Knowledge and Thinking Have to Say about Research on Teacher Learning. Educ. Res. 29 (1), 4–15. doi:10.3102/0013189x029001004

Roulstone, S., Law, J., Rush, R., Clegg, J., and Peters, T. (2011). Investigating the Role of Language in Children's Early Educational Outcomes. Research Report DFE-RR134. London: Department for Education.

Rowe, M. L., Silverman, R. D., and Mullan, B. E. (2013). The Role of Pictures and Gestures as Nonverbal Aids in Preschoolers' Word Learning in a Novel Language. Contemp. Educ. Psychol. 38, 109–117. doi:10.1016/j.cedpsych.2012.12.001

Sabers, D. S., Cushing, K. S., and Berliner, D. C. (1991). Differences Among Teachers in a Task Characterized by Simultaneity, Multidimensionality, and Immediacy. Am. Educ. Res. J. 28 (1), 63–88. doi:10.2307/1162879

Schachter, R. E. (2017). Early Childhood Teachers' Pedagogical Reasoning about How Children Learn during Language and Literacy Instruction. Int. J. Early Child. 49, 95–111. doi:10.1007/s13158-017-0179-3

Schachter, R. E., Spear, C. F., Piasta, S. B., Justice, L. M., and Logan, J. A. R. (2016). Early Childhood Educators' Knowledge, Beliefs, Education, Experiences, and Children's Language- and Literacy-Learning Opportunities: What Is the Connection. Early Child. Res. Q. 36, 281–294. doi:10.1016/j.ecresq.2016.01.008

Schreiber, J. B., Nora, A., Stage, F. K., Barlow, E. A., and King, J. (2006). Reporting Structural Equation Modeling and Confirmatory Factor Analysis Results: A Review. J. Educ. Res. 99 (6), 323–338. doi:10.3200/joer.99.6.323-338

Seidel, T., and Stürmer, K. (2014). Modeling and Measuring the Structure of Professional Vision in Preservice Teachers. Am. Educ. Res. J. 51 (4), 739–771. doi:10.3102/0002831214531321

Sheridan, S. M., Edwards, C. P., Marvin, C. A., and Knoche, L. L. (2009). Professional Development in Early Childhood Programs: Process Issues and Research Needs. Early Educ. Dev. 20, 377–401. doi:10.1080/10409280802582795

Shulman, L. (1987). Knowledge and Teaching: Foundations of the New Reform. Harv. Educ. Rev. 57, 1–23. doi:10.17763/haer.57.1.j463w79r56455411

Shulman, L. S. (1986). Those Who Understand: Knowledge Growth in Teaching. Educ. Res. 15, 4–14. doi:10.3102/0013189x015002004

Siraj, I., Kingston, D., and Melhuish, E. C. (2015). Assessing Quality in Early Childhood Education and Care: Sustained Shared Thinking and Emotional Well-Being (SSTEW) Scale for 2–5-Year-Olds Provision. Stoke-on-Trent: Trentham Books.

Spear, C. F., Piasta, S. B., Yeomans-Maldonado, G., Ottley, J. R., Justice, L. M., and O’Connell, A. A. (2018). Early Childhood General and Special Educators: An Examination of Similarities and Differences in Beliefs, Knowledge, and Practice. J. Teach. Edu. 69 (3), 263–277. doi:10.1177/0022487117751401

Stürmer, K., Seidel, T., and Schäfer, S. (2013). Changes in Professional Vision in the Context of Practice. Gruppendyn Organisationsberat 44 (3), 339–355. doi:10.1007/s11612-013-0216-0

van Es, E. A., and Sherin, M. G. (2002). Learning to Notice: Scaffolding New Teachers' Interpretations of Classroom Interactions. J. Tech. Teach. Educ. 10 (4), 571–596.

Sylva, K., Melhuish, E., Sammons, P., Siraj-Blatchford, I., and Taggart, B. (Editors) (2010). Early Childhood Matters: Evidence from the Effective Pre-school and Primary Education Project. London: Routledge.

Sylva, K., Siraj-Blatchford, I., and Taggart, B. (2003). Assessing Quality in the Early Years: Early Childhood Environment Rating Scales Extension (ECERS-E): Four Curricular Subscales. Stoke-on-Trent, UK and Sterling, USA: Trentham Books.

van Es, E. A., and Sherin, M. G. (2010). The Influence of Video Clubs on Teachers' Thinking and Practice. J. Math. Teach. Educ. 13 (2), 155–176. doi:10.1007/s10857-009-9130-3

van Kleeck, A., Vander Woude, J., and Hammett, L. (2006). Fostering Literal and Inferential Language Skills in Head Start Preschoolers with Language Impairment Using Scripted Book-Sharing Discussions. Am. J. Speech Lang. Pathol. 15 (1), 85–95. doi:10.1044/1058-0360(2006/009)

Waldfogel, J., and Washbrook, E. V. (2010). Low Income and Early Cognitive Development in the UK. A Report for the Sutton Trust. London: Sutton Trust.

Keywords: teacher knowledge, procedural pedagogical knowledge, teacher expertise, professional development (PD), oral language, professional vision, intentional practice, video

Citation: Mathers S and Siraj I (2021) Researching Pre-school Teachers’ Knowledge of Oral Language Pedagogy Using Video. Front. Educ. 6:748347. doi: 10.3389/feduc.2021.748347

Received: 27 July 2021; Accepted: 15 October 2021;

Published: 02 November 2021.

Edited by:

Tara Ratnam, Independent researcher, Mysore, India

Reviewed by:

Rocio Garcia-Carrion, University of Deusto, Spain
Jason DeHart, Appalachian State University, United States

Copyright © 2021 Mathers and Siraj. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Sandra Mathers, sandra.mathers@education.ox.ac.uk
