Abstract

This paper presents a measurement of the electroweak production of two jets in association with a \(Z\gamma \) pair, with the Z boson decaying into two neutrinos. It also presents a search for invisible or partially invisible decays of a Higgs boson with a mass of 125 \(\text {GeV}\) produced through vector-boson fusion with a photon in the final state. These results use data from LHC proton–proton collisions at \(\sqrt{s}\) = 13 \(\text {TeV}\) collected with the ATLAS detector and corresponding to an integrated luminosity of 139 \(\hbox {fb}^{-1}\). The event signature, shared by all benchmark processes considered for the measurements and searches, is characterized by a significant amount of unbalanced transverse momentum and a photon in the final state, in addition to a pair of forward jets. Electroweak \(Z\gamma \) production in association with two jets is observed in this final state with a significance of 5.2 (5.1 expected) standard deviations. The measured fiducial cross-section for this process is \(1.31\pm 0.29\) fb. An observed (expected) upper limit of 0.37 (\(0.34^{+0.15}_{-0.10}\)) at 95% confidence level is set on the branching ratio of a 125 \(\text {GeV}\) Higgs boson to invisible particles, assuming the Standard Model production cross-section. The signature is also interpreted in the context of decays of a Higgs boson into a photon and a dark photon. An observed (expected) 95% CL upper limit on the branching ratio for this decay is set at 0.018 (\(0.017^{+0.007}_{-0.005}\)), assuming the Standard Model production cross-section for a 125 \(\text {GeV}\) Higgs boson.

Similar content being viewed by others

1 Introduction

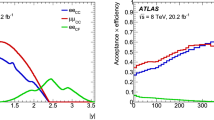

Representative Feynman diagrams for the dominant SM processes contributing to the considered signature: a is an example of the order \(\alpha _{\text {s}} ^2\alpha ^3\) diagrams referred to as “strong”; b, c are examples of the order \(\alpha ^5\) diagrams, which are collectively referred to as “electroweak” (EW)

Studying the self-couplings of the Standard Model (SM) vector bosons, precisely predicted through the SU(2)\(_\text {L}\times \)U(1)\(_\text {Y}\) gauge symmetry, provides a unique opportunity to better understand the electroweak sector of the SM and gain insight into possible anomalies due to new phenomena. Vector-boson scattering (VBS), \(VV'\rightarrow ~VV'\) with \(V,V' = W/Z/\gamma \), is an interesting process to study, being sensitive to both the triple and quartic gauge-boson couplings, which are particularly sensitive to the presence of physics effects beyond the Standard Model (BSM) [1,2,3]. In hadron collisions, such as those produced at the Large Hadron Collider (LHC), VBS events occur whenever two vector bosons, radiated from the initial-state quarks, interact with each other [4], producing a final-state signature characterized by the two vector bosons and a pair of forward hadronic jets in opposite hemispheres. The two vector bosons can similarly annihilate and produce a SM Higgs boson in the so-called vector-boson fusion (VBF) mechanism. Depending on the subsequent decay of the SM Higgs boson, different final-state signatures can be exploited to investigate its properties. Precise knowledge of the various Higgs boson decay branching ratios is a fundamental aspect of understanding whether the 125 \(\text {Ge}\text {V}\) scalar boson behaves according to the SM predictions or whether new physics phenomena modify the Higgs sector.

This paper presents a set of SM measurements and searches for new phenomena in a final-state signature characterized by two forward hadronic jets, a photon, and a significant amount of unbalanced momentum in the plane transverse to the beam direction (\(E_{\text {T}}^{\text {miss}}\)) due to undetected particles. In the SM this can result from \(V\gamma \,+\,\text {jets}\) production, where V is either a \(Z\) boson decaying into an undetected neutrino–antineutrino pair or a \(W\) boson decaying leptonically, where the charged lepton is not reconstructed in the detector. The latter is a background to the measurements and searches in this paper. The \(V\gamma \,+\,\text {jets} \) events are produced in the SM through a combination of ‘strong’ and ‘electroweak’ (EW) contributions: the former are produced through diagrams of order \(\alpha _{\text {s}} ^2\alpha {}^3\) at the Born level as shown in Fig. 1a, where \(\alpha _{\text {s}}\) is the strong coupling constant and \(\alpha \) is the electromagnetic coupling constant, while the latter are produced more rarely through diagrams of order \(\alpha ^5\) at the Born levelFootnote 1 as shown in Fig. 1b, c. The \(Z\gamma \,+\,\text {jets}\) cross-sections have been computed at next-to-leading order (NLO) in \(\alpha _{\text {s}}\) for both the strong [5] and EW [6] production modes. It is not possible to study VBS diagrams, as shown in Fig. 1b, independently of other electroweak processes (e.g. triboson production as shown in Fig. 1c) as only the ensemble is gauge invariant [7]. There is also interference between the SM electroweak and strong processes, which is accounted for in the measurement.

In Run 1 the \(Z\gamma \,+\,\text {jets} \) EW production cross-section has been measured in the dielectron and dimuon final states of the Z boson by the ATLAS and CMS experiments [8, 9] with observed significances of 4.1 and 3.0 standard deviations, respectively. The CMS Collaboration measured EW \(Z(\rightarrow \ell \ell )\gamma \mathrm { jj}\) after observing the process with a significance of 9.4 standard deviations in 137 \(\text {fb}^{-1}\) of 13 \(\text {Te}\text {V}\) proton–proton (\(pp\)) collisions [10].

Profiting from the full 139 \(\text {fb}^{-1}\) dataset collected with the ATLAS detector during Run 2 of the LHC, the analysis presented in this paper reports the observation of EW \(Z(\rightarrow \nu \nu )\gamma \mathrm { jj}\) production at the LHC.

The observation of EW production of \(Z(\rightarrow \nu \nu )\gamma \mathrm { jj}\) lays the groundwork for further investigation of this signature in looking for possible hints of BSM physics, based on interesting and well-motivated benchmark scenarios involving new dark matter (DM) candidate particles or a hidden sector of new particles coupling with the SM Higgs boson. The existence of DM is evident from astrophysical observations [11], although its connection with the SM is still unknown because it has only been observed through gravitational interactions. In this paper, the connection of DM with SM particles is explored by introducing a coupling with the 125 \(\text {Ge}\text {V}\) Higgs boson. A class of BSM scenarios, referred to as Higgs-portal models [12], feature a DM candidate behaving as a singlet under the SM gauge symmetries, with the Higgs boson playing the role of a mediator between the DM candidate and SM particles.

Representative Feynman diagrams corresponding to the dominant BSM signal processes: a the signal due to the invisible Higgs boson decay produced through VBF in association with an emitted photon. The incoming quarks appearing on the left hand side of the diagram are different from each other. b The signal due to the decay of the Higgs boson to a photon–dark-photon pair, produced through VBF

Two main benchmark models are chosen when searching for new phenomena in the considered final-state signature, probing the invisible decay of the Higgs boson or its semi-visible decay into one invisible particle and one photon. The decay of the Higgs boson into invisible particles (\(H\rightarrow \mathrm {inv.}\) ) when produced through the SM-predicted VBF process in association with an emitted photon [13, 14] is probed in this paper for the first time. The Feynman diagram for this signature is shown in Fig. 2a. Compared to a similar signature without the photon, this one benefits from better background rejection and a higher signal reconstruction efficiency; however, it has a lower production cross-section.

No direct constraints on the \(H\rightarrow \mathrm {inv.}\) decays in this experimental signature currently exist. Constraints on the invisible Higgs boson branching ratio (\(\mathcal {B}_{\mathrm {inv}}\)) were set by the ATLAS Collaboration using the full Run 1 dataset at 7 and 8 \(\text {Te}\text {V}\)[15,16,17] and the full Run 2 dataset at 13 \(\text {Te}\text {V}\)[18, 19]. Similar searches were carried out by the CMS Collaboration [20,21,22] using both the Run 1 and Run 2 datasets. The most stringent limits are from the statistical combination of the search results, for which ATLAS reports an observed (expected) upper limit on \(\mathcal {B}_{\mathrm {inv}}\) of 0.26 (0.17) and CMS reports an upper limit of 0.19 (0.15), all at 95% confidence level (CL). In these combinations, the VBF production channel is the single channel with the highest expected sensitivity, for which ATLAS and CMS reported observed (expected) 95% CL limits on \(\mathcal {B}_{\mathrm {inv}}\) of 0.37 (0.28) and 0.33 (0.25), respectively, using 36 \(\text {fb}^{-1}\)of Run 2 data.

In addition to the \(H\rightarrow \mathrm {inv.}\) benchmark, this analysis probes a dark-photon model [23,24,25] which predicts a light or masslessFootnote 2 dark photon (\(\gamma _{\text {d}} \)) coupled with the Higgs boson through a U(1) unbroken dark sector. The Feynman diagram for this signature is shown in Fig. 2b, and BSM extensions to other resonance masses are possible [26]. In this model, a Higgs boson decays into a photon and an invisible dark photon with a branching ratio \(\mathcal {B}(H\rightarrow \gamma \gamma _{\text {d}})\). CMS has probed this benchmark model by considering associated ZH production and VBF Higgs boson production [27, 28], and reported observed (expected) 95% CL upper limits of 0.046 (0.036) and 0.035 (0.028) on \(\mathcal {B}(H\rightarrow \gamma \gamma _{\text {d}})\) in their respective signatures. These two results were combined to yield an observed (expected) 95% CL upper limit of 0.029 (0.021).

The SM processes resulting in the same final-state signature as described before, mainly \(Z(\rightarrow \nu \nu )\gamma \,+\,\text {jets}\) and \(W(\rightarrow \ell \nu )\gamma \,+\,\text {jets}\), represent background contributions in the searches for new phenomena. These processes were simulated, for both the \(Z\gamma \,+\,\text {jets}\) EW cross-section measurement and the searches for new phenomena, through dedicated Monte Carlo samples, detailed in Sect. 4. Minor and reducible background contributions arise due to the misreconstruction of objects in the detector, including hadronic jets reconstructed as photons. These background processes are estimated using simulation and data-driven techniques and are validated using dedicated data samples. The SM \(H\rightarrow Z (\rightarrow \nu \nu )\gamma \) process produces the same signature as the investigated \(H\rightarrow \mathrm {inv.} +\gamma \) signal, but the small contribution of such events satisfying the criteria defined in Sect. 6 is neglected in the searches for new phenomena.

A brief description of the ATLAS detector is provided in Sect. 2. The dataset, simulated samples, and physics object selection are covered in Sects. 3, 4, and 5 , respectively. The analysis strategies and the event selection for the EW \(Z(\rightarrow \nu \nu )\gamma \mathrm { jj} \) measurement and searches based on different signal hypotheses, \(H\rightarrow \mathrm {inv.}\) and \(H\rightarrow \gamma \gamma _{\text {d}}\) , are discussed in Sect. 6. The modelling of the different processes contributing to the considered signature is presented in Sect. 7, and the fit models and extracted results for the different signal hypotheses are described in Sect. 8.

2 ATLAS detector

The ATLAS experiment [29,30,31] at the LHC is a multipurpose particle detector with a forward–backward symmetric cylindrical geometry and near \(4\pi \) coverage in solid angle.Footnote 3 It consists of an inner tracking detector surrounded by a thin superconducting solenoid providing a 2 T axial magnetic field, electromagnetic and hadron calorimeters, and a muon spectrometer. The inner tracking detector (ID) covers the pseudorapidity range \(|\eta | < 2.5\). It consists of silicon pixel, silicon microstrip, and transition radiation tracking detectors. Lead/liquid-argon (LAr) sampling calorimeters provide electromagnetic (EM) energy measurements with high granularity. A steel/scintillator-tile hadron calorimeter covers the central pseudorapidity range (\(|\eta | < 1.7\)). The endcap and forward regions are instrumented with LAr calorimeters for both the EM and hadronic energy measurements up to \(|\eta | = 4.9\). The muon spectrometer (MS) surrounds the calorimeters and is based on three large superconducting air-core toroidal magnets with eight coils each. The field integral of the toroids ranges between 2.0 and 6.0 T m across most of the detector. The muon spectrometer includes a system of precision tracking chambers and fast detectors for triggering. A two-level trigger system is used to select events. The first-level (L1) trigger is implemented in hardware and uses a subset of the detector information to accept events at a rate below 100 kHz. This is followed by a software-based high-level trigger (HLT) [32, 33] which reduces the accepted event rate to 1 kHz on average depending on the data-taking conditions. An extensive software suite [34] is used in the reconstruction and analysis of real and simulated data, in detector operations, and in the trigger and data acquisition systems of the experiment.

3 Data samples

This analysis relies on the data collected by the ATLAS experiment from LHC \(pp\) collisions at \(\sqrt{s}\) = 13 \(\text {Te}\text {V}\) during 2015–2018 stable beam conditions when all subdetectors were operational [35], corresponding to a total integrated luminosity of 139 \(\text {fb}^{-1}\) [36, 37]. The data used in this analysis were recorded mainly using trigger algorithms based on the presence of missing transverse momentum, \(E_{\text {T}}^{\text {miss}}\) (described in Sect. 5) [38]. The trigger thresholds for the \(E_{\text {T}}^{\text {miss}}\) were determined by the data-taking conditions during the different periods, especially by the average number of multiple \(pp\) interactions in the same or neighbouring bunch crossings, referred to as pile-up. The first-level trigger threshold was 50–55 \(\text {Ge}\text {V}\), depending on the data-taking period, and the lowest-transverse-momentum (\(p_{\text {T}}\) ) unprescaled HLT threshold for the \(E_{\text {T}}^{\text {miss}}\) trigger algorithm was 70 \(\text {Ge}\text {V}\) in 2015, 90 \(\text {Ge}\text {V}\) in 2016 and 110 \(\text {Ge}\text {V}\) in 2017–2018. This corresponds to a trigger operating on its maximum-efficiency plateau for events with offline \(E_{\text {T}}^{\text {miss}}\) of \(\sim \)180 \(\text {Ge}\text {V}\), depending on the trigger thresholds, in this final state. Independent data samples exploited to study \(W(\rightarrow \ell \nu )\gamma \,+\,\text {jets}\) background were collected using the lowest-\(p_{\text {T}}\) unprescaled single-lepton triggers, with \(p_{\text {T}}\) thresholds ranging from 20 to 26 \(\text {Ge}\text {V}\) for the triggers with the tightest lepton identification criteria [39, 40]. The \(E_{\text {T}}^{\text {miss}}\) triggers complemented the muon triggers to increase the number of accepted single-muon events by 28%, as discussed in Sect. 6.5.

4 Simulated event samples

Monte Carlo (MC) simulated samples are used to model both the SM and BSM processes. The full set of simulated samples is summarized in Table 1. The generated events were processed through a simulation [41] of the ATLAS detector geometry and response using Geant4 [42], and through the same reconstruction software as the collected data. For BSM signal samples with a Higgs boson mass different from 125 \(\text {Ge}\text {V}\), the detector response was simulated using a fast parameterized simulation of the ATLAS calorimeters [43] and the full Geant4 simulation for the other subdetectors.

The pile-up effects in the same and neighbouring proton-bunch crossings were modelled by adding detector signals from simulated inelastic \(pp\) events to the original hard-scattering (HS) event. These were generated with  [44] using the

[44] using the  set of parton distribution functions (PDFs) [45] and the A3 set of tuned parameters (tune) [46]. The energy scale and resolution for leptons and jets, their reconstruction and identification efficiencies, and the trigger efficiencies in the simulation are corrected to match those measured in data.

set of parton distribution functions (PDFs) [45] and the A3 set of tuned parameters (tune) [46]. The energy scale and resolution for leptons and jets, their reconstruction and identification efficiencies, and the trigger efficiencies in the simulation are corrected to match those measured in data.

4.1 \(V\gamma \,+\,\text {jets}\) processes

The \(W(\rightarrow \ell \nu )\gamma \,+\,\text {jets}\) and \(Z\gamma \,+\,\text {jets}\) (together labelled \(V\gamma \,+\,\text {jets}\) ) processes contributing to the signature considered in this analysis contain a charged lepton (\(\ell \) = e, \(\mu \) or \(\tau \)) and a neutrino, a pair of neutrinos (\(\nu \nu \)) or a pair of charged leptons (\(\ell \ell \)) together with a photon and associated jets. The \(V\gamma \,+\,\text {jets}\) processes are split into two components based on the order in the electroweak coupling constant \(\alpha \) . At tree level, the strong component is of order \(\alpha _{\text {s}} ^2\alpha {}^3\) and the EW component is of order \(\alpha {}^5\); example Feynman diagrams are shown in Fig. 1 for these different contributions. The strong component of each \(V\gamma \,+\,\text {jets}\) contribution was simulated for photon \(p_{\text {T}}\) greater than 7 \(\text {Ge}\text {V}\) using the  [47] event generator at \(\text {NLO}\) precision in \(\alpha _{\text {s}}\) for up to one additional parton and at \(\text {LO}\) precision in \(\alpha _{\text {s}}\) for up to three additional partons. These calculations use the Comix [48] and OpenLoops [49] matrix element generators, and the parton-shower matching [50] was performed using the MEPS@LO [51] or MEPS@NLO [51,52,53,54] prescription. The

[47] event generator at \(\text {NLO}\) precision in \(\alpha _{\text {s}}\) for up to one additional parton and at \(\text {LO}\) precision in \(\alpha _{\text {s}}\) for up to three additional partons. These calculations use the Comix [48] and OpenLoops [49] matrix element generators, and the parton-shower matching [50] was performed using the MEPS@LO [51] or MEPS@NLO [51,52,53,54] prescription. The  set of PDFs [55] was used, along with dedicated parton shower tuning developed by the

set of PDFs [55] was used, along with dedicated parton shower tuning developed by the  authors. Electroweak radiative corrections to strong \(V\gamma \,+\,\text {jets}\) production have been computed at NLO [56,57,58] as weights in

authors. Electroweak radiative corrections to strong \(V\gamma \,+\,\text {jets}\) production have been computed at NLO [56,57,58] as weights in  , and these are roughly \(-2\)% to \(-4\)% relative corrections in the chosen signal region. A second sample of \(Z(\rightarrow \nu \nu )\gamma \,+\,\text {jets}\) was generated using

, and these are roughly \(-2\)% to \(-4\)% relative corrections in the chosen signal region. A second sample of \(Z(\rightarrow \nu \nu )\gamma \,+\,\text {jets}\) was generated using  [59] with the FxFx merging scheme [60] at \(\text {NLO}\) precision in \(\alpha _{\text {s}}\) for up to one additional parton, filtered for photon \(p_{\text {T}}\) >10 \(\text {Ge}\text {V}\), and showered using

[59] with the FxFx merging scheme [60] at \(\text {NLO}\) precision in \(\alpha _{\text {s}}\) for up to one additional parton, filtered for photon \(p_{\text {T}}\) >10 \(\text {Ge}\text {V}\), and showered using  [61]. The difference between the

[61]. The difference between the  and

and  \(Z(\rightarrow \nu \nu )\gamma \,+\,\text {jets}\) predictions is symmetrized around the

\(Z(\rightarrow \nu \nu )\gamma \,+\,\text {jets}\) predictions is symmetrized around the  prediction and is taken as a source of modelling systematic uncertainty.

prediction and is taken as a source of modelling systematic uncertainty.

Matrix elements for the EW contribution were calculated at \(\text {LO}\) in \(\alpha _{\text {s}}\) using  , and the photon \(p_{\text {T}}\) was required to be larger than 10 \(\text {Ge}\text {V}\). Generated events were showered using

, and the photon \(p_{\text {T}}\) was required to be larger than 10 \(\text {Ge}\text {V}\). Generated events were showered using  [61] with the dipole recoil option enabled along with the CKKW-L [62, 63] merging scheme. These EW samples are normalized to \(\text {NLO}\) QCD predictions obtained from

[61] with the dipole recoil option enabled along with the CKKW-L [62, 63] merging scheme. These EW samples are normalized to \(\text {NLO}\) QCD predictions obtained from  [64] through a correction which depends on the dijet invariant mass (\(m_{\text {jj}}\) ). The effect of the QCD factorization and renormalization scale choices is evaluated from the same \(\text {NLO}\) QCD process calculation in

[64] through a correction which depends on the dijet invariant mass (\(m_{\text {jj}}\) ). The effect of the QCD factorization and renormalization scale choices is evaluated from the same \(\text {NLO}\) QCD process calculation in  . The

. The  EW samples include VBS contributions (shown in Fig. 1b) and triboson contributions (shown in Fig. 2c) which can depend on trilinear and quartic gauge couplings. The triboson processes contribute as much as 15% of the EW samples for low \(m_{\text {jj}} \) values, \(250< m_{\text {jj}} < 500\) \(\text {Ge}\text {V}\), but less than 3% in the more signal-like high-\(m_{\text {jj}}\) region. The interference between the EW and strong-production diagrams, which is of order \(\alpha _{\text {s}} \alpha {}^4\), was simulated at LO in \(\alpha _{\text {s}}\) in

EW samples include VBS contributions (shown in Fig. 1b) and triboson contributions (shown in Fig. 2c) which can depend on trilinear and quartic gauge couplings. The triboson processes contribute as much as 15% of the EW samples for low \(m_{\text {jj}} \) values, \(250< m_{\text {jj}} < 500\) \(\text {Ge}\text {V}\), but less than 3% in the more signal-like high-\(m_{\text {jj}}\) region. The interference between the EW and strong-production diagrams, which is of order \(\alpha _{\text {s}} \alpha {}^4\), was simulated at LO in \(\alpha _{\text {s}}\) in  and the corresponding events were showered using

and the corresponding events were showered using  with the dipole recoil shower enabled. This simulated sample is not included in the background predictions but is used to calculate an uncertainty in the EW \(V\gamma \,+\,\text {jets}\) contribution, which is 5% or less in the \(m_{\text {jj}} {} > 500\) \(\text {Ge}\text {V}\) kinematic region. Similarly, the EW production of a W or Z boson in association with two photons was found to be less than 2% of the EW \(V\gamma \,+\,\text {jets}\) samples calculated with

with the dipole recoil shower enabled. This simulated sample is not included in the background predictions but is used to calculate an uncertainty in the EW \(V\gamma \,+\,\text {jets}\) contribution, which is 5% or less in the \(m_{\text {jj}} {} > 500\) \(\text {Ge}\text {V}\) kinematic region. Similarly, the EW production of a W or Z boson in association with two photons was found to be less than 2% of the EW \(V\gamma \,+\,\text {jets}\) samples calculated with  , so it is not included.

, so it is not included.

The whole \(V\gamma \,+\,\text {jets}\) simulation (i.e. both EW and strong production) applies smooth-cone isolation [65] with a cone size of 0.1 and parameters \(n = 2\) and \(\epsilon = 0.10\) to remove the collinear singularity between photons and charged partons which would otherwise appear in the process amplitude calculations.

4.2 \(V+\,\text {jets}\) processes

Simulation is used to model \(V+\,\text {jets}\) processes when the vector boson decays into neutrinos, muons or \(\tau \)-leptons, and most of these simulated events are removed by the lepton vetoes, object overlap removal described in Sect. 5, and the removal of overlaps with \(V\gamma \,+\,\text {jets}\) , which is described later in this section. Therefore, their contribution is very small. However, V decays into electrons enter the selection mostly through the electrons being misidentified as photons, and this is modelled using the fully data-driven method described in Sect. 7.1. The strong-production \(V+\,\text {jets}\) sample was simulated with the  event generator with the

event generator with the  set of PDFs [55]. Parton-shower matching [50] was performed using either the MEPS@LO or MEPS@NLO prescription with associated parameters tuned by the

set of PDFs [55]. Parton-shower matching [50] was performed using either the MEPS@LO or MEPS@NLO prescription with associated parameters tuned by the  authors. The strong production of \(V+\,\text {jets}\) uses \(\text {NLO}\) matrix elements for up to two partons and \(\text {LO}\) matrix elements for up to four partons calculated with the Comix and OpenLoops libraries and the MEPS@NLO prescription. The samples are normalized to a next-to-next-to-leading-order (\(\text {N}\) \(\text {NLO}\)) prediction for \(V+\,\text {jets}\) [66]. The EW \(V+\,\text {jets}\) sample was generated at \(\text {NLO}\) in \(\alpha _{\text {s}}\) , using Herwig 7 [67] to perform the parton shower and hadronization with the

authors. The strong production of \(V+\,\text {jets}\) uses \(\text {NLO}\) matrix elements for up to two partons and \(\text {LO}\) matrix elements for up to four partons calculated with the Comix and OpenLoops libraries and the MEPS@NLO prescription. The samples are normalized to a next-to-next-to-leading-order (\(\text {N}\) \(\text {NLO}\)) prediction for \(V+\,\text {jets}\) [66]. The EW \(V+\,\text {jets}\) sample was generated at \(\text {NLO}\) in \(\alpha _{\text {s}}\) , using Herwig 7 [67] to perform the parton shower and hadronization with the  PDF set [68]. Herwig 7 uses its Matchbox module [69] to assemble calculations with dipole shower algorithms [70] and interfaces with matrix element plug-ins from

PDF set [68]. Herwig 7 uses its Matchbox module [69] to assemble calculations with dipole shower algorithms [70] and interfaces with matrix element plug-ins from  to compute NLO \(\alpha _{\text {s}}\) corrections in the VBF approximation.

to compute NLO \(\alpha _{\text {s}}\) corrections in the VBF approximation.

After generating events for both the strong and EW \(V\gamma \,+\,\text {jets}\) processes as in Sect. 4.1, the samples may overlap with \(V+\,\text {jets}\) events in which an ISR/FSR photon is radiated. To avoid this overlap, \(V\gamma \,+\,\text {jets}\) events are removed if the photon passes the smooth-cone isolation and lies within \(\Delta R=0.1\) of an electron, muon, or \(\tau \)-lepton that has \(p_{\text {T}} >10\) \(\text {Ge}\text {V}\). In \(V+\,\text {jets}\) samples, events are accepted only if they satisfy the same criteria.

4.3 Top-quark processes

Minor background contributions originating from top-quark-related processes and associated production of top quarks with a \(W\) boson, were all modelled using the  [71,72,73] generator at \(\text {NLO}\) with the

[71,72,73] generator at \(\text {NLO}\) with the  PDF set. The events were interfaced with

PDF set. The events were interfaced with  for the parton shower and hadronization modelling with the A14 tune [74] and the

for the parton shower and hadronization modelling with the A14 tune [74] and the  set of PDFs. The production of \(t{\bar{t}}\gamma \) was modelled using the

set of PDFs. The production of \(t{\bar{t}}\gamma \) was modelled using the  generator at \(\text {NLO}\) . The events were interfaced with

generator at \(\text {NLO}\) . The events were interfaced with  for the parton shower and hadronization modelling with the A14 tune and the

for the parton shower and hadronization modelling with the A14 tune and the  set of PDFs. The decays of bottom and charm hadrons were performed by

set of PDFs. The decays of bottom and charm hadrons were performed by  [75] in all top-quark processes.

[75] in all top-quark processes.

4.4 Additional background samples

The \(\gamma \,+\,\text {jet}\) background is simulated using  at \(\text {NLO}\) in \(\alpha _{\text {s}}\) , similarly to the

at \(\text {NLO}\) in \(\alpha _{\text {s}}\) , similarly to the  \(V+\,\text {jets}\) samples in Sect. 4.1 except for the use of a dynamical merging scale to capture the fragmentation contribution [76]. Due to the large cross-section for this process, a partially data-driven ‘jet-smearing’ technique was used to increase the sample size as discussed in Sect. 7.1.

\(V+\,\text {jets}\) samples in Sect. 4.1 except for the use of a dynamical merging scale to capture the fragmentation contribution [76]. Due to the large cross-section for this process, a partially data-driven ‘jet-smearing’ technique was used to increase the sample size as discussed in Sect. 7.1.

Other processes listed in Table 1 but not described in the previous subsections result in a negligible contribution to the analysis signature. Therefore, no further description is given.

4.5 Higgs boson processes

In the searches for \(H\rightarrow \mathrm {inv.}\) carried out in this paper the target is VBF Higgs boson production, although the small contribution from gluon–gluon fusion (ggF) Higgs boson production processes satisfying the selection criteria is also considered as a contributing signal. For both of these processes, corresponding MC samples were generated according to the details described in the following. The invisible Higgs boson decay was simulated using the SM \(H\rightarrow ZZ^{*}\rightarrow 4\nu \) decay with a 100% branching ratio. The difference in the relevant kinematic distributions between the \(H\rightarrow 4\nu \) process and Higgs boson decays into new undetected particles is negligible.

The VBF Higgs boson production process with an additional photon, as shown in Fig. 2a, was simulated for a Higgs boson mass of 125 \(\text {Ge}\text {V}\), requiring the presence of only electroweak vertices at \(\text {LO}\) and photon \(p_{\text {T}}\) larger than 10 \(\text {Ge}\text {V}\). This process was computed to \(\text {NLO}\) accuracy in \(\alpha _{\text {s}}\) using  interfaced with

interfaced with  [67, 77] for parton shower and non-perturbative hadronization effects, using the

[67, 77] for parton shower and non-perturbative hadronization effects, using the  PDF set [78]. Parton shower uncertainties are computed from the relative difference between the process generated by

PDF set [78]. Parton shower uncertainties are computed from the relative difference between the process generated by  at \(\text {LO}\) in \(\alpha _{\text {s}}\) with showering by

at \(\text {LO}\) in \(\alpha _{\text {s}}\) with showering by  and the same process with showering by

and the same process with showering by  [79] with the dipole recoil shower variable [80] turned on. The parton shower comparison is not made at \(\text {NLO}\) because the dipole recoil option in

[79] with the dipole recoil shower variable [80] turned on. The parton shower comparison is not made at \(\text {NLO}\) because the dipole recoil option in  is available only at \(\text {LO}\) , and without it there is a larger prediction of central emissions.Footnote 4 The same smooth-cone isolation as described in Sect. 4.1 is used to remove the collinear singularity between photons and charged partons.

is available only at \(\text {LO}\) , and without it there is a larger prediction of central emissions.Footnote 4 The same smooth-cone isolation as described in Sect. 4.1 is used to remove the collinear singularity between photons and charged partons.

The ggF Higgs boson production process was simulated at \(\text {N}\) \(\text {NLO}\) accuracy in \(\alpha _{\text {s}}\) using  NNLOPS [71,72,73, 83, 84], which achieves NNLO accuracy for arbitrary inclusive \(gg\rightarrow H\) observables by reweighting the Higgs boson rapidity spectrum in MJ-MiNLO [85,86,87] to that in HNNLO [88]. The

NNLOPS [71,72,73, 83, 84], which achieves NNLO accuracy for arbitrary inclusive \(gg\rightarrow H\) observables by reweighting the Higgs boson rapidity spectrum in MJ-MiNLO [85,86,87] to that in HNNLO [88]. The  PDF set [78] and the AZNLO tune of

PDF set [78] and the AZNLO tune of  were used. This simulation was interfaced with

were used. This simulation was interfaced with  for parton shower and non-perturbative hadronization effects. The ggF prediction from the MC samples is normalized to the next-to-\(\text {N}\) \(\text {NLO}\) cross-section in QCD plus electroweak corrections at \(\text {NLO}\) [89,90,91,92,93,94,95,96,97,98,99]. Photons present in these samples were generated using Photos [100, 101] since no complete matrix element computation of ggF Higgs boson with a photon is available.Footnote 5

for parton shower and non-perturbative hadronization effects. The ggF prediction from the MC samples is normalized to the next-to-\(\text {N}\) \(\text {NLO}\) cross-section in QCD plus electroweak corrections at \(\text {NLO}\) [89,90,91,92,93,94,95,96,97,98,99]. Photons present in these samples were generated using Photos [100, 101] since no complete matrix element computation of ggF Higgs boson with a photon is available.Footnote 5

The simulation of ggF production of a 125 \(\text {Ge}\text {V}\) Higgs boson described above is also used to model its decay into a photon and an invisible massless dark photon (\(\gamma _{\text {d}}\)) again normalized to a 100% branching ratio. The VBF production process was simulated to \(\text {NLO}\) precision in \(\alpha _{\text {s}}\) with the  generator interfaced with

generator interfaced with  for hadronization and showering and the same \(\gamma \gamma _{\text {d}} \) decay, as shown in Fig. 2b. The \(\text {NLO}\) electroweak corrections for VBF Higgs boson production were computed using HAWK [102] and were applied as a function of the Higgs boson’s \(p_{\text {T}}\) . The VBF samples were generated not only for a 125 \(\text {Ge}\text {V}\) Higgs boson, but also for lighter and heavier Higgs bosons, for the interpretation of the search in the context of other scalar mediators. Throughout this paper, the samples with a Higgs boson assume SM couplings and use the narrow width approximation [89] for the various Higgs boson masses, ranging from 60 \(\text {Ge}\text {V}\) to 2 \(\text {Te}\text {V}\).

for hadronization and showering and the same \(\gamma \gamma _{\text {d}} \) decay, as shown in Fig. 2b. The \(\text {NLO}\) electroweak corrections for VBF Higgs boson production were computed using HAWK [102] and were applied as a function of the Higgs boson’s \(p_{\text {T}}\) . The VBF samples were generated not only for a 125 \(\text {Ge}\text {V}\) Higgs boson, but also for lighter and heavier Higgs bosons, for the interpretation of the search in the context of other scalar mediators. Throughout this paper, the samples with a Higgs boson assume SM couplings and use the narrow width approximation [89] for the various Higgs boson masses, ranging from 60 \(\text {Ge}\text {V}\) to 2 \(\text {Te}\text {V}\).

5 Object reconstruction

Objects are reconstructed from detector signatures by using identification algorithms widely deployed in ATLAS analyses. Candidate events are required to have a reconstructed vertex with at least two associated tracks, each with \(p_{\text {T}} > 0.5\ \text {Ge}\text {V}{}\) and originating from the beam collision region in the x–y plane. The primary vertex in the event is selected as the vertex with the highest scalar sum of the squared \(p_{\text {T}}\) of associated tracks [103].

Electrons are reconstructed by matching clustered energy deposits in the EM calorimeters to tracks in the ID [104], including the transition regions between the barrel and endcap EM calorimeters at \(1.37< |\eta | < 1.52\). Electron candidates must have \(p_{\text {T}} > 4.5~\text {Ge}\text {V}{}\) and \(|\eta | < 2.47\), and fulfill loose identification criteria. Depending on the \(p_{\text {T}}\) and \(|\eta |\) range, muons are reconstructed by matching ID tracks to MS tracks or track segments, by matching ID tracks to a calorimeter energy deposit compatible with a minimum-ionizing particle, or by identifying MS tracks passing a loose requirement and compatible with originating from the IP [105]. Muon candidates must have \(p_{\text {T}} > 4~\text {Ge}\text {V}{}\) and \(|\eta | < 2.7\), and fulfill a very loose identification criterion. No isolation requirement is placed on electron or muon candidates used as a lepton veto. In events with one or more leptons associated with the primary vertex, i.e. in control regions described in Sect. 6, muons (electrons) are required to satisfy medium (tight) identification criteria in order to improve the purity of background processes.

Photon candidates are reconstructed from clustered energy deposits in the EM calorimeter [104]. They must have \(p_{\text {T}} > 15~\text {Ge}\text {V}{}\), fulfill tight identification and isolation criteria [104], and lie within \(|\eta | < 2.37\) but not in the transition region (\(1.37< |\eta | < 1.52\)) between barrel and endcap EM calorimeters.

Particle flow (PFlow) jets are reconstructed using the anti-\(k_{t}\) algorithm [106, 107] with a radius parameter of \(R = 0.4\), using charged constituents associated with the primary vertex and neutral PFlow constituents as inputs [108]. The jet energy is calibrated with the effect of pile-up removed [109]. Jets are required to have \(p_{\text {T}} > 20~\text {Ge}\text {V}{}\) and \(|\eta | < 4.5\). For jets with \(p_{\text {T}} < 60~\text {Ge}\text {V}{}\) and \(|\eta | < 2.5\) the jet vertex tagger (JVT) discriminant [110] is used to identify jets originating from the HS interaction through the use of tracking and vertexing. The chosen JVT working point corresponds to a selection efficiency for HS jets of about 97%, evaluated on an inclusive \(Z (\rightarrow \mu \mu )\) + jets sample. For \(|\eta | > 2.5\), the two leading jets must pass a forward jet vertex tagger algorithm (fJVT) [111, 112] that accepts 93% of jets from the HS interaction and rejects about 58% of pile-up jets with \(p_{\text {T}} > 50~\text {Ge}\text {V}\), evaluated on an inclusive \(Z (\rightarrow \mu \mu )\) + jets sample. Jets containing b-hadrons (\(b\text {-jets}\)) are identified using a multivariate discriminant (MV2c10) output distribution [113]. The working point is chosen to provide a 77% \(b\text {-jet}\) efficiency on an inclusive \(t\bar{t}\) sample, with rejection factors of 6 and 134 for charm-hadron jets and light-flavour quark- or gluon-initiated jets, respectively.

To avoid double counting of energy deposits, the reconstructed objects are required to be separated according to the procedure detailed in Table 2. The overlap of photons with electrons, muons and jets is resolved by a \(\Delta R\) criterion. For leptons in the vicinity of jets, the \(\Delta R\) threshold for sufficient separation depends on the \(p_{\text {T}}\) of the lepton to account for the collimation of boosted objects.

The unbalanced momentum in the transverse plane, referred to as missing transverse momentum or \(\vec {E}_{\text {T}}^{\text {miss}}\) , is defined as the negative vectorial sum of the transverse momenta of all selected electrons, muons, photons, and jets, as well as tracks compatible with the primary vertex but not matched to any of those objects, this last contribution being called the soft term [114, 115]. To define an estimate of the boson \(p_{\text {T}}\) in decays with charged leptons, events containing one or more selected leptons have the \(\vec {E}_{\text {T}}^{\text {miss}}\) evaluation modified by treating such leptons as invisible particles. Each prompt lepton \(\vec {p}_{\text {T}}\) is vectorially added to the \(\vec {E}_{\text {T}}^{\text {miss}}\) to define the quantity \(\vec {E}_{\text {T}}^{\text {miss,lep-rm}}\), as described in Ref. [115]. The magnitude of the \(\vec {E}_{\text {T}}^{\text {miss}}\) (\(\vec {E}_{\text {T}}^{\text {miss,lep-rm}}\) ) is denoted by \(E_{\text {T}}^{\text {miss}}\) (\(E_{\text {T}}^{\text {miss,lep-rm}}\) ). A related event property \(E_{\text {T}}^{\text {jets,no-jvt}}\) is the magnitude of the negative vectorial sum of all jets in the event with \(p_{\text {T}} > 20~\text {Ge}\text {V}{}\) before the JVT requirement. This is a powerful variable for rejecting events where the \(E_{\text {T}}^{\text {miss}}\) is generated by inefficiencies of the JVT requirement for HS jets.

Several cleaning requirements are applied to suppress non-collision backgrounds [116]. Misreconstructed jets can be caused by electronic noise, and jets from collisions are identified by requiring a good fit to the expected pulse shape for each constituent calorimeter cell. Beam-halo interactions with the LHC collimators are another source of misreconstructed jets. Those jets are identified by requirements on their energy distribution in the calorimeter and the fraction of their constituent tracks that originate from the primary vertex. The event is rejected if any selected jet is identified as a misreconstructed jet. Residual contributions of non-collision jets are absorbed into the normalization for the \(\gamma \,+\,\text {jet}\) background as described in Sect. 7.1.

6 Event selection

Based on the reconstructed objects in each event final state, the collected data are assigned to disjoint samples identified with specific phase-space regions, used in this analysis for different purposes. The signal regions (SRs) have the highest purity of signal process events, with mostly irreducible background contributions. The control regions (CR) are enriched in background processes and are used to constrain estimates of such contributions. The validation regions (VR), similar in background process content to the CRs, are used to quantify the level of agreement between data and background predicted yields but are not included in the fit.

A baseline set of requirements is applied to select events with a photon, high \(E_{\text {T}}^{\text {miss}}\) , and the VBF jet signature considered in this paper, as described in Sect. 6.1. For the EW \(Z\gamma \,+\,\text {jets}\) cross-section measurement, events satisfying the baseline SR requirements are categorized according to their dijet invariant mass \(m_{\text {jj}}\), as described in Sect. 6.2. In the same section the fiducial volume considered for the cross-section measurement is defined. The SR-selected events for the \(H\rightarrow \mathrm {inv.}\) search are split into categories of different signal purities based on a multivariate analysis discriminant, which is described in Sect. 6.3. The SR selection and fitted discriminant are modified for the resonant kinematics of the \(H\rightarrow \gamma \gamma _{\text {d}}\) search, which are described in Sect. 6.4. In the statistical analysis of the data, described in Sect. 8, CR events are classified according to the same strategy described for the signal regions in the aforementioned measurement and searches.

6.1 Baseline event selection

Stringent background discrimination is made possible by the characteristic features of the VBF process, such as the presence of two highly energetic jets, typically in opposite \(\eta \) hemispheres of the detector, more forward than jets from non-VBF processes at comparable momentum transfer of the initial-state partons. These features lead, for example, to large values of the pseudorapidity separation \(\Delta \eta _{\text {jj}}\) and invariant mass \(m_{\text {jj}}\) of the jet pair. Multijet production predicted by QCD is instead characterized by two back-to-back leading jets in the transverse plane (\(\Delta \phi _{\text {jj}} \sim \pi \)); therefore, to reduce that background contribution, the azimuthal separation of the leading jets, \(\Delta \phi _{\text {jj}} \), is required to be smaller than 2.5 for all SRs.

The requirements defining the different regions considered in the analysis are summarized in Table 3. The following section describes the selection criteria.

The photon produced in association with either the Higgs boson (for the \(H\rightarrow \mathrm {inv.}\) search) or the \(Z\) boson (for the EW \(Z\gamma \,+\,\text {jets}\) cross-section measurement) is usually radiated from the scattering \(W\) bosons, and it is produced within the large rapidity gap between the two leading jets. For this reason the photon centrality \(C_\gamma \) [117] is defined as

where the subscripts 1 and 2 indicate the highest- and second-highest \(p_{\text {T}}\) jets in the event. The value of \(C_\gamma \) is 1 when the photon is centred between the two jets characterizing the VBF signature,1/e when it is aligned with one of the two jets, and tends to zero when the photon is farther forward in \(|\eta |\) than the jets.

Events are assigned to the SR if they satisfy a set of requirements that have been optimized to maximize the sensitivity of the analysis to the EW \(Z\gamma \,+\,\text {jets}\) and VBF \(H\rightarrow \mathrm {inv.}\) signals. These requirements are summarized in the following.

As discussed in Sect. 3, events are selected with the \(E_{\text {T}}^{\text {miss}}\) -trigger algorithm. To ensure a trigger efficiency exceeding 97% in this topology, the offline \(E_{\text {T}}^{\text {miss}}\) , after full offline reconstruction and calibration of all the objects in the events, is required to be larger than 150 \(\text {Ge}\text {V}\). Corrections and uncertainties in those corrections to the simulation modelling of the \(E_{\text {T}}^{\text {miss}}\) trigger are discussed in Sect. 7.2.

The leading and subleading jets are required to have \(p_{\text {T}} > 60~\text {Ge}\text {V}{}\) and 50 \(\text {Ge}\text {V}\), respectively, both satisfying the fJVT requirements mentioned in Sect. 5. These same two leading jets must be located in opposite \(\eta \) hemispheres (\(\eta (j_1)\times \eta (j_2)<\) 0), to be well separated in pseudorapidity (\(|\Delta \eta _{\text {jj}} | > 3.0\)) and not back-to-back in the plane transverse to the beamline (\(\Delta \phi _{\text {jj}} < 2.5\)), and must have large invariant mass (\(m_{\text {jj}} > 0.25~\text {Te}\text {V}{}\)).

Another characteristic of the EW processes in the VBF topology is reduced hadronic activity in the large rapidity gap between the two leading jets, caused by the absence of colour connection between the two quarks. To suppress the contribution from strong \(V\gamma \,+\,\text {jets}\) production with additional jets from QCD radiation in comparison with the benchmark signals, the equivalent centrality \(C_3\) is defined for the third-leading jet in the event, if any, replacing \(\eta _\gamma \) in Eq. (1) with the third-leading jet’s pseudorapidity \(\eta _3\). A third jet with \(p_{\text {T}} > 25~\text {Ge}\text {V}{}\) can be present in the events, and additional jets are allowed only if they have \(p_{\text {T}} < 25~\text {Ge}\text {V}{}\). The third-leading jet is required to be in one of the two forward regions, corresponding to a small centrality value, \(C_3 < 0.7\).

All jets must be well separated in azimuth from the \(\vec {E}_{\text {T}}^{\text {miss}}\) direction, satisfying \(\Delta \phi (j_{i},\vec {E}_{\text {T}}^{\text {miss}} {}) > 1\) for each jet \(j_{i}\) with \(p_{\text {T}} > 25~\text {Ge}\text {V}{}\). At most one \(b\text {-jet}\) identified using the algorithm defined in Sect. 5 is allowed to be present, and the \(E_{\text {T}}^{\text {jets,no-jvt}}\) variable must be larger than 130 \(\text {Ge}\text {V}\) in each event. The requirement on the maximum number of \(b\text {-jets}\) has a negligible effect (<0.1%) on the signal selection efficiencies, while it ensures that the dataset considered in the search for \(H\rightarrow \mathrm {inv.}\) produced through VBF presented in this paper is orthogonal to the dataset considered in the search for invisible decays of Higgs bosons produced in association with \(t\bar{t}\) [118].

Each event must contain a single reconstructed photon with \(15< p_{\text {T}} < 110~\text {Ge}\text {V}{}\), \(\Delta \phi (\vec {E}_{\text {T}}^{\text {miss}},\gamma ) > 1.8\), and \(C_\gamma > 0.4\). The upper bound on the photon \(p_{\text {T}}\) reduces contributions from background processes, especially the \(\gamma \,+\,\text {jet}\) background. The z coordinate of the reconstructed photon extrapolated from the calorimeter to the beamline must be no more than 250 mm from the identified primary vertex to reduce the number of photons from non-collision backgrounds.

In the SR, all events with leptons are vetoed, specifically ensuring the absence of muon or electron candidates fulfilling the criteria described in Sect. 5. Electron and muon candidates considered in this veto are not required to satisfy any isolation or impact parameter requirement, and muons are considered before the overlap removal requirement.

6.2 Event classification and fiducial-volume definition for EW \(Z\gamma \,+\,\text {jets}\) measurement

In the signature considered in this analysis, the \(m_{\text {jj}}\) observable helps in discriminating between the strong and EW \(Z\gamma \,+\,\text {jets}\) processes. The strong production \(Z\gamma \,+\,\text {jets}\) process tends to have a smaller \(m_{\text {jj}}\) than the EW \(Z\gamma \,+\,\text {jets}\) process. In the context of the EW \(Z\gamma \,+\,\text {jets}\) cross-section measurement, the events satisfying the baseline SR requirements described in Sect. 6.1 are therefore binned according to their \(m_{\text {jj}}\) value, with bin boundaries of 250 \(\text {Ge}\text {V}\), 500 \(\text {Ge}\text {V}\), 1000 \(\text {Ge}\text {V}\) and 1500 \(\text {Ge}\text {V}\). The highest \(m_{\text {jj}}\) in the selected data events is 4.0 \(\text {Te}\text {V}\).

The fiducial volume for the EW \(Z\gamma \,+\,\text {jets}\) cross-section measurement is defined to closely follow the SR definition reported in Sect. 6.1. Particle-level requirements are placed on stable particles (defined as having a mean lifetime \(c\tau > 10\) mm) before interaction with the detector but after hadronization. The four-momenta of prompt leptons, i.e. those which do not originate from the decay of a hadron, include the sum of the four-momenta of prompt photons within a cone of size \(\Delta R = 0.1\) around the lepton, and the resulting leptons are referred to as ‘dressed’ leptons. Jets are constructed using an anti-\(k_t\) algorithm with radius parameter of 0.4, excluding electrons, muons, neutrinos, and photons associated with the decay of a \(W \) or \(Z \) boson. Photon isolation momentum \(E_{\text {T}}^{\text {cone20}}\) is defined as the transverse momentum of the system of stable particles within a cone of size \(\Delta R = 0.2\) around the photon, excluding muons, neutrinos, and the photon itself. The photon isolation momentum is required to be less than 7% of the photon transverse momentum. The truth-\(\vec {E}_{\text {T}}^{\text {miss}}\) is defined as the vector sum of the transverse momenta of all neutrinos in the simulated final state, including those resulting from hadronization.

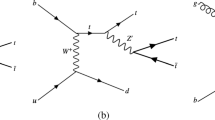

A veto on dressed muons or electrons with \(p_{\text {T}} > 4~\text {Ge}\text {V}{}\) and \(|\eta | < 2.47\) is applied. Jets and photons are required to be separated in angular distance, with \(\Delta R(j, \ell ) > 0.4\). The remaining requirements, similar to the SR ones, are summarized in Table 4, and the acceptance times reconstruction efficiency for the fiducial-volume definition is 0.4%.

6.3 Event classification for \(H\rightarrow \mathrm {inv.}\) search

In the context of the search for \(H\rightarrow \mathrm {inv.}\) signal, a Dense Neural Network (DNN), based on the Keras library [119, 120] with Tensorflow [121] as a backend, was designed and trained to separate such signal from the background contributions by using kinematic features. The DNN assigns signal-like (background-like) events an output score close to 1 (0).

The DNN architecture is composed of three blocks, each of which includes a Dense layer with 384 neurons, a Dropout rate and a Batch Normalization. The Rectified Linear Unit (ReLU) activation function [122] is used for each Dense layer, while a sigmoid activation function is used for the output node. To regularize the training and avoid overtraining, the Dropout and Batch Normalization layers are included in the DNN architecture. Each of the three blocks receives as input the output of the previous block concatenated with the input features.

The DNN is trained using a mixed sample of signal and background events, weighted according to their expected yields in the considered final state. To increase the number of events available for the training, the selection criteria defining the training sample phase space are looser than those for the SR and CRs. Events used for the training are required to have either two or three jets satisfying the identification criteria described in Sect. 5, a single photon, and \(E_{\text {T}}^{\text {miss,lep-rm}} > 140~\text {Ge}\text {V}{}\). The sample for each considered process is split into a training sample and a testing sample composed of 85% and 15% of the original sample, respectively. The training sample is further divided into a subsample used in the proper training (80%) and a sub-sample for validation (20%).

During the DNN training the weights are updated via an optimizer used to minimize the binary Cross-Entropy loss, while at each step a customized metric is evaluated, representative of the expected limit \({\mathcal {E}}\) on the \(H\rightarrow \mathrm {inv.}\) signal. This expected-limit approximation is defined below and the expected-background error in the numerator includes the background yield, the MC statistical uncertainties, and the data background uncertainty in data as provided by the CR:

where \(b_i\) is the total background yield in bin i of the DNN output distribution, \(s_i\) is the signal yield, \(b_i^{V\gamma }\) is the contribution to the background from \(W\gamma \,+\,\text {jets}\) and \(Z\gamma \,+\,\text {jets}\) processes, \(d_i(\mathrm {CR})\) is the number of events in data in the one-lepton CR defined in Sect. 6.5, and \(w_{i,j}\) is the MC weight of each event (\(\mathrm {ev}\)) normalized to the integrated luminosity summed over the events in the considered bin.

After each training epoch the DNN weights are stored only if \({\mathcal {E}}\) is improved and the difference in loss between the training and validation samples is smaller than 3%, which prevents failures from overtraining.

After the composition of the training samples and the DNN architecture were established, a backward elimination procedure was carried out to reduce the number of input features with minimal performance loss. Input features are varied up and down by 10% of their nominal values while evaluating the impact of each as the change in the mean DNN output score. The most relevant input features are expected to have a greater impact on the output if their value is modified, while the least relevant ones will produce smaller variations, and hence can be dropped with no significant loss. When an input feature is dropped the DNN is retrained with the new subset of input features, and the procedure is repeated while the relative change in \({\mathcal {E}}\) from its value with that with all input features is less than 2%. The remaining features have a non-negligible impact on the DNN; hence they are used in the analysis. The 8 most significant kinematic features were selected: the separation of two leading jets in \(\eta \), \(\Delta \eta _{\text {jj}}\), and in \(\phi \), \(\Delta \phi _{\text {jj}}\); the dijet invariant mass \(m_{\text {jj}}\); the photon pseudorapidity \(\eta (\gamma )\); the two leading jet transverse momenta \(p_{T}^{j_1}\) and \(p_{T}^{j_2}\); the subleading jet pseudorapidity \(\eta (j_2)\); and the lepton-subtracted missing transverse momentum \(E_{\text {T}}^{\text {miss,lep-rm}}\) . When their nominal value is perturbed by 10%, \(\Delta \eta _{\text {jj}}\) , \(E_{\text {T}}^{\text {miss,lep-rm}}\) and \(m_{\text {jj}}\) produce the largest change in DNN output score; therefore, they are interpreted as the most important in separating signal from background events.

All events are categorized according to DNN output score into four bins chosen to optimize \({\mathcal {E}}\), subject to the requirements that each bin be at least 0.05 units wide in output score and that there be at least 20 background events expected in each bin in order to have sufficient background yield in the CRs to validate the predictions. The optimal boundaries of the DNN output score bins are [0.0, 0.25, 0.6, 0.8, 1.0]. The acceptance times reconstruction efficiency for VBF Higgs boson production with an additional photon is around 0.7%, which comes from the high \(E_{\text {T}}^{\text {miss}}\) threshold as well as the other selections.

6.4 Event classification for \(H\rightarrow \gamma \gamma _{\text {d}}\) search

The \(H\rightarrow \gamma \gamma _{\text {d}}\) decay presents a striking signature characterized by a kinematic edge at the Higgs boson mass in the distributions of the transverse mass constructed from the photon and \(E_{\text {T}}^{\text {miss}}\), defined as:

To increase the search’s sensitivity to this semi-visible signal, a dedicated signal region \(\hbox {SR}_{\gamma _{\text {d}}}\) is defined by changing some of the selection requirements in Sect. 6.1. No requirement on \(\Delta \phi (\vec {E}_{\text {T}}^{\text {miss}},\gamma )\) is applied when targeting this specific benchmark and, for \(m_{\text {T}}(\gamma ,E_{\text {T}}^{\text {miss}}) > 150~\text {Ge}\text {V}{}\), the upper bound on the photon \(p_{\text {T}}\) is relaxed to \(0.733\times m_{\text {T}}(\gamma ,E_{\text {T}}^{\text {miss}}) \). The requirement on \(\Delta \phi _{\text {jj}} \) is tightened, so that all events have \(\Delta \phi _{\text {jj}} < 2.0\). These selections are changed for both the SRs and the CRs defined later in Sect. 6.5. The events satisfying the \(\hbox {SR}_{\gamma _{\text {d}}}\) requirements are therefore binned according to \(m_{\text {T}}(\gamma ,E_{\text {T}}^{\text {miss}})\) value, with bin boundaries of 0 \(\text {Ge}\text {V}\), 90 \(\text {Ge}\text {V}\), 130 \(\text {Ge}\text {V}\), 200 \(\text {Ge}\text {V}\) and 350 \(\text {Ge}\text {V}\). The events are further separated according to the value of the dijet invariant mass \(m_{\text {jj}}\), into events with \(m_{\text {jj}} < 1.0~\text {Te}\text {V}{}\) and events with \(m_{\text {jj}} {} \ge 1.0~\text {Te}\text {V}{}\). These two \(m_{\text {jj}}\) regions have different relative contributions of the VBF- and ggF-produced Higgs boson signals, so the separation improves the overall sensitivity to the \(H\rightarrow \gamma \gamma _{\text {d}}\) decay. The acceptance times reconstruction efficiency for VBF \(H\rightarrow \gamma \gamma _{\text {d}}\) production is 0.4% for a 125 \(\text {Ge}\text {V}\) scalar mediator but increases to 3.1% for a 500 \(\text {Ge}\text {V}\) scalar mediator.

6.5 Control region definitions

Mutually exclusive CRs are defined in order to constrain the normalization and validate the modelling of kinematic distributions for the two dominant backgrounds from \(W(\rightarrow \ell \nu )\gamma \,+\,\text {jets}\) and strong \(Z(\rightarrow \nu \nu )\gamma \,+\,\text {jets}\) production. These CRs have kinematic features similar to those in the SR but are dominated by the background processes. The EW production of \(Z(\rightarrow \nu \nu )\gamma \mathrm { jj}\) events, which is measured in this paper and constitutes an irreducible background contribution in the search for invisible and semi-visible decays of the Higgs boson, is mitigated through the event classification applied to SR events described in Sects. 6.3 and 6.4.

The \(W_{\mu \nu }^\gamma ~\mathrm {CR}\) and \(W_{e\nu }^\gamma ~\mathrm {CR}\) are defined by changing the zero-lepton requirement for the SR to instead require exactly one lepton, either a muon or an electron, respectively. Data events in the region characterized by one electron are collected using a single-electron trigger algorithm, while those characterized by one muon are collected using either the same \(E_{\text {T}}^{\text {miss}}\) trigger algorithm used to select SR events or a single-muon trigger algorithm. In both cases the leptons are required to have \(p_{\text {T}} > 30~\text {Ge}\text {V}{}\) in order to be on the single-lepton trigger efficiency plateau.

In each of these CRs the presence of a single muon (electron) satisfying the medium (tight) identification criteria, passing the loose isolation requirement, and surviving the overlap removal procedure is required. The efficiency of selecting muons (electrons) averaged over \(p_{\text {T}} > 30~\text {Ge}\text {V}{}\) and \(|\eta | < 2.7\) (2.47) is larger than 96% (80%). To ensure that electron and muon candidates originate from the primary vertex, the significance of the track’s transverse impact parameter relative to the beam line must satisfy \(|d_{0}/\sigma (d_{0})| < 3\) (5) for muons (electrons), and the longitudinal impact parameter \(z_0\) is required to satisfy \(|z_{0} \sin (\theta ) | < 0.5\) mm. As done in the SR, the looser identification criteria are applied to define the veto on any additional lepton.

To reduce the contribution from jets faking electrons, \(E_{\text {T}}^{\text {miss}} > 80~\text {Ge}\text {V}{}\) is required. The \(\text {Fake-}e~\mathrm {CR}\) , containing events with \(E_{\text {T}}^{\text {miss}} < 80~\text {Ge}\text {V}{}\), is used to estimate the jets-faking-electrons background. The requirements on the reconstructed jets and photon are the same as for the SR, while requirements on the \(E_{\text {T}}^{\text {miss}}\), other than the one specifically for the \(\text {Fake-}e~\mathrm {CR}\) mentioned above, are replaced by equivalent ones on the \(E_{\text {T}}^{\text {miss,lep-rm}}\).

To check the modelling of the strong \(Z(\rightarrow \nu \nu )\gamma \,+\,\text {jets}\) background contribution, a \(Z_{\mathrm {Rev.Cen.}}^{\gamma }~\mathrm {CR}\) is defined by applying all the requirements of the SR definition but reversing the photon centrality requirement, selecting events with \(C_\gamma < 0.4\). There is a 7% EW \(Z(\rightarrow \nu \nu )\gamma \mathrm { jj}\) contribution to the \(Z_{\mathrm {Rev.Cen.}}^{\gamma }~\mathrm {CR}\), which is taken into account in the statistical analysis of the cross-section measurement for this process.

6.6 Validation region for \(\gamma \,+\,\text {jet}\) background

In order to correct the normalization of the \(\gamma \,+\,\text {jet}\) background process and validate the modelling of its kinematic features, an orthogonal sample of events characterized by a small amount of \(E_{\text {T}}^{\text {miss}}\) in the final state is considered. In this region (\(\text {Low-}E_{\text {T}}^{\text {miss}} ~\mathrm {VR}\)) the same selections as the SR are applied, except that the \(E_{\text {T}}^{\text {miss}}\) has to be in the range \(110< E_{\text {T}}^{\text {miss}} < 150~\text {Ge}\text {V}{}\), the upper bound on the photon \(p_{\text {T}}\) is removed, no \(E_{\text {T}}^{\text {jets,no-jvt}}\) requirement is applied, and the leading dijet system’s invariant mass has to satisfy \(0.25< m_{\text {jj}} < 1.0~\text {Te}\text {V}{}\).

For the \(H\rightarrow \gamma \gamma _{\text {d}}\) search, a similar Low-\(E_{\text {T}}^{\text {miss}}\) validation region is defined (Low \(E_{\text {T}}^{\text {miss}}\)-\(\gamma _{\text {d}}\) VR) with the same selection as the \(\text {Low-}E_{\text {T}}^{\text {miss}} ~\mathrm {VR}\) but also requiring \(m_{\text {T}}(\gamma ,E_{\text {T}}^{\text {miss}}) < 110~\text {Ge}\text {V}{}\) without any selection on \(\Delta \phi (\vec {E}_{\text {T}}^{\text {miss}},\gamma )\).

7 Data analysis

The different analyses presented in this paper focus on processes sharing a similar signature. For the EW \(Z\gamma \,+\,\text {jets}\) cross-section measurement, all the SM processes contributing to the considered final-state signature are estimated using both the simulated samples, described in Sect. 4, and data-driven techniques, described in Sect. 7.1. The \(m_{\text {jj}}\) distribution is used to categorize events into SR selections with different signal-to-background ratios, as detailed in Sect. 6.2. In the search for invisible Higgs boson decays, the same strategy is adopted to estimate the SM backgrounds but a different observable is considered. A multivariate discriminant is designed to categorize events falling in the SR according to an increasing expected significance for the invisible Higgs boson decay signal contribution relative to the SM background expectation, as detailed in Sect. 6.3. For the \(H\rightarrow \gamma \gamma _{\text {d}}\) search, events are instead classified according to their kinematic features, as detailed in Sect. 6.4. Control regions enriched in background contributions, described in Sect. 6.5, are considered in the statistical analysis of the data, while validation regions are considered only to qualitatively assess the modelling of specific background contributions, as detailed in Sect. 6.6. The theoretical and experimental uncertainties of the signal and background predictions are described in Sect. 7.2.

7.1 Background contribution estimation

The dominant contributions entering the analysis SR are \(Z(\rightarrow \nu \nu )\gamma \,+\,\text {jets}\) and \(W(\rightarrow \ell \nu )\gamma \,+\,\text {jets}\) events in which the lepton from the \(W\) decay is lost mostly because it falls outside of the \(p_{\text {T}}\) or \(\eta \) acceptance. Roughly 28% of \(W\gamma \,+\,\text {jets}\) events in the 0-lepton SR come from hadronic \(\tau \)-lepton decays with truth \(p_{\text {T}} >20\) \(\text {Ge}\text {V}\) and \(|\eta |<2.5\); this fraction is too small to benefit from a veto on reconstructed hadronic \(\tau \)-lepton decays, which would incur additional uncertainties on their reconstruction efficiency. The uncertainty in the modelling of the lost lepton is included in the MC simulation, as detailed in Sect. 7.2. In order to validate the theoretical and experimental uncertainties, these major backgrounds are tested in the CRs described in Sect. 6 characterized by the same requirements as those of the SR but requiring events with exactly one lepton or reversing the \(C_\gamma \) requirement. The CRs with one selected lepton (e.g. \(W_{e\nu }^\gamma ~\mathrm {CR}\) and \(W_{\mu \nu }^\gamma ~\mathrm {CR}\) ) are expected to be dominated by \(W(\rightarrow \ell \nu )\gamma \,+\,\text {jets}\) events while the \(Z_{\mathrm {Rev.Cen.}}^{\gamma }~\mathrm {CR}\) with the reversed \(C_\gamma \) requirement is expected to be dominated by strong production of \(Z(\rightarrow \nu \nu )\gamma \,+\,\text {jets}\) events. The background yield compared with the data in the aforementioned CRs is utilized to determine the normalization of the \(W(\rightarrow \ell \nu )\gamma \,+\,\text {jets}\) and \(Z(\rightarrow \nu \nu )\gamma \,+\,\text {jets}\) backgrounds by allowing their normalization to float in the different fit models, as described in Sect. 8.

An additional background contribution results from \(Z\,+\,\text {jets}\) or \(W\,+\,\text {jets}\) events in which one of the jets is misreconstructed as a photon. The simulation described in Sect. 4 is not expected to properly reproduce the rate of jets misreconstructed as photons. Therefore, the \(\text {jet}\rightarrow \gamma \) misreconstruction rate is estimated using a technique tuned on data and similar to the one described in Ref. [123]. The method, often referred to as an ‘ABCD’ method, relies on the evaluation of the photon candidate event yield in a two-dimensional plane defined by the amount of transverse energy deposited in clusters of cells within a cone of size \(\Delta R = 0.4\) around the photon, excluding the photon cluster itself (i.e. isolation), and by the quality of the photon identification criteria (tightness) [104]. A photon signal region (region A) is defined by photon candidates that are isolated and satisfy the tight identification requirements described in Sect. 5. Three background regions are defined in the isolation–tightness plane, consisting of photon candidates which are tight but non-isolated (region B), non-tight but isolated (region C) or neither tight nor isolated (region D). A photon candidate is defined as non-isolated if it does not satisfy the requirement on the amount of isolation transverse energy, while it is classified as non-tight if it fails the tight identification but satisfies a modified set of requirements related to four of the selections associated with the shower-shape variables computed from the energy deposits in the first layer of the EM calorimeter. No correlation between the photon identification and isolation requirements would imply that the numbers of photon candidates in the four regions (A, B, C, D) satisfy the condition \(N^A/N^B = N^C/N^D\). Although this condition is almost fully satisfied, the residual correlation between the photon isolation and tightness is taken into account through correction factors estimated from MC simulations, differing from unity by up to 40%. A further correction to the described method is included to take into account the contamination from real photons in the three regions which are supposed to be dominated by fake photons (B, C, D). This contribution is evaluated using MC simulation and is parameterized through coefficients representing the fraction of real photons in each of the aforementioned regions relative to the fraction of real photons observed in region A. With these two corrections, this method determines the \(\text {jet}\rightarrow \gamma \) misreconstruction rate and consequently the contribution due to this background in all the kinematic regions considered in this analysis. The non-tight photon and isolation definitions were varied to compute a relative systematic uncertainty, estimated to be 80–90% for this background process.

The background contribution due to electrons misidentified as photons is estimated through an \(e^\pm \rightarrow \gamma \) misreconstruction rate (fake rate), determined from a comparison of the rates of \(Z\) boson reconstruction in the \(e^\pm \gamma \) and \(e^+e^-\) final states, as in Ref. [124]. This background is small in the EW \(Z\gamma \,+\,\text {jets}\) measurement and in the Higgs boson invisible decays interpretation but is more relevant in the dark-photon interpretation. The full Run 2 dataset is used to select \(Z \rightarrow e{}e\) events in which the electron pairs in the final state are either reconstructed as an \(e^+e^-\) pair or misreconstructed as an \(e^\pm \gamma \) pair. The invariant mass \(m_{ee}\) or \(m_{e\gamma }\) is required to be consistent with the \(Z\) boson mass within 10 \(\text {Ge}\text {V}\). The yields of Z events are then obtained from a simultaneous fit to the \(m_{ee}\) or \(m_{e\gamma }\) distributions, in order to subtract the contamination from jets misidentified as electrons or photons in the two samples using an extrapolation from the sidebands of the pair’s mass. The \(e^\pm \rightarrow \gamma \) misidentification rate, measured as a function of \(|\eta |\) and \(p_{\text {T}}\), varies between 1.5% and 9%, with larger values associated with larger values of \(|\eta |\), since the misidentification rate depends on the amount of material in front of the calorimeter. The background contribution from misidentified electrons is evaluated in the different regions considered in this analysis by applying the calculated \(e^\pm \rightarrow \gamma \) misidentification rates to event yields resulting from the same criteria except that an electron is required instead of a photon. The misidentification rate includes uncertainties from varying the fitted range of the pair mass, turning the jet background subtraction on and off, and accounting for biases in the energy of the photon compared to that of the electron. The total uncertainties vary from 30% for \(p_{\text {T}} = 15~\text {Ge}\text {V}{}\) to 15% for \(p_{\text {T}} = 100~\text {Ge}\text {V}{}\).

The \(\gamma \,+\,\text {jet}\) background is a minor one in this analysis since these events do not have intrinsic \(E_{\text {T}}^{\text {miss}}\), other than the small contribution from the presence of neutrinos in heavy-flavour jet decays. While only a small fraction of such events exceed the \(E_{\text {T}}^{\text {miss}}\) requirement of the SR definition, most of this background contribution results from jet mismeasurement, yielding a significant amount of misreconstructed \(E_{\text {T}}^{\text {miss}}\). The sample of simulated events for this large cross-section process is limited, so a jet smearing approach is used to increase the sample size by a factor of 20. A simulated sample of \(\gamma \,+\,\text {jet}\) events is filtered with a set of criteria looser than those used in the SR definition so that events include one identified photon and two or more reconstructed jets with \(|\Delta \eta _{\text {jj}} |>2.5\). The leading and subleading reconstructed jet \(p_{\text {T}}\) must be larger than 50 and 30 \(\text {Ge}\text {V}\), respectively. The energy of each truth jet, which includes neutrino momenta, in these events is smeared according to the jet energy response evaluated in bins of \(p_{\text {T}}\) of the corresponding truth-level jets; jet \(\phi \) and \(\eta \) are smeared with a Gaussian distribution according to the angular resolution of PFlow jets as measured in 13 \(\text {Te}\text {V}\) data [125]. The pile-up jet energies are not smeared, but they are included in the jet counting. After all the truth jets have been smeared, the corresponding JVT and fJVT tagging scores are recalculated for each jet. The true-\(E_{\text {T}}^{\text {miss}}\) magnitude and direction due to heavy-flavour decay neutrinos are resampled from simulated sample truth information. In addition to this redefinition of the \(E_{\text {T}}^{\text {miss}}\) in the event, corrections due to jet smearing are also propagated to the reconstructed \(E_{\text {T}}^{\text {miss}}\) as well as all other kinematic quantities used to define the SR. The \(\gamma \,+\,\text {jet}\) sample obtained with this procedure is normalized according to the simulation-to-data comparison in the \(\text {Low-}E_{\text {T}}^{\text {miss}} ~\mathrm {VR}\) described in Sect. 6, after subtracting all the other background contributions in that region. Normalization to data absorbs any residual contributions of detector noise or other misreconstructed jets. The resulting normalization, which was found to be statistically consistent over all data-taking years, is \(0.91\pm 0.36\) with the uncertainty coming from the statistical uncertainties of the data. Uncertainties in the extrapolation from the \(\text {Low-}E_{\text {T}}^{\text {miss}} ~\mathrm {VR}\) are computed using the difference between the \(E_{\text {T}}^{\text {miss}}\) trigger efficiencies for \(\gamma \,+\,\text {jet}\) and \(Z(\rightarrow \nu \nu )\gamma \,+\,\text {jets}\) events, which results in a 75% uncertainty in yield. Lastly, the smearing parameterizations are varied within their uncertainties, and the truth-\(E_{\text {T}}^{\text {miss}}\) resampling is turned on and off. The total uncertainty in this background is roughly 90%.

Some of the events in the \(W(\rightarrow \ell \nu )\gamma \,+\,\text {jets}\) CR are events where a jet or a photon is misidentified as a lepton. Events of this kind result from \(\gamma \,+\,\text {jet}\) production in which a jet is misidentified as a lepton, diphoton production where a photon mimics the prompt electron, or to multijet events where one jet mimics the photon and another one mimics the lepton. The contribution from fake-lepton events is found to be negligible in the case of events with one muon. To evaluate this contribution to the \(W_{e\nu }^\gamma ~\mathrm {CR}\), a corresponding CR (\(\text {Fake-}e~\mathrm {CR}\)) is defined, as discussed in Sect. 6.5. The ratio of the yields of fake electrons in the \(W_{e\nu }^\gamma ~\mathrm {CR}\) and \(\text {Fake-}e~\mathrm {CR}\), labelled \({\mathcal {R}}_{\text {fake-}e}\), is estimated in the same regions except that the one electron satisfying the tight identification requirements is replaced by an electron satisfying a loose identification requirement but failing the tight \(W_{e\nu }^\gamma ~\mathrm {CR}\) electron definition. This is called the anti-ID selection. The number of data events in the anti-ID \(W_{e\nu }^\gamma ~\mathrm {CR}\), after subtracting residual contributions from prompt-lepton events using simulation, is divided by the number of data events in the anti-ID \(\text {Fake-}e~\mathrm {CR}\) to compute \({\mathcal {R}}_{\text {fake-}e}\). The ratio \({\mathcal {R}}_{\text {fake-}e}\) is measured to be \(0.14 \pm 0.11\) in the baseline selection (Sect. 6.1) and \(0.26 \pm 0.10\) in the selection used in the \(H\rightarrow \gamma \gamma _{\text {d}}\) search (Sect. 6.4), with the uncertainties coming from statistical uncertainties of the data. This ratio is used to scale the fake-electron contributions in the \(\text {Fake-}e~\mathrm {CR}\) to obtain the expected background in the \(W_{e\nu }^\gamma ~\mathrm {CR}\) .

7.2 Systematic uncertainties

Theoretical uncertainties affect all simulated signal and background processes. They originate from the limited order at which the matrix elements are calculated, the matching of those calculations to parton showers, and the uncertainty of the proton PDFs.

For the \(Z\gamma \,+\,\text {jets}\) and \(W\gamma \,+\,\text {jets}\) processes the higher-order matrix element effects and parton-shower-matching uncertainties are assessed by varying the renormalization and factorization scale choices used in the event generation. The effect of resummation and CKKW matching scale [63] choices are found to be negligible. The factorization and renormalization scales are varied up and down by a factor of two using event weights varied in the  MC simulation of strong production, while they are varied in separate calculations in