Abstract

One of the simplest viable models for dark matter is an additional neutral scalar, stabilised by a \(\mathbb {Z}_2\) symmetry. Using the GAMBIT package and combining results from four independent samplers, we present Bayesian and frequentist global fits of this model. We vary the singlet mass and coupling along with 13 nuisance parameters, including nuclear uncertainties relevant for direct detection, the local dark matter density, and selected quark masses and couplings. We include the dark matter relic density measured by Planck, direct searches with LUX, PandaX, SuperCDMS and XENON100, limits on invisible Higgs decays from the Large Hadron Collider, searches for high-energy neutrinos from dark matter annihilation in the Sun with IceCube, and searches for gamma rays from annihilation in dwarf galaxies with the Fermi-LAT. Viable solutions remain at couplings of order unity, for singlet masses between the Higgs mass and about 300 GeV, and at masses above \(\sim \)1 TeV. Only in the latter case can the scalar singlet constitute all of dark matter. Frequentist analysis shows that the low-mass resonance region, where the singlet is about half the mass of the Higgs, can also account for all of dark matter, and remains viable. However, Bayesian considerations show this region to be rather fine-tuned.


1 Introduction

Dark matter (DM) accounts for the majority of the matter in the Universe, but its nature remains a mystery. It has been known for some time [1,2,3] that GeV-scale particle DM can accurately reproduce the observed relic abundance of DM, provided that it has an interaction strength with standard model (SM) particles that is comparable to that of the weak force. This is the Weakly Interacting Massive Particle (WIMP) paradigm.

The simplest WIMP model is the “scalar singlet” or scalar “Higgs-portal” scenario, in which one adds to the SM a massive real scalar field S uncharged under the SM gauge group [4,5,6]. S is stabilised by a \(\mathbb {Z}_2\) symmetry, and never obtains a vacuum expectation value (VEV). The only renormalisable interactions between the singlet and the SM allowed by these symmetries arise from a Lagrangian term of the form \(S^2|H|^2\). This term gives the singlet a so-called “Higgs portal” for interacting with the SM, leading to a range of possible phenomenological consequences. These include thermal production in the early Universe and present-day annihilation signals [7,8,9], direct detection and \(h\rightarrow SS\) decays [10]. A number of recent papers have investigated prospects for relaxing the resulting constraints by adding further scalars [11,12,13]. The singlet has also been implicated in inflation [14,15,16] and baryogenesis [17,18,19].

The simplicity of the scenario and the discovery of the Higgs boson in 2012 [20, 21] have focussed much attention on the singlet model in recent years. XENON100 and WMAP constraints were applied in Ref. [22], and an early global fit of the model using a similar range of data was performed in Ref. [23]. LHC Run I constraints from a CMS vector boson fusion analysis, and monojet and mono-Z analyses were shown to be very weak [24]; indeed, monojet constraints on all minimal Higgs-portal models (i.e. scalar, fermion or vector DM interacting with the SM only via the Higgs portal) are weak [25]. Implications of the Higgs mass measurement and a detailed treatment of direct and indirect detection were explored in Ref. [26], followed by the application of direct limits from the LUX and PandaX experiments [27,28,29]. Anti-proton data can be important in the region of the Higgs resonance [27, 30], and competitive with the LUX limits at higher DM masses, but are ultimately prone to substantial cosmic ray propagation uncertainties. Discovery prospects at future colliders have been explored for the 14 TeV LHC and a 100 TeV hadron collider [31, 32], and the International Linear Collider [33].

The most comprehensive recent studies were presented in Refs. [26, 34, 35]. The first pair of papers examined the scalar singlet scenario in light of recent (and projected) LHC Higgs invisible width measurements [36,37,38], the Planck relic density measurement [39], Planck and WMAP CMB constraints on DM annihilation at the time of recombination [39,40,41], Fermi-LAT analysis of gamma rays in the direction of 15 dwarf spheroidal galaxies using 6 years of Pass 8 data [42], and LUX limits on the spin-independent WIMP-nucleon scattering cross-section [43]. These studies also investigated the prospects for detection in gamma rays by the Cherenkov Telescope Array (CTA; [44,45,46]), and for direct detection by XENON1T [47]. Reference [35] presented a global fit to determine the regions of the scalar singlet model space that can explain the apparent excess of gamma rays observed by Fermi towards the Galactic centre, frequently interpreted as evidence for DM annihilation [48,49,50,51,52,53,54]. This included a treatment of the Planck relic density constraint, LHC invisible Higgs width constraints, direct search data from LUX (and projections for XENON1T and DARWIN), and constraints from Fermi-LAT searches for DM annihilation in dwarf galaxies and \(\gamma \)-ray lines at the Galactic centre.

Although \(\gamma \)-ray lines and other signals from the Galactic centre in the context of this model have received a reasonable amount of attention [8, 35, 55,56,57], in general these signals are relevant only if the singlet is produced non-thermally, as the regions of parameter space where such signals are substantial have quite low thermal relic abundances [26]. Fitting the excess at the Galactic centre requires relatively large couplings, which in turn imply too little DM from thermal freeze-out. Some previous studies have solved this issue by assuming an unspecified additional production mechanism. This reduces the predictability of the theory, as the cosmological abundance of scalar singlets ceases to be a prediction. We will take a different approach, allowing for the possibility that the scalar singlet constitutes only a sub-component of DM, and permitting a different species (e.g. axions) to make up the rest. Indeed, as we show in this paper, experiments are now so sensitive to DM signals that they can probe singlet models constituting less than a hundredth of a percent of the total DM.

The purpose of the present paper is twofold. First and foremost, we provide the most comprehensive study yet of the scalar singlet scenario, in a number of ways. We augment the particle physics model parameters with a series of nuisance parameters characterising the DM halo distribution, the most important SM masses and couplings, and the nuclear matrix elements relevant for the calculation of direct search yields. These are included in the scan as free parameters, and are constrained by a series of likelihoods derived from the best current knowledge of each observable (and in some cases, their correlations). Compared to the constraints used in Refs. [26, 34], we add improved direct detection likelihoods [58] from LUX [59], PandaX [60], SuperCDMS [61] and XENON100 [62], as well as IceCube limits on DM annihilation to neutrinos in the core of the Sun [63, 64]. We also test some benchmark models obtained in our scan for stability of the electroweak vacuum. Given the recent preference for astrophysical explanations of the Fermi-LAT Galactic centre excess [65,66,67,68,69,70,71], we do not add this to the scan as a positive measurement of DM properties, unlike in Ref. [35]. We explore the extended parameter space in more detail than has previously been attempted, using four different scanning algorithms, and more stringent convergence criteria than previous studies. The secondary purpose of this paper is to provide an example global statistical analysis using the Global and Modular Beyond-Standard Model Inference Tool (GAMBIT) [72], for a DM model where extensive comparison literature exists.

In Sect. 2, we describe the Lagrangian and parameters of the scalar singlet model, discuss our astrophysical assumptions, and define the nuisance parameters that we include in our global fit. Sect. 3 gives details of our scan, including the likelihood terms that we include for each constraint, the sampling algorithms we employ, and their settings. We present the latest status of the singlet model in Sect. 4, before concluding in Sect. 5. All input files, samples and best-fit benchmarks produced for this paper are publicly accessible from Zenodo [73].

2 Physics framework

2.1 Model definition

The renormalisable terms involving a new real singlet scalar S, permitted by the \(\mathbb {Z}_2\), gauge and Lorentz symmetries, are
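
\[ \mathcal{L}_{\scriptscriptstyle S} = -\tfrac{1}{2}\mu_{\scriptscriptstyle S}^2 S^2 - \tfrac{1}{2}\lambda_{h\scriptscriptstyle S} S^2 |H|^2 - \tfrac{1}{4}\lambda_{\scriptscriptstyle S} S^4 + \tfrac{1}{2}\partial_\mu S\,\partial^\mu S. \tag{1} \]
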
From left to right, these are: the bare S mass, the Higgs-portal coupling, the S quartic self-coupling, and the S kinetic term. Because S never obtains a VEV, the model has only three free parameters: \(\mu _{\scriptscriptstyle S}^2\), \(\lambda _{h\scriptscriptstyle S}\) and \(\lambda _{\scriptscriptstyle S}\). Following electroweak symmetry breaking, the portal term induces \(h^2S^2\), \(v_0hS^2\) and \(v_0^2S^2\) terms, where h is the physical Higgs boson and \(v_0 = 246\) GeV is the VEV of the Higgs field. The additional \(S^2\) term leads to a tree-level singlet mass
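
\[ m_{\scriptscriptstyle S} = \sqrt{\mu_{\scriptscriptstyle S}^2 + \tfrac{1}{2}\lambda_{h\scriptscriptstyle S}\, v_0^2}. \tag{2} \]
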
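The scans vary the physical mass \(m_{\scriptscriptstyle S}\) and \(\lambda_{h\scriptscriptstyle S}\) rather than \(\mu_{\scriptscriptstyle S}^2\). It is therefore convenient to move between the two parametrisations using \(m_{\scriptscriptstyle S}^2 = \mu_{\scriptscriptstyle S}^2 + \tfrac{1}{2}\lambda_{h\scriptscriptstyle S} v_0^2\). The helper names below are hypothetical and only illustrate the arithmetic. They are not GAMBIT functions.

```python
import math

V0 = 246.0  # Higgs VEV in GeV, as quoted in the text


def singlet_mass(mu_s2: float, lam_hs: float, v0: float = V0) -> float:
    """Tree-level singlet mass m_S = sqrt(mu_S^2 + lam_hS * v0^2 / 2), in GeV."""
    m2 = mu_s2 + 0.5 * lam_hs * v0**2
    if m2 < 0.0:
        # The origin would be unstable in the S direction, so S would acquire a VEV
        raise ValueError("negative m_S^2: incompatible with an unbroken Z2")
    return math.sqrt(m2)


def bare_mass_squared(m_s: float, lam_hs: float, v0: float = V0) -> float:
    """Inverse relation: mu_S^2 = m_S^2 - lam_hS * v0^2 / 2, in GeV^2."""
    return m_s**2 - 0.5 * lam_hs * v0**2
```

For example, a singlet at \(m_{\scriptscriptstyle S}=62.5\) GeV with \(\lambda_{h\scriptscriptstyle S}=1\) requires a negative \(\mu_{\scriptscriptstyle S}^2\) of about \(-2.6\times 10^4\) GeV\(^2\). This is harmless as long as \(m_{\scriptscriptstyle S}^2\) itself stays positive.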
Dark matter phenomenology is driven predominantly by \(m_{\scriptscriptstyle S}\) and \(\lambda _{h\scriptscriptstyle S}\), with viable solutions known to exist [26, 34] in a number of regions:

1. the resonance region around \(m_{\scriptscriptstyle S}\sim m_h/2\), where couplings are very small (\(\lambda _{h\scriptscriptstyle S}<10^{-2}\)) but the singlet can nevertheless constitute all of the observed DM,
2. the resonant “neck” region at \(m_{\scriptscriptstyle S}=m_h/2\), with large couplings but an extremely small relic S density, and
3. a high-mass region with order unity couplings.

The parameter \(\lambda _{\scriptscriptstyle S}\) remains relevant when considering DM self-interactions (e.g. [74]), and the stability of the electroweak vacuum. In the SM, the measured values of the Higgs and top quark masses indicate that the electroweak vacuum is not absolutely stable, but rather meta-stable [75]. This means that although the present vacuum is not the global minimum of the scalar potential, its expected lifetime exceeds the age of the Universe. Although this is not inconsistent with the existence of the current vacuum, one appealing feature of scalar extensions of the SM is that the expected lifetime can be extended significantly, or the stability problem solved entirely, by making the current vacuum the global minimum.

The stability of the electroweak vacuum has been a consideration in many studies of scalar singlet extensions to the SM [14, 76,77,78,79,80,81,82,83,84,85,86], typically appearing along with constraints from perturbativity, direct detection experiments and the relic abundance of DM. Vacuum stability is therefore an interesting aspect of the scalar singlet model (and indeed of any UV-complete model) to study. In this paper, however, we primarily treat the scalar singlet DM model as a low-energy effective theory, and do not consider \(\lambda _{\scriptscriptstyle S}\) as a relevant parameter. In a future fit, we plan to explore renormalisation of the scalar singlet model over the full range of scales, from electroweak to Planck, including full calculations of perturbativity and the lifetime of the electroweak vacuum. Here, for interest, we simply check the stability of the electroweak vacuum for a few of our highest-likelihood parameter points.

2.2 Relic density and Higgs invisible width

In order to calculate the relic density of S, we need to solve the Boltzmann equation [87]
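
\[ \frac{\mathrm{d}n_{\scriptscriptstyle S}}{\mathrm{d}t} + 3Hn_{\scriptscriptstyle S} = \langle \sigma v_{\mathrm{rel}}\rangle \left(n_{{\scriptscriptstyle S},\mathrm{eq}}^2 - n_{\scriptscriptstyle S}^2\right), \tag{3} \]
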
where \(n_{\scriptscriptstyle S}\) is the DM number density, \(n_{{\scriptscriptstyle S},\mathrm {eq}}\) is the number density if the DM population were in chemical equilibrium with the rest of the Universe, H is the Hubble rate, and \(\langle \sigma v_{\mathrm {rel}}\rangle \) is the thermally averaged self-annihilation cross-section times the relative velocity of the annihilating DM particles (technically the Møller velocity). The non-averaged cross-section \(\sigma v\) depends on the centre-of-mass energy of the annihilation \(\sqrt{s}\), and the thermal average depends on temperature T. The average is given by

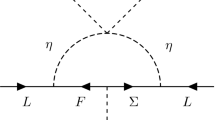

where for convenience we have expressed the result in terms of the relative velocity of the annihilating S particles in the centre-of-mass frame, \(v_\mathrm{cms}=2\sqrt{1-4m_{\scriptscriptstyle S}^2/s}\). For the case of the scalar singlet model, the non-averaged cross-section for annihilation into all final states except hh is [26]
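
\[ \sigma v_\mathrm{cms} = \frac{2\lambda_{h\scriptscriptstyle S}^2 v_0^2}{\sqrt{s}}\,|D_h(s)|^2\,\varGamma_h(\sqrt{s}), \qquad |D_h(s)|^2 \equiv \frac{1}{(s-m_h^2)^2 + m_h^2\varGamma_h^2(m_h)}. \tag{5} \]
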
For \(m_{\scriptscriptstyle S}>m_h\), this expression needs to be supplemented with the partial annihilation cross-section into hh, given in Eq. A4 of Ref. [26].

We use the SM Higgs boson width \(\varGamma _h(\sqrt{s})\) as a function of the invariant mass of the resonance \(m_{h^*}=\sqrt{s}\), as implemented in DecayBit [88]. When \(m_{\scriptscriptstyle S}<m_h/2\), the Higgs can also decay invisibly to SS pairs. At tree level, the partial width for this channel is
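
\[ \varGamma_{h\rightarrow SS} = \frac{\lambda_{h\scriptscriptstyle S}^2 v_0^2}{32\pi m_h}\sqrt{1-\frac{4m_{\scriptscriptstyle S}^2}{m_h^2}}. \tag{6} \]
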
Solving Eq. 3 in this way is the standard method for calculating the relic density. It assumes that kinetic decoupling of DM from other species occurs well after chemical freeze-out. If this is not the case, one must solve a coupled system of differential equations rather than the single Boltzmann equation (Eq. 3) [90]. For the scalar singlet model, the standard approach is very accurate except at and below the Higgs resonance, where \(m_{\scriptscriptstyle S}\sim m_h/2\). Here, a more accurate treatment can change the relic density by up to an order of magnitude in the range \(53~\mathrm{GeV}\lesssim m_{\scriptscriptstyle S} \lesssim 63\) GeV [91]. Because \(\sigma v_{\mathrm {rel}}\) is resonantly enhanced there, even very small values of \(\lambda _{h\scriptscriptstyle S}\) can avoid thermal overproduction, and at such small couplings DM undergoes early kinetic decoupling. Although this effect should arguably be included in future fits for the sake of completeness, it has little impact on our final results because it only affects a relatively small mass range.
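The standard freeze-out calculation can be illustrated with a textbook simplification of the Boltzmann equation. This sketch is not the DarkSUSY solver used in our fits. It makes several assumptions:

- It works with the yield \(Y=n_{\scriptscriptstyle S}/s\) and \(x=m_{\scriptscriptstyle S}/T\).
- It takes a constant (s-wave) \(\langle\sigma v\rangle\), ignoring the temperature dependence that the thermal average of Eq. 4 produces near the resonance.
- It holds the effective degrees of freedom fixed at \(g_*=g_{*s}=100\).

With these choices the equation becomes \(\mathrm{d}Y/\mathrm{d}x = -(\lambda/x^2)(Y^2-Y_\mathrm{eq}^2)\), with \(\lambda=\sqrt{\pi/45}\,g_*^{1/2}M_\mathrm{Pl}\,m_{\scriptscriptstyle S}\langle\sigma v\rangle\). The early-time equation is very stiff, so the sketch uses backward-Euler steps. Each step reduces to solving a quadratic for \(Y\).

```python
import math

M_PL = 1.22e19                  # Planck mass in GeV
GEV2_TO_CM3_PER_S = 1.1674e-17  # 1 GeV^-2 expressed in cm^3 s^-1 ((hbar c)^2 * c)
OMEGA_H2_PER_GEV = 2.74e8       # Omega h^2 = 2.74e8 * (m / GeV) * Y_infinity


def relic_density(m_dm, sigma_v_cm3s, g_star=100.0, g_dof=1.0,
                  x_start=1.0, x_end=1000.0, n_steps=4000):
    """Freeze-out Omega h^2 for constant <sigma v> (simplified sketch, not DarkSUSY)."""
    sigma_v = sigma_v_cm3s / GEV2_TO_CM3_PER_S  # convert cm^3/s to GeV^-2
    lam = math.sqrt(math.pi / 45.0) * math.sqrt(g_star) * M_PL * m_dm * sigma_v

    def y_eq(x):
        # Non-relativistic equilibrium yield, assuming g_*s = g_* = g_star
        return 0.145 * (g_dof / g_star) * x**1.5 * math.exp(-x)

    ratio = (x_end / x_start) ** (1.0 / n_steps)  # log-spaced steps in x
    x = x_start
    y = y_eq(x)  # start in chemical equilibrium
    for _ in range(n_steps):
        x_next = x * ratio
        a = (x_next - x) * lam / x_next**2
        c = y + a * y_eq(x_next) ** 2
        # Backward Euler: a*Y^2 + Y - c = 0; numerically stable positive root
        y = 2.0 * c / (1.0 + math.sqrt(1.0 + 4.0 * a * c))
        x = x_next
    return OMEGA_H2_PER_GEV * m_dm * y
```

For a 100 GeV particle with \(\langle\sigma v\rangle\approx 2.2\times10^{-26}\) cm\(^3\) s\(^{-1}\), this returns \(\varOmega h^2\) of order 0.1. Larger cross-sections give smaller abundances. This inverse scaling is why regions with large \(\lambda_{h\scriptscriptstyle S}\) underpopulate the observed relic density.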

2.3 Direct detection

The predicted number of events in a direct detection experiment is

\[ N_\mathrm{p} = MT\int_0^\infty \phi(E)\,\frac{\mathrm{d}R}{\mathrm{d}E}\,\mathrm{d}E, \tag{7} \]

with M the detector mass, T the exposure time, and \(\phi (E)\) the detector response function. The latter encodes the fraction of recoil events of some energy E that are expected to be detected, within some analysis region.

The differential recoil rate \(\frac{\mathrm{d}R}{\mathrm{d}E}\) depends on the nuclear scattering cross-section. The scalar singlet model has no spin-dependent interactions with nuclei. The spin-independent WIMP-nucleon cross-section is
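
\[ \sigma_\mathrm{SI} = \frac{\lambda_{h\scriptscriptstyle S}^2 f_N^2 m_N^4}{4\pi m_h^4 \left(m_{\scriptscriptstyle S}+m_N\right)^2}, \tag{8} \]
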
where \(m_N\) is the nucleon mass, and \(f_N\) is the effective Higgs–nucleon coupling

\[ f_N = \frac{2}{9} + \frac{7}{9}\sum_{q=u,d,s} f^{(N)}_{Tq}. \tag{9} \]

The three light quark nuclear matrix elements \(f^{(N)}_{Tq}\) can be calculated from the nuclear matrix elements that describe the quark content of the proton and neutron,

where \(m_l \equiv (1/2) (m_u + m_d)\), and \(N \in \{p,n\}\). See Ref. [26] for details.
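As a numerical illustration, the sketch below evaluates \(f_N = 2/9 + (7/9)\sum_q f^{(N)}_{Tq}\) and \(\sigma_\mathrm{SI} = \lambda_{h\scriptscriptstyle S}^2 f_N^2 m_N^4/[4\pi m_h^4 (m_{\scriptscriptstyle S}+m_N)^2]\), converting from GeV\(^{-2}\) to cm\(^2\). The \(f_{Tq}\) inputs and the nucleon mass are illustrative assumptions. They are not the nuisance-parameter values used in our scans, which are detailed in Ref. [58].

```python
import math

GEV2_TO_CM2 = 3.894e-28  # 1 GeV^-2 in cm^2, i.e. (hbar c)^2
M_H = 125.09             # Higgs mass in GeV (central value quoted in Sect. 3.1)
M_N = 0.939              # nucleon mass in GeV (illustrative)


def f_n(f_tu, f_td, f_ts):
    """Effective Higgs-nucleon coupling f_N = 2/9 + (7/9) * (f_Tu + f_Td + f_Ts)."""
    return 2.0 / 9.0 + 7.0 / 9.0 * (f_tu + f_td + f_ts)


def sigma_si_cm2(lam_hs, m_s, fN, m_h=M_H, m_n=M_N):
    """Spin-independent S-nucleon cross-section in cm^2 (masses in GeV)."""
    sigma_gev = lam_hs**2 * fN**2 * m_n**4 / (4.0 * math.pi * m_h**4 * (m_s + m_n) ** 2)
    return sigma_gev * GEV2_TO_CM2


# Hypothetical light-quark matrix elements, for illustration only
fN = f_n(0.02, 0.03, 0.04)
sigma = sigma_si_cm2(0.1, 100.0, fN)
```

The quadratic dependence on both \(\lambda_{h\scriptscriptstyle S}\) and \(f_N\) is why the nuclear matrix elements are worth treating as nuisance parameters. A shift in \(f_N\) rescales every direct detection prediction in the same way as a shift in the coupling.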

Halo uncertainties can have a significant impact on the interpretation of direct searches for DM [92]. For the DM halo in the Milky Way, we assume a generalised NFW profile, with a local Maxwell–Boltzmann speed distribution truncated at the local Galactic escape velocity. The only parameter of the density profile that we retain as a nuisance parameter is \(\rho _0\), the local DM density, although GAMBIT makes it straightforward to also include uncertainties arising from the DM velocity profile in future fits. For the scans of this paper, we assume a most probable speed \(v_0=235\) km s\(^{-1}\) [93, 94], and an escape velocity of \(v_{\mathrm {esc}}=550\) km s\(^{-1}\) [95]. See Ref. [58] for details.

2.4 Indirect detection

The flux of gamma rays from DM annihilation factorises into a part \(\varPhi \) that only depends on the particle physics properties and a part J that depends only on the astrophysical distribution of DM.

For the gamma-ray flux in energy bin i with width \(\varDelta E_i \equiv E_{\text {max},i} - E_{\text {min},i}\), the particle physics factor is
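
\[ \varPhi_i = \sum_j \frac{\langle\sigma v\rangle_{0,j}}{8\pi m_{\scriptscriptstyle S}^2}\int_{E_{\text{min},i}}^{E_{\text{max},i}} \frac{\mathrm{d}N_{\gamma,j}}{\mathrm{d}E}\,\mathrm{d}E, \tag{12} \]
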
where \(\mathrm{d}N_{\gamma ,j}/\mathrm{d}E\) is the differential gamma-ray multiplicity for single annihilations into final state j, and \(\langle \sigma v\rangle _{0,j}\equiv \sigma v_j|_{v\rightarrow 0} \equiv \sigma v_j|_{s\rightarrow 4m_{\scriptscriptstyle S}^2}\) is the zero-velocity limit of the partial annihilation cross-section into final state j. This is the final-state-specific equivalent of Eq. 5. We compute the partial annihilation cross-sections for the singlet model using the expressions of Appendix A of Ref. [26], as implemented in DarkBit [58]. We obtain the predicted spectra \({\mathrm{d}N_{\gamma }}/{\mathrm{d}E}\) for each model point by using a Monte-Carlo showering simulation, detailed in Ref. [58].

The astrophysics factor for a given target k is
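
\[ J_k = \int_{\varDelta\varOmega_k}\mathrm{d}\varOmega\int_\text{l.o.s.}\mathrm{d}s\,\rho_{\scriptscriptstyle S}^2. \tag{13} \]
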
Here, \(\varDelta \varOmega _k\) denotes the solid angle over which the signal is integrated, l.o.s. indicates that the line element \(\mathrm{d}s\) runs along the line of sight to the target object, and \(\rho _{\scriptscriptstyle S}\) is the DM mass density within it.
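The factorisation can be made concrete with a short sketch of the particle-physics factor for one energy bin. It uses the self-conjugate normalisation \(\varPhi_i=\sum_j \langle\sigma v\rangle_{0,j}/(8\pi m_{\scriptscriptstyle S}^2)\int_{\varDelta E_i}(\mathrm{d}N_{\gamma,j}/\mathrm{d}E)\,\mathrm{d}E\), computes the bin integral with a midpoint rule, and multiplies by a J factor to give a flux. The spectrum `toy_dnde` is a purely hypothetical continuum shape. It stands in for the Monte-Carlo spectra used in the actual analysis, and the J value in the usage note is also made up.

```python
import math


def phi_bin(channels, m_dm, e_min, e_max, n=2000):
    """Particle-physics factor for one energy bin, in cm^3 s^-1 GeV^-2.

    channels: list of (<sigma v>_{0,j} in cm^3/s, dN_j/dE callable in GeV^-1).
    The bin integral of each spectrum uses the midpoint rule."""
    de = (e_max - e_min) / n
    total = 0.0
    for sigma_v0, dnde in channels:
        n_gamma = de * sum(dnde(e_min + (k + 0.5) * de) for k in range(n))
        total += sigma_v0 / (8.0 * math.pi * m_dm**2) * n_gamma
    return total


def toy_dnde(e, m_dm=100.0):
    """Hypothetical continuum spectrum in x = E/m, vanishing above the endpoint x = 1."""
    x = e / m_dm
    if x <= 0.0 or x >= 1.0:
        return 0.0
    return 0.73 * math.exp(-7.8 * x) / (x**1.5 * m_dm)
```

For instance, `phi_bin([(3e-26, toy_dnde)], 100.0, 1.0, 10.0) * 1e19` gives a flux in photons cm\(^{-2}\) s\(^{-1}\) for an assumed \(J_k=10^{19}\) GeV\(^2\) cm\(^{-5}\). The product form is what allows the \(J_k\) to be profiled separately from the particle physics in the dwarf likelihood.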

Neutrino telescopes also place bounds on DM models by searching for high-energy neutrinos from DM annihilation. The most likely signal in this respect comes from DM gravitationally captured by the Sun and concentrated in its core, where it would annihilate [64, 96]. This channel predominantly tests the mass and couplings of DM to nuclei rather than the annihilation cross-section, as the nuclear scattering leading to capture is the rate-limiting step for most models. Because the singlet model has no spin-dependent couplings to nuclei, neutrino telescope searches for annihilation in the Sun provide constraints only on the spin-independent scattering cross-section.

Owing to the uncertainties associated with cosmic ray propagation, we do not consider constraints from charged cosmic rays (primarily anti-protons and positrons). Radio signals from synchrotron emission by DM annihilation products in strong magnetic fields are not included in our analysis, as the associated field strengths are highly uncertain. Nor do we include CMB limits on DM annihilation, as Fermi dwarf limits are stronger at all masses of interest for this model. Finally, we do not consider limits implied by gamma-ray observations of the Galactic centre, whether by Fermi or ground-based gamma-ray telescopes, owing to the uncertainties involved in modelling the DM profile and astrophysical gamma-ray emission of the central Milky Way.

3 Scan details

3.1 Parameters and nuisances

A summary of the parameter ranges that we scan over for this paper is given in Tables 1 and 2.

Table 1 gives the singlet model parameters, along with the scanning priors that we use. We carry out two main types of scan: one over the full range of masses from 45 GeV to 10 TeV, intended to sample the entire parameter space, and another centred on lower masses at and below the Higgs resonance \(m_{\scriptscriptstyle S}\sim m_h/2\), in order to obtain a more detailed picture of the resonance region.

In addition to the effect of the singlet parameters, we also consider the effects of varying a number of SM, astrophysical and nuclear parameters within their allowed experimental uncertainties. Table 2 gives the full ranges of the 13 nuisance parameters that we vary in our scans, along with their central values. We assign flat priors to all nuisance parameters in Table 2, as they are all sufficiently well constrained that their priors are effectively irrelevant.

We allow for \({\pm }3\sigma \) excursions from the best estimates of the nuclear couplings. For the local DM density, we scan an asymmetric range about the central value, reflecting the log-normal likelihood that we apply to this parameter (Sect. 3.7). Detailed references for the central values and uncertainties of these parameters can be found in Ref. [58].

The central values of the up and down quark masses come from the 2014 edition of the PDG review [97]; we allow these parameters to vary by \({\pm }20\%\) in our fits, so as to encompass the approximate \(3\sigma \) range of correlated uncertainties associated with the mass ratio likelihoods implemented in PrecisionBit [88]. Given the large impact that the Higgs mass can have on the phenomenology of this model, we scan an extended range for this parameter, covering more than \({\pm }4\sigma \) around the central value quoted in the 2015 update to the PDG review [98] (\(m_h=125.09\pm 0.24\) GeV; see Sect. 3.7). The central value and \(\pm 3\sigma \) scan range for the top quark pole mass come from Ref. [99], and for all other SM nuisance parameters from Ref. [97].

We include the local DM density and nuclear matrix elements as nuisance parameters because of their impacts on direct detection and capture of singlet particles by the Sun. The strong coupling, Higgs VEV (determined by \(G_F\)), Higgs mass and quark masses all enter into the cross-sections for annihilation and/or scattering of S [26]. The electromagnetic coupling does not impact our fit beyond its own nuisance likelihood, but has a small effect on renormalisation of other parameters and therefore vacuum stability, which we investigate for a few benchmarks and will explore in detail in a follow-up paper.

3.2 Scanning procedure

Although 13 of the directions in the 15-dimensional parameter space are well constrained, efficiently sampling all 15 parameters simultaneously requires sophisticated scanning algorithms. We explore this space primarily with two different scanning packages interfaced via ScannerBit: a differential evolution sampler Diver, and an ensemble Markov Chain Monte Carlo (MCMC) known as T-Walk [100]. Both algorithms are the current state of the art when it comes to scaling with dimension [100], and thus are the natural choice for this study.

Both of these algorithms are particularly well suited for multimodal distributions, and each serves a purpose in this study. T-Walk allows efficient and accurate calculation of the Bayesian posterior distribution for the target model. The package can also be used for frequentist studies if the sampling density is amplified by a judicious choice of run parameters. However, T-Walk is far less efficient at sampling the profile likelihood in high-dimensional spaces than Diver [100]. Because we vary 15 parameters in total, we use Diver to produce high-quality profile likelihoods. Having identified all likelihood modes, and therefore all possible locations that might meaningfully contribute to the posterior, we then use T-Walk to produce posterior distributions, checking that it does not fail to locate any of the modes identified by Diver.

In addition to the ensemble MCMC and differential evolution scans, we also combine our results with those from a more traditional MCMC, GreAT, and the nested sampling algorithm MultiNest. These are also interfaced to ScannerBit [100]. Although it is not typically necessary to combine results from four different algorithms, here we demonstrate the power of the GAMBIT package, which allows us to use a range of scanning procedures on the same composite likelihood, in order to produce the most robust results possible.

As discussed in Sect. 2.1, the singlet parameter space features a viable region at \(m_{\scriptscriptstyle S}\approx m_h/2\). In this region, the annihilation of singlet DM to SM particles via s-channel Higgs exchange is resonantly enhanced, and a lower portal coupling is required to achieve the observed relic density. This region is not yet excluded by direct detection. However, probing this region of the parameter space in a scan over a wide mass range is difficult, even when using a logarithmic prior on the mass. To properly sample this region, we run a second scan with each sampler, using a flat prior over the range \(m_{\scriptscriptstyle S}\in [45,70]\) GeV. We also carry out an additional specially focussed low-mass scan with Diver in the “neck” region of the resonance, in order to obtain well-sampled contours in the most localised part of the allowed parameter space. We do this by excluding all points outside the range \(m_{\scriptscriptstyle S}\in [61.8,63.1]\) GeV.

The convergence criteria, population size and chain details are controlled by various settings for each sampler. The settings that we use in this paper are presented in Table 3. We chose these settings after extensive testing [100], to give the most stringent convergence and best exploration possible with each scanner and region. To a certain extent, some of these settings are overkill for the problem at hand, and the same physical inference could be achieved with fewer samples. However, the scans that we present here took only 26,000 core hours in total to compute, and the scan that dominates most of the contours (the full-range Diver scan) took just 3 h on 10 \(\times \) 24-core nodes, i.e. around 700 core hours. Compared to the time required to compute fits that include direct LHC simulations [101,102,103], the additional sampling we do here costs practically nothing, and noticeably improves the resolution of our results. We refer the reader to Ref. [100] for further details of the scanners, their settings and underlying algorithms.

The profile likelihoods that we present in this paper are based on the combination of all samples from all scans, which contain \(5.7\times 10^7\) valid samples altogether. In contrast, the posteriors that we show come exclusively from the full-range T-Walk scan.

We compute and plot profile likelihoods and posteriors using pippi [104], obtaining profile likelihoods by maximising the log-likelihood in parameter bins over all other parameters not shown in a given plot, and posteriors by integrating the posterior density over the parameters not shown in each plot. We compute confidence regions and intervals by determining the appropriate iso-likelihood contour relative to the best-fit likelihood for 1 or 2 degrees of freedom, corresponding to 1D and 2D plots, respectively. We compute Bayesian credible regions and intervals as parameter ranges containing the relevant posterior mass according to the maximum posterior density requirement. Further details can be found in Ref. [104].
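The two ingredients of the frequentist plots can be sketched generically:

1. Profile the samples by keeping the maximum log-likelihood in each bin.
2. Find the drop in \(\ln\mathcal{L}\) from the best fit that bounds a given confidence level. This follows from Wilks' theorem with 1 or 2 degrees of freedom.

This is a minimal illustration of the technique under those assumptions, not pippi's implementation. The function names are hypothetical.

```python
import math
from statistics import NormalDist


def delta_lnl_threshold(n_sigma, dof):
    """Drop in ln L below the best fit that bounds an n-sigma region (Wilks, 1 or 2 dof)."""
    p = 2.0 * NormalDist().cdf(n_sigma) - 1.0  # two-sided Gaussian coverage
    if dof == 1:
        chi2 = NormalDist().inv_cdf(0.5 * (1.0 + p)) ** 2  # equals n_sigma^2
    elif dof == 2:
        chi2 = -2.0 * math.log(1.0 - p)  # chi^2 CDF with 2 dof is 1 - exp(-x/2)
    else:
        raise ValueError("only 1 or 2 degrees of freedom are handled here")
    return 0.5 * chi2


def profile_1d(xs, lnls, x_min, x_max, n_bins):
    """Profile likelihood: maximum ln L over all samples falling in each x bin."""
    width = (x_max - x_min) / n_bins
    prof = [-math.inf] * n_bins
    for x, lnl in zip(xs, lnls):
        k = math.floor((x - x_min) / width)
        if 0 <= k < n_bins:
            prof[k] = max(prof[k], lnl)
    return prof
```

A bin lies inside the \(1\sigma\) region of a 1D plot if its profiled \(\ln\mathcal{L}\) is within 0.5 of the global maximum. For a 2D plot, the corresponding threshold is about 1.15.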

3.3 Relic density likelihood

To determine the thermal S relic density for each parameter combination, we solve the Boltzmann equation (Eq. 3) numerically with DarkBit [58], taking the partial annihilation rates for different final states from Eq. 5 supplemented at \(m_{\scriptscriptstyle S}> m_h\) with the expression for \(\langle \sigma v\rangle _{0,hh}\) from Appendix A of Ref. [26]. For \(m_{\scriptscriptstyle S}< 150\) GeV we use the SM Higgs partial widths contained in DecayBit (from Ref. [89]), whereas for \(m_{\scriptscriptstyle S}> 150\) GeV we revert to the tree-level expressions from Appendix A of Ref. [26], to avoid the impact of large 1-loop corrections to the Higgs self-interaction. We determine the effective invariant rate \(W_\mathrm{eff}\) from the partial annihilation cross-sections, and pass it on to the numerical Boltzmann solver of DarkSUSY [105] in order to obtain \(\varOmega _{\scriptscriptstyle S} h^2\).

We implement the relic density likelihood as an upper limit only, permitting models where the thermal abundance makes S a fraction of DM. Comparing with the relic abundance measured by Planck [39] (\(\varOmega _\text {DM} h^2 = 0.1188\pm 0.0010\), at \(1\sigma \)), we compute a marginalised Gaussian upper limit likelihood as described in Sect. 8.3.4 of Ref. [72]. Models that predict less than the measured relic density are assigned a likelihood contribution equal to that assigned to models that predict the observed value exactly. Models predicting more than the measured relic density are penalised according to a Gaussian function centred on the observed value. We adopt the DarkBit default value of 5% for the theoretical uncertainty on the relic density prediction, adding it in quadrature to the experimental uncertainty on the observed value. We note that this is a very conservative estimate of the theoretical uncertainty for this model, except in the resonance region (see Sect. 2.2).
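The qualitative shape of this likelihood can be sketched as a half-Gaussian. It is flat below the Planck value and Gaussian above it, with width \(\sigma=\sqrt{\sigma_\mathrm{obs}^2+(0.05\,\varOmega_{\scriptscriptstyle S}h^2)^2\). This is a simplified, unmarginalised stand-in. It does not reproduce the marginalised form of Ref. [72], and treating the 5% theory error as a fraction of the prediction is an assumption of the sketch.

```python
import math

OMEGA_OBS = 0.1188  # Planck central value quoted in the text
SIGMA_OBS = 0.0010  # 1 sigma experimental uncertainty
THEORY_FRAC = 0.05  # theory uncertainty, assumed here to be 5% of the prediction


def relic_lnl_upper(omega_pred):
    """Simplified half-Gaussian upper-limit ln L (normalisation constant dropped)."""
    if omega_pred <= OMEGA_OBS:
        return 0.0  # underabundant models score the same as an exact match
    sigma = math.hypot(SIGMA_OBS, THEORY_FRAC * omega_pred)  # quadrature sum
    return -0.5 * ((omega_pred - OMEGA_OBS) / sigma) ** 2
```

Because the theory term dominates the tiny Planck error, overabundant models are penalised on a scale set by the 5% prediction uncertainty rather than by \(\sigma_\mathrm{obs}\).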

For models that underpopulate the observed relic density, we rescale all direct and indirect signals to account for the fact that S then makes up only a fraction of the DM. This is internally consistent from the point of view of the model, and conservative in the sense that it suppresses direct and indirect signals in regions where the thermal abundance is less than the Planck value.
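The rescaling follows from how each signal depends on the S density. Define \(f=\varOmega_{\scriptscriptstyle S}h^2/\varOmega_\text {DM}h^2\).

- Direct detection rates are linear in the local S density, so they scale as \(f\).
- Annihilation signals from dwarf galaxies depend on the density squared, so they scale as \(f^2\).
- Under the usual assumption of capture-annihilation equilibrium in the Sun, the solar neutrino signal follows the capture rate, so it also scales as \(f\).

The sketch below shows this bookkeeping with hypothetical function names. Capping \(f\) at 1 is a choice of the sketch: overabundant models are penalised by the relic likelihood anyway.

```python
OMEGA_OBS = 0.1188  # Planck central value quoted in the text


def dm_fraction(omega_pred, omega_obs=OMEGA_OBS):
    """Fraction f of the observed DM in the form of S, capped at 1."""
    return min(omega_pred / omega_obs, 1.0)


def rescale_signals(omega_pred, direct_rate, annihilation_flux):
    """Rescale predictions computed as if S were all of the DM."""
    f = dm_fraction(omega_pred)
    return (f * direct_rate,            # scattering: linear in local S density
            f**2 * annihilation_flux)   # annihilation: quadratic in S density
```

A model with half the Planck abundance therefore has its direct detection rate halved, but its dwarf gamma-ray flux reduced by a factor of four.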

3.4 LHC Higgs likelihoods

When \(m_{\scriptscriptstyle S}<m_h/2\), the decay \(h\rightarrow S S\) is kinematically allowed, with a partial width given by Eq. 6. This decay is entirely invisible at hadron colliders. Constraints can therefore be placed on the scalar singlet model parameters from measurements of Higgs production and decay rates, and the implied limit on invisible decay channels of the Higgs. For the case of SM-like couplings, the 95% confidence level upper limit on the Higgs invisible branching fraction from LHC and Tevatron data is presently at the level of 19% [36]. We use the DecayBit implementation of the complete invisible Higgs likelihood, based on an interpolation of Figure 8 of [36].
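To see how the partial width translates into the quantity the LHC constrains, the sketch computes \(\mathrm{BR}(h\rightarrow SS)=\varGamma_{h\rightarrow SS}/(\varGamma_\mathrm{SM}+\varGamma_{h\rightarrow SS})\), using the tree-level width \(\varGamma_{h\rightarrow SS}=\lambda_{h\scriptscriptstyle S}^2v_0^2/(32\pi m_h)\sqrt{1-4m_{\scriptscriptstyle S}^2/m_h^2}\). It assumes a fixed SM total width of about 4.1 MeV, which is an approximate SM value not taken from this paper. It only compares the result to the 19% figure, whereas the fit uses the full DecayBit likelihood.

```python
import math

V0 = 246.0         # Higgs VEV in GeV
M_H = 125.09       # Higgs mass in GeV
GAMMA_SM = 4.1e-3  # assumed SM total Higgs width in GeV (approximate)


def gamma_h_to_ss(lam_hs, m_s, m_h=M_H, v0=V0):
    """Tree-level Gamma(h -> SS) in GeV; zero when the channel is closed."""
    if 2.0 * m_s >= m_h:
        return 0.0
    beta = math.sqrt(1.0 - 4.0 * m_s**2 / m_h**2)
    return lam_hs**2 * v0**2 / (32.0 * math.pi * m_h) * beta


def br_invisible(lam_hs, m_s):
    """Invisible branching fraction, assuming h -> SS is the only new channel."""
    g_inv = gamma_h_to_ss(lam_hs, m_s)
    return g_inv / (GAMMA_SM + g_inv)
```

For \(m_{\scriptscriptstyle S}=50\) GeV, \(\lambda_{h\scriptscriptstyle S}=0.01\) gives a branching fraction of roughly 7%. Doubling the coupling pushes it above 19%. The quadratic coupling dependence makes the invisible width so constraining below \(m_h/2\).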

3.5 Direct detection likelihoods

The dominant constraints on the scalar singlet model come from the LUX [43, 59] and PandaX [60] experiments, with weaker limits also available from DarkBit based on SuperCDMS [61] and XENON100 [62]. We use the DarkBit interface to DDCalc to evaluate a Poisson likelihood for observing \(N_{\mathrm {o}}\) events in a given experiment, given a predicted number of signal events \(N_{\mathrm {p}}\) (Eq. 7),
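
\[ \mathcal{L}(N_\mathrm{p}|N_\mathrm{o}) = \frac{(b+N_\mathrm{p})^{N_\mathrm{o}}\,e^{-(b+N_\mathrm{p})}}{N_\mathrm{o}!}. \tag{14} \]
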
Here b is the expected number of background events in the analysis region. We model detector efficiency and acceptance effects by interpolating between values in pre-calculated tables contained in DDCalc.
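In log form, this Poisson likelihood is straightforward to evaluate. The only subtlety is working with \(\ln\Gamma(N_\mathrm{o}+1)\) rather than the factorial itself, which avoids overflow for large event counts. This is a generic sketch of the formula, not the DDCalc implementation.

```python
import math


def poisson_lnl(n_obs, n_pred, background):
    """ln L for observing n_obs events given signal n_pred and background b."""
    mu = background + n_pred
    if mu <= 0.0:
        return 0.0 if n_obs == 0 else -math.inf
    # ln[(b + N_p)^N_o e^{-(b + N_p)} / N_o!]
    return n_obs * math.log(mu) - mu - math.lgamma(n_obs + 1)
```

With no observed events and no background, a predicted signal of 2.3 events gives \(\ln\mathcal{L}=-2.3\), the familiar 90% CL Poisson upper limit.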

3.6 Indirect detection likelihoods

The lack of evidence for anomalous gamma-ray emission from dwarf spheroidal galaxies in data collected by the Fermi-LAT experiment allows stringent constraints to be placed on the DM annihilation cross-section [42]. We use the Pass 8 analysis of the 6-year dataset, with the composite likelihood
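
\[ \ln\mathcal{L}_\text{dwarfs} = \sum_{k=1}^{N_\text{dSph}}\sum_{i=1}^{N_\text{ebin}} \ln\mathcal{L}_{ki}\left(\varPhi_i\cdot J_k\right), \tag{15} \]
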
where \(N_\text {dSph}\) and \(N_\text {ebin}\) are the number of considered dSphs and the number of energy bins, respectively. The partial likelihoods \({\mathcal {L}}_{ki}\) are functions of the signal flux, and hence of the quantities \(\varPhi _i\) and \(J_k\) defined in Eqs. 12 and 13, respectively.

The main results of Ref. [42] were obtained by profiling over the \(J_k\) as nuisance parameters, yielding a combined profile likelihood of
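
\[ \ln\mathcal{L}_\text{dwarfs}^\text{prof.} = \max_{\{J_k\}}\sum_{k=1}^{N_\text{dSph}}\left[\ln\mathcal{L}_J(J_k) + \sum_{i=1}^{N_\text{ebin}}\ln\mathcal{L}_{ki}\left(\varPhi_i\cdot J_k\right)\right], \tag{16} \]
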
where
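
\[ \mathcal{L}_J(J_k) = \frac{1}{\ln(10)\,J_k\sqrt{2\pi}\,\sigma_k}\exp\left[-\frac{\left(\log_{10}J_k - \overline{\log_{10}J_k}\right)^2}{2\sigma_k^2}\right]. \tag{17} \]

Here \(\overline{\log_{10}J_k}\) and \(\sigma_k\) are the mean and standard deviation of \(\log_{10}J_k\) for dwarf k, and the use of a log-normal distribution to describe the uncertainty on \(J_k\) is a good approximation. Tabulated binned likelihoods have been provided by the Fermi-LAT experiment, and implemented in DarkBit via the gamLike package.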
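The profiling over \(J_k\) can be sketched for a single dwarf by maximising the sum of the log-normal J-factor term and a data term on a grid in \(\log_{10}J\). The log-normal term is written in the density-in-\(J\) form with the \(1/(\ln 10\, J)\) factor. The data-term callable and all numbers are hypothetical placeholders for the Fermi-LAT binned likelihoods, and the grid search is a deliberately simple choice, not the gamLike method.

```python
import math


def log_normal_lnl(log10_j, mean_log10_j, sigma):
    """ln of the log-normal J-factor likelihood, including its 1/(ln10 * J) factor."""
    j = 10.0 ** log10_j
    return (-0.5 * ((log10_j - mean_log10_j) / sigma) ** 2
            - math.log(math.log(10.0) * j * math.sqrt(2.0 * math.pi) * sigma))


def profile_over_j(data_lnl, phi, mean_log10_j, sigma, n_grid=2001, width=5.0):
    """Maximise ln L_J(J) + data_lnl(phi * J) on a grid spanning +-width sigma in log10 J."""
    best = -math.inf
    for k in range(n_grid):
        log10_j = mean_log10_j + sigma * width * (2.0 * k / (n_grid - 1) - 1.0)
        total = log_normal_lnl(log10_j, mean_log10_j, sigma) + data_lnl(phi * 10.0**log10_j)
        best = max(best, total)
    return best


# Hypothetical data term: a flux consistent with zero, with a made-up 1e-11 scale
def toy_data_lnl(flux):
    return -0.5 * (flux / 1e-11) ** 2
```

Because the maximisation can lower \(J_k\) to relieve tension with the data, the profiled likelihood is always at least as large as the value obtained by fixing \(J_k\) at its central estimate.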

The strongest neutrino indirect detection constraints on DM-nucleon scattering currently come from the IceCube search for annihilation in the Sun [64, 106]. We access the 79-string results via the DarkBit interface to the nulike package [63, 107], which constructs a fully unbinned likelihood using event-level energy and angular information available in the published 79-string IceCube dataset, marginalised over detector systematics. We obtain predicted neutrino spectra at the Earth using WimpSim [108] yield tables contained in DarkSUSY [105]. Although IceCube limits on spin-independent scattering are not competitive with those from LUX or PandaX, for many points in the singlet parameter space they provide constraints stronger than those from SuperCDMS, and almost as strong as XENON100.

Note that the methods that we use for marginalising or profiling out additional systematic uncertainties in neutrino and \(\gamma \)-ray likelihoods are only applicable because the systematics are uncorrelated; the same cannot be done with a common systematic that impacts many experiments, such as the local density of DM (which affects every direct detection experiment).

The dwarf likelihood gives a result identical to what we would obtain if we included each of the J-factors as a nuisance parameter in our own fit and profiled over them. The same is true of the IceCube detector systematics treated by nulike, although in that case the equivalent result would be the Bayesian one, in which the corresponding nuisance parameter is marginalised over. Ideally, one would include all such nuisance parameters in the same fit, and then be free to choose at the end of a scan whether to profile over them all to produce profile likelihoods, or marginalise over them all to produce posterior probability densities. In practice, however, the gain in accuracy achieved by doing so is generally minimal, whereas the speed gain from the ‘inline’ treatment is substantial.
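The difference between profiling and marginalising can be seen in a two-parameter toy model: a correlated bivariate Gaussian likelihood in a parameter of interest \(\theta \) and a nuisance \(\nu \), with a flat prior on \(\nu \) assumed for the marginalisation. This is purely illustrative and not taken from our fits. For an exactly Gaussian likelihood the profile and the marginal have the same shape in \(\theta \) (both fall as \(e^{-\theta ^2/2}\) here); for strongly non-Gaussian nuisance likelihoods they can differ.

```python
import math


def ln_like(theta, nu, rho=0.6):
    """Toy correlated bivariate Gaussian log-likelihood (unit variances, correlation rho)."""
    return -0.5 * (theta ** 2 - 2.0 * rho * theta * nu + nu ** 2) / (1.0 - rho ** 2)


NU_GRID = [-6.0 + 12.0 * i / 1200 for i in range(1201)]


def profile(theta):
    """Profile likelihood: maximise over the nuisance parameter."""
    return max(math.exp(ln_like(theta, nu)) for nu in NU_GRID)


def marginal(theta):
    """Marginal likelihood: integrate over the nuisance (flat prior, rectangle rule)."""
    step = NU_GRID[1] - NU_GRID[0]
    return sum(math.exp(ln_like(theta, nu)) for nu in NU_GRID) * step


# Compare shapes by normalising each to its value at theta = 0.
ratio_profile = profile(1.5) / profile(0.0)
ratio_marginal = marginal(1.5) / marginal(0.0)
```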

Fig. 1 Profile likelihoods for the scalar singlet model, in the plane of the singlet parameters \(\lambda _{h\scriptscriptstyle S}\) and \(m_{\scriptscriptstyle S}\). Contour lines mark out the \(1\sigma \) and \(2\sigma \) confidence regions. The left panel shows the resonance region at low singlet mass, whereas the right panel shows the full parameter range scanned. The best-fit (maximum likelihood) point is indicated with a white star, and edges of the allowed regions corresponding to solutions where S constitutes 100% of dark matter are indicated in orange

Fig. 2 Profile likelihoods for the scalar singlet model, in various planes of observable quantities against the singlet mass. Contour lines mark out the \(1\sigma \) and \(2\sigma \) confidence regions. Greyed regions indicate values of observables that are inaccessible to our scans, as they correspond to non-perturbative couplings \(\lambda _{h\scriptscriptstyle S}>10\), which we did not scan. Note that the exact boundary of this region moves with the values of the nuisance parameters, but we have simply plotted it for fixed central values of the nuisances, as a guide. The best-fit (maximum likelihood) point is indicated with a white star, and edges of the allowed regions corresponding to solutions where S constitutes 100% of dark matter are indicated in orange. Left: late-time thermal average of the cross-section times relative velocity; centre: spin-independent WIMP-nucleon cross-section; right: relic density

Fig. 3 Profile likelihoods of nuclear scattering (left) and annihilation (right) cross-sections for the scalar singlet model, scaled for the singlet relic abundance and plotted as a function of the singlet mass. Here we rescale the nuclear scattering and annihilation cross-sections by \(f\equiv \varOmega _{\scriptscriptstyle S} / \varOmega _{\text {DM}}\) and \(f^2\), respectively, in line with the linear and quadratic dependence of scattering and annihilation rates on the dark matter density. Contour lines mark out the \(1\sigma \) and \(2\sigma \) confidence regions. The best-fit (maximum likelihood) point is indicated with a white star

Fig. 4 One-dimensional profile likelihoods and posterior distributions of the scalar singlet parameters, and all nuisance parameters varied in our fits. Posterior distributions are shown in blue and profile likelihoods in red. Dashed lines indicate \(1\sigma \) and \(2\sigma \) confidence and credible intervals on parameters

3.7 Nuisance likelihoods

Following Ref. [58], we take the likelihood terms for the hadronic matrix elements \(\sigma _s\) and \(\sigma _l\) to be Gaussian, with central values and \(1\sigma \) uncertainties of \(43\pm 8\) MeV and \(58\pm 9\) MeV, respectively.

The canonical value of the local DM density \(\rho _0\) is \(\bar{\rho }_0=0.4\) GeV cm\(^{-3}\) (e.g. [109]), but this depends on assumptions such as spherical symmetry of the halo. We remain relatively agnostic with respect to such assumptions by choosing a log-normal distribution for the likelihood of \(\rho _0\), and assuming an uncertainty of \(\sigma _{\rho _0} = 0.15\) GeV cm\(^{-3}\), such that

\[{\mathcal {L}}(\rho _0) = \frac{1}{\sqrt{2\pi }\,\sigma '_{\rho _0}\,\rho _0} \exp \left[ -\frac{\ln ^2\left( \rho _0/\bar{\rho }_0\right) }{2\sigma '^2_{\rho _0}}\right] ,\]

where \(\sigma '_{\rho _0} = \ln (1 + \sigma _{\rho _0}/\rho _0)\). More details can be found in Ref. [58].
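A minimal sketch of this log-normal likelihood, assuming that the \(\rho _0\) in the definition of \(\sigma '_{\rho _0}\) is read as the central value \(\bar{\rho }_0\) (our assumption, which keeps \(\sigma '\) fixed). The function name is hypothetical. As a check, the likelihood is a properly normalised density in \(\rho _0\), so a simple Riemann sum over a wide range gives approximately one.

```python
import math

RHO0_BAR = 0.4     # canonical local DM density, GeV cm^-3
SIGMA_RHO0 = 0.15  # adopted uncertainty, GeV cm^-3


def ln_like_rho0(rho0):
    """Log-normal log-likelihood for the local DM density rho0 (GeV cm^-3).

    Assumption: sigma' is evaluated at the central value RHO0_BAR.
    """
    sigma_prime = math.log(1.0 + SIGMA_RHO0 / RHO0_BAR)
    z = math.log(rho0 / RHO0_BAR) / sigma_prime
    return -0.5 * z * z - math.log(math.sqrt(2.0 * math.pi) * sigma_prime * rho0)


# Normalisation check: integrate exp(ln L) over 0 < rho0 <= 5 GeV cm^-3.
step = 1e-4
norm = sum(math.exp(ln_like_rho0(step * n)) for n in range(1, 50001)) * step
```

The log-normal form keeps \(\rho _0\) positive and gives a longer tail towards high densities than towards low ones.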

We use the PrecisionBit implementation of SM nuisance parameter likelihoods. For the \(\overline{MS}\) light quark (u, d, s) masses at \(\mu =2\) GeV, we use a single joint Gaussian likelihood function, combining likelihoods on \(m_u/m_d\), \(m_s/(m_u+m_d)\) and \(m_s\), with the experimental measurements of these quantities and their uncertainties taken from the PDG [97]. We use Gaussian likelihoods for \(G_F\), based on the measured value \(G_{F} = (1.1663787 \pm 0.0000006) \times 10^{-5}\) GeV\(^{-2}\); for \(\alpha _\mathrm{EM}\), based on the observed \(\alpha _{\mathrm {EM}}(m_{Z})^{-1} = 127.940 \pm 0.014\) (\(\overline{MS}\) scheme) [97]; and for \(\alpha _{s}\), using the value \(\alpha _{s}(m_{Z}) = 0.1185 \pm 0.0005\) (\(\overline{MS}\) scheme) obtained from lattice QCD [97]. We treat the quoted uncertainties as \(1\,\sigma \) confidence intervals, and apply no additional theoretical uncertainty. We also apply a simple Gaussian likelihood with no theoretical uncertainty to the Higgs mass, based on the 2015 PDG result of \(m_h= 125.09 \pm 0.24\) GeV [98].
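The independent Gaussian nuisance terms quoted in this section (the hadronic matrix elements \(\sigma _s\) and \(\sigma _l\) in MeV, \(G_F\), \(\alpha _\mathrm{EM}^{-1}(m_Z)\), \(\alpha _s(m_Z)\) and \(m_h\)) can be sketched as a sum of normalised Gaussian log-likelihoods. This is an illustration, not the PrecisionBit code; the dictionary keys and function names are hypothetical, and the joint light-quark-mass term is omitted because its PDG inputs are not reproduced here.

```python
import math

# (central value, 1-sigma uncertainty), as quoted in the text.
GAUSSIAN_NUISANCES = {
    "sigma_s_MeV": (43.0, 8.0),
    "sigma_l_MeV": (58.0, 9.0),
    "G_F_per_GeV2": (1.1663787e-5, 6e-12),
    "inv_alpha_EM_mZ": (127.940, 0.014),
    "alpha_s_mZ": (0.1185, 0.0005),
    "m_h_GeV": (125.09, 0.24),
}


def gaussian_ln_like(x, mean, sigma):
    """Normalised Gaussian log-likelihood."""
    return -0.5 * ((x - mean) / sigma) ** 2 - math.log(math.sqrt(2.0 * math.pi) * sigma)


def nuisance_ln_like(values):
    """Sum of independent Gaussian terms for the supplied nuisance values."""
    return sum(gaussian_ln_like(x, *GAUSSIAN_NUISANCES[name]) for name, x in values.items())


central = {name: mean for name, (mean, _) in GAUSSIAN_NUISANCES.items()}
shifted = dict(central, m_h_GeV=125.09 + 0.24)  # m_h moved up by 1 sigma
delta = nuisance_ln_like(central) - nuisance_ln_like(shifted)  # a 1-sigma shift costs 0.5
```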

4 Results

4.1 Profile likelihoods

Results of our global fit analysis with all nuisances included are presented as 2D profile likelihoods in the singlet parameters in Fig. 1, and in terms of some key observables in Figs. 2 and 3. We also show the one-dimensional profile likelihoods for all parameters in red in Fig. 4.

The viable regions of the parameter space agree well with those identified in the most recent comprehensive studies [26, 34]. Two high-mass, high-coupling solutions exist: one strongly threatened from below by direct detection, the other mostly constrained from below by the relic density. The leading \(\lambda _{h\scriptscriptstyle S}^2\) dependences of \(\sigma _\text {SI}\) and \(\sigma v\) approximately cancel when direct detection signals are rescaled by the predicted relic density, suggesting that direct detection should simply exclude models below a given mass. However, the relic density does not scale exactly as \(\lambda _{h\scriptscriptstyle S}^{-2}\), owing to its dependence on the freeze-out temperature. For sufficiently large values of \(\lambda _{h\scriptscriptstyle S}\), this extends the sensitivity of direct detection to larger masses than might naïvely be expected.Footnote 3 This is the reason for the division of the large-mass solution into two sub-regions: at large coupling values, the logarithmic correction to the \(\lambda _{h\scriptscriptstyle S}^{-2}\) scaling of the relic density enables LUX and PandaX to extend their reach up to singlet masses of a few hundred GeV. This effect is slightly enhanced by additional \(\lambda _{h\scriptscriptstyle S}^3\) and \(\lambda _{h\scriptscriptstyle S}^4\) terms in \(\langle \sigma v \rangle _{0,hh}\), which are responsible for the ‘kink’ seen in the border of the grey regions at \(m_{\scriptscriptstyle S}\sim 600\) GeV in the left and right panels of Fig. 2.
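The scaling argument in this paragraph can be made concrete with the standard textbook freeze-out approximation for an s-wave annihilator (as in Kolb and Turner), which is far cruder than the full Boltzmann solution used in our fits. Assuming that \(\langle \sigma v\rangle \) and \(\sigma _\text {SI}\) both scale as \(\lambda _{h\scriptscriptstyle S}^2\) at fixed mass, the rescaled signal \(f\sigma _\text {SI}\) ends up proportional to the freeze-out parameter \(x_f\) alone, which grows only logarithmically with the coupling. The cross-section normalisation, \(g_*\) and the 1 TeV mass below are hypothetical inputs.

```python
import math

M_PL = 1.22e19   # Planck mass, GeV
G_STAR = 100.0   # assumed relativistic degrees of freedom at freeze-out
G_DM = 1.0       # internal degrees of freedom of a real scalar
OMEGA_DM_H2 = 0.1188
SIGMA_V_AT_UNIT_COUPLING = 2.6e-9  # hypothetical <sigma v> at lambda_hS = 1, GeV^-2


def freeze_out_x(mass, sigma_v):
    """Solve x_f = ln[0.038 g m M_Pl <sv> / sqrt(g*)] - ln(x_f)/2 by fixed-point iteration."""
    c = math.log(0.038 * G_DM * mass * M_PL * sigma_v / math.sqrt(G_STAR))
    x = 20.0
    for _ in range(50):
        x = c - 0.5 * math.log(x)
    return x


def relic_density(mass, sigma_v):
    """Textbook s-wave estimate Omega h^2 ~ 1.07e9 x_f / (sqrt(g*) M_Pl <sv>), <sv> in GeV^-2."""
    return 1.07e9 * freeze_out_x(mass, sigma_v) / (math.sqrt(G_STAR) * M_PL * sigma_v)


def rescaled_dd_signal(coupling, mass=1000.0):
    """f * sigma_SI in units of sigma_SI(lambda_hS = 1), with <sv>, sigma_SI both ~ lambda_hS^2."""
    omega = relic_density(mass, SIGMA_V_AT_UNIT_COUPLING * coupling ** 2)
    f = min(1.0, omega / OMEGA_DM_H2)
    return f * coupling ** 2


signals = {lam: rescaled_dd_signal(lam) for lam in (1.0, 3.0, 10.0)}
```

In this toy, raising the coupling by a factor of ten increases the rescaled direct detection signal by only about 20%, rather than leaving it exactly constant: the slow growth that lets direct detection bite at large couplings.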

The resonance region persists, despite being beset from all sides: invisible Higgs decays from above, relic density from below, indirect detection from higher masses, and direct detection from lower masses. We find a narrow “neck” of degenerate maximum likelihood directly on the resonance, with a best fit located at \(m_{\scriptscriptstyle S}=62.51\) GeV, \(\lambda _{h\scriptscriptstyle S}= 6.5\times 10^{-4}\). The width of this region is set by three factors:

1. the actual separation between the areas allowed by the invisible-width and direct detection constraints, which press in from \(m_{\scriptscriptstyle S}<m_h/2\) and \(m_{\scriptscriptstyle S}>m_h/2\), respectively;
2. the uncertainty on the Higgs mass, which blurs the exact \(m_{\scriptscriptstyle S}\) value of the resonance by \(\sim \)480 MeV at the level of the \(2\sigma \) contours; and
3. the width of the bins into which we sort samples for plotting, which prevents anything from being resolved on scales below \(\varDelta m_{\scriptscriptstyle S}\sim 170\) MeV in the left panel of Fig. 1.
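The \(\sim \)480 MeV figure in item 2 follows from simple arithmetic, assuming the resonance sits exactly at \(m_{\scriptscriptstyle S}=m_h/2\) and the \(2\sigma \) range on \(m_h\) is \(\pm 2\times 0.24\) GeV. The helper name below is hypothetical.

```python
M_H, SIGMA_M_H = 125.09, 0.24  # GeV, 2015 PDG Higgs mass used in the fit


def resonance_window(n_sigma):
    """Interval of m_S = m_h / 2 spanned when m_h varies within +/- n_sigma."""
    return (M_H - n_sigma * SIGMA_M_H) / 2.0, (M_H + n_sigma * SIGMA_M_H) / 2.0


low, high = resonance_window(2.0)
blur_mev = 1000.0 * (high - low)  # 2 * 2 * 0.24 GeV / 2 = 0.48 GeV
```

This blur is larger than the \(\sim \)170 MeV plotting bins, so the Higgs mass uncertainty rather than the binning dominates the apparent width of the neck.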

In addition to correctly identifying the allowed region of the parameter space, the global fit provides information beyond that available from pure exclusion studies. Because we use the relic density as an upper limit, all points for which \(\varOmega _{\scriptscriptstyle S}h^2\le \varOmega _\text {DM} h^2\) receive a null log-likelihood contribution from it, and are thus treated equally above the line in parameter space where \(\varOmega _{\scriptscriptstyle S}h^2=0.1188\). Where \(\varOmega _{\scriptscriptstyle S}h^2<\varOmega _\text {DM} h^2\), we rescale the local DM density (\(\rho _0\)) as well as the density in dwarfs (\(\rho _{\scriptscriptstyle S}\)), so the direct and indirect detection likelihoods are not flat within the allowed region. Were we not to rescale signals self-consistently for the predicted relic density, the areas excluded by direct and indirect detection in the first two panels of Fig. 2 would instead closely track the standard direct and indirect sensitivity curves that many readers will be familiar with. This can be seen more clearly in Fig. 3, where we plot cross-sections rescaled by the appropriate power of \(\varOmega _{\scriptscriptstyle S} / \varOmega _\text {DM}\), together with the experimental constraints from Fermi-LAT, LUX and PandaX.
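A minimal sketch of the two ingredients described here: a relic density likelihood that is flat below the Planck value, and self-consistent rescaling of direct (linear in \(f\)) and indirect (quadratic in \(f\)) signals. The half-Gaussian penalty above the limit is an assumption made for illustration rather than DarkBit's exact functional form; the uncertainty of 0.006 is the combined value quoted in Sect. 4.2, and the function names are hypothetical.

```python
OMEGA_DM_H2 = 0.1188   # Planck value
SIGMA_OMEGA = 0.006    # combined observational + theoretical uncertainty


def relic_ln_like_upper_limit(omega_h2):
    """Zero when the singlet under-produces DM; assumed half-Gaussian penalty for over-production."""
    if omega_h2 <= OMEGA_DM_H2:
        return 0.0
    return -0.5 * ((omega_h2 - OMEGA_DM_H2) / SIGMA_OMEGA) ** 2


def rescaled_signals(omega_h2, sigma_si, sigma_v):
    """Scale scattering by f and annihilation by f**2, with f = Omega_S / Omega_DM capped at 1."""
    f = min(1.0, omega_h2 / OMEGA_DM_H2)
    return f * sigma_si, f * f * sigma_v


sigma_si_eff, sigma_v_eff = rescaled_signals(0.0594, 1.0, 1.0)  # f = 1/2
```

A singlet making up half of the DM thus has its direct detection signal halved but its annihilation signal quartered, which is why indirect limits weaken faster than direct ones as the relic density falls.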

Were we instead to restrict our fits to only those models that reproduce all of the DM via thermal production to within the Planck uncertainties, we would be left with a narrow band along a small number of edges of the allowed regions we have found. These edges are indicated with orange annotations in Figs. 1 and 2. At high singlet masses, the value of the late-time thermal cross-section (Eq. 4 for \(T=0\)) corresponding to this strip is equal to the canonical ‘thermal’ scale of 10\(^{-26}\) cm\(^3\) s\(^{-1}\). At low masses, this strip runs along the lower edge of the resonance ‘triangle’ only, as indirect detection rules out models with \(\varOmega _Sh^2=0.119\) near the vertical edge (at \(m_{\scriptscriptstyle S}= 62\) GeV).

In Fig. 2, we also show in grey the regions corresponding to Higgs-portal couplings above our maximum considered value, \(\lambda _{h\scriptscriptstyle S}=10\), in order to give some rough idea of the area of these plots that we have not scanned (and the area that should almost certainly be excluded on perturbativity grounds were we to do so). We note that at large \(m_{\scriptscriptstyle S}\), the highest-likelihood regions are all at quite large coupling values, where the annihilation cross-section is so high, and the resulting relic density so low, that all direct and indirect signals are essentially absent, but where perturbativity of the model starts to become an issue.

4.2 Best-fit point

Our best-fit point is located within the low-mass resonance region, at \(\lambda _{h\scriptscriptstyle S}= 6.5\times 10^{-4}\), \(m_{\scriptscriptstyle S}=62.51\) GeV. This point has a combined log-likelihood of \(\ln {\mathcal {L}}=4.566\), broken down into its various likelihood components in Table 4. To put this into context, we also provide the corresponding likelihood components of a hypothetical ‘ideal’ fit, which reproduces positive measurements exactly, and has a likelihood equal to the background-only value for those observables with only a limit. The overall combined ideal likelihood is \(\ln {\mathcal {L}}=4.673\), a difference of \(\varDelta \ln {\mathcal {L}}=0.107\) with respect to our best fit. The best fit above the resonance is at \(\lambda _{h\scriptscriptstyle S}=9.9\), \(m_{\scriptscriptstyle S}=132.5\) GeV, with \(\ln {\mathcal {L}}=4.540\) (\(\varDelta \ln {\mathcal {L}}=0.133\)).

Interpreting \(\varDelta \ln {\mathcal {L}}\) defined this way is somewhat fraught, as we do not know its distribution under the hypothesis that the best fit is correct. However, its definition is almost identical to half the “likelihood \(\chi ^2\)” of Baker and Cousins [110], which is known to follow a \(\chi ^2\) distribution in the asymptotic limit. Our \(\varDelta \ln {\mathcal {L}}\) differs from half the likelihood \(\chi ^2\) only in that some of the components of the ideal likelihood come from the likelihood of a pure-background model, rather than from setting all predictions to their observed values. Assuming that \(2\varDelta \ln {\mathcal {L}}\) follows a \(\chi ^2\) distribution, estimating the effective number of degrees of freedom would still be difficult, as our likelihoods include many upper limits and Poisson terms, some of which have already been conditioned on the background expectation, and some of which have not. The difference between the ideal and the best-fit likelihood does nonetheless give some indication of the degree to which the Singlet DM model can simultaneously explain all data in a consistent way, and how much worse it does than the ideal model. In this sense, it gives information similar in character to the modified p-value method known as CLs [111,112,113], which was explicitly designed for excluding models that gave poorer fits than the background model, by conditioning on the background. Were one to approximate the distribution of \(2\varDelta \ln {\mathcal {L}}\) as \(\chi ^2\) with e.g. 1–2 effective degrees of freedom, this would correspond to a rough p value of between 0.6 and 0.9 in both the resonance and the high-mass region—a perfectly acceptable fit.
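The rough p values quoted here can be reproduced from the log-likelihoods given in Sect. 4.2, under the same heuristic assumption that \(2\varDelta \ln {\mathcal {L}}\) follows a \(\chi ^2\) distribution with 1 or 2 effective degrees of freedom. For those two cases the \(\chi ^2\) survival function has simple closed forms, so only the Python standard library is needed; the function names are hypothetical.

```python
import math

LN_L_IDEAL = 4.673


def chi2_survival(x, dof):
    """P(chi^2 > x) for 1 or 2 degrees of freedom (closed forms)."""
    if dof == 1:
        return math.erfc(math.sqrt(x / 2.0))
    if dof == 2:
        return math.exp(-x / 2.0)
    raise ValueError("only 1 or 2 degrees of freedom are supported here")


def approx_p_values(ln_l_best):
    """p values assuming 2 * Delta ln L ~ chi^2 with 1 and 2 effective degrees of freedom."""
    stat = 2.0 * (LN_L_IDEAL - ln_l_best)
    return chi2_survival(stat, 1), chi2_survival(stat, 2)


p_resonance = approx_p_values(4.566)  # overall best fit, in the resonance region
p_high_mass = approx_p_values(4.540)  # best fit above the resonance
```

Both points give p values of roughly 0.6 (one degree of freedom) to 0.9 (two degrees of freedom).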

Next we consider parameter combinations where the singlet constitutes the entire observed relic density of DM, by restricting the discussion to points with \(\varOmega _Sh^2\) within \(1\sigma \) of the Planck value \(\varOmega _{\text {DM}}h^2 = 0.1188\pm 0.006\) (the uncertainty includes theoretical and observational contributions added in quadrature). In this case, the best fit occurs at the bottom of the resonance, at \(\lambda _{h\scriptscriptstyle S}=2.9 \times 10^{-4}\), \(m_{\scriptscriptstyle S}= 62.27\) GeV. This point has \(\ln {\mathcal {L}}=4.431\), which translates to \(\varDelta \ln {\mathcal {L}}=0.242\) compared to the ideal model. In the high-mass region, the best fit able to reproduce the entire observed relic density is at \(\lambda _{h\scriptscriptstyle S}= 3.1\), \(m_{\scriptscriptstyle S}= 9.79\) TeV, and has \(\ln {\mathcal {L}}=4.311\) (\(\varDelta \ln {\mathcal {L}}=0.362\)). If we were to approximate the distribution of \(2\varDelta \ln {\mathcal {L}}\) as \(\chi ^2\) with 1–2 degrees of freedom, this would correspond to p values of between 0.5 and 0.8 for the resonance point, and between 0.4 and 0.7 for the high-mass point. Again, these would suggest that the fit is perfectly reasonable. This indicates that there is no significant preference from data for scalar singlets to make up either all or only a fraction of the observed DM.
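Finding the constrained best fit amounts to filtering samples to those with \(\varOmega _Sh^2\) within \(1\sigma \) of the Planck value before taking the maximum likelihood. The sketch below shows this selection on a handful of made-up samples (the names and values are hypothetical and not taken from our scans).

```python
OMEGA_DM_H2, SIGMA_OMEGA = 0.1188, 0.006


def best_fit(samples, all_dm=False):
    """Highest-likelihood sample, optionally restricted to Omega_S h^2 within 1 sigma of Planck."""
    pool = samples
    if all_dm:
        pool = [s for s in samples if abs(s["omega_h2"] - OMEGA_DM_H2) <= SIGMA_OMEGA]
    return max(pool, key=lambda s: s["ln_like"]) if pool else None


# Hypothetical toy samples, not taken from our scans.
samples = [
    {"name": "A", "ln_like": -1.0, "omega_h2": 0.02},
    {"name": "B", "ln_like": -1.5, "omega_h2": 0.118},
    {"name": "C", "ln_like": -2.0, "omega_h2": 0.121},
    {"name": "D", "ln_like": -9.0, "omega_h2": 0.30},
]
```

Here the unconstrained best fit is the under-abundant sample A, while requiring the full relic density selects B.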

The four best-fit points and the corresponding relic densities are presented in Table 5.

4.3 Bayesian posteriors

By using multiple scanning algorithms in our fits, we are also able to consider marginalised posterior distributions for the singlet parameters. In Fig. 4, in blue we also plot one-dimensional marginalised posteriors for all parameters, from our full-range posterior scan with the T-Walk sampler.Footnote 4 The one-dimensional posterior for \(m_{\scriptscriptstyle S}\) shows that although the full-range scan has managed to detect the resonance region, this area has been heavily penalised in the final posterior by its small volume, arising from the volume effect of integrating over nuisance parameters to which points in this region are rather sensitive, such as the mass of the Higgs. The penalty is sufficiently severe that this region drops outside the \(2\sigma \) credible region in the \(m_{\scriptscriptstyle S}\)–\(\lambda _{h\scriptscriptstyle S}\) plane. We therefore focus only on the high-mass modes in the right-hand panel of Fig. 5, where we show the posterior from the full-range scan.

Fig. 5 Marginalised posterior distributions of the scalar singlet parameters, in low-mass (left) and full-range (right) scans. White contours mark out \(1\sigma \) and \(2\sigma \) credible regions in the posterior. The posterior mean of each scan is shown as a white circle. Grey contours show the profile likelihood \(1\sigma \) and \(2\sigma \) confidence regions, for comparison. The best-fit (maximum likelihood) point is indicated with a grey star

Because it is restricted to the resonance region, the low-mass scan (left panel of Fig. 5) shows the expected relative posterior across this region. The fact that the resonance is so strongly disfavoured in the full-range posterior scan indicates that it is heavily fine-tuned, a property that is naturally penalised in a Bayesian analysis. This mode of the posterior accounts for less than 0.4% of the total posterior mass, indicating that it is disfavoured at almost the \(3\sigma \) level.
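The link between "less than 0.4% of the posterior mass" and "almost \(3\sigma \)" can be made explicit by computing the weight fraction of a mode from weighted samples and converting it to a Gaussian-equivalent significance. The two-sided convention used below is our assumption about how such a conversion is made; the samples and the 70 GeV mode boundary are hypothetical.

```python
from statistics import NormalDist


def mode_mass_fraction(weighted_samples, in_mode):
    """Fraction of total posterior weight carried by samples that satisfy in_mode(m_s)."""
    total = sum(weight for weight, _ in weighted_samples)
    return sum(weight for weight, m_s in weighted_samples if in_mode(m_s)) / total


def two_sided_significance(p):
    """Gaussian-equivalent significance z such that P(|Z| > z) = p."""
    return NormalDist().inv_cdf(1.0 - p / 2.0)


# Hypothetical weighted samples (weight, m_S in GeV); the resonance mode is m_S < 70 GeV here.
toy = [(0.003, 62.5), (0.001, 62.3), (0.6, 150.0), (0.396, 2000.0)]
fraction = mode_mass_fraction(toy, lambda m_s: m_s < 70.0)
z = two_sided_significance(0.004)
```

A posterior fraction of 0.4% corresponds to roughly \(2.9\sigma \) under this convention, just short of \(3\sigma \).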

For the sake of understanding the prior dependence of our posteriors, we also carried out a single scan of the full parameter range with flat instead of log priors on \(m_{\scriptscriptstyle S}\) and \(\lambda _{h\scriptscriptstyle S}\), using MultiNest with the same full-range settings as in Table 3. Unsurprisingly, the resulting posterior is strongly driven by this (inappropriate) choice of prior, concentrating all posterior mass into the corner of the parameter space at large \(\lambda _{h\scriptscriptstyle S}\) and \(m_{\scriptscriptstyle S}\). The \(1\sigma \) region lies above \(\lambda _{h\scriptscriptstyle S}\sim 3\), \(m_{\scriptscriptstyle S}\sim 3\) TeV, and the \(2\sigma \) region above \(\lambda _{h\scriptscriptstyle S}\sim 1\), \(m_{\scriptscriptstyle S}\sim 1\) TeV.
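Why flat priors push the posterior into the large-\(\lambda _{h\scriptscriptstyle S}\), large-\(m_{\scriptscriptstyle S}\) corner can be seen from how much prior volume each choice places there. The parameter ranges below are hypothetical stand-ins (the ranges actually scanned are listed in Table 3), and the two priors are assumed independent.

```python
import math


def prior_fraction_above(threshold, low, high, log_prior):
    """Prior probability that a parameter drawn from [low, high] exceeds threshold."""
    if log_prior:
        return math.log(high / threshold) / math.log(high / low)
    return (high - threshold) / (high - low)


# Hypothetical ranges (the actual scan ranges are given in Table 3).
LAMBDA_RANGE = (1e-4, 10.0)
MASS_RANGE_GEV = (45.0, 1e4)

corner = {}
for log_prior in (False, True):
    p_lambda = prior_fraction_above(1.0, *LAMBDA_RANGE, log_prior)
    p_mass = prior_fraction_above(1000.0, *MASS_RANGE_GEV, log_prior)
    corner[log_prior] = p_lambda * p_mass  # independent priors on the two parameters
```

With these illustrative ranges, flat priors put about 80% of the prior mass at \(\lambda _{h\scriptscriptstyle S}>1\), \(m_{\scriptscriptstyle S}>1\) TeV before any data are considered, compared with under 10% for log priors.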

4.4 Vacuum stability

Finally, we check vacuum stability for some interesting benchmark points.

So far, our calculations have not required any renormalisation group evolution or explicit computation of pole masses. We have simply taken the tree-level expression for \(m_{\scriptscriptstyle S}\) (Eq. 2) to indicate the pole mass, and varied it and \(\lambda _{h\scriptscriptstyle S}\) as free parameters. To test vacuum stability, we must instead use these parameters, along with the values of the nuisance parameters, to set up boundary conditions for a set of \(\overline{MS}\) renormalisation group equations (RGEs). We determine values for the \(\overline{MS}\) parameters that give consistent pole masses using FlexibleSUSY 1.5.1 [116],Footnote 5 with SARAH 4.9.1 [117,118,119,120]. In doing this, it becomes necessary to specify the singlet quartic coupling \(\lambda _{\scriptscriptstyle S}\), which we set to zero at the renormalisation scale \(m_Z\). SpecBit can then evolve the \(\overline{MS}\) parameters to higher scales using the two-loop RGEs of FlexibleSUSY, in order to test vacuum stability and perturbativity.

For our best-fit point, the Higgs-portal coupling \(\lambda _{h\scriptscriptstyle S}\) is too small to make a noticeable positive contribution to the running of the Higgs self-coupling, which reaches a minimum value of \(-0.0375935\) at \(2.523\times 10^{17}\) GeV. The electroweak vacuum remains meta-stable for this point, with no substantial change in phenomenology compared to the SM, where for the same Higgs and top quark masses the quartic Higgs coupling has a minimum of \(-0.037631\) at \(2.514\times 10^{17}\) GeV.

Next we consider a high-mass point within our \(1\sigma \) allowed region: \(\lambda _{h\scriptscriptstyle S}= 0.5\), \(m_{\scriptscriptstyle S}= 1.3\) TeV. This point has a large enough coupling \(\lambda _{h\scriptscriptstyle S}\) that the minimum quartic Higgs coupling is positive: 0.0522133 at \(1.40006\times 10^9\) GeV. We see that it is certainly possible to stabilise the electroweak vacuum within the singlet model whilst respecting all current constraints.

4.5 Comparison to existing results

The most recent study of the scalar singlet model with a \(\mathbb {Z}_2\) symmetry and a wide range of experimental constraints was that of Beniwal et al. [34]. This recent study is an ideal candidate with which to compare our results, in order to check for consistency and determine the impacts of the newest experimental constraints. There are two important differences in the ingredients of our study and that of Beniwal et al. First, we include stronger DM direct detection constraints from LUX [59] and PandaX [60], which exclude a large part of the parameter space. Second, we scan many relevant nuisance parameters, whereas previous studies have taken them as fixed. The effect of this can be seen along the boundaries of the confidence intervals, where the viable regions are always at least as large in a scan where the nuisances are allowed to vary as in one where they are fixed.

Considering these differences, we see consistency between the results of this paper and Fig. 4 of Beniwal et al. [34], both in the low- and high-mass parts of the \(\lambda _{h\scriptscriptstyle S}\), \(m_{\scriptscriptstyle S}\) parameter space. The increased size of the allowed region resulting from the variable nuisance parameters is evident along all contour edges. The behaviour of the stronger direct detection constraint is also visible, in the top left corner of the triangular part of the allowed region in the left panel of Fig. 1, and on the right side of the “neck”. In the high-mass area of the parameter space (right panel of Fig. 1), we also see LUX and PandaX cutting a large triangular region into the allowed parameter space, essentially separating the high-mass solutions into two separate likelihood modes.

5 Conclusion

The extension of the Standard Model by a scalar singlet stabilised by a \(\mathbb {Z}_2\) symmetry is still a phenomenologically viable dark matter model, whether one demands that the singlet constitutes all of dark matter or not. However, the parameter space is being continually constrained by experimental dark matter searches. This is evident in the global fit that we have presented here, combining the latest experimental results and likelihoods to provide the most stringent constraints to date on the parameter space of this model. Direct detection experiments will fairly soon probe the entire high-mass region of the model, with XENON1T expected to access all but a very small part of each of the high-mass islands [26]. The resonance region will prove more difficult, though some hope certainly exists for ton-scale direct detection to improve constraints from the low-\(m_{\scriptscriptstyle S}\) direction, and for future colliders focussed on precision Higgs physics to probe the edge of the region at \(\lambda _{h\scriptscriptstyle S}\sim 0.02\).

We have seen that the best-fit point found in our scan does not have a notable impact on the stability of the electroweak vacuum, due to its rather small value of \(\lambda _{h\scriptscriptstyle S}\). We have shown that larger values of the portal coupling can completely solve the meta-stability of the electroweak vacuum, even whilst satisfying all experimental constraints. However, the couplings required to do this are not far below where perturbativity starts to become an issue. Investigating how these competing constraints impact the allowed and preferred regions of the singlet model in a global fit will be one of the aims of our follow-up study.

In this study we have demonstrated some powerful features of the GAMBIT framework. It is now possible to easily combine likelihoods and observables from GAMBIT and existing packages in a consistent and computationally efficient way. We have varied 13 nuisance parameters in addition to the two parameters of the scalar singlet model. We have searched this parameter space using the most modern scanning algorithms available, to provide both frequentist and Bayesian statistical interpretations. By using parallel computing resources, we have achieved this with, for example, a maximum runtime for any Diver scan presented in this paper of just 3 h. Finally, due to the modularity and flexibility of the GAMBIT system, it will be possible to include new likelihoods and/or change parts of the calculation at any time in the future, in order to quickly update the analysis to take into account new experimental developments. All input files, samples and best-fit benchmarks produced for this paper are publicly accessible from Zenodo [73].

Notes

This comes from interpolating the results contained in the tables of Ref. [89], and does not (yet) include theoretical uncertainties or the ability to recompute the width for different values of relevant nuisance parameters, such as \(\alpha _s\) or quark masses. Although this option is included in DarkBit, we checked that it makes no difference to our results for \(m_{\scriptscriptstyle S}<m_h/2\) whether or not we modify the width in the denominator of Eq. 5 corresponding to the propagator of the internal Higgs, to take into account the decay channel \(h\rightarrow SS\).

This point is discussed in further detail in Sect. 5 of Ref. [26].

We choose T-Walk for this rather than MultiNest, as we find that MultiNest biases posteriors towards ellipsoidal shapes; see [100] for more details and example posterior maps for this same physical model.

References

L. Bergstrom, Nonbaryonic dark matter: observational evidence and detection methods. Rep. Prog. Phys. 63, 793 (2000). arXiv:hep-ph/0002126

G. Bertone, D. Hooper, J. Silk, Particle dark matter: evidence, candidates and constraints. Phys. Rep. 405, 279–390 (2005). arXiv:hep-ph/0404175

J.L. Feng, Dark matter candidates from particle physics and methods of detection. ARA&A 48, 495–545 (2010). arXiv:1003.0904

V. Silveira, A. Zee, Scalar phantoms. Phys. Lett. B 161, 136–140 (1985)

J. McDonald, Gauge singlet scalars as cold dark matter. Phys. Rev. D 50, 3637–3649 (1994). arXiv:hep-ph/0702143

C.P. Burgess, M. Pospelov, T. ter Veldhuis, The minimal model of nonbaryonic dark matter: a singlet scalar. Nucl. Phys. B 619, 709–728 (2001). arXiv:hep-ph/0011335

C.E. Yaguna, Gamma rays from the annihilation of singlet scalar dark matter. JCAP 3, 003 (2009). arXiv:0810.4267

S. Profumo, L. Ubaldi, C. Wainwright, Singlet scalar dark matter: monochromatic gamma rays and metastable vacua. Phys. Rev. D 82, 1–10 (2010). arXiv:1009.5377

C. Arina, M.H.G. Tytgat, Constraints on light WIMP candidates from the isotropic diffuse gamma-ray emission. JCAP 1, 011 (2011). arXiv:1007.2765

Y. Mambrini, Higgs searches and singlet scalar dark matter: combined constraints from XENON 100 and the LHC. Phys. Rev. D 84, 115017 (2011). arXiv:1108.0671

S. Bhattacharya, S. Jana, S. Nandi, Neutrino masses and scalar singlet dark matter. Phys. Rev. D 95, 055003 (2017). arXiv:1609.03274

R. Campbell, S. Godfrey, H.E. Logan, A. Poulin, Real singlet scalar dark matter extension of the Georgi–Machacek model. Phys. Rev. D 95, 016005 (2017). arXiv:1610.08097

J.A. Casas, D.G. Cerdeño, J.M. Moreno, J. Quilis, Reopening the Higgs portal for single scalar dark matter. JHEP 05, 036 (2017). arXiv:1701.08134

R.N. Lerner, J. McDonald, Gauge singlet scalar as inflaton and thermal relic dark matter. Phys. Rev. D 80, 123507 (2009). arXiv:0909.0520

M. Herranen, T. Markkanen, S. Nurmi, A. Rajantie, Spacetime curvature and Higgs stability after inflation. Phys. Rev. Lett. 115, 241301 (2015). arXiv:1506.04065

F. Kahlhoefer, J. McDonald, WIMP dark matter and unitarity-conserving inflation via a gauge singlet scalar. JCAP 11, 015 (2015). arXiv:1507.03600

S. Profumo, M.J. Ramsey-Musolf, G. Shaughnessy, Singlet Higgs phenomenology and the electroweak phase transition. JHEP 8, 010 (2007). arXiv:0705.2425

V. Barger, P. Langacker, M. McCaskey, M. Ramsey-Musolf, G. Shaughnessy, Complex singlet extension of the standard model. Phys. Rev. D 79, 015018 (2009). arXiv:0811.0393

J.M. Cline, K. Kainulainen, Electroweak baryogenesis and dark matter from a singlet Higgs. JCAP 1, 012 (2013). arXiv:1210.4196

ATLAS Collaboration: G. Aad et al., Observation of a new particle in the search for the Standard Model Higgs boson with the ATLAS detector at the LHC. Phys. Lett. B 716, 1–29 (2012). arXiv:1207.7214

CMS Collaboration: S. Chatrchyan, V. Khachatryan et al., Observation of a new boson at a mass of 125 GeV with the CMS experiment at the LHC. Phys. Lett. B 716, 30–61 (2012). arXiv:1207.7235

A. Djouadi, O. Lebedev, Y. Mambrini, J. Quevillon, Implications of LHC searches for Higgs-portal dark matter. Phys. Lett. B 709, 65–69 (2012). arXiv:1112.3299

K. Cheung, Y.-L.S. Tsai, P.-Y. Tseng, T.-C. Yuan, A. Zee, Global study of the simplest scalar phantom dark matter model. JCAP 1210, 042 (2012). arXiv:1207.4930

M. Endo, Y. Takaesu, Heavy WIMP through Higgs portal at the LHC. Phys. Lett. B 743, 228–234 (2015). arXiv:1407.6882

A. Djouadi, A. Falkowski, Y. Mambrini, J. Quevillon, Direct detection of Higgs-portal dark matter at the LHC. Eur. Phys. J. C 73, 2455 (2013). arXiv:1205.3169

J.M. Cline, K. Kainulainen, P. Scott, C. Weniger, Update on scalar singlet dark matter. Phys. Rev. D 88, 055025 (2013). arXiv:1306.4710

A. Urbano, W. Xue, Constraining the Higgs portal with antiprotons. JHEP 03, 133 (2015). arXiv:1412.3798

X.-G. He, J. Tandean, New LUX and PandaX-II results illuminating the simplest Higgs-portal dark matter models. JHEP 12, 074 (2016). arXiv:1609.03551

M. Escudero, A. Berlin, D. Hooper, M.-X. Lin, Toward (finally!) ruling out Z and Higgs mediated dark matter models. JCAP 1612, 029 (2016). arXiv:1609.09079

A. Goudelis, Y. Mambrini, C. Yaguna, Antimatter signals of singlet scalar dark matter. JCAP 12, 008 (2009). arXiv:0909.2799

N. Craig, H.K. Lou, M. McCullough, A. Thalapillil, The Higgs portal above threshold. JHEP 02, 127 (2016). arXiv:1412.0258

H. Han, J.M. Yang, Y. Zhang, S. Zheng, Collider signatures of Higgs-portal scalar dark matter. Phys. Lett. B 756, 109–112 (2016). arXiv:1601.06232

P. Ko, H. Yokoya, Search for Higgs portal DM at the ILC. JHEP 08, 109 (2016). arXiv:1603.04737

A. Beniwal, F. Rajec et al., Combined analysis of effective Higgs portal dark matter models. Phys. Rev. D 93, 115016 (2016). arXiv:1512.06458

A. Cuoco, B. Eiteneuer, J. Heisig, M. Krämer, A global fit of the \(\gamma \)-ray galactic center excess within the scalar singlet Higgs portal model. JCAP 6, 050 (2016). arXiv:1603.08228

G. Belanger, B. Dumont, U. Ellwanger, J.F. Gunion, S. Kraml, Global fit to Higgs signal strengths and couplings and implications for extended Higgs sectors. Phys. Rev. D 88, 075008 (2013). arXiv:1306.2941

ATLAS Collaboration: G. Aad et al., Constraints on new phenomena via Higgs boson couplings and invisible decays with the ATLAS detector. JHEP 11, 206 (2015). arXiv:1509.00672

CMS Collaboration: V. Khachatryan et al., Searches for invisible decays of the Higgs boson in pp collisions at \(\sqrt{s} = 7\), 8, and 13 TeV. JHEP 02, 135 (2017). arXiv:1610.09218

Planck Collaboration, P.A.R. Ade et al., Planck 2015 results. XIII. Cosmological parameters. A&A 594, A13 (2016). arXiv:1502.01589

J.M. Cline, P. Scott, Dark matter CMB constraints and likelihoods for poor particle physicists. JCAP 3, 44 (2013). arXiv:1301.5908

T.R. Slatyer, Indirect dark matter signatures in the cosmic dark ages. I. Generalizing the bound on s-wave dark matter annihilation from Planck results. Phys. Rev. D 93, 023527 (2016). arXiv:1506.03811

Fermi-LAT Collaboration: M. Ackermann, A. Albert et al., Searching for dark matter annihilation from Milky Way dwarf spheroidal galaxies with six years of Fermi large area telescope data. Phys. Rev. Lett. 115, 231301 (2015). arXiv:1503.02641

D.S. Akerib, H.M. Araújo et al., Improved limits on scattering of weakly interacting massive particles from reanalysis of 2013 LUX data. Phys. Rev. Lett. 116, 161301 (2016). arXiv:1512.03506

M. Pierre, J.M. Siegal-Gaskins, P. Scott, Sensitivity of CTA to dark matter signals from the Galactic Center. JCAP 6, 24 (2014). arXiv:1401.7330

H. Silverwood, C. Weniger, P. Scott, G. Bertone, A realistic assessment of the CTA sensitivity to dark matter annihilation. JCAP 1503, 055 (2015). arXiv:1408.4131

CTA Consortium: J. Carr et al., Prospects for indirect dark matter searches with the Cherenkov telescope array (CTA). PoS ICRC2015, 1203 (2016). arXiv:1508.06128

E. Aprile, J. Aalbers et al., Physics reach of the XENON1T dark matter experiment. JCAP 4, 027 (2016). arXiv:1512.07501

L. Goodenough, D. Hooper, Possible evidence for dark matter annihilation in the inner Milky Way from the Fermi gamma ray space telescope, Fermilab internal report. arXiv:0910.2998

D. Hooper, L. Goodenough, Dark matter annihilation in the galactic center as seen by the Fermi gamma ray space telescope. Phys. Lett. B 697, 412–428 (2011). arXiv:1010.2752

D. Hooper, T. Linden, On the origin of the gamma rays from the galactic center. Phys. Rev. D 84, 123005 (2011). arXiv:1110.0006

K.N. Abazajian, M. Kaplinghat, Detection of a gamma-ray source in the galactic center consistent with extended emission from dark matter annihilation and concentrated astrophysical emission. Phys. Rev. D 86, 083511 (2012). arXiv:1207.6047 [Erratum: Phys. Rev. D 87, 129902 (2013)]

C. Gordon, O. Macias, Dark matter and pulsar model constraints from galactic center Fermi-LAT gamma ray observations. Phys. Rev. D 88, 083521 (2013). arXiv:1306.5725 [Erratum: Phys. Rev. D 89, 049901 (2014)]

T. Daylan, D.P. Finkbeiner et al., The characterization of the gamma-ray signal from the central Milky Way: a case for annihilating dark matter. Phys. Dark Univ. 12, 1–23 (2016). arXiv:1402.6703

F. Calore, I. Cholis, C. McCabe, C. Weniger, A tale of tails: dark matter interpretations of the Fermi GeV excess in light of background model systematics. Phys. Rev. D 91, 063003 (2015). arXiv:1411.4647

L. Feng, S. Profumo, L. Ubaldi, Closing in on singlet scalar dark matter: LUX, invisible Higgs decays and gamma-ray lines. JHEP 3, 45 (2015). arXiv:1412.1105

M. Duerr, P. Fileviez Pérez, J. Smirnov, Scalar singlet dark matter and gamma lines. Phys. Lett. B 751, 119–122 (2015). arXiv:1508.04418

M. Duerr, P. Fileviez Pérez, J. Smirnov, Gamma-ray excess and the minimal dark matter model. JHEP 6, 8 (2016). arXiv:1510.07562

GAMBIT Dark Matter Workgroup: T. Bringmann, J. Conrad et al., DarkBit: a GAMBIT module for computing dark matter observables and likelihoods. arXiv:1705.07920

D.S. Akerib, S. Alsum et al., Results from a Search for dark matter in the complete LUX exposure. Phys. Rev. Lett. 118, 021303 (2017). arXiv:1608.07648

PandaX-II Collaboration: A. Tan et al., Dark matter results from first 98.7 days of data from the PandaX-II experiment. Phys. Rev. Lett. 117, 121303 (2016). arXiv:1607.07400

SuperCDMS Collaboration: R. Agnese et al., Search for low-mass weakly interacting massive particles with SuperCDMS. Phys. Rev. Lett. 112, 241302 (2014). arXiv:1402.7137

XENON100 Collaboration: E. Aprile, M. Alfonsi et al., Dark matter results from 225 live days of XENON100 data. Phys. Rev. Lett. 109, 181301 (2012). arXiv:1207.5988

IceCube Collaboration: M.G. Aartsen et al., Improved limits on dark matter annihilation in the Sun with the 79-string IceCube detector and implications for supersymmetry. JCAP 04, 022 (2016). arXiv:1601.00653

IceCube Collaboration: M.G. Aartsen, R. Abbasi et al., Search for dark matter annihilations in the Sun with the 79-string IceCube detector. Phys. Rev. Lett. 110, 131302 (2013). arXiv:1212.4097

A. Boyarsky, D. Malyshev, O. Ruchayskiy, A comment on the emission from the Galactic Center as seen by the Fermi telescope. Phys. Lett. B 705, 165–169 (2011). arXiv:1012.5839

J. Petrovic, P.D. Serpico, G. Zaharijas, Galactic Center gamma-ray “excess” from an active past of the Galactic Centre? JCAP 1410, 052 (2014). arXiv:1405.7928

E. Carlson, S. Profumo, Cosmic ray protons in the inner galaxy and the galactic center gamma-ray excess. Phys. Rev. D 90, 023015 (2014). arXiv:1405.7685

S.K. Lee, M. Lisanti, B.R. Safdi, T.R. Slatyer, W. Xue, Evidence for unresolved \(\gamma \)-ray point sources in the inner galaxy. Phys. Rev. Lett. 116, 051103 (2016). arXiv:1506.05124

R. Bartels, S. Krishnamurthy, C. Weniger, Strong support for the millisecond pulsar origin of the Galactic center GeV excess. Phys. Rev. Lett. 116, 051102 (2016). arXiv:1506.05104

I. Cholis, C. Evoli et al., The Galactic Center GeV excess from a series of leptonic cosmic-ray outbursts. JCAP 12, 005 (2015). arXiv:1506.05119

H.A. Clark, P. Scott, R. Trotta, G.F. Lewis, Substructure considerations rule out dark matter interpretation of Fermi Galactic Center excess. MNRAS (2017, submitted). arXiv:1612.01539

GAMBIT Collaboration: P. Athron, C. Balazs et al., GAMBIT: the global and modular beyond-the-standard-model inference tool. arXiv:1705.07908

The GAMBIT Collaboration, Supplementary Data: Status of the scalar singlet dark matter model (2017). doi:10.5281/zenodo.801511. arXiv:1705.07931

M. Heikinheimo, T. Tenkanen, K. Tuominen, V. Vaskonen, Observational constraints on decoupled hidden sectors. Phys. Rev. D 94, 063506 (2016). arXiv:1604.02401

G. Degrassi, S. Di Vita et al., Higgs mass and vacuum stability in the Standard Model at NNLO. JHEP 2012 (2012). arXiv:1205.6497

M. Gonderinger, Y. Li, H. Patel, M.J. Ramsey-Musolf, Vacuum stability, perturbativity, and scalar singlet dark matter. JHEP 1, 53 (2010). arXiv:0910.3167

A. Drozd, B. Grzadkowski, J. Wudka, Cosmology of multi-singlet-scalar extensions of the standard model. Acta Phys. Pol. B 42, 2255–2262 (2011). arXiv:1310.2985

C.-S. Chen, Y. Tang, Vacuum stability, neutrinos, and dark matter. JHEP 4, 19 (2012). arXiv:1202.5717

G.M. Pruna, T. Robens, Higgs singlet extension parameter space in the light of the LHC discovery. Phys. Rev. D 88, 115012 (2013). arXiv:1303.1150

G. Bélanger, K. Kannike, A. Pukhov, M. Raidal, \(Z_{3}\) scalar singlet dark matter. JCAP 1, 022 (2013). arXiv:1211.1014

N. Khan, S. Rakshit, Study of electroweak vacuum metastability with a singlet scalar dark matter. Phys. Rev. D 90, 113008 (2014). arXiv:1407.6015

T. Alanne, K. Tuominen, V. Vaskonen, Strong phase transition, dark matter and vacuum stability from simple hidden sectors. Nucl. Phys. B 889, 692–711 (2014). arXiv:1407.0688

H. Han, S. Zheng, New constraints on Higgs-portal scalar dark matter. JHEP 12, 44 (2015). arXiv:1509.01765

S. Kanemura, M. Kikuchi, K. Yagyu, Radiative corrections to the Higgs boson couplings in the model with an additional real singlet scalar field. Nucl. Phys. B 907, 286–322 (2016). arXiv:1511.06211

T. Robens, T. Stefaniak, Status of the Higgs singlet extension of the standard model after LHC run 1. Eur. Phys. J. C 75, 104 (2015). arXiv:1501.02234

T. Robens, T. Stefaniak, LHC benchmark scenarios for the real Higgs singlet extension of the standard model. Eur. Phys. J. C 76, 268 (2016). arXiv:1601.07880

P. Gondolo, G. Gelmini, Cosmic abundances of stable particles: improved analysis. Nucl. Phys. B 360, 145–179 (1991)

GAMBIT Models Workgroup: P. Athron, C. Balázs et al., SpecBit, DecayBit and PrecisionBit: GAMBIT modules for computing mass spectra, particle decay rates and precision observables. arXiv:1705.07936

LHC Higgs Cross Section Working Group: J.R. Andersen et al., Handbook of LHC Higgs cross sections: 3. Higgs properties, CERN report. arXiv:1307.1347

L.G. van den Aarssen, T. Bringmann, Y.C. Goedecke, Thermal decoupling and the smallest subhalo mass in dark matter models with Sommerfeld-enhanced annihilation rates. Phys. Rev. D 85, 123512 (2012). arXiv:1202.5456

T. Binder, T. Bringmann, M. Gustafsson, A. Hryczuk, Early kinetic decoupling of dark matter: when the standard way of calculating the thermal relic density fails. arXiv:1706.07433

M. Benito, N. Bernal, N. Bozorgnia, F. Calore, F. Iocco, Particle Dark Matter constraints: the effect of Galactic uncertainties. JCAP 2, 007 (2017). arXiv:1612.02010

M.J. Reid et al., Trigonometric parallaxes of massive star forming regions: VI. Galactic structure, fundamental parameters and non-circular motions. ApJ 700, 137–148 (2009). arXiv:0902.3913

J. Bovy, D.W. Hogg, H.-W. Rix, Galactic masers and the Milky Way circular velocity. ApJ 704, 1704–1709 (2009). arXiv:0907.5423

M.C. Smith et al., The RAVE Survey: constraining the local galactic escape speed. MNRAS 379, 755–772 (2007). arXiv:astro-ph/0611671

L. Bergström, J. Edsjö, P. Gondolo, Indirect detection of dark matter in km-size neutrino telescopes. Phys. Rev. D 58, 103519 (1998). arXiv:hep-ph/9806293

Particle Data Group: K.A. Olive et al., Review of particle physics. Chin. Phys. C 38, 090001 (2014)

Particle Data Group: K.A. Olive et al., Review of particle physics, update to Ref. [96] (2015). http://pdg.lbl.gov/2015/tables/rpp2015-sum-gauge-higgs-bosons.pdf

ATLAS, CDF, CMS and D0 Collaborations, First combination of Tevatron and LHC measurements of the top-quark mass, ATLAS report. arXiv:1403.4427

GAMBIT Scanner Workgroup: G.D. Martinez, J. McKay et al., Comparison of statistical sampling methods with ScannerBit, the GAMBIT scanning module. arXiv:1705.07959

GAMBIT Collider Workgroup: C. Balázs, A. Buckley et al., ColliderBit: a GAMBIT module for the calculation of high-energy collider observables and likelihoods. arXiv:1705.07919

GAMBIT Collaboration: P. Athron, C. Balázs et al., Global fits of GUT-scale SUSY models with GAMBIT. arXiv:1705.07935

GAMBIT Collaboration: P. Athron, C. Balázs et al., A global fit of the MSSM with GAMBIT. arXiv:1705.07917

P. Scott, Pippi—painless parsing, post-processing and plotting of posterior and likelihood samples. Eur. Phys. J. Plus 127, 138 (2012). arXiv:1206.2245

P. Gondolo, J. Edsjö et al., DarkSUSY: computing supersymmetric dark matter properties numerically. JCAP 7, 8 (2004). arXiv:astro-ph/0406204

IceCube Collaboration: M.G. Aartsen et al., Search for annihilating dark matter in the Sun with 3 years of IceCube data. Eur. Phys. J. C 77, 146 (2017). arXiv:1612.05949

P. Scott, C. Savage, J. Edsjö, and the IceCube Collaboration: R. Abbasi et al., Use of event-level neutrino telescope data in global fits for theories of new physics. JCAP 11, 57 (2012). arXiv:1207.0810

M. Blennow, J. Edsjö, T. Ohlsson, Neutrinos from WIMP annihilations obtained using a full three-flavor Monte Carlo approach. JCAP 1, 21 (2008). arXiv:0709.3898

R. Catena, P. Ullio, A novel determination of the local dark matter density. JCAP 1008, 004 (2010). arXiv:0907.0018

S. Baker, R.D. Cousins, Clarification of the use of Chi square and likelihood functions in fits to histograms. Nucl. Instrum. Methods 221, 437–442 (1984)

A.L. Read, Modified frequentist analysis of search results (the \(CL_s\) method), in 1st Workshop on Confidence Limits (CERN, Geneva, Switzerland) (2000), pp. 81–101. CERN-2000-005

A.L. Read, DURHAM IPPP WORKSHOP PAPER: presentation of search results: the CL\(_{s}\) technique. J. Phys. G 28, 2693–2704 (2002)

G. Zech, Upper limits in experiments with background or measurement errors. Nucl. Instrum. Methods A 277, 608–610 (1989)

B.C. Allanach, SOFTSUSY: a program for calculating supersymmetric spectra. Comput. Phys. Commun. 143, 305–331 (2002). arXiv:hep-ph/0104145

B.C. Allanach, P. Athron, L.C. Tunstall, A. Voigt, A.G. Williams, Next-to-minimal SOFTSUSY. Comput. Phys. Commun. 185, 2322–2339 (2014). arXiv:1311.7659

P. Athron, J.-H. Park, D. Stöckinger, A. Voigt, FlexibleSUSY—a spectrum generator generator for supersymmetric models. Comput. Phys. Commun. 190, 139–172 (2015). arXiv:1406.2319

F. Staub, SARAH, arXiv:0806.0538

F. Staub, Automatic Calculation of Supersymmetric renormalization group equations and self energies. Comput. Phys. Commun. 182, 808–833 (2011). arXiv:1002.0840

F. Staub, SARAH 3.2: Dirac Gauginos, UFO output, and more. Comput. Phys. Commun. 184, 1792–1809 (2013). arXiv:1207.0906

F. Staub, SARAH 4: a tool for (not only SUSY) model builders. Comput. Phys. Commun. 185, 1773–1790 (2014). arXiv:1309.7223

Acknowledgements

We thank Jim Cline, Andrew Fowlie, Tomás Gonzalo, Julia Harz, Sebastian Hoof, Kimmo Kainulainen, Roberto Ruiz, Roberto Trotta and Sebastian Wild for useful discussions, and Lucien Boland, Sean Crosby and Goncalo Borges of the Australian Centre of Excellence for Particle Physics at the Terascale for computing assistance and resources. We warmly thank the Casa Matemáticas Oaxaca, affiliated with the Banff International Research Station, for hospitality whilst part of this work was completed, and the staff at Cyfronet, for their always helpful supercomputing support. GAMBIT has been supported by STFC (UK; ST/K00414X/1, ST/P000762/1), the Royal Society (UK; UF110191), Glasgow University (UK; Leadership Fellowship), the Research Council of Norway (FRIPRO 230546/F20), NOTUR (Norway; NN9284K), the Knut and Alice Wallenberg Foundation (Sweden; Wallenberg Academy Fellowship), the Swedish Research Council (621-2014-5772), the Australian Research Council (CE110001004, FT130100018, FT140100244, FT160100274), The University of Sydney (Australia; IRCA-G162448), PLGrid Infrastructure (Poland), Polish National Science Center (Sonata UMO-2015/17/D/ST2/03532), the Swiss National Science Foundation (PP00P2-144674), the European Commission Horizon 2020 Marie Skłodowska-Curie actions (H2020-MSCA-RISE-2015-691164), the ERA-CAN+ Twinning Program (EU and Canada), the Netherlands Organisation for Scientific Research (NWO-Vidi 680-47-532), the National Science Foundation (USA; DGE-1339067), the FRQNT (Québec) and NSERC/The Canadian Tri-Agencies Research Councils (BPDF-424460-2012).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

Funded by SCOAP3

About this article

Cite this article

The GAMBIT Collaboration: Athron, P., Balázs, C. et al. Status of the scalar singlet dark matter model. Eur. Phys. J. C 77, 568 (2017). https://doi.org/10.1140/epjc/s10052-017-5113-1

DOI: https://doi.org/10.1140/epjc/s10052-017-5113-1