Abstract

The MEG (Mu to Electron Gamma) experiment has been running at the Paul Scherrer Institut (PSI), Switzerland since 2008 to search for the decay μ⁺→e⁺γ by using one of the most intense continuous μ⁺ beams in the world. This paper presents the MEG components: the positron spectrometer, including a thin target, a superconducting magnet, a set of drift chambers for measuring the muon decay vertex and the positron momentum, a timing counter for measuring the positron time, and a liquid xenon detector for measuring the photon energy, position and time. The trigger system, the read-out electronics and the data acquisition system are also presented in detail. The paper is completed with a description of the equipment and techniques developed for the calibration in time and energy and the simulation of the whole apparatus.

1 Introduction

The MEG experiment, a search for the Charged Lepton Flavour Violating (CLFV) decay μ⁺→e⁺γ, is in progress at the Paul Scherrer Institut (PSI) in Switzerland (see [1] and references therein for a detailed report of the experiment motivation, design criteria and goals). Preliminary results have already been published [2, 3]. The goal is to push the sensitivity to this decay down to ∼5×10⁻¹³, improving the previous limit set by the MEGA experiment, 1.2×10⁻¹¹ [4], by a factor of 20.

CLFV processes are practically forbidden in the Standard Model (SM), which, even in the presence of neutrino masses and mixing, predicts tiny branching ratios (BR≪10⁻⁵⁰) for CLFV decays. Detecting such decays would be a clear indication of new physics beyond the SM, as predicted by many extensions such as supersymmetry [5]. Hence, CLFV searches with improved sensitivity will either reveal new physics or constrain the allowed parameter space of SM extensions.

In MEG positive muons stop and decay in a thin target located at the centre of a magnetic spectrometer. The signal has the simple kinematics of a two-body decay from a particle at rest: one monochromatic positron and one monochromatic photon moving in opposite directions each with an energy of 52.83 MeV (half of the muon mass) and being coincident in time.

This signature needs to be extracted from a background induced by Michel (μ⁺→e⁺νν̄) and radiative (μ⁺→e⁺γνν̄) muon decays. The background is dominated by accidental coincidence events where a positron and a photon from different muon decays with energies close to the kinematic limit overlap within the direction and time resolution of the detector. Because the rate of accidental coincidence events is proportional to the square of the μ⁺ decay rate, while the rate of signal events is proportional only to the μ⁺ decay rate, direct-current beams allow a better signal-to-background ratio to be achieved than pulsed beams. Hence we use the PSI continuous surface muon beam with intensity ∼3×10⁷ μ⁺/s (see Sect. 2).
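The scaling argument for preferring a continuous beam can be illustrated numerically. The following is a minimal sketch, assuming an idealised pulsed beam that delivers the same average rate in a fraction `duty_factor` of the time; the constants `k_sig` and `k_acc` are hypothetical placeholders for branching ratio, acceptance and resolution windows, and are not taken from the experiment.

```python
def signal_to_accidental(avg_rate_hz, duty_factor, k_sig=1.0, k_acc=1.0):
    """Signal/accidental ratio for a beam that is on for a fraction duty_factor of the time.

    k_sig and k_acc are hypothetical proportionality constants (illustration only).
    """
    inst_rate = avg_rate_hz / duty_factor               # muon rate while the beam is on
    signal = k_sig * inst_rate * duty_factor            # linear in the instantaneous rate
    accidental = k_acc * inst_rate ** 2 * duty_factor   # quadratic in the instantaneous rate
    return signal / accidental


# Same average stopping rate, continuous beam versus a 10 % duty-factor pulsed beam.
dc_ratio = signal_to_accidental(3e7, duty_factor=1.0)
pulsed_ratio = signal_to_accidental(3e7, duty_factor=0.1)
improvement = dc_ratio / pulsed_ratio
print(f"DC beam is better by a factor {improvement:.1f}")
```

In this toy model the advantage of the continuous beam is simply the inverse of the duty factor, because the accidental rate grows with the square of the instantaneous rate while the signal rate depends only on the average rate.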

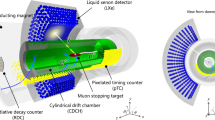

A schematic of the MEG apparatus is shown in Fig. 1. A magnet, COBRA (COnstant Bending RAdius), generates a gradient magnetic field, for the first time among particle physics experiments, with the field strength gradually decreasing at increasing distance along the magnet axis from the centre.

This configuration is optimised to sweep low-momentum positrons from Michel decays rapidly out of the magnet, and to keep the bending radius of the positron trajectories only weakly dependent on their emission angle within the acceptance region (see Sect. 3).

The positron track parameters are measured by a set of very low mass Drift CHambers (DCH) designed to minimise the multiple scattering (see Sect. 4). The positron time is measured by a Timing Counter (TC) consisting of scintillator bars read out by PhotoMultiplier Tubes (PMT) (see Sect. 5).

For γ-ray detection, we have developed an innovative detector using Liquid Xenon (LXe) as a scintillation material viewed by PMTs submersed in the liquid. This detector provides accurate measurements of the γ-ray energy and of the time and position of the interaction point (see Sect. 6).

We monitor the performance of the experiment continuously by introducing a variety of calibration methods (see Sect. 7).

We employ a flexible and sophisticated electronics system for triggering the μ⁺→e⁺γ candidate events along with calibration data (see Sect. 8).

Special considerations influenced the development of the data acquisition system. We record signals from all detectors with a custom-designed waveform digitiser, the Domino Ring Sampler (DRS) (see Sects. 9 and 10).

We evaluate detector response functions using data with the help of detector simulations (see Sect. 11).

In this paper, we mostly refer to a cylindrical coordinate system (r,ϕ,z) with origin in the centre of COBRA. The z-axis is parallel to the COBRA axis and directed along the incoming muon beam. The axis defining ϕ=0 (the x-axis of the corresponding Cartesian coordinate system) is directed opposite to the centre of the LXe detector (as a consequence the y-axis is directed upwards). Positrons move along trajectories of decreasing ϕ coordinate. When required, the polar angle θ with respect to the z-axis is also used. The region with z<0 is referred to as upstream, that with z>0 as downstream.

The angular acceptance of the experiment is defined by the size of the LXe fiducial volume, corresponding approximately to \(\phi\in (\frac{2}{3}\pi,\frac{4}{3}\pi)\) and |cosθ|<0.35, for a total acceptance of ∼11 %. The spectrometer is designed to fully accept positrons from μ⁺→e⁺γ decays when the γ-ray falls in the acceptance region.
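The quoted ∼11 % follows directly from the stated angular ranges. A short check, assuming the acceptance is simply the covered fraction of the full solid angle (no efficiency or fiducial corrections):

```python
import math

# Angular limits quoted in the text.
phi_min, phi_max = 2.0 * math.pi / 3.0, 4.0 * math.pi / 3.0
cos_theta_max = 0.35

# Solid angle element is d(phi) * d(cos theta); cos(theta) spans [-0.35, +0.35].
solid_angle = (phi_max - phi_min) * (2.0 * cos_theta_max)
acceptance = solid_angle / (4.0 * math.pi)
print(f"geometrical acceptance = {acceptance:.3f}")
```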

2 Beam line

2.1 Experimental requirements

In order to meet the stringent background requirements necessary for a high-sensitivity, high-rate coincidence experiment such as MEG, one of the world’s highest intensity continuous muon beams, the πE5 channel at PSI, is used in conjunction with the high-performance MEG beam transport system which couples the πE5 channel to the COBRA spectrometer (see Sect. 3). This allows more than 10⁸ μ⁺/s, with an optimal suppression of beam-correlated background, to be transported to the ultra-thin stopping-target at the centre of COBRA. The main requirements of such a low-energy surface muon beam are: high-intensity with high-transmission optics at 28 MeV/c, close to the kinematic edge of stopped pion decay and to the maximum intensity of the beam momentum spectrum; small beam emittance to minimise the stopping-target size; momentum-bite of less than 10 % with an achromatic final focus to provide an almost monochromatic beam with a high stopping density and enabling muons to be stopped in a minimally thick target; minimisation and separation of beam-related background, such as beam e⁺ originating from π⁰-decay in the production target or decay particles produced along the beam line; minimisation of material interactions along the beam line, requiring both vacuum and helium environments to reduce the multiple scattering and the photon-production probability for annihilation-in-flight or Bremsstrahlung.

Furthermore, the experiment requires an arsenal of calibration and monitoring tools, including, on the beam side, the possibility of sources of monochromatic photons and positrons for calibration purposes. This in turn requires a 70.5 MeV/c stopped negative-pion beam tune to produce photons from the charge-exchange (π⁻p→π⁰n) and radiative capture reactions (π⁻p→γn) on a liquid hydrogen target (see Sect. 7.3.2), as well as a 53 MeV/c positron beam tune to produce monochromatic Mott-scattered positrons (see Sect. 7.2).

Finally, the MEG beam transport system also allows the introduction of external targets and detectors, as well as a proton beam line, from a dedicated Cockcroft–Walton (C–W) accelerator (see Sect. 7.3.1), to the centre of the spectrometer helium volume. This is achieved without affecting the gas environment, by means of specially designed end-caps. The protons from the C–W accelerator interact with a dedicated target to produce γ-ray lines induced by nuclear reactions.

2.2 πE5 beam line and MEG beam transport system

The πE5 channel is a 165° backwards-oriented, windowless, high-acceptance (150 msr), low-momentum (<120 MeV/c), dual-port π⁻, μ⁻ or e-channel. For the MEG experiment, the channel is tuned to +28 MeV/c with a momentum-bite of between 5 and 7 % FWHM, depending on the opening of the momentum selecting slits placed in the front-part of the channel. This constitutes an optimal high-intensity surface muon tune (stopped pion-decay at the surface of the production target) at backward pion production angles [6, 7]. A schematic of the πE5 channel and the connecting MEG beam line is shown in Fig. 2. The main production target is a rotating radiation-cooled graphite wheel of 4 cm thickness in the beam direction. It is connected to the πE5 area by a quadrupole and sextupole channel that couples to the initial extraction and focussing elements of the MEG beam line, quadrupole triplet I, which exits the shielding wall and provides optimal high transmission through the Wien-filter (E×B field separator). With an effective length of about 83 cm for both the electric and magnetic fields, together with a 200 kV potential across the 19 cm gap of the electrodes, a mass separation equivalent to an angular separation of +88 mrad and −25 mrad between muons and positrons and between muons and pions is achieved, respectively. This together with Triplet II and a collimator system (equivalent to 11 X₀ of Pb), placed after the second triplet, separates the muons from the eight-times higher beam positron contamination coming from the production target. A separation quality between muons and beam positrons of 8.1σ_μ is thus achieved, corresponding to a 12 cm physical separation at the collimator system as shown in Fig. 3. This allows an almost pure muon beam to propagate to the Beam Transport Solenoid (BTS) (see Sect. 2.3), which couples the injection to the superconducting COBRA spectrometer in an iron-free way.
By means of a 300 μm thick Mylar® degrader system placed at the intermediate focus, the BTS also minimises the multiple scattering contribution to the beam and adjusts the muon range for a maximum stop-density at the centre of the muon target, placed inside a helium atmosphere, at the centre of the COBRA spectrometer.

Schematic of the πE5 Channel and the MEG beam transport system showing the beam elements and detectors: Wien-filter, collimator system and the superconducting Beam Transport Solenoid (BTS) and central degrader system. Coupled to these are the He-filled COBRA spectrometer, consisting of a gradient-field magnet and central target system. The downstream insertion system allowing the remote insertion of the Cockcroft–Walton beam pipe, for calibration purposes, is also shown

Separator scan plot, measured post collimator system. The black dots represent on-axis, low discriminator threshold intensity measurements at a given separator magnetic field value during the scan, for a fixed electric field value of −195 kV. The red curve represents a double Gaussian fit to the data points, with a constant background. A separation of 8.1σ_μ (in units of the muon beam width) is found, corresponding to more than 12 cm separation of beam-spot centres at the collimator. The raw muon peak measured at low discriminator threshold also contains a contribution from Michel positrons due to decays in the detector. These can easily be distinguished by also measuring at high threshold, where only muons are visible on account of their higher energy loss and hence higher pulse-height (Color figure online)

2.3 The Beam Transport Solenoid (BTS)

The BTS, a 2.8 m long iron-free superconducting solenoid, with a 38 cm warm-bore aperture, coupling directly to the beam line vacuum, is shown in Fig. 4. With a maximal current of 300 A a field of 0.54 T can be reached, higher than the 0.36 T required for an intermediate focus at the central degrader system for muons. The double-layered 2.63 m long coil is made up of a 45 % Ni/Ti, 55 % Cu matrix conductor with an inductance of 1.0 H so yielding a maximum stored energy of 45 kJ.
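The quoted stored energy is consistent with the standard inductor relation E = ½LI². A one-line check using only the values given above:

```python
# BTS coil parameters quoted in the text.
inductance_h = 1.0      # H
max_current_a = 300.0   # A

# Magnetic energy stored in an inductor: E = L * I^2 / 2.
stored_energy_j = 0.5 * inductance_h * max_current_a ** 2
print(f"stored energy = {stored_energy_j / 1e3:.0f} kJ")
```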

The Beam Transport Solenoid (BTS), shown coupled to the beam line between Triplet II and the COBRA spectrometer. The main cryogenic tower with the current leads and the Joule–Thomson valve tower with the LHe transfer line from the cryoplant coupled to it as well as the smaller LN2 transfer line in the background can be seen. The two correction dipole magnets on the downstream side are not shown here

On the cryogenic side, a dedicated liquid helium (LHe) port to the central cryoplant is used to transport the supercritical helium at ∼10 bar to two Joule–Thomson valves (in the upper tower in Fig. 4), which are used to liquefy and automatically regulate the level of the 150 ℓ of LHe in the cryostat. A separate liquid nitrogen line cools an outer heat-shield used to minimise the LHe losses.

Furthermore, three iron-free correction dipole magnets are employed to ensure an axial injection into both solenoids: one cosθ-type placed upstream of the BTS, and one horizontal and one vertical conventional-dipole type, both mounted on the downstream side of the BTS. The three dipole magnets compensate for a radial asymmetry in the fringe fields induced by the interaction of the stray field from the large-aperture, iron-free COBRA magnet with an iron component of the hall-floor foundations. An example of the optics in Fig. 5 shows the 2σ beam envelopes both vertically and horizontally along the 22 m long beam line, together with the apertures of the various elements and the dispersion trajectory of particles having a 1 % higher momentum (dotted line).

2nd-order TRANSPORT calculation showing the vertical (top-half) and horizontal (bottom-half) beam envelopes plotted along the beam-axis from close to target E up to the centre of COBRA, a distance of 22 m. The vertical and horizontal scales differ 33:1. The coloured elements show the vacuum chamber apertures of the various beam elements, while the dotted line represents the central dispersion trajectory for particles with a 1 % higher momentum (Color figure online)

2.4 Spectrometer coupling system

The COBRA spectrometer is sealed at both ends by end-caps which constitute the final part of the beam line, separating the vacuum section from the helium environment inside. These specially designed 1160 mm diameter light-weight (35–40 kg), only 4 mm-thick aluminium constructs minimise the material available for possible photon background production, crucial to a background-sensitive experiment. They also integrate the beam vacuum window on the upstream side and an automated thin-windowed (20 μm) bellows insertion system, capable of an extension-length of about 1.7 m. The bellows insertion system is used to introduce various types of targets such as a liquid hydrogen target used in conjunction with the pion beam, or a lithium tetraborate photon production target used together with the C–W accelerator, on the downstream side.

2.5 Commissioning

The commissioning of the MEG beam line was completed with the first “engineering run” in late 2007. During commissioning, several beam optical solutions were studied, including the investigation of both πE5 branches (“U”-branch and “Z”-branch) as well as different beam-correlated background separation methods: a combined degrader energy-loss/magnetic-field separation method or a crossed (E×B)-field separator (Wien filter). The optimum solution favoured the “Z”-channel with a Wien filter, both from the experimental layout and the rate and background-suppression point of view.

In order to maximise the beam transmission rate and the stopping density in the target, an understanding of the momentum spectrum and channel momentum acceptance is necessary. This was carefully studied by measuring the muon momentum spectrum in the vicinity of the kinematic edge of stopped pion decay (29.79 MeV/c). As with all phase-space measurements performed at high rate, a scanning technique was used: either one using a small cylindrical “pill” scintillator of 2 mm diameter and 2 mm thickness coupled to a miniature PMT [8], or one using a thick depletion layer Avalanche PhotoDiode (APD), without a scintillator, in the case of measurements in a magnetic field. Both these solutions gave an optimum pulse-height separation between positrons and muons. This allows integrated beam-spot intensity measurements up to 2 GHz to be made.
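The kinematic edge quoted above is the muon momentum in the two-body decay of a pion at rest. A minimal check, assuming a massless neutrino and standard mass values for the charged pion and the muon (these masses are not given in the text):

```python
# Assumed particle masses in MeV/c^2 (standard values, not from the text).
M_PION = 139.570
M_MUON = 105.658

# Two-body decay at rest, pi -> mu + nu with a massless neutrino:
# p_mu = (m_pi^2 - m_mu^2) / (2 * m_pi)
p_edge = (M_PION ** 2 - M_MUON ** 2) / (2.0 * M_PION)
print(f"surface-muon kinematic edge = {p_edge:.2f} MeV/c")
```

The result agrees with the 29.79 MeV/c quoted in the text, and shows why the 28 MeV/c tune sits just below the edge.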

For the momentum spectrum, taken with the full 7 % FWHM momentum-bite and shown in Fig. 6, the whole beam line was tuned to successive momentum values and the integral muon rate was then determined by scanning the beam-spot using the beam line as a spectrometer. This allows both the central momentum and the momentum-bite to be determined by fitting a theoretical distribution, folded with a Gaussian resolution function corresponding to the momentum-bite, plus a constant cloud-muon background over this limited momentum range. The red curve corresponding to the fitted function shows the expected empirical p^3.5 behaviour below the kinematic edge. The cloud-muon content below the surface-muon peak, which has its origin in pion decay-in-flight in the vicinity of the production target, was determined to be 3.3 % of the surface-muon rate. This was determined from negative muon measurements at 28 MeV/c and using the known pion production cross-section differences [9], since negative surface-muons do not exist due to the formation of pionic atoms and hence the small cloud-muon contribution is much easier to measure in the absence of a dominating surface-muon signal.

Measured MEG muon momentum spectrum with fully open slits. Each point is obtained by optimising the whole beam line for the corresponding central momentum and measuring the full beam-spot intensity. The red line is a fit to the data, based on a theoretical p^3.5 behaviour, folded with a Gaussian resolution function corresponding to the momentum-bite plus a constant cloud-muon background (Color figure online)

Another important feature investigated was the muon polarisation at decay: since the muons are born in a 100 % polarised state, with their spin vector opposing their momentum vector, the resultant angular distribution on decay is an important issue in understanding the angular distribution of the Michel positrons and the influence of detector acceptance. The depolarisation along the beam line, influenced by divergence, materials, magnetic fields and beam contaminants was studied and incorporated into the MEG Monte Carlo, which was used to compare results with the measured angular distribution asymmetry (upstream/downstream events) of Michel positrons. The predicted muon polarisation of 91 % was confirmed by these measurements, which yielded a value of 89±4 % [10, 11]. The main single depolarising contribution is due to the mean polarisation of the 3.3 % cloud-muon contamination, which opposes that of the surface muons. However, because the depolarisation in the polyethylene target is totally quenched by the strength of the longitudinal magnetic field in COBRA, a high polarisation can be maintained [10].

In order to achieve the smallest beam-spot size at the target, the position of the final degrader as well as the polarity combination of COBRA and the BTS were studied. The smallest beam divergences and hence the smallest beam-spot size at the target were achieved by having a reverse polarity magnetic field of the BTS with respect to COBRA. This is due to the fact that the radial field is enhanced at the intermediate focus between the two magnets, thus effectively increasing the radial focussing power and minimising the growth of the beam divergences through the COBRA volume. The final round beam-spot at the target, with σ_x,y ∼10 mm, is shown in Fig. 7.

Scanned horizontal and vertical muon beam-spot profiles measured at the centre of COBRA. The red lines show the Gaussian fits to the distributions that yield σ_x,y ∼10 mm and a total stopping intensity of ∼3×10⁷ μ⁺/s at a proton beam intensity of 2.2 mA and momentum-bite of 5.0 % FWHM (Color figure online)

2.6 Beam line performance

Although the beam line was tuned for maximal rate with minimal positron beam contamination, the final beam stopping intensity was determined by a full analysis of the detector performance and an evaluation of its sensitivity to the μ⁺→e⁺γ decay. This yielded an optimal muon stopping rate in the target of 3×10⁷ μ⁺/s.

Furthermore, for both the pion beam and Mott positron beam calibration tunes, mentioned in Sect. 2.1, which involve prompt particle production at the proton target, the central momentum was chosen to give a maximal time-of-flight separation between the wanted particles and the unwanted background particles at the centre of COBRA, based on the use of the accelerator modulo 19.75 ns radio-frequency signal as a clock, which has a fixed time relationship to the production at the proton target. In the case of the Mott positron beam the final momentum should also be close to the signal positron momentum of 52.83 MeV/c. A summary of the final beam parameters is given in Table 1.

2.7 Target

2.7.1 Requirements

Positive muons are stopped in a thin target at the centre of COBRA. The target is designed to satisfy the following criteria:

1. Stop >80 % of the muons in a limited axial region at the magnet centre.
2. Minimise conversions of γ-rays from radiative muon decay in the target.
3. Minimise positron annihilation-in-flight with γ-rays in the acceptance region.
4. Minimise multiple scattering and Bremsstrahlung of positrons from muon decay.
5. Allow the determination of the decay vertex position and the positron direction at the vertex by projecting the reconstructed positron trajectory to the target plane.
6. Be dimensionally stable and be remotely removable to allow frequent insertion of ancillary calibration targets.

Criterion 1 requires a path-length along the incident muon axial trajectory of ∼0.054 g/cm² for low-Z materials and for the average beam momentum of 19.8 MeV/c (corresponding to a kinetic energy of 1.85 MeV) at the centre of COBRA. To reduce scattering and conversion of outgoing particles, the target is constructed in the form of a thin sheet in a vertical plane, with the direction perpendicular to the target at θ∼70°, oriented so that the muon beam is incident on the target side facing the LXe detector (see Fig. 1). The requirement that we be able to deduce the positron angle at the vertex sets the required precision on the knowledge of the position of the target in the direction perpendicular to its plane. This derives from the effect of the track curvature on the inferred angle; an error in the projected track distance results in an error in the positron angle at the target due to the curvature over the distance between the assumed and actual target plane position. This angle error is larger when the positrons are incident at a large angle with respect to the normal vector; for an incidence angle of 45°, an error in the target plane position of 500 μm results in an angle error of ∼5 mrad. This sets the requirements that the target thickness be below 500 μm (eliminating low-density foam as a target material) and that its position with respect to the DCH spectrometer be measured to ∼100 μm.
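The ∼5 mrad figure can be reproduced with a simple estimate: a plane displaced by Δx along its normal changes the track length to the plane by Δx/cos(α), and the direction of a curved track rotates by that extra length divided by the bending radius. The sketch below is one plausible reading of the argument, not the authors' calculation; it assumes a locally uniform field equal to the central COBRA value of 1.27 T (quoted in Sect. 3.2.1) and a signal positron whose momentum is entirely transverse to the field.

```python
import math

P_MEV_C = 52.83          # signal positron momentum (from the text)
B_TESLA = 1.27           # central COBRA field; assumed locally uniform
PLANE_ERROR_M = 500e-6   # error in the target plane position along its normal
INCIDENCE_DEG = 45.0     # angle between the track and the target normal

# Bending radius of a unit-charge particle: R [m] = p [MeV/c] / (299.792458 * B [T]).
radius_m = P_MEV_C / (299.792458 * B_TESLA)

# Extra path length between the assumed and the true target plane.
extra_path_m = PLANE_ERROR_M / math.cos(math.radians(INCIDENCE_DEG))

# Rotation of the track direction over that extra path.
angle_error_rad = extra_path_m / radius_m
print(f"R = {radius_m * 100:.1f} cm, angle error = {angle_error_rad * 1e3:.1f} mrad")
```

Under these assumptions the estimate comes out close to 5 mrad, and the same formula shows why a ∼100 μm survey precision brings the contribution down to the 1 mrad level.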

2.7.2 Realisation

To meet the above criteria, the target was implemented as a 205 μm thick sheet of a low-density (0.895 g/cm³) layered structure of polyethylene and polyester with an elliptical shape and semi-major and semi-minor axes of 10 cm and 4 cm. The target was captured between two rings of Rohacell® foam of density 0.053 g/cm³ and hung by a Delrin® and Rohacell stem from a remotely actuated positioning device attached to the DCH support frame. To allow a software verification of the position of the target plane, as described in Sect. 2.7.5, eight holes of radius 0.5 cm were punched in the foil.
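The sheet thickness, density and slant angle given above reproduce the ∼0.054 g/cm² path-length requirement of Sect. 2.7.1. A short check, assuming the beam travels along the z-axis and the target normal makes an angle of 70° with it, so that the path through the sheet is the thickness divided by cos(70°):

```python
import math

THICKNESS_CM = 205e-4        # 205 um sheet
DENSITY_G_CM3 = 0.895        # layered polyethylene/polyester structure
NORMAL_ANGLE_DEG = 70.0      # angle between the target normal and the beam axis

# Areal density seen perpendicular to the sheet.
areal_density_normal = THICKNESS_CM * DENSITY_G_CM3

# Areal density seen along the beam axis (slanted target).
areal_density_beam = areal_density_normal / math.cos(math.radians(NORMAL_ANGLE_DEG))
print(f"path-length along beam = {areal_density_beam:.3f} g/cm^2")
```

The slant increases the material seen by the incoming muons by about a factor of three, while outgoing positrons and photons in the acceptance region cross the sheet closer to its normal.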

2.7.3 Automated insertion

The positioning device consists of a pneumatically operated, bi-directional cylinder that inserts and retracts the target. Physical constraints on the position of the cylinder required the extraction direction to not be parallel to the target plane; hence the position of the plane when inserted was sensitive to the distance by which the piston travelled. This distance was controlled by both a mechanical stop on the piston travel and a sensor that indicated when the target was fully inserted.

2.7.4 Optical alignment

The position of the target plane is measured every run period with an optical survey that images crosses inscribed on the target plane; this survey is done simultaneously with that of the DCH. The relative accuracy of the target and DCH positions was estimated to be ±(0.5, 0.5, 0.8) mm in the (x, y, z) directions.

2.7.5 Software alignment

The optical survey was checked by imaging the holes in the target. We define z′ as the position parallel to the target plane and perpendicular to the y coordinate and x′ as the position perpendicular to the target plane. The y and z′ positions are easily checked by projecting particle trajectories to the nominal target plane and determining the z′ and y hole coordinates by fitting the illuminations to holes superimposed on a beam profile of approximately Gaussian shape. We note that these target coordinates are not important to the analysis, since a translation of the target in the target plane has no effect on the inferred vertex position. The x′ coordinate was checked by imaging the y positions of the holes as a function of the track direction at the target ϕ_e. Figure 8 illustrates the technique. The x′ target position is determined by requiring that the target hole images be insensitive to ϕ_e. Figure 9 shows the illumination plot for the 2011 data sample. Figure 10 shows the normalised y distribution of events with 20°<ϕ_e<30° in a slice at fixed z′ centred on a target hole (in red) and in adjacent slices (in blue). The difference (in black) is fitted with a Gaussian. Figure 11 shows the apparent y position of one of the target holes versus ϕ_e, fitted with a tan(ϕ_e) function, from which we infer that the x′ offset with respect to the optical survey is 0.2±0.1 mm. By imaging all the holes (i.e. finding x′(y,z′)) both the planarity and the orientation of the target plane were determined.

An illustration of the target hole imaging technique. If there is an offset in the x′ direction between the assumed target position and the true target position, the apparent y position of a target hole will depend on the angle of emission at the target ϕ_e. The shift in y is approximately equal to the shift in the x′ direction multiplied by tan(ϕ_e)
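The hole-imaging technique amounts to a straight-line fit of the apparent hole position against tan(ϕ_e), with the slope giving the x′ offset. The sketch below illustrates this with synthetic, noise-free points; the hole position, the sampled angles and the sign convention are hypothetical choices for illustration and do not come from the MEG data or software.

```python
import math


def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical inputs: true hole position and a 0.2 mm offset in x'.
TRUE_Y0_MM = 15.0
TRUE_OFFSET_MM = 0.2
phi_e_deg = [-50.0, -30.0, -10.0, 10.0, 30.0, 50.0]

# Apparent hole position: y = y0 + dx' * tan(phi_e).
tan_phi = [math.tan(math.radians(p)) for p in phi_e_deg]
y_apparent = [TRUE_Y0_MM + TRUE_OFFSET_MM * t for t in tan_phi]

offset_mm, y0_mm = fit_line(tan_phi, y_apparent)
print(f"fitted x' offset = {offset_mm:.3f} mm, hole y = {y0_mm:.3f} mm")
```

With real data each point would carry the uncertainty of a Gaussian fit to the hole image in one ϕ_e slice, and a weighted fit would be the natural extension.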

2.7.6 Multiple scattering contribution from the target

The target itself constitutes the most relevant irreducible contribution to the positron multiple scattering and therefore to the angular resolution of the spectrometer.

The simulated distribution of the μ⁺ decay vertex along x′ is shown in Fig. 12. The average is slightly beyond the centre of the target. Averaging over the decay vertex position and over the positron direction in the MEG acceptance region, the difference between the positron direction at the μ⁺ decay vertex and that at the target boundary follows a Gaussian distribution with σ=5.2 mrad.

3 COBRA magnet

3.1 Concept

The COBRA (COnstant Bending RAdius) magnet is a thin-wall superconducting magnet generating a gradient magnetic field which allows stable operation of the positron spectrometer in a high-rate environment. The gradient magnetic field is specially designed so that the positron emitted from the target follows a trajectory with an almost constant projected bending radius weakly dependent on the emission polar angle θ (Fig. 13(a)). Only high-momentum positrons can therefore reach the DCH placed at the outer radius of the inner bore of COBRA. Another good feature of the gradient field is that the positrons emitted at cosθ∼0 are quickly swept away (Fig. 13(b)). This can be contrasted to a uniform solenoidal field where positrons emitted transversely would turn repeatedly in the spectrometer. The hit rates of Michel positrons expected for the gradient and uniform fields are compared in Fig. 14 and indicate a significant reduction for the gradient field.

The central part of the superconducting magnet is as thin as 0.197 X₀ so that only a fraction of the γ-rays from the target interacts before reaching the LXe detector placed outside COBRA. The COBRA magnet (see Fig. 15) is equipped with a pair of compensation coils to reduce the stray field around the LXe detector for the operation of the PMTs.
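A rough idea of what "only a fraction" means can be obtained from the high-energy approximation in which the photon attenuation length for pair production is 9/7 of a radiation length. This is an assumption made for illustration only: at 52.8 MeV the asymptotic limit is not fully reached, and the estimate ignores the photon incidence angle and other interaction processes.

```python
import math

THICKNESS_X0 = 0.197   # central magnet thickness in radiation lengths (from the text)

# High-energy approximation: pair-production probability per radiation length ~ 7/9.
# This is an assumed approximation, not a value taken from the paper.
transmission = math.exp(-7.0 / 9.0 * THICKNESS_X0)
print(f"approximate gamma transmission = {transmission:.2f}")
```

Under this approximation roughly 85 % of the signal photons at normal incidence cross the magnet without converting.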

The parameters of COBRA are listed in Table 2.

3.2 Design

3.2.1 Superconducting magnet

The gradient magnetic field ranging from 1.27 T at the centre to 0.49 T at either end of the magnet cryostat is generated by a step structure of five coils with three different radii (one central coil, two gradient coils and two end coils) (Fig. 16). The field gradient is optimised by adjusting the radii of the coils and the winding densities of the conductor. The coil structure is conductively cooled by two mechanical refrigerators attached to the end coils; each is a two-stage Gifford–McMahon (GM) refrigerator [12] with a cooling power of 1 W at 4.2 K. The thin support structure of the coil is carefully designed in terms of the mechanical strength and the thermal conductivity. The basic idea is to make the coil structure as thin as possible by using a high-strength conductor on a thin aluminium mechanical support cylinder. Figure 17 shows the layer structure of the central coil, which has the highest current density. A high-strength conductor is wound in four layers inside the 2 mm-thick aluminium support cylinder. Pure aluminium strips with a thickness of 100 μm are attached on the inner surface of the coil structure in order to increase the thermal conductivity. Several quench protection heaters are attached to all coils in order to avoid a local energy dump in case of a quench. The high thermal conductivity of the coil structure and the uniform quench induced by the protection heaters are important to protect the magnet.

A high-strength aluminium stabilised conductor is used for the superconducting coils to minimise the thickness of the support cylinder. The cross-sectional view of the conductor is shown in Fig. 17. A copper matrix NbTi multi-filamentary core wire is clad with aluminium stabiliser which is mechanically reinforced by means of “micro-alloying” and “cold work hardening” [13, 14]. The overall yield strength of the conductor is measured to be higher than 220 MPa at 4.4 K. The measured critical current of the conductor as a function of applied magnetic field is shown in Fig. 18 where the operating condition of COBRA is also shown (the highest magnetic field of 1.7 T is reached in the coils with the operating current of 359.1 A). This indicates that COBRA operates at 4.2 K with a safety margin of 40 % for the conductor.

3.2.2 Compensation coils

The COBRA magnet is equipped with a pair of resistive compensation coils in order to reduce the stray field from the superconducting magnet around the LXe detector (Fig. 15). The stray field should be reduced below 5×10⁻³ T for the operation of the PMTs of the LXe detector. The stray field from the superconducting magnet is efficiently cancelled around the LXe detector since the distribution of the magnetic field generated by the compensation coils is similar to that of the superconducting magnet around that region but the sign of the field is opposite. Figure 19 shows that the expected distribution of the residual magnetic field in the vicinity of the LXe detector is always below 5×10⁻³ T.

3.3 Performance

3.3.1 Excitation tests

The superconducting magnet was successfully tested up to 379 A coil current, which is 5.6 % higher than the operating current. The temperature and stress distribution are monitored by using resistive temperature sensors and strain gauges attached to the coils, respectively. The temperatures of all the coils are found to stay lower than 4.5 K even at the highest coil current.

The strains of the coils are measured by the strain gauges up to 379 A coil current at the central coils and the support cylinder where the strongest axial electromagnetic force is expected. We observe a linear relation between the strains and the square of the coil current, which is proportional to the electromagnetic force acting on the coils. This indicates that the mechanical strength of the coils and the support strength are sufficient up to 379 A.

The effect of the compensation coils is also measured. The gain of the PMTs of the LXe detector, measured using LEDs (see Sect. 7.1.1), is suppressed by a factor of 50 on average in the stray magnetic field from the superconducting magnet, whereas it recovers up to 93 % of the zero field value with the compensation coils.

3.3.2 Mapping of magnetic field

The magnetic field of COBRA was measured prior to the experiment start with a commercial three-axis Hall probe mounted on a wagon moving three-dimensionally along z, r and ϕ. The ranges of the measurement are |z|<110 cm with 111 steps, 0∘<ϕ<360∘ with 12 steps and 0 cm<r<29 cm with 17 steps, which mostly cover the positron tracking volume. The probe contains three orthogonally aligned Hall sensors and is mounted on the moving wagon such that the three sensors measure B z , B r and B ϕ individually. The field measuring machine, the moving wagon and the Hall probe are aligned to a precision of a few mrad using a laser tracker. However, even small misalignments could cause a relatively large effect on the secondary components, B r and B ϕ , due to the large main component B z , while the measurement of B z is less sensitive to misalignments. Only the measured B z is, therefore, used in the analysis to minimise the influence of misalignments, and the secondary components B r and B ϕ are computed from the measured B z using the Maxwell equations as

\[B_{\phi}(z,r,\phi) = B_{\phi}(z_{0},r,\phi) + \frac{1}{r}\int_{z_{0}}^{z} \frac{\partial B_{z}(z',r,\phi)}{\partial \phi}\,\mathrm{d}z', \tag{1}\]

\[B_{r}(z,r,\phi) = B_{r}(z_{0},r,\phi) + \int_{z_{0}}^{z} \frac{\partial B_{z}(z',r,\phi)}{\partial r}\,\mathrm{d}z'. \tag{2}\]

The computations require the measured values for B r and B ϕ only at the plane defined by z=z 0. This plane is chosen at z 0=1 mm near the symmetry plane at the magnet centre where B r is measured to be small (|B r |<2×10−3 T) as expected. The effect of the misalignment of the B ϕ -measuring sensor on B ϕ (z 0,r,ϕ) is estimated by requiring the reconstructed B r and B ϕ to be consistent with all the other Maxwell equations.

Since r=0 is a singularity of Eqs. (1) and (2) and the effect of the misalignment of the B z -measuring sensor is not negligible at large r due to large B r , the secondary components are computed only for 2 cm<r<26 cm. The magnetic field calculated with a detailed coil model is used outside this region and also at z<−106 cm and z>109 cm where the magnetic field was not measured.
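The reconstruction of a secondary component from B z can be illustrated with a minimal sketch. It assumes a curl-free field in the tracking volume, so that ∂B r /∂z = ∂B z /∂r, and it treats a single (r, ϕ) grid line. The function name, the argument layout, the central difference in r and the trapezoidal rule in z are illustrative choices, not the procedure used by the collaboration. B ϕ would follow in the same way from ∂B z /∂ϕ with an additional factor 1/r.

```python
def radial_component_from_bz(z, bz_inner, bz_outer, dr, br_at_z0, iz0):
    """Reconstruct B_r(z) on one (r, phi) grid line from B_z at r - dr and r + dr.

    Assumes a curl-free field, dB_r/dz = dB_z/dr. All names are hypothetical.
    z         : list of z grid positions (increasing)
    bz_inner  : B_z sampled along z at radius r - dr
    bz_outer  : B_z sampled along z at radius r + dr
    br_at_z0  : measured B_r on the reference plane z[iz0]
    """
    # Central-difference estimate of dB_z/dr at every z grid point.
    dbz_dr = [(outer - inner) / (2.0 * dr)
              for inner, outer in zip(bz_inner, bz_outer)]

    # Running trapezoidal integral starting at z[0].
    running = [0.0]
    for i in range(1, len(z)):
        step = 0.5 * (dbz_dr[i] + dbz_dr[i - 1]) * (z[i] - z[i - 1])
        running.append(running[-1] + step)

    # Re-reference the integral so that it vanishes on the plane z[iz0].
    return [br_at_z0 + value - running[iz0] for value in running]
```

Only the value of B r on the reference plane enters as a directly measured secondary component, which is why the choice of a plane where B r is small limits the impact of sensor misalignment.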

The magnetic field is defined at the measuring grid points as described above and a continuous magnetic field map to be used in the analysis is obtained by interpolating the grid points by a B-spline fit.

4 Drift chamber system

4.1 Introduction

The Drift CHamber (DCH) system of the MEG experiment [15] is designed to ensure precision measurement of positrons from μ +→e+ γ decays. It must fulfil several stringent requirements: cope with a huge number of Michel positrons due to a very high muon stopping rate of up to \(3\times10^{7}\) μ +/s; be a low-mass tracker, since the momentum resolution is limited by multiple Coulomb scattering and the accidental γ-ray background from positron annihilation-in-flight must be minimised; and finally provide excellent resolution in the measurement of the radial coordinate as well as of the z coordinate.

The DCH system consists of 16 independent modules, placed inside the bore of COBRA (see Sect. 3) aligned in a half circle with 10.5∘ intervals covering the azimuthal region ϕ∈(191.25∘,348.75∘) and the radial region between 19.3 cm and 27.9 cm (see Fig. 20).
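The quoted azimuthal limits are consistent with 16 module positions spaced by 10.5∘. The short check below reads the two limits as the positions of the first and last module, which is an interpretation rather than a statement from the text; the variable names are illustrative.

```python
N_MODULES = 16        # number of DCH modules (from the text)
PITCH_DEG = 10.5      # angular spacing between modules (from the text)
FIRST_DEG = 191.25    # lower azimuthal limit (from the text)

# Azimuthal position of each module, assuming equal spacing.
module_phi_deg = [FIRST_DEG + PITCH_DEG * i for i in range(N_MODULES)]
```

With these inputs the last entry equals the upper limit of 348.75∘ quoted above.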

4.2 Design of DCH module

All 16 DCH modules have the same trapezoidal shape (see Fig. 21) with base lengths of 40 cm and 104 cm, without any supporting structure on the long side (open frame geometry) to reduce the amount of material. The modules are mounted with the long side in the inner part of the spectrometer (small radius) and the short one positioned on the central coil of the magnet (large radius).

Each module consists of two detector planes which are operated independently. The two planes are separated by a middle cathode made of two foils with a gap of 3 mm. Each plane consists of two cathode foils with a gas gap of 7 mm. In between the foils there is a wire array containing alternating anode and potential wires. They are stretched in the axial direction and are mounted with a pitch of 4.5 mm. The longest wire has a length of 82.8 cm, the shortest of 37.6 cm. The anode-cathode distance is 3.5 mm. The two wire arrays in the same module are staggered in radial direction by half a drift cell to resolve left-right ambiguities (see Fig. 22). The isochronous and drift lines in a cell calculated with GARFIELD [16] are shown in Fig. 23 for B=1.60 T.

The two detector planes are enclosed by the so-called hood cathode. The middle as well as the hood cathodes are made of a 12.5 μm-thick polyamide foil with an aluminium deposition of 2500 Å.

Thanks to such a low-mass construction and the use of a helium-based gas mixture, the average amount of material in one DCH module sums up to only \(2.6\times10^{-4}\,X_{0}\), which totals \(2.0\times10^{-3}\,X_{0}\) along the positron track.

All frames of the DCH modules are made of carbon fibre. Despite being a very light material, it offers the advantage of being rather rigid. Consequently, there is only a negligible deformation of the frames due to the applied pretension compensating the mechanical tension of the wires (1.7 kg) or the cathode foils (12.0 kg).

4.3 Charge division and vernier pads

A preliminary determination of the hit z coordinate, z anode, is based on the principle of charge division. To this end, the anodes are resistive Ni–Cr wires with a resistance per unit length of 2.2 kΩ/m. First, the z coordinate is measured from the ratio of the charges at the two ends of the anode wire, to an accuracy better than 2 % of the wire length.

Secondly the information from the cathodes is used to improve the measurement, by using a double wedge or vernier pad structure [17–19] with pitch λ=5 cm, etched on the cathode planes on both sides of the anode wires (see Fig. 24) with a resistance per unit length of 50 Ω/m.

Due to the double wedge structure, the fraction of charge induced on each pad depends periodically on the z coordinate. The ambiguity between periods of length λ is resolved by means of z anode. In total there are four cathode pad signals for each wire. To increase the capability of this method, the vernier pads of one cathode plane are staggered by λ/4 in the axial direction with respect to those of its partner plane.
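The way the coarse anode measurement selects the pad period can be sketched as follows. The sketch assumes that the two staggered cathode planes give asymmetries proportional to sin(2πz/λ) and cos(2πz/λ) with equal amplitudes, so that their ratio fixes the position within one period. This idealisation and the function name are assumptions made for illustration only. Since 2 % of the longest wire (82.8 cm) is about 1.7 cm, below λ/2 = 2.5 cm, the anode estimate is precise enough to pick the period.

```python
import math

def refine_z_with_vernier(z_anode_cm, asym_plane_a, asym_plane_b, pitch_cm=5.0):
    """Combine a coarse z from charge division with a periodic pad phase.

    ASSUMPTION: asym_plane_a ~ sin(2*pi*z/pitch) and asym_plane_b ~ cos(2*pi*z/pitch)
    with a common amplitude (planes staggered by a quarter period).
    """
    # Phase within one pad period, in (-pi, pi].
    phase = math.atan2(asym_plane_a, asym_plane_b)
    z_within_period = phase / (2.0 * math.pi) * pitch_cm

    # Choose the period index that brings the fine z closest to the coarse z.
    n_period = round((z_anode_cm - z_within_period) / pitch_cm)
    return n_period * pitch_cm + z_within_period
```

The coarse measurement only needs to be correct to within half a pad pitch; the final precision is set by the pad asymmetries.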

4.4 Counting gas

In order to reduce the amount of material along the positron trajectory, the helium-based gas mixture He:C2H6=50:50 is adopted as a counting gas [20, 21]. For this mixture the disadvantages of helium, its small primary ionisation and large diffusion, are balanced by the high primary ionisation, good quenching properties and high voltage (HV) stability of ethane. A reliable HV stability is very important as the DCHs are operated in a high-rate environment and with a very high gas gain of several times \(10^{5}\). The main advantages of this mixture are a rather fast and saturated drift velocity, ∼4 cm/μs, a Lorentz angle smaller than 8∘ and a long radiation length (X 0/ρ=640 m).

4.5 Pressure regulation system

A specially designed pressure control system manages the gas flows and gas pressures inside the DCH modules and inside the bore of COBRA, which is filled with almost pure He to also reduce multiple scattering along the positron path outside the DCH volume. The pressure regulation value, defined as the pressure difference between inside and outside the DCH, is on average 1.2×10−5 bar regulated to a precision better than 0.2×10−5 bar. This sensitivity is required as larger pressure differences would induce large distortions in the thin cathode foils and consequently lead to changes in the anode-cathode distances resulting in huge gain inhomogeneities along the anode wire as well as from wire to wire.

4.6 HV system

The DCHs are operated with a voltage of ∼1800 V applied to the anode wires. In the first years of operation a HV system based on the MSCB system (see Sect. 10.1.4) was used. In this case one power supply [22] was used as the primary HV device which fed 16 independent regulator units with 2 channels each. The primary HV was daisy-chained along the 16 regulator modules.

In 2011 the MSCB-based HV system was replaced by a new commercial one. Two plug-in units [23] with 16 independent channels each, operated in a Wiener Mpod mini crate, were chosen to reduce the high-frequency electronic noise contribution (mainly 14 MHz).

4.7 Readout electronics

Cathode and anode signals are conducted via printed circuit board tracks to the pre-amplifiers, which are mounted at the frames of the DCH modules. The cathode signal is directly connected to the input of the pre-amplifiers, whereas the anode signal is decoupled by a 2.7 nF capacitor as the anode wire is on positive HV potential.

The pre-amplifier was custom-designed to match the geometrical boundary conditions and electrical requirements of the DCH system. The circuit is based on two operational amplifiers with feedback loops and protection diodes. The total gain of the pre-amplifier is ∼50 and the bandwidth is 140 MHz for the cathode signals and 190 MHz for the anode signals. In addition, the anode output is inverted to match the required positive input of the DRS read-out chip. The noise contribution of the pre-amplifier is 0.74 mV.

All output signals from the pre-amplifiers are individually transferred by non-magnetic, coaxial cables (Radiall MIL-C-17/93-RG178) to the back-end electronics. At the end-cap of the COBRA spectrometer there is a feedthrough patch panel which has resistive dividers splitting the anode signals into two outputs in the proportion 1:9. The larger signal goes to the DRS input, whereas the smaller one is amplified by a feedback amplifier and summed with several other anode outputs to form a DCH self-trigger signal.

4.7.1 Alignment tools and optical survey

As the DCH is a position-sensitive detector, the position of the wires and the pads must be known very precisely. The alignment procedure consists of two parts, a geometrical alignment and a software alignment using cosmic rays and Michel positrons.

During the construction of each single frame the positions of the anode wires and of the cathode pads are measured with respect to an alignment pin located at the bottom left edge of each frame. Each cathode hood is equipped with two target marks (cross hairs) and two corner cube reflectors placed on the most upstream and most downstream upper edge of the cathode hood (see Fig. 25). After assembly of a DCH module the position of these identification marks was measured with respect to the alignment pin, which allows the alignment of the different frames within the assembled DCH module and acts as a reference for the wire as well as the pad positions. Even though the chamber geometry is an open frame construction a geometrical precision of ∼50 μm was achieved over the full length of the chamber for the position of the wires and the pads.

The support structure between two adjacent DCH modules is also equipped with target marks and corner cube reflectors on both the downstream and the upstream sides.

After the installation of the DCH system inside COBRA, an optical survey was performed. The positions of the target marks and of the corner cube reflectors on the cathode hoods and on the support structure were measured with respect to the beam axis and the downstream flange of the magnet using a theodolite (target marks) and a laser tracker system (corner cube reflectors) with a precision of 0.2 mm.

4.7.2 Track-based alignment: the Millipede

The optical survey measurements described above allow the determination of the wire positions in the absolute coordinate system of the experiment. To achieve a better accuracy, we developed a track-based alignment procedure consisting of three steps: (i) an internal alignment of DCH modules; (ii) the placement of the so-obtained DCH frame in the spectrometer, with a fine adjustment of the relative DCH-target-COBRA position; (iii) the alignment of the spectrometer with respect to the LXe detector (see Sect. 4.7.4).

The first step is accomplished by recording cosmic ray tracks during the COBRA shutdown periods, to make the internal DCH alignment independent of the magnetic field map. Events are triggered by dedicated scintillation Cosmic Ray Counters (CRC), located around the magnet cryostat, which also provide a reference for the drift-time measurement. The alignment procedure utilises the reconstructed position of hit DCH modules to minimise the residuals with respect to straight muon tracks, according to the Millipede algorithm [24]. Global parameters, associated with displacements of each DCH module from its initial position, are obtained with an accuracy better than 150 μm for each coordinate.

The second step relies on consistency checks based on a sample of double-turn Michel positron tracks in the magnet volume. The relative DCH-COBRA position is fine-tuned until the track parameters related to each single turn (which are reconstructed as independent tracks by the fitting algorithm) are consistent within the fit uncertainties.

4.7.3 Michel alignment

The chamber positions from the optical survey are also cross-checked by using Michel positrons from μ + decays. Both the radial chamber alignment and the alignment in z are analysed by looking at the difference between the measured coordinate and that predicted by a fitted trajectory for each chamber plane. Because this diagnostic is insensitive to the absolute chamber positions, the overall location of the support structure in space is constrained by fixing the average radial and z positions over all planes to those of the optical survey. Each chamber plane is then shifted to bring the mean of its pull distribution closer to the average over all planes. The shifts are found to be consistent with the results in Sect. 4.7.2. Additional effects are investigated with Michel positrons such as tilts in the (r–z) plane, and displacement in the (x–y) plane. However no such effects have been found.

4.7.4 Alignment with the LXe detector

Cosmic rays penetrating both the DCHs and the LXe detector are used to measure the position of the DCH frame relative to the LXe detector. From the distribution of the difference between the position of incidence on the LXe detector reconstructed by the PMTs and that extrapolated from the DCHs, the two detectors can be aligned relative to each other with an accuracy of 1 mm in the horizontal and vertical directions.

4.8 Calibrations

4.8.1 Time calibration

Systematic time offsets between signals are calibrated by looking at the distribution of the drift times. It is a broad distribution, whose width is the maximum drift time in the cell, but it has a steep edge for particles passing close to the sense wire. The position of this edge shows systematic offsets for different channels (wires and pads), even in the same cell, due to different delays in the electronics. The determination of the edge position allows us to realign these offsets.
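One simple way to locate the steep edge of a drift-time histogram is a half-maximum crossing with linear interpolation between bins. The sketch below illustrates that idea; the half-maximum criterion, the function name and the histogram layout are assumptions, since the text does not specify how the edge position is extracted.

```python
def leading_edge_time(bin_centres, counts, fraction=0.5):
    """Return the time at which the histogram first rises through fraction * max.

    Hypothetical edge estimator: linear interpolation between the two bins
    that bracket the threshold on the rising side of the distribution.
    """
    threshold = fraction * max(counts)
    for i in range(1, len(counts)):
        if counts[i - 1] < threshold <= counts[i]:
            weight = (threshold - counts[i - 1]) / (counts[i] - counts[i - 1])
            return bin_centres[i - 1] + weight * (bin_centres[i] - bin_centres[i - 1])
    raise ValueError("no rising edge found")
```

The relative offset between two channels is then the difference of their edge times, which can be subtracted to realign them.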

An essential ingredient of this procedure is the determination of the track time, to be subtracted from the time of each hit for determining the drift time. If positron tracks from muon decays are used, the time can be provided by the track reconstruction itself (see Sect. 4.10). Yet, a better performance is obtained if cosmic rays are used, with the track time provided with high precision by the CRC and corrected for the time of flight from the reference counter to the cell. This approach disentangles the calibration from the positron tracking procedure, and guarantees a relative time alignment at the level of 500 ps, comparable with the counter time resolution. The track reconstruction uncertainty due to these residual offset errors is negligible with respect to other contributions.

The same procedure can be used for both wire and pad signals. Alternatively, to avoid problems due to the smaller size of pad signals, wire offsets can be aligned first, and then the average time difference of wire and pad signal peaks in the same cell can be used to align the pads with respect to the wires.

4.8.2 z coordinate calibration

A variety of electronic hardware properties influence the accuracy of the anode wire and cathode pad measurements of the z coordinate. As discussed in Sect. 4.3, the hit z coordinate is first estimated by charge division using the anode wire signals and then refined using the charge distributions induced on the cathode pads. The anode charge asymmetry is defined as

where \(Q^{\mathrm{anode}}_{u(d)}\) is the measured anode charge at the upstream (downstream) end. The cathode pad charge asymmetry is defined similarly with \(Q^{\mathrm{cathode}}_{u(d)}\) replacing \(Q^{\mathrm{anode}}_{u(d)}\), where cathode is either the middle or the hood cathode of the cell. Calculations show that the cathode charge asymmetry depends sinusoidally on z to high precision. One group of calibrations involves adjusting the parameters entering into the anode z calculation; this improves the probability of obtaining the correct pad period from the initial determination of z.

The anode z coordinate is given by:

where L is the wire length, R the pre-amplifier input impedance, and ρ the wire resistivity.

Figure 26 demonstrates the fundamental calibration tool: the measured cathode charge asymmetry versus the measured z anode coordinate for each cell. The sinusoid period is forced to the pad pitch (λ=5 cm) by adjusting the factor \((\frac{L}{2}+\frac{R}{\rho})\) and its phase is forced to be 0 for z anode=0 by calibrating the relative gain between anode charges \(Q^{\mathrm{anode}}_{d}\) and \(Q^{\mathrm{anode}}_{u}\).
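For reference, the standard charge-division relations consistent with the symbols and the scale factor quoted above can be sketched as follows. The derivation assumes that z is measured from the wire centre and increases towards the downstream end, that ρ is the wire resistance per unit length, and that both ends see the same input impedance R. The sign convention of the asymmetry is an assumption and may differ from the one used in the analysis.

```latex
% Charge divides in inverse proportion to the resistance seen towards each end:
%   Q_u \propto \rho\,(L/2 - z) + R, \qquad Q_d \propto \rho\,(L/2 + z) + R
A_{\mathrm{anode}} \equiv
  \frac{Q^{\mathrm{anode}}_{d} - Q^{\mathrm{anode}}_{u}}
       {Q^{\mathrm{anode}}_{d} + Q^{\mathrm{anode}}_{u}}
  = \frac{2\rho z}{\rho L + 2R},
\qquad
z_{\mathrm{anode}} = \left(\frac{L}{2} + \frac{R}{\rho}\right) A_{\mathrm{anode}}
```

In this form the factor \((\frac{L}{2}+\frac{R}{\rho})\) sets the slope between asymmetry and position, while a relative gain difference between the two ends shifts the asymmetry at z anode=0, matching the two calibration handles described above.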

A calibration of the relative gain between cathode pads is performed to achieve optimal z resolution. This procedure uses plots similar to Fig. 26, except that z anode is replaced with the more precisely determined coordinate from fitted tracks, z track. A vertical offset indicates a relative difference between upstream and downstream cathode pad gains, which is zeroed by adjusting the cathode pad gains. The systematic uncertainty in the cathode pad gains from this procedure is estimated by comparing the results using z track and z anode as the abscissa.

The chambers, being operated at slight overpressure (see Sect. 4.5), experience bowing of the outer cathode hood foils. The bulge of the hood is largest near z=0 and at middle cell numbers because the foil is only attached at the edges of the chamber. This means that the two cathode foils are not equidistant from the anode wires, but instead the distance varies as a function of z even within the same cell. The induced charge, as well as the magnitude of the charge asymmetry, also differs between the two planes depending on z.

This effect is calibrated by correcting for the mismatch between asymmetry amplitudes. For a given cell,

which is related to the ratio of asymmetry magnitudes for geometric reasons, exhibits a clear and expected dependence on z, as shown in Fig. 27. The dependence for each wire and a preliminary evaluation of the pad z coordinate are used to rescale the asymmetries on an event-by-event basis for a final evaluation of the pad z coordinate. The charge ratio dependence of the charge asymmetry magnitude ratio is obtained by a scatter plot of those two variables summed over all wires and values of z.

4.9 Tracking

A chain of software algorithms first measures the arrival time and charge on each wire and pad associated with the passage of a charged particle through a cell. The arrival times and charges are converted into spatial coordinates. The information from each cell, referred to as a hit, is cross-checked with other hits in the same chamber, and groups of hits consistent with coming from the same particle are then collected into clusters. Patterns of clusters consistent with coming from the same particle are then collected into tracks.

Finally, a list of clusters associated with each track is passed to a Kalman filter to fit a trajectory. The fit incorporates average energy loss, multiple scattering, and the measured magnetic field. It also attempts to link multiple turns consistent with belonging to a single trajectory. The Kalman filter provides the track momentum, decay vertex, positron emission angles, the predicted impact location at the TC and the total path length from the target to the TC. An event-by-event indicator of the resolution in each of these quantities is also given.

4.10 Performance

This section reports on the DCH performance regarding spatial, angular, momentum and vertex resolutions.

4.10.1 Single hit resolution

We refer to the uncertainty in a single hit position measurement as the intrinsic resolution, i.e. not affected by multiple scattering. A technique for measuring the intrinsic radial coordinate resolution is illustrated in Fig. 28. The method considers clusters with exactly one hit in each chamber plane, on adjacent cells. A local track circle in the (x, y) plane is calculated from the position of this cluster and the two neighbouring clusters. Each hit is then propagated to the central chamber plane using this trajectory and the difference in the two radial coordinates at the central plane is interpreted as a measure of the radial intrinsic resolution. Figure 29 shows the distribution of R plane 0−R plane 1. This distribution is fit to the convolution of two double Gaussian functions.

The reason for using a double Gaussian function is that the single hit resolution of drift chambers usually has large tails. These are due to the non-Gaussian fluctuations of the primary ionisation, to the large diffusion and the irregular shape of the time-to-distance relations for hits produced in the peripheral regions of drift cells, to wrong left/right assignments (frequent for hits at small drift distances), to wrong drift time measurements in some unusually noisy signals, etc. Together with the non-Gaussian material effects (multiple scattering and dE/dx), these tails produce tails in the track parameter resolutions (angles, momentum and vertex).
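The shape used for such difference distributions follows from a standard result: if two independent measurements share the same zero-mean double Gaussian resolution, their difference is distributed as a sum of three Gaussians. The sketch below writes this out; the function names are illustrative and any numerical inputs are placeholders.

```python
import math

def gaussian(x, sigma):
    """Zero-mean normalised Gaussian density."""
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def difference_pdf(delta, core_fraction, sigma_core, sigma_tail):
    """Density of the difference of two independent measurements, each with
    resolution f*G(sigma_core) + (1-f)*G(sigma_tail)."""
    f = core_fraction
    return (
        # both measurements in the core
        f * f * gaussian(delta, math.sqrt(2.0) * sigma_core)
        # one in the core, one in the tail (two orderings)
        + 2.0 * f * (1.0 - f) * gaussian(delta, math.hypot(sigma_core, sigma_tail))
        # both measurements in the tail
        + (1.0 - f) ** 2 * gaussian(delta, math.sqrt(2.0) * sigma_tail)
    )
```

Fitting this function to the measured differences returns the single-measurement core width, tail width and core fraction directly, without a separate deconvolution step.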

The result is a radial coordinate resolution with a core component (87 %) and a tail component. The design resolution was parametrised by a single Gaussian.

A technique for measuring the intrinsic z coordinate resolution is illustrated in Fig. 30. Two-hit clusters belonging to a track segment are selected in the same way as described for the radial coordinate resolution measurement, to compare the two measured z coordinates. The calculated track circle in the (x, y) plane is used to define a local coordinate system, with the centre of the circle defining the origin. Within this coordinate system, the polar angle ϕ is calculated for each overall cluster position and for each hit within the cluster, as shown in Fig. 30(a). From the three cluster coordinates, a quadratic trajectory for z as a function of the local ϕ is computed. This trajectory is used to project the measured z coordinates of the two hits in question to a common ϕ and the resulting distribution of the difference of the projected z coordinates, z late−z early, is used to infer the intrinsic z resolution, as pictured in Fig. 30(b). This distribution, shown in Fig. 31, is fit with the convolution of two double Gaussian functions, resulting in a z coordinate resolution with a core component (91 %) and a tail of \(\sigma^{\mathrm{DCH}}_{z} = 2.1~\mathrm{mm}\). The largest known contribution to the z coordinate resolution comes from the stochastic fluctuations of the baseline in the presence of noise.

4.10.2 Angular resolution

The resolutions in the measurements of the positron angles at the target are measured from data by exploiting events where the positron makes two turns in the DCHs. Each turn is treated as an independent track, fitted, and propagated to the beam line where the track angles are compared. The distributions of the difference of the two measured angles in double-turn events are shown in Fig. 32 for θ e and in Fig. 33 for ϕ e . The resolution in each turn is assumed to be the same and these distributions are fit to the convolution of a single (θ e ) or double (ϕ e ) Gaussian function with itself. These functions represent the resolution functions of the positron angles.

Fig. 33: A fit to the distribution of \(\delta \phi_{e} \equiv \phi^{1\mathrm{st}\ \mathrm{turn}}_{e} -\phi^{2\mathrm{nd}\ \mathrm{turn}}_{e}\) on double-turn events. The distribution is fitted with a double Gaussian function convolved with itself, and the corresponding core and tail widths are shown, along with the fraction of events in the core component.

According to Monte Carlo studies, this method provides a significant overestimate of the true resolution. After correcting for this, we obtain a single Gaussian resolution \(\sigma_{\theta_{e}} = 9.4 \pm 0.5~\mathrm{mrad}\) and a double Gaussian ϕ e resolution of \(\sigma_{\phi_{e}} = 8.4 \pm 1.4~\mathrm{mrad}\) in the core (80 %) and \(\sigma_{\phi_{e}} = 38 \pm 6~\mathrm{mrad}\) in the tail, where the errors are dominated by the systematic uncertainty of the correction. The Monte Carlo resolutions are \(\sigma_{\theta_{e}}\sim 9~\mathrm{mrad}\) and \(\sigma_{\phi_{e}}\sim 8~\mathrm{mrad}\), while the design resolutions were \(\sigma_{\theta_{e},\phi_{e}}\sim 5~\mathrm{mrad}\).

It is also important to stress that these resolutions are affected by correlations among the other positron observables, which can be treated on an event-by-event basis, so that the effective resolutions determining the experimental sensitivity are \(\sigma_{\theta_{e}} = 8.5 \pm 0.5~\mathrm{mrad}\) and \(\sigma_{\phi_{e}} = 7.7\pm 1.4~\mathrm{mrad}\) in the core.

The multiple scattering contribution to these resolutions is \(\sigma_{\theta_{e},\phi_{e}} \sim 6.0~\mathrm{mrad}\); the rest is due to the single hit resolution.
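If the multiple scattering and single hit contributions are assumed to be independent and to add in quadrature (an assumption not stated in the text), the size of the single hit contribution can be estimated from the numbers above. The worked example uses the measured values of 9.4 mrad and 8.4 mrad (core) rather than the correlation-corrected ones.

```latex
\sigma_{\mathrm{hit}} \simeq \sqrt{\sigma_{\mathrm{tot}}^{2} - \sigma_{\mathrm{MS}}^{2}}
\\
\theta_{e}:\quad \sqrt{9.4^{2} - 6.0^{2}}~\mathrm{mrad} = \sqrt{52.36}~\mathrm{mrad} \approx 7.2~\mathrm{mrad}
\\
\phi_{e}\ (\mathrm{core}):\quad \sqrt{8.4^{2} - 6.0^{2}}~\mathrm{mrad} = \sqrt{34.56}~\mathrm{mrad} \approx 5.9~\mathrm{mrad}
```

Under this assumption the two contributions are of comparable size.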

4.10.3 Vertex resolution

The resolution in the position of the decay vertex on the target is dominated by the positron angular resolution. For a proper evaluation of the angular resolution function, a precise knowledge of the correlations between positron angle error and vertex position error is required. The average vertex position resolutions, however, can be measured directly by comparing the projected point of interception at the target plane on double-turn events. The difference in vertex z coordinates of the two turns is fit to the convolution of a single Gaussian function with itself, while that of the vertex y coordinates is fit to the convolution of a double Gaussian function with itself. After the Monte Carlo corrections are applied, the resolutions are \(\sigma_{y_{e}} = 1.1 \pm 0.1~\mathrm{mm}\) in the core (86.7 %), \(\sigma_{y_{e}} = 5.3 \pm 3.0~\mathrm{mm}\) in the tail and \(\sigma_{z_{e}} =2.5 \pm 1.0~\mathrm{mm}\). The resolutions for Monte Carlo events are \(\sigma^{\mathrm{MC}}_{y_{e}} = 1.0 \pm 0.1~\mathrm{mm}\) in the core and \(\sigma^{\mathrm{MC}}_{z_{e}} =2.9 \pm 0.3~\mathrm{mm}\). The values of \(\sigma_{y_{e}}\) are corrected for the correlation with the positron energy assumed to be the signal energy.

The design resolution was \(\sigma_{y_{e},z_{e}} \sim 1.0~\mathrm{mm}\) without correcting for correlation.

4.10.4 Energy resolution

The positron energy resolution is measured with a fit of the energy distribution to the unpolarised Michel spectrum multiplied by an acceptance function and convolved with a resolution function:

Functional forms for both the acceptance and the resolution functions are based on the guidance provided by Monte Carlo simulation. The acceptance function is assumed to be:

and the resolution function is taken to be a double Gaussian. The acceptance and the resolution parameters are extracted from the fit, as shown in Fig. 34. This gives an average resolution of \(\sigma_{E_{e}} = 330 \pm 16~\mathrm{keV}\) in the core (82 %) and \(\sigma_{E_{e}} = 1.13 \pm 0.12~\mathrm{MeV}\) in the tail. There is also a 60 keV systematic underestimation of the energy, to which we associate a conservative 25 keV systematic uncertainty from Monte Carlo studies. This is to be compared with the resolution goal of \(\sigma_{E_{e}}=180~\mathrm{keV}\) (0.8 % FWHM).
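The structure of such a fit model, (Michel spectrum × acceptance) convolved with a double Gaussian resolution, can be sketched numerically. The Michel shape x²(3−2x) neglects radiative corrections and the positron mass. The error-function form of the acceptance and all function and parameter names are assumptions made for illustration; they are not taken from the analysis.

```python
import math

E_EDGE_MEV = 52.83  # kinematic edge, half of the muon mass (from the text)

def michel(e_true):
    """Unpolarised Michel shape x^2 (3 - 2x), without radiative corrections."""
    x = e_true / E_EDGE_MEV
    return x * x * (3.0 - 2.0 * x) if 0.0 <= x <= 1.0 else 0.0

def acceptance(e_true, mu_acc, sigma_acc):
    """ASSUMED smooth turn-on, modelled with an error function."""
    return 0.5 * (1.0 + math.erf((e_true - mu_acc) / (math.sqrt(2.0) * sigma_acc)))

def gaussian(x, sigma):
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def resolution(delta, core_fraction, sigma_core, sigma_tail):
    """Double Gaussian resolution function."""
    return (core_fraction * gaussian(delta, sigma_core)
            + (1.0 - core_fraction) * gaussian(delta, sigma_tail))

def measured_spectrum(e_grid, mu_acc, sigma_acc, core_fraction, sigma_core, sigma_tail):
    """Model density of the measured energy, sampled on a uniform grid.

    The convolution is a plain Riemann sum, so the grid should be fine
    compared with sigma_core and extend beyond the kinematic edge.
    """
    step = e_grid[1] - e_grid[0]
    true_part = [michel(e) * acceptance(e, mu_acc, sigma_acc) for e in e_grid]
    smeared = [
        step * sum(w * resolution(e_meas - e_true, core_fraction, sigma_core, sigma_tail)
                   for e_true, w in zip(e_grid, true_part))
        for e_meas in e_grid
    ]
    norm = step * sum(smeared)
    return [s / norm for s in smeared]
```

In a fit, the acceptance and resolution parameters would be varied until this density matches the measured spectrum; the sharp edge at 52.83 MeV is what makes the resolution parameters well constrained.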

A complementary approach to determining the positron energy resolution is possible by using two-turn events as for the angular resolution. Figure 35 shows the distribution of the energy difference between the two turns. This is fit to the convolution of a double Gaussian function with itself, the same shape assumed in the fit of the edge of the Michel spectrum. A disadvantage of this technique is its inability to detect a global shift in the positron energy scale. This technique gives an average resolution of \(\sigma_{E_{e}} = 330~\mathrm{keV}\) in the core (79 %) and \(\sigma_{E_{e}} = 1.56~\mathrm{MeV}\) in the tail, in reasonable agreement with the results obtained from the fit of the Michel spectrum. A systematic offset of 108 keV between the energies of the two turns also appears; the energy of the first turn is systematically larger than the energy of the second turn. A related effect is the dependence of the measured Michel edge on θ e . These effects point to errors in the magnetic field mapping.

Fig. 35: A fit to the distribution of \(\delta E_{e} \equiv E^{1\mathrm{st}\ \mathrm{turn}}_{e} -E^{2\mathrm{nd}\ \mathrm{turn}}_{e}\) on double-turn events. The distribution is fitted with a double Gaussian function convolved with itself, and the corresponding core and tail widths are shown, along with the fraction of events in the core component.

4.10.5 Chamber detection efficiency

The relative efficiency of each chamber plane is measured as the probability to have a reconstructed hit when its neighbouring plane in the same chamber has at least one hit associated to a track. This probability is called the “hardware” efficiency, while the probability to have a hit associated to the track is called the “software” efficiency. Low-efficiency planes can be traced to operation at voltages below the nominal voltage. For the other planes the average hardware efficiency is ∼95 % and the average software efficiency is ∼90 %.

4.10.6 DCH efficiency

The absolute efficiency of the DCH system for signal and Michel positrons is difficult to measure. Yet it is not used in the physics analysis, because the μ +→e+ γ Branching Ratio is normalised with respect to the number of reconstructed Michel or radiative muon decays, so that the absolute positron reconstruction efficiency cancels. Nevertheless in Fig. 34, the fit to the Michel positron spectrum relative to tracks associated to a TC hit returns an estimate of the relative efficiency versus the positron momentum. In particular the relative efficiency for Michel (48 MeV<E e ) versus signal positrons is ∼0.70. This relative efficiency can also be estimated by Monte Carlo simulation to be ∼0.75 in good agreement.

The absolute efficiency of the DCH alone for Michel positrons (48 MeV<E e ), as estimated from Monte Carlo, is \(\epsilon_{e,\mathrm{DCH}}^{\mathrm{MC},\mathrm{Michel}} \sim 90~\%\), while for signal positrons it is \(\epsilon_{e,\mathrm{DCH}}^{\mathrm{MC}}\sim 83~\%\). The largest source of inefficiency for the DCH alone is tracks emitted at θ e ∼90∘, where multiple turns generate inefficiency in the track-finding algorithm.

5 Timing Counter

The Timing Counter (TC) is dedicated to precisely measuring the impact time of positrons to infer their emission time at the decay vertex in the target by correcting for the track length obtained from the DCH information.

The main requirements of the TC are:

- fast response to be used in the online trigger algorithms and to avoid rate effects;
- online fast and approximate (∼5 cm) impact point position reconstruction for trigger purposes;
- capability of determining the positron time with an accuracy ∼50 ps;
- good (∼1 cm) impact point position reconstruction in the offline event analysis;
- reliable operation in a harsh environment: high and non-uniform magnetic field, helium atmosphere, possibility of ageing effects;
- cover the full acceptance region for signal while matching the tight mechanical constraints dictated by the DCH and COBRA.

These goals were achieved through extensive laboratory and beam tests [25–28].

5.1 Concept and design of the Timing Counter

As mentioned in Sect. 1 and visible in Fig. 1, the TC consists of two modules (sectors), one placed upstream and the other downstream, an arrangement that matches the signal kinematics and is compatible with the mechanical constraints.

Each sector is barrel-shaped and consists of two sub-detectors, mounted on top of each other, with full angular coverage for positrons from μ +→e+ γ decays when the γ-ray points to the LXe detector (see Fig. 36 for a sketch of a sector).

At the outer radius, the longitudinal detector, consisting of scintillating bars read out at each end by PMTs, is dedicated mainly to time measurements and, thanks to its segmentation along ϕ, also provides a measurement of the positron impact ϕ coordinate. It also provides a measurement of the positron impact z coordinate by exploiting the separate time measurements of both PMTs. An approximate estimate of these variables is obtained online with fast algorithms for trigger purposes. This detector is therefore sufficient to reconstruct all positron variables required to match a DCH track and recover the muon decay time by extrapolating the measured time back to the target.

At the inner radius, the transverse detector, consisting of scintillating fibres, is devoted to determining with high precision the impact z coordinate to improve the matching between the DCH track and TC point.

Each sector is surrounded by a bag made of a 0.5 mm-thick EVAL® foil that separates the helium-filled volume of the magnet bore from the PMTs, which are sensitive to helium leakage through the borosilicate window [29, 30]. A buildup of helium inside the PMTs would lead to internal discharge making them unusable. The bag volume is continuously flushed with nitrogen to guarantee a stable operating condition.

5.2 The longitudinal detector

Each sector of the longitudinal detector consists of an array of 15 scintillating bars (Bicron BC404 [25, 31]) with an approximately square cross-section and a size of 4.0×4.0×79.6 cm³; each bar is read out by a pair of fine-mesh 2″ PMTs for high magnetic fields [32] glued at the ends. The bars are arranged in a barrel-like shape to fit the COBRA inner profile, with a 10.5° gap between adjacent bars matching the DCH pitch. The scintillator type was chosen from among fast scintillator candidates through a series of beam tests comparing their timing performance. The PMTs were chosen to match the bar size, taking into account the magnetic field strength and orientation [33] as well as the mechanical constraints.

The number of bars is defined by requirements on the size of the trigger regions defined in the (ϕ,θ) plane mapped onto the TC; the ϕ width corresponds to the gap between adjacent bars.

The bar thickness was chosen to acquire sufficient energy deposition from positrons to obtain the design time resolution.

The bar width is dictated by the requirement of providing full efficiency for positrons crossing the TC, leaving no gap between bars. Full acceptance of positrons emitted in the experiment’s angular range defines the bar length.

Furthermore, the details of the bar geometry are also dictated by the mechanical constraints coming from the TC transverse detector and the DCH system, resulting in the bar geometry in Fig. 37.

5.2.1 Readout electronics

The signals from the PMTs are interfaced with the DRS chip fast digitiser (see Sect. 9.2.1) with the read-out scheme depicted in Fig. 38. Each PMT signal is passively split into three channels with 80 %, 10 % and 10 % amplitude fractions, respectively. The highest fraction is sent to a Double Threshold Discriminator (DTD) specifically designed for time analysis purposes.

Schematic picture of the read-out electronics of the TC longitudinal detector. Signals from each PMT are passively split and fed into dedicated electronics channels. Different splitting ratios are used to optimise time and pulse reconstruction. The PMT charge monitor was deactivated in 2011 and its fraction is added to DRS/trigger input

The DTD is a high-bandwidth, low-noise discriminator with two different tunable thresholds: the lower one allows precise pulse-time measurements (see Sect. 5.4.2), while the higher one acts as a veto against low-energy hits. The value of the high threshold determines the detector efficiency and is also related to the trigger threshold, as discussed in Sect. 5.4. When the DTD fires, a standard NIM waveform is generated and then digitised by the DRS boards together with a copy of the PMT waveform. The NIM and PMT waveforms are analysed offline to extract the hit time. Several algorithms can be applied; the best one, used as default, fits the NIM waveform. This method reduces the contribution of the DRS jitter and dynamic range to the overall time resolution.
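
The double-threshold logic can be mimicked in software as a rough illustration. The sketch below is not the DTD hardware or the MEG reconstruction: it assumes a positive-going, uniformly sampled pulse, a hypothetical function name `dtd_time`, and a linear interpolation between samples to locate the low-threshold crossing.

```python
def dtd_time(samples, dt_ns, low_thr, high_thr):
    """Software analogue of a double-threshold discriminator.

    samples  : pulse amplitudes (assumed positive-going), one per sample
    dt_ns    : sampling period in ns (0.625 ns would correspond to 1.6 GHz)
    low_thr  : low threshold, defines the pulse time
    high_thr : high threshold, pulses never reaching it are vetoed

    Returns the interpolated low-threshold crossing time in ns,
    or None if the pulse is vetoed or no upward crossing is found.
    """
    if not samples or max(samples) < high_thr:
        return None  # vetoed: amplitude below the high threshold
    for i in range(1, len(samples)):
        a, b = samples[i - 1], samples[i]
        if a < low_thr <= b:
            # linear interpolation between the two bracketing samples
            return (i - 1 + (low_thr - a) / (b - a)) * dt_ns
    return None
```

Taking the time from the low threshold keeps the crossing close to the pulse onset, while the high threshold only decides whether the pulse is accepted.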

The first 10 % copy of each signal is duplicated and fed to the trigger system and the DRS: at this stage an active splitter, described in Sect. 9.2.2, is used for the level translation needed to match the input ranges of the trigger (see Sect. 8) and DRS boards. The second 10 % copy was originally sent to a charge integrator to monitor the PMT ageing. Since 2011 the charge monitor has been based on pulse analysis instead; consequently, 20 % of each PMT signal is now fed to the DRS/trigger inputs.

5.3 The transverse detector

The transverse detector consists of 256 scintillating multi-clad fibres [34] coupled to Avalanche PhotoDiodes (APD) [35] covering the inner surface of a TC sector.

The small cross-section of the fibres (5×5 mm² plus 2×0.5 mm wrapping thickness, totalling 6.0 mm in size along z) satisfies the required space resolution and matches the 5×5 mm² APD sensitive area. In order to comply with the mechanical constraints, the fibre ends at the APD side are bent in two different ways: one set has an almost straight termination, while the other is “S”-shaped with small curvature radii (∼2 cm) and shows a significant light leakage, which is recovered with a high-reflectance wrapping [36].

The advantages of the APDs can be summarised as small size, insensitivity to magnetic field and fast response. The main disadvantages are the low gain, at most \(\sim 10^{3}\) if biased near the breakdown voltage, and the high capacitance, which make them sensitive to noise. For this reason a single APD current pulse is read out as a voltage across a load resistor and amplified. Bundles of 8 APDs are mounted on distinct boards carrying amplifying stages, power supplies, HV bias and ancillary control signals. Due to mechanical constraints, the fibre bundle mounted on a single front-end board is interleaved with the fibre bundle connected to the adjacent board: the resulting assembly, 16 consecutive fibres read out by two adjacent boards, covers 9.6×40.0 cm² and is viewed in the trigger system as a single block.

Each APD has an independent discriminated output, which is acquired via an FPGA-based board and stored. The transverse detector therefore delivers the list of fired fibres for each event.

The reconstructed coordinate of the hit fibre, \(z_{\mathrm{fibre}}\), can be matched to the \(z_{\mathrm{bar}}\) measured by the longitudinal detector. The distribution of \(z_{\mathrm{bar}} - z_{\mathrm{fibre}}\) for the downstream sector obtained with cosmic rays is shown in Fig. 39. Some tails are related to a \(\mathcal{O}(10~\%)\) inefficiency of the APD detector due to its geometry: incomplete coverage and 1 mm gap between fibres.

5.4 Commissioning and in-situ performance

The integration of the TC in MEG requires a set of calibrations to determine the detector parameters optimising its performance. Hereinafter only those involving the TC alone are described. Additional calibrations, involving other sub-detectors (e.g. time alignments with the DCH and LXe detectors) are described in Sect. 7.3.1 and in [37].

5.4.1 Gain equalisation

Due to the non-solenoidal configuration of the magnetic field, the inner and outer PMTs are subject to different field intensities and orientations and therefore operate in different working conditions resulting in different gains. Gains need to be equalised for their use in triggering and analysis.

The PMT gain equalisation procedure exploits cosmic rays hitting the TC: owing to the uniformity of the cosmic ray hit distribution along the bars, the charge and amplitude spectra of the inner and outer PMTs are only marginally affected by geometrical effects. It is therefore possible to tune the PMT gains by acting on the bias high voltages and to equalise the peaks of the Landau distributions (Fig. 40) to within 15 % or better. In the trigger algorithm a further software equalisation is performed online to keep a uniform threshold over the whole detector: by means of dedicated weights for each PMT, a percent-level uniformity is obtained.
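
As an illustration of the software equalisation step, the sketch below derives one multiplicative weight per PMT from the fitted Landau peak positions. The choice of the mean peak as the common target, the multiplicative form of the weights and the function names are assumptions made for this example; the source does not specify how the trigger weights are computed.

```python
def equalisation_weights(peaks):
    """Map each PMT to a weight that moves its Landau peak to the mean peak.

    peaks : dict of PMT name -> fitted Landau peak position (arbitrary units)
    """
    if not peaks or any(p <= 0 for p in peaks.values()):
        raise ValueError("need at least one PMT and positive peak positions")
    target = sum(peaks.values()) / len(peaks)  # assumed common target
    return {pmt: target / peak for pmt, peak in peaks.items()}


def max_relative_spread(peaks):
    """Largest relative deviation of any peak from the mean peak."""
    target = sum(peaks.values()) / len(peaks)
    return max(abs(p - target) / target for p in peaks.values())
```

With such weights, a spread of order 15 % left after the high-voltage tuning is removed in software, up to the precision of the fitted peak positions.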

5.4.2 Time Walk effect

The Time Walk effect is the dependence on the pulse amplitude of the threshold-crossing time delay as shown in Fig. 41. The analog waveforms sampled at 1.6 GHz are averaged and interpolated separately for each PMT over many events to obtain a template waveform, used to evaluate the correction required in time reconstruction. In the leading edge region of each template waveform, the dependence of the delay time versus the pulse amplitude is fitted with the empirical function:

where x is the ratio of the DTD Low Level Threshold (LLT) value to the PMT pulse amplitude and A, B and C are parameters fitted separately for each PMT. These parameters are recalculated every year to account for possible changes in the PMT pulse shape.

The correction in Eq. (8) is applied on an event-by-event basis. As shown in Fig. 42, this function reproduces well the experimental data.

The optimal LLT value balancing Time Walk immunity and noise rejection was found by evaluating the timing resolution of each bar with dedicated tests (see Sect. 10).

Figure 43 shows the comparison between the time resolutions obtained with two different LLT values (10 mV and 25 mV) on the double bar sample (see Sect. 10). The difference between the time resolutions for the two thresholds systematically favours 25 mV. From systematic studies of the double bar time resolution for LLT values ranging from 5 mV to 35 mV in 5 mV steps, the optimal value of LLT was found to be 25 mV.

5.4.3 Time offsets

Inter-bar time offsets due to the electronic chains are evaluated using cosmic rays. All PMTs are equipped with 10 m of low-loss signal cable to preserve the leading edge of the pulses [38]; the equal length for all PMTs minimises the possibility of time offsets drifting during the data-taking period (several months) and, together with a continuous monitoring of the relevant variables, ensures stable operation. For each bar, the distribution of the time difference between the inner and outer PMTs is acquired. Due to the uniformity and isotropy of cosmic rays this distribution is expected to be flat and centred at zero; the mean values of these distributions are direct measurements of the relative offsets between PMTs on each scintillating bar. The time offsets between different bars and between the TC and the LXe detector are measured using positrons from Michel decays as well as different calibration approaches [39].
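
The cosmic-ray estimate of the relative offset between the two PMTs of a bar can be sketched as follows. The sketch assumes a hypothetical input layout (a dictionary mapping a bar identifier to a list of inner and outer PMT time pairs, already corrected for Time Walk) and uses a plain arithmetic mean; it is an illustration, not the MEG calibration code.

```python
from statistics import mean

def intra_bar_offsets(time_pairs_by_bar):
    """Estimate the inner-outer PMT time offset of each bar.

    time_pairs_by_bar : dict of bar id -> list of (t_in, t_out) in ns,
                        from cosmic rays (hypothetical layout)

    For a uniform, isotropic illumination the distribution of
    t_in - t_out is expected to be centred at zero, so its mean
    measures the relative offset between the two PMTs.
    """
    offsets = {}
    for bar, pairs in time_pairs_by_bar.items():
        if not pairs:
            continue  # no cosmic-ray hits recorded for this bar
        offsets[bar] = mean([t_in - t_out for t_in, t_out in pairs])
    return offsets
```

Subtracting the measured offset from the time difference recentres the distribution, which is what allows the z reconstruction to return the correct position.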

Figure 44 shows the effect of accounting for time offsets on a two-dimensional (z versus ϕ) cosmic ray hit map. Using the measured time offsets (lower panel), the z reconstruction algorithm returns the correct position.

5.5 Trigger settings

The TC plays a crucial role in the trigger (see Sect. 8.3): it detects the positron and provides a preliminary estimate of its timing and direction.

The trigger position resolution is a key parameter for the direction match with the γ-ray entering the LXe detector and is evaluated by comparing the trigger-level reconstruction of the impact point from Eq. (17) with the one obtained from Eq. (13) in Sect. 5.8. It was \(\sigma_{z} \sim 7.3\) cm in 2009 and has improved to \(\sigma_{z} \sim 5.0\) cm since 2010 after refining the algorithms.

From the point of view of efficiency at the analysis level, the TC trigger and DTD conditional efficiency studies are motivated by the need for all triggered events to fire the DTD to allow event reconstruction. The relevant parameter is the threshold efficiency \(\epsilon_{\mathrm{DTD}}\), defined for each bar as the ratio of the number of events with NIM signals to the total number of events triggered on the same bar. The high-level threshold (HLT) is set slightly lower than the trigger threshold, in order to acquire only events with NIM pulse information. Figure 45 presents the efficiency \(\epsilon_{\mathrm{DTD}}\) versus the HLT. Averaging over all bars yields \(\epsilon_{\mathrm{DTD}} \geq 99.9~\%\) for HLT ≤ 400 mV.

5.6 Timing resolution of TC

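If a positron impinges on a TC bar at time \(T_{\mathrm{TC}}\), the times measured by the inner (in) and outer (out) PMTs read as:

\[t_{\mathrm{in}} = T_{\mathrm{TC}} + b_{\mathrm{in}} + \mathrm{TW}_{\mathrm{in}} + \frac{\frac{L}{2} + z}{v_{\mathrm{eff}}}, \qquad t_{\mathrm{out}} = T_{\mathrm{TC}} + b_{\mathrm{out}} + \mathrm{TW}_{\mathrm{out}} + \frac{\frac{L}{2} - z}{v_{\mathrm{eff}}} \tag{9}\]

where \(b_{\mathrm{in,out}}\) are time offsets depending on the read-out chain, \(\mathrm{TW}_{\mathrm{in,out}}\) are contributions from the Time Walk effect, \(v_{\mathrm{eff}}\) is the effective velocity of light in the bar and L is the bar length; the z axis points along the main axis of the bar and its origin is in the middle of the bar. From Eq. (9) the impact time is:

\[T_{\mathrm{TC}} = \frac{t_{\mathrm{in}} + t_{\mathrm{out}}}{2} - \frac{b_{\mathrm{in}} + b_{\mathrm{out}}}{2} - \frac{\mathrm{TW}_{\mathrm{in}} + \mathrm{TW}_{\mathrm{out}}}{2} - \frac{L}{2 v_{\mathrm{eff}}} \tag{10}\]

A more detailed discussion on the determination of the factors \(b_{\mathrm{in,out}}\) is in Sect. 5.4.3 and of the factors \(\mathrm{TW}_{\mathrm{in,out}}\) in Sect. 5.4.2.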
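
A minimal sketch of how the impact time and the z coordinate could be obtained from the two PMT times is given below. It assumes that each measured time is the sum of the impact time, the read-out offset, the Time Walk term and a propagation delay of \((L/2 \pm z)/v_{\mathrm{eff}}\), with the plus sign for the inner PMT; this sign convention, the function name and the numerical value of the effective velocity used in the usage example are assumptions for illustration only.

```python
def tc_impact(t_in, t_out, b_in, b_out, tw_in, tw_out, length_cm, v_eff):
    """Return (impact time in ns, z in cm) for one bar hit.

    Assumed model (sign convention for z is an assumption):
        t_in  = T + b_in  + tw_in  + (L/2 + z) / v_eff
        t_out = T + b_out + tw_out + (L/2 - z) / v_eff
    Times are in ns, lengths in cm and v_eff in cm/ns.
    """
    # remove offsets and Time Walk contributions from each PMT time
    c_in = t_in - b_in - tw_in
    c_out = t_out - b_out - tw_out
    # the average cancels z and leaves the mean propagation delay L/(2 v_eff)
    t_tc = 0.5 * (c_in + c_out) - length_cm / (2.0 * v_eff)
    # the difference cancels the impact time and isolates z
    z = 0.5 * v_eff * (c_in - c_out)
    return t_tc, z


# Usage with made-up numbers: generate the two PMT times, then invert them.
T, z_true, L, v = 10.0, 5.0, 79.6, 15.0  # v is a hypothetical value
t_in = T + 0.1 + 0.3 + (L / 2 + z_true) / v
t_out = T - 0.2 + 0.4 + (L / 2 - z_true) / v
t_rec, z_rec = tc_impact(t_in, t_out, 0.1, -0.2, 0.3, 0.4, L, v)
```

The impact time depends only on the sum of the two corrected times and the z coordinate only on their difference, so the impact time does not depend on the sign convention chosen for z.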