Abstract

A new framework is presented for evaluating the performance of self-consistent field methods in Kohn–Sham density functional theory (DFT). The aims of this work are two-fold. First, we explore the properties of Kohn–Sham DFT as it pertains to the convergence of self-consistent field iterations. Sources of inefficiencies and instabilities are identified, and methods to mitigate these difficulties are discussed. Second, we introduce a framework to assess the relative utility of algorithms in the present context, comprising a representative benchmark suite of over fifty Kohn–Sham simulation inputs, the scf-xn suite. This provides a new tool to develop, evaluate and compare new algorithms in a fair, well-defined and transparent manner.


Original content from this work may be used under the terms of the Creative Commons Attribution 3.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

1. Preface

Compute power, which refers here to both the performance and accessibility of computer hardware, has grown significantly over the past half-century. This increase has led to the rise of computational science as a discipline. In the present context, we are concerned with the hierarchy of methods that has emerged for calculating the properties of molecular and solid state systems by approximating the Schrödinger equation [1–3]. In particular, the most prominent method from this hierarchy over the past few decades has proven to be density functional theory (DFT) within the Kohn–Sham framework [4, 5]. For a variety of reasons, practitioners in both the physics and chemistry communities have deemed this level of theory appropriate to tackle a range of problems at an acceptable computational cost [6–8]. It is, therefore, of paramount importance that implementations of Kohn–Sham DFT optimally utilise the available computational resources.

Many distinct implementations of Kohn–Sham theory exist, differing according to the choice of basis set, whether a density matrix or explicit wavefunction formulation is used, and so on, each with advantages and disadvantages in the computational domain [2, 9–18]. Once such an approach has been chosen, its effectiveness is limited by the efficiency and reliability of the available numerical algorithms. This work reviews an aspect of Kohn–Sham theory that is more or less universal across many of these approaches; that is, how one iterates a density towards so-called self-consistency. This is conventionally referred to as the self-consistent field procedure, and is the most common source of numerical divergence when solving the equations of Kohn–Sham theory in silico [19]. This work examines the effectiveness of the methods and algorithms used in the self-consistent field procedure, reviewing a wide range of available methods drawn from the literature, studying the causes of divergences and inefficiencies and exploring how the available algorithms mitigate these potential issues. In order to assess the performance of the algorithms, a test suite is presented comprising a wide range of representative simulations. This test suite allows the algorithms to be judged according to both their robustness (ability to find a solution to the Kohn–Sham equations) and efficiency (speed with which a given solution is found) in a transparent and unbiased manner. The test suite and the associated workflow constitute a powerful new framework for the development, testing and assessment of new methods and algorithms. Throughout this work care has been taken to present the wide range of different methods in a consistent way, such that the similarities and differences of the methods are readily apparent.

2. Introduction

2.1. Background

The concept of self-consistency has been prevalent across many domains of physics, typically as a characteristic requirement when one invokes a mean-field approximation. For example, Hartree theory replaces the two-body Coulomb interaction between electrically-charged quantum particles with a mean-field, the Hartree potential, generated by the distribution of the electric charge in the system. Each particle is influenced by the Hartree potential, which in turn alters the distribution of charge in the system. This charge distribution can then be used to construct a new Hartree potential. The Hartree potential is self-consistent when these two fields are the same, i.e. the potential leads to a charge distribution which gives rise to the same potential. In fact, this was the context in which self-consistency was first introduced,

'If the final field is the same as the initial field, the field will be called 'self-consistent', and the determination of self-consistent fields for various atoms is the main object of this paper'.

–D.R. Hartree (1927) [20].

Later refined by Fock [21] and Slater [22], Hartree–Fock theory became widely adopted in computational quantum chemistry to compute ground state properties of molecules [3]. Whilst Hartree and Hartree–Fock theory are mean-field approximations, Hohenberg et al [5, 23] showed that a mean-field exists which reproduces the ground-state energy and particle density exactly. This 'density functional theory' allows, in principle, the computation of the exact electronic structure of any quantum system; however the exact density functional is not known, and must be approximated in any practical application of DFT. For a more detailed examination of the origins and physical foundations of Kohn–Sham theory, the reader is directed to the following resources [1, 4, 24], and references therein.

This work concerns the need to achieve self-consistency in the context of DFT simulations of atoms, molecules and materials. Namely, we focus on computing the particle density $\rho(x)$ for a set of atomic species and positions within the framework of Kohn–Sham DFT. Each of the N particles in the system is influenced by an external potential $v_{\rm ext}(x)$ which is uniquely defined by the species and positions of the atoms, the level of approximation employed, and more. For the purposes of this article, finding the ground state energy E in Kohn–Sham theory is viewed as a constrained minimisation problem,

$$E = \min_{\{\phi_i\}} \left\{ -\frac{1}{2} \sum_{i=1}^{N} \int \phi_i^*(x) \nabla^2 \phi_i(x) \, {\rm d}x + \int v_{\rm ext}(x) \rho(x) \, {\rm d}x + \frac{1}{2} \iint \frac{\rho(x) \rho(x')}{|x - x'|} \, {\rm d}x \, {\rm d}x' + E_{\rm xc}[\rho] \ \middle| \ \langle \phi_i | \phi_j \rangle = \delta_{ij} \right\}, \tag{1}$$

where atomic units are used, and, for now, spin degrees of freedom are omitted. The particle density, $\rho(x)$, is defined in terms of the single-particle orbitals, $\phi_i(x)$, via

$$\rho(x) = \sum_{i=1}^{N} |\phi_i(x)|^2.$$

That is, one must minimise the Kohn–Sham objective functional equation (1) over a set of N orthogonal, normalisable functions $\phi_i$ whose first derivative is also normalisable, i.e. they exist in the Sobolev space $H^1(\mathbb{R}^3)$. The exchange-correlation functional $E_{\rm xc}[\rho]$ is a yet undetermined functional of the density designed to capture the effects of exchange and correlation missing from the remainder of the functional. In principle, the Hohenberg–Kohn theorems guarantee that the Kohn–Sham objective functional is a functional of the density alone [23]. However, in the case of Kohn–Sham theory, recourse to an orbital-dependent functional is necessitated by the definition of the single-particle kinetic energy.

Explicit constrained variation of the orbitals allows one to approach the optimisation problem in equation (1) directly. This can be done, for example, with a series of line searches in the direction of steepest descent of $E_{\rm KS}$ with respect to the orbitals [25, 26]. Alternatively, assuming differentiability [27], the associated Lagrangian problem can be formulated, and the functional derivative of the Lagrangian set to zero. This yields the Euler–Lagrange equations for the problem, the solution of which is a stationary point of the functional. In the present context, the Euler–Lagrange equations constitute a nonlinear eigenvalue problem,

$$\hat{H}_{\rm KS}[\rho] \, \phi_i(x) = \epsilon_i \phi_i(x),$$

where the Hamiltonian operator $\hat{H}_{\rm KS}$ depends on its eigenvectors via

$$\hat{H}_{\rm KS}[\rho] = -\frac{1}{2} \nabla^2 + v_{\rm ext}(x) + \int \frac{\rho(x')}{|x - x'|} \, {\rm d}x' + \frac{\delta E_{\rm xc}[\rho]}{\delta \rho(x)}, \qquad \rho(x) = \sum_{i=1}^{N} |\phi_i(x)|^2.$$

These are the Kohn–Sham equations. The eigenvalues (quasi-particle energies) $\epsilon_i$ are the Lagrange multipliers associated with the orbital orthonormality constraint. Solving the Kohn–Sham equations to find a stationary point of the Kohn–Sham functional is a necessary but not sufficient condition for (local) optimality. A sufficient condition would require the second derivative (curvature) about the stationary point to be positive in every direction. Furthermore, in general, the Kohn–Sham functional for some approximate $E_{\rm xc}$ is not a convex functional of the orbitals, meaning that verifying global optimality is a difficult task. In practice, solving the Kohn–Sham equations, with certain methods of biasing the solution toward a (possibly local) minimum, is often preferred to direct minimisation [28]. The advantages and drawbacks of each approach will be examined in section 4.
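The distinction between a stationary point and a minimum can be illustrated with a minimal sketch. The example below is not a Kohn–Sham calculation: it uses two hypothetical two-variable functions (`bowl` and `saddle`), both of which have zero gradient at the origin, and classifies the stationary point from the eigenvalues of a finite-difference Hessian. The helper names and the step size `h` are assumptions made for illustration only.

```python
import numpy as np

def hessian(f, x, h=1e-4):
    """Central finite-difference Hessian of a scalar function f at the point x."""
    n = x.size
    hess = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            ei = np.zeros(n)
            ej = np.zeros(n)
            ei[i] = h
            ej[j] = h
            hess[i, j] = (f(x + ei + ej) - f(x + ei - ej)
                          - f(x - ei + ej) + f(x - ei - ej)) / (4.0 * h * h)
    return hess

def classify(f, x):
    """Classify a stationary point x of f by the signs of the Hessian eigenvalues."""
    eigenvalues = np.linalg.eigvalsh(hessian(f, x))
    if np.all(eigenvalues > 0):
        return "minimum"
    if np.all(eigenvalues < 0):
        return "maximum"
    return "saddle"

# Both toy functions are stationary at the origin, but only one is a minimum there.
bowl = lambda x: x[0] ** 2 + x[1] ** 2
saddle = lambda x: x[0] ** 2 - x[1] ** 2
origin = np.zeros(2)

print(classify(bowl, origin), classify(saddle, origin))
```

In a plane-wave Kohn–Sham calculation the analogous Hessian is far too large to form and diagonalise in this way, which is why such a check is rarely performed in practice.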

It is now possible to formally define what is meant by self-consistency. In order to construct the Kohn–Sham Hamiltonian, one requires a density as input  to compute the Hartree and exchange-correlation potentials. An output density

to compute the Hartree and exchange-correlation potentials. An output density  is then calculated (non-linearly) from the eigenfunctions of the Kohn–Sham Hamiltonian

is then calculated (non-linearly) from the eigenfunctions of the Kohn–Sham Hamiltonian

In general, the input density is not equal to the output density. For a given external potential and exchange-correlation functional, the density  is self-consistent when

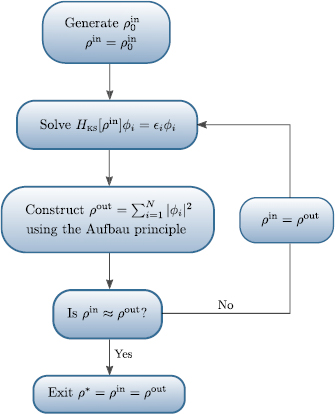

is self-consistent when  , and hence the non-linear eigenvalue problem of equations (8) and (9) is solved. The non-linearity in equation (9) necessitates an iterative procedure that takes an initial estimate of the density as input and iterates this density toward a self-consistent solution of the Kohn–Sham equations: the self-consistent field procedure, figure 1. As one might expect, an infinity of self-consistent densities exist for a given external potential and exchange-correlation functional [29]. However, we are interested primarily in the subset of these densities that are local minima of the Kohn–Sham objective functional.

, and hence the non-linear eigenvalue problem of equations (8) and (9) is solved. The non-linearity in equation (9) necessitates an iterative procedure that takes an initial estimate of the density as input and iterates this density toward a self-consistent solution of the Kohn–Sham equations: the self-consistent field procedure, figure 1. As one might expect, an infinity of self-consistent densities exist for a given external potential and exchange-correlation functional [29]. However, we are interested primarily in the subset of these densities that are local minima of the Kohn–Sham objective functional.

Figure 1. An iterative algorithm generates a series of perturbations to the density in order to converge the initial guess density (top) to the fixed-point density $\rho_*$ (bottom). Example for an fcc four atom aluminium unit cell.

Modern computational implementations of Kohn–Sham theory can vary significantly due to various factors. The key distinguishing factor is the choice of basis set, which leads to the related problem of whether one treats all the electrons in the computation explicitly, or treats core electrons with a pseudopotential [30]. Despite these differences, perhaps with the exception of linear scaling methods [14], the self-consistent field techniques to be discussed here are adaptable to most implementations. Indeed, some of the most popular software, such as VASP [15, 31], ABINIT [10, 32], Quantum ESPRESSO [33], and CASTEP [34], use similar default methods to achieve self-consistency: preconditioned multisecant methods, which are discussed in section 4.

2.2. Review purpose and structure

The overarching goal of this work is to quantify the utility of a given algorithm for reaching self-consistency in Kohn–Sham theory. In turn, this allows us to compare and analyse the performance of a sample of existing algorithms from the literature. Assessing these algorithms requires the creation of a test suite of Kohn–Sham inputs, representative of a range of numerical issues. This test suite generates a standard which can be used to test, improve, and present new algorithms designed by method developers. Furthermore, the test suite allows DFT developers to more effectively assess which algorithms they wish to implement. With these aims in mind, this article is structured in two partitions, as follows.

The first part constitutes a review of self-consistency in Kohn–Sham theory. As such, the relevant sections are ideal for an interested party who is not actively involved in development to gain a more in-depth understanding of self-consistency from an algorithmic perspective. In particular, this review collates decades of past literature on self-consistency in Kohn–Sham theory, thus elucidating conclusions that have become conventional wisdom. Section 3 examines the Kohn–Sham framework abstractly from a mathematical and computational perspective in order to study where and why algorithms encounter difficulties. This involves, for example, a discussion on the nature of so-called 'charge-sloshing', the initial guess density, sources of ill-conditioning, and more. Section 4 then examines and categorises the range of available algorithms in present literature. A focus will be placed on detailing the algorithms which have proven to be particularly successful.

The second part then utilises the analysis presented in the prior sections to perform a study akin to recent benchmarking efforts such as GW100 [35] and the $\Delta$-project [36] for assessing reproducibility in GW and DFT codes respectively. Whilst the study presented here will take on a similar structure to these examples, it differs in the following way. The aim of the $\Delta$-project is to assess an 'error' for each DFT software in a given computable property compared to a reference software over a set of test systems. Here, we instead aim to assess the utility of an algorithm, rather than an error, which we do with two competing measures: efficiency and robustness, defined in section 5. A test suite of Kohn–Sham inputs is then constructed to target weaknesses in contemporary algorithms and exploit the difficulties discussed in section 3. This test suite is designed to be representative of the range of systems practitioners may encounter and with which they may have difficulties reaching convergence. Each algorithm is then assigned a robustness and efficiency score when tested over the full suite. The methods of Pareto analysis then provide a prescription for the definition of optimal when there exist two or more competing measures of utility. Section 6 demonstrates these concepts by using this workflow on a selection of algorithms described in section 4, implemented in the plane-wave, pseudopotential software CASTEP. This study allows conclusions to be drawn about the current state of self-consistency algorithms in Kohn–Sham codes. Finally, we discuss how one might utilise the test suite and workflow demonstrated here to present and assess future methods and algorithms.

3. Self-consistency in Kohn–Sham theory

In computational implementations of Kohn–Sham theory, when a user has supplied the external potential (e.g. atomic species and positions) and exchange-correlation approximation, the Kohn–Sham energy functional is completely specified. The remaining parameters that are not related to self-consistency, such as Brillouin-zone sampling ('k-point sampling'), symmetry tolerances, and so on, tune either the accuracy or efficiency of the calculation. In the context of self-consistency, the user has control over a variety of parameters that can alter the convergence properties of the calculation. Hence, if a calculation is diverging due to the self-consistent field iterations (or converging inefficiently), the user has two options: adjust the parameters of the self-consistency method, or switch to a more reliable fall-back method. This section elucidates the self-consistent field iterations so one can more transparently see why one's iterations may be divergent or inefficient. No claim is made for providing a strictly detailed and rigorous treatment of the mathematical problem at hand. Instead, literature is cited throughout such that the interested reader can venture further in detail than this article provides.

3.1. Computational implementation

The central approximation involved in converting the framework of Kohn–Sham theory into a form suitable for computation is called the finite-basis approximation, or the Galerkin approximation [28]. The orbitals $\phi_i(x)$ are continuous functions of a continuous three dimensional variable, x. These functions are equivalent to vectors existing in an infinite dimensional vector space, spanned by a complete basis $\{\chi_\alpha(x)\}$. Provided this basis does indeed span the space, the orbitals can be expressed exactly as

$$\phi_i(x) = \sum_{\alpha=1}^{\infty} c_{i\alpha} \chi_\alpha(x).$$

Note that the basis set is required to be complete, but not necessarily orthogonal,

$$\langle \chi_\alpha | \chi_\beta \rangle = S_{\alpha\beta}.$$

If the basis is non-orthogonal, the finite-basis Kohn–Sham equations generalise to include the overlap matrix, $S$, see [28] for more details. This distinction does not affect the ensuing analysis, and so the basis set is hereafter taken to be orthogonal for clarity. Once the basis is specified, the equations can be rearranged and solved for the infinite set of basis coefficients $c_{i\alpha}$. In practice, one must truncate the basis such that it is no longer complete and instead captures only the most relevant regions of the formally infinite Hilbert space,

$$\phi_i(x) \approx \sum_{\alpha=1}^{N_b} c_{i\alpha} \chi_\alpha(x).$$

The characteristic size of the basis $N_b$ will depend primarily on the choice of basis functions. Within the finite-basis approximation, the Kohn–Sham Hamiltonian becomes an $N_b \times N_b$ matrix, of which a subset of the eigenvalues and eigenvectors is required to progress toward a solution of the non-linear eigenvalue problem, equations (8) and (9). Basis functions which are localised about the atomic cores [37] are a popular choice. These tend to be particularly accurate per basis function, meaning $N_b$ is typically the same order of magnitude as the number of electrons, N. Methods utilising local basis functions are often able to form and diagonalise the Kohn–Sham Hamiltonian matrix explicitly. In such implementations, the Kohn–Sham energy functional can be expressed in terms of the density matrix,

$$D_{\alpha\beta} = \sum_{i=1}^{N} c_{i\alpha} c_{i\beta}^*,$$

rather than the orbitals, where the density matrix is also of dimension $N_b \times N_b$. The Kohn–Sham energy functional has a closed-form expression in terms of the density matrix (see [28] and section 4.2), and therefore the constrained optimisation in equation (1) becomes an optimisation over allowed variations in the density matrix. From the point of view of the work to follow, the ability to construct, store, and optimise the density matrix directly is the distinguishing characteristic of localised basis sets with respect to the basis set considered in the following work: namely, the set of $N_b$ plane-waves,

$$\chi_G(x) = \frac{1}{\sqrt{\Omega}} \, e^{i G \cdot x},$$

with the same periodicity as the unit cell (of volume $\Omega$) [15, 31], labelled by the wavevector G of the plane-wave. This basis set is delocalised, meaning the functions $\chi_G(x)$ are non-zero across the whole unit cell. The introduction of a delocalised basis results in a reduction in accuracy per basis function, which in turn necessitates a much larger value of $N_b$ to reproduce the same accuracy as a computation using localised basis sets. The advantage of a plane-wave, or similar, basis set lies elsewhere [15, 31]. This will become relevant in section 4, as certain algorithms exploit the ability to construct such matrices explicitly. Nevertheless, much of the analysis to follow in this section will remain largely independent of basis set. The discussion will, however, be framed in the language of an entirely plane-wave basis set.
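As a minimal sketch of the density matrix discussed above, the following example builds it from the lowest eigenvectors of a small Hamiltonian matrix in an orthonormal basis. The Hamiltonian here is a random symmetric matrix used purely as a stand-in, and the function name `density_matrix` and the sizes `n_basis` and `n_occ` are illustrative assumptions rather than anything taken from a particular code.

```python
import numpy as np

def density_matrix(hamiltonian, n_occ):
    """Density matrix D = C C^dagger from the n_occ lowest eigenvectors.

    Assumes an orthonormal basis, so no overlap matrix appears.
    """
    _, eigenvectors = np.linalg.eigh(hamiltonian)  # eigenvalues in ascending order
    occupied = eigenvectors[:, :n_occ]             # columns are occupied orbitals
    return occupied @ occupied.conj().T

# Stand-in Hamiltonian: a random real symmetric matrix (not a physical system).
rng = np.random.default_rng(0)
n_basis, n_occ = 8, 3
a = rng.standard_normal((n_basis, n_basis))
h = 0.5 * (a + a.T)

d = density_matrix(h, n_occ)
print(np.trace(d))            # number of occupied orbitals
print(np.allclose(d @ d, d))  # idempotency of the density matrix
```

The trace and idempotency conditions checked at the end are the constraints that a direct optimisation over the density matrix has to respect. Storing an $N_b \times N_b$ object of this kind is feasible when $N_b$ is comparable to N, and becomes impractical for the much larger plane-wave basis sets.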

3.2. The Kohn–Sham map

As already stated, Kohn–Sham theory is a constrained optimisation problem, equation (1). The associated Euler–Lagrange equations provide a method for transforming the optimisation problem into a fixed-point problem: the Kohn–Sham equations. That is, we seek the density $\rho_*$ such that it is a fixed-point of the discretised Kohn–Sham map,

$$K[\rho_*] = \rho_*.$$

In general, $K[\rho^{\rm in}] = \rho^{\rm out}$, where K is defined using equations (8) and (9). That is, K takes an input density which is used to construct the Hartree and exchange-correlation potentials, then the associated Kohn–Sham Hamiltonian is diagonalised, and an output density is constructed as the sum of the squared moduli of N eigenfunctions. Formally, the Kohn–Sham map is a map from the set of non-interacting $v$-representable densities onto itself. Here, a non-interacting $v$-representable density is a density that can be constructed via equation (9) for a given Kohn–Sham Hamiltonian. The 'size' of this set is an open problem [27]. Hence, it is entirely possible that algorithms generate input densities that are not non-interacting $v$-representable; however, this does not appear to be an issue in practice. The aim now is to generate a converging sequence of densities $\{\rho_n^{\rm in}\}$ starting from an initial guess density $\rho_0^{\rm in}$, where $\rho_n^{\rm in} \rightarrow \rho_*$ to within some desired tolerance. The ease with which this sequence can be generated in practice depends on the functional properties of K, which are examined later in this section.
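The action of the map K and the generation of a converging sequence of densities can be sketched on a toy model. The example below is an assumption-laden illustration, not a Kohn–Sham implementation: it uses a one-dimensional hard-wall box with a harmonic external potential, and replaces the Hartree and exchange-correlation potentials with a hypothetical local mean-field term `coupling * rho`. The sequence is generated by simple damped (linear) mixing with a fixed parameter `alpha`, chosen here for simplicity; the function names, grid, and parameter values are all illustrative.

```python
import numpy as np

def kohn_sham_map(rho_in, v_ext, dx, n_occ, coupling):
    """Toy map K: build a Hamiltonian from rho_in, diagonalise it, return rho_out."""
    n = rho_in.size
    # Kinetic energy -1/2 d^2/dx^2 by central finite differences (hard-wall box).
    kinetic = (np.eye(n) - 0.5 * np.eye(n, k=1) - 0.5 * np.eye(n, k=-1)) / dx**2
    # Hypothetical local mean-field potential standing in for Hartree + xc terms.
    hamiltonian = kinetic + np.diag(v_ext + coupling * rho_in)
    _, orbitals = np.linalg.eigh(hamiltonian)
    occupied = orbitals[:, :n_occ]
    # Sum of squared orbitals, scaled so that the density integrates to n_occ.
    return np.sum(occupied**2, axis=1) / dx

def scf(v_ext, dx, n_occ, coupling=1.0, alpha=0.3, tol=1e-8, max_iter=500):
    """Damped fixed-point iteration rho <- rho + alpha * (K[rho] - rho)."""
    rho = np.full(v_ext.size, n_occ / (v_ext.size * dx))  # uniform initial guess
    norm = np.inf
    for iteration in range(1, max_iter + 1):
        residual = kohn_sham_map(rho, v_ext, dx, n_occ, coupling) - rho
        norm = np.sqrt(np.sum(residual**2) * dx)
        if norm < tol:
            return rho, norm, iteration, True
        rho = rho + alpha * residual
    return rho, norm, max_iter, False

x = np.linspace(-5.0, 5.0, 101)
dx = x[1] - x[0]
v_ext = 0.5 * x**2
rho, residual_norm, n_iter, converged = scf(v_ext, dx, n_occ=2)
print(converged, n_iter, residual_norm)
```

Each call to `kohn_sham_map` involves a full diagonalisation, so the iteration count is a direct proxy for cost. Setting `alpha` closer to one takes larger steps but may fail to converge when the map is not contractive, which is the behaviour the more sophisticated schemes of section 4 are designed to control.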

3.3. Defining convergence

The Kohn–Sham map K, can be used to define a new map R, the residual

which transforms the fixed-point problem into a root-finding problem. An absolute scalar measure of convergence is thus provided by the norm of the residual ![$||R[\rho^{{\rm in}}]||_2$](https://content.cld.iop.org/journals/0953-8984/31/45/453001/revision2/cmab31c0ieqn037.gif) , where ||.||2 is used to denote the vector L2-norm. However, ||R||2 is a quantity which lacks transparent physical interpretation, making it difficult to assess just how converged a calculation is by consideration of ||R||2 alone. Hence, convergence is conventionally defined in terms of fluctuations in the total energy, a more tractable measure. When fluctuations in the total energy are sufficiently low to satisfy the accuracy requirements of the users' calculation, the iterations are terminated and the calculation is converged. In practice, the total energy is often not calculated by evaluating the Kohn–Sham energy functional

, where $\|\cdot\|_2$ is used to denote the vector L2-norm. However, $\|R\|_2$ is a quantity which lacks transparent physical interpretation, making it difficult to assess just how converged a calculation is by consideration of $\|R\|_2$ alone. Hence, convergence is conventionally defined in terms of fluctuations in the total energy, a more tractable measure. When fluctuations in the total energy are sufficiently low to satisfy the accuracy requirements of the user's calculation, the iterations are terminated and the calculation is considered converged. In practice, the total energy is often not calculated by evaluating the Kohn–Sham energy functional $E_{\rm KS}[\rho_n^{\rm in}]$. Instead, the Harris-Foulkes functional $E_{\rm HF}[\rho_n^{\rm in}]$ is defined [38],

which can be shown to give the exact ground state energy correct to quadratic order in the density error about the fixed-point density $\rho_*$, i.e. it is correct to $\mathcal{O}(\delta\rho^2)$. Note that it is not the Harris-Foulkes functional that is minimised during the computation, as it possesses incorrect behaviour away from $\rho_*$ [39, 40]. However, evaluating the energy using this functional when near $\rho_*$ allows one to terminate the iterations at a desired accuracy earlier than if one evaluates the energy using the Kohn–Sham functional, which is correct to linear order in the density. Finally, recall that $R[\rho] = 0$ is the criterion for solving the Kohn–Sham equations, not for finding a minimum of the Kohn–Sham functional. Indeed, to verify that a local minimiser of the Kohn–Sham functional is obtained, one would need to ensure all eigenvalues of the Hessian were positive. Such a procedure is not practical in plane-wave codes, and hence the exit criterion for algorithms in section 4 is based solely on fluctuations in the total energy.
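As an illustration of an exit criterion based on fluctuations in the total energy, the following minimal Python sketch declares convergence once several consecutive energy changes fall below a tolerance. The function name `energy_converged`, the `window` parameter and the listed energies are assumptions made for this example only; they are not taken from any particular Kohn–Sham code.

```python
def energy_converged(energies, tol, window=3):
    """Return True when the last `window` energy changes are all below `tol`.

    `energies` is the list of total energies evaluated so far, one per
    self-consistent field iteration. Requiring several consecutive small
    changes (the `window`, an assumption of this sketch) guards against
    terminating on a single accidental small fluctuation.
    """
    if len(energies) < window + 1:
        return False
    recent = energies[-(window + 1):]
    return all(abs(b - a) < tol for a, b in zip(recent, recent[1:]))


# Hypothetical total energies (arbitrary units) from successive iterations.
history = [-10.0, -10.4, -10.49, -10.4999, -10.49999, -10.499995, -10.4999951]

for n in range(1, len(history) + 1):
    if energy_converged(history[:n], tol=1e-4):
        print("exit criterion met after", n, "energy evaluations")
        break
```

Note that such a test says nothing about whether the stationary point reached is a minimum; it only monitors how much the energy is still changing.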

3.4. Some unique properties of K

Identifying properties unique to K can help guide and narrow the choice of algorithms in section 4. Firstly, we note that it is computationally expensive to 'query the oracle', meaning evaluate K for a given input density to generate the pair $(\rho^{\rm in}_i, \rho^{\rm out}_i)$ on the ith iteration. This is because, when one has specified $\rho^{\rm in}_i$, finding the corresponding $\rho^{\rm out}_i$ requires one to construct and diagonalise the Kohn–Sham Hamiltonian. In plane-wave codes, this diagonalisation is done iteratively, and only the relevant N eigenfunctions and eigenvalues are computed. This procedure scales as approximately  , and is (in a sense) the bottleneck of the computation [15]. Hence, an algorithm that uses all past iterative data optimally so as to reduce evaluations of K is desirable. Here, the past iterative data constitutes the set of n iterative density pairs $\{(\rho^{\rm in}_i, \rho^{\rm out}_i) \ | \ i \in [0,n] \}$. In order to utilise this set to generate the subsequent density $\rho^{\rm in}_{n+1}$ from some algorithm, one is required to store the history of iterative densities in memory. Each density is represented by an array that scales with $N_b$ (footnote 5), meaning as the iteration number n grows large, so does the memory requirement of storing the entire history. Therefore, a limited memory algorithm is also desirable here, meaning no more than m of the most recent density pairs are stored. The final feature of computational Kohn–Sham theory that we will mention here is the accuracy of the initial guess, $\rho^{\rm in}_0$. A discussion on the generation of the initial guess is left to later in this section, but it suffices to note that the initial guess is typically 'close' to the converged density $\rho_*$. By 'close' we mean that a linear response approximation can be employed effectively, see section 4. As perhaps would be expected when this is the case, some of the most successful algorithms are able to utilise the past iterations cleverly with limited memory requirements, and employ some form of linearising approximation.
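The limited memory requirement can be sketched with a bounded queue that retains only the m most recent density pairs. The class name `DensityHistory` and its methods are hypothetical, and densities are represented as plain Python lists purely for illustration.

```python
from collections import deque


class DensityHistory:
    """Store at most `max_pairs` of the most recent (input, output) densities."""

    def __init__(self, max_pairs):
        # A deque with maxlen silently discards the oldest entry when full.
        self.pairs = deque(maxlen=max_pairs)

    def push(self, rho_in, rho_out):
        # Copy the arrays so that later in-place updates do not alter history.
        self.pairs.append((list(rho_in), list(rho_out)))

    def residuals(self):
        # Residual of each stored pair, taken here as output minus input.
        return [[o - i for i, o in zip(rin, rout)] for rin, rout in self.pairs]


history = DensityHistory(max_pairs=3)
for n in range(5):
    # Hypothetical two-component "densities" labelled by iteration number.
    history.push([float(n), 0.0], [float(n) + 0.5, 0.1])

print(len(history.pairs), "pairs kept; oldest input:", history.pairs[0][0])
```

With this design the memory cost is fixed at m pairs regardless of how many iterations are performed, at the price of discarding older information that a full-history method could in principle exploit.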

3.5. Fixed-point and damped iterations

As mentioned previously, convergence of the self-consistent field iterations depends on the functional properties of K, where we recall that each K is specified by the framework of Kohn–Sham theory plus an exchange-correlation approximation and external potential. Despite little being known about the precise functional properties of K [41–43], empirical wisdom allows us to make certain broad statements about it. For the sake of analysis, we now introduce the fixed-point iteration,

$$\rho^{\rm in}_{n+1} = K[\rho^{\rm in}_n] = \rho^{\rm out}_n.$$

This is perhaps the simplest iterative scheme one could envisage, yet it remains profoundly important from the point of view of functional analysis [44]. An example algorithm that makes use of the fixed-point iteration scheme is given in figure 2. This algorithm, on iteration n, constructs and diagonalises the Kohn–Sham Hamiltonian for a given $\rho^{\rm in}_n$, and computes the output density $\rho^{\rm out}_n$ from the N eigenvectors corresponding to the lowest N eigenvalues, otherwise known as the aufbau principle. The fixed-point iteration is then used as one sets $\rho^{\rm in}_{n+1} = \rho^{\rm out}_n$, and the procedure is repeated. For the algorithm in figure 2 to converge, K must be so-called locally k-contractive in the region of the initial guess. For the Kohn–Sham map to be k-contractive under the L2-norm, it must satisfy

$$\|K[\rho_1] - K[\rho_2]\|_2 \leqslant k\,\|\rho_1 - \rho_2\|_2 \tag{21}$$

for some real number 0 < k < 1. The intuition here is that, for any two points in the 'contractive region', the map K brings these points closer in the L2-norm. A contractive region is simply defined as a domain in density space where equation (21) is true for all densities within this domain. Successive application of K (the fixed-point iteration scheme) thus continues to bring these points closer toward a locally unique fixed-point, $\rho_*$. (See the Banach fixed-point theorem [45] or its generalisations [46] for k-contractive maps). Unfortunately, as section 6 shows, the Kohn–Sham map is not locally k-contractive for the vast majority of Kohn–Sham inputs. However, perhaps surprisingly, certain calculations do lead to a k-contractive Kohn–Sham map, such as spin-independent fcc aluminium at the PBE [47] level of theory, figure 3. In these cases, sophisticated acceleration algorithms tend to do little-to-nothing to assist convergence. The fixed-point iteration is also referred to as the Roothaan iteration in the physics and quantum chemistry communities [48]. It has been demonstrated that, in the context of Hartree–Fock theory, the Roothaan algorithm either converges linearly toward a solution or oscillates between two densities about the solution [49]. It is expected that this behaviour will carry over to Kohn–Sham theory [50].
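The behaviour of the fixed-point iteration on a contractive map can be illustrated with a toy example. In the sketch below, `toy_map` is a hypothetical two-component stand-in for the Kohn–Sham map K, chosen only because it is contractive with k = 0.5 everywhere; it involves no Hamiltonian and is not a Kohn–Sham calculation.

```python
import math


def l2_norm(vector):
    return math.sqrt(sum(x * x for x in vector))


def toy_map(rho):
    # Hypothetical stand-in for K. Its Jacobian has L2-norm at most 0.5,
    # so the map is k-contractive with k = 0.5 on the whole plane.
    return [0.5 * math.cos(rho[1]), 0.5 * math.sin(rho[0])]


def fixed_point_iteration(K, rho_in, tol=1e-10, max_iter=200):
    """Iterate rho_in <- K(rho_in) until the residual L2-norm is below tol."""
    norms = []
    for _ in range(max_iter):
        rho_out = K(rho_in)
        residual = [o - i for i, o in zip(rho_in, rho_out)]
        norms.append(l2_norm(residual))
        if norms[-1] < tol:
            break
        rho_in = rho_out  # the fixed-point (Roothaan) update
    return rho_in, norms


rho, norms = fixed_point_iteration(toy_map, [1.0, 1.0])
print("iterations:", len(norms), "final residual norm:", norms[-1])
```

For a k-contractive map the residual norm shrinks by at least the factor k on every iteration, which is the linear convergence referred to above.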

Figure 2. A flowchart detailing an example algorithm for achieving self-consistency using fixed-point (or Roothaan) iterations.

Figure 3. Iterative convergence in the residual L2-norm $\|R\|_2$ toward a fixed-point using fixed-point iterations. Simulation of a four atom fcc aluminium unit cell, with k-point spacing of

using the PBE functional.


We now define a new iterative scheme, the damped iteration (or one of its many other aliases, such as Krasnosel'skii–Mann or averaged iteration [51, 52]) such that

$$\rho^{\rm in}_{n+1} = \rho^{\rm in}_n + \alpha \left( K[\rho^{\rm in}_n] - \rho^{\rm in}_n \right) = \rho^{\rm in}_n + \alpha R[\rho^{\rm in}_n].$$

Hereafter, we refer to a scheme utilising the damped iteration as linear mixing. This scheme constitutes a series of steps in the residual L2-norm steepest descent direction R weighted by the parameter $\alpha$. It can be shown that provided K is non-expansive, there always exists some $\alpha$ such that the damped iteration converges [53–55]. Here, non-expansive refers to an instance whereby k = 1 in equation (21), i.e. densities do not get further apart upon successive application of K. This property is typically assumed, just as we also assume differentiability of $K$, in theorems relating to convergence features of algorithms discussed in section 4 (e.g. [56]). Indeed, the past few decades of computation using Kohn–Sham theory have led to the wisdom that one can always find some $\alpha$ such that one's calculation converges [50], albeit often impractically slowly. Fortunately, rather large values of the damping parameter $\alpha$ are sometimes able to significantly improve convergence, as demonstrated in figure 4 [57]. In this sense, the Kohn–Sham map is relatively well-behaved, although many problems of physical interest are not so well-behaved. In these cases, sophisticated algorithms are required in order to accelerate and stabilise convergence. However, as section 6 demonstrates, even when recourse to a sophisticated algorithm is required, most inputs excluding those belonging to certain problematic classes are able to converge effectively. This is a testament to the Kohn–Sham map often being dominated by its linear response within some relatively large region about the current iterate, a property which is examined further in section 3.7.
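The effect of damping can be seen on a toy linear map. In the sketch below, `toy_map`, `RHO_STAR` and `JACOBIAN_DIAG` are hypothetical: the map is built so that one component is multiplied by -1.5 about the fixed point, meaning the undamped iteration oscillates with growing amplitude. The damping parameter is called `alpha`, and the value 0.5 is an arbitrary choice for this example.

```python
import math

# Hypothetical fixed point and (diagonal) Jacobian of a toy linear map.
RHO_STAR = [1.0, 2.0]
JACOBIAN_DIAG = [-1.5, 0.2]


def toy_map(rho):
    # K(rho) = rho_star + J (rho - rho_star); not contractive, since |-1.5| > 1.
    return [s + j * (r - s) for r, s, j in zip(rho, RHO_STAR, JACOBIAN_DIAG)]


def linear_mixing(K, rho_in, alpha, n_iter):
    """Damped iteration: rho_in <- rho_in + alpha * (K(rho_in) - rho_in)."""
    norms = []
    for _ in range(n_iter):
        residual = [o - i for i, o in zip(rho_in, K(rho_in))]
        norms.append(math.sqrt(sum(r * r for r in residual)))
        rho_in = [i + alpha * r for i, r in zip(rho_in, residual)]
    return rho_in, norms


_, undamped = linear_mixing(toy_map, [0.0, 0.0], alpha=1.0, n_iter=40)
rho_damped, damped = linear_mixing(toy_map, [0.0, 0.0], alpha=0.5, n_iter=40)
print("undamped residual norm:", undamped[0], "->", undamped[-1])
print("damped residual norm:  ", damped[0], "->", damped[-1])
```

For this linear toy problem each error component is multiplied by 1 + alpha (j - 1) per iteration, where j is the corresponding Jacobian eigenvalue. With alpha = 0.5 the factors are -0.25 and 0.6, both inside the unit interval, so the damped iteration converges even though the undamped one does not.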

Figure 4. Iterative convergence in the residual L2-norm $\|R\|_2$ using damped iterations with  , and undamped iterations  . Simulation of a four atom fcc silicon unit cell, with k-point spacing of

using the PBE functional.

The behaviour of K discussed here could be interpreted as arising due to the lack of convexity of the underlying functional $E_{\rm KS}[\rho]$ used to generate it. Convexity is defined formally in section 4, but for now it suffices to note that it can be taken to mean $E_{\rm KS}[\rho]$ has a unique minimum, which is the unique fixed-point of K, and moreover this minimum is global [58]. In other words, solving the Euler–Lagrange equations is a necessary and sufficient condition to verify global optimality. While this is clearly an attractive quality for an energy functional, not least because only the global minimum has direct physical meaning in Kohn–Sham theory, it is not the case here (in general). The lack of convexity of $E_{\rm KS}[\rho]$ is particularly pronounced in spin-dependent Kohn–Sham theory, where it is not uncommon for many minima to exist, which are interpreted as representing different meta-stable spin states of the system [59]. In this case, one could, for example, employ some form of global optimisation in an attempt to explore the landscape of local minima with hopes of finding the global minimum.

In summary, while a large class of Kohn–Sham inputs are well-behaved and convergent for relatively high values of the damping parameter, many inputs, especially the increasingly complex ones involved in modern technologies, are not. The remainder of this section explores the precise characteristics of K that lead to ill-behaved convergence.

3.6. The Aufbau principle and fractional occupancy

The question remains of how one might go about choosing which N eigenfunctions of the Kohn–Sham Hamiltonian are used to iteratively construct the output densities toward convergence. For $N_b \gg N$, there is of course a large number of permutations of N eigenfunctions from which to choose. While it is perhaps taken for granted, [29] demonstrates that, in the case of Hartree–Fock theory, the lowest energy solution to the Hartree–Fock equations will necessarily be one which corresponds to the N eigenvectors with the lowest eigenvalues of the Hartree–Fock Hamiltonian. This is otherwise known as the aufbau principle, and appears in the algorithm presented in figure 2. These eigenfunctions $\{\phi_i\}$ are termed 'occupied' orbitals, with associated quasi-particle energies $\{\varepsilon_i\}$. However, just because the exact ground state solution satisfies the aufbau principle does not guarantee that doing so at each iteration is optimal [43, 49, 60]. Furthermore, iteratively satisfying the aufbau principle does not guarantee that a global, or even local, minimum of $E_{\rm KS}$ will be obtained as a solution to the Kohn–Sham equations [28]. Nevertheless, iteratively satisfying the aufbau principle has proven a successful heuristic for finding minima of $E_{\rm KS}$ via the Kohn–Sham equations. Here, the aufbau principle serves to bias our solution of the Kohn–Sham equations toward a minimum of $E_{\rm KS}$, rather than an inflection point or maximum.

Iterative procedures utilising the aufbau principle are well-defined and work best primarily when the input possesses a Kohn–Sham gap, i.e. when it is not a (Kohn–Sham) metal. The Kohn–Sham gap is defined in the limit of large system size as

$$E_{\rm g} = \varepsilon_{N+1} - \varepsilon_N,$$

otherwise known as the HOMO-LUMO gap: the difference in energy between the highest energy occupied and lowest energy unoccupied (molecular) orbitals. When this gap disappears, meaning there exists a non-zero density of states at the Fermi energy, convergence becomes increasingly difficult [25, 26]. Here, the Fermi energy $\mu$ is defined as the energy of the highest occupied orbital, which is the Lagrange multiplier corresponding to the constraint that the particle number N remain fixed in the minimisation of the Kohn–Sham energy functional. Such cases are prone to the phenomenon of occupancy sloshing: iterations become hindered by a continual iterative switching of binary occupation of orbitals whose energies are close to the Fermi energy. In some circumstances, an aufbau solution to the Kohn–Sham equations does not exist for binary occupation of orbitals [27, 61–63]. For example, [61] demonstrates that, in the case of the C$_2$ molecule, the Kohn–Sham solution possesses a 'hole' below the highest occupied orbital. In the context of self-consistent field iterations, this would mean any algorithm would continue to switch orbital occupancies at each iteration ad infinitum. This occurrence is a consequence of degeneracy in the highest occupied Kohn–Sham orbitals, which can occur even in the absence of symmetry and degeneracy in the exact many-body system. Here, and in other cases like this, the density should be constructed from a density matrix $\hat{D} = \sum_{i=1}^{q} w_i |\Psi_i\rangle\langle\Psi_i|$ via

$$\rho(\mathbf{r}) = \sum_{i=1}^{q} w_i \langle \Psi_i | \hat{\rho}(\mathbf{r}) | \Psi_i \rangle. \tag{24}$$

The wavefunctions $\Psi_i$ are Slater determinants of Kohn–Sham orbitals corresponding to each degenerate solution within some q-fold degenerate subspace. After rearrangement, we find that the density can now be written as

$$\rho(\mathbf{r}) = \sum_i f_i |\phi_i(\mathbf{r})|^2, \tag{25}$$

where the fractional occupancies $f_i$ are determined as some combination of the weights $w_i$ in equation (24). This form of the density allows one to see more transparently that we have now introduced fractional occupancy of the orbitals whose energy is degenerate at the Fermi energy. In the example of C$_2$ in [61], the degenerate subspace is first identified, and then the occupancies $f_i$ are varied smoothly until the energies of the identified orbitals are equal. This procedure, termed evaporation of the hole, yielded accurate energy predictions when compared to configuration interaction calculations. In this case, the Kohn–Sham degeneracy is interpreted as being due to the presence of strong electron correlation. These degeneracies lead to densities that are so-called ensemble non-interacting v-representable. That is, the exact Kohn–Sham density can no longer be constructed from a pure state via the sum of the square of orbitals as in equation (3), but instead must be constructed from some ensemble of states via equations (24) and (25). The extension of Kohn–Sham theory to include fractional occupancy is described well in [64, 65].

This so-called ensemble extension to Kohn–Sham theory is also utilised when constructing a non-interacting theory of Mermin's finite temperature formulation of DFT [66]. It is this version of Kohn–Sham theory that is usually used in modern Kohn–Sham codes that include fractional occupancy. As we are interested primarily in how this extension mitigates convergence issues, the reader interested in an in-depth discussion of finite temperature Kohn–Sham theory is referred to [25, 64], and references therein. Here, it suffices to observe that we now seek to minimise the following free energy functional

$$F[\{\phi_i\}, \{f_i\}] = E_{\rm KS}[\{\phi_i\}, \{f_i\}] - T S[\{f_i\}],$$

where the entropy functional and density are defined respectively as

$$S[\{f_i\}] = -k_{\rm B} \sum_i \left[ f_i \ln f_i + (1 - f_i) \ln (1 - f_i) \right], \qquad \rho(\mathbf{r}) = \sum_i f_i |\phi_i(\mathbf{r})|^2.$$

The real-valued fractional occupancies $f_i \in [0,1]$ now constitute additional variational parameters alongside the orbitals. Minimisation of the finite temperature Kohn–Sham functional can be tackled directly as in [26], which is discussed in section 4 and tested in section 6. Alternatively, the associated fixed-point problem can be formulated, whereby the occupancies are given a fixed functional form dependent on both T and the Kohn–Sham Hamiltonian eigenenergies $\{\varepsilon_i\}$. This is otherwise known as the smearing scheme, an example of which is the Fermi–Dirac function,

$$f_i = \frac{1}{1 + \exp\left[ (\varepsilon_i - \mu) / k_{\rm B} T \right]}.$$

The electronic temperature T is now an input parameter which determines the degree of broadening of occupancies about the Fermi energy, figure 5. At each iteration, the occupancies are updated with new values of $\{\varepsilon_i\}$, and this process is continued toward convergence. This procedure demonstrably mitigates occupancy sloshing for Kohn–Sham metals with large density of states at the Fermi energy [67, 68] (footnote 6). Furthermore, introducing finite temperature also assists with sampling of the Brillouin zone in periodic Kohn–Sham codes. That is, interpolation techniques for evaluating integrals across the Brillouin zone are inaccurate when many band crossings (discontinuous changes of occupancy) exist, i.e. in Kohn–Sham metals. This necessitates a fine sampling of k-space in order to accurately evaluate the integrals. As discussed, fractional occupancies negate these discontinuities, allowing for a coarser sampling of the Brillouin zone, meaning interpolation techniques become increasingly accurate; see [67, 69] for more details. In any case, finite electronic temperatures are a valuable numerical tool to assist convergence of the self-consistent field iterations in the event of inputs with large density of states at the Fermi energy. Hence, the test suite in section 5 includes many such systems, and in particular a variety of electronic temperatures are considered.
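The occupancy update in a smearing scheme can be sketched as follows, assuming Fermi–Dirac smearing and a chemical potential fixed by particle-number conservation. The bisection search, the function names and the eigenvalues used are all assumptions of this illustration (one electron per orbital, with the product of Boltzmann's constant and temperature passed as a single number `kT`); production codes may determine the Fermi energy differently.

```python
import math


def fermi_dirac(eps, mu, kT):
    x = (eps - mu) / kT
    # Guard against floating-point overflow far from the Fermi energy.
    if x > 500.0:
        return 0.0
    if x < -500.0:
        return 1.0
    return 1.0 / (1.0 + math.exp(x))


def find_mu(eigenvalues, n_electrons, kT, tol=1e-12):
    """Bisect for the chemical potential at which occupancies sum to n_electrons."""
    lo = min(eigenvalues) - 50.0 * kT
    hi = max(eigenvalues) + 50.0 * kT
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        total = sum(fermi_dirac(e, mid, kT) for e in eigenvalues)
        if total < n_electrons:
            lo = mid  # too few electrons: raise the chemical potential
        else:
            hi = mid
    return 0.5 * (lo + hi)


def entropy_over_kB(occupancies):
    """Entropy of the occupancies in units of Boltzmann's constant."""
    s = 0.0
    for f in occupancies:
        if 0.0 < f < 1.0:  # f ln f vanishes in the limits f -> 0 and f -> 1
            s -= f * math.log(f) + (1.0 - f) * math.log(1.0 - f)
    return s


# Hypothetical eigenvalues with three nearly degenerate levels near zero.
eigenvalues = [-1.0, -0.5, -0.01, 0.0, 0.01, 0.6]
kT = 0.025
mu = find_mu(eigenvalues, n_electrons=3, kT=kT)
occupancies = [fermi_dirac(e, mu, kT) for e in eigenvalues]
print("mu =", mu)
print("occupancies =", [round(f, 4) for f in occupancies])
print("S / k_B =", entropy_over_kB(occupancies))
```

Because the occupancies vary smoothly with the eigenvalues, a small shift in the ordering of the nearly degenerate levels changes the occupancies only slightly, rather than swapping a whole electron between orbitals as binary occupation would.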

Figure 5. Functional dependence of the occupancy f of a given eigenenergy $\varepsilon_i$ for various temperatures using the Fermi–Dirac smearing scheme.

3.7. The initial guess

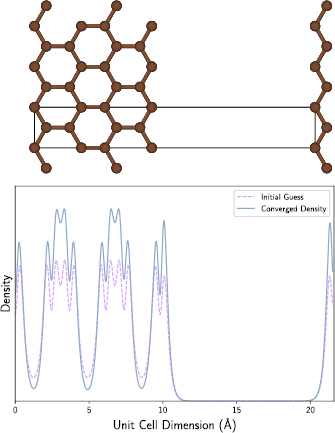

As one might expect, a more accurate initial guess of the variable to be optimised leads monotonically to more efficient and stable convergence rates [70]. In the case of self-consistent field methodology, and for plane-wave and similar codes, the initial guess charge density is often computed as a sum of pseudoatomic densities [15, 31]. That is, once the exchange-correlation functional and pseudopotential for the atomic species in the computation have been specified, the charge density for these atoms in vacuum is calculated. Then, each individual density is overlaid at positions centered on the atomic cores in order to construct the initial guess density, figure 6. This figure demonstrates visually the accuracy of this prescription for generating initial guess charge densities. Note that different considerations are required in order to generate an initial guess for the density matrix or orbitals. The accuracy of the initial guess is, in part, responsible for the relative success of methods that employ linearising approximations, such as quasi-Newton methods, see section 4. Notable cases in which the initial guess density is relatively poor include polar materials such as magnesium oxide. The initial guess is charge neutral by construction, meaning the charge is required to shift onto the electro-negative species for convergence. Furthermore, inputs in which the atomic species are subject to large inter-atomic forces can also lead to inaccurate initial guesses. This is partly due to the fact that the initial guess becomes exact in the limit of large atomic separation, and since large inter-atomic forces imply low inter-atomic separation, this can result in potentially inaccurate initial guess densities. Such inputs are generated routinely during structure searching applications [71]. The test suite includes various examples of these 'far-from-equilibrium' systems.
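The superposition of atomic densities can be sketched in one dimension. In the example below, Gaussians are used as hypothetical stand-ins for pseudoatomic densities, which in practice would come from an atomic calculation with the chosen exchange-correlation functional and pseudopotential. The atom positions, valence charges and widths are invented for illustration, and periodic boundary conditions are ignored.

```python
import math


def atomic_density(x, centre, n_valence, width):
    # Gaussian stand-in for a pseudoatomic density, normalised so that it
    # integrates to the number of valence electrons of the atom.
    norm = n_valence / (width * math.sqrt(2.0 * math.pi))
    return norm * math.exp(-0.5 * ((x - centre) / width) ** 2)


def initial_guess(grid, atoms):
    """Overlay the isolated-atom densities centred on each atomic position."""
    return [sum(atomic_density(x, c, z, w) for c, z, w in atoms) for x in grid]


# Hypothetical diatomic: (position, valence electrons, width) for each atom.
atoms = [(-1.0, 4.0, 0.5), (1.0, 6.0, 0.5)]
spacing = 0.01
grid = [-10.0 + spacing * i for i in range(2001)]

rho_0 = initial_guess(grid, atoms)
n_electrons = spacing * sum(rho_0)
print("integrated charge of the initial guess:", n_electrons)
```

The guess integrates to the total valence charge and places it symmetrically about each nucleus, which is why it is charge neutral atom by atom and cannot describe charge transfer between species before the iterations begin.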

Figure 6. The difference between the initial guess density, constructed from a sum of isolated pseudoatomic densities, and the converged density, for a graphene nanoribbon along one dimension (above). The density is plotted along a one-dimensional slice across the axis shown in the above image.

Spin polarised Kohn–Sham theory presents more serious issues: there is no widely successful method for generating initial guess spin densities. In the spin polarised or 'unrestricted' formalism, the following spin densities are introduced (see [2]),

$$\rho^{\uparrow}(\mathbf{r}) = \sum_{i=1}^{N^{\uparrow}} |\phi_i^{\uparrow}(\mathbf{r})|^2, \qquad \rho^{\downarrow}(\mathbf{r}) = \sum_{i=1}^{N^{\downarrow}} |\phi_i^{\downarrow}(\mathbf{r})|^2,$$

generated from spin up and down particles occupying separate spin orbitals $\{\phi_i^{\uparrow}, \phi_i^{\downarrow}\}$. This leads now to two coupled non-linear eigenvalue problems, one for each spin. A method for generating the initial guess spin densities is thus required, rather than just the initial guess charge density. As one, in general, has no knowledge of the spin state a priori, this initial guess can be relatively far away from the ground state. In practice, one conventionally deals with charge and spin densities, rather than spin up and spin down densities,

$$\rho(\mathbf{r}) = \rho^{\uparrow}(\mathbf{r}) + \rho^{\downarrow}(\mathbf{r}), \qquad s(\mathbf{r}) = \rho^{\uparrow}(\mathbf{r}) - \rho^{\downarrow}(\mathbf{r}).$$

The charge density can be initialised similarly to the spin-independent case, with a sum of independent pseudoatomic charge densities. The spin density can be initialised to zero, or be scaled by specifying some magnetic character on the atoms, e.g. ferromagnetic. Such a prescription typically leads to initial guess densities that are further away in the residual L2-norm than spin-independent initial guess charge densities. This observation at least partially accounts for the reason that spin polarised systems tend to be much harder to converge than spin unpolarised systems. For this reason, and others cited in the following section, many spin polarised inputs are included in the test suite. Recently, various schemes have been proposed that aim to better predict self-consistent densities to use as the initial guess [70, 72]. In particular, [70] considers a data-derived approach to predicting and assessing uncertainty in a guess density away from the ground state.
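The relation between the two representations, and a simple way of seeding a spin density, can be sketched as follows. The function names and the single scaling parameter `polarisation` are assumptions of this example; it stands in for 'specifying some magnetic character' and is not a prescription taken from any particular code.

```python
def to_charge_and_spin(rho_up, rho_down):
    """Charge density is the sum, spin density the difference, of the spin channels."""
    rho = [u + d for u, d in zip(rho_up, rho_down)]
    spin = [u - d for u, d in zip(rho_up, rho_down)]
    return rho, spin


def to_up_and_down(rho, spin):
    """Inverse transformation back to spin up and spin down densities."""
    rho_up = [0.5 * (r + s) for r, s in zip(rho, spin)]
    rho_down = [0.5 * (r - s) for r, s in zip(rho, spin)]
    return rho_up, rho_down


def initial_spin_guess(rho_guess, polarisation):
    # Hypothetical seeding: scale the guess charge density by a number in
    # [-1, 1]; zero gives an unpolarised start, +1 a fully polarised one.
    if not -1.0 <= polarisation <= 1.0:
        raise ValueError("polarisation must lie in [-1, 1]")
    return [polarisation * r for r in rho_guess]


rho_guess = [0.1, 0.8, 1.5, 0.8, 0.1]  # hypothetical charge density on a grid
spin_guess = initial_spin_guess(rho_guess, polarisation=0.5)
rho_up, rho_down = to_up_and_down(rho_guess, spin_guess)
print("spin up:  ", rho_up)
print("spin down:", rho_down)
```

Restricting the scaling parameter to the interval [-1, 1] keeps both spin channels non-negative wherever the charge density is non-negative.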

3.8. Ill-conditioning and charge sloshing

The condition of a problem, loosely speaking, can be taken as characteristic of the difficulty a black-box algorithm will have in solving the problem. Due to the complexity of the Kohn–Sham map, evaluating its condition number directly is impossible in practice. However, within the context of linear response theory, it is possible to explore certain causes of ill-conditioning generic to either all, or certain broad classes, of inputs. Hence, we begin by linearising the map K about a fixed-point (footnote 7),

This is the definition of linearisation in the present context, i.e. a small change in the input density yields a change in the output density proportional to the initial change, where the constant of proportionality is shown by the components of the Jacobian of the map K,

Within the language of linear response theory, the Jacobian can be identified with the non-interacting charge dielectric via

$$\epsilon(x, x') = \delta(x - x') - \frac{\delta K[\rho](x)}{\delta\rho(x')}, \tag{37}$$

which is, up to the sign convention chosen for the residual, the linear response function of the residual map, rather than the Kohn–Sham map. The dielectric can be expanded as

$$\epsilon(x, x') = \delta(x - x') - \int \mathrm{d}x'' \, \frac{\delta K[\rho](x)}{\delta v_{\mathrm{in}}(x'')} \frac{\delta v_{\mathrm{in}}(x'')}{\delta\rho(x')}, \tag{38}$$

where $v_{\mathrm{in}} = v_{\mathrm{h}} + v_{\mathrm{xc}}$ collects the Hartree and exchange-correlation potentials, which are the only two potentials that depend on the density. Hence, the dielectric is given in terms of the non-interacting susceptibility $\chi_0$ as

$$\epsilon(x, x') = \delta(x - x') - \int \mathrm{d}x'' \, \chi_0(x, x'') \left[ f_{\mathrm{h}}(x'', x') + f_{\mathrm{xc}}(x'', x') \right], \tag{39}$$

where $f_{\mathrm{h}}$ and $f_{\mathrm{xc}}$ are the kernels of the Hartree (Coulomb) and exchange-correlation integrals. Therefore, the linear response of a system to a density perturbation is given by the interplay between the exchange-correlation and Coulomb kernels, and the susceptibility

$$\chi_0(x, x') = \frac{\delta\rho_{\mathrm{out}}(x)}{\delta v_{\mathrm{in}}(x')}, \tag{40}$$

which is highly system dependent [57, 73]. As the non-interacting susceptibility plays a central role in the description of many physical phenomena, such as absorption spectra, it is a relatively well-studied object [74, 75]. The remainder of this section classifies certain generic behaviours of $\chi_0$ so that causes of divergence in the self-consistency iterations can be studied. First, in order to see why the linear response function is important for self-consistency iterations, note that one may consider each iteration as a perturbation in the density about the current iterate. Knowledge of the exact response function $\chi_0$, and subsequently $\epsilon$, would thus allow one to take a controlled step toward the fixed-point density, depending on how well-behaved⁸ the map is about the current iterate. An iterative scheme utilising the exact response function is given by

$$\rho_{n+1} = \rho_n + \epsilon^{-1} \left( K[\rho_n] - \rho_n \right), \tag{41}$$

which one may recognise as Newton's method. While Newton's method is not globally convergent, it has many attractive features, see section 4.1. However, one is rarely privileged with knowledge of the exact dielectric as it is vastly expensive to compute and store [78–81]. In practice, one is left to estimate, or iteratively build, this response function. Cases in which the input is very sensitive to density perturbations, characterised by large eigenvalues of the discretised $\epsilon$, tend to amplify errors in iterates, and thus potentially move one away from the fixed-point.
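To make the role of the exact response function concrete, the following is a minimal sketch of a Newton step of the form $\rho_{n+1} = \rho_n + \epsilon^{-1}(K[\rho_n] - \rho_n)$, with $\epsilon = I - J$ and $J$ the Jacobian of the map. The map used here, $K(\rho) = b - m \tanh(\rho)$ acting component-wise, is a hypothetical three-component toy chosen only so that the Jacobian is known in closed form; it is not a Kohn–Sham map, and all names are illustrative.

```python
import numpy as np

# Hypothetical toy map K(rho) = b - m * tanh(rho), component-wise (not a Kohn-Sham map).
m = np.array([0.5, 3.0, 10.0])
b = np.ones(3)

def K(rho):
    return b - m * np.tanh(rho)

def jacobian(rho):
    """Exact Jacobian dK/drho of the toy map (diagonal here)."""
    return np.diag(-m / np.cosh(rho) ** 2)

def residual(rho):
    return K(rho) - rho

def newton(rho0, tol=1e-12, max_iter=50):
    """Newton iteration using the exact 'dielectric' eps = I - J."""
    rho = np.array(rho0, dtype=float)
    for n in range(max_iter):
        r = residual(rho)
        if np.linalg.norm(r) < tol:
            return rho, n
        eps = np.eye(rho.size) - jacobian(rho)
        rho = rho + np.linalg.solve(eps, r)  # rho + eps^{-1} (K[rho] - rho)
    return rho, max_iter

def fixed_point(rho0, n_iter=50):
    """Plain iteration rho <- K(rho), with no response information."""
    rho = np.array(rho0, dtype=float)
    for _ in range(n_iter):
        rho = K(rho)
    return rho

rho_newton, n_newton = newton(np.zeros(3))
rho_plain = fixed_point(np.zeros(3))
print("Newton iterations:", n_newton)
print("Newton residual norm:", np.linalg.norm(residual(rho_newton)))
print("Plain iteration residual norm:", np.linalg.norm(residual(rho_plain)))
```

In this toy the plain iteration fails to converge because two components of the Jacobian have magnitude above unity at the fixed point, whereas the Newton step rescales every component by the exact inverse response. In a real calculation the matrix `eps` is not available at acceptable cost, which is the motivation for the approximate schemes discussed in the text.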

Consider now completely neglecting higher order terms in the Taylor expansion of the Kohn–Sham map, and let us examine the map as if it were linear. This allows us to borrow results from numerical analysis of linear systems, and apply these results as well-motivated heuristics to convergence in the non-linear case. In particular, assuming linearity, absolute convergence can be identified as

$$\| \delta\rho_{n+1} \| = \| (I - \epsilon) \, \delta\rho_n \| < \| \delta\rho_n \|, \tag{42}$$

using equation (35), where $\delta\rho_n = \rho_n - \rho_*$ is the error in the nth iterate, meaning $|1 - \lambda_i| < 1$ for all i, where $\lambda_i$ are the eigenvalues of the dielectric matrix, which have been shown to be real and positive under appropriate conditions [57]. Hence, simply multiplying the dielectric by a scalar $\alpha$ such that $|1 - \alpha\lambda_{\max}|$ is below unity can ensure convergence. This comes at the cost of reducing the efficiency of convergence for components of the density corresponding to low eigenvalues of the dielectric matrix. Defining the condition number of the dielectric as

$$\kappa = \frac{\lambda_{\max}}{\lambda_{\min}}, \tag{43}$$

it can be seen that the efficiency of the linear mixing procedure is limited by how close this ratio is to unity. One ansatz for the scalar premultiplying the dielectric is

$$\alpha = \frac{2}{\lambda_{\max} + \lambda_{\min}}, \tag{44}$$

which ensures, insofar as the linear approximation is valid, that components of the density corresponding to the maximal and minimal eigenvalues of $\epsilon$ converge at the same rate [57, 73, 82]. However, this form of $\alpha$ ignores the distribution (e.g. clustering) of eigenvalues [83], and is not commonly used in conjunction with more sophisticated schemes such as those in section 4. An additional strategy to improve convergence would be to construct a matrix, the preconditioner, such that when the preconditioner is applied to $\epsilon$, the eigenspectrum of the product is compressed toward unity. This is done in practice, see [15] for example, and is the core idea behind the Kerker preconditioner [84, 85], as discussed shortly.
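The dependence of linear mixing on the condition number can be illustrated with a small numerical sketch. It assumes the purely linear model described above, in which each eigen-component of the error is multiplied by $(1 - \alpha\lambda_i)$ per iteration. The eigenvalue spectrum below is an arbitrary assumed example, and the function names are hypothetical.

```python
import numpy as np

def convergence_factor(eigs, alpha):
    """Worst-case per-iteration error reduction, max_i |1 - alpha * lambda_i|."""
    return np.max(np.abs(1.0 - alpha * np.asarray(eigs, dtype=float)))

def optimal_alpha(eigs):
    """Scalar that equalises the rates of the extreme eigen-components."""
    eigs = np.asarray(eigs, dtype=float)
    return 2.0 / (eigs.max() + eigs.min())

def linear_mixing_iterations(eigs, alpha, tol=1e-8, max_iter=100_000):
    """Iterations needed to reduce a unit error in every eigen-direction below tol.

    Returns None if the error grows without bound or max_iter is reached.
    """
    eigs = np.asarray(eigs, dtype=float)
    err = np.ones_like(eigs)
    for n in range(1, max_iter + 1):
        err = (1.0 - alpha * eigs) * err
        worst = np.max(np.abs(err))
        if worst < tol:
            return n
        if worst > 1e12:  # diverging: stop before overflow
            return None
    return None

# Assumed model spectrum: minimum eigenvalue 1, condition number 100.
eigs = np.linspace(1.0, 100.0, 50)
kappa = eigs.max() / eigs.min()

alpha_opt = optimal_alpha(eigs)
alpha_safe = 1.0 / eigs.max()

n_opt = linear_mixing_iterations(eigs, alpha_opt)
n_safe = linear_mixing_iterations(eigs, alpha_safe)
n_undamped = linear_mixing_iterations(eigs, 1.0)

print("condition number:", kappa)
print("optimal factor:", convergence_factor(eigs, alpha_opt))
print("iterations (optimal, conservative, undamped):", n_opt, n_safe, n_undamped)
```

With the ansatz above, the worst-case factor equals $(\kappa - 1)/(\kappa + 1)$, so the iteration count grows roughly linearly with the condition number. This is why compressing the spectrum with a preconditioner pays off more than tuning the scalar alone.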

It is clear from equation (43) that the convergence depends critically on the spectrum of the dielectric matrix. The minimum eigenvalue is unity, and the large eigenvalues are dominated by the contributions from the Coulomb kernel, rather than the exchange-correlation kernel [73, 78, 86]. To see why this is, it is first asserted that the discretised Coulomb kernel possesses large (divergent) eigenvalues due to its $1/|x - x'|$ dependence, which will be demonstrated shortly. These large eigenvalues act as a generic amplification factor when multiplied by the eigenvalues of $\chi_0$ in equation (39), thus resulting in large (divergent) eigenvalues in the dielectric. Conversely, the exchange-correlation kernel does not generically possess such large eigenvalues. In semi-local Kohn–Sham theory, the exchange-correlation kernel is a polynomial of the density and potentially its higher order derivatives. Crucially, it has a simple, local (explicit) dependence on position given by $\delta(x - x')$, that cannot, by definition, introduce x-dependent ill-conditioning. As such, no generic amplification of the eigenvalues of $\chi_0$ occurs, and hence the exchange-correlation kernel can be ignored relative to the Coulomb kernel from the perspective of ill-conditioning. In other words, the following analysis utilises the random phase approximation (RPA) by setting $f_{\mathrm{xc}} = 0$ in equation (39). As [78] notes, even in situations whereby the density vanishes in some region, meaning that negative powers of the density are divergent, the linear response function tempers this divergence, and the exchange-correlation contribution remains well-conditioned.

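The principal categorisation one can make when analysing generic behaviour of the response function is the distinction between Kohn–Sham metals and insulators. Consider a homogeneous and isotropic system, i.e. the homogeneous electron gas, such that $\chi_0(x, x') = \chi_0(|x - x'|)$, which satisfies

$$\delta\rho_{\mathrm{out}}(x) = \int \mathrm{d}x' \, \chi_0(|x - x'|) \, \delta v_{\mathrm{in}}(x').$$

This is a convolution in real space, and hence a product in reciprocal space

$$\delta\rho_{\mathrm{out}}(G) = \chi_0(|G|) \, \delta v_{\mathrm{in}}(G),$$

where we label the Fourier components G by convention. This susceptibility is local and homogeneous in reciprocal space, and relates perturbations in the input density to a response by the output density (within the RPA) via

$$\delta\rho_{\mathrm{out}}(G) = \frac{4\pi \, \chi_0(|G|)}{|G|^2} \, \delta\rho_{\mathrm{in}}(G),$$

where $4\pi/|G|^2$ is the Fourier transform of the Coulomb kernel in Hartree atomic units.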

The susceptibility of the homogeneous electron gas, which constitutes a simple metal in the present context, is derived from Thomas–Fermi theory in terms of the Thomas–Fermi wavevector $k_{\mathrm{TF}}$ as $\chi_0 = -k_{\mathrm{TF}}^2/4\pi$ (in Hartree atomic units), which is constant⁹ [87]. It can therefore be seen that if there is any error in a trial input density, generated by an iterative algorithm, away from the optimal update, then this error is amplified by a factor of $|G|^{-2}$ for $G \neq 0$, where $G = 0$ does not contribute. This sensitivity to error in iterates is identified as the source of charge sloshing, and is a somewhat generic feature of Kohn–Sham metals. Whilst the above derivation utilises Thomas–Fermi theory of the homogeneous electron gas to demonstrate constant susceptibility, it can be shown that all Kohn–Sham metals display this behaviour in the small $|G|$ limit [75, 88]. A demonstration of charge sloshing is illustrated in figure 7, whereby a linear mixing algorithm purposefully takes slightly too large steps in the density. This leads to vast over-corrections in each iteration, giving the appearance that charge is 'sloshing' about the unit cell. This is not the only source of large eigenvalues of the dielectric in Kohn–Sham metals, as the susceptibility possesses inherently divergent eigenvalues independent from the amplification by the Coulomb kernel. To see this, consider the Adler–Wiser equation which, for Kohn–Sham orbitals $\phi_i$ with eigenenergies $\varepsilon_i$ and occupancies $f_i$, is defined as

$$\chi_0(x, x') = \sum_{i,j} (f_i - f_j) \, \frac{\phi_i^*(x) \phi_j(x) \phi_j^*(x') \phi_i(x')}{\varepsilon_i - \varepsilon_j},$$

which is an expression from perturbation theory for the exact Kohn–Sham susceptibility [74, 75]. As [82] originally noted, the denominator $\varepsilon_i - \varepsilon_j$ approaches zero when the input is gapless, i.e. it has a large density of states about the Fermi energy. If left untreated, this observation, in conjunction with the amplifying factor from the low $|G|$ components of the Coulomb kernel, leads to significant ill-conditioning. The largest condition numbers arise when $|G|$ is extremely small; since G is a reciprocal lattice vector, this will occur for unit cells that are large in any (or all) of the three real-space dimensions. Whilst the dependence of the eigenvalues of the dielectric on unit cell size is in practice complicated [73], it suffices to note that increased unit cell size is a significant source of ill-conditioning. As compute power continues to grow, larger and larger systems are being tackled using Kohn–Sham theory, and the increase in required number of self-consistency iterations as a result of this instability poses serious issues for Kohn–Sham calculations. Inefficiencies of this kind are best dealt with using preconditioners, as section 4 demonstrates. On the other hand, insulators possess no such divergences in the eigenvalues of the dielectric matrix. It can be shown that in the low