Abstract

Defining and measuring progress in adaptation are important questions for climate adaptation science, policy, and practice. Here, we assess the progress of urban adaptation planning in 327 European cities between 2005 and 2020 using three ‘ADAptation plan Quality Assessment’ indices, called ADAQA-1/2/3, that combine six plan quality principles. Half of the cities have an adaptation plan, and plan quality increased significantly over time. However, plan quality is still low in many cities. Participation and monitoring and evaluation are particularly weak aspects in urban adaptation policy, together with plan ‘consistency’. Consistency connects impacts and vulnerabilities with adaptation goals, planned measures, actions, monitoring and evaluation, and participation processes. Consistency is a key factor in the overall quality of plans. To help evaluate the quality of plans and policies and promote learning, we suggest incorporating our ADAptation plan Quality Assessment indices into the portfolio of adaptation progress assessments and tracking methodologies.

Introduction

Since the Paris Agreement (PA) in 20151, there has been an increasing focus on assessing the progress of climate change adaptation across multiple sectors and regions2,3,4, including subnational jurisdictions such as local and regional authorities5,6,7,8,9,10,11,12,13,14,15,16. The Paris Agreement set an agenda for the Global Stocktake of adaptation, with a view to ‘review the overall progress made in achieving the global goal on adaptation’ (PA, art. 14d). Hence, an important question is what ‘progress’ means and how it could be assessed, at the international, national, and local levels. With the first Global Stocktake due in 2023, researchers have sought to address the issue, whilst acknowledging ‘the challenge of lacking consensus on how adaptation at this level can be tracked’17.

Hitherto, there is a wealth of information on climate responses at sub-national levels18. Cities and urban areas are increasingly recognized as important actors in climate responses1,19, with the potential to influence neighbouring as well as upper levels of government. In urban adaptation studies, most assessments focus on tracking and analyzing outputs, such as approved adaptation plans, as these currently represent the majority of adaptation activities on the ground20,21 and are often more easily comparable and trackable22 than, e.g., impacts and outcomes. Analyzing plans cannot tell the whole story in terms of actual progress in collective reduction (or redistribution) of climate risks23,24. However, it can provide information about the quality and relevance of adaptation processes and actions (also referred to as ‘measures’ in policy and planning literature), and help to assess the likelihood that we are advancing adaptation goals by reducing risks and increasing resilience in an equitable manner25,26. Scholars argue that ‘the best method to ensuring robust adaptation is to ensure rigorous adaptation planning processes’27. Indeed, higher quality plans have been found to decrease the cost of disasters more than lower quality plans28, and to deliver on implementation25,29, while having fewer trade-offs and more synergies with other societal goals25. Plan quality is here defined as the strengths of plans assumed to lead to effective implementation and reduced trade-offs with other societal goals, i.e. avoiding ‘maladaptation’30,31, measured by the degree of alignment with criteria of plan quality agreed on in the scientific literature (see further down).

Empirical evaluations of adaptation planning have so far helped to identify factors (or the lack thereof) that contribute to higher-quality plans. For example, a large majority of planned coastal adaptation measures worldwide are not driven by information on current or future climate impacts or risks;14 about half of coastal cities worldwide do not include guidance on implementation in their plans;14 and cities in the U.S.A.26 (as well as coastal cities worldwide22) often do not include any prioritization of adaptation initiatives. Plans often neglect equity and justice issues, i.e. they do not target those most in need and normally include limited information about who is benefitting from planned adaptation actions22,26. Empirical insights have helped to improve our knowledge of the kinds of adaptation actions necessary32, as well as the qualities of strong adaptation planning33 and the effectiveness of specific measures34,35. These contributions are crucial when assessing adaptation quality to enable adaptation learning processes.

Indeed, whether local governments are learning and improving in their abilities to plan for adaptation over time is an important and under-explored question. We assume that processes of collective learning36 through parallel and sequential peer-to-peer transfer of knowledge and capacity building and transnational networks and other types of science-policy collaborations enhance urban adaptation planning. We hypothesize that collective learning for adaptation is taking place and that it is critical in shaping positive adaptation policy outputs, i.e. that the quality of adaptation plans increases over time.

To test this hypothesis, we create an index of adaptation plan quality—the ADAptation plan Quality Assessment index ADAQA. ADAQA is based on six well-established principles of plan quality (1. fact base; 2. goals; 3. measures; 4. implementation; 5. monitoring & evaluation of measures; and 6. societal participation; see Methods Tab. 1)7,26,33,37,38,39,40. We develop three sub-indices ADAQA-1/2/3—varying in complexity and focus—scrutinizing the sensitivity of the index construction method (Fig. 1 and Supplementary Fig. 1). ADAQA-1 stands for depth, particularly in relation to fact base and measures (the principles with most response options), allocating 1 point per response option. ADAQA-2 stands for breadth, mainly with regard to adaptation measures, allocating one point per topic question in the assessment questionnaire. ADAQA-3 combines the most important quality criteria based on our expert judgement, standing for a combination of consistency and justice between risks, measures, monitoring and evaluation, and participation.

ADAQA-1 is calculated by allocating one point to each response option—representing depth and detailedness of plans, particularly concerning fact base and measures (the principles with most response options). ADAQA-2 is calculated by allocating one point to each addressed topic/ question, representing breadth and diversity with a focus on different sectoral measures (principle III. has the most questions). ADAQA-3 is an elaborate index reflecting the equal importance of adaptation principles (1/6 for each principle; not reflected in the figure) and topics therein, while at the same time stressing the need for consistency between impacts/ risks/ vulnerabilities, adaptation measures, monitoring and evaluation, and participation (a more detailed version of the index construction rationale is shown as Supplementary Fig. 1).
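As an illustration of the counting rules described above, the following is a minimal sketch and not the authors' implementation. The data layout (principle, topic question, list of 0/1 response-option flags) and all names are hypothetical. The third function shows only the equal weighting of principles used in ADAQA-3; it omits the consistency indicators and assumes that each principle is scored as the share of its topic questions that are addressed.

```python
from typing import Dict, List

# Hypothetical coding of one plan: principle -> topic question -> list of
# 0/1 flags, one flag per response option. The actual questionnaire is
# described in the Methods and Supplementary Information, not here.
Plan = Dict[str, Dict[str, List[int]]]


def adaqa1(plan: Plan) -> int:
    """Depth: one point per ticked response option."""
    return sum(sum(options) for topics in plan.values() for options in topics.values())


def adaqa2(plan: Plan) -> int:
    """Breadth: one point per topic question with at least one ticked option."""
    return sum(1 for topics in plan.values() for options in topics.values() if any(options))


def equal_weight_score(plan: Plan) -> float:
    """Equal weight per principle, on a 0-100 scale.

    Assumption: a principle's score is the share of its topic questions
    that are addressed. Consistency indicators are not modelled.
    """
    shares = [
        sum(1 for options in topics.values() if any(options)) / len(topics)
        for topics in plan.values()
    ]
    return 100 * sum(shares) / len(shares)


# Made-up example plan covering the six principles.
example_plan: Plan = {
    "fact_base": {"impacts": [1, 1, 0], "vulnerable_groups": [1, 0]},
    "goals": {"risk_goals": [1, 0]},
    "measures": {"water": [1, 1], "buildings": [0, 0], "health": [1]},
    "implementation": {"budget": [0], "timeline": [1]},
    "monitoring": {"indicators": [0]},
    "participation": {"stakeholders": [1, 0]},
}

print(adaqa1(example_plan), adaqa2(example_plan), round(equal_weight_score(example_plan), 2))
```

With six principles, dividing by the number of principles gives each a weight of 1/6, so a principle with many questions (such as measures) does not dominate the total, unlike in the two simple counts.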

We mainly show the results of the most elaborate sub-index ADAQA-3 in the main text, and the results of the other two sub-indices as Supplementary Information (SI). We use the index to track and evaluate the quality of urban adaptation plans from 2005 to 2020 using a representative sample of 327 large and medium-sized cities across the EU-28. We compare urban adaptation plan quality evolution and draw conclusions on the question of progress and learning for adaptation, with a special focus on climate risk assessment, vulnerability and equity/ justice considerations in adaptation planning.

Results

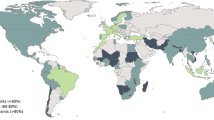

Across the representative sample of 327 European cities (cities of the former EU-28 as in Eurostat’s City statistics database, formerly known as the Urban Audit database30,41), 167 (51%) cities have an adaptation plan (Fig. 2 and Supplementary Table 1). Most plans are found in the United Kingdom (UK) (30 plans), Poland and France (22 plans each), and Germany (19 plans). A total of 53 of these 167 cities (32%) developed it under a national, regional, or local law that requires municipalities (sometimes above a certain threshold of population size) to develop an urban climate adaptation plan. At the time of plan publication (if available, the date that features on the pdf-document; if not available, we refer to the date of adoption, or approval by the council, or year of enforcement start—in that order of availability), this applied to cities in Denmark, Ireland, the UK and France. For those cities that published multiple plans, we examined the most recent version, given that we wished to identify whether the plans of our entire sample were improving in quality over time.
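The shares quoted above, and the ‘#count; %’ pairs used later in the text, can be reproduced with simple rounding. The helper below is a hypothetical convenience function, assuming percentages are rounded to the nearest whole number.

```python
def share_percent(count: int, total: int) -> int:
    """Share of `count` in `total`, as a whole-number percentage."""
    return round(100 * count / total)


# Cities with an adaptation plan among all sampled cities.
print(share_percent(167, 327))
# Plans developed under a legal requirement among all cities with plans.
print(share_percent(53, 167))
```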

For cities with adaptation plan(s): the quality of ADAQA-3 is shown by the size of the hexagon. Colours refer to the age of the plan, i.e. the year of publication, with roughly equal numbers of cities in each age group (dividing the total of 167 cities with adaptation plan(s) by three). Yellow depicts plans that were published before mid-2015. Blue depicts plans that were published between mid-2015 and mid-2018. Green refers to plans that were published after mid-2018. Cities without an adaptation plan are shown by small grey dots. Shaded countries have national legislation that requires cities to develop urban climate adaptation plans (France, the UK, Ireland, and Denmark).

The average score of ADAQA-3, our most detailed and comprehensive index of plan quality, is 34 compared to a maximum score of 100 (standard deviation SD = 13.6). This means that the average adaptation plan in European cities attains just about one-third of the total possible quality score. The minimum of ADAQA-3 is 5.15 (Belfast) and the maximum is 65.57 (Galway). ADAQA-1 and ADAQA-2 yield an average of 22.19 and 53.06 points (scaled to a maximum of 100 points), respectively, showing that adaptation plans reach on average one-fifth in depth and about a half in breadth (details are provided as Supplementary Table 2).

Adaptation plan quality over time

The oldest urban adaptation plan in our sample was published in 2005 (Lincoln, UK). Since then, the number of plans published per year has risen steadily, reaching 27 plans in 2018 and 35 plans in 2019 (16% and 21% of cities with plans, respectively). Plan quality differs significantly between older (<mid-2015), medium-old (mid-2015 to mid-2018) and recent plans (>mid-2018), steadily and significantly increasing from older to medium-old to recent plans, for all three indices (Tab. 1, details provided as Supplementary Tables 3.1–3.4).
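The three temporal groups can be expressed as a simple classification by publication date. This is a sketch under one stated assumption: ‘mid-2015’ and ‘mid-2018’ are taken as 1 July of the respective year, since the exact cut-off dates are not given in the text.

```python
from datetime import date

# Assumption: the cut-offs fall on 1 July; the text only says "mid-2015"
# and "mid-2018".
MID_2015 = date(2015, 7, 1)
MID_2018 = date(2018, 7, 1)


def temporal_group(published: date) -> str:
    """Assign a plan to the older, medium-old or recent group."""
    if published < MID_2015:
        return "older"
    if published < MID_2018:
        return "medium-old"
    return "recent"


print(temporal_group(date(2005, 1, 1)))  # the year of the oldest plan in the sample
print(temporal_group(date(2019, 5, 1)))
```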

Plan quality also improved on an annual basis, ranging from a score of 12.91 in 2005 to 33.41 in 2020 (Fig. 3) with a peak of 44.55 in 2019. Assuming a linear relationship, the annual increase in plan quality is significant for ADAQA-3 (R² = 0.57; F(df1, 14) = 18.41, p < 0.001) as well as for ADAQA-1 (R² = 0.26; F(df1, 14) = 5.02, p = 0.042), but not for ADAQA-2 (R² = 0.15; F(df1, 14) = 2.44, p = 0.140), meaning that plans generally improved over time and became more detailed and faceted within certain topics, but were not necessarily broader in terms of the topics and sectors they addressed (details provided as Supplementary Tables 4.1–4.6 and Supplementary Figs. 2.1 and 2.2). Assuming linearity, plan quality increased by about 1.3 points per year from 2005 to 2020.
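For readers who wish to relate the reported statistics to one another, the sketch below uses the standard identity between R² and the F statistic in a regression with a single predictor. It assumes 16 annual averages (2005 to 2020), which matches the 14 residual degrees of freedom reported. Because the published R² values are rounded, the result only approximates the reported F. The second helper gives a crude endpoint-based rate of change; it is not the regression slope.

```python
def f_from_r2(r2: float, n_points: int) -> float:
    """F statistic of a one-predictor linear regression, from its R^2.

    F = (R^2 / (1 - R^2)) * (n - 2), with 1 and n - 2 degrees of freedom.
    """
    df_residual = n_points - 2
    return r2 / (1.0 - r2) * df_residual


def average_annual_change(first: float, last: float, first_year: int, last_year: int) -> float:
    """Endpoint-to-endpoint change per year (a rough check, not a fitted slope)."""
    return (last - first) / (last_year - first_year)


# ADAQA-3: rounded R^2 = 0.57 with 16 annual averages (assumed).
print(round(f_from_r2(0.57, 16), 2))
# ADAQA-3 annual averages in 2005 and 2020, as reported in the text.
print(round(average_annual_change(12.91, 33.41, 2005, 2020), 2))
```

The first value lands close to the reported F of 18.41, and the endpoint-based rate is of the same order as the reported increase of about 1.3 points per year.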

The scores are displayed per city and year in which the adaptation plan was published, plus averages of each year and linear trend line, 2005–2020. Each dot represents the plan/plans in one city. The dot colours indicate the temporal group the adaptation plan belongs to, i.e. yellow: older (before mid-2015), blue: medium-old (mid-2015 to mid-2018) and green: recent plans (after mid-2018), with roughly equal numbers of plans in each group. We call out the three cities with the highest adaptation plan quality score in each temporal group. The exact scores of each city for ADAQA-1/2/3 are provided as Supplementary Table 1.

Top ranking cities

The cities of Galway (IE), Sofia (BG), and Dublin (IE) scored highest according to ADAQA-3. According to ADAQA-2, Sofia, Potsdam (DE) and Galway rank highest, while based on ADAQA-1, the cities of Sofia, Waterford (IE), and Dublin (IE) lead the ranking (see Supplementary Table 1). With that, the cities of Galway, Sofia, and Dublin are in the top three for at least two of the three sub-indices. Potsdam’s plan stresses measures/policies, while Waterford excels in depth and detailedness.

Notably, Galway, Dublin, and Waterford are required by the Irish government to produce adaptation plans that include certain features (e.g. an assessment of climate risks to the urban area), and this contributed towards their high scores. The western port city of Galway achieves the highest score and performs particularly well against Principles 1 (fact base: impacts), 4 (implementation), and 6 (participation) in ADAQA-3. This illustrates that it developed an extensive knowledge base of past, present and future risks, detailed implementation procedures and an inclusive approach to public participation. It also did well to take account of vulnerable sectors in its plan, contributing towards a score for Principle 1 that was close to the maximum. Galway achieves the maximum score for Principle 4 because it set clear priorities for different actions, identified responsible parties, set out a timeline for implementation, and developed a detailed budget. Furthermore, because it involved a wide range of stakeholders in the plan-making process, the city scored higher than any other under Principle 6.

The Bulgarian capital city of Sofia was in second place, scoring significantly above average against Principles 1 (fact base: impacts), 3 (measures), and 4 (implementation). Similar to Galway, its plan included details of past, present, and future impacts and risks and also considered climate impacts in several sectors. Sofia’s plan paid particular attention to vulnerable societal groups, scoring higher than any other city in this category. Regarding Principle 3, the city included measures for almost every sector except agriculture and forestry in its strategy, contributing to a high score for measures/ policies. It also achieved the maximum score for Principle 6 by actively involving many stakeholders in the plan-making process.

The Irish capital Dublin was in third place, largely due to its high scores against Principles 1 (fact base: impacts), 2 (goals), 4 (implementation), and 5 (monitoring & evaluation). Like Galway and Sofia, it included comprehensive information about potential climate impacts over time (Principle 1), but Dublin performed particularly well against Principle 2 because it formulated a number of concrete adaptation goals relating to identified risks; moreover, it proposed assessing these adaptation goals quantitatively. The city’s high score against Principle 4 reflects its well-structured description of implementation tools regarding priority, responsibility, timeline, and budget. Finally, Dublin included detailed monitoring and evaluation tools in its plan, which helped it to achieve the maximum score for Principle 5.

Adaptation plan quality principles over time

Plan quality has generally increased across all principles over time for ADAQA-3 (Fig. 4, details provided as Supplementary Tables 5.1–5.12). Figure 4 reveals that plans are generally best in detailing adaptation measures (50.68% of achievable points), followed by naming adaptation goals (49.60% of achievable points) and implementation tools & processes (45.55%). They report much less on public participation during plan creation (16.89%) and monitoring and evaluation (20.38%).

While plan quality has increased across all principles over time for ADAQA-3 (Fig. 4 and Supplementary Tables 5.1–5.12) this has not always been the case in a statistically significant way. The increase of ‘Principle 2- adaptation goals’ from medium-old to recent plans (ADAQA-3: p = 0.015) was statistically significant, as was ‘Principle 5 - monitoring and evaluation’ from older to medium-old plans (ADAQA-3: p = 0.027). Looking at ADAQA-1, plans significantly increased in depth from older to medium-old plans regarding fact base and monitoring and evaluation, and from medium-old to recent plans concerning adaptation goals, adaptation measures, and participation (Supplementary Tables 5.1–5.12). Looking at ADAQA-2, plans got significantly broader from older to medium-old plans with regard to monitoring and evaluation, and from medium-old to recent plans concerning participation (Supplementary Tables 5.1–5.12). This means that across all indices, the quality of adaptation plans increased significantly with regard to monitoring and evaluation from older to medium-old plans (before and after mid-2015), and regarding participation from medium-old to recent plans (from mid-2015/ mid-2018, to after that time).

Figure 5 displays the scores of each plan principle per city, along with the year of plan publication. Assuming a linear relationship between annual averages and year of the plan, regression analysis reveals a significant annual increase of plans in Principle 2 - adaptation goals (R² = 0.26; F(df1, 14) = 4.82, p = 0.048), Principle 3 - adaptation measures (R² = 0.55; F(df1, 14) = 19.55, p < 0.001), Principle 4 - implementation tools and processes (R² = 0.30; F(df1, 14) = 5.90, p = 0.029), Principle 5 - monitoring and evaluation (R² = 0.49; F(df1, 14) = 15.48, p = 0.001), and Principle 6 - participation (R² = 0.43; F(df1, 14) = 12.12, p = 0.004; all ADAQA-3; see Supplementary Tables 6.1–6.36 for details). As regards ADAQA-1, plans got annually significantly deeper regarding Principles 2, 5 and 6; and annually significantly broader regarding Principles 4 and 5, as shown by ADAQA-2 (Supplementary Tables 6.1–6.36). Therefore, Principle 5 (monitoring and evaluation) is the one principle that increased annually significantly across all index construction methods. However, there are still cities in each principle and each temporal group that score zero or very low.

The scores and the annual averages of the six plan quality principles (see Fig. 1) for individual cities over time. a The individual scores for fact base, b for goals, c for measures, d for implementation, e for monitoring and evaluation, f for participation. Each dot represents the plan/plans in one city. The dot colours indicate the temporal group the adaptation plan belongs to, i.e. yellow: older (before mid-2015), blue: medium-old (mid-2015 to mid-2018) and green: recent plans (after mid-2018), with roughly equal numbers of plans in each group.

Consistency over time

One of the central characteristics of ADAQA-3 is its focus on consistency between identified climate risks on the one hand, and the measures that the city plans and monitors on the other. ADAQA-3 contains five consistency indicators testing whether the following are aligned:

- Identified impacts/risks for the city and the city’s adaptation goals (consistency 1),
- Identified impacts/risks for vulnerable sectors/industries and planned adaptation measures (consistency 2),
- Identified impacts/risks for vulnerable groups and planned adaptation measures (consistency 3),
- Planned adaptation measures for vulnerable groups and monitoring/evaluation processes (consistency 4), and
- Identified impacts/risks for vulnerable groups and participation in plan creation (consistency 5).

On average, consistency in ADAQA-3 improved slightly over time, mostly from medium-old to recent plans (Fig. 6f). In particular, the alignment of impacts/risks with adaptation goals (consistency 1), and of vulnerable sectors/ industries with adaptation measures (consistency 2) increased over time. Also, the alignment of impacts/risks for vulnerable groups and participation (consistency 5) and the alignment of adaptation measures with a focus on vulnerable groups and monitoring & evaluation (consistency 4) improved, although they remain generally low. However, plans became less aligned concerning the impacts/risks they mention for vulnerable groups and their adaptation measures (consistency 3); i.e. earlier plans were more consistent against this indicator. We see that the plans in our sample refer most frequently to ‘infants, kids, young people’, ‘the poor, including low socio-economic status’, ‘the elderly’ and ‘sick people and those in hospitals/care institutions’ when referring to vulnerable groups.

The 5 measures of consistency used in ADAQA-3 (a–e), and the average of all 5 consistency measures across temporal groups in (f). Checking for consistency includes, for example, comparing the alignment between impacts/risks mentioned in the impact section and those impacts/risks addressed via adaptation goals (consistency 1), or between impacts/risks mentioned for vulnerable sectors/industries and adaptation measures (consistency 2), or between impacts/risks mentioned for vulnerable groups and those addressed via adaptation measures (consistency 3), or between vulnerable groups targeted by adaptation measures and the involvement of these groups in monitoring and evaluation (consistency 4), or between impacts/risks mentioned for vulnerable groups and the involvement of these groups in plan creation (participation) (consistency 5). The bar colours indicate the temporal group in which the adaptation plan was published, i.e. yellow: older (before mid-2015), blue: medium-old (mid-2015 to mid-2018) and green: recent plans (after mid-2018), with nearly equal numbers of plans in each group.
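The alignment checks described above can be thought of as set comparisons. The sketch below is an assumed scoring rule for illustration only and not the formula used for ADAQA-3: it takes the share of identified items (e.g. impacts/risks) that are also addressed elsewhere in the plan (e.g. by adaptation goals) and scales it to the 0 to 5 range in which consistency scores are reported. The function name and example items are hypothetical.

```python
from typing import Set


def alignment_score(identified: Set[str], addressed: Set[str], max_score: float = 5.0) -> float:
    """Share of identified items that are also addressed, scaled to 0..max_score.

    Assumed rule for illustration; a plan that identifies nothing scores 0.
    """
    if not identified:
        return 0.0
    return max_score * len(identified & addressed) / len(identified)


# Made-up plan: four impacts/risks identified, two of them targeted by goals.
risks = {"urban temperature", "precipitation variation", "inland flooding", "coastal flooding"}
goals = {"inland flooding", "urban temperature"}

print(alignment_score(risks, goals))
```

Under this rule, a plan whose goals cover every identified risk reaches the maximum of 5, and a plan whose goals cover none of them scores 0.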

In relation to individual consistency indicators:

- Consistency 1 - Alignment of impacts/risks and adaptation goals (Fig. 6a): In total 152 cities (91%) included information on particular impacts/risks for their jurisdiction in their plans. On average, 3.8 impacts/risks (SD = 1.8) were mentioned in the impact/risk section, with urban temperature (#140; 83%) and precipitation variation (#138; 83%) most common. Landslides (#32; 19%) and coastal flooding (#50; 30%) were mentioned least often. Adaptation goals specific to impacts/risks were mentioned by 120 (72%) of cities, with an average of 2.2 impacts/risks (SD = 1.9) per plan. Hence substantially fewer goals addressing impacts/risks were set than impacts/risks mentioned. Inland flooding was targeted most often in the section on goals (#86; 51%), followed by urban temperature (#79; 47%) and precipitation variation (#79; 47%). The performance of our top-ranking cities is diverse: Potsdam scores lowest (score 0), with no alignment between impacts/risks and goals. Galway and Dublin are partly aligned (scores of 2.9 and 2.5, respectively), while Waterford’s plan is highly aligned (maximum score of 5.0).

- Consistency 2 - Alignment of vulnerable sectors/industries and adaptation measures (Fig. 6b): About 80% (#133 of 167) of the plans mentioned vulnerable sectors or industries, topped by the building (#88; 53%) and water sector (#87; 52%), followed by transport (#84; 50%) and health (#77; 46%). Of the sectors listed in our survey, social institutions and services (#28; 17%), civil protection (#30; 18%) and waste (#31; 19%) were mentioned least often. However, the picture is slightly different when it comes to the sectors in which measures are planned. Top of the list here was environment, greenery, and biodiversity (#147; 88%), followed by the water (#141; 84%) and building sectors (#129; 77%). The fewest measures are planned in the tourism sector (#39; 23% of plans). We note, however, that planned measures are not only effective in the sector in which they are implemented. In our top-ranking cities, all plans are highly aligned in terms of vulnerable sectors/industries and adaptation measures (scores of 5 for Potsdam and Sofia; score of 4.5 for Galway, Waterford, and Dublin).

- Consistency 3 and 5 - Alignment of impacts/risks for vulnerable groups with corresponding adaptation measures (Fig. 6c), and with participation (Fig. 6d): Two-thirds of plans (#111; 67%) mentioned impacts/risks for vulnerable groups, while fewer than half include respective adaptation measures (#74; 44%). The elderly and infants are mentioned most often, both when it comes to impacts (elderly: #76; 46%; infants: #57; 34%) as well as planned adaptation measures (elderly: #49; 29%; infants: #35; 21%). Only 5% of plans (#9) include vulnerable groups in their participation strategy, mostly children (#3; 2%). Among the five top-ranking cities, Galway, Waterford and Sofia have high scores for consistency #3 (maximum of 5); while for consistency #5 the only city with maximum scores is Galway. Galway was the only city to mention that vulnerable groups were involved in the plan making process.

- Consistency 4 - Alignment of adaptation measures for vulnerable groups and monitoring and evaluation (Fig. 6e): While about 44% of plans (#74) included measures that target particular vulnerable groups, only 7% of plans (#12) also included these groups in the monitoring and evaluation processes. Although most measures are currently planned for the elderly (#49; 29%) and infants (#35; 21%), it is the poor who are most often part of monitoring and evaluation, albeit at a negligible rate (#3; 2%). In general, it is extremely rare that vulnerable groups are included in monitoring and evaluation. Even in the list of top-ranking cities there is only one case, Galway.

Overall, adaptation plans are getting better at aligning goals with impacts/risks (Fig. 6a), but this is still not done comprehensively. In addition, plans focus more on impacts/risks of vulnerable sectors/industries (Fig. 6b) than on the needs of vulnerable groups of citizens (Fig. 6c). Also, the consistency between impacts/risks and adaptation measures is larger for vulnerable sectors/industries than for vulnerable social groups. Moreover, vulnerable groups are rarely involved in participation processes (Fig. 6d) and the vast majority of plans make no mention of monitoring & evaluation to address their specific needs (Fig. 6e).

Climate Change Adaptation Scoring tool: Online tool calculating ‘ADAptation plan Quality Assessment’ (ADAQA) indices

In order to promote open science and good practices, the heuristics proposed in this study are available as an online tool. Any municipality, region, other sub-national or non-state actor as well as national governments can assess the quality of its adaptation planning process and related documents via our indices. The tool is able to calculate all three indices ADAQA-1/ 2/ 3 and allows comparison with other localities, either inside or outside our city sample. It is available at: https://www.lcp-initiative.eu/. Further details are given as Supplementary Notes 1.

Discussion

In the absence of comparable, globally available indicators of adaptation and its outcomes17, a plan quality evaluation framework is a valuable proxy indicator—assuming good plans are necessary, though certainly not sufficient, for successful advances in adaptation implementation. Quality assessments of climate adaptation plans and policies should be included in the portfolio of adaptation evaluations regarding success and effectiveness, such as the Global Stocktake of the Paris Agreement in 2023 and related adaptation monitoring and tracking procedures.

Although plan evaluation techniques have been created to harmonize and compare plans intending to manage similar domains or sectors, urban adaptation planning should also be context-specific, and thus requires a specific evaluation framework to recognize that. What is important, and what is not, will depend on the climate action priorities and context-specific vulnerabilities, as well as on the planning culture, legal framework and local administration competences in each city, and its geographical context. Inappropriately planning for climate threats could entail as many risks as the climate impacts themselves17. Adaptation plans need to identify and set out how to coherently address specific climate threats. In this study, we identify critical planning components and use them to evaluate the quality and progress of urban adaptation planning in European cities, hypothesizing that these cities are improving and preparing better plans over time.

Based on six well-established principles of plan quality and consistency measures, we developed the ADAQA index and its three related sub-indices to calculate the adaptation plan quality for a representative sample of 327 large and medium-sized cities across the EU-28, half of which had adaptation plans, and to assess their development over time. With the most elaborate index (ADAQA-3) focusing on consistency of plan content, in particular regarding climate risk assessment, vulnerability and equity/justice considerations14,42, we found that the average adaptation plan in a European city attained around one-third of the total possible quality score. On average, plans covered half of the possible scores regarding adaptation measures and adaptation goals, and a little less (46% of attainable score) regarding implementation tools and processes. We found particularly little information on public participation in plan creation (17% of attainable score) and monitoring and evaluation (20% of attainable score) in our city sample.

European adaptation plans have steadily improved in quality over time (across all sub-indices), both in total per year and across the three nearly equally-split temporal groups (<mid-2015, mid-2015 to mid-2018, >mid-2018), therefore confirming our hypothesis. Plan quality increased by about 1.3 points per year from 2005 to 2020, assuming a linear progression. We suggest that collective learning36 through parallel and sequential peer-to-peer transfer of knowledge and capacity building, in particular through national guidelines, transnational networks and science-policy collaborations, plays a crucial part in this process. The fact that three Irish cities are ranked in the top five of our sample supports this assumption. In this context, regional, national and international organizations have sought to harmonize urban adaptation planning through guidelines and manuals, including the ISO/TS 14092:2020: Adaptation to climate change – Requirements and guidance on adaptation planning for local governments and communities43.

However, quality has not increased evenly across principles. The increase was significant for monitoring and evaluation (from older to medium-old plans, i.e. before and after mid-2015) and for public participation (from medium-old to recent plans, i.e. from mid-2015 to mid-2018, to after that time). Although cities have recently involved the public more in developing plans, and are undertaking more monitoring and evaluation over time, both remain at a very low level.

Consistency between identified risks and planned adaptation measures and actions is a crucial factor for the overall quality of urban adaptation plans. Consistency increased slightly over time, mostly from medium-old to recent plans and in particular in relation to the alignment of impacts/risks with adaptation goals (consistency 1), and of vulnerable sectors/industries with adaptation measures (consistency 2). The plans’ consistency declined regarding the alignment of impacts/risks for vulnerable groups and related measures (consistency 3). Therefore, plans focus more on the impacts/risks for vulnerable sectors/industries than on the needs of vulnerable groups of citizens. Although the focus on vulnerable industries may indirectly benefit vulnerable social groups, this is not recognized across plans. As evidence of this, while about 44% of plans included measures that target specific vulnerable groups, only 5% of plans include them in their participation strategy (consistency 5), and only 7% include the respective vulnerable groups in the monitoring and evaluation processes (consistency 4). The calculated consistency scores remained very low over time, in particular with regard to public participation of vulnerable groups and their involvement in monitoring and evaluation.

Our study is limited by the reliability of data collected by a large group of people speaking different languages and working in different scientific and socio-political contexts. Although our questionnaire to evaluate the plans consisted mainly of closed questions to avoid inconsistencies, we still found some questions particularly difficult that may explain outliers across the submitted forms. For example, some cities may have scored higher against certain principles than others, because they are more exposed to particular climate risks such as coastal flooding, forest fires, or landslides. Similarly, our analysis does not account for local demographics (e.g. a higher or lower proportion of older people could lead to proportionally more or less attention paid to the vulnerabilities of the elderly) or economy (the presence of industries that are more or less susceptible to climate risks could shape urban adaptation planning accordingly). Finally, since urban climate adaptation planning in the EU is a highly dynamic process, keeping our database up-to-date was a recurrent challenge. Therefore, our results should be regarded as a snapshot of the state of progress of urban climate adaptation planning in the EU until early 2020.

We found that collective learning on good adaptation planning is taking place, but also that plan quality as measured by our ADAQA indices was still very low across our sampled cities. The average adaptation plan records just about one-third of the total possible quality score, with Galway in Ireland attaining the highest mark of 67 (of 100). Our analysis shows room for improvement in European urban adaptation planning, in particular with regard to consistency in plans, the follow-up of measures, and the involvement of vulnerable groups. To help evaluate the quality of plans and policies and promote learning, we suggest incorporating our ADAQA indices into the portfolio of adaptation progress assessments and tracking methodologies.

Our study did not set out to prove that higher-quality plans result in better implementation; that is a matter for further research. Assuming this relationship exists for adaptation (in the same way as in other sectors according to plan quality literature), our findings have significant relevance for scholars, practitioners and decision-makers working in the area of urban climate adaptation. In particular, our adaptation quality indices allow us to rate the individual performance of cities, thereby providing a knowledge base of the individual state and quality of adaptation planning in cities across the globe. Furthermore, our study enables us to learn from progress within and across cities and plans, now and over time. More important still is the selection of components that, based on specialized adaptation planning literature, are combined and used to track the quality of urban adaptation plans. For example, by applying and comparing the indices, we can foresee a series of improvements for the next generation of plans and attempt to identify a series of enablers that could fast-track such improvements. This might include guidance on using risk information for adaptation actions; formulating crisp and quantitative adaptation goals; and incorporating justice and equity considerations in adaptation planning and monitoring. We can prompt governments and agencies to provide resources to cities to acquire these capacities. The ADAQA indices can therefore serve as a benchmark to facilitate learning, and to draw scientific conclusions and policy recommendations. The accessibility of the online tool and the self-assessment option is also a good opportunity to provide science-policy arenas for knowledge transfer and collective learning.

Methods

Methodology and framework

In meta-analyses of plan evaluation studies, Berke and Godschalk38 and Lyles and Stevens37 identified six principles commonly used for plan evaluation: (1) goals, (2) fact base, (3) policies, (4) public participation in plan creation, (5) interorganizational coordination, and (6) details regarding implementation and monitoring26. These six principles are increasingly viewed as ‘standard’ principles for plan evaluation39 and are considered to be applicable across planning domains and scales (e.g., local, regional and national/federal37).

Principles of plan evaluation are derived from and partly aligned with stylized adaptation planning and implementation frameworks (Climate adaptation strategy development process;44,45 European Environment Agency (EEA) Adaptation Support Tool;46 Knowledge to Action (KTA) Framework47 with integration of guideline adaptation; CAN-IMPLEMENT;48 Climate-change adaptation and risk management process of the IPCC;49 Integrated urban climate change planning47,48). Across the literature, stylized adaptation frameworks/processes differ slightly in their level of detail and focus on different stages and sub-stages. However, they all picture a decision cycle (sometimes with sub-cycles49) that roughly lays out the main steps of an ideal adaptation planning and implementation process, corresponding to a sequence of steps where planners are thought to review existing and future climate and weather conditions, formulate goals, identify potential strategies to achieve the goals, analyze costs, effectiveness, synergies and trade-offs between goals, and then select the optimal set of strategies for implementation, before moving on to monitoring and evaluation. By picturing an idealized adaptation process, these frameworks are assumed to be good guides for assessing the quality (i.e. strengths and weaknesses) of urban adaptation plans26.

In order to account for the state of the art, we use an updated form of the principles compared to those given in Lyles and Stevens37 and Berke and Godschalk38, dropping the principle of interorganizational coordination and stressing the need for more information on implementation50, monitoring, evaluation and learning46, as done in Woodruff and Stults26. Assessing interorganizational coordination would also be difficult based on plans and policies in European cities. Additionally, we add aspects of procedural justice, i.e. participation of vulnerable groups in plan creation, as well as aspects of distributional justice, i.e. vulnerable groups mentioned as part of establishing the fact base, adopting policies & measures, and monitoring & evaluation. Figure 1 shows a stylized adaptation process and related plan quality principles, as used in this study. These principles and related indicators are summarized in the ADAptation plan Quality Assessment (ADAQA) indices that can be used to assess the change of plan quality over time and space (see section on Plan Quality Index further down).

Cities are the unit of analysis (in contrast to rural areas) as climate change planning is associated with the size and the density of a community51,52. We concentrate on European cities because of their need for adaptation (many European cities are characterized by severe impacts and risks21,53,54) and the diversity of adaptation policies and plans available on the national50 and local level55. Also, the availability of a representative, uniform sample of cities and city characteristics allows comparison. We also rely on an established network of European researchers (https://www.lcp-initiative.eu) who are able to speak all former EU-28 (EU-27 + the United Kingdom) languages in a fully proficient way and have extensive knowledge of the related local, regional and national climate policies of their analyzed cities.

Data collection

City sample: We base the analysis on a representative sample of 327 cities across the former 28 European Union (EU) member states (EU-27 plus the United Kingdom) (EU, 2011). The selected cities are part of the Urban Audit (UA), a Europe-wide initiative for statistical monitoring of major European cities since 2003. The UA regularly collects secondary statistics according to a common protocol from national statistical offices in order to observe the development of cities and their functional urban areas (as defined by the European Commission) on their demographic, social, economic, environmental and cultural development. The UA database thus provides reliable and internationally comparable information on cities for the purposes of regional and urban planning, prosperity and sustainable development.

The UA cities were selected by the European Commission, Eurostat and the national statistical offices based on the following criteria:56 (i) approximately 20% of the population is covered in each country; (ii) national capitals and, where possible, regional capitals are included; (iii) large (more than 250,000 people) and medium-sized urban areas (between roughly 50,000 and 250,000 people) are to be included; and (iv) urban areas should be geographically dispersed within countries. The UA cities are therefore a balanced and regionally representative sample of cities in Europe.

We analyze all UA core cities of the former 28 European countries. Authors have worked in and are familiar with the language and respective urban and climate policies. However, as cities are developing, the UA selection of cities is changing. Slightly different groups of cities per country could be included in different data collection periods. We use this sample (see SI), to allow comparison with previous publications using the exact same city sample9.

Urban climate (change) adaptation plans: Cities and other local municipalities develop a range of plans and policies that address multiple sectors, topics and jurisdictions55, leading to various forms of local climate plans or policies6. Therefore, inclusion and exclusion criteria have to be set up that allow selecting a uniform sample of plans across the selected cities and countries (EU-28).

Based on Reckien et al. 6,55 we use Type-A 1/2/3 Local Climate Change Adaptation Plans (plans that are dedicated to addressing climate issues, as single adaptation plans or joint adaptation-mitigation plans) and Type B plans (broader plans dedicated to resilience and sustainability). For our definition of Type-A and Type-B local climate change adaptation plans, we adapted the approach of Woodruff & Stults26 slightly, by including plans in which (1) the central topic of the plan is climate change adaptation, climate resilience, climate preparedness and/or sustainability; (2) the plan is written by or for an entire local community/ municipality (not only for parts of it); (3) the plan took a comprehensive approach to adaptation/resilience/sustainability by focusing on more than just one or two topics or adaptation sectors (that is, we exclude sector-based adaptation plans).

This definition excludes heatwave plans (as single documents), flood plans (as single documents) (both Type C, as defined in Reckien et al.6,55), plans on municipal operations (Type D), as well as municipal emergency and civil protection plans (both Type E). However, we use all related and relevant documentation regarding adaptation to answer the questionnaire (see DANSEASY57). Sometimes, an adaptation strategy, an action plan, an implementation plan, and/or a monitoring plan exist (whereas, e.g., only the strategy or the action plan might be formally adopted by the local council/municipality). In such cases we code and analyze the information in all related documents. We also used other relevant adaptation information listed on the respective municipal websites. All extracted data, including names and websites of plans, secondary statistics, and extracted data of plans are available as a Datafile on DANSEASY57. All coded and analyzed plans are available as .pdfs upon request.

We analyze all climate adaptation plans within the UA core cities of the former 28 European countries. If updates were available we analyzed the last updated plans.

Coding for plan quality topics, indicators and index: As mentioned above, this study uses plan quality principles that are aligned with the idealized adaptation planning and implementation process, slightly modified from Woodruff and Stults26 (see Fig. 1). Table 1 shows the plan quality principles used in this study, compares them with those in Woodruff and Stults26, and details the related indicators (i.e. questions to each quality principle and related response options). The latter are used to form an index that allows grading the quality of plans, using the binary response options as an input to a composite index.

The questions posed for each plan quality principle and in particular their response options (Supplementary Methods 1, columns 3 and 4) are based on the scholarly literature and practitioner guidelines, such as: the European Environment Agency’s Adaptation Support Tool46, together with the coded impacts as identified in Araos et al. 5, after Revi et al. 58 In addition:

- Fact base and the adaptation policies, sectors and systems come from Araos et al. 5, complemented only by measures in the sectors of Food & Agriculture and Waste management,

- Adaptation measures: Additional input comes from Connell et al. 59, Guyadeen60, and Austin et al. 61.

- Besides those established plan quality topics and indicators, aspects of equity and justice are included where appropriate across the evaluation scheme. This is a consequential addition respecting that equity dimensions are increasingly recognized as important for adaptation policy62.

In general, response options are in the form of a binary value (yes/no; 0/1) (Supplementary Methods 1, column 5), but these can relate to the general, overarching question (plan topic and indicators) or to more detailed examples to an overarching question. For example, to question ‘4.2 Transport: Does the plan include at least one measure on transportation and, if yes, which ones?’ (Supplementary Methods 1, column 3) there is the possibility to choose between ‘None - Public transport – Bicycle-oriented development – Pedestrian-oriented development – Other’ (Supplementary Methods 1, column 4) and indicate each by a binary score, while for the question ‘4.5 Waste: Does the plan include at least one measure on waste management?’ there is just the possibility to globally answer yes/ no. The response options and their details are based on the scholarly literature and assumed to cover and represent the currently most prominent adaptation characteristics in Europe. Depending on the ADAQA index, each response option contributes differently to the overall score (Supplementary Fig. 1).

We coded for the plan quality topics and indicators presented as Supplementary Methods 1 as well as for general information of the adaptation plans and their governance context, e.g. the type of plan6; the year of adoption, publication, or approval of the plan; the availability of a national adaptation framework/policy; the availability of previous plans; web location, and others57.

The questionnaire was reviewed by all members of the team and subsequently piloted using 20 plans from five different European countries. A number of issues with interpretation and understanding across various cultural contexts were identified through the pilots and subsequently resolved for the final study.

Content analysis: Content analysis is commonly used for plan evaluation studies involving the systematic reading of a body of text, images and symbolic matter63. Using content analysis researchers can determine the presence of certain words or concepts within text as well as quantify and analyze the presence, meanings and relationships of such certain words or concepts. Generally, there are two types of content analysis used in qualitative research: conceptual analysis and relational analysis. ‘Conceptual analysis determines the existence and frequency of concepts in a text. Relational analysis develops the conceptual analysis further by examining the relationships among concepts in a text’64. Here, we use conceptual analysis looking for the existence of certain words, terms and concepts in the documents.

Columbia University64 lists eight general steps for conducting a conceptual analysis. The first six steps involve important decisions before the actual coding can begin. Supplementary Methods 2 gives an overview of these steps and which decision has been made for the content analysis in this study.

Reliability and Validity: Reliability (consistency of measurement): When teams of analysts code information based on a common evaluation scheme or coding system, interrater reliability can be an issue, i.e. coders may code the same information in different ways. Because of the human nature of researchers, coding errors can never be eliminated but only minimized. Three criteria comprise the reliability of a content analysis:64 (1) stability: the tendency for coders to consistently re-code the same data in the same way over a specific period of time; (2) reproducibility: the tendency for a group of coders to classify category membership in the same way; (3) accuracy: the extent to which the classification of text statistically corresponds to a standard or norm.

Validity (accuracy of the measurement): Validity of a content analysis can relate to three aspects:64 (1) Closeness of categories; (2) Conclusions drawn; and (3) Generalizability of the results to a theory. In our study, potential issues regarding (1) need to be checked. In order to minimize the risk of validity issues under (1) we used multiple classifiers (multiple choice questions) and included a field for additional information. We also gave examples for each category.

Data cleaning

Interrater reliability can be an issue with our data, having involved 36 people in coding overall. To check interrater reliability, we re-checked one topic (V. Monitoring and Evaluation). To do so, one team member who was not involved in the original coding, re-coded all the data entries for V. Monitoring and Evaluation (M&E), while additionally separating questions that could be separated into multiple codes. This resulted in a deviation of 15 data points, i.e. 15 coded entries of 167 data points in total (167 cities with an urban adaptation plan) had to be corrected. This translates into a reliability of 91%, which is a good outcome. Generally, 80% is an acceptable margin for reliability64. We did not re-check other data entries.
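The reliability figure above can be reproduced with a short calculation. The sketch below assumes that reliability is computed as simple percent agreement between the original coding and the independent re-coding (it is not a chance-corrected statistic); the variable names are illustrative only.

```python
# Percent agreement between the original coding and the independent re-coding.
# Figures are taken from the text: 167 coded entries, 15 of which were corrected.
total_entries = 167
corrected_entries = 15

agreement = (total_entries - corrected_entries) / total_entries
print(f"Agreement: {agreement:.1%}")

# 80% is the margin the text cites as generally acceptable.
ACCEPTABLE = 0.80
print("Above acceptable margin:", agreement >= ACCEPTABLE)
```

With these inputs the agreement is 152 of 167 entries, which rounds to the 91% reported above.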

However, during data cleaning we realized some potential issues with the closeness of the categories. Decisions are reported as Supplementary Methods 3.

We do not see issues with other questions than those that have been mentioned and hence corrected. Nevertheless, issues of closeness cannot be ruled out completely, and a code book with definitions of each explicit variable with synonyms is a relevant step forward.

Finally, a number of columns and cases were cross-checked by other author(s), which include the data on M&E (all topics/questions, all cities), the time data (year and month of publication, adoption, approval, enforcement start, enforcement end of all plans), and all data of the cities of Ireland.

Regarding the temporal dimension: Each plan in the sample corresponds to a particular year (of publication, adoption, and/ or enforcement start, enforcement end). Based on that, plans are grouped into three temporal clusters of older (<mid-2015), medium-old (mid-2015 to mid-2018) and recent plans (>mid-2018) using the following rationales:

1. We identified time based on the publication, adoption, enforcement start, or enforcement end date of plans.

2. If all of these dates were available, we chose the year of publication to determine the cluster, because it is closest to the actual production/development of the plan.

3. If any of the dates were missing, we used the first available date in the given order: publication, adoption, enforcement start, enforcement end.

4. In cities where more than one plan was available, we used the date of the plan where most of the information on adaptation measures was documented, as most plans currently focus on these aspects; hence, our index and questionnaire also stress the options and measures taken and their implementation.

5. Based on the temporal information, plans were clustered in groups of nearly equal sample size (55 cities in the first, and 56 cities each in the latter groups). This required plans published/adopted in 2015 and 2018 to be assigned to either group 1 or 2, and group 2 or 3, respectively. To aid the separation we used months of publication/adoption where available. If the month of the plan was not available, plans were randomly assigned to one or the other group, i.e., if we had 4 plans in 2015 without a month, we randomly assigned 2 to group 1 and 2 to group 2.
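The date selection and grouping rules can be sketched in code. This is a minimal illustration rather than the procedure actually run for the study: the field names, the `assign_groups` helper, and the choice of June as the "mid-year" cut-off month are all assumptions, and the rule for cities with several plans is not covered.

```python
import random

# Date fields in the order of precedence given in the text.
DATE_PRIORITY = ("publication", "adoption", "enforcement_start", "enforcement_end")
MID_YEAR_MONTH = 6  # assumption: months 1-6 fall before the mid-year cut

def reference_date(plan):
    """First available (year, month) pair in the stated order; month may be None."""
    for field in DATE_PRIORITY:
        if plan.get(field) is not None:
            return plan[field]
    raise ValueError("plan has no usable date")

def assign_groups(plans, seed=0):
    """Map plan name -> temporal group 1 (older), 2 (medium-old) or 3 (recent)."""
    rng = random.Random(seed)
    groups = {}
    undated = {2015: [], 2018: []}  # boundary-year plans without a month
    for plan in plans:
        year, month = reference_date(plan)
        if year in undated and month is None:
            undated[year].append(plan["name"])
        elif year < 2015 or (year == 2015 and month <= MID_YEAR_MONTH):
            groups[plan["name"]] = 1
        elif year < 2018 or (year == 2018 and month <= MID_YEAR_MONTH):
            groups[plan["name"]] = 2
        else:
            groups[plan["name"]] = 3
    # Split month-less boundary plans evenly and at random between adjacent groups.
    for year, lower in ((2015, 1), (2018, 2)):
        names = undated[year]
        rng.shuffle(names)
        half = len(names) // 2
        for i, name in enumerate(names):
            groups[name] = lower if i < half else lower + 1
    return groups

# Hypothetical plans, for illustration only.
plans = [
    {"name": "A", "publication": (2012, None)},
    {"name": "B", "publication": None, "adoption": (2015, 3)},
    {"name": "C", "publication": (2015, 11)},
    {"name": "D", "publication": (2018, None)},
    {"name": "E", "publication": (2018, None)},
    {"name": "F", "publication": (2019, 2)},
]
groups = assign_groups(plans)
print(groups)
```

In this example, plans D and E both lack a month in a boundary year, so one of them lands in group 2 and the other in group 3; which one goes where depends on the random seed.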

Data processing

Index construction rationale: We create the urban ADAptation plan Quality Assessment index (ADAQA), based on six well-established principles identified across specialized literature (fact base; goals; policies; implementation; monitoring & evaluation of measures; and societal participation)7,26,33,37,38,39,40. However, as the calculation of index scores can be done in multiple ways, we develop three different indices (see Method Fig. 1):

- ADAQA-1 is calculated by allocating one point per response option, representing depth and detailedness.

- ADAQA-2 is calculated by allocating one point per question in the questionnaire (see Method Fig. 1), focussing on breadth, particularly adaptation measures (the principle with largest number of questions).

- ADAQA-3 uses complex heuristics and our expert judgement26,40, stressing the need for consistency between different parts of the plan and considerations of equity and justice, i.e. the focus on and participation of vulnerable groups in plan creation and monitoring and evaluation. ADAQA-3 is calculated by the premises that:

  - plan quality is higher if there is consistency between:

    - identified impacts/risks and adaptation goals (consistency 1),

    - vulnerable sectors/industries and planned adaptation measures (consistency 2),

    - the impacts/risks of vulnerable groups and planned adaptation measures (consistency 3),

    - planned adaptation measures for vulnerable groups and monitoring and evaluation (consistency 4), and

    - impacts/risk for vulnerable groups and participation (consistency 5).

  - scores within each principle are allocated according to relevance (e.g. past, present and future risk each getting 1 point), and representation of topics (each topic yielding a maximum of 5–6.5 points; asymptotic increase of points for questions with large number of response options);

  - scores given for each principle should be equal (1/6 of the total score; i.e. 16.6% for each of the six plan quality principles; see Supplementary Fig. 1 for details).
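The difference between counting response options (ADAQA-1) and counting questions (ADAQA-2) can be illustrated with a small sketch. It assumes that coded answers are stored as binary values per response option and that a question counts towards ADAQA-2 once at least one of its options is coded 1. The data layout, the function names and the third question are hypothetical, and the scaling to a 100-point score as well as the ADAQA-3 weighting (Supplementary Fig. 1) are not reproduced here.

```python
# Hypothetical coded answers for one city: question -> {response option: 0 or 1}.
coded = {
    "4.2 Transport": {
        "Public transport": 1,
        "Bicycle-oriented development": 1,
        "Pedestrian-oriented development": 0,
        "Other": 0,
    },
    "4.5 Waste": {"yes": 0},
    "Hypothetical question": {"yes": 1},
}

def adaqa1_raw(coded_answers):
    """Depth: one point per response option coded 1."""
    return sum(sum(options.values()) for options in coded_answers.values())

def adaqa2_raw(coded_answers):
    """Breadth: one point per question with at least one option coded 1."""
    return sum(1 for options in coded_answers.values() if any(options.values()))

print("ADAQA-1 raw points:", adaqa1_raw(coded))
print("ADAQA-2 raw points:", adaqa2_raw(coded))
```

Here the two transport measures count twice under the option-based rule but only once under the question-based rule, which is why a plan with many measures in few sectors would score relatively better on depth than on breadth.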

Data analysis

We developed a database of the characteristics of the 327 representative cities of the (former) EU-28 along with the coded information of their adaptation policies and plans, using the structure of a stylized planning process. This database and the underlying policies can be regarded as the latest, most-up-to-date and most comprehensive information on urban climate planning in cities across Europe. Because our cities are considered a representative sample of European cities as regards population size, geographic spread and political significance, but also because we have been collecting data according to a similar protocol, we assume that the database is the best currently available knowledge on urban climate adaptation policy in Europe.

We analyse data per city. We do not analyse information per adaptation plan.

We employ:

- Descriptive statistics to explore the state and quality of adaptation planning;

- Linear regression to test for a potential statistical relationship between year and the average plan quality per year from 2005 to 2020 (interpolating for the years 2006 and 2007);

- One-way ANOVA with Tukey-Kramer multiple comparison post-hoc tests to determine statistical differences in plan quality across the groups of older, medium-old, and recent plans.

  - Statistical significance is based on critical values of the studentized range Q with alpha = 0.05 and number of groups k = 3.

- All hypothesis tests (linear regression and ANOVA) are two-sided.

We used IBM SPSS Statistics, Version: 28.0.1.0 (142) for data analysis.

Reporting summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Data availability

The questionnaire used for data extraction by way of document analysis of urban climate adaptation plans, and the data are available at: https://easy.dans.knaw.nl/ui/datasets/id/easy-dataset:24837157. The download of data is restricted until 30th April 2024 to allow authors to publish further analyses and findings. Until that date all data are available from the corresponding author upon reasonable request.

References

UNFCCC-United Nations Framework Convention on Climate Change. Paris Agreement. https://unfccc.int/sites/default/files/resource/parisagreement_publication.pdf. (2015).

Lesnikowski, A. et al. What does the Paris Agreement mean for adaptation? Clim. Policy 17, 825–831 (2017).

Tompkins, E. L., Vincent, K., Nicholls, R. J. & Suckall, N. Documenting the state of adaptation for the global stocktake of the Paris Agreement. Wiley Interdiscip. Rev. Clim. Chang. 9, e545 (2018).

Berrang-Ford, L. et al. Tracking global climate change adaptation among governments. Nat. Clim. Change. 9, 440–449 (2019).

Araos, M. et al. Climate change adaptation planning in large cities: a systematic global assessment. Environ. Sci. Policy 66, 375–382 (2016).

Reckien, D. et al. How are cities planning to respond to climate change? Assessment of local climate plans from 885 cities in the EU-28. J. Clean. Prod. 191, 207–219 (2018).

Guyadeen, D., Thistlethwaite, J. & Henstra, D. Evaluating the quality of municipal climate change plans in Canada. Clim. Change 152, 121–143 (2019).

Neder, E. A. et al. Urban adaptation index: assessing cities readiness to deal with climate change. Clim. Change 166, 1–20 (2021).

Salvia, M. et al. Climate mitigation in the Mediterranean Europe: An assessment of regional and city-level plans. J. Environ. Manage. 295, 113146 (2021).

Aguiar, F. C. et al. Adaptation to climate change at local level in Europe: an overview. Environ. Sci. Policy 86, 38–63 (2018).

Grafakos, S. et al. Integration of mitigation and adaptation in urban climate change action plans in Europe: a systematic assessment. Renew. Sustain. Energy Rev. 121, 109623 (2020).

Pietrapertosa, F. et al. Urban climate change mitigation and adaptation planning: Are Italian cities ready? Cities 91, 93–105 (2019).

Pietrapertosa, F. et al. Multi-level climate change planning: an analysis of the Italian case. J. Environ. Manage. 289, 112469 (2021).

Olazabal, M., Ruiz De Gopegui, M., Tompkins, E. L., Venner, K. & Smith, R. A cross-scale worldwide analysis of coastal adaptation planning. Environ. Res. Lett. 14, 124056 (2019).

Kristianssen, A. C. & Granberg, M. Transforming local climate adaptation organization: Barriers and progress in 13 Swedish municipalities. Climate 9, 52 (2021).

Scott, H. & Moloney, S. Completing the climate change adaptation planning cycle: monitoring and evaluation by local government in Australia. J. Environ. Plan. Manag. 65, 650–674 (2022).

New, M. et al. Climate Change 2022: Impacts, Adaptation, and Vulnerability. Contribution of Working Group II to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change (eds Pörtner, H.-O. et al.) (Cambridge University Press, 2022).

Hale, T. N. et al. Sub- and non-state climate action: a framework to assess progress, implementation and impact. Clim. Policy 21, 406–420 (2021).

Sanchez Rodriguez, R., Ürge-Vorsatz, D. I. & Barau, A. S. Sustainable development goals and climate change adaptation in cities. Nat. Clim. Change. 8, 181–183 (2018).

Castán Broto, V. & Westman, L. K. Ten years after Copenhagen: reimagining climate change governance in urban areas. Wiley Interdiscip. Rev. Clim. Change 11, e643 (2020).

Dodman, D. et al. Climate Change 2022: Impacts, Adaptation, and Vulnerability. Contribution of Working Group II to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change (eds Pörtner, H.-O. et al.) (Cambridge University Press, 2022).

Olazabal, M. & Ruiz De Gopegui, M. Adaptation planning in large cities is unlikely to be effective. Landsc. Urban Plan. 206, 103974 (2021).

Dilling, L. et al. Is adaptation success a flawed concept? Nat. Clim. Change. 9, 572–574 (2019).

Moser, S. C. & Boykoff, M. T. Successful Adaptation to Climate Change: Linking Science and Policy in a Rapidly Changing World (eds Moser, S. C. & Boykoff, M. T.) 1–335 (Taylor and Francis, 2013).

Olazabal, M., Galarraga, I., Ford, J., Sainz De Murieta, E. & Lesnikowski, A. Are local climate adaptation policies credible? A conceptual and operational assessment framework. Int. J. Urban Sustain. Dev. 11, 277–296 (2019).

Woodruff, S. C. & Stults, M. Numerous strategies but limited implementation guidance in US local adaptation plans. Nat. Clim. Change. 6, 796–802 (2016).

Preston, B. L., Westaway, R. M. & Yuen, E. J. Climate adaptation planning in practice: An evaluation of adaptation plans from three developed nations. Mitig. Adapt. Strateg. Glob. Chang. 16, 407–438 (2011).

Burby, R. J. Hurricane Katrina and the Paradoxes of government disaster policy: bringing about wise governmental decisions for hazardous areas. Ann. Am. Acad. Pol. Soc. Sci. 604, 171–191 (2006).

Olazabal, M., Chiabai, A., Foudi, S. & Neumann, M. B. Emergence of new knowledge for climate change adaptation. Environ. Sci. Policy 83, 46–53 (2018).

Schipper, E. L. F. Maladaptation: when adaptation to climate change goes very wrong. One Earth 3, 409–414 (2020).

Magnan, A. K. et al. Addressing the risk of maladaptation to climate change. Wiley Interdiscip. Rev. Clim. Change 7, 646–665 (2016).

Berrang-Ford, L. et al. A systematic global stocktake of evidence on human adaptation to climate change. Nat. Clim. Change. 11, 989–1000 (2021).

Meerow, S. & Woodruff, S. C. Seven principles of strong climate change planning. J. Am. Plan. Assoc. 86, 39–46 (2020).

Owen, G. What makes climate change adaptation effective? A systematic review of the literature. Glob. Environ. Chang. 62, 102071 (2020).

Singh, C. et al. Interrogating ‘effectiveness’ in climate change adaptation: 11 guiding principles for adaptation research and practice. Clim. Dev. https://doi.org/10.1080/17565529.2021.1964937 (2021).

Heikkila, T. & Gerlak, A. K. Building a conceptual approach to collective learning: lessons for public policy scholars. Policy Stud. J. 41, 484–512 (2013).

Lyles, W. & Stevens, M. Plan quality evaluation 1994–2012: growth and contributions, limitations, and new directions. J. Plan. Educ. Res. 34, 433–450 (2014).

Berke, P. & Godschalk, D. Searching for the good plan: a meta-analysis of plan quality studies. J. Plan. Lit. 23, 227–240 (2009).

Stevens, M. R. Evaluating the quality of official community plans in Southern British Columbia. J. Plan. Educ. Res. 33, 471–490 (2013).

Otto, A., Kern, K., Haupt, W., Eckersley, P. & Thieken, A. H. Ranking local climate policy: assessing the mitigation and adaptation activities of 104 German cities. Clim. Change 167, 5 (2021).

Bretagnolle, A. et al. Technical Report LUZ specifications (Urban Audit 2004) https://doczz.net/doc/1215288/luz-specifications--urban-audit-2004- (ESPON, 2011).

Araos, M. et al. Equity in human adaptation-related responses: a systematic global review. One Earth 4, 1454–1467 (2021).

International Organization for Standardization (ISO). Adaptation to climate change—Requirements and guidance on adaptation planning for local governments and communities. ISO/TS 14092:2020. https://www.iso.org/standard/68509.html (2020).

Boswell, M., Greve, A. & Seale, T. Climate Action Planning: A Guide to Creating Low-Carbon, Resilient Communities Vol. 46 (Choice Reviews Online, 2019).

Boswell, M. R., Greve, A. I. & Seale, T. L. Local Climate Action Planning (Island Press, 2012).

European Environment Agency (EEA) Adaptation Support tool. https://climate-adapt.eea.europa.eu/en/knowledge/tools/adaptation-support-tool (Accessed: 15th February 2023) (2022).

Graham, I. D. et al. Lost in knowledge translation: time for a map? J. Contin. Educ. Health Prof. 26, 13–24 (2006).

Harrison, M. B. et al. Guideline adaptation and implementation planning: a prospective observational study. Implement. Sci. 8, 1–14 (2013).

IPCC. Climate Change 2014: Impacts, Adaptation, and Vulnerability. Part A: Global and Sectoral Aspects. Contribution of Working Group II to the Fifth Assessment Report of the Intergovernmental Panel on Climate Change (eds Field, C. B. et al.) 34 (Cambridge University Press, 2022).

European Environment Agency (EEA). Climate-ADAPT. The European Climate Adaptation Platform. https://climate-adapt.eea.europa.eu/. (Accessed: 24th June 2022) (2021).

Reckien, D., Flacke, J., Olazabal, M. & Heidrich, O. The influence of drivers and barriers on urban adaptation and mitigation plans-an empirical analysis of European Cities. PLoS One 10, 1–21 (2015).

Rivas, S., Urraca, R., Bertoldi, P. & Thiel, C. Towards the EU Green Deal: Local key factors to achieve ambitious 2030 climate targets. J. Clean. Prod. 320, 128878 (2021).

European Environment Agency (EEA). Urban adaptation in Europe: how cities and towns respond to climate change. EEA Report No. 12/2020 https://doi.org/10.2800/32462 (Accessed: 24th June 2022) (European Environment Agency, 2020).

IPCC. Climate Change 2022: Impacts, Adaptation, and Vulnerability. Contribution of Working Group II to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change (Cambridge University Press, 2022).

Reckien, D. et al. Dedicated versus mainstreaming approaches in local climate plans in Europe. Renew. Sustain. Energy Rev. 112, 948–959 (2019).

Eurostat. European Regional and Urban Statistics – Reference Guide. Methodologies and Working Papers (Luxembourg: Publications Office of the European Union, 2010).

Reckien, D. et al. Data on plan quality characteristics of local climate adaptation plans in Europe. DANS EASY https://doi.org/10.17026/dans-xd6-w7pc (2022).

Revi, A. et al. Towards transformative adaptation in cities: the IPCC’s Fifth Assessment. Environ. Urban. 26, 11–28 (2014).

Connell, D. J. & Daoust-Filiatrault, L.-A. Better than good: three dimensions of plan quality. J. Plan. Educ. Res. https://doi.org/10.1177/0739456X17709501 (2017).

Guyadeen, D. Do practicing planners value plan quality? Insights from a survey of planning professionals in Ontario, Canada. J. Am. Plan. Assoc. 84, 21–32 (2018).

Austin, S. et al. Public health adaptation to climate change in OECD countries. Int. J. Environ. Res. Public Health 13, 889 (2016).

Pelling, M. & Garschagen, M. Put equity first in climate adaptation. Nature 569, 327–329 (2019).

Krippendorff, K. H. Content Analysis: An Introduction to its Methodology 4th edn (SAGE Publications, Inc., 2018).

Columbia Public Health. Content Analysis Method and Examples. https://www.publichealth.columbia.edu/research/population-health-methods/content-analysis. (Accessed: 15th February 2023) (2021).

Acknowledgements

This work would not have been possible without the collaboration of many fellow researchers who helped to compile and analyze urban climate plans of the cities in the data sample. This collaboration runs under the name EURO-LCP Initiative (www.lcp-initiative.eu). We thank all members of the EURO-LCP Initiative for their fruitful collaboration over the years, in particular those members who are not co-authors of this paper: Jon Marco Church; Sergiu Vasilie; Nataša Belšak Šel; Eva Streberova; Corinna Altenburg; Viera Bastakova; lana Coste and Eliška Lorencová. Thanks also go to Nikolas Afxentiou, who helped develop the Climate Change Adaptation Scoring tool (https://www.lcp-initiative.eu/climate-change-scoring-tool/). DR’s work is funded by the European Union’s Horizon 2020 research and innovation programme under grant agreement No. 101019707 (2021–2024), No. 101036458 (2021–2025), and a JPI Urban Europe Grant, funded by NWO grant agreement No. 438.21.445 (2022–2025). MO’s research is supported by María de Maeztu excellence accreditation 2018–2022 (Ref. MDM-2017-0714), funded by MCIN/AEI/10.13039/501100011033/; and by the Basque Government through the BERC 2022–2025 programme. The work of MS and FP is supported by the National Biodiversity Future Centre 2022–2025 (id. code CN000033), funded by the Italian National Recovery and Resilience Plan (PNRR Mission 4, Component 2, Investment 1.4).

Author information

Contributions

D.R. conceptualized the study, conducted the majority of statistical analyses, wrote main parts of the manuscript, and developed Figs. 1, 3, 4, 5 and 6. M.S. supported development of Fig. 1. L.T. developed Fig. 2. A.B. and M.O. supported conceptualization. A.B., N.-A.S., and S.G.S. supported the statistical analyses. A.B., M.O., P.E., M.S., and F.P. wrote parts of the manuscript and helped with reviewing. P.F. developed the online tool. All authors, i.e. D.R., A.B., M.O., N.-A.S., P.E., S.G.S., M.S., F.P., P.F., S.M.G., L.T., M.V.B., C.L.d.B., S.d.G.-H., E.F., A.Fl., A.Fo., D.G., S.G., O.H., B.I., A.K.-R., M.M., H.O., K.O., I.P., K.R., M.S., M.S.C., V.V., and A.W. contributed to data gathering. All authors, i.e. D.R., A.B., M.O., N.-A.S., P.E., S.G.S., M.S., F.P., P.F., S.M.G., L.T., M.V.B., C.L.d.B., S.d.G.-H., E.F., A.Fl., A.Fo., D.G., S.G., O.H., B.I., A.K.-R., M.M., H.O., K.O., I.P., K.R., M.S., M.S.C., V.V., and A.W. reviewed, commented on, and helped sharpen the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Reckien, D., Buzasi, A., Olazabal, M. et al. Quality of urban climate adaptation plans over time. npj Urban Sustain 3, 13 (2023). https://doi.org/10.1038/s42949-023-00085-1

DOI: https://doi.org/10.1038/s42949-023-00085-1
