Abstract

We used deep-learning-based models to automatically obtain elastic moduli from resonant ultrasound spectroscopy (RUS) spectra, whose analysis conventionally requires user intervention with published analysis codes. By strategically converting theoretical RUS spectra into their modulated fingerprints and using them as a dataset to train neural network models, we obtained models that successfully predicted both elastic moduli from theoretical test spectra of an isotropic material and from a measured steel RUS spectrum with up to 9.6% missing resonances. We further trained modulated fingerprint-based models to resolve RUS spectra from yttrium aluminum garnet (YAG) ceramic samples with three elastic moduli. The resulting models were capable of retrieving all three elastic moduli from spectra with a maximum of 26% missing frequencies. In summary, our modulated fingerprint method is an efficient tool to transform raw spectroscopy data and train neural network models with high accuracy and resistance to spectral distortion.

Introduction

Elastic moduli are important thermodynamic parameters of extended solid-state phases and are closely correlated with structural features, such as lattice symmetry, grain boundaries, and material defects1,2,3,4,5,6,7. Such elastic information provides the foundation for studying the functionality of materials, including superconductors, bionic materials, and semiconductors7,8,9. Conventionally, elastic moduli are measured by static and dynamic methods. Measuring static moduli requires stretching bulk samples with a universal testing machine (UTM, widely used in the materials industry) or by dynamic mechanical analysis (DMA, specialized for analyzing polymers)10,11,12. In the dynamic method, samples are vibrated by waves, e.g., ultrasonic waves. One can then calculate the dynamic elastic moduli from the wave velocity, density, and Poisson’s ratio of the material13,14.

Resonant ultrasound spectroscopy (RUS) obtains the elastic moduli from natural resonance frequencies, yielding all elastic moduli from a single measurement15,16. Knowing all elastic moduli, one can calculate different thermodynamic parameters, e.g., heat capacity and Debye temperature17,18. RUS is an affordable and accurate method to obtain such thermodynamic properties compared with thermochemical methods (e.g., calorimetry) or spectroscopy methods (e.g., X-ray absorption spectroscopy, XAS). RUS has been commonly used to characterize new materials, including high entropy alloys and ceramic-based superconductors7,9,15,16. However, there are still two major drawbacks to implementing RUS. First, to derive moduli from a RUS spectrum, one needs initial guesses of the elastic moduli, which ideally are close to their true values. It is sometimes challenging to make the guessed moduli close to the actual ones, especially when the investigated material is novel and no previous knowledge is available. Poor initial guesses may cause the fit to diverge or to converge on an incorrect local minimum15. Second, experimental RUS spectra may contain missing resonance frequencies caused by vibrational nodes at the transducer contact point. This also makes the determination of elastic moduli prone to subjective guessing. In short, using RUS requires not only knowledge of the materials of interest but also some practical experience. Thus, it is necessary to develop new methods to analyze RUS spectra without user intervention.

Herein, we introduce neural network-based deep learning to analyze RUS spectra and automatically retrieve elastic moduli. As a supervised machine learning method, deep learning has advantages for modeling complicated non-linear relationships due to its distinctive “layers of nodes” structure. A deep learning algorithm can unveil the relationship between features and labels if sufficient data are fed for the training of the neural network model19,20,21. After proper training, the resulting models are capable of independently predicting a label (e.g., elastic modulus) from a not-yet-seen feature (e.g., experimental spectrum). The intent is to use this algorithm to minimize the human intervention that is conventionally required for deriving elastic moduli from RUS. Previously, similar deep learning methods were successfully applied to analyze spectra from various characterization techniques, including XAS, infrared (IR) and UV–Vis spectroscopy, X-ray diffraction (XRD), and fluorescence22,23,24,25,26,27,28. For instance, different models were developed to identify the structure of nanoparticles from X-ray absorption fine structure (XAFS) spectra26,29,30. Because of the characteristics of these spectroscopy methods, it is possible to train the models with raw or slightly preprocessed (e.g., subtracting nanoparticle spectra from the bulk material spectrum)26 computed or experimental spectra.

For applying deep learning methods to the analysis of RUS spectra, we identified several challenges. In previous deep learning studies of XRD and XAS, the training datasets consisted of hundreds of thousands of measured spectra (XRD, obtained from open-source databases) or computed spectra (XAS, generated by the Feff code)26,28,29. These spectra are essentially two-dimensional data (e.g., with useful information from both diffraction angle/energy and intensity). Therefore, only minimal preprocessing is needed. In contrast, computed RUS spectra are lists of resonance frequencies corresponding to a specified elastic tensor, sample dimensions, and sample density; amplitudes cannot be computed because of the extreme variability of transducer contact and displacements15,16,31. Therefore, it is not feasible to train models efficiently without strategically preprocessing such one-dimensional data. This issue becomes even more complicated when experimental errors in sample dimensions, shape, and morphology are considered. For instance, shifting and missing modes are common15. Missing modes are less predictable during experiments, in terms of whether they occur and, if so, which modes are missing15,32. In contrast, the shifting of modes can be attributed to an asymmetrical distortion of the crystallographic structure and thus to changes in elastic moduli33,34. Overall, a strategically designed data preprocessing method is important to convert raw data (both computed and experimental) into an easy-to-train format. To our knowledge, there has so far been only a single deep learning work on the analysis of RUS spectra35. That work studied the hidden order transition temperature (THO) of URu2Si2, and its model successfully classified the hidden order at THO (one-component or two-component).

Previous successful applications of deep learning to spectroscopy, and particularly to RUS, motivated us to pursue models that directly obtain elastic moduli from RUS spectra while tolerating imperfect experimental spectra that contain shifting or missing modes. Inspired by image classification and recognition studies, we modulated the raw computed RUS spectra into an image-like format as input for training a neural network (NN). We first trained a regression model to recognize two elastic moduli (C11 and C12) for a steel cylinder. After proper training, the resulting NN model automatically recognized the two elastic moduli from a test dataset containing computed RUS spectra and from an experimentally measured spectrum, both containing randomly missing resonant frequencies. Lastly, we successfully applied NN models to retrieve the elastic moduli of both isotropic and anisotropic cerium-doped yttrium aluminum garnet (Ce:YAG) ceramic samples, demonstrating the potential of NN models for broader inorganic material systems.

Method

Software libraries

The software libraries used in this study were TensorFlow2, NumPy, and Pandas36,37,38,39,40. We developed software to compute RUS spectra based on Lagrange minimization41. The Python packages NumPy and Pandas were used to preprocess the raw theoretical spectra (described below). TensorFlow2 was used to construct the NN and produce the models. The TensorFlow2 code was written in Python 3.8.

Theoretical RUS dataset

The theoretical resonant modes were obtained by locating the stationary points of the Lagrangian, which is given by Eq. (1)42:

where V is the sample’s volume, KE and PE are respectively the kinetic and potential energy density as shown in Eq. (2) and (3):

where ρ is the sample density, ω is the angular frequency, ui is the ith component of displacement vector, and Cijkl is the stiffness tensor. The displacement vector is expanded by the Eq. (4):

where aiα are the expansion coefficients and \({\varphi }_{\alpha }\left(x,y,z\right)\) are the basis functions, which are represented by Legendre polynomials in this work. Substituting the displacement vector into the Lagrangian and setting its first derivative with respect to the expansion coefficients to zero turns the search for stationary points into the eigenvalue problem shown in Eq. (5):

where a is the motion assembled from the basis set, E is the kinetic energy term and Γ is the elastic energy term.
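The display equations referenced as Eqs. (1) to (5) are not reproduced in this text. For orientation only, the block below writes out the standard Rayleigh-Ritz form of the RUS problem using the symbols defined above (summation over repeated indices is implied). It is a sketch of the textbook formulation and may differ in notation or normalization from the authors' typeset equations.

```latex
% Eq. (1): Lagrangian of the freely vibrating sample
L = \int_V \left( KE - PE \right) \, dV
% Eqs. (2) and (3): kinetic and potential energy densities
KE = \tfrac{1}{2} \rho \, \omega^{2} u_i u_i , \qquad
PE = \tfrac{1}{2} C_{ijkl} \,
     \frac{\partial u_i}{\partial x_j} \frac{\partial u_k}{\partial x_l}
% Eq. (4): expansion of the displacement in basis functions
u_i = \sum_{\alpha} a_{i\alpha} \, \varphi_{\alpha}(x, y, z)
% Eq. (5): generalized eigenvalue problem for the resonances
\omega^{2} E \, a = \Gamma \, a
```

In this form, each eigenvalue \(\omega^{2}\) gives one resonant frequency \(f = \omega / 2\pi\), so a computed spectrum is the sorted list of eigenfrequencies for one choice of elastic constants.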

Theoretical resonance modes were obtained by varying the elastic constants in the elastic energy term. The generated datasets were used to train and validate the NN model (Table S1). For steel, the C11 and C12 ranges were 170–370 GPa and 30–130 GPa in 1.0 GPa increments. For the three Ce:YAG datasets with 0.0025%, 0.01%, and 1% Ce by weight, the ranges of the three elastic moduli, C11, C12, and C44, were 270 to 370 GPa, 60 to 160 GPa, and 60 to 160 GPa in 5 GPa increments, respectively. Sample diameter and mass were fixed during generation of the computed spectra. The robustness of the inverse method was also tested against experimental uncertainties. To simulate possible imperfect experimental data, mode shifts were intentionally introduced into the dataset by adding random frequency shifts of −1.5% to +1.5%. By doing so, we obtained 7 spectra from every single computed spectrum generated by Eq. (5). The randomly missing modes were simulated by a dropout layer, which is discussed in the NN model architecture section. Each dataset (steel cylinder and Ce:YAG samples) contained about 140,000 computed spectra in total. The data are evenly distributed within these datasets: no range inside a dataset has a higher or lower data density than other ranges. We divided each dataset into three subsets, namely train, validation, and test datasets, with a ratio of 5:1:1.
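The augmentation and split described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names are hypothetical, and it assumes that every mode is shifted independently by a uniform random factor and that all 7 spectra per grid point are shifted copies, neither of which the text specifies.

```python
import numpy as np

def augment_with_shifts(freqs, n_copies=7, max_shift=0.015, rng=None):
    """Return n_copies randomly shifted versions of one computed spectrum.

    Assumption: each mode is shifted independently by a uniform factor in
    [1 - max_shift, 1 + max_shift]; the text only gives the +/-1.5% range.
    """
    rng = np.random.default_rng() if rng is None else rng
    freqs = np.asarray(freqs, dtype=float)
    factors = 1.0 + rng.uniform(-max_shift, max_shift,
                                size=(n_copies, freqs.size))
    # Re-sort so every shifted spectrum is still an ascending list of modes.
    return np.sort(freqs * factors, axis=1)

# Size of the steel grid: C11 = 170..370 GPa, C12 = 30..130 GPa, 1 GPa steps.
n_grid = len(range(170, 371)) * len(range(30, 131))
n_total = 7 * n_grid  # seven spectra per grid point

def split_5_1_1(n_samples, rng=None):
    """Shuffle sample indices and split them train:validation:test = 5:1:1."""
    rng = np.random.default_rng() if rng is None else rng
    idx = rng.permutation(n_samples)
    n_val = n_samples // 7
    n_test = n_samples // 7
    n_train = n_samples - n_val - n_test
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]
```

Under these assumptions the steel grid has 20,301 combinations of C11 and C12 and 142,107 spectra after augmentation, which is consistent with the quoted size of about 140,000 spectra.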

Measured spectra

All measured RUS spectra used in this study were taken with an ACE RUS-008 model instrument31. The steel spectrum was obtained from a cylinder standard sample made in accordance with ASME B 18.8.2, hardened, ground, and machined, with a core hardness of RC 47–58 and a minimum case hardness of RC 60. The surface finish is about 0.2 microns maximum, and the dimensional accuracy is about 25 microns for length and 2 microns for diameter. The three Ce:YAG experimental spectra were obtained from the ceramic samples prepared in our previous study7. Detailed sample parameters and experimental configurations are listed in Table S2. Specifically, the frequency range for collecting the RUS spectrum of the steel cylinder standard sample was taken from the calibration section of the operation manual of the ACE RUS-008 instrument, while the frequency range for collecting spectra from the three Ce:YAG samples was determined based on our previous study7.

Data preprocessing and input format

Three data preprocessing methods were proposed and studied: direct input, derivative input, and modulated fingerprint. In general, a computed RUS spectrum consists of a series of resonant frequencies, fn, corresponding to the specified elastic moduli, mass, and diameter of the sample. We defined the computed spectra as the features and the corresponding elastic moduli as the labels. The sample diameter and mass were fixed; both are easy to measure. In the direct input method, we did not heavily preprocess the computed RUS spectra. We took the first 64 resonant frequencies of every computed spectrum, f1 to f64, in ascending order. These sequences and the corresponding elastic moduli were used to train the NN models. Empirically, in RUS data processing, the first 25 peaks are sufficient to derive elastic moduli7.

In the derivative method, the frequency differences between two nearby modes, Δfn, were used as the features. The Δfn was obtained by Eq. (6).

where fn+1 and fn are two neighboring resonant frequencies. To obtain the Δfn sequence with n from 1 to 64, the first 65 resonant frequencies, f1 to f65, of a computed spectrum were used. This process is equivalent to calculating the first derivative of the resonant frequency with respect to the mode order.

The fn and Δfn values in every feature sequence of the direct-input and derivative-input datasets were rescaled to between 0 and 1 using the minimum and maximum frequencies, or frequency differences, in each dataset (Eqs. 7 and 8).

where fmin and fmax were the minimum and maximum frequencies, while Δfmin and Δfmax were the minimum and maximum difference of frequencies in the whole dataset.
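The derivative input and the rescaling can be sketched with NumPy as below. This is an illustration under stated assumptions rather than the authors' implementation: the function names and the toy dataset are hypothetical, the difference is taken as Δfn = fn+1 − fn, and the rescaling is assumed to be the usual linear min-max map.

```python
import numpy as np

def derivative_features(freqs, n_features=64):
    """Differences between neighbouring modes, delta_f[n] = f[n+1] - f[n]."""
    freqs = np.asarray(freqs, dtype=float)
    # n_features differences need the first n_features + 1 frequencies.
    return np.diff(freqs[: n_features + 1])

def minmax_rescale(values, v_min, v_max):
    """Map values linearly so that v_min -> 0 and v_max -> 1."""
    return (np.asarray(values, dtype=float) - v_min) / (v_max - v_min)

# Hypothetical toy dataset: three "spectra" of 65 ascending frequencies (Hz).
rng = np.random.default_rng(0)
dataset = np.sort(rng.uniform(0.2e6, 1.3e6, size=(3, 65)), axis=1)

delta = np.array([derivative_features(s) for s in dataset])
# The minimum and maximum are taken over the whole dataset, not per spectrum.
delta_scaled = minmax_rescale(delta, delta.min(), delta.max())
```

Using dataset-wide extrema keeps the scaling identical for every spectrum, so the same two numbers can be reused to rescale a measured spectrum at prediction time.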

The modulated fingerprint method takes a different approach to preprocessing computed RUS spectra (see Fig. S1). First, the frequency range of interest (F) of a computed RUS spectrum is evenly divided into a specified number of bins (B), from 64 (8 × 8) to 1024 (32 × 32). Every bin has the same width (l) in frequency (see Eq. 9).

We then count the number of modes (Nf) inside each bin and assign this count to bn, where n runs from 1 to B (see Eq. 10).

The sequence of bn from 1 to B corresponds to a square image with \(\sqrt{B} \times \sqrt{B}\) resolution, where bn represents the grayscale of every pixel inside the image.
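The binning and reshaping steps can be sketched with `numpy.histogram`. The function name is hypothetical, and the row-by-row filling of the image is an assumption, since the text does not state how the bins are ordered within the square.

```python
import numpy as np

def modulated_fingerprint(freqs, f_lo, f_hi, n_bins):
    """Count modes per equal-width frequency bin and reshape to a square image."""
    side = int(round(np.sqrt(n_bins)))
    if side * side != n_bins:
        raise ValueError("n_bins must be a perfect square, e.g. 64 or 256")
    # counts[n] is the number of modes in bin n (b_n in the text); modes
    # outside [f_lo, f_hi] are ignored by np.histogram.
    counts, edges = np.histogram(freqs, bins=n_bins, range=(f_lo, f_hi))
    # Assumption: bins fill the image row by row.
    return counts.reshape(side, side), edges[1] - edges[0]

# Hypothetical four-mode spectrum (Hz) binned over 0.2 MHz to 1.3 MHz.
modes = [0.21e6, 0.215e6, 0.5e6, 1.29e6]
image, bin_width = modulated_fingerprint(modes, 0.2e6, 1.3e6, 64)
```

In this toy case the two lowest modes fall into the same bin, so the first pixel has the value 2, while the pixel values still sum to the number of modes inside the frequency window.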

The elastic moduli, C11, C12, and C44, in the direct input, derivative input, and modulated fingerprint datasets were also rescaled to between 0 and 1 by Eq. (11).

NN model architecture

The NN used in this study consisted of four to five layers: an input layer, a dropout layer, one or two hidden dense layers, and an output layer. The features, e.g., sequences of rescaled fn, Δfn, or bn, were first introduced into the NN by the input layer. For the direct input and derivative input, the number of nodes at the input layer was 64, corresponding to the 64 fn or Δfn values in the sequences. For the modulated fingerprint, seven different configurations were used (64, 144, 256, 400, 576, 784, and 1024 nodes), corresponding to B, the number of bins. A dropout layer was placed after the input layer. This layer randomly sets exactly 10% of the input nodes to 0 at every training step, which enhances the resistance of the models against missing frequencies. We then built models with either one hidden dense layer (with 48, 64, 80, 96, or 112 nodes) or two hidden dense layers (64 nodes in the first and 16 nodes in the second). TensorFlow optimized the weight and bias parameters of every node in the dense layer(s) during training. The goal of this optimization was to automatically build a function that calculates elastic moduli from input spectra. Lastly, we assigned two output nodes for the steel dataset and three for the Ce:YAG datasets. The nodes in the output layer give the rescaled elastic moduli, e.g., C11, C12, and C44, for the input spectra. Two nodes suffice for steel because it is an isotropic material with only two elastic moduli (C11 and C12). YAG may exhibit anisotropic behavior when doped with Ce and therefore has at most three elastic moduli (C11, C12, and C44). After reversing the rescaling process, the elastic moduli were obtained in human-readable form.
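The data flow through such a network can be sketched in plain NumPy. This is not the authors' TensorFlow model: the weights are random and untrained, the helper names are hypothetical, the output layer is assumed to be linear, and the label rescaling is assumed to be a linear min-max map over the steel ranges.

```python
import numpy as np

def drop_exact_fraction(x, fraction=0.10, rng=None):
    """Zero exactly round(fraction * n) randomly chosen input nodes."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.array(x, dtype=float)  # work on a copy
    n_drop = int(round(fraction * x.size))
    x[rng.choice(x.size, size=n_drop, replace=False)] = 0.0
    return x

def forward(x, w1, b1, w2, b2):
    """Input -> dense hidden layer (ReLU) -> output layer (assumed linear)."""
    hidden = np.maximum(0.0, x @ w1 + b1)
    return hidden @ w2 + b2

def unscale(y, c_min, c_max):
    """Reverse the 0-1 rescaling of the moduli to obtain values in GPa."""
    c_min, c_max = np.asarray(c_min), np.asarray(c_max)
    return np.asarray(y) * (c_max - c_min) + c_min

rng = np.random.default_rng(0)
n_in, n_hidden, n_out = 256, 64, 2   # 16 x 16 fingerprint, steel (C11, C12)
w1, b1 = rng.normal(size=(n_in, n_hidden)) * 0.05, np.zeros(n_hidden)
w2, b2 = rng.normal(size=(n_hidden, n_out)) * 0.05, np.zeros(n_out)

x = rng.integers(0, 3, size=n_in).astype(float)  # stand-in fingerprint
x_train = drop_exact_fraction(x, 0.10, rng)      # applied during training only
y_scaled = forward(x_train, w1, b1, w2, b2)
moduli_gpa = unscale(y_scaled, c_min=[170.0, 30.0], c_max=[370.0, 130.0])
```

The sketch leaves the surviving inputs unscaled after the mask is applied, whereas framework dropout layers usually rescale them, so it should be read as an illustration of the masking idea only.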

Training notes

A conventional desktop computer (processor: Intel i5-9400F, graphical processing unit: Nvidia RTX 3070, memory: 16 GB) was used to train all models. We used the Adam optimizer to train the NN models and the mean square error (MSE) as the loss function. The Adam optimizer is an efficient gradient descent algorithm for training NN models43. During training, the NN determines the relation between features and labels (in this case, computed RUS spectra and elastic moduli) through an iterative process that optimizes the parameters of every node in the dense layers. The MSE loss function gives the mean square error between the true and the calculated elastic moduli. Therefore, the MSE is the metric for measuring the accuracy of the fit (see Eq. 12).

Adam optimizer guides the iterative process towards convergence by minimizing MSE. The function for calculating the elastic moduli from computed RUS spectra was obtained from the deep learning model. An example equation for obtaining C11 from model with one hidden layer is shown below.

where f2 is the activation function (ReLU in this case), b2 is the bias, W(k-1, k, i, j) is the weight matrix between two layers of nodes, and Gi are the input nodes44. Bias was not applied to the input layer. The numbers of nodes in the first layer (input layer) and second layer (hidden layer) are n1 and n2. We set the initial learning rate of the Adam optimizer to \(1 \times 10^{-3}\). During the iterative process, the learning rate was divided by 5 whenever the MSE did not decrease for 10 epochs, down to a minimum of \(1 \times 10^{-6}\). The batch size of the training dataset was set to 60. Therefore, the NN adjusted all the internal parameters once after seeing every 60 spectra during each training epoch. The training dataset was shuffled and repeated 4 times during every epoch. Although the model saw every piece of training data 4 times per epoch, the data were slightly different each time because of the dropout of 10% of the input nodes.
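The learning-rate rule described above can be sketched in a few lines of plain Python. The function name is hypothetical, and the sketch assumes that the monitored quantity is a single MSE value per epoch and that the patience counter restarts after every reduction; the text does not say whether the training or the validation MSE was monitored.

```python
def plateau_schedule(losses, lr0=1e-3, factor=5.0, patience=10, min_lr=1e-6):
    """Return the learning rate used in each epoch for a given loss history."""
    lr, best, wait, history = lr0, float("inf"), 0, []
    for loss in losses:
        history.append(lr)
        if loss < best:
            # The loss improved: remember it and reset the patience counter.
            best, wait = loss, 0
        else:
            wait += 1
            if wait >= patience:
                # No improvement for `patience` epochs: divide the rate by 5,
                # but never go below the floor.
                lr = max(lr / factor, min_lr)
                wait = 0
    return history

# Parameter updates per epoch: about 100,000 training spectra, repeated
# 4 times, in batches of 60 (ceiling division).
updates_per_epoch = -(-100_000 * 4 // 60)
```

With these numbers the network performs 6,667 parameter updates per epoch, and a completely stalled loss would bring the learning rate from \(1 \times 10^{-3}\) to the floor of \(1 \times 10^{-6}\) after five reductions.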

About 100,000 and 20,000 computed RUS spectra were used for training and validation of the model, respectively. As the sample parameters, e.g., density and diameter, were fixed during dataset generation, the resulting model corresponds to one specific sample. With this configuration, the training time was relatively short even on a conventional computer (about 6 h). In contrast, although training a universal model to predict elastic moduli for all different samples is theoretically plausible, the training dataset of such a model would consist of millions, if not billions, of computed RUS spectra. It is hard to train such a model without a significant amount of computational power. Therefore, we believe that training a model for a specific sample is more feasible than training a universal model.

Training and evaluation of different NN models

To study the effect of the preprocessing methods on the accuracy of the models, we trained the NN models on the computed steel cylinder dataset for 2048 epochs. To evaluate the accuracy of the models, we randomly chose 128 spectra from the test dataset, which was not used during training and validation of the NN models. We then used the models to obtain elastic moduli from these 128 spectra. We fed the models with both intact spectra and spectra with 6% of the modes missing. The mean absolute percentage error (MAPE) was adopted to compare the true elastic moduli with those retrieved by the models (see Eq. 14). C11 and C12 were compared individually.

We prefer using MAPE to evaluate accuracy of models because it directly shows the difference between ground truth and prediction of elastic moduli.
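For reference, the usual definition of MAPE can be written as a short NumPy function. The function name and the example values are hypothetical; the sketch assumes the standard definition, the mean of |true − predicted| / |true| expressed in percent.

```python
import numpy as np

def mape(true_values, predicted_values):
    """Mean absolute percentage error, in percent."""
    t = np.asarray(true_values, dtype=float)
    p = np.asarray(predicted_values, dtype=float)
    return 100.0 * np.mean(np.abs((t - p) / t))

# Hypothetical example: predictions that are 7 GPa too low for two moduli.
example = mape([50.0, 100.0], [43.0, 93.0])
```

In this example the same absolute error of 7 GPa counts as 14% for the 50 GPa modulus and 7% for the 100 GPa modulus, so the MAPE is 10.5%. This is why MAPE values for C12, which spans lower values, are more sensitive to a fixed offset than those for C11.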

To study the effect of fingerprint resolution on the accuracy of the models, we trained all three models for 512 epochs on the computed steel cylinder dataset. We then used the resulting models to retrieve elastic moduli from the test dataset. To evaluate the stability of the models against missing modes, we observed the MAPEs of C11 and C12 for spectra with up to 9.6% of the frequencies missing. We further fed the experimental steel cylinder spectrum into the best model to evaluate its accuracy on an experimental spectrum. As with the computed spectra in the test dataset, we randomly removed up to 12.5% of the frequencies to simulate missing modes.

We also trained three models for 2048 epochs to retrieve elastic moduli from experimental RUS spectra of three Ce:YAG ceramic samples. Since the three samples had different diameters and masses, we generated a separate computed RUS spectra dataset for each sample. During manual analysis, we noticed that these experimental spectra intrinsically contain missing and shifted modes7. We then directly compared the manually resolved elastic moduli with the elastic moduli retrieved by the NN models.

Results and discussion

Data preprocessing is crucial for combining deep learning and materials science. Proper data preprocessing methods optimize the training dataset and therefore improve the accuracy of the resulting NN models. Here, we report three different data preprocessing methods (direct input, derivative input, and modulated fingerprint) to optimize theoretical RUS spectra for training NN models to obtain elastic moduli. The modulated fingerprint was the only method in this study that enabled the NN models to recognize patterns between computed steel RUS spectra and the two elastic moduli (C11 and C12, see Fig. 1a). The resulting model correctly recognized C11 and C12 from both synthetic RUS data that were not used in training and experimental RUS data of a steel cylinder, and it remained robust when modes were randomly removed (up to 12.5% of the total modes in the data, see Fig. 1b). We further trained NN models based on the modulated fingerprint to analyze experimental RUS spectra from Ce-doped yttrium aluminum garnet (Ce:YAG) samples and retrieve three elastic moduli (C11, C12, C44), which was also successful despite missing modes.

Comparison of three data preprocessing methods for accuracy and robustness

The training, validation, and test steel RUS spectra datasets with specified C11 and C12 ranges were computed by Lagrangian minimization (see Table S1 for all parameters used to compute the dataset). The C11 and C12 ranges were determined from a combination of past studies on different steel samples and our empirical experience in RUS data analysis45,46,47. In this work, we generated about 20 thousand synthetic RUS spectra for the training of NN-based models. As one needs to pick the correct combination of C11 and C12 from such a large range of data to solve the experimental steel cylinder data, manual fitting of RUS data is generally slow or impossible. As shown in Fig. 2a, each computed RUS spectrum is a list of potential resonant frequencies corresponding to the specified parameters of the sample, such as the set of elastic moduli, spatial parameters, and density15. To increase model accuracy and decrease training time, it is necessary to preprocess this dataset before training the NN26,30,48,49. When designing the preprocessing methods (direct input, derivative input, and modulated fingerprint), two goals needed to be addressed. First, the method should amplify the distinction between features of computed RUS spectra with different elastic moduli. Second, missing frequencies should not significantly change the features of the same computed RUS spectrum.

Influence of data preprocessing methods on accuracy of NN models. (a) A typical raw computed steel cylinder RUS spectrum (C11: 174.0 GPa, C12: 125.0 GPa). (b–d) Three methods preprocessing theoretical RUS spectra dataset for neural network training: direct input (b), derivative input (c), and modulated fingerprint (d). (e) Structure of neural network utilized in this study.

As shown in Fig. 2b and Figs. S2a and b, the direct input method did not distinguish computed spectra with different elastic moduli. This may impair the ability of the NN to generalize the relation between features (spectra) and labels (elastic moduli). To overcome this issue, it is necessary to enhance the difference among the computed spectra26. Compared with direct input, the derivative input distinguishes spectra corresponding to different sets of elastic moduli, for example through the position and amplitude of peaks (see Fig. 2c and Figs. S2c and d). The derivative of a spectrum is widely used to enhance signal strength in signal processing50,51. However, neither direct input nor derivative input is robust against missing resonant frequencies, which are a common issue in experimental RUS data35,52. This is because missing resonant frequencies change the sequence of frequencies, which potentially impacts the accuracy of NN models when retrieving elastic moduli. Inspired by the exceptional image classification ability of NNs and related studies53,54, we designed a modulated fingerprint method to preprocess and optimize the computed RUS spectra dataset. This method converts the RUS data from a list of frequencies into an image-like format and breaks the link between the order of the modes and their frequencies. As shown in Fig. 2d and Figs. S2e and f, the modulated fingerprints of spectra corresponding to different elastic moduli are easily distinguishable. Such distinguishable features are beneficial for the training of NN models. We then generated a modulated fingerprint from a compromised spectrum with 6% missing modes and compared it with the fingerprint image of the original intact spectrum. The two fingerprint images were similar (see Fig. S3). Most of the grey pixels (px) did not change their locations. As a result, most of the features of the spectrum were maintained even after several modes were lost.

All three datasets from direct input, derivative input, and modulated fingerprint were used to train NN models. The NN consisted of an input layer, a dropout layer, a dense layer with 64 nodes, and an output layer with 2 nodes corresponding to the 2 elastic moduli (Fig. 2e, also see the Method section for detailed information). The dropout layer randomly set 10% of the input nodes to 0 during every training epoch to simulate the unintended loss of modes in RUS data. The dropout percentage was chosen in preliminary tests of dropout rates from 2.5% to 20%. Four datasets were used to train models: the dataset of the steel cylinder and three datasets for the three Ce:YAG ceramic samples, which are discussed later. Both intact spectra (Fig. S4) and defective spectra with missing frequencies (Fig. 3) were used to evaluate the performance of the resulting models. The accuracy of these models was evaluated by comparing the true and predicted elastic moduli using the mean absolute percentage error (MAPE). As shown in Fig. S4a and Fig. 3a, the direct input-based model did not resolve C11, regardless of whether it was fed intact test spectra or test spectra with 6% of the modes removed. The NN model assigned a C11 of about 270.0 GPa, the middle of the C11 range used for training, to all test spectra. The direct input-based model resolved C12 only for test spectra within a limited C12 range, from 30.0 GPa to 80.0 GPa. Overall, the MAPEs of the retrieved C11 and C12 from intact data (20.1% and 8.4%) and from defective data (20.2% and 7.8%) did not differ significantly. As the direct input does not strategically amplify the difference among spectra, the NN model did not generalize the relation between spectra and elastic moduli. When resolving C11 from intact spectra, the derivative input-based model exhibited higher accuracy, with a MAPE of 9.7% (Fig. S4b). The MAPE of C12, 14.2%, was higher than that of the direct input method, but there was a clear trend between true and retrieved values: the retrieved C12 values were always about 7.0 GPa lower than the true C12. However, when the model was fed spectra containing missing modes, it failed to recognize any C11 values, resulting in a large MAPE of 26.2% (Fig. 3b). The accuracy of C12 retrieval also decreased, with a large MAPE of 33.7%. Overall, neither model was capable of handling missing resonant frequencies in RUS spectra.
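As a plausibility check on the C11 error of the direct input-based model, the sketch below computes the MAPE of a hypothetical predictor that returns 270 GPa for every spectrum. It assumes, for illustration only, that the true C11 values are spread uniformly over the 170–370 GPa training range in 1 GPa steps.

```python
import numpy as np

def mape(true_values, predicted_values):
    """Mean absolute percentage error, in percent."""
    t = np.asarray(true_values, dtype=float)
    p = np.asarray(predicted_values, dtype=float)
    return 100.0 * np.mean(np.abs((t - p) / t))

# Assumed uniform grid of true C11 values (GPa) over the training range.
c11_true = np.arange(170.0, 371.0, 1.0)
# Hypothetical predictor that always returns the middle of the range.
c11_constant = np.full_like(c11_true, 270.0)
constant_mape = mape(c11_true, c11_constant)
```

Under this assumption the constant predictor has a MAPE of about 20%, close to the 20.1% and 20.2% reported for the direct input-based model, which supports the reading that this model carried essentially no information about C11.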

Comparison between true and predicted C11 and C12 values from neural network models using direct input (a), derivative input (b), and modulated fingerprint (c) preprocessing methods. We randomly dropped 6% of the peaks from every theoretical RUS spectrum in the test dataset to simulate missing peaks in experimental RUS spectra.

The modulated fingerprint-based model retrieved the C11 and C12 values from pristine spectra (Fig. S4c) with the highest accuracy among the three methods. The MAPEs were 8.6% and 2.3% when retrieving C11 and C12 from intact spectra. More importantly, the modulated fingerprint-based model maintained similar accuracy when feeding test spectra with missing modes. The resulting MAPEs of C11 and C12 retrieving were 10.6% and 3.5%, respectively (Fig. 3c), demonstrating the success and robustness of the modulated fingerprint method in retrieving elastic moduli from spectra.

Examining modulated fingerprint based NN structures for accuracy in predicting elastic moduli

To understand why the modulated fingerprint-based model tolerates missing frequencies, we further investigated how the NN models retrieve elastic moduli from spectra. In a nutshell, NN models automatically build functions to calculate elastic moduli from the computed RUS spectra during training. The different preprocessing methods determine the appearance of the input data to the NN and therefore affect the function that the NN builds. The models then use the built functions to retrieve elastic moduli from RUS spectra in the test dataset, which is not seen during training. The derivative preprocessing method fed sequences of Δfn versus elastic moduli to train the NN. The resulting model relies on the value and order of Δfn to calculate the corresponding elastic moduli. Therefore, the integrity of the computed spectra is crucial for generating Δfn sequences that correctly represent the elastic moduli. Missing even one mode can change all subsequent Δfn, resulting in an inaccurate calculation of elastic moduli. In contrast, in the modulated fingerprint method, a missing fn does not significantly change the resulting image-like data (Figs. S3a and b): most features, such as the locations of the grey and dark px, were unchanged after randomly removing 6% of the modes from the spectrum. Hence, the modulated fingerprint-based model exhibited similar accuracy for intact and compromised test spectra.
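The different sensitivity of the two representations to a single missing mode can be demonstrated on a toy spectrum. The spectrum below is hypothetical (65 random frequencies between 0.2 and 1.3 MHz), and the 256-bin fingerprint is computed with `numpy.histogram` as an assumed stand-in for the modulated fingerprint.

```python
import numpy as np

rng = np.random.default_rng(0)
f_lo, f_hi, n_bins = 0.2e6, 1.3e6, 256
freqs = np.sort(rng.uniform(f_lo, f_hi, size=65))  # hypothetical spectrum
missing = np.delete(freqs, 10)                     # the 11th mode is not detected

# Derivative input: compare the first 63 differences of both sequences.
d_full = np.diff(freqs)[:63]
d_miss = np.diff(missing)
n_changed_deriv = int(np.sum(~np.isclose(d_full, d_miss)))

# Modulated fingerprint: compare the mode counts bin by bin.
fp_full, _ = np.histogram(freqs, bins=n_bins, range=(f_lo, f_hi))
fp_miss, _ = np.histogram(missing, bins=n_bins, range=(f_lo, f_hi))
n_changed_fp = int(np.sum(fp_full != fp_miss))
```

In this toy case, every difference from the position of the lost mode onward moves to a new index, so most of the derivative features change, whereas exactly one of the 256 fingerprint bins changes its count.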

We then investigated the influence of the neural network structure on accuracy. We first decreased the number of nodes inside the hidden dense layer from 64 to 48. As shown in Fig. S5a, the resulting model predicted a C11 of 370 GPa, the maximum value in the steel cylinder dataset, for all spectra in the test dataset, independent of the input spectrum. In contrast, the model correctly recognized the values of C12. This result suggests that a hidden layer with only 48 nodes is not capable of recognizing the relationship between the modulated fingerprint and the elastic moduli. Among the sizes tested, a hidden layer containing 64 nodes was the smallest that solved the RUS spectra (Fig. S5b). Further increasing the number of nodes (80, 96, and 112 nodes, see Figs. S5c, d, and e) or adding one more hidden layer (see Fig. S5f) did not significantly lower the MAPE, especially for predicting C11 values. Another common reason for training different neural networks on the same dataset is to catch otherwise unseen phenomena by comparing the predictions of all the models and pinpointing the ensemble deviation in regions where they commonly perform poorly55,56. However, most neural network models, except the one with a hidden dense layer of 48 nodes, exhibited similar performance over the whole region of interest for both elastic moduli. Thus, we adopted the model with one hidden dense layer of 64 nodes because it was the simplest model that determined both elastic moduli correctly.

In NN model-based image recognition, resolution significantly affects the accuracy of the models57,58,59. We studied seven different resolutions (64, 144, 256, 400, 576, 784, and 1024 px). To analyze the differences among the resolutions, we defined information density (dens.) as the percentage of pixels containing modes relative to all pixels in a fingerprint image. We then averaged this percentage over every spectrum in the training dataset (see Table S3). The information density gradually decreases as the resolution of the image-like data increases. Figure 4a shows three examples with different resolutions (64, 256, and 1024 px) from a computed RUS spectrum of a steel cylinder (C11: 270.0 GPa, C12: 100.0 GPa, diameter: 4.76 mm, height: 3.45 mm, mass: 0.48 g) to highlight the influence of resolution on the input data. At 64 px, the gray and dark blocks occupy most of the image, resulting in a high information density (76.9%) in the training dataset. Because we split the frequency range of interest (0.2 MHz to 1.3 MHz) into only 64 bins, every bin represents a large frequency range (17.2 kHz per bin), and most bins contain one or more peaks. At 256 px, the information density is lower (34.8%), with each bin spanning 4.3 kHz. At 1024 px, the mode-containing px are sparse (information density: 9.9%) because of the increased number of bins and the small bin width (only 1.1 kHz).
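The bin widths quoted above follow directly from dividing the 0.2 to 1.3 MHz window by the number of pixels, and the information density is a simple occupancy percentage. The sketch below reproduces that arithmetic; the function names are hypothetical, and a fingerprint is again assumed to be a flat list of per-bin mode counts.

```python
def bin_width_khz(f_min_mhz, f_max_mhz, n_bins):
    """Width of one frequency bin in kHz for an equal-width split."""
    return (f_max_mhz - f_min_mhz) * 1000.0 / n_bins


def information_density(fingerprint):
    """Percentage of pixels that contain at least one mode."""
    occupied = sum(1 for px in fingerprint if px > 0)
    return 100.0 * occupied / len(fingerprint)


def mean_information_density(fingerprints):
    """Average the per-spectrum information density over a dataset."""
    densities = [information_density(fp) for fp in fingerprints]
    return sum(densities) / len(densities)


# Bin widths for three of the resolutions discussed in the text.
for n_bins in (64, 256, 1024):
    print(n_bins, "px:", round(bin_width_khz(0.2, 1.3, n_bins), 1), "kHz per bin")

# Toy fingerprints (hypothetical mode counts) to show the averaging step.
toy_dataset = [[0, 1, 2, 0], [1, 1, 0, 0]]
print(mean_information_density(toy_dataset), "% of pixels occupied on average")
```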

Effect of the resolution of the modulated fingerprint on the image-like data and the accuracy of the neural network models. (a) Example fingerprints (steel cylinder, C11: 270.0 GPa, C12: 100.0 GPa) with 64, 256, and 1024 pixels and the averaged data density of the modulated fingerprints. (b) Effect of resolution on the neural network training process. (c) and (d) Accuracy of models trained on fingerprints with different resolutions when retrieving C11 (c) and C12 (d) from 128 randomly chosen computed steel cylinder RUS spectra in the test dataset. The specified percentage of modes was removed to observe model stability.

We trained NN models on all seven datasets to examine the impact of the resolution of the image-like data on the accuracy of the resulting models, monitored by their validation mean squared error (MSE). As shown in Fig. 4b, the 64 px-based NN model struggled to generalize the relationship between features (fingerprints) and labels (elastic moduli), showing the highest validation MSE among all models. Increasing the resolution of the image-like data from 64 px to 144 and 256 px decreased the validation MSE during training. The model trained on the 256 px dataset exhibited the lowest MSE in this study. Increasing the resolution to 400 px resulted in slightly lower accuracy (a mild increase in MSE). All image-like datasets with higher resolutions, including 576, 784, and 1024 px, caused a significant increase in validation MSE, which indicates low accuracy in the validation step during training. Overall, based on the validation MSE, the models trained on the 256 or 400 px datasets performed better than the others during training and validation.

The accuracies of the seven models were further examined by solving randomly selected synthetic RUS spectra in the test dataset. Consistent with the validation MSE during training, the models trained on the 256 and 400 px datasets exhibited better accuracy. Figure 4c shows the MAPEs of C11 solved by all seven models when a specified percentage (from 0 to 9.6%) of modes was randomly removed. As expected, increasing the mode removal increases the MAPE for all models. The 64 and 144 px dataset-based models exhibit higher errors than the other models (15.0 to 18.2% for 64 px, and 12.0 to 16.4% for 144 px). The models trained on the 256 and 400 px datasets exhibit the lowest MAPEs (9.2 to 13.4% for 256 px, and 9.7 to 13.9% for 400 px). Further increasing the resolution of the image-like data does not lower the MAPE. Models trained on the 576, 784, and 1024 px datasets all show higher MAPEs than those trained on 256 and 400 px: about 10.0% when dropping 0% of modes, and about 15.0% when dropping 9.6% of modes. The C12 values solved by the seven models are much more accurate, with lower MAPEs than those of C11, while the trend of the MAPEs is generally the same (Fig. 4d). The 400 px-based model performs slightly better than the 256 px-based model: MAPEs of 2.1% versus 2.7% at 0% mode dropping, 2.6% versus 2.7% at 2.4% mode dropping, and 3.4% versus 3.7% at 4.8% mode dropping in the test dataset.
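For reference, the two metrics used in this comparison can be written out explicitly. The sketch below uses the standard definitions of mean squared error and mean absolute percentage error; the function names and the example moduli are hypothetical and are not results from this study.

```python
def mse(true_values, predicted_values):
    """Mean squared error between labels and predictions."""
    if not true_values or len(true_values) != len(predicted_values):
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(t - p) ** 2 for t, p in zip(true_values, predicted_values)]
    return sum(squared) / len(squared)


def mape(true_values, predicted_values):
    """Mean absolute percentage error, in percent."""
    if not true_values or len(true_values) != len(predicted_values):
        raise ValueError("inputs must be non-empty and of equal length")
    ratios = [abs((t - p) / t) for t, p in zip(true_values, predicted_values)]
    return 100.0 * sum(ratios) / len(ratios)


# Hypothetical C11 labels and predictions in GPa (illustrative only).
c11_true = [250.0, 300.0]
c11_pred = [275.0, 270.0]

print("MSE :", mse(c11_true, c11_pred))
print("MAPE:", mape(c11_true, c11_pred))
```

Because MAPE normalizes each error by the true value, it allows C11 and C12, which span different ranges, to be compared on the same percentage scale.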

We propose the following rationale for the impact of the resolution of the image-like data (or modulated fingerprint) on model performance. Because the image-like data obtained from modulation is a reduced form of the raw RUS data, its resolution controls the level of reduction: a lower resolution gives a higher level of reduction. Theoretically, image-like data with infinite resolution contains all information from the raw data, both necessary and unnecessary. Low resolutions, such as 64 and 144 px, yield over-reduction, so some information useful for NN learning may be lost during modulation. In contrast, high-resolution data (e.g., 576, 784, and 1024 px) carries excessive, possibly unnecessary information that can confuse the neural network. Data with 256 and 400 px strikes a balance between reducing the complexity of the raw data and keeping useful information, making patterns easier for the NN to recognize during model training. Considering that the model trained on the 256 px dataset exhibited the lowest validation MSE (see Fig. 4b), we chose this resolution to train all subsequent models.

Application of modulated fingerprint based NN model for RUS of steel and ceramics

Steel has been extensively studied by RUS61,62,63,64,65,66, which provides a good benchmark for testing our fingerprint-based model. We examined the model using a measured spectrum collected from a steel cylinder (see Table S2 for the sample dimensions and weight). Before feeding the experimental spectrum into the NN model, we manually resolved it and accurately obtained both C11 and C12, as 270.0 GPa and 80.0 GPa, respectively. No missing modes were observed while resolving this spectrum. As shown in Fig. 5a, we first converted the raw spectrum into the corresponding fingerprint. We randomly removed some resonant frequencies (up to 12.5%) during the fingerprint conversion to simulate missing frequencies. Then, we fed the model with the resulting fingerprints and used MAPE to compare the human-resolved and NN-resolved elastic moduli (C11 and C12, see Table 1). As shown in Fig. 5b, the absolute error of C11 did not increase significantly as the number of missing modes increased, varying between 7.7% and 10.6%. However, the standard deviation surged from 1.5% (3.1% of frequencies missing) to 5.9% (6.2% of frequencies missing), then gradually increased to 7.2% (12.5% of frequencies missing). The model exhibited higher accuracy and precision when resolving C12 (Fig. 5c). The error was only 3.9% when 9.4% of modes were removed. Only when 12.5% of frequencies were removed did the absolute error increase significantly, to 6.2%. Overall, the 256 px fingerprint-based model demonstrated relatively good robustness against missing modes. Moreover, the standard deviations for the retrieved C12 (0.3–1.6%) were smaller than those of C11. Although resolving C11 may lead to a larger error (about 8.6% or 23.3 GPa compared to manual derivation), the result is still in good agreement with the true C11 value. Even values of C11 within the error range provide a good starting point for iterations of manual fitting, which greatly accelerates the RUS analysis process by narrowing down the initialization of C11 and C12 from tens of thousands of potential combinations.
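The random mode removal and the error statistics described above can be sketched as follows. This is only an illustration under stated assumptions: the helper names are hypothetical, the 32-mode list is invented (the number of modes in the measured spectrum is not stated here), the predicted C11 values are placeholders rather than model outputs, and the sample standard deviation is one possible choice of spread estimate.

```python
import random
import statistics


def drop_modes(freqs, fraction, rng):
    """Return a copy of freqs with a random fraction of modes removed."""
    n_drop = round(fraction * len(freqs))
    dropped = set(rng.sample(range(len(freqs)), n_drop))
    return [f for i, f in enumerate(freqs) if i not in dropped]


def error_stats(true_value, predictions):
    """Mean and sample standard deviation of the absolute percentage error."""
    errors = [100.0 * abs(p - true_value) / true_value for p in predictions]
    return statistics.mean(errors), statistics.stdev(errors)


rng = random.Random(0)  # fixed seed so the sketch is repeatable
modes = [0.20 + 0.03 * i for i in range(32)]  # hypothetical 32-mode list, MHz

for fraction in (0.031, 0.062, 0.094, 0.125):
    kept = drop_modes(modes, fraction, rng)
    print(f"{fraction:.1%} requested: {len(modes) - len(kept)} modes dropped")

# Placeholder C11 predictions (GPa) compared with the manual value of 270.0 GPa.
mean_err, std_err = error_stats(270.0, [293.3, 290.1, 296.0])
print(f"mean error {mean_err:.1f}%, standard deviation {std_err:.1f}%")
```

With a 32-mode list, the four requested fractions remove 1, 2, 3, and 4 modes, respectively. The first placeholder prediction, 293.3 GPa, corresponds to the 23.3 GPa (about 8.6%) deviation mentioned in the text.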

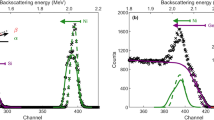

Using the neural network model to obtain C11 and C12 from an experimental spectrum of a steel cylinder. (a) Experimental steel cylinder RUS spectrum and its corresponding fingerprint. (b) and (c) Using the neural network model to obtain C11 (b) and C12 (c) from experimental data. We randomly dropped a specified number of peaks to observe model stability.

We further applied the modulated fingerprint method to train NN models and resolved three elastic moduli (C11, C12, and C44) from Ce:YAG samples with different amounts of Ce (0.025, 0.1, and 1 wt.%). As with the steel cylinder, we determined the ranges of C11, C12, and C44 based on previous studies7,67,68. In our previous study, we manually resolved the elastic moduli of these Ce:YAG samples and found that two samples (0.025 and 0.1 wt.% Ce) were isotropic7, with C44 being a linear combination of C11 and C12 (C44 = 0.5 × (C11 − C12)). Ce:YAG with 1 wt.% Ce, being anisotropic, has three independent elastic moduli. For the 0.025 wt.% Ce sample (Fig. 6a), the previously obtained C11, C12, and C44 were 320.7 GPa, 110.6 GPa, and 108.0 GPa, respectively. The NN model retrieved similar values of C11 (323.7 GPa) and C44 (113.0 GPa), whereas the retrieved C12 (93.6 GPa) was slightly different. During the manual mapping, we noticed that 26% of modes (10 of 38 modes) were missing when comparing the experimental (blue) and theoretical (gray) spectra. Despite such differences, the elastic moduli from the NN-based model were close to those fitted manually. For the 0.1 wt.% Ce sample (Fig. 6b), the NN-based model yielded 326.9 GPa for C11, 101.1 GPa for C12, and 105.6 GPa for C44, all in excellent agreement with the human-resolved results of 325.6 GPa for C11, 110.5 GPa for C12, and 110.7 GPa for C44. The experimental spectrum of the 0.1 wt.% Ce sample is the least distorted of the three, without any missing modes. Therefore, the NN model solved the elastic moduli with good accuracy.
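The comparison above can be made quantitative with a few lines of arithmetic. The sketch below takes the moduli quoted in the text for the two isotropic samples, computes the percent difference between the NN-resolved and manually resolved values, and evaluates the isotropic relation C44 = 0.5 × (C11 − C12) for the manual values. The dictionary layout and function names are hypothetical conveniences.

```python
# Moduli in GPa as quoted in the text for the two isotropic Ce:YAG samples.
manual = {
    "0.025 wt.% Ce": {"C11": 320.7, "C12": 110.6, "C44": 108.0},
    "0.1 wt.% Ce": {"C11": 325.6, "C12": 110.5, "C44": 110.7},
}
nn_resolved = {
    "0.025 wt.% Ce": {"C11": 323.7, "C12": 93.6, "C44": 113.0},
    "0.1 wt.% Ce": {"C11": 326.9, "C12": 101.1, "C44": 105.6},
}


def percent_difference(reference, value):
    """Absolute difference relative to the reference value, in percent."""
    return 100.0 * abs(value - reference) / reference


def isotropic_c44(c11, c12):
    """C44 expected for an isotropic solid: 0.5 * (C11 - C12)."""
    return 0.5 * (c11 - c12)


for sample, ref in manual.items():
    for name, ref_value in ref.items():
        diff = percent_difference(ref_value, nn_resolved[sample][name])
        print(f"{sample} {name}: NN differs from manual by {diff:.1f}%")
    expected = isotropic_c44(ref["C11"], ref["C12"])
    print(f"{sample}: isotropic C44 = {expected:.1f} GPa, manual C44 = {ref['C44']} GPa")
```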

The human-mapped and NN-resolved results differed more for the 1 wt.% Ce sample (Fig. 6c). The only similar elastic modulus was C11 (298.1 GPa vs. 306.1 GPa). C12 and C44 from NN and human were far from each other (100.4 GPa vs. 157.8 GPa for C12, and 89.3 GPa vs. 107.3 GPa for C44). In this sample, the frequency differences between the experimental and theoretical spectra were more severe than those in the previous samples. Nearly one third of the modes were missing (13 of 42 modes). Some of the remaining experimental resonant frequencies (highlighted by the red boxes) were shifted by about 3% from their theoretical counterparts, significantly more than the distortion we introduced into the training dataset (± 1.5%). As expected, the model produced elastic moduli different from those determined manually. Nevertheless, the NN-produced moduli are within a “good-guess” range if one does not know the true values and uses them as initial parameters for more rigorous conventional RUS fitting.
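Two quick checks summarize why this spectrum falls outside what the model was trained on: the fraction of missing modes and whether a mode shift stays within the ± 1.5% distortion used for the training data. The sketch below is illustrative; the function names and the example frequencies are hypothetical.

```python
def missing_fraction(n_missing, n_total):
    """Percentage of theoretical modes absent from the measured spectrum."""
    return 100.0 * n_missing / n_total


def within_training_shift(f_experimental, f_theoretical, max_shift_percent=1.5):
    """True if the relative mode shift stays inside the training distortion."""
    shift = 100.0 * abs(f_experimental - f_theoretical) / f_theoretical
    return shift <= max_shift_percent


# Mode counts quoted in the text for two of the Ce:YAG samples.
print(f"0.025 wt.% Ce: {missing_fraction(10, 38):.1f}% of modes missing")
print(f"1 wt.% Ce:     {missing_fraction(13, 42):.1f}% of modes missing")

# Hypothetical mode pairs in MHz: a 1% shift and a 3% shift.
print(within_training_shift(1.01, 1.00))
print(within_training_shift(1.03, 1.00))
```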

The above results showed that the modulated fingerprint-based models (1) tolerated some degree of resonant frequency difference between the computed training data and the experimental data, (2) successfully retrieved the elastic moduli of the 0.025 and 0.1 wt.% Ce samples as their final values, and (3) provided reasonable moduli values for the 1 wt.% Ce sample as a starting point for further human intervention, significantly lowering the time and effort needed to search for good initial moduli sets. Future improvements can be made by using datasets with a greater variety of theoretical spectra (e.g., a wider mode-shifting range). Overall, the fingerprint-based models handled both artificially missing peaks (random peak dropping in the steel cylinder RUS spectrum) and intrinsic peak differences associated with sample preparation and homogeneity (Ce:YAG RUS spectra). We believe that this modulated fingerprint method is useful for producing high-quality NN-based models that retrieve elastic moduli from RUS spectra of various materials.

Conclusion

In this study, we demonstrated that the modulated fingerprint-based NN method can (1) preprocess and optimize RUS spectra, (2) tolerate missing or shifted modes, and (3) successfully retrieve elastic moduli either as final values or as good initial ones. We investigated the influence of the fingerprint resolution on the accuracy of the models and found that the 256 px fingerprint-based model exhibited the highest accuracy and robustness in this study. The C11 and C12 MAPEs of the 256 px fingerprint-based models were only 10% and 4%, respectively, when using test spectra with up to 7.2% missing resonant frequencies. Using the optimized model to retrieve elastic moduli from an experimental steel RUS spectrum showed that the model maintained its accuracy. We further examined the modulated fingerprint-based NN method by retrieving elastic moduli from three Ce:YAG samples. The models tolerated up to 26% missing modes and retrieved both C11 and C12 from the two isotropic YAG samples containing 0.025 and 0.1 wt.% Ce. Applying the NN model to the anisotropic YAG sample containing 1 wt.% Ce retrieved C11 accurately, while providing only good guesses for C12 and C44, owing to the severely distorted spectrum with about 30% missing modes and mode frequency shifts of over 3%. Overall, this study demonstrated that proper data preprocessing combined with neural network models has the potential to realize automatic analysis of RUS spectra, which can provide direct solutions or significantly lower the time and effort needed to obtain elastic moduli. Lastly, converting one-dimensional RUS spectra into image-like modulated fingerprints makes the use of advanced networks, e.g., convolutional neural networks, possible in future studies, and may prove transformative for developing deep learning workflows for other characterization techniques (e.g., XRD, XAFS, and mass spectrometry).

Data availability

The programs and scripts for generating the training dataset, training and evaluating the NN models, and analyzing experimental RUS spectra are available at https://github.com/juejing-liu/RUS_TF_GuoGroup under the MIT license. Experimental RUS data and models used in this study will be available on request to J.L. and X.G.

References

Abeele, K. V. D. Multi-mode nonlinear resonance ultrasound spectroscopy for defect imaging: An analytical approach for the one-dimensional case. J. Acoust. Soc. Am. 122, 73–90. https://doi.org/10.1121/1.2735807 (2007).

Flynn, K. & Radovic, M. Evaluation of defects in materials using resonant ultrasound spectroscopy. J. Mater. Sci. 46, 2548–2556. https://doi.org/10.1007/s10853-010-5107-y (2011).

Nakamura, N., Nakashima, T., Ogi, H., Hirao, M. & Nishiyama, M. Fast recovery of elastic stiffness in Ag thin film studied by resonant-ultrasound spectroscopy. Jpn. J. Appl. Phys. 48, 07GA02. https://doi.org/10.1143/jjap.48.07ga02 (2009).

Nakamura, N., Nakashima, T., Oura, S., Ogi, H. & Hirao, M. Resonant-ultrasound spectroscopy for studying annealing effect on elastic constant of thin film. Ultrasonics 50, 150–154. https://doi.org/10.1016/j.ultras.2009.08.013 (2010).

Peng, C., Tran, P., Nguyen-Xuan, H. & Ferreira, A. J. M. Mechanical performance and fatigue life prediction of lattice structures: Parametric computational approach. Compos. Struct. 235, 111821. https://doi.org/10.1016/j.compstruct.2019.111821 (2020).

Mukhopadhyay, T. & Adhikari, S. Effective in-plane elastic moduli of quasi-random spatially irregular hexagonal lattices. Int. J. Eng. Sci. 119, 142–179. https://doi.org/10.1016/j.ijengsci.2017.06.004 (2017).

Goncharov, V. G. et al. Elastic and thermodynamic properties of cerium-doped yttrium aluminum garnets. J. Am. Ceram. Soc. 104, 3478–3496. https://doi.org/10.1111/jace.17679 (2021).

Feig, V. R., Tran, H., Lee, M. & Bao, Z. Mechanically tunable conductive interpenetrating network hydrogels that mimic the elastic moduli of biological tissue. Nat. Commun. 9, 2740. https://doi.org/10.1038/s41467-018-05222-4 (2018).

Ghosh, S. et al. Thermodynamic evidence for a two-component superconducting order parameter in Sr2RuO4. Nat. Phys. 17, 199–204. https://doi.org/10.1038/s41567-020-1032-4 (2021).

Riaño, L., Belec, L., Chailan, J.-F. & Joliff, Y. Effect of interphase region on the elastic behavior of unidirectional glass-fiber/epoxy composites. Compos. Struct. 198, 109–116. https://doi.org/10.1016/j.compstruct.2018.05.039 (2018).

Yang, G. et al. Anomalously high elastic modulus of a poly(ethylene oxide)-based composite electrolyte. Energy Storage Mater. 35, 431–442. https://doi.org/10.1016/j.ensm.2020.11.031 (2021).

Xu, X. & Gupta, N. Determining elastic modulus from dynamic mechanical analysis data: Reduction in experiments using adaptive surrogate modeling based transform. Polymer 157, 166–171. https://doi.org/10.1016/j.polymer.2018.10.036 (2018).

Song, I., Suh, M., Woo, Y.-K. & Hao, T. Determination of the elastic modulus set of foliated rocks from ultrasonic velocity measurements. Eng. Geol. 72, 293–308. https://doi.org/10.1016/j.enggeo.2003.10.003 (2004).

Lokajicek, T. et al. The determination of the elastic properties of an anisotropic polycrystalline graphite using neutron diffraction and ultrasonic measurements. Carbon 49, 1374–1384. https://doi.org/10.1016/j.carbon.2010.12.003 (2011).

Balakirev, F. F., Ennaceur, S. M., Migliori, R. J., Maiorov, B. & Migliori, A. Resonant ultrasound spectroscopy: The essential toolbox. Rev. Sci. Instrum. 90, 121401. https://doi.org/10.1063/1.5123165 (2019).

Migliori, A. & Maynard, J. D. Implementation of a modern resonant ultrasound spectroscopy system for the measurement of the elastic moduli of small solid specimens. Rev. Sci. Instrum. 76, 121301. https://doi.org/10.1063/1.2140494 (2005).

Sanati, M., Albers, R. C., Lookman, T. & Saxena, A. Elastic constants, phonon density of states, and thermal properties of UO2. Phys. Rev. B 84, 014116. https://doi.org/10.1103/PhysRevB.84.014116 (2011).

Liu, J. et al. Thermal conductivity and elastic constants of PEDOT: PSS with high electrical conductivity. Macromolecules 48, 585–591. https://doi.org/10.1021/ma502099t (2015).

Ryan, K., Lengyel, J. & Shatruk, M. Crystal structure prediction via deep learning. J. Am. Chem. Soc. 140, 10158–10168. https://doi.org/10.1021/jacs.8b03913 (2018).

Li, X.-T., Chen, L., Shang, C. & Liu, Z.-P. In situ surface structures of PdAg catalyst and their influence on acetylene semihydrogenation revealed by machine learning and experiment. J. Am. Chem. Soc. 143, 6281–6292. https://doi.org/10.1021/jacs.1c02471 (2021).

Cabán-Acevedo, M. et al. Ionization of high-density deep donor defect states explains the low photovoltage of iron pyrite single crystals. J. Am. Chem. Soc. 136, 17163–17179. https://doi.org/10.1021/ja509142w (2014).

Ju, L., Lyu, A., Hao, H., Shen, W. & Cui, H. Deep learning-assisted three-dimensional fluorescence difference spectroscopy for identification and semiquantification of illicit drugs in biofluids. Anal. Chem. 91, 9343–9347. https://doi.org/10.1021/acs.analchem.9b01315 (2019).

Trejo, O. et al. Elucidating the evolving atomic structure in atomic layer deposition reactions with in situ XANES and machine learning. Chem. Mater. 31, 8937–8947. https://doi.org/10.1021/acs.chemmater.9b03025 (2019).

Rankine, C. D., Madkhali, M. M. M. & Penfold, T. J. A deep neural network for the rapid prediction of X-ray absorption spectra. J. Phys. Chem. A 124, 4263–4270. https://doi.org/10.1021/acs.jpca.0c03723 (2020).

Trummer, D. et al. Deciphering the Phillips catalyst by orbital analysis and supervised machine learning from Cr pre-edge XANES of molecular libraries. J. Am. Chem. Soc. 143, 7326–7341. https://doi.org/10.1021/jacs.0c10791 (2021).

Timoshenko, J., Lu, D., Lin, Y. & Frenkel, A. I. Supervised machine-learning-based determination of three-dimensional structure of metallic nanoparticles. J. Phys. Chem. Lett. 8, 5091–5098. https://doi.org/10.1021/acs.jpclett.7b02364 (2017).

Pashkov, D. M. et al. Quantitative analysis of the UV–Vis spectra for gold nanoparticles powered by supervised machine learning. J. Phys. Chem. C 125, 8656–8666. https://doi.org/10.1021/acs.jpcc.0c10680 (2021).

Lee, J. W., Park, W. B., Lee, J. H., Singh, S. P. & Sohn, K. S. A deep-learning technique for phase identification in multiphase inorganic compounds using synthetic XRD powder patterns. Nat. Commun. 11, 86. https://doi.org/10.1038/s41467-019-13749-3 (2020).

Timoshenko, J. et al. Subnanometer substructures in nanoassemblies formed from clusters under a reactive atmosphere revealed using machine learning. J. Phys. Chem. C 122, 21686–21693. https://doi.org/10.1021/acs.jpcc.8b07952 (2018).

Timoshenko, J. & Frenkel, A. I. “Inverting” X-ray absorption spectra of catalysts by machine learning in search for activity descriptors. ACS Catal. 9, 10192–10211. https://doi.org/10.1021/acscatal.9b03599 (2019).

Leisure, R. G. & Willis, F. A. Resonant ultrasound spectroscopy. J. Phys.: Condens. Matter 9, 6001–6029. https://doi.org/10.1088/0953-8984/9/28/002 (1997).

Migliori, A. & Maynard, J. D. Implementation of a modern resonant ultrasound spectroscopy system for the measurement of the elastic moduli of small solid specimens. Rev. Sci. Instrum. https://doi.org/10.1063/1.2140494 (2005).

Jakobsen, V. B. et al. Stress-induced domain wall motion in a ferroelastic Mn3+ spin crossover complex. Angew. Chem. Int. Ed. 59, 13305–13312. https://doi.org/10.1002/anie.202003041 (2020).

Schiemer, J. et al. Magnetic field and in situ stress dependence of elastic behavior in EuTiO3 from resonant ultrasound spectroscopy. Phys. Rev. B 93, 054108. https://doi.org/10.1103/PhysRevB.93.054108 (2016).

Ghosh, S. et al. One-component order parameter in URu2Si2 uncovered by resonant ultrasound spectroscopy and machine learning. Sci. Adv. 6, 4074. https://doi.org/10.1126/sciadv.aaz4074 (2020).

Harris, C. R. et al. Array programming with NumPy. Nature 585, 357–362. https://doi.org/10.1038/s41586-020-2649-2 (2020).

Abadi, M. et al. in OSDI. 265–283.

McKinney, W. in Proceedings of the 9th Python in Science Conference (eds Stéfan van der Walt & Jarrod Millman) 55–61.

TensorFlow v. 2.8.0 (Zenodo, 2022).

pandas-dev/pandas: Pandas 1.2.2 v. 1.2.2 (Zenodo, 2021).

Migliori, A. et al. Resonant ultrasound spectroscopic techniques for measurement of the elastic moduli of solids. Physica B 183, 1–24. https://doi.org/10.1016/0921-4526(93)90048-B (1993).

Migliori, A. & Maynard, J. Implementation of a modern resonant ultrasound spectroscopy system for the measurement of the elastic moduli of small solid specimens. Rev. Sci. Instrum. 76, 121301 (2005).

Kingma, D. P. & Ba, J. (2015).

Desgranges, C. & Delhommelle, J. A new approach for the prediction of partition functions using machine learning techniques. J. Chem. Phys. 149, 044118. https://doi.org/10.1063/1.5037098 (2018).

Danilkin, S. A., Fuess, H., Wieder, T. & Hoser, A. Phonon dispersion and elastic constants in Fe–Cr–Mn–Ni austenitic steel. J. Mater. Sci. 36, 811–814. https://doi.org/10.1023/A:1004801823614 (2001).

Ledbetter, H. M. Monocrystal-polycrystal elastic constants of a stainless steel. Phys. Status Solidi 85, 89–96. https://doi.org/10.1002/pssa.2210850111 (1984).

Ledbetter, H. M., Frederick, N. V. & Austin, M. W. Elastic-constant variability in stainless-steel 304. J. Appl. Phys. 51, 305–309. https://doi.org/10.1063/1.327371 (1980).

Butler, K. T., Davies, D. W., Cartwright, H., Isayev, O. & Walsh, A. Machine learning for molecular and materials science. Nature 559, 547–555. https://doi.org/10.1038/s41586-018-0337-2 (2018).

Chen, C. et al. A critical review of machine learning of energy materials. Adv. Energy Mater. https://doi.org/10.1002/aenm.201903242 (2020).

Peeters, C., Antoni, J. & Helsen, J. Blind filters based on envelope spectrum sparsity indicators for bearing and gear vibration-based condition monitoring. Mech. Syst. Signal Proc. https://doi.org/10.1016/j.ymssp.2019.106556 (2020).

Jia, X., Zhao, M., Di, Y., Jin, C. & Lee, J. Investigation on the kurtosis filter and the derivation of convolutional sparse filter for impulsive signature enhancement. J. Sound Vib. 386, 433–448. https://doi.org/10.1016/j.jsv.2016.10.005 (2017).

Goodlet, B. R., Bales, B. & Pollock, T. M. A new elastic characterization method for anisotropic bilayer specimens via Bayesian resonant ultrasound spectroscopy. Ultrasonics 115, 106455. https://doi.org/10.1016/j.ultras.2021.106455 (2021).

De Fauw, J. et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nat. Med. 24, 1342–1350. https://doi.org/10.1038/s41591-018-0107-6 (2018).

McKinney, S. M. et al. International evaluation of an AI system for breast cancer screening. Nature 577, 89–94. https://doi.org/10.1038/s41586-019-1799-6 (2020).

Kulichenko, M. et al. The rise of neural networks for materials and chemical dynamics. J. Phys. Chem. Lett. 12, 6227–6243. https://doi.org/10.1021/acs.jpclett.1c01357 (2021).

Seung, H. S., Opper, M. & Sompolinsky, H. in Proceedings of the fifth annual workshop on Computational learning theory 287–294 (Association for Computing Machinery, Pittsburgh, Pennsylvania, USA, 1992).

Ziatdinov, M. et al. Deep learning of atomically resolved scanning transmission electron microscopy images: Chemical identification and tracking local transformations. ACS Nano 11, 12742–12752. https://doi.org/10.1021/acsnano.7b07504 (2017).

Wang, Y. D., Armstrong, R. T. & Mostaghimi, P. Boosting resolution and recovering texture of 2D and 3D micro-CT images with deep learning. Water Resour. Res. https://doi.org/10.1029/2019wr026052 (2020).

Huang, H.-W., Li, Q.-T. & Zhang, D.-M. Deep learning based image recognition for crack and leakage defects of metro shield tunnel. Tunn. Undergr. Space Technol. 77, 166–176. https://doi.org/10.1016/j.tust.2018.04.002 (2018).

Ullman, S., Assif, L., Fetaya, E. & Harari, D. Atoms of recognition in human and computer vision. Proc. Natl. Acad. Sci. U. S. A. 113, 2744–2749. https://doi.org/10.1073/pnas.1513198113 (2016).

Garlea, E. et al. Variation of elastic mechanical properties with texture, porosity, and defect characteristics in laser powder bed fusion 316L stainless steel. Mater. Sci. Eng. A 763, 138032. https://doi.org/10.1016/j.msea.2019.138032 (2019).

Koopman, M. et al. Determination of elastic constants in WC/Co metal matrix composites by resonant ultrasound spectroscopy (RUS) and impulse excitation. Adv. Eng. Mater. 4, 37–42 (2002).

Tane, M., Ichitsubo, T., Ogi, H. & Hirao, M. Elastic property of aged duplex stainless steel. Scripta Mater. 48, 229–234 (2003).

Sedmák, P. et al. Application of resonant ultrasound spectroscopy to determine elastic constants of plasma-sprayed coatings with high internal friction. Surf. Coat. Technol. 232, 747–757. https://doi.org/10.1016/j.surfcoat.2013.06.091 (2013).

Garlea, E. et al. Variation of elastic mechanical properties with texture, porosity, and defect characteristics in laser powder bed fusion 316L stainless steel. Mater. Sci. Eng. A. https://doi.org/10.1016/j.msea.2019.138032 (2019).

Lei, M. Cracks in steel rollers: Detection using resonant ultrasound spectroscopy. J. Acoust. Soc. Am. 99, 2593–2603. https://doi.org/10.1121/1.415271 (1996).

Djemia, P. et al. Elasticity and lattice vibrational properties of transparent polycrystalline yttrium–aluminium garnet: Experiments and pair potential calculations. J. Eur. Ceram. Soc. 27, 4719–4725. https://doi.org/10.1016/j.jeurceramsoc.2007.02.216 (2007).

Monteseguro, V., Rodríguez-Hernández, P. & Muñoz, A. Yttrium aluminium garnet under pressure: Structural, elastic, and vibrational properties from ab initio studies. J. Appl. Phys. 118, 245902. https://doi.org/10.1063/1.4938193 (2015).

Acknowledgements

We acknowledge support from the institutional funds of the Department of Chemistry and from the National Science Foundation (NSF), Division of Materials Research, under award No. 2144792. We also thank Albert Migliori, Los Alamos National Laboratory, Materials Synthesis and Integrated Devices Group, and the National High Magnetic Field Laboratory for helpful discussions on the practice and theory of resonant ultrasound spectroscopy. Lastly, Juejing Liu also thanks CD Projekt Red and Cyberpunk 2077. Cyberpunk 2077 encouraged him to obtain the graphics processing unit used in this study right before the cryptocurrency boom in 2021.

Author information

Contributions

X.G. conceived and designed the research. J.L. constructed and performed the machine learning analyses and wrote the manuscript. X.Z. and K.Z. helped create the training dataset for input. V.G.G. generated the experimental results for input. J.D. gave scientific advice. All authors (J.L., X.Z., K.Z., V.G.G., J.D., J.L., X.G.) participated in discussions, editing, and reviewing of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liu, J., Zhao, X., Zhao, K. et al. A modulated fingerprint assisted machine learning method for retrieving elastic moduli from resonant ultrasound spectroscopy. Sci Rep 13, 5919 (2023). https://doi.org/10.1038/s41598-023-33046-w

DOI: https://doi.org/10.1038/s41598-023-33046-w
