Abstract

A degree-corrected distribution-free model is proposed for weighted social networks with latent structural information. The model extends previous distribution-free models by accounting for variation in node degree, so that it can fit real-world weighted networks. It also extends the classical degree-corrected stochastic block model from un-weighted networks to weighted networks. We design an algorithm based on the idea of spectral clustering to fit the model. We develop a theoretical framework for the algorithm's consistent estimation under the model and analyze theoretical results for edge weights generated from different distributions. We also propose a general modularity that extends Newman’s modularity from un-weighted networks to weighted networks. Experiments with simulated and real-world networks show that our method significantly outperforms the uncorrected one and that the general modularity is effective.


Introduction

Network data analysis has become an important research topic in a range of scientific disciplines in recent years, particularly in the biological sciences, social sciences, physics and computer science. Many researchers analyze these networks by developing models, quantitative tools and theoretical frameworks to gain a deeper understanding of their underlying structural information. A problem of major interest in network science is “community detection”. The Stochastic Blockmodel (SBM)1 is a classic model of un-weighted networks for community detection. In SBM, all nodes in the same community share the same expected degree. This is unrealistic because node degrees vary in most real-world networks. To overcome this limitation, the popular Degree-Corrected Stochastic Blockmodel (DCSBM) proposed in2 extends SBM by modeling node heterogeneity, so that nodes in the same community can have different expected degrees. Many community detection methods and theoretical studies have been developed under SBM and DCSBM; see, for example,3,4,5,6,7,8 and references therein.

However, most works built under SBM and DCSBM require the elements of the adjacency matrix to follow a Bernoulli distribution, which restricts the network to be un-weighted. Modeling weighted networks and designing methods to quantitatively detect their latent structural information are therefore interesting topics. In recent years, several Weighted Stochastic Blockmodels (WSBM) have been developed for weighted networks; see, for example,9,10,11,12,13,14,15. Though attractive, these models require either that all elements of the connectivity matrix be nonnegative or that all elements of the adjacency matrix follow some specific distribution, as noted in16.

Furthermore, spectral clustering is widely used to study the structure of networks under SBM and DCSBM; see, for example,17,18,19,20,21,22. Another limitation of the above WSBMs is that their complex forms or strict constraints on the edge distribution make it challenging to develop spectral-clustering-based methods under them. To overcome these limitations, the Distribution-Free Model (DFM) proposed in16 places no requirement on the distribution of the adjacency matrix’s elements and allows methods that fit the model by spectral clustering. DFM can be seen as a direct extension of SBM. However, nodes within the same community under DFM share the same expected degree, which is unrealistic for empirical networks whose node degrees vary.

In this paper, we develop a model called Degree-Corrected Distribution-Free Model (DCDFM) as an extension of DFM by considering node heterogeneity. We extend the previous results in the following ways:

(a) DCDFM models weighted networks by allowing nodes within the same community to have different expected degrees. Though the WSBM developed in12 also considers node heterogeneity, it requires all elements of the connectivity matrix to be nonnegative, and fitting it by spectral clustering is challenging. DCDFM inherits the advantages of DFM. It places no constraint on the distribution of the adjacency matrix, allows the connectivity matrix to have negative entries, and can be fitted using the idea of spectral clustering. As an extension of DFM analogous to the relationship between SBM and DCSBM, DCDFM lets nodes within the same community have different expected degrees. This ensures that it can model real-world weighted networks in which node degrees vary.

(b) To fit DCDFM, we design an efficient spectral clustering algorithm called nDFA and build a theoretical framework for its consistent estimation under DCDFM. Because DCDFM is distribution-free, our theoretical results are general. In particular, when DCDFM reduces to DFM, our theoretical results are consistent with those under DFM, and when DCDFM degenerates to DCSBM, our results match classical results under DCSBM. Numerical results on both simulated and real-world networks show the advantage of introducing node heterogeneity to model weighted networks.

(c) To measure the performance of different methods on real-world weighted networks whose node labels are unknown, we propose a general modularity that extends the classical Newman’s modularity23. For weighted networks in which all edge weights are nonnegative, the general modularity is exactly Newman’s modularity. For weighted networks with some negative edge weights, the general modularity also accounts for the negative weights. Numerical results support the effectiveness of the general modularity. These include simulated networks generated under DCDFM for different distributions, and empirical un-weighted and weighted networks with known ground-truth node labels. Using two community-oriented topological measures introduced in24, we find that the modularity is effective. We also find that nDFA returns reasonable community partitions for real-world weighted networks with unknown ground-truth node labels.

Notations. We use the following general notation in this paper. For any positive integer m, let \([m]:= \{1,2,\ldots ,m\}\) and let \(I_{m}\) be the \(m\times m\) identity matrix. For a vector x, \(\Vert x\Vert _{q}\) denotes its \(l_{q}\)-norm. \(M'\) is the transpose of the matrix M. \(\Vert M\Vert \) denotes its spectral norm, \(\Vert M\Vert _{F}\) its Frobenius norm, and \(\Vert M\Vert _{0}\) its \(l_{0}\) norm, i.e., the number of nonzero entries of M. When we say “leading eigenvalues” or “leading eigenvectors”, we order eigenvalues by magnitude, and the corresponding eigenvectors have unit norm. Let \(\lambda _{k}(M)\) be the k-th leading eigenvalue of matrix M. M(i, : ) and M( : , j) denote the i-th row and the j-th column of matrix M, respectively. M(S, : ) denotes the rows of M indexed by the set S. \(\mathrm {rank}(M)\) denotes the rank of matrix M.

Degree-corrected distribution-free model

Let \({\mathscr {N}}\) be an undirected weighted network with n nodes. Let A be the \(n\times n\) symmetric adjacency matrix of \({\mathscr {N}}\), where A(i, j) denotes the weight between nodes i and j. Since we consider weighted networks, each A(i, j) is a finite real value, and it can even be negative for \(i,j\in [n]\). Throughout this article, we assume that all nodes in network \({\mathscr {N}}\) belong to K non-overlapping communities

\[{\mathscr {C}}_{1},{\mathscr {C}}_{2},\ldots ,{\mathscr {C}}_{K}. \tag{1}\]

Let \(\ell \) be an \(n\times 1\) vector such that \(\ell (i)=k\) if node i belongs to community k for \(i\in [n], k\in [K]\). For convenience, let \(Z\in \{0,1\}^{n\times K}\) be the membership matrix such that for \(i\in [n]\)

\[\mathrm {rank}(Z)=K,\quad Z(i,\ell (i))=1,\quad \Vert Z(i,:)\Vert _{1}=1. \tag{2}\]

\(\mathrm {rank}(Z)=K\) means that each community \({\mathscr {C}}_{k}\) has at least one node for \(k\in [K]\). \(Z(i,\ell (i))=1\) and \(\Vert Z(i,:)\Vert _{1}=1\) mean that \(Z(i,k)=1\) if \(k=\ell (i)\) and \(Z(i,k)=0\) if \(k\ne \ell (i)\), for \(i\in [n],k\in [K]\). In other words, each node belongs to exactly one of the K communities.
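The following is a minimal NumPy sketch of building Z from a label vector and checking the conditions of Eq. (2). It assumes 0-based labels, whereas the text indexes communities by [K]. The helper names `membership_matrix` and `satisfies_eq2` are hypothetical and for illustration only.

```python
import numpy as np


def membership_matrix(labels, K):
    """Build Z in {0,1}^{n x K} with Z[i, labels[i]] = 1 (labels are 0-based)."""
    labels = np.asarray(labels)
    Z = np.zeros((labels.shape[0], K), dtype=int)
    Z[np.arange(labels.shape[0]), labels] = 1
    return Z


def satisfies_eq2(Z):
    """Check Eq. (2): exactly one 1 per row and rank(Z) = K (no empty community)."""
    one_per_row = bool(np.all(Z.sum(axis=1) == 1))
    full_rank = int(np.linalg.matrix_rank(Z)) == Z.shape[1]
    return one_per_row and full_rank


print(satisfies_eq2(membership_matrix([0, 0, 1, 2, 1], K=3)))  # True
print(satisfies_eq2(membership_matrix([0, 0, 1, 1], K=3)))     # False: the third community is empty
```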

Let \(n_{k}=|\{i\in [n]:\ell (i)=k\}|\) be the size of community k for \(k\in [K]\), and set \(n_{\mathrm {max}}=\mathrm {max}_{k\in [K]}n_{k}, n_{\mathrm {min}}=\mathrm {min}_{k\in [K]}n_{k}\). Let the connectivity matrix \(P\in {\mathbb {R}}^{K\times K}\) satisfy

\[P=P',\quad \mathrm {rank}(P)=K,\quad |P_{\mathrm {max}}|=1, \tag{3}\]

where \(|P_{\mathrm {max}}|=\mathrm {max}_{k,l\in [K]}|P(k,l)|\). Eq. (3) means that P is a full-rank symmetric matrix. We set the maximum absolute value of P’s entries to 1 mainly for convenience. It should be emphasized that Eq. (3) allows P to have negative elements. Unless otherwise specified, K is assumed to be known in this paper.

Let \(\theta \) be an \(n\times 1\) vector such that \(\theta (i)\) is the node heterogeneity parameter (also known as degree heterogeneity) of node i, for \(i\in [n]\). Let \(\Theta \) be an \(n\times n\) diagonal matrix whose i-th diagonal element is \(\theta (i)\). For convenience, set \(\theta _{\mathrm {max}}=\mathrm {max}_{i\in [n]}\theta (i)\) and \(\theta _{\mathrm {min}}=\mathrm {min}_{i\in [n]}\theta (i)\). Since all entries of \(\theta \) are node heterogeneities, we have

\[\theta (i)>0\quad \mathrm {for~}i\in [n]. \tag{4}\]

For an arbitrary distribution \({\mathscr {F}}\) and all pairs (i, j) with \(i,j\in [n]\), our model assumes that the A(i, j) are independent random variables generated according to \({\mathscr {F}}\) with expectation

\[{\mathbb {E}}[A(i,j)]=\Omega (i,j),\quad \mathrm {where~}\Omega :=\Theta ZPZ'\Theta . \tag{5}\]

Eq. (5) means that we only assume that all elements of A are independent random variables with \({\mathbb {E}}[A]=\Theta ZPZ'\Theta \). We assume no prior knowledge of the specific distribution of A(i, j) for \(i,j\in [n]\), since \({\mathscr {F}}\) can be arbitrary. The justification for this arbitrariness is that a random number A(i, j) with expectation \(\Omega (i,j)\) can be generated from many distributions \({\mathscr {F}}\). So, instead of fixing \({\mathscr {F}}\) to be a particular distribution, DCDFM allows A to be generated from any distribution \({\mathscr {F}}\) as long as the block structure in Eq. (5) holds.
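To make Eq. (5) concrete, here is a minimal sketch that builds \(\Omega =\Theta ZPZ'\Theta \) and draws a symmetric A from one possible choice of \({\mathscr {F}}\), a Normal distribution. The Normal choice, the value \(\sigma _{A}=0.5\), the range of \(\theta \), and the specific P (which has a negative entry, as Eq. (3) permits) are illustrative assumptions. Any sampler with \({\mathbb {E}}[A(i,j)]=\Omega (i,j)\) could replace the hypothetical `sample_normal_dcdfm`.

```python
import numpy as np


def dcdfm_omega(theta, labels, P):
    """Omega = Theta Z P Z' Theta, i.e. Omega[i, j] = theta[i] * theta[j] * P[l(i), l(j)]."""
    Z = np.eye(P.shape[0])[labels]            # membership matrix from 0-based labels
    Theta = np.diag(theta)
    return Theta @ Z @ P @ Z.T @ Theta


def sample_normal_dcdfm(Omega, sigma_A, rng):
    """Symmetric A with A[i, j] ~ Normal(Omega[i, j], sigma_A^2), independent for i <= j."""
    noise = rng.normal(0.0, sigma_A, size=Omega.shape)
    sym_noise = np.triu(noise) + np.triu(noise, 1).T   # mirror the strict upper triangle
    return Omega + sym_noise


rng = np.random.default_rng(1)
n, K = 200, 2
labels = np.arange(n) % K
P = np.array([[1.0, -0.4], [-0.4, 0.8]])     # full rank, max |P(k, l)| = 1, negative entry allowed
theta = rng.uniform(0.2, 1.0, size=n)
Omega = dcdfm_omega(theta, labels, P)
A = sample_normal_dcdfm(Omega, sigma_A=0.5, rng=rng)
print(np.allclose(A, A.T), np.linalg.matrix_rank(Omega))
```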

Definition 1

Call model (1)–(5) the Degree-Corrected Distribution-Free Model (DCDFM), and denote it by \(DCDFM_{n}(K,P,Z,\Theta )\).

DCDFM also inherits the distribution-free property of DFM through Eq. (5). Remarks on understanding \(Z,P,\theta \), node degree, network connectivity and self-connected nodes are provided below.

Remark 1

For DCDFM, the node label \(\ell \) is defined by Z satisfying Eq. (2). In fact, when defining \(\ell \), we can relax the requirement on Z to \(Z\in {\mathbb {R}}^{n\times K}\) having K distinct rows. In that case, two distinct nodes i and j are placed in the same cluster whenever \(Z(i,:)=Z(j,:)\). All theoretical results in this paper remain the same under DCDFM constructed with such Z. We consider Z satisfying Eq. (2) mainly for convenience.

Remark 2

Under DCDFM, the connectivity matrix P is needed because, as in SBM and DCSBM, a block matrix is required to generate \({\mathbb {E}}[A]\). Specifically, suppose we did not include a connectivity matrix (i.e., P were the identity matrix). Since \({\mathbb {E}}[A(i,j)]=\Omega (i,j)=\theta (i)\theta (j)P(\ell (i),\ell (j))\), we would then have \({\mathbb {E}}[A(i,j)]=0\) whenever nodes i and j are in different communities, which limits the applicability of the model. Therefore, our model includes a connectivity matrix. Note that P is not a matrix of probabilities unless \({\mathscr {F}}\) is a Bernoulli, Poisson or Binomial distribution. For example, when \({\mathscr {F}}\) is a Normal distribution, P may have negative entries. Since \({\mathbb {E}}[A(i,j)]=\theta (i)\theta (j)P(\ell (i),\ell (j))\), A can then have negative entries too. Similarly, the multi-way blockmodels of25 draw the adjacency matrix from a Normal distribution whose mean block matrix can have negative entries.

Remark 3

The relationship between DCDFM and DFM is similar to that between DCSBM and SBM. When \(\Theta =\sqrt{\rho }I_{n}\) for \(\rho >0\), we have \({\mathbb {E}}[A(i,j)]=\rho P(\ell (i),\ell (j))\). In this case, the expectation of A(i, j) depends only on \(\rho P(\ell (i),\ell (j))\), i.e., on the communities of nodes i and j. If instead \(\Theta \ne \sqrt{\rho }I_{n}\), we have \({\mathbb {E}}[A(i,j)]=\theta (i)\theta (j)P(\ell (i),\ell (j))\). The expectation of A(i, j) then depends not only on community information but also on the individual characteristics of nodes i and j. Thus, by considering \(\theta \), DCDFM is more widely applicable than DFM because it accounts for node individuality.

Remark 4

When \({\mathscr {F}}\) is a Bernoulli, Poisson or Binomial distribution, all entries of A are nonnegative integers, taking values in \(\{0,1,\ldots ,m\}\) for some positive integer m in the Bernoulli and Binomial cases. Since many entries of A may then be 0, some subsets of nodes may have no connections to the rest of the network. In that case the network is disconnected, which tends to happen when the network is sparse. However, when \({\mathscr {F}}\) is a distribution of continuous random variables (for example, a Normal distribution), every entry of A is nonzero with probability one. All node pairs are then connected, and the network is naturally connected.

Remark 5

DCDFM is applicable to networks in which nodes may be self-connected. This is also reflected in the proof of Lemma 2, which places no restriction on the diagonal entries of A.

The next proposition guarantees the identifiability of DCDFM, which is similar to that of DCSBM.

Proposition 1

(Identifiability of DCDFM). DCDFM is identifiable for membership matrix: For eligible \((P,Z, \Theta )\) and \(({\tilde{P}}, {\tilde{Z}},{\tilde{\Theta }})\), if \(\Theta ZPZ'\Theta ={\tilde{\Theta }}{\tilde{Z}}{\tilde{P}}{\tilde{Z}}'{\tilde{\Theta }}\), then \(Z={\tilde{Z}}\).

Proof

Set \({\tilde{\ell }}(i)=k\) if \({\tilde{Z}}(i,k)=1\) for \(i\in [n],k\in [K]\). By Lemma 1, under DCDFM, \(U_{*}=ZB\) gives \(U_{*}(i,:)=Z(i,:)B=B(\ell (i),:)={\tilde{Z}}(i,:)B=B({\tilde{\ell }}(i),:)\), which gives \(Z={\tilde{Z}}\). \(\square \)

Compared with the DCSBM of2, DCDFM places no distribution constraint on the entries of A and allows P to have negative entries. DCSBM, in contrast, requires A to follow a Bernoulli or Poisson distribution and all entries of P to be nonnegative. These differences allow DCDFM to model both un-weighted and weighted networks, whereas DCSBM models only un-weighted networks. DCDFM is also a direct extension of DFM, obtained by considering node heterogeneity. Meanwhile, though the WSBM introduced in12 also considers node heterogeneity, it requires all entries of P to be nonnegative, which limits its generality.

Algorithm: nDFA

We now introduce our inference algorithm, which estimates \(\ell \) from A and K under DCDFM. Since \(\mathrm {rank}(P)=K, \mathrm {rank}(Z)=K\) and \(\mathrm {rank}(\Omega )=K\), \(\Omega \) has K nonzero eigenvalues by basic algebra. Let \(\Omega =U\Lambda U'\) be the compact eigenvalue decomposition of \(\Omega \) with \(U\in {\mathbb {R}}^{n\times K}, \Lambda \in {\mathbb {R}}^{K\times K}\), and \(U'U=I_{K}\). Let \(U_{*}\in {\mathbb {R}}^{n\times K}\) be the row-normalized version of U such that \(U_{*}(i,:)=\frac{U(i,:)}{\Vert U(i,:)\Vert _{F}}\) for \(i\in [n]\). Let \({\mathscr {I}}\) be a set of K node indices, one from each community. The following lemma provides the intuition behind the design of our algorithm.

Lemma 1

Under \(DCDFM_{n}(K,P,Z,\Theta )\), we have \(U_{*}=ZB\), where \(B=U_{*}({\mathscr {I}},:)\).

Proof

The facts \(\Omega =\Theta ZPZ'\Theta =U\Lambda U'\) and \(U'U=I_{K}\) give \(U=\Theta ZPZ'\Theta U\Lambda ^{-1}=\Theta Z{\tilde{B}}\) where we set \({\tilde{B}}=PZ'\Theta U\Lambda ^{-1}\) for convenience. For \(i\in [n]\), \(U=\Theta Z{\tilde{B}}\) gives \(U(i,:)=\theta (i)Z(i,:){\tilde{B}}=\theta (i){\tilde{B}}(\ell (i),:)\), then we have \(U_{*}(i,:)=\frac{U(i,:)}{\Vert U(i,:)\Vert _{F}}=\frac{{\tilde{B}}(\ell (i),:)}{\Vert {\tilde{B}}(\ell (i),:)\Vert _{F}}\). When \(\ell (i)=\ell ({\bar{i}})\), we have \(U_{*}(i,:)=U_{*}({\bar{i}},:)\), and this gives \(U_{*}=ZB\), where \(B=U_{*}({\mathscr {I}},:)\). \(\square \)
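Lemma 1 can be checked numerically in the oracle case. The sketch below builds \(\Omega \) from an illustrative full-rank P (with a negative entry) and positive \(\theta \), takes the K eigenvectors with the largest \(|\)eigenvalue\(|\), and row-normalizes them. It then confirms that \(U_{*}=ZB\) with \(B=U_{*}({\mathscr {I}},:)\). All parameter values are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n, K = 60, 3
labels = np.arange(n) % K
Z = np.eye(K)[labels]
P = np.array([[1.0, 0.3, -0.2],
              [0.3, 0.9, 0.1],
              [-0.2, 0.1, 0.7]])             # symmetric, full rank, |P_max| = 1
theta = rng.uniform(0.1, 1.0, size=n)      # positive, as required by Eq. (4)
Omega = np.diag(theta) @ Z @ P @ Z.T @ np.diag(theta)

vals, vecs = np.linalg.eigh(Omega)
U = vecs[:, np.argsort(np.abs(vals))[::-1][:K]]          # K leading eigenvectors by |eigenvalue|
U_star = U / np.linalg.norm(U, axis=1, keepdims=True)    # row normalization

I = [int(np.flatnonzero(labels == k)[0]) for k in range(K)]  # one node per community
B = U_star[I]
print(np.allclose(U_star, Z @ B))   # True: U_* = Z B
```

In this oracle setting the K distinct rows of B also turn out to be orthonormal. This is consistent with the distance \(\sqrt{2}\) between distinct rows used later in the proof of Theorem 1.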

Remark 6

The proof of Lemma 1 shows that Eq. (4) is needed for \(U_{*}(i,:)=\frac{U(i,:)}{\Vert U(i,:)\Vert _{F}}=\frac{{\tilde{B}}(\ell (i),:)}{\Vert {\tilde{B}}(\ell (i),:)\Vert _{F}}\) to hold, and hence for \(U_{*}(i,:)=U_{*}({\bar{i}},:)\) whenever \(\ell (i)=\ell ({\bar{i}})\). Suppose instead that some entries of \(\theta \) are negative while others are positive. Then \(U_{*}(i,:)=\frac{U(i,:)}{\Vert U(i,:)\Vert _{F}}=\frac{\theta (i)}{|\theta (i)|}\frac{{\tilde{B}}(\ell (i),:)}{\Vert {\tilde{B}}(\ell (i),:)\Vert _{F}}\). Consequently, \(U_{*}(i,:)=U_{*}({\bar{i}},:)\) may fail when \(\ell (i)=\ell ({\bar{i}})\), unless we assume that \(\frac{\theta (i)}{|\theta (i)|}=\frac{\theta ({\bar{i}})}{|\theta ({\bar{i}})|}\) whenever \(\ell (i)=\ell ({\bar{i}})\).
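The sign effect described in Remark 6 is easy to reproduce. In the sketch below, node 0's heterogeneity is made negative, which violates Eq. (4). Its row of \(U_{*}\) then becomes the negative of the row of node 3, which lies in the same community. The values of P and \(\theta \) are illustrative assumptions, and `row_normalized_leading_eigvecs` is a hypothetical helper.

```python
import numpy as np


def row_normalized_leading_eigvecs(M, K):
    """Row-normalize the eigenvectors of symmetric M for its K largest-magnitude eigenvalues."""
    vals, vecs = np.linalg.eigh(M)
    U = vecs[:, np.argsort(np.abs(vals))[::-1][:K]]
    return U / np.linalg.norm(U, axis=1, keepdims=True)


n, K = 30, 3
labels = np.arange(n) % K               # nodes 0 and 3 are both in community 0
Z = np.eye(K)[labels]
P = np.array([[1.0, 0.3, -0.2],
              [0.3, 0.9, 0.1],
              [-0.2, 0.1, 0.7]])
theta = np.linspace(0.2, 1.0, n)        # satisfies Eq. (4)
theta_bad = theta.copy()
theta_bad[0] = -theta_bad[0]            # violates Eq. (4) for node 0 only


def omega(th):
    return np.diag(th) @ Z @ P @ Z.T @ np.diag(th)


U_good = row_normalized_leading_eigvecs(omega(theta), K)
U_bad = row_normalized_leading_eigvecs(omega(theta_bad), K)
print(np.allclose(U_good[0], U_good[3]))   # True: same community, same row
print(np.allclose(U_bad[0], -U_bad[3]))    # True: the sign of theta(0) flips node 0's row
```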

Lemma 1 says that rows of \(U_{*}\) corresponding to nodes in the same community are equal. \(U_{*}\) has K distinct rows, and \(U_{*}(i,:)=U_{*}({\bar{i}},:)\) whenever nodes i and \({\bar{i}}\) belong to the same community for \(i,{\bar{i}}\in [n]\). Therefore, applying the k-means algorithm with K clusters to the rows of \(U_{*}\) exactly recovers node memberships up to a permutation of the labels.

The above analysis concerns the oracle case in which \(\Omega \) is given under DCDFM. We now turn to the real case, where we only observe the adjacency matrix A of the weighted network \({\mathscr {N}}\) and the known number of communities K. Since the label vector \(\ell \) is unknown in this case, our goal is to estimate it from (A, K). Let \({\tilde{A}}={\hat{U}}{\hat{\Lambda }}{\hat{U}}'\) be the leading K eigen-decomposition of A such that \({\hat{U}}\in {\mathbb {R}}^{n\times K}, {\hat{\Lambda }}\in {\mathbb {R}}^{K\times K}, {\hat{U}}'{\hat{U}}=I_{K}\), and \({\hat{\Lambda }}\) contains the leading K eigenvalues of A. Let \({\hat{U}}_{*}\in {\mathbb {R}}^{n\times K}\) be the row-normalized version of \({\hat{U}}\) such that \({\hat{U}}_{*}(i,:)=\frac{{\hat{U}}(i,:)}{\Vert {\hat{U}}(i,:)\Vert _{F}}\) for \(i\in [n]\). The details of our normalized Distribution-Free Algorithm (nDFA for short) are described in Algorithm 1. It can be implemented in only a few lines of Matlab code.
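Below is a minimal NumPy sketch of the three steps described above. First, compute the leading-K eigendecomposition of A by eigenvalue magnitude. Second, row-normalize \({\hat{U}}\). Third, run k-means on the rows of \({\hat{U}}_{*}\). It is not the authors' Matlab implementation, and several details are our own assumptions:

- The k-means step is a plain Lloyd iteration with a deterministic farthest-point initialization.
- The `1e-12` guard against all-zero rows is our addition.
- The default `n_iter=100` mirrors the l = 100 k-means iterations mentioned in the complexity analysis.
- `ndfa`, `kmeans` and `accuracy` are hypothetical names.
- The usage at the end draws A from a Normal DCDFM with illustrative parameters.

```python
import itertools
import numpy as np


def kmeans(X, K, n_iter=100):
    """Lloyd's k-means with deterministic farthest-point initialization (our choice)."""
    centers = [X[0]]
    for _ in range(1, K):
        d2 = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[np.argmax(d2)])             # farthest point from the chosen centers
    centers = np.array(centers)
    for _ in range(n_iter):
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        new = np.array([X[labels == k].mean(axis=0) if np.any(labels == k) else centers[k]
                        for k in range(K)])
        if np.allclose(new, centers):
            break
        centers = new
    return labels


def ndfa(A, K, n_iter=100):
    """Sketch of nDFA: leading-K eigenvectors of A, row normalization, then k-means."""
    vals, vecs = np.linalg.eigh(A)                      # A is symmetric
    U_hat = vecs[:, np.argsort(np.abs(vals))[::-1][:K]]  # K largest |eigenvalues|
    norms = np.linalg.norm(U_hat, axis=1, keepdims=True)
    U_hat_star = U_hat / np.maximum(norms, 1e-12)       # guard against all-zero rows
    return kmeans(U_hat_star, K, n_iter)


def accuracy(true, pred, K):
    """Fraction of correctly labeled nodes, maximized over label permutations."""
    return max(np.mean(np.asarray(perm)[pred] == true)
               for perm in itertools.permutations(range(K)))


rng = np.random.default_rng(2)
n, K = 300, 3
true = np.arange(n) % K
Z = np.eye(K)[true]
P = 0.2 + 0.8 * np.eye(K)                          # illustrative connectivity matrix
theta = rng.uniform(0.5, 1.0, size=n)
Omega = np.diag(theta) @ Z @ P @ Z.T @ np.diag(theta)
noise = rng.normal(0.0, 0.2, size=(n, n))
A = Omega + np.triu(noise) + np.triu(noise, 1).T   # Normal F, symmetric A
pred = ndfa(A, K)
print(accuracy(true, pred, K))
```

The full `np.linalg.eigh` call is the \(O(n^{3})\) step discussed in the complexity analysis.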

We name our algorithm nDFA to stress two features. The normalization step cancels the effect of node heterogeneity, and the distribution-free property allows the algorithm to handle weighted networks. Normalizing each row of \({\hat{U}}\) to remove the effect of node heterogeneity is also used in18,20. Alternatively, the entry-wise ratios between the leading eigenvector and the other leading eigenvectors of A, proposed in19, could also remove the effect of \(\Theta \). We leave the study of this approach under DCDFM for future work.

Here we analyze the computational complexity of nDFA. The most expensive step is the eigenvalue decomposition, which requires \(O(n^{3})\) time26. The row-normalization step costs \(O(n^{2})\), and the k-means step costs \(O(nlK^{2})\), where l is the number of k-means iterations; we set \(l=100\) for nDFA in this article. So the overall computational complexity of nDFA is \(O(n^{3})\). This is time-consuming when n is very large. Nevertheless, spectral clustering remains widely studied for community detection in un-weighted networks; see17,18,19,20,22,27,28,29,30,31,32,33,34. Using the random-projection and random-sampling ideas developed in35 to accelerate nDFA may also be possible. That is beyond the scope of this article, and we leave it for future work.

Consistency of nDFA

To establish a theoretical guarantee of nDFA’s consistency under DCDFM, we need the following assumption.

Assumption 1

Assume

-

\(\tau =\mathrm {max}_{i,j\in [n]}|A(i,j)-\Omega (i,j)|\) is finite.

-

\(\gamma =\mathrm {max}_{i,j\in [n]}\frac{\mathrm {Var}(A(i,j))}{\theta (i)\theta (j)}\) is finite.

Assumption 1 is mild, since it only requires the deviations \(|A(i,j)-\Omega (i,j)|\) and the scaled variances of A’s entries to be finite. We emphasize that Assumption 1 presumes no specific distribution of A(i, j) under DCDFM, so it does not violate the distribution-free property of the proposed model. To establish consistent estimation, we also need the following assumption.

Assumption 2

Assume \(\gamma \theta _{\mathrm {max}}\Vert \theta \Vert _{1}\ge \tau ^{2}\mathrm {log}(n)\).

On the one hand, when all elements of A are nonnegative, Assumption 2 imposes a lower bound on network sparsity. To understand this better, consider the case where \({\mathscr {F}}\) makes all entries of A nonnegative. Then \(\sum _{j=1}^{n}A(i,j)\) is the degree of node i and \(\sum _{j=1}^{n}\Omega (i,j)=\theta (i)\sum _{j=1}^{n}\theta (j)P(\ell (i),\ell (j))\) is its expected degree. In particular, when \(\Theta =\sqrt{\rho }I_{n}\) and \({\mathscr {F}}\) is a Bernoulli, Poisson or Binomial distribution, we have \(\Omega =\rho ZPZ'\). This gives \({\mathbb {E}}[A(i,j)]=\rho P(\ell (i),\ell (j))\), so \(\rho \) controls the sparsity of such weighted or un-weighted networks. Sparsity assumptions are common when proving estimation consistency for spectral clustering methods; see, for example, the consistency results for community detection in un-weighted networks in19,20.

In particular, consider \({\mathscr {F}}\) Bernoulli and \(\Theta =\sqrt{\rho }I_{n}\), so that DCDFM reduces to SBM. Then \(\gamma \) and \(\tau \) are bounded above by 1, and Assumption 2 becomes \(\rho n\ge \mathrm {log}(n)\). This is consistent with the sparsity requirement under SBM in20, so our sparsity requirement matches the classical result when DCDFM degenerates to SBM. On the other hand, when \({\mathscr {F}}\) allows A to have negative entries, \(\theta \) is only a heterogeneity parameter and is unrelated to network sparsity, because sparsity is not meaningful for an adjacency matrix with negative elements. In this case, Assumption 2 merely controls \(\theta \) for our theoretical framework.
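A small helper makes the SBM reduction above concrete. It is a sketch assuming the upper bounds \(\gamma =\tau =1\) for Bernoulli \({\mathscr {F}}\) with \(\Theta =\sqrt{\rho }I_{n}\), and `assumption2_holds` is a hypothetical name. It evaluates \(\gamma \theta _{\mathrm {max}}\Vert \theta \Vert _{1}\ge \tau ^{2}\mathrm {log}(n)\), which in this setting is the condition \(\rho n\ge \mathrm {log}(n)\).

```python
import math


def assumption2_holds(gamma, theta, tau):
    """Check Assumption 2: gamma * theta_max * ||theta||_1 >= tau^2 * log(n)."""
    n = len(theta)
    lhs = gamma * max(theta) * sum(abs(t) for t in theta)
    return lhs >= tau ** 2 * math.log(n)


# SBM special case: Bernoulli F and Theta = sqrt(rho) I_n, using gamma = tau = 1 as upper bounds.
n = 1000
for rho in (0.01, 0.005):
    theta = [math.sqrt(rho)] * n
    print(rho, assumption2_holds(1.0, theta, 1.0))
# rho = 0.01 gives rho * n = 10 >= log(1000), about 6.91 (holds); rho = 0.005 gives 5 (fails)
```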

Although \(\gamma \) is assumed to be finite, we keep it in Assumption 2 because it is directly related to the variance of A’s elements. In other words, \(\gamma \) is closely tied to the distribution \({\mathscr {F}}\), even though \({\mathscr {F}}\) can be arbitrary. After presenting our main results for nDFA, we give examples showing that, under different choices of \({\mathscr {F}}\), \(\tau \) and \(\gamma \) are finite or can be set to finite values that make Assumption 2 hold. We make Assumptions 1 and 2 mainly for convenience in the theoretical analysis of nDFA’s consistency. These two assumptions are unrelated to the identifiability of DCDFM. Based on them, the following lemma bounds \(\Vert A-\Omega \Vert \) using the matrix Bernstein inequality36.

Lemma 2

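Under \(DCDFM_{n}(K,P,Z,\Theta )\), when Assumptions 1 and 2 hold, with probability at least \(1-o(n^{-3})\), we have

\[\Vert A-\Omega \Vert =O\Big(\sqrt{\gamma \theta _{\mathrm {max}}\Vert \theta \Vert _{1}\mathrm {log}(n)}\Big).\]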

Proof

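Set \(H=A-\Omega \). Then \(H=\sum _{i=1}^{n}\sum _{j=1}^{n}H(i,j)e_{i}e'_{j}\), where \(e_{i}\) is the i-th standard basis vector of \({\mathbb {R}}^{n}\). Set \(H^{(i,j)}=H(i,j)e_{i}e'_{j}\). Since \({\mathbb {E}}[H(i,j)]={\mathbb {E}}[A(i,j)-\Omega (i,j)]=0\), we have \({\mathbb {E}}[H^{(i,j)}]=0\) and

\[\Vert H^{(i,j)}\Vert =|A(i,j)-\Omega (i,j)|\,\Vert e_{i}e'_{j}\Vert =|A(i,j)-\Omega (i,j)|\le \tau ,\]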

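where the last inequality holds by Assumption 1. Set \(R=\tau \) and \(\sigma ^{2}=\Vert \sum _{i=1}^{n}\sum _{j=1}^{n}{\mathbb {E}}[H^{(i,j)}(H^{(i,j)})']\Vert \). By Assumption 1, \({\mathbb {E}}[H^{2}(i,j)]={\mathbb {E}}[(A(i,j)-\Omega (i,j))^{2}]=\mathrm {Var}(A(i,j))\le \gamma \theta (i)\theta (j)\). Also, \(e_{i}e'_{j}e_{j}e'_{i}=e_{i}e'_{i}\). Together these give

\[\sigma ^{2}=\Big\Vert \sum _{i=1}^{n}\Big(\sum _{j=1}^{n}{\mathbb {E}}[H^{2}(i,j)]\Big)e_{i}e'_{i}\Big\Vert =\mathrm {max}_{i\in [n]}\sum _{j=1}^{n}{\mathbb {E}}[H^{2}(i,j)]\le \gamma \,\mathrm {max}_{i\in [n]}\theta (i)\sum _{j=1}^{n}\theta (j),\]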

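which gives \(\sigma ^{2}\le \gamma \theta _{\mathrm {max}}\Vert \theta \Vert _{1}\). Set \(t=\frac{\alpha +1+\sqrt{\alpha ^{2}+20\alpha +19}}{3}\sqrt{\gamma \theta _{\mathrm {max}}\Vert \theta \Vert _{1}{\mathrm {log}}(n)}\). Theorem 1.4 (the matrix Bernstein inequality) of36 gives

\[{\mathbb {P}}(\Vert H\Vert \ge t)\le n\,\mathrm {exp}\Big(\frac{-t^{2}/2}{\sigma ^{2}+Rt/3}\Big)\le n\,\mathrm {exp}\big(-(\alpha +1)\mathrm {log}(n)\big)=n^{-\alpha },\]

where the last inequality comes from Assumption 2. Indeed, write \(c=\frac{\alpha +1+\sqrt{\alpha ^{2}+20\alpha +19}}{3}\). Assumption 2 gives \(Rt/3=\frac{c}{3}\tau \sqrt{\gamma \theta _{\mathrm {max}}\Vert \theta \Vert _{1}\mathrm {log}(n)}\le \frac{c}{3}\gamma \theta _{\mathrm {max}}\Vert \theta \Vert _{1}\). Together with \(\sigma ^{2}\le \gamma \theta _{\mathrm {max}}\Vert \theta \Vert _{1}\), the exponent is then at most \(-\frac{3c^{2}}{2(3+c)}\mathrm {log}(n)=-(\alpha +1)\mathrm {log}(n)\), because c is the positive root of \(3c^{2}=2(\alpha +1)(3+c)\). Setting \(\alpha =3\), the claim follows. \(\square \)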
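As a numerical illustration of Lemma 2, the sketch below compares the realized spectral norm \(\Vert A-\Omega \Vert \) with the threshold t from the proof (with \(\alpha =3\)). It uses Normal \({\mathscr {F}}\), where \(\gamma =\sigma ^{2}_{A}/\theta ^{2}_{\mathrm {min}}\). Normal noise is unbounded, so \(\tau \) is finite only for the realized sample; the sketch therefore only compares the norm against the scale in Lemma 2. The values of n, \(\sigma _{A}\) and the range of \(\theta \) are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(3)
n, sigma_A, alpha = 400, 1.0, 3
theta = rng.uniform(0.5, 1.0, size=n)
# For Normal F, H = A - Omega is symmetric Gaussian noise, so Omega itself is not needed here.
noise = rng.normal(0.0, sigma_A, size=(n, n))
H = np.triu(noise) + np.triu(noise, 1).T
gamma = sigma_A ** 2 / theta.min() ** 2         # max_{i,j} Var(A(i,j)) / (theta(i) theta(j))
scale = np.sqrt(gamma * theta.max() * np.abs(theta).sum() * np.log(n))
c = (alpha + 1 + np.sqrt(alpha ** 2 + 20 * alpha + 19)) / 3
spectral = np.linalg.norm(H, 2)                 # ||A - Omega||, the largest singular value
print(spectral / scale, spectral <= c * scale)
```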

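The bound in Lemma 2 is directly related to our main result for nDFA. To measure nDFA’s performance theoretically, we use the clustering error of22 for its theoretical convenience. Set \(\hat{{\mathscr {C}}}_{k}=\{i: {\hat{\ell }}(i)=k\}\) for \(k\in [K]\), and let the superscript c denote the complement of a subset of [n]. Define the clustering error as

\[{\hat{f}}=\mathrm {min}_{\pi \in S_{K}}\mathrm {max}_{k\in [K]}\frac{|{\mathscr {C}}_{k}\cap \hat{{\mathscr {C}}}^{c}_{\pi (k)}|+|{\mathscr {C}}^{c}_{k}\cap \hat{{\mathscr {C}}}_{\pi (k)}|}{n_{k}},\]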

where \(S_{K}\) is the set of all permutations of \(\{1,2,\ldots ,K\}\). The clustering errors of18,19,20 could also be used to measure nDFA’s performance; we use \({\hat{f}}\) in this paper mainly for its convenience in proofs. The following theorem is the main result for nDFA. It shows that nDFA enjoys asymptotically consistent estimation under the proposed model.
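The following is a direct sketch of the clustering error \({\hat{f}}=\mathrm {min}_{\pi \in S_{K}}\mathrm {max}_{k\in [K]}(|{\mathscr {C}}_{k}\cap \hat{{\mathscr {C}}}^{c}_{\pi (k)}|+|{\mathscr {C}}^{c}_{k}\cap \hat{{\mathscr {C}}}_{\pi (k)}|)/n_{k}\). It enumerates all K! permutations, so it is only practical for small K. Labels are 0-based, and `clustering_error` is a hypothetical name.

```python
import itertools
import numpy as np


def clustering_error(true, pred, K):
    """Clustering error f_hat: min over label permutations pi of the worst per-community
    error (|C_k and not C_hat_pi(k)| + |C_hat_pi(k) and not C_k|) / n_k."""
    true, pred = np.asarray(true), np.asarray(pred)
    best = np.inf
    for pi in itertools.permutations(range(K)):
        worst = 0.0
        for k in range(K):
            in_true = true == k            # membership in C_k
            in_pred = pred == pi[k]        # membership in C_hat_{pi(k)}
            missed = np.sum(in_true & ~in_pred)
            extra = np.sum(~in_true & in_pred)
            worst = max(worst, (missed + extra) / np.sum(in_true))
        best = min(best, worst)
    return float(best)


print(clustering_error([0, 0, 1, 1, 2, 2], [1, 1, 0, 0, 2, 2], K=3))  # 0.0 (labels permuted)
print(clustering_error([0, 0, 1, 1, 2, 2], [0, 0, 1, 1, 2, 1], K=3))  # 0.5 (node 5 misassigned)
```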

Theorem 1

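Under \(DCDFM_{n}(K,P,Z,\Theta )\), let \({\hat{\ell }}\) be obtained from nDFA. When Assumptions 1 and 2 hold, with probability at least \(1-o(n^{-3})\), we have

\[{\hat{f}}=O\Big(\frac{\gamma \theta ^{3}_{\mathrm {max}}K^{2}n_{\mathrm {max}}\Vert \theta \Vert _{1}\mathrm {log}(n)}{\lambda ^{2}_{K}(P)\theta ^{6}_{\mathrm {min}}n^{3}_{\mathrm {min}}}\Big).\]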

Proof

The following lemma provides a general lower bound on \(|\lambda _{K}(\Omega )|\) under DCDFM, which depends directly on the model parameters.

Lemma 3

Under \(DCDFM_{n}(K,P,Z,\Theta )\), we have \(|\lambda _{K}(\Omega )|\ge \theta ^{2}_{\mathrm {min}}|\lambda _{K}(P)|n_{\mathrm {min}}\).

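Proof

\[|\lambda _{K}(\Omega )|=|\lambda _{K}(\Theta ZPZ'\Theta )|=|\lambda _{K}(PZ'\Theta ^{2}Z)|\ge |\lambda _{K}(P)|\lambda _{K}(Z'\Theta ^{2}Z)\ge \theta ^{2}_{\mathrm {min}}|\lambda _{K}(P)|\lambda _{K}(Z'Z)=\theta ^{2}_{\mathrm {min}}|\lambda _{K}(P)|n_{\mathrm {min}},\]

where the second equality holds because the nonzero eigenvalues of \(\Theta ZPZ'\Theta \) coincide with those of \(PZ'\Theta ^{2}Z\), and we have used the fact that \(\lambda _{K}(Z'Z)=n_{\mathrm {min}}\) in the last equality. \(\square \)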
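Lemma 3 can be sanity-checked on a single illustrative instance. The sketch uses unequal community sizes (so \(n_{\mathrm {min}}=20\)), a full-rank P with a negative entry, and random positive \(\theta \), then compares \(|\lambda _{K}(\Omega )|\) with \(\theta ^{2}_{\mathrm {min}}|\lambda _{K}(P)|n_{\mathrm {min}}\). All values are assumptions, and `kth_leading` is a hypothetical helper.

```python
import numpy as np

rng = np.random.default_rng(4)
n, K = 90, 3
labels = np.repeat(np.arange(K), [20, 30, 40])   # community sizes 20, 30, 40, so n_min = 20
Z = np.eye(K)[labels]
P = np.array([[1.0, -0.5, 0.2],
              [-0.5, 0.8, 0.3],
              [0.2, 0.3, 0.6]])                   # symmetric, full rank, |P_max| = 1
theta = rng.uniform(0.3, 1.0, size=n)
Omega = np.diag(theta) @ Z @ P @ Z.T @ np.diag(theta)


def kth_leading(M, K):
    """|lambda_K(M)|: the K-th largest eigenvalue magnitude of a symmetric matrix."""
    return np.sort(np.abs(np.linalg.eigvalsh(M)))[::-1][K - 1]


lhs = kth_leading(Omega, K)
rhs = theta.min() ** 2 * kth_leading(P, K) * np.bincount(labels).min()
print(lhs >= rhs)   # True, as Lemma 3 predicts
```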

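By Lemma 5.1 of20, there is a \(K\times K\) orthogonal matrix \({\mathscr {O}}\) such that

\[\Vert {\hat{U}}{\mathscr {O}}-U\Vert _{F}\le \frac{2\sqrt{2K}\Vert A-\Omega \Vert }{|\lambda _{K}(\Omega )|}\le \frac{2\sqrt{2K}\Vert A-\Omega \Vert }{\theta ^{2}_{\mathrm {min}}|\lambda _{K}(P)|n_{\mathrm {min}}},\]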

where we have applied Lemma 3 in the last inequality.

For \(i\in [n]\), basic algebra gives \(\Vert {\hat{U}}_{*}(i,:){\mathscr {O}}-U_{*}(i,:)\Vert _{F}\le \frac{2\Vert {\hat{U}}(i,:){\mathscr {O}}-U(i,:)\Vert _{F}}{\Vert U(i,:)\Vert _{F}}\). Setting \(m_{U}=\mathrm {min}_{i\in [n]}\Vert U(i,:)\Vert _{F}\), we have

\[\Vert U_{*}-{\hat{U}}_{*}{\mathscr {O}}\Vert _{F}\le \frac{2\Vert U-{\hat{U}}{\mathscr {O}}\Vert _{F}}{m_{U}},\]

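According to the proof of Lemma 3.5 of37, which does not depend on the distribution of A, we have \(\frac{1}{m_{U}}\le \frac{\theta _{\mathrm {max}}\sqrt{n_{\mathrm {max}}}}{\theta _{\mathrm {min}}}\). Combined with the previous bounds, this gives

\[\Vert U_{*}-{\hat{U}}_{*}{\mathscr {O}}\Vert _{F}\le \frac{4\sqrt{2K}\theta _{\mathrm {max}}\sqrt{n_{\mathrm {max}}}\Vert A-\Omega \Vert }{\theta ^{3}_{\mathrm {min}}|\lambda _{K}(P)|n_{\mathrm {min}}}.\]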

By Lemma 2 in22, for each \(1\le k\ne l\le K\), if one has

then \({\hat{f}}=O(\varsigma ^{2})\). Since \(\Vert B(k,:)-B(l,:)\Vert _{F}=\sqrt{2}\) for any \(k\ne l\) by Lemma 3.1 of37, setting \(\varsigma =\frac{2}{\sqrt{2}}\sqrt{\frac{K}{n_{\mathrm {min}}}}\Vert U_{*}-{\hat{U}}_{*}{\mathscr {O}}\Vert _{F}\) makes Eq. (6) hold for all \(1\le k\ne l\le K\). We then have \({\hat{f}}=O(\varsigma ^{2})=O(\frac{K\Vert U_{*}-{\hat{U}}_{*}{\mathscr {O}}\Vert ^{2}_{F}}{n_{\mathrm {min}}})\). Thus, \({\hat{f}}=O(\frac{\theta ^{2}_{\mathrm {max}}K^{2}n_{\mathrm {max}}\Vert A-\Omega \Vert ^{2}}{\lambda ^{2}_{K}(P)\theta ^{6}_{\mathrm {min}}n^{3}_{\mathrm {min}}})\). Finally, the theorem follows from Lemma 2. \(\square \)
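The proof above uses a “basic algebra” step for row normalization. It follows from the triangle inequality for any nonzero row vectors x and y:

```latex
\Big\Vert \frac{x}{\Vert x\Vert }-\frac{y}{\Vert y\Vert }\Big\Vert
\le \Big\Vert \frac{x}{\Vert x\Vert }-\frac{x}{\Vert y\Vert }\Big\Vert +\frac{\Vert x-y\Vert }{\Vert y\Vert }
=\frac{\big|\Vert y\Vert -\Vert x\Vert \big|}{\Vert y\Vert }+\frac{\Vert x-y\Vert }{\Vert y\Vert }
\le \frac{2\Vert x-y\Vert }{\Vert y\Vert }.
```

Take \(x={\hat{U}}(i,:){\mathscr {O}}\) and \(y=U(i,:)\). Because \({\mathscr {O}}\) is orthogonal, \(\Vert {\hat{U}}(i,:){\mathscr {O}}\Vert _{F}=\Vert {\hat{U}}(i,:)\Vert _{F}\), so \(x/\Vert x\Vert ={\hat{U}}_{*}(i,:){\mathscr {O}}\). The inequality then gives the row-wise bound used in the proof.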

Theorem 1 shows that decreasing \(\theta _{\mathrm {min}}\) increases the upper bound on the error rate. This is natural. A smaller \(\theta _{\mathrm {min}}\) makes it more likely that some node is isolated, with no connections to other nodes, which makes community detection harder; the same phenomenon is observed under DCSBM in19. Detecting node labels is also harder for networks generated with a smaller \(|\lambda _{K}(P)|\) or a smaller \(n_{\mathrm {min}}\), as also found under DCSBM in20. Adding conditions on the model parameters yields the following corollary by basic algebra.

Corollary 1

Under \(DCDFM_{n}(K,P,Z,\Theta )\), if the conditions of Theorem 1 hold, then with probability at least \(1-o(n^{-3})\):

-

when \(K=O(1), \frac{n_{\mathrm {max}}}{n_{\mathrm {min}}}=O(1)\), we have \( {\hat{f}}=O(\frac{\gamma \theta ^{3}_{\mathrm {max}}\Vert \theta \Vert _{1}\mathrm {log}(n)}{\lambda ^{2}_{K}(P)\theta ^{6}_{\mathrm {min}}n^{2}})\).

-

when \(\theta _{\mathrm {max}}=O(\sqrt{\rho }), \theta _{\mathrm {min}}=O(\sqrt{\rho })\) for \(\rho >0\), we have \({\hat{f}}=O(\frac{\gamma K^{2}n_{\mathrm {max}}n\mathrm {log}(n)}{\lambda ^{2}_{K}(P)\rho n^{3}_{\mathrm {min}}})\).

-

when \(K=O(1), \frac{n_{\mathrm {max}}}{n_{\mathrm {min}}}=O(1), \theta _{\mathrm {max}}=O(\sqrt{\rho })\) and \(\theta _{\mathrm {min}}=O(\sqrt{\rho })\) for \(\rho >0\), we have \({\hat{f}}=O(\frac{\gamma \mathrm {log}(n)}{\lambda ^{2}_{K}(P)\rho n})\).

When \(\theta (i)=\sqrt{\rho }\) for all \(i\in [n]\), DCDFM reduces to DFM. In that case, the theoretical results for nDFA under DCDFM are consistent with those under DFM in Theorem 1 of16. The third bullet of Corollary 1 shows that \(|\lambda _{K}(P)|\) should shrink more slowly than \(\sqrt{\frac{\gamma \mathrm {log}(n)}{\rho n}}\) for consistent estimation. When \(\rho \) is a constant and \(\gamma \) is finite, it should shrink more slowly than \(\sqrt{\frac{\mathrm {log}(n)}{n}}\). When \(\lambda _{K}(P)\) and \(\gamma \) are fixed, \(\rho \) should shrink more slowly than \(\frac{\mathrm {log}(n)}{n}\), which is consistent with Assumption 2. The finiteness of \(\gamma \) matters because, as long as \(\gamma \) is finite, we can ignore its effect in our theoretical bounds. Next, we use examples under different distributions to show that \(\tau \) and \(\gamma \) are finite or can always be set to finite values.

Following an analysis similar to that of16, we let \({\mathscr {F}}\) be several specific distributions to show the generality of DCDFM and nDFA’s consistent estimation under it. For \(i,j\in [n]\), we mainly bound \(\gamma \) to show that it is finite, i.e., that the second bullet of Assumption 1 holds under these distributions. We then obtain the error rates of nDFA for each distribution under DCDFM. Details on the probability mass or density functions of these distributions can be found at http://www.stat.rice.edu/~dobelman/courses/texts/distributions.c&b.pdf.

Example 1

When \({\mathscr {F}}\) is a Normal distribution, \(A(i,j)\sim \mathrm {Normal}(\Omega (i,j),\sigma ^{2}_{A})\) and all entries of A are finite real numbers. Since the mean of a Normal distribution can be negative, DCDFM allows P to have negative entries as long as P is full rank. In this case, \(\gamma =\mathrm {max}_{i,j\in [n]}\frac{\sigma ^{2}_{A}}{\theta (i)\theta (j)}\le \frac{\sigma ^{2}_{A}}{\theta ^{2}_{\mathrm {min}}}\) is finite. Assumption 2 then requires \(\frac{\sigma ^{2}_{A}}{\theta ^{2}_{\mathrm {min}}}\frac{\theta _{\mathrm {max}}\Vert \theta \Vert _{1}}{\mathrm {log}(n)}\rightarrow \infty \) as \(n\rightarrow \infty \). Setting \(\gamma =O(\frac{\sigma ^{2}_{A}}{\theta ^{2}_{\mathrm {min}}})\) in Theorem 1, nDFA’s error bound is

\[{\hat{f}}=O\Big(\frac{\sigma ^{2}_{A}\theta ^{3}_{\mathrm {max}}K^{2}n_{\mathrm {max}}\Vert \theta \Vert _{1}\mathrm {log}(n)}{\lambda ^{2}_{K}(P)\theta ^{8}_{\mathrm {min}}n^{3}_{\mathrm {min}}}\Big).\]

This bound shows that increasing \(\sigma ^{2}_{A}\) increases the error rate, so a smaller \(\sigma ^{2}_{A}\) is preferred; this is also verified by Experiment 1[b] in Section 5. For convenience, set \(\sigma ^{2}_{A}\le C\theta ^{2}_{\mathrm {min}}\) for some \(C>0\). Since \(\tau \) is finite, Assumption 2 then reduces to \(\frac{\theta _{\mathrm {max}}\Vert \theta \Vert _{1}}{\mathrm {log}(n)}\rightarrow \infty \) as \(n\rightarrow \infty \), and the error bound becomes \({\hat{f}}=O(\frac{\theta ^{3}_{\mathrm {max}}K^{2}n_{\mathrm {max}}\Vert \theta \Vert _{1}\mathrm {log}(n)}{\lambda ^{2}_{K}(P)\theta ^{6}_{\mathrm {min}}n^{3}_{\mathrm {min}}})\).

Example 2

When \({\mathscr {F}}\) is a Binomial distribution, \(A(i,j)\sim \mathrm {Binomial}(m,\frac{\Omega (i,j)}{m})\) for some positive integer m. All entries of A are then integers in \(\{0,1,2,\ldots ,m\}\), so \(\tau \le m\). Since \(\frac{\Omega (i,j)}{m}\) is a probability, all elements of P should be nonnegative. By the properties of the Binomial distribution, \({\mathbb {E}}[A(i,j)]=\Omega (i,j)\) and \(\mathrm {Var}(A(i,j))=\Omega (i,j)(1-\frac{\Omega (i,j)}{m})\le \Omega (i,j)\le \theta (i)\theta (j)\). Thus \(\gamma \le 1\), so we can take \(\gamma =1\), and the error rate in this case follows immediately from Theorem 1.

Example 3

When \({\mathscr {F}}\) is the Bernoulli distribution, \(A(i,j)\sim \mathrm {Bernoulli}(\Omega (i,j))\), all entries of P are nonnegative, and DCDFM reduces to the DCSBM considered in the literature19,20. In this case, all entries of A are either 0 or 1, i.e., the network is un-weighted and \(\tau \le 1\). Since \(A(i,j)\sim \mathrm {Bernoulli}(\Omega (i,j))\) and \(\Omega (i,j)\) is a probability in [0, 1], we have \(\mathrm {Var}(A(i,j))=\Omega (i,j)(1-\Omega (i,j))\le \Omega (i,j)=\theta (i)\theta (j)P(\ell (i),\ell (j))\le \theta (i)\theta (j)\). Hence \(\gamma =1\) is finite and Assumption 1 holds. Setting \(\gamma =1\) in Theorem 1 immediately gives the theoretical upper bound of nDFA’s error rate under DCDFM.

Example 4

When \({\mathscr {F}}\) is the Poisson distribution, \(A(i,j)\sim \mathrm {Poisson}(\Omega (i,j))\) as in2, and all entries of A are nonnegative integers. For the Poisson distribution, all entries of P should be nonnegative, and \(\tau \) is finite as long as A’s elements are generated from the Poisson distribution. Meanwhile, \({\mathbb {E}}[A(i,j)]=\Omega (i,j)\) and \(\mathrm {Var}(A(i,j))=\Omega (i,j)\le \theta (i)\theta (j)\) hold by the expectation and variance of the Poisson distribution. Thus \(\gamma =1\) is finite, and the bound on nDFA’s error rate follows from Theorem 1. For this example, under the conditions in the 3rd bullet of Corollary 1, our theoretical result matches that of Theorem 4.2 of20 up to logarithmic factors. This suggests that our theoretical results are optimal up to logarithmic factors.

Example 5

When \({\mathscr {F}}\) is the Logistic distribution, \(A(i,j)\sim \mathrm {Logistic}(\Omega (i,j),\beta )\) for \(\beta >0\), and all entries of A are real values. In this case, P’s entries can be any real values, and \(\tau \) is finite when A’s entries come from the Logistic distribution. Moreover, \({\mathbb {E}}[A(i,j)]=\Omega (i,j)\) satisfies Eq. (5) and \(\mathrm {Var}(A(i,j))=\frac{\pi ^{2}\beta ^{2}}{3}\), so \(\gamma \le \frac{\pi ^{2}\beta ^{2}}{3\theta ^{2}_{\mathrm {min}}}\) is finite.

Example 6

DCDFM can also generate signed networks by setting \({\mathbb {P}}(A(i,j)=1)=\frac{1+\Omega (i,j)}{2}\) and \({\mathbb {P}}(A(i,j)=-1)=\frac{1-\Omega (i,j)}{2}\), so that all off-diagonal elements of A are either 1 or \(-1\). In this case, all entries of P are real values, and \(\Omega (i,j)\) should be chosen such that \(-1\le \Omega (i,j)\le 1\). Clearly, \({\mathbb {E}}[A(i,j)]=\Omega (i,j)\) satisfies Eq. (5), and \(\mathrm {Var}(A(i,j))=1-\Omega ^{2}(i,j)\le 1\), so \(\gamma \le \frac{1}{\theta ^{2}_{\mathrm {min}}}\) is finite.

DCDFM places no restriction on the choice of distribution \({\mathscr {F}}\) as long as Eq. (5) holds. Therefore \({\mathscr {F}}\) can be any other distribution obeying Eq. (5), such as the Double exponential, Exponential, Gamma and Uniform distributions in http://www.stat.rice.edu/~dobelman/courses/texts/distributions.c &b.pdf. This demonstrates the generality of our model and of our theoretical results.
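Examples 1-6 all come down to computing \(\gamma \), the largest ratio \(\mathrm {Var}(A(i,j))/(\theta (i)\theta (j))\). The Python sketch below evaluates this ratio numerically for each example distribution, using the variance formulas stated in the examples. It is only an illustration:

- the function names are ours;
- \(\theta \), the labels and P are hypothetical values chosen so that P is nonnegative with largest entry 1.

Under these values the Binomial, Bernoulli and Poisson bounds \(\gamma \le 1\) apply. Because the maximum runs over all \(i,j\in [n]\), including \(i=j\), the Normal case gives exactly \(\sigma ^{2}_{A}/\theta ^{2}_{\mathrm {min}}\).

```python
import numpy as np

def variance_matrix(Omega, dist, sigma2=1.0, m=5, beta=1.0):
    """Entrywise Var(A(i,j)) for the example distributions, each with mean Omega(i,j)."""
    if dist == "normal":      # Example 1: Normal(Omega, sigma2)
        return np.full_like(Omega, sigma2)
    if dist == "binomial":    # Example 2: Binomial(m, Omega/m)
        return Omega * (1.0 - Omega / m)
    if dist == "bernoulli":   # Example 3: Bernoulli(Omega)
        return Omega * (1.0 - Omega)
    if dist == "poisson":     # Example 4: Poisson(Omega)
        return Omega.copy()
    if dist == "logistic":    # Example 5: Logistic(Omega, beta)
        return np.full_like(Omega, np.pi ** 2 * beta ** 2 / 3.0)
    if dist == "signed":      # Example 6: +1 w.p. (1+Omega)/2, -1 w.p. (1-Omega)/2
        return 1.0 - Omega ** 2
    raise ValueError(f"unknown distribution: {dist}")

def gamma(theta, Omega, dist, **kwargs):
    """gamma = max over all i, j of Var(A(i,j)) / (theta(i) * theta(j))."""
    ratio = variance_matrix(Omega, dist, **kwargs) / np.outer(theta, theta)
    return float(ratio.max())

# Hypothetical DCDFM parameters, for illustration only.
rng = np.random.default_rng(0)
n, K = 200, 2
theta = rng.uniform(0.2, 1.0, size=n)            # degree heterogeneity parameters
labels = rng.integers(0, K, size=n)              # community labels l(i)
P = np.array([[1.0, 0.3], [0.3, 0.8]])           # nonnegative, largest entry 1
Omega = np.outer(theta, theta) * P[labels][:, labels]   # Omega = Theta Z P Z' Theta

for dist in ["normal", "binomial", "bernoulli", "poisson", "logistic", "signed"]:
    print(dist, round(gamma(theta, Omega, dist, sigma2=4.0), 3))
```

The expression `P[labels][:, labels]` builds the \(n\times n\) matrix with entries \(P(\ell (i),\ell (j))\), which is the same as \(ZPZ'\) without forming Z.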

Experimental results

We use both simulated and empirical data to compare nDFA with the existing algorithm DFA developed in16 for weighted networks. DFA estimates node labels by applying k-means with K clusters to all rows of \({\hat{U}}\). All experiments in this paper are run in MATLAB R2021b. Although DCDFM and nDFA also apply to networks with self-connected nodes, we do not consider self-loops in this part unless otherwise specified. Before presenting the experimental results, the next subsection introduces the general modularity for community detection in weighted networks. It can serve as a performance measure for any algorithm designed for weighted networks, and we test its effectiveness on both simulated and empirical networks.
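To make the two algorithms concrete, here is a minimal Python sketch of DFA and nDFA under the following assumptions:

- \({\hat{U}}\) holds the eigenvectors of A for the K eigenvalues largest in magnitude;
- nDFA normalizes each row of \({\hat{U}}\) to unit Euclidean length before k-means, which is our reading of "k-means on the rows of the normalized eigenvectors".

The k-means routine is a plain Lloyd's iteration with k-means++ seeding written for this illustration. It is not the MATLAB implementation used in the experiments, and `dfa`, `ndfa` and `kmeans` are hypothetical names.

```python
import numpy as np

def leading_eigvecs(A, K):
    """U_hat: eigenvectors of symmetric A for its K eigenvalues largest in magnitude."""
    vals, vecs = np.linalg.eigh((A + A.T) / 2.0)
    idx = np.argsort(-np.abs(vals))[:K]
    return vecs[:, idx]

def kmeans(X, K, n_init=10, n_iter=100, seed=0):
    """Lloyd's k-means with k-means++ seeding; returns the labels of the lowest-cost run."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    best_labels, best_cost = None, np.inf
    for _ in range(n_init):
        centers = X[[rng.integers(n)]]
        while len(centers) < K:  # k-means++: favour points far from existing centers
            d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(-1).min(axis=1)
            p = d2 / d2.sum() if d2.sum() > 0 else np.full(n, 1.0 / n)
            centers = np.vstack([centers, X[rng.choice(n, p=p)]])
        for _ in range(n_iter):
            labels = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(-1).argmin(axis=1)
            new = np.array([X[labels == k].mean(axis=0) if np.any(labels == k) else centers[k]
                            for k in range(K)])
            if np.allclose(new, centers):
                break
            centers = new
        labels = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(-1).argmin(axis=1)
        cost = ((X - centers[labels]) ** 2).sum()
        if cost < best_cost:
            best_labels, best_cost = labels, cost
    return best_labels

def dfa(A, K, seed=0):
    """DFA as described in the text: k-means on the rows of U_hat."""
    return kmeans(leading_eigvecs(A, K), K, seed=seed)

def ndfa(A, K, seed=0):
    """nDFA sketch (assumed row normalization): unit-length rows of U_hat, then k-means."""
    U = leading_eigvecs(A, K)
    norms = np.linalg.norm(U, axis=1, keepdims=True)
    U_star = U / np.maximum(norms, 1e-12)  # guard against zero rows, e.g. isolated nodes
    return kmeans(U_star, K, seed=seed)

# Usage on a noiseless Omega = Theta Z P Z' Theta with a hypothetical full-rank, signed P.
rng = np.random.default_rng(1)
n, K = 300, 3
labels = rng.integers(0, K, size=n)
theta = rng.uniform(0.1, 1.0, size=n)
P = np.array([[1.0, 0.2, -0.3], [0.2, 0.9, 0.1], [-0.3, 0.1, 0.8]])
Omega = np.outer(theta, theta) * P[labels][:, labels]
est = ndfa(Omega, K)
```

On the noiseless \(\Omega \), every row of \({\hat{U}}\) for a node in community k is \(\theta (i)\) times a common vector. Row normalization removes the factor \(\theta (i)\), so the rows collapse to K distinct points. This is why normalizing helps when node degrees vary.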

General modularity for weighted networks

Unlike in un-weighted networks, node degree in weighted networks requires more care, especially when A has negative elements. For un-weighted networks, in which all entries of A are 0 or 1, and for weighted networks with nonnegative entries, the degree of node i is \(\sum _{j=1}^{n}A(i,j)\). When A has negative entries, however, \(\sum _{j=1}^{n}A(i,j)\) no longer measures the degree of node i.

To measure node degree for all kinds of weighted networks, we proceed as follows. Let \(A^{+}, A^{-}\in {\mathbb {R}}^{n\times n}\) with \(A^{+}(i,j)=\mathrm {max}(A(i,j),0)\) and \(A^{-}(i,j)=\mathrm {max}(-A(i,j),0)\) for all i, j, so that \(A=A^{+}-A^{-}\). Let \(d^{+}(i)=\sum _{j=1}^{n}A^{+}(i,j)\) and \(d^{-}(i)=\sum _{j=1}^{n}A^{-}(i,j)\) be the positive and negative degrees of node i.

When all entries of A are nonnegative, \({\mathbb {E}}[\sum _{j=1}^{n}|A(i,j)|]=\sum _{j=1}^{n}{\mathbb {E}}[A(i,j)]=\theta (i)\sum _{j=1}^{n}\theta (j)P(\ell (i),\ell (j))\), so \(\theta (i)\) measures the “degree” of node i. Let \(m^{+}=\sum _{i=1}^{n}d^{+}(i)\) and \(m^{-}=\sum _{i=1}^{n}d^{-}(i)\). We now define the general modularity Q as follows

where

\({\hat{\ell }}\) is the \(n\times 1\) vector such that \({\hat{\ell }}(i)\) denotes the cluster that node i belongs to, and

For weighted networks in which all entries of A are nonnegative, \(Q^{-}=0\) because \(A(i,j)\ge 0\) for all i, j. Hence \(Q=Q^{+}\), and Q reduces to the classical Newman’s modularity23. For weighted networks in which A contains negative entries, Q extends the classical modularity by accounting for the negative entries of A. We design \(Q^{-}\) by summing the absolute values of \((A^{-}(i,j)-\frac{d^{-}(i)d^{-}(j)}{2m^{-}})\delta ({\hat{\ell }}_{i},{\hat{\ell }}_{j})\). The reason is that a negative A(i, j) does not necessarily mean that nodes i and j tend to be in different clusters, since A’s negative elements may be generated from the Normal distribution, the Logistic distribution or other distributions. We set \(Q=Q^{+}-Q^{-}\) empirically, because this modularity is a good measure for comparing the performance of different algorithms.
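A small Python sketch of Q may help. It follows the verbal description above: \(Q^{+}\) is a Newman-style modularity on \(A^{+}\), and \(Q^{-}\) sums absolute within-cluster deviations on \(A^{-}\).

The normalization is our assumption. We divide by the total degree \(\sum _{i}d^{\pm }(i)\), which plays the role of 2m in Newman’s notation, so that \(Q^{+}\) coincides with Newman’s modularity for nonnegative A. The exact constants of Eq. (7) are not reproduced here, and `general_modularity` is a hypothetical name.

```python
import numpy as np

def general_modularity(A, labels):
    """Sketch of Q = Q^+ - Q^- (assumed normalization by the total positive/negative degree)."""
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    A_pos = np.maximum(A, 0.0)                    # A^+(i,j) = max(A(i,j), 0)
    A_neg = np.maximum(-A, 0.0)                   # A^-(i,j) = max(-A(i,j), 0); A = A^+ - A^-
    same = labels[:, None] == labels[None, :]     # delta(l_i, l_j)

    def part(B, absolute):
        d = B.sum(axis=1)                         # positive or negative degrees
        total = d.sum()                           # sum of degrees (2m in Newman's notation)
        if total == 0:
            return 0.0                            # e.g. Q^- = 0 when A has no negative entries
        R = (B - np.outer(d, d) / total) * same   # within-cluster deviations from the null model
        if absolute:
            R = np.abs(R)                         # Q^- sums absolute values
        return float(R.sum() / total)

    return part(A_pos, absolute=False) - part(A_neg, absolute=True)

# Two disjoint triangles, split into their natural clusters.
A = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5)]:
    A[i, j] = A[j, i] = 1.0
labels = np.array([0, 0, 0, 1, 1, 1])
print(general_modularity(A, labels))    # Newman's modularity of this split
print(general_modularity(-A, labels))   # all weights negative: only Q^- contributes
```

For the two triangles, Newman’s modularity is \(2\times (\frac{3}{6}-(\frac{6}{12})^{2})=0.5\), and the sketch reproduces this value. This is a quick check that \(Q^{+}\) matches the classical definition.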

In Eq. (7), we write Q as \(Q(\bullet )\) for convenience, where \(\bullet \) denotes a community detection method, since \({\hat{\ell }}\) is obtained by running method \(\bullet \) on A with K communities. Next, we define the effectiveness of the general modularity Q. Since \({\hat{f}}\) is a stronger criterion than the Hamming error19, our numerical studies evaluate algorithms with the Hamming error rate defined below.

where \({\mathscr {P}}_{K}\) is the set of all \(K\times K\) permutation matrices, and the matrix \({\hat{Z}}\in {\mathbb {R}}^{n\times K}\) is defined by \({\hat{Z}}(i,k)=1\) if \({\hat{\ell }}(i)=k\) and 0 otherwise for \(i\in [n],k\in [K]\). Here \({\hat{\ell }}\) is the label vector returned by applying method \(\bullet \) to A with K communities. Clearly, \({\hat{f}}(\bullet 1)<{\hat{f}}(\bullet 2)\) means that method \(\bullet 1\) outperforms method \(\bullet 2\). We now define the effectiveness of Q when \({\hat{f}}(\bullet 1)\ne {\hat{f}}(\bullet 2)\):

We do not consider the case \({\hat{f}}(\bullet 1)={\hat{f}}(\bullet 2)\), because the error rates then do not rank the two methods, so this case says nothing about the effectiveness of Q.

- \(E_{Q}(\bullet 1,\bullet 2)=1\) means that when method \(\bullet 1\) outperforms method \(\bullet 2\) (i.e., \({\hat{f}}(\bullet 1)<{\hat{f}}(\bullet 2)\)), we also have \(Q(\bullet 1)\ge Q(\bullet 2)\). In this case the general modularity Q is effective.
- \(E_{Q}(\bullet 1,\bullet 2)=-1\) means that method \(\bullet 1\) outperforms method \(\bullet 2\) but \(Q(\bullet 1)<Q(\bullet 2)\). In this case Q is ineffective.

Suppose an experiment generates N adjacency matrices A under a community detection model. We obtain one value of \(E_{Q}(\bullet 1,\bullet 2)\) for each matrix with \({\hat{f}}(\bullet 1)\ne {\hat{f}}(\bullet 2)\). The ratio of effectiveness is defined as

where \(N_{0}\) is the number of adjacency matrices with \({\hat{f}}(\bullet 1)={\hat{f}}(\bullet 2)\). These matrices are excluded because the effectiveness of the general modularity is defined only when \({\hat{f}}(\bullet 1)\ne {\hat{f}}(\bullet 2)\). A larger \(R_{E_{Q}(\bullet 1,\bullet 2)}\) indicates that the general modularity Q is more effective for comparing methods \(\bullet 1\) and \(\bullet 2\).
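The three evaluation quantities can be sketched in Python as follows. We make two assumptions:

- The Hamming error rate is the fraction of misclassified nodes under the best relabeling of the estimated clusters.
- \(E_{Q}\) is symmetric in the two methods: it equals 1 when the method with the smaller error also has the larger or equal Q.

Ties \({\hat{f}}(\bullet 1)={\hat{f}}(\bullet 2)\) are returned as `None` and dropped, which gives the denominator \(N-N_{0}\). All function names are hypothetical.

```python
import itertools
import numpy as np

def hamming_error(true_labels, est_labels, K):
    """Fraction of misclassified nodes, minimized over relabelings of the estimated clusters."""
    true_labels = np.asarray(true_labels)
    est_labels = np.asarray(est_labels)
    best = 1.0
    for perm in itertools.permutations(range(K)):   # K! candidate permutation matrices J
        mapped = np.array(perm)[est_labels]
        best = min(best, float(np.mean(mapped != true_labels)))
    return best

def effectiveness(f1, f2, Q1, Q2):
    """E_Q(method1, method2): +1 if the lower-error method has Q at least as large, else -1; None on ties."""
    if f1 == f2:
        return None
    ok = Q1 >= Q2 if f1 < f2 else Q2 >= Q1
    return 1 if ok else -1

def effectiveness_ratio(f1s, f2s, Q1s, Q2s):
    """R = (number of matrices with E_Q = 1) / (N - N_0)."""
    E = [effectiveness(*t) for t in zip(f1s, f2s, Q1s, Q2s)]
    E = [e for e in E if e is not None]              # drop the N_0 ties
    return sum(e == 1 for e in E) / len(E) if E else float("nan")

print(hamming_error([0, 0, 1, 1], [1, 1, 0, 0], K=2))   # a pure relabeling costs nothing
print(effectiveness_ratio([0.1, 0.2, 0.3], [0.2, 0.1, 0.3], [0.5, 0.5, 0.1], [0.4, 0.4, 0.2]))
```

In the last call, the first run agrees with the error ranking, the second disagrees, and the third is a tie and is excluded. The ratio is therefore 1/2.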

Simulations

In numerical simulations, we compare nDFA with DFA under DCDFM by reporting \({\hat{f}}(nDFA)\) and \({\hat{f}}(DFA)\). We also investigate the effectiveness of Q by reporting \(R_{E_{Q}(DFA,nDFA)}\) computed from nDFA and DFA.

In all simulations, unless otherwise specified, we use the following setup:

- Set \(n=400, K=4\), and generate \(\ell \) so that each node belongs to each community with equal probability.
- Let \(\rho >0\) be a parameter with \(\theta (i)=\rho \times \mathrm {rand}(1)\), where \(\mathrm {rand}(1)\) is a random value in the interval (0, 1). The parameter \(\rho \) controls the sparsity of the network \({\mathscr {N}}\).
- Once \(P, Z, \Theta \) are set, \(\Omega =\Theta ZPZ'\Theta \).
- Generate the symmetric adjacency matrix A by drawing each A(i, j) from a distribution \({\mathscr {F}}\) with expectation \(\Omega (i,j)\).

We study different distributions and report the error rates of the methods averaged over 100 random runs for each setting of the model parameters.
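The simulation recipe above can be sketched in Python with NumPy as follows. This is an illustrative re-implementation, not the MATLAB code behind the reported experiments. The function name `simulate_dcdfm` and the matrices `P_nonneg` and `P_signed` are hypothetical, since the P matrices used in the experiments are not reproduced here. As in the text, self-loops are set to zero, and A is made symmetric by mirroring its upper triangle.

```python
import numpy as np

def simulate_dcdfm(n, K, rho, P, dist, rng, sigma2=4.0, m=5, beta=1.0):
    """Draw (A, labels, theta) under DCDFM; A is symmetric with a zero diagonal (no self-loops)."""
    labels = rng.integers(0, K, size=n)            # each node joins each community with prob. 1/K
    theta = rho * rng.uniform(0.0, 1.0, size=n)    # theta(i) = rho * rand(1)
    Omega = np.outer(theta, theta) * P[labels][:, labels]   # Omega = Theta Z P Z' Theta
    if dist == "normal":
        X = rng.normal(Omega, np.sqrt(sigma2))     # Normal(Omega, sigma2); P may be signed
    elif dist == "binomial":
        X = rng.binomial(m, Omega / m)             # requires 0 <= Omega <= m
    elif dist == "bernoulli":
        X = rng.binomial(1, Omega)                 # requires 0 <= Omega <= 1
    elif dist == "poisson":
        X = rng.poisson(Omega)                     # requires Omega >= 0
    elif dist == "logistic":
        X = rng.logistic(Omega, beta)              # Logistic(Omega, beta)
    elif dist == "signed":
        X = 2 * rng.binomial(1, (1 + Omega) / 2) - 1   # +1 w.p. (1+Omega)/2, else -1
    else:
        raise ValueError(f"unknown distribution: {dist}")
    A = np.triu(X, k=1).astype(float)              # keep entries with i < j, drop the diagonal
    return A + A.T, labels, theta

rng = np.random.default_rng(0)
P_nonneg = 0.2 * np.ones((4, 4)) + 0.8 * np.eye(4)    # hypothetical: diagonal 1, off-diagonal 0.2
P_signed = 1.2 * np.eye(4) - 0.2 * np.ones((4, 4))    # hypothetical: diagonal 1, off-diagonal -0.2
A_bern, labels, theta = simulate_dcdfm(400, 4, 1.0, P_nonneg, "bernoulli", rng)
A_norm, _, _ = simulate_dcdfm(400, 4, 10.0, P_signed, "normal", rng, sigma2=4.0)
A_sign, _, _ = simulate_dcdfm(400, 4, 1.0, P_signed, "signed", rng)
```

With \(\rho =1\) and entries of P bounded by 1 in absolute value, \(\Omega \) stays in the ranges that the Bernoulli and signed cases require.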

Experiment 1: normal distribution

This experiment studies the case where \({\mathscr {F}}\) is the Normal distribution. Set P as

Since \({\mathscr {F}}\) is the Normal distribution, elements of P are allowed to be negative under DCDFM. We generate the symmetric adjacency matrix A by drawing A(i, j) from \(\mathrm {Normal}(\Omega (i,j),\sigma ^{2}_{A})\) for some \(\sigma ^{2}_{A}\). P has to meet only two criteria: it should satisfy Eq. (5), and the signs of its elements should be compatible with \({\mathscr {F}}\). For example, P can have negative entries if \({\mathscr {F}}\) is Normal, as in this experiment. If \({\mathscr {F}}\) is Bernoulli, Poisson or Binomial, all entries of P should be nonnegative.

Experiment 1[a]: Changing \(\rho \). Let \(\sigma ^{2}_{A}=4\) and let \(\rho \) range over \(\{1,2,\ldots ,10\}\). Panel (a) of Fig. 1 plots the error against \(\rho \). Larger \(\rho \) gives denser networks, and both methods perform better. When \(\rho \) is larger than 5, nDFA outperforms DFA.

Experiment 1[a] generates \(10\times 100=1000\) adjacency matrices in total, where 10 is the cardinality of \(\{1,2,\ldots ,10\}\) and 100 is the number of repetitions for each \(\rho \). From these 1000 adjacency matrices, we calculate \(R_{E_{Q}(DFA,nDFA)}\) based on DFA and nDFA. Table 1 reports \(R_{E_{Q}(DFA,nDFA)}=82.59\%\) for Experiment 1[a], well above 50%, which suggests that the general modularity is effective. \(R_{E_{Q}(DFA,nDFA)}\) is calculated in the same way for the other simulated experiments in this paper.

Experiment 1[b]: Changing \(\sigma ^{2}_{A}\). Let \(\rho =10\) and let \(\sigma ^{2}_{A}\) range over \(\{1,2,\ldots ,10\}\). Panel (b) of Fig. 1 plots the error against \(\sigma ^{2}_{A}\). As shown by the first bullet after Corollary 1, the theoretical upper bound of \({\hat{f}}\) for nDFA grows with \(\sigma ^{2}_{A}\). The observed increase of nDFA’s error with \(\sigma ^{2}_{A}\) is therefore consistent with our theory. The results also show that nDFA significantly outperforms DFA, because DFA performs poorly when node degrees are heterogeneous.

Experiment 2: binomial distribution

This experiment considers the case where \({\mathscr {F}}\) is the Binomial distribution. As discussed in Example 2, P should then be a nonnegative matrix. Set P as

With \(\Omega =\Theta ZPZ'\Theta \), we generate the symmetric matrix A by drawing A(i, j) from \(\mathrm {Binomial}(m,\frac{\Omega (i,j)}{m})\) for some positive integer m.

Experiment 2[a]: Changing \(\rho \). Let \(m=5\) and let \(\rho \) range over \(\{0.5,1,\ldots ,4\}\). Since \(\frac{\Omega (i,j)}{m}\) is a probability and \(\Omega (i,j)\le \rho \), \(\rho \) should be smaller than m. Panel (c) of Fig. 1 plots the error against \(\rho \). Both methods perform better as \(\rho \) increases, and nDFA performs much better than DFA.

Experiment 2[b]: Changing m. Let \(\rho =1\) and let m range over \(\{1,2,\ldots ,20\}\). Panel (d) of Fig. 1 plots the error against m. Both methods perform worse for larger m. By Example 2, \(\mathrm {Var}(A(i,j))=\Omega (i,j)(1-\frac{\Omega (i,j)}{m})\) increases with m for fixed \(\Omega (i,j)\), so A(i, j) can take more integer values and becomes noisier. The results also show that nDFA, which accounts for the variation in node degree, performs much better than DFA.

Remark 7

For visualization, we plot A generated under DFM when \({\mathscr {F}}\) is Binomial. Let \(n=24,K=2\), with \(Z(i,1)=1\) for \(1\le i\le 12\) and \(Z(i,2)=1\) for \(13\le i\le 24\). Let \(m=5\) and \(\Theta =0.7I\) (i.e., a DFM case). Set P as

Under the above setting, Fig. 2 shows two adjacency matrices generated under DFM, together with the error rates of DFA and nDFA. Since A and Z are known here, one can run DFA and nDFA directly on A in Fig. 2 with two communities to check their error rates. We also plot adjacency matrices for the Bernoulli distribution, the Poisson distribution and signed networks.

Experiment 3: bernoulli distribution

In this experiment, let \({\mathscr {F}}\) be the Bernoulli distribution, so that A(i, j) is drawn from \(\mathrm {Bernoulli}(\Omega (i,j))\). Set P as in Experiment 2.

Experiment 3: Changing \(\rho \). Let \(\rho \) range over \(\{0.1,0.2,\ldots ,2\}\). Panel (e) of Fig. 1 plots the error against \(\rho \). As in Experiment 2[a], nDFA outperforms DFA.

Remark 8

For visualization, we plot A generated under DFM when \({\mathscr {F}}\) is Bernoulli. Let \(n,K,Z,\Theta ,P\) be the same as in Remark 7. Fig. 3 shows two adjacency matrices generated under this setting.

Experiment 4: poisson distribution

This experiment focuses on the case where \({\mathscr {F}}\) is the Poisson distribution, so that A(i, j) is drawn from \(\mathrm {Poisson}(\Omega (i,j))\). Set P as in Experiment 2.

Experiment 4: Changing \(\rho \). Let \(\rho \) range over \(\{0.1,0.2,\ldots ,2\}\). Panel (f) of Fig. 1 plots the error against \(\rho \). The results are similar to those of Experiment 2[a], and nDFA performs better than DFA.

Remark 9

For visualization, we plot A generated under DFM when \({\mathscr {F}}\) is Poisson. \(n,K,Z,\Theta ,P\) are the same as in Remark 7. Fig. 4 shows two adjacency matrices generated under this setting.

Experiment 5: logistic distribution

In this experiment, let \({\mathscr {F}}\) be the Logistic distribution, so that A(i, j) is drawn from \(\mathrm {Logistic}(\Omega (i,j),\beta )\). Set P as in Experiment 1.

Experiment 5[a]: Changing \(\rho \). Let \(\beta =1\) and let \(\rho \) range over \(\{1,2,\ldots ,7\}\). Panel (g) of Fig. 1 plots the error against \(\rho \). The results are similar to those of Experiment 1[a], and nDFA outperforms DFA.

Experiment 5[b]: Changing \(\beta \). Let \(\rho =7\) and let \(\beta \) range over \(\{1,2,\ldots ,5\}\). Panel (h) of Fig. 1 plots the error against \(\beta \). The results are similar to those of Experiment 1[b], and nDFA outperforms DFA.

Experiment 6: signed network

In this experiment, let \({\mathbb {P}}(A(i,j)=1)=\frac{1+\Omega (i,j)}{2}\) and \({\mathbb {P}}(A(i,j)=-1)=\frac{1-\Omega (i,j)}{2}\), so that all off-diagonal elements of A are either 1 or \(-1\). Set P as in Experiment 1.

Experiment 6: Changing \(\rho \). Let \(n=1600\) and let \(\rho \) range over \(\{0.8,0.85,0.9,0.95,1\}\). Panel (i) of Fig. 1 plots the error against \(\rho \). nDFA outperforms DFA.

Remark 10

For visualization, we plot A generated under DFM for a signed network with \({\mathbb {P}}(A(i,j)=1)=\frac{1+\Omega (i,j)}{2}\) and \({\mathbb {P}}(A(i,j)=-1)=\frac{1-\Omega (i,j)}{2}\). \(n,K,Z,\Theta \) are the same as in Remark 7, and P is set as

Fig. 5 shows two adjacency matrices generated under this setting.

Real data

In real data analysis, we use the topological comparative evaluation framework proposed in24 in addition to our general modularity. We consider only two of its topological measures. Embeddedness measures how much the direct neighbours of a node belong to its own community, and community size is an important characteristic of community structure24. The other measures introduced in24 (internal transitivity, scaled density, average distance and hub dominance) only work for un-weighted networks, whereas we consider weighted networks here.

We now define embeddedness24,38. For node i, let \(d_{\mathrm {int}}(i)=\sum _{j:{\hat{\ell }}(j)={\hat{\ell }}(i)}A(i,j)\) be the internal degree of node i in cluster \({\hat{\ell }}(i)\), and let \(d(i)=\sum _{j=1}^{n}A(i,j)\) be the total degree of node i. Here \({\hat{\ell }}\) is the vector of node labels estimated by method \(\bullet \). The embeddedness of node i is defined as

This definition extends that of24,38 from un-weighted networks to weighted networks whose adjacency matrices are connected and have nonnegative entries. Extending embeddedness to adjacency matrices that may contain negative elements is an interesting problem, which we leave for future work. Since e(i) is defined for a single node i, we capture the embeddedness of all nodes by defining the overall embeddedness (OE for short) of method \(\bullet \) as

As analyzed in24, \(OE(\bullet )\) reaches its maximum of 1 when, for every node, all neighbours are in its community (i.e., \(d_{\mathrm {int}}(i)=d(i)\) for all i). However, if method \(\bullet \) puts all nodes (or most nodes) into one community, \(OE(\bullet )\) also equals 1 (or is close to 1). The overall embeddedness alone is therefore not enough to compare community detection methods, and we also need the general modularity \(Q(\bullet )\) and community size. Set

where \(\tau (\bullet )\) measures the size of the largest estimated cluster relative to the network size. If \(\tau (\bullet )\) is 1 (or close to 1), method \(\bullet \) puts all nodes (or most nodes) into one community. For real-world networks with known true labels, let \(error(\bullet )\) denote the error rate of method \(\bullet \). Finally, let \(T(\bullet )\) denote the run-time of method \(\bullet \).

For the real-world networks analyzed in this paper, we compare nDFA and DFA by reporting:

- the general modularity Q;
- the overall embeddedness OE;
- the community size parameter \(\tau \);
- the error rate error, for real-world networks with known true labels;
- the run-time T.
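A minimal Python sketch of these two topological measures follows. It assumes that OE is the average of e(i) over all nodes, with \(e(i)=d_{\mathrm {int}}(i)/d(i)\), which is consistent with a maximum of 1 reached when \(d_{\mathrm {int}}(i)=d(i)\) for all i. The function names are hypothetical. The usage example shows the caveat discussed above: merging everything into one cluster pushes OE to 1, and only \(\tau \) reveals it.

```python
import numpy as np

def overall_embeddedness(A, labels):
    """OE = mean over nodes of d_int(i) / d(i); assumes nonnegative A with no isolated nodes."""
    A = np.asarray(A, dtype=float)
    labels = np.asarray(labels)
    if np.any(A < 0):
        raise ValueError("embeddedness is defined here only for nonnegative A")
    same = labels[:, None] == labels[None, :]
    d = A.sum(axis=1)                      # total (weighted) degree d(i)
    d_int = (A * same).sum(axis=1)         # internal degree d_int(i)
    if np.any(d == 0):
        raise ValueError("every node needs a positive degree")
    return float(np.mean(d_int / d))

def largest_cluster_fraction(labels):
    """tau = size of the largest estimated cluster divided by n."""
    _, counts = np.unique(labels, return_counts=True)
    return counts.max() / len(labels)

# Two triangles joined by the edge (2, 3).
A = np.zeros((6, 6))
for i, j in [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]:
    A[i, j] = A[j, i] = 1.0
split = np.array([0, 0, 0, 1, 1, 1])
merged = np.zeros(6, dtype=int)
print(overall_embeddedness(A, split), largest_cluster_fraction(split))
print(overall_embeddedness(A, merged), largest_cluster_fraction(merged))
```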

Real-world un-weighted networks

In this section, we study four real-world un-weighted networks with known labels to investigate nDFA’s empirical performance. Table 2 displays basic information on the four datasets, where Karate, Dolphins, Polbooks and Weblogs are short for Zachary’s karate club, Dolphin social network, Books about US politics and Political blogs. The four datasets can be downloaded from http://www-personal.umich.edu/~mejn/netdata/. For these networks, the true labels are suggested by the original authors and are regarded as the “ground truth”. Brief introductions to the four networks can be found in2,18,19,39 and references therein.

As in the real data study of16, all entries of the four original adjacency matrices are 0 or 1 (i.e., the networks are un-weighted). We construct weighted networks by assuming noise: the observed matrix is \({\hat{A}}=A+W\), where the entries of the noise matrix W follow \(\mathrm {Normal}(0,\sigma ^{2}_{W})\). We use \({\hat{A}}\) instead of A as the input to nDFA and DFA, with \(\sigma ^{2}_{W}\) ranging over \(\{0,0.01,0.02,\ldots ,0.2\}\). For each \(\sigma ^{2}_{W}\), we report the error rates averaged over 50 random runs, to study how nDFA behaves as \(\sigma ^{2}_{W}\) increases.

As in the perturbation analysis of16, a noise matrix W whose entries have mean 0 and finite variance could be incorporated into our theoretical analysis of nDFA. For convenience, this paper does not include such a perturbation analysis. We study the influence of the noise matrix only numerically, to reveal how stable nDFA’s performance is.
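The noise construction \({\hat{A}}=A+W\) can be sketched in Python as follows. The text does not say whether W is symmetric. We assume a symmetric W with a zero diagonal, so that \({\hat{A}}\) remains a valid undirected, loop-free input, with off-diagonal entries drawn from \(\mathrm {Normal}(0,\sigma ^{2}_{W})\). The function name is hypothetical.

```python
import numpy as np

def add_symmetric_noise(A, sigma2_W, rng):
    """A_hat = A + W, with W symmetric, zero diagonal, off-diagonal entries ~ Normal(0, sigma2_W)."""
    if sigma2_W == 0:
        return np.array(A, dtype=float)              # sigma2_W = 0 is the noise-free case
    n = A.shape[0]
    G = rng.normal(0.0, np.sqrt(sigma2_W), size=(n, n))
    W = np.triu(G, k=1)                              # independent draws for i < j only
    return A + W + W.T                               # mirror to keep A_hat symmetric

rng = np.random.default_rng(0)
sigma2_grid = np.round(np.arange(0.0, 0.201, 0.01), 2)   # {0, 0.01, ..., 0.2}
A = np.zeros((400, 400))
A_hat = add_symmetric_noise(A, 0.1, rng)
```

In a full experiment, each \(\sigma ^{2}_{W}\) in `sigma2_grid` would be paired with 50 independent draws of W, with the error rates of both methods averaged over those draws.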

Figure 6 displays the error rates against \(\sigma ^{2}_{W}\) for the four real-world social networks. When the noise matrix W has small variance, nDFA performs stably. As the variance of W grows, nDFA’s error rates increase. DFA is also stable when \(\sigma ^{2}_{W}\) is small, but it performs poorly on the Dolphins and Weblogs networks even without noise (\(\sigma ^{2}_{W}=0\)).

On Karate and Polbooks, nDFA and DFA perform similarly, and both are satisfactory. On Dolphins and Weblogs, nDFA performs much better than DFA. In particular, on Weblogs DFA’s error rates stay around \(35\%\), which is large, while nDFA’s error rates stay below \(15\%\) even for noise with large variance. This can be explained by the heavy variation of node degrees in the Weblogs network, as analyzed in2,19. nDFA is designed under DCDFM, which models node heterogeneity, while DFA is designed under DFM, which does not. nDFA therefore naturally performs better than DFA on real-world networks with varying node degrees.

Table 3 reports \(Q,OE,error,\tau \) and T obtained by applying DFA and nDFA to the adjacency matrices A of the four real-world networks with known labels. Combining Table 3 and Fig. 6, nDFA has a larger modularity than DFA whenever it has a smaller error rate. This suggests that the general modularity is effective for un-weighted networks (note that the general modularity is exactly Newman’s modularity when all entries of A are nonnegative).

For Dolphins, Polbooks and Weblogs, both the overall embeddedness and the modularity of nDFA are larger than those of DFA. This suggests that nDFA estimates node labels more accurately than DFA, which is consistent with nDFA’s smaller error rates on these three networks. nDFA’s error rates are small, whereas DFA’s \(\tau \) on Dolphins and Weblogs is much larger than nDFA’s, indicating that DFA tends to put nodes into one community. Meanwhile, nDFA’s small error rates, large overall embeddedness (close to 1) and medium-sized largest estimated community suggest that these four networks have a clear community structure. Both methods run fast on these four networks.

Real-world weighted networks

In this section, we apply nDFA and DFA to five real-world weighted networks:

- Karate club weighted network (Karate-weighted for short);
- Gahuku-Gama subtribes network;
- Coauthorships in network science network (CoauthorshipsNet for short);
- Condensed matter collaborations 1999 (Con-mat-1999 for short);
- Condensed matter collaborations 2003 (Con-mat-2003 for short).

For visualization, Fig. 7 shows the adjacency matrices of the first two networks. Table 4 summarizes basic information on the five networks, and details are given below.

Karate-weighted: This weighted network was collected from a university karate club. Nodes denote members, and the edge weight between two nodes indicates the relative strength of their association. It is the weighted version of the Karate club network, so the number of communities is 2 and the true labels of all members are known. The data can be downloaded from http://vlado.fmf.uni-lj.si/pub/networks/data/ucinet/ucidata.htm#kazalo.

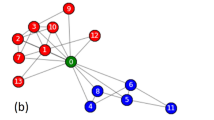

Gahuku-Gama subtribes: This is the signed social network of the Gahuku-Gama alliance structure of tribes in the Eastern Central Highlands of New Guinea. The network has 16 tribes, and a positive or negative link between two tribes means that they are allies or enemies, respectively. There are 3 communities, and we use the node labels shown in Fig. 9b of40 as ground truth. The data can be downloaded from http://konect.cc/ (see also41). Since the overall embeddedness is defined only for adjacency matrices with nonnegative entries, it is not applicable to this network.

CoauthorshipsNet: The data can be downloaded from http://www-personal.umich.edu/~mejn/netdata/. Nodes are scientists, and edge weights measure coauthorship as assigned by the original papers. There is no ground truth for node labels, and the number of communities is unknown.

CoauthorshipsNet has 1589 nodes, but its adjacency matrix is disconnected. It has 396 connected components in total, and the largest contains only 379 nodes. For convenience, CoauthorshipsNet1589 denotes the original network and CoauthorshipsNet379 denotes its giant component.

To determine the number of communities, we plot the leading 40 eigenvalues of the two adjacency matrices. The results in Fig. 8 suggest that the number of communities is 2; the eigengap idea is also used in27 to estimate the number of communities of real-world networks. Although CoauthorshipsNet1589 is disconnected, nDFA and DFA can still be applied to it, since neither method requires a connected network. The overall embeddedness, however, is defined for connected adjacency matrices, so it is not applicable to CoauthorshipsNet1589.
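The two preprocessing steps mentioned above, extracting the giant component and reading K off the leading eigenvalues, can be sketched in Python. Two assumptions apply:

- Components are found by breadth-first search over nonzero weights.
- K is chosen at the largest gap between consecutive leading eigenvalues in absolute value, starting from K = 2, since for nonnegative networks the first eigenvalue usually dominates.

This automatic rule is a simple stand-in for the visual inspection of Fig. 8, not the procedure used in the paper, and the function names are hypothetical.

```python
from collections import deque
import numpy as np

def leading_abs_eigenvalues(A, k=40):
    """The k largest eigenvalues of symmetric A in absolute value, in decreasing order."""
    vals = np.linalg.eigvalsh((A + A.T) / 2.0)
    return np.sort(np.abs(vals))[::-1][:k]

def eigengap_K(A, k_max=40, min_K=2):
    """Choose K at the largest gap |lambda_K| - |lambda_{K+1}| with K >= min_K (heuristic)."""
    lam = leading_abs_eigenvalues(A, k_max + 1)
    gaps = lam[:-1] - lam[1:]                      # gaps[K-1] = |lambda_K| - |lambda_{K+1}|
    return int(np.argmax(gaps[min_K - 1:])) + min_K

def largest_component(A):
    """Sorted node indices of the largest connected component (edges = nonzero weights)."""
    n = A.shape[0]
    adj = [np.flatnonzero(A[i]) for i in range(n)]
    seen = np.zeros(n, dtype=bool)
    best = []
    for s in range(n):
        if seen[s]:
            continue
        comp, queue = [], deque([s])
        seen[s] = True
        while queue:                               # breadth-first search from s
            u = queue.popleft()
            comp.append(u)
            for v in adj[u]:
                if not seen[v]:
                    seen[v] = True
                    queue.append(v)
        if len(comp) > len(best):
            best = comp
    return np.sort(np.array(best))

# Noiseless block matrix with 3 equal communities (hypothetical P, theta = 0.5 for all nodes).
Z = np.repeat(np.eye(3), 20, axis=0)
P = 0.1 * np.ones((3, 3)) + 0.9 * np.eye(3)
Omega = 0.25 * Z @ P @ Z.T
print(eigengap_K(Omega))
```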

Con-mat-1999: The data can be downloaded from http://www-personal.umich.edu/~mejn/netdata/. Nodes denote scientists, and edge weights are provided by the original papers. The largest connected component of this network has 13861 nodes. Figure 8 suggests \(K=2\) for this data.

Con-mat-2003: This is an updated version of Con-mat-1999, and its largest connected component has 27519 nodes. Figure 8 suggests \(K=2\) for Con-mat-2003.

Applying nDFA and DFA to Karate-weighted and Gahuku-Gama subtribes, we find that both methods achieve zero error on both networks, i.e., they classify these two networks perfectly. For visualization, Figs. 9, 10 and 11 show nDFA’s community detection results on these weighted networks. Con-mat-2003 is omitted because it is too large to plot with MATLAB’s graph command. As panel (a) of Fig. 10 shows, nDFA can also classify disconnected components and isolated nodes. This makes nDFA widely applicable, since it can handle disconnected weighted networks even when they contain isolated nodes.

Table 5 records \(Q,OE,\tau \) and T for the five weighted networks. \(Q(\mathrm {nDFA})\) is much larger than \(Q(\mathrm {DFA})\) for CoauthorshipsNet, Con-mat-1999 and Con-mat-2003, suggesting that nDFA detects communities more accurately than DFA.

- CoauthorshipsNet1589: DFA puts 1585 of the 1589 nodes into one community, while nDFA puts 1025 of them into one community. Given that Q(nDFA) is much larger than Q(DFA), DFA performs poorly by putting nearly all nodes into one community, while nDFA returns a reasonable community structure.
- CoauthorshipsNet379: the overall embeddedness of nDFA is larger than that of DFA, and nDFA’s \(\tau \) is much smaller than DFA’s. nDFA therefore returns a more reasonable partition than DFA, which puts almost all nodes into one community.
- Con-mat-1999 and Con-mat-2003: although OE(DFA) is larger than OE(nDFA), DFA’s large \(\tau \) shows that it again puts almost all nodes into one community.

In terms of run-time, nDFA processes real-world weighted networks with up to 28000 nodes within tens of seconds. Overall, nDFA returns a larger general modularity and a smaller \(\tau \) than DFA, suggesting that it provides more reasonable community partitions.
For comparative evaluation, the overall embeddedness OE alone is not enough, and OE and \(\tau \) should be considered together. A method that returns a larger OE and a smaller \(\tau \) gives a more reasonable community division, and the method with the larger general modularity tends to have a larger OE and a smaller \(\tau \). In other words, when a method gives a reasonable partition, as nDFA does on all real-world networks in this paper, Q behaves like the combination of a larger OE and a smaller \(\tau \). This supports the effectiveness of our general modularity.

Panel (a) and panel (b) show nDFA’s detection results of CoauthorshipsNet1589 and CoauthorshipsNet379, respectively. Here, different colors are used to distinguish different communities. For visualization, two nodes are connected by a line if there is a positive edge weight between them, and we do not show edge weights here.

Conclusion

In this paper, we introduced the Degree-Corrected Distribution-Free Model (DCDFM) for community detection in weighted networks. DCDFM extends previous Distribution-Free Models by incorporating node heterogeneity, so it can model real-world weighted networks in which node degrees vary. It also extends the classical degree-corrected stochastic blockmodel to weighted networks in two ways: the connectivity matrix may have negative elements, and the elements of the adjacency matrix A may be generated from an arbitrary distribution, as long as the expected adjacency matrix \(\Omega \) has the block structure in Eq. (5).

We develop an efficient spectral algorithm that estimates node labels under DCDFM by applying k-means to all rows of the normalized eigenvectors of the adjacency matrix. Theoretical results obtained by careful spectral analysis guarantee that the algorithm is asymptotically consistent. The distribution-free property of our model allows us to analyze the behavior of our algorithm when \({\mathscr {F}}\) is set to different distributions. When DCDFM degenerates to DFM or DCSBM, our theoretical results match those under DFM or DCSBM. Numerical results on both simulated and empirical weighted networks demonstrate the advantage of our method, which accounts for node heterogeneity.

To compare methods on weighted networks whose community labels are unknown, we also proposed the general modularity as an extension of Newman’s modularity. Results on simulated weighted networks and real-world un-weighted networks suggest that the general modularity is effective. The tools developed in this paper can be widely applied to study the latent structural information of both weighted and un-weighted networks. Another benefit of DCDFM is that it can simulate weighted networks under different distributions.
There are several directions in which the current work can be extended. For example, K is assumed to be known in this paper, but for most real-world weighted networks K is unknown, so estimating K is an interesting topic; possible techniques for estimating K can be found in46,47,48. Similar to4, studying the influence of outlier nodes on weighted networks theoretically is an interesting problem. It would also be interesting to develop a modularity-maximization method for community detection in weighted networks under DCDFM, similar to the one studied in6. Meanwhile, the random-projection and random-sampling ideas developed in35 to accelerate spectral algorithms on large-scale networks could be applied directly to weighted network community detection under DCDFM. We leave these problems for future work.

Data availability

All data and code that support the findings of this study are available from the corresponding author upon reasonable request.

References

Holland, P. W., Laskey, K. B. & Leinhardt, S. Stochastic blockmodels: First steps. Soc. Netw. 5, 109–137 (1983).

Karrer, B. & Newman, M. E. J. Stochastic blockmodels and community structure in networks. Phys. Rev. E 83, 016107 (2011).

Abbe, E. & Sandon, C. Community detection in general stochastic block models: Fundamental limits and efficient algorithms for recovery. In 2015 IEEE 56th Annual Symposium on Foundations of Computer Science, 670–688 (2015).

Cai, T. T. & Li, X. Robust and computationally feasible community detection in the presence of arbitrary outlier nodes. Ann. Stat. 43, 1027–1059 (2015).

Abbe, E., Bandeira, A. S. & Hall, G. Exact recovery in the stochastic block model. IEEE Trans. Inf. Theory 62, 471–487 (2016).

Chen, Y., Li, X. & Xu, J. Convexified modularity maximization for degree-corrected stochastic block models. Ann. Stat. 46, 1573–1602 (2018).

Amini, A. A. & Levina, E. On semidefinite relaxations for the block model. Ann. Stat. 46, 149–179 (2018).

Su, L., Wang, W. & Zhang, Y. Strong consistency of spectral clustering for stochastic block models. IEEE Trans. Inf. Theory 66, 324–338 (2020).

Aicher, C., Jacobs, A. Z. & Clauset, A. Learning latent block structure in weighted networks. J. Complex Netw. 3, 221–248 (2015).

Jog, V. & Loh, P.-L. Information-theoretic bounds for exact recovery in weighted stochastic block models using the Rényi divergence. arXiv preprint arXiv:1509.06418 (2015).

Ahn, K., Lee, K. & Suh, C. Hypergraph spectral clustering in the weighted stochastic block model. IEEE J. Sel. Top. Signal Process. 12, 959–974 (2018).

Palowitch, J., Bhamidi, S. & Nobel, A. B. Significance-based community detection in weighted networks. J. Mach. Learn. Res. 18, 1–48 (2018).

Peixoto, T. P. Nonparametric weighted stochastic block models. Phys. Rev. E 97, 012306 (2018).

Xu, M., Jog, V. & Loh, P.-L. Optimal rates for community estimation in the weighted stochastic block model. Ann. Stat. 48, 183–204 (2020).

Ng, T. L. J. & Murphy, T. B. Weighted stochastic block model. Stat. Methods Appl. (2021).

Qing, H. Distribution-free models for community detection. arXiv preprint arXiv:2111.07495 (2021).

Rohe, K., Chatterjee, S. & Yu, B. Spectral clustering and the high-dimensional stochastic blockmodel. Ann. Stat. 39, 1878–1915 (2011).

Qin, T. & Rohe, K. Regularized spectral clustering under the degree-corrected stochastic blockmodel. Adv. Neural Inf. Process. Syst. 26, 3120–3128 (2013).

Jin, J. Fast community detection by SCORE. Ann. Stat. 43, 57–89 (2015).

Lei, J. & Rinaldo, A. Consistency of spectral clustering in stochastic block models. Ann. Stat. 43, 215–237 (2015).

Sengupta, S. & Chen, Y. Spectral clustering in heterogeneous networks. Stat. Sin. 25, 1081–1106 (2015).

Joseph, A. & Yu, B. Impact of regularization on spectral clustering. Ann. Stat. 44, 1765–1791 (2016).

Newman, M. E. Modularity and community structure in networks. Proc. Natl. Acad. Sci. 103, 8577–8582 (2006).

Orman, G. K., Labatut, V. & Cherifi, H. Comparative evaluation of community detection algorithms: A topological approach. J. Stat. Mech: Theory Exp. 2012, P08001 (2012).

Airoldi, E. M., Wang, X. & Lin, X. Multi-way blockmodels for analyzing coordinated high-dimensional responses. Ann. Appl. Stat. 7, 2431 (2013).

Tsironis, S., Sozio, M., Vazirgiannis, M. & Poltechnique, L. Accurate spectral clustering for community detection in MapReduce. In Advances in Neural Information Processing Systems (NIPS) Workshops, 8 (Citeseer, 2013).

Rohe, K., Qin, T. & Yu, B. Co-clustering directed graphs to discover asymmetries and directional communities. Proc. Natl. Acad. Sci. 113, 12679–12684 (2016).

Jin, J., Ke, Z. T. & Luo, S. Estimating network memberships by simplex vertex hunting. arXiv: Methodology (2017).

Mao, X., Sarkar, P. & Chakrabarti, D. Estimating mixed memberships with sharp eigenvector deviations. J. Am. Stat. Assoc. 1–13 (2020).

Zhang, Y., Levina, E. & Zhu, J. Detecting overlapping communities in networks using spectral methods. SIAM J. Math. Data Sci. 2, 265–283 (2020).

Mao, X., Sarkar, P. & Chakrabarti, D. Overlapping clustering models, and one (class) SVM to bind them all. Adv. Neural Inf. Process. Syst. 31, 2126–2136 (2018).

Jing, B., Li, T., Ying, N. & Yu, X. Community detection in sparse networks using the symmetrized Laplacian inverse matrix (SLIM). Stat. Sin. (2021).

Zhou, Z. & Amini, A. A. Analysis of spectral clustering algorithms for community detection: The general bipartite setting. J. Mach. Learn. Res. 20, 1–47 (2019).

Wang, Z., Liang, Y. & Ji, P. Spectral algorithms for community detection in directed networks. J. Mach. Learn. Res. 21, 1–45 (2020).

Zhang, H., Guo, X. & Chang, X. Randomized spectral clustering in large-scale stochastic block models. J. Comput. Graphical Stat. 1–20 (2022).

Tropp, J. A. User-friendly tail bounds for sums of random matrices. Found. Comput. Math. 12, 389–434 (2012).

Qing, H. & Wang, J. Consistency of spectral clustering for directed network community detection. arXiv preprint arXiv:2109.10319 (2021).

Lancichinetti, A., Kivelä, M., Saramäki, J. & Fortunato, S. Characterizing the community structure of complex networks. PLoS ONE 5, e11976 (2010).

Jin, J., Ke, Z. T. & Luo, S. Improvements on SCORE, especially for weak signals. Sankhya A 1–36 (2021).

Yang, B., Cheung, W. & Liu, J. Community mining from signed social networks. IEEE Trans. Knowl. Data Eng. 19, 1333–1348 (2007).

Kunegis, J. KONECT: The Koblenz network collection. In Proceedings of the 22nd International Conference on World Wide Web, 1343–1350 (2013).

Zachary, W. W. An information flow model for conflict and fission in small groups. J. Anthropol. Res. 33, 452–473 (1977).

Read, K. E. Cultures of the Central Highlands, New Guinea. Southwest. J. Anthropol. 10, 1–43 (1954).

Newman, M. E. Finding community structure in networks using the eigenvectors of matrices. Phys. Rev. E 74, 036104 (2006).

Newman, M. E. The structure of scientific collaboration networks. Proc. Natl. Acad. Sci. 98, 404–409 (2001).

Newman, M. E. J. & Reinert, G. Estimating the number of communities in a network. Phys. Rev. Lett. 117, 078301 (2016).

Saldaña, D. F., Yu, Y. & Feng, Y. How many communities are there. J. Comput. Graph. Stat. 26, 171–181 (2017).

Chen, K. & Lei, J. Network cross-validation for determining the number of communities in network data. J. Am. Stat. Assoc. 113, 241–251 (2018).

Acknowledgements

This work was supported by the High level personal project of Jiangsu Province (JSSCBS20211218).

Author information

Contributions

H.Q. is the sole author of the paper.

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Qing, H. Degree-corrected distribution-free model for community detection in weighted networks. Sci Rep 12, 15153 (2022). https://doi.org/10.1038/s41598-022-19456-2

