Abstract

Preoperative neutrophil–lymphocyte ratio (NLR) has shown predictive value in living donor liver transplantation (LDLT). However, the change in NLR during LDLT has not been fully investigated. We aimed to compare graft survival between recipients whose NLR increased and those whose NLR decreased during LDLT. From June 1997 to April 2019, we identified 1292 adult LDLT recipients with available data on intraoperative NLR change. The recipients were divided according to the direction of NLR change: 103 (8.0%) in the decrease group and 1189 (92.0%) in the increase group. The primary outcome was graft failure within the first year. In addition, variables associated with NLR change during LDLT were evaluated. During the 1-year follow-up, graft failure was significantly more frequent in the decrease group (22.3% vs. 9.1%; hazard ratio 1.87; 95% confidence interval 1.10–3.18; p = 0.02), whereas postoperative complications did not differ between the two groups. This finding was consistent throughout the overall follow-up period. Variables associated with NLR decrease included preoperative NLR > 4, model for end-stage liver disease score, intraoperative inotropic infusion and red blood cell transfusion, and operative duration. The least absolute shrinkage and selection operator model yielded similar results. NLR decrease during LDLT appeared to be independently associated with worse graft survival. Further studies are needed to confirm our findings.


Introduction

Neutrophil–lymphocyte ratio (NLR) is a simple, inexpensive, readily available, and reproducible index that reflects the systemic inflammatory response1. The NLR has shown predictive value in various clinical situations, including cardiovascular disease, cancer, and liver cirrhosis2,3,4. These findings have also been consistently reported in surgical patients, in whom a high perioperative NLR was associated with adverse events after cardiac surgery and recurrence after cancer resection5,6,7,8,9,10.

Liver transplantation is an established treatment modality for end-stage liver disease and unresectable hepatocellular carcinoma (HCC) with or without cirrhotic change. Living donor liver transplantation (LDLT) provides a survival benefit as well as a reduced waiting time for candidates11,12. In liver transplant candidates, high NLR is a predictor of mortality13, and preoperative elevation of NLR has also shown significant correlations with higher mortality and recurrence rates after liver transplantation for HCC14,15. Based on these findings, preoperative NLR was suggested to be helpful in selecting adequate HCC recipients for LDLT14. However, the current clinical use of NLR in LDLT seems to be limited to predicting outcomes from its preoperative value.

Postoperative NLR independently predicts mortality and adverse outcomes such as acute kidney injury and cardiovascular events after various surgeries and is associated with the recurrence rate after cancer resection8,9,10,16,17. Furthermore, previous studies have suggested that controlling intraoperative inflammation, as indicated by the NLR, may improve postoperative outcomes18. We therefore hypothesized that the change in NLR during LDLT could reflect the operative burden and be associated with graft survival. In this study, we enrolled LDLT recipients with available data on NLR change during surgery and compared the incidence of graft failure between those whose NLR increased and those whose NLR decreased. We also calculated the attributable fraction (AF) of each variable for graft failure and evaluated the variables associated with NLR change during LDLT using logistic regression and least absolute shrinkage and selection operator (LASSO) models. Our findings may provide valuable information on the association between intraoperative NLR change and clinical outcomes in LDLT and may offer direction for perioperative management and future studies.

Results

Baseline characteristics

From June 1997 to April 2019, a total of 1349 adult-to-adult LDLTs were performed at our institution. Of these, 1306 LDLTs with available pre- and postoperative NLR data were included, and 14 re-transplantation cases were excluded, leaving 1292 LDLT recipients for the study. Smooth plots of the change in odds ratio (OR) were constructed and showed a significant association when the reference value was an absolute NLR change of 0 (Fig. 1); we therefore divided the recipients into two groups according to whether NLR decreased or increased during LDLT: 103 (8.0%) recipients in the decrease group and 1189 (92.0%) in the increase group. The baseline characteristics are summarized in Table 1. The decrease group included fewer males and was generally younger than the increase group. Recipients in the decrease group had higher incidences of alcoholism, hepatorenal syndrome, and encephalopathy. They also had longer preoperative intensive care unit (ICU) stays and higher model for end-stage liver disease (MELD) and Child–Pugh scores. The preoperative NLR and the incidence of NLR > 4 were higher in the decrease group, but the absolute postoperative NLR was lower. Regarding operative variables, inotropic infusion was used less frequently and packed red blood cells were transfused more frequently in the decrease group. The operative duration was also longer in the decrease group.

Fig. 1. Smooth plots of the change in odds ratio for 1-year graft failure when the reference value was (A) an absolute NLR change of 0, (B) the median absolute NLR change, (C) 80% of the absolute NLR change, (D) an NLR percentage change of 0, (E) the median NLR percentage change, and (F) 40% of the NLR percentage change. NLR percentage change is defined as (postoperative NLR − preoperative NLR)/preoperative NLR.

Clinical outcomes

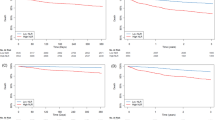

Clinical outcomes according to the change in NLR during LDLT are summarized in Table 2. For the 1-year follow-up, the median duration was 365 [365–365] days in both groups. After adjustment, the incidence and risk of graft failure within 1 year were significantly higher in the decrease group [22.3% vs. 9.1%; hazard ratio (HR) 1.87; 95% confidence interval (CI) 1.11–3.21; p = 0.02] (Table 2, Fig. 2A). The causes of graft failure in both groups are summarized in Table S1 (Supporting Information). After adjustment, the incidences of postoperative complications and graft rejection did not differ between the groups during the 1-year follow-up. Subgroup analysis revealed no significant interaction (Fig. 3). The AFs of the significant variables for 1-year graft failure were 8.5% for NLR decrease and 14.5% for preoperative ICU stay (Table 3). In the sensitivity analysis, the effect of an unmeasured confounder on the observed association was computed assuming a confounder prevalence of 40%; the association remained significant under all scenarios (Table S2, Supporting Information). Additionally, the observed association was significant both before and after November 2010 (Table S3, Supporting Information).
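To make the unmeasured-confounder sensitivity analysis concrete, the sketch below applies the external-adjustment (bias-factor) formula, one of the approaches reviewed by Groenwold et al.29, treating the hazard ratio as an approximate risk ratio. It assumes a binary confounder U with prevalence p1 among recipients with NLR decrease and p0 among those with NLR increase, which multiplies the risk of graft failure by gamma in both groups. The function names and the scenario values (p1 = 0.5, p0 = 0.4, gamma = 2) are hypothetical and are not the scenarios behind Table S2.

```python
def bias_factor(p1: float, p0: float, gamma: float) -> float:
    """Bias factor for a binary unmeasured confounder U (hypothetical helper).

    p1: prevalence of U among exposed recipients (NLR decrease)
    p0: prevalence of U among unexposed recipients (NLR increase)
    gamma: ratio by which U multiplies the outcome risk, assumed equal in both groups
    """
    return (p1 * (gamma - 1.0) + 1.0) / (p0 * (gamma - 1.0) + 1.0)


def adjust_ratio(observed: float, p1: float, p0: float, gamma: float) -> float:
    """Divide an observed ratio measure by the bias factor to get the U-adjusted ratio."""
    return observed / bias_factor(p1, p0, gamma)


# Point estimate and lower 95% CI limit as reported in the Results text.
hr_obs, ci_low = 1.87, 1.11
# Hypothetical scenario: U is more common among exposed recipients and doubles the risk.
p1, p0, gamma = 0.5, 0.4, 2.0
hr_adj = adjust_ratio(hr_obs, p1, p0, gamma)
ci_low_adj = adjust_ratio(ci_low, p1, p0, gamma)
print(f"adjusted HR = {hr_adj:.2f}, adjusted lower CI = {ci_low_adj:.2f}")
```

Repeating the calculation over a grid of p1, p0, and gamma shows how strong and how unevenly distributed a confounder would have to be before the adjusted lower confidence limit fell below 1.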

For outcomes during the overall follow-up period, the median duration was 5.64 [2.01–10.40] years in the increase group and 7.04 [1.02–14.77] years in the decrease group (p = 0.54). Consistent with the 1-year results, the incidence of graft failure during the overall follow-up period was higher in the decrease group (49.5% vs. 26.0%; HR 1.62; 95% CI 1.15–2.28; p = 0.01) (Table 2, Fig. 2B). Additionally, the composite of life-threatening complications and death during the overall follow-up was significantly more frequent in recipients with NLR decrease during LDLT.

Variables associated with NLR decrease during LDLT

In the logistic regression model, variables significantly associated with NLR decrease during LDLT were preoperative NLR > 4 (OR 9.17; 95% CI 4.92–18.09; p < 0.001), MELD score (OR 1.05; 95% CI 1.01–1.09; p = 0.02), use of inotropic continuous infusion (OR 0.23; 95% CI 0.13–0.39; p < 0.001), intraoperative packed red blood cell transfusion (OR 2.71; 95% CI 1.67–7.20; p = 0.03), and operative duration (OR 1.00; 95% CI 1.00–1.01; p < 0.001) (Table 4). The LASSO model showed results similar to those of the logistic regression analysis and additionally identified age, encephalopathy, cirrhotic disease, and preoperative ICU stay as associated with NLR decrease (Fig. 4).
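The LASSO analysis was performed in R; the following pure-Python sketch only illustrates the technique, an L1-penalized logistic regression fitted by proximal gradient descent (ISTA), and is not the authors' code. The data are synthetic (one informative predictor and two pure-noise predictors), and the penalty lam = 0.05 is an arbitrary, hypothetical choice; in practice the penalty is usually selected by cross-validation and predictors are standardized first.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def soft_threshold(z: float, t: float) -> float:
    """Proximal operator of t*|b|: shrinks z towards 0 by t and sets small values to 0."""
    if z > t:
        return z - t
    if z < -t:
        return z + t
    return 0.0


def lasso_logistic(X, y, lam, lr=0.5, n_iter=500):
    """Minimise mean log-loss + lam * sum(|beta_j|) by proximal gradient descent.

    The intercept b0 is not penalised. X: list of rows, y: list of 0/1 outcomes.
    """
    n, p = len(X), len(X[0])
    b0, beta = 0.0, [0.0] * p
    for _ in range(n_iter):
        g0, g = 0.0, [0.0] * p
        for xi, yi in zip(X, y):
            # residual = predicted probability - observed outcome
            r = sigmoid(b0 + sum(bj * xj for bj, xj in zip(beta, xi))) - yi
            g0 += r / n
            for j in range(p):
                g[j] += r * xi[j] / n
        b0 -= lr * g0  # plain gradient step for the intercept
        # gradient step followed by soft-thresholding for the penalised coefficients
        beta = [soft_threshold(beta[j] - lr * g[j], lr * lam) for j in range(p)]
    return b0, beta


# Synthetic example: x1 is informative, x2 and x3 are pure noise.
random.seed(1)
X, y = [], []
for _ in range(400):
    row = [random.gauss(0.0, 1.0) for _ in range(3)]
    X.append(row)
    y.append(1 if random.random() < sigmoid(-1.0 + 2.0 * row[0]) else 0)

b0, beta = lasso_logistic(X, y, lam=0.05)
print([round(b, 2) for b in beta])
```

Larger penalties push more coefficients to exactly zero, which is how the LASSO performs variable selection rather than only shrinking estimates.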

Discussion

The main findings of the present study were as follows: (1) NLR decreased during LDLT in 8.0% of the recipients; (2) the incidence of graft failure was significantly higher in recipients with NLR decrease during LDLT; and (3) NLR decrease during LDLT was associated with preoperative variables, such as NLR > 4 and MELD score, and intraoperative variables, such as inotropic infusion, packed red blood cell transfusion, and operative duration. These findings indicate that the change in NLR during LDLT may be associated with pre- and intraoperative conditions and may predict graft failure independently of the absolute postoperative NLR.

Several biomarkers have been identified to detect and measure systemic inflammation, most of which require additional time and cost1. NLR has the advantage of being inexpensive and readily available through daily measurements, and substantial evidence supports its association with clinical outcomes in acute and chronic conditions1,19,20,21. In previous studies of surgical patients, postoperative elevation of NLR has consistently been associated with a higher incidence of complications after cardiac and non-cardiac surgery and with recurrence after cancer surgery8,9,10,16. Because there has been no previous report on postoperative NLR in LDLT, we first evaluated the change in risk of graft failure according to postoperative NLR and different reference values of NLR change during LDLT. Based on this initial analysis, the recipients were divided into increase and decrease groups according to the dynamics of NLR during surgery rather than the absolute postoperative NLR. Indeed, absolute postoperative values of inflammatory markers have shown limited clinical utility because an inflammatory response during surgery is inevitable. This response is characterized by a rise in circulating neutrophils accompanied by a fall in lymphocytes22.

In this study, we evaluated the change in NLR during surgery instead of its absolute value and demonstrated that a decrease in NLR during LDLT was associated with a higher incidence of graft failure despite a lower absolute postoperative NLR. Our explanation for this result is that an increase in NLR may be regarded as a normal reaction to surgical injury23,24, whereas a decrease in NLR during surgery may be a surrogate marker of the generally poor condition of recipients who cannot adequately respond to the metabolic stress of LDLT. Indeed, hematologic abnormalities are known to be associated with poor prognosis in cirrhotic patients25, and the causes of graft failure also support our hypothesis. Compared with the increase group, the decrease group had a higher incidence of bleeding associated with graft failure, and more of its graft failures were caused by pulmonary and cardiac complications, which are more closely related to the general condition of patients, than by graft rejection or surgical complications. However, the degree of NLR increase that can be considered normal requires further investigation, as does the question of whether a very large increase in NLR is associated with adverse outcomes.

In addition to the aforementioned explanation for cirrhotic recipients, a separate explanation may be needed for recipients with non-cirrhotic HCC. The prognostic impact of the inflammatory response has distinct underlying mechanisms in cirrhotic disease and HCC. In cirrhotic disease, alterations in the intestinal barrier, along with increased luminal aerobic gram-negative bacilli, bacterial translocation, bacteremic events, and endotoxemia, lead to increased production of inflammatory cytokines, which has prognostic significance26. In contrast, the effect of the inflammatory response on carcinogenesis plays a key role in the recurrence of HCC15. In previous studies, high NLR was associated with mortality among liver transplant candidates overall13. However, in recipients who actually underwent transplantation, preoperative NLR was mainly associated with the outcomes of liver transplantation for HCC14,15. In our subgroup analysis, no significant interaction was observed for the association between NLR decrease and graft failure; however, given the low incidence of NLR decrease, a larger study is needed to evaluate the generalizability of this finding.

Preoperative NLR elevation seems to play a complex role in our analysis. It was a strong predictor of NLR decrease during LDLT, but its calculated AF for graft failure was not significant in the multivariable model once intraoperative NLR decrease was retained. Variables associated with NLR decrease during LDLT also included the MELD score, supporting our explanation that disease progression and the underlying condition of the recipients may be involved in this association. Of note, intraoperative inotropic infusion and packed red blood cell transfusion also appeared to affect the incidence of NLR decrease. This finding suggests that adequate intraoperative management might prevent NLR decrease, but this requires further investigation. Control of perioperative inflammation has been associated with improved outcomes in various cancer surgeries18, but whether preventing intraoperative NLR decrease could improve outcomes in LDLT, or whether postoperative immunosuppression should be adjusted in recipients with NLR decrease, also requires investigation.

Our results should be interpreted in light of the following limitations. First, as this was a single-center retrospective study, our results may have been affected by confounding factors. Although relevant variables were adjusted for, the effects of unmeasured variables may remain. A well-designed prospective study with a larger number of patients may be needed to confirm our findings. Second, because of the long study period, advances in surgical techniques and postoperative management may have biased the results. Third, because of the low incidence of NLR decrease during LDLT, our results may have been biased despite statistical adjustment and a larger number of recipients than in previous studies. Despite these limitations, this is the first study to demonstrate the negative impact of NLR decrease during LDLT.

Conclusion

The incidence of graft failure was significantly higher in recipients with an NLR decrease during LDLT. Further studies are needed to confirm our findings.

Methods

Study population, data collection, and study endpoints

This retrospective observational cohort study was approved by the Institutional Review Board at our institution (Samsung Medical Center 2019-12-144) and was conducted according to the Declaration of Helsinki. The need for written informed consent was waived by the Institutional Review Board in view of the minimal risk to participants and the retrospective nature of the study.

We reviewed the entire cohort of liver transplantations performed at our institution between June 1997 and April 2019 and identified adult-to-adult LDLT recipients. Recipients were required to have NLR data available for both the pre- and postoperative periods: the preoperative sample had to be obtained before anesthetic induction and the postoperative sample immediately after arrival in the ICU. In recipients with multiple liver transplantations, only the first transplantation was included in the analysis. In the enrolled recipients, smooth plots of the change in OR for 1-year graft failure were constructed according to different reference values of absolute NLR change and NLR percentage change, defined as (postoperative NLR − preoperative NLR)/preoperative NLR. Based on the smooth plots, recipients were divided into NLR increase and decrease groups using an absolute NLR change of 0 as the cutoff. Clinical, laboratory, and outcome data were collected by a trained study coordinator who was not otherwise involved in this study, and NLR and its change were calculated by another investigator who was blinded to clinical outcomes. Data from all recipients were analyzed anonymously.
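The grouping rule amounts to a small calculation per recipient. The sketch below assumes neutrophil and lymphocyte counts from the pre-induction and ICU-arrival samples expressed in the same units; the class and function names are hypothetical. The source does not state how an NLR change of exactly 0 was classified, so assigning it to the increase group here is an assumption.

```python
from dataclasses import dataclass


@dataclass
class NLRSample:
    """Neutrophil and lymphocyte counts from one blood sample (same units for both)."""
    neutrophils: float
    lymphocytes: float

    @property
    def nlr(self) -> float:
        if self.lymphocytes <= 0:
            raise ValueError("lymphocyte count must be positive")
        return self.neutrophils / self.lymphocytes


def classify_nlr_change(pre: NLRSample, post: NLRSample) -> dict:
    """Absolute and percentage NLR change between pre-induction and ICU-arrival samples."""
    pre_nlr, post_nlr = pre.nlr, post.nlr
    absolute = post_nlr - pre_nlr
    return {
        "pre_nlr": pre_nlr,
        "post_nlr": post_nlr,
        "absolute_change": absolute,
        # (post - pre) / pre, expressed as a fraction (multiply by 100 for %)
        "percentage_change": absolute / pre_nlr,
        # cutoff at an absolute change of 0; exactly 0 goes to "increase" (assumption)
        "group": "decrease" if absolute < 0 else "increase",
        "pre_nlr_above_4": pre_nlr > 4,
    }


result = classify_nlr_change(NLRSample(5.0, 1.0), NLRSample(6.0, 1.5))
print(result["group"], result["absolute_change"], result["percentage_change"])
```

In this hypothetical example the NLR falls from 5.0 to 4.0, so the recipient would be placed in the decrease group and flagged as having a preoperative NLR > 4, even though the postoperative value is the lower of the two.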

The primary endpoint of this study was graft failure during the 1-year follow-up. Graft failure was defined as death or re-transplantation; recipients who underwent re-transplantation before death were counted under both death and re-transplantation in the table. One secondary endpoint was graft failure during the overall follow-up. Another secondary endpoint was the composite of complications graded according to the Clavien–Dindo classification and graft rejection during the overall follow-up. The classification grades were briefly defined as follows: IIIa, complications requiring intervention without general anesthesia; IIIb, complications requiring intervention under general anesthesia; IV, life-threatening complications; and V, death27. Graft rejection was confirmed by biopsy when clinically suspected.
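For time-to-event analysis, each recipient's 1-year graft-failure status can be coded as a (time, event) pair. In the sketch below, the function and argument names are hypothetical; graft failure is taken as the earlier of death or re-transplantation, so a recipient who was re-transplanted and later died contributes a single event at the re-transplantation date, and recipients without an event are censored at last follow-up or at 365 days, whichever comes first.

```python
from datetime import date
from typing import Optional, Tuple


def one_year_graft_failure(
    transplant: date,
    last_follow_up: date,
    death: Optional[date] = None,
    retransplant: Optional[date] = None,
    horizon_days: int = 365,
) -> Tuple[int, int]:
    """Return (time_in_days, event) for graft failure within `horizon_days`.

    event = 1 if death or re-transplantation (whichever is first) occurs within the
    horizon; otherwise event = 0 and time is censored at last follow-up or the horizon.
    """
    failure_dates = [d for d in (death, retransplant) if d is not None]
    if failure_dates:
        t_fail = (min(failure_dates) - transplant).days
        if t_fail <= horizon_days:
            return t_fail, 1
    t_censor = min((last_follow_up - transplant).days, horizon_days)
    return t_censor, 0


# Hypothetical recipient re-transplanted on day 60 and dying later in the same year.
print(one_year_graft_failure(date(2015, 1, 1), date(2015, 5, 1),
                             death=date(2015, 5, 1), retransplant=date(2015, 3, 2)))
```

Capping follow-up at the 1-year horizon is also why the median 1-year follow-up can equal 365 days when most grafts survive the first year.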

Donor selection and surgical procedures

Donor selection criteria and surgical procedures at our institution have been described previously28. In brief, our criteria included an adult donor younger than 65 years, a body mass index below 35 kg/m², biochemistry results within the normal range, an adequate graft size, and an expected remnant liver volume of more than 30%. Donors with any condition associated with increased donor risk were excluded.

Most grafts were right-lobe grafts consisting of segments 5 to 8 according to the Couinaud classification. The surgical margin of the graft was determined after considering anatomical characteristics. In the donor, full mobilization of the liver was achieved by dissecting the perihepatic ligaments, and cholecystectomy was performed. After the bifurcations of the hepatic duct, portal vein, and hepatic artery had been identified, the intraparenchymal hepatic vein was identified with the aid of intraoperative ultrasound. The bile duct was transected after completion of parenchymal dissection, and the graft was removed.

The harvested graft was implanted into the recipient using a piggyback technique. The right hepatic vein was anastomosed first, followed, if necessary, by anastomosis of the inferior hepatic vein. After portal vein anastomosis, the hepatic vein and portal vein were unclamped to reperfuse the graft. After reperfusion, segmental veins were anastomosed to the inferior vena cava using a cryopreserved vascular allograft, and the hepatic artery and biliary tract were then anastomosed. All recipients were routinely transferred to the ICU after surgery.

Anesthetic care and perioperative management

Standardized anesthesia was performed in all recipients according to the institutional protocol. Under standard monitoring of vital signs (peripheral capillary oxygen saturation, 5-lead electrocardiography, and non-invasive arterial blood pressure), anesthesia was induced with thiopental sodium and maintained with isoflurane titrated to a bispectral index of 40 to 60. The radial artery, femoral artery and vein, and internal jugular vein were cannulated for direct hemodynamic monitoring and blood sampling. Initial arterial blood gas analysis and a complete blood cell count were performed before the surgical incision and repeated throughout the procedure. Mechanical ventilation was set with a tidal volume of 8 to 10 mL/kg of ideal body weight using a mixture of medical air and oxygen at a fresh gas flow of 2 L/min, and the respiratory rate was continuously adjusted to maintain normocapnia. Remifentanil was administered in response to hemodynamic changes. Intravenous fluids were infused to maintain a central venous pressure of at least 5 mmHg. Continuous infusion of inotropic drugs, such as dopamine, norepinephrine, and vasopressin, was administered to maintain a mean arterial pressure of at least 70 mmHg. A bolus of epinephrine was given when sudden hemodynamic instability was anticipated or had occurred. Packed red blood cells were transfused intraoperatively when blood hemoglobin was < 8.0 g/dL. To maintain normothermia, a warming blanket and a fluid warmer were used, and the room temperature was thermostatically set at 24 °C.
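The protocol specifies tidal volume per kilogram of ideal body weight but does not name the weight formula used. As an illustration only, the sketch below assumes the Devine-type predicted body weight equation used in the ARDS Network trials (50 kg for men or 45.5 kg for women, plus 0.91 kg per cm above 152.4 cm); the function names are hypothetical.

```python
def ideal_body_weight_kg(height_cm: float, male: bool) -> float:
    """Predicted (ideal) body weight; the Devine/ARDSNet equation is an assumption here."""
    base = 50.0 if male else 45.5
    return base + 0.91 * (height_cm - 152.4)


def tidal_volume_range_ml(height_cm: float, male: bool,
                          low_ml_per_kg: float = 8.0,
                          high_ml_per_kg: float = 10.0) -> tuple:
    """Tidal-volume window (mL) for the 8 to 10 mL/kg target stated in the protocol."""
    ibw = ideal_body_weight_kg(height_cm, male)
    return low_ml_per_kg * ibw, high_ml_per_kg * ibw


# Hypothetical 170 cm male recipient.
low, high = tidal_volume_range_ml(170.0, male=True)
print(f"{low:.0f}-{high:.0f} mL")
```

Basing the setting on height-derived weight rather than actual weight keeps the target independent of ascites or fluid overload, which can inflate measured body weight in transplant candidates.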

A full blood test was performed immediately on arrival in the ICU, and routine blood tests were performed daily during the hospital stay. Oxygenation, nutritional support, and early feeding and ambulation were encouraged, and recipients were closely monitored for early detection of postoperative complications such as bleeding, thrombosis, and biliary leakage or stricture.

Statistical analysis

To divide the recipients according to NLR change during LDLT, we constructed six smooth plots of the change in OR for 1-year graft failure when the reference value was an absolute NLR change of 0, the median absolute NLR change, 80% of the absolute NLR change, an NLR percentage change of 0, the median NLR percentage change, and 40% of the NLR percentage change. NLR percentage change was defined as (postoperative NLR − preoperative NLR)/preoperative NLR. Continuous data were compared between groups using the t-test or the Mann–Whitney test, as appropriate, and are presented as mean ± standard deviation. Categorical data are presented as numbers and were compared using the χ² test or Fisher's exact test. Kaplan–Meier estimates were used to construct survival curves for each group, which were compared with the log-rank test. Multivariable Cox regression analysis was used to adjust for differences between the two groups. Variables for the multivariable analysis were selected based on clinical relevance or p < 0.05. The retained variables were age, sex, MELD score, operative duration, alcoholism, hepatorenal syndrome, hypertension, preoperative ICU treatment, viral disease, cirrhotic disease, HCC, preoperative NLR > 4, donor sex, and donor age. Results are reported as HRs with 95% CIs. For sensitivity analysis, we estimated the potential impact of unmeasured confounders29. Considering the long study period, a further sensitivity analysis compared earlier and later cases: recipients were ordered chronologically and divided at the median, and the significance of the observed association between NLR change and 1-year graft failure was evaluated separately in recipients who underwent LDLT before and after November 2010.
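The Kaplan–Meier and log-rank steps were run in R; as a language-neutral illustration of what they compute, the sketch below implements the product-limit estimator and the two-sample log-rank test (chi-square with 1 degree of freedom) in plain Python. It is not the authors' code, omits confidence intervals and stratification, and the toy data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def kaplan_meier(times: Sequence[float], events: Sequence[int]) -> List[Tuple[float, float]]:
    """Product-limit estimate S(t) at each distinct event time (event=1, censored=0)."""
    data = sorted(zip(times, events))
    at_risk, surv, curve, i = len(data), 1.0, [], 0
    while i < len(data):
        t, deaths, leaving = data[i][0], 0, 0
        # group all observations tied at time t
        while i < len(data) and data[i][0] == t:
            deaths += data[i][1]
            leaving += 1
            i += 1
        if deaths:
            surv *= 1.0 - deaths / at_risk
            curve.append((t, surv))
        at_risk -= leaving  # events and censorings at t leave the risk set afterwards
    return curve


def log_rank(times_a: Sequence[float], events_a: Sequence[int],
             times_b: Sequence[float], events_b: Sequence[int]) -> Tuple[float, float]:
    """Two-sample log-rank test; returns (chi-square statistic, p-value) with 1 df."""
    pooled = [(t, e, 0) for t, e in zip(times_a, events_a)] + \
             [(t, e, 1) for t, e in zip(times_b, events_b)]
    o_minus_e, var = 0.0, 0.0
    for t in sorted({s for s, e, _ in pooled if e}):
        n_a = sum(1 for s, _, g in pooled if g == 0 and s >= t)
        n = sum(1 for s, _, _ in pooled if s >= t)
        d_a = sum(1 for s, e, g in pooled if g == 0 and s == t and e)
        d = sum(1 for s, e, _ in pooled if s == t and e)
        o_minus_e += d_a - d * n_a / n  # observed minus expected events in group A
        if n > 1:
            var += d * (n_a / n) * (1 - n_a / n) * (n - d) / (n - 1)
    if var == 0:
        return 0.0, 1.0
    chi2 = o_minus_e ** 2 / var
    return chi2, math.erfc(math.sqrt(chi2 / 2.0))  # upper tail of chi-square(1)


curve = kaplan_meier([1, 2, 2, 3, 4], [1, 1, 0, 1, 0])
print([(t, round(s, 2)) for t, s in curve])
```

Censored observations lower the number at risk without producing a step in the curve, which is why the follow-up structure matters as much as the event count.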

Subgroup analysis was also conducted to detect potential interactions with relevant variables. To compare the impact of NLR decrease on graft failure with that of other variables, we calculated the AF for each variable based on the results of the Cox proportional hazards model30. This measure represents the proportional reduction in the outcome in the population that would occur if the prevalence of the variable were reduced to zero. Multivariable logistic regression analysis was used to identify variables associated with NLR change during LDLT, and results are reported as ORs with 95% CIs. The LASSO model, a regression method that fits a generalized linear model via penalized maximum likelihood and thereby selects variables, was additionally used for variable selection31. All statistical analyses were performed with R 3.6.1 (Vienna, Austria; http://www.R-project.org/). All tests were two-tailed, and p < 0.05 was considered statistically significant.
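Rückinger et al.30 describe attributable fractions that account for multiple risk factors simultaneously. As a simpler illustration, the sketch below computes Miettinen's case-based attributable fraction for a single binary variable, AF = p_c(HR − 1)/HR, where p_c is the proportion of graft failures occurring in exposed recipients and the adjusted HR stands in for the relative risk. The function name and example counts are hypothetical, and this single-factor formula is not expected to reproduce the values in Table 3 exactly.

```python
def attributable_fraction(exposed_cases: int, total_cases: int, adjusted_ratio: float) -> float:
    """Miettinen's case-based attributable fraction for one binary variable.

    AF = p_c * (RR - 1) / RR, where p_c is the share of cases that were exposed and RR
    is an adjusted ratio measure (here an adjusted hazard ratio used as an approximation).
    """
    if total_cases <= 0 or not 0 <= exposed_cases <= total_cases:
        raise ValueError("invalid case counts")
    if adjusted_ratio <= 0:
        raise ValueError("ratio must be positive")
    p_c = exposed_cases / total_cases
    return p_c * (adjusted_ratio - 1.0) / adjusted_ratio


# Hypothetical: 20 of 100 graft failures occurred in exposed recipients, adjusted HR 2.0.
af = attributable_fraction(20, 100, 2.0)
print(f"AF = {af:.1%}")
```

A variable that is common among cases but only weakly associated with the outcome can have an AF similar to a rare but strong one, which is why the AF complements the HR when ranking variables.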

Abbreviations

- NLR: Neutrophil–lymphocyte ratio
- LDLT: Living donor liver transplantation
- HCC: Hepatocellular carcinoma
- ICU: Intensive care unit

References

Imtiaz, F. et al. Neutrophil lymphocyte ratio as a measure of systemic inflammation in prevalent chronic diseases in Asian population. Int. Arch. Med. 5, 2. https://doi.org/10.1186/1755-7682-5-2 (2012).

Biyik, M. et al. Blood neutrophil-to-lymphocyte ratio independently predicts survival in patients with liver cirrhosis. Eur. J. Gastroenterol. Hepatol. 25, 435–441. https://doi.org/10.1097/MEG.0b013e32835c2af3 (2013).

Proctor, M. J. et al. A derived neutrophil to lymphocyte ratio predicts survival in patients with cancer. Br. J. Cancer 107, 695–699. https://doi.org/10.1038/bjc.2012.292 (2012).

Afari, M. E. & Bhat, T. Neutrophil to lymphocyte ratio (NLR) and cardiovascular diseases: an update. Expert Rev. Cardiovasc. Ther. 14, 573–577. https://doi.org/10.1586/14779072.2016.1154788 (2016).

Paramanathan, A., Saxena, A. & Morris, D. L. A systematic review and meta-analysis on the impact of pre-operative neutrophil lymphocyte ratio on long term outcomes after curative intent resection of solid tumours. Surg. Oncol. 23, 31–39. https://doi.org/10.1016/j.suronc.2013.12.001 (2014).

Gibson, P. H. et al. Preoperative neutrophil–lymphocyte ratio and outcome from coronary artery bypass grafting. Am. Heart J. 154, 995–1002. https://doi.org/10.1016/j.ahj.2007.06.043 (2007).

Yamanaka, T. et al. The baseline ratio of neutrophils to lymphocytes is associated with patient prognosis in advanced gastric cancer. Oncology 73, 215–220. https://doi.org/10.1159/000127412 (2007).

Gibson, P. H. et al. Usefulness of neutrophil/lymphocyte ratio as predictor of new-onset atrial fibrillation after coronary artery bypass grafting. Am. J. Cardiol. 105, 186–191. https://doi.org/10.1016/j.amjcard.2009.09.007 (2010).

Koo, C. H. et al. Neutrophil, lymphocyte, and platelet counts and acute kidney injury after cardiovascular surgery. J. Cardiothorac. Vasc. Anesth. 32, 212–222. https://doi.org/10.1053/j.jvca.2017.08.033 (2018).

Kubo, T. et al. Impact of the perioperative neutrophil-to-lymphocyte ratio on the long-term survival following an elective resection of colorectal carcinoma. Int. J. Colorectal Dis. 29, 1091–1099. https://doi.org/10.1007/s00384-014-1964-1 (2014).

Kawasaki, S. et al. Living related liver transplantation in adults. Ann. Surg. 227, 269–274. https://doi.org/10.1097/00000658-199802000-00017 (1998).

Shah, S. A. et al. Reduced mortality with right-lobe living donor compared to deceased-donor liver transplantation when analyzed from the time of listing. Am. J. Transplant. 7, 998–1002. https://doi.org/10.1111/j.1600-6143.2006.01692.x (2007).

Leithead, J. A., Rajoriya, N., Gunson, B. K. & Ferguson, J. W. Neutrophil-to-lymphocyte ratio predicts mortality in patients listed for liver transplantation. Liver Int. 35, 502–509. https://doi.org/10.1111/liv.12688 (2015).

Motomura, T. et al. Neutrophil–lymphocyte ratio reflects hepatocellular carcinoma recurrence after liver transplantation via inflammatory microenvironment. J. Hepatol. 58, 58–64. https://doi.org/10.1016/j.jhep.2012.08.017 (2013).

Halazun, K. J. et al. Negative impact of neutrophil–lymphocyte ratio on outcome after liver transplantation for hepatocellular carcinoma. Ann. Surg. 250, 141–151. https://doi.org/10.1097/SLA.0b013e3181a77e59 (2009).

Chung, J. et al. Neutrophil/lymphocyte ratio in patients undergoing noncardiac surgery after coronary stent implantation. J. Cardiothorac. Vasc. Anesth. 34, 1516–1525. https://doi.org/10.1053/j.jvca.2019.10.009 (2020).

Cook, E. J. et al. Post-operative neutrophil–lymphocyte ratio predicts complications following colorectal surgery. Int. J. Surg. 5, 27–30. https://doi.org/10.1016/j.ijsu.2006.05.013 (2007).

Forget, P. et al. Neutrophil:lymphocyte ratio and intraoperative use of ketorolac or diclofenac are prognostic factors in different cohorts of patients undergoing breast, lung, and kidney cancer surgery. Ann. Surg. Oncol. 20(Suppl 3), S650-660. https://doi.org/10.1245/s10434-013-3136-x (2013).

Liu, H. et al. Neutrophil–lymphocyte ratio: a novel predictor for short-term prognosis in acute-on-chronic hepatitis B liver failure. J. Viral Hepat. 21, 499–507. https://doi.org/10.1111/jvh.12160 (2014).

Azab, B. et al. Neutrophil–lymphocyte ratio as a predictor of adverse outcomes of acute pancreatitis. Pancreatology 11, 445–452. https://doi.org/10.1159/000331494 (2011).

Zahorec, R. Ratio of neutrophil to lymphocyte counts–rapid and simple parameter of systemic inflammation and stress in critically ill. Bratisl. Lek. Listy 102, 5–14 (2001).

Solaini, L. et al. Limited utility of inflammatory markers in the early detection of postoperative inflammatory complications after pancreatic resection: Cohort study and meta-analyses. Int. J. Surg. 17, 41–47. https://doi.org/10.1016/j.ijsu.2015.03.009 (2015).

Dionigi, R. et al. Effects of surgical trauma of laparoscopic versus open cholecystectomy. Hepatogastroenterology 41, 471–476 (1994).

O’Mahony, J. B. et al. Depression of cellular immunity after multiple trauma in the absence of sepsis. J. Trauma 24, 869–875. https://doi.org/10.1097/00005373-198410000-00001 (1984).

Qamar, A. A. & Grace, N. D. Abnormal hematological indices in cirrhosis. Can. J. Gastroenterol. 23, 441–445. https://doi.org/10.1155/2009/591317 (2009).

Giron-Gonzalez, J. A. et al. Implication of inflammation-related cytokines in the natural history of liver cirrhosis. Liver Int. 24, 437–445. https://doi.org/10.1111/j.1478-3231.2004.0951.x (2004).

Dindo, D., Demartines, N. & Clavien, P. A. Classification of surgical complications: a new proposal with evaluation in a cohort of 6336 patients and results of a survey. Ann. Surg. 240, 205–213. https://doi.org/10.1097/01.sla.0000133083.54934.ae (2004).

Park, J. et al. A retrospective analysis of re-exploration after living donor right lobe liver transplantation: incidence, causes, outcomes, and risk factors. Transpl. Int. 32, 141–152. https://doi.org/10.1111/tri.13335 (2019).

Groenwold, R. H., Nelson, D. B., Nichol, K. L., Hoes, A. W. & Hak, E. Sensitivity analyses to estimate the potential impact of unmeasured confounding in causal research. Int. J. Epidemiol. 39, 107–117. https://doi.org/10.1093/ije/dyp332 (2010).

Ruckinger, S., von Kries, R. & Toschke, A. M. An illustration of and programs estimating attributable fractions in large scale surveys considering multiple risk factors. BMC Med. Res. Methodol. 9, 7. https://doi.org/10.1186/1471-2288-9-7 (2009).

Ribbing, J., Nyberg, J., Caster, O. & Jonsson, E. N. The lasso–a novel method for predictive covariate model building in nonlinear mixed effects models. J. Pharmacokinet. Pharmacodyn. 34, 485–517. https://doi.org/10.1007/s10928-007-9057-1 (2007).

Funding

None.

Author information


Contributions

Conceptualization: S.H.L., J.P., and G.S.K.; Data curation: M.S.G., J.S.K., S.H., G.S.C., J.W.J., and J.K.; Formal analysis: J.S.K., S.H.; Methodology: S.H.L., J.P., and G.S.K.; Software: S.H.L.; Validation: J.P.; Investigation: M.S.G.; Writing-original draft: S.H.L., J.P.; Writing-review and editing: M.S.G., J.S.K., S.H., G.S.C., J.W.J., and G.S.K.


Ethics declarations

Competing interests

The authors declare no competing interests.


Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Park, J., Lee, SH., Gwak, M.S. et al. Association between neutrophil–lymphocyte ratio change during living donor liver transplantation and graft survival. Sci Rep 11, 4199 (2021). https://doi.org/10.1038/s41598-021-83814-9

