Abstract

This paper proposed a segmentation-free radiological method for classifying benign and malignant thyroid tumors using B-mode ultrasound data. The method aimed to combine the morphological information provided by ultrasound with the strengths of convolutional neural networks in automatic feature extraction and accurate classification. Unlike traditional feature extraction methods, it extracted features directly from the data set, without segmentation or manual operations. A total of 861 benign and 740 malignant nodule images were collected for training. A deep convolutional neural network, VGG-16, was constructed to analyze test data comprising 100 malignant and 109 benign nodule images. Ninefold cross-validation was performed for training and testing of the classifier. The method achieved an accuracy of 86.12%, a sensitivity of 87%, and a specificity of 85.32%. This computer-aided method demonstrated diagnostic performance comparable to that of an experienced radiologist using the American College of Radiology Thyroid Imaging Reporting and Data System (ACR TI-RADS) (accuracy 87.56%, sensitivity 92%, specificity 83.49%). Its automation suggests potential for application in computer-aided diagnosis of thyroid cancer.

Introduction

Thyroid cancer is the most common endocrine cancer, and its incidence has increased rapidly worldwide, especially in Asian countries1,2. Most thyroid cancers present as thyroid nodules, which are often detected incidentally during neck ultrasonography performed for other disorders3,4. With high-resolution ultrasound, the prevalence of thyroid nodules in randomly selected populations is as high as 19–68%. Because most nodules are benign and only a relatively small proportion (7–15%) are malignant, it is important to distinguish benign from malignant thyroid nodules1,5,6. When a nodule is found, doctors perform a systematic assessment of the thyroid gland, including blood tests of thyroid function such as thyroxine (T4) and triiodothyronine (T3). However, these tests usually cannot predict whether a nodule is benign or malignant7,8. With the development of high-frequency ultrasound technology, systematic ultrasound examination of the neck can be carried out to characterize the nodules9. This examination allows doctors to measure the number, size, and shape of nodules and to detect other possible abnormalities. Thyroid ultrasound describes nodule features such as composition, echogenicity, shape, margin, and echogenic foci, which helps in the diagnosis of various types of thyroid nodules10,11. However, because this assessment is subjective, ultrasound-guided fine needle aspiration (FNA) is recommended for the differential diagnosis of benign and malignant thyroid nodules1.

Deep convolutional neural networks (DCNNs)12,13,14 are a class of artificial intelligence methods that have been applied in a growing number of research fields15,16,17, including dermatology18, ophthalmology19, radiology20,21 and others22,23,24. In recent years, studies in radiology have shown that DCNN performance can be equivalent to that of radiologists. As the field continues to develop, the variety and number of deep learning models are also increasing25. Unlike traditional feature extraction methods, DCNNs extract features directly from the data set without segmentation or complex manual operations26,27.

The objective of the current work was to design a DCNN-based computer-aided system that automatically classifies thyroid nodules as benign or malignant from ultrasound images. Using B-mode ultrasound data, the method aimed to combine the morphological information provided by ultrasound with the strengths of convolutional neural networks in automatic feature extraction and accurate classification. The validity of the method was verified by comparing the experimental results with those obtained by an experienced radiologist using the American College of Radiology Thyroid Imaging Reporting and Data System (ACR TI-RADS)28,29,30.

Results

A total of 1,810 images from 1,452 subjects were obtained, of which 840 were malignant and 970 were benign. Detailed information on the collected cases is shown in Table 1. The 840 malignant nodule images (840/1,810, 46.4%) comprise 740 images in the training group (740/1,601, 46.22%) and 100 images in the testing group (100/209, 47.8%). The difference in the proportion of malignant and benign nodules between the training and test groups is not statistically significant (Table 2).
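The text does not name the test behind Table 2. A minimal sketch, assuming a pooled two-proportion z-test (equivalent to a chi-square test on the 2 × 2 table without continuity correction), checks the reported split using only the counts given above; the function name is hypothetical.

```python
import math


def two_proportion_z_test(x1, n1, x2, n2):
    """Pooled two-proportion z-test; returns (z, two-sided p-value).

    Equivalent to a chi-square test on the 2x2 table without continuity correction.
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Standard normal CDF: Phi(z) = 0.5 * (1 + erf(z / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Malignant images out of all images, training group vs. test group (counts from the text).
z, p = two_proportion_z_test(740, 1601, 100, 209)
print(f"training {740 / 1601:.2%}, test {100 / 209:.2%}, z = {z:.2f}, p = {p:.2f}")
```

With these counts, z lies well inside ±1.96, consistent with the statement that the two groups do not differ significantly.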

The experimental steps are illustrated in Fig. 1. In the current work, the proposed DCNN model was used to analyze the thyroid ultrasound images. FNA and surgical results were taken as the reference.

To avoid adding to the radiologist's workload, we defined each nodule's bounding box from the enclosing calipers used clinically for nodule measurement, so the radiologist did not need to draw the nodule boundary. The deep convolutional neural network VGG-16, designed for large-scale image recognition31, was evaluated and fine-tuned for nodule recognition on ultrasound images.
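A minimal sketch of caliper-based cropping, assuming the caliper endpoints are available as (x, y) pixel coordinates and that a small hypothetical margin is added around them. The paper does not specify the margin, any resizing step, or function names; everything below is illustrative, with plain Python lists standing in for the image array.

```python
def caliper_bounding_box(points, margin, width, height):
    """Axis-aligned box (x0, y0, x1, y1) around all caliper endpoints.

    x1 and y1 are exclusive; the box is padded by `margin` pixels and
    clipped to the image bounds.
    """
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    x0 = max(min(xs) - margin, 0)
    y0 = max(min(ys) - margin, 0)
    x1 = min(max(xs) + margin + 1, width)
    y1 = min(max(ys) + margin + 1, height)
    return x0, y0, x1, y1


def crop(image, box):
    """Crop a row-major image (list of rows) to the box."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


# Hypothetical example: a 100 x 80 image with two caliper pairs
# (one across the nodule's width, one across its height).
image = [[0] * 100 for _ in range(80)]
calipers = [(30, 25), (60, 25), (45, 10), (45, 40)]
box = caliper_bounding_box(calipers, margin=5, width=100, height=80)
patch = crop(image, box)
print(box, len(patch), len(patch[0]))  # (25, 5, 66, 46) 41 41
```

Because the box comes from measurements already taken during routine scanning, no manual contouring is involved, which is the workload argument made above.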

We validated the performance of the classifier using ninefold cross-validation. As shown in Table 3, for differentiating benign and malignant nodules in the training set, the area under the receiver operating characteristic curve (AUC) of the algorithm was 0.9054 (95% confidence interval (CI) 0.8773, 0.9336), the accuracy was 86.27% (95% CI 84.11%, 88.43%), the sensitivity was 87%, and the specificity was 86.42% (95% CI 83.10%, 89.74%).
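A minimal sketch of how ninefold cross-validation can partition the 1,601 training images, assuming a simple shuffled (non-stratified) split at the image level with a fixed seed. The paper does not state how its folds were formed, and the function name is hypothetical.

```python
import random


def k_fold_splits(n_samples, k, seed=0):
    """Yield (train_indices, validation_indices) for each of k folds."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder so fold sizes differ by at most one.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(indices[start:start + size])
        start += size
    for i, validation in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, validation


splits = list(k_fold_splits(1601, 9))
print([len(v) for _, v in splits])  # eight folds of 178 and one of 177
```

Because the 1,810 images come from 1,452 subjects, grouping folds by patient rather than by image would keep images of the same nodule out of both the training and validation folds; whether the authors did this is not stated.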

As shown in Fig. 2, on the test set our proposed method achieved an AUC of 0.9157, an accuracy of 86.12%, a sensitivity of 87%, and a specificity of 85.32%. The AUC of the experienced radiologist's diagnosis was 0.8879. The TI-RADS cutoff used by the radiologist was category 4, which corresponds to the point closest to the top-left corner of the receiver operating characteristic curve. The accuracy, sensitivity, and specificity of the radiologist's ACR TI-RADS assessments were 87.56%, 92%, and 83.49%, respectively. Statistical analysis32,33 showed no significant difference between our algorithm and the experienced radiologist (p > 0.1).
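The comparison above cites DeLong's method32,33 for correlated ROC curves. A minimal pure-Python sketch of that test for two readers scoring the same cases is shown below. The toy scores are hypothetical, not study data, and the placement values are computed directly from their O(mn) definition rather than with the fast algorithm of Sun and Xu33.

```python
import math


def _placements(pos, neg):
    """Structural components: V10 for each positive, V01 for each negative (ties count 1/2)."""
    def psi(x, y):
        return 1.0 if x > y else 0.5 if x == y else 0.0
    v10 = [sum(psi(p, n) for n in neg) / len(neg) for p in pos]
    v01 = [sum(psi(p, n) for p in pos) / len(pos) for n in neg]
    return v10, v01


def _cov(a, b):
    """Sample covariance of two equal-length lists."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    return sum((x - ma) * (y - mb) for x, y in zip(a, b)) / (len(a) - 1)


def delong_test(pos_a, neg_a, pos_b, neg_b):
    """Compare two correlated AUCs; cases must be listed in the same order for both readers.

    Returns (auc_a, auc_b, two-sided p-value).
    """
    v10a, v01a = _placements(pos_a, neg_a)
    v10b, v01b = _placements(pos_b, neg_b)
    auc_a, auc_b = sum(v10a) / len(v10a), sum(v10b) / len(v10b)
    m, n = len(v10a), len(v01a)
    var = ((_cov(v10a, v10a) + _cov(v10b, v10b) - 2 * _cov(v10a, v10b)) / m
           + (_cov(v01a, v01a) + _cov(v01b, v01b) - 2 * _cov(v01a, v01b)) / n)
    if var <= 0:  # identical rankings: no evidence of a difference
        return auc_a, auc_b, 1.0
    z = (auc_a - auc_b) / math.sqrt(var)
    p = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return auc_a, auc_b, p


# Hypothetical scores: model probabilities vs. TI-RADS categories on the same six cases.
model_pos, model_neg = [0.9, 0.8, 0.4], [0.7, 0.3, 0.2]
tirads_pos, tirads_neg = [4, 5, 3], [2, 3, 1]
auc_model, auc_tirads, p = delong_test(model_pos, model_neg, tirads_pos, tirads_neg)
print(f"AUC model {auc_model:.4f}, AUC TI-RADS {auc_tirads:.4f}, p = {p:.3f}")
```

Treating the ordinal TI-RADS category as a score is what allows an AUC to be computed for the radiologist at all; the chosen category 4 cutoff is then a single operating point on that curve.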

Discussion

Ultrasound diagnosis of thyroid nodules is time-consuming and labor-intensive, and is subject to interreader variability. In this research, we developed a deep learning algorithm to provide management recommendations for thyroid nodules based on ultrasound image observations, and compared its results with those of a radiologist following the ACR TI-RADS guidelines. Experimental results on ultrasound images indicated that the proposed deep neural network-based classification system achieved classification performance comparable to that of the experienced radiologist. In the present work, we applied the deep neural network to a dataset of 1,601 training and 209 test images. The 1,601 training images included 861 benign and 740 malignant nodule images, and the 209 test images included 109 benign and 100 malignant nodule images. On the test set, the accuracy, sensitivity, and specificity of the method were 86.12%, 87%, and 85.32%, respectively.
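The reported percentages can be reproduced from a 2 × 2 confusion matrix. The counts below are back-calculated from the 100 malignant and 109 benign test images (87% of 100 gives 87 true positives, 85.32% of 109 gives 93 true negatives, and likewise for the radiologist); they are inferred rather than taken from a table in the paper, and the function name is hypothetical.

```python
def binary_metrics(tp, fn, tn, fp):
    """Accuracy, sensitivity (recall on malignant), specificity (recall on benign)."""
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    return accuracy, sensitivity, specificity


# Counts inferred from the reported rates and the 100 malignant / 109 benign test images.
dcnn = binary_metrics(tp=87, fn=13, tn=93, fp=16)
radiologist = binary_metrics(tp=92, fn=8, tn=91, fp=18)
for name, (acc, sen, spe) in [("DCNN", dcnn), ("Radiologist", radiologist)]:
    print(f"{name}: accuracy {acc:.2%}, sensitivity {sen:.2%}, specificity {spe:.2%}")
# DCNN: accuracy 86.12%, sensitivity 87.00%, specificity 85.32%
# Radiologist: accuracy 87.56%, sensitivity 92.00%, specificity 83.49%
```

In these terms, the radiologist detected five more malignant nodules, while the DCNN correctly cleared two more benign ones.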

Our findings add to increasing evidence that deep learning can be applied to clinical thyroid diagnosis. After training, the DCNN model could be used to pinpoint malignant thyroid nodules through similarity activation map analysis. Both DCNN models and machine learning methods based on traditional feature extraction have been used to identify malignant thyroid nodules on ultrasound images. Ma et al.24 used a DCNN to analyze 8,148 hand-labeled thyroid nodules and obtained a diagnostic accuracy of 83.0% (95% CI 82.3–83.7); this approach required a large training data set. Xia et al.34 achieved an accuracy of 87.7% in distinguishing benign from malignant nodules using an extreme learning machine with radiological features collected from 203 ultrasound images of 187 thyroid patients. That method had relatively low specificity and required a radiologist to draw each nodule boundary, which added substantially to doctors' workload. Pereira et al.35 reported that a DCNN model distinguished 946 malignant and benign thyroid nodules from 165 patients with an accuracy of 83%. Chi et al.23 used imaging features extracted by a deep convolutional neural network to perform binary classification of TI-RADS categories 1 and 2 versus the other categories, reaching more than 99% accuracy. Although this performance seems excellent, separating categories 1 and 2 is a greatly simplified task, and the research subjects did not have FNA or surgical results for comparison.

In the present study, all patients with thyroid cancer in the training and test data sets had FNA or surgical results. Furthermore, to avoid additional workload, the radiologist did not need to draw the nodule boundary. In another study, Buda et al. (2019) used 1,377 thyroid nodules from 1,230 patients with complete imaging data and a definitive cytological or histological diagnosis36. For 99 test nodules, their deep learning algorithm achieved a sensitivity of 13/15 (87%; 95% CI 67%, 100%), the same as that of the experts and higher than that of 5 of 9 radiologists. The specificity of the algorithm was 44/84 (52%; 95% CI 42%, 62%), similar to the expert consensus (43/84; 51%; 95% CI 41%, 62%; p = 0.91) and higher than that of the other 9 radiologists. The average sensitivity and specificity of the 9 radiologists were 83% (95% CI 64%, 98%) and 48% (95% CI 37%, 59%), respectively. Our method achieved comparable sensitivity and higher specificity.

In summary, the proposed DCNN diagnosis algorithm could effectively classify benign and malignant thyroid nodules and exhibited diagnostic performance comparable to that of an experienced radiologist using TI-RADS. This method may enable applications in computer-aided diagnosis of thyroid cancer. However, the present study has some limitations. For instance, we did not establish whether the accuracy of the proposed computer-aided platform is related to tumor size or cancer subtype. In addition, the number of enrolled cases was small. The model should be validated in more types of patients, and its accuracy should be further verified and improved.

Methods

Research cohort

This retrospective study was approved by the institutional review board of the First Affiliated Hospital of Nanjing Medical University, and informed consent was obtained from all patients. All study methodologies were carried out in accordance with relevant guidelines and regulations. Patients with thyroid nodules who underwent ultrasound examination before surgery or biopsy between January 2018 and September 2019 were included. The inclusion criteria were: (a) age > 18 years; (b) no prior hormone therapy, chemotherapy, or radiation therapy; and (c) thyroid nodule diameter > 5 mm. Images with nondiagnostic or indeterminate cytological or histological results were excluded. A nodule was diagnosed as malignant when malignancy was confirmed on a surgical specimen, core-needle biopsy (CNB), or FNA cytology. A nodule was considered benign when any one of the following criteria was met: (a) confirmation on a surgical specimen; (b) benign FNA cytology findings; (c) US findings of very low suspicion9; or (d) cystic or almost completely cystic nodules and spongiform nodules (composed of more than 50% small cystic spaces).

The database of 1,810 thyroid disease images was evaluated by two experts. B-mode ultrasound examinations were performed with several commercial US systems, including (1) Esaote MyLab Twice (Genova, Italy); (2) GE LOGIQ E9 (USA); (3) Philips EPIQ 5 (Amsterdam, the Netherlands); (4) Philips EPIQ 7 (Amsterdam, the Netherlands); (5) Siemens S3000 (Buffalo, USA); and (6) SuperSonic Imagine Aixplorer (Aix-en-Provence, France). A 5–13 MHz wide-band linear array probe with a central working frequency of 7.5 MHz was used. Each patient was placed in the supine position with the anterior cervical region exposed and was then scanned in lateral, longitudinal, and oblique directions.

Pathological reference

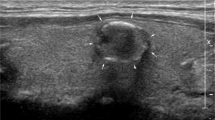

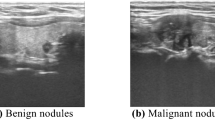

Ultrasound-guided FNA has high sensitivity and specificity in the diagnosis of benign and malignant thyroid nodules and can therefore serve as a reference for their differential diagnosis. In the present work, FNA was accordingly taken as the reference after B-mode ultrasound diagnosis. A thyroid nodule's final pathological diagnosis was benign or malignant according to its cytology (or histology, if available). FNA was performed by an interventional radiologist under ultrasound guidance with a 25-gauge needle. After the nodule was located under ultrasound guidance, several samples were obtained from within the nodule with the needle kept in the ultrasound scanning plane. Three or four samples were fixed in BD CytoRich Red preservative fluid (Becton, Dickinson and Company, Mebane, USA) and then processed for sedimentation-based cytologic examination. All slides were reviewed and interpreted by three experienced cytotechnologists, who reported thyroid cytopathology according to the Bethesda System. If the nodule had undergone core needle biopsy or surgical resection, the histologic results were used instead of cytological examination. Figure 3a shows a B-mode ultrasound image of a benign thyroid nodule, Fig. 3b a B-mode ultrasound image of a malignant thyroid nodule, Fig. 3c an FNA smear micrograph of a benign nodule, and Fig. 3d an FNA smear micrograph of a malignant nodule. Cytologic images were obtained from Papanicolaou (Pap)-stained smears.

Figure 3. B-mode ultrasonography and FNA of thyroid nodules: (a) B-mode ultrasound image of a benign thyroid nodule; (b) B-mode ultrasound image of a malignant thyroid nodule; (c) micrograph of an FNA smear of a benign thyroid nodule (×200 magnification); and (d) micrograph of an FNA smear of a malignant thyroid nodule (×400 magnification).

Algorithm

In this paper, the deep convolutional neural network VGG-16 was fine-tuned and evaluated on ultrasound images for thyroid nodule diagnosis. The network included five convolution-and-pooling modules that extracted complex features from each input image. These features were flattened into a single vector. The model output was a set of continuous values in the range 0.0–1.0 that represented the predicted probability of each category and were treated as a discrete probability distribution. The final classification was derived from these class probabilities.
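A minimal sketch of the output stage described above, assuming two output logits (benign, malignant), a softmax that turns them into a probability distribution, and a 0.5 threshold on the malignant probability, which for two classes is the same as picking the most probable class. The paper does not specify its exact decision rule; the names and threshold here are illustrative.

```python
import math

CLASSES = ("benign", "malignant")


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits, threshold=0.5):
    """Return (label, probabilities); malignant if P(malignant) >= threshold."""
    probs = softmax(logits)
    label = CLASSES[1] if probs[1] >= threshold else CLASSES[0]
    return label, probs


label, probs = classify([0.2, 1.5])
print(label, [round(p, 3) for p in probs])  # malignant [0.214, 0.786]
```

Sweeping the threshold from 0 to 1 instead of fixing it at 0.5 traces out the ROC curve from which an AUC is computed.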

The network input passed through a stack of convolutional layers with 3 × 3 filters; in the VGG design this stack reaches a depth of 16 to 19 weight layers. The convolutional stack was followed by three fully connected layers, giving 16 layers with learnable weights in total (13 convolutional and 3 fully connected). The network was fine-tuned with training sets containing benign and malignant samples to identify nodules: all layers of the pre-trained network except the last fully connected layer were retained, and new fully connected layers and a softmax layer were added. This supervised training performed the nodule classification task as expected with reduced computational complexity. Figure 4 shows the network structure of the intelligent platform.
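To make the 13 + 3 layer count concrete, the sketch below walks the standard VGG-16 configuration31 and counts weight layers and parameters. It assumes a 224 × 224 RGB input and the original 4,096-unit fully connected layers, with only the width of the last layer changed; the paper does not state its input size or the sizes of its new fully connected layers, so the two-class figures are illustrative.

```python
# Standard VGG-16 layout: integers are 3x3 conv output channels, "M" is 2x2 max pooling.
VGG16_CFG = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
             512, 512, 512, "M", 512, 512, 512, "M"]


def vgg16_param_counts(num_classes, in_channels=3, input_size=224):
    """Return (n_conv, n_fc, conv_params, fc_params), counting weights plus biases."""
    n_conv, conv_params = 0, 0
    channels, size = in_channels, input_size
    for layer in VGG16_CFG:
        if layer == "M":
            size //= 2  # each pooling halves the spatial size: 224 -> 7 after five pools
        else:
            conv_params += 3 * 3 * channels * layer + layer
            channels = layer
            n_conv += 1
    fc_dims = [channels * size * size, 4096, 4096, num_classes]  # 25088 inputs at 224x224
    fc_params = sum(a * b + b for a, b in zip(fc_dims, fc_dims[1:]))
    return n_conv, len(fc_dims) - 1, conv_params, fc_params


for classes in (1000, 2):  # original ImageNet head vs. a benign/malignant head
    n_conv, n_fc, conv_p, fc_p = vgg16_param_counts(classes)
    print(f"{classes} classes: {n_conv} conv + {n_fc} FC layers, {conv_p + fc_p:,} parameters")
# 1000 classes: 13 conv + 3 FC layers, 138,357,544 parameters
# 2 classes: 13 conv + 3 FC layers, 134,268,738 parameters
```

The 14.7 million convolutional parameters are what fine-tuning reuses from pre-training; most of the remaining weights sit in the first fully connected layer, which explains why replacing only the classifier head changes the total relatively little.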

Statistical analyses

We performed a t-test of the null hypothesis that the data in the vector X came from a normal distribution with mean zero and unknown variance, and report the test decision as H. H = 0 indicates that the null hypothesis cannot be rejected at the 5% significance level; H = 1 indicates that it is rejected at the 5% level.
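A minimal pure-Python sketch of the one-sample t-test described above, returning H with the same 0/1 convention. The paper does not name its software or say what X contains, so the function names and example data are hypothetical. The two-sided p-value uses the standard identity p = I_x(ν/2, 1/2) with x = ν/(ν + t²), where I is the regularized incomplete beta function, evaluated here by its continued fraction.

```python
import math


def _betacf(a, b, x, max_iter=200, eps=3e-14, tiny=1e-300):
    """Continued fraction for the regularized incomplete beta (modified Lentz method)."""
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def regularized_beta(a, b, x):
    """I_x(a, b), the regularized incomplete beta function."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log(1.0 - x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b  # symmetry for faster convergence


def t_two_sided_p(t, df):
    """Two-sided p-value of Student's t with df degrees of freedom."""
    return regularized_beta(df / 2.0, 0.5, df / (df + t * t))


def one_sample_t_test(x, mu0=0.0, alpha=0.05):
    """Return (H, t, p); H = 1 rejects 'mean == mu0' at level alpha, assuming normal data."""
    n = len(x)
    mean = sum(x) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in x) / (n - 1))  # sample SD, unknown variance
    t = (mean - mu0) / (sd / math.sqrt(n))
    p = t_two_sided_p(t, n - 1)
    return (1 if p < alpha else 0), t, p


# Hypothetical paired differences between two methods.
H, t, p = one_sample_t_test([0.012, -0.004, 0.020, 0.006, -0.010, 0.015])
print(H, round(t, 3), round(p, 3))
```

Applied to paired per-case differences between two readers, this is a paired t-test; for comparing two AUCs on the same cases, the DeLong approach cited in the Results is the more specific tool.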

References

Haugen, B. R. et al. 2015 American Thyroid Association management guidelines for adult patients with thyroid nodules and differentiated thyroid cancer: The American Thyroid Association Guidelines Task Force on Thyroid Nodules and Differentiated Thyroid Cancer. Thyroid 26(1), 1–133 (2016).

Vaccarella, S. et al. Worldwide thyroid-cancer epidemic? The increasing impact of overdiagnosis. N. Engl. J. Med. 375(7), 614–617 (2016).

Hoang, J. K. et al. Interobserver variability of sonographic features used in the American College of Radiology Thyroid Imaging Reporting and Data System. Am. J. Roentgenol. 211(1), 162–167 (2018).

Hong, Y. et al. Conventional US, elastography, and contrast enhanced US features of papillary thyroid microcarcinoma predict central compartment lymph node metastases. Sci. Rep. 5, 7748 (2015).

Gharib, H. et al. American Association of Clinical Endocrinologists, American College of Endocrinology, and Associazione Medici Endocrinologi Medical Guidelines for Clinical Practice for the diagnosis and management of thyroid nodules—2016 update. Endocr. Pract. 22, 622–639 (2016).

Guth, S., Theune, U., Aberle, J., Galach, A. & Bamberger, C. M. Very high prevalence of thyroid nodules detected by high frequency (13 MHz) ultrasound examination. Eur. J. Clin. Investig. 39, 699–706 (2009).

Dayan, C. M., Okosieme, O. E. & Taylor, P. Thyroid dysfunction. In Clinical Biochemistry: Metabolic and Clinical Aspects 3rd edn (eds Marshall, W. J. et al.) (Elsevier, Amsterdam, 2014).

Nygaard, B., Jensen, E. W., Kvetny, J., Jarlov, A. & Faber, J. Effect of combination therapy with thyroxine (T4) and 3,5,3’-triiodothyronine versus T4 monotherapy in patients with hypothyroidism, a double-blind, randomised cross-over study. Eur. J. Endocrinol. 161(6), 895–902 (2009).

Tessler, F. N. et al. ACR Thyroid Imaging, Reporting and Data System (TI-RADS): White paper of the ACR TI-RADS Committee. J. Am. Coll. Radiol. 14(5), 587–595 (2017).

Hoang, J. K. et al. Reduction in thyroid nodule biopsies and improved accuracy with American College of Radiology Thyroid Imaging Reporting and Data System. Radiology 287(1), 185–193 (2018).

Griffin, A. S. et al. Improved quality of thyroid ultrasound reports after implementation of the ACR Thyroid Imaging Reporting and Data System nodule lexicon and risk stratification system. J. Am. Coll. Radiol. 15(5), 743–748 (2018).

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521(7553), 436–444 (2015).

Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 61, 85–117 (2015).

Ramachandran, R., Rajeev, D. C., Krishnan, S. G. & Subathra, P. Deep learning an overview. IJAER 10(10), 25433–25448 (2015).

Wang, P. et al. Large-scale continuous gesture recognition using convolutional neural networks. IEEE Inter. Conf. on Pattern Recognition (2016).

Ren, S., He, K., Girshick, R. & Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 91–99 (2015).

Memisevic, R. & Hinton, G. E. Learning to represent spatial transformations with factored higher-order Boltzmann machines. Neural Comput. 22(6), 1473 (2010).

Esteva, A. et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature 542(7639), 115–118 (2017).

Gulshan, V. et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA 316(22), 2402–2410 (2016).

Erickson, B. J., Korfiatis, P., Akkus, Z. & Kline, T. L. Machine learning for medical imaging. Radiographics 37(2), 505–515 (2017).

Mazurowski, M. A., Buda, M., Saha, A. & Bashir, M. R. Deep learning in radiology: An overview of the concepts and a survey of the state of the art with focus on MRI. J. Magn. Reson. Imaging 49(4), 939–954 (2019).

Lee, H. et al. Fully automated deep learning system for bone age assessment. J. Digit. Imaging 30(4), 427–441 (2017).

Chi, J. et al. Thyroid nodule classification in ultrasound images by fine-tuning deep convolutional neural network. J. Digit. Imaging 30(4), 477–486 (2017).

Ma, J., Wu, F., Zhu, J., Xu, D. & Kong, D. A pre-trained convolutional neural network based method for thyroid nodule diagnosis. Ultrasonics 73, 221–230 (2017).

Dargan, S., Kumar, M., Ayyagari, M. R. & Kumar, G. A survey of deep learning and its applications: A new paradigm to machine learning. Arch. Comput. Methods Eng. https://doi.org/10.1007/s11831-019-09344-w (2019).

Shin, H. C. et al. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imaging 35(5), 1285–1298 (2016).

Anwar, S. M. et al. Medical image analysis using convolutional neural networks: A review. J. Med. Syst. 42(11), 226 (2018).

Moon, W. J. et al. Benign and malignant thyroid nodules: US differentiation—Multicenter retrospective study. Radiology 247, 762–770 (2008).

Choi, S. H., Kim, E., Kwak, J. Y., Kim, M. J. & Son, E. J. Interobserver and intraobserver variations in ultrasound assessment of thyroid nodules. Thyroid 20, 167–172 (2010).

Park, C. S. et al. Observer variability in the sonographic evaluation of thyroid nodules. J. Clin. Ultrasound 38, 287–293 (2010).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. Proc. Int. Conf. Learn. Representations (2015).

DeLong, E. R., DeLong, D. M. & Clarke-Pearson, D. L. Comparing areas under two or more correlated receiver operating characteristics curves: A nonparametric approach. Biometrics 44(3), 837–845 (1988).

Sun, X. & Xu, W. Fast implementation of DeLong’s algorithm for comparing the areas under correlated receiver operating characteristic curves. IEEE Signal. Proc. Lett. 21(11), 1389–1393 (2014).

Xia, J. et al. Ultrasound-based differentiation of malignant and benign thyroid nodules: An extreme learning machine approach. Comput. Methods Progr. Biomed. 147, 37–49 (2017).

Pereira, C., Dighe, M. & Alessio, A. M. Comparison of machine learned approaches for thyroid nodule characterization from shear wave elastography images. Proc. SPIE Med. Imaging Comput. Aided Diagn. 105751X (2018).

Buda, M. et al. Management of thyroid nodules seen on US images: Deep learning may match performance of radiologist. Radiology 292(3), 695–701 (2019).

Acknowledgements

This work was supported by the Jiangsu Province Key Research & Development Plan (No. BE2018703), and the Hunan Province Major Scientific and Technological Achievements Transformation Project (2019GK4046).

Author information

Contributions

Design and data analysis: H.Y. and X.W.C.; data acquisition and interpretation: J.H., D.X., J.C. and X.H.Y.; project proposal: D.Z.; manuscript drafting or revision: H.Y., J.H. and D.Z.; approval of the final submitted manuscript: all authors; agreement to ensure that any questions related to the work are appropriately resolved: all authors.

Ethics declarations

Competing interests

All authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ye, H., Hang, J., Chen, X. et al. An intelligent platform for ultrasound diagnosis of thyroid nodules. Sci Rep 10, 13223 (2020). https://doi.org/10.1038/s41598-020-70159-y
