Abstract

A pulsed-laser three-dimensional imaging system inspired by the compound and human hybrid eye is proposed. A diffractive optical element is used to enlarge the field of view (FOV) of the transmitting system, and a receiving system consisting of a non-uniform microlens array, an aperture array, and an avalanche photodiode array is designed. The non-uniform microlens array is arranged on a curved surface to mimic the large-FOV feature of the compound eye. Meanwhile, the non-uniform microlens array is modeled to mimic the space-variant resolution property of the human eye. On the basis of the proposed system, simulation experiments are carried out. The results show that the entire FOV is up to 52° and the resolution is 30 × 18 (sections × rings). The proposed system has high resolution in the central FOV and low resolution in the peripheral FOV. The rotation and scaling invariances of the human eye are verified on the proposed system. The signal-to-noise ratio (SNR) increases with the ring number, and the maximum SNR is located in the outermost peripheral area. This work is beneficial to the design of pulsed-laser three-dimensional imaging systems with large FOV, high speed, and high resolution.


Introduction

In recent years, pulsed-laser three-dimensional (3D) imaging has become an active imaging technology and has received much attention owing to its simple principle, resistance to interference, and long imaging range1,2. Unlike two-dimensional planar imaging, pulsed-laser 3D imaging is only slightly affected by target illumination and background reflectance, and it can obtain the depth information of targets3. Therefore, this imaging technology is widely used in various applications, including target recognition4, robotics5, terrain visualization6, medical diagnostics7, and vehicle navigation8. A pulsed-laser 3D imaging system with a large field of view (FOV), high imaging speed, and high imaging resolution is critical and has great potential in many areas, such as industrial, medical, and military applications7,9,10. According to their imaging principle, pulsed-laser 3D imaging systems can be divided into two categories: scanning and non-scanning types11. For a scanning pulsed-laser 3D imaging system (e.g., one based on MEMS mirrors, scanning mirrors, galvanometer scanners, or Risley prism pairs), the FOV largely depends on the scan range of the scanning components, and obtaining a high-resolution image relies largely on a fine scanning angle and a large number of scanning steps, which is time-consuming12,13,14,15,16. Therefore, such a system suffers from a low imaging speed that limits its imaging efficiency. Moreover, mechanical robustness is a substantial challenge for this type of system. Unlike the scanning type, a non-scanning pulsed-laser 3D imaging system can obtain an image with a single laser pulse in a short period. The FOV of a non-scanning pulsed-laser 3D imaging system mainly depends on the receiving optical system. Imaging with a FOV over 90° has been achieved with fisheye lenses, catadioptric lenses, and rotating cameras17,18. 
However, such imaging systems require bulky and costly multiple lenses and stringent alignment. Moreover, they suffer from severe aberrations, which deteriorate the imaging quality19. A non-scanning pulsed-laser 3D imaging system permits a high imaging speed because it has no scanning component, but its image resolution depends on the pixel number of the detector array. A detector array with a large pixel number is necessary to obtain a high-resolution image20,21,22,23,24,25. In practice, high resolution over the entire FOV is unnecessary, i.e., the resolution does not have to be uniform across the FOV, and a uniformly high-resolution image requires a large data bandwidth, which limits the imaging speed. The analysis above shows that a tradeoff exists among large FOV, high speed, and high resolution, which cannot be satisfied simultaneously by existing pulsed-laser 3D imaging systems26.

The compound eye and the human eye are two exquisite visual systems created by nature27. On one hand, the compound eye has a remarkably large FOV because its ommatidia are distributed on a hemispherical curved surface28,29. Each ommatidium covers only a small part of the FOV, and the total FOV is the combination of the sub-FOVs of all the ommatidia. On the other hand, a unique merit of the human eye is its space-variant resolution, i.e., high resolution in the center of the FOV and low resolution in the periphery30,31. Benefiting from this property, the human eye compresses the image information in the periphery of the FOV (the uninterested area) while keeping the image information in the center of the FOV (the interested area) clear32,33. Hence, the space-variant resolution property improves the imaging speed while preserving high resolution in the interested area. The space-variant resolution property indicates that the mapping from the retina to the visual cortex approximately follows a log-polar transformation (LPT)34, which converts the Cartesian image on the retina into an LPT image in the visual cortex. The LPT of the human eye has two remarkable properties, namely rotation and scaling invariance, which are beneficial for further reducing redundant data and compressing the data35. They are particularly helpful for pattern recognition, motion estimation, and object tracking. Previous studies have addressed the large-FOV feature of the compound eye36,37,38 and the space-variant resolution property of the human eye39,40,41 separately. However, the hybrid of the compound and human eyes has rarely been studied. Inspired by bionic technology, our group previously proposed an imaging system combining these two eyes, but it is a passive imaging system limited to two-dimensional planar imaging30,42. 
Unlike our previous studies, this work studies a pulsed-laser 3D imaging method that simultaneously combines the large-FOV feature of the compound eye and the space-variant resolution property of the human eye.

To achieve a large FOV, high imaging speed, and high resolution, a novel imaging method inspired by the compound eye and the human eye is proposed for pulsed-laser 3D imaging. A receiving system containing non-uniform microlenses is designed to mimic the space-variant resolution property of the human eye. The aim of this design is to achieve high resolution in the center of the FOV while compressing the image information in the periphery of the FOV to improve the imaging speed. Moreover, these non-uniform microlenses are distributed on a curved surface to imitate the large-FOV feature of the compound eye.

Methods

Principle

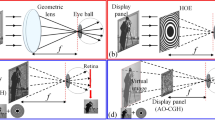

Figure 1 depicts the principle of the proposed pulsed-laser 3D imaging system inspired by the compound and human hybrid eye. The system includes a field programmable gate array (FPGA), a pulsed laser, a transmitting system, a target, a receiving system, and a readout integrated circuit (ROIC). The workflow is as follows.

Principle of the proposed system. DOE, diffractive optical element; ROIC, readout integrated circuit; APD, avalanche photodiode; NUMLA, non-uniform microlens array. A DOE is used to enlarge the FOV of the transmitting system. The reflected or scattered pulsed laser echo beam is focused on the APD array by the NUMLA.

(1) The FPGA emits an electronic pulse to trigger the pulsed laser, which then emits a laser pulse. This instant is taken as the start time of a timer in the FPGA.

(2) The pulsed laser beam is collimated and expanded by the transmitting system. A diffractive optical element (DOE) placed in front of the transmitting system enlarges its FOV and homogenizes the intensity of the transmitted pulsed laser beam. The transmitted beam then illuminates the target surface.

(3) The transmitted pulsed laser beam is reflected or scattered by the target surface, and the echo beam is collected by the receiving system, which consists of a non-uniform microlens array (NUMLA), an APD array, and an aperture array. The NUMLA consists of N rings of microlenses, with M microlenses in each ring. These N × M microlenses divide the echo beam into separate optical channels, each of which is detected by the corresponding detector of the APD array. The aperture array ensures that each microlens corresponds to exactly one APD detector and prevents optical crosstalk between adjacent optical channels.

(4) The APD array responds to the echo beam and outputs electronic signals, which are collected and processed by the ROIC. The ROIC determines the stop times of the processed signals, and these times are recorded by the timer in the FPGA. The round-trip time of flight for each detector is obtained by subtracting the start time from its stop time, and the corresponding range is half the distance light travels in that time. Hence, a 3D image of the target is obtained by the proposed system.
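Step (4) describes the timing but leaves the final range conversion implicit. The minimal Python sketch below assumes the stop times are already available in seconds for each detector; the function name and list layout are illustrative assumptions, not the actual FPGA or ROIC interface. It applies the standard round-trip relation range = c × TOF / 2:

```python
# Speed of light in vacuum (m/s); the small refractive-index correction for air is ignored.
SPEED_OF_LIGHT = 299_792_458.0


def ranges_from_timestamps(start_time, stop_times):
    """Convert one start time and per-detector stop times (s) into ranges (m).

    The echo covers the laser-to-target distance twice, so range = c * tof / 2.
    Detectors that recorded no echo are passed as None and stay None.
    """
    ranges = []
    for stop in stop_times:
        if stop is None:
            ranges.append(None)
        else:
            time_of_flight = stop - start_time
            ranges.append(SPEED_OF_LIGHT * time_of_flight / 2.0)
    return ranges


# Hypothetical timestamps: a target at about 1 m returns after roughly 6.67 ns.
example = ranges_from_timestamps(0.0, [6.671e-9, None])
print(example)
```

Detectors whose sampling areas contain no target report no echo, so the sketch propagates None instead of inventing a range for them.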

System modeling

As mentioned in the Principle section, the receiving system is the core component of the proposed imaging system; therefore, we present its detailed model. The receiving system, which simultaneously combines the large-FOV feature of the compound eye and the space-variant resolution property of the human eye, is shown in Fig. 2. The microlenses of the NUMLA are distributed on a curved surface to mimic the large-FOV feature of the compound eye. Each microlens of the NUMLA forms an optical channel that mimics an ommatidium of the compound eye. Each optical channel covers only a portion of the FOV, and the large total FOV is obtained by combining the sub-FOVs of all the microlenses.

Figure 3 shows the geometrical model of the receiving system. Unlike our previous model30, the proposed method realizes the space-variant (retina-like) resolution of the human eye by making the distance between the main optical axis and the center of each microlens's sampling area grow as a geometric sequence from ring to ring.

Geometrical model of the receiving system. We suppose that the radius of the curved surface is R, and the distance between the target plane and the NUMLA is L. α0 is the angle between the blind area and the main optical axis, and αn is the angle between the main optical axis and the n-th ring microlens of the NUMLA.

We assume that the blind radius on the target plane is r0 and that the distance between the main optical axis and the center of the sampling area of the n-th ring microlens is rn. The position of the n-th ring microlens on the curved surface is described by43

Meanwhile, the optical parameters of these microlenses on the curved surface are non-uniform. For the n-th ring microlens, the optical parameters can be calculated by

where Dn, fn, hn, gn, and nr denote the aperture, focal length, thickness, curvature radius, and refractive index of the n-th ring microlens, respectively, Δd is the size of the APD detector, and v is the image distance. Because the microlens is placed on the curved surface, the sampling area of a single microlens on the target plane is an ellipse30. For the n-th ring microlens, the semi-major axis lna and the semi-minor axis lnb of the ellipse can be obtained by

From the analysis above, the entire geometrical model of the receiving system can be established. For pulsed-laser 3D imaging, the transmitted laser pulse has a Gaussian temporal profile, which is written as

where Et is the transmitted pulse energy and τ is the transmitted pulse width. The transmitted pulsed laser beam illuminates the target. For an area target with uniform illumination and reflectivity, the power scattered by the target in the backward direction is given by3

where ρπ is the target reflectivity per steradian in the backward direction, It is the intensity of the transmitted pulse beam at the target location, and At is the area of the target. We assume that the target surface is normal to the beam and that its scattering is Lambertian, i.e., ρπ = ρt/π, where ρt is the total hemispherical reflectivity. We also assume that the transmitter intensity is flat over the entire illuminated region at the target plane, i.e., It = PTar/Aillum, where PTar is the optical power at the target location and Aillum is the illuminated area. They can be obtained by

where ηtrans, ηatm, ηDOE, wL, and θ are the transmitting system efficiency, one-way atmospheric transmission, overall efficiency of the DOE, beam radius at the target plane, and full diffusion angle of the DOE, respectively. 〈Wturb〉 is the increase in beam radius caused by atmospheric turbulence, which can be expressed as44

where 〈 〉 denotes the ensemble average, l0 is the inner-scale size of turbulence, and Cn² is the refractive-index structure constant. The magnitude of Cn² indicates the strength of the atmospheric turbulence.
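The pulse-shape and backscatter equations are not reproduced above. The Python sketch below illustrates the relations stated in the text under explicit assumptions: the Gaussian pulse is normalized so that it integrates to Et, with τ taken as the rms width (the paper may define τ differently, for example as a full width), and the backscattered power per steradian is ρπ It At with ρπ = ρt/π and It = PTar/Aillum, where Aillum is assumed to be a disc of radius wL. Turbulence broadening and the DOE efficiency are not modeled, and all numbers are hypothetical.

```python
import math


def gaussian_pulse(t, energy, tau):
    """Transmitted power (W) at time t (s) for a Gaussian pulse of total energy (J).

    Assumption: tau is the rms (1-sigma) width of the pulse.
    """
    peak = energy / (math.sqrt(2.0 * math.pi) * tau)
    return peak * math.exp(-t**2 / (2.0 * tau**2))


def lambertian_backscatter(rho_t, p_tar, beam_radius, target_area):
    """Power scattered back per steradian (W/sr) by a uniformly lit Lambertian target.

    rho_pi = rho_t / pi and I_t = P_tar / A_illum, with the illuminated spot
    assumed to be a disc of radius w_L (A_illum = pi * w_L**2).
    """
    rho_pi = rho_t / math.pi
    a_illum = math.pi * beam_radius**2
    intensity = p_tar / a_illum
    return rho_pi * intensity * target_area


# Sanity check: summing the pulse over +/- 8 sigma recovers its energy.
energy, tau = 1e-6, 5e-9          # hypothetical 1 uJ, 5 ns pulse
dt = tau / 100.0
recovered = sum(gaussian_pulse(k * dt, energy, tau) for k in range(-800, 801)) * dt
print(recovered)

# Hypothetical target: 40% reflectivity, 0.1 m^2, 0.5 m beam radius, 1 W at the target.
print(lambertian_backscatter(0.4, 1.0, 0.5, 0.1))
```

The energy check guards against the most common slip with Gaussian pulses, a missing normalization factor, which would silently rescale every downstream power estimate.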

The one-way atmospheric transmission ηatm, which depends on the atmospheric conditions, can be evaluated by the Beer-Lambert law, which is written as

where I(λ, L) is the optical intensity at the target plane, I(λ, 0) is the optical intensity at the transmitter, and δT(λ) is the extinction coefficient. The extinction coefficient is related to the laser wavelength and the visibility as follows45.

where Rv is the meteorological visibility in km, λ is the optical wavelength in nanometers, and q is the correction factor. The correction factor depends on the meteorological visibility and can be written as
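The atmospheric relations above can be sketched in Python. The Beer-Lambert law gives ηatm = exp(−δT L). For the extinction coefficient, the sketch assumes the common empirical form δT = (3.91/Rv)(λ/550 nm)^(−q) and takes q from the Kim model; whether ref. 45 uses the Kim model or another one (for example the Kruse model) is not stated here, so treat kim_q as an assumption. Units follow the text: Rv and path length in km, λ in nm.

```python
import math


def kim_q(visibility_km):
    """Size-distribution correction factor q (assumed Kim model; ref. 45 may differ)."""
    v = visibility_km
    if v > 50:
        return 1.6
    if v > 6:
        return 1.3
    if v > 1:
        return 0.16 * v + 0.34
    if v > 0.5:
        return v - 0.5
    return 0.0


def extinction_coefficient(wavelength_nm, visibility_km):
    """delta_T in km^-1 from delta_T = (3.91 / R_v) * (lambda / 550 nm) ** (-q)."""
    q = kim_q(visibility_km)
    return 3.91 / visibility_km * (wavelength_nm / 550.0) ** (-q)


def one_way_transmission(wavelength_nm, visibility_km, path_km):
    """Beer-Lambert law: eta_atm = I(lambda, L) / I(lambda, 0) = exp(-delta_T * L)."""
    return math.exp(-extinction_coefficient(wavelength_nm, visibility_km) * path_km)


# 1550 nm over the 1 m (0.001 km) simulated path in clear air (hypothetical 23 km visibility).
print(one_way_transmission(1550, 23, 0.001))
```

Over the 1 m simulated path the transmission is essentially 1 in clear air; the atmospheric terms matter mainly at long range or low visibility.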

The total optical power received by the n-th ring APD detector is given by

By substituting Eqs. (5) and (6) into Eq. (11), we obtain the pulsed echo profile for the n-th ring APD detector as

In Eq. (12), λt is the pulse width after broadening by the atmospheric turbulence, which is expressed as44

where L0 is the outer-scale size of turbulence.

SNR analysis

SNR is an important parameter of pulsed-laser 3D imaging because it affects the detection range and accuracy. The SNR of the proposed system for the n-th ring APD detector can be expressed as46

where Pnsig is the signal power output by the n-th ring APD detector, Pth is the mean-squared thermal-noise power, Pa is the mean-squared noise power added by the electronic amplifier, Pdark is the mean-squared dark-current-noise power generated by the leakage current, Pshot is the mean-squared signal shot noise power, and Pback is the output power of background noise produced by solar illumination.

The signal power output by the APD detector is given by

where M is the current gain of the APD detector, ρD = ηDe/(hf) is the current responsivity of the APD detector, ηD is the quantum efficiency of the APD detector, e is the electron charge, h is Planck’s constant, f = c/λ is the optical frequency, and RL is the effective load resistance of the APD detector.

The noise terms in Eq. (14) are given by

where k is Boltzmann’s constant, B is the electrical bandwidth of the system, T is the temperature in Kelvin, Ta is the effective noise temperature, Idark is the dark current, Fex is the excess-noise factor, Sirr is the solar irradiance, Δλ is the optical bandwidth of the receiver, and ΩR is the receiver FOV of the APD detector.

By substituting Eqs. (15) and (16) into Eq. (14), we obtain the SNR of the APD detector, which can be written as
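The SNR expression itself is not reproduced above. The Python sketch below assumes textbook direct-detection forms for each term: signal power (MρDPrec)²RL, thermal and amplifier noise 4kTB and 4kTaB, and shot-type terms 2eM²FexBRL multiplied by the dark current or by the photocurrent. These may differ in detail from the paper's noise and SNR equations. The gain M and the received echo and background powers are not given in this section, so they are hypothetical inputs; the remaining defaults are the APD values listed in the SNR subsection of the Results, including B = 1/(2τ).

```python
E_CHARGE = 1.602e-19   # electron charge (C), value used in the text
PLANCK = 6.63e-34      # Planck constant (J s), value used in the text
BOLTZMANN = 1.38e-23   # Boltzmann constant (J/K), value used in the text
LIGHT_SPEED = 2.998e8  # speed of light (m/s)


def responsivity(eta_d, wavelength_m):
    """Current responsivity rho_D = eta_D * e / (h * f), with f = c / lambda (A/W)."""
    frequency = LIGHT_SPEED / wavelength_m
    return eta_d * E_CHARGE / (PLANCK * frequency)


def apd_snr(p_rec, p_bk, gain, pulse_width, eta_d=0.9, wavelength_m=1550e-9,
            r_load=50.0, temp=300.0, temp_amp=175.0, i_dark=100e-9, f_ex=10.0):
    """SNR = P_sig / (P_th + P_a + P_dark + P_shot + P_back) for one APD detector.

    Assumption: textbook direct-detection noise forms; gain (M) and the received
    powers p_rec and p_bk (W) are inputs because their values are not given here.
    """
    bandwidth = 1.0 / (2.0 * pulse_width)            # B = 1 / (2 tau), as in the text
    rho_d = responsivity(eta_d, wavelength_m)
    p_sig = (gain * rho_d * p_rec) ** 2 * r_load     # signal power across R_L
    p_th = 4.0 * BOLTZMANN * temp * bandwidth        # thermal noise
    p_a = 4.0 * BOLTZMANN * temp_amp * bandwidth     # amplifier noise (assumed 4 k Ta B)
    shot_scale = 2.0 * E_CHARGE * gain**2 * f_ex * bandwidth * r_load
    p_dark = shot_scale * i_dark                     # dark-current noise
    p_shot = shot_scale * rho_d * p_rec              # signal shot noise
    p_back = shot_scale * rho_d * p_bk               # background (solar) shot noise
    return p_sig / (p_th + p_a + p_dark + p_shot + p_back)


# Hypothetical operating point: M = 10, 5 ns pulse, 1 nW echo, 0.1 nW background.
print(responsivity(0.9, 1550e-9))
print(apd_snr(1e-9, 1e-10, gain=10.0, pulse_width=5e-9))
```

Because the signal term grows with the square of the received power while the shot-type noise grows only linearly, a larger collecting aperture raises the SNR, which is the trend reported for the outer rings.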

Results

We carry out simulation experiments to verify the effectiveness of the proposed imaging system. The experiments include large-FOV imaging, which mimics the compound eye, and imaging with space-variant resolution as well as rotation and scaling invariance, which imitate the retina-like property of the human eye. We also analyze the SNR of the proposed imaging system.

Simulation parameters

Table 1 shows the relevant parameters of the simulation experiments. With these parameters, the entire geometrical structure of the receiving system can be obtained using Eqs. (1) and (2), including the location distribution of the microlenses on the curved surface (the angle between the main optical axis and each microlens) and the optical parameters (focal length, curvature radius, aperture, and thickness) of the microlenses of the NUMLA. The beam-expanding ratio of the transmitting system is 3×. The full diffusion angle of the DOE is about 62°. The pulse energy of the pulsed laser should be larger than the value calculated by Eq. (11) when the power of the pulsed echo profile equals the minimum detectable input power of the APD detector. A wavelength of 1550 nm is chosen for the pulsed laser because it is eye-safe. The simulated distance (1 m) is shorter than that of traditional pulsed-laser 3D imaging systems because the FOV of the proposed system is as large as 52°. With this FOV, it is difficult to find a target of appropriate size to image at a long distance. For example, when the distance is 100 m and the FOV is 52°, the appropriate circumradius of the target is about 49 m (100 m × tan(52°/2)). Therefore, we select 1 m as the simulated distance. Users can adjust the FOV according to the desired distance and the size of the imaged target. The numbers of rings and sections of the microlenses are set to 18 and 30, respectively, to achieve a large optical fill factor.
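The footprint arithmetic used above, and again for the approximately 1 m beam diameter in the next experiment, is simply d × tan(FOV/2). A small Python check, with an illustrative function name:

```python
import math


def footprint_radius(distance_m, fov_deg):
    """Radius (m) of the region spanned by a full FOV at a given distance: d * tan(FOV / 2)."""
    return distance_m * math.tan(math.radians(fov_deg) / 2.0)


for distance in (1.0, 100.0):
    radius = footprint_radius(distance, 52.0)
    print(f"{distance:>5.0f} m -> radius {radius:.2f} m, diameter {2 * radius:.2f} m")
```

At 100 m this gives a radius of about 48.8 m, the roughly 49 m quoted above; at 1 m the diameter is about 0.98 m, matching the approximately 1 m beam diameter used in the simulation.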

Figures 4 and 5 show the angle between the main optical axis and each microlens and the optical parameters of the microlenses, respectively. Figure 4 shows that the angle increases from 0.8° to 26° as the ring number increases from 1 to 18. Therefore, the entire FOV of the proposed imaging system is 52° (2 × 26°). The figure also shows that the microlenses are non-uniformly distributed on the curved surface: microlenses near the main optical axis are more densely packed than those far from it.
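Eq. (1) is not reproduced here, but the reported angles can be checked against the geometric growth of rn. The Python sketch below assumes tan αn = rn/(R + L), the geometry implied by the FOV expression 2 × arctan[0.55/(R + L)] used later in the Results, together with rn = r1 q^(n−1) and the increase coefficient q = 1.23 quoted in the scaling-invariance test. Starting from α1 = 0.8°, it gives α18 of about 25.2°, close to but not exactly the reported 26°, so Eq. (1) may contain details this sketch omits.

```python
import math


def ring_angles(alpha1_deg, q, n_rings):
    """Angles (deg) of each ring assuming tan(alpha_n) = tan(alpha_1) * q**(n - 1).

    Equivalent to r_n = r_1 * q**(n - 1) on the target plane with
    tan(alpha_n) = r_n / (R + L); an assumption, not the paper's Eq. (1).
    """
    t1 = math.tan(math.radians(alpha1_deg))
    return [math.degrees(math.atan(t1 * q ** (n - 1))) for n in range(1, n_rings + 1)]


angles = ring_angles(0.8, 1.23, 18)
print(f"outermost ring: {angles[-1]:.1f} deg, full FOV: {2 * angles[-1]:.1f} deg")
```

The angular step between rings shrinks toward the axis, reproducing the denser packing of the inner microlenses noted above.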

As shown in Fig. 5: (1) the focal length increases from 130.4 mm to 132.2 mm as the ring number increases from 1 to 18; (2) the curvature radius increases from 60.6 mm to 61.4 mm; (3) the aperture increases from 4 mm to 164 mm, which indicates that microlenses far from the main optical axis have a larger FOV than those near it; and (4) the thickness increases from 0.1 mm to 68 mm.
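The focal lengths and curvature radii in Fig. 5 can be cross-checked with the thin-lens lensmaker's equation. Assuming the microlenses behave as thin plano-convex lenses (an assumption, since Eq. (2) is not shown), g = (nr − 1) f, so each (f, g) pair implies a refractive index:

```python
def implied_index(focal_mm, radius_mm):
    """Refractive index implied by a thin plano-convex lens: f = g / (n_r - 1)."""
    return 1.0 + radius_mm / focal_mm


for f, g in ((130.4, 60.6), (132.2, 61.4)):
    print(f"f = {f} mm, g = {g} mm -> n_r = {implied_index(f, g):.3f}")
```

Both endpoints imply nr ≈ 1.46, so the slight growth of fn and gn across the rings is consistent with a single lens material.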

Imaging with a large FOV and space-variant resolution

We carry out a simulation experiment to verify that the proposed system possesses both the large-FOV feature of the compound eye and the space-variant resolution property of the human eye. Two targets (a tank model and a car model) are used. The sizes of the tank and car models are 0.9 m × 0.36 m × 0.25 m and 0.46 m × 0.46 m × 0.36 m (length × width × height), respectively, as shown in Fig. 6(a). The reflectivities of the tank and car models are approximately 40% and 10%, respectively. Because the FOV of the proposed system is 52°, the beam diameter is approximately 1 m when the distance between the targets and the transmitting system is 1 m, which is sufficient to cover both targets. The tank model is regarded as the interested target, and the car model as the uninterested target.

From the principle of the proposed system and the parameters in Table 1, the sampling area of each microlens on the target plane can be obtained, as shown in Fig. 6(b). The sampling areas are ellipses, and the resolution is highest in the central area and decreases monotonically toward the periphery. There are also some overlaps and blind areas between adjacent sampling areas. By substituting Eq. (3) into the fill-factor expression of our previous paper30, the optical fill factor reaches 91%.

The APD array receives the echo beam from the sampling areas and generates electronic pulse signals. To simplify the simulation, we use a typical peak discriminator to obtain the distance information from the time of flight. The number of APD detectors equals the number of microlenses; hence, the APD array also consists of 18 rings with 30 sections in each ring. Each detector of the APD array obtains the distance information of the corresponding elliptical sampling area of the targets. The axes of a traditional LPT image (eccentricity and angle) can be represented by the rings and sections, respectively, because rn already grows geometrically according to Eq. (1). Therefore, the LPT image of the distance information is obtained by circular readout, with the sections and rings as the horizontal and vertical axes, respectively. Figure 7(a) shows the LPT image of the two targets. By transforming from LPT to Cartesian coordinates, the reversed LPT image is obtained, as shown in Fig. 7(b). Figure 7(b) shows that the circumcircle radius of the tank model imaged by the proposed system is approximately 0.55 m. Therefore, the entire FOV of the proposed system is more than 38° (2 × arctan[0.55/(R + L)]), which indicates that the proposed system can image over a large FOV, mimicking the compound eye. The simulated FOV (38°) is narrower than the designed value (52°) because the tank model is fully covered by the inner 17 rings of microlenses and no target lies in the FOV of the outermost ring, as shown in Fig. 5(a). The center of the interested tank model is imaged at high resolution, and the uninterested car model at low resolution. The high resolution benefits the recognition of the tank model, whereas the low-resolution image of the uninterested car model still provides context and situational awareness. 
The results indicate that the proposed system can realize imaging with space-variant resolution mimicking the retina-like property of the human eye.
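The circular readout and the LPT-to-Cartesian mapping described above can be sketched in Python. The callback range_of, the 0-based indexing, and the placement of each pixel at the centre of its sampling area (ring radius r1·q^n, section angle 2πm/M) are illustrative assumptions; the actual sampling centres follow from Eq. (1).

```python
import math


def lpt_image(range_of, n_rings, n_sections):
    """Circular readout into an LPT image: row = ring index, column = section index.

    `range_of(n, m)` is a hypothetical callback returning the range measured by the
    APD detector behind microlens (ring n, section m), both 0-based.
    """
    return [[range_of(n, m) for m in range(n_sections)] for n in range(n_rings)]


def reverse_lpt(image, r1, q):
    """Map each LPT pixel back to its sampling-area centre on the target plane.

    Assumes ring n (0-based) is centred at r1 * q**n and section m at the angle
    2*pi*m / n_sections; returns (x, y, range) triples.
    """
    n_sections = len(image[0])
    points = []
    for n, row in enumerate(image):
        radius = r1 * q**n
        for m, value in enumerate(row):
            theta = 2.0 * math.pi * m / n_sections
            points.append((radius * math.cos(theta), radius * math.sin(theta), value))
    return points


# Hypothetical flat scene at 1 m sampled by the 18-ring x 30-section layout.
image = lpt_image(lambda n, m: 1.0, 18, 30)
points = reverse_lpt(image, r1=0.01, q=1.23)
print(len(image), len(image[0]), len(points))
```

Keeping the LPT image as a plain ring-by-section array makes the later invariance tests simple: rotations become column shifts and scalings become row shifts.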

Rotation and scaling invariance

In this section, we test the rotation and scaling invariance of the proposed system under the aforementioned simulation conditions. To test rotation invariance, the two targets (tank and car models) are rotated clockwise by 36°, as shown in Fig. 8(a). To test scaling invariance, the tank and car models are scaled down to 0.7 times their original size, as shown in Fig. 8(b). Figure 9 shows the LPT and reversed LPT images before and after the 36° rotation, and Fig. 10 shows the LPT and reversed LPT images before and after scaling.

A comparison between Fig. 9(a,c) shows that the LPT image after rotating the targets by 36° has not changed significantly; it is only shifted by 3 pixels along the sections axis. The theoretical shift is 3 pixels (36°/360° × M, with M = 30 sections), so the simulation result agrees well with theory. Figure 9(b,d) shows that the only difference between the reversed LPT images before and after rotation is the 36° rotation of the targets, which indicates that the proposed system has the rotation invariance of the human eye.

A comparison between Fig. 10(a,b) shows that the LPT image after scaling the targets to 0.7 times their original size has also not changed significantly; it is only shifted by 2 pixels along the rings axis. The theoretical shift along the rings axis is 1.72 pixels, i.e., −log(0.7)/log(q), where q = 1.23 is the increase coefficient in Eq. (1). The radius of the circumcircle covering the two targets is 0.55 m before scaling, as shown in Fig. 10(c), and 0.36 m after scaling, as shown in Fig. 10(d). The measured scaling ratio is therefore 0.65, and the relative error is approximately 7% [(0.7 − 0.65)/0.7]. The simulation result thus agrees well with theory, indicating that the proposed system has the scaling invariance of the human eye.
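Both theoretical shifts above follow from the log-polar geometry: a rotation by φ moves the LPT image by φM/360° columns along the sections axis, and a scaling by s moves it by −log s/log q rows along the rings axis. The Python sketch below reproduces the two numbers and, for rotation, checks the invariance numerically on a hypothetical test scene sampled at radii r1·q^n and angles 2πm/M (the scene, r1, and the sampling centres are assumptions). The sketch rotates counter-clockwise; a clockwise rotation, as in Fig. 8(a), only flips the sign of the shift.

```python
import math


def rotation_shift(angle_deg, n_sections):
    """Expected circular shift (pixels) along the sections axis for a rotation."""
    return angle_deg * n_sections / 360.0


def scaling_shift(scale, q):
    """Expected shift (pixels) along the rings axis when radii grow as q**n."""
    return -math.log(scale) / math.log(q)


def sample_lpt(scene, r1, q, n_rings, n_sections):
    """Sample scene(x, y) at ring radii r1*q**n and section angles 2*pi*m/M."""
    image = []
    for n in range(n_rings):
        radius = r1 * q**n
        image.append([scene(radius * math.cos(2 * math.pi * m / n_sections),
                            radius * math.sin(2 * math.pi * m / n_sections))
                      for m in range(n_sections)])
    return image


def bump(x, y):
    """Hypothetical asymmetric test scene: a smooth off-centre Gaussian bump."""
    return math.exp(-((x - 0.2) ** 2 + (y - 0.1) ** 2) / 0.01)


def rotated(scene, angle_deg):
    """Return the scene rotated counter-clockwise by angle_deg about the optical axis."""
    a = math.radians(angle_deg)
    return lambda x, y: scene(x * math.cos(a) + y * math.sin(a),
                              -x * math.sin(a) + y * math.cos(a))


base = sample_lpt(bump, 0.01, 1.23, 18, 30)
turned = sample_lpt(rotated(bump, 36.0), 0.01, 1.23, 18, 30)
# A 36 degree rotation equals a circular shift of every row by 3 sections.
matches = all(abs(turned[n][m] - base[n][(m - 3) % 30]) < 1e-9
              for n in range(18) for m in range(30))
print(rotation_shift(36.0, 30), round(scaling_shift(0.7, 1.23), 2), matches)
```

Because 36° is an exact multiple of the 12° section pitch, the rotated LPT image is an exact circular shift of the original. The scale factor 0.7 is not an integer power of q, so a simulated image can only show a whole-pixel shift (2 pixels) against the theoretical 1.72.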

SNR

To evaluate the SNR of the proposed system, typical parameters of the APD detector in Eq. (14) are set as follows: ηD = 0.9, RL = 50 Ω, B = 1/(2τ), T = 300 K, Ta = 175 K, Idark = 100 nA, Fex = 10, Sirr = 266 W/m²/μm, Δλ = 4 nm, e = 1.602 × 10⁻¹⁹ C, h = 6.63 × 10⁻³⁴ J·s, and k = 1.38 × 10⁻²³ J/K46. Figure 11 shows the SNR of the proposed system in each ring. The SNR increases from 3.6 to 7.2 × 10⁷ as the ring number increases from 1 to 18. Because the microlens aperture grows toward the outer rings, the SNR grows with it, and the maximum SNR is located in the outermost peripheral area. Since the interested target is imaged by the central rings, where the SNR is lowest, the laser beam profile should be adjusted to improve the SNR in the central area. The SNR ratio between the last ring and the first ring is up to 2 × 10⁷. This is mainly because the ratio of receiver areas between the two rings is Arec18/Arec1 = [π × (D18/2)²]/[π × (D1/2)²] = 1.77 × 10³, and the ratio of target sampling areas between the two rings is At18/At1 = (π × la18 lb18)/(π × la1 lb1) = 1.4 × 10³.

Discussion

A pulsed-laser 3D imaging system with a large FOV inspired by the compound and human hybrid eye has been proposed and analyzed above. The modeling of the receiving system shows that the FOV of the proposed system depends on several factors, including the radius of the curved surface, the distance between the target plane and the NUMLA, the blind radius, and the numbers of rings and sections. The imaging resolution depends on the numbers of rings and sections. To ensure ranging accuracy, the bandwidth of the APD detector should be larger than the reciprocal of the laser pulse width. In practice, users can select these parameters according to the actual situation; once they are set, the FOV and the resolution of the proposed system are determined. Notably, the proposed system retains the large-FOV feature of the compound eye and the space-variant resolution property of the human eye when the distance between the target and the NUMLA varies. When the distance changes, the FOV remains the same, and the system retains both the space-variant resolution and the increase coefficient between adjacent rings. However, the sampling area of each microlens on the target plane changes. Suppose that the target plane moves closer to the NUMLA by ΔL; the new blind radius and sampling-area positions can then be obtained by the following equation

where \(r'_{0}\) and \(r'_{n}\) are the new blind radius and the new distance between the main optical axis and the center of the sampling area of the n-th ring microlens, respectively.
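The equation for the shifted radii is not reproduced above. Under the assumption that each ring keeps its viewing angle αn, with tan αn = rn/(R + L) (the geometry implied by the FOV expression 2 × arctan[0.55/(R + L)] in the Results), every radius scales by the same factor when the target plane moves closer by ΔL, which also leaves the increase coefficient q unchanged, as the text states. A Python sketch with hypothetical values for r0, R, and L:

```python
def rescale_radii(radii, R, L, delta_L):
    """Sampling-centre radii after the target plane moves closer to the NUMLA by delta_L.

    Assumes every ring keeps its viewing angle alpha_n, with
    tan(alpha_n) = r_n / (R + L), so each radius scales by (R + L - delta_L) / (R + L).
    """
    factor = (R + L - delta_L) / (R + L)
    return [r * factor for r in radii]


# Hypothetical values: index 0 holds the blind radius r0, indices 1..18 the rings (q = 1.23).
r0, q, R, L = 0.01, 1.23, 0.6, 1.0
radii = [r0 * q**n for n in range(19)]
moved = rescale_radii(radii, R, L, delta_L=0.2)
print(moved[0], moved[5] / moved[4])
```

A uniform scaling of all radii is exactly a pure row shift in the LPT image, which is why the space-variant layout survives a change of distance.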

In the aforementioned simulation experiment, the tank model is regarded as the interested target and the car model as the uninterested target. In a practical situation, a rotation platform can be used to point the blind area of the receiving system at the interested target. For example, when the car model is regarded as the interested target and the tank model as the uninterested target, the LPT and reversed LPT images of the two targets are as shown in Fig. 12. In this case, many pixels fall on the interested car model, which is easy to recognize, whereas fewer pixels represent the uninterested tank model. Although the tank model is difficult to recognize, the image indicates that something exists around the interested car model. If users want to recognize it, the rotation platform can be used to point the blind area of the receiving system at it. Note that the reversed LPT image has slight distortion because the overlap areas are interpreted twice. Therefore, the structural parameters should be optimized to reduce the overlap areas.

Unlike mechanical scanning lidar, the proposed system has no scanning component and uses an APD detector array to detect the pulsed echo signal; therefore, it can significantly increase the imaging speed. Unlike traditional flash lidar, which uses a single lens as the receiver, the proposed method employs a microlens array as the receiver. The microlens array is arranged on a curved surface to obtain a large FOV, and the optical parameters of the microlenses are non-uniform to mimic the space-variant resolution property of the human eye. In addition, compared with flash lidar using a single integrated APD array, the proposed system composes the APD array from discrete components. This design makes it easy to increase the number of APD detectors as required and effectively decreases the electrical crosstalk between adjacent APD detectors owing to the aperture array; the latter is often difficult for a single integrated APD array. To clarify the characteristics of the proposed system, it is compared with some current state-of-the-art flash lidar systems in Table 2. Notably, the imaging speed mainly depends on the repetition rate of the pulsed laser, the response time of the APD detector, and the processing speed of the ROIC. The imaging speed is evaluated as the number of frames per second, where the time per frame is measured from the moment the pulsed laser emits a pulse to the moment the FPGA outputs an image. The imaging speed of the proposed system is of the same order of magnitude as that of the other systems because the factors governing it are of the same order of magnitude. The resolution of the proposed system may, however, be insufficient for target recognition. 
Therefore, if users want to recognize targets effectively, a higher resolution may be needed. However, increasing the resolution requires more microlenses and APD detectors, which raises the manufacturing difficulty and cost. Therefore, users should select a reasonable resolution depending on the practical application.

Conclusions

In this study, a pulsed-laser 3D imaging system inspired by the compound and human hybrid eye is proposed. The principle of the proposed system is introduced, and the mathematical model of the receiving system is developed. The receiving system has 18 × 30 (rings × sections) microlenses distributed on a curved surface to mimic the large-FOV feature of the compound eye. Meanwhile, both the location distribution and the optical parameters of these microlenses are non-uniform to mimic the space-variant resolution property of the human eye. Simulation experiments are carried out on the proposed system, including imaging with a large FOV and space-variant resolution as well as tests of rotation and scaling invariance. The results show that the entire FOV of the proposed system is up to 52°, with high resolution in the central FOV and low resolution in the peripheral FOV. The rotation and scaling invariances are verified, indicating that the proposed system possesses these two invariances of the human eye. The SNR analysis shows that the SNR increases with the ring number and that the maximum SNR is located in the outermost peripheral area. The main purpose of this paper is to present the modeling and simulation verification of the proposed pulsed-laser 3D imaging system inspired by the compound and human hybrid eye. Our future work includes experimental validation to obtain distance and intensity images.

References

Pawlikowska, A. M., Halimi, A., Lamb, R. A. & Buller, G. S. Single-photon three-dimensional imaging at up to 10 kilometers range. Opt Express 25, 11919 (2017).

Kong, H. J., Kim, T. H., Jo, S. E. & Oh, M. S. Smart three-dimensional imaging ladar using two Geiger-mode avalanche photodiodes. Opt Express 19, 19323 (2011).

McManamon, P. F. Review of ladar: a historic, yet emerging, sensor technology with rich phenomenology. Opt Eng 51, 89801 (2012).

Ludwig, D., Kongable, A., Albrecht, H. T. & Fetzer, G. J. Identifying targets under trees: jigsaw 3D ladar test results. Proc SPIE 5086, 16 (2003).

Misu, K. & Miura, J. Specific Person Detection and Tracking by a Mobile Robot Using 3D LIDAR and ESPAR Antenna. 302, 705 (2016).

Schwarz, B. LIDAR: Mapping the world in 3D. Nat Photonics 4, 429 (2010).

Housden, R. J. et al. Extended-field-of-view three-dimensional transesophageal echocardiography using image-based X-ray probe tracking. Ultrasound in Medicine & Biology 39, 993 (2013).

Soloviev, A. & Haag, M. U. D. Three-Dimensional Navigation with Scanning Ladars: Concept & Initial Verification. IEEE Transactions on Aerospace & Electronic Systems 46, 14 (2010).

Wang, K., Li, F., Zeng, H. & Yu, X. Three-dimensional flame measurements with large field angle. Opt Express 25, 21008 (2017).

Berginc, G. & Jouffroy, M. 3D laser imaging. Piers Online 7, 411 (2011).

Zhang, Z., Zhao, Y., Zhang, Y., Wu, L. & Su, J. A real-time noise filtering strategy for photon counting 3D imaging lidar. Opt Express 21, 9247 (2013).

Cao, J. et al. Design and realization of retina-like three-dimensional imaging based on a MOEMS mirror. Opt Laser Eng 82, 1 (2016).

Degnan, J. J. Scanning, Multibeam, Single Photon Lidars for Rapid, Large Scale, High Resolution, Topographic and Bathymetric Mapping. Remote Sens-Basel 8, 958 (2016).

Hofmann, U. et al. Presented at the International Conference on Optical MEMS and Nanophotonics (2012).

Niclass, C. et al. Design and characterization of a 256 × 64-pixel single-photon imager in CMOS for a MEMS-based laser scanning time-of-flight sensor. Opt Express 20, 11863 (2012).

McCarthy, A. et al. Long-range time-of-flight scanning sensor based on high-speed time-correlated single-photon counting. Appl Optics 48, 6241 (2009).

Jeong, K., Kim, J. & Lee, L. P. Biologically Inspired Artificial Compound Eyes. Science 312, 557 (2006).

Wu, D. et al. Bioinspired Fabrication of High‐Quality 3D Artificial Compound Eyes by Voxel‐Modulation Femtosecond Laser Writing for Distortion‐Free Wide‐Field‐of‐View Imaging. Adv Opt Mater 2, 751 (2015).

Abraham, S. & Förstner, W. Fish-eye-stereo calibration and epipolar rectification. ISPRS Journal of Photogrammetry and Remote Sensing 59, 278 (2005).

https://www.princetonlightwave.com/wp-content/uploads/2017/06/PLI-Falcon-II-.pdf.

Verghese, S. et al. Presented at SPIE Defense, Security, and Sensing (2009).

Dutton, N. A. W. et al. A SPAD-Based QVGA Image Sensor for Single-Photon Counting and Quanta Imaging. IEEE T Electron Dev 63, 189 (2015).

Gyongy, I. et al. A 256 × 256, 100-kfps, 61% Fill-Factor SPAD Image Sensor for Time-Resolved Microscopy Applications. IEEE T Electron Dev PP, 1 (2018).

Bronzi, D. et al. 100 000 Frames/s 64 × 32 Single-Photon Detector Array for 2-D Imaging and 3-D Ranging. IEEE J Sel Top Quant 20, 354 (2014).

Augusto, R. X. et al. Presented at the 2018 IEEE International Solid-State Circuits Conference (ISSCC) (2018).

Pfennigbauer, M., Ullrich, A. & Carmo, J. P. D. High precision, accuracy, and resolution of 3D laser scanner employing pulsed time-of-flight measurement. Proc SPIE 8037, 803708 (2011).

Land, M. F. & Nilsson, D. Animal eyes (Oxford University Press, 2012).

Lee, W., Jang, H., Park, S., Song, Y. M. & Lee, H. COMPU-EYE: a high resolution computational compound eye. Opt Express 24, 2013 (2016).

Li, L. & Yi, A. Y. Development of a 3D artificial compound eye. Opt Express 18, 18125 (2010).

Cheng, Y. et al. Compound eye and retina-like combination sensor with a large field of view based on a space-variant curved micro lens array. Appl Optics 56, 3502 (2017).

Cao, J. et al. Modeling and simulations of three-dimensional laser imaging based on space-variant structure. Optics & Laser Technology 78, Part B 62 (2016).

Song, H., Chui, T. Y., Zhong, Z., Elsner, A. E. & Burns, S. A. Variation of cone photoreceptor packing density with retinal eccentricity and age. Invest Ophthalmol Vis Sci 52, 7376 (2011).

Jurie, F. A new log-polar mapping for space variant imaging: application to face detection and tracking. Pattern Recogn 32, 865 (1999).

Traver, V. J. & Bernardino, A. A review of log-polar imaging for visual perception in robotics. Robot Auton Syst 58, 378 (2010).

Xia, W., Han, S., Cao, J. & Yu, H. Target recognition of log-polar ladar range images using moment invariants. Opt Laser Eng 88, 301 (2017).

Zhang, H. et al. Development of a low cost high precision three-layer 3D artificial compound eye. Opt Express 21, 22232 (2013).

Brückner, A. et al. Thin wafer-level camera lenses inspired by insect compound eyes. Opt Express 18, 24379 (2010).

Nakamura, T., Horisaki, R. & Tanida, J. Computational superposition compound eye imaging for extended depth-of-field and field-of-view. Opt Express 20, 27482 (2012).

Dumas, D. et al. Infrared camera based on a curved retina. Opt Lett 37, 653 (2012).

Sandini, G., Questa, P., Scheffer, D. & Diericks, B. A retina-like CMOS sensor and its applications. Sensor Array and Multichannel Signal Processing Workshop (2000).

Wang, F., Cao, F., Bai, T. & Su, Y. Optimization of retina-like sensor parameters based on visual task requirements. Opt Eng 52, 43206 (2013).

Cheng, Y. et al. Reducing defocus aberration of a compound and human hybrid eye using liquid lens. Appl Optics 57, 1679 (2018).

Cao, J. et al. Modeling and simulations on retina-like sensors based on curved surface. Appl Optics 55, 5738 (2016).

Hao, Q. et al. Analytical and numerical approaches to study echo laser pulse profile affected by target and atmospheric turbulence. Opt Express 24, 25026 (2016).

Dordova, L. & Wilfert, O. Presented at the International Conference on Laser and Fiber-Optical Networks Modeling (2010).

Overbeck, J. A., Salisbury, M. S., Mark, M. B. & Watson, E. A. Required energy for a laser radar system incorporating a fiber amplifier or an avalanche photodiode. Appl Optics 34, 7724 (1995).

Akiyama, A. et al. Optical fiber imaging laser radar. Proc SPIE 44, 116 (2005).

Shonkwiler, R. Compact 3D flash lidar video cameras and applications. Proc SPIE 7684, 768405 (2010).

Albota, M. A. et al. Three-dimensional imaging laser radars with Geiger-mode avalanche photodiode arrays. Lincoln Laboratory Journal 13, 351 (2002).

Zach, G., Davidovic, M. & Zimmermann, H. A 16 × 16 Pixel Distance Sensor With In-Pixel Circuitry That Tolerates 150 klx of Ambient Light. IEEE J Solid-St Circ 45, 1345 (2010).

Zhou, G. et al. Flash Lidar Sensor Using Fiber-Coupled APDs. IEEE Sens J 15, 4758 (2015).

Acknowledgements

This work was supported by the National Natural Science Foundation of China (No.61605008), Beijing Natural Science Foundation (No. 4182058), National Key Foundation for Exploring Scientific Instrument (2014YQ350461), and Beijing Institute of Technology Research Fund Program for Young Scholars.

Author information

Contributions

Y.C. and J.C. conceived the idea and proposed the system. Q.H. supervised the research. Y.C. and F.H.Z. performed the simulation experiments. Y.C. wrote the manuscript. All authors read and revised the manuscript.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cheng, Y., Cao, J., Zhang, F. et al. Design and modeling of pulsed-laser three-dimensional imaging system inspired by compound and human hybrid eye. Sci Rep 8, 17164 (2018). https://doi.org/10.1038/s41598-018-35098-9
