Abstract

The growing threat of vector-borne diseases, highlighted by recent epidemics, has prompted increased focus on the fundamental biology of vector-virus interactions. To this end, experiments are often the most reliable way to measure vector competence (the potential for arthropod vectors to transmit certain pathogens). Data from these experiments are critical to understand outbreak risk, but – despite having been collected and reported for a large range of vector-pathogen combinations – terminology is inconsistent, records are scattered across studies, and the accompanying publications often share data with insufficient detail for reuse or synthesis. Here, we present a minimum data and metadata standard for reporting the results of vector competence experiments. Our reporting checklist strikes a balance between completeness and labor-intensiveness, with the goal of making these important experimental data easier to find and reuse in the future, without much added effort for the scientists generating the data. To illustrate the standard, we provide an example that reproduces results from a study of Aedes aegypti vector competence for Zika virus.


Introduction

Vector competence is an arthropod vector’s ability to transmit a pathogen after exposure to the pathogen1,2,3. It combines the intrinsic potential of a pathogen to successfully enter and replicate within the vector, and then disseminate to, replicate within, and release from the vector’s salivary glands into the saliva at sufficiently high concentration to initiate infection in the next vertebrate host. Quantifying this process at each step within the vector is fundamental to understanding and predicting vector-borne disease transmission.

Due to the inherent complexity of arboviral transmission, experimental studies of vector competence are also necessarily complex, and may report several types of data. Experimental settings add further constraints, as controlled laboratory conditions are themselves complex, and vector competence is highly responsive to some of these conditions (e.g., the temperature at which experiments take place). While the complexity and requisite scientific skills make these experiments challenging, their importance and value – particularly in response to vector-borne disease outbreaks of international concern – cannot be overstated, and have led to increasing numbers of these experiments. However, the complexity of the experiments, and the variety of conditions under which they are conducted, make it difficult to share (and synthesize) all relevant metadata in full, especially with terminology consistent enough to compare results across studies4. Because primary data are not reported in a standardized manner, opportunities to advance science and public health are being lost.

Here, we propose a minimum data standard for reporting the results of vector competence experiments. The motivation to create and disseminate data standards for reporting is part of a broad effort across scientific disciplines to preserve data for future use, recover existing data that may be unsearchable for many reasons, and establish open principles for harmonizing those data to better leverage the effort of the larger community of research5,6,7,8,9,10. In particular, the FAIR (Findability, Accessibility, Interoperability, and Reusability) guiding principles11,12 were created to improve the infrastructure supporting the reuse of scholarly data, including public data archiving13. These principles aim to maximize the value of research investments and digital publishing, and have been adopted into both efforts to synthesize and populate databases for use by the scientific community, and into the language of a growing number of funders’ reporting requirements. Tailoring FAIR principles to different subfields of scientific research requires consideration of the specific kinds of data that are regularly generated, and how they would best be reported. For example, the recently published minimum data standard MIReAD (Minimum Information for Reusable Arthropod abundance Data) aims to improve the transparency and reusability of arthropod abundance data14, thereby improving the benefits reaped from data sharing, and reducing the cost of obtaining research results. Importantly, these data standards do not aim to provide guidance on how experiments are conducted, nor guide research, but provide a reporting standard flexible enough to accommodate the outputs of most of these experiments.

In this paper, we characterize the key steps of vector competence experiments, and the data generated at each stage, as a means to establish common guidelines for data reporting that follow FAIR principles. Due to the long history of experimental work with mosquito vectors (and the incomparable role it plays in efforts to decrease the global burden of vector-borne disease), we propose a minimum data standard focused on capturing results from studies that test pairs of mosquitoes and arboviruses. However, we intentionally aimed to make these standards flexible, extendable, and adaptable, and therefore applicable to additional systems (e.g., experiments with ticks and other vectors, or mechanical transmission components of Chagas disease by triatomine insects).

Methods

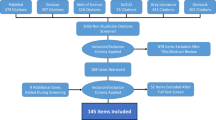

Tables 1–4 provide a standard checklist for the data that arise from vector competence experiments and for the metadata about them. A blank Excel file with these columns is available as Supplementary File 1, for researchers to use directly as a template when reporting primary data along with publications. We have designed these standards with a particular focus on applicability to mosquito-borne arboviruses, and on capturing aspects of experimental design that are known confounders (e.g., rearing and experimental temperature, or inoculation route and dose)15,16,17,18,19. While reviewing the literature to design the standard, we found that many of the rates reported (e.g., transmission rate) are derived from discrete and detailed experimental information, yet the original raw data may never be reported, and are often impossible to reconstruct from the bar or line charts provided. Moreover, the derived quantities often follow different calculations and, usually intentionally, carry very different biological meanings (e.g., the difference between ‘dissemination rate’ and ‘disseminated infection rate’, which are often used interchangeably) (Fig. 1)20. Given these choices, it may be misleading to directly compare derived rates across studies. To avoid this problem, we suggest reporting the raw numbers of vectors tested and of vectors found positive for each basic metric; this may prevent confusion arising from inconsistent terminology across studies, while still allowing derived rates to be calculated and reported in publications.

Fig. 1. (A) For mosquito-borne viruses, vector competence experiments follow a relatively standardized format. Mosquitoes are inoculated with a virus through intrathoracic inoculation or by feeding on a live host or a prepared blood meal; infection and dissemination are measured by testing different mosquito tissues; and transmission is measured either by testing saliva or salivary glands, or by allowing mosquitoes to feed on a susceptible host and infect it. (B) The results are best understood as rates, but each rate might be reported in several formats; this is further complicated if only a subset of mosquitoes is tested at each stage (e.g., if some mosquitoes die between stages of the experiment). As a result, reporting only derived rates can leave the underlying denominators unclear. Instead, as our data standard reflects, the clearest presentation of raw data is to report total counts of tested and positive mosquitoes at each stage (“+” indicates how many mosquitoes test positive out of the total sample). Created with BioRender.com.
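The denominator problem described above can be made concrete with a minimal sketch. It assumes one common reading of the two dissemination metrics (positives divided by mosquitoes tested for dissemination, versus positives divided by all mosquitoes tested for infection); usage varies between studies, and the field names and counts below are hypothetical, chosen only for illustration rather than taken from the standard or from any experiment.

```python
from dataclasses import dataclass
from fractions import Fraction
from typing import Dict, Optional


@dataclass(frozen=True)
class StageCounts:
    """Raw counts for one experimental group (hypothetical field names)."""
    infection_tested: int
    infection_positive: int
    dissemination_tested: int
    dissemination_positive: int


def proportion(positive: int, tested: int) -> Optional[Fraction]:
    """Return positive/tested, or None when nothing was tested."""
    if tested == 0:
        return None
    if not 0 <= positive <= tested:
        raise ValueError("positive count must lie between 0 and the number tested")
    return Fraction(positive, tested)


def derived_rates(c: StageCounts) -> Dict[str, Optional[Fraction]]:
    """Derive rates from raw counts, keeping each denominator explicit."""
    return {
        "infection": proportion(c.infection_positive, c.infection_tested),
        # Denominator: only the mosquitoes assayed for dissemination.
        "disseminated_of_dissemination_tested": proportion(
            c.dissemination_positive, c.dissemination_tested
        ),
        # Denominator: every mosquito assayed for infection.
        "disseminated_of_infection_tested": proportion(
            c.dissemination_positive, c.infection_tested
        ),
    }


# Illustrative, made-up counts (not from any study).
example = StageCounts(
    infection_tested=30,
    infection_positive=20,
    dissemination_tested=20,
    dissemination_positive=10,
)

for name, value in derived_rates(example).items():
    print(name, value)
```

With the same ten disseminated infections, the two dissemination metrics differ only in their denominator, which is why reporting the tested and positive counts lets a reader recompute whichever rate they need.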

Finally, we note that our goal here is only to provide a minimum standard for even the most basic experiment; more specialized designs may require additional columns, and bespoke solutions to those problems may, as they are developed, become future standardized templates. For example, experimental designs focused on coinfection with additional microbes (e.g., Wolbachia; insect-specific viruses, or ISVs) will likely need to reproduce many of the “Virus metadata” variables as a set of “Coinfection metadata” variables, and might also require additional fields. Once standardized, this could be incorporated into a future version of the base template, encouraging more researchers to assay and report the microbes present in laboratory populations, and thereby reducing unquantified heterogeneity among experimental designs.

Results

To illustrate the data standard in practice, we revisit a study by Calvez et al.21 of vector competence for Zika virus in Aedes mosquitoes relevant to Pacific islands. Unlike many studies, which report results in a mix of summary tables and bar or line graphs, Calvez et al. provided very detailed summaries of raw data in their supplementary tables (Table 5). Because they reported results in a structured format, with detailed data on the experimental results, later syntheses have been able to compile their findings alongside those of other studies (e.g., Table 6). However, these aggregate datasets often lack important dimensions of metadata. To illustrate how researchers might report primary results in the future, we present a metadata-complete version of the results from Calvez et al. that meets the minimum data standard we propose, as interpreted from both their Methods and Supplementary Table 1 (Fig. 2)22. In rare cases where information was unavailable (e.g., detailed locality information on the origin of mosquitoes), we use “none” to indicate that no data were provided.

Fig. 2. The same dataset (Table 5) in a metadata-complete format with standardized columns, reporting (a) IDs for experimental group, vector species, and vector metadata; (b) virus species and viral metadata; (c) experimental protocols; and (d) the standard results for infection, dissemination, and transmission, with clear data on diagnostics and denominators.
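As a rough illustration of what "metadata-complete" could mean in practice, the sketch below checks one record for two properties mentioned above: every metadata field is filled in (with an explicit "none" where information is unavailable), and no positive count exceeds its tested count. The column names are hypothetical stand-ins rather than the columns defined in Tables 1–4, and the example values are invented for illustration, not taken from Calvez et al.

```python
from typing import Any, Dict, List

# Hypothetical column names; the actual standard defines its own in Tables 1-4.
REQUIRED_FIELDS = (
    "vector_species",
    "vector_origin_locality",
    "virus_species",
    "experimental_temperature_c",
)
COUNT_PAIRS = (
    ("infection_tested", "infection_positive"),
    ("dissemination_tested", "dissemination_positive"),
    ("transmission_tested", "transmission_positive"),
)


def check_record(record: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems found in one record."""
    problems = []
    for field in REQUIRED_FIELDS:
        value = record.get(field)
        # An explicit "none" is acceptable; a blank or absent cell is not.
        if value is None or str(value).strip() == "":
            problems.append(f"{field}: empty (write 'none' if unavailable)")
    for tested_key, positive_key in COUNT_PAIRS:
        tested = record.get(tested_key)
        positive = record.get(positive_key)
        if tested in (None, "none") or positive in (None, "none"):
            continue  # stage not assayed or not reported
        if int(positive) > int(tested):
            problems.append(f"{positive_key} exceeds {tested_key}")
    return problems


# Invented values for illustration only.
record = {
    "vector_species": "Aedes aegypti",
    "vector_origin_locality": "none",  # locality not reported
    "virus_species": "Zika virus",
    "experimental_temperature_c": 28,
    "infection_tested": 30,
    "infection_positive": 20,
    "dissemination_tested": 20,
    "dissemination_positive": 10,
}

print(check_record(record))
```

Treating "none" as a valid value while rejecting blanks distinguishes "the authors did not report this" from "the curator forgot to fill this in", which is the distinction the example dataset relies on.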

Discussion

Vector competence experiments can have urgent, real-world applications, informing how health decision-makers assess risks like “Are temperate vectors permissive to a tropical outbreak spreading north?”23,24 or “Is an ongoing epizootic likely to spill over into humans?”25,26 However, a lack of standardized data reporting is a barrier to reuse and synthesis in this growing field4. In turn, current efforts largely remain disconnected from one another, without any central repository that immortalizes these studies’ findings. Some studies have begun to bridge this gap: one study compiled a table of results from several dozen studies of Aedes aegypti and various arboviruses (see Table 6)27. More recently, another study compiled a dataset of 68 experimental studies that tested 111 combinations of Australian mosquitoes and arboviruses, and analyzed biological signals in the aggregated data28. These types of efforts are painstaking, requiring substantial manual curation of metadata; hundreds more experiments are reported in the literature, yet remain unsynthesized due to this barrier.

Going forward, adopting a data reporting standard might make it easier for researchers to share data in reusable formats, and – in doing so – would support the creation of a database following this format. This could also help explain or resolve inconsistencies in results across studies, especially between historical studies and newer ones, which often use more sensitive techniques (e.g., qRT-PCR as compared to PFU). Storing these data in aggregate would facilitate formal meta-analysis and create new opportunities for quantitative modeling. It would also have practical benefits for researchers, assisting them in disseminating their findings, and potentially reducing duplication of research. To that point, a recent synthesis found that while some combinations (e.g., Ae. aegypti and Zika virus) are extremely well studied, over 90% of mosquito-virus pairs might never have been tested experimentally. Standardizing data more broadly might help researchers identify and fill these gaps, simultaneously supporting infectious disease preparedness and fundamental research into the science of the host-virus network29.

Data availability

All example data and a blank template for reporting are available on GitHub at github.com/viralemergence/comet-standard.

Code availability

No code is used in this manuscript.

References

1. Reeves, W. C. Epidemiology and Control of Mosquito-borne Arboviruses in California, 1943–1987. (California Mosquito and Vector Control Association, 1990).

2. Tabachnick, W. J. Genetics of Insect Vector Competence for Arboviruses. in Advances in Disease Vector Research (ed. Harris, K. F.) 93–108 (Springer New York, 1994).

3. Hardy, J. L., Houk, E. J., Kramer, L. D. & Reeves, W. C. Intrinsic factors affecting vector competence of mosquitoes for arboviruses. Annu. Rev. Entomol. 28, 229–262 (1983).

4. Azar, S. R. & Weaver, S. C. Vector Competence: What Has Zika Virus Taught Us? Viruses 11, 867 (2019).

5. Poisot, T., Mounce, R. & Gravel, D. Moving toward a sustainable ecological science: don’t let data go to waste! Ideas Ecol. Evol. 6 (2013).

6. Rund, S. S. C., Moise, I. K., Beier, J. C. & Martinez, M. E. Rescuing Troves of Hidden Ecological Data to Tackle Emerging Mosquito-Borne Diseases. Journal of the American Mosquito Control Association 35, 75–83 (2019).

7. Culley, T. M. The frontier of data discoverability: Why we need to share our data. Appl. Plant Sci. 5, 1700111 (2017).

8. Gibb, R. et al. Data Proliferation, Reconciliation, and Synthesis in Viral Ecology. Bioscience 71, 1148–1156 (2021).

9. Renaut, S., Budden, A. E., Gravel, D., Poisot, T. & Peres-Neto, P. Management, Archiving, and Sharing for Biologists and the Role of Research Institutions in the Technology-Oriented Age. Bioscience 68, 400–411 (2018).

10. Dallas, T., Carlson, C., Stephens, P., Ryan, S. J. & Onstad, D. insectDisease: programmatic access to the Ecological Database of the World’s Insect Pathogens. Ecography, in press.

11. Wilkinson, M. D. et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci Data 3, 160018 (2016).

12. Wilkinson, M. D. et al. A design framework and exemplar metrics for FAIRness. Sci Data 5, 180118 (2018).

13. Roche, D. G. et al. Troubleshooting public data archiving: suggestions to increase participation. PLoS Biol. 12, e1001779 (2014).

14. Rund, S. S. C. et al. MIReAD, a minimum information standard for reporting arthropod abundance data. Sci Data 6, 40 (2019).

15. Richards, S. L., Mores, C. N., Lord, C. C. & Tabachnick, W. J. Impact of extrinsic incubation temperature and virus exposure on vector competence of Culex pipiens quinquefasciatus Say (Diptera: Culicidae) for West Nile virus. Vector Borne Zoonotic Dis. 7, 629–636 (2007).

16. Mordecai, E. A. et al. Thermal biology of mosquito-borne disease. Ecol. Lett. 22, 1690–1708 (2019).

17. Richards, S. L., Anderson, S. L., Lord, C. C. & Tabachnick, W. J. Effects of Virus Dose and Extrinsic Incubation Temperature on Vector Competence of Culex nigripalpus (Diptera: Culicidae) for St. Louis Encephalitis Virus. J. Med. Entomol. 49, 1502–1506 (2014).

18. Dodson, B. L., Kramer, L. D. & Rasgon, J. L. Effects of larval rearing temperature on immature development and West Nile virus vector competence of Culex tarsalis. Parasit. Vectors 5, 199 (2012).

19. Kay, B. H., Fanning, I. D. & Mottram, P. Rearing temperature influences flavivirus vector competence of mosquitoes. Med. Vet. Entomol. 3, 415–422 (1989).

20. Christofferson, R. C., Chisenhall, D. M., Wearing, H. J. & Mores, C. N. Chikungunya viral fitness measures within the vector and subsequent transmission potential. PLoS One 9, e110538 (2014).

21. Calvez, E. et al. Zika virus outbreak in the Pacific: Vector competence of regional vectors. PLoS Negl. Trop. Dis. 12, e0006637 (2018).

22. Carlson, C. J. viralemergence/comet-standard: Preprint release (v0.1). Zenodo https://doi.org/10.5281/zenodo.6711614 (2022).

23. Gendernalik, A. et al. American Aedes vexans Mosquitoes are Competent Vectors of Zika Virus. Am. J. Trop. Med. Hyg. 96, 1338–1340 (2017).

24. Blagrove, M. S. C. et al. Potential for Zika virus transmission by mosquitoes in temperate climates. Proc. Biol. Sci. 287, 20200119 (2020).

25. Holicki, C. M. et al. German Culex pipiens biotype molestus and Culex torrentium are vector-competent for Usutu virus. Parasit. Vectors 13, 625 (2020).

26. Goddard, L. B., Roth, A. E., Reisen, W. K. & Scott, T. W. Vector competence of California mosquitoes for West Nile virus. Emerg. Infect. Dis. 8, 1385–1391 (2002).

27. Souza-Neto, J. A., Powell, J. R. & Bonizzoni, M. Aedes aegypti vector competence studies: A review. Infect. Genet. Evol. 67, 191–209 (2019).

28. Kain, M. P. et al. Not all mosquitoes are created equal: A synthesis of vector competence experiments reinforces virus associations of Australian mosquitoes. PLoS Neglected Tropical Diseases https://doi.org/10.1371/journal.pntd.0010768 (in press).

29. Albery, G. F. et al. The science of the host–virus network. Nature Microbiology 6, 1483–1492 (2021).

Acknowledgements

This work was supported by funding to Verena (viralemergence.org) from the U.S. National Science Foundation, including NSF BII 2021909 and NSF BII 2213854. SJR was additionally supported by NSF DBI 2016265 CIBR: VectorByte: A Global Informatics Platform for studying the Ecology of Vector-Borne Diseases.

Author information

Contributions

Conceptualization: C.J.C. and S.J.R.; data collection: V.Y.W.; literature synthesis and first drafting: V.Y.W., C.J.C., S.J.R.; writing and editing: all authors.

Ethics declarations

Competing Interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this license, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Wu, V.Y., Chen, B., Christofferson, R. et al. A minimum data standard for vector competence experiments. Sci Data 9, 634 (2022). https://doi.org/10.1038/s41597-022-01741-4
