Abstract

The design of robots that interact autonomously with the environment and exhibit complex behaviours is an open challenge that can benefit from understanding what makes living beings fit to act in the world. Neuromorphic engineering studies neural computational principles to develop technologies that can provide a computing substrate for building compact and low-power processing systems. We discuss why endowing robots with neuromorphic technologies – from perception to motor control – represents a promising approach for the creation of robots which can seamlessly integrate in society. We present initial attempts in this direction, highlight open challenges, and propose actions required to overcome current limitations.

Opportunities and challenges

Neuromorphic circuits and sensorimotor architectures represent a key enabling technology for the development of a unique generation of autonomous agents endowed with embodied neuromorphic intelligence. We define intelligence as the ability to efficiently interact with the environment, to plan adequate behaviour based on the correct interpretation of sensory signals and internal states, for accomplishing its goals, to learn and predict the effects of its actions, and to continuously adapt to changes in unconstrained scenarios. Ultimately, embodied intelligence allows the robot to interact swiftly with the environment in a wide range of conditions and tasks1. Doing this “efficiently” means performing robust processing of information with minimal use of resources such as power, memory and area, while coping with noise, variability, and uncertainty. These requirements entail finding solutions which improve performance and increase robustness in a way that is different from the standard engineering approach of adding general purpose computing resources, redundancy, and control structures in the system.

Current progress in both machine learning and computational neuroscience is producing impressive results in Artificial Intelligence (AI)2,3,4. However, conventional computing and robotic technologies are still far from performing as well as humans or other animals in tasks that require embodied intelligence1,5. Examples are spatial perception tasks for making long-term navigation plans, coupled with fine motor control tasks that require fast reaction times, and adaptation to external conditions. Within this context, a core requirement for producing intelligent behaviour is the need to process data on multiple timescales. This multi-scale approach is needed to support immediate perception analysis, hierarchical information extraction and memorisation of temporally structured data for life-long learning, adaptation and memory reorganisation. While conventional computing can implement processes on different timescales by means of high-precision (e.g. 32-bit floating point) numerical parameters and long-term storage of data in external memory banks, this results in power consumption figures and area/volume requirements of the corresponding computational substrate that are vastly worse than those of biological neural networks6.

The neuromorphic engineering approach employs mixed-signal analogue/digital hardware that supports the implementation of neural computational primitives inspired by biological intelligence that are radically different from those used in classical von Neumann architectures7. This approach provides energy-efficient and compact solutions that can support the implementation of intelligence and its embodiment on robotic platforms8. However, adopting this approach in robotics requires overcoming several barriers that often discourage the research community from following this promising avenue. The challenges range from the system integration of full-custom neuromorphic chips with sensors, conventional computing modules and motors, to the “programming” of the neural processing systems integrated on neuromorphic chips, up to the need for a principled framework for implementing and combining computational primitives, functions and operations in these devices using neural instead of digital representations.

Both conventional and neuromorphic robotics face the challenge of developing robust and adaptive modules to solve a wide range of tasks especially in applications in human-robot collaboration settings. Both will benefit from a framework designed to combine such modules to deliver a truly autonomous artificial agent. In this perspective, we discuss the current challenges of robotics and neuromorphic technology, and suggest possible research directions for overcoming current roadblocks and enabling the construction of intelligent robotic systems of the future, powered by neuromorphic technology.

Requirements for intelligent robots

Recent developments in machine learning, supported by increasingly powerful and accessible computational resources, have led to impressive results in robotics-specific applications2,3,4. Nevertheless, except for the case of precisely calibrated robots performing repetitive operations in controlled environments, autonomous operation in natural settings is still challenging due to the variability and unpredictability of the dynamic environments in which robots act.

The interaction with uncontrolled environments and human collaborators requires the ability to continuously infer, predict and adapt to the state of the environment, of humans, and of the robotic platform itself, as described in Box 1. Current machine learning, deep networks, and AI methods for robotics are not best suited for these types of scenarios and their use still has critical roadblocks that hinder their full exploitation. These methods typically require high computational (and power) resources: for example deep networks have a very large number of parameters, they need to be trained with very large datasets, and require a large amount of training time, even when using large Graphics Processing Unit (GPU) clusters. The datasets used are mostly disembodied, while ideally, for robotic applications, they would need to be tailored9 and platform specific. This is especially true for end-to-end reinforcement learning, where the dataset depends on the robot plant and actuation. Data acquisition and dataset creation are expensive and time consuming. While virtual simulations can partially improve this aspect, transfer learning techniques do not always solve the problem of adapting pre-trained architectures to real-world applications. Off-line training on large datasets with thousands of parameters also implies the use of high performance, powerful but expensive and power-hungry computing infrastructures. Inference suffers less from this problem and can be run on less demanding, embedded platforms, but at the cost of very limited or no adaptation abilities, thus making the system brittle to real-world, ever-changing scenarios10.

The key requirements in robotics are hence to reduce or possibly eliminate the need for data- and computation-hungry algorithms, making efficient use of sensory data, and to develop solutions for continuous online learning where robots can acquire new knowledge by means of weak- or self-supervision. An important step toward this goal is moving from static (or frame-based) to dynamic (or event-based) computing paradigms, able to generalise and adapt to different application scenarios, users, robots, and goals.

Neuromorphic perception addresses these problems right from the sensory acquisition level. It uses novel bio-inspired sensors that efficiently encode sensory signals with asynchronous event-based strategies11. It also adopts computational primitives that extract information from the events obtained from the sensors, relying on a diverse set of spike-driven computing modules.

Neuromorphic behaviour follows control policies that adapt to different environmental and operating conditions by integrating multiple sensory inputs, using event-based computational primitives to accomplish a desired task.

Both neuromorphic perception and behaviour are based on computational primitives that are derived from models of neural circuits in biological brains and that are therefore very well suited for being implemented using mixed signal analogue/digital circuits12. This offers an efficient technological substrate for neuromorphic perception and actions in robotics. Examples are context-dependent cooperative and competitive information processing, and learning and adaptation at multiple temporal scales13,14.

The development and integration of neuromorphic perception and behaviour using hardware neuromorphic computational primitives has the final goal of designing a robot with end-to-end neuromorphic intelligence as shown in Fig. 1.

In the next sections, we present an overview of the neuromorphic perception, action planning, and cognitive processing strategies, highlighting features and problems of the current state of the art in these domains. We conclude with a road map and a “call for action” to make progress in the field of embodied neuromorphic intelligence.

Neuromorphic perception

Robots typically include many sensors that gather information about the external world, such as cameras, microphones, pressure sensors (for touch), lidars, time-of-flight sensors, temperature sensors, force-torque sensors, or proximity sensors. In conventional setups, all sensors measure their corresponding physical signal and sample it at fixed temporal intervals, irrespective of the state and dynamics of the signal itself. They typically provide a series of static snapshots of the external world. When the signal is static, they keep on transmitting redundant data, but with no additional information, and can miss important samples when the signal changes rapidly, with a trade-off between sampling rate (for capturing dynamic signals) and data load. Conversely, in most neuromorphic sensory systems, the sensed signal is sampled and converted into digital pulses (or “events”, or “spikes”) only when there is a large enough change in the signal itself, using event-based time encoding schemes15,16 such as pulse-density or sigma-delta modulation17. The data acquisition is hence adapted to the signal dynamics, with the event rate increasing for rapidly changing stimuli and decreasing for slowly changing ones. This type of encoding does not lose information18,19,20 and is extremely effective in scenarios with sparse activity. This event representation is key for efficient, fast, robust and highly informative sensing. The technological improvement comprises a reduced need for data transmission, storage and processing, coupled with high temporal resolution – when needed – and low latency. This is extremely useful for real-time robotic applications.
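The change-driven sampling described above can be illustrated with a minimal sketch. It uses a simple send-on-delta (level-crossing) scheme as a stand-in for the pulse-density or sigma-delta encoders mentioned in the text; the function names `encode_events` and `decode_events` and the `threshold` parameter are hypothetical and chosen only for illustration.

```python
def encode_events(samples, threshold):
    """Send-on-delta encoding (illustrative assumption, not a specific sensor model).

    Emits (sample_index, polarity) each time the signal has moved by at least
    `threshold` away from the last transmitted reference level.
    """
    events = []
    reference = samples[0]
    for index, value in enumerate(samples[1:], start=1):
        # A fast change may cross several threshold levels within one sample.
        while value - reference >= threshold:
            reference += threshold
            events.append((index, +1))
        while reference - value >= threshold:
            reference -= threshold
            events.append((index, -1))
    return events


def decode_events(events, initial, threshold, length):
    """Rebuild a staircase approximation of the signal from its events."""
    level = initial
    cursor = 0
    reconstruction = []
    for index in range(length):
        while cursor < len(events) and events[cursor][0] == index:
            level += events[cursor][1] * threshold
            cursor += 1
        reconstruction.append(level)
    return reconstruction


if __name__ == "__main__":
    # Static signal, then a ramp, then static again.
    signal = [0.0] * 6 + [0.5, 1.0, 1.5, 2.0] + [2.0] * 5
    events = encode_events(signal, threshold=0.5)
    print(len(signal), "samples ->", len(events), "events")
    print(decode_events(events, signal[0], 0.5, len(signal)) == signal)
```

In this sketch the static portions of the signal produce no events at all, while the ramp produces one event per threshold crossing, so the event rate follows the signal dynamics. The reconstruction error of such a scheme stays below the chosen threshold.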

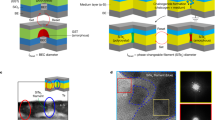

Starting from the design of motion sensors and transient imagers21, the first event-driven vision sensors with enough resolution, low noise and sensor mismatch – the Dynamic Vision Sensor (DVS)22 and Asynchronous Temporal Imaging Sensor (ATIS)23 – triggered the development of diverse algorithms for event-driven visual processing and their integration on robotic platforms24. These sensor information encoding methods break decades of static frame encoding as used by conventional cameras. Their novelty calls for the development of a new principled approach to event-driven perception. The event-driven implementation of machine vision approaches vastly outperforms conventional algorithmic solutions in specific tasks such as fast object tracking25, optical flow26,27,28 or stereo29 and Simultaneous Localisation and Mapping (SLAM)30. However, these algorithms and their hardware implementations still suffer from task specificity and limited adaptability.

These event-driven sensory-processing modules will progressively substitute their frame-based counterparts in robotic pipelines (see Fig. 2). However, despite the promising results, the uptake of event-driven sensing in robotics is still difficult due to the mindset change that is required to work with streams of events, instead of static frames. Furthermore, this new data representation calls for the development of new ad hoc interfaces, communication protocols (described in Box 2 and Fig. 3) and software libraries for handling events. Open source JAVA31 and C++32,33 libraries have already been developed, also within two of the main robotic middlewares – ROS and YARP – but they require additional contributions from a large community to grow and reach the maturity needed for successful adoption in robotics. Eventually, a hybrid approach that combines frame-based and event-driven modules, and that fosters the growth of the community revolving around it, could favour a more widespread adoption in the robotics domain. However, this hybrid neuromorphic/traditional design strategy would not fully exploit all the advantages of the neuromorphic paradigm.

a the iCub robot (picture ©IIT author Duilio Farina) is a platform for integrating neuromorphic sensors. Magenta boxes show neuromorphic sensors that acquire continuous physical signals and encode them in spike trains (vision, audition, touch). All other sensors, that monitor the state of the robot and of its collaborators, rely on clocked acquisition (green boxes), that can be converted to spike encoding by means of Field Programmable Gate Arrays (FPGAs) or sub-threshold mixed-mode devices. b The output of event-driven sensors can be sent to Spiking Neural Networks (SNNs) (with learning and recurrent connections) for processing. VISION box in (a): Event-driven vision sensors produce “streams of events” (green for light to dark changes, magenta for dark to light changes). The trajectory of a bouncing ball can be observed continuously over space, with microsecond temporal resolution (black rectangles represent sampling of a 30 fps camera). Table: Event-driven vision sensors evolved from the Dynamic Vision Sensor (DVS) with only “change detecting” pixels - to higher resolution versions with absolute light intensity measurements. The Dynamic and Active pixel VIsion Sensor (DAVIS)131 acquires intensity frames at low frame rate simultaneously to the “change detection” (with minor cross talk and artefacts on the event stream during the frame trigger). The Asynchronous Temporal Imaging Sensor (ATIS)132 samples absolute light intensity only for those pixels that detect a change. The CeleX5 offers either frame-based or event-driven readout (with a few milliseconds delay between the two, resulting in loss of event stream data during a frame acquisition). Similar to the DAVIS, the Rino3 captures events and intensity frames simultaneously, however, it employs a synchronised readout architecture as opposed to the asynchronous readout typically found in other event-driven sensors. The ultimate solution combining frames and events is yet to be found. 
Merging two stand-alone sensors in a single optical setup poses severe challenges in terms of the development of optics that trade-off luminosity with bulkiness. Merging two types of acquisition on the same sensor limits the fill-in factor and increases noise and interference between frames and events.

Working towards the implementation of robots with full neuromorphic vision, the neuromorphic and computational neuroscience communities have started in-depth work on perceptive modules for stereo vision34 and vergence35, attention36, and object recognition37. These algorithms can run on neuromorphic computing substrates for exploiting efficiency, adaptability and low latency.

The roadmap of neuromorphic sensor development started with vision, loosely inspired by biological photo-transduction, and audition, inspired by the cochlea, and only later progressed to touch and olfaction. The event-driven acquisition principle is also extremely valuable when applied to other sensory modalities, especially those characterised by temporally and spatially localised activation, such as tactile, auditory, and force-torque modalities; those requiring extremely low latency for closed-loop control, such as encoders and Inertial Measurement Units (IMUs); non-biological sensors that augment the ability to monitor the environment, such as lidar, time-of-flight, 3D, and proximity sensors; and sensors that help the robot to monitor the state of human beings, e.g. Electromyography (EMG), Electroencephalography (EEG), centre of mass, etc.38.

Available cochlear implementations rely either on sub-threshold mixed-mode silicon devices39,40 (as do the vision sensors), or on Field Programmable Gate Arrays (FPGAs)41. They have been applied mostly to sound source localisation and auditory attention, based on the extremely precise temporal footprint of left and right signals42,43, and, lately, on audio-visual speech recognition44. Their integration on robots, however, is still very limited: as in event-driven vision, they require application development tools, and a way in which they can be exploited in speech processing.

The problem of tactile perception is further complicated by three factors. First, by the sheer number of available different physical transducers. Second, by the difficulty in interfacing the transducers to silicon readout devices. This is unlike the situation in vision, where silicon photo-diodes can capture light and are physically part of the readout device. Third, there are the engineering challenges in integrating tactile sensors on robotic platforms, comprising miniaturisation, and design and implementation on flexible and durable materials with good mechanical properties, wiring, and robustness. Very few native neuromorphic tactile sensors have been developed so far45,46,47,48 and none has been stably integrated as part of a robotic platform, besides lab prototypes. While waiting for these sensors to be integrated on robots, existing integrated clock-based sensing can be used to support the development of event-driven robotics applications. In this “soft” neuromorphic approach, the front end clocked samples are converted to event-based representation by means of algorithms implemented in software49,50,51 or embedded on Digital Signal Processors (DSPs)52 or FPGAs53,54. The same approach is valuable also in other sensory modalities, such as proprioception55,56, to support the development of event-driven algorithms and validate their use in robotic applications. However, it is not optimal in terms of size, power, and latency.

For all sensory modalities, the underlying neuromorphic principle is that of “change detection”, a high level abstraction that captures the essence of biological sensory encoding. It is also a well defined operation that allows algorithms and methods to extract information from data streams15 to be formalised. Better understanding the sophisticated neural encoding of the properties of the sensed signal and their relation to behavioural decisions of the subject57 – and their implementation in the design of novel neuromorphic sensors – would enhance the capability of artificial agents to extract relevant information and take appropriate decisions.

Neuromorphic behaviour

To interact efficiently with the environment, robots need to choose the most appropriate behaviour, relying on attention allocation, anticipation, reasoning about other agents, and planning the correct sequence of actions and movements based on their understanding of the external world and of their own state. Biological intelligent behaviour couples the ability to perform such high-level tasks with the estimation, from experience, of the consequences of future events for generating goal-oriented actions.

A hypothesis for how intelligent behaviour is carried out by the mammalian nervous system is the existence of a finite set of computational primitives used throughout the cerebral cortex. Computational primitives are building blocks that can be assembled to extract information from multiple sensory modalities and coordinate a complex set of motor actions that depend on the goal of the agent and on the contingent scenario (e.g. presence of obstacles, human collaborators, tools).

The choice of the most appropriate behaviour, or action, in the neuromorphic domain is currently limited to proof-of-concept models. Box 3 reviews the state-of-the-art of robots with sensing and processing implemented on neuromorphic devices. Most implementations consist of a single bi-stable network discriminating between ambiguous external stimuli58 and selecting one of two possible actions. Dynamic Field Theory (DFT) is the reference framework for modelling such networks, where the basic computational element is a Dynamic Neural Field (DNF)59, computationally equivalent to a soft Winner-Take-All (WTA). As described in Box 4, WTA networks are one of the core computational primitives that can be implemented in neuromorphic hardware. Therefore, DNF represents an ideal framework which can translate intelligent models into feasible implementations in a language compatible with neuromorphic architectures60. The current challenge in such systems is to develop a multi-area and multi-task spiking neuron model of the cortical areas involved in decision making under uncertainty.
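For reference, a DNF is commonly written in the DFT literature in the following form (after Amari). The notation is the standard one from that literature and is not taken from this article:

```latex
\tau \frac{\partial u(x,t)}{\partial t}
  = -u(x,t) + h + s(x,t)
  + \int w(x - x')\, f\big(u(x',t)\big)\, \mathrm{d}x'
```

Here $u(x,t)$ is the activation of the field at position $x$ along the represented dimension, $\tau$ is the time constant, $h < 0$ is the resting level, $s(x,t)$ is the external input, $w$ is the lateral interaction kernel (typically local excitation with broader inhibition), and $f$ is a sigmoidal output non-linearity. The combination of local excitation and surround inhibition is what makes the field behave like a soft WTA.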

Different branches of robotics have tackled this challenge by exploring biologically inspired embodied brain architectures to implement higher-level functions61 to provide robots with skills to interact with the real world in real-time. These architectures are required to learn sensorimotor skills through interaction with their environment and via incremental developmental stages62,63.

Once the appropriate behaviour is selected, it has to be translated into a combination of actions, or dynamic motor primitives, to generate rich sets of complex movements and switching behaviours, for example switching between different rhythmic motions such as walking, generated via a Central Pattern Generator (CPG), and swimming64. The stability and capability of these systems in generating diverse actions is formally proven65. This motivates their adoption and further progress to biological plausibility with spiking implementations66. As a result, robots benefit from the biology of animal locomotor skills and can be used as tools for testing animal locomotion and motor control models and how they are affected by sensory feedback67.

Despite taking its inspiration from neural computation, robotics inspired by neural systems has only recently started to use Spiking Neural Networks (SNNs) and biologically plausible sensory input, and the corresponding computational substrate that can support SNNs and learning. Neuromorphic technologies move one step further in this direction. In recent years there has been substantial progress in developing large-scale brain-inspired computing technologies68,69,70,71 that allow the exploration of the computational role of different neural processing primitives to build intelligent systems72,73,74. Although knowledge of the neural activity underlying those functions is increasing, we are not yet able to explicitly and quantitatively connect intelligence to neural architectures and activity. This hinders the configuration of large systems to achieve effective behaviour and action planning. An example of an attempt to develop tools to use spiking neurons as a basis to implement mathematical functions is the “Neural Engineering Framework (NEF)”75, which has been successfully deployed to implement adaptive motor control for a robotic arm76. The NEF formalisation allows the use of neurons as computational units, implementing standard control theory, but overlooks the brain architectures and canonical circuits that implement the same functionalities.

Current research on motor control implementation based on brain computational primitives mainly focuses on the translation of well-established robotic controllers into SNNs that run on neuromorphic devices56,77,78,79. Although the results show the potential of this technology, these implementations still need to follow a hybrid approach in which neuromorphic modules have to be interfaced to standard robotics ones. In the example cited above, motors are driven via embedded controllers with proprietary algorithms and closed/inaccessible electronic components. There is therefore the need to perform spike encoding of continuous sensory signals measured by classical sensors, and to perform decoding from spike trains to signals compatible with classical motor controllers. This inherently limits the performance of hybrid systems that would benefit from being end-to-end event-based. In this respect, the performance of the standard motor controller and its spiking counterpart cannot be benchmarked on the same robotic task, because of the system-level interfacing issues. To make inroads toward the design of fully neuromorphic end-to-end robotic systems, it is essential to design new event-based sensors (e.g. IMU, encoders, pressure) to complement the ones already available (e.g. audio, video, touch). In addition, motors or actuators should be directly controlled by spike trains, moving from Pulse Width Modulation (PWM) to Pulse Frequency Modulation (PFM)80,81,82. Furthermore, the end-to-end neuromorphic robotic system could benefit from substituting the current basic methods used in robotics (e.g. Model Predictive Control (MPC), Proportional Integral Derivative (PID)) with more biologically plausible ones (e.g. motorneuron – Golgi – muscle spindle architectures83) that can be directly implemented by the spiking neural network circuits present on neuromorphic processors. 
The drawback of this approach, however, lies in the limited resolution and noisy computing substrate used in these processors, as well as in the lack of an established control theory that uses the linear and non-linear operators present in spiking neural networks (e.g. integration, adaptation, rectification). The proposed biologically inspired control strategies would probably benefit from the use of bio-inspired actuators, such as tendons48, agonist-antagonist muscles84, soft actuators85. While offering more compliant behaviour, these introduce non-linearities that are harder to control with traditional approaches, but match the intrinsic properties of biological actuation, driven by networks of neurons and synapses.
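The difference between PWM and PFM can be made concrete with a small sketch. In PWM the pulse rate is fixed and the pulse width carries the command; in PFM the pulses have a fixed width and their rate carries the command, which is the representation a spike train already provides. The code below is only an illustration under assumed names (`pfm_encode`, `pfm_decode`, `max_rate_hz`) and does not model any specific motor driver.

```python
def pfm_encode(commands, dt, max_rate_hz):
    """Turn normalised commands in [0, 1] into a train of unit pulses.

    An accumulator integrates the commanded rate; each time it reaches 1
    a pulse is emitted (integrate-and-fire style). Illustrative assumption:
    at most one pulse per time step, so max_rate_hz * dt must not exceed 1.
    """
    if max_rate_hz * dt > 1.0:
        raise ValueError("time step too coarse for the requested pulse rate")
    pulses = []
    phase = 0.0
    for command in commands:
        command = min(max(command, 0.0), 1.0)
        phase += command * max_rate_hz * dt
        if phase >= 1.0:
            phase -= 1.0
            pulses.append(1)
        else:
            pulses.append(0)
    return pulses


def pfm_decode(pulses, dt, max_rate_hz):
    """Estimate the mean normalised command from the observed pulse rate."""
    duration = len(pulses) * dt
    return sum(pulses) / (duration * max_rate_hz)


if __name__ == "__main__":
    dt = 1.0 / 1024.0          # time step in seconds
    train = pfm_encode([0.5] * 1024, dt, max_rate_hz=128)
    print(sum(train), "pulses in one second")
    print("decoded command:", pfm_decode(train, dt, max_rate_hz=128))
```

The decoder here averages over the whole window, which trades latency for precision. A real actuator would effectively low-pass filter the pulse train through its own dynamics.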

Computational primitives for intelligent perception and behaviour

In addition to the adoption of neuromorphic sensors, the implementation of fully end-to-end neuromorphic sensorimotor systems requires fundamental changes in the way signals are processed and computation is carried out. In particular, it requires replacing the processing that is typically done using standard computing platforms, such as microcontrollers, DSPs, or FPGA devices, with computational primitives that can be implemented using neuromorphic processing systems. That is to say, computational primitives implemented by populations of spiking neurons that act on the signals obtained from both internal and external sensors, that learn to predict their statistics, that process and transform the continuous streams of sensory inputs into discrete symbols, and that represent internal states and goals. By supporting these computational primitives in the neuromorphic hardware substrate, such an architecture would be capable of carrying out sensing, planning and prediction. It would be able to produce state-dependent decisions and motor commands to drive robots and generate autonomous behaviour. This approach would allow the integration of multiple neuromorphic sensory-processing systems distributed and embedded in the robot body, closing the loop between sensing and action in real-time, with adaptive, low-latency, and low power consumption features.

Realising a hardware substrate that emulates the physics of biological neural processing systems and using it to implement these computational primitives can be considered as a way to implement embodied intelligence. In this respect one could consider these hardware computational primitives as “elements of cognition”86, which could bridge the research done on embodied neuromorphic intelligence with that of cognitive robotics87.

Several examples of neuromorphic processing systems that support the implementation of brain-inspired computational primitives by emulating the dynamics of real neurons for signal processing and computation have already been proposed42,69,88. Rather than using serial, bit-precise, clocked, time-multiplexed representations, these systems make use of massively parallel in-memory computing analogue circuits. Recently, there has also been substantial progress in developing large-scale brain-inspired computing technologies that follow this parallel in-memory computing strategy, in which silicon circuits can be slowed down to the time-scales relevant for robotic applications69,71,89. By implementing computational primitives through the dynamics of multiple parallel arrays of neuromorphic analogue circuits, it is possible to bypass the need to use clocked, time-multiplexed circuits that decouple physical time from processing time, and to avoid the infamous von Neumann bottleneck problem7,8,90, which requires shuffling data back and forth at very high clock rates between external memory and the time-multiplexed processing unit. Although the neuromorphic approach significantly reduces power consumption, it requires circuits and processing elements that can integrate information over temporal scales that are well matched to those of the signals that are being sensed. For example, the control of robotic joint movements, the sensing of voice commands, or the tracking of visual targets or human gestures would require the synapse and neural circuits to have time constants in the range of 5 ms to 500 ms. In addition to the technological challenge of implementing compact and reliable circuit elements that can have such long-lasting memory traces, there is an important theoretical challenge for understanding how to use such non-linear dynamical systems to carry out desired state-dependent computations. 
Unlike in conventional computing approaches, the equivalent of a “compiler” tool that allows the mapping of a desired complex computation or behaviour into a “machine-code”-level configuration of basic computing units such as dynamic synapses or Integrate-and-Fire neurons is still lacking. One way to tackle this challenge is to identify a set of brain-inspired neural primitives that are compatible with the features and limitations of the neuromorphic circuits used to implement them12,91,92,93,94 and that can be combined and composed in a modular way to achieve the desired high-level computational primitive functionality. Box 4 lists a proposed dictionary of such primitives.

In addition, robotic systems must also treat sensors and actuators as computational primitives that shape the encoding of the sensory signal and of the movements depending on their physical shape (e.g. composite eyes versus retina-like foveated or uniform vision sensors, brushless and DC motors versus soft actuators), location (e.g. binocular versus monocular vision, non-uniform distribution of tactile sensors, and location of the motor with respect to the body part that has to be moved) and local computation (e.g. feature extraction in sensors or low-level closed-loop control).

Based on the required outcome, neural circuits can be endowed with additional properties that implement useful non-linearities, such as Spike Frequency Adaptation (SFA) or refractory period settings. These building blocks can be further combined to produce computational primitives such as soft WTA networks95,96,97,98,99, neural oscillators100, or state-dependent computing networks7,12,101, to recognise or generate sequences of actions8,78,102,103,104,105,106,107. By combining these with sensing and actuation neural primitives, they can produce rich behaviour useful in robotics.
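The effect of these two non-linearities can be seen in a minimal software sketch of a leaky Integrate-and-Fire neuron with a refractory period and Spike Frequency Adaptation. This is a discrete-time simulation with illustrative parameter values, not a model of any particular neuromorphic circuit; the function name `simulate_lif` and all parameter names are assumptions made for the example.

```python
def simulate_lif(input_current, dt=1e-3, tau_mem=20e-3, threshold=1.0,
                 refractory=2e-3, tau_adapt=200e-3, adapt_step=0.0):
    """Leaky integrate-and-fire neuron, forward-Euler integration.

    adapt_step > 0 enables spike frequency adaptation: every spike increases
    an adaptation variable that is subtracted from the input and decays with
    time constant tau_adapt. Returns the list of spike times in seconds.
    """
    membrane = 0.0
    adaptation = 0.0
    refractory_left = 0.0
    spike_times = []
    for step, current in enumerate(input_current):
        # The adaptation variable decays continuously, also while refractory.
        adaptation -= dt * adaptation / tau_adapt
        if refractory_left > 0.0:
            # The neuron ignores its input during the refractory period.
            refractory_left -= dt
            continue
        membrane += dt * (-membrane + current - adaptation) / tau_mem
        if membrane >= threshold:
            spike_times.append(step * dt)
            membrane = 0.0
            adaptation += adapt_step
            refractory_left = refractory
    return spike_times


if __name__ == "__main__":
    constant_input = [2.0] * 1000  # one second of constant drive
    plain = simulate_lif(constant_input)
    adapted = simulate_lif(constant_input, adapt_step=0.3)
    print("spikes without adaptation:", len(plain))
    print("spikes with adaptation:   ", len(adapted))
```

With a constant input, the neuron without adaptation fires at a regular rate, whereas with adaptation the inter-spike intervals lengthen over time, so the neuron responds most strongly to the onset of a stimulus.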

WTA networks

WTA networks represent a common “canonical” circuit motif, found throughout multiple parts of the neocortex108,109. Theoretical studies have shown that such networks provide elementary units of computation that can stabilise and de-noise the neuronal dynamics108,110,111. These features have been validated with neuromorphic SNN implementations to generate robust behaviour in closed sensorimotor loops97,101,112,113,114. WTA networks composed of n units can be used to represent n-valued variables with population coding. In this way it is possible to couple multiple WTA networks with each other and implement networks of relations among different variables115,116 (e.g. to represent the relationship between a given motor command value and the desired joint angle78). As WTA networks can create sustained activation to keep a neuronal state active even after the input to the network is removed, they provide a model of working memory100,102,117,118. WTA dynamics create stable attractors and are computationally equivalent to Dynamic Neural Fields (DNFs), which enable behaviour learning in a closed sensorimotor loop in which the sensory input changes continually as the agent generates action. In order to learn a mapping between a sensory state and its consequences, or a precondition and an action, the sensory state before the action needs to be stored in a neuronal representation. This can be achieved by creating a reverberating activation in a neuronal population that can be sustained for the duration of the action even if the initial input ceases. The sustained activity can be used to update sensorimotor mappings when a rewarding or punishing signal is obtained60,119. Finally, these attractor-based representations can bridge the neuron circuit dynamics with the robot behavioural time scales in a robust way8,118,120, and be exploited to develop more complex embedded neuromorphic intelligent systems.
However, to reach this goal, it is necessary to develop higher-level control strategies and theoretical frameworks that are compatible with mixed-signal neuromorphic hardware and that have compositionality and modularity properties.
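The winner selection and working-memory behaviour described in this section can be sketched with a small rate-based WTA model. The sketch assumes a saturating-linear activation, self-excitation stronger than the leak, and uniform mutual inhibition, integrated with the Euler method; it is a simplified software abstraction with hypothetical parameter values, not a spiking implementation or a model of any cited hardware.

```python
def simulate_wta(inputs, steps, x0=None, w_exc=1.5, w_inh=1.0, tau_ms=10.0, dt_ms=1.0):
    """Run a rate-based soft WTA network for a number of steps and return the final activities.

    Each unit excites itself with weight w_exc and inhibits every other unit
    with weight w_inh. Activities are bounded to the range [0, 1].
    """
    n = len(inputs)
    x = list(x0) if x0 is not None else [0.0] * n
    for _ in range(steps):
        total = sum(x)
        new_x = []
        for i in range(n):
            drive = w_exc * x[i] - w_inh * (total - x[i]) + inputs[i]
            target = min(1.0, max(0.0, drive))  # saturating-linear activation
            new_x.append(x[i] + dt_ms / tau_ms * (target - x[i]))
        x = new_x
    return x


# Phase 1: present graded inputs; the unit with the largest input should win.
with_input = simulate_wta([0.3, 0.5, 0.4, 0.2], steps=200)
# Phase 2: remove the input; self-excitation should sustain the winner.
after_removal = simulate_wta([0.0, 0.0, 0.0, 0.0], steps=200, x0=with_input)
```

With these assumed weights, the unit receiving the largest input saturates while the others are suppressed, and the winning unit remains active after the input is removed because its self-excitation alone keeps it above the level needed to stay saturated. This persistence is the attractor property that the text relates to working memory.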

State-dependent intelligent processing

State-dependent intelligent processing is a computational framework that can support the development of more complex neuromorphic intelligent systems. In biology, real neural networks perform state-dependent computations using WTA-type working memory structures maintained by recurrent excitation and modulated by feedback inhibition121,122,123,124,125,126. Specifically, modelling studies of state-dependent processing in cortical networks have shown how coupled WTA networks can reproduce the computational properties of Finite State Machines (FSMs)101,123,127. An FSM is an abstract computing machine that can be in only one of its n possible states, and that can transition between states upon receiving an appropriate external input. True FSMs can be robustly implemented in digital computers that can rely on bit-precise encoding. However, the corresponding neural implementations built using neuromorphic SNN architectures are affected by noise and variability, very much like their biological counterparts. In addition to exploiting the stabilising properties of WTA networks, the solution that neuromorphic engineers found to implement robust and reliable FSM state-dependent processing with noisy silicon neuron circuits is to resort to dis-inhibition mechanisms analogous to the ones found in many brain areas128,129. These hardware state-dependent processing SNNs have been denoted as Neural State Machines (NSMs)101,105. They represent a primitive structure for implementing state-dependent and context-dependent computation in spiking neural networks. Multiple NSMs can interact with each other in a modular way and can be used as building blocks to construct complex cognitive computations in neuromorphic agents105,130.
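For reference, the abstract FSM defined above can be written as a table-driven program on a conventional, bit-precise computer. The sketch below shows only this idealised machine, which NSMs aim to reproduce with noisy spiking circuits; it is not an NSM. The state names, input symbols and the rule that unknown inputs leave the state unchanged are hypothetical choices made for illustration.

```python
class FiniteStateMachine:
    """A machine that is in exactly one state and changes state on external inputs."""

    def __init__(self, transitions, initial_state):
        # transitions maps (current_state, input_symbol) to the next state
        self.transitions = dict(transitions)
        self.state = initial_state

    def step(self, symbol):
        """Apply one input; inputs with no matching transition leave the state unchanged."""
        self.state = self.transitions.get((self.state, symbol), self.state)
        return self.state

    def run(self, symbols):
        """Apply a sequence of inputs and return the state visited after each one."""
        return [self.step(symbol) for symbol in symbols]


# Hypothetical behaviour states and sensory events for a reaching task.
TRANSITIONS = {
    ("search", "target_seen"): "approach",
    ("approach", "target_lost"): "search",
    ("approach", "contact"): "grasp",
}

fsm = FiniteStateMachine(TRANSITIONS, initial_state="search")
visited = fsm.run(["contact", "target_seen", "target_lost", "target_seen", "contact"])
```

The same input, here `"contact"`, is ignored in the `"search"` state but triggers a transition in the `"approach"` state, which is the state-dependent behaviour that coupled WTA networks are reported to reproduce.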

Neuromorphic sensors, computational substrates and actuators are combined to build autonomous agents endowed with embodied intelligence, by means of brain-like asynchronous, digital communication. Existing agents range from monolithic implementations, in which a sensor is directly connected to a neuromorphic computing device, to modular implementations, in which distributed sensors and processing devices are connected by means of a middleware abstraction layer, trading off compactness and task-specific implementations against flexibility. Both approaches would benefit from the standardisation of the communication protocol (discussed in Box 2).

Outlook

Embodied neuromorphic intelligent agents are on their way. They promise to interact more smoothly with the environment and with humans by incorporating brain-inspired computing methods. They are being designed to take autonomous decisions and execute corresponding actions in a way that takes into account many different sources of information, reducing uncertainty and ambiguity from perception, and continuously learning and adapting to changing conditions.

In general, the overall system design of traditional robotics and even current neuromorphic approaches is still far from any biological inspiration. A real breakthrough in the field will happen if the whole system design is based on biological computational principles, with a tight interplay between the estimation of the surroundings and the robot’s own state, and decision making, planning and action. Scaling to more complex tasks is still an open challenge and requires further development of perception and behaviour, and further co-design of computational primitives that can be naturally mapped onto neuromorphic computing platforms and supported by the physics of their electronic components. At the system level, there is still a lack of understanding of how to integrate all sensing and computing components in a coherent system that forms a stable perception useful for behaviour. Additionally, the field lacks a notion of how to exploit the intricate non-linear properties of biological neural processing systems, for example to integrate adaptation and learning at different temporal scales. This holds both at the theoretical/algorithmic level and at the hardware level, where novel technologies could be exploited to meet such requirements.

The roadmap towards the success of neuromorphic intelligent agents encompasses the growth of the neuromorphic community and its cross-fertilisation with other research communities, as discussed in Boxes 5 and 6.

The characteristics of neuromorphic computing technology have so far been demonstrated by proof-of-concept applications. It nevertheless holds the promise to enable the construction of power-efficient and compact intelligent robotic systems, capable of perceiving, acting, and learning in challenging real-world environments. A number of issues need to be addressed before this technology is mature enough to solve complex robotic tasks and can enter mainstream robotics. In the short term, it will be imperative to develop user-friendly tools for the integration and programming of neuromorphic devices to enable a large community of users and the adoption of the neuromorphic approach by roboticists. The path to follow can be similar to the one adopted by robotics, with open source platforms and development of user-friendly middleware. Similarly, the community should rely on a common set of guiding principles for the development of intelligence using neural primitives. New information and signal processing theories should be developed following these principles, also for the design of asynchronous, event-based processing in neuromorphic hardware and neuronal encoding circuits. This should be done through the cross-fertilisation of the neuromorphic community with computational neuroscience and information theory; furthermore, interaction with the materials and (soft-)robotics communities will better define the application domain and the specific problems for which neuromorphic approaches can make a difference. Eventually, the application of a neuromorphic approach to robotics will find solutions that are applicable in other domains, such as smart spaces, automotive, prosthetics, rehabilitation, and brain-machine interfaces, where different types of signals may need to be interpreted to make behavioural decisions and generate actions in real time.

Change history

11 March 2022

A Correction to this paper has been published: https://doi.org/10.1038/s41467-022-29119-5

References

Barrett, L. Beyond the Brain: How Body and Environment Shape Animal and Human Minds (Princeton University Press, 2011). https://doi.org/10.1515/9781400838349. Barrett provides an in-depth overview of what shapes the intelligent behaviour of humans and animals, which exploits not only their brains but also their bodies and environment. She describes how physical structure contributes to cognition, and how it employs materials and resources in specific environments.

LeCun, Y., Bengio, Y. & Hinton, G. Deep learning. Nature 521, 436–444 (2015).

Schmidhuber, J. Deep learning in neural networks: an overview. Neural Netw. 61, 85–117 (2015).

Sejnowski, T. J. The unreasonable effectiveness of deep learning in artificial intelligence. Proc. Natl Acad. Sci. (2020). https://www.pnas.org/content/early/2020/01/23/1907373117.full.pdf.

Jordan, M. I. Artificial intelligence—the revolution hasn’t happened yet. Harvard Data Sci. Rev. 1 (2019-07-01). https://hdsr.mitpress.mit.edu/pub/wot7mkc1.

Silver, D. et al. Mastering the game of go with deep neural networks and tree search. Nature 529, 484–489 (2016).

Indiveri, G. & Liu, S.-C. Memory and information processing in neuromorphic systems. Proc. IEEE 103, 1379–1397 (2015).

Indiveri, G. & Sandamirskaya, Y. The importance of space and time for signal processing in neuromorphic agents. IEEE Signal Process. Mag. 36, 16–28 (2019).

Pasquale, G., Ciliberto, C., Odone, F., Rosasco, L. & Natale, L. Are we done with object recognition? the icub robot’s perspective. Robot. Autonomous Syst. 112, 260–281 (2019).

Hadsell, R., Rao, D., Rusu, A. & Pascanu, R. Embracing change: continual learning in deep neural networks. Trends Cogn. Sci. 24, 1028–1040 (2020).

Liu, S.-C. & Delbruck, T. Neuromorphic sensory systems. Curr. Opin. Neurobiol. 20, 288–295 (2010).

Chicca, E., Stefanini, F., Bartolozzi, C. & Indiveri, G. Neuromorphic electronic circuits for building autonomous cognitive systems. Proc. IEEE 102(September), 1367–1388 (2014). A description of neuromorphic computational primitives, their implementation in mixed-mode subthreshold CMOS circuits, and their computational relevance in supporting cognitive functions.

Qiao, N. et al. A reconfigurable on-line learning spiking neuromorphic processor comprising 256 neurons and 128k synapses. Front. Neurosci. 9, 141 (2015).

Qiao, N., Bartolozzi, C. & Indiveri, G. An ultralow leakage synaptic scaling homeostatic plasticity circuit with configurable time scales up to 100 ks. IEEE Transactions on Biomedical Circuits and Systems 11, 1271–1277 (2017).

Lazar, A. A. & Tóth, L. T. Perfect recovery and sensitivity analysis of time encoded bandlimited signals. IEEE Transactions on Circuits and Systems I: Regular Papers. 51, 2060–2073 (2004).

Adam, K., Scholefield, A. & Vetterli, M. Sampling and reconstruction of bandlimited signals with multi-channel time encoding. IEEE Transactions on Signal Processing 68, 1105–1119 (2020).

Singh Alvarado, A., Rastogi, M., Harris, J. G. & Príncipe, J. C. The integrate-and-fire sampler: a special type of asynchronous σ-δ modulator. In 2011 IEEE International Symposium of Circuits and Systems (ISCAS), 2031–2034 (2011).

Akolkar, H. et al. What can neuromorphic event-driven precise timing add to spike-based pattern recognition? Neural Comput. 27, 561–593 (2015).

Bartolozzi, C. et al. Event-driven encoding of off-the-shelf tactile sensors for compression and latency optimisation for robotic skin. In 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 166–173 (2017-09).

Scheerlinck, C. et al. Fast image reconstruction with an event camera. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) (2020-03).

Kramer, J. An integrated optical transient sensor. IEEE Trans. Circuits Syst. II: Analog Digital Signal Process. 49, 612–628 (2002).

Lichtsteiner, P., Posch, C. & Delbruck, T. A 128x128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE J. Solid-State Circuits 43, 566–576 (2008). This paper describes the first event-driven sensor used outside the designer’s lab. The DVS usability (robust hardware and friendly open source software) pushed the field of neuromorphic vision.

Posch, C., Matolin, D. & Wohlgenannt, R. A QVGA 143 dB dynamic range frame-free PWM image sensor with lossless pixel-level video compression and time-domain CDS. IEEE J. Solid-State Circuits 46, 259–275 (2011).

Gallego, G. et al. Event-based vision: a survey. IEEE Transactions on Pattern Analysis and Machine Intelligence 44, 154–180 (2020). Comprehensive review of the plethora of different approaches used in event-driven vision, from adapting computer vision and DL, to biologically inspired vision.

Glover, A., Vasco, V. & Bartolozzi, C. A controlled-delay event camera framework for on-line robotics. In 2018 IEEE International Conference on Robotics and Automation (2018-05).

Benosman, R., Ieng, S.-H., Clercq, C., Bartolozzi, C. & Srinivasan, M. Asynchronous frameless event-based optical flow. Neural Netw. 27, 32–37 (2012).

Gallego, G., Rebecq, H. & Scaramuzza, D. A unifying contrast maximization framework for event cameras, with applications to motion, depth, and optical flow estimation. In IEEE Int. Conf. Comput. Vis. Pattern Recog. (CVPR), vol. 1 (2018).

Zhu, A. Z., Yuan, L., Chaney, K. & Daniilidis, K. Unsupervised event-based learning of optical flow, depth, and egomotion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2019-06).

Zhou, Y., Gallego, G. & Shen, S. Event-based stereo visual odometry. IEEE Transactions on Robotics 37, 1–18 (2021).

Vidal, A. R., Rebecq, H., Horstschaefer, T. & Scaramuzza, D. Ultimate SLAM? combining events, images, and imu for robust visual SLAM in hdr and high-speed scenarios. IEEE Robot. Autom. Lett. 3, 994–1001 (2018).

Delbruck, T. Jaer open source project. http://jaerproject.org (2007).

Glover, A., Vasco, V., Iacono, M. & Bartolozzi, C. The event-driven software library for yarp with algorithms and icub applications. Front. Robot. AI. 4, 73 (2017).

Mueggler, E., Huber, B. & Scaramuzza, D. Event-based, 6-DOF pose tracking for high-speed maneuvers. In Intelligent Robots and Systems (IROS), 2014 IEEE/RSJ International Conference on, 2761–2768 (IEEE, 2014).

Osswald, M., Ieng, S.-H., Benosman, R. & Indiveri, G. A spiking neural network model of 3D perception for event-based neuromorphic stereo vision systems. Sci. Rep. 7, 1–11 (2017).

Vasco, V. et al. Vergence control with a neuromorphic icub. In IEEE-RAS International Conference on Humanoid Robots (Humanoids 2016), 732–738 (2016-11).

Iacono, M. et al. Proto-object based saliency for event-driven cameras. In 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 805–812 (2019).

Illing, B., Gerstner, W. & Brea, J. Biologically plausible deep learning. but how far can we go with shallow networks? Neural Netw. 118, 90–101 (2019).

Romano, F. et al. The codyco project achievements and beyond: toward human aware whole-body controllers for physical human robot interaction. IEEE Robot. Autom. Lett. 3, 516–523 (2018).

Hamilton, T. J., Jin, C., Van Schaik, A. & Tapson, J. An active 2-d silicon cochlea. IEEE Trans. Biomed. Circuits Syst. 2, 30–43 (2008).

Liu, S.-C., van Schaik, A., Minch, B. A. & Delbruck, T. Asynchronous binaural spatial audition sensor with 2 × 64 × 4 channel output. Biomed. Circuits Syst., IEEE Trans. 8, 453–464 (2014). Latest version of event-based cochlea. It only outputs data in response to energy at its input.

Jiménez-Fernández, A. et al. A binaural neuromorphic auditory sensor for fpga: a spike signal processing approach. IEEE Trans. Neural Netw. Learn. Syst. 28, 804–818 (2017).

Schoepe, T. et al. Neuromorphic sensory integration for combining sound source localization and collision avoidance. In 2019 IEEE Biomedical Circuits and Systems Conference (BioCAS), 1–4 (2019).

Anumula, J., Ceolini, E., He, Z., Huber, A. & Liu, S. An event-driven probabilistic model of sound source localization using cochlea spikes. In 2018 IEEE International Symposium on Circuits and Systems (ISCAS), 1–5 (2018).

Li, X., Neil, D., Delbruck, T. & Liu, S. Lip reading deep network exploiting multi-modal spiking visual and auditory sensors. In 2019 IEEE International Symposium on Circuits and Systems (ISCAS), 1–5 (2019).

Caviglia, S., Pinna, L., Valle, M. & Bartolozzi, C. Spike-based readout of posfet tactile sensors. IEEE Trans. Circuits Syst. I – Regul. Pap. 64, 1421–1431 (2016).

John, R. et al. Self healable neuromorphic memtransistor elements for decentralized sensory signal processing in robotics. Nat. Commun. 11, 4030 (2020). Neuromorphic tactile system encompassing healable materials and memristive elements to perform proof-of-concept edge tactile sensing, demonstrated in a robotic task that is further applicable to prosthetic applications.

Wan, C. et al. An artificial sensory neuron with tactile perceptual learning. Adv. Mater. 30, 1801291 (2018).

Lee, J.-H., Chung, Y. S. & Rodrigue, H. Long shape memory alloy tendon-based soft robotic actuators and implementation as a soft gripper. Sci. Rep. 9, 1–12 (2019).

Rongala, U., Mazzoni, A., Camboni, D., Carrozza, M. & Oddo, C. Neuromorphic artificial sense of touch: Bridging robotics and neuroscience. In Bicchi A., B. W. (ed.) Robotics Research. Springer Proceedings in Advanced Robotics, chap. 3 (Springer, Cham., 2018).

Ward-Cherrier, B., Pestell, N. & Lepora, N. F. Neurotac: A neuromorphic optical tactile sensor applied to texture recognition. In International conference on Robotics and Automation (ICRA) 2020 (2020).

Nguyen, H. et al. Dynamic texture decoding using a neuromorphic multilayer tactile sensor. In 2018 IEEE Biomedical Circuits and Systems Conference (BioCAS), 1–4 (2018).

Bergner, F., Dean-Leon, E. & Cheng, G. Design and realization of an efficient large-area event-driven e-skin. Sensors 20, (2020). https://www.mdpi.com/1424-8220/20/7/1965.

Motto Ros, P., Laterza, M., Demarchi, D., Martina, M. & Bartolozzi, C. Event-driven encoding algorithms for synchronous front-end sensors in robotic platforms. IEEE Sens. J. 19, 7149–7161 (2019).

Lee, D.-H., Zhang, S., Fischer, A. & Bengio, Y. Difference target propagation. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 498–515 (Springer, 2015).

Zhao, J. et al. Closed-loop spiking control on a neuromorphic processor implemented on the icub. IEEE J. Emerg. Sel. Top. Circuits Syst. 10, 546–556 (2020). Example of the use of Spiking Neural Networks for the implementation of a cooperative/collaborative network for the control of a single joint of the iCub humanoid robot.

Kreiser, R. et al. An on-chip spiking neural network for estimation of the head pose of the iCub robot. Front. Neurosci. 14, 551 (2020).

Panzeri, S., Harvey, C. D., Piasini, E., Latham, P. E. & Fellin, T. Cracking the neural code for sensory perception by combining statistics, intervention, and behavior. Neuron 93, 491–507 (2017). Computational neuroscience that can support neuromorphic computing. Panzeri and colleagues explore the information content of spike patterns and their correlation with information about the input stimulus and about the behavioural choice of the subject. Understanding the encoding and decoding of the neural code can provide insights on how to design efficient and powerful Spiking Neural Networks for robotics.

Milde, M. B., Dietmüller, A., Blum, H., Indiveri, G. & Sandamirskaya, Y. Obstacle avoidance and target acquisition in mobile robots equipped with neuromorphic sensory-processing systems. In International Symposium on Circuits and Systems, (ISCAS) (IEEE, 2017).

Zibner, S. K. U., Faubel, C., Iossifidis, I. & Schoner, G. Dynamic neural fields as building blocks of a cortex-inspired architecture for robotic scene representation. IEEE Trans. Autonomous Ment. Dev. 3, 74–91 (2011). Theory of Dynamic Neural Fields and how it can be used to develop cognitive robots. DNF is one of the proposed computational frameworks that can support the principled design of neuromorphic intelligent robots.

Sandamirskaya, Y. Dynamic neural fields as a step toward cognitive neuromorphic architectures. Front. Neurosci. 7, 276 (2014).

Falotico, E. et al. Connecting artificial brains to robots in a comprehensive simulation framework: the neurorobotics platform. Front. Neurorobotics 11, 2 (2017).

Patacchiola, M. & Cangelosi, A. A developmental cognitive architecture for trust and theory of mind in humanoid robots. IEEE Transactions on Cybernetics PP(99), 1–13 (2020).

Richter, M., Sandamirskaya, Y. & Schöner, G. A robotic architecture for action selection and behavioral organization inspired by human cognition. In Intelligent Robots and Systems (IROS), 2012 IEEE/RSJ International Conference on, 2457–2464 (IEEE, 2012).

Ijspeert, A., Crespi, A., Ryczko, D. & Cabelguen, J. From swimming to walking with a salamander robot driven by a spinal cord model. Science 315, 1416–1420 (2007).

Wensing, P. M. & Slotine, J.-J. Sparse control for dynamic movement primitives. IFAC-PapersOnLine 50, 10114–10121 (2017).

Tieck, J. C. V. et al. Generating pointing motions for a humanoid robot by combining motor primitives. Front. Neurorobotics 13, 77 (2019).

Ijspeert, A. J. Amphibious and sprawling locomotion: from biology to robotics and back. Annu. Rev. Control, Robot., Autonomous Syst. 3, 173–193 (2020).

Furber, S., Galluppi, F., Temple, S. & Plana, L. The SpiNNaker project. Proc. IEEE 102, 652–665 (2014).

Moradi, S., Qiao, N., Stefanini, F. & Indiveri, G. A scalable multicore architecture with heterogeneous memory structures for dynamic neuromorphic asynchronous processors (DYNAPs). Biomed. Circuits Syst., IEEE Trans. 12, 106–122 (2018). Mixed-signal analog/digital multi-core neuromorphic processor for implementing spiking neural networks with biologically realistic dynamics.

Davies, M. et al. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38, 82–99 (2018).

Neckar, A. et al. Braindrop: a mixed-signal neuromorphic architecture with a dynamical systems-based programming model. Proc. IEEE 107, 144–164 (2019).

Rhodes, O. et al. spynnaker: A software package for running pynn simulations on spinnaker. Front. Neurosci. 12, 816 (2018).

Lin, C.-K. et al. Mapping spiking neural networks onto a manycore neuromorphic architecture. In Proceedings of the 39th ACM SIGPLAN Conference on Programming Language Design and Implementation PLDI, 78–89 (ACM, 2018).

Stefanini, F., Sheik, S., Neftci, E. & Indiveri, G. Pyncs: a microkernel for high-level definition and configuration of neuromorphic electronic systems. Front. Neuroinfo. 8, 73 (2014).

Eliasmith, C. & Anderson, C. Neural engineering: Computation, representation, and dynamics in neurobiological systems (The MIT Press, 2004).

DeWolf, T., Stewart, T. C., Slotine, J.-J. & Eliasmith, C. A spiking neural model of adaptive arm control. Proc. R. Soc. B: Biol. Sci. 283, 20162134 (2016). Neural Engineering Framework applied to the adaptive control of a robotic arm. NEF is one of the mathematical frameworks that could support the development of neuromorphic robotics.

Stagsted, R. K. et al. Event-based PID controller fully realized in neuromorphic hardware: a one dof study. In Intelligent Robots and Systems (IROS), 2020 IEEE/RSJ International Conference on (2020).

Zhao, J., Donati, E. & Indiveri, G. Neuromorphic implementation of spiking relational neural network for motor control. In International Conference on Artificial Intelligence Circuits and Systems (AICAS), 2020, 89–93 (IEEE, 2020).

Linares-Barranco, A., Perez-Peña, F., Jimenez-Fernandez, A. & Chicca, E. ED-Biorob: a neuromorphic robotic arm with FPGA-based infrastructure for bio-inspired spiking motor controllers. Front. Neurorobotics 14, 590163 (2020).

Jimenez-Fernandez, A. et al. A neuro-inspired spike-based PID motor controller for multi-motor robots with low cost FPGAs. Sensors 12, 3831–3856 (2012).

Perez-Peña, F., Leñero-Bardallo, J. A., Linares-Barranco, A. & Chicca, E. Towards bioinspired close-loop local motor control: a simulated approach supporting neuromorphic implementations. In 2017 IEEE International Symposium on Circuits and Systems (ISCAS) (2017).

Donati, E., Perez-Peña, F., Bartolozzi, C., Indiveri, G. & Chicca, E. Open-loop neuromorphic controller implemented on VLSI devices. In Biomedical Robotics and Biomechatronics (BIOROB), 7th IEEE International Conference on, 827–832 (2018-08).

Shadmehr, R. et al. The computational neurobiology of reaching and pointing: a foundation for motor learning (MIT press, 2005).

Huang, X. et al. Highly dynamic shape memory alloy actuator for fast moving soft robots. Adv. Mater. Technol. 4, 1800540 (2019).

Schaffner, M. et al. 3d printing of robotic soft actuators with programmable bioinspired architectures. Nat. Commun. 9, 1–9 (2018).

Schöner, G. Dynamical systems approaches to cognition. In Sun, R. (ed.) The Cambridge Handbook of Computational Psychology, 101–126 (Cambridge University Press, 2008).

Yang, C., Wu, Y., Ficuciello, F., Wang, X. & Cangelosi, A. Guest editorial: special issue on human-friendly cognitive robotics. IEEE Trans. Cogn. Developmental Syst. 13, 2–5 (2021).

Milde, M. B. et al. Obstacle avoidance and target acquisition for robot navigation using a mixed signal analog/digital neuromorphic processing system. Front. Neurorobotics 11, 28 (2017).

Thakur, C. S. et al. Large-scale neuromorphic spiking array processors: a quest to mimic the brain. Front. Neurosci. 12, 891 (2018). Review of large-scale emulators of neural networks that also discuss promising applications.

Backus, J. Can programming be liberated from the von Neumann style?: a functional style and its algebra of programs. Commun. ACM 21, 613–641 (1978).

Neckar, A. et al. Braindrop: a mixed-signal neuromorphic architecture with a dynamical systems-based programming model. Proc. IEEE 107, 144–164 (2018).

Payvand, M., Nair, M. V., Müller, L. K. & Indiveri, G. A neuromorphic systems approach to in-memory computing with non-ideal memristive devices: from mitigation to exploitation. Faraday Discuss. 213, 487–510 (2019).

Dalgaty, T. et al. Hybrid neuromorphic circuits exploiting non-conventional properties of RRAM for massively parallel local plasticity mechanisms. APL Mater. 7, 081125 (2019).

Chicca, E. & Indiveri, G. A recipe for creating ideal hybrid memristive-CMOS neuromorphic processing systems. Appl. Phys. Lett. 116, 120501 (2020). Guidelines and specifications for the integration of memristive devices on neuromorphic chips and their relevance in the design of truly low-power and compact building blocks to support always-on learning in neuromorphic computing systems.

Horiuchi, T. A spike-latency model for sonar-based navigation in obstacle fields. Circuits Syst. I: Regul. Pap., IEEE Trans. 56, 2393–2401 (2009).

Oster, M., Douglas, R. & Liu, S.-C. Computation with spikes in a winner-take-all network. Neural Comput. 21, 2437–2465 (2009).

Häfliger, P. Adaptive WTA with an analog VLSI neuromorphic learning chip. IEEE Trans. Neural Netw. 18, 551–572 (2007).

Mostafa, H. & Indiveri, G. Sequential activity in asymmetrically coupled winner-take-all circuits. Neural Comput. 26, 1973–2004 (2014).

Indiveri, G. A current-mode hysteretic winner-take-all network, with excitatory and inhibitory coupling. Analog Integr. Circuits Signal Process. 28(September), 279–291 (2001).

Donati, E., Krause, R. & Indiveri, G. Neuromorphic pattern generation circuits for bioelectronic medicine. In 2021 10th International IEEE/EMBS Conference on Neural Engineering (NER), 1117–1120 (2021).

Giulioni, M. et al. Robust working memory in an asynchronously spiking neural network realized in neuromorphic VLSI. Front. Neurosci. 5 (2012). http://www.frontiersin.org/neuromorphic_engineering/10.3389/fnins.2011.00149/abstract.

Neftci, E. et al. Synthesizing cognition in neuromorphic electronic systems. Proc. Natl Acad. Sci. 110, E3468–E3476 (2013). In this paper, one of the cited computational primitives (the Winner-Take-All) is used as building block to implement a cognitive function, performing real-time context-dependent classification of motion patterns observed by a silicon retina/decision making.

Kreiser, R., Aathmani, D., Qiao, N., Indiveri, G. & Sandamirskaya, Y. Organising sequential memory in a neuromorphic device using dynamic neural fields. Front. Neurosci. 12, 717 (2018).

Duran, B. & Sandamirskaya, Y. Learning temporal intervals in neural dynamics. IEEE Trans. Cogn. Developmental Syst. 10, 359–372 (2018).

Liang, D. & Indiveri, G. A neuromorphic computational primitive for robust context-dependent decision making and context-dependent stochastic computation. IEEE Trans. Circuits Syst. II: Express Briefs 66, 843–847 (2019).

Liang, D. & Indiveri, G. Robust state-dependent computation in neuromorphic electronic systems. In Biomedical Circuits and Systems Conference, (BioCAS), 2017, 108–111 (IEEE, 2017-10).

Risi, N., Aimar, A., Donati, E., Solinas, S. & Indiveri, G. A spike-based neuromorphic architecture of stereo vision. Front. Neurorobotics 14, 93 (2020).

Douglas, R. J. & Martin, K. A. Neuronal circuits of the neocortex. Annu. Rev. Neurosci. 27, 419–451 (2004).

Douglas, R. & Martin, K. Recurrent neuronal circuits in the neocortex. Curr. Biol. 17, R496–R500 (2007).

Maass, W. On the computational power of winner-take-all. Neural Comput. 12, 2519–2535 (2000).

Rutishauser, U., Douglas, R. & Slotine, J. Collective stability of networks of winner-take-all circuits. Neural Comput. 23, 735–773 (2011).

Indiveri, G. Neuromorphic analog VLSI sensor for visual tracking: Circuits and application examples. IEEE Trans. Circuits Syst. II 46, 1337–1347 (1999).

Indiveri, G. Modeling selective attention using a neuromorphic analog VLSI device. Neural Comput. 12, 2857–2880 (2000).

Bartolozzi, C. & Indiveri, G. Selective attention in multi-chip address-event systems. Sensors 9, 5076–5098 (2009).

Cook, M. & Bruck, J. Networks of relations for representation, learning, and generalization (2005). https://resolver.caltech.edu/CaltechPARADISE:2005.ETR071.

Cook, M., Jug, F., Krautz, C. & Steger, A. Unsupervised learning of relations. In Artificial Neural Networks–ICANN 2010, 164–173 (Springer, 2010).

Hahnloser, R. Emergence of neural integration in the head-direction system by visual supervision. Neuroscience 120, 877–891 (2003).

Johnson, J. S., Spencer, J. P. & Schöner, G. Moving to higher ground: The dynamic field theory and the dynamics of visual cognition. N. Ideas Psychol. 26, 227–251 (2008).

Sandamirskaya, Y. & Conradt, J. Increasing autonomy of learning sensorimotor transformations with dynamic neural fields. In International Conference on Robotics and Automation (ICRA), Workshop “Autonomous Learning” (2013).

Sandamirskaya, Y., Zibner, S. K., Schneegans, S. & Schöner, G. Using dynamic field theory to extend the embodiment stance toward higher cognition. N. Ideas Psychol. 31, 322–339 (2013).

Douglas, R., Koch, C., Mahowald, M., Martin, K. & Suarez, H. Recurrent excitation in neocortical circuits. Science 269, 981–985 (1995).

Compte, A., Brunel, N., Goldman-Rakic, P. S. & Wang, X.-J. Synaptic mechanisms and network dynamics underlying spatial working memory in a cortical network model. Cereb. Cortex 10, 910–923 (2000).

Dayan, P. Simple substrates for complex cognition. Front. Neurosci. 2, 255 (2008).

Harris, K. D. & Thiele, A. Cortical state and attention. Nat. Rev. Neurosci. 12, 509 (2011).

Li, C.-Y. T., Poo, M.-M. & Dan, Y. Burst spiking of a single cortical neuron modifies global brain state. Science 324, 643–646 (2009).

Schölvinck, M. L., Saleem, A. B., Benucci, A., Harris, K. D. & Carandini, M. Cortical state determines global variability and correlations in visual cortex. J. Neurosci. 35, 170–178 (2015).

Rutishauser, U. & Douglas, R. State-dependent computation using coupled recurrent networks. Neural Comput. 21, 478–509 (2009).

Hangya, B., Pi, H.-J., Kvitsiani, D., Ranade, S. P. & Kepecs, A. From circuit motifs to computations: mapping the behavioral repertoire of cortical interneurons. Curr. Opin. Neurobiol. 26, 117–124 (2014).

Letzkus, J. J., Wolff, S. B. & Lüthi, A. Disinhibition, a circuit mechanism for associative learning and memory. Neuron 88, 264–276 (2015).

Liang, D. et al. Robust learning and recognition of visual patterns in neuromorphic electronic agents. In Artificial Intelligence Circuits and Systems Conference (AICAS) (IEEE, 2019).

Brandli, C., Berner, R., Yang, M., Liu, S.-C. & Delbruck, T. A 240 × 180 130 dB 3 μs latency global shutter spatiotemporal vision sensor. IEEE J. Solid-State Circuits 49, 2333–2341 (2014).

Posch, C. et al. Live demonstration: Asynchronous time-based image sensor (ATIS) camera with full-custom AE processor. In International Symposium on Circuits and Systems (ISCAS), 1392 (IEEE, 2010).

Ajoudani, A. et al. Progress and prospects of the human–robot collaboration. Autonomous Robots 42, 957–975 (2018).

Siva, S. & Zhang, H. Robot perceptual adaptation to environment changes for long-term human teammate following. The International Journal of Robotics Research 0278364919896625.

Tirupachuri, Y. et al. Towards partner-aware humanoid robot control under physical interactions. In (eds Bi, Y., Bhatia, R. & Kapoor, S.) Intelligent Systems and Applications, 1073–1092 (Springer International Publishing, 2020). Example paper on the complexity of the physical interaction of robots and humans, i.e. two highly dynamical systems that need to cooperate to achieve a common goal in unconstrained scenarios.

Udupa, S., Kamat, V. R. & Menassa, C. C. Shared autonomy in assistive mobile robots: a review. Disability and Rehabilitation: Assistive Technology 1–22 (2021). Review of the progress in the field of assistive mobile robotics that highlights the need for adaptation to the user intentions (to give full control to the user) and to the varying environment (for safety).

Magaña, O. A. V. et al. Fast and continuous foothold adaptation for dynamic locomotion through CNNs. IEEE Robot. Autom. Lett. 4, 2140–2147 (2019).

Gjorgjieva, J., Drion, G. & Marder, E. Computational implications of biophysical diversity and multiple timescales in neurons and synapses for circuit performance. Curr. Opin. Neurobiol. 37, 44–52 (2016).

Marom, S. Neural timescales or lack thereof. Prog. Neurobiol. 90, 16–28 (2010).

Abbott, L., Sen, K., Varela, J. & Nelson, S. Synaptic depression and cortical gain control. Science 275, 220–223 (1997).

Shapley, R. & Enroth-Cugell, C. Chapter 9 visual adaptation and retinal gain controls. Prog. Retinal Res. 3, 263–346 (1984).

Turrigiano, G., Leslie, K., Desai, N., Rutherford, L. & Nelson, S. Activity-dependent scaling of quantal amplitude in neocortical neurons. Nature 391, 892–896 (1998).

Deiss, S., Douglas, R. & Whatley, A. A pulse-coded communications infrastructure for neuromorphic systems. In (eds Maass, W. & Bishop, C.) Pulsed Neural Networks, chap. 6, 157–178 (MIT Press, 1998).

Boahen, K. A burst-mode word-serial address-event link – II: Receiver design. IEEE Trans. Circuits Syst. I 51, 1281–1291 (2004).

Serrano-Gotarredona, R. et al. AER building blocks for multi-layer multi-chip neuromorphic vision systems. In (eds Becker, S., Thrun, S. & Obermayer, K.) Advances in Neural Information Processing Systems, vol. 15 (MIT Press, 2005).

Rast, A. D. et al. Transport-independent protocols for universal AER communications. In International Conference on Neural Information Processing, 675–684 (Springer, 2015).

Ros, P. M., Crepaldi, M., Bartolozzi, C. & Demarchi, D. Asynchronous DC-free serial protocol for event-based AER systems. In 2015 IEEE International Conference on Electronics, Circuits, and Systems (ICECS), 248–251 (2015).

Waniek, N., Biedermann, J. & Conradt, J. Cooperative SLAM on small mobile robots. 2015 IEEE International Conference on Robotics and Biomimetics (ROBIO) 1810–1815 (2015).

Hwu, T., Krichmar, J. & Zou, X. A complete neuromorphic solution to outdoor navigation and path planning. 2017 IEEE International Symposium on Circuits and Systems (ISCAS) 1–4 (2017).

Tang, G. & Michmizos, K. P. Gridbot: an autonomous robot controlled by a spiking neural network mimicking the brain’s navigational system. Proceedings of the International Conference on Neuromorphic Systems 1–8 (2018).

Kreiser, R., Pienroj, P., Renner, A. & Sandamirskaya, Y. Pose estimation and map formation with spiking neural networks: towards neuromorphic SLAM. 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (2018). Example of Spiking Neural Networks implemented on neuromorphic chips for the continuous estimation of pose and map formation, towards the implementation of SLAM.

Glatz, S., Martel, J., Kreiser, R., Qiao, N. & Sandamirskaya, Y. Adaptive motor control and learning in a spiking neural network realised on a mixed-signal neuromorphic processor. 2019 International Conference on Robotics and Automation (ICRA) 9631–9637 (2019).

Naveros, F., Luque, N. R., Ros, E. & Arleo, A. VOR adaptation on a humanoid iCub robot using a spiking cerebellar model. IEEE Trans. Cybern. 50, 4744–4757 (2019).

Dupeyroux, J., Hagenaars, J. J., Paredes-Vallés, F. & de Croon, G. C. H. E. Neuromorphic control for optic-flow-based landing of MAVs using the Loihi processor. 2021 IEEE International Conference on Robotics and Automation (ICRA) 96–102 (2021).

Yan, Y. et al. Comparing Loihi with a SpiNNaker 2 prototype on low-latency keyword spotting and adaptive robotic control. Neuromorphic Computing and Engineering (2021). http://iopscience.iop.org/article/10.1088/2634-4386/abf150.

Zaidel, Y., Shalumov, A., Volinski, A., Supic, L. & Tsur, E. E. Neuromorphic NEF-based inverse kinematics and PID control. Front. Neurorobotics 15, 631159 (2021).

Strohmer, B., Manoonpong, P. & Larsen, L. B. Flexible spiking CPGs for online manipulation during hexapod walking. Front. Neurorobotics 14, 41 (2020).

Gutierrez-Galan, D., Dominguez-Morales, J., Perez-Peña, F., Jimenez-Fernandez, A. & Linares-Barranco, A. Neuropod: a real-time neuromorphic spiking CPG applied to robotics. Neurocomputing 381, 10–19 (2020). Demonstration of how spiking neural networks can implement the Central Pattern Generator primitive in hardware and be used for legged robot locomotion.

Chan, V., Liu, S.-C. & van Schaik, A. AER EAR: A matched silicon cochlea pair with address event representation interface. IEEE Trans. Circuits Syst. I, Spec. Issue Sens. 54, 48–59 (2007).

Hodgkin, A. & Huxley, A. A quantitative description of membrane current and its application to conduction and excitation in nerve. J. Physiol. 117, 500–544 (1952).

Mahowald, M. & Douglas, R. A silicon neuron. Nature 354, 515–518 (1991).

Indiveri, G. Neuromorphic bistable VLSI synapses with spike-timing-dependent plasticity. Adv. Neural Inf. Process. Syst. (NIPS) 15, 1091–1098 (2003).

Indiveri, G. et al. Neuromorphic silicon neuron circuits. Front. Neurosci. 5, 1–23 (2011).

Izhikevich, E. Simple model of spiking neurons. IEEE Trans. Neural Netw. 14, 1569–1572 (2003).

Mihalas, S. & Niebur, E. A generalized linear integrate-and-fire neural model produces diverse spiking behavior. Neural Comput. 21, 704–718 (2009).

Bartolozzi, C. & Indiveri, G. Synaptic dynamics in analog VLSI. Neural Comput. 19, 2581–2603 (2007).

Boegerhausen, M., Suter, P. & Liu, S.-C. Modeling short-term synaptic depression in silicon. Neural Comput. 15, 331–348 (2003).

Ramachandran, H., Weber, S., Aamir, S. A. & Chicca, E. Neuromorphic circuits for short-term plasticity with recovery control. 2014 IEEE International Symposium on Circuits and Systems (ISCAS) 858–861 (2014).

Indiveri, G. Synaptic plasticity and spike-based computation in VLSI networks of integrate-and-fire neurons. Neural Inf. Process. - Lett. Rev. 11, 135–146 (2007).

Mitra, S., Fusi, S. & Indiveri, G. Real-time classification of complex patterns using spike-based learning in neuromorphic VLSI. IEEE Trans. Biomed. Circuits Syst. 3, 32–42 (2009).

Wang, R. M., Hamilton, T. J., Tapson, J. C. & van Schaik, A. A neuromorphic implementation of multiple spike-timing synaptic plasticity rules for large-scale neural networks. Front. Neurosci. 9, 180 (2015).

Payvand, M. & Indiveri, G. Spike-based plasticity circuits for always-on on-line learning in neuromorphic systems. IEEE International Symposium on Circuits and Systems (ISCAS) 1–5 (2019).

Azghadi, M. R., Iannella, N., Al-Sarawi, S., Indiveri, G. & Abbott, D. Spike-based synaptic plasticity in silicon: Design, implementation, application, and challenges. Proc. IEEE 102, 717–737 (2014).

Huayaney, F. L. M., Nease, S. & Chicca, E. Learning in silicon beyond STDP: a neuromorphic implementation of multi-factor synaptic plasticity with Calcium-based dynamics. IEEE Trans. Circuits Syst. I: Regul. Pap. 63, 2189–2199 (2016).

Brink, S. et al. A learning-enabled neuron array IC based upon transistor channel models of biological phenomena. IEEE Trans. Biomed. Circuits Syst. 7, 71–81 (2013).

Xia, Q. & Yang, J. J. Memristive crossbar arrays for brain-inspired computing. Nat. Mater. 18, 309 (2019).

Boybat, I. et al. Neuromorphic computing with multi-memristive synapses. Nat. Commun. 9, 2514 (2018).

Covi, E. et al. Analog memristive synapse in spiking networks implementing unsupervised learning. Front. Neurosci. 10, 1–13 (2016).

Roxin, A. & Fusi, S. Efficient partitioning of memory systems and its importance for memory consolidation. PLOS Computational Biol. 9, 1–13 (2013).

Hassenstein, B. & Reichardt, W. Systemtheoretische Analyse der Zeit-, Reihenfolgen- und Vorzeichenauswertung bei der Bewegungsperzeption des Rüsselkäfers Chlorophanus. Z. für Naturforsch. B 11, 513–524 (1956).

Chicca, E., Lichtsteiner, P., Delbruck, T., Indiveri, G. & Douglas, R. Modeling orientation selectivity using a neuromorphic multi-chip system. International Symposium on Circuits and Systems, (ISCAS) 1235–1238 (2006).

Saal, H. P., Delhaye, B. P., Rayhaun, B. C. & Bensmaia, S. J. Simulating tactile signals from the whole hand with millisecond precision. Proc. Natl Acad. Sci. 114, E5693–E5702 (2017). Paper on the implementation of a simulator of the tactile perception of the human hand. The models used and the output of such a simulator are paramount to the design of neuromorphic systems that can use a faithful simulation of the spike patterns evoked by a given stimulus, and of neuromorphic sensors that can replicate the same behaviour.

Douglas, R., Martin, K. & Whitteridge, D. A canonical microcircuit for neocortex. Neural Comput. 1, 480–488 (1989).

Binzegger, T., Douglas, R. & Martin, K. Topology and dynamics of the canonical circuit of cat V1. Neural Netw. 22, 1071–1078 (2009).

Mante, V., Sussillo, D., Shenoy, K. V. & Newsome, W. T. Context-dependent computation by recurrent dynamics in prefrontal cortex. Nature 503, 78–84 (2013).

Marcus, G. et al. The atoms of neural computation. Science 346, 551–552 (2014).

Davies, M. Benchmarks for progress in neuromorphic computing. Nat. Mach. Intell. 1, 386–388 (2019).

Mueggler, E., Rebecq, H., Gallego, G., Delbruck, T. & Scaramuzza, D. The event-camera dataset and simulator: Event-based data for pose estimation, visual odometry, and SLAM. Int. J. Robot. Res. 36, 142–149 (2017).

Serrano-Gotarredona, T. & Linares-Barranco, B. Poker-DVS and MNIST-DVS. Their history, how they were made, and other details. Front. Neurosci. 9 (2015). http://www.frontiersin.org/neuromorphic_engineering/10.3389/fnins.2015.00481/abstract.

Orchard, G. et al. HFirst: a temporal approach to object recognition. IEEE Trans. Pattern Anal. Mach. Intell. 37, 2028–2040 (2015).

Amir, A. et al. A low power, fully event-based gesture recognition system. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 7243–7252 (2017).

Calabrese, E. et al. DHP19: Dynamic vision sensor 3D human pose dataset. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (2019).

Acknowledgements

The authors wish to thank Adrian M. Whatley for insightful comments on the manuscript. G.I. was supported by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme, grant agreement No 724295. C.B. was supported by the EU MSCA ITN project NeuTouch “Understanding neural coding of touch as enabling technology for prosthetics and robotics”, GA 813713.

Author information

Contributions

C.B., G.I. and E.D. contributed to the structuring of the Perspective. C.B. contributed mainly to the Introduction, Neuromorphic Perception, boxes and Outlook. G.I. contributed mostly to Computational primitives for intelligent perception and behaviour. E.D. contributed to Neuromorphic Behaviour, figures and boxes. All authors reviewed the whole manuscript. The pictures of iCub in Figs. 1 and 2 are owned by IIT.

Ethics declarations

Competing interests

The authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Florentin Woergoetter and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions