Abstract

Sickle cell retinopathy is often initially asymptomatic, even in proliferative stages, but can progress to cause vision loss due to vitreous haemorrhages or tractional retinal detachments. Challenges with accessing and adhering to screening dilated fundus examinations, particularly in medically underserved areas where the burden of sickle cell disease is highest, highlight the need for novel approaches to screening for vision-threatening sickle cell retinopathy. This article reviews the existing literature on, and suggests future research directions for, coupling artificial intelligence with multimodal retinal imaging to expand access to automated, accurate, imaging-based screening for sickle cell retinopathy. Given the variability in retinal specialist practice patterns with regard to monitoring and treatment of sickle cell retinopathy, we also discuss recent progress toward the development of machine learning models that can quantitatively track disease progression over time. These artificial intelligence-based applications have great potential for informing evidence-based and resource-efficient clinical diagnosis and management of sickle cell retinopathy.

Introduction

Sickle cell disease is the most common genetically inherited haematologic disorder, with a birth prevalence of over 300,000 new cases per year worldwide [1]. The most common causes of vision loss in patients with sickle cell disease stem from complications of proliferative sickle cell retinopathy (PSR), a condition in which chronic peripheral retinal microvascular occlusion and ischaemia stimulate the proliferation of sea fan neovascularization, which can cause vision-threatening vitreous haemorrhage or tractional retinal detachment [2, 3]. School- and working-age patients are disproportionately susceptible to these vision-threatening changes; by the age of 26 years, up to 43% of patients with Haemoglobin SC disease and 14% of patients with Haemoglobin SS disease have been found to develop PSR [4].

To enable early detection and possible prophylactic treatment with scatter laser photocoagulation prior to development of vision loss, consensus guidelines published in 2014 by an expert panel on multi-organ management of sickle cell disease included a strong recommendation to commence routine screening with dilated fundus examinations at age 10 years [5]. However, the globally low rates of adherence to these guidelines, as revealed by publications from Toronto [6], Jamaica [3], and Saudi Arabia [7], underscore the need for new approaches to increasing access to and adherence with screening retinal examinations for sickle cell retinopathy (SCR). Moreover, a recent survey of practicing retina specialists demonstrated variable practice patterns for treating vision-threatening PSR, highlighting a need to build evidence-based treatment guidelines through existing data and clinical trials [8]. There is a rapidly growing literature on the use of artificial intelligence to aid automated classification of retinal photographs for a variety of retinal conditions. The recent FDA approval of the artificial-intelligence-enabled IDx-DR [9] and EyeArt systems [10, 11] for screening for referable diabetic retinopathy from fundus photographs has paved the way for application of similar systems to augment screening for other retinal diseases, such as SCR.

This review aims to summarize published literature on, and highlight future research directions for, the possible coupling of artificial intelligence and multimodal retinal imaging to enhance the diagnosis and management of SCR. For the purposes of this review, artificial intelligence refers to the broad field that includes machine learning, whereby machines learn patterns from data without being explicitly programmed, and deep learning, the subset of machine learning that employs multi-layered neural networks, loosely inspired by human cognitive processing, to learn relevant features directly from the data [12].

Search methodology

We evaluated the current literature on artificial intelligence and sickle cell retinopathy by searching PubMed and Google for studies published in English up to 30 December 2020, using keywords such as ‘deep learning’, ‘machine learning’, ‘artificial intelligence’ and ‘sickle cell retinopathy’. We also screened the reference lists of retrieved articles and performed similar searches using specific search terms such as ‘fluorescein angiography’, ‘optical coherence tomography’ and ‘optical coherence tomography angiography’ to find applications of artificial intelligence to these types of retinal imaging in other retinal diseases.

Results

Artificial intelligence for screening for sickle cell retinopathy from ultra-widefield fundus photographs

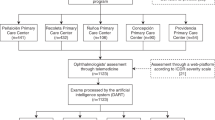

One of the most important potential applications of artificial intelligence to SCR lies in the use of automated interpretation of ultra-widefield fundus photographs (UWF-FPs) to enhance access and adherence to screening for the disease. The combination of automated artificial-intelligence-based algorithms with point-of-care fundus photography, which could be obtained at primary care or haematology office visits as has been previously demonstrated for diabetic retinopathy screening [9], may help consolidate care for visually asymptomatic patients with sickle cell disease who have difficulty juggling routine ophthalmology visits with school or work obligations and medical appointments or hospitalizations associated with their systemic disease burden [13]. Moreover, the convenience and scalability of automated screening systems have the potential to help overcome disparities in access to retinal screening examinations in Africa and other medically underserved regions of the world, where the prevalence of sickle cell disease far exceeds the availability of retinal specialists [14].

UWF-FPs are particularly amenable to artificial intelligence-based detection of SCR, given their ability to image the retinal periphery in a single frame and established advantages for SCR staging. For example, several retrospective and prospective studies have reported that graders of UWF-FPs more often detect nonproliferative SCR than clinicians performing dilated fundus examinations on the same patients [15,16,17]. Although the current standard of care for SCR screening relies on expert clinician interpretation of dilated fundus examinations and ancillary retinal imaging, well-validated artificial intelligence algorithms may offer unique advantages in terms of increased speed, accessibility and accuracy of image classification compared to human interpretation [18, 19].

Toward the goal of using artificial intelligence to automate screening for vision-threatening SCR from retinal photographs, our group recently developed a deep learning convolutional neural network that achieved 97.4% sensitivity and 97.0% specificity compared to retinal specialists in classifying Optos (Optos plc, Dunfermline, UK) UWF-FPs from patients with sickle cell haemoglobinopathy for presence or absence of sea fan neovascularization [20]. We trained, validated and tested this convolutional neural network using 1182 images from 190 adult patients with sickle cell haemoglobinopathy imaged at the Wilmer Eye Institute, of whom 53% were women, 94% were of Black American or African descent, 63% had Haemoglobin SS disease, 24% had Haemoglobin SC disease, and 30% had sea fan neovascularization in one or both eyes captured on Optos UWF-FP. Because some patients had multiple imaging encounters and most eyes had more than one image included in this study, the performance of the deep learning algorithm was also assessed after clustering images from the same eyes and imaging encounters, yielding a sensitivity of 100% and specificity of 94.3% in detecting sea fan neovascularization from ‘eye encounters’.
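The image-level and eye-encounter-level figures above both rest on the standard definitions of sensitivity, TP / (TP + FN), and specificity, TN / (TN + FP). The Python sketch below illustrates how image-level predictions might be clustered into eye encounters before recomputing these metrics. It assumes, hypothetically, that an eye encounter is labelled and predicted positive if any of its images is; the aggregation rule actually used in [20] is not described here, and the record layout, function names and toy data are illustrative only.

```python
from collections import defaultdict


def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels (1 = sea fan present).

    Assumes both classes are present; otherwise a ZeroDivisionError is raised.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def aggregate_by_eye_encounter(records):
    """Collapse image-level records into one label and one prediction per eye encounter.

    Each record is (patient_id, eye, visit_date, true_label, predicted_label).
    Hypothetical rule: an encounter is positive if any of its images is positive.
    """
    grouped = defaultdict(lambda: [0, 0])  # key -> [true_label, predicted_label]
    for patient_id, eye, visit_date, true_label, predicted_label in records:
        key = (patient_id, eye, visit_date)
        grouped[key][0] = max(grouped[key][0], true_label)
        grouped[key][1] = max(grouped[key][1], predicted_label)
    y_true = [labels[0] for labels in grouped.values()]
    y_pred = [labels[1] for labels in grouped.values()]
    return y_true, y_pred


# Made-up records: the second image of p1's right eye misses a visible sea fan.
toy_records = [
    ("p1", "OD", "2019-01-05", 1, 1),
    ("p1", "OD", "2019-01-05", 1, 0),
    ("p1", "OS", "2019-01-05", 0, 0),
    ("p2", "OD", "2019-03-10", 0, 1),
    ("p2", "OS", "2019-03-10", 0, 0),
]
image_sens, image_spec = sensitivity_specificity(
    [r[3] for r in toy_records], [r[4] for r in toy_records]
)  # 0.5 and 0.666...
eye_sens, eye_spec = sensitivity_specificity(*aggregate_by_eye_encounter(toy_records))
# 1.0 and 0.666...
```

In the toy data, one image of an eye with a sea fan is missed, so image-level sensitivity is 0.5 while eye-encounter sensitivity is 1.0. The same any-positive rule would also propagate a single false-positive image to the whole encounter, which is one way clustering can raise sensitivity while lowering specificity.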

The promising performance of this first application of deep learning to the automated detection of PSR from fundus photographs sets the stage for further work validating and refining our algorithm for real-world screening for SCR. A few limitations merit follow-up study. First, a common limitation to many deep learning algorithms is their “black box” nature, meaning that there is not a direct means for determining how the algorithms generated their final output classifications from the input images [19, 21,22,23]. Without transparency into the features of the input images that determine the final outputs, a concern is that potentially erroneous confounding features could affect the performance of the deep learning algorithm. For example, if a particular type of imaging artefact is often associated with sea fan neovascularization in a certain dataset, it is possible that a deep learning algorithm could learn to recognize the imaging artefact rather than sea fans themselves when classifying fundus photographs for presence or absence of PSR. To address this concern, a variety of saliency map visualization techniques have been developed to generate colorized heatmaps that visually highlight pixels of the input image that may be most important for the convolutional neural network output [24]. In our study, we used two different saliency map visualization techniques, called Guided Grad-CAM [25] and SmoothGrad [26], to assess which parts of the input UWF-FPs, when changed, most affected the final convolutional neural network output classifications of presence or absence of sea fan neovascularization [20]. On average, both saliency map types highlighted the relative importance of the peripheral retina, and especially temporal peripheral retina, for the convolutional neural network output classifications, which is consistent with the clinically observed predilection for sea fan neovascularization to arise in the temporal retinal periphery (Fig. 1) [2]. 
While this correspondence between the saliency map findings and clinical experience is encouraging, it is important to keep in mind the absence of standardized methods for reporting and comparing visualization methods for explaining how deep learning algorithms translate inputs into outputs.
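SmoothGrad [26] averages the gradient of the model's output with respect to each input pixel over many copies of the image perturbed with Gaussian noise, which reduces the visual noise of a single raw gradient map. The sketch below shows only that averaging step. It substitutes a hypothetical logistic-regression "model" with an analytic gradient for the convolutional neural network, and the pixel values, weights, noise level and sample count are made up for illustration; Guided Grad-CAM [25] is not shown.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def toy_model_score(pixels, weights, bias):
    """Hypothetical stand-in for a CNN: logistic score of a flattened image."""
    return sigmoid(sum(w * p for w, p in zip(weights, pixels)) + bias)


def toy_model_gradient(pixels, weights, bias):
    """Analytic gradient of the logistic score with respect to each input pixel."""
    s = toy_model_score(pixels, weights, bias)
    return [s * (1.0 - s) * w for w in weights]


def smoothgrad(pixels, weights, bias, n_samples=50, noise_sd=0.1, seed=0):
    """Average input gradients over noisy copies of the input (SmoothGrad).

    Returns the absolute value of the averaged gradient for each pixel,
    which is what would be rendered as a heatmap.
    """
    rng = random.Random(seed)
    totals = [0.0] * len(pixels)
    for _ in range(n_samples):
        noisy = [p + rng.gauss(0.0, noise_sd) for p in pixels]
        grad = toy_model_gradient(noisy, weights, bias)
        totals = [t + g for t, g in zip(totals, grad)]
    return [abs(t / n_samples) for t in totals]


pixels = [0.2] * 6  # made-up flattened "image"
weights = [0.1, 0.1, 0.1, 2.0, 2.0, 0.1]  # pixels 3 and 4 drive the toy score
saliency = smoothgrad(pixels, weights, bias=-0.5)
```

Because the toy model is linear before the sigmoid, its SmoothGrad map is simply proportional to the absolute weights; the noise averaging only becomes useful for nonlinear networks such as convolutional neural networks, whose raw gradients can fluctuate sharply between neighbouring pixels.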

The Optos image (left) is from a patient with Haemoglobin SC-associated proliferative sickle cell retinopathy. The color-coded Guided Grad-CAM (centre) and SmoothGrad (right) saliency maps highlight in red the regions of the input fundus photograph (including the temporal retina and in particular the two sea fan neovascularization complexes) most relevant to our convolutional neural network [20] for correctly classifying this fundus photograph as having sea fan neovascularization.

A second challenge for interpreting the performance of a deep learning model that classifies UWF-FPs for presence or absence of referable SCR lies in the need to assess whether UWF-FPs can reliably image, at high resolution, the areas of peripheral retina where sea fan neovascularization is most likely to occur. Peripheral retinal visualization can be limited on Optos UWF-FPs by eyelid artefact, peripheral image blurring and peripheral distortion [27]. In our study, 10% of included patients had at least one Optos colour UWF-FP graded as not having sea fan neovascularization but a corresponding ultra-widefield fluorescein angiogram (UWF-FA) revealing an area of leakage not well captured on the UWF-FP due to image artefact, poor image resolution and/or image decentration [20]. Since repeated imaging within a single session, particularly with different centration, can increase the likelihood of adequately capturing peripheral retinal pathology, further research is needed to determine the best repeat-imaging protocol for optimizing the sensitivity of detecting referable SCR. With larger multicentre databases of UWF-FPs, it should be possible to use machine learning algorithms to automate classification of UWF-FPs as being of sufficient or insufficient quality for grading of SCR. To the best of our knowledge, deep learning has previously been applied to automated retinal image quality classification for posterior pole fundus photographs [28, 29] and Retcam images [30], but an analogous automated system for ultra-widefield images awaits development.
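A deliberately simplified calculation shows why repeat imaging can help. Assume, purely for illustration, that each of n images from one session independently captures a given peripheral sea fan well enough to be graded with probability p. The probability that at least one image captures it is then:

```latex
P(\text{captured in at least one of } n \text{ images}) = 1 - (1 - p)^{n}
\qquad \text{e.g. } p = 0.8,\ n = 2:\quad 1 - 0.2^{2} = 0.96
```

Images from a single session share the same eyelid position, pupil size and patient cooperation, so their failures are unlikely to be independent; the real gain from repeat imaging is therefore probably smaller than this idealized figure, which is why the optimal protocol needs empirical study.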

A third area important to assess before applying a deep learning algorithm for detection of PSR in real-world settings is its generalizability to different ethnic populations, patient ages and imaging systems. The patient population included in our study [20] was predominantly of Black American or African descent, consistent with the population with the highest rate of sickle cell disease worldwide [1]. Further research is needed to assess whether different fundus pigmentation patterns in patient populations from different ethnic backgrounds may differentially affect the sensitivity and specificity of machine learning models for detecting SCR from fundus photographs. In addition, given the recommendations to begin screening for SCR in childhood, it is important to assess the performance of deep learning models for sickle cell screening in the paediatric population. Paediatric UWF-FPs are especially susceptible to the challenges of eyelid artefacts and image decentration, although a single-institution prospective study of children aged 3 to 17 years suggested that paediatric UWF-FPs can capture an average of 50% greater retinal area than indirect ophthalmoscopic examination [31]. Finally, although the literature on ultra-widefield imaging in SCR has been dominated to date by Optos imaging, more research is needed to assess the potential value of the more recently developed Clarus (CLARUS 500, Carl Zeiss Meditec AG, Jena, Germany) ultra-widefield imaging system for imaging pathologic changes in SCR [27]. Relative advantages of the Clarus compared to Optos are true-colour imaging and reduced eyelid or eyelash artefact, but relative disadvantages of the Clarus are a brighter light flash and a smaller field of capture of 133° from individual images, although montaging increases the overall field of view [27].
One study reported that Optos images tend to capture retinal vascular detail in the superotemporal quadrant better than montaged Clarus images, which in turn may better capture retinal vascular detail in the inferonasal quadrant [32]. The implications of these findings for imaging of SCR (which preferentially affects the temporal retina) merit further study.

Going forward, machine learning methods may be particularly powerful for analysing longitudinal progression of pathologic vascular findings from UWF-FPs of eyes with SCR. In retinopathy of prematurity, deep learning has been used to develop a quantitative retinopathy of prematurity vascular severity score from Retcam fundus photographs that has been shown to correlate with disease severity, progression and post-treatment regression [33,34,35]. In diabetic retinopathy, deep learning has been used to show that baseline 7-field fundus photographs can predict future 2-step worsening on the Early Treatment Diabetic Retinopathy Study (ETDRS) Diabetic Retinopathy Severity Scale at 12-month follow-up with 91% sensitivity and 65% specificity [36]. With adequate long-term data correlating UWF-FPs with stage and need for treatment for vision-threatening PSR, it will be valuable to explore what machine learning may add to the clinically established Goldberg [2] and Penman [37] sickle cell staging systems for predicting which patients are most at risk of losing vision from SCR.
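Sensitivity and specificity alone do not say how many flagged patients will actually progress; that depends on how common progression is in the population being screened. The sketch below applies Bayes' rule to the 91% sensitivity and 65% specificity quoted above for [36], using a hypothetical 20% prevalence of 2-step worsening chosen only for illustration (it is not a figure from that study).

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Positive and negative predictive values from Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    true_neg = specificity * (1.0 - prevalence)
    false_neg = (1.0 - sensitivity) * prevalence
    ppv = true_pos / (true_pos + false_pos)
    npv = true_neg / (true_neg + false_neg)
    return ppv, npv


# Sensitivity and specificity as reported in [36]; the 20% prevalence is hypothetical.
ppv, npv = predictive_values(0.91, 0.65, 0.20)  # ppv ≈ 0.39, npv ≈ 0.97
```

Under that assumed prevalence, roughly four in ten positive predictions would be true progressors, while a negative prediction would be correct about 97% of the time. This asymmetry is common for screening tools tuned for sensitivity and would be worth quantifying for any future SCR progression model.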

Artificial intelligence for analysing ultra-widefield fluorescein angiography to improve sickle cell retinopathy staging

Although fluorescein angiography (FA) is invasive and more time- and resource-intensive than fundus photography, and thus less practical for large-scale SCR screening purposes, FA is the current gold standard for staging and monitoring of SCR [27]. Application of machine learning methods to large numbers of FAs collected from patients with sickle cell disease may offer new insights into retinal vascular features useful for monitoring and predicting SCR progression. A better understanding of features that may predict the rate of progression of SCR could in turn aid the development of future evidence-based consensus guidelines about recommended frequency of monitoring of patients with advanced nonproliferative or early proliferative SCR [8].

Thus far, deep learning has been used to augment the creation of retinal vessel masks from FA images, which can subsequently aid automated computation of quantitative metrics such as vessel length, vessel area, ischaemic index (the percentage of total visualized area with nonperfusion) and geodesic index (the shortest distance from the optic nerve centre to the edge of the peripheral vasculature) in ultra-widefield FAs (UWF-FAs) from patients with sickle cell disease [38]. In a recent study by Sevgi et al. of 74 eyes from 45 patients with sickle cell haemoglobinopathy, with two imaging visits spaced at least 3 months apart (mean 23.0 ± 15.1 months), the mean ischaemic index increased and the mean vessel area and geodesic index decreased over time [38]. A nonsignificant trend toward a more rapid increase in ischaemic index over time was observed among patients with Haemoglobin SC disease than among those in the Haemoglobin SS or other Haemoglobin S variant cohorts. The authors noted that deep learning algorithms were superior to alternative image processing techniques in capturing detailed pathologic changes of SCR in the skeletonized retinal vessel masks (Fig. 2). In the future, larger longitudinal studies may be useful for correlating the rates of change of quantitative markers of ischaemia with the risk of development of sea fan neovascularization and vision-threatening complications of PSR.
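The ischaemic index defined above is a ratio of pixel counts once a nonperfusion mask and a mask of the visualized retina are available, and its change can be expressed per year of follow-up. The sketch below assumes both masks have already been produced by grading or segmentation and are stored as small binary 2D lists; the function names, the uniform pixel scale and the toy masks are hypothetical. Real ultra-widefield measurements would also need to correct for peripheral image distortion, which this sketch ignores, and the geodesic index is not covered.

```python
def ischaemic_index(nonperfusion_mask, visualized_mask):
    """Percentage of the visualized retinal area that is nonperfused.

    Both masks are equally sized 2D lists of 0/1 values; nonperfused pixels
    outside the visualized area are ignored. Assumes every pixel represents
    the same retinal area (no distortion correction).
    """
    visualized = 0
    nonperfused = 0
    for nonperf_row, vis_row in zip(nonperfusion_mask, visualized_mask):
        for is_nonperfused, is_visualized in zip(nonperf_row, vis_row):
            if is_visualized:
                visualized += 1
                nonperfused += is_nonperfused
    return 100.0 * nonperfused / visualized


def vessel_area_mm2(vessel_mask, mm2_per_pixel):
    """Vessel area from a binary vessel mask and an assumed, uniform pixel scale."""
    return sum(sum(row) for row in vessel_mask) * mm2_per_pixel


def annualised_change(value_visit1, value_visit2, months_between):
    """Change per year between two visits (e.g. ischaemic index, percentage points/year)."""
    return (value_visit2 - value_visit1) * 12.0 / months_between


# Made-up 2 x 4 masks for one eye at two visits 24 months apart.
visualized = [[1, 1, 1, 1], [1, 1, 1, 1]]
visit1_nonperfusion = [[0, 0, 0, 1], [0, 0, 0, 1]]
visit2_nonperfusion = [[0, 0, 1, 1], [0, 0, 1, 1]]
ii_visit1 = ischaemic_index(visit1_nonperfusion, visualized)  # 25.0
ii_visit2 = ischaemic_index(visit2_nonperfusion, visualized)  # 50.0
ii_rate = annualised_change(ii_visit1, ii_visit2, months_between=24)  # 12.5 per year
```

In the toy example, the ischaemic index rises from 25% to 50% over 24 months, i.e. 12.5 percentage points per year.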

Given the value of FA in identifying leakage as a marker of PSR, future work aided by machine learning could also help quantify patterns of progression as well as identify pathologic vascular precursors to leakage on FA. Barbosa et al. previously used manual grading in ImageJ to retrospectively assess the extent and intensity of leakage in patients with PSR treated with scatter laser photocoagulation [39]. Automated methods could improve the speed and reproducibility with which leakage can be quantified serially on FAs from eyes with SCR, with or without treatment. For the related but distinct condition of diabetic retinopathy, Ehlers et al. developed an automated algorithm for segmenting retinal vessels and then detecting and quantifying leakage from Optos FA images [40]. This algorithm merits formal validation in images from patients with SCR. Sevgi et al. [41] have recently presented a deep learning algorithm for automated selection of early- and late-phase FA images with maximum visualized retinal vessel area and similar fields of view between the two phases. This algorithm may also prove very useful in longitudinal analysis of FAs from eyes with sickle cell disease, aiding the discovery of machine-learning-assisted methods for staging and quantifying vascular abnormalities in SCR.
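As a rough illustration of how automated leakage quantification could work, the sketch below flags non-vessel pixels whose intensity rises by more than a threshold between registered early- and late-phase frames, and reports both the extent (pixel count) and the mean intensity rise of those pixels. This is a deliberately simplified, hypothetical rule, not the algorithm of Ehlers et al. [40]; it assumes pre-registered frames with intensities scaled to [0, 1], an existing vessel mask, and an arbitrary threshold of 0.2.

```python
def quantify_leakage(early, late, vessel_mask, threshold=0.2):
    """Hypothetical leakage estimate from registered early- and late-phase FA frames.

    early, late: 2D lists of intensities scaled to [0, 1], already registered.
    vessel_mask: 2D list of 0/1 values, 1 where a vessel was segmented.
    A non-vessel pixel counts as leakage if its late intensity exceeds its early
    intensity by more than `threshold`. Returns (leakage_pixel_count, mean_rise).
    """
    rises = []
    for early_row, late_row, vessel_row in zip(early, late, vessel_mask):
        for early_val, late_val, is_vessel in zip(early_row, late_row, vessel_row):
            rise = late_val - early_val
            if not is_vessel and rise > threshold:
                rises.append(rise)
    if not rises:
        return 0, 0.0
    return len(rises), sum(rises) / len(rises)


# Made-up 2 x 3 frames: only the top-right non-vessel pixel brightens markedly.
early_frame = [[0.3, 0.8, 0.2], [0.3, 0.8, 0.2]]
late_frame = [[0.2, 0.7, 0.6], [0.25, 0.9, 0.3]]
vessels = [[0, 1, 0], [0, 1, 0]]
leak_pixels, mean_rise = quantify_leakage(early_frame, late_frame, vessels)  # 1, ~0.4
```

A practical system would also need to normalize exposure between phases and ensure the early and late frames cover similar fields of view, the latter being the goal of the frame-selection model of Sevgi et al. [41].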

Artificial intelligence for using optical coherence tomography angiography to improve sickle cell retinopathy screening and staging

In contrast to fundus images, which only highlight the major retinal vessels, or FA, which is relatively time-consuming and invasive, optical coherence tomography angiography (OCTA) allows relatively rapid, noninvasive imaging of the retinal vasculature with capillary-level resolution, as well as separate analysis of different retinal layers. The emergence of widefield swept-source OCTA (SS-OCTA), with montages of scans taken at different fixation points, has shown promise for replacing or complementing UWF-FA in the staging of diabetic retinopathy, including identification of areas of neovascularization [42,43,44,45,46,47]. Compared to spectral-domain OCTA systems, SS-OCTA uses a tuneable light source with a longer wavelength and a more advanced sensor, enabling high-speed acquisition of images with a wider field of view and greater depth of tissue penetration [48]. To date, there have been limited reports using SS-OCTA in SCR, and these studies have focused on macular rather than peripheral findings [49, 50]. Because neovascularization in PSR tends to develop more peripherally than neovascularization in proliferative diabetic retinopathy, further work is needed to assess how widefield SS-OCTA compares to UWF-FA in capturing peripheral pathology in patients with sickle cell disease (Fig. 3). If widefield SS-OCTA can be optimized for detection of the peripheral changes of SCR, the combination of artificial intelligence with widefield SS-OCTA and UWF-FP could become a powerful noninvasive screening tool for PSR.

A montage of five 12 × 12 mm swept-source optical coherence tomography angiography scans enables a non-invasive, non-contact widefield view (>50 degrees), including details of temporal peripheral vascular pathology beyond the macula. Areas of decreased flow signal inferiorly are due to blockage from overlying vitreous haemorrhage. (Image courtesy of Ian C. Han, MD).

Meanwhile, machine learning is already being used to identify and classify eyes with SCR from pathologic changes visible on OCTA imaging of the macula. Alam et al. have previously identified optimal combinations of quantitative parameters extracted from macular OCTA images that can distinguish between eyes with and without sickle cell-related vascular changes and also distinguish different severities of SCR [51]. Numerous studies have highlighted changes such as decreased superficial and deep capillary plexus vessel density [52], greater deep capillary plexus foveal avascular zone area [50] and greater foveal avascular zone acircularity [53] in eyes with sickle cell disease compared with controls. Alam et al. found that applying machine learning methods to a combination of 6 OCTA quantitative parameters (blood vessel tortuosity, blood vessel diameter, vessel perimeter index, foveal avascular zone area, foveal avascular zone contour irregularity and parafoveal avascular density), previously confirmed by the same authors to differ significantly between eyes with SCR and control eyes [54], yielded better performance than any individual OCTA parameter alone [51]. Their support vector machine classifier achieved 100% sensitivity and 100% specificity for distinguishing between 6 × 6 mm spectral-domain macular OCTAs from eyes with SCR and healthy controls [51]. Importantly, this classifier also achieved 97% sensitivity and 95% specificity in distinguishing between eyes with Goldberg stage II and Goldberg stage III SCR as staged by retinal specialists from dilated fundus examinations, suggesting a correlation between macular and peripheral vascular changes in SCR.
A prospective study by our group demonstrating a significant correlation between macular OCTA vessel density measurements (both in the deep capillary plexus and temporal superficial capillary plexus) and peripheral nonperfusion as quantified by the ischaemic index on UWF-FA further supports the idea that macular OCTA may be a viable proxy for peripheral OCTA in identifying eyes at risk of vision loss from PSR [55].
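The approach of Alam et al. [51] combines several quantitative OCTA parameters in a support vector machine. The Python sketch below shows the general idea with a soft-margin linear SVM fitted by plain subgradient descent on synthetic data for six hypothetical features; it is not the authors' implementation, whose kernel, preprocessing and hyperparameters are not described here, and the generated data have no clinical meaning. Features are z-scored first because OCTA parameters are measured on very different scales.

```python
import random


def standardize(rows):
    """Z-score each feature column; returns (scaled_rows, means, sds)."""
    n_rows, n_features = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n_rows for j in range(n_features)]
    sds = []
    for j in range(n_features):
        var = sum((r[j] - means[j]) ** 2 for r in rows) / n_rows
        sds.append(var ** 0.5 or 1.0)  # guard against constant features
    scaled = [[(r[j] - means[j]) / sds[j] for j in range(n_features)] for r in rows]
    return scaled, means, sds


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=500):
    """Soft-margin linear SVM fitted by full-batch subgradient descent on
    lam / 2 * ||w||^2 + mean(max(0, 1 - y * (w . x + b))).

    X: list of feature vectors; y: labels in {-1, +1}. Returns (weights, bias).
    """
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the L2 penalty
        grad_b = 0.0
        for xi, yi in zip(X, y):
            if yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b) < 1.0:
                # Margin violated: add this point's hinge-loss subgradient.
                for j in range(d):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def predict(w, b, x):
    """Return +1 (e.g. SCR) or -1 (e.g. control) for one standardized feature vector."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1


# Synthetic, clinically meaningless data: 20 "controls" and 20 "SCR" eyes, six features
# on deliberately different scales to mimic parameters measured in different units.
rng = random.Random(0)
scales = [1.0, 10.0, 0.1, 0.3, 1.0, 5.0]
X_raw, y = [], []
for label in (-1, 1):
    shift = 2.0 if label == 1 else 0.0
    for _ in range(20):
        X_raw.append([s * (rng.gauss(0.0, 1.0) + shift) for s in scales])
        y.append(label)

X, feature_means, feature_sds = standardize(X_raw)
w, b = train_linear_svm(X, y)
train_accuracy = sum(predict(w, b, xi) == yi for xi, yi in zip(X, y)) / len(y)
```

In practice a library implementation (for example scikit-learn's `SVC`) and held-out or cross-validated evaluation would be preferable; training accuracy, computed here only as a sanity check, overstates real performance.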

Whereas Alam et al. used machine learning to classify SCR using preselected quantitative metrics derived from OCTA images, an alternative approach is to use deep learning to classify SCR based on the OCTA images themselves rather than extracted metrics. For example, in age-related macular degeneration, a convolutional neural network has been shown to achieve 100% sensitivity and 95% specificity compared to a retinal specialist grader in automatically classifying OCTAs as having or not having choroidal neovascularization [56]. Similar convolutional neural network algorithms could be used in the future to classify diagnostically or prognostically relevant OCTA findings in eyes with SCR. Given the many potential artefacts that can hinder OCTA interpretation [57], it will be important in future work to build on previously developed deep learning models for automation of vessel segmentation [58,59,60,61] and classification of OCTA image quality [62].

Future opportunities for applications of artificial intelligence to optical coherence tomography of sickle cell retinopathy

In addition to the application of artificial intelligence to the imaging modalities discussed above, there is great potential for automated analysis of optical coherence tomography (OCT) scans of patients with SCR. Multiple structural OCT changes have been observed in SCR, such as foveal splaying, inner and/or outer retinal thinning and choroidal thinning [63,64,65,66,67,68,69]. Not only may these structural changes have visual consequences, such as decreased retinal sensitivity on microperimetry [70] and impaired contrast and colour vision [71], but a previously reported association between macular retinal thinning on OCT and the ischaemic index on UWF-FA also highlights a potential correlation between macular structural and peripheral vascular pathology [72]. The ability to capture high-quality registered volume scans, together with the greater availability of longitudinal imaging and normative data for OCT than for more nascent technologies such as OCTA, makes automated analysis of OCT particularly appealing for following microstructural changes in SCR over time. A recent longitudinal prospective study reported higher rates of macular OCT thinning over time in patients with SCR compared to age- and race-matched controls [73]. We anticipate that machine learning can be used to automate identification and tracking of OCT-based biomarkers of SCR progression, drawing on the rapidly growing literature using deep learning to diagnose and localize pathologic findings from OCT such as diabetic macular oedema [74,75,76], age-related macular degeneration [77], serous retinal detachments [78], cavitations in macular telangiectasia [79] and epiretinal membranes [80].
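Once thickness values have been extracted at each visit (manually or by a segmentation model), tracking structural progression can be as simple as estimating a rate of change. The sketch below fits an ordinary least-squares slope of thickness against time for one eye; the function name and the toy measurements are hypothetical, and it is not the method of the longitudinal study cited above [73].

```python
def thinning_rate_um_per_year(years, thickness_um):
    """Ordinary least-squares slope of thickness (micrometres) against time (years).

    A negative value indicates thinning. Requires at least two distinct visit times.
    """
    n = len(years)
    if n < 2 or len(thickness_um) != n:
        raise ValueError("need matching thickness values for at least two visits")
    mean_t = sum(years) / n
    mean_v = sum(thickness_um) / n
    sxx = sum((t - mean_t) ** 2 for t in years)
    if sxx == 0:
        raise ValueError("visit times must not all be identical")
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(years, thickness_um))
    return sxy / sxx


# Made-up mean macular thickness for one eye at baseline and three annual visits.
rate = thinning_rate_um_per_year([0.0, 1.0, 2.0, 3.0], [300.0, 298.0, 296.0, 294.0])  # -2.0
```

With visits of varying number and spacing across patients, a mixed-effects model would usually be preferred to per-eye slopes, but the per-eye slope conveys the quantity being tracked.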

Conclusions

The integration of artificial intelligence with multimodal imaging holds great promise for improvement of multiple facets of the diagnosis and management of SCR – from screening for PSR to automating detection and quantification of pathologic vascular changes that may help predict risk of future vision loss and need for treatment. While considerable literature on artificial intelligence in ophthalmology has been devoted to the classification of conditions using individual imaging methods, some studies have also highlighted an opportunity to improve performance by simultaneously incorporating multiple types of imaging [81, 82]. Also, it is important to keep in mind that patients with sickle cell disease may have comorbid ocular conditions such as diabetic retinopathy and glaucoma that may be detectable from retinal imaging and merit referral to an ophthalmologist [83]. Future work is needed to determine how best to integrate simultaneous screening for multiple ocular diseases from multimodal imaging.

In regions of the world where access to retinal specialists is limited, strategic deployment of artificial intelligence may allow automated interpretation of imaging obtained at non-ophthalmology medical offices or community screening centres to help identify patients with sickle cell disease most in need of referral to retinal specialists for further evaluation and management of their SCR. When access to or availability of retinal specialists is not the limiting factor, the combination of artificial intelligence and point-of-care imaging can still help to reduce the burden of medical visits for patients with sickle cell disease, improving adherence to routine screening for SCR. In children, where ultra-widefield imaging may provide superior peripheral retinal visualization to dilated fundus examinations limited by patient cooperation [31], retinal imaging is an especially attractive screening approach. Given the high cost of currently commercially available imaging devices, cost-effectiveness analyses and technological advancements toward portable and less expensive imaging options will be critical to realizing the global public health potential of artificial intelligence for screening purposes.

Equally important to the potential benefits of artificial intelligence for reducing the dependence on in-person retinal specialist visits for SCR screening are the opportunities for artificial intelligence to help inform clinical practice in the care of patients with SCR. Global migration patterns are changing the population distribution of SCR, such that ophthalmic providers with limited prior exposure to SCR may find automated imaging interpretation algorithms to be a particularly useful aid for enhancing recognition of clinically relevant vascular changes. In addition, the currently used Goldberg and Penman sickle cell classification systems were developed decades ago through astute clinical observation, based on fundus findings and non-widefield FA from relatively small subsets of patients [2, 37]. While expert clinicians have the ability to make broad inferences based on small data, artificial intelligence-based approaches have distinct advantages when learning from large amounts of data [84]. By harnessing the power of modern computational methods and retinal imaging techniques, it may be possible to develop more quantitative and nuanced staging systems for SCR, which could potentially be combined with additional systemic clinical data to estimate each patient's personalized risk of future vision loss from PSR. Because sea fan neovascularization frequently undergoes asymptomatic auto-infarction [85], criteria for the timing and methodology of treatment for PSR remain controversial and are guided by a single randomized trial of scatter photocoagulation from three decades ago [86]. Artificial intelligence may improve our ability to study clinical and imaging data to identify best-practice patterns for managing SCR.

One important prerequisite for the development of robust artificial intelligence methods, and especially deep learning methods, for classification of SCR is adequately large datasets that are well labelled against a reliable gold standard [84]. Current clinical practice patterns are highly variable with respect to the frequency and types of imaging obtained as part of routine screening or follow-up of SCR [8]. Our hope is that greater awareness of the diagnostic and prognostic utility of multimodal imaging in SCR, and of the opportunities for using deep learning to process large numbers of images collected across the world to make new discoveries, will lead to more imaging of patients with sickle cell disease and to more research into artificial intelligence-assisted analysis of their images and clinical data.

References

Wastnedge E, Waters D, Patel S, Morrison K, Goh MY, Adeloye D, et al. The global burden of sickle cell disease in children under five years of age: a systematic review and meta-analysis. J Glob Health. 2018;8:021103.

Goldberg MF. Classification and pathogenesis of proliferative sickle retinopathy. Am J Ophthalmol. 1971;71:649–65.

Moriarty BJ, Acheson RW, Condon PI, Serjeant GR. Patterns of visual loss in untreated sickle cell retinopathy. Eye (Lond). 1988;2:330–5.

Downes SM, Hambleton IR, Chuang EL, Lois N, Serjeant GR, Bird AC. Incidence and natural history of proliferative sickle cell retinopathy: observations from a cohort study. Ophthalmology. 2005;112:1869–75.

Yawn BP, Buchanan GR, Afenyi-Annan AN, Ballas SK, Hassell KL, James AH, et al. Management of sickle cell disease: summary of the 2014 evidence-based report by expert panel members. JAMA. 2014;312:1033–48.

Gill HS, Lam WC. A screening strategy for the detection of sickle cell retinopathy in pediatric patients. Can J Ophthalmol. 2008;43:188–91.

Alshehri AM, Feroze KB, Amir MK. Awareness of ocular manifestations, complications, and treatment of sickle cell disease in the Eastern Province of Saudi Arabia: a cross-sectional study. Middle East Afr J Ophthalmol. 2019;26:89–94.

Mishra K, Bajaj R, Scott AW. Variable practice patterns for management of sickle cell retinopathy. Ophthalmol Retina. 2020;S2468-6530:30481–4.

Abramoff MD, Lavin PT, Birch M, Shah N, Folk JC. Pivotal trial of an autonomous AI-based diagnostic system for detection of diabetic retinopathy in primary care offices. NPJ Digit Med. 2018;1:39.

Lim J, Bhaskaranand M, Ramachandra C, Bhat S, Solanki K, Sadda S. Artificial intelligence screening for diabetic retinopathy: analysis from a pivotal multi-center prospective clinical trial. In: Paper presented at ARVO Imaging in the Eye Conference; 2019 Apr 27; Vancouver, BC, Canada.

Bhaskaranand M, Ramachandra C, Bhat S, Cuadros J, Nittala MG, Sadda SR, et al. The value of automated diabetic retinopathy screening with the EyeArt system: a study of more than 100,000 consecutive encounters from people with diabetes. Diabetes Technol Ther. 2019;21:635–43.

Mayro EL, Wang M, Elze T, Pasquale LR. The impact of artificial intelligence in the diagnosis and management of glaucoma. Eye (Lond). 2020;34:1–11.

Shah N, Bhor M, Xie L, Halloway R, Arcona S, Paulose J, et al. Treatment patterns and economic burden of sickle-cell disease patients prescribed hydroxyurea: a retrospective claims-based study. Health Qual Life Outcomes. 2019;17:155.

Dean WH, Buchan JC, Gichuhi S, Faal H, Mpyet C, Resnikoff S, et al. Ophthalmology training in sub-Saharan Africa: a scoping review. Eye (Lond). 2021;35:1066–83.

Alabduljalil T, Cheung CS, VandenHoven C, Mackeen LD, Kirby-Allen M, Kertes PJ, et al. Retinal ultra-wide-field colour imaging versus dilated fundus examination to screen for sickle cell retinopathy. Br J Ophthalmol. 2020. [Epub ahead of print]

Bunod R, Mouallem-Beziere A, Amoroso F, Capuano V, Bitton K, Kamami-Levy C, et al. Sensitivity and specificity of ultrawide-field fundus photography for the staging of sickle cell retinopathy in real-life practice at varying expertise level. J Clin Med. 2019;8:1660.

Han IC, Zhang AY, Liu TYA, Linz MO, Scott AW. Utility of ultra-widefield retinal imaging for the staging and management of sickle cell retinopathy. Retina. 2019;39:836–43.

McKenna M, Chen T, McAneney H, Vazquez Membrillo MA, Jin L, Xiao W, et al. Accuracy of trained rural ophthalmologists versus non-medical image graders in the diagnosis of diabetic retinopathy in rural China. Br J Ophthalmol. 2018;102:1471–6.

Scruggs BA, Chan RVP, Kalpathy-Cramer J, Chiang MF, Campbell JP. Artificial intelligence in retinopathy of prematurity diagnosis. Transl Vis Sci Technol. 2020;9:5.

Cai S, Parker F, Urias MG, Goldberg MF, Hager GD, Scott AW. Deep learning detection of sea fan neovascularization from ultra-widefield color fundus photographs of patients with sickle cell hemoglobinopathy. JAMA Ophthalmol. 2021;139:206–13.

Nielsen KB, Lautrup ML, Andersen JKH, Savarimuthu TR, Grauslund J. Deep learning-based algorithms in screening of diabetic retinopathy: a systematic review of diagnostic performance. Ophthalmol Retin. 2019;3:294–304.

Rahimy E. Deep learning applications in ophthalmology. Curr Opin Ophthalmol. 2018;29:254–60.

Ting DSW, Pasquale LR, Peng L, Campbell JP, Lee AY, Raman R, et al. Artificial intelligence and deep learning in ophthalmology. Br J Ophthalmol. 2019;103:167–75.

Ting DSW, Peng L, Varadarajan AV, Keane PA, Burlina PM, Chiang MF, et al. Deep learning in ophthalmology: the technical and clinical considerations. Prog Retin Eye Res. 2019;72:100759.

Selvaraju RR, Cogswell M, Das A, Vedantam R, Parikh D, Batra D. Grad-CAM: visual explanations from deep networks via gradient-based localization. In: Proceedings of the IEEE international conference on computer vision. 2017. pp. 618–26.

Smilkov D, Thorat N, Kim B, Viégas F, Wattenberg M. SmoothGrad: removing noise by adding noise. 2017. Preprint at https://arxiv.org/abs/1706.03825.

Linz MO, Scott AW. Wide-field imaging of sickle retinopathy. Int J Retin Vitreous. 2019;5:27.

Saha SK, Fernando B, Cuadros J, Xiao D, Kanagasingam Y. Automated quality assessment of colour fundus images for diabetic retinopathy screening in telemedicine. J Digit Imaging. 2018;31:869–78.

Zago GT, Andreao RV, Dorizzi B, Teatini Salles EO. Retinal image quality assessment using deep learning. Comput Biol Med. 2018;103:64–70.

Coyner AS, Swan R, Brown JM, Kalpathy-Cramer J, Kim SJ, Campbell JP, et al. Deep learning for image quality assessment of fundus images in retinopathy of prematurity. AMIA Annu Symp Proc. 2018;2018:1224–32.

Ramkumar HL, Koduri M, Conger J, Robbins SL, Granet D, Freeman WR, et al. Comparison of digital widefield retinal imaging with indirect ophthalmoscopy in pediatric patients. Ophthalmic Surg Lasers Imaging Retin. 2019;50:580–5.

Matsui Y, Ichio A, Sugawara A, Uchiyama E, Suimon H, Matsubara H, et al. Comparisons of effective fields of two ultra-widefield ophthalmoscopes, Optos 200Tx and Clarus 500. Biomed Res Int. 2019;2019:7436293.

Campbell JP, Kim SJ, Brown JM, Ostmo S, Chan RVP, Kalpathy-Cramer J, et al. Evaluation of a deep learning-derived quantitative retinopathy of prematurity severity scale. Ophthalmology. 2020;S0161–6420:31027–7.

Gupta K, Campbell JP, Taylor S, Brown JM, Ostmo S, Chan RVP, et al. A quantitative severity scale for retinopathy of prematurity using deep learning to monitor disease regression after treatment. JAMA Ophthalmol. 2019;137:1029–36.

Taylor S, Brown JM, Gupta K, Campbell JP, Ostmo S, Chan RVP, et al. Monitoring disease progression with a quantitative severity scale for retinopathy of prematurity using deep learning. JAMA Ophthalmol. 2019;137:1022–8.

Arcadu F, Benmansour F, Maunz A, Willis J, Haskova Z, Prunotto M. Deep learning algorithm predicts diabetic retinopathy progression in individual patients. NPJ Digit Med. 2019;2:92.

Penman AD, Talbot JF, Chuang EL, Thomas P, Serjeant GR, Bird AC. New classification of peripheral retinal vascular changes in sickle cell disease. Br J Ophthalmol. 1994;78:681–9.

Sevgi DD, Scott AW, Martin A, Mugnaini C, Patel S, Linz MO, et al. Longitudinal assessment of quantitative ultra-widefield ischaemic and vascular parameters in sickle cell retinopathy. Br J Ophthalmol. 2020. [Epub ahead of print]

Barbosa J, Malbin B, Le K, Lin X. Quantifying areas of vascular leakage in sickle cell retinopathy using standard and widefield fluorescein angiography. Ophthalmic Surg Lasers Imaging Retin. 2020;51:153–8.

Ehlers JP, Wang K, Vasanji A, Hu M, Srivastava SK. Automated quantitative characterisation of retinal vascular leakage and microaneurysms in ultra-widefield fluorescein angiography. Br J Ophthalmol. 2017;101:696–9.

Sevgi DD, Hach J, Srivastava SK, Wykoff C, O’Connell M, Whitney J, et al. Automated quality optimized phase selection in ultra-widefield angiography using retinal vessel segmentation with deep neural networks. Investig Ophthalmol Vis Sci. 2020;61:PB00125-PB.

Couturier A, Rey PA, Erginay A, Lavia C, Bonnin S, Dupas B, et al. Widefield OCT-angiography and fluorescein angiography assessments of nonperfusion in diabetic retinopathy and edema treated with anti-vascular endothelial growth factor. Ophthalmology. 2019;126:1685–94.

Cui Y, Zhu Y, Wang JC, Lu Y, Zeng R, Katz R, et al. Comparison of widefield swept-source optical coherence tomography angiography with ultra-widefield colour fundus photography and fluorescein angiography for detection of lesions in diabetic retinopathy. Br J Ophthalmol. 2021;105:577–81.

Khalid H, Schwartz R, Nicholson L, Huemer J, El-Bradey MH, Sim DA, et al. Widefield optical coherence tomography angiography for early detection and objective evaluation of proliferative diabetic retinopathy. Br J Ophthalmol. 2021;105:118–23.

Russell JF, Al-Khersan H, Shi Y, Scott NL, Hinkle JW, Fan KC, et al. Retinal nonperfusion in proliferative diabetic retinopathy before and after panretinal photocoagulation assessed by widefield OCT angiography. Am J Ophthalmol. 2020;213:177–85.

Russell JF, Flynn HW Jr., Sridhar J, Townsend JH, Shi Y, Fan KC, et al. Distribution of diabetic neovascularization on ultra-widefield fluorescein angiography and on simulated widefield OCT angiography. Am J Ophthalmol. 2019;207:110–20.

Sawada O, Ichiyama Y, Obata S, Ito Y, Kakinoki M, Sawada T, et al. Comparison between wide-angle OCT angiography and ultra-wide field fluorescein angiography for detecting non-perfusion areas and retinal neovascularization in eyes with diabetic retinopathy. Graefes Arch Clin Exp Ophthalmol. 2018;256:1275–80.

Potsaid B, Baumann B, Huang D, Barry S, Cable AE, Schuman JS, et al. Ultrahigh speed 1050 nm swept source/Fourier domain OCT retinal and anterior segment imaging at 100,000 to 400,000 axial scans per second. Opt Express. 2010;18:20029–48.

Jung JJ, Chen MH, Frambach CR, Rofagha S, Lee SS. Spectral domain versus swept source optical coherence tomography angiography of the retinal capillary plexuses in sickle cell maculopathy. Retin Cases Brief Rep. 2018;12:87–92.

Mokrane A, Gazeau G, Levy V, Fajnkuchen F, Giocanti-Auregan A. Analysis of the foveal microvasculature in sickle cell disease using swept-source optical coherence tomography angiography. Sci Rep. 2020;10:11795.

Alam M, Thapa D, Lim JI, Cao D, Yao X. Computer-aided classification of sickle cell retinopathy using quantitative features in optical coherence tomography angiography. Biomed Opt Express. 2017;8:4206–16.

Ong SS, Linz MO, Li X, Liu TYA, Han IC, Scott AW. Retinal thickness and microvascular changes in children with sickle cell disease evaluated by optical coherence tomography (OCT) and OCT angiography. Am J Ophthalmol. 2020;209:88–98.

Lynch G, Scott AW, Linz MO, Han I, Andrade Romo JS, Linderman RE, et al. Foveal avascular zone morphology and parafoveal capillary perfusion in sickle cell retinopathy. Br J Ophthalmol. 2020;104:473–9.

Alam M, Thapa D, Lim JI, Cao D, Yao X. Quantitative characteristics of sickle cell retinopathy in optical coherence tomography angiography. Biomed Opt Express. 2017;8:1741–53.

Han IC, Linz MO, Liu TYA, Zhang AY, Tian J, Scott AW. Correlation of ultra-widefield fluorescein angiography and OCT angiography in sickle cell retinopathy. Ophthalmol Retin. 2018;2:599–605.

Wang J, Hormel TT, Gao L, Zang P, Guo Y, Wang X, et al. Automated diagnosis and segmentation of choroidal neovascularization in OCT angiography using deep learning. Biomed Opt Express. 2020;11:927–44.

Spaide RF, Fujimoto JG, Waheed NK, Sadda SR, Staurenghi G. Optical coherence tomography angiography. Prog Retin Eye Res. 2018;64:1–55.

Gao M, Guo Y, Hormel TT, Sun J, Hwang TS, Jia Y. Reconstruction of high-resolution 6×6-mm OCT angiograms using deep learning. Biomed Opt Express. 2020;11:3585–600.

Kadomoto S, Uji A, Muraoka Y, Akagi T, Tsujikawa A. Enhanced visualization of retinal microvasculature in optical coherence tomography angiography imaging via deep learning. J Clin Med. 2020;9:1322.

Lo J, Heisler M, Vanzan V, Karst S, Matovinovic IZ, Loncaric S, et al. Microvasculature segmentation and intercapillary area quantification of the deep vascular complex using transfer learning. Transl Vis Sci Technol. 2020;9:38.

Prentasic P, Heisler M, Mammo Z, Lee S, Merkur A, Navajas E, et al. Segmentation of the foveal microvasculature using deep learning networks. J Biomed Opt. 2016;21:75008.

Lauermann JL, Treder M, Alnawaiseh M, Clemens CR, Eter N, Alten F. Automated OCT angiography image quality assessment using a deep learning algorithm. Graefes Arch Clin Exp Ophthalmol. 2019;257:1641–8.

Jin J, Miller R, Salvin J, Lehman S, Hendricks D, Friess A, et al. Funduscopic examination and SD-OCT in detecting sickle cell retinopathy among pediatric patients. J AAPOS. 2018;22:197–201.e1.

Cai CX, Han IC, Tian J, Linz MO, Scott AW. Progressive retinal thinning in sickle cell retinopathy. Ophthalmol Retin. 2018;2:1241–8.e2.

Hoang QV, Chau FY, Shahidi M, Lim JI. Central macular splaying and outer retinal thinning in asymptomatic sickle cell patients by spectral-domain optical coherence tomography. Am J Ophthalmol. 2011;151:990–4.e1.

Lim JI, Cao D. Analysis of retinal thinning using spectral-domain optical coherence tomography imaging of sickle cell retinopathy eyes compared to age- and race-matched control eyes. Am J Ophthalmol. 2018;192:229–38.

Lim WS, Magan T, Mahroo OA, Hysi PG, Helou J, Mohamed MD. Retinal thickness measurements in sickle cell patients with HbSS and HbSC genotype. Can J Ophthalmol. 2018;53:420–4.

Mathew R, Bafiq R, Ramu J, Pearce E, Richardson M, Drasar E, et al. Spectral domain optical coherence tomography in patients with sickle cell disease. Br J Ophthalmol. 2015;99:967–72.

Pahl DA, Green NS, Bhatia M, Lee MT, Chang JS, Licursi M, et al. Optical coherence tomography angiography and ultra-widefield fluorescein angiography for early detection of adolescent sickle retinopathy. Am J Ophthalmol. 2017;183:91–8.

Chow CC, Genead MA, Anastasakis A, Chau FY, Fishman GA, Lim JI. Structural and functional correlation in sickle cell retinopathy using spectral-domain optical coherence tomography and scanning laser ophthalmoscope microperimetry. Am J Ophthalmol. 2011;152:704–11.e2.

Martin GC, Denier C, Zambrowski O, Grevent D, Bruere L, Brousse V, et al. Visual function in asymptomatic patients with homozygous sickle cell disease and temporal macular atrophy. JAMA Ophthalmol. 2017;135:1100–5.

Ghasemi Falavarjani K, Scott AW, Wang K, Han IC, Chen X, Klufas M, et al. Correlation of multimodal imaging in sickle cell retinopathy. Retina. 2016;36 Suppl 1:S111–7.

Lim JI, Niec M, Sun J, Cao D. Longitudinal assessment of retinal thinning in adults with and without sickle cell retinopathy using spectral-domain optical coherence tomography. JAMA Ophthalmol. 2021;139:330–7.

Guo Y, Hormel TT, Xiong H, Wang J, Hwang TS, Jia Y. Automated segmentation of retinal fluid volumes from structural and angiographic optical coherence tomography using deep learning. Transl Vis Sci Technol. 2020;9:54.

Wu Q, Zhang B, Hu Y, Liu B, Cao D, Yang D, et al. Detection of morphologic patterns of diabetic macular edema using a deep learning approach based on optical coherence tomography images. Retina. 2021;41:1110–7.

Zhang Q, Liu Z, Li J, Liu G. Identifying diabetic macular edema and other retinal diseases by optical coherence tomography image and multiscale deep learning. Diabetes Metab Syndr Obes. 2020;13:4787–800.

Lee CS, Baughman DM, Lee AY. Deep learning is effective for the classification of OCT images of normal versus age-related macular degeneration. Ophthalmol Retin. 2017;1:322–7.

Yoon J, Han J, Park JI, Hwang JS, Han JM, Sohn J, et al. Optical coherence tomography-based deep-learning model for detecting central serous chorioretinopathy. Sci Rep. 2020;10:18852.

Loo J, Cai CX, Choong J, Chew EY, Friedlander M, Jaffe GJ, et al. Deep learning-based classification and segmentation of retinal cavitations on optical coherence tomography images of macular telangiectasia type 2. Br J Ophthalmol. 2020. [Epub ahead of print]

Lo YC, Lin KH, Bair H, Sheu WH, Chang CS, Shen YC, et al. Epiretinal membrane detection at the ophthalmologist level using deep learning of optical coherence tomography. Sci Rep. 2020;10:8424.

van Grinsven MJ, Buitendijk GH, Brussee C, van Ginneken B, Hoyng CB, Theelen T, et al. Automatic identification of reticular pseudodrusen using multimodal retinal image analysis. Invest Ophthalmol Vis Sci. 2015;56:633–9.

Wisely CE, Wang D, Henao R, Grewal DS, Thompson AC, Robbins CB, et al. Convolutional neural network to identify symptomatic Alzheimer’s disease using multimodal retinal imaging. Br J Ophthalmol. 2020. [Epub ahead of print]

Ting DSW, Cheung CY, Lim G, Tan GSW, Quang ND, Gan A, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318:2211–23.

Rajkomar A, Dean J, Kohane I. Machine learning in medicine. N Engl J Med. 2019;380:1347–58.

Condon PI, Serjeant GR. Behaviour of untreated proliferative sickle retinopathy. Br J Ophthalmol. 1980;64:404–11.

Farber MD, Jampol LM, Fox P, Moriarty BJ, Acheson RW, Rabb MF, et al. A randomized clinical trial of scatter photocoagulation of proliferative sickle cell retinopathy. Arch Ophthalmol. 1991;109:363–7.

Funding

AWS is supported by private philanthropy from Gail C. and Howard Woolley.

Author information

Contributions

SC was responsible for literature review and drafting of the manuscript and figures. ICH and AWS were responsible for critical review of the manuscript. All authors have read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Cai, S., Han, I.C. & Scott, A.W. Artificial intelligence for improving sickle cell retinopathy diagnosis and management. Eye 35, 2675–2684 (2021). https://doi.org/10.1038/s41433-021-01556-4

DOI: https://doi.org/10.1038/s41433-021-01556-4